- ¹Faculty of Humanities, Kyoto University of Advanced Science, Kyoto, Japan

- ²Developmental Disorders Section, Department of Rehabilitation for Brain Functions, Research Institute of National Rehabilitation Center for Persons with Disabilities, Tokorozawa, Japan

Head-mounted displays can restrict users’ visual fields and thereby impair their spatial cognitive ability. Immersive visual guidance can assist spatial cognition; however, whether this technique is useful for individuals with autism-spectrum disorder (ASD) remains unclear. Given recent virtual reality (VR) content targeting individuals with ASD, the relationship between ASD-related traits and the effectiveness of immersive visual guidance should be clarified. This pilot study evaluated how ASD-related traits (autistic traits and empathizing–systemizing cognitive styles) among typically developing individuals are related to the effectiveness of visual guidance. Participants performed visual search and spatial localization tasks while using immersive visual guidance. In the visual search task, participants searched immersive VR environments for a target object and, as quickly as possible, pressed the button corresponding to the target’s color. In the localization task, they viewed immersive visual guidance for a short duration and indicated the guided direction with a controller. Visual search times decreased as systemizing scores increased, whereas ASD-related traits were not significantly related to localization accuracy. These findings suggest that immersive visual guidance is generally useful for individuals with higher ASD-related traits.

1 Introduction

1.1 Immersive visual guidance technique assists users’ spatial cognition

Many head-mounted displays (HMDs) restrict users’ visual fields. One solution is immersive visual guidance, which enhances spatial cognition by converting surrounding items into symbolic information (Bork et al., 2018). Users’ attention can be guided toward surrounding items by an isosceles triangle (Gruenefeld et al., 2018a), a moving arrow (Gruenefeld et al., 2018b), or a 3D radar that depicts the items’ locations (Gruenefeld et al., 2019). Visual guidance can enhance two types of spatial cognition (Harada and Ohyama, 2021): spatial localization and visual search. Localization refers to the ability to recognize the location of visual items based on sensory-perceptual cues, and visual search refers to the ability to attend to and detect target items.

Recently, virtual reality (VR) content has been used with individuals with autism-spectrum disorder (ASD) or with high autistic traits (Lorenzo et al., 2013; Ip et al., 2018; Kumazaki et al., 2021; Li et al., 2023). ASD is a developmental disorder characterized by difficulties in social interaction and by restricted patterns of behavior and interests (American Psychiatric Association, 2013). McCleery et al. (2020) reported that repeatedly experiencing social interactions in immersive VR environments reduces social anxiety and phobic responses in individuals with ASD. Given these trends, it is important to clarify how the effectiveness of immersive visual guidance changes with ASD-related traits so that effective spatial assistance can be provided for individuals with ASD.

1.2 Relationship between ASD-related traits and spatial cognitive ability

ASD-related traits are continuously distributed even among typically developing individuals and can be evaluated using three scales: the Autism-Spectrum Quotient (AQ; Baron-Cohen et al., 2001), the Systemizing Quotient (SQ; Baron-Cohen et al., 2003), and the Empathy Quotient (EQ; Baron-Cohen and Wheelwright, 2004). The AQ indexes general autistic traits across five domains: social skills, attention switching, local preference (attention to detail), social communication, and imagination. The SQ and EQ index an individual’s tendency to understand causes and outcomes in terms of underlying rules and of emotional states, respectively. Individuals with ASD are more systemizing and less empathizing than typically developing individuals (Harmsen, 2019). Weaker empathizing cognition impairs social interaction, which may cause individuals with ASD difficulties in social environments.

Previous studies have suggested that individuals with ASD or with higher ASD-related traits have atypical spatial cognitive characteristics. Spatial cognition in children with ASD has been reported to rely more strongly on intrinsic coordinates than on external spatial coordinates (Haswell et al., 2009; Wada et al., 2014), and this tendency has been linked to impaired social skills (Haswell et al., 2009). Regarding the spatial cognitive ability of individuals with ASD or with higher ASD-related traits, previous studies have provided mixed evidence (Muth et al., 2014). Individuals with ASD frequently show superior visual search performance (O’Riordan, 2004), an advantage that has been reported extensively (see Kaldy et al., 2016 for a review). This advantage has been discussed in terms of atypical perceptual–cognitive characteristics in individuals with ASD, such as enhanced perception (O’Riordan, 2004) and atypical attentional systems (Keehn et al., 2013). By contrast, Visser et al. (2013) reported typical or inferior localization performance in individuals with ASD. Given these atypical spatial cognitive abilities, the effectiveness of visual guidance may change with ASD-related traits. However, to our knowledge, no study has investigated this topic.

1.3 Purpose and study design

This pilot study evaluated whether the effectiveness of immersive visual guidance changes according to ASD-related traits. We recruited typically developing individuals, had them perform visual search and localization tasks using immersive visual guidance, and analyzed the effects of AQ, SQ, and EQ scores on visual search and localization performance. Because previous studies have reported that individuals with ASD exhibit superior visual search performance (Kaldy et al., 2016) but inferior localization performance (Visser et al., 2013), we hypothesized that the effect of visual guidance on visual search and localization performance would be related to autistic traits and to systemizing and/or empathizing cognitive styles.

2 Methods

2.1 Participants

This study included 34 typically developing individuals (14 males and 20 females) aged 18–35 years (M = 21.68, SD = 3.97), all of whom were naïve to the purpose of the study. Participants’ ASD-related traits were evaluated with the Japanese versions of the AQ (Wakabayashi et al., 2004) and the EQ and SQ questionnaires (Wakabayashi et al., 2006). The AQ asked how strongly participants agreed with 50 items describing social situations, behavioral tendencies, and obsessions (e.g., “I prefer to do something with others rather than alone”). The EQ and SQ asked how strongly participants agreed with 47 items describing emotional communication and the mechanisms of systems (e.g., “I prefer to take care of other people” [EQ item] and “If I were to buy a car, I prefer to know detailed information about the engine’s performance” [SQ item]). Responses were rated on a 4-point Likert scale (agree, partially agree, partially disagree, or disagree).
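The text does not state how the 4-point responses were converted into scores. As an illustration only, the sketch below assumes the dichotomous convention of the original AQ (Baron-Cohen et al., 2001): either “agree” response earns one point on items keyed toward autistic traits, and either “disagree” response earns one point on reverse-keyed items. The item key, the response codes, and the function name are hypothetical; the original EQ and SQ use a graded 0–2 scheme that is not shown here.

```python
# Response codes for the 4-point scale described in the text.
AGREE, PARTIAL_AGREE, PARTIAL_DISAGREE, DISAGREE = 1, 2, 3, 4

def score_aq_style(responses, agree_keyed):
    """Dichotomous AQ-style total (assumed convention, see lead-in).

    responses: dict mapping item number -> response code (1-4).
    agree_keyed: item numbers on which agreement indicates the trait; all other
        items are treated as reverse-keyed. The key itself is hypothetical here.
    """
    total = 0
    for item, code in responses.items():
        if code not in (AGREE, PARTIAL_AGREE, PARTIAL_DISAGREE, DISAGREE):
            raise ValueError(f"item {item}: invalid response code {code}")
        agreed = code in (AGREE, PARTIAL_AGREE)
        # One point when the response falls on the trait-indicating side.
        if agreed == (item in agree_keyed):
            total += 1
    return total

# Toy example with four items, items 1 and 2 keyed toward agreement.
example = {1: AGREE, 2: DISAGREE, 3: PARTIAL_AGREE, 4: PARTIAL_DISAGREE}
print(score_aq_style(example, agree_keyed={1, 2}))  # prints 2
```

Collapsing “agree” and “partially agree” into one point is what makes the total a count of trait-consistent answers rather than a graded sum.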

2.2 Equipment and materials

A laptop (DELL ALIENWARE m15; Intel Core i7-9750H) with an NVIDIA GeForce RTX 2080 Max-Q GPU (8 GB GDDR6) was used for the experiments. A VIVE Pro Eye (HTC) served as the VR system; the HMD and two controllers were tracked by three SteamVR Base Station 2.0 sensors. The VR environments were built with Unity 2018.4.13f1 and SteamVR.

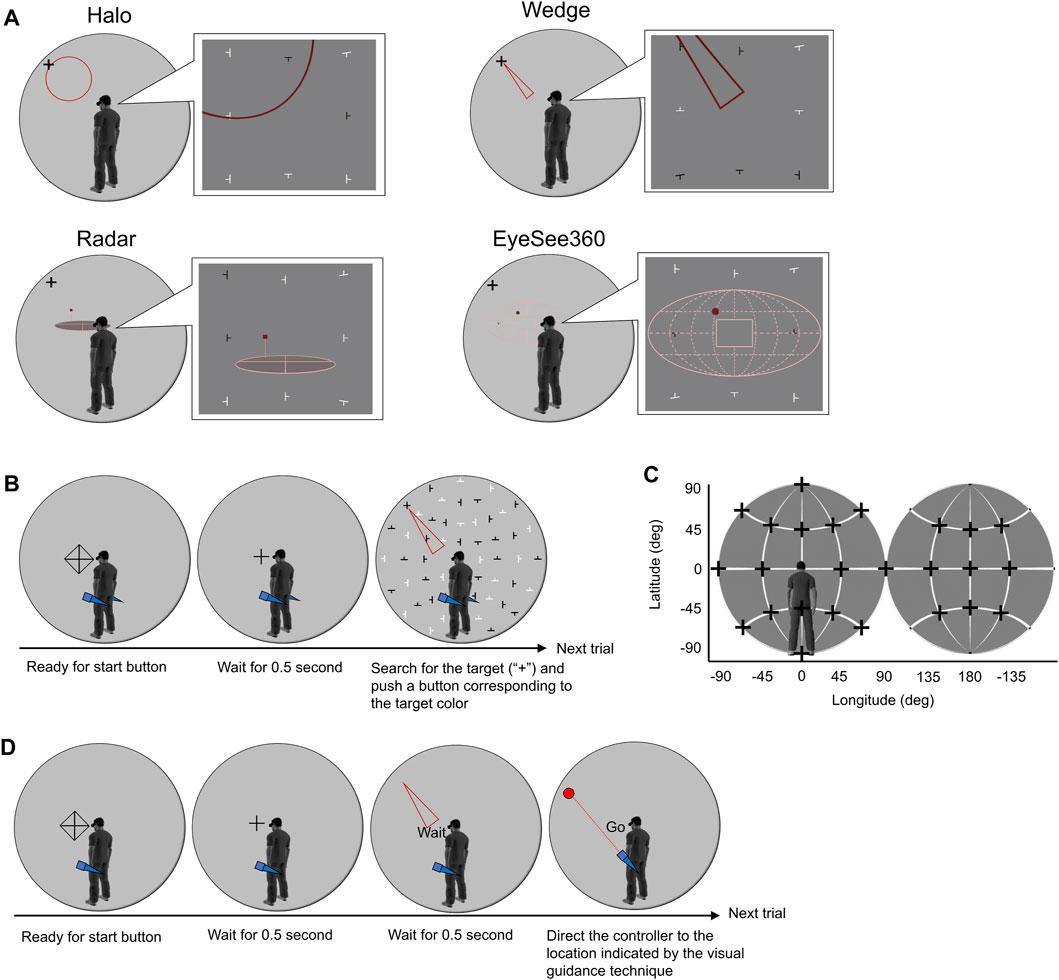

Four types of visual guidance were used (Figure 1A): two first-person types (Halo and Wedge; Gruenefeld et al., 2018a) and two bird’s-eye types (Radar and EyeSee360; Gruenefeld et al., 2019), all obtained from Gruenefeld (2017). The Halo depicted a red circle between the center of the HMD’s view and the target direction. The circle’s size indicated the distance between the HMD’s direction and the target direction, and its position indicated the direction in which the target was located; the target direction could thus be determined from the size and position of the circle. The Wedge depicted a red isosceles triangle whose vertex pointed toward the target direction; the target direction could be determined from the triangle’s orientation and the length of its base. The Radar consisted of a red cube and an elliptical disk containing crossed lines. The cube indicated the position of the target, and the intersection of the lines represented the position of the HMD in the virtual environment; the target direction could be determined from the position of the red cube relative to the disk. The EyeSee360 comprised a red dot, a square frame, and a projected map. The dot and frame indicated the target direction and the current field of view, respectively. Rotating the HMD moved the dot horizontally and the frame vertically. Dotted lines were drawn on the map at 45° intervals. The projected map covered 360°, and its left and right ends corresponded to the direction behind the participant. The target direction could be determined from the relative positions of the dot, the frame, and the projected map.
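To make the EyeSee360 layout concrete, the sketch below maps a target direction onto a 360° map whose center is straight ahead of the head and whose left and right edges lie directly behind the participant. It is a simplified stand-in that assumes an equirectangular layout and positive longitude to the participant’s right; the actual EyeSee360 projection in Gruenefeld (2017) may differ, and the function names are hypothetical.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def eyesee_like_dot(target_lon, target_lat, head_yaw):
    """Normalized (x, y) map position of the target dot (simplified layout).

    x = 0.5 is straight ahead of the head; x = 0 and x = 1 are directly behind.
    y = 0 is straight down and y = 1 is straight up.
    Head yaw shifts the dot horizontally, as described for EyeSee360.
    """
    relative_lon = wrap_degrees(target_lon - head_yaw)
    x = (relative_lon + 180.0) / 360.0
    y = (target_lat + 90.0) / 180.0
    return x, y

def frame_center_y(head_pitch):
    """Vertical map position of the field-of-view frame (same y convention)."""
    return (head_pitch + 90.0) / 180.0

# A target 90° to the right appears three quarters of the way across the map;
# turning the head toward it brings the dot back to the center.
print(eyesee_like_dot(90.0, 0.0, head_yaw=0.0))
print(eyesee_like_dot(90.0, 0.0, head_yaw=90.0))
```

Under this layout, the 45° dotted lines would fall at every eighth of the map width.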

FIGURE 1. Schematic illustration of the experimental settings. (A) Immersive visual guidance techniques. (B) Trial sequence of the search task. (C) Possible target directions in the visual search task and guided directions in the localization task. The crosses (+) indicate the target and guided directions. (D) Trial sequence of the localization task. The examples show the Wedge condition.

2.3 Procedures

After receiving the experimental instructions, participants put on the HMD and held two controllers. For each guidance type, they performed the visual search task followed by the localization task, and this sequence was repeated for the other three guidance types. Because the sample size was not a multiple of 4, the order of the guidance types could not be fully counterbalanced. To examine whether the order was significantly unbalanced, we conducted a chi-square test on the frequencies of each combination of guidance type (Halo, Wedge, Radar, and EyeSee360) and order position (1, 2, 3, and 4). The order was not significantly unbalanced across guidance types (χ² = 0.343, p = .999); hence, the order of guidance types was approximately balanced.
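The order-balance check can be illustrated with a Pearson chi-square test on a 4 × 4 table of counts (guidance type × order position). The counts below are hypothetical rather than the study’s actual assignment, so the statistic differs from the reported χ² = 0.343, and whether the authors used exactly this test variant is an assumption. The p-value is computed from the power series of the regularized incomplete gamma function, which is adequate for moderate statistics such as these.

```python
import math

def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a 2D table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df

def chi_square_sf(x, df):
    """Upper-tail p-value, 1 - P(df/2, x/2), using the power series for the
    regularized lower incomplete gamma function P."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    series = term
    n = 1
    while term > series * 1e-15:
        term *= z / (a + n)
        series += term
        n += 1
    lower = series * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)

# Hypothetical counts (rows: Halo, Wedge, Radar, EyeSee360; columns: order
# positions 1-4) for 34 participants; NOT the study's actual assignment.
counts = [
    [9, 8, 9, 8],
    [8, 9, 8, 9],
    [9, 8, 8, 9],
    [8, 9, 9, 8],
]
stat, df = pearson_chi_square(counts)
p = chi_square_sf(stat, df)
print(f"chi2({df}) = {stat:.3f}, p = {p:.3f}")
```

A statistic near zero with a p-value near 1, as reported, indicates that each guidance type appeared at each order position about equally often.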

2.3.1 Visual search task

Participants searched an immersive VR environment for a target with the help of visual guidance. The trial sequence is shown in Figure 1B. After a fixation cross (“+”), the visual guidance, target, and distractors were presented. The target was a white or black cross (“+”), and the distractors were white or black “T”s rotated by 0°, 90°, 180°, or 270°. The possible target directions are shown in Figure 1C. Participants were asked to search for the target using the visual guidance and to press the button corresponding to the target’s color as quickly as possible. Target color and direction were randomized across trials. Before the task, participants performed practice trials until they became proficient at the task.

The visual search task comprised 200 trials: visual guidance (Halo, Wedge, Radar, and EyeSee360) × target direction (25) × target color (white and black), divided into four experimental blocks of 50 trials. The guidance type was manipulated between blocks, and target color and direction were manipulated within each block.

2.3.2 Spatial localization task

Participants viewed visual guidance indicating a certain direction and localized that direction with a controller. They were told that fast responses were not required because the task measured localization accuracy, not speed. The trial sequence is shown in Figure 1D. After a fixation cross (“+”), the visual guidance indicating a certain direction and the instruction “Wait” were presented for 0.5 s. During this phase, participants were asked to keep their gaze on the instruction. The possible guided directions were identical to the target directions in the search task (Figure 1C). Next, the instruction “Go” was presented, and participants moved their gaze, pointed the controller in the guided direction, and pressed a button. The guided direction was randomized across trials. Before the task, participants performed practice trials until they became proficient at the task.

The localization task comprised 200 trials: visual guidance (4) × guided direction (25) × repetition (2), divided into four experimental blocks of 50 trials. The guidance type was manipulated between blocks, and the guided direction was manipulated within each block.
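Both task designs share one structure: each 50-trial block fixes the guidance type and fully crosses 25 directions with a two-level factor (target color in the search task, repetition in the localization task), with order randomized only within the block. The sketch below generates such a session, assuming (as the procedure suggests) that a search block is followed by a localization block for each guidance type in turn. The direction indices 0–24 are hypothetical placeholders for the Figure 1C directions, and the function and field names are illustrative, not taken from the original software.

```python
import random

GUIDANCE_TYPES = ("Halo", "Wedge", "Radar", "EyeSee360")
N_DIRECTIONS = 25  # hypothetical indices 0-24 standing in for the Figure 1C directions
SEARCH_LEVELS = ("white", "black")  # target color, search task
LOCALIZATION_LEVELS = (1, 2)        # repetition, localization task

def build_block(guidance, levels, rng):
    """One 50-trial block: every direction crossed with every level, shuffled."""
    block = [
        {"guidance": guidance, "direction": direction, "level": level}
        for direction in range(N_DIRECTIONS)
        for level in levels
    ]
    rng.shuffle(block)  # randomize order within the block only
    return block

def build_session(guidance_order, seed=None):
    """Search block, then localization block, for each guidance type in turn."""
    rng = random.Random(seed)
    session = []
    for guidance in guidance_order:
        session.append(("search", build_block(guidance, SEARCH_LEVELS, rng)))
        session.append(("localization", build_block(guidance, LOCALIZATION_LEVELS, rng)))
    return session

# Example: one participant's (hypothetical) guidance order.
session = build_session(("Radar", "Halo", "EyeSee360", "Wedge"), seed=1)
print([(task, block[0]["guidance"], len(block)) for task, block in session])
```

Passing the participant’s guidance order in, rather than shuffling it inside the function, keeps the between-block counterbalancing separate from the within-block randomization.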

2.4 Data analysis

Figures were created with RStudio (2023.03.1) and Surfer (25.3.290; Golden Software), and heatmaps were interpolated using kriging. Analyses of variance (ANOVAs) and multiple regression analyses were conducted using JASP (0.17.2). Because Mauchly’s tests indicated significant violations of the sphericity assumption for some ANOVAs, Greenhouse–Geisser corrections were applied to those results.
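The Greenhouse–Geisser correction multiplies both degrees of freedom of a repeated-measures F test by an estimate ε of the departure from sphericity. As a rough illustration, the sketch below computes the standard estimator from the k × k covariance matrix of the conditions after double-centering: ε equals 1 under sphericity and cannot fall below 1/(k − 1). This is not JASP’s code, and the example data are invented.

```python
def greenhouse_geisser_epsilon(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of the
    repeated-measures conditions (computed across participants)."""
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    grand_mean = sum(row_means) / k
    # Double-center: S*_ij = S_ij - r_i - r_j + g (symmetric, so column means = row means).
    centered = [
        [cov[i][j] - row_means[i] - row_means[j] + grand_mean for j in range(k)]
        for i in range(k)
    ]
    trace = sum(centered[i][i] for i in range(k))
    # For a symmetric matrix, trace(S* @ S*) equals the sum of squared entries.
    trace_sq = sum(v * v for row in centered for v in row)
    return trace ** 2 / ((k - 1) * trace_sq)

def sample_covariance(data):
    """Covariance matrix of conditions; data[p][c] = score of participant p in condition c."""
    n, k = len(data), len(data[0])
    means = [sum(row[c] for row in data) / n for c in range(k)]
    return [
        [sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
         for b in range(k)]
        for a in range(k)
    ]

# Invented scores for four participants in three conditions.
data = [[1.0, 2.0, 3.0], [2.0, 2.0, 5.0], [3.0, 1.0, 4.0], [4.0, 3.0, 8.0]]
eps = greenhouse_geisser_epsilon(sample_covariance(data))
print(f"epsilon = {eps:.3f}")  # corrected df = eps * (k - 1) and eps * (k - 1) * (n - 1)
```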

To evaluate visual search performance, search time was measured from the onset of the search display until the participant pressed the button. To evaluate localization performance, the error (in degrees) between the localized and guided directions was calculated. Longer search times and larger localization errors indicated poorer performance. Before analyzing visual search performance, we excluded 92 incorrect trials (i.e., trials in which the button for the wrong target color was pressed; 1.353%). We also excluded outliers, as in previous studies (Henderson and Macquistan, 1993; Franconeri and Simons, 2003), because such values impair the reliability of statistical tests. Outliers were defined as values greater than the mean + 3 standard deviations. Consequently, 92 (1.353%) and 106 (1.559%) outliers were excluded from the visual search and localization tasks, respectively.
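The error measure and the outlier rule can be sketched as follows. Two points are assumptions rather than statements of the original procedure. First, the localization error is taken as the great-circle angle between the guided and localized directions, with longitude positive to the right and latitude positive upward. Second, the mean + 3 SD cutoff is applied to whatever group of values is passed in, because the text does not specify whether it was computed per participant, per condition, or overall.

```python
import math
import statistics

def direction_vector(lon_deg, lat_deg):
    """Unit vector for a direction (assumed axes: z forward, x right, y up)."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))

def angular_error_deg(guided, localized):
    """Great-circle angle in degrees between two (longitude, latitude) directions."""
    u, v = direction_vector(*guided), direction_vector(*localized)
    dot = sum(a * b for a, b in zip(u, v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))

def drop_upper_outliers(values, n_sd=3.0):
    """Keep values not exceeding mean + n_sd * sample SD (upper tail only)."""
    threshold = statistics.mean(values) + n_sd * statistics.stdev(values)
    return [v for v in values if v <= threshold]

print(angular_error_deg((0.0, 0.0), (90.0, 0.0)))  # guided ahead, localized to the right
print(drop_upper_outliers([1.0] * 30 + [100.0])[-1])
```

Measuring error on the sphere rather than as separate longitude and latitude differences avoids inflated longitude errors near the poles, where lines of longitude converge.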

3 Results

3.1 Visual search performance

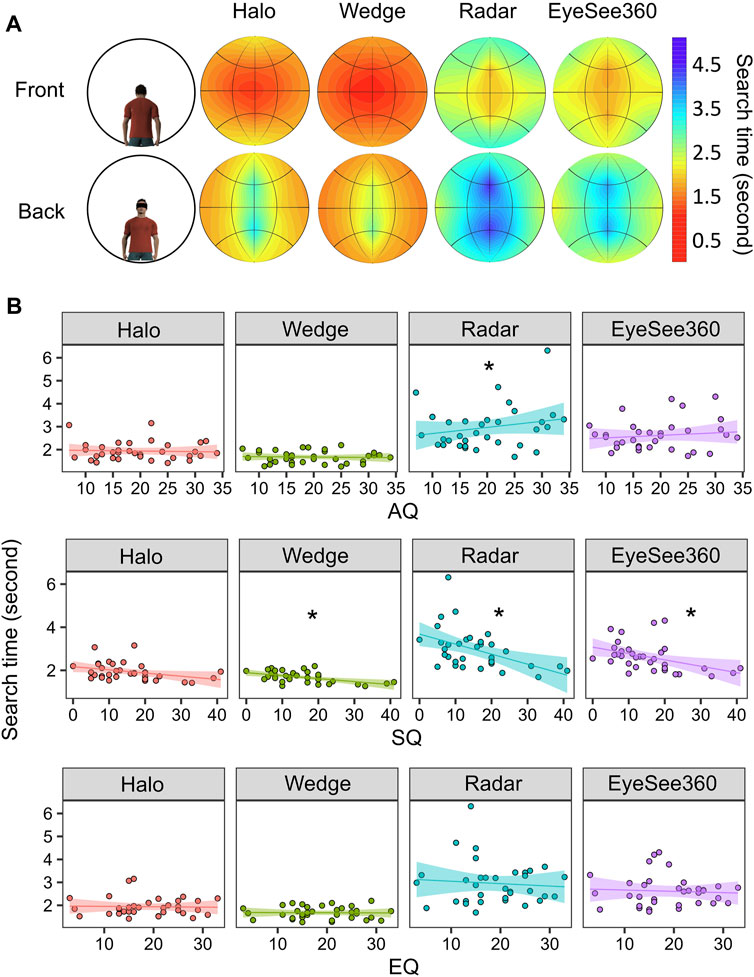

Figure 2A shows the mean search times. In the heatmaps, target longitude and target latitude are plotted on the x- and y-axes, and mean search time on the z-axis. Results of the two-way repeated-measures ANOVA with the factor of visual guidance type are shown in Supplementary Material S1. Figure 2B shows search time as a function of AQ, SQ, and EQ scores. To clarify the effect of ASD-related traits on visual search performance, a multiple regression analysis was conducted for each guidance type with AQ, SQ, and EQ scores as predictors of search time. The regression models were significant for the Wedge (F = 3.590, p = .025, adjusted R² = .191), Radar (F = 5.618, p = .004, adjusted R² = .296), and EyeSee360 conditions (F = 3.156, p = .039, adjusted R² = .164) but not for the Halo condition (F = 1.459, p = .246, adjusted R² = .040). In the Wedge condition, search times decreased as SQ scores increased (t = −3.251, p = .003, β = −.531), whereas AQ and EQ scores had no significant effect (|t|s < 0.504, ps > .618, βs < .093). In the Radar condition, search times decreased as SQ scores increased (t = −3.829, p < .001, β = −.583) but increased with AQ scores (t = 2.387, p = .023, β = .413); EQ scores had no significant effect (t = 0.671, p = .508, β = .112). In the EyeSee360 condition, search times decreased as SQ scores increased (t = −2.979, p = .006, β = −.494), whereas AQ and EQ scores had no significant effect (|t|s < 1.471, ps > .152, βs < .277). These results indicate that a stronger systemizing cognitive style was associated with better search performance.
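The standardized coefficients (β) above come from multiple regressions run in JASP. As a rough illustration of what β means here, the sketch below z-scores the outcome and the three predictors and solves the ordinary least-squares normal equations, returning β, R², adjusted R², and the overall F. It is not JASP’s implementation, t statistics and p values are omitted, and the example values are invented.

```python
import statistics

def zscores(xs):
    m, s = statistics.mean(xs), statistics.stdev(xs)
    return [(x - m) / s for x in xs]

def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]

def standardized_regression(predictors, outcome):
    """OLS on z-scored variables; returns betas, R^2, adjusted R^2 and F."""
    names = list(predictors)
    zx = [zscores(predictors[name]) for name in names]
    zy = zscores(outcome)
    n, k = len(zy), len(names)
    # Normal equations X'X b = X'y; no intercept is needed after z-scoring.
    xtx = [[sum(p * q for p, q in zip(zx[i], zx[j])) for j in range(k)] for i in range(k)]
    xty = [sum(p * y for p, y in zip(zx[i], zy)) for i in range(k)]
    betas = solve_linear(xtx, xty)
    fitted = [sum(b * zx[j][t] for j, b in enumerate(betas)) for t in range(n)]
    sse = sum((y - f) ** 2 for y, f in zip(zy, fitted))
    r2 = 1.0 - sse / (n - 1)  # total sum of squares of z-scores is n - 1
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)
    f_stat = float("inf") if r2 >= 1.0 else (r2 / k) / ((1.0 - r2) / (n - k - 1))
    return dict(zip(names, betas)), r2, adj_r2, f_stat

# Invented scores for six participants (not the study's data).
traits = {"AQ": [2.0, 1.0, 4.0, 3.0, 6.0, 5.0],
          "SQ": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
          "EQ": [1.0, 3.0, 2.0, 5.0, 4.0, 6.0]}
search_time = [3.1, 1.9, 4.2, 4.8, 6.3, 5.4]
print(standardized_regression(traits, search_time))
```

Because every variable is z-scored, each β is expressed in standard-deviation units, which is why the coefficients for AQ, SQ, and EQ can be compared directly.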

FIGURE 2. The visual search performance. (A) Mean search times. The colors represent the mean search times. (B) Effect of ASD-related traits on search times. The colored areas around the lines represent standard errors. The asterisks represent significant effects of autistic traits and systemizing or empathizing cognitive styles (p < .05).

3.2 Spatial localization performance

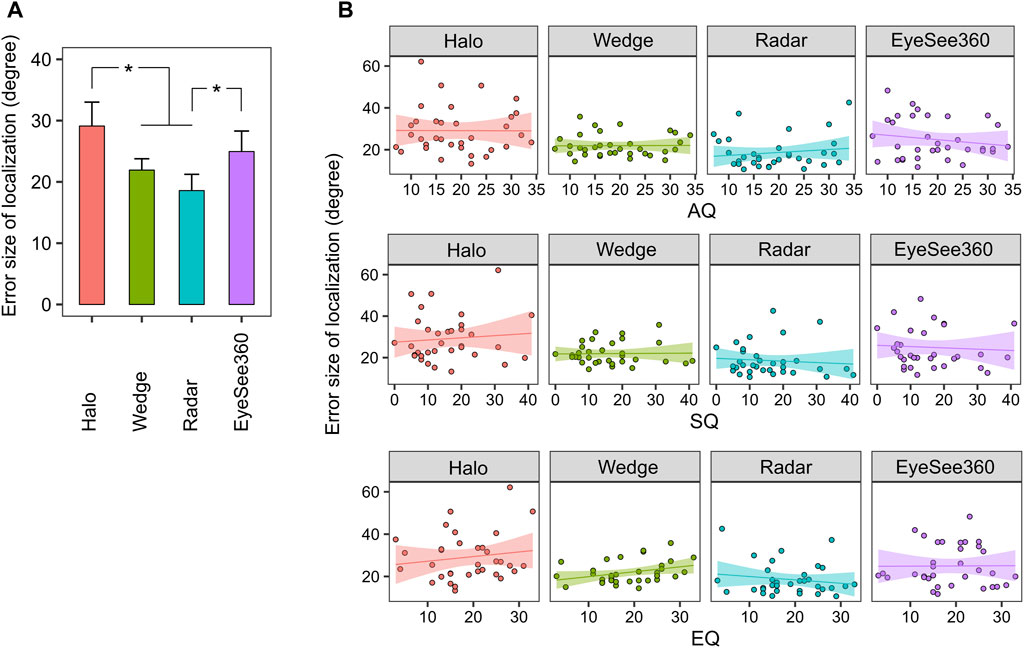

3.2.1 Error of localization

Figure 3A shows the mean localization error. Results of the one-way repeated-measures ANOVA are shown in Supplementary Material S2. Figure 3B shows localization error as a function of AQ, SQ, and EQ scores. To examine the effect of ASD-related traits on localization performance, a multiple regression analysis was conducted for each guidance type with AQ, SQ, and EQ scores as predictors of error size. None of the models were significant (Fs < 1.929, ps > .146, adjusted R²s < .078), suggesting that neither autistic traits nor systemizing or empathizing cognitive styles were related to localization performance.
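With n = 34 participants and k = 3 predictors (AQ, SQ, and EQ), an overall F value determines the adjusted R², because R² = kF / (kF + n − k − 1). This gives a quick consistency check on the F and adjusted R² pairs reported in the Results. The helper name is illustrative.

```python
def adjusted_r2_from_f(f_stat, n=34, k=3):
    """Adjusted R^2 implied by an overall regression F(k, n - k - 1).

    Combines R^2 = kF / (kF + n - k - 1) with
    adjusted R^2 = 1 - (1 - R^2)(n - 1) / (n - k - 1).
    """
    return 1.0 - (n - 1) / (k * f_stat + n - k - 1)

# (F, reported adjusted R^2) pairs from the Results section.
REPORTED = {3.590: 0.191, 5.618: 0.296, 3.156: 0.164, 1.459: 0.040, 4.277: 0.230}
for f_value, reported_adj in REPORTED.items():
    implied = adjusted_r2_from_f(f_value)
    print(f"F = {f_value:.3f}: implied {implied:.3f}, reported {reported_adj:.3f}")
```

Each implied value rounds to the reported one, and the same relation links the bounds F < 1.929 and adjusted R² < .078 above.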

FIGURE 3. The localization performance. (A) Mean error size of localization. The error bars represent 95% confidence intervals. Asterisks represent significant differences (p < .05). (B) Effect of ASD-related traits on error size. The colored areas around the lines represent standard errors.

3.2.2 Longitudinal and latitudinal biases of localization

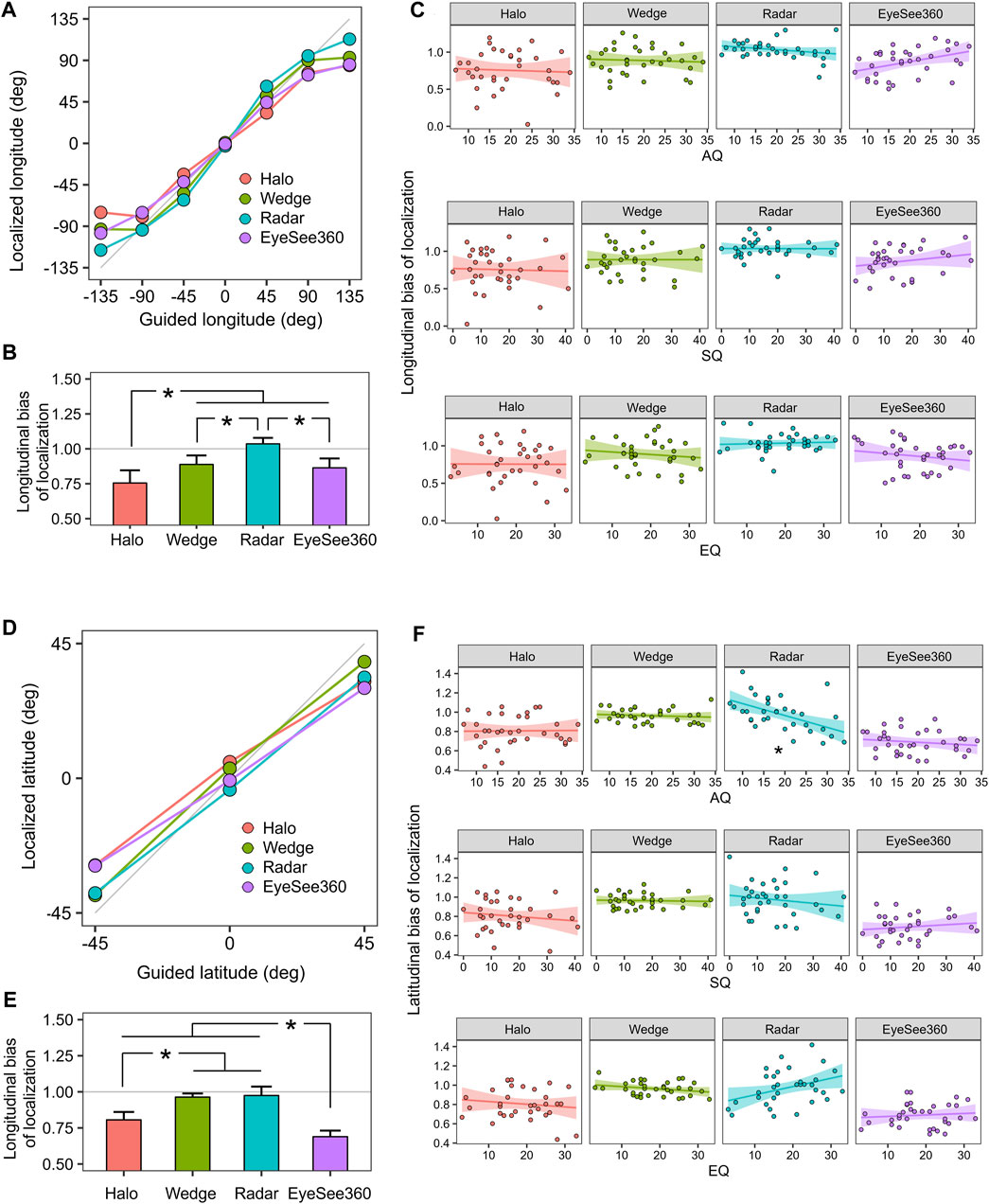

We also analyzed localization biases in the longitudinal and latitudinal directions. Data from the latitude 90° and −90° conditions (i.e., directions guided directly above and below the participants) were excluded. Figure 4A shows the mean localized longitude as a function of guided longitude. To quantify how guided longitude influenced localized longitude, we calculated a regression coefficient for each participant and guidance type. This coefficient indexed the longitudinal bias of localization: values less than 1 indicated a bias toward the front, and values greater than 1 indicated a bias toward the back. Figure 4B shows the mean regression coefficients. Results of the one-way repeated-measures ANOVA are shown in Supplementary Material S3. Figure 4C shows the longitudinal bias as a function of AQ, SQ, and EQ scores. To examine the effect of ASD-related traits, a multiple regression analysis was conducted for each guidance type with AQ, SQ, and EQ scores as predictors. None of the models were significant (Fs < 2.098, ps > .121, adjusted R²s < .091).
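Each participant’s bias index is the slope of a simple least-squares regression of localized on guided longitude; the same computation applies to latitude. The minimal sketch below assumes that longitudes are signed degrees relative to the initial forward direction and uses the tuple layout shown. The function names are hypothetical.

```python
def ols_slope(x, y):
    """Least-squares slope of y on x (an intercept is fitted but not returned)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def longitudinal_bias(trials):
    """Slope of localized on guided longitude for one participant and guidance type.

    trials: (guided_lon, guided_lat, localized_lon) tuples in degrees.
    Trials guided straight up or down (latitude +/-90) are dropped, as in the text.
    """
    kept = [(g_lon, l_lon) for g_lon, g_lat, l_lon in trials if abs(g_lat) != 90]
    return ols_slope([g for g, _ in kept], [l for _, l in kept])

# Invented participant who compresses every response toward the front by 20%.
trials = [(g, 0.0, 0.8 * g) for g in (-135.0, -90.0, -45.0, 0.0, 45.0, 90.0, 135.0)]
trials += [(0.0, 90.0, 170.0), (0.0, -90.0, -170.0)]  # excluded polar trials
print(longitudinal_bias(trials))  # slope below 1: bias toward the front
```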

FIGURE 4. Localization biases for the longitudinal and latitudinal directions. (A) Mean localized longitude as a function of guided longitude. The gray line represents non-biased localization. A smaller slope indicates a longitudinal bias toward the front. (B) Mean longitudinal bias of localization. The error bars represent 95% confidence intervals. The asterisks represent significant differences (p < .05). (C) Effect of ASD-related traits on the longitudinal bias of localization. The colored areas around the lines represent standard errors. (D) Mean localized latitude as a function of guided latitude. (E) Mean latitudinal bias of localization. (F) Effect of ASD-related traits on the latitudinal bias of localization. The asterisk represents a significant effect of ASD-related traits on latitudinal bias (p < .05).

Figure 4D shows the mean localized latitude as a function of guided latitude. To quantify the latitudinal bias of localization, we calculated a regression coefficient for each participant and guidance type. This coefficient indexed the latitudinal bias: values less than 1 indicated a bias toward the front, and values greater than 1 indicated a bias toward the back. Figure 4E shows the mean regression coefficients. Results of the one-way ANOVA are shown in Supplementary Material S4. Figure 4F shows the latitudinal bias as a function of AQ, SQ, and EQ scores. To examine the effect of ASD-related traits, a multiple regression analysis was conducted for each guidance type with AQ, SQ, and EQ scores as predictors. The model was significant for the Radar condition (F = 4.277, p = .013, adjusted R² = .230) but not for the other conditions (Fs < 1.912, ps > .149, adjusted R²s < .077). In the Radar condition, the frontward latitudinal bias increased with AQ score (t = 2.387, p = .023, β = −.448) but did not change with SQ or EQ scores (|t|s < 0.705, ps > .486, |β|s < .130).

4 Discussion

This pilot study examined how the effectiveness of immersive visual guidance changes according to ASD-related traits. Typically developing participants completed visual search and localization tasks while using visual guidance. Visual search performance improved as participants’ systemizing scores increased, whereas localization performance was largely unrelated to ASD-related traits. These results suggest that immersive visual guidance is useful for assisting visual search in individuals with a systemizing cognitive style.

4.1 Effect of ASD-related traits on visual search performance

Our results indicated that visual search performance was positively correlated with systemizing cognitive style. This finding is consistent with previous studies reporting that systemizing cognition is associated with better performance on spatial cognition tasks such as mental rotation tests (Cook and Saucier, 2010) and Gottschaldt’s hidden figure test (Conson et al., 2020). Conversely, visual search performance was negatively correlated with autistic traits when Radar guidance was used. This discrepancy between the effects of autistic traits and systemizing may stem from the diversity of cognitive performance across the autism spectrum: among individuals with high autistic traits, those with stronger systemizing cognition may use the Radar effectively, whereas those with weaker systemizing cognition may not. Some studies have proposed that individuals with ASD strongly prefer processing individual pieces of information (Mottron et al., 2006) and have difficulty integrating them into global information (Frith and Happé, 1994). The Radar required users to integrate the cube, the elliptical disk, the crossed lines, and the HMD’s rotation to recognize the target direction. Therefore, individuals with weaker systemizing cognition may have had difficulty using the Radar.

4.2 Effect of ASD-related traits on localization performance

Unlike visual search performance, localization performance while using visual guidance was not correlated with autistic traits or with systemizing and empathizing cognitive styles, except for the latitudinal bias of localization in the Radar condition. Few studies have investigated localization performance in individuals with ASD. Visser et al. (2013) conducted an experiment in which participants heard a sound presented from a speaker around them and localized its source. Individuals with ASD showed a strong impairment in latitudinal (vertical) localization but not in horizontal localization. In contrast, we observed no such strong impairment. This inconsistency may stem from differences in processing between visual and auditory localization: Visser et al. attributed the impaired vertical localization in individuals with ASD to abnormal connectivity within the cochlear and inferior colliculi, which influences the detection of sound elevation (Zwiers et al., 2014). Therefore, how ASD-related traits relate to visual localization remains unclear and warrants future research.

4.3 Conclusion

We examined how the effectiveness of visual guidance changes with ASD-related traits. When visual guidance was used, visual search performance was positively correlated with systemizing cognition, whereas localization performance showed minimal correlation with ASD-related traits. Hence, visual guidance may be effective for improving spatial cognition in individuals with higher ASD-related traits.

Data availability statement

The datasets presented in this study can be found in an online repository: https://osf.io/ek467/.

Ethics statement

The studies involving humans were approved by Ethics Committees of National Rehabilitation Center for Persons with Disabilities and Kyoto University of Advanced Science. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

YH: Conceptualization, Data curation, Formal Analysis, Funding acquisition, Investigation, Methodology, Project administration, Visualization, Writing–original draft, Writing–review and editing. MW: Conceptualization, Funding acquisition, Investigation, Methodology, Resources, Supervision, Writing–review and editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was partially supported by the Japan Society for the Promotion of Science KAKENHI (grant numbers 20K19855, 21H05053, and 23K12937) for the equipment, payment to participants, and publication fees.

Acknowledgments

We thank Naomi Ishii for her assistance with the experimental settings and helpful discussions. Furthermore, we thank Prof. Akio Wakabayashi for use of the AQ, SQ, and EQ scales, and Dr. Reiko Fukatsu and Dr. Yuko Seko for their continuous encouragement.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1291516/full#supplementary-material

References

American Psychiatric Association (2013). Diagnostic and statistical manual of mental disorders. 5th ed. Arlington, VA: American Psychiatric Publishing.

Baron-Cohen, S., Richler, J., Bisarya, D., Gurunathan, N., and Wheelwright, S. (2003). The systemizing quotient: an investigation of adults with Asperger syndrome or high-functioning autism, and normal sex differences. Philos. Trans. R. Soc. Lond. B Biol. Sci. 358, 361–374. doi:10.1098/rstb.2002.1206

Baron-Cohen, S., and Wheelwright, S. (2004). The empathy quotient: an investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J. Autism Dev. Disord. 34, 163–175. doi:10.1023/b:jadd.0000022607.19833.00

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., and Clubley, E. (2001). The autism-spectrum quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 31, 5–17. doi:10.1023/a:1005653411471

Bork, F., Schnelzer, C., Ulrich, E., and Navab, N. (2018). Towards efficient visual guidance in limited field-of-view head-mounted displays. IEEE Trans. Vis. Comput. Graph. 24, 2983–2992. doi:10.1109/tvcg.2018.2868584

Conson, M., Senese, V. P., Baiano, C., Zappullo, I., Warrier, V., UNICAMPSY17 group, et al. (2020). The effects of autistic traits and academic degree on visuospatial abilities. Cogn. Process. 21, 127–140. doi:10.1007/s10339-019-00941-y

Cook, C. M., and Saucier, D. M. (2010). Mental rotation, targeting ability and Baron-Cohen’s empathizing-systemizing theory of sex differences. Pers. Individ. Dif. 49, 712–716. doi:10.1016/j.paid.2010.06.010

Franconeri, S. L., and Simons, D. J. (2003). Moving and looming stimuli capture attention. Percept. Psychophys. 65, 999–1010. doi:10.3758/bf03194829

Frith, U., and Happé, F. (1994). Autism: beyond “theory of mind”. Cognition 50, 115–132. doi:10.1016/0010-0277(94)90024-8

Gruenefeld, U. (2017). OutOfView. Available from: https://github.com/UweGruenefeld/OutOfView.

Gruenefeld, U., Ali, A. E., Boll, S., and Heuten, W. (2018a). “Beyond Halo and Wedge: visualizing out-of-view objects on head-mounted virtual and augmented reality devices,” in Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services, 40, Oldenburg, Germany, October 5–8, 2018, 1–11.

Gruenefeld, U., Koethe, I., Lange, D., Weiß, S., and Heuten, W. (2019). “Comparing techniques for visualizing moving out-of-view objects in head-mounted virtual reality,” in Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, March 23–27, 2019, 742–746.

Gruenefeld, U., Lange, D., Hammer, L., Boll, S., and Heuten, W. (2018b). “FlyingARrow: pointing towards out-of-view objects on augmented reality devices,” in Proceedings of the 7th ACM International Symposium on Pervasive Displays, 20, Munich, Germany, June 6–8, 2018, 1–6.

Harada, Y., and Ohyama, J. (2021). Quantitative evaluation of visual guidance effects for 360-degree directions. Virtual Real. 26, 759–770. doi:10.1007/s10055-021-00574-7

Harmsen, I. E. (2019). Empathy in autism spectrum disorder. J. Autism Dev. Disord. 49, 3939–3955. doi:10.1007/s10803-019-04087-w

Haswell, C., Izawa, J., Dowell, L. R., Mostofsky, S. H., and Shadmehr, R. (2009). Representation of internal models of action in the autistic brain. Nat. Neurosci. 12, 970–972. doi:10.1038/nn.2356

Henderson, J. M., and Macquistan, A. D. (1993). The spatial distribution of attention following an exogenous cue. Percept. Psychophys. 53, 221–230. doi:10.3758/bf03211732

Ip, H. H. S., Wong, S. W. L., Chan, D. F. Y., Byrne, J., Li, C., Yuan, V. S. N., et al. (2018). Enhance emotional and social adaptation skills for children with autism spectrum disorder: a virtual reality enabled approach. Comput. Educ. 117, 1–15. doi:10.1016/j.compedu.2017.09.010

Kaldy, Z., Giserman, I., Carter, A. S., and Blaser, E. (2016). The mechanisms underlying the ASD advantage in visual search. J. Autism Dev. Disord. 46, 1513–1527. doi:10.1007/s10803-013-1957-x

Keehn, B., Müller, R.-A., and Townsend, J. (2013). Atypical attentional networks and the emergence of autism. Neurosci. Biobehav. Rev. 37, 164–183. doi:10.1016/j.neubiorev.2012.11.014

Kumazaki, H., Yoshikawa, Y., Muramatsu, T., Haraguchi, H., Fujisato, H., Sakai, K., et al. (2021). Group-based online job interview training program using virtual robot for individuals with autism spectrum disorders. Front. Psychiatry 12, 704564. doi:10.3389/fpsyt.2021.704564

Li, C., Belter, M., Liu, J., and Lukosch, H. (2023). Immersive virtual reality enabled interventions for autism spectrum disorder: a systematic review and meta-analysis. Electronics 12, 2497. doi:10.3390/electronics12112497

Lorenzo, G., Pomares, J., and Lledó, A. (2013). Inclusion of immersive virtual learning environments and visual control systems to support the learning of students with Asperger syndrome. Comput. Educ. 62, 88–101. doi:10.1016/j.compedu.2012.10.028

McCleery, J. P., Zitter, A., Solórzano, R., Turnacioglu, S., Miller, J. S., Ravindran, V., et al. (2020). Safety and feasibility of an immersive virtual reality intervention program for teaching police interaction skills to adolescents and adults with autism. Autism Res. 13, 1418–1424. doi:10.1002/aur.2352

Mottron, L., Dawson, M., Soulières, I., Hubert, B., and Burack, J. (2006). Enhanced perceptual functioning in autism: an update, and eight principles of autistic perception. J. Autism Dev. Disord. 36, 27–43. doi:10.1007/s10803-005-0040-7

Muth, A., Hönekopp, J., and Falter, C. M. (2014). Visuo-spatial performance in autism: a meta-analysis. J. Autism Dev. Disord. 44, 3245–3263. doi:10.1007/s10803-014-2188-5

O’Riordan, M. A. (2004). Superior visual search in adults with autism. Autism 8, 229–248. doi:10.1177/1362361304045219

Visser, E., Zwiers, M. P., Kan, C. C., Hoekstra, L., van Opstal, J., and Buitelaar, J. K. (2013). Atypical vertical sound localization and sound-onset sensitivity in people with autism spectrum disorders. J. Psychiatry Neurosci. 38, 398–406. doi:10.1503/jpn.120177

Wada, M., Suzuki, M., Takaki, A., Miyao, M., Spence, C., and Kansaku, K. (2014). Spatio-temporal processing of tactile stimuli in autistic children. Sci. Rep. 4, 5985. doi:10.1038/srep05985

Wakabayashi, A., Baron-Cohen, S., and Wheelwright, S. (2004). The autism-spectrum quotient (AQ) Japanese version: evidence from high-functioning clinical group and normal adults. Jpn. J. Psychol. 75, 78–84. doi:10.4992/jjpsy.75.78

Wakabayashi, A., Baron-Cohen, S., and Wheelwright, S. (2006). Individual and gender differences in empathizing and systemizing: measurement of individual differences by the empathy quotient (EQ) and the systemizing quotient (SQ). Jpn. J. Psychol. 77, 271–277. doi:10.4992/jjpsy.77.271

Keywords: autistic trait, systemizing cognition, empathizing cognition, spatial cognition, immersive visual guidance, visual search, spatial localization

Citation: Harada Y and Wada M (2023) Autism-related traits are related to effectiveness of immersive visual guidance on spatial cognitive ability: a pilot study. Front. Virtual Real. 4:1291516. doi: 10.3389/frvir.2023.1291516

Received: 09 September 2023; Accepted: 31 October 2023;

Published: 30 November 2023.

Edited by:

Jiayan Zhao, Wageningen University and Research, Netherlands

Reviewed by:

Matthew Coxon, York St John University, United Kingdom

Stefan Marks, Auckland University of Technology, New Zealand

Copyright © 2023 Harada and Wada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuki Harada
