
ORIGINAL RESEARCH article

Front. Virtual Real., 08 January 2024

Sec. Virtual Reality in Medicine

Volume 4 - 2023 | https://doi.org/10.3389/frvir.2023.1244096

This article is part of the Research Topic Redefining The Pedagogy in Virtual and Augmented Reality in Medical Science Education.

While mastery of neuroanatomy is important for investigating the brain, there is increasing interest in exploring neural pathways to better understand the roles of neural circuitry in brain function. To tackle the limitations of traditional 2D-display-based neuronavigation software in intuitively visualizing complex 3D anatomy, several virtual reality (VR) and augmented reality (AR) solutions have been proposed to facilitate neuroanatomical education. However, despite growing knowledge of brain connectivity and the functioning of brain sub-systems, similar software solutions for teaching and exploring these topics, which demand more elaborate visualization and interaction strategies, are still lacking. To address this gap, we designed the immerSive custOmizable Neuro learnIng plAtform (SONIA), a novel, user-friendly VR software system with a multi-scale interaction paradigm that allows flexible customization of learning materials. Quantitative and qualitative evaluations in a user study showed that the proposed system has high usability, attractive visual design, and good educational value. As the first immersive system to integrate customizable design and detailed narratives of brain sub-systems for teaching neuroanatomy and brain connectivity, SONIA points to new directions and provides valuable insights for medical learning and exploration in VR.

The human brain is a highly complex organ consisting of small anatomical structures that are tightly packed and interconnected through different pathways. Because of the limitations of traditional media (e.g., paper and 2D screens), volumetric data are often sliced into 2D representations to aid spatial understanding and exploration of the brain's 3D anatomy. However, such slices often fail to reflect the complex geometry and spatial arrangement of the anatomical structures (Ekstrand et al., 2018; Xiao et al., 2018). With the advancement of modern bioimaging techniques, the exploration of functional and structural brain connectivity is gaining interest. Intuitive demonstration of brain connectivity alongside the associated neuroanatomy, together with the insights gained from various studies, will be instrumental to the education and further exploration of neuroscience (Petersen et al., 2019). So far, a number of augmented reality (AR) and virtual reality (VR) solutions (Fiani et al., 2020; Hellum et al., 2022) have been proposed to provide more intuitive visualization and understanding of neuroanatomy for educational and surgical planning purposes, with positive responses from user studies. These solutions have employed a range of display devices, including mobile devices (e.g., tablets and smartphones), VR headsets, and the HoloLens. Whereas most of these systems focus on anatomy, only a few AR/VR systems (Karmonik et al., 2018; Keiriz et al., 2018; Petersen et al., 2019; Schloss et al., 2021) have been proposed to visualize and demonstrate neural pathways and brain networks. Keiriz et al. (2018) proposed NeuroCave, a web-based immersive platform for exploring connectomic data. Later, workflows that leverage existing software solutions to visualize brain tractograms and functional connectivity were demonstrated (Karmonik et al., 2018; Petersen et al., 2019). More recently, Schloss et al. (2021) built a VR application to visualize the information pathways of the visual and auditory systems for educational purposes. While existing solutions tackle the challenges of spatially understanding 3D anatomy through visualization, very few have experimented with new user interaction paradigms, which could enhance usability and the learning experience (Hellum et al., 2023a). In addition, among the limited efforts to visualize brain networks (Karmonik et al., 2018; Keiriz et al., 2018; Petersen et al., 2019; Schloss et al., 2021), none has attempted to incorporate descriptive insights into pathway exploration or learning-module design.

To meet the emerging need for the education, demonstration, and investigation of brain connectivity and to promote related neuroscientific insights, we propose the immerSive custOmizable Neuro learnIng plAtform (SONIA), which provides interactive visualization and learning modules for both neuroanatomy and the associated structural and functional networks. The new VR system has several novel features. First, inspired by VR-based navigation of geographic data (Piumsomboon et al., 2018; Huang and Chen, 2019), we experimented with a multi-scale interaction paradigm that places the user at the centre of a large, expanded brain while they manipulate a small brain model, to facilitate spatial understanding of brain anatomy. Second, we designed a progression-based strategy with completion metrics and multimedia interactions, including visual guidance and audio voice-over, to provide a stimulating and enriching user experience. Finally, the system's customizable design, which incorporates detailed narratives of brain sub-systems, opens the door for future projects by allowing many different types of content to be visualized and explored within the proposed software framework. To demonstrate the proposed system, we created an interactive visualization of the work of Xie et al. (2021) on the functional systems and brain network of anxiety. Quantitative and qualitative user assessments indicated that the system had excellent usability, visual design, and educational value. Thus, used in conjunction with conventional learning materials composed of 2D graphic representations, our novel, customizable, and intuitive VR system shows significant promise for the education and exploration of neuroanatomy and neural pathways. The code of the SONIA system is publicly available at https://github.com/HealthX-Lab/SONIA.

To demonstrate the proposed VR system, we used the anxiety-relevant functional brain network summarized in a recent review by Xie et al. (2021). The network involves six key anatomical structures (the amygdala, hippocampus, striatum, medial prefrontal cortex (mPFC), hypothalamus, and bed nucleus of the stria terminalis (BNST)) and is illustrated in Figure 1 of Xie et al. (2021). Briefly, Xie et al. (2021) summarized five subsystems that regulate anxiety (cognitive control, fear conditioning, uncertainty anticipation, motivation processing, and stress regulation), each made up of pathways between two or three anatomical structures. For our system, we constructed the virtual brain model from the AAL116 brain atlas (Tzourio-Mazoyer et al., 2002), which is widely used in neuroimaging research. Five of the six key structures involved in the anxiety-related functional systems were extracted from the atlas. As the AAL116 atlas does not contain the BNST, it was segmented manually according to Theiss et al. (2017) on the MNI152 brain template (Fonov et al., 2011), which is in the same space as the AAL116 atlas. All atlas structures were converted from the discrete labels in the NIfTI images to .fbx mesh models for use in the 3D VR environment. In the virtual brain models, only these six structures are highlighted, while the remaining structures are rendered semi-transparent to provide additional visual references that enhance spatial understanding of the anatomy and enrich the final rendering. Finally, opaque lines were added between the six key structures, and semi-transparent lines between the remaining AAL116 structures, to indicate the functional and anatomical connectivity between them.

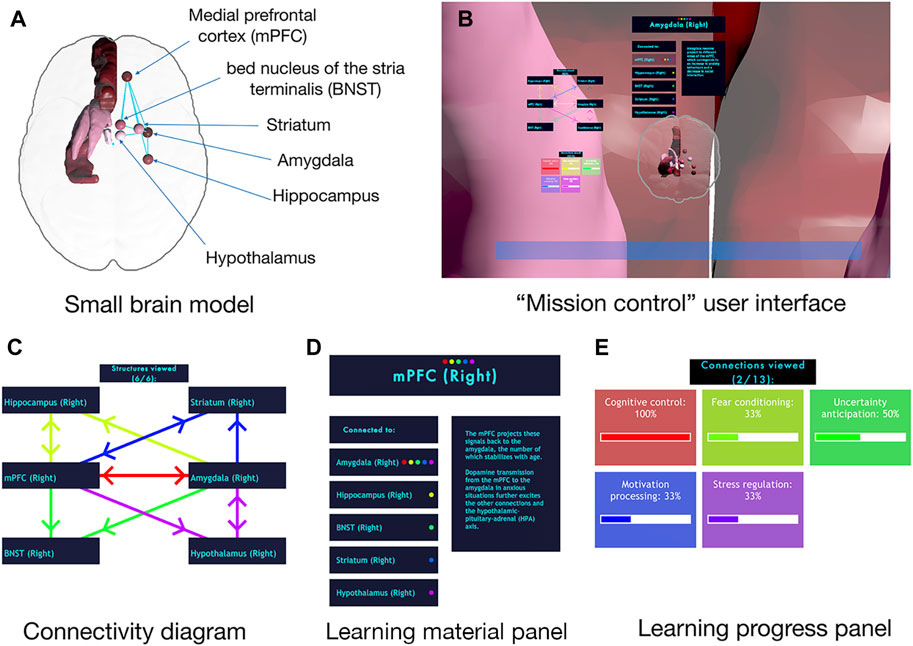

FIGURE 1. Overview of the virtual reality environment set-up. (A) The spatial relationship between the “large brain” environment and the “mission control” platform; (B) The layout of the user interface, which consists of three information panels and a small brain model that allows the user to interact with the “large brain” environment and complete the learning modules.

For the virtual environment, we explored a multi-scale visualization paradigm with two brain models of different sizes to facilitate interaction, visualization, and spatial understanding of the neuroanatomy. Multi-scale VR interaction was recently suggested for the exploration and navigation of geographic data to facilitate the understanding of spatial arrangement (Piumsomboon et al., 2018; Huang and Chen, 2019). For anatomical navigation, we expected that this approach would also benefit the spatial understanding of the neuroanatomy, as well as provide an enriching, fun, and immersive experience for the user.

In the VR environment, the user is positioned on a “mission control” platform suspended at the center of a magnified brain model the size of a large house (hereafter referred to as the large brain), which is out of the user's reach but still allows clear recognition of the spatial arrangement of the anatomical structures. At the same time, a smaller forward-facing brain model (the small brain) that mirrors the large brain is placed in front of the user to allow interaction with the learning modules and the large brain model. Alongside the small brain, three floating information panels display the schematic diagram of anxiety-related functional subsystems, descriptions of all brain connections, and the percentage of learning content completed for each functional subsystem. The schematic of the VR environment is shown in Figure 1, and the details of each visual element on the “mission control” platform are illustrated in Figure 2. Unlike the magnified brain, the small brain displays the geometries of the six key anatomical structures in different shades of red on the left hemisphere, while the right hemisphere depicts a graph representation in which each region is a color-coded sphere located at the regional centroid and connectivity is denoted by connecting lines. This node-based representation clearly visualizes the connectivity relationships between the structures and makes each structure easier to select, allowing the small brain model to serve as the main medium for interacting with the other visual elements in the virtual environment. Corresponding to the right hemisphere of the small brain model, lines connecting the centroids of the key structures are also shown on the same side of the magnified brain.
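To make the centroid placement concrete, the following minimal Python sketch computes a centroid for each label of a discrete atlas volume and maps it to world coordinates. It is an illustration under stated assumptions, not SONIA's code (SONIA is a Unity application): it assumes the label image has already been read (e.g., from a NIfTI file) into a nested list indexed `volume[i][j][k]`, with 0 as background, together with a 4×4 voxel-to-world affine. The function name `label_centroids` is hypothetical.

```python
from collections import defaultdict


def label_centroids(volume, affine):
    """Return {label: (x, y, z)} centroids in world coordinates.

    volume: nested list volume[i][j][k] of integer labels (0 = background).
    affine: 4x4 voxel-to-world matrix given as a list of lists.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts = defaultdict(int)
    for i, plane in enumerate(volume):
        for j, row in enumerate(plane):
            for k, label in enumerate(row):
                if label == 0:
                    continue  # skip background voxels
                s = sums[label]
                s[0] += i
                s[1] += j
                s[2] += k
                counts[label] += 1
    centroids = {}
    for label, (si, sj, sk) in sums.items():
        n = counts[label]
        v = (si / n, sj / n, sk / n, 1.0)  # mean voxel index, homogeneous
        # Map the voxel-space centroid to world space with the affine.
        centroids[label] = tuple(
            sum(affine[r][c] * v[c] for c in range(4)) for r in range(3)
        )
    return centroids
```

Because the affine is linear, averaging voxel indices first and transforming once gives the same result as transforming every voxel and then averaging.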

FIGURE 2. Detailed demonstration of the user interface of SONIA. (A) Composition of the small brain model that allows interaction and visualization of brain anatomy and connectivity; (B) Inside view of the “mission control” platform; (C) Schematic diagram of anxiety-related functional systems and brain structures; (D) Information panel for displaying learning materials regarding the key brain regions shown in (C); (E) Information panel that shows learning progress. Note that across (C, D, E), the same color-coding strategy is used consistently to code for different processes in the response to anxiety.

Our VR system was created using the Unity game engine (version 2021.3.2f1) with the SteamVR plugin. We used an HTC VIVE Pro Eye head-mounted display (HMD) and a Razer Blade 15 laptop (Intel Core i7 CPU, NVIDIA GeForce RTX 2070 GPU, and 16 GB RAM) to run the system. No lag or frame freezing was observed, and the system ran consistently at an average of 45–50 frames per second. Only one VR controller is required to perform target selection and confirmation.

Before understanding the subsystems and brain networks that regulate a neural process, it is important to first elucidate the spatial arrangement of the structures involved. Therefore, we designed the user workflow in SONIA in two general phases (anatomical learning and connectivity learning), both of which use a single VR controller in the dominant hand for pointing and selection. In the first phase, the user is guided to learn about the key brain structures involved in the target neural network. Upon completion, the user is guided to the second phase to explore the connections between the structures and the roles of different subsystems in a neural process, until all subsystems have been visited. At each step of the workflow, we designed user interaction strategies that make full use of the visual elements in the environment to provide a stimulating experience. In both phases, the user does not need to select structures and connections in any predefined order, so they can choose the items and knowledge points that interest them most, or those closely related to the structures they have just visited. This freedom gives users a degree of agency over their own educational experience and lets them learn at their own pace. We elaborate on the user interaction strategies and workflows for the two phases in the following sections.

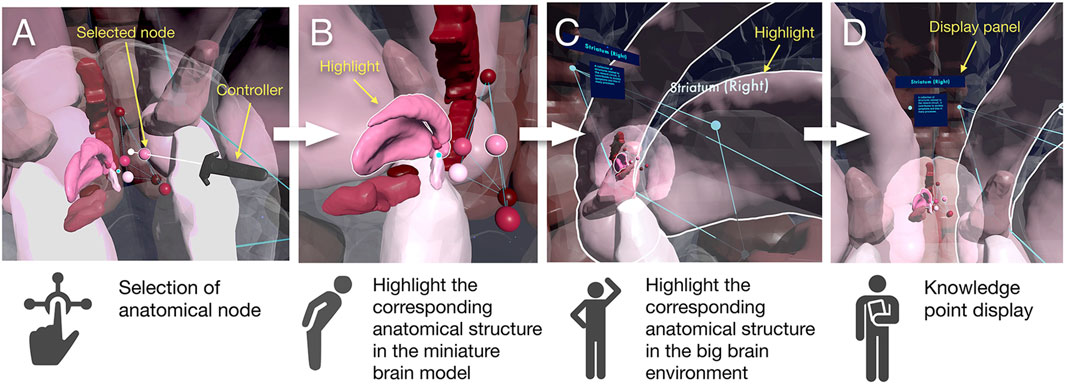

During anatomical learning, the user is tasked with visiting all key brain structures to learn their spatial arrangement and roles in the neurological system. To accomplish this, a short virtual stick with a small sphere at its tip extends from the controller and serves as the default pointing and selection tool. The workflow of this phase is illustrated in Figure 3. When the user touches a target object with the virtual stick, the object is highlighted with a white halo; when the selection is confirmed by pressing the controller's trigger button, the halo remains to indicate that the structure has been successfully selected. This interaction strategy applies only to the right hemisphere of the small brain model, where the key anatomical structures are represented as interconnected nodes to reduce visual clutter during selection. Once a node selection is confirmed, the corresponding anatomical structure, in its full geometric representation in the left hemisphere of the small brain, is highlighted with a white halo. In sync with interactions on the small brain, the same white halo indicating selection and hover-over is also shown on the corresponding structures on the right side of the large brain. This signals to the user the link between the two brain models of different scales.
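The touch-based hover test can be sketched as a sphere–sphere overlap check between the stick tip and each node sphere, followed by a trigger-press confirmation. This is a hypothetical Python illustration, not SONIA's Unity implementation; the `Node` type, the radii, and the closest-centre tie-breaking rule are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """A selectable node sphere on the small brain's right hemisphere (hypothetical)."""
    name: str
    center: tuple  # (x, y, z) in scene units
    radius: float


def hovered_node(tip, tip_radius, nodes):
    """Return the node whose sphere overlaps the stick-tip sphere, or None.

    Two spheres overlap when the distance between their centres is at most
    the sum of their radii; if several overlap, the closest centre wins.
    """
    best, best_dist = None, math.inf
    for node in nodes:
        d = math.dist(tip, node.center)
        if d <= tip_radius + node.radius and d < best_dist:
            best, best_dist = node, d
    return best


def confirm_selection(hovered, selected):
    """On trigger press, record the hovered node (if any) as selected.

    Returns True only if a new structure was added to the selected set.
    """
    if hovered is None or hovered.name in selected:
        return False
    selected.add(hovered.name)
    return True
```

Keeping hover detection separate from confirmation mirrors the two-step behaviour described above: the halo appears on contact, but the selection only persists after the trigger press.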

FIGURE 3. Workflow for anatomical learning phase. (A) the user points the controller line into a structure and presses the trigger to select it, (B) the structure becomes highlighted in the small brain, (C) the structure becomes highlighted in the large brain (background), (D) the display panel showing the name and description of the selected structure.

Two information panels are employed as the key user interface (UI) elements in anatomical learning. First, the learning material panel is positioned above the small brain to display the name and key knowledge points of the selected brain structure. Second, the connectivity diagram (Figure 2C), which shows the relationships between the anatomical structures, is placed to the left of the small brain. The diagram is empty at the beginning; each structure's item becomes visible once that structure has been visited, so the diagram is complete once every structure has been selected at least once. The connections between structures are also revealed as the item list populates.
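The two-phase progression and the gradual reveal of the connectivity diagram can be summarized as a small state object. In this hypothetical Python sketch, the rule that a diagram edge appears once both of its endpoints have been visited is our assumption about how connections are "revealed as the item list populates"; class and method names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class LearningSession:
    """Tracks progress through the anatomical and connectivity phases (hypothetical)."""
    structures: set
    connections: set  # directed (source, target) pairs
    visited: set = field(default_factory=set)
    viewed: set = field(default_factory=set)

    @property
    def phase(self):
        # Items may be visited in any order; only completeness of each
        # set matters for the phase transition.
        if not self.structures <= self.visited:
            return "anatomical"
        if not self.connections <= self.viewed:
            return "connectivity"
        return "complete"

    def visit_structure(self, name):
        if name not in self.structures:
            raise KeyError(name)
        self.visited.add(name)

    def view_connection(self, source, target):
        if self.phase == "anatomical":
            raise RuntimeError("finish the anatomical phase first")
        if (source, target) not in self.connections:
            raise KeyError((source, target))
        self.viewed.add((source, target))

    def diagram_items(self):
        """Structures and edges currently shown in the connectivity diagram."""
        edges = {(a, b) for a, b in self.connections
                 if a in self.visited and b in self.visited}
        return set(self.visited), edges
```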

Upon completing the anatomical learning module, the user proceeds to the connectivity learning phase, where all three information panels illustrated in Figures 2C–E are used together with the small and large brain models to provide an interactive learning experience. Note that among the three panels, only the one that displays the learning materials has selectable menus for direct user interaction, and its setup differs from that of the anatomical learning phase. While we retain the interaction strategy described in Section 2.4 for picking anatomical structures on the small brain, a ‘laser pointer’ now extends from the controller to select menu items in the learning material panel, as the panel is beyond arm's reach.
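Selecting a menu item with a laser pointer amounts to a ray–plane intersection followed by a bounds check on the panel rectangle. The Python sketch below illustrates that geometry under assumptions of our own (a flat rectangular panel described by its centre, unit normal, and in-plane axes; equal-height menu rows stacked from the top); it is not taken from SONIA, and all names are hypothetical.

```python
from dataclasses import dataclass


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


@dataclass
class Panel:
    """A flat, rectangular menu panel in world space (hypothetical layout)."""
    center: tuple
    normal: tuple   # unit vector facing the user
    right: tuple    # unit vector along the panel's width
    up: tuple       # unit vector along the panel's height
    half_width: float
    half_height: float


def ray_hit(panel, origin, direction, eps=1e-9):
    """Intersect a laser-pointer ray with the panel.

    Returns panel-local (u, v) coordinates of the hit, or None if the ray is
    parallel to the panel, points away from it, or misses its rectangle.
    """
    denom = _dot(direction, panel.normal)
    if abs(denom) < eps:
        return None
    t = _dot(_sub(panel.center, origin), panel.normal) / denom
    if t < 0:
        return None
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    offset = _sub(hit, panel.center)
    u, v = _dot(offset, panel.right), _dot(offset, panel.up)
    if abs(u) > panel.half_width or abs(v) > panel.half_height:
        return None
    return u, v


def menu_row(panel, v, n_items):
    """Map a local height v to a menu row index, with row 0 at the top."""
    row_height = 2 * panel.half_height / n_items
    return min(n_items - 1, int((panel.half_height - v) / row_height))
```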

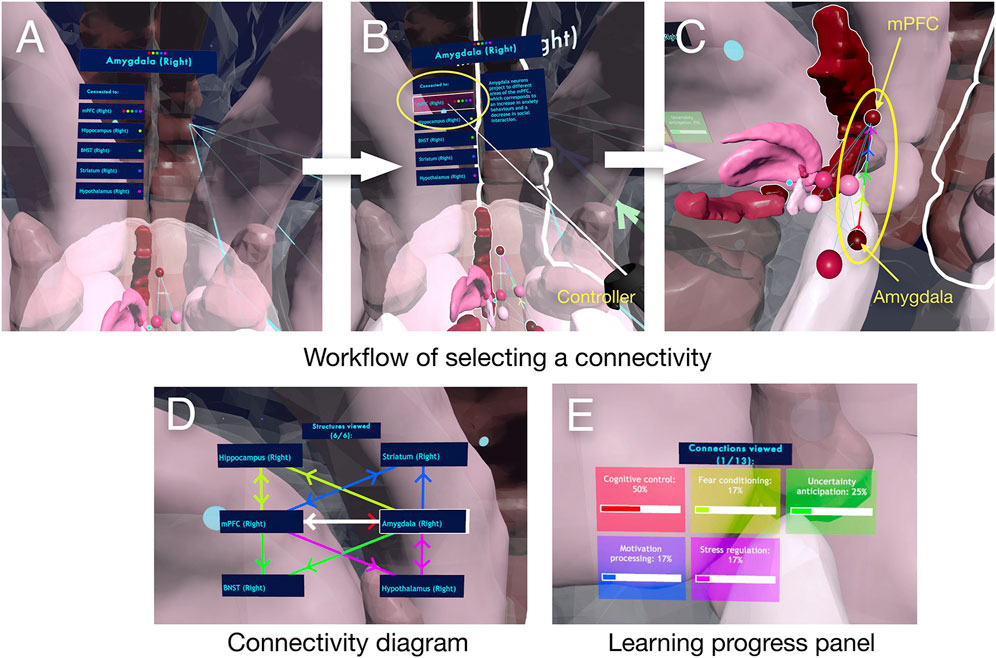

To start investigating a connection, the user first picks a brain structure on the small brain model. The name of this structure and those of the structures in the network to which it passes information are then listed in the learning material panel. Each menu item represents a unidirectional connection and is marked with small dots whose colours signify its subsystem membership. Selecting an item in the list then displays the key knowledge points describing the connection between the two structures within the brain network under study. At the same time, the connection under investigation and its directionality are annotated in both the large and small brain models using the colour coding of the subsystem(s) it belongs to, and in the connectivity diagram (Figure 2C) in white. As the user gradually explores all connections in the connectivity diagram, the learning progress panel tracks the completion of the learning materials for each subsystem with bar graphs showing the percentage of connections that have been viewed. To better demonstrate the workflow, an example of exploring the connection from the amygdala to the mPFC in SONIA is shown in Figure 4.
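The menu listing and the per-subsystem progress bars can both be derived from the connectivity data. The Python sketch below is a hypothetical illustration: it assumes a directed connectivity matrix in which `matrix[i][j] != 0` means structure i projects to structure j, and a mapping from each directed connection to the subsystem(s) it belongs to. A connection shared by two subsystems counts towards both, which is our assumption.

```python
def outgoing(structure, names, matrix):
    """Structures that `structure` projects to, assuming matrix[i][j] != 0 means i -> j."""
    i = names.index(structure)
    return [names[j] for j, w in enumerate(matrix[i]) if w and j != i]


def completion(viewed, membership):
    """Percentage of connections viewed per subsystem.

    membership maps each directed connection (source, target) to the list of
    subsystems it belongs to; a connection in two subsystems counts for both.
    """
    totals, seen = {}, {}
    for conn, subsystems in membership.items():
        for s in subsystems:
            totals[s] = totals.get(s, 0) + 1
            if conn in viewed:
                seen[s] = seen.get(s, 0) + 1
    return {s: 100.0 * seen.get(s, 0) / n for s, n in totals.items()}
```

For example, with toy data in which subsystem "A" contains two connections and only one has been viewed, `completion` reports 50.0 for "A". The subsystem labels here are placeholders, not the anxiety subsystems of Xie et al. (2021).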

FIGURE 4. Workflow of the connectivity learning phase using the connection from the amygdala to the mPFC as an example (yellow circles indicate important events). (A) Information panel showing all available brain structures that the amygdala is connected to when it is selected by the user. (B) When the mPFC is selected, the description of the connection in anxiety processing is shown. (C) the connection becomes highlighted in the small (and the large) brain. (D) connectivity diagram, with the currently selected connection highlighted in white. (E) subsystem completion diagram with percentages of completion for each subsystem for anxiety processing. Please note that all the arrows indicating the directions of the connectivity are color-coded by the corresponding subsystems.

As mentioned previously, colour coding is used extensively throughout the experience, including on all information panels, both to denote membership in a particular subsystem and to show which structures and/or connections are selected. As part of the customizable design, SONIA automatically generates visually distinct colours for each of the system's subsystems. Colour coding helps the user immediately associate connections with their subsystems. Note that white is reserved by the software to indicate that structures and/or connections have been selected.
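The text does not specify how SONIA generates its subsystem colours. One common approach, shown here as an assumption rather than SONIA's method, is to space hues evenly around the HSV colour wheel at a fixed, non-zero saturation, which also guarantees that no generated colour is pure white. The function name and default parameters are hypothetical.

```python
import colorsys


def subsystem_colours(names, saturation=0.75, value=0.95):
    """Assign each subsystem a hex colour with evenly spaced hues.

    A saturation above zero keeps every colour away from pure white,
    which is reserved for selection highlights.
    """
    n = len(names)
    colours = {}
    for i, name in enumerate(names):
        r, g, b = colorsys.hsv_to_rgb(i / n, saturation, value)
        colours[name] = "#{:02x}{:02x}{:02x}".format(
            round(r * 255), round(g * 255), round(b * 255))
    return colours
```

Evenly spaced hues work well for a handful of subsystems such as the five in the anxiety network; for many more groups, a perceptually uniform colour space would likely separate neighbouring colours better.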

To enable flexibility and adaptability for new learning materials, SONIA was designed to allow alternative datasets defining the anatomical models and the functional relationships between them to be loaded. Specifically, the following data are necessary for the system to function: a collection of 3D model files (e.g., .fbx, .obj) for the anatomical structures, a .csv spreadsheet containing the names and descriptions of the structures, and a .csv spreadsheet with the connectivity matrix between structures. Additional files are optional but can further enhance the learning experience. They include .csv files listing the subsystem names and descriptions and the membership of structures and connections in the subsystems, as well as extra 3D model files for peripheral anatomical structures and their connectivity matrix to enrich the visual content if needed. Beyond these customizable data for alternative learning modules, users are also welcome to tweak the visualization styles (e.g., colors and mesh textures) in the Unity editor. The Unity scenes, user interaction strategies, and UI displays are already programmed and remain unchanged during customization. Furthermore, to achieve optimal visualization in the information panel that displays the connectivity diagram, users are encouraged to design the layout that best suits their target application and population. By placing the required files in a specific folder and updating the editor script variables to point to the correct locations, different learning contents can be generated for either subject-specific brain models or existing brain atlases (e.g., AAL116). Even a simple set of meshes, a connectivity map, and structure descriptions suffice to produce an interactive experience. Our case study took full advantage of this setup by leveraging the AAL116 atlas.
As no frame rate loss or system errors were observed when rendering all brain parcellations of the atlas with different levels of transparency, we believe that the system scales well to complex neuroanatomical models.
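As an illustration of the two required spreadsheets, the Python sketch below loads a structure list and a connectivity matrix and checks them against each other. The column names (`name`, `description`) and the matrix layout (structure names in the first row and column; a non-zero cell in row i, column j meaning a connection from i to j) are assumptions, since the exact file formats SONIA expects are not specified here.

```python
import csv
import io


def load_structures(fh):
    """Read structure names and descriptions.

    Assumes hypothetical 'name' and 'description' header columns.
    """
    return {row["name"]: row["description"] for row in csv.DictReader(fh)}


def load_connectivity(fh, structure_names):
    """Read a square connectivity matrix into directed (source, target) pairs.

    Assumes the first row and first column hold structure names and that a
    non-zero cell in row i, column j means a connection from i to j.
    """
    rows = [r for r in csv.reader(fh) if r]
    header = rows[0][1:]
    edges = []
    for row in rows[1:]:
        source, cells = row[0], row[1:]
        if len(cells) != len(header):
            raise ValueError(
                f"row {source!r}: expected {len(header)} values, got {len(cells)}")
        edges.extend((source, target)
                     for target, cell in zip(header, cells) if float(cell) != 0)
    unknown = {name for edge in edges for name in edge} - set(structure_names)
    if unknown:
        raise ValueError(f"unknown structures in connectivity matrix: {sorted(unknown)}")
    return edges


if __name__ == "__main__":
    structures = load_structures(io.StringIO(
        "name,description\namygdala,placeholder text\nmPFC,placeholder text\n"))
    matrix_csv = ",amygdala,mPFC\namygdala,0,1\nmPFC,1,0\n"
    print(load_connectivity(io.StringIO(matrix_csv), structures))
```

Validating names across files at load time catches mismatches before they surface as missing meshes or dangling connections in the VR scene.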

The usability of the SONIA system was assessed with both quantitative and qualitative evaluations in a user study. After obtaining informed consent, we recruited 11 subjects (age = 31.1 ± 6.0 years; 4 female, 7 male) to participate in the study. All participants were either somewhat or very familiar with neuroanatomy and/or the concept of brain connectivity and represent the main target users of the system. Only one of them had no prior VR experience. The study was approved by the Ethics Research Board of Concordia University. All methods were performed in accordance with the relevant guidelines and regulations.

In the study, each participant spent 20–30 min completing the learning modules. To enhance usability, we implemented a virtual guidance system in which an audio voice-over, generated with a text-to-speech plugin in Unity, interacted with the user. It guided them through each interaction scheme and visual change, and explained all the subsystems as displayed in the learning material panel. Participants with glasses were allowed to wear them, as the HTC VIVE Pro Eye headset accommodates them. No participants experienced motion sickness.

Upon completing the VR experience, each participant filled out a three-part questionnaire consisting of both quantitative and qualitative assessments. For the quantitative evaluation, we measured three main attributes (usability, effectiveness of VR feature designs, and perceived learning value) using Likert-scale questions (scale of 1–5, ranging from “strongly disagree” to “strongly agree”), with an emphasis on the software design perspective. Questions related to usability were placed in Part 1 of the questionnaire, and questions related to VR feature design and learning value in Part 2. In Part 3, participants were asked to provide free-form suggestions about the VR system and clarifications of their answers in the quantitative assessments (Adams and Cox, 2008).

Usability: General usability of the overall software system is crucial for educational VR (Makransky and Petersen, 2021). To assess it, we employed the standard and widely used 10-item System Usability Scale (SUS) (Brooke, 1995), which measures complexity, user-friendliness, and confidence when using an instrument, including in virtual training (Strojny and Dużmańska-Misiarczyk, 2023). Among the 10 SUS questions, each odd-numbered question is scored as x − 1 and each even-numbered question as 5 − x, where x is the participant's response (1–5). The item scores for each participant are then summed and multiplied by 2.5, giving a maximum SUS score of 100. A SUS score above 68 is commonly taken to indicate good usability.
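The SUS scoring rule above translates directly into code. This Python sketch is ours, for illustration only; the function name is hypothetical, and any responses passed to it in practice would come from a participant's completed questionnaire.

```python
def sus_score(responses):
    """Compute the System Usability Scale score (0-100) for one participant.

    responses: the ten Likert answers (1-5) in questionnaire order.
    Odd-numbered items contribute x - 1; even-numbered items contribute 5 - x.
    """
    if len(responses) != 10 or not all(1 <= x <= 5 for x in responses):
        raise ValueError("SUS expects ten responses in the range 1-5")
    total = sum((x - 1) if item % 2 == 1 else (5 - x)
                for item, x in enumerate(responses, start=1))
    return total * 2.5
```

A respondent who answers 3 ("neutral") to every item scores 50, which shows why the benchmark of 68 sits above the scale midpoint.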

Effectiveness of VR feature designs: Compared with most existing medical VR educational applications, we explored novel visual and user-interaction designs to facilitate learning. To verify their effectiveness, five questions were provided to complement the SUS results. For the visual design, we employed three questions. Specifically, we examined the general cognitive load (“Did you find the user interface to be too cluttered and/or visually distracting?”) and aesthetics (“Was the visual style pleasant to interact with?”) of the visual elements, which can be important for educational VR (Tractinsky et al., 2000; Makransky and Petersen, 2021; Fink et al., 2023), as well as the face validity of using colour coding to group knowledge points for each neural sub-system (“Did the colour coding for each subsystem aid in your understanding of the connections between anatomical structures?”). Note that the visual cognitive load score was inverted by subtracting it from 6 before analysis, because a lower score is more desirable for this question, unlike the rest of the questions in Part 2, where a higher score is preferred. The average score of these three questions was also computed to obtain an overall assessment of the visual design, which should appropriately balance the accuracy of data representation in the UI with the artistic colours and placement of visual elements. For the user-interaction design, we tested the face validity of the learning progress feedback implemented in the VR user interface, which communicates progress in navigating the anatomical structures and neural connectivity (“Did the user interface effectively communicate the progress of navigating the included anatomical structures and neural response subsystems?”).
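The inversion and averaging can be written as two small functions. In this hypothetical Python sketch, we assume the overall visual score is averaged per participant before group statistics are computed; the text does not state the order explicitly.

```python
from statistics import mean


def inverted(x, scale_max=5):
    """Reverse a Likert item so that higher is better: 6 - x on a 1-5 scale."""
    return scale_max + 1 - x


def visual_design_score(clutter, aesthetics, colour_coding):
    """Overall visual-design score for one participant.

    The clutter (cognitive load) item is negatively phrased, so it is
    inverted before averaging with the two positively phrased items.
    """
    return mean([inverted(clutter), aesthetics, colour_coding])
```

Inversion maps 1 to 5 and 2 to 4, leaving the neutral 3 unchanged, so the comparison against a neutral response remains valid for the inverted item.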

Perceived learning value: Learning value is important for educational VR applications. In addition to assessing the effectiveness of the proposed multi-scale representation of the brain for anatomical learning (“Did the projections/mirroring of the big brain around the scene aid your understanding of the spatial positioning of the anatomical structures?”), we collected ratings of the amount learnt using the system (Huang et al., 2023) as an overall evaluation of the perceived learning value of the software tool (“Do you feel that you have learnt a lot using this system?”).

For the total SUS score, a one-sample t-test was used to assess whether the results differ significantly from 68, and each sub-score in Parts 1 and 2 was compared to a neutral response (score = 3), also with one-sample t-tests (a higher score is better). Here, p < 0.05 indicates a statistically significant difference. Finally, in Part 3 of the questionnaire, open-ended questions allowed participants to suggest further improvements to the system and to justify some of their ratings in the previous sections as they saw fit. The responses were reviewed carefully to help interpret the quantitative assessments and identify potential directions for future improvements.
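The one-sample t-tests can be reproduced approximately from summary statistics. In practice one would likely call `scipy.stats.ttest_1samp` on the raw scores; the stdlib-only Python sketch below instead computes a two-sided p-value from the classical finite series for Student's t with integer degrees of freedom. Function names are hypothetical, and two-sided testing is our assumption. Because the reported means and SDs are rounded, recomputed p-values only approximate the published ones; for example, the SUS summary (79.8 ± 11.6, n = 11, tested against 68) gives t ≈ 3.37 and p ≈ 0.007.

```python
import math
from statistics import mean, stdev


def t_two_sided_p(t, df):
    """Two-sided p-value for Student's t with integer df >= 1.

    Uses the closed-form finite series for P(|T| <= t) with
    theta = atan(|t| / sqrt(df)).
    """
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    c2 = c * c
    if df % 2 == 0:
        # Even df: A = sin(theta) * [1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ... up to c^(df-2)]
        term = series = 1.0
        for k in range(1, df // 2):
            term *= c2 * (2 * k - 1) / (2 * k)
            series += term
        a = s * series
    else:
        # Odd df: A = (2/pi) * (theta + sin*cos * [1 + (2/3)c^2 + ... up to c^(df-3)])
        extra = 0.0
        if df > 1:
            term = series = 1.0
            for k in range(1, (df - 1) // 2):
                term *= c2 * (2 * k) / (2 * k + 1)
                series += term
            extra = s * c * series
        a = (2.0 / math.pi) * (theta + extra)
    return max(0.0, 1.0 - a)


def one_sample_t(sample_mean, sample_sd, n, mu0):
    """One-sample t statistic and two-sided p-value from summary statistics."""
    t = (sample_mean - mu0) / (sample_sd / math.sqrt(n))
    return t, t_two_sided_p(t, n - 1)


def one_sample_t_from_scores(scores, mu0):
    """Same test from raw per-participant scores (sample SD with n - 1)."""
    return one_sample_t(mean(scores), stdev(scores), len(scores), mu0)
```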

The total SUS score from the user study was 79.8 ± 11.6, significantly greater than the threshold of 68 for a usable system (p = 0.007). Every sub-score except system complexity indicated significantly positive user interaction with SONIA (p < 0.05) in terms of overall ease of use, intuitiveness, and consistency. Interestingly, participants' opinions were divided regarding the complexity of the system, resulting in a rating of 2.3 ± 1.2 (a lower score indicates lower perceived system complexity), though this value was not statistically different from a neutral response (p = 0.07).

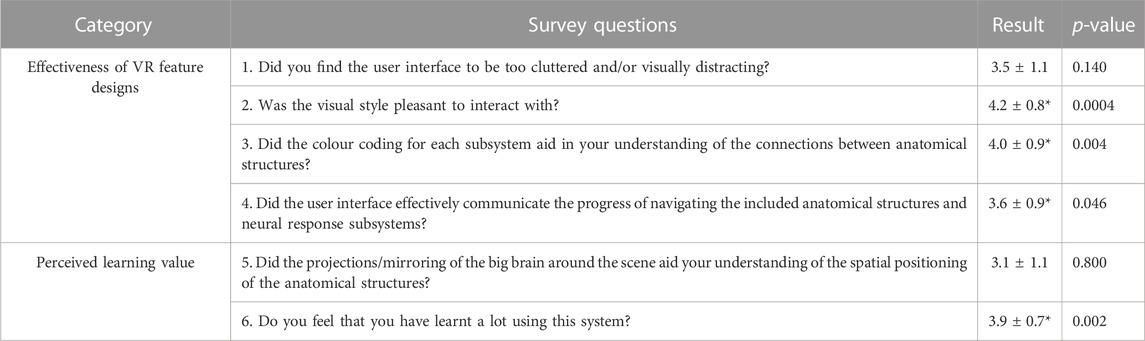

In Part 2 of the questionnaire, the feedback on the visual, interaction, and learning experience was generally positive. The overall visual score was 3.9 ± 0.5 (p = 0.0001); for the three associated sub-scores, the complexity of the visual elements, pleasantness of graphic styles, and usefulness of colour-coding schemes for sub-system representation received 3.5 ± 1.1 (inverted score, p = 0.14), 4.2 ± 0.8 (p = 0.0004), and 4.0 ± 0.9 (p = 0.004), respectively. With a score of 3.6 ± 0.9, the interaction design was rated as effective for navigating the anatomies and connections (p = 0.046). Finally, although participants were mostly neutral (3.1 ± 1.1, p = 0.80) regarding the multi-scale strategy for enhancing anatomical understanding, they agreed that they had learnt a lot while using the system (3.9 ± 0.7, p = 0.002). The results of individual questions are summarized in Table 1.

TABLE 1. Summary of user questionnaire results (mean ± std) for survey questions related to the effectiveness of VR feature design and perceived learning value. Scores range from 1 to 5, and higher values are more desirable; p-values compare each result against a neutral response (score = 3). Statistically significant results are marked with “*”.

Besides the quantitative responses, the feedback form also contained a qualitative section that allowed participants to comment freely on their general impressions, opinions, and suggested improvements regarding the system. Participants commented on both positive and negative aspects of their experience. In particular, users found the utility and function of the system to be helpful and novel, standing out as a good way to represent the data. As for the user interface, participants often found it to contain too much visual information. Due to the large volume of text and the placement of UI elements, they felt mildly overwhelmed and found it difficult to absorb some of the informative material.

Specifically, most participants (9/11) provided a few remarks on the usefulness of the system, the difficulties they encountered, and suggestions for improvement. Among them, several participants (5/11) mentioned the complexity of the user interface and the large volume of knowledge points, but none reported being unable to learn or being too overwhelmed. Although the multi-scale strategy for spatial learning was rated neutrally, a subset of participants (4/11) reported liking it and praised the system as a learning tool. These more detailed responses show that although improvements are still needed to better present complex information, which is indeed challenging, as noted in previous works (Keiriz et al., 2018; Ille et al., 2021; Schloss et al., 2021), the overall system was generally well received.

Using the functional network of anxiety regulation (Xie et al., 2021) as a case study, the proposed SONIA VR system is the first to integrate descriptive insights into neural pathway exploration and learning-module design. Beyond visualization of the 3D anatomy, we explored novel interaction and user-interface designs intended to benefit usability and user experience. As knowledge of neuroanatomy is a prerequisite for understanding neural pathways, we designed the system workflow to encompass both anatomical and connectivity learning phases. In each phase, following the popular game-design practice of player agency (giving the player control over the environment), the user is free to select anatomical structures and their associated connections at will and at their own pace, triggering changes in the UI elements of the virtual environment. Together with the information panel that displays progress, these components are designed to enhance motivation and ease of use. Both strategies have shown positive impacts in the design of games and educational content (Plass et al., 2015; Taub et al., 2020). The system relies on this built-in reward (the visual demonstration of progress) rather than point- or trophy-based rewards, as the latter, less tangible markers of completion have been shown to have less impact on feelings of reward and on the amount learnt (McKernan et al., 2015). The positive feedback from our user evaluation regarding willingness to use the system frequently partially supports the benefit of these user-interaction designs.

Besides leveraging the advantages of virtual reality for 3D visualization (Ekstrand et al., 2018), the ease of use of our system is key to facilitating the understanding of complex neural pathways in the brain. To achieve this, we implemented a number of visualization and interaction strategies. First, the small brain model serves as the central device for interacting with the rest of the environment and the UI elements; second, both node-based and full anatomical representations are used to reduce clutter, facilitate spatial understanding, and simplify object selection; finally, systematic color-coding is employed to signify association with the subsystems of the brain network. The positive impact of these strategies is supported by the SUS assessments, particularly the sub-scores for intuitiveness and ease of use, as well as by the visualization and interaction experience evaluations. Regarding the complexity of both the software design and the visual elements, the participants indicated slightly favorable (but not statistically significant) opinions. This discrepancy may be attributable to the participants' varied levels of experience with VR systems and neuroscience, and it leaves room for further improvement of the system in future work. Potential solutions could involve further simplification of the UI and options to expand descriptions on demand rather than having them constantly present; these changes would reduce the amount of textual information presented to the user at any given time. As reading text in VR requires more visual effort, the resolution of the headset display and the type of lenses can also affect user experience. With progressive improvements in display technology, these effects will likely be further mitigated in the future. Interestingly, the participants were neutral on the benefit of the multi-scale representation of the brain for anatomical understanding. 
This may be due to the choice of scales and the limited freedom of active movement in the large brain model, in contrast to previous works in geographic data exploration, where multi-scale approaches have been shown to be beneficial (Piumsomboon et al., 2018; Huang and Chen, 2019). Nevertheless, because this representation defines the overall visual style of the system and creates a visually appealing and enriching environment, the participants highly appreciated the overall visual style. In addition, the user study confirmed a highly positive learning experience among the participants.
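The color-coding and node-based representation described in this paragraph can be illustrated with a short Python sketch. It assumes, hypothetically, that each subsystem receives one color from a fixed palette in order of first appearance, that a structure belonging to more than one subsystem is drawn in a neutral color, and that a structure's node is placed at the mean of its surface-mesh vertices (a cheap approximation of the structure's center, not necessarily how SONIA positions its nodes). The palette values, function names, subsystem labels, and membership data are all illustrative assumptions.

```python
# Hypothetical RGB palette (0-1 floats); SONIA's actual colors are not given in this section.
PALETTE = [(0.90, 0.30, 0.25), (0.25, 0.55, 0.90), (0.30, 0.75, 0.35), (0.95, 0.75, 0.20)]
NEUTRAL = (0.80, 0.80, 0.80)


def assign_subsystem_colors(subsystems):
    """Map each subsystem name to a palette color, in order of first appearance."""
    colors = {}
    for name in subsystems:
        if name not in colors:
            if len(colors) == len(PALETTE):
                raise ValueError("more subsystems than palette colors")
            colors[name] = PALETTE[len(colors)]
    return colors


def node_color(structure, membership, colors):
    """Color a node by its single subsystem; shared or unassigned structures stay neutral."""
    subsystems = membership.get(structure, set())
    if len(subsystems) == 1:
        return colors[next(iter(subsystems))]
    return NEUTRAL


def node_position(vertices):
    """Place a node at the mean of a structure's mesh vertices, given as (x, y, z) tuples."""
    if not vertices:
        raise ValueError("a structure needs at least one vertex")
    n = len(vertices)
    return tuple(sum(v[axis] for v in vertices) / n for axis in range(3))


# Usage with hypothetical subsystem memberships.
membership = {"amygdala": {"threat"}, "vmPFC": {"regulation"}, "BNST": {"threat", "regulation"}}
colors = assign_subsystem_colors(["threat", "regulation", "threat"])
print(node_color("amygdala", membership, colors))  # (0.9, 0.3, 0.25)
print(node_color("BNST", membership, colors))      # (0.8, 0.8, 0.8)
```

Deriving every node's color from a single subsystem lookup keeps the coding systematic: changing one palette entry recolors every structure in that subsystem consistently.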

As a proof of concept, we showcased the proposed VR system, the first of its kind, and experimented with new user-interaction designs using one example of anxiety-related brain networks. While the system was developed with the HTC Vive Pro Eye headset, it can easily be adapted to standalone headsets. Overall, the quantitative and qualitative assessments confirmed the perceived usability and usefulness of SONIA as a software tool for learning brain networks. Specifically, with a mean SUS score of 79.8 out of 100, the software system showed high overall usability. For the effectiveness of the visual element design and of the interaction scheme for learning progress, scores of 3.9 ± 0.5 and 3.6 ± 0.9 out of 5 were obtained, respectively, both significantly above the neutral score of 3 (p < 0.05). Finally, while the effectiveness of the multi-scale representation in enhancing anatomical understanding is unclear (p > 0.05), the perceived amount of knowledge acquisition is high (3.9 ± 0.7 out of 5, p < 0.05). To enhance the replicability of our study, we have shared the source code of the SONIA system, as well as the full user study questionnaire, in the Supplementary Material. With the customizable design, which supports easy adaptation to alternative learning content, we will continue to evaluate the performance and impact of the software platform with new materials and network models. As brain connectivity, the main focus of SONIA, is a more advanced topic than neuroanatomy, our target user group (and the participants recruited for the user study) consists of those who are at least somewhat familiar with neuroanatomy. There have been a number of previous reports on the value and effectiveness of VR-based neuroanatomical education (Stepan et al., 2017; Lopez et al., 2021; Souza et al., 2021; Gloy et al., 2022). Earlier, Stepan et al. 
(2017) compared the effectiveness, satisfaction, and motivation of neuroanatomy learning using a VR-based 3D atlas versus online textbooks. By measuring subjective attributes (usefulness, enjoyment, ease of learning, engagement, and presence) and test scores at different stages of learning, they found no difference in learning outcomes between the two methods in pre-intervention, post-intervention, and retention quizzes, whereas the VR group found their learning experience more engaging, enjoyable, useful, and motivating. Later, Lopez et al. (2021) examined the advantage of VR-facilitated interactive learning over traditional lectures in neuroanatomy education. Based on detailed oral examinations using a brain MRI with lesions, they found that the VR-facilitated group performed better in identifying structures and describing functional implications. For teaching the anatomical parts of the human encephalon, Souza et al. (2021) developed a VR-based anatomical puzzle game and assessed it in a series of four user studies: preliminary feedback, comparison with a physical anatomical puzzle, collaborative group learning, and remote learning. With both subjective evaluations (ease of use, fun, usefulness, and presence) and objective measures (knowledge test and time), they found positive responses in the subjective metrics for VR-based education, but no clear improvement in knowledge acquisition and retention. More recently, Gloy et al. (2022) assessed the response time and accuracy of post-intervention quizzes when using an immersive anatomical atlas for learning, in contrast to reading standard atlas textbooks, and demonstrated better learning efficiency and knowledge retention with VR-based education. While these previous studies all demonstrated positive attitudes towards and potential benefits of VR in anatomical learning, their nuanced conclusions differ in terms of knowledge acquisition and retention. 
This could be due to differences in learning materials, formats of knowledge quizzes (oral vs. written exam, multiple choice vs. fill-in-the-blank), the number and skill levels of participants, and the choice of control group (lecture vs. reading). Furthermore, the level of user guidance in VR-based education varies across studies, ranging from full self-exploration (Stepan et al., 2017; Gloy et al., 2022) to VR-guided self-exploration (Souza et al., 2021) and instructor-guided exploration (Lopez et al., 2021), which could also contribute to the discrepancies in learning outcome evaluations.
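The results paragraph earlier in this section reports Likert-scale scores (out of 5) as mean ± SD and states that several of them significantly exceed the neutral score of 3, but this part of the article does not name the statistical test used. As a hedged illustration only, the sketch below pairs the mean ± SD summary with one simple, assumption-light option, a one-sided exact sign test against the neutral midpoint. This is not necessarily the authors' test; the function names are our own, and the example ratings are made-up values, not the study data.

```python
from math import comb
from statistics import mean, stdev


def summarize(scores):
    """Mean and sample standard deviation, the 'mean ± SD' form used in the text."""
    return mean(scores), stdev(scores)


def sign_test_greater(scores, neutral=3):
    """One-sided exact sign test of H1: the median rating exceeds `neutral`.

    Ratings equal to `neutral` carry no sign and are dropped, as is usual for
    the sign test. Returns P(X >= number above) for X ~ Binomial(n, 0.5).
    """
    above = sum(1 for s in scores if s > neutral)
    below = sum(1 for s in scores if s < neutral)
    n = above + below
    if n == 0:
        return 1.0  # every rating is neutral: no evidence either way
    tail = sum(comb(n, k) for k in range(above, n + 1))
    return tail / 2**n


# Illustrative ratings from 11 hypothetical participants (not the study data).
ratings = [4, 5, 3, 4, 2, 4, 5, 4, 3, 4, 4]
m, sd = summarize(ratings)
print(f"{m:.1f} ± {sd:.1f}, p = {sign_test_greater(ratings):.4f}")  # 3.8 ± 0.9, p = 0.0195
```

The sign test only uses the direction of each rating relative to 3, which suits ordinal Likert data and small samples such as 11 participants; a Wilcoxon signed-rank or one-sample t-test would use more information but carries stronger assumptions.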

These previous reports (Stepan et al., 2017; Lopez et al., 2021; Souza et al., 2021; Gloy et al., 2022) mainly focus on VR-based neuroanatomical education, while the primary focus and contribution of our system lie in the understanding, education, and exploration of brain connectivity, which has rarely been investigated. To better accommodate the needs of connectivity learning, which is more complex and requires narratives that accompany pairs of anatomical structures, we implemented a multi-scale visualization strategy to facilitate spatial understanding, a color-coding system to organize key knowledge points, and a progression-feedback-based display system to deliver learning materials. Compared with the commonly used paradigm of virtual anatomical puzzles and 3D atlases (Stepan et al., 2017; Lopez et al., 2021; Souza et al., 2021; Gloy et al., 2022), our VR features support more complex learning materials than those in these previous reports. Furthermore, as the first VR system of its kind, our customizable, open-access design also offers flexibility in learning content design.
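Because connectivity learning attaches a narrative to a pair of anatomical structures, customizable learning content needs an order-independent way to look up that narrative, whichever structure the user selects first. The Python sketch below shows one way to do this with `frozenset` keys. The JSON layout, its field names, the subsystem labels, and the placeholder text are hypothetical assumptions for illustration; this section does not describe SONIA's actual content format.

```python
import json

# Hypothetical content file; field names and text are placeholders, not SONIA's format.
CONTENT_JSON = """
{
  "connections": [
    {"a": "amygdala", "b": "BNST", "subsystem": "threat",
     "narrative": "Placeholder narrative for the amygdala-BNST connection."},
    {"a": "vmPFC", "b": "amygdala", "subsystem": "regulation",
     "narrative": "Placeholder narrative for the vmPFC-amygdala connection."}
  ]
}
"""


def load_connections(text):
    """Parse connection entries into a dict keyed by the unordered structure pair."""
    table = {}
    for entry in json.loads(text)["connections"]:
        key = frozenset((entry["a"], entry["b"]))
        if len(key) != 2:
            raise ValueError("a connection needs two distinct structures")
        if key in table:
            raise ValueError(f"duplicate connection: {sorted(key)}")
        table[key] = entry
    return table


def narrative_for(table, first, second):
    """Return the narrative for a pair regardless of selection order, or None."""
    entry = table.get(frozenset((first, second)))
    return None if entry is None else entry["narrative"]


connections = load_connections(CONTENT_JSON)
print(narrative_for(connections, "BNST", "amygdala"))
print(narrative_for(connections, "BNST", "vmPFC"))  # None: no such connection
```

Keeping the content in a data file rather than in code is one plausible way to support swapping in new learning materials and network models without rebuilding the application.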

For the proposed system, our evaluation metrics encompass usability, the effectiveness of the designed VR features, and perceived learning value. As most relevant studies so far aim to validate VR as a tool for anatomical education against traditional classroom or textbook learning, very few have attempted VR feature designs as elaborate as ours. Therefore, instead of a single ease-of-use metric (Stepan et al., 2017; Lopez et al., 2021; Souza et al., 2021; Gloy et al., 2022), we employed the standard 10-item SUS to gauge the system's usability more comprehensively. For more nuanced insights, we further evaluated the cognitive load, aesthetics, and face validity of the proposed key VR features for learning material delivery. One major limitation of our pilot study lies in the learning outcome assessment, for which we primarily relied on the perceived learning yield. A control group with traditional teaching and objective knowledge quizzes would be more ideal, but was beyond the scope of this preliminary user study. While additional participants and a more detailed demographic analysis would be desirable, the number of participants that we recruited (11) is still within the range for a valid user study (Macefield, 2009). Overall, this preliminary user study offers positive feedback and useful insights for further system improvement ahead of a more thorough validation. Specifically, we will tailor the knowledge display strategy to further reduce the VR system's visual complexity. The revised software system will be tested against lecture-based learning with equal instruction time. By incorporating a larger cohort and individuals with knowledge of neuroscience, we will compare the two learning modalities using subjective measures, including usability, enjoyment, usefulness, and presence, as well as objective quiz scores immediately and 8 weeks after the intervention, to further probe knowledge acquisition and retention. 
Finally, we will use multivariate analyses to further examine the impact of sex, familiarity with VR, and level of neuroscience knowledge.
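The standard 10-item SUS mentioned above is scored with a fixed rule: each item is rated from 1 to 5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the summed contributions are multiplied by 2.5 to give a score from 0 to 100. A study-level figure such as the mean of 79.8 reported earlier is then the average of the per-participant scores. The Python sketch below implements that standard rule; the function names and example responses are illustrative and are not taken from the study materials.

```python
from statistics import mean


def sus_score(responses):
    """Score one completed 10-item SUS questionnaire (ratings 1-5, item order 1..10)."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each SUS item is rated on a 1-5 scale")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # odd items are positively worded
        else:
            total += 5 - rating  # even items are negatively worded
    return total * 2.5  # rescale the 0-40 sum to 0-100


def mean_sus(questionnaires):
    """Study-level SUS: the mean of the per-participant scores."""
    return mean(sus_score(q) for q in questionnaires)


# Illustrative responses from two hypothetical participants.
p1 = [5, 1, 4, 2, 5, 1, 4, 2, 5, 1]
p2 = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(p1), sus_score(p2), mean_sus([p1, p2]))  # 90.0 75.0 82.5
```

Scoring each participant before averaging, rather than averaging item ratings first, keeps per-participant scores available for the spread and subgroup analyses planned above.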

We have built a novel virtual reality system, SONIA, with a customizable design that creates an immersive learning experience for understanding and demonstrating functional brain systems and networks. Unlike prior work that primarily focuses on simple anatomical visualization, our proposed system integrates a more immersive, user-friendly, and enriching environment with detailed narratives of the brain sub-systems and effective user-interaction strategies, as validated through user studies. Through this prototype, the first system of its kind, we demonstrate new potential directions for medical learning and exploration in VR.

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

O.H. was responsible for the study concept, image processing, software implementation, data analysis and interpretation, and writing of the manuscript. C.S. was responsible for the study concept, consultation of content design of the learning materials, and editing of the manuscript. Y.X. was responsible for the study concept, image processing, data analysis, provided the overall study oversight, and edited the manuscript. All authors contributed to the article and approved the submitted version.

The authors thank Dr. Najmeh Khalili-Mahani for insightful discussions on brain networks and VR user-interaction design. A preprint version of the manuscript is available on arXiv (Hellum et al., 2023b) at https://arxiv.org/abs/2301.09772.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1244096/full#supplementary-material

Adams, A., and Cox, A. (2008). Questionnaires, in-depth interviews and focus groups. Res. Methods Human-Computer Interact. 2008, 17–34. doi:10.1017/cbo9780511814570.003

Ekstrand, C., Jamal, A., Nguyen, R., Kudryk, A., Mann, J., and Mendez, I. (2018). Immersive and interactive virtual reality to improve learning and retention of neuroanatomy in medical students: a randomized controlled study. CMAJ Open 6 (1), E103–E109. doi:10.9778/cmajo.20170110

Fiani, B., De Stefano, F., Kondilis, A., Covarrubias, C., Reier, L., and Sarhadi, K. (2020). Virtual reality in neurosurgery: "can you see it?"-A review of the current applications and future potential. World Neurosurg. 141, 291–298. doi:10.1016/j.wneu.2020.06.066

Fink, M. C., Eisenlauer, V., and Ertl, B. (2023). What variables are connected with system usability and satisfaction? Results from an educational virtual reality field trip. Comput. Educ. X Real. 3, 100043. doi:10.1016/j.cexr.2023.100043

Fonov, V., Evans, A. C., Botteron, K., Almli, C. R., McKinstry, R. C., Collins, D. L., et al. (2011). Unbiased average age-appropriate atlases for pediatric studies. Neuroimage 54 (1), 313–327. doi:10.1016/j.neuroimage.2010.07.033

Gloy, K., Weyhe, P., Nerenz, E., Kaluschke, M., Uslar, V., Zachmann, G., et al. (2022). Immersive anatomy atlas: learning factual medical knowledge in a virtual reality environment. Anat. Sci. Educ. 15 (2), 360–368. doi:10.1002/ase.2095

Hellum, O., Kersten-Oertel, M., and Xiao, Y. M. (2023a). Assessment of user-interaction strategies for neurosurgical data navigation and annotation in virtual reality. Virtual Real. 27 (2), 1345–1355. doi:10.1007/s10055-022-00740-5

Hellum, O., Mu, Y., Kersten-Oertel, M., and Xiao, Y. (2022). A novel prototype for virtual-reality-based deep brain stimulation trajectory planning using voodoo doll annotation and eye-tracking. Comput. Methods Biomechanics Biomed. Eng. Imaging & Vis. 10 (4), 418–424. doi:10.1080/21681163.2021.1997645

Hellum, O., Steele, C., and Xiao, Y. (2023b). SONIA: an immersive customizable virtual reality system for the education and exploration of brain network. arXiv preprint arXiv:2301.09772.

Huang, W. M., and Chen, J. (2019). A multi-scale VR navigation method for VR globes. Int. J. Digit. Earth 12 (2), 228–249. doi:10.1080/17538947.2018.1426646

Huang, X., Huss, J., North, L., Williams, K., and Boyd-Devine, A. (2023). Cognitive and motivational benefits of a theory-based immersive virtual reality design in science learning. Comput. Educ. Open 4, 100124. doi:10.1016/j.caeo.2023.100124

Ille, S., Ohlerth, A. K., Colle, D., Colle, H., Dragoy, O., Goodden, J., et al. (2021). Augmented reality for the virtual dissection of white matter pathways. Acta Neurochir. 163 (4), 895–903. doi:10.1007/s00701-020-04545-w

Karmonik, C., Boone, T. B., and Khavari, R. (2018). Workflow for visualization of neuroimaging data with an augmented reality device. J. Digit. Imaging 31 (1), 26–31. doi:10.1007/s10278-017-9991-4

Keiriz, J. J. G., Zhan, L., Ajilore, O., Leow, A. D., and Forbes, A. G. (2018). NeuroCave: a web-based immersive visualization platform for exploring connectome datasets. Netw. Neurosci. 2 (3), 344–361. doi:10.1162/netn_a_00044

Lopez, M., Arriaga, J. G. C., Nigenda Álvarez, J. P., González, R. T., Elizondo-Leal, J. A., Valdez-García, J. E., et al. (2021). Virtual reality vs traditional education: is there any advantage in human neuroanatomy teaching? Comput. Electr. Eng. 93, 107282. doi:10.1016/j.compeleceng.2021.107282

Macefield, R. (2009). How to specify the participant group size for usability studies: a practitioner's guide. J. Usability Stud. 5 (1), 34–45.

Makransky, G., and Petersen, G. (2021). The cognitive affective model of immersive learning (CAMIL): a theoretical research-based model of learning in immersive virtual reality. Educ. Psychol. Rev. 33, 937–958. doi:10.1007/s10648-020-09586-2

McKernan, B., Martey, R. M., Stromer-Galley, J., Kenski, K., Clegg, B. A., Folkestad, J. E., et al. (2015). We don't need no stinkin' badges: the impact of reward features and feeling rewarded in educational games. Comput. Hum. Behav. 45, 299–306. doi:10.1016/j.chb.2014.12.028

Petersen, M. V., Mlakar, J., Haber, S. N., Parent, M., Smith, Y., Strick, P. L., et al. (2019). Holographic reconstruction of axonal pathways in the human brain. Neuron 104 (6), 1056–1064.e3. doi:10.1016/j.neuron.2019.09.030

Piumsomboon, T., Lee, G. A., and Billinghurst, M. (2018). “Snow dome: a multi-scale interaction in mixed reality remote collaboration,” in Extended abstracts of the 2018 CHI conference on human factors in computing systems (Montreal QC, Canada: Association for Computing Machinery).

Plass, J. L., Homer, B. D., and Kinzer, C. K. (2015). Foundations of game-based learning. Educ. Psychol. 50 (4), 258–283. doi:10.1080/00461520.2015.1122533

Schloss, K. B., Schoenlein, M. A., Tredinnick, R., Smith, S., Miller, N., Racey, C., et al. (2021). The UW virtual brain project: an immersive approach to teaching functional neuroanatomy. Transl. Issues Psychol. Sci. 7 (3), 297–314. doi:10.1037/tps0000281

Souza, V., Maciel, A., Nedel, L., Kopper, R., Loges, K., and Schlemmer, E. (2021). VR Neuro game: a virtual reality game to support neuroanatomy teaching and learning. J. Interact. Syst. 12, 253–268. doi:10.5753/jis.2021.2090

Stepan, K., Zeiger, J., Hanchuk, S., Del Signore, A., Shrivastava, R., Govindaraj, S., et al. (2017). Immersive virtual reality as a teaching tool for neuroanatomy. Int. Forum Allergy & Rhinology 7 (10), 1006–1013. doi:10.1002/alr.21986

Strojny, P., and Dużmańska-Misiarczyk, N. (2023). Measuring the effectiveness of virtual training: a systematic review. Comput. Educ. X Real. 2, 100006. doi:10.1016/j.cexr.2022.100006

Taub, M., Sawyer, R., Smith, A., Rowe, J., Azevedo, R., and Lester, J. (2020). The agency effect: the impact of student agency on learning, emotions, and problem-solving behaviors in a game-based learning environment. Comput. Educ. 147, 103781. doi:10.1016/j.compedu.2019.103781

Theiss, J. D., Ridgewell, C., McHugo, M., Heckers, S., and Blackford, J. U. (2017). Manual segmentation of the human bed nucleus of the stria terminalis using 3T MRI. Neuroimage 146, 288–292. doi:10.1016/j.neuroimage.2016.11.047

Tractinsky, N., Katz, A. S., and Ikar, D. (2000). What is beautiful is usable. Interact. Comput. 13 (2), 127–145. doi:10.1016/s0953-5438(00)00031-x

Tzourio-Mazoyer, N., Landeau, B., Papathanassiou, D., Crivello, F., Etard, O., Delcroix, N., et al. (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15 (1), 273–289. doi:10.1006/nimg.2001.0978

Xiao, Y. M., Drouin, S., Gerard, I. J., Fonov, V., Aubert-Broche, B., Ma, Y. H., et al. (2018). An augmented-reality system prototype for guiding transcranial Doppler ultrasound examination. Multimedia Tools Appl. 77 (21), 27789–27805. doi:10.1007/s11042-018-5990-9

Keywords: virtual reality, human-computer interaction, neuroanatomy, education, brain connectivity

Citation: Hellum O, Steele C and Xiao Y (2024) SONIA: an immersive customizable virtual reality system for the education and exploration of brain networks. Front. Virtual Real. 4:1244096. doi: 10.3389/frvir.2023.1244096

Received: 21 June 2023; Accepted: 18 December 2023;

Published: 08 January 2024.

Edited by:

Ekaterina Prasolova-Førland, Norwegian University of Science and Technology, Norway

Reviewed by:

Stefan Marks, Auckland University of Technology, New Zealand

Copyright © 2024 Hellum, Steele and Xiao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yiming Xiao, Yiming.xiao@concordia.ca
