ORIGINAL RESEARCH article

Front. Virtual Real. , 19 July 2023

Sec. Virtual Reality in Industry

Volume 4 - 2023 | https://doi.org/10.3389/frvir.2023.1209535

This article is part of the Research Topic Virtual Reality in Industry: Spotlight on Women

Samantha Lewis-Fung1, Danielle Tchao1, Hannah Gabrielle Gray2,3, Emma Nguyen, Susanna Pardini1,4,5, Laurence R. Harris2, Dale Calabia3,6, Lora Appel1,6,7*

Introduction: Anxiety in people with epilepsy (PwE) is characterized by distinct features related to having the condition and thus requires tailored treatment. Although virtual reality (VR) exposure therapy is widely used to treat a number of anxiety disorders, its use has not yet been explored in people with epilepsy. The AnxEpiVR study is a three-phase pilot trial that represents the first effort to design and evaluate the feasibility of VR exposure therapy to treat epilepsy-specific interictal anxiety. This paper describes the results of the design phase (Phase 2) where we created a minimum viable product of VR exposure scenarios to be tested with PwE in Phase 3.

Methods: Phase 2 employed participatory design methods and hybrid (online and in-person) focus groups involving people with lived experience (n = 5) to design the VR exposure therapy program. 360-degree video was chosen as the medium and scenes were filmed using the Ricoh Theta Z1 360-degree camera.

Results: Our minimum viable product includes three exposure scenarios: (A) Social Scene—Dinner Party, (B) Public Setting—Subway, and (C) Public Setting—Shopping Mall. Each scenario contains seven 5-minute scenes of varying intensity, from which a subset may be chosen and ordered to create a customized hierarchy based on appropriateness to the individual’s specific fears. Our collaborators with lived experience who tested the product considered the exposure therapy program to 1) be safe for PwE, 2) have a high level of fidelity and 3) be appropriate for treating a broad range of fears related to epilepsy/seizures.

Discussion: We were able to show that 360-degree videos are capable of achieving a realistic, immersive experience for the user without requiring extensive technical training for the designer. Strengths and limitations using 360-degree video for designing exposure scenarios for PwE are described, along with future directions for testing and refining the product.

Anxiety is a common and debilitating comorbid condition in people with epilepsy (PwE) that often goes unrecognized and untreated (Munger Clary, 2014). In particular, relatively little research has focused on epilepsy/seizure-specific (ES) interictal anxiety, which includes a number of distinct disorders that require tailored treatment: anticipatory anxiety, seizure phobia, social phobia, and epileptic panic disorder (Hingray et al., 2019). Unlike peri-ictal (pre-ictal, ictal, and post-ictal) anxiety, ES-interictal anxiety is independent of the seizures themselves and is characterized by exaggerated epilepsy-related fears, worries, preoccupations, and avoidance behaviours, which are not regularly screened for during routine assessments of peri-ictal anxiety, classical anxiety disorders, or depression (Munger Clary et al., 2023). Moreover, phobic and agoraphobic symptoms of ES-interictal anxiety are associated with maladaptive coping behaviours (e.g., avoiding public transportation) that often delay treatment and reduce overall quality of life for PwE (Munger Clary et al., 2023; News, 2023).

Exposure therapy (ET) is a type of cognitive and behavioural therapy that has been shown to be effective for treating anxiety disorders (Kaplan et al., 2011; Abramowitz, 2013). Exposures are typically administered in a hierarchical manner, starting with scenarios that provoke a lower level of anxiety and allowing patients to habituate to their anxiety before proceeding to higher intensity levels (Abramowitz, 2013). Despite its benefits, ET is under-utilized, which may in part be due to a lack of trained professionals offering this specific intervention (Kaplan et al., 2011). Standard ET involves a combination of imaginal and in vivo exposures, each with its own challenges and limitations. With imaginal exposures, patients may not be able or willing to visualize sufficiently fearful stimuli, and the therapist is often unable to observe whether the patient is engaging in any avoidance behaviours during exposure. Meanwhile, in vivo exposures rely on real-world interactions where conditions are not always predictable or repeatable (e.g., social interactions), and are sometimes not feasible at all (e.g., reproducing trauma) (Deacon et al., 2013; Boeldt et al., 2019).

Virtual reality (VR) using computer-generated images (CGI) has been successfully combined with ET to treat various anxiety disorders (Bouchard et al., 2017; Ionescu et al., 2021; Andersen et al., 2023), with comparable outcomes to in vivo ET (Powers and Emmelkamp, 2008; Bouchard et al., 2017). It can be viewed as a safe and practical alternative to conventional methods (Sutton, 2020; Andersen et al., 2023) and allows the user to face their fears through interacting with the virtual environment (Reeves et al., 2021). Several studies have highlighted its ability to overcome the limitations of imaginal and in vivo exposures (Riva, 2022); indeed, VR is able to simulate real-world environments with a high degree of fidelity and allows for the design of immersive experiences where feared stimuli can be manipulated in a controlled manner. In this way, the exposures are not limited by the patient’s imagination (as in imaginal ET) and are more customizable and repeatable than in vivo ET (Boeldt et al., 2019). Additionally, VR-ET has been helpful as an intermediary step to bridge the gap between imaginal and in vivo exposures (Riva, 2022) and may have a particular advantage for increasing adherence to treatment, as well as in cases where in vivo ET is difficult or impossible (Deng et al., 2019). Nevertheless, the adoption of CGI-based VR-ET into clinical practice has been limited by the cost and complexity of programming three-dimensional graphic environments (Ionescu et al., 2021).

Relatively newer to the healthcare research space, 360-degree video technology presents an attractive alternative to CGI for designing VR environments for healthcare interventions (Ionescu et al., 2021; Stevens and Sherrill, 2021). Specialized 360-degree video cameras are portable, require few technical skills to operate, and are a more accessible and affordable medium compared to CGI (Stevens and Sherrill, 2021). Equipped with such a convenient and versatile tool, clinicians can quickly film their own 360-degree videos to be used in virtual exposures for their patients (Stevens and Sherrill, 2021). These benefits, in addition to the photorealism and authentic sense of presence afforded by this medium, have more recently become recognized by researchers designing VR-ET programs (Holmberg et al., 2020; Reeves et al., 2021; Lundin et al., 2022). Additionally, unlike with CGI, 360-degree video is not susceptible to the “Uncanny Valley Effect,” defined as an unpleasant feeling users experience when encountering highly realistic but imperfect renderings of people in a simulated environment (Seyama and Nagayama, 2007; Reeves et al., 2022). In the context of VR-ET, this effect is known to reduce realism and adherence to treatment (Benbow and Anderson, 2019). As 360-degree video is in its early stages of development as a tool for mental health interventions and studies describing its use are heterogeneous, developing diverse content tailored to patients’ needs has been recommended as an appropriate gap for research to prioritize (Ionescu et al., 2021).

Although VR-ET is becoming widely used to treat a number of anxiety disorders, its use has not yet been explored in PwE (Gray et al., 2023). The AnxEpiVR Study is a three-phase pilot trial that represents the first effort to design and evaluate the feasibility of VR-ET to treat ES-interictal anxiety [for full methods, see (Gray et al., 2023)]. Phase 1 involved surveying PwE (n = 14) and people affected by epilepsy (n = 4) (e.g., loved ones or friends, professionals working with the epilepsy population) to gather information on epilepsy/seizure-specific fears [for full methods and results of Phase 1, see (Tchao et al., 2023)]. Questions were intentionally created to collect information useful for informing the design of VR-ET scenarios for PwE (i.e., anxiety-provoking locations and elements that might be readily filmed). Participants described anxiety-provoking scenes, which were categorized under the following themes: location, social setting, situational, activity, physiological, and previous seizure. While scenes tied to previous seizures were typically highly personalized and idiosyncratic, public settings and social situations were commonly reported fears. Factors consistently found to increase ES-interictal anxiety included the potential for danger (physical injury or inability to get help), social factors (increased number of unfamiliar people, social pressures), and specific triggers (stress, sensory, physiological, and medication-related).

Phase 2, the focus of the present paper, involved designing VR-ET scenarios based on the results of Phase 1. Phase 3 will involve piloting the intervention designed in Phase 2 through a home-based trial where participants (target n = 5 PwE) receive daily virtual VR-ET sessions for 2 weeks (ClinicalTrials.gov ID: NCT05296057). This study was reviewed by the York University Human Participants Review Committee and approved on 31 May 2022 (certificate number: 2022–105). Participants provided written informed consent to participate in this study.

This paper describes Phase 2, the design phase of the three-part AnxEpiVR pilot study. The primary objective was to develop a minimum viable product (MVP) consisting of a hierarchical and customizable set of VR-ET scenarios to be piloted in the ET program that will be administered to PwE in Phase 3. In doing so, we aimed to provide a detailed description of our design process for creating VR scenarios for PwE that 1) are safe for this population, 2) have a high level of fidelity, and 3) would be appropriate for treating a broad range of fears related to epilepsy/seizures. As a secondary objective, we aimed to synthesize our learnings from this process to describe key strengths, limitations, and future considerations for both A) the MVP, and B) broadly, 360-degree video as a medium to create VR-ET for PwE.

With limited available literature specifically addressing ES-interictal anxiety and the fear triggers for PwE (Tchao et al., 2023), we based the design of our MVP on information gathered from Phase 1 participants and employed a participatory design approach. A participatory design approach seeks the involvement of people with lived experience throughout the design process to develop a product that would be meaningful and useful to end-users (Spinuzzi, 2005). In recent years, this approach has been increasingly used in healthcare (Teal et al., 2023) for its ability to reduce research “waste,” that is, time and costs spent on research that is not important or relevant for patients and clinicians (Slattery et al., 2020). For example, the approach has been used by other researchers to design VR-ET programs that are authentic and grounded in people’s real-world experiences (Flobak et al., 2019). It is useful for tailoring treatments to specific populations and has been successfully applied to develop 360-degree video VR-ET public speaking scenarios for adolescents (Flobak et al., 2019) and simulations of potentially stressful situations to help incarcerated women prepare for what they may face when they return home (Teng et al., 2019).

Our process relied on both synchronous and asynchronous, hybrid (online and in-person) focus groups to 1) generate a rich understanding of participants’ experiences and beliefs, 2) generate information on collective views, and 3) clarify, extend, and qualify data collected through our Phase 1 survey (Gill et al., 2008; Kitzinger, 1995). End-user feedback was collectively considered and analyzed while being compared to themes identified in Phase 1 (feared locations and elements), as well as insights from researcher debrief notes, to determine which suggestions could and should be incorporated at a given stage, and what could be better addressed in a future design. The final MVP was again compared to these themes to summarize the feasibility of using 360-degree film to simulate scenarios related to ES-interictal anxiety. From this process, we were able to construct an account of the strengths, limitations, and future directions for the MVP, as well as for the use of 360-degree film for creating VR-ET for PwE.

360-degree video was the medium chosen because it is a less resource-intensive (in time and cost) option for creating VR-ET scenarios with a high degree of realism. All videos were filmed in 4K at 30 frames/sec using the Ricoh Theta Z1 360° camera. Videos were edited using Adobe Premiere Pro (e.g., trimming, combining, adding transitions, special effects) and then uploaded to an unlisted playlist on YouTube for access by the study team and collaborators with lived experience. Scenes were viewed and evaluated on a computer screen in two dimensions as well as on a Meta Quest 2 VR head-mounted device (HMD).

Initial designs for exposure scenes to be recorded in Phase 2 were based on ES-interictal fears described in Phase 1 in conjunction with literature describing VR-ET design for other populations. Design changes were incorporated iteratively throughout Phase 2, following feedback collected from collaborators with lived experience and team design discussions. Figure 1 provides an overview of the timeline of Phase 2 activities, including filming and collection of feedback.

To better understand potential challenges with filming 360-degree videos, an initial prototype of one of the Phase 2 exposure scenarios was filmed with members of the study team. Scenes were rehearsed to make social interactions feel natural while introducing different elements to provoke anxiety. The videos were reviewed intermittently during filming to optimize camera positioning, sound (e.g., volume of dialogue with respect to background noise) and lighting conditions. Care was taken to verify that objects of focus, such as a person, were not in the camera’s stitch line (i.e., where the two spherical images generated by the 360-degree camera are merged). Finally, the prototype scenes were edited in Adobe Premiere Pro to test various special effects to simulate anxiety/seizure. Debrief notes from team discussions during filming sessions were recorded to serve as guidelines for the final three sets.
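The stitch-line check described above can be expressed as a simple angular test when planning where actors stand. The following is only a minimal sketch under stated assumptions: it assumes a dual-lens camera whose two seams lie at 90° and 270° of yaw relative to the front lens axis, and it uses an arbitrary 15° safety margin. The function names, seam angles, and margin are hypothetical illustrations and were not part of the study's procedure.

```python
def angular_distance(a_deg, b_deg):
    """Smallest absolute difference between two yaw angles, in degrees."""
    diff = (a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)


def near_stitch_line(subject_yaw_deg, margin_deg=15.0, seam_yaws_deg=(90.0, 270.0)):
    """Return True if a subject at the given yaw falls within the margin of a seam.

    Assumption: yaw 0 is the front lens axis, and the seams sit at the
    angles in seam_yaws_deg (hypothetical defaults for a two-lens camera).
    """
    return any(
        angular_distance(subject_yaw_deg, seam) <= margin_deg
        for seam in seam_yaws_deg
    )


# An actor directly in front of a lens is clear of the seams.
print(near_stitch_line(0))
# An actor standing almost side-on to the camera is close to a seam.
print(near_stitch_line(85))
```

In practice the seam positions and a sensible margin would need to be confirmed by reviewing test footage, as the team did during filming.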

The three scenarios selected for filming in Phase 2 included A) Dinner Party, B) Subway, and C) Shopping Mall. These particular settings were chosen because they encompass factors that contribute to ES-interictal anxiety as reported by participants in Phase 1, such as social factors, potential for physical danger, and presence of known environmental seizure triggers (Tchao et al., 2023). Additionally, we sought to film settings that would be relevant to a wider population and impactful when considering quality of life and social wellbeing.

Storyboards were planned following review of the prototype (Feedback Session #1) from an epileptologist as well as a neuropsychologist who specializes in epilepsy. Filming of the final exposure scenes occurred between December 2022 and January 2023, allowing for multiple opportunities to film in public spaces where environmental factors (e.g., crowds, noise) were difficult to control or varied by time of day. Actors who were part of the scenes (i.e., who interacted with the camera or were in close proximity to the camera) provided their permission to be filmed through a digital consent form.

Feedback was collected at various time points from our end-users (collaborators with lived experience): one PwE, two family members of PwE, and two professionals (one epileptologist and one neuropsychologist who works with PwE) (Table 1). Feedback was based on watching the exposure scenarios on YouTube on a computer screen or in a Meta Quest 2 VR HMD.

The final AnxEpiVR ET hierarchies include three scenarios: A) Dinner Party, B) Subway, and C) Shopping Mall (Figure 2), each containing seven 5-min scenes. Supplementary Appendix A provides a list of the final exposure hierarchies with descriptions of each scene, including the specific fears that the scenes were designed to evoke. Examples can be viewed as 360-degree YouTube videos through the AnxEpiVR Project Website.

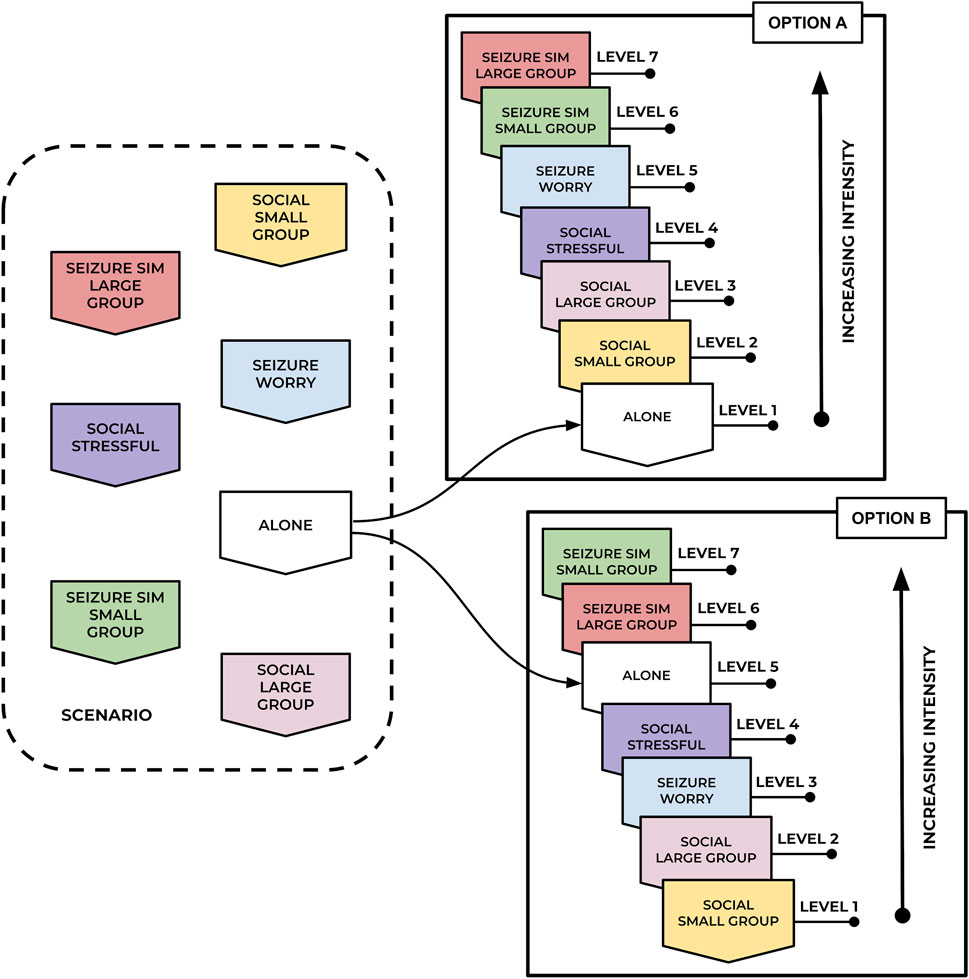

The three scenarios follow a similar progression where each scene centers around a distinct fear related to epilepsy/seizures (e.g., being alone and unable to receive help, social fears, physical danger) and incorporates additional feared elements, including increasing levels of noise or crowds, that would be naturally present in the environment. Additionally, seizure simulations are included in all scenarios to address anxiety specifically associated with a previous seizure experience. Together, the seven scenes within a given set are intended to form the building blocks from which a subset (or all scenes) may be selected and ordered in increasing intensity to create a graded exposure hierarchy suited to an individual’s unique fears (Figure 3).

FIGURE 3. Examples of personalized exposure hierarchies. Option A may suit PwE who experience anxiety in larger social groups while Option B may suit PwE who fear being alone in case of a seizure.
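The selection-and-ordering step behind a personalized hierarchy can be illustrated with a short sketch. This is a hedged example rather than the study's tooling: the `Scene` class, the `build_hierarchy` function, the scene titles other than "Seizure Worry", and the numeric intensity ratings are all hypothetical placeholders. It assumes each selected scene has been given an individual intensity rating, and simply orders the chosen subset from lowest to highest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    scenario: str
    title: str


def build_hierarchy(scenes, ratings):
    """Order the rated scenes from least to most anxiety-provoking.

    scenes:  all available scenes in a scenario.
    ratings: dict mapping scene title -> individual's intensity rating
             (hypothetical scale). Scenes without a rating are left out.
    """
    chosen = [s for s in scenes if s.title in ratings]
    return sorted(chosen, key=lambda s: ratings[s.title])


# Placeholder scenes; not the actual AnxEpiVR scene list.
subway = [
    Scene("Subway", "Alone on platform"),
    Scene("Subway", "Crowded train"),
    Scene("Subway", "Seizure Worry"),
    Scene("Subway", "Seizure with crowd"),
]

# A person who mainly fears crowds might rate only three of the scenes.
ratings = {"Crowded train": 4, "Alone on platform": 2, "Seizure with crowd": 7}
hierarchy = build_hierarchy(subway, ratings)
print([s.title for s in hierarchy])
# → ['Alone on platform', 'Crowded train', 'Seizure with crowd']
```

Keeping the ratings separate from the scene definitions reflects the idea that the same seven scenes can be reordered or subsetted for each individual.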

Table 2 presents a summary of what was achieved in filming the three exposure scenarios, as well as feedback from our lived experience collaborators. Certain elements (e.g., flashing lights or other visual patterns) were not included in videos for safety considerations to avoid known seizure triggers in the simulated environment. Likewise, physiological states (e.g., extreme hunger, lack of sleep, headache) identified as anxiety triggers were not explored due to limitations in feasibility.

The general impressions provided by our collaborators with lived experience who viewed the final videos were that the exposure scenarios managed to capture many fears related to ES-interictal anxiety, such as fear of being judged, fear of drawing attention to oneself, fear of having a seizure while alone, fear of having a seizure in front of others, and a fear of interacting with others. The acting was considered good or sufficient, and the videos were deemed generally appropriate for triggering varying levels of fear in PwE. Future considerations based on lessons learned by the team combined with recommendations from our end-users with lived experience are summarized in Table 2 and described in further detail below.

As it is not possible to see oneself in 360-degree video, the camera was mounted onto a torso mannequin (nicknamed “Cameron”) dressed in gender-neutral clothing for the filming of most scenes (Figure 4). This design decision was made after using the headset to view the prototype, which was filmed with the camera mounted to a tripod. The tripod was removed from the video in the editing phase, but this resulted in a sense of “floating.” Having the mannequin in place of the tripod was perceived as more natural during testing as it allowed the viewer to see shoulders/torso when looking downwards in VR.

FIGURE 4. Torso mannequin with mounted Ricoh Theta Z1 360-degree camera (“Cameron”): Image captured during the filming of the Subway scenario.

For scenes requiring Cameron to be seated, the torso was removed from its stand and placed directly on a chair or bench. In the dinner party scenes, Cameron was seated close to the dining table so that the table blocked any view of where legs should be. When seated away from a table (e.g., on the subway train or platform bench), a knapsack was placed on Cameron’s “lap” to hide direct view of the legs.

Seizure simulations were filmed with the camera positioned directly on the floor to represent the point of view of an individual lying on their side after falling during a seizure. When these scenes were tested in the HMD with the viewer in a seated position, the research team noted that the images felt unnatural because the low perspective in the virtual environment gave the sensation that the viewer’s head was resting/positioned upright directly on top of the floor. Alternatively, we found that lying on our side while testing the scene resulted in a more realistic sensation, as the HMD’s gyroscope automatically maintains the correct orientation of the image when the viewer rotates their head position sideways (see Figure 5). In practice, this would mean requesting that the PwE lie on their side during the exposure.

FIGURE 5. Camera positioned directly on floor to simulate fall during seizure. For seizure simulations it is necessary for the user to lie on their side in the real world to achieve the correct perspective in the virtual scene.

Although user feedback indicated that including camera movement within the scene might increase realism and sense of presence, we intentionally avoided introducing first-person motion into our scenes to reduce the likelihood of inducing simulator sickness, which has symptoms that overlap with anxiety (e.g., nausea, dizziness). Simulator sickness can occur when the user is static but the virtual environment is moving, resulting in a sensory mismatch between what the user sees and feels (Laessoe et al., 2023). Exclusion of first-person motion presented certain challenges with the scene narrative. For example, while waiting for the subway, the PwE cannot move toward the train once it arrives. It was thus necessary to plan scenes where it would be natural for the PwE to remain stationary, or include scene descriptions to preface each scene that would account for why the PwE might not be moving (e.g., waiting for a friend). As a compromise, some of the subway scenes included combining clips of different locations to give the appearance of moving through the transit system (e.g., from top of stairs, to subway platform, to on the train). However, the transitions were described by some of our lived experience end-users as disruptive to the viewing experience.

Additionally, we found it challenging to incorporate elements emphasizing the potential for physical danger should a seizure occur. For example, we explored different spaces in the subway station that might be considered “dangerous” such as the top of the stairs/escalator or on the platform near the tracks. However, with first-person motion excluded, it was more difficult to draw attention to the risk of falling than if, for example, the PwE walked down a crowded staircase or along a subway platform, stepping closer to the tracks to pass bystanders.

Filming in a private setting (e.g., dinner party) involved volunteer actors from the research team and provided greater flexibility when designing storyboards or manipulating environmental factors (e.g., background noise, number of people in scene). In contrast, filming in public spaces presented challenges with less predictable levels of crowding and noise. At least one member of the research team was required to remain near the camera while filming at subway stations or in the shopping mall to monitor for possible theft (which did not occur) as well as for unwanted attention from strangers that did not fit with the scene (which occurred frequently, including taking pictures of the mannequin, pointing, close examination). These unpredictable conditions made it particularly difficult to film exposure scenes where the viewer is completely surrounded by “crowds” or completely “alone.” In some cases, we needed to re-film scenes several times due to unplanned interactions between Cameron and strangers, or travel to different locations to achieve the desired number of people present in the scene. For “crowded” scenes, although we chose to film at transfer stations and busier areas of the mall during peak times, the number of people remained overall fewer than anticipated. Scenes on the subway train were filmed with the study team standing or seated close to the mannequin to try and create a greater sense of crowding as well as maintain the privacy of nearby strangers. This challenge with simulating crowds was reflected in user feedback, which included suggestions to add scenes with more crowding. Additionally, several end-users commented that the “alone” scenes felt very “long” and might be more effective if used in a session with a therapist who could help to incorporate imaginal elements. However, users agreed that PwE who fear being alone might benefit from the full 5 minutes to allow for sufficient time to habituate to their fear.

Verbal interactions with the PwE were achieved by incorporating rhetorical questions (e.g., “Don’t you agree?”) or simple questions (e.g., “What’s your name?” “Are you feeling okay?”). Users commented that being engaged in dialogue increased their sense of presence during testing, whereas in scenes without dialogue they felt a greater sense of being “a bystander,” watching the scene play out. However, due to the nature of 360-degree video, the degree to which the PwE can interact with the actors is limited to responding to these types of questions; the scene will always play out as prescribed, and cannot adapt to the PwE’s response. During testing, end-users commented that this limitation may disrupt the PwE’s sense of presence if their natural response to a question does not fit the content or timing of the subsequent dialogue.

Additional scenarios were suggested in our feedback collection, such as filming scenes from a workplace or school setting. It was pointed out that PwE experiencing ES-interictal anxiety may already avoid social settings such as a dinner party and would connect better with these more common, less avoidable settings. We would argue that exposure to social activities such as parties could be beneficial for reducing isolation and promoting overall social wellbeing, but agree that a school/work scene could present a viable scenario to film in the future. In fact, this type of setting may lend itself particularly well to being filmed in 360-degree because the dialogue is naturally more structured and predictable (e.g., raising one’s hand to answer a question, providing a brief explanation/presentation in front of others) when compared to the back-and-forth conversation that would occur at a party.

Each scenario includes three scenes focusing on seizure occurrences designed to provoke anxiety at different intensities. In the first type of scene, “Seizure Worry,” the PwE remains conscious and seated while strangers or “acquaintances” express concern (e.g., asking how they are feeling, commenting that they do not look well). These comments may contribute to worry of an oncoming seizure, or can suggest that the PwE might be experiencing an absence seizure (i.e., brief lapse in consciousness). In the second type of scene, the PwE regains consciousness after a seizure has occurred and is helped by one or two people nearby. In the third type of scene, the PwE regains consciousness while surrounded by many people who react to the situation with varying degrees of helpfulness (e.g., offering to call an ambulance, kneeling beside the PwE, staring while walking past on the subway platform).

Premiere Pro was used to add special effects (e.g., double-vision, heartbeat, blinking) to the seizure simulations. Following in-person testing, however, these effects were removed as both the core research team and lived experience end-users felt they did not effectively help to simulate seizures and detracted from the sense of presence. Instead, a “pre-seizure” clip filmed upright using the mannequin was combined with a “post-seizure” clip filmed with only the camera at floor-level. Fade-to-black transitions were not necessarily deemed representative of what a PwE would experience, but were determined to be the best option for stitching together clips to demonstrate the occurrence of a seizure.

Overall, our collaborators with lived experience provided positive feedback on the effectiveness of these scenes. For example, one individual commented, “[It is] good to expose PwE to this [in VR] since in real life PwE often can’t see people’s faces during a seizure.” Another said that, “Actors being helpful/kind was good as an intermediary step before seizure simulation with crowds.” Suggestions for future iterations included testing additional special effects to simulate the seizure (e.g., garbled audio, slowing down film speed), filming seizures near hard and/or uneven surfaces (e.g., bathtub, stairs), or filming a seizure simulation in third person (i.e., PwE witnesses an actor having a seizure). It was also recommended that dialogue regarding seizure worry be more generalized. For example, some comments in the scenes, such as from an “acquaintance” reminding the PwE about medication or limiting alcohol, may not apply either because many PwE would already naturally self-monitor or because acquaintances would not know enough to make this type of suggestion.

Beyond feedback related to the filmed content of the exposure scenarios, some collaborators provided suggestions related to the delivery of the intervention. One piece of feedback that was incorporated was the addition of brief scene descriptions (1–2 sentences) to provide adequate context for the PwE prior to viewing the exposure (see Supplementary Appendix A). Based on user suggestions, wording was modified to be more open-ended and brief (i.e., to allow the PwE more freedom to form their own interpretation while experiencing the scene) and to include specific physiological cues (e.g., “sweating,” “heart beating”) as opposed to feelings (e.g., “anxious,” “worried”). These scene descriptions are intended to be read aloud to participants by the researcher prior to each exposure in Phase 3. Based on user feedback, future iterations may include incorporating scene descriptions into the exposure as text preceding the scene, or else developing personalized scene descriptions to be read aloud by the PwE in first-person (e.g., “I am waiting for the subway…”). Additionally, as a way to increase fear intensity, the neuropsychologist gave examples of how a therapist might instruct the PwE to spin around in a chair or sit near something warm prior to an exposure to evoke physiological sensations that mimic anxiety (e.g., dizziness, warmth/sweating).

VR-ET has been successfully used to treat anxiety disorders including specific phobias and post-traumatic stress disorder, typically relying on CGI to create customized virtual environments for the exposures (Emmelkamp et al., 2002; Baños et al., 2011; Radkowski et al., 2011). To our knowledge, this is the first VR-ET program designed specifically for PwE who experience ES-interictal anxiety. We created our program using 360-degree video, which has been described as a promising medium for designing VR-ET that has only more recently started to be explored (Deng et al., 2019; Andersen et al., 2023). Our methodology involved a true participatory design process which spanned several months, allowing time to build shared knowledge with our collaborating lived experience end-users (Teng et al., 2019) through their involvement as integral members of the research team. Below, we discuss the successes and challenges we encountered while creating our MVP to highlight the strengths and limitations of using this medium for creating VR-ET scenarios.

Through the development of our MVP, we were able to show that the 360-degree video medium is capable of achieving a realistic, immersive experience for the user without requiring extensive technical training for the designer, and on a modest budget. Our collaborators, including a neuropsychologist and epileptologist, reviewed the exposure scenes and believed them to be safe and inclusive of elements that could effectively evoke ES-interictal anxiety for a variety of PwE. Based on feedback from our collaborators with lived experience, we believe that 360-degree video may be a particularly effective medium for creating seizure simulations due to its ability to portray the reactions of bystanders, including facial expressions, with photorealistic quality. This is consistent with literature evaluating the ability of 360-degree video to induce a strong sense of presence (Holmberg et al., 2020; Reeves et al., 2021; Lundin et al., 2022).

We nonetheless encountered a number of challenges when creating our VR-ET program. In some cases, these challenges were unrelated to using 360-degree video. For example, using special effects to simulate the sensory experience of having a seizure was a challenge since the symptoms experienced can be highly individualized or dependent on seizure type. Likewise, we chose not to incorporate certain fear triggers for safety reasons (i.e., seizure triggers such as flashing lights, visual patterns), or for challenges with feasibility unrelated to the VR medium (e.g., internal states such as hunger, stress, fatigue). On the other hand, some difficulties we encountered were directly related to the 360-degree video medium, which we discuss below.

One such challenge identified during the design process was creating exposure scenes that could both be readily filmed and suitable for a broad range of PwE. ES-interictal anxiety is a complex and heterogeneous condition that comprises unique fear stimuli, making it necessary to consider which elements can be effectively portrayed through 360-degree video. It is important to highlight that designing VR-ET for PwE is simply less straightforward when compared to designing VR-ET for certain anxiety disorders such as specific phobias, where it is easier to identify and simulate the fear stimulus and modify conditions to produce varying levels of intensity in the exposures. For example, to treat arachnophobia, many VR-ETs use CGI to manipulate the appearance of the spiders and vary the level of interaction with them to create their graded hierarchy (Miloff et al., 2016; Lindner, 2021). Likewise, in VR-ET for acrophobia, individuals might progress in an ordinal manner through different stories of a tall building to finally walking out onto a plank protruding from a great height (Rimer et al., 2021). In contrast, phobic stimuli for PwE are varied and unique (Tchao et al., 2023) and may need to be addressed through a more tailored VR-ET design.

Although our MVP captures a number of different fear stimuli and allows for customization through scene selection and ordering, there may be overall an insufficient number of levels of intensity to address specific fears. As each scene incorporates a number of different fear elements, it was not possible to isolate and manipulate each specific fear variable (e.g., crowds) in a graded hierarchy, as is more commonly done in CGI-based exposures. Nevertheless, this limitation of our MVP was actually identified as a strength by one collaborator with lived experience who felt that having a combination of fears in each scene better portrayed exposure to “real life” scenarios.

In general, we found exerting “control” over elements of the scene to be challenging, particularly when filming in public locations. For example, we were unable to capture the level of crowding we desired due to unpredictable numbers of bystanders, which may reduce our MVP’s potential to elicit fear. Even so, filming in very crowded conditions would not have been possible due to concerns for the privacy of bystanders (it was determined unrealistic to obtain consent for filming from all strangers who might enter the scene) as well as unwanted attention from bystanders (e.g., staring and pointing at the camera/mannequin). With additional resources, a larger team of actors could be used to create a localized crowd around the camera to better achieve the desired effect.

Lastly, the greatest difficulty with designing VR-ET using 360-degree video entails recreating believable and socially realistic storylines (Flobak et al., 2019). Although feedback we received was mostly positive or minor in this regard, we found the lack of interactivity that 360-degree video allows to be a barrier to creating believable narratives. Generally, when compared with CGI, 360-degree video provides a more passive experience for users since they cannot move freely within the virtual environment. Instead, users view the landscape from positions predetermined by the designer and can only interact with assets through pre-scripted dialogue. This is a known limitation, though studies have found that 360-degree video has been successfully implemented for types of exposures that require only one-directional interaction (e.g., fear of heights, speaking in front of a classroom, crowded areas) (Stevens and Sherrill, 2021). On the other hand, our scenarios also included combinations of specific fears within the context of public and social settings that generally require two-directional interaction with the environment (e.g., walking across a subway platform and onto a train, initiating conversations with strangers at a party, completing a shopping task with a time pressure). Based on feedback from our collaborators, the lack of potential for interactivity may have reduced our VR-ET program’s ability to create a sense of presence, to some degree. The social interactions that we were able to include were generic and limited to simple questions requiring short responses, and the lack of translational movement made it more difficult to simulate physical danger or task-based activities.

There are a few limitations of our methodology that are important to highlight when considering the generalizability of our findings. The first is that our VR-ET program is an MVP, which means that it should not be considered “finished” or “complete.” For instance, we chose to delay incorporating some of our collaborators’ feedback, such as creating a fourth work/school scenario that may resonate with PwE who don’t relate to attending dinner parties. We made this type of decision consciously, based on design science research. Instead of attempting to create a “perfect” product, we focused on building an MVP from which we were able to, and will continue to, gather feedback, identify challenges, and iterate based on evaluation. In other words, we felt it was important to first test whether our design includes any fundamental flaws before investing additional time to refine it. Design science research highlights the importance of incorporating this type of evaluation during the creation process, and has found that this iterative approach is a cost-effective way to efficiently identify and correct design flaws early on (Thomke and Reinertsen, 2012; Abraham et al., 2014). Although this approach allowed us to flexibly incorporate user feedback and learnings to strengthen our VR-ET program throughout the design process, it must also be kept in mind when considering any limitations of our current product.

Second, although feedback was solicited from people with lived experience (n = 5) throughout the design process, this small sample consisted only of collaborators with the research team and included only one PwE, limiting the overall generalizability of guidance provided. Furthermore, we are not able to describe whether the feedback from our PwE collaborator differed from that of our non-PwE collaborators in any way, and not every collaborator tested the exposure scenes in the HMD; some viewed videos only on a computer screen. While these individuals could comment on certain aspects such as the appropriateness of the scene design or quality of the actors’ performance, without the fully immersive experience, they were less able to comment on the overall realism of the exposures.

Lastly, our MVP was filmed using members of the research team as actors in the scenes, with the same individuals appearing in multiple locations intended to include only strangers. Thus, it is possible that viewers may be distracted by repeated appearances of actors, or that Phase 3 participants may recognize certain team members from the initial study consent and screening process. Additionally, although the acting was described as believable during the feedback process, there may be limitations to the actors’ performance given lack of professional training. In particular, any seemingly unnatural interactions that were recorded while simulating social interactions may interfere with the PwE’s sense of presence in the scene.

Emerging technological advancements have the potential to help address a number of the challenges and limitations we encountered, and could be incorporated into future iterations of the AnxEpiVR VR-ET or other VR-ET programs. It is becoming easier to embed computer graphic elements into 360-degree video, which could be helpful for introducing interactivity with the virtual environment (i.e., social interaction, movement through the scene). For example, to create adaptive social interactions, designers could make use of new OpenAI chat-based language models (OpenAI, 2023) to mimic human conversation through speech/text. Such AI-based conversational agents are already emerging in certain self-guided training and mental health applications (Suganuma et al., 2018; Moore et al., 2022). Also on the horizon are other OpenAI deep learning models such as DALL·E 2, which can generate digital images from natural language descriptions (DALL·E, 2023). This technology may create opportunities to build entirely bespoke 3D environments using CGI based on individual patient descriptions of scenes. At the same time, 360-degree cameras may become more accessible in the future (e.g., embedded in cell phones), making it easier for healthcare professionals or family members to capture environments for personalized exposure scenarios (Stevens and Sherrill, 2021).

Through a participatory design process, we developed an MVP VR-ET consisting of three customizable hierarchies that are ready to be piloted with PwE in Phase 3 of the AnxEpiVR Study. People with lived experience collaborated with the research team to help guide the design, providing feedback from a variety of perspectives to improve the final product. We chose 360-degree video to create our exposure scenarios due to its affordability, accessibility, and potential for evoking a strong sense of presence. The technology adequately captured many fears related to epilepsy-specific interictal anxiety, such as fear of having a seizure while alone or in front of others. The product was judged to be safe, to be suitable for a variety of users, and to have a reasonably high level of fidelity. Despite challenges specific to 360-degree video, such as limited user interactivity with the virtual world and less control of feared stimuli within a scene, emerging technological advancements have potential to help address some limitations encountered and to assist with creating more personalized exposures.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

The studies involving human participants were reviewed by the York University Human Participants Review Committee and approved on 31 May 2022 (certificate number: 2022–105). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

LA and HG contributed to the conception of the study. LA, HG, SL-F, DT, EN, SP, and LH contributed to the design of the protocol and the interpretation of the findings. SL-F, DT, and LA wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

This pilot trial was possible due to the generosity of York University’s Faculty of Health through their Junior Faculty Funds/Minor Research Grant, as well as the Anxiety Research Fund powered by Beneva. Both funders provided funds based on a competitive review of the study protocol, but were not involved in the design, analysis, or write-up of the manuscript. Thanks to the Frontiers editorial board, we will receive a 50% discount on open access fees for this special issue.

We would like to extend our gratitude to Glenda Carman-HG, David Gold, Esther Bui, and Kathleen Langstroth for sharing their expertise to optimize this virtual reality exposure therapy. We would also like to acknowledge our appreciation for our collaborators who donated their time to assist with filming the exposure scenarios: Eaton Asher, Melina Bellini, DC, Kyle Cheung, Eduardo Garcia-Giller, Isabella Garito, Penelope Serrano Jimenez, Pi Nasir, Michael Reber, Essete Tesfaye, and Bill Zhang.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1209535/full#supplementary-material

Abraham, R., Aier, S., and Winter, R. (2014). “Fail early, fail often: Towards coherent feedback loops in design science research evaluation,” in 2014 International Conference on Information Systems (ICIS 2014) (AIS Electronic Library (AISeL): Association for Information Systems). Available from: http://aisel.aisnet.org/icis2014/proceedings/ISDesign/3/ (Accessed December 7, 2022).

Abramowitz, J. S. (2013). The practice of exposure therapy: Relevance of cognitive-behavioral theory and extinction theory. Behav. Ther. 44 (4), 548–558. doi:10.1016/j.beth.2013.03.003

Andersen, N. J., Schwartzman, D., Martinez, C., Cormier, G., and Drapeau, M. (2023). Virtual reality interventions for the treatment of anxiety disorders: A scoping review. J. Behav. Ther. Exp. Psychiatry 81, 101851. doi:10.1016/j.jbtep.2023.101851

Baños, R. M., Guillen, V., Quero, S., García-Palacios, A., Alcaniz, M., and Botella, C. (2011). A virtual reality system for the treatment of stress-related disorders: A preliminary analysis of efficacy compared to a standard cognitive behavioral program. Int. J. Hum-Comput Stud. 69 (9), 602–613. doi:10.1016/j.ijhcs.2011.06.002

Benbow, A. A., and Anderson, P. L. (2019). A meta-analytic examination of attrition in virtual reality exposure therapy for anxiety disorders. J. Anxiety Disord. 61, 18–26. doi:10.1016/j.janxdis.2018.06.006

Boeldt, D., McMahon, E., McFaul, M., and Greenleaf, W. (2019). Using virtual reality exposure therapy to enhance treatment of anxiety disorders: Identifying areas of clinical adoption and potential obstacles. Front. Psychiatry 10, 773. doi:10.3389/fpsyt.2019.00773

Bouchard, S., Dumoulin, S., Robillard, G., Guitard, T., Klinger, É., Forget, H., et al. (2017). Virtual reality compared with in vivo exposure in the treatment of social anxiety disorder: A three-arm randomised controlled trial. Br. J. Psychiatry J. Ment. Sci. 210 (4), 276–283. doi:10.1192/bjp.bp.116.184234

DALL·E (2023). Creating images from text. Available from: https://openai.com/research/dall-e (Accessed April 19, 2023).

Deacon, B. J., Farrell, N. R., Kemp, J. J., Dixon, L. J., Sy, J. T., Zhang, A. R., et al. (2013). Assessing therapist reservations about exposure therapy for anxiety disorders: The therapist beliefs about exposure scale. J. Anxiety Disord. 27 (8), 772–780. doi:10.1016/j.janxdis.2013.04.006

Deng, W., Hu, D., Xu, S., Liu, X., Zhao, J., Chen, Q., et al. (2019). The efficacy of virtual reality exposure therapy for PTSD symptoms: A systematic review and meta-analysis. J. Affect Disord. 257, 698–709. doi:10.1016/j.jad.2019.07.086

Emmelkamp, P. M. G., Krijn, M., Hulsbosch, A. M., de Vries, S., Schuemie, M. J., and van der Mast, C. A. P. G. (2002). Virtual reality treatment versus exposure in vivo: A comparative evaluation in acrophobia. Behav. Res. Ther. 40 (5), 509–516. doi:10.1016/s0005-7967(01)00023-7

Flobak, E., Wake, J. D., Vindenes, J., Kahlon, S., Nordgreen, T., and Guribye, F. (2019). “Participatory design of VR scenarios for exposure therapy,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–12. (CHI ’19). doi:10.1145/3290605.3300799

Gill, P., Stewart, K., Treasure, E., and Chadwick, B. (2008). Methods of data collection in qualitative research: Interviews and focus groups. Br. Dent. J. 204 (6), 291–295. doi:10.1038/bdj.2008.192

Gray, H. G., Tchao, D., Lewis-Fung, S., Pardini, S., Harris, L. R., and Appel, L. (2023). Virtual reality therapy for people with epilepsy and related anxiety: Protocol for a 3-phase pilot clinical trial. JMIR Res. Protoc. 12, e41523. doi:10.2196/41523

Hingray, C., McGonigal, A., Kotwas, I., and Micoulaud-Franchi, J. A. (2019). The relationship between epilepsy and anxiety disorders. Curr. Psychiatry Rep. 21 (6), 40. doi:10.1007/s11920-019-1029-9

Holmberg, T. T., Eriksen, T. L., Petersen, R., Frederiksen, N. N., Damgaard-Sørensen, U., and Lichtenstein, M. B. (2020). Social anxiety can be triggered by 360-degree videos in virtual reality: A pilot study exploring fear of shopping. Cyberpsychol. Behav. Soc. Netw. 23 (7), 495–499. doi:10.1089/cyber.2019.0295

Ionescu, A., Van Daele, T., Rizzo, A., Blair, C., and Best, P. (2021). 360° videos for immersive mental health interventions: A systematic review. J. Technol. Behav. Sci. 6 (4), 631–651. doi:10.1007/s41347-021-00221-7

Kaplan, J. S., David, F., and Tolin, P. (2011). Exposure therapy for anxiety disorders. Available from: https://www.psychiatrictimes.com/view/exposure-therapy-anxiety-disorders (Accessed March 28, 2023).

Kitzinger, J. (1995). Qualitative research: Introducing focus groups. BMJ 311 (7000), 299–302. doi:10.1136/bmj.311.7000.299

Laessoe, U., Abrahamsen, S., Zepernick, S., Raunsbaek, A., and Stensen, C. (2023). Motion sickness and cybersickness – sensory mismatch. Physiol. Behav. 258, 114015. doi:10.1016/j.physbeh.2022.114015

Lindner, P. (2021). Better, virtually: The past, present, and future of virtual reality cognitive behavior therapy. Int. J. Cogn. Ther. 14 (1), 23–46. doi:10.1007/s41811-020-00090-7

Lundin, J., Lundström, A., Gulliksen, J., Blendulf, J., Ejeby, K., Nyman, H., et al. (2022). Using 360-degree videos for virtual reality exposure in CBT for panic disorder with agoraphobia: A feasibility study. Behav. Cogn. Psychother. 50 (2), 158–170. doi:10.1017/s1352465821000473

Miloff, A., Lindner, P., Hamilton, W., Reuterskiöld, L., Andersson, G., and Carlbring, P. (2016). Single-session gamified virtual reality exposure therapy for spider phobia vs. traditional exposure therapy: Study protocol for a randomized controlled non-inferiority trial. Trials 17 (1), 60. doi:10.1186/s13063-016-1171-1

Moore, N., Ahmadpour, N., Brown, M., Poronnik, P., and Davids, J. (2022). Designing virtual reality–based conversational agents to train clinicians in verbal de-escalation skills: Exploratory usability study. JMIR Serious Games 10 (3), e38669. doi:10.2196/38669

Munger Clary, H. M. (2014). Anxiety and epilepsy: What neurologists and epileptologists should know. Curr. Neurol. Neurosci. Rep. 14 (5), 445. doi:10.1007/s11910-014-0445-9

Munger Clary, H. M., Giambarberi, L., Floyd, W. N., and Hamberger, M. J. (2023). Afraid to go out: Poor quality of life with phobic anxiety in a large cross-sectional adult epilepsy center sample. Epilepsy Res. 190, 107092. doi:10.1016/j.eplepsyres.2023.107092

News, N. (2023). Fear of public places is common in adults with epilepsy. Neuroscience News. Available from: https://neurosciencenews.com/agoraphobia-epilepsy-22398/ (Accessed March 27, 2023).

OpenAI (2023). OpenAI API. Available from: https://platform.openai.com (Accessed April 11, 2023).

Powers, M. B., and Emmelkamp, P. M. G. (2008). Virtual reality exposure therapy for anxiety disorders: A meta-analysis. J. Anxiety Disord. 22 (3), 561–569. doi:10.1016/j.janxdis.2007.04.006

Radkowski, R., Huck, W., Domik, G., and Holtmann, M. (2011). “Serious games for the therapy of the posttraumatic stress disorder of children and adolescents,” in Virtual and mixed reality - systems and applications. Editor R. Shumaker (Berlin, Heidelberg: Springer), 44–53. (Lecture Notes in Computer Science).

Reeves, R., Curran, D., Gleeson, A., and Hanna, D. (2022). A meta-analysis of the efficacy of virtual reality and in vivo exposure therapy as psychological interventions for public speaking anxiety. Behav. Modif. 46 (4), 937–965. doi:10.1177/0145445521991102

Reeves, R., Elliott, A., Curran, D., Dyer, K., and Hanna, D. (2021). 360° video virtual reality exposure therapy for public speaking anxiety: A randomized controlled trial. J. Anxiety Disord. 83, 102451. doi:10.1016/j.janxdis.2021.102451

Rimer, E., Husby, L. V., and Solem, S. (2021). Virtual reality exposure therapy for fear of heights: Clinicians’ attitudes become more positive after trying VRET. Front. Psychol. 12, 671871. Available from: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.671871. (Accessed April 4, 2023). doi:10.3389/fpsyg.2021.671871

Seyama, J., and Nagayama, R. S. (2007). The uncanny valley: Effect of realism on the impression of artificial human faces. Presence Teleoperators Virtual Environ. 16 (4), 337–351. doi:10.1162/pres.16.4.337

Slattery, P., Saeri, A. K., and Bragge, P. (2020). Research co-design in health: A rapid overview of reviews. Health Res. Policy Syst. 18 (1), 17. doi:10.1186/s12961-020-0528-9

Stevens, T. M., and Sherrill, A. M. (2021). A clinician’s introduction to 360° video for exposure therapy. Transl. Issues Psychol. Sci. 7, 261–270. doi:10.1037/tps0000302

Suganuma, S., Sakamoto, D., and Shimoyama, H. (2018). An embodied conversational agent for unguided internet-based cognitive behavior therapy in preventative mental health: Feasibility and acceptability pilot trial. JMIR Ment. Health 5 (3), e10454. doi:10.2196/10454

Sutton, J. (2020). What is virtual reality therapy? The future of psychology. PositivePsychology.com. Available from: https://positivepsychology.com/virtual-reality-therapy/ (Accessed March 28, 2023).

Tchao, D., Lewis-Fung, S., Gray, H., Pardini, S., Harris, L. R., and Appel, L. (2023). Describing epilepsy-related anxiety to inform the design of a virtual reality exposure therapy: Results from Phase 1 of the AnxEpiVR clinical trial. Epilepsy Behav. Rep. 21, 100588. doi:10.1016/j.ebr.2023.100588

Teal, G., McAra, M., Riddell, J., Flowers, P., Coia, N., and McDaid, L. (2023). Integrating and producing evidence through participatory design. CoDesign 19 (2), 110–127. doi:10.1080/15710882.2022.2096906

Teng, M. Q., Hodge, J., and Gordon, E. (2019). “Participatory design of a virtual reality-based reentry training with a women’s prison,” in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (New York, NY, USA: Association for Computing Machinery), 1–8. (CHI EA ’19). doi:10.1145/3290607.3299050

Thomke, S., and Reinertsen, D. (2012). Six myths of product development. Harvard Business Review. Available from: https://hbr.org/2012/05/six-myths-of-product-development (Accessed December 7, 2022).

Keywords: epilepsy, anxiety, virtual reality, exposure therapy, cognitive behavioural therapy (CBT), nonpharmacological intervention, 360-degree video, user-centred design

Citation: Lewis-Fung S, Tchao D, Gray HG, Nguyen E, Pardini S, Harris LR, Calabia D and Appel L (2023) Designing virtual reality exposure scenarios to treat anxiety in people with epilepsy: Phase 2 of the AnxEpiVR clinical trial. Front. Virtual Real. 4:1209535. doi: 10.3389/frvir.2023.1209535

Received: 20 April 2023; Accepted: 03 July 2023;

Published: 19 July 2023.

Edited by:

Dioselin Gonzalez, Independent researcher, Greater Seattle Area, United States

Reviewed by:

Kai Wang, China United Network Communications Group, China

Copyright © 2023 Lewis-Fung, Tchao, Gray, Nguyen, Pardini, Harris, Calabia and Appel. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lora Appel, lora.appel@uhn.ca
