- Chair of Human-Machine Communication, TUM School of Computation, Information and Technology, Technical University of Munich, Munich, Germany

Virtual reality offers exciting new opportunities for training. This inspires more and more training fields to move from the real world to virtual reality, but some modalities are lost in this transition. In the real world, participants can physically interact with the training material; virtual reality offers several interaction possibilities, but do these affect the training’s success, and if so, how? To find out how interaction methods influence the learning outcome, we evaluate the following four methods based on ordnance disposal training for civilians: 1) Real-World, 2) Controller-VR, 3) Free-Hand-VR, and 4) Tangible-VR in a between-subjects experiment (n = 100). We show that the Free-Hand-VR method lacks haptic realism and has the worst training outcome. Training with haptic feedback, e.g., Controller-VR, Tangible-VR, and Real-World, leads to a better overall learning effect and matches the participants’ self-assessment. Overall, the results indicate that free-hand interaction is improved by the extension of a tracked tangible object, but the controller-based interaction is most suitable for VR training.

1 Introduction

A virtual environment offers unique advantages compared to the real world. There are no physical limitations, dangerous situations, or expensive resources. Best of all, it is accessible at any time and from anywhere. Therefore, virtual reality (VR) provides multiple opportunities for generating immersive training scenarios with convincing training success. Explosive ordnance disposal (EOD) training is one field of application in which all these advantages can contribute to more effective training. Moreover, the current situation in Ukraine emphasizes the demand for EOD experts, since Ukrainian civilians, such as English teachers, are being trained in EOD (Bajrami, 2022). This training is highly relevant because, after a war, the disposal of all unexploded ordnance takes many years, causes many civilian casualties, and is life-threatening for the EOD specialists (Khamvongsa and Russell, 2009). Even the slightest differences in training success can have severe consequences, so one might assume that many applications and scientific contributions aim to increase training quality. In fact, however, most scientific contributions are no longer state of the art because of the technologies used (Chung et al., 1996) or merely visualize explosive ordnance on a movable QR code marker (Tan, 2020). Recently, one scientific contribution investigated the suitability of VR with a non-see-through Head-Mounted-Display (HMD) and Mixed Reality (MR) in a Cave Automatic Virtual Environment (CAVE) for the collaborative training of civilian EOD experts (Rettinger and Rigoll, 2022). Compared to conventional training, the results demonstrated that MR achieved significantly the best results, followed by VR training. However, an HMD offers crucial advantages in terms of initial cost, maintenance, and flexibility, so it is important to investigate whether the training success of VR training can be improved.
Here, the interaction could have an impact, as it differs in certain aspects between a CAVE and an HMD. For example, users who hold a tangible object in their hands can see it in the CAVE but not through an HMD. Although users can haptically feel and move the tangible object with both technologies, only the HMD offers the possibility to visualize the object in a different way. This means that, e.g., the object’s cross-section or specific animations of internal components can be presented more understandably. There are plenty of creative interaction ideas in research, e.g., Haptic PIVOT: Dynamic appearance and disappearance of a haptic proxy in the user’s palm (Kovacs et al., 2020), VR Grabbers: A chopstick-like tool for grabbing virtual objects (Yang et al., 2018), or Haptic Links: Bi-manual grabbing by two controllers connected with a chain (Strasnick et al., 2018), but it is unclear which type of interaction is best suited for VR training. Previous research results indicate that controller-based interaction is more suitable for VR training than free-hand interaction, even though the latter provides a natural and intuitive interaction for the user (Caggianese et al., 2019). One reason for this is the problem of occlusion, which negatively affects the stability and accuracy of the sensor data and, thus, the user’s interaction. Since there is a lack of knowledge on how to circumvent this problem for VR training, we investigate a new approach and compare it with conventional interaction methods to determine how the interaction modality affects the learning outcome.

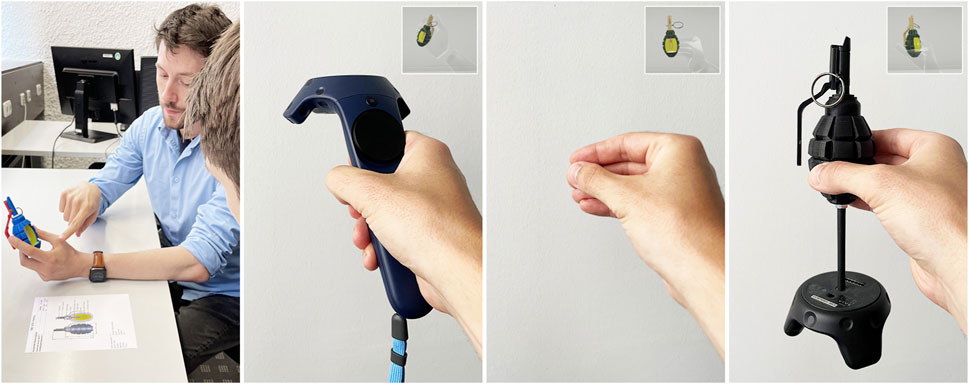

Therefore, we investigate how different interaction methods affect collaborative VR EOD training for civilians by comparing the following four conditions depicted in Figure 1: 1) Conventional training (Real-World), 2) VR training with controllers (Controller-VR), 3) VR training with a Leap Motion (Free-Hand-VR), and 4) VR training with a Leap Motion and a tangible grenade model (Tangible-VR).

FIGURE 1. Four compared interaction methods for ordnance disposal training: 1) Real-World, 2) Controller-VR, 3) Free-Hand-VR, and 4) Tangible-VR (left to right).

For this purpose, we design a training scenario in cooperation with a training center for EOD training and a humanitarian foundation to explain a grenade’s technical functionality, component specifics, and implications after detonation to investigate the following research questions:

RQ1: Does a tangible object improve Free-Hand-VR training?

RQ2: Which of the interaction methods is preferred for VR training?

All significant results of a between-subjects user study (n = 100) revealed that the Free-Hand-VR condition performed worst. They also indicated that the extension of the Free-Hand-VR interaction method with a tangible object (Tangible-VR) performed substantially better. This should be investigated further, as this interaction method performs overall slightly worse than the controller condition (Controller-VR).

2 Related work

VR training has a variety of applications, including industrial (Funk et al., 2016; Tocu et al., 2020; Ulmer et al., 2020), medical (Rettinger et al., 2021; Yu et al., 2021; Rettinger et al., 2022a), and safety training (Zhao et al., 2018; Clifford et al., 2019; Gisler et al., 2021). Many related research contributions also report a higher training success compared to conventional training in the real world. Beyond that, state-of-the-art technologies offer multiple possibilities for interacting with virtual objects like controllers, data gloves, tangible objects, or free-hand sensors. Here, traditional VR controllers provide a better usability (Masurovsky et al., 2020), performance (Gusai et al., 2017), and learning curve (Caggianese et al., 2019) than free-hand sensors, thanks to their better stability and accuracy. Tangible objects positively affect interaction (Hinckley et al., 1994; Wang et al., 2020), performance (Franzluebbers and Johnsen, 2018; Bozgeyikli and Bozgeyikli, 2022), presence (Hoffman, 1998; Insko, 2001; Kim et al., 2017), spatial skills, and cognition (Mazalek et al., 2009; Cuendet et al., 2012).

Strandholt et al. investigated the influence of physical representations in VR. In a user study (n = 20), participants interacted with three virtual tools (hammer, screwdriver, and saw) using different interaction methods (Strandholt et al., 2020). They compared the following three conditions: 1) an HTC Vive controller whose movements correspond to those of the virtual tool, 2) a Vive tracker attached to the physical tools so that the physical representation corresponds to the virtual one, and 3) like condition 2 but with a physical surface (a table or a board) on which the tools are used. The results demonstrate that the physical tool with the physical surface condition is the most realistic, followed by the physical tool without a surface.

Muender et al. (2019) evaluated the effect of tangibles with different levels of detail based on a user study (n = 24) and an expert interview (n = 8). Uniformly shaped objects with no similarities to the virtual objects were compared with Lego-built and 3D-printed tangibles resembling the virtual object. The user study results do not show significant differences in haptics, grasping precision, or perceived performance. Overall, the results demonstrate that Lego provides the best compromise between sufficient detail and fast implementation.

Wang et al. (2020) explored how haptic feedback with a real tangible physical surface differs from interaction without a physical surface in a collaborative system. For this purpose, they conducted a user study (n = 28) in which the participants had to solve a Lego brick assembly task. The results show that the user experience is significantly better with the physical surface condition. This interaction method is also preferred by most of the participants.

Gusai et al. (2017) investigated two interaction modalities in a collaborative scenario. The conditions HTC Vive Controller and Leap Motion Controller were compared in a user study (n = 30). The participant’s task was picking up and putting down virtual objects by pushing and releasing a button in the first condition and using natural grasping and releasing gestures in the second condition. In total, the Vive controller performed best since it has higher accuracy and stability. In response to the question about which method the participants would prefer, 87 percent chose the HTC Vive Controller.

Overall, previous work does not focus on the effects of the interaction methods on training but rather on the user experience. In this work, we focus on the impact of the interaction methods Controller-VR and Free-Hand-VR on the training success. Since the mentioned contributions lead to the assumption that the training success is worse with the Free-Hand-VR condition, we also investigate the effects of combining a tracked tangible object with a free-hand sensor.

3 Concept: interaction methods

There are various explosive ordnances, differing not only optically but also regarding, e.g., the used explosives, their architectures, or ignition mechanisms. EOD experts require all this complex knowledge of these ordnances to perform the following steps: 1) Identify the explosive ordnance, 2) Assess the associated risks, and 3) Defuse or detonate it in a controlled way. The acquisition of these skills requires a large investment of time, so in this experiment, we focus the training content on the functionality of one ordnance, its component designations, and implications after detonation. For this, we use the Soviet F-1 hand grenade, which offers advantages regarding the manageable training complexity and compact size.

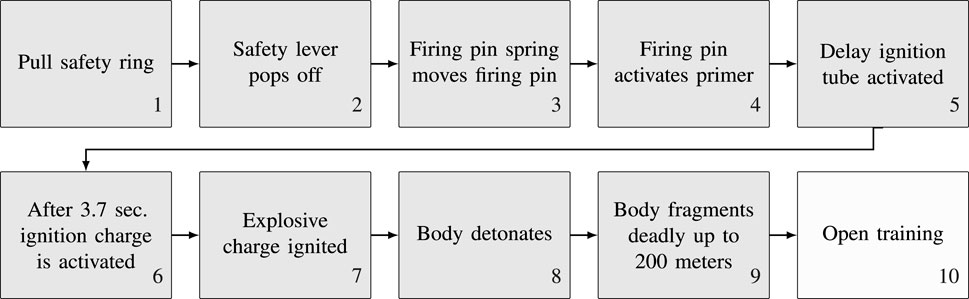

The design of the training procedure depicted in Figure 2 is carried out in cooperation with a training center for EOD training and a humanitarian foundation. It focuses on explaining the technical functionality of the grenade, its component designations, and implications after detonation to the subjects. This content, which the instructor teaches, is divided into ten steps. In the first nine steps, the instructor explains the content identically to every participant. This technical instruction takes about 3 minutes and is followed by open training, where the participants can study the relevant training materials independently. Meanwhile, the instructor continues to assist them in clarifying any questions or ambiguities. The maximum training duration is limited to 10 minutes. The following sub-chapters describe the four interaction methods used for the experiment.

3.1 Real-World training

In this method, we use one of the most up-to-date tools in the area of EOD training. This is a 3D-printed replica of the grenade with a cut-away window to depict inner processes and components, as illustrated in Figure 3. Its color-coded components facilitate the distinction between the components for studying the technical functionality. The grenade, designed by the Advanced Ordnance Teaching Materials (AOTM) program (Tan, 2014), additionally provides the ability to test the firing mechanism interactively. After the user pulls the safety ring, a spring-loaded firing pin triggers the primer. In addition to the printed model, the participants receive a print-out illustrating the respective components and their technical designations (see Figure 1).

FIGURE 3. Different perspectives of the 3D-printed F-1 grenade, used in the Real-World method. This is one of the state-of-the-art training tools in explosive ordnance disposal.

3.2 VR training conditions

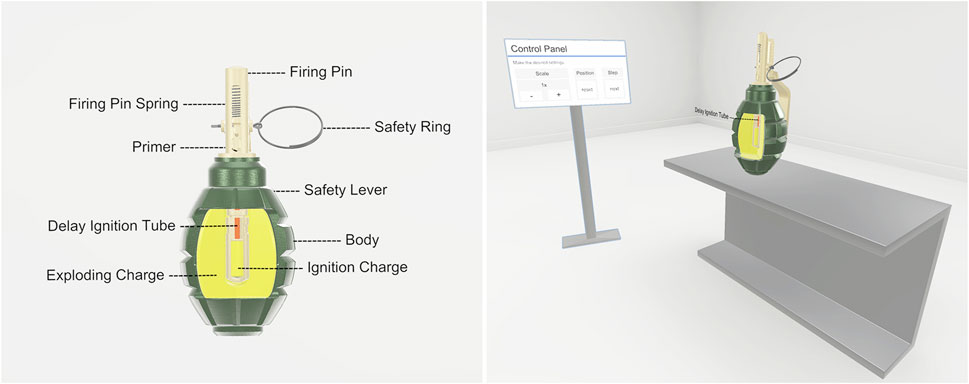

For the other three training scenarios, we use a VR HMD. There are two virtual objects the participants and the instructor can interact with. One is a digital 3D model of the grenade that users can grab, transform in six degrees of freedom (6-DOF), and release by using one of the respective interaction methods. This allows the model to be viewed from different perspectives and distances, similar to the Real-World, with identical scaling.

The other object is a virtual touch screen, via which users can control the grenade and the training in the VR-based scenarios and which offers three functionalities. As shown in Figure 4, it is possible to interactively scale the virtual grenade up and down to see the details of the individual components. Another function is placing the virtual grenade on the virtual table (see Figure 4) if the users do not want to hold it in their hands. The training is broken down into several explanation steps; at each step, only the currently relevant information is displayed. The user can iterate over these steps using the virtual touch screen. As illustrated in Figure 4, it is also possible to display all the components and their designations. The grenade model is rendered accordingly as a whole or as a cross-section to ensure that the relevant information can be clearly extracted from the respective training steps. This type of information visualization is used to avoid affecting the participants’ cognitive performance with unnecessary information (Sweller and Chandler, 1994; Sweller, 2010). After the instructor has explained the defined training content, participants can go through the training and interact with all virtual objects. In this multi-user application, both persons are in the same physical room and tracking space, so the virtual position matches the physical one, and the participants can also hear each other’s voices from the physically correct position (see Figure 5). Users’ head movements are tracked by the HMD and, depending on the condition, wrist or finger movements are tracked using the HTC Vive controllers or a Leap Motion. Using these data, each user is visualized as an avatar, allowing both to see the virtual representation of their counterpart and of themselves, which improves cognitive abilities (Steed et al., 2016). An inverse kinematics technique is used for a realistic representation of the avatars’ movements.

FIGURE 4. The left image represents all designations and components of the virtual grenade model, and the right image illustrates the virtual environment in which a 6×scaled grenade is placed on a table.

Regarding the hardware used, the training operates on two separate computers. These communicate over a local area network, one acting as the host and the other as the client, with a round-trip delay time of about 11 ms. Each of these computers is equipped with one HTC Vive Pro HMD with a resolution of 1440 × 1600 pixels per eye. Two HTC Lighthouse 2.0 base stations are used for tracking. The VR application runs at 90 Hz and is implemented with Unity version 2019.4.11.
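To put the two timing figures above into relation, the following minimal sketch converts the 90 Hz refresh rate into a frame period and expresses the reported round-trip delay in frames. The function names are illustrative and not part of the described application.

```python
# Values stated in the text: 90 Hz application rate, ~11 ms network round trip.
REFRESH_RATE_HZ = 90.0
ROUND_TRIP_MS = 11.0


def frame_period_ms(refresh_rate_hz: float) -> float:
    """Duration of one rendered frame in milliseconds."""
    return 1000.0 / refresh_rate_hz


def delay_in_frames(delay_ms: float, refresh_rate_hz: float) -> float:
    """Express a delay as a (fractional) number of rendered frames."""
    return delay_ms / frame_period_ms(refresh_rate_hz)


if __name__ == "__main__":
    print(f"frame period: {frame_period_ms(REFRESH_RATE_HZ):.2f} ms")
    print(f"round trip:   {delay_in_frames(ROUND_TRIP_MS, REFRESH_RATE_HZ):.2f} frames")
```

Under these numbers, one frame lasts roughly 11.1 ms, so the reported round trip corresponds to about one rendered frame.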

3.2.1 Controller-VR training

In this training condition, both instructor and participant receive two conventional HTC Vive controllers. These are represented in the virtual environment as the hands of the avatar and transform accordingly. Thus, the transformation of a controller is applied identically to the virtual hand and, as long as the user holds the grenade, to the virtual grenade. By pressing, holding, and releasing the trigger of the controller, the grenade can be grabbed, held, and released. A collision between the virtual hand and a button on the virtual touch screen triggers the desired function.
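The press, hold, and release behavior described above can be sketched as a small per-frame state update. This is a simplified illustration, not the Unity implementation used in the study: it tracks positions only (no rotation), and `GrabState`, `update_grab`, and the collision flag are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class GrabState:
    holding: bool
    grenade_pos: Vec3


def update_grab(state: GrabState, hand_pos: Vec3, trigger_down: bool,
                hand_touches_grenade: bool) -> GrabState:
    """Advance the (hypothetical) grab logic by one frame."""
    if not state.holding:
        # Grab: trigger pressed while the virtual hand collides with the grenade.
        if trigger_down and hand_touches_grenade:
            return GrabState(True, hand_pos)
        return state
    if trigger_down:
        # Hold: the grenade follows the hand while the trigger stays pressed.
        return GrabState(True, hand_pos)
    # Release: the grenade stays where it was let go.
    return GrabState(False, state.grenade_pos)


if __name__ == "__main__":
    s = GrabState(False, (1.0, 0.0, 0.0))
    s = update_grab(s, (1.0, 0.0, 0.0), True, True)    # grab
    s = update_grab(s, (2.0, 1.0, 0.0), True, False)   # hold and move
    s = update_grab(s, (3.0, 1.0, 0.0), False, False)  # release
    print(s)
```

A fuller version would also copy the hand's rotation and keep the grab offset between hand and grenade; both are omitted here for brevity.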

3.2.2 Free-hand-VR training

For this interaction method, the user’s hand movements are detected via optical sensors, so they have their hands free and do not receive any haptic feedback. Leap Motion controllers are used for tracking; these are attached to the HMD by 3D-printed fixtures. The virtual grenade can be grabbed and transformed by the pinch or grasp gesture. Screen control is also realized by the collision between its virtual button and the virtual hand.
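A pinch gesture of the kind mentioned above is commonly detected from the distance between the thumb and index fingertips. The sketch below illustrates that idea with a simple hysteresis; the thresholds and function name are assumptions made for illustration and do not reproduce the Leap Motion SDK or the study's implementation.

```python
import math
from typing import Sequence

# Hypothetical thresholds in meters; two values give hysteresis so that
# small tracking jitter does not toggle the pinch on and off.
PINCH_START_M = 0.03
PINCH_END_M = 0.05


def update_pinch(was_pinching: bool, thumb_tip: Sequence[float],
                 index_tip: Sequence[float]) -> bool:
    """Return whether the hand counts as pinching in the current frame."""
    distance = math.dist(thumb_tip, index_tip)
    if was_pinching:
        # Keep the pinch until the fingertips clearly separate.
        return distance < PINCH_END_M
    # Start a pinch only when the fingertips are close together.
    return distance < PINCH_START_M


if __name__ == "__main__":
    pinching = False
    for gap in (0.08, 0.04, 0.02, 0.04, 0.06):
        pinching = update_pinch(pinching, (0.0, 0.0, 0.0), (gap, 0.0, 0.0))
        print(f"gap={gap:.2f} m -> pinching={pinching}")
```

Because the decision depends entirely on optically tracked fingertip positions, occluded fingers directly disturb it, which is the weakness the Tangible-VR condition is meant to address.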

3.2.3 Tangible-VR training

In this case, the Free-Hand-VR condition is extended with a tangible model of the grenade. Figure 1 depicts an HTC Vive tracker attached to a modified 3D-printed grenade model. For a more efficient manipulation of this tangible object, the size (Kwon et al., 2009; Bergström et al., 2019) and shape (Ban et al., 2012; Muender et al., 2019) of the 3D-printed object are identical to those of the virtual model. Due to the identical tracking spaces, its position corresponds to that of the virtual grenade. Similar to the previous interaction method, users can perceive their virtual hands and fingers, including their movements. Therefore, the users of this interaction method grasp the tracked 3D model of the grenade instead of the air, which provides tactile feedback. This offers the advantage that possible tracking issues of the Leap Motion cannot interfere with the interaction with the grenade.
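Since the tracker is mounted on the printed model, the virtual grenade can be placed by applying a fixed mounting offset to the tracker pose. The following sketch shows this for the position only; the offset value, the rotation-matrix representation, and the function names are assumptions for illustration rather than details reported in the study.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]  # row-major 3x3 rotation matrix


def rotate(rotation: Mat3, v: Vec3) -> Vec3:
    """Apply a 3x3 rotation matrix to a vector."""
    return tuple(sum(rotation[r][c] * v[c] for c in range(3)) for r in range(3))


def grenade_position(tracker_pos: Vec3, tracker_rot: Mat3, mount_offset: Vec3) -> Vec3:
    """World position of the grenade center.

    mount_offset is the grenade center expressed in the tracker's local
    frame (a hypothetical calibration value measured once).
    """
    offset_world = rotate(tracker_rot, mount_offset)
    return tuple(p + o for p, o in zip(tracker_pos, offset_world))


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Tracker 1.2 m above the floor, grenade center 10 cm below the tracker.
    print(grenade_position((0.0, 1.2, 0.0), identity, (0.0, -0.1, 0.0)))
```

Because this pose comes from the Lighthouse-tracked device rather than from the hand sensor, the grenade stays in place even when finger tracking is briefly lost.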

4 Experiment

4.1 Study design

We designed the lab study using a between-subjects design in order to evaluate the respective training success of the four conditions: 1) Real-World, 2) Controller-VR, 3) Free-Hand-VR, and 4) Tangible-VR. The interaction method was the independent variable. As dependent variables, we measured the haptic realism, concentration ability, mental workload, and training success.

4.2 Procedure

The experiment was divided into four phases. Initially, the participants were informed about the purpose, signed a consent form, and completed a questionnaire with metadata. Next, they were introduced to the relevant hardware and interaction options and performed their assigned training condition. Besides the participant, two other persons were involved in the respective training methods. One person acted as the instructor, and the other, an observer, ensured that the instructor and participant did not injure each other or stumble over the cables of the head-mounted displays. The observer behaved silently and inconspicuously to prevent negatively affecting the participants’ mental load (Rettinger et al., 2022b). After the training was completed, another questionnaire was administered to obtain a subjective evaluation of the training. Last, the participants received an online test 1 week after participation to record the training’s success.

4.3 Participants

We recruited 100 participants (48 female, 52 male) aged 18–33 years (M = 23.29, SD = 3.73). These were primarily students within an age range of 15 years to ensure that their cognitive abilities did not differ excessively (Salthouse, 1998). Since the complexity of the training was kept low in terms of interaction and visualization details, participants with little prior experience in VR were also included. Their mean self-reported expertise with VR was 1.77 (SD = 1.86) on a scale ranging from 1 = “no experience” to 7 = “expert”. Regarding gender, age, and level of education, the participants were equally distributed across the four conditions. One exclusion criterion was that participants were not allowed to be experienced in disposing of explosive ordnance. All participants had the same prior knowledge about hand grenades: they were only aware, from action movies, that pulling the safety ring is required before setting off the grenade.

5 Results

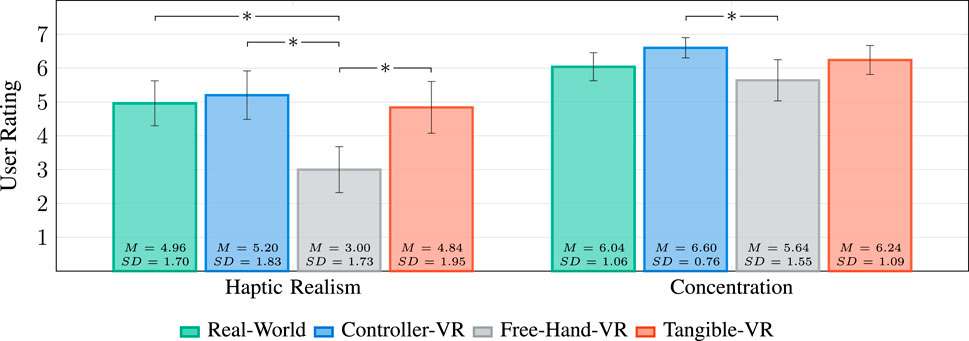

5.1 Haptic realism

To keep the differences between VR training and real situations as small as possible and thus positively affect the training outcome, the haptic feedback during VR training should be similar to a real situation. Thus, the participants rated the statement “I had the sense of holding the real grenade in my hand.” using a Likert scale ranging from 1 = “not at all” to 7 = “very much”. Figure 6 (left) shows the corresponding aggregated results, according to which the Free-Hand-VR interaction received the lowest ratings. An ANOVA test (α = 0.05) revealed significant differences between the ratings (F(3, 96) = 7.857, p < 0.001, η² = 0.197). Post-hoc tests indicated significantly lower ratings for the Free-Hand-VR condition than for Real-World (p = 0.001), Controller-VR (p < 0.001), and Tangible-VR (p = 0.003).
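For a one-way ANOVA, the effect size η² can be recovered from the F statistic and its degrees of freedom as η² = F·df₁ / (F·df₁ + df₂). The short sketch below applies this identity to the F values reported in Sections 5.1 to 5.4 as a consistency check; the function name is illustrative.

```python
def eta_squared(f_value: float, df_between: int, df_within: int) -> float:
    """Eta squared of a one-way ANOVA from F and its degrees of freedom."""
    return (f_value * df_between) / (f_value * df_between + df_within)


# (F value, reported eta squared) as stated in the results, all with df = (3, 96).
REPORTED = {
    "haptic realism": (7.857, 0.197),
    "concentration ability": (3.025, 0.086),
    "RTLX performance": (9.468, 0.228),
    "RTLX overall": (3.372, 0.095),
    "subjective training success": (2.721, 0.078),
}

if __name__ == "__main__":
    for name, (f_value, reported) in REPORTED.items():
        computed = eta_squared(f_value, 3, 96)
        print(f"{name}: computed {computed:.3f}, reported {reported:.3f}")
```

With four conditions and 100 participants, the degrees of freedom are 4 − 1 = 3 and 100 − 4 = 96, and the recomputed values agree with the reported ones to three decimals.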

FIGURE 6. Results of the ratings: haptic realism when holding the grenade (left) and the ability to concentrate on the tasks (right). Mean scores with 95% confidence intervals for each condition. Upper lines represent significant relations.

5.2 Concentration ability

Participants rated the statement “I was able to focus very well on the tasks.” using a seven-point Likert scale (1 = “not at all” to 7 = “very much”) to capture the concentration ability with the different methods. As illustrated in Figure 6 (right), the Controller-VR method achieved the highest ratings. These ratings were analyzed with an ANOVA, which revealed a significant difference (F(3, 96) = 3.025, p = 0.033, η² = 0.086). The Bonferroni-corrected post hoc test indicated a significant difference between the conditions Controller-VR and Free-Hand-VR (p = 0.024). The differences between the other conditions were not significant.
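A Bonferroni correction multiplies each raw p-value by the number of comparisons and caps the result at 1. The sketch below enumerates the pairwise comparisons among the four conditions and applies the correction; it assumes that all six pairs are tested, which the text does not state explicitly, and the raw p-values in the usage example are purely hypothetical.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

CONDITIONS = ["Real-World", "Controller-VR", "Free-Hand-VR", "Tangible-VR"]


def pairwise_comparisons(conditions: Sequence[str]) -> List[Tuple[str, str]]:
    """All unordered pairs of conditions."""
    return list(combinations(conditions, 2))


def bonferroni_adjust(raw_p_values: Sequence[float]) -> List[float]:
    """Multiply each p-value by the number of tests and cap at 1."""
    m = len(raw_p_values)
    return [min(1.0, p * m) for p in raw_p_values]


if __name__ == "__main__":
    pairs = pairwise_comparisons(CONDITIONS)
    # Hypothetical raw p-values, one per pair, for illustration only.
    raw = [0.40, 0.20, 0.90, 0.004, 0.30, 0.06]
    for pair, p_adj in zip(pairs, bonferroni_adjust(raw)):
        print(pair, f"{p_adj:.3f}")
```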

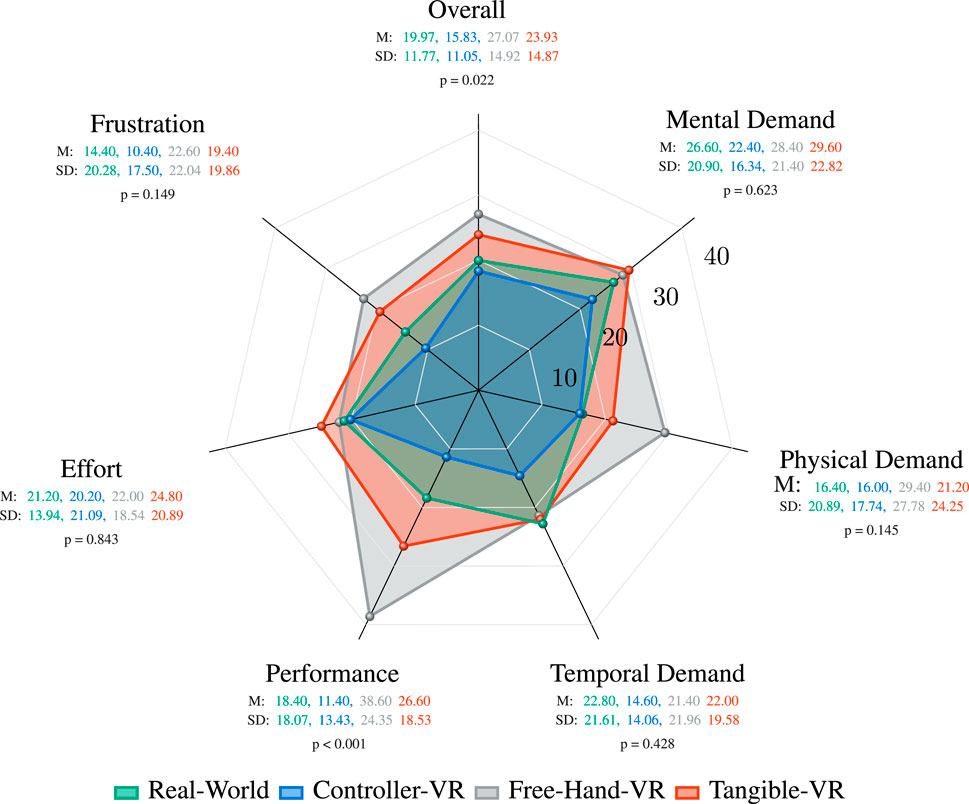

5.3 Workload

Participants rated their perceived cognitive workload using the Raw NASA-TLX (RTLX) questionnaire (Hart, 2006); the results are depicted in Figure 7. These ratings were also analyzed with an ANOVA (α = 0.05), which revealed a significant difference in the RTLX subscale Performance (F(3, 96) = 9.468, p < 0.001, η² = 0.228). The Bonferroni post hoc test indicated a significant difference between the Real-World and the Free-Hand-VR groups (p = 0.002), between the Controller-VR and the Free-Hand-VR groups (p < 0.001), and between the Controller-VR and the Tangible-VR groups (p = 0.034). There were no significant differences between the other groups. In addition, the overall workload score across all six RTLX subscales indicated significant differences (F(3, 96) = 3.372, p = 0.022, η² = 0.095). The post hoc test reported a significant difference between the Controller-VR and the Free-Hand-VR conditions (p = 0.021).
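In the raw variant of the NASA-TLX, the overall workload score is the unweighted mean of the six subscale ratings. The sketch below illustrates this scoring; the dictionary keys and the example ratings are hypothetical, and the rating scale used in the study is not specified here.

```python
from statistics import mean
from typing import Dict

SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def rtlx_score(ratings: Dict[str, float]) -> float:
    """Overall RTLX workload: unweighted mean of the six subscales."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return float(mean(ratings[name] for name in SUBSCALES))


if __name__ == "__main__":
    # Hypothetical ratings of one participant.
    example = {
        "mental_demand": 40,
        "physical_demand": 20,
        "temporal_demand": 30,
        "performance": 10,
        "effort": 50,
        "frustration": 30,
    }
    print(rtlx_score(example))
```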

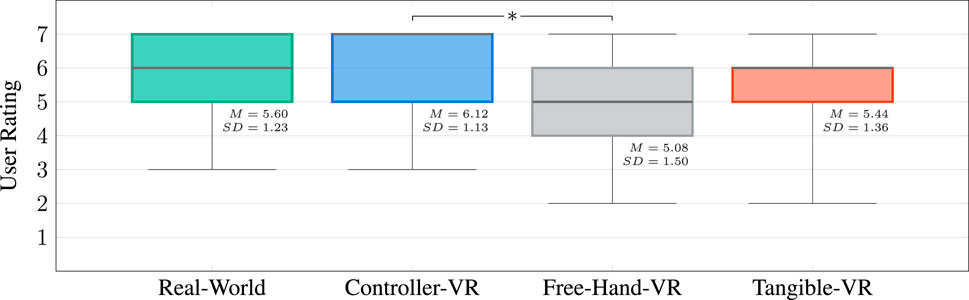

5.4 Subjective training success

The self-assessed training success was evaluated with the statement “I feel able to transfer what I have learned without any problems.”, rated on a Likert scale that also ranged from 1 = “not at all” to 7 = “very much”. The subjective assessments of the participants are depicted in Figure 8. An ANOVA yielded significant differences (F(3, 96) = 2.721, p = 0.049, η² = 0.078). The post hoc analysis revealed that the conditions Controller-VR and Free-Hand-VR differed significantly (p = 0.036).
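The F statistics reported in this section follow the standard one-way ANOVA decomposition into between-group and within-group variance. The following minimal sketch computes F and its degrees of freedom from raw group data; the function name and the example values are illustrative and do not reproduce the study's data.

```python
from typing import Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


if __name__ == "__main__":
    # Hypothetical ratings for three small groups.
    print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

For four groups with 100 observations in total, the same function yields the degrees of freedom (3, 96) that appear in the reported statistics.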

FIGURE 8. Results of the subjective rating: The four conditions are visualized as boxplots and indicate the users’ estimated ability to apply what they have learned without any problems. Higher ratings represent a better assessment.

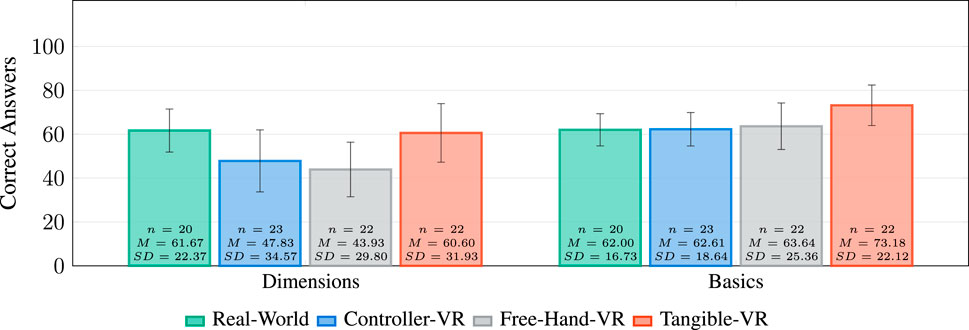

5.5 Test results

One week after participating in the lab study, the training outcome was measured with an online test. The test comprised a total of thirteen questions: three related to the grenade dimensions, and the remaining ten related to the training content explained. The results of both areas were compared with the Kruskal-Wallis H test (α = 0.05), since a Shapiro-Wilk test assessed the data as non-normally distributed (p < 0.001) and the group sizes were no longer equal due to the response rate (87%). For both areas, the non-parametric test indicated no significant differences. However, the visualized results in Figure 9 illustrate that the participants of the Tangible-VR condition had the lowest error rate.
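The Kruskal-Wallis H statistic is computed from the ranks of the pooled observations and therefore requires neither normality nor equal group sizes. The sketch below implements the statistic with tie-averaged ranks and the usual tie correction; the function names and example scores are hypothetical, and the study's per-group response counts are not reproduced.

```python
from collections import Counter
from typing import Dict, Sequence


def average_ranks(values: Sequence[float]) -> Dict[float, float]:
    """Map each distinct value to its tie-averaged, 1-based rank."""
    ordered = sorted(values)
    ranks: Dict[float, float] = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        # Sorted positions i..j-1 hold ranks i+1..j; assign their mean.
        ranks[ordered[i]] = (i + 1 + j) / 2.0
        i = j
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kruskal-Wallis H statistic."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term = sum(sum(ranks[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n * (n + 1)) * rank_term - 3.0 * (n + 1)
    tie_sizes = Counter(pooled).values()
    correction = 1.0 - sum(t ** 3 - t for t in tie_sizes) / (n ** 3 - n)
    if correction == 0.0:
        raise ValueError("all observations are identical")
    return h / correction


if __name__ == "__main__":
    # Hypothetical test scores for groups of unequal size.
    print(kruskal_wallis_h([[6, 7, 9, 10], [5, 8, 8], [7, 9, 10, 10, 10]]))
```

For a significance decision, H is compared against a chi-square distribution with k − 1 degrees of freedom, where k is the number of groups (here 3 for four conditions).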

FIGURE 9. Percentage of correctly answered test questions with 95% confidence intervals. The dimensions (left) result from three questions and the basics (right) from ten questions.

6 Discussion

The collected data correspond to previous work, in which the Controller-VR condition performed significantly better than the Free-Hand-VR condition (Gusai et al., 2017; Masurovsky et al., 2020).

Regarding RQ1, “Does a tangible object improve Free-Hand-VR training?”, an essential benefit of the Tangible-VR condition compared to the Free-Hand-VR condition is that the transformation of virtual tangible objects is more reliable. In this extended condition, optical tracking interference did not affect the usability, as the grenade remained in the correct position. Accordingly, the issues mentioned by Gusai et al. (2017) regarding accuracy and stability can be circumvented through this condition.

Between these two conditions, the RTLX subscales and their overall score, as well as the subjective training success and the online test results, indicated no significant differences, but a clear tendency can be identified. In all domains, the Tangible-VR condition achieved considerably better results than the Free-Hand-VR condition. Additionally, participants trained with the Tangible-VR condition achieved the highest scores in the online test. Apart from the higher usability, these results can also be attributed to the significantly higher realism. They support the finding of Strandholt et al. (2020) that the extension by physical elements increases the user’s immersion.

Regarding RQ2, “Which of the interaction methods is preferred for VR training?”, the results demonstrate that VR training with controllers is the most suitable method, consistent with the comparison between Leap Motion and HTC Vive controllers by Gusai et al. (2017). Likewise, haptic realism was also the highest in the Controller-VR condition, possibly due to the passive haptic feedback of the controller. Compared to the results of Strandholt et al. (2020), these findings demonstrate that the haptic realism of physical 3D-printed objects can also be achieved with an HTC Vive controller, even when users are primarily focused on training. In general, all results of the RTLX indicated that the workload of Controller-VR is the lowest. These results correspond to the significant results of the concentration ability. A plausible reason for this is that the virtual environment displayed in the HMD isolates the user from outside distractions, thus reducing the cognitive load (Sweller and Chandler, 1994).

Concerning the limitations, it should be noted that there are even more technologies for hand tracking, such as data gloves (Englmeier et al., 2020; Allgaier et al., 2022). In contrast to an optical tracking system, these touch the skin, which is why users passively perceive tactile stimuli. In the context of using the Lighthouse system, it is important to consider that the accuracy of both the controller and the tracker can be influenced by the position and number of the sensors, particularly in collaborative scenarios. One contributing factor to this phenomenon is the occlusion problem, which arises from bodies or objects obstructing the line of sight between the sensors and the tracked devices. The application of the Tangible-VR condition is also limited to objects that users can easily handle in terms of weight and shape. This was already concluded by Muender et al. (2019). Outcomes such as haptic realism or user experience can vary with other ordnance, such as much larger ones. Due to the study design, the participants completed only one of the four conditions, so it is possible that the results of the online test depend on the participants’ motivation and abilities. However, the subjective results are more conclusive due to their statistical power.

An issue for future work is the further investigation of the Tangible-VR condition, as it offers the possibility to avoid the problems of hand tracking even better than described in this contribution. For example, the tracking information of the wrist could be used instead of that of the whole hand, while the fingers grasping the objects are simulated by a kinematic model. In this case, the positions of the fingers can be defined, e.g., by electrodes attached to the object to be grasped or simply by the distance between the graspable object and the wrist. This avoids the tracking-accuracy problem that the virtual fingers are not in exact contact with the virtual object but are usually in front of, next to, or inside it. In addition, the objects to be grasped occlude the fingers, so the sensor can no longer detect them. The resulting unnatural movements, i.e., the tracking problems, can also be avoided in this way. Finally, it is necessary to investigate how the size of the tangible objects affects the subjective and objective training success and where the limits of size are.

7 Conclusion

In this work, we compared the following four training conditions: 1) Real-World, 2) Controller-VR, 3) Free-Hand-VR, and 4) Tangible-VR to determine which interaction method is most effective for VR training. We designed an EOD training for civilians in cooperation with a training center for EOD training and a humanitarian foundation. To make the conditions comparable, all of them included the same training content, and we performed a between-subjects user study with 100 participants. The statistical analysis of the obtained data demonstrates that the extension of the Free-Hand-VR method with a tangible object offers the opportunity to improve the training experience and the training outcome. Of all methods, the test results of the Tangible-VR condition were the highest. However, all significant results indicated that the Controller-VR condition is most suitable for EOD VR training in terms of haptic realism, concentration ability, perceived workload, and subjective training success.

In particular, the results of this investigation have clarified that VR training in EOD can not only circumvent the laws of physics but also provide better results than the Real-World training condition. This increased training success can help to improve the training of civilian EOD experts and reduce the associated risks, since even the slightest differences in learning success can have a dramatic impact.

Data availability statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

MR: Conception, design of the study, statistical analysis, and draft of the manuscript. GR: supervision, funding acquisition, project administration, and review. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1187883/full#supplementary-material

References

Allgaier, M., Chheang, V., Saalfeld, P., Apilla, V., Huber, T., Huettl, F., et al. (2022). A comparison of input devices for precise interaction tasks in vr-based surgical planning and training. Comput. Biol. Med. 145, 105429. doi:10.1016/j.compbiomed.2022.105429

Bajrami, F. (2022). ‘A huge demand’: Ukrainian women train to clear landmines. The Washington Times. Available at: https://www.washingtontimes.com/news/2022/apr/30/a-huge-demand-ukrainian-women-train-to-clear-landm/ (Accessed March 10, 2023).

Ban, Y., Kajinami, T., Narumi, T., Tanikawa, T., and Hirose, M. (2012). “Modifying an identified curved surface shape using pseudo-haptic effect,” in Proceedings of the 2012 IEEE Haptics Symposium (HAPTICS), Vancouver, BC, Canada, March 2012, 211–216. doi:10.1109/HAPTIC.2012.6183793

Bergström, J., Mottelson, A., and Knibbe, J. (2019). “Resized grasping in vr: Estimating thresholds for object discrimination,” in Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA, October 2019 (Association for Computing Machinery), 1175–1183. UIST ’19. doi:10.1145/3332165.3347939

Bozgeyikli, L. L., and Bozgeyikli, E. (2022). “Tangiball: Foot-enabled embodied tangible interaction with a ball in virtual reality,” in Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, March 2022, 812–820. doi:10.1109/VR51125.2022.00103

Caggianese, G., Gallo, L., and Neroni, P. (2019). “The vive controllers vs. leap motion for interactions in virtual environments: A comparative evaluation,” in Intelligent interactive multimedia systems and services. Editors G. De Pietro, L. Gallo, R. J. Howlett, L. C. Jain, and L. Vlacic (Cham: Springer International Publishing), 24–33.

Chung, J. C., Ryan-Jones, D. L., and Robinson, E. R. (1996). Graphical systems for explosive ordnance disposal training. Tech. Rep. San Diego, CA: Navy Personnel Research and Development Center.

Clifford, R. M., Jung, S., Hoermann, S., Billinghurst, M., and Lindeman, R. W. (2019). “Creating a stressful decision making environment for aerial firefighter training in virtual reality,” in Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, March 2019, 181–189. doi:10.1109/VR.2019.8797889

Cuendet, S., Bumbacher, E., and Dillenbourg, P. (2012). “Tangible vs. virtual representations: When tangibles benefit the training of spatial skills,” in Proceedings of the 7th Nordic Conference on Human-Computer Interaction: Making Sense Through Design, New York, NY, USA (Association for Computing Machinery), 99–108. NordiCHI ’12. doi:10.1145/2399016.2399032

Englmeier, D., O’Hagan, J., Zhang, M., Alt, F., Butz, A., Höllerer, T., et al. (2020). TangibleSphere – interaction techniques for physical and virtual spherical displays. New York, NY, USA: Association for Computing Machinery.

Franzluebbers, A., and Johnsen, K. (2018). “Performance benefits of high-fidelity passive haptic feedback in virtual reality training,” in Proceedings of the 2018 ACM Symposium on Spatial User Interaction, New York, NY, USA, October 2018 (Association for Computing Machinery), 16–24. SUI ’18. doi:10.1145/3267782.3267790

Funk, M., Kosch, T., and Schmidt, A. (2016). “Interactive worker assistance: Comparing the effects of in-situ projection, head-mounted displays, tablet, and paper instructions,” in Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, New York, NY, USA, September 2016 (Association for Computing Machinery), 934–939. UbiComp ’16. doi:10.1145/2971648.2971706

Gisler, J., Holzwarth, V., Hirt, C., and Kunz, A. (2021). “Work-in-progress-enhancing training in virtual reality with hand tracking and a real tool,” in Proceedings of the 2021 7th International Conference of the Immersive Learning Research Network (iLRN), Eureka, CA, USA, March 2021, 1–3. doi:10.23919/iLRN52045.2021.9459332

Gusai, E., Bassano, C., Solari, F., and Chessa, M. (2017). “Interaction in an immersive collaborative virtual reality environment: A comparison between leap motion and htc controllers,” in International conference on image analysis and processing (Springer), 290–300.

Hart, S. G. (2006). Nasa-task load index (nasa-tlx); 20 years later. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 50, 904–908. doi:10.1177/154193120605000909

Hinckley, K., Pausch, R., Goble, J. C., and Kassell, N. F. (1994). “Passive real-world interface props for neurosurgical visualization,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 1994 (Association for Computing Machinery), 452–458. CHI ’94. doi:10.1145/191666.191821

Hoffman, H. (1998). “Physically touching virtual objects using tactile augmentation enhances the realism of virtual environments,” in Proceedings. IEEE 1998 Virtual Reality Annual International Symposium (Cat. No.98CB36180), Atlanta, GA, USA, March 1998, 59–63. doi:10.1109/VRAIS.1998.658423

Insko, B. E. (2001). Passive haptics significantly enhances virtual environments. North Carolina: The University of North Carolina at Chapel Hill, AAI3007820.

Khamvongsa, C., and Russell, E. (2009). Legacies of war: Cluster bombs in Laos. Crit. Asian Stud. 41, 281–306. doi:10.1080/14672710902809401

Kim, M., Jeon, C., and Kim, J. (2017). A study on immersion and presence of a portable hand haptic system for immersive virtual reality. Sensors 17, 1141. doi:10.3390/s17051141

Kovacs, R., Ofek, E., Gonzalez Franco, M., Siu, A. F., Marwecki, S., Holz, C., et al. (2020). Haptic PIVOT: On-demand handhelds in VR. New York, NY, USA: Association for Computing Machinery, 1046–1059.

Kwon, E., Kim, G. J., and Lee, S. (2009). “Effects of sizes and shapes of props in tangible augmented reality,” in Proceedings of the 2009 8th IEEE International Symposium on Mixed and Augmented Reality, Orlando, FL, USA, October 2009, 201–202. doi:10.1109/ISMAR.2009.5336463

Masurovsky, A., Chojecki, P., Runde, D., Lafci, M., Przewozny, D., and Gaebler, M. (2020). Controller-free hand tracking for grab-and-place tasks in immersive virtual reality: Design elements and their empirical study. Multimodal Technol. Interact. 4, 91. doi:10.3390/mti4040091

Mazalek, A., Chandrasekharan, S., Nitsche, M., Welsh, T., Thomas, G., Sanka, T., et al. (2009). “Giving your self to the game: Transferring a player’s own movements to avatars using tangible interfaces,” in Proceedings of the 2009 ACM SIGGRAPH Symposium on Video Games, New York, NY, USA, September 2009 (Association for Computing Machinery), 161–168. Sandbox ’09. doi:10.1145/1581073.1581098

Muender, T., Reinschluessel, A. V., Drewes, S., Wenig, D., Döring, T., and Malaka, R. (2019). “Does it feel real? Using tangibles with different fidelities to build and explore scenes in virtual reality,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, May 2019 (Association for Computing Machinery), 1–12. CHI ’19. doi:10.1145/3290605.3300903

Rettinger, M., Müller, N., Holzmann-Littig, C., Wijnen-Meijer, M., Rigoll, G., and Schmaderer, C. (2021). “Vr-based equipment training for health professionals,” in Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, May 2021 (Association for Computing Machinery). CHI EA ’21. doi:10.1145/3411763.3451766

Rettinger, M., and Rigoll, G. (2022). “Defuse the training of risky tasks: Collaborative training in xr,” in Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, October 2022, 695–701. doi:10.1109/ISMAR55827.2022.00087

Rettinger, M., Rigoll, G., and Schmaderer, C. (2022a). “Vr training: The unused opportunity to save lives during a pandemic,” in Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, March 2022, 423–424. doi:10.1109/VRW55335.2022.00092

Rettinger, M., Schmaderer, C., and Rigoll, G. (2022b). “Do you notice me? How bystanders affect the cognitive load in virtual reality,” in Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, March 2022, 77–82. doi:10.1109/VR51125.2022.00025

Salthouse, T. (1998). Independence of age-related influences on cognitive abilities across the life-span. Dev. Psychol. 34, 851–864. doi:10.1037//0012-1649.34.5.851

Steed, A., Pan, Y., Zisch, F., and Steptoe, W. (2016). “The impact of a self-avatar on cognitive load in immersive virtual reality,” in Proceedings of the 2016 IEEE virtual reality (VR), Greenville, SC, USA, March 2016, 67–76. doi:10.1109/VR.2016.7504689

Strandholt, P. L., Dogaru, O. A., Nilsson, N. C., Nordahl, R., and Serafin, S. (2020). “Knock on wood: Combining redirected touching and physical props for tool-based interaction in virtual reality,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 2020 (Association for Computing Machinery), 1–13.

Strasnick, E., Holz, C., Ofek, E., Sinclair, M., and Benko, H. (2018). “Haptic links: Bimanual haptics for virtual reality using variable stiffness actuation,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 2018 (Association for Computing Machinery), 1–12. CHI ’18. doi:10.1145/3173574.3174218

Sweller, J., and Chandler, P. (1994). Why some material is difficult to learn. Cognition Instr. 12, 185–233. doi:10.1207/s1532690xci1203_1

Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educ. Psychol. Rev. 22, 123–138. doi:10.1007/s10648-010-9128-5

Tan, A. D. (2020). Augmented and virtual reality for hma eod training. J. Conventional Weapons Destr. 23, 4.

Tan, A. (2014). “Small arms and light weapons marking & tracing initiatives,” in Journal of explosive remnants of war and mine action (Harrisonburg, VA: Center for International Stabilization and Recovery at James Madison University), 39–43.

Tocu, A., Gellert, A., Stefan, I.-R., Nitescu, T.-M., and Luca, G.-A. (2020). “The impact of virtual reality simulators in manufacturing industry,” in Proceedings of the 12th International Conference on Education and New Learning Technologies, Palma de Mallorca, Spain, July 2020, 3084–3093. doi:10.21125/edulearn.2020.0905

Ulmer, J., Braun, S., Cheng, C.-T., Dowey, S., and Wollert, J. (2020). “Gamified virtual reality training environment for the manufacturing industry,” in Proceedings of the 2020 19th International Conference on Mechatronics - Mechatronika (ME), Prague, Czech Republic, December 2020, 1–6. doi:10.1109/ME49197.2020.9286661

Wang, P., Bai, X., Billinghurst, M., Zhang, S., Han, D., Sun, M., et al. (2020). Haptic feedback helps me? A vr-sar remote collaborative system with tangible interaction. Int. J. Human–Computer Interact. 36, 1242–1257. doi:10.1080/10447318.2020.1732140

Yang, J. J., Horii, H., Thayer, A., and Ballagas, R. (2018). “Vr grabbers: Ungrounded haptic retargeting for precision grabbing tools,” in Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA, October 2018 (Association for Computing Machinery), 889–899. UIST ’18. doi:10.1145/3242587.3242643

Yu, K., Winkler, A., Pankratz, F., Lazarovici, M., Wilhelm, D., Eck, U., et al. (2021). “Magnoramas: Magnifying dioramas for precise annotations in asymmetric 3d teleconsultation,” in Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, April 2021, 392–401. doi:10.1109/VR50410.2021.00062

Zhao, Y., Bennett, C. L., Benko, H., Cutrell, E., Holz, C., Morris, M. R., et al. (2018). “Enabling people with visual impairments to navigate virtual reality with a haptic and auditory cane simulation,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 2018 (Association for Computing Machinery), 1–14. CHI ’18. doi:10.1145/3173574.3173690

Keywords: virtual reality, tangible free hand interaction, training, user studies, explosive ordnance disposal training, EOD, collaborative training, interaction methods

Citation: Rettinger M and Rigoll G (2023) Touching the future of training: investigating tangible interaction in virtual reality. Front. Virtual Real. 4:1187883. doi: 10.3389/frvir.2023.1187883

Received: 16 March 2023; Accepted: 17 July 2023;

Published: 04 August 2023.

Edited by:

Anıl Ufuk Batmaz, Concordia University, Canada

Reviewed by:

Gopal Nambi, Prince Sattam bin Abdulaziz University, Saudi Arabia

Adnan Fateh, University of Central Punjab, Pakistan

Copyright © 2023 Rettinger and Rigoll. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maximilian Rettinger, maximilian.rettinger@tum.de
