- 1 Chair of Work-, Organizational- and Business Psychology, Ruhr University Bochum, Bochum, Germany

- 2 Human-Computer Interaction, University of Trier, Trier, Germany

Based on the results of two laboratory studies, we show how the implementation of minimalistic social and task-relevant cues in Augmented Reality-based assistance systems for spatially dispersed teams impacts team experience while not affecting team performance. In study 1 (N = 224) we investigated the Ambient Awareness Tool, which supports spatially dispersed teams in their temporal coordination when multiple team tasks or team and individual tasks must be executed in parallel. We found that adding a progress bar to the interface led to a significant increase in the perception of work group cohesiveness (diff = −0.34, p = .03, CI: [−0.65; −0.03], d = 0.39), but did not affect team performance (p = .92, η2 = 0.03). In study 2 (N = 23) we piloted an AR-based avatar representation of a spatially dispersed team member and evaluated whether the interactivity of the avatar impacts the perception of co- and social presence as well as team performance. An interactive avatar increased the perception of co- and social presence (co-presence: diff = 2.7, p < .001, η2 = 0.20; social presence: diff = 1.2, p = .001, η2 = 0.06). Team performance did not differ significantly (p = .177, η2 = 0.01). These results indicate that even minor social and task-relevant cues in the interface can significantly impact team experience and provide valuable insights for designing human-centered health-promoting AR-based assistance systems for spatially dispersed teams in the vocational context with minimal means.

1 Introduction

As a result of advancing digitalization and globalization, spatially dispersed teams (SD-teams) are becoming of increasing interest to organizations (Boos et al., 2017) and production industries (Hagemann et al., 2012).

Unquestionably, SD-teams offer many advantages (Bergiel et al., 2008), but the geographical and physical separation also bears several disadvantages. Two of the most prevailing challenges that SD-teams have to face are that, without technical support, 1) they cannot see each other, and thus have no possibility to share their visual context and workspace and 2) they cannot communicate directly (Thomaschewski et al., 2021). These challenges can have significant impacts on team experience and performance since, due to the technology-mediated communication, direct social interactions are limited, and a loss of verbal and nonverbal cues occurs. Especially as a result of this loss of cues, SD-team members are at risk of feeling isolated (Cascio, 2000; Kirkman et al., 2002) which can, according to Raghuram et al. (2001), in turn cause stress and work disengagement (Korsgaard et al., 2010). This also can lead to team members having the perception that they know too little about each other, which can cause uncertainty in their interactions (Tangirala and Alge, 2006). Furthermore, depending on the richness of the communication media, SD-teams are prone to act opportunistically, tend to profit unfairly from the achievements of their team members (“free-riding”), and can even behave anti-socially (Rockmann and Northcraft, 2008). These findings clearly show that spatial dispersion can have a strong negative impact on the experience of team members in SD-teams.

Moreover, this lack of contact not only affects team experience but can also negatively impact team performance. A particularly important factor influencing the performance of SD-teams is the temporal coordination of their interdependent subtasks (Bardram, 2000; Marks et al., 2001; Mohammed and Nadkarni, 2014; Mohammed et al., 2015). SD-teams often demonstrate less optimal temporal coordination since the lack of a common visual context or workspace makes it difficult to track the processing status of the respective subtasks (Fussell et al., 2000; Kraut et al., 2002; Sebanz et al., 2006; Vesper et al., 2016) and the current actions of team partners. In other words, SD-teams often show low levels of Task State Awareness (TSA) (Kraut et al., 2002), which is defined as knowledge about “the current state of the collaborative task in relation to an end goal” (p. 32). As a result, latencies in their temporal coordination occur, which in turn can affect team performance negatively.

The aforementioned disadvantages for team experience and team performance of SD-teams can be related to and explained by means of the media information richness of the technologies used for communication, coordination, and collaboration purposes. According to Media Richness Theory (MRT) (Daft and Lengel, 1986), SD-teams can experience challenges because of the limited capacities of the applied technologies to provide social and task-relevant cues and information. For instance, written forms of communication (e.g., e-mails, chats) are generally rather low in media richness because, e.g., social cues and information like facial expressions are not transmitted. On the other side, video conferences, for example, are comparatively high in media richness due to the real-time transmission of the conversation partners. According to MRT, the selection of the communication technology is not a matter of ‘the more the merrier’, but rather of appropriate application. That is, the technology should be able to provide the information and cues required to fulfill the team task.

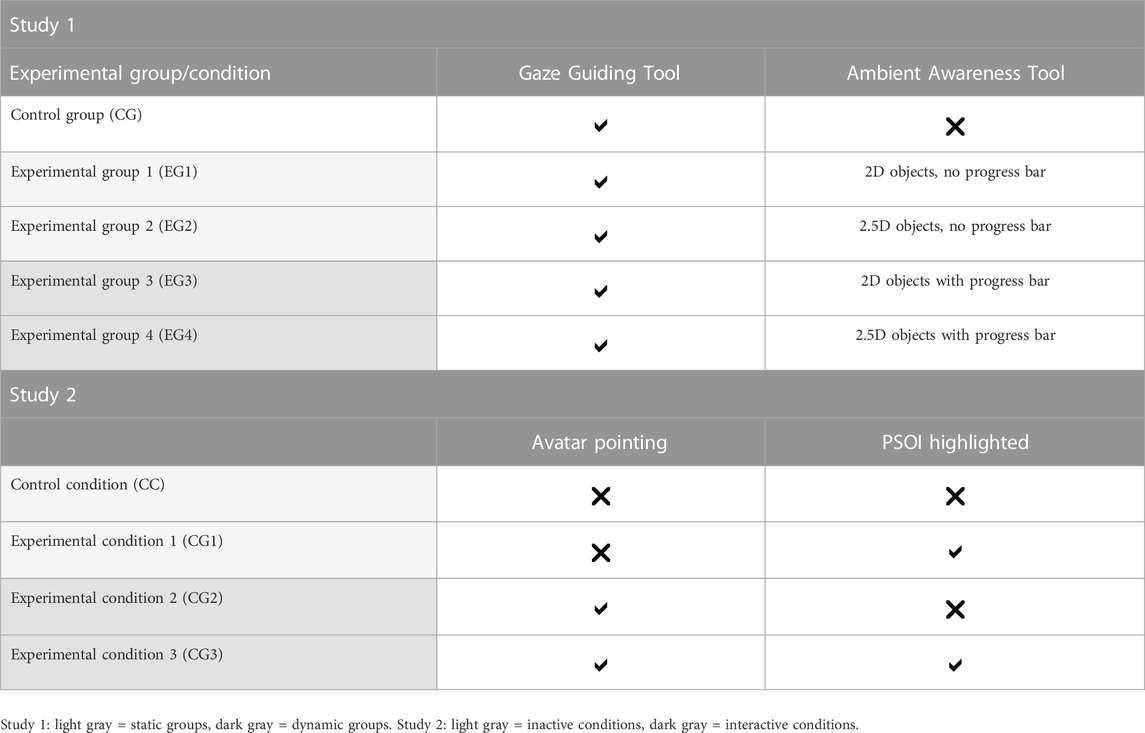

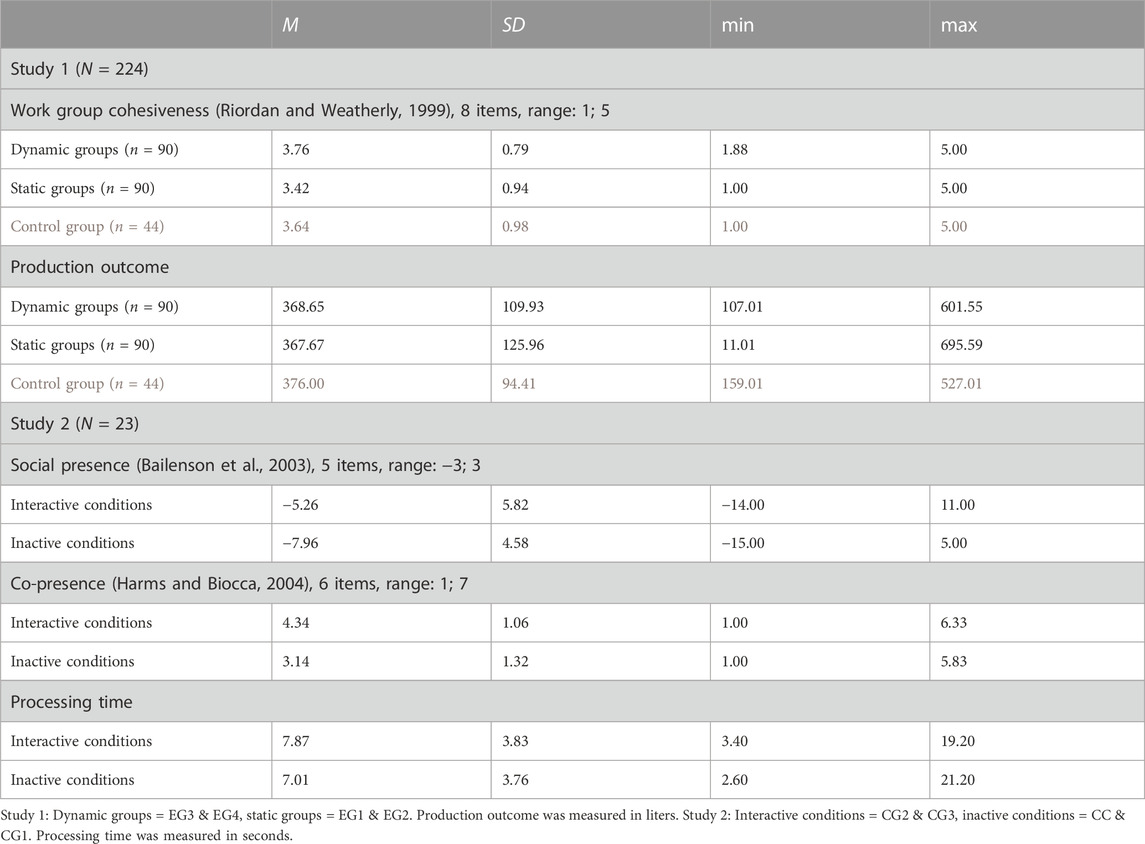

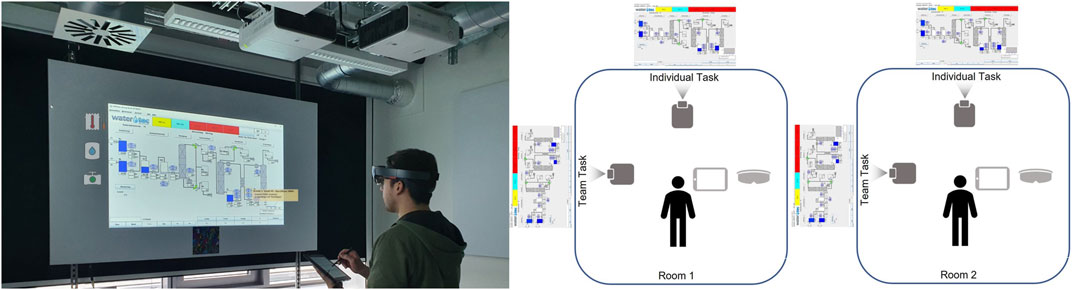

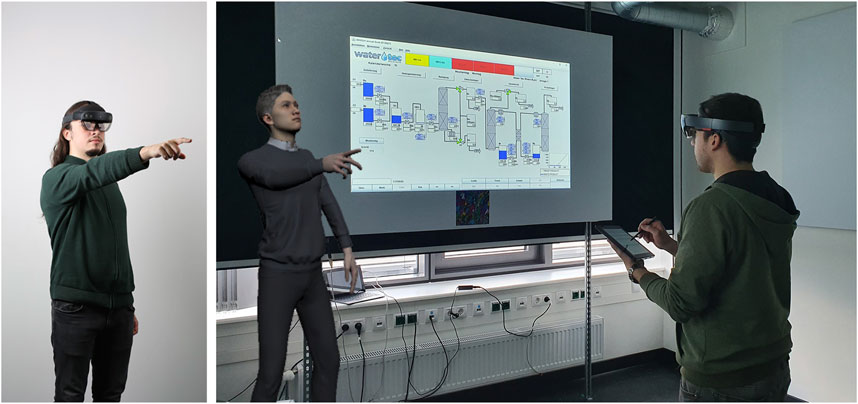

Thus, the focus of this paper is to investigate whether even minimalistic social and task-relevant cues in Augmented Reality (AR)-based assistance systems for SD-teams can positively affect team experience and team performance. To this end, we conducted an exploratory analysis on data from two laboratory studies with AR-based assistance systems for SD-teams. Both studies applied a synchronous task design (Johansen, 1988). In study 1 participants had to start up the simulation of a wastewater treatment plant and were assisted by an AR interface that utilizes graphical AR superimpositions (icons) to represent information about the current process state of the team task (Figure 1). To assess whether team experience and performance are affected by minor alterations of social and task-relevant cues, we compared data from teams that had an additional progress bar in the interface to data from teams that did not have a progress bar available. Team experience was operationalized by means of work group cohesiveness (Riordan and Weatherly, 1999), and team performance by means of the production outcome. In study 2 participants performed a search task in which they were supported by an avatar representation of the team partner (Figure 2). Depending on the experimental condition, the avatar either did nothing (inactive avatar) or pointed supportively in the direction of the area to be found (interactive avatar). We compared the data of these different experimental conditions to investigate whether a minor change in avatar behavior (as a social and task-relevant cue) affects team experience and team performance (processing time). Team experience was evaluated by means of co- (Harms and Biocca, 2004) and social presence (Bailenson et al., 2003).

FIGURE 1. Left panel: On-wall projected control surface of the WaTrSim (middle), Ambient Awareness Tool (AAT) (on the left side of the simulation surface), and Gaze Guiding Tool (located on the bottom right). Right panel: Experimental set-up of study 1.

FIGURE 2. Left panel: Experiment supervisor controlling the avatar. Right panel: Participant and avatar (mock-up—representation deviates slightly from the real set-up).

Regarding study 1, there are no comparable research results yet that show the influence of a progress bar in an AR-based interface as a social and task-relevant cue on the work-related group cohesiveness and performance of SD-teams in vocational contexts or expert-to-expert collaboration. In contrast, the impact of avatar behavior on co- and social presence has already been examined in other studies (e.g., Casanueva and Blake, 2001; Kang et al., 2008; Kang and Watt, 2013; Piumsomboon et al., 2018; Piumsomboon et al., 2019; Bai et al., 2020; Brown and Prilla, 2020). In these studies, researchers were mostly interested in creating avatar behavior that is as natural, or human-like, as possible to enhance co- and social presence. By contrast, our findings from study 2 suggest that even minor movements of the avatar might be sufficient to improve team experience by increasing co- and social presence. These findings could be interesting for organizations that want to support their SD-teams with AR-based assistance systems but, for example, only have limited resources or teams that work in areas with limited network bandwidth (e.g., rural areas).

2 Methods

Both studies employed the use case of a wastewater treatment plant (WaTrSim) (Weyers et al., 2015; Frank and Kluge, 2017; 2018). WaTrSim is a simulation environment that can be digitally controlled (see Figure 1, left panel). Participants start up the plant by following a predefined fixed sequence of 13 subtasks, encompassing the manipulation of the valve, heater, and tank settings. The objective is to achieve the highest possible production outcome of purified water and gas, which requires executing the 13 steps in the correct sequence and with appropriate timing (for further details see Thomaschewski et al., 2023). All statistical analyses (study 1 and 2) were conducted with R Project for Statistical Computing (RRID:SCR_001905).

2.1 Study 1

Study set-up and design: The task was to start up WaTrSim alone (individual task = IT) and in parallel in cooperation with the SD-team partner (team task = TT). While the IT required the execution of all subtasks alone, for the TT each team member had to alternately execute a predefined subset of the 13 steps. Accordingly, both participants had to show a high degree of temporal coordination to orchestrate their own IT and the respective sub steps of the TT to be executed in parallel in order to achieve the highest possible production outcome (for IT and TT). Both team members were placed in two separate rooms without any possibility to communicate. The IT and TT were presented by on-wall projections (1.20 × 0.75 m) on separate walls at a 90-degree angle and could be controlled by the participants via tablet (see Figure 1, right panel).

To support the SD-team, we developed an AR-based interface for the Microsoft HoloLens1, comprising a Gaze Guiding Tool (Weyers et al., 2015) and an Ambient Awareness Tool (AAT) (Thomaschewski et al., 2021). The Gaze Guiding Tool is a semi-transparent superimposition that guides the user’s gaze to the to-be-manipulated part of the system (see Figure 1, left panel). The AAT displays the next three steps of the task the user is currently not performing by means of graphical superimpositions of the to-be-manipulated system parts (heater, tank, or valve) (see Figure 1, left panel). This means that, while the user is operating the IT, the interface displays the next three steps for the TT and vice versa. The upper icon represents the next step. As soon as this step can be executed, the icon starts flashing. By displaying the next three steps of the TT, both users receive information about the current process state of the TT, which should lead to a higher level of TSA and thus also increase performance. We applied a between-subjects 2 × 2 plus a control group design including the factors 1) dimensionality (2D superimpositions vs. 2.5D superimpositions) and 2) dynamics (static = no progress bar vs. dynamic = additional progress bar). In the present paper, we focus on the impact of the dynamics factor. Accordingly, the sample was divided into a) static (no progress bar), b) dynamic (additional progress bar), and c) control group (no AAT). Table 1 shows a description of all groups and depicts the group aggregation we used for the analysis in this paper.
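The preview behavior of the AAT described above can be illustrated with a minimal Python sketch. It is not the authors' HoloLens implementation, which is not given in this paper; the names `Preview` and `aat_preview`, the parameter names, and the handling of the final steps are hypothetical and only mirror the textual description (show up to three upcoming steps of the task not currently operated, and flash the upper icon once the next step can be executed).

```python
from dataclasses import dataclass
from typing import List

# Hypothetical labels: "IT" = individual task, "TT" = team task.
TASKS = ("IT", "TT")


@dataclass
class Preview:
    task: str         # task whose upcoming steps are displayed
    steps: List[int]  # upcoming step numbers, next step first (upper icon)
    flashing: bool    # True when the next step can be executed now


def aat_preview(active_task: str, next_step_other: int, executable_now: bool,
                total_steps: int = 13, window: int = 3) -> Preview:
    """Return what the AAT would show for the task NOT currently operated."""
    if active_task not in TASKS:
        raise ValueError(f"unknown task: {active_task!r}")
    other = "TT" if active_task == "IT" else "IT"
    # Show at most `window` steps and never run past the last subtask.
    last = min(next_step_other + window - 1, total_steps)
    steps = list(range(next_step_other, last + 1))
    # Assumption: the icon only flashes if there is a step left to show.
    return Preview(other, steps, flashing=executable_now and bool(steps))
```

For example, `aat_preview("IT", 5, True)` describes a user operating the IT who sees steps 5, 6, and 7 of the TT with a flashing upper icon.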

The progress bar (dynamic group) was placed next to the upper icon and realized as a continuous progress indication consisting of discrete triggers indicating the time left until the next step must be executed. This means that the progress bar adds indirect information about the team partner’s current actions and the process state. Thus, by adding a progress bar, we enrich the AR-interface with additional social and task-relevant information, providing a more media-rich assistance system. Therefore, the dynamic group should differ in their team experience and team performance compared to the static groups.

One experimental procedure lasted 4 h in total and included an introduction on operating WaTrSim, completion of several questionnaires, desktop-based WaTrSim training (without AR-based support), and the main experiment with support of the AR-based assistance system. The main measurement period comprised six runs of simultaneously controlling one instance of WaTrSim alone (IT) and one instance of WaTrSim as part of an SD-team (TT). One “WaTrSim-start-up-run” lasted a maximum of 240 s.

Participants: We analyzed the data of N = 224 participants (147 female, 75 male, two other identifications; M_age = 23.22, SD_age = 3.86, range_age = 18–43) who had no prior experience with WaTrSim. Most of the participants (95.98%) were students from various disciplines at a German university. For participation we either paid a €40 expense allowance or credited four subject hours. Of the participants, 83.93% indicated interest in the topic of AR and 7.14% had used AR glasses before. Most of the participants (68.30%) did not know their team partner before taking part in the study.

Instruments: Since cohesion is assumed to be positively related with satisfaction (Chidambaram, 1996), team experience was operationalized by work group cohesiveness (WGC), which we measured after the experiment using the WGC subscale of the work group identification questionnaire by Riordan and Weatherly (1999). WGC indicates the extent to which individuals identify with their team, measuring “the degree to which individuals believe that the members of their work groups are attracted to each other, willing to work together, and committed to the completion of the tasks and goals of the work group” (p. 315). In virtual teams, WGC tends to be lower compared to face-to-face teams due to the loss of social and task-relevant information (Warkentin et al., 1997). Therefore, adding additional social and task-relevant information by means of a progress bar should lead to a more positive team experience mirrored by a higher level of WGC.

The original English subscale was translated into German by the lead investigator and showed excellent values for internal consistency (α = .93 – .97). Higher scores indicate a higher perception of WGC.

Team performance was operationalized by the production outcome of the TT. For analysis, we used the trial with the highest production outcome in the TT of the last four runs. A higher production outcome indicates a better team performance (see Supplementary Table S1).
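The selection rule for the performance score can be written down as a short Python sketch. The function name `team_performance` and the example values are hypothetical; only the rule itself (highest TT production outcome among the last four of the six runs) comes from the text.

```python
from typing import Sequence


def team_performance(tt_outcomes: Sequence[float], last_n: int = 4) -> float:
    """Best team-task production outcome among the last `last_n` runs."""
    if len(tt_outcomes) < last_n:
        raise ValueError("not enough runs recorded")
    # Earlier runs are ignored, even if they produced a higher outcome.
    return max(tt_outcomes[-last_n:])


# Hypothetical outcomes for six runs; the first two runs are not considered.
example_runs = [310.0, 520.0, 480.0, 450.0, 500.0, 470.0]
best = team_performance(example_runs)
```

With these made-up values the score is 500.0, because the higher outcome of run 2 falls outside the last four runs.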

Results study 1: The descriptive results for study 1 are shown in Table 2. WGC differed significantly between the groups (one-way ANOVA: F (2,221) = 3.32, p = .038, η2 = 0.17). Tukey post-hoc testing indicated significantly higher WGC values only for the dynamic group compared to the static group (diff = −0.34, p = .03, CI: [−0.65; −0.03], d = 0.39).

Comparisons of the production outcome between the groups yielded no statistically significant differences (one-way ANOVA: F (2,221) = 0.085, p = .92, η2 = 0.03).
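For readers who want to relate the reported F statistics to common effect-size measures, the following Python sketch applies the standard conversions for a one-way between-subjects ANOVA: η² = (F · df_effect) / (F · df_effect + df_error) and Cohen's f = sqrt(η² / (1 − η²)). The function names are hypothetical, and the sketch is not a re-analysis of the data: this section does not state which effect-size variant or software routine the authors used, so the results need not match the reported values.

```python
import math


def eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """Eta squared implied by F in a one-way between-subjects ANOVA."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_f_from_eta_squared(eta_sq: float) -> float:
    """Cohen's f computed from eta squared."""
    return math.sqrt(eta_sq / (1.0 - eta_sq))


# F statistics as reported for study 1 (df = 2, 221).
wgc_eta = eta_squared_from_f(3.32, 2, 221)      # about 0.029
wgc_f = cohens_f_from_eta_squared(wgc_eta)      # about 0.17
prod_eta = eta_squared_from_f(0.085, 2, 221)    # about 0.0008
prod_f = cohens_f_from_eta_squared(prod_eta)    # about 0.03
```

These conversions hold only for the between-subjects case of study 1; repeated-measures designs require the corresponding sums of squares.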

Discussion study 1: Our findings suggest that visualizing a continuous progress indication of the team task in an SD-team setting can significantly increase the perception of WGC, thus contributing to a positive team experience. This finding is consistent with the MRT (Daft and Lengel, 1986), as the progress bar might have served as an additional social and task-relevant cue that led to an improved team experience: The progress bar provides task-relevant information by indicating when the team member needs to become active again, but it also serves as a socially relevant cue, as it allows conclusions about what the SD-team partner has just done.

2.2 Study 2

Study set-up and design: Study 2 was conceptualized as a feasibility study of the set-up and was also conducted with the WaTrSim environment. The participants’ task was to read specific system states from images of the WaTrSim surface, so-called plant section(s) of interest (PSOI): The supervisor asked the participant to read a specific tank, heater, or valve setting, and the participant had to communicate it verbally to the supervisor, who represented the SD-team partner (located in a separate room). To support the participant, we displayed an AR-based full-body avatar representation of the SD-team partner (the supervisor) via Microsoft HoloLens1 (see Figure 2). For head and hand tracking we used a Microsoft HoloLens2; body tracking was realized via Microsoft Kinect. Facial expressions were not transferred. For further technical details of the set-up please see Thomaschewski et al. (2023).

The objective of study 2 was to investigate whether the avatar’s behavior and context cues influence task performance (processing time) and the perception of co- and social presence. We applied a 2 × 2 within-subject design, encompassing the factors 1) pointing (interactive: avatar points to the PSOI vs. inactive: avatar does not point to the PSOI) and 2) context cues (highlighting PSOI vs. no highlighting of PSOI). The results for this full design are reported in Thomaschewski et al. (2023). As in study 1, for the exploratory results reported here, we applied another split of conditions to focus on the factor of avatar behavior. For the following comparisons, conditions were split into a) interactive avatar and b) inactive avatar. Table 1 provides a description of all conditions and shows the aggregation for the analysis in this paper.
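The regrouping of the four within-subject conditions into the two avatar-behavior levels can be sketched in Python as follows. The data layout, the function name `group_by_avatar_behavior`, and the example scores are hypothetical; the sketch only relabels observations and deliberately does not average them, since this section does not state how the authors combined the two conditions within each level.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding of one condition: (pointing, highlighting).
Condition = Tuple[bool, bool]


def group_by_avatar_behavior(scores: Dict[Condition, float]) -> Dict[str, List[float]]:
    """Collect one participant's scores by avatar behavior, ignoring highlighting."""
    groups: Dict[str, List[float]] = {"interactive": [], "inactive": []}
    for (pointing, _highlighting), value in scores.items():
        groups["interactive" if pointing else "inactive"].append(value)
    return groups


# Made-up co-presence scores for one participant in the four conditions.
participant = {
    (True, True): 5.5,
    (True, False): 5.0,
    (False, True): 3.0,
    (False, False): 2.5,
}
grouped = group_by_avatar_behavior(participant)
```

Each participant thus contributes two observations per avatar-behavior level, one with and one without highlighting of the PSOI.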

In the interactive conditions, the avatar pointed in the direction of the PSOI to be read, whereas in the inactive conditions the avatar just stood quietly next to the simulation surface. By pointing to the PSOI, the avatar delivered additional social and task-relevant information, making the assistance system higher in media richness. Thus, the interactive conditions should improve team experience and team performance.

To counteract sequence-based biases, the order of the experimental conditions was fully randomized. Each condition comprised reading aloud five system states (four conditions = 20 in total). Co- and social presence were measured after each condition. Processing time was defined as the time between the supervisor’s prompt to read a PSOI and the participant’s response. One study run lasted 1 h.

Participants: For analysis, we used the data of N = 23 participants (12 female, 11 male; M_age = 24.09, SD_age = 3.27, range_age = 19–30) who had no prior experience in controlling WaTrSim. Most of the participants were students (82.61%). For participation we paid a €10 expense allowance. Of the participants, 30.43% had prior experience with AR and/or virtual reality (VR) and 43.48% indicated that they had seen an avatar before.

Instruments: Team experience was operationalized by co- and social presence. Social presence indicates the degree to which the participant perceives the avatar as a real person (Bailenson et al., 2003) and is directly connected to MRT, since it increases with media richness (Bulu, 2012). According to Daft and Lengel (1986), media richness can be improved by raising “the number of cues and senses involved” (p. 560). It follows that an interactive avatar should provoke higher levels of social presence, since the pointing gesture can be regarded as an additional cue that makes the avatar more vivid and interactive, increasing the perception of working together with a real human. Co-presence is primarily defined as the extent of the participant’s awareness that they are not alone or isolated (Harms and Biocca, 2004), the perception of sharing the same (virtual) environment with their team partner, and the team partner’s mutual awareness (Bulu, 2012). Thus, high levels of co-presence emerge when the used technology is capable of creating the perception of being socially and psychologically connected to one’s team partner. Against this background, the question arises whether a minimal pointing gesture of the avatar is already sufficient to generate co- and social presence.

To survey social presence, we used the five-item scale by Bailenson et al. (2003) (internal consistency α = .36–.78). Co-presence was measured with the respective subscale of Harms and Biocca (2004) (internal consistency: α = .85–.90).

Team performance was measured by the time the participants needed to verbally communicate the respective system state (processing time). Since the images of the simulation surface were presented using Microsoft PowerPoint, we measured the processing time by recording the display time of each slide. We propose that a lower processing time (mean scores per condition) indicates better team performance (see Supplementary Table S2).
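The processing-time score described above (mean slide display time per condition) can be sketched in Python. The function name `mean_processing_time`, the condition labels, and the timing values are hypothetical and serve only to illustrate the aggregation of the five readings in a condition.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def mean_processing_time(trials: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average slide display time (seconds) per condition."""
    by_condition: Dict[str, List[float]] = defaultdict(list)
    for condition, seconds in trials:
        by_condition[condition].append(seconds)
    return {condition: mean(times) for condition, times in by_condition.items()}


# Made-up display times for the five system states of two conditions.
example_trials = [
    ("pointing+highlight", 4.0), ("pointing+highlight", 5.0),
    ("pointing+highlight", 6.0), ("pointing+highlight", 5.0),
    ("pointing+highlight", 5.0),
    ("no_pointing+no_highlight", 6.0), ("no_pointing+no_highlight", 7.0),
    ("no_pointing+no_highlight", 8.0), ("no_pointing+no_highlight", 7.0),
    ("no_pointing+no_highlight", 7.0),
]
scores = mean_processing_time(example_trials)
```

A lower mean in a condition would then be read as better team performance, in line with the operationalization above.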

Results study 2: As shown in Table 2, the participants showed higher scores for social and co-presence in the conditions with an interactive avatar in comparison to the conditions with an inactive avatar. Repeated-measures ANOVAs confirmed the significance of this difference (social presence: F (1,45) = 11.77, p = .001, η2 = 0.06; co-presence: F (1,45) = 33.56, p < .001, η2 = 0.20).

Processing time did not differ significantly between the conditions (F (1,45) = 1.88, p = .177, η2 = 0.01).

Discussion study 2: Our findings show that participants were more likely to perceive the interactive avatar as a real person than the inactive avatar (social presence). Further, the interactive avatar elicited a stronger sense of co-presence compared to the inactive avatar. This suggests that even a minimum level of interactivity via a pointing gesture is sufficient for SD-team members to feel less alone, have the impression of being in a shared (physical) context with their team partner, and perceive a mutual awareness between them and their team partner. Based on the MRT, we assume that the avatar’s pointing gesture served as a socially relevant cue, as the movement conveyed information about the current behavior of the SD-team member. Moreover, we suppose that the pointing gesture also provided a task-relevant cue, as it supported the participant in the search task.

3 Limitations

Although our research provides relevant findings, they come with limitations regarding technical and sampling aspects. With respect to technical aspects, the narrow field of view of the HoloLens1 might have affected the study results. Since the AR-superimpositions we used in both studies were spatially fixed in position, it was not guaranteed that they were constantly in the participants’ field of view. This might have had a negative moderating impact on the influence of the social and task-relevant cues on team experience and team performance. To rule out such an attenuating effect, future research should either use hardware with a larger field of view or make the AR-superimpositions adaptive, such as the realization of the Mini-Me by Piumsomboon et al. (2018), for example.

Second, there are some limitations in relation to gender aspects of sample composition: In study 2 we used a male avatar. Due to the composition of our sample, we had 12 mixed dyads (male avatar and female participant). In accordance with the findings from Kang et al. (2008), who found higher interactant satisfaction in non-mixed dyads, this might have influenced our results. In future work this possible issue should be addressed by either controlling for the participant’s gender or by extending the experimental design to include a female and a gender-neutral avatar. In relation to study 1, it should be noted that some teams knew each other before, whereas other teams met for the first time in our laboratory. In future studies, this should be kept consistent by investigating either a) newly formed teams, or b) real existing work teams in which members already know each other. In the sense of application-oriented research for the vocational context, alternative b) would be especially appropriate here.

Finally, since the present analyses were conducted on exploratory research questions, further planned research is needed to support the outlined results. In this context, it would be particularly important to use further task scenarios to investigate whether our results are generalizable to other SD-team tasks. Moreover, it would be profitable to apply other measurements for team performance, like conversational processes. To gain first insights into the underlying communication patterns we transformed the SD-setting from study 1 into a non-spatially dispersed teamwork setting and are currently analyzing the team communication by means of videos that we made from the teams working together co-located.

4 Conclusion and future work

The objective of this paper was to investigate whether the implementation of minimalistic social and task-relevant cues in AR-based assistance systems for SD-teams influences team experience and team performance. For this purpose, we exploratively analyzed data from two empirical laboratory studies. In conclusion, our results suggest that even minor and rather latent social and task-relevant cues, such as the inclusion of a progress bar or a small pointing gesture of an avatar, are sufficient to positively influence team experience but not to enhance team performance. These results are particularly interesting for the implementation of AR-based assistance systems for SD-teams in the vocational context, as they indicate that even with minimal means, team experience can be improved while at the same time not negatively impacting team performance.

These findings are relevant when designing AR-based assistance systems for vocational contexts with specific requirements, such as low available network bandwidth (e.g., rural areas) or the need for using hardware with low data storage and processing capacity. In such cases, it can be important to be able to weigh up how low media richness of the AR-based assistance system may be in order to still be conducive to teamwork.

In future work, we will build upon the exploratory results presented here and conduct planned and controlled laboratory studies with the objective of defining a) how much WGC, social and co-presence is “enough” to properly contribute to the team members’ wellbeing, and b) a threshold for the minimum of media richness that is required to elicit the levels scientifically evaluated under a). With these research results, we aim to contribute to lowering the threshold for access to such assistance systems and to making their use more inclusive: For instance, to create a minimalistic moving avatar, expensive hardware such as depth cameras or tracking systems would not be mandatory, so that the implementation of such assistance systems is significantly cheaper and thus accessible to a broader range of users. At the same time, it will be much easier and faster to develop assistance systems low in media richness, which in turn conserves diverse resources.

Especially for small and medium-sized enterprises, which do not have large investment budgets at their disposal, these results could be interesting as they show that it is possible to provide human-centered and health-promoting AR-based assistance systems for supporting SD-teams despite limited resources.

In sum: Small changes make large differences!

Data availability statement

The datasets presented in this study can be found in the OSF online repository: https://osf.io/p9xta/?view_only=7ed970ed843149b390f73aeef3c979d6.

Ethics statement

The studies involving human participants were reviewed and approved by Local Ethics-Committee of the Faculty of Psychology (Ruhr University Bochum). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any identifiable images or data included in this article.

Author contributions

LT, NF, BW, and AK contributed to the conception and design of the study. LT organized the conduction of the studies, performed the statistical analysis, and wrote the first draft of the manuscript. LT, NF, BW, and AK wrote sections of the manuscript. All authors contributed to the manuscript revision and approved the submitted version.

Funding

This work was partly funded by the DFG (Deutsche Forschungsgemeinschaft), grant numbers KL2207/7-1 and WE5408/3-1.

Acknowledgments

We acknowledge support by the Open Access Publication Funds of the Ruhr-Universität Bochum. Special thanks go to our student assistants for their tireless recruitment of participants as well as for their support during the data collection process.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2023.1163337/full#supplementary-material

References

Bai, H., Sasikumar, P., Yang, J., and Billinghurst, M. “A user study on mixed reality remote collaboration with eye gaze and hand gesture sharing,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 2020.

Bailenson, J. N., Blascovich, J., Beall, A. C., and Loomis, J. M. (2003). Interpersonal distance in immersive virtual environments. Personality Soc. Psychol. Bull. 29, 819–833. doi:10.1177/0146167203029007002

Bardram, J. E. (2000). Temporal coordination – on time and coordination of collaborative activities at a surgical department. Comput. Support. Coop. Work (CSCW) 9, 157–187. doi:10.1023/A:1008748724225

Bergiel, B. J., Bergiel, E. B., and Balsmeier, P. W. (2008). Nature of virtual teams: A summary of their advantages and disadvantages. Manag. Res. News 31, 99–110. doi:10.1108/01409170810846821

Boos, M., Hardwig, T., and Riethmüller, M. (2017). Führung und Zusammenarbeit in verteilten Teams. Göttingen, Germany: Hogrefe.

Brown, G., and Prilla, M. “The effects of consultant avatar size and dynamics on customer trust in online consultations,” in Proceedings of the Conference on Mensch und Computer, New York, NY, USA, September 2020.

Bulu, S. T. (2012). Place presence, social presence, co-presence, and satisfaction in virtual worlds. Comput. Educ. 58, 154–161. doi:10.1016/j.compedu.2011.08.024

Casanueva, J., and Blake, E. (2001). “The effects of avatars on co-presence in a collaborative virtual environment,” in Hardware, software and peopleware (Pretoria, South Africa: University of South Africa).

Chidambaram, L. (1996). Relational development in computer-supported groups. MIS Q. 20, 143. doi:10.2307/249476

Daft, R. L., and Lengel, R. H. (1986). Organizational information requirements, media richness and structural design. Manag. Sci. 32, 554–571. doi:10.1287/mnsc.32.5.554

Frank, B., and Kluge, A. (2018). Can cued recall by means of gaze guiding replace refresher training? An experimental study addressing complex cognitive skill retrieval. Int. J. Industrial Ergonomics 67, 123–134. doi:10.1016/j.ergon.2018.05.007

Frank, B., and Kluge, A. (2017). “Cued recall with gaze guiding: reduction of human errors with a gaze-guiding tool,” in Advances in neuroergonomics and cognitive engineering. Editors K. S. Hale, and K. M. Stanney (Cham, Switzerland: Springer International Publishing), 3–16.

Fussell, S. R., Kraut, R. E., and Siegel, J. (2000). “Coordination of communication,” in Proceedings of the 2000 ACM conference on Computer supported cooperative work - CSCW '00, New York, NY, USA, December 2000.

Hagemann, V., Kluge, A., and Ritzmann, S. (2012). Flexibility under complexity. Empl. Relat. 34, 322–338. doi:10.1108/01425451211217734

Harms, C., and Biocca, F. (2004). “Internal consistency and reliability of the networked minds social presence measure,” in Proceedings of the Seventh Annual International Workshop: Presence 2004, Valencia, Spain, October 2004.

Kang, S.-H., Watt, J. H., and Ala, S. K. (2008). “Communicators' perceptions of social presence as a function of avatar realism in small display mobile communication devices,” in Proceedings of the 41st Annual Hawaii International Conference on System Sciences (HICSS 2008), Waikoloa, HI, USA, January 2008.

Kang, S.-H., and Watt, J. H. (2013). The impact of avatar realism and anonymity on effective communication via mobile devices. Comput. Hum. Behav. 29, 1169–1181. doi:10.1016/j.chb.2012.10.010

Kirkman, B. L., Rosen, B., Gibson, C. B., Tesluk, P. E., and McPherson, S. O. (2002). Five challenges to virtual team success: lessons from Sabre, Inc. Acad. Manag. Perspect. 16, 67–79. doi:10.5465/ame.2002.8540322

Korsgaard, M. A., Picot, A., Wigand, R. T., Welpe, I. M., and Assmann, J. J. (2010). “Cooperation, coordination, and trust in virtual teams: insights from virtual games,” in Online worlds: Convergence of the real and the virtual. Editor W. S. Bainbridge (London: Springer London), 253–264.

Kraut, R. E., Gergle, D., and Fussell, S. R. (2002). “The use of visual information in shared visual spaces,” in Proceedings of the 2002 ACM conference on Computer supported cooperative work - CSCW '02, New York, NY, USA, November 2002, 31.

Marks, M. A., Mathieu, J. E., and Zaccaro, S. J. (2001). A temporally based framework and taxonomy of team processes. Acad. Manag. Rev. 26, 356–376. doi:10.5465/amr.2001.4845785

Mohammed, S., Hamilton, K., Tesler, R., Mancuso, V., and McNeese, M. (2015). Time for temporal team mental models: expanding beyond “what” and “how” to incorporate “when”. Eur. J. Work Organ. Psychol. 24, 693–709. doi:10.1080/1359432X.2015.1024664

Mohammed, S., and Nadkarni, S. (2014). Are we all on the same temporal page? The moderating effects of temporal team cognition on the polychronicity diversity–team performance relationship. J. Appl. Psychol. 99, 404–422. doi:10.1037/a0035640

Piumsomboon, T., Dey, A., Ens, B., Lee, G., and Billinghurst, M. (2019). The effects of sharing awareness cues in collaborative mixed reality. Front. Robotics AI 6, 5. doi:10.3389/frobt.2019.00005

Piumsomboon, T., Lee, G. A., Hart, J. D., Ens, B., Lindeman, R. W., Thomas, B. H., et al. (2018). “Mini-me,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, April 2018.

Raghuram, S., Garud, R., Wiesenfeld, B., and Gupta, V. (2001). Factors contributing to virtual work adjustment. J. Manag. 27, 383–405. doi:10.1177/014920630102700309

Riordan, C. M., and Weatherly, E. W. (1999). Defining and measuring employees’ identification with their work groups. Educ. Psychol. Meas. 59, 310–324. doi:10.1177/00131649921969866

Rockmann, K. W., and Northcraft, G. B. (2008). To be or not to be trusted: the influence of media richness on defection and deception. Organ. Behav. Hum. Decis. Process. 107, 106–122. doi:10.1016/j.obhdp.2008.02.002

Sebanz, N., Bekkering, H., and Knoblich, G. (2006). Joint action: bodies and minds moving together. Trends Cognitive Sci. 10, 70–76. doi:10.1016/j.tics.2005.12.009

Tangirala, S., and Alge, B. J. (2006). Reactions to unfair events in computer-mediated groups: A test of uncertainty management theory. Organ. Behav. Hum. Decis. Process. 100, 1–20. doi:10.1016/j.obhdp.2005.11.002

Thomaschewski, L., Feld, N., Weyers, B., and Kluge, A. (2023). “The use of augmented reality for temporal coordination in everyday work context,” in Everyday virtual and augmented reality. Editors A. Simeone, B. Weyers, S. Bialkova, and R. Lindeman (Berlin, Germany: Springer), 57–87.

Thomaschewski, L., Weyers, B., and Kluge, A. (2021). A two-part evaluation approach for measuring the usability and user experience of an Augmented Reality-based assistance system to support the temporal coordination of spatially dispersed teams. Cognitive Syst. Res. 68, 1–17. doi:10.1016/j.cogsys.2020.12.001

Vesper, C., Schmitz, L., Safra, L., Sebanz, N., and Knoblich, G. (2016). The role of shared visual information for joint action coordination. Cognition 153, 118–123. doi:10.1016/j.cognition.2016.05.002

Warkentin, M. E., Sayeed, L., and Hightower, R. (1997). Virtual teams versus face-to-face teams: an exploratory study of a web-based conference system. Decis. Sci. 28, 975–996. doi:10.1111/j.1540-5915.1997.tb01338.x

Keywords: avatar, spatially dispersed teams, augmented reality, team experience, team performance, work group cohesiveness, co-presence, social presence

Citation: Thomaschewski L, Feld N, Weyers B and Kluge A (2023) I sense that there is someone else: an exploratory study on the influence of the media richness of Augmented Reality-based assistance systems on team experience and performance. Front. Virtual Real. 4:1163337. doi: 10.3389/frvir.2023.1163337

Received: 27 February 2023; Accepted: 16 August 2023;

Published: 15 September 2023.

Edited by:

Stefan Greuter, Deakin University, Australia

Reviewed by:

Mark Billinghurst, University of South Australia, Australia

Ryan J. Ward, University of Liverpool, United Kingdom

Copyright © 2023 Thomaschewski, Feld, Weyers and Kluge. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lisa Thomaschewski, lisa.thomaschewski@rub.de
