- 1 Institute of Visual Computing, Bonn-Rhein-Sieg University of Applied Sciences, Sankt Augustin, Germany

- 2 School of Interactive Arts and Technology, Simon Fraser University, Burnaby, BC, Canada

- 3 HIT Lab NZ, University of Canterbury, Christchurch, New Zealand

The visual and auditory quality of computer-mediated stimuli for virtual and extended reality (VR/XR) is rapidly improving. Still, it remains challenging to provide a fully embodied sensation and awareness of objects surrounding, approaching, or touching us in a 3D environment, even though such awareness can greatly aid task performance in a 3D user interface. For example, feedback can provide warning signals for potential collisions (e.g., bumping into an obstacle while navigating) or pinpoint areas to which one’s attention should be directed (e.g., points of interest or danger). These events inform our motor behaviour and are often associated with perceptual mechanisms tied to our so-called peripersonal and extrapersonal space models, which relate our body to object distance, direction, and contact point/impact. We discuss these reference spaces to explain the role of different cues in the motor action responses that underlie 3D interaction tasks. However, providing proximity and collision cues can be challenging. Various full-body vibration systems have been developed that stimulate body parts other than the hands, but their applicability and feasibility can be limited by their cost and operating effort, as well as by hygienic considerations such as those raised by Covid-19. Informed by the results of a prior study using low-frequency vibrations for collision feedback, in this paper we look at an unobtrusive way to provide spatial, proximity, and collision cues. Specifically, we assess the potential of foot sole stimulation to provide cues about object direction and relative distance, as well as collision direction and force of impact. Results indicate that vibration-based stimuli in particular could be useful within the frame of peripersonal and extrapersonal space perception to support 3DUI tasks. Current results favor the feedback combination of continuous vibrotactor cues for proximity and bass-shaker cues for body collision.
Results show that users could judge the different cues relatively easily and at a reasonably high granularity. This granularity may be sufficient to support common navigation tasks in a 3DUI.

1 Introduction

In Virtual Reality, we perform many tasks that involve selecting and manipulating objects, or navigating between the objects that constitute the space around us. To do so, we need to be and remain aware of these objects (spatial awareness). We achieve this by building up and maintaining a mental spatial representation of our surroundings while moving through them, via a process known as spatial updating (Presson and Montello, 1994). In real life, we rely on our perceptual systems to establish a relationship between our body and the objects surrounding us in order to trigger appropriate motor actions. While this process seems seamless and requires little cognitive effort, it is actually complicated: it involves processing and combining stimuli from different perceptual channels, and responding to these stimuli in an apt manner. We rely on a range of stimuli, including visual, auditory, vestibular, and proprioceptive cues as well as full-body tactile cues, to build up our awareness of objects surrounding or touching our body, and to create and update the associated spatial models that represent the environment around us. For example, such cues are highly relevant when we move (navigate) towards an object in order to select it. We need to be aware of the target location as well as of other objects we may need to avoid colliding with, and plan our path through the environment accordingly. In doing so, objects surrounding us can be referenced in direct relation to our body (body-referenced), which is the main focus of this paper. Unfortunately, many 3D user interfaces (3DUIs) are limited in their ability to convey multisensory cues adequately to inform these processes, often due to hardware limitations. The aforementioned perceptual processes are closely tied to cognitive resources, attention mechanisms, and the underlying decision-making processes that drive our motor action systems to plan and perform actions (Brozzoli et al., 2014).
These processes are often associated with the so-called peripersonal space (PPS) and extrapersonal space (EPS). These spaces relate to the perception of closeness (distance and direction) of objects in relation to the human body. The PPS deals specifically with objects in the direct proximity of the body, or objects that touch (or collide with) the body. Tactile cues from the arms, head, and chest, but also visual or auditory stimuli presented within a limited space surrounding these body parts, are processed to contribute to our PPS (Noel et al., 2015). The PPS is generally considered to extend around 30 cm from the body, but can extend up to around 1.5 m (Stone et al., 2017). The border of the PPS touches upon the surrounding EPS. Research suggests that collisions may stop our movement through an environment (actual body collision, e.g., when grasping an object; Lee et al., 2013). Alternatively, we may simply try to avoid collisions by adjusting our movement (Regan and Gray, 2000; Cinelli and Patla, 2008).

1.1 Problem space and approach

In the context of 3DUIs, the PPS and EPS can play an important role. However, when cues are lacking due to hardware limitations (e.g., lack of peripheral visual cues or haptic feedback towards the body), the performance of tasks relying on the PPS/EPS may be constrained (LaViola et al., 2017). In a 3DUI, in particular while using immersive systems, we relate our body to the objects around us. These objects are judged within a reference frame to drive our motor actions associated with selection, manipulation, and navigation tasks. In this paper, we look at vibration-based proximity and collision feedback methods that can potentially aid these processes in a 3DUI, by assessing their effect on lower-level perceptual mechanisms. As an example of the effect of a common system constraint on these reference frames, research has indicated that a restricted field of view (FOV) can reduce perceptual and visuomotor performance in both real and virtual environments (Baumeister et al., 2017). Moreover, reducing the FOV can negatively affect our perception of motion (Brandt et al., 1973), scale (Jones et al., 2013), and distance (Jones et al., 2011), while also limiting the user’s spatial awareness (Legge et al., 2016). As such, a narrower FOV can directly affect our perception of the objects surrounding our body that are captured in the PPS and EPS. These effects may increase when other feedback channels are also constrained. For example, full-body awareness can be limited when cues are provided only to the upper body and not to the lower body (Jung and Hughes, 2016).

In this article, we investigate if and how going beyond the commonly used feedback channels in VR (eyes, head, and hands) could support perception inside the PPS/EPS. We do so by stimulating the feet using tactile cues. Generally, we use softer tactile cues (vibrotactors) for proximity (sensory substitution) and stronger cues produced by a bass-shaker to convey collisions. As we will show in the related work section, tactile cues have often been shown to work well for conveying proximity to relevant body parts, while stronger cues, owing to their heightened intensity, more closely resemble actual on-body collisions in real life.

We chose to focus on the lower body (including the feet) because, as noted before, research indicates that lower-body stimuli may contribute to the full-body PPS. The PPS relates the overall body to the objects surrounding us (Serino et al., 2015; Stone et al., 2017, 2020), and is also linked to our sense of body ownership (Grivaz et al., 2017). As such, it is directly relevant for actions in immersive environments where we need to react to or directly interact with objects around us. The PPS and EPS thereby also contribute to our situation awareness (Endsley, 1995), which entails knowledge about the dynamic configuration of objects surrounding us and is an important driver for 3DUI tasks (LaViola et al., 2017). In our studies, tactile cues encode information about objects in the proximity of the body as well as objects touching it. We use sensory substitution (Kaczmarek et al., 1991) to provide proximity cues that, in real-world conditions, are predominantly sensed through our visual or auditory systems. In real life, tactile cues are mainly concerned with touch, yet studies have shown that they can also be utilized for other purposes.

The work reported in this paper is inspired by results from our previous research. In Kruijff et al. (2015), we used strong low-frequency vibrations to the feet to elicit haptic sensations in different body parts. We reflect more closely on the results of this study in Section 5 to guide our main studies. In Marquardt et al. (2018a), we demonstrated a novel tactile glove and showed that tactile cues can be used to provide proxemic information about the distance and direction to an object close to the hand. In our current studies, we explore whether proxemic cues can be used similarly when provided to the feet instead of the hands. In Kruijff et al. (2016), we used tactile stimuli to the foot soles to simulate human gait by simulating the roll-off process of the feet. A bass-shaker mounted under the feet simulated the impact, while footstep sounds simulated the sound associated with walking. We showed that these cues improved the perception of self-motion velocities and distances traveled without increasing cognitive load, demonstrating the potential of the feet as a feedback channel. Finally, in Jones et al. (2020), we demonstrated how directional tactile and force feedback to the sides of the feet can be deployed to provide directional proximity and collision cues. It was used in a remote telepresence robot operation scenario to assess how proximity feedback can affect general navigation behaviour. That paper did not look closely at directional and distance perception accuracy or at actual changes in path planning behaviour, nor did it assess the underlying perceptual mechanisms. To address this gap, the current study more explicitly investigates the perception of the distance and direction of objects in our immediate surroundings, reflecting upon the EPS and PPS.

1.2 Contributions

This article focuses on exploring how vibrotactile cues applied to the feet are interpreted and what information they can convey about the PPS and EPS. Outcomes can inform the design of novel 3D interaction techniques and devices. First, we explain the PPS and EPS and their potential role in 3DUIs, and how cues towards the feet and lower body can contribute to these reference spaces.

In our studies, we investigate two related issues: (A) the perception of feet and lower-body tactile stimuli that can convey proximity (PPS and EPS) and touch events (PPS only), and (B) how stimuli can convey information relevant for the reference spaces (in particular object direction, relative distance, and point of contact on the body) by partly translating visual information into tactile cues. We encode direction and relative distances to objects (“proximity cues”) as well as collisions by stimulating the feet from different directions. As we code relative distance, cues can be used to convey information about the EPS as well as the PPS. Note that collision cues (on-body) belong only to the PPS, while proximity cues (body-external) do not include collision cues, yet can inform motor processes to actually avoid collisions. We elaborate on this in Section 3. Overall, we study different technical approaches and their effect on perception in a series of studies, providing the following contributions:
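As an illustration of how direction and relative distance could be combined into a single proximity cue, the Python sketch below maps an object's azimuth to the nearest of several vibrotactors and its distance to a normalized drive intensity. It is a hypothetical mapping and is not intended to reproduce the stimulus design of the studies reported here: the number of tactors (`n_tactors`), their even spacing around the foot, the linear intensity ramp, the cut-off range (`max_range_m`), and the names `TactorCue` and `encode_proximity` are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TactorCue:
    tactor: int       # index of the actuator to drive (assumed: 0 = straight ahead, counting clockwise)
    intensity: float  # normalized drive level in [0, 1]


def encode_proximity(azimuth_deg: float, distance_m: float,
                     n_tactors: int = 8, max_range_m: float = 2.0) -> Optional[TactorCue]:
    """Hypothetical proximity encoding: direction -> tactor, distance -> intensity.

    Assumes n_tactors evenly spaced around the foot and a linear intensity ramp
    that reaches 1.0 at contact (distance 0) and 0.0 at max_range_m.
    """
    if distance_m >= max_range_m:
        return None  # object outside the cued range: no vibration
    sector = 360.0 / n_tactors
    # Nearest tactor to the object's direction (wraps around at 360 degrees).
    tactor = int(round((azimuth_deg % 360.0) / sector)) % n_tactors
    # Closer objects produce stronger vibration.
    intensity = 1.0 - max(distance_m, 0.0) / max_range_m
    return TactorCue(tactor, intensity)


if __name__ == "__main__":
    print(encode_proximity(90.0, 0.5))  # TactorCue(tactor=2, intensity=0.75)
```

With eight tactors, each covers a 45° sector. A collision cue would be a separate channel (e.g., a bass-shaker pulse), which this sketch does not model.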

• Perceptual models: We provide an overview of the PPS and EPS perceptual systems and illustrate how they can affect typical 3DUI tasks such as selection, manipulation and navigation. The resulting deeper understanding can help to improve interaction techniques and devices, which can lead to improved user performance.

• Collision: Through an analysis of our pilot study with respect to the underlying perceptual mechanisms (PPS/EPS), we show that strong low-frequency vibrations can provide haptic feedback sensed in individual body parts, yet that these sensations are constrained by the point of entry of the stimulation. While the direction and strength of impact could be perceived reasonably well, more subtle vibrations may be more useful to convey fine-grained information from the PPS and especially the EPS, which we focus on in the main study.

• Proximity and collision: The results of our main study (studies 1–4) indicate that proximity and body collision cues can be well perceived by combining low-intensity vibration stimuli of higher and lower frequency. The direction of incoming objects could be sensed easily (even without training) and with reasonably low error. Similarly, users noted the ease of detecting the direction (point of impact) of body collisions. Vibration-mode preferences differed for the perception of incoming objects vs. body collisions, making it easier to separate the cues, and the differences in vibration range (intensity) reinforced this separation. Finally, different levels of collision force could be easily differentiated.

2 Feedback methods

In this section, we outline related work in the area of feedback methods relevant to our studies. Related work and background on perceptual models, including embodiment, is discussed in Section 3.

2.1 Collision feedback

While vibration-based collision feedback is common in haptic interfaces for (hand-based) 3D selection and manipulation (LaViola et al., 2017), haptic support for navigation tasks in particular is far less common. A few exceptions include the use of grids of bass-shakers for collision feedback, such as described in Blom and Beckhaus (2010). Feedback for collision avoidance (in contrast to direct collision feedback) has also been studied to some extent (but not in the frame of the PPS/EPS), in part by providing visual (Scavarelli and Teather, 2017) and audio cues (Aguerrevere and Choudhury, 2004; Afonso and Beckhaus, 2011). These methods mimic the collision feedback of real-world warning systems such as car parking aids (Meng et al., 2015).

2.2 Proximity feedback

Proxemics, the field describing how different “zones” around our body affect our interaction with the world around us, has been an active field of research (Hall, 1966; Holmes and Spence, 2004), primarily in areas such as ubiquitous computing (Greenberg et al., 2010) and collaborative work (Jakobsen and Hornbæk, 2012). A good overview of the overlap between the PPS and proxemics can be found in Bufacchi and Iannetti (2018), while Pederson et al. (2012) discuss the relationship between proxemics and egocentric reference frames. With regard to proximity feedback, various modalities have been used, including force (Holbert, 2007), audio (Beckhaus et al., 2000), and vibration (Afonso and Beckhaus, 2011; Mateevitsi et al., 2013).

Such feedback is quite similar to the distance-to-obstacle feedback approaches presented by Hartcher-O’Brien et al. (2015) and Uchiyama et al. (2008). In part, proximity feedback can also be used for collision avoidance. However, these systems only provide general directional information for objects in the vicinity of the human body, and the granularity of the cues they provide is quite low. Tactile cues have been used to direct navigation (Lindeman et al., 2004; Uchiyama et al., 2008; Jones et al., 2020), 3D selection (Marquardt et al., 2018a; Ariza et al., 2018), and visual search tasks (Lindeman et al., 2003; Lehtinen et al., 2012; Marquardt et al., 2019).

2.3 Haptic feedback to locations other than the hands

While exoskeletons can be used to stimulate body parts other than the hands, most research focuses on alternative and less encumbering technical solutions, primarily vibration-based ones. Feedback to the upper body is quite common, using solutions such as vibration vests and belts (Lindeman et al., 2004), seats (Israr et al., 2012), or vibration extensions for head-worn devices (Berning et al., 2015; Kaul and Rohs, 2016; de Jesus Oliveira et al., 2017), e.g., to point users towards targets. Yet, feedback towards the lower body is still quite uncommon, although suits and wearables using vibrotactors have become available (e.g., the bHaptics system). Researchers have provided cues to the arms, legs, and torso (Piateski and Jones, 2005) to train full-body poses, e.g., in sports (Spelmezan et al., 2009), or to guide arm motions (Schönauer et al., 2012; Uematsu et al., 2016). Recently, systems have also appeared that make use of electromuscular stimulation (EMS) over the full body (e.g., the TactaSuit system), though EMS can have side effects (Kruijff et al., 2006; Nosaka et al., 2011). Systems are also available that stimulate the feet and legs, mostly in association with human motion and guidance. Examples include moving foot platforms for walking and climbing stairs (Iwata et al., 2001) or the simulation of pressure distributions such as foot roll-off during human gait (Kruijff et al., 2016). Owing to its high sensitivity (Gu and Griffin, 2011), stimulation of the foot sole has been shown to be sufficient to elicit a walking experience (Turchet et al., 2013). Furthermore, non-directional tactile cues have been shown to provide some self-motion cues (Terziman et al., 2012; Feng et al., 2016), while directional cues can be used to aid directional guidance, e.g., by using higher-density grids of vibrotactors under the foot sole (Velázquez et al., 2012).
In some studies, vibration and audio cues have been combined, showing cross-modal benefits in ground-surface perception (Marchal et al., 2013) and self-motion perception (vection) (Riecke et al., 2009).

3 Perceptual models and their relevance to a 3DUI

In our studies, we address stimuli provided to the feet while users are seated or standing (but not walking) and interacting with a 3DUI. Our feedback ranges from subtle vibrations (main studies) to higher-intensity vibrations (pilot study), conveyed via vibrotactors or bass-shakers under the feet and an external subwoofer. In this section, we address lower-body feedback and the lower-level perceptual mechanisms associated with the PPS and EPS. We look at how typical 3DUI tasks may benefit from cues that can aid the development and continuous updating of the information captured in the PPS and EPS.

3.1 Lower-body perception

Similar to the upper body, the lower body affords sensing of stimuli through different types of receptors. Receptors in the muscles, tendons, and joints allow us to perceive muscular forces and proprioception, the sense of the location and orientation of our body parts. Furthermore, the skin contains different types of mechanoreceptors and tactile corpuscles that react to different vibration frequencies, as well as free nerve endings that react to temperature and skin deformations, or elicit pain as a defensive mechanism (Burdea, 1996). Compared to the hands, a smaller brain area is dedicated to sensory processing for the feet and legs. This likely affects the perception of stimuli and the associated cognitive processes (Hoehn, 2016). Approaches using higher-intensity vibration are affected by vibration transmissibility, as vibrations provided to certain body parts may be perceived at other body parts depending on their resonance frequency. For example, transmission can occur through bone conduction (Paddan and Griffin, 1988; Toward and Griffin, 2011b). Perception may be affected by body mass (Toward and Griffin, 2011a), as well as by dampening and cushioning (Lewis and Griffin, 2002). Upper and lower body parts can resonate at specific vibration frequencies caused by body-operated mechanical equipment, e.g., a jackhammer (Rasmussen, 1983). The feet are reasonably sensitive to vibrations (Strzalkowski, 2015), even when denser grids of tactors are used (Velázquez et al., 2012). Despite skin differences across the foot sole (e.g., skin hardness, epidermal thickness), low-threshold cutaneous mechanoreceptors have shown good results for stimulus discrimination. Current research indicates an even distribution of mechanoreceptors across the foot sole despite regional differences in tactile sensitivity (Hennig and Sterzing, 2009). Unlike in the hands, receptors in the feet are evenly distributed, without an accumulation of receptors in the toes.
There are also larger receptive fields, located predominantly on the plantar surface of the hind-foot and mid-foot region. Findings suggest that skin receptors in the foot sole behave differently from those found in the hand, which may reflect the role of these receptors in standing balance and movement control (Kennedy and Inglis, 2002).

3.2 Peripersonal and extrapersonal space

Objects surrounding our body are captured by the peripersonal and extrapersonal spaces. Peripersonal space is defined as the space immediately surrounding our bodies (Rizzolatti et al., 1981). Objects in the PPS can be grasped and manipulated. Objects in the EPS cannot be directly reached and thus require navigation (body movement) towards them, or their movement towards us (Holmes and Spence, 2004). The boundary between PPS and EPS has been approximated to be around 30–50 cm for the hands and 60 cm for the head (Serino et al., 2015). With respect to the trunk (upper back/chest), the PPS is thought to extend further into space, ranging from 65 to 100 cm. For the lower body, first results indicate that the PPS extends about 73 cm from the feet (Stone et al., 2018). It has been shown that the peripersonal space representation for the hands, but not for the feet, is dynamically updated based on both limb posture and limb congruency (Elk et al., 2013). Relevant for 3DUIs, the PPS can extend even further (up to 166 cm from the body) when participants walk on a treadmill (Noel et al., 2015). Generally, most research has focused on the PPS rather than the EPS, and mostly on manual actions related to the space around the hands.
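The approximate boundaries above can be collected into a simple lookup, for instance to decide whether a virtual object lies in a body part's PPS or EPS before triggering feedback. The Python sketch below is illustrative only: the dictionary `PPS_BOUNDARY_M` and the function `classify_space` are hypothetical names, the values are the static approximations cited above (single reported values are treated as zero-width ranges), and it ignores the dynamic changes described in the text, such as the expansion of the PPS while walking.

```python
# Approximate PPS/EPS boundary ranges (metres) per body part, as cited in the text.
# Single reported values are stored as zero-width (lower == upper) ranges.
PPS_BOUNDARY_M = {
    "hand": (0.30, 0.50),   # Serino et al., 2015
    "head": (0.60, 0.60),   # Serino et al., 2015
    "trunk": (0.65, 1.00),  # upper back/chest
    "feet": (0.73, 0.73),   # Stone et al., 2018 (first results)
}


def classify_space(body_part: str, distance_m: float) -> str:
    """Return 'PPS', 'EPS', or 'boundary' for an object at distance_m from body_part.

    'boundary' means the distance falls inside the range over which the
    cited boundary estimates vary.
    """
    lower, upper = PPS_BOUNDARY_M[body_part]
    if distance_m < lower:
        return "PPS"
    if distance_m > upper:
        return "EPS"
    return "boundary"


if __name__ == "__main__":
    for part in PPS_BOUNDARY_M:
        print(part, classify_space(part, 0.55))
```

A static table like this is the simplest design; a system reflecting the findings above would need to enlarge the ranges dynamically, e.g., during locomotion.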

The PPS can be subdivided into two areas. Personal space consists of the body itself, and is mainly coded through proprioceptive, interoceptive (e.g., state of intoxication), and tactile cues. Reaching space extends as far as a person’s reach, and is mainly based on the integration of tactile, proprioceptive, and visual information (Occelli et al., 2011). The PPS and EPS are essential for the (typically visual) perception of objects in the space surrounding us, and for the interaction with them. We visually perceive objects in space, determining their location, size, interrelations, and potential movement. These skills help us to mentally represent (and imagine) objects. Conversely, motor action planning and execution require an integrated neural representation of the body and the space surrounding us (Gabbard, 2015). This is where the PPS and EPS come into play, as visual (outside-body) and tactile (on-body, only in the PPS) information are integrated there (Macaluso and Maravita, 2010). Proprioceptive cues also play a role, as these are tied to our motor control actions (Maravita et al., 2003). Research has shown that tactile detection (speed and sensitivity) is modulated by the proximity of an approaching visual or auditory stimulus, even when the stimuli are irrelevant or incongruent and participants are asked to ignore them (Stone et al., 2017). Hence, perception in our PPS occurs through the integration of multisensory stimuli. Auditory cues can aid the integration process, but their actual role is highly debated (Canzoneri et al., 2012; Tonelli et al., 2019). Spatial sounds may inform us about object locations and movements, yet their role may depend on stimulus location, with different roles and different integration with other types of cues depending on whether the sounds are in front of or behind the user (Occelli et al., 2011).

This paper focuses on cues captured in the PPS and EPS that trigger full-body motor actions such as those regularly found in navigation tasks. For example, we tend to avoid objects moving towards us, or navigate around obstacles. These cues are typically captured by the peri-trunk and peri-leg spaces. In contrast, the peri-hand space concerns cues that are highly relevant for hand-object interactions such as 3D selection and manipulation, e.g., grasping an object (Stone et al., 2017). While the peri-trunk and peri-leg spaces may also affect hand-driven tasks, they seem to be more relevant for 3D navigation. Here, our whole body is related to our surroundings to plan our movement through the environment, or perhaps to change our overall posture (e.g., to duck underneath an object). Unfortunately, the trunk and especially the lower body have not been studied extensively in PPS/EPS research. Current research indicates that body parts associated with whole-body actions may have a receptive field that extends further than the hand-driven PPS, which covers about arm’s reach. This space is likely driven by actions rather than by defensive responses (e.g., collision avoidance). Some research has focused on the PPS around the feet, looking at the integration of visual and tactile cues, and has shown a remapping of the tactile location of all body parts into a common, non-anatomically anchored reference frame rather than a specific remapping for eye–hand coordination only (Schicke and Röder, 2006). However, how far the boundaries extend from the user’s body is currently unknown. While first studies indicate that the size of the lower-body PPS can be associated with stepping distances, it may also align with the trunk PPS, as both are involved in full-body actions like walking (Stettler and Thomas, 2016; Stone et al., 2017).

The objects around us can be related to egocentric and exocentric reference frames, in which objects are related either to the human body (e.g., eye-, head-, or hand-centered) or to the world (world-centered). These reference frames play a key role in all our motor-driven 3DUI tasks (selection, manipulation, and navigation), as they are highly relevant for interpreting and encoding cues in relation to our body, and for planning and performing the motor actions associated with these tasks. The PPS and its underlying neural processes appear to support the transformation between spatial coordinate systems, aiding in converting sensory input from its native reference frames (eye-centered, head-centered, and chest-centered) to another egocentric reference frame that affords motor output (Noel et al., 2015). Research suggests that sensory and motor responses are expressed in a common reference frame for locating objects in the space close to the body and for guiding movements toward them (Brozzoli et al., 2014). Hence, the PPS can be seen as a main driver for planning and executing motor actions. Neurons in brain areas that respond to tactile stimulation of a specific part of the body typically also respond to visual or auditory stimuli approaching the same body part. It has been shown that the same brain areas are also directly involved in motor responses (Galli et al., 2015). The PPS can thus be thought of as a probabilistic action space: it predicts the probability of contact with an object and prepares the action that follows (Bufacchi and Iannetti, 2018), while motor actions can conversely also define the PPS representation. Actions may determine what is coded as far and near space (Serino et al., 2009).

3.3 Cue types and their potential role in a 3DUI

In our studies, we mainly focus on directional cues towards the human body, in particular those that can support proximity and touch/collision events. These events are interrelated with the PPS/EPS and can trigger action mechanisms associated with 3DUI tasks by aiding the construction of spatial awareness of objects surrounding the body. In this section we look more closely at how cues may support tasks performed in a 3DUI. We will reflect upon these cues in our studies.

3.3.1 Proximity cues/extrapersonal space

Extrapersonal space cues can be used to improve our spatial awareness of objects surrounding us, aiding in the creation of mental representations. These representations may encode object locations and object interrelationships, e.g., through a cognitive map (Tolman, 1946), and can support our situation awareness. Situation awareness is regarded as the perception of environmental elements and events with respect to time or space, the comprehension of their meaning, and the projection of their future status (Endsley, 1995). It deals with perceiving the potentially dynamic configuration of objects surrounding the user. To assess proxemic information, the user will need to understand at least the direction of and the (approximate) distance to an object. In the case of collision avoidance, the velocity at which the object approaches the user may also be relevant, although we do not assess it in our studies. Extrapersonal space mainly drives locomotor behavior, but can also drive manual actions related to the space around the hand (Higuchi et al., 2006). Most research has focused on the role of the space around the hands rather than on full-body space. Cues in extrapersonal space will typically drive our navigation behavior or body posture in a 3DUI, as we may want to avoid collisions with objects or move towards them. These movements may be driven by general navigation behavior (e.g., to reach a target), but can also be closely coupled to 3D selection and manipulation tasks. For example, users may need to move towards an object to grasp it.

3.3.2 Proximity cues/reaching space

Reaching space cues are quite similar to the cues in extrapersonal space. However, there is a stronger binding to tactile cues in reaching space, as the body is triggered to react more directly to cues perceived in the direct vicinity of the body. Cues will similarly contribute to our awareness of the potentially dynamic configuration of objects surrounding the body. As such, object direction, distances, and velocities (as per changes in position) may be encoded. Some typical examples of using proxemic cues in a 3DUI are as follows. During selection, our hand movements are defined by a ballistic phase (approaching the object) followed by a corrective phase that is initiated to finely control movements to grasp the object. The grasp action is finalized once the hand touches the object at specific parts of the hand (personal space). In 3DUI research, some work has focused on adjusting the ballistic phase of hand movement to improve object selection, but also manipulation, in visually constrained scenarios by using proximity cues (Marquardt et al., 2018a; Ariza et al., 2018). Here, the ballistic movement phase is slowed down once the hand comes closer to an object, to avoid the hand passing through the virtual object due to the lack of physical constraints and force feedback. With respect to 3D navigation, the EPS and PPS afford the construction of spatial maps to control movement (Graziano and Gross, 1998), and are essential for our sense of body ownership, which may also affect full-body responses such as knowing when to duck when passing under objects (Grivaz et al., 2017). Body ownership has been shown to facilitate body-scene awareness (Slater et al., 2010; Kilteni et al., 2012). Positive effects have largely been shown for interaction of the body as a whole (Maselli and Slater, 2013) or for body parts that were visible, like the hands (Ehrsson et al., 2005).
More research is required, as the legs and feet have largely been neglected (Jung and Hughes, 2016), especially when they cannot be perceived in our peripheral vision due to the reduced FOV of most displays. The PPS can affect our overall body posture (Maravita et al., 2003), e.g., when we change posture to avoid hitting an object. During real-world collision avoidance while navigating, we mainly depend on self-motion and spatial cues to adjust our movement based on our spatial awareness and spatial updating (Fajen et al., 2013), which allow us to understand the interrelationship between our moving body and the environment. While we may be helped by artificial aids during certain types of navigation (e.g., a car parking aid), we usually do not receive collision-avoidance feedback during navigation in a 3DUI, even though such cues have been shown to be relevant. Namely, hand-driven (Marquardt et al., 2018a) or feet-driven (Jones et al., 2020) proximity cues can compensate for the limited availability, in a 3DUI, of the sensory channels we use in real life to perceive proximity (e.g., a wide FOV).

3.3.3 Touch or body collisions/personal space

We may obtain a variety of cues based on the kinematics of collisions with objects that come into contact with our body (Toezeren, 2000). The point of impact where the body collides with an object provides the main point of reference to which the other collision characteristics are matched, including the direction of impact. This process can be informed by peripheral visual cues, proprioception, the user’s spatial model of the environment, and the direction of our body movement (Jansen et al., 2011). Directional cues can be coupled to information perceived in reaching space. As such, proximity and collision cues can be coupled to inform, for example, motor planning actions relevant for interaction. Finally, the force of impact may provide additional information about the velocity with which we collided with an object (or the velocity of the object itself moving towards us). Collision avoidance can be performed independently of body collision feedback, but may also become intertwined with body collision once coordination fails. For example, when walking, we correct step size, movement velocity, and postural stability while avoiding collisions (Regan and Gray, 2000; Jansen et al., 2011). Furthermore, visuo-tactile interaction during obstacle avoidance has been shown to depend on the location of the stimulation trigger: visual interference was enhanced for tactile stimulation that occurred when the hand was near a to-be-avoided object (Menger et al., 2021). Generally, temporal and spatial information is fundamental for collision avoidance. However, it is not yet clear whether the peripersonal and extrapersonal distance between our body and moving objects affects the way in which users can predict possible collisions. Initial results show that, in contrast to extrapersonal space, peripersonal space is particularly affected by temporal information (Iachini et al., 2017). 
Of direct relevance to 3D interaction, action ability modulates time-to-collision judgments (Vagnoni et al., 2017), while threats modulate visuo-tactile interaction in peripersonal space (de Haan et al., 2016).

Research on PPS that primarily focused on hand–object interaction showed how visual stimuli are coded in a hand-centered representation of the space surrounding the hand, i.e., “peri-hand space” (Maravita et al., 2003; Spence et al., 2004; Makin et al., 2007). Incongruent stimuli have been shown to modulate the sensitivity of our tactile sensory systems, yet congruent cues will often be more relevant for hand interaction. In either case, these stimuli are likely affected by crossmodal processing (Maravita et al., 2003). These processes are reasonably well understood, and this understanding can benefit 3DUI tasks such as hand-based selection and manipulation. Similar to proxemic cues in reaching space, collisions may affect our posture during navigation. We may also change our posture to further avoid collisions with the body. Though mostly ignored in research, collisions can also be used on purpose. For example, during maneuvering we may use subtle collisions to fine-tune our movement (e.g., slowly backing up to a wall until we lightly touch it) to take a specific viewpoint.

4 Research questions

Our research investigates how foot feedback can support the perception of proximity or collisions, with a specific focus on lower-level perceptual mechanisms. The results of these foundational studies will provide the basis for further studies that look at behavioral responses to these cues, e.g., for navigation.

RQ1. Collision: Can we induce localized haptic-like sensations in different areas of the whole body depending on their resonance frequencies, by combining a subwoofer and localized low-frequency vibrations into a foot platform/chair participants are standing/sitting on? And how well can participants perceive the point of impact and force of such stimulation?

RQ1 is based on a reflection on the results of our previous study, reported in Kruijff et al. (2015). That study combined low-frequency vibration with a subwoofer as an alternative to attaching actuators to separate body locations, to potentially convey collisions to different body parts, similar to Rasmussen (1983). We assessed the perceived point of contact and collision impact force in relation to on-body (personal space) percepts within the PPS. In Section 5, we relate the results to the theoretical framework provided in Section 3 to guide the design of our system and the main studies of this paper. Henceforth, we refer to this Kruijff et al. (2015) study as our pilot study.

RQ2. Proximity and collision: Can we elicit sensations of proximity (e.g., of approaching objects) by combining a bass shaker and localized vibrotactors into a foot platform participants are standing on? If so, (how well) can these convey the direction and distance of objects, forces, or potential collisions and their point of impact?

RQ2 was addressed in our main studies one to four, which looked into the combination of low-frequency vibration (to simulate collision force) and tactile cues (for object/collision direction and distance) delivered to the feet. As our previous study [see RQ1 and (Kruijff et al., 2015)] showed that lower-frequency vibrations could not provide well-localized collision percepts, the main studies investigated how higher-frequency, localized foot vibrations might convey the direction and strength of proximity and collision feedback. As such, RQ2 covers proxemic cues (direction and relative distance) associated with the extrapersonal and reaching space, as well as on-body collision events associated with personal space.

5 Pilot study

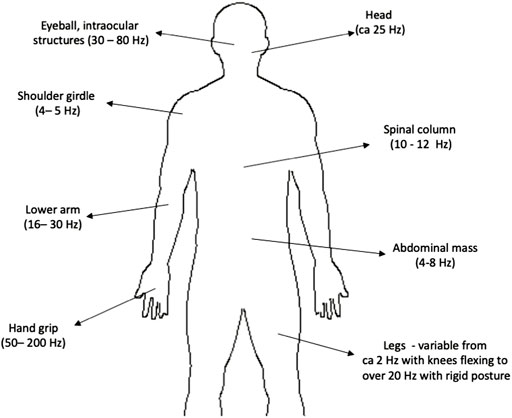

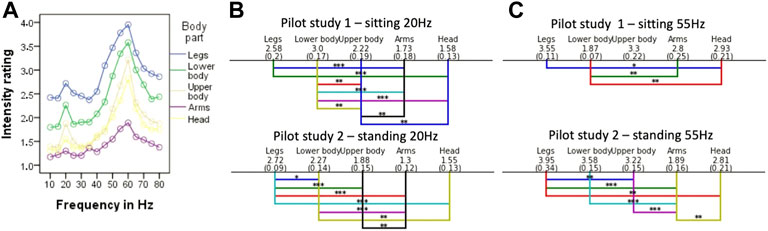

The pilot study consisted of two parts, as explained below and in Kruijff et al. (2015), with additional data plots provided in Figure 2. Here, we extend the previously reported results with a discussion in relation to the framework presented in Section 3. The pilot study was inspired by Rasmussen (1983), who reported that different mechanical vibration frequencies caused by mechanical equipment (e.g., jackhammers) were noticed in different parts of the body, as illustrated in Figure 1. Rasmussen’s study is grounded in the vibration literature and has instigated follow-up studies such as Randalls et al. (1997), which focused on the effect of low-frequency vibrations between 9 and 16 Hz on organs and the skeletal structure, addressing which body parts are affected at which frequency with a focus on health issues. The figure shows which body parts resonate at which frequency, highlighting transmissibility issues. We wanted to explore whether specific frequencies could produce isolated haptic-like sensations in specific regions of the body, while using different points of entry for stimulation (chair or foot platform). In part 1, a bass-shaker was mounted either underneath a chair (seated) or underneath a foot platform (standing), while a subwoofer was also used for low-frequency audio feedback. This part addressed the effect of 17 different frequencies between 10 and 80 Hz (in 5 Hz steps), subwoofer (audio on/off), and point of entry (related to seated or standing pose) on haptic sensations in the body. In part 2, users performed the study while standing on two foot platforms, each with a bass-shaker. This part addressed the effect of 21 frequencies (2, 5, and 8 Hz; 20–75 Hz in 5 Hz steps; 100–150 Hz in 10 Hz steps) and of vibration on the left, right, or both feet on the sensed direction of the stimulation. In both parts, participants rated the noticeability for different body parts: the feet and lower parts of the legs, the waist area, the chest, the arms, and the head. 
In part 2, we additionally asked about left- and right-side body parts (except the waist and head).
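As a quick arithmetic check of the part 2 stimulus set, the sketch below enumerates the three frequency groups listed above and confirms that they add up to the stated 21 frequencies. The variable names are illustrative only.

```python
# Frequency groups of pilot study part 2, as listed in the text (Hz).
very_low = [2, 5, 8]                     # 3 values
mid_band = list(range(20, 76, 5))        # 20-75 Hz in 5 Hz steps: 12 values
high_band = list(range(100, 151, 10))    # 100-150 Hz in 10 Hz steps: 6 values

part2_frequencies = very_low + mid_band + high_band
print(len(part2_frequencies))  # 21
```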

FIGURE 1. Resonance frequencies of body parts, after (Rasmussen, 1983).

In part 1, haptic sensations could be easily judged but were primarily related to the point of entry, with the strongest sensation in the feet and lower body, while other body parts could not be sensed in an isolated manner. Namely, point of entry (feet versus lower body) had a significant main effect on the perceived vibration intensity, while frequency showed a significant main effect on both feet. Thus, the ratings show that vibration strength is related to the distance from the point of entry: the closest body parts are rated highest. As Figure 2 shows, clear peaks can be noticed around 20 Hz and 60 Hz (feet stimulation), or at 55 Hz for the separated platforms in pilot study part 2. Analysis of part 2 revealed that sensation strength was affected by side (point of entry), body part, and frequency. When only one side is stimulated, the legs and lower body can be differentiated from the rest of the body, because stimulation only reaches the point of entry (the leg on that side) and the closest body part (the lower body on the stimulated side). With both sides stimulated, other body parts received slightly higher ratings, so lower body parts could be less clearly separated. In the following, we relate the results of the pilot study to the theoretical framework to derive design input for our main studies. Note that some of the issues relevant for both RQs are discussed under RQ2 to avoid repetition.

FIGURE 2. Pilot Study 1 (A) Perceived vibration intensity based on stimulus frequency, for foot condition. Right: Significance of identified peaks: Sitting (B) and standing (C) for representative frequencies (* <0.05; ** <0.01; *** <0.001). The significance indicates clear differences in strengths of perceiving vibrations in specific body parts. E.g., in the standing 55 Hz condition of pilot study 2, the perceived intensity was very different between the arms and the head. The differences are mainly associated with the point of entry.

RQ1. Collision: Can we induce localized haptic-like sensations in different areas of the whole body depending on their resonance frequencies, by combining a subwoofer and localized low-frequency vibrations into a foot platform/chair participants are standing/sitting on? And how well can participants perceive the point of impact and force of such stimulation?

5.1 Lower-body perception

Results show that it is difficult to target isolated sensations in specific parts of the body other than those closest to the point of entry. Perceived vibration intensity was rated significantly higher for the legs and lower body than for the other body parts when these specific body areas were stimulated. Transmissibility of the human body allows vibrations provided to a certain body location to be perceived at other parts of the body depending on their resonance frequency, for example through bone conduction. Point of entry has been noted to significantly affect vibration sensations (Paddan and Griffin, 1988; Toward and Griffin, 2011b). The point of entry seems to overshadow the isolated location perception reported by Rasmussen (1983): in our case, we could not replicate isolated sensations. Interestingly, during lower-body stimulation most vibration was transmitted towards the lower part of the body instead of upwards. The waist is also close to the lower body, yet never received significantly higher ratings. While there seems to have been some damping by the different kinds of tissue at the two points of entry (Lewis and Griffin, 2002), damping alone cannot fully explain this result. Slight differences in the effect of vibration frequency occurred between the left and right leg, which may have been caused by slight stance or balance differences between users. This also points to a potential shortcoming of this approach: it may not be robust against the pose changes that normally occur during interaction. Even with the strong nature and transmissibility of vibration throughout the body, participants could still clearly separate left- and right-sided vibrations. This could be useful to convey the general direction of impact; however, finer directional perception would likely require an alternative feedback method. Adding audio over the subwoofer did not enhance haptic sensations beyond those induced by vibrations alone. 
This is somewhat surprising, as previous work indicates that perceptions of the body, of movement, and of surface contact features are influenced by the addition of auditory cues (Stanton and Spence, 2020). The result also contrasts with our earlier work showing a potential effect (Kruijff and Pander, 2005), though that work used higher amplitudes and its results were based only on observations.

5.2 Peripersonal model

The peripersonal model, specifically reaching space, usually contains information regarding point, direction and force of impact. Within the pilot study we primarily addressed point of impact, while also showing that frequencies affected the intensity that theoretically can be interpreted as force of impact. Direction of impact was only roughly considered.

5.3 Potential effects on interaction

As specific body locations could only partly be targeted, tasks that require feedback about specific body-object couplings (impact), such as typical selection or manipulation tasks, cannot readily be supported by the implemented frequency-based feedback method. However, tasks that require general (e.g., left or right) or non-directional (e.g., hit or no hit) feedback can be supported. We assume that the required localizability is highly task-specific: certain tasks will require specific touch points, whereas for other tasks a rough direction may suffice.

5.4 Impact on main study

Our initial technical approach (Kruijff et al., 2015) was not suitable for providing collision feedback to specific body parts. Based on the results, we decided to take an alternative feedback approach, moving away from the core feedback principle of Rasmussen’s study towards the use of fine-grained vibrotactile cues, to better address the granularity of point, direction, and force of impact perception (PPS). Inspired by our previous studies (Marquardt et al., 2018a; Jones et al., 2020), we also considered proxemic cues to support the EPS, thereby covering both direct collisions and other percepts in extrapersonal and reaching space.

6 Main studies

To address RQ2, we conducted four main studies. We explored the potential of foot-induced lower- and higher-frequency vibration cues for proximity and collision feedback to indicate object distance and direction, as well as point, force, and direction of impact. The studies focused on error and reaction time of directional proximity cue interpretation (Study 1), collision mode preference (Study 2), proximity/collision cue combination preference (Study 3), and force of impact differentiation (Study 4). Twelve participants (four female) aged 22 to 65 years (M = 36.2, SD = 13.4) volunteered for the studies. Student participants were compensated with credit points.

6.1 Apparatus

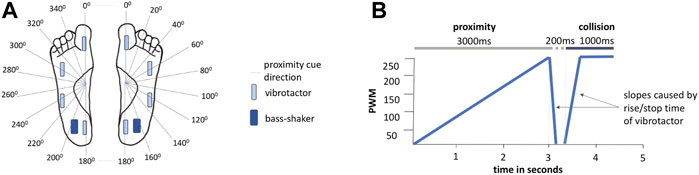

We adapted the foot haptics system reported in Kruijff et al. (2016) by deploying four vibrotactors per foot, approximately forming a semicircle (see Figure 3A). One vibrotactor was placed underneath the big toe, two underneath the mid-foot (Precision Microdrives Pico drive 5 mm encapsulated vibration motors 304–116, maximum 15,000 RPM), and one underneath the heel (Precision Microdrives 9 mm Pico drive 307–103, 13,800 RPM). The vibrotactors were placed so that users with varying foot sizes could still perceive stimuli. They were glued to the underside of a rubber surface stretched over a solid foam sole, such that they did not bear the users’ weight and vibrations on the foot sole could be easily detected. The vibrotactors were controlled using pulse width modulation (PWM). We refer to 0 PWM as no actuation and 256 PWM as full actuation of a vibrotactor, based on how they were controlled through the connected Arduino microcontroller. Based on previous work, we use both continuous and pulsed modes. These modes have been used for tactile motion instructions (Spelmezan et al., 2009) and pose guidance (Marquardt et al., 2018b), and represent commonly used encoding schemes in vibration feedback. For example, object distance can be encoded as increasing frequency or strength (continuous) or as increasing frequency of pulses. As it was unclear which method would perform best for the purposes of our studies, we used both. For an overview of different tactile modes, please refer to Stanley and Kuchenbecker (2012). Note that other tactile feedback methods are theoretically possible (e.g., squeezing), but they were not pursued here, as the selected modes have been shown to (positively) affect perception and performance in other applications (Marquardt et al., 2019). Namely, we showed that depth feedback can be well perceived using pulsed vibration feedback.

FIGURE 3. (A) Vibrotactor and bass-shaker locations, with degree indications of proximity cues. Note that, e.g., for 40° users received an interpolated signal between two adjacent vibrotactors, whereas 60° used one vibrotactor, and 0° used two vibrotactors. (B) Continuous mode modulation of vibrotactors during combined proximity and collision cues in Study 3.

The interface was used while participants wore only socks. A bass-shaker (Visaton EX-60) was mounted on the foot-support plate underneath the heel, with a fall-off of vibration strength away from the heel. We used different vibrotactor activation patterns to provide users with directional feedback about 18 different horizontal radial directions (in 20° steps covering the whole 360°; Figure 3A). Participants stood upright on the platform, which had footprint marks illustrating the position of the feet. Users were told to keep their feet in place and distribute their weight equally on both feet. The study was implemented in Unity3D.
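Figure 3A and the Study 1 analysis treat 0°, ±60°, ±120°, and 180° as directions that need no interpolation, i.e., one anchor direction every 60°, while intermediate directions such as 40° are rendered between two adjacent vibrotactors. The paper does not report the interpolation weights, so the sketch below assumes a simple linear crossfade between the two nearest anchors. The function name `anchor_weights` is hypothetical, and the mapping from anchors to physical vibrotactors is deliberately left out.

```python
from __future__ import annotations

# Anchor directions that need no interpolation, per the text: 0, +/-60,
# +/-120 and 180 degrees, i.e. one anchor every 60 degrees (0..300 here).
ANCHOR_STEP = 60


def anchor_weights(direction_deg: float) -> dict[int, float]:
    """Split a cue direction into weights for its two nearest anchors.

    ASSUMPTION: linear crossfade; the study's actual weighting is not reported.
    Returns {anchor_direction_deg: weight} with weights summing to 1.
    """
    d = direction_deg % 360                        # normalise, e.g. -20 -> 340
    lower = int(d // ANCHOR_STEP) * ANCHOR_STEP    # anchor at or below d
    upper = (lower + ANCHOR_STEP) % 360            # next anchor, wrapping 300 -> 0
    frac = (d - lower) / ANCHOR_STEP               # 0 at lower anchor, 1 at upper
    if frac == 0:
        return {lower: 1.0}                        # exactly on an anchor
    return {lower: 1.0 - frac, upper: frac}


# 40 deg: one third of the 0 deg anchor plus two thirds of the 60 deg anchor.
print(anchor_weights(40))
```

A linear crossfade is only one plausible choice; perceptually tuned weightings (e.g., equal-power panning) would change the numbers but not the structure of the lookup.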

6.2 Procedure and experimental design

Participants were informed about the study goals and procedures, signed the informed consent form, and filled out an online demographics questionnaire. After each study, users took a short break in which they answered several questions including the NASA TLX (Hart and Staveland, 1988). Users gave verbal ratings (e.g., direction or strength) after each trial on an 11-point scale, noted by the experimenter. After all studies, participants answered usability and comfort questions, followed by an exit interview. The whole experiment took about 60 min per participant.

Study 1: Proximity direction (EPS). This study focused on the perception of directional proximity cues. It was performed as an 18 × 2 × 3 within-subjects design with the independent variables direction (18 directions in 20° steps) and vibration mode (continuous/pulsed), with three repetitions per condition. Eighteen different vibration patterns functioned as cues indicating horizontal radial directions of future collisions (hence, proximity feedback), starting from 0° (the user’s viewing direction) and increasing in 20° steps for 360° around the participant (see Figure 3). Each vibration pattern was presented in either continuous mode (vibration of increasing strength) or pulsed mode (increasing strength, but pulsed), and indicated an approaching object through increasing strength. Each factorial combination was presented three times, resulting in 108 trials that were randomized blockwise, where trials were blocked by repetition: all combinations of directions and modes ran in repetition 1, followed by all combinations in repetition 2 and then 3. To increase participant motivation, we gamified the study: participants were told to shoot an invisible enemy before it would hit them, by pointing and pulling the trigger of the hand-held Oculus controller used to measure directional judgment. In each trial, participants were presented with a 3 s vibration cue that indicated the upcoming collision (proximity cue) and thus the relative direction and distance to the enemy. The vibration cue started at 0 PWM and linearly increased to 256 PWM. Participants were told to react as fast and accurately as possible by aiming and pulling the trigger, and the pointing direction was recorded. To avoid guessing, a variable time delay of 1–3 s was used before the next trial started, with a cue from a randomly selected direction. The study was performed eyes-open, with the user stationary and without any visual display. Study 1 took around 15 min to finish.
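The blockwise randomization described above can be sketched as follows, assuming a plain random shuffle within each repetition block. The text does not specify the shuffling procedure beyond the blocking, and `blockwise_trials` and the seed are illustrative.

```python
import itertools
import random

DIRECTIONS = list(range(0, 360, 20))   # 18 directions in 20 degree steps
MODES = ["continuous", "pulsed"]
REPETITIONS = 3


def blockwise_trials(seed=None):
    """Study 1 trial list: each repetition is one block holding all
    18 x 2 = 36 direction/mode combinations in shuffled order."""
    rng = random.Random(seed)
    trials = []
    for rep in range(1, REPETITIONS + 1):
        block = [(rep, d, m) for d, m in itertools.product(DIRECTIONS, MODES)]
        rng.shuffle(block)   # randomize order within this block only
        trials.extend(block)
    return trials


trials = blockwise_trials(seed=1)
print(len(trials))  # 108
```

Shuffling within blocks rather than across the whole list guarantees that every combination is seen once before any is repeated, which is what makes repetition usable as a factor in the analysis.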

Study 2: Collision direction (PPS). This study assessed user preference for different collision feedback modes. It employed a 2 × 2 × 3 × 3 factorial within-subjects design with the independent variables collision mode (continuous/pulsed), bass-shaker (on/off), and direction [front (0°), back (180°), diagonal front (40°)], and three repetitions per condition. This resulted in 36 trials, blocked by repetition and randomized blockwise as in Study 1. In each trial, collision feedback was provided for 1 s, starting at 200 PWM and linearly increasing to 256 PWM. In the bass-shaker conditions, the shaker was activated directly after the collision cue at a medium strength of 60%. After each trial, participants verbally rated the ease of judging the direction of the colliding object and the convincingness of the collision feedback (usefulness of the cue to simulate a collision). Study 2 took about 15 min to complete.
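The Study 2 collision cue is a short linear ramp. The sketch below writes it as a function of time since cue onset, using the values given in the text (1 s, 200 to 256 PWM on the paper’s scale). The helper `to_8bit` is an assumption about the hardware side, not the authors’ code: Arduino’s `analogWrite` accepts duty-cycle values from 0 to 255, so the paper’s 256 (“full actuation”) is clamped to 255 here. The subsequent bass-shaker pulse at 60% strength is not modelled.

```python
def collision_pwm(t: float, duration: float = 1.0,
                  start: float = 200.0, end: float = 256.0) -> float:
    """Collision-cue level (paper's 0-256 PWM scale) t seconds after onset.

    Linear ramp from `start` to `end` over `duration`; 0 outside the cue.
    """
    if t < 0 or t > duration:
        return 0.0
    return start + (end - start) * (t / duration)


def to_8bit(level: float) -> int:
    """ASSUMPTION: map a level to an 8-bit duty cycle (0-255), e.g. for analogWrite."""
    return max(0, min(255, round(level)))


print([to_8bit(collision_pwm(t)) for t in (0.0, 0.5, 1.0)])  # [200, 228, 255]
```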

Study 3: Coupled proximity and collision (EPS/PPS). This study looked at how users rated different vibration mode combinations. It used a 2 × 2 × 2 × 3 factorial within-subjects design to examine the effect of the independent variables proximity vibration mode (continuous/pulsed), collision vibration mode (continuous/pulsed), and encoding scheme (full/separate range), with three repetitions. Full encoding means that the proximity and collision cues were each encoded over the full PWM range (0–256), whereas for separate encoding the proximity cue ranged from 0 to 200 PWM and the collision cue from 200 to 256 PWM. Each factorial combination was repeated three times, resulting in a total of 24 trials presented in randomized order. The aim was to find out which combination of feedback modes and encoding schemes is most appropriate to simulate the two-step process of approaching and then colliding with an object. In each trial, a proximity cue was provided for 3 s, followed by a 200 ms interval without any stimulation, before the collision feedback was given for 1 s (see Figure 3B). We chose these different durations to mimic the normally longer approach of an incoming object compared with the collision itself, which is a rather abrupt event. Users were presented with different cue combinations from diagonal front directions (40° left or right, random). After each trial, participants rated the usefulness of the experienced cue combination for simulating a collision process, and how well they could distinguish between proximity and collision cues. Study 3 took about 10 min to finish.
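The two Study 3 encoding schemes differ only in the PWM range assigned to each cue. The sketch below expresses one continuous-mode trial as a function of time, using the durations and ranges given in the text. It assumes that each cue ramps linearly within its range (stated for Studies 1 and 2, but not explicitly for Study 3); pulsed mode is not modelled, and `coupled_cue_pwm` is a hypothetical name.

```python
PROX_DUR, GAP_DUR, COLL_DUR = 3.0, 0.2, 1.0   # seconds, from the text

# (min, max) PWM ranges per cue for the two encoding schemes.
ENCODINGS = {
    "full":     {"proximity": (0, 256), "collision": (0, 256)},
    "separate": {"proximity": (0, 200), "collision": (200, 256)},
}


def _ramp(lo, hi, frac):
    """Linear interpolation from lo (frac = 0) to hi (frac = 1)."""
    return lo + (hi - lo) * frac


def coupled_cue_pwm(t, encoding):
    """PWM level t seconds into a Study 3 trial (continuous mode only).

    ASSUMPTION: both cues ramp linearly across their assigned range.
    """
    ranges = ENCODINGS[encoding]
    if 0 <= t < PROX_DUR:                          # proximity phase
        return _ramp(*ranges["proximity"], t / PROX_DUR)
    t_coll = t - PROX_DUR - GAP_DUR                # time since collision onset
    if 0 <= t_coll <= COLL_DUR:                    # collision phase
        return _ramp(*ranges["collision"], t_coll / COLL_DUR)
    return 0.0                                     # silent gap, or trial over


print(coupled_cue_pwm(1.5, "full"), coupled_cue_pwm(1.5, "separate"))  # 128.0 100.0
```

With separate encoding, the collision cue begins at the level where the proximity cue ends (200 PWM), so the two cues never share an intensity range.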

Study 4: Collision force of impact (PPS). This study looked at users’ ability to differentiate forces of impact. It used a 3 × 5 × 3 factorial design to study the effect of the factors collision feedback mode (continuous/pulsed/no collision vibration feedback using vibrotactors), bass-shaker strength (five levels, from 20 to 100% volume in 20% steps), and repetition on the user’s performance in strength discrimination (force of impact) and the subjective judgment of the ease of sensing the bass-shaker strength. Each factor combination was randomized and repeated three times, resulting in 45 trials. Trials started with collision feedback (except in the “no collision feedback” conditions) provided for 3 s, rising from 0 PWM to 256 PWM, directly followed by the bass-shaker signal. After each trial, participants estimated the force of impact on a scale from one to five and rated the ease of sensing the strength on an 11-point Likert scale ranging from 0 = “not easy at all” to 10 = “very easy”. Before starting the actual study, participants were shown the different vibration strength levels to familiarize them with the range. Study 4 took about 10 min to finish.
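The trial counts of the four designs follow directly from the product of their factor levels. The sketch below recomputes them; with the 12 participants, it reproduces 108, 36, 24, and 45 trials per participant, i.e., the 1,296, 432, 288, and 540 trials analyzed in Section 6.3. The dictionary layout is illustrative.

```python
from math import prod

PARTICIPANTS = 12

# Factor levels per study, taken from the designs described above.
designs = {
    "study1": [18, 2, 3],     # direction x vibration mode x repetition
    "study2": [2, 2, 3, 3],   # collision mode x bass-shaker x direction x repetition
    "study3": [2, 2, 2, 3],   # proximity mode x collision mode x encoding x repetition
    "study4": [3, 5, 3],      # collision mode x bass-shaker strength x repetition
}

for study, levels in designs.items():
    per_participant = prod(levels)
    print(study, per_participant, per_participant * PARTICIPANTS)
```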

6.3 Results

Here, we report significant main effects and interactions from main studies 1–4. Greenhouse-Geisser corrections were applied whenever the ANOVA assumption of sphericity was violated.
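For readers unfamiliar with the correction, the sketch below computes the Greenhouse-Geisser epsilon for a single within-subject factor from the k × k covariance matrix of its levels: the matrix is double-centred into D, and ε = tr(D)² / ((k − 1) · Σ D_ij²). Epsilon ranges from 1/(k − 1) (maximal violation) to 1 (sphericity holds), and the corrected degrees of freedom are ε(k − 1) and ε(k − 1)(n − 1). This is a textbook formulation for illustration, not the analysis code used for this paper, which is not reported.

```python
def gg_epsilon(cov):
    """Greenhouse-Geisser epsilon for one within-subject factor.

    `cov` is the k x k covariance matrix (list of lists) of the factor's
    k levels, computed across participants.
    """
    k = len(cov)
    row_means = [sum(row) / k for row in cov]
    col_means = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    grand_mean = sum(row_means) / k
    # Double-centre the covariance matrix.
    d = [[cov[i][j] - row_means[i] - col_means[j] + grand_mean
          for j in range(k)] for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    sum_sq = sum(d[i][j] ** 2 for i in range(k) for j in range(k))
    if sum_sq == 0:
        raise ValueError("degenerate covariance matrix: no variance in differences")
    return trace ** 2 / ((k - 1) * sum_sq)


# Compound symmetry (equal variances, equal covariances) gives epsilon of 1.
print(gg_epsilon([[2, 1, 1], [1, 2, 1], [1, 1, 2]]))
```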

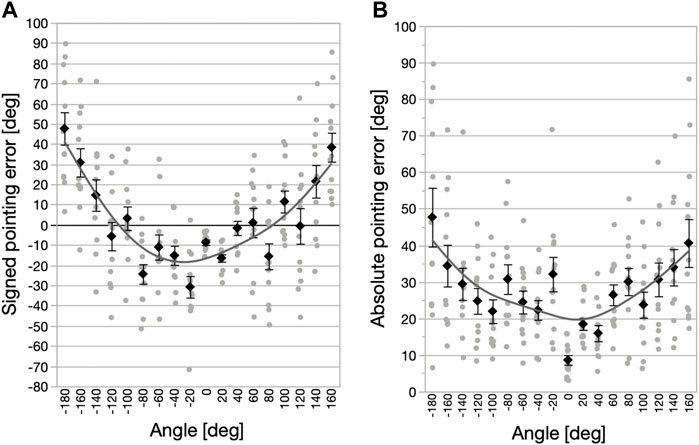

Study 1: Proximity direction (EPS). We analyzed 1,296 trials using within-subject ANOVAs for the independent variables direction, vibration mode, and repetition. Signed and absolute pointing errors were calculated as the signed/absolute difference between the angle of the actual incoming direction and the direction indicated by participants (see Figure 4). Signed errors showed a significant main effect of the direction of the proximity cues, F (17, 187) = 18.73, p < 0.001, η2 = 0.63, and followed a U-shaped pattern with the highest signed errors for stimuli coming from behind (see Figure 4A). There was also a significant interaction between proximity cues and repetition, F (2, 22) = 3.94, p = 0.034, η2 = 0.26, although Tukey HSD post-hoc tests did not show any significant pairwise differences. Absolute pointing errors also showed a significant main effect of the direction of the proximity cues, F (17, 187) = 5.16, p < 0.001, η2 = 0.32, and followed a U-shaped pattern similar to the signed errors (see Figure 4B). Absolute error was smallest at an incoming direction of 0°, where the cues came from the front of participants (M = 8.65, SE = 1.32), and increased towards the back of the user. Pairwise contrasts were performed to investigate whether directions for which the directional cue did not have to be interpolated between vibrotactors (0°, ±60°, ±120°, 180°; see Figure 3) resulted in lower errors. Absolute pointing errors were significantly lower for 0° than for the other directions, p < 0.0001, but significantly higher for 180°, p < 0.0001, and not significantly different for the ±60° and ±120° conditions, p = 0.59. Together, this suggests that interpolating signals between vibrotactors did not per se impair direction judgements. Overall, participants were more accurate when judging directions in the front than in the back hemisphere, p < 0.0001. On average, participants reacted after 3.8 s (SD = 2.33), and in 75% of all trials in less than 4.6 s. 
There was no clear evidence for a speed-accuracy trade-off, meaning that reacting slower or faster did not significantly affect pointing errors. Reaction times differed significantly between vibration modes, and participants reacted slower with continuous feedback (M = 4.04, SE = 0.34) than with pulsed feedback (M = 3.56, SE = 0.30), F (1, 11) = 13.52, p = 0.004, η2 = 0.55. Repetition also affected reaction time (F (2, 22) = 4.17, p = 0.029, η2 = 0.275) as participants tended to get faster from the first (M = 4.08, SE = 0.33) to the third repetition (M = 3.52, SE = 0.32, p = 0.0224).
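Because directions lie on a circle, the signed and absolute pointing errors described above are only meaningful after wrapping the difference into a ±180° range; otherwise, a response of 10° to a cue at 340° would count as a 330° error instead of 30°. The text does not state how wrapping or the sign were handled, so the sketch below assumes error = pointed − actual, wrapped to [−180°, 180°). The function names are hypothetical.

```python
def signed_angular_error(actual_deg: float, pointed_deg: float) -> float:
    """Signed pointing error (pointed - actual), wrapped to [-180, 180)."""
    return (pointed_deg - actual_deg + 180.0) % 360.0 - 180.0


def absolute_angular_error(actual_deg: float, pointed_deg: float) -> float:
    """Unsigned pointing error in degrees, between 0 and 180."""
    return abs(signed_angular_error(actual_deg, pointed_deg))


print(signed_angular_error(340, 10))   # 30.0
print(signed_angular_error(0, 350))    # -10.0
```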

FIGURE 4. Means and standard errors of signed (A) and absolute pointing errors (B) in Study 1. Gray dots depict individual participants’ data.

Study 2: Collision direction (PPS). For this study, we analyzed 432 trials. As ANOVAs have been shown to be quite robust against violations of normality such as those observed here, especially with equal sample sizes (Schmider et al., 2010; Field, 2013), we analyzed the data using repeated-measures ANOVAs for the independent variables collision mode (continuous/pulsed), bass-shaker (on/off), direction [front (0°), back (180°), diagonal front (40°)], and repetition. Median ratings for the ease of sensing the direction were 8.25 for continuous mode and 7.75 for pulsed mode (on a 0–10 scale). Median values for the convincingness of the feedback were the same for both vibration modes (MD = 6.75). Generally, ratings of the ease of judging the direction were rather positive, as more than 75% of all ratings were higher than 7. Ratings of the convincingness of the collision feedback were also rather positive, with 50% of the ratings higher than 6.75. Note, however, that neither the ease of judging directions nor the convincingness of the collision feedback showed any significant main effects or interactions of the independent variables bass shaker, collision mode, direction, or repetition (all p′s > 0.11). Confirmatory non-parametric tests (Wilcoxon signed-rank and Friedman tests for the 2-level and 3-level independent variables, respectively) confirmed the lack of any significant effects.

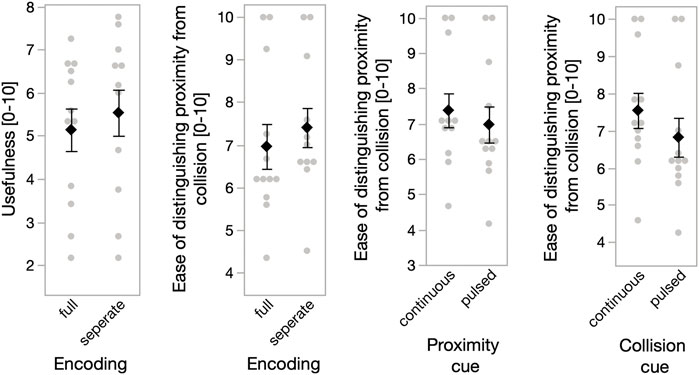

Study 3: Coupled proximity and collision (EPS/PPS). For this study, 288 trials were analyzed. Within-subject ANOVAs were used to investigate the effects of vibration modes of proximity cues (continuous vs. pulsed), collision cues (continuous vs. pulsed), encoding scheme (full vs. separate), and repetition (1, 2, 3) on ratings of the usefulness for simulating a collision process, and the ease of distinguishing between proximity and collision cues.

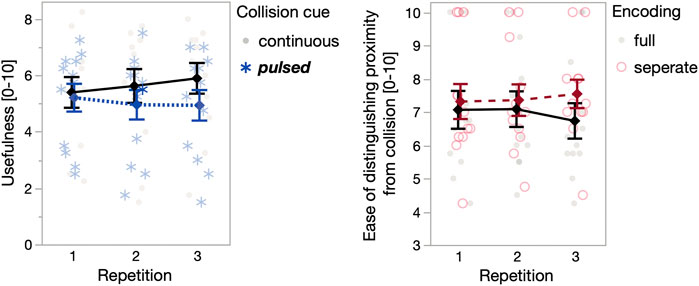

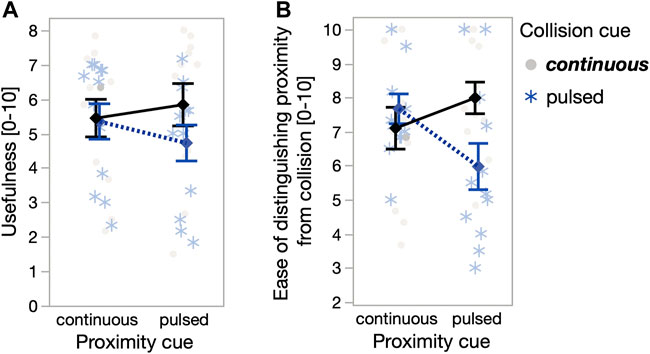

Participants rated both cue usefulness (F (1, 11) = 10.4, p = 0.008, η2 = 0.49) and ease of distinguishing (F (1, 11) = 11.02, p = 0.007, η2 = 0.50) higher with separate encoding (separate PWM ranges for proximity and collision) than with full encoding (full PWM range for both proximity and collision), as illustrated in Figure 5. There was an interaction between repetition and encoding on ease of distinguishing: the difference between the ratings for full and separate encoding increased significantly over repetitions (F (2, 22) = 3.65, p = 0.043, η2 = 0.25), as illustrated in Figure 6. Post-hoc Tukey HSD tests indicated that using two separate encoding ranges for proximity vs. collision made it easier to distinguish proximity from collision cues at the last repetition (p = 0.0039), but not at the earlier repetitions (p > 0.16). There was also an interaction between proximity and collision cue vibration modes on cue usefulness ratings (F (1, 11) = 9.10, p = 0.012, η2 = 0.45) and ease of distinguishing ratings (F (1, 11) = 7.24, p = 0.021, η2 = 0.40), see Figure 7. Tukey HSD tests showed that when the proximity cues were pulsed, the collision cues were rated as more useful when they were continuous vs. pulsed (p = 0.036). No other pairwise comparisons of usefulness ratings reached significance, all p′s > 0.28. Distinguishing proximity from collision cues was rated most difficult when both proximity and collision cues were pulsed, and in fact significantly harder than for any of the other combinations, all p′s < 0.010. Distinguishing proximity from collision cues was generally easier when the proximity cue was presented continuously rather than pulsed, indicated by a significant main effect (F (1, 11) = 8.08, p = 0.016, η2 = 0.42), see Figure 7. A similar main effect was observed for the collision cues, which were also easier to distinguish when presented continuously (F (1, 11) = 18.34, p = 0.001, η2 = 0.63). 
There was an interaction between repetition and collision cue on usefulness ratings, indicating that the difference in usefulness between continuous and pulsed collision cues increased over the repetitions (F (2, 22) = 5.28, p = 0.014, η2 = 0.32), as illustrated in Figure 6. Tukey HSD pairwise comparisons showed marginally higher usefulness ratings of continuous vs. pulsed collision cues for the last repetition (p = 0.082), all other p′s > 0.1. Although participants had some prior experience with the cues, we did not find or observe any clear indication of learned proficiency in reading the stimuli.

FIGURE 5. Illustration of significant main effects of encoding and vibration modes on usefulness and distinguishability ratings in Study 3, plotted as in Figure 4.

FIGURE 6. Illustration of significant interaction effects of repetitions in Study 3, plotted as in Figure 4.

FIGURE 7. Illustration of significant interaction effects of proximity and collision cues on usefulness ratings (A) and distinguishability (B) of Study 3, plotted as in Figure 4.

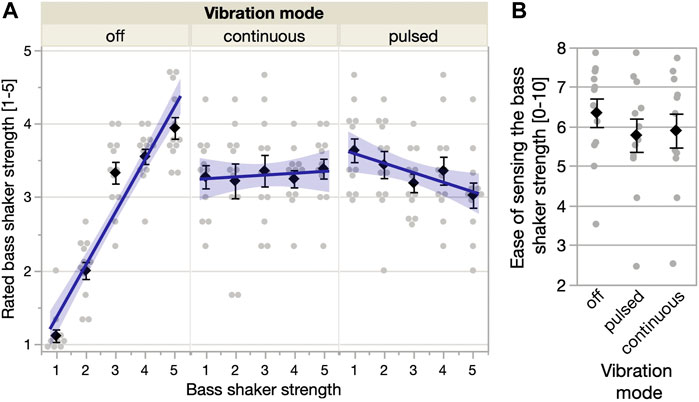

Study 4: Collision force of impact (PPS). For this study, 540 trials were analyzed using within-subject ANOVAs to examine the effect of the independent variables vibration mode (off, pulsed, continuous), bass shaker strength (1, 2, 3, 4, 5), and repetition (1, 2, 3) on participants’ ratings of the bass shaker strength and the perceived ease of sensing the bass shaker strength. The ease of determining the bass shaker strength was significantly affected by the vibration mode (but not by bass shaker strength or repetition), F (2, 22) = 3.54, p = 0.046, η2 = 0.24, see Figure 8B. Planned contrasts showed that it was perceived as easier to determine bass-shaker strength when the vibration was off (M = 6.35, SE = 0.40) than when it was on (p = 0.016), with no significant difference between pulsed (M = 5.79, SE = 0.40) and continuous (M = 5.90, SE = 0.40) vibration mode. This was corroborated by participants’ errors in estimating the bass shaker strength: signed errors between the actual and estimated bass shaker strength showed a significant main effect of vibration mode, F (2, 22) = 23.1, p < 0.0001, η2 = 0.68. As illustrated in Figure 8A, when vibrations were off, participants’ ratings of bass shaker strength correlated positively with the actual bass shaker strength, r = 0.722, p < 0.0001, whereas the correlation was slightly negative for the pulsed vibrations, r = −0.131, p = 0.012, and close to zero for the continuous vibrations, r = 0.025, p = 0.870. That is, participants could only detect the bass shaker strength reliably when no vibrations were added, suggesting that the vibrations may have masked the strength of the bass shaker. In addition, attentional limitations may also have played a role, leading to a potential integration of the sensory signals and thus interfering with participants’ ability to disambiguate between the different signals (see Discussion section). 
Repetition also affected signed errors, F (2, 22) = 7.23, p = 0.004, η² = 0.4: errors were significantly higher at the first repetition (M = 0.11, SE = 0.1) than at the second (M = −0.29, SE = 0.14, p = 0.03) and marginally higher than at the third (M = −0.25, SE = 0.13, p = 0.058). There was also a significant interaction between vibration mode and repetition on signed error, F (4, 44) = 2.67, p = 0.045, η² = 0.2.
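The error and correlation measures reported for Study 4 can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: it enumerates the 3 × 5 × 3 within-subject design (45 trials per participant, so 540 trials correspond to 12 participants, consistent with the reported F (2, 22) degrees of freedom), computes a signed error per trial, and computes a Pearson correlation between actual and rated strength per vibration mode, pooled over trials. The sign convention (rated minus actual), the pooling, and all function names are assumptions; the ANOVAs and significance tests are omitted.

```python
from itertools import product
from math import sqrt

# Factor levels as listed for Study 4.
MODES = ("off", "pulsed", "continuous")
STRENGTHS = (1, 2, 3, 4, 5)
REPETITIONS = (1, 2, 3)


def design():
    """Full-factorial within-subject design: 3 x 5 x 3 = 45 trials per participant."""
    return list(product(MODES, STRENGTHS, REPETITIONS))


def signed_error(actual, rated):
    """Assumed sign convention: positive values mean the strength was overestimated."""
    return rated - actual


def pearson_r(xs, ys):
    """Pearson correlation coefficient; returns NaN if either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / sqrt(sxx * syy)


def correlation_by_mode(trials):
    """trials: iterable of (mode, actual_strength, rated_strength) tuples.

    Returns a dict mapping each vibration mode to r(actual, rated), pooled over trials.
    """
    trials = list(trials)
    result = {}
    for mode in MODES:
        pairs = [(a, r) for m, a, r in trials if m == mode]
        xs, ys = zip(*pairs)
        result[mode] = pearson_r(xs, ys)
    return result
```

With hypothetical toy ratings where the rated strength equals the actual strength in the "off" mode, `correlation_by_mode` returns r = 1.0 for that mode; a per-participant analysis would first aggregate signed errors per condition before running the repeated-measures ANOVA.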

FIGURE 8. Study four results. (A) Perceived vs. actual bass shaker strength including linear regression fits, depending on whether the vibrotactors were off, pulsed, or continuously vibrated. (B) Rated ease of sensing bass shaker strength depending on the vibrotactors’ vibration mode.

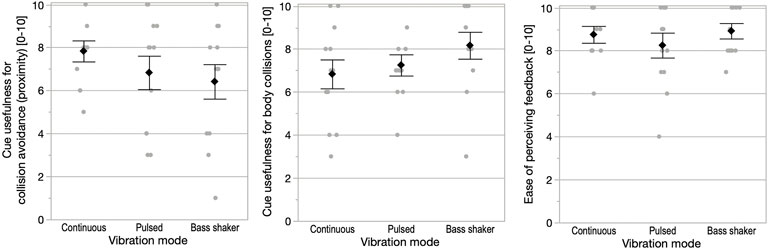

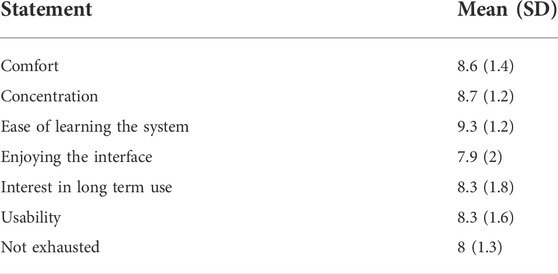

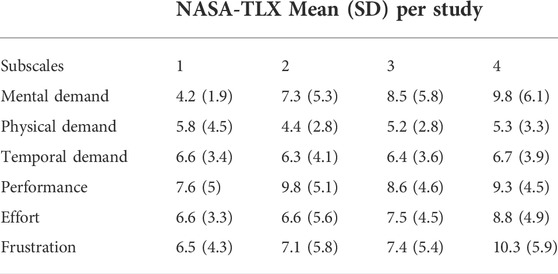

Subjective feedback. Analyses of the overall subjective feedback (see Figure 9 and Tables 1, 2) collected after Studies 1–4 showed that overall cue usefulness was rated quite high (7 out of 10). Participants tended to rate the continuous vibration mode as somewhat more useful for indicating proximity, although this difference was not significant, F (2, 22) = 1.37, p = 0.274, and rated the bass shaker as somewhat more useful for indicating body collisions, a marginal trend, F (2, 22) = 2.78, p = 0.084; see Figure 9. As indicated in Figure 9 (right), the ease of perceiving feedback was rated high overall (8.6 on a 0–10 scale) and did not depend on feedback mode (continuous, pulsed, or bass shaker), F (2, 22) = 0.773, p = 0.47. While NASA TLX scores (cf. Table 1) increased slightly for Study 3 (integrated cues) and Study 4 (force of impact), overall task load was at most medium. Usability questions (cf. Table 2) indicated that the system was easy to learn and that concentration was not an issue. Overall comfort and usability ratings were very positive (between 7.9 and 9.3 on a 0–10 scale; see Table 2).
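A common way to summarize the six NASA TLX subscales into one task load value is the raw (unweighted) TLX, i.e., the mean of the subscale ratings. The sketch below assumes this unweighted scoring and ratings on a 1–20 scale; the scoring actually used for Table 1 is not stated here, and the function name and example ratings are hypothetical.

```python
# Six NASA TLX subscales; keys are hypothetical short names.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings, scale_min=1, scale_max=20):
    """Raw (unweighted) TLX: mean of the six subscale ratings.

    Assumes ratings lie in scale_min..scale_max. Returns the mean rating and
    the mean expressed as a fraction of scale_max.
    """
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    for name in SUBSCALES:
        if not scale_min <= ratings[name] <= scale_max:
            raise ValueError(f"{name} rating out of range: {ratings[name]}")
    mean = sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)
    return mean, mean / scale_max
```

If weighted TLX scoring was used instead, each subscale would additionally be multiplied by its pairwise-comparison weight before averaging.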

TABLE 1. NASA Task Load Index (TLX) for studies one to four, measured on six subscales, each on a 20-point Likert scale.

7 Discussion

In this section, we relate the results of our main study to our research question and discuss how cues can inform collision-driven behavior in 3DUIs. Note that, for clarity, some issues that also apply to our pilot study are discussed here as well.

In RQ2, we asked “Can we elicit sensations of proximity (e.g., of approaching objects) by combining a bass shaker and localized vibrotactors into a foot platform participants are standing on? If so, (how well) can these convey the direction and distance of objects, forces, or potential collisions and their point of impact?” In the following, we discuss different aspects of these questions:

7.1 Lower-body perception

Current results favor the feedback combination of continuous vibrotactor cues for proximity and bass-shaker cues for body collision (see Figure 9). Results show that users could judge the different cues fairly easily and at a reasonably high granularity. Generally, the results are in line with previous findings on feet-based actuation regarding sensitivity and interpretation of cues (Velázquez et al., 2012; Strzalkowski, 2015; Kruijff et al., 2016). Namely, previous studies have shown that the feet perceive directional feedback through vibration well and can reliably differentiate between feedback locations. As we had a relatively small number of participants, there was some noise in the data; in particular, Figures 4–8 show large between-subject variability. Yet, the statistical analysis showed fairly clear results with reasonable effect sizes. Future research is needed to investigate whether and how the current results generalize to larger and more diverse participant populations, setups, and tasks. Furthermore, it remains to be seen whether some of the noise could be reduced by individualized encoding schemes and calibrations. We assume that especially the subjective feedback (e.g., ease of use) would improve accordingly. Though previous work noted effects of skin differences (Hennig and Sterzing, 2009), we did not assess them. Yet, we also did not find performance fluctuations across participants that could potentially be linked to such differences. Still, future systems will likely benefit from a calibration procedure that reduces potential effects of differences in foot sole skin sensitivity between users and accounts for user preferences.
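One way an individualized encoding could work is to spread a fixed number of cue levels across each user's own usable intensity range rather than a fixed device range. The sketch below is hypothetical: it assumes a calibration step has already measured, per user, a lowest reliably felt PWM duty cycle and a highest comfortable one (both normalized to 0–1), and maps cue levels linearly between them. The class, fields, and linear mapping are illustrative assumptions, not part of the presented system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FootCalibration:
    """Hypothetical per-user calibration result (duty cycles normalized to 0-1)."""

    detection_duty: float  # lowest duty cycle the user reliably feels
    comfort_duty: float    # highest duty cycle the user finds comfortable

    def __post_init__(self):
        if not 0.0 <= self.detection_duty < self.comfort_duty <= 1.0:
            raise ValueError("expected 0 <= detection_duty < comfort_duty <= 1")

    def duty_for_level(self, level: int, n_levels: int) -> float:
        """Linearly map cue level 1..n_levels onto the calibrated duty-cycle range."""
        if n_levels < 2 or not 1 <= level <= n_levels:
            raise ValueError("need n_levels >= 2 and 1 <= level <= n_levels")
        frac = (level - 1) / (n_levels - 1)
        return self.detection_duty + frac * (self.comfort_duty - self.detection_duty)
```

For example, with `FootCalibration(0.2, 0.8)` and five levels, level 1 maps to a duty cycle of about 0.2 and level 5 to about 0.8. A linear mapping is the simplest choice; a perceptually scaled (e.g., logarithmic) mapping may suit intensity perception better and would be a natural variant to test.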

In contrast to the pilot study, we did not find any clear evidence of perceptual issues related to dampening or bone conduction. Though the bass-shaker stimuli could potentially transmit vibrations upwards through the legs, they did not seem to affect directional perception; at least, users did not notice any such effect. This is most likely due to the lower-intensity stimuli used in the main studies. Though the bass-shaker produced higher-intensity stimuli than the vibrotactors, we found no indication that it overshadowed perception of the vibrotactor cues. Conversely, force of impact could only be differentiated in the absence of vibration cues. The vibration stimuli were of low intensity and were likely not much affected by conduction through bone or tissue, as directionality could be judged reasonably well. It would be interesting to see whether even finer granularity can be achieved, for example through stimulation at different foot areas. The current setup was mainly motivated by providing directional cues (hence the semi-circular arrangement of tactors) rather than by targeting the more sensitive areas of the foot sole. Previous work notes regional differences across the foot sole that could be considered (Hennig and Sterzing, 2009). In this context, stimulating both the feet and the lower body (e.g., the legs) could be an interesting option to explore. As participants did not move around, the pressure distribution of the feet on the platform stayed constant. However, systems for moving users have been presented, for example those that use shoe inlays (Velázquez et al., 2012). During movement, the pressure distribution under the feet changes with the roll-off of the foot, while (tactile) noise caused by different ground textures (e.g., carpeted or wooden floors) may also affect perception (Kruijff et al., 2016).
This will likely cause perceptual issues with cue strength differentiation and localization, as the perception of tactile stimuli changes slightly with skin contact pressure (Magnusson et al., 1990). In this case, complementary (redundant) and synchronized cues to the feet and the legs could be considered to overcome potential ambiguities. Scenarios can also be envisioned in which different tasks are associated with different cues. For example, navigation and selection/manipulation tasks could both be supported by proximity and collision cues, provided to the lower body (navigation) and to the hands (selection/manipulation), respectively (Marquardt et al., 2018b). Future work is needed to investigate how well users can perceive cues presented to both the hands and the lower body as referring to the same object. Conversely, will users be able to separate cues when they refer to different objects, e.g., when lower-body cues present an obstacle and hand cues present a graspable object? Finally, the issue of habituation to cues needs to be addressed. As users in our experiments focused mainly on the cues rather than on other tasks, and stimuli lasted only a short time, we did not observe habituation. However, habituation will likely occur when users use the system for an extended period, especially if their focus is on another task. Potential issues with habituation have been noted in prior work on feet-based (Yasuda et al., 2019) and leg-based (Wentink et al., 2011) stimuli and require further investigation. Furthermore, it remains to be seen whether factors such as gamification or reward-based mechanisms (as in Study 1), which can help users stay focused on the stimuli and task, may help to overcome habituation.
While we did not explicitly investigate the effects of gamification in this paper, Study 1 contained gamification elements, which may have contributed to its much lower mental demand compared to Studies 2–4 (see Table 1).

7.2 Peripersonal and extrapersonal model

Study results showed that even with very limited training (e.g., in Study 3, participants had some training, as the modes had already been experienced in Studies 1 and 2), the combination of low- and higher-frequency foot feedback can be used to provide proximity and collision information that is relevant for the PPS and EPS.

7.2.1 Encoding and errors

Users found it easy to judge proximity direction (EPS) and made small errors, especially for the forward and sideways directions (see Figure 4). Observations and user comments suggest that the higher error for backward directions may be related to it being ergonomically more challenging to point backwards (and to do so accurately) with the hand-held controller, as the feet had to stay aligned forward. Mental body rotation effects related to imagined spatial transformations may also affect pointing; however, this requires further study (Parsons, 1987). Pulsed mode allowed for faster reaction times (Study 1). Participants in Study 2 reported that it was overall rather easy to sense the collision direction (PPS), even though the collision cues were much shorter (1 s) than the proximity cues (3 s). While we assessed direction rather than point of impact during collision feedback, users seemed to mentally construct a vector from the body center through the stimulated point on the semicircle around the foot, resulting in a directional cue relative to the body. Though this may be a learned effect, it suggests that separating collision direction and point of impact through different encodings may not be necessary, for example during navigation. Proximity cues will likely also inform the location of impact during a collision, as they refer to the same object that collides with the body. Study 3 indicated that if PWM ranges are separated and pulsed-pulsed combinations of proximity and collision cues are avoided, proximity and body collision (EPS/PPS) cues can be more easily recognized as separate cues. Finally, force of impact (Study 4, PPS) from the bass-shaker could easily be judged, with a low error rate, but only in the absence of further vibration cues.
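The body-centered reading of a tactor cue and the resulting pointing error can be made concrete with a small sketch. It assumes planar platform coordinates with the body center at the origin, +y pointing forward and +x to the right, and reports bearings in degrees (0° straight ahead, positive clockwise). The coordinate convention, function names, and the use of a wrapped signed difference as the error measure are assumptions for illustration; the wrapping ensures that, e.g., pointing at 170° for a cue at −170° counts as a 20° error rather than 340°.

```python
from math import atan2, degrees


def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def tactor_direction(tx, ty, cx=0.0, cy=0.0):
    """Bearing of the vector from the body center (cx, cy) through a tactor at (tx, ty).

    Assumed convention: 0 deg = straight ahead (+y), positive = clockwise (towards +x).
    """
    return wrap_deg(degrees(atan2(tx - cx, ty - cy)))


def signed_pointing_error(pointed_deg, cue_deg):
    """Signed angular error (pointed minus cued), taking the shorter way around."""
    return wrap_deg(pointed_deg - cue_deg)


def mean_absolute_error(pairs):
    """Mean absolute angular error over (pointed_deg, cue_deg) pairs."""
    pairs = list(pairs)
    return sum(abs(signed_pointing_error(p, c)) for p, c in pairs) / len(pairs)
```

For example, a tactor directly to the right of the body center yields a bearing of 90°, and pointing at 10° or at 350° for a cue at 0° both count as a 10° absolute error.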

The proximity direction cues did not convey absolute distance, but rather informed users about an approaching object to improve situation awareness. As such, the border between PPS and EPS cannot be conveyed by tactile cues alone; here, other congruent cues will be beneficial. As cue duration (3 s for proximity versus 1 s for collision) also indicated which cue was provided, it will be of interest to test cues of similar duration in future studies. Furthermore, mixed pulsed/continuous modes could be worth studying. Subjective feedback indicated different preferences for collision avoidance (where continuous vibrotactor vibrations were preferred) and body collision (where the bass-shaker was slightly preferred); see Figure 9. Finally, further tests are required to determine the extent of errors in interaction tasks: currently, we have no absolute measure (baseline) to compare our errors against, nor any direct comparison with the angular errors that would occur when using other modalities (e.g., audio).
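The separation guidelines from Study 3 (non-overlapping PWM ranges, no pulsed-pulsed combination), together with the duration difference between proximity and collision cues, can be expressed as a simple design check for a pair of vibrotactor cues. This is a hypothetical sketch: the cue fields, the 0–255 PWM units in the example, and the function name are assumptions, and the duration check is only a consideration raised above, not a rule tested in the studies.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VibroCue:
    """Hypothetical description of a vibrotactor cue."""

    name: str
    mode: str          # "pulsed" or "continuous"
    pwm_min: int       # lower bound of the PWM range (device units, assumed)
    pwm_max: int       # upper bound of the PWM range
    duration_s: float  # cue duration in seconds


def separability_issues(a: VibroCue, b: VibroCue) -> list[str]:
    """List potential reasons why two cues might be hard to tell apart."""
    issues = []
    # Closed intervals [min, max] overlap if each starts before the other ends.
    if a.pwm_min <= b.pwm_max and b.pwm_min <= a.pwm_max:
        issues.append("PWM ranges overlap")
    if a.mode == "pulsed" and b.mode == "pulsed":
        issues.append("pulsed-pulsed combination")
    # Untested heuristic: equal durations remove duration as a distinguishing cue.
    if a.duration_s == b.duration_s:
        issues.append("equal durations")
    return issues
```

For instance, a continuous 3 s proximity cue and a pulsed 1 s collision cue with disjoint PWM ranges produce no issues, whereas two pulsed 1 s cues with overlapping ranges are flagged on all three counts.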

As a next step, it would be of interest to address further encoding schemes for different types of cues associated with the PPS and EPS. This may also require different types of actuation and further comparisons with other methods reported in the literature, e.g., as noted in the related work section. Different cue couplings may also lead to different encoding schemes: different body parts can theoretically be stimulated with either redundant or non-redundant cues. Especially for non-redundant cues, the same encoding may lead to confusion, e.g., when similar cues are used for separate navigation and selection tasks. Separate encodings or even separate sensory modalities may solve this issue. On the other hand, providing redundant cues to different body parts may be particularly useful to reduce feedback ambiguities, as discussed in Section 7.1. In this context, coupling lower-body and peri-trunk cues (i.e., cues provided to the trunk) can help support full-body awareness (Stettler and Thomas, 2016). As such, coupled lower-body and peri-trunk cues can also affect navigation tasks in 3D environments (Matsuda et al., 2021). To measure the PPS, Matsuda et al. (2021) used a tactile and an auditory stimulus, as auditory information is available at any time and from all directions. They used approaching and receding task-irrelevant sounds in their experiment. Their results suggest that humans integrate multisensory information in a near-circular peri-trunk PPS around the body, and that this integration occurs with approaching sounds. As such, multisensory cues can be of relevance (see Section 7.2.2).