ORIGINAL RESEARCH article

Front. Virtual Real., 14 December 2022

Sec. Augmented Reality

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.943696

This article is part of the Research Topic: Supernatural Enhancements of Perception, Interaction, and Collaboration in Mixed Reality

Thammathip Piumsomboon1*, Gavin Ong1, Cameron Urban1, Barrett Ens2, Jack Topliss1, Xiaoliang Bai3, Simon Hoermann1

This research explores visualisation and interaction techniques to disengage users from immersive virtual environments (IVEs) and transition them back to the Augmented Reality mode in the real world. To gain a better understanding and novel ideas, we invited eleven Extended Reality (XR) experts to participate in an elicitation study to design such techniques for disengagement. From the elicitation study, we elicited a total of 132 techniques for four different scenarios of IVEs: Narrative-driven, Social-platform, Adventure Sandbox, and Fast-paced Battle experiences. Through extracted keywords and thematic analysis, we classified the elicited techniques into six categories of Activities, Breaks, Cues, Degradations, Notifications, and Virtual Agents. We share our analyses of users’ intrinsic motivation to engage in different experiences, subjective ratings of four design attributes in designing the disengagement techniques, Positive and Negative Affect Schedules, and user preference. In addition, we present the design patterns found and illustrate the exemplary use cases of Ex-Cit XR. Finally, we conducted an online survey to preliminarily validate our design recommendations. We propose the SPINED behavioural manipulation spectrum for XR disengagement to guide how systems can strategically escalate to disengage users from an IVE.

The future of interconnected immersive virtual environments (IVEs), also referred to as the Metaverse, has the potential to be highly engaging once the underlying technologies (e.g., low latency, seamless transition between platforms) are enabled and drawbacks (e.g., cybersickness) mitigated (Slater et al., 2020). This research investigates strategies to help disengage and transition users from IVEs back to the real environment in an Augmented Reality (AR) mode, using visualisation and interaction techniques in Extended Reality (XR). However, our goal is not to remove the users from the immersive system entirely but rather for them to remain in the system by transitioning the users from Virtual Reality (VR), where the world is entirely virtual, to AR, where virtual content is superimposed into the real environment. Accordingly, we conducted an elicitation study to gain ideas for user disengagement from the subject-matter experts.

This decade promises an unprecedented digital transformation of a global society. One of several fundamental advancements is XR technology, which allows us to spatially interface with the digital realm. It encompasses the entire spectrum of virtual augmentation from AR to VR according to the reality-virtuality continuum by Milgram et al. (1995). The numerous benefits that XR and its applications can bring will transform human society. For instance, it alters how we communicate and collaborate with other people or agents (Lee et al., 2017; Piumsomboon et al., 2018c, 2019a,b; Ens et al., 2019). It could offer a positive emotional experience to help improve our well-being (Kitson et al., 2020). It could allow us to reminisce experiences in therapy as an intervention of cognitive impairment (Niki et al., 2021), and even permit us to share such experiences with others for improved empathy (Piumsomboon et al., 2017b; Dey et al., 2017; Hart et al., 2018a; Dey et al., 2021).

At the time of this writing, major technology juggernauts have redoubled their commitment and effort to developing platforms for interconnected IVEs that would enable digital living for anyone to work, socialise, and play in these alternate worlds. However, such a concept was already explored before immersive technologies became pervasive. We have had a glimpse of such experiences in the past and present through online virtual platforms such as Second Life (Zhou et al., 2011; Boellstorff, 2015), and many online games that have accrued player counts in their billions (Wikipedia, n.d.). Arguably, the social and interactive media industries have already mastered their engagement formula. For example, dark game design patterns have existed for decades (Zagal et al., 2013) and compulsive use of mobile devices is a real issue (Tran et al., 2019). It is only a matter of time before the same happens in IVEs (Hodent, 2017; Fullerton, 2019; Maloney et al., 2021). Moreover, the potential impacts might plausibly be greater for XR due to the ultra-realistic nature of the technology, where IVEs are indistinguishable from, or even offer far richer experiences than, anything one could encounter in the real world (Slater et al., 2020). Such technology might be subject to misuse or abuse, causing detrimental social issues on a global scale, ranging from physiological harm and psychological issues to people prioritising the virtual worlds over the real one (Wassom, 2014; Madary and Metzinger, 2016; Slater et al., 2020; Yoon et al., 2021).

Few research studies have examined the effects of exiting from VR experiences and explored the transition techniques between the real world, AR, and VR. Knibbe et al. (2018) studied the momentary experience and effects of exiting and transitioning back from VR. They proposed six techniques to heighten or lessen the exit experiences. An abrupt shutdown, for example, would heighten the effect. In contrast, fading and environment alignment would lessen it. Valkov and Flagge (2017) compared transition techniques and found that a smooth transition to the virtual environment improves the awareness of the user and may increase the perceived interactivity of the system. George et al. (2020) proposed concepts around seamless experiences that support bi-directional transitions. Around the same time, Putze et al. (2020) investigated breaks in presence and found that the more coherent the new experience was with the virtual environment, the weaker the effects of the break in presence. Recent research investigated breakdowns of VR systems by reviewing YouTube videos showing situations where VR went wrong (Dao et al., 2021). It revealed that users were not made aware of the seam between VR and the real world, and proposed concepts of new interactions and cues to mitigate such failures.

Our background research could not find any research exploring visualisation and interaction techniques to help disengage and transition users from IVEs back to the real world in AR mode. This is the primary motivation for this work. Furthermore, this is the first expert elicitation study on the subject. As a result, we have elicited 132 techniques from eleven XR experts for four different scenarios. The scenarios are Narrative-driven, Social-platform, Adventure Sandbox, and Fast-paced Battle experiences. We provide analyses, classifications, and illustrations of the exemplar use cases of expert-elicited XR techniques. We discuss our compelling findings and the identified design patterns. We then share the results from a preliminary validation of our design recommendations and propose the SPINED behavioural manipulation spectrum for XR disengagement. This research has made several contributions as follows:

• Reviews of related work on potential impacts of XR, XR taxonomy, immersion preservation and real-world awareness, and blended realities.

• A remote study procedure utilising an elicitation process resulting in one hundred and thirty-two visualisation and interaction techniques.

• Classification of the elicited visualisation and interaction techniques.

• Exemplary use cases of the expert-elicited set of visualisation and interaction techniques distilled from the analysis.

• A discussion of the design patterns that arise from the study results around scenarios, disengagement strategies and policies, and escalation.

• An online survey to preliminarily validate the potential of disengagement techniques.

• The SPINED behavioural manipulation spectrum for XR disengagement to help guide the design of disengagement techniques and strategies.

This research draws from several related topics, from the characteristics of XR experiences to the concepts of blended realities. We have categorised the related work into four subsections. Firstly, we cover the potential impacts of XR raised by previous research and explain the motivation of this research. Next, we introduce the crucial taxonomy in XR used to guide our design space exploration. Thirdly, we examine the state-of-the-art approaches to maintaining real-world awareness in academia and industry. Lastly, we examine techniques to seamlessly blend realities to create place and plausibility illusions.

Extended Reality (XR) is one of the key technologies that could transform how humans interface with machines and how our society can seamlessly communicate and collaborate across the real and virtual worlds. There have been several visions of how such a technology would impact the future of living and human society, disrupting the traditional work process in various domains such as healthcare (Halbig et al., 2022) and education (Thanyadit et al., 2022). For example, Orlosky et al. (2021) introduced “Telelife”, a vision for the near future, depicting the synergies of modern computing concepts (e.g., digital twins, context-aware UI) to better align remote living within the physical world. Due to the COVID-19 pandemic, our view of work and socialisation has significantly shifted, along with the realisation that XR has the potential to empower people by improving task efficiency, such as eliminating the need to commute to a meeting, while improving experiences such as staying connected with distant family with high fidelity.

Nevertheless, the impacts of XR are expected to reach much further, with its potential to revolutionise user experiences entirely through augmented perception (Piumsomboon et al., 2018a,b; Zhang et al., 2022), enhanced emotion and cognition (Hart et al., 2018b), or collaboration (Piumsomboon et al., 2017a; Gao et al., 2017; Irlitti et al., 2019). However, its implications will not all be desirable, as detrimental physiological, psychological, or societal effects are plausible. For example, Yoon et al. (2021) studied the long-term physiological effects of VR use in users who wore an HMD for 2 hours continuously, and showed that it affected accommodation, convergence, exodeviation, and subjective symptoms. They concluded that participants with larger exodeviation, i.e., the tendency of the eyes to deviate outward, showed a higher tendency toward worsening of exophoria, an eye condition affecting binocular vision and alignment at a far distance.

Slater et al. (2020) warned us of the implications of the ultra-realism that XR technologies could offer and its harmful impacts on humankind. One of the general principles for action that is highly relevant for our research was on “minimising potential harm of immoderate use”. They stressed that there has yet to be a societal norm to help indicate a reasonable frequency of use or who is responsible for limiting or enforcing the time spent in XR. For example, the issue of XR users’ withdrawal from the shared reality into their private world of personal reality could be a risk to their well-being and society as a whole. There are many unanswered questions around the issue, such as whether it is the user’s right to live in their own private world and opt out of the shared public sphere. They pointed out that social norms can be helpful in such a scenario, and the use of XR should possibly be regulated. The product and service providers should be aware of the ethical implications of their products. Moreover, they should be responsible for preventing harm that may result from immoderate use and even may be held accountable for it. Finally, they believe there still needs to be more research into recommending the approaches to help moderate XR use. Our research answers this call. These concerns prompt us to explore potential approaches to mitigate the potential problems raised before they become severe issues in the future.

Throughout this paper, we will refer to Extended Reality (XR) as the umbrella term that encompasses all immersive technologies, from Augmented Reality (AR) to Virtual Reality (VR). Milgram et al. (1995) first characterised XR through the reality-virtuality (RV) continuum and the taxonomy of the extent of world knowledge (EWK), reproduction fidelity (RF), and extent of presence metaphor (EPM). This is where the concept of Mixed Reality (MR) arose. MR is situated between the real and virtual environments, defining different experiences ranging from AR to Augmented Virtuality (AV). Only recently, Skarbez et al. (2021) reexamined and refined the concept in three ways: 1) declared that the RV continuum is discontinuous as the ideal VR is unattainable, 2) showed that MR is broader and encompasses conventional VR experiences, and 3) extended the taxonomy by merging RF and EPM into immersion (IM) and proposed a new dimension of coherence (CO). In the updated dimensions, EWK offers world awareness (how well the system understands the physical world).

The place illusion (PI) is the sense of presence, i.e., being there, and the plausibility illusion (Psi) is the illusion that the scenario being depicted is actually happening (Slater, 2009). According to Skarbez et al. (2021), IM provides place illusion, while CO offers plausibility illusion. The interactions between these dimensions then give rise to different illusions: replicated world illusion (IM × EWK, how well the real world is replicated to the users), presence (IM × CO), system intelligence illusion (EWK × CO, the system can use its awareness intelligently), and Mixed Reality Illusion (IM × EWK × CO, how seamlessly the worlds are blended and how intelligently they respond to the user’s behaviour). Based on such concepts, our work constitutes the design of Mixed Reality Illusion, focusing on systems that can intelligently make decisions to disengage users based on their behaviour and utilise awareness of both the physical and virtual worlds to strategically influence and seamlessly transport the user back from a virtual environment to the real one. The sole purpose of such systems is to support the users’ well-being by helping them not to overspend their time in the virtual environment.
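The mapping between taxonomy dimensions and illusions described above can be written down as a small lookup table. The following is only an illustrative sketch: the names `DIMENSIONS`, `ILLUSIONS`, and `illusion_for` are hypothetical and do not come from Skarbez et al. (2021); only the dimension abbreviations and illusion labels are taken from the text.

```python
# Hypothetical sketch: each set of taxonomy dimensions maps to the
# illusion (or capability) that the text associates with it.
DIMENSIONS = ("IM", "EWK", "CO")  # immersion, extent of world knowledge, coherence

ILLUSIONS = {
    frozenset({"IM"}): "place illusion",
    frozenset({"CO"}): "plausibility illusion",
    frozenset({"EWK"}): "world awareness",
    frozenset({"IM", "EWK"}): "replicated world illusion",
    frozenset({"IM", "CO"}): "presence",
    frozenset({"EWK", "CO"}): "system intelligence illusion",
    frozenset({"IM", "EWK", "CO"}): "Mixed Reality Illusion",
}


def illusion_for(*dims):
    """Return the illusion label for a non-empty combination of dimensions."""
    key = frozenset(dims)
    if not key or not key <= set(DIMENSIONS):
        raise ValueError(f"expected a non-empty subset of {DIMENSIONS}, got {dims}")
    return ILLUSIONS[key]


if __name__ == "__main__":
    print(illusion_for("IM", "CO"))
    print(illusion_for("IM", "EWK", "CO"))
```

Using `frozenset` keys makes the lookup independent of the order in which dimensions are listed, which matches the symmetric "×" notation in the text.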

During immersion, a disruption that can cause a break in presence (BIP) is perceived as undesirable as it affects the illusions of place and plausibility and possibly the user’s performance in virtual activities. Gottsacker et al. (2021) investigated such BIP interruptions of VR users and introduced a concept of diegesis, which takes advantage of the current experience or story within the IVE to provide internal consistency by giving physical stimuli virtual representations within the IVE. By comparing five diegetic and non-diegetic approaches during an interruption where the VR user was briefly interrupted by a person in the real world, they found that the diegetic representations afforded the highest quality of interactions and place illusion, a high sense of co-presence, and the least disruption to the virtual experience. O’Hagan et al. (2020) conducted a study to better understand the nature of VR user interruption caused by a bystander in four different settings, including private spaces, public spaces, private transport, and public transport. They found that the relationship with the VR user is a crucial factor regardless of the environment, and that considerations of comfort and acceptability are paramount. A follow-up study examined a bystander interruption of a known VR user in a private setting. It was found that the majority of the interruptions were playful and used a combination of speech and touch.

To maintain real-world awareness, Simeone (2016) used depth sensing to detect people within the tracking space to inform the VR user of their movements by presenting a widget inspired by the Alien film, which is an interface representing a 2D top-down view of the floor space represented by a cone (a depth sensor’s view) and circles (people within the tracked space). Medeiros et al. (2021) explored multiple visualisation techniques to notify the VR users, keeping them aware of the non-immersed bystanders during non-critical social interaction. The example visual cues were a 3D arrow, colour glow, a minimap UI similar to Simeone (2016), a minimap as a wristwatch, shadow on the ground, and a diegetic avatar similar to Gottsacker et al. (2021). The study found that participants preferred the maintenance of immersion while having a notification system combining visual and audio cues based on the proximity of the bystander to the VR user. Ghosh et al. (2018) investigated interruptibility and notification design for immersive VR experiences. A survey with 61 VR users identified common interruptions and scenarios that might benefit from some form of notification. Design sessions were conducted with seven VR developers and designers to ideate notification methods in two environmental conditions. Five notification methods combining multisensory feedback of audio, haptic, and visual were implemented. An empirical study showed the effects of modality and notification methods on reaction time, urgency, and understandability. Design guidelines were to create distinction, use the controllers effectively, reduce visual search, avoid jump scares, use familiar metaphors, switch context and provide details on-demand. At the same time, Zenner et al. (2018) recommended notifying users without breaking their immersion. The notification must be adapted to the user’s situation within the IVE. 
They introduced an open-source framework for adaptive and immersive notifications in VR with features such as a messaging functionality for a non-VR user to send a text to an immersed VR user.

The importance of real-world awareness was also evident in the industry approaches to providing awareness of the physical environment. Meta Quest 2 offered Space Sense, visualising the physical surroundings with outlines inside a room-scale boundary up to 9 feet away in front of the headset (Baker, n.d.). Windows Mixed Reality headsets offered the Mixed Reality Flashlight to show a see-through portal, i.e., a video window of the real world from the greyscale inside-out tracking cameras, which could be activated with voice or controller. The portal can be positioned using the controller. A similar feature had been introduced on the HTC Vive and Vive Pro (Lang, n.d.a) and later on the HTC Vive Focus 2.0 with colour pass-through front-facing cameras (Lang, n.d.b). Valve’s Index HMD introduced “Room View 3D”, a colour video pass-through mode with a precise scale and depth view (Heaney, n.d.b). However, the warping was still apparent when moving the head quickly. At the time of this writing, Varjo (n.d.) offered Varjo XR-3, an ultra-low latency, dual 12-megapixel video pass-through at 90 Hz with little warping. However, the XR-3 needs to be tethered and driven by a high-end PC. Recently, Meta released the Meta Quest Pro, a high-end standalone HMD with mobile processing and colour video pass-through (Heaney, n.d.a). The trend showed that the pass-through video feature had become crucial for an HMD to provide an MR experience supporting real-world awareness and blended transitioning between the environments. Such a concept has been shown in past research, such as the G-SIAR system (Piumsomboon et al., 2014b), where hand gestures allowed a smooth transition between the miniaturised workspace viewed in an MR view and an immersive VR view. This led our review to the next topic of how the two environments could be blended together for a seamless and coherent experience.

The presence of physical objects in the surroundings can make it challenging for users to achieve total immersion in IVEs. Simeone et al. (2015) explored the concept of Substitutional Reality (SR) in VR, where every physical object around the user has a virtual counterpart represented within the virtual environment. They conducted two studies: the first to understand the factors influencing the suspension of disbelief and ease of use, and the second to examine the levels of engagement. They shared the SR design implications that SR systems should offer a functional substitution for objects to afford manipulation while minimising the mismatch between the manipulable parts. Furthermore, it is crucial to maintain correct proprioceptive feedback even when it conflicts with reality. In addition, out-of-reach objects allow for more flexible mismatch, and materials can affect users’ expectations of the physical properties of the object, while substitutive objects can engage users as much as replicas. Finally, it was speculated that reality-based IVEs might be more constrained in achieving presence. Nevertheless, the creation of the SR experience still required human designers, which limits scalability. However, it has provided a blueprint for subsequent work to determine how the process could be automated.

Oasis (Sra et al., 2016, 2017) was introduced as a system that automatically generates an IVE from the real environment. Oasis captured an indoor scene in 3D and determined a walkable area within the IVE to enable real walking. The real objects could be recognised and paired with the virtual counterparts using a Project Tango tablet with depth-sensing capability. The system was shown to support multiuser collaboration in VR. Hartmann et al. (2019) also leveraged depth sensing to obtain a 3D reconstruction of the real world and combined it with the IVE. Their system, RealityCheck, supported users in transitioning between, and manipulating objects across, the virtual and real environments. This allowed communication between those immersed and those in the real world and interaction with the physical objects. The system demonstrated application-agnostic compositing techniques (e.g., blending, manipulating texture and geometry) with seven existing VR titles (e.g., Tilt Brush, Skyrim VR). The compositing techniques addressed potential conflicts when rendering the real and virtual environments together. The user evaluation compared RealityCheck against the HTC Vive’s chaperone, and the results showed significant improvements in transitions, physical manipulation, and safety. Similarly, Lindlbauer and Wilson (2018) presented Remixed Reality, a compilation of visualisation and interaction techniques in MR with a live 3D reconstruction provided by multiple external depth cameras. They characterised a taxonomy of manipulations that could be achieved with Remixed Reality, including spatial modification (e.g., reshape, erase), appearance modification (e.g., relight, stylise), viewpoint modification (e.g., portals, change projection), and temporal modification (e.g., speed up, reverse). These concepts provided new ways to experience events from different perspectives or variants within the same physical location. The taxonomy could serve as guidelines for manipulating the environmental stimuli present in the real world to influence the user’s perception and behaviour.

Although visualisation and interaction techniques and guidelines exist to improve seamlessness in transitioning and interacting between realities, research has yet to investigate their potential use as tools to help users disengage from IVEs. Our research approached some overlapping topics but with a unique set of research questions that still needed to be answered, centred on removing the users from IVEs back to the real world in AR mode.

Our goal is to gather ideas for disengagement techniques in XR. We define the term disengagement techniques as follows:

• “Disengagement techniques are methods which utilise visualisation, interaction, or both to disengage the users from their current immersive virtual environment and transition them back to the real world; such methods could provide an experience in addition to the current one that the user is engaged in or they could be subtly integrated to the current experience.”

We conducted a remote elicitation study through Zoom video conferencing with an online questionnaire. This study has been approved by the Human Research Ethics Committee of the University of Canterbury. We adopted three techniques of production, priming, and partner proposed by Morris et al. (2014) to improve our elicitation process, which aims at reducing legacy bias and increasing the novelty of the elicited techniques. To achieve this, we elicited three design ideas instead of one since the first idea that comes to mind is typically the legacy approach. In eliciting three, the participants need to think beyond the common approach they are familiar with.

Moreover, the researcher who acted as the partner could help ensure that the three ideas elicited were distinct from each other by raising questions if they felt that the ideas were too similar. For this study, we decided to elicit the designs from XR experts instead of average users because of their insights and understanding of XR technologies and their awareness of the capabilities and limitations of current and future XR systems. Although past elicitation studies’ guidelines were aimed at eliciting gestures, previous research had successfully applied these principles in other contexts, such as eliciting visual programming paradigms for IoT (Desolda et al., 2017) and voice commands (Ali et al., 2018).

It is important to explain that in this study, we did not ask the participants to experience VR applications directly but instead provided imaginary scenarios, due to three considerations based on the nature of the elicitation study. Firstly, constraining the scenarios to specific experiences might be too restrictive in terms of the possible immersive experiences of the future. For example, prior gesture elicitation studies typically avoided the limitations of the hand-tracking technologies of the time by showing participants only animations in a non-interactive system, with no tracking system in use. This helped mitigate the technological limitations where the system might fail to register or track specific hand postures or movements. We adopted the same paradigm, as we did not want participants to limit their techniques to specific systems or applications. We grounded the proposed experiences in existing applications only to serve as a guide for the participants to build on, while giving them the creative freedom to imagine what future immersive experiences might be like, which allowed us to elicit novel techniques with little to no constraint.

Following the adopted paradigm, the second reason was that we presume that our experts have sufficient prior knowledge and experience in VR, which they do. All participants have experience developing VR applications and have played VR games. They found it relatively straightforward to understand and imagine the concept of each of the immersive experiences without constraining the experience to particular games or applications, allowing them to base their design on their own immersive experiences beyond the provided examples, which yielded a variety of techniques beyond those we could imagine. Lastly, the most sensitive matter to our participants was time. The study already took approximately 2 hours of their time, which was voluntary without any incentive or reward. For the remainder of this section, we will explain our design assumptions in Section 3.1 and the procedure of the study and data collection in Section 3.3.

In this study, we made four design assumptions based on how we envision the future of XR usage in order to accommodate novel ideas that stand the test of time. These design assumptions were grounded on the state of the art XR research. More explanation is provided as follows:

(i) Real-world awareness is paramount: The 1st assumption is derived from the concerns raised in previous research (Wassom, 2014; Madary and Metzinger, 2016; Slater et al., 2020). The issues range from personal to societal, such as negligence of self and dependents, lack of ground truth due to ultra-realism, and long-term and frequent use leading to the prioritisation of the virtual world over the real one. We strongly believe that XR systems should assist the users in maintaining a connection to the real world by providing some level of awareness.

(ii) Always-worn display device will be common: The 2nd assumption is that the users do not need to remove their head- or eye-worn display device, e.g., head-mounted display (HMD), glasses, or contact lenses, as they are disengaged from an IVE. Successful disengagement from an IVE means that the users have been transitioned to the real world but they are expected to remain in the system, e.g., transition to an AR mode where the sense of presence is transferred from the virtual environment to the real one. This type of transition requires display devices that support see-through mode, such as an HMD with cameras to support video see-through (Steptoe, 2013; Piumsomboon et al., 2014a; Heaney, n.d.a), or an optical see-through display that can turn opaque (Bar-Zeev et al., 2015).

(iii) Multi-sensory Support: The 3rd assumption is to allow ideas that leverage multi-sensory feedback beyond the visual and auditory senses, such as haptic and olfactory feedback and any emerging XR technologies.

(iv) Independent of Real World Stimuli: The 4th assumption is that disengagement techniques should not rely on stimuli from the real world, as these are likely beyond the control of the XR systems. Although inter-connectivity can be expected, e.g., a smart home where XR systems can control the real environment, we discouraged such ideas as they introduce extraneous variables. However, to accommodate novel ideas and use cases, we made an exemption that such an idea could be proposed once per scenario.

Via email, we invited researchers from the authors’ professional network who were engaged with the Visualization and Graphics Technical Community (VGTC) and who had worked professionally and published in any area of Extended Reality in the past 4 years. Eleven experts volunteered to participate in this study (3 females, mean age 36.4 years, SD = 5.1). They hold various academic positions comprising two Professors, one Senior Lecturer, two Assistant Professors, two Lecturers, one Research Fellow, two Postdoctoral Researchers, and one Senior Tutor. Their areas of expertise are in XR and HCI, more specifically: Remote Collaboration (× 5), Interaction/Interface Design (× 5), Education (× 2), Human Perception (× 2), Industry Applications (× 2), Display Technology (× 1), Game Design (× 1), Social VR (× 1), and Virtual Agents (× 1). Their average recent time spent using XR devices is 16.4 h per week (SD = 15.2). This might seem like a low number for experts, but as academics, they could not afford to spend more time in XR. However, they are still engaged in research and are aware of cutting-edge experiences. Lastly, they are based in various institutions from around the world: Asia (× 5), Oceania (× 3), Europe (× 2), and North America (× 1).

Once the video call had been established, an online questionnaire was shared with the participant. First, the participant was asked to read the information sheet and provide consent by answering a question and proceeding to the next section. Then the participant had to complete the pre-experimental questionnaire asking for demographic information and their frequency using XR devices. Next, the participant was given an introduction to the concept of disengagement techniques through the information provided online and verbal explanation by the researcher. They were informed that they would be ideating ideas of various techniques and had to design a total of twelve techniques, three different techniques for four scenarios, to be covered in the Section 3.3.1 to follow. They were instructed that duplicated techniques were only allowed across different scenarios.

For measurements, we collected the following information at each step of the study:

1 Participant’s intrinsic motivations and level of engagement for engaging in each of the scenarios (sub-Section 3.3.2).

2 Eliciting three disengagement techniques as verbal and text descriptions (sub-Section 3.3.3).

3 Subjective ratings of four design attributes for each disengagement technique proposed (sub-Section 3.3.4).

4 Positive and Negative Affect Schedule (PANAS) for each disengagement technique proposed (sub-Section 3.3.5).

5 Ranking the three disengagement techniques proposed in terms of preference for each scenario (sub-Section 3.3.6).

We acknowledge that games, generally, can span multiple categories. Our intention was not to create mutually exclusive scenarios but to present scenarios that together cover various intrinsic motivations, that is, the basic psychological needs that motivate users to engage in IVEs. Four members of our research team independently surveyed existing VR games and experiences on Steam, the Oculus Quest Store, and the Microsoft Store and chose 6-8 different games spanning the four dimensions of intrinsic motivation (further explained in sub-Section 3.3.2). We then discussed and narrowed the genres down to the four scenarios.

The four scenarios are Narrative-driven, Social-platform, Adventure Sandbox, and Fast-paced Battle experiences. Note that these scenarios are only a subset of possible applications and serve as a sample of the experiences that future IVEs may offer. They have overlapping attributes, but the goal was to span different intrinsic motivations and provide variety. In the study, we tested the span of our scenarios by asking the participants to rate their perceived intrinsic motivation for the four scenarios and how engaged they would be in each activity. Because it was difficult to predict the participants’ preferences and individual motivations for engaging in each experience, scenarios with overlapping attributes better represent real applications.

We selected an example to represent each scenario based on positive ratings and a large player count: Skyrim VR (Narrative), Rec Room (Social), Minecraft VR (Sandbox), and Population: ONE (Battle). During the study, all four scenarios shared the same structure and questionnaire; they differed only in the scenario description and the VR-180° video example of the corresponding game, which primed the participant to imagine being immersed and engaged in such an experience. To reduce carryover effects, we counterbalanced the scenarios using a Latin square. The descriptions for the four scenarios are as follows:

• Narrative-driven Experience — “Imagine you are engaging in a fantasy world of your liking. You are the hero in this world and the stories and quests are endless as they are being generated to match your preferences by algorithms. The world is also a living entity where your decisions and actions dynamically affect and alter this world.”

• Social-platform Experience — “Imagine you are hanging out with your friends in this virtual sandbox experience. You and your pals can do any fun activities together in this world, from playing casual games like paintball or laser tags, shopping or attending a virtual concert. You can invite your friends to hang out at your virtual home or visit them at their abode at any time.”

• Adventure Sandbox Experience — “Imagine you are exploring a wondrous world filled with new things to discover, harvest, craft, build, trade, etc. You determine your own journey and define how you want to play in this world. The possibilities are endless as new events and adventures are created every day. New tools and merchandise are also designed and developed, retaining an ever-renewing sense of discovery in this world.”

• Fast-paced Battle Experience — “Imagine you are competing in a virtual battle arena to raise your ranking and earn a higher title. The experience is fast-paced, which demands complete attention and focus. However, frequent downtime serve as breaks to reduce fatigue, allowing you to relax and play even longer. This experience requires constant practice to hone your mastery. As a result, you have some followers and supporters who root for you and watch your matches from time to time.”

For clarity, we added the phrase “relax and play even longer” to the Fast-paced Battle Experience description, as we foresaw that application designers would provide frequent breaks to let users play for longer instead of leaving them exhausted and disengaged after a short period. This can be seen in games like Population: ONE, a multiplayer FPS and Battle Royale VR game.
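The Latin-square counterbalancing of the four scenarios can be sketched as follows. The text does not say which Latin square was used or how participants were assigned to its rows, so this minimal sketch assumes a balanced (Williams) square, which exists for an even number of conditions, and a hypothetical round-robin assignment; the function names are illustrative only.

```python
SCENARIOS = ["Narrative", "Social", "Sandbox", "Battle"]


def balanced_latin_square(conditions):
    """Build a balanced (Williams) Latin square for an even number of conditions.

    Each condition appears once per row and once per column, and every
    ordered pair of adjacent conditions appears exactly once.
    """
    n = len(conditions)
    if n % 2 != 0:
        raise ValueError("this construction assumes an even number of conditions")
    # First-row pattern of offsets: 0, 1, n-1, 2, n-2, ...
    offsets = [0]
    for col in range(1, n):
        offsets.append((col + 1) // 2 if col % 2 == 1 else n - col // 2)
    # Every further row shifts the first row by one condition.
    return [[conditions[(row + off) % n] for off in offsets] for row in range(n)]


def scenario_order(participant_index, square):
    """Assumed assignment: participants cycle through the rows of the square."""
    return square[participant_index % len(square)]


square = balanced_latin_square(SCENARIOS)
for participant in range(11):  # eleven experts took part in the study
    print(participant + 1, scenario_order(participant, square))
```

With eleven participants and four rows, the rows cannot be used equally often, so the counterbalancing is only approximate under this assumed assignment.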

Once they had read the description and watched the VR-180° video, we asked the participants to imagine the experience. They were then asked to indicate their motivation to engage in the given scenario under four categories of needs, which we adapted from self-determination theory (Deci and Ryan, 2013), which concerns an individual’s psyche and personal achievement for self-efficacy and self-actualization, and from studies of individual motivation in the virtual world (Zhou et al., 2011; Barreda-Ángeles and Hartmann, 2022). Based on these previous works and the virtual experiences currently on offer, we proposed four categories of intrinsic motivation for engaging in these IVEs: 1) Self-driven (e.g., self-fulfilment, growth), 2) Self-validation (e.g., esteem, mastery, reputation), 3) Social (e.g., social needs, friendship, belongingness), and 4) Escapism (e.g., enjoyment, exploration, living another life). Individual motivations are subjective, and participants could choose multiple motivations. They were then asked to rate, on a 7-point Likert scale, how engaged they would be in such a scenario. The purpose of this rating was to confirm that the participants found the scenario engaging. In doing so, we relied on our experts’ prior experience with VR applications and their ability to understand and imagine how engaged they would be. To promote novel ideas, this approach set aside the limitations of current technologies, potential drawbacks in implementation, and any other constraints.

When the participants were ready to proceed with the ideation process, the researcher asked for their permission and started the video recording of the session for further analysis. During the ideation process, the participant was asked to follow a think-aloud protocol and treat the researcher as a partner whose role was to listen and repeat the participant’s idea for clarity. For each scenario, they had to propose three different techniques. The concept of each technique was explained verbally to the researcher before inputting a text description in the online questionnaire. Additionally, they were asked to come up with three hashtags with keywords or phrases that concisely capture the key idea of their techniques.

During the ideation process, they were asked to consider their ideas against four design attributes guided by previous research in XR (Billinghurst et al., 2001; George et al., 2020) and game design (Schell, 2008; Fullerton, 2019). Once a technique had been proposed, the participants were asked to rate their own technique on a 7-point Likert scale for each design attribute:

• Effectiveness—degree to which the technique is likely to successfully disengage the user

• Unobtrusiveness—how the experience is not disruptive, intrusive or noticeable

• Seamlessness—how subtle is the transition in terms of continuity and smoothness

• Enjoyment—how enjoyable and playful is the experience

For analysis, we calculated the average of these four values, yielding what we call the Goodness rating.
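As a small worked example, the Goodness rating is the arithmetic mean of the four 7-point attribute ratings. The dictionary keys and the function name below are illustrative assumptions, not identifiers from the study materials.

```python
from statistics import mean

ATTRIBUTES = ("effectiveness", "unobtrusiveness", "seamlessness", "enjoyment")


def goodness_rating(ratings):
    """Average the four design-attribute ratings (each on a 1-7 Likert scale)."""
    for name in ATTRIBUTES:
        if not 1 <= ratings[name] <= 7:
            raise ValueError(f"{name} must be between 1 and 7")
    return mean(ratings[name] for name in ATTRIBUTES)


# Hypothetical ratings for one elicited technique.
example = {"effectiveness": 6, "unobtrusiveness": 4, "seamlessness": 5, "enjoyment": 7}
print(goodness_rating(example))  # (6 + 4 + 5 + 7) / 4 = 5.5
```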

Afterwards, they rated their feelings as they imagined being disengaged by their own technique on the Positive and Negative Affect Schedule (PANAS) (Watson et al., 1988). PANAS is a questionnaire comprising two 10-item scales measuring positive and negative affect, where each item is rated on a 5-point scale from 1 (not at all) to 5 (very much).
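The PANAS scoring can be illustrated with a short sketch. It assumes the conventional scoring, in which the ten items of each scale are summed so that each score ranges from 10 to 50; the text does not spell out the scoring used. The third value returned is the positive score minus the negative score, which the later analysis refers to as the Differences of Affect.

```python
def panas_scores(positive_items, negative_items):
    """Return (positive affect, negative affect, difference) for one response.

    Each argument is a list of ten item ratings on the 1-5 scale.
    Summing the items is the conventional PANAS scoring and is assumed here.
    """
    for items in (positive_items, negative_items):
        if len(items) != 10:
            raise ValueError("each PANAS scale has exactly 10 items")
        if any(not 1 <= rating <= 5 for rating in items):
            raise ValueError("each item is rated from 1 to 5")
    positive = sum(positive_items)
    negative = sum(negative_items)
    return positive, negative, positive - negative


# Hypothetical response: mostly positive feelings, few negative ones.
print(panas_scores([4] * 10, [2] * 10))  # (40, 20, 20)
```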

Once all three techniques were elicited, the participant had to rank them according to their preference. Then, the process was repeated until all four scenarios were completed. The session lasted approximately 2 hours.

We collected a total of 11 participants × 4 scenarios × 3 techniques = 132 disengagement techniques along with the responses to the questionnaire, including ratings of four design attributes and PANAS per technique, and intrinsic motivation, engagement rating, and preference ranking for each scenario. For the analyses, we will present our results in the order of importance. The techniques and classification results can be found in the Supplementary Table.

For the text descriptions of the disengagement techniques, we used a keyword extraction tool provided by Cortical.io, which employs the semantic folding technique to generate semantic fingerprints for a given input text (Webber, 2015). In short, words with similar meanings were clustered, and the largest distinct clusters were ranked in a list. More specifically, the algorithm first segmented the text into snippets and clustered snippets with similar meanings together, generating a 2D semantic map in which each snippet was represented by a 2D coordinate. Next, each word that appeared in the text was given its own empty 2D map. Each snippet containing this word, or words with similar meanings, was then marked on this map like a “mask” bitmap, called a semantic fingerprint. The semantic fingerprints of all words formed the dictionary. The semantic fingerprints then helped cluster words on the 2D semantic map based on the specified clustering constraint. Therefore, the words shown in Figure 1 should be meaningful words that recur across the text and form their own clusters.

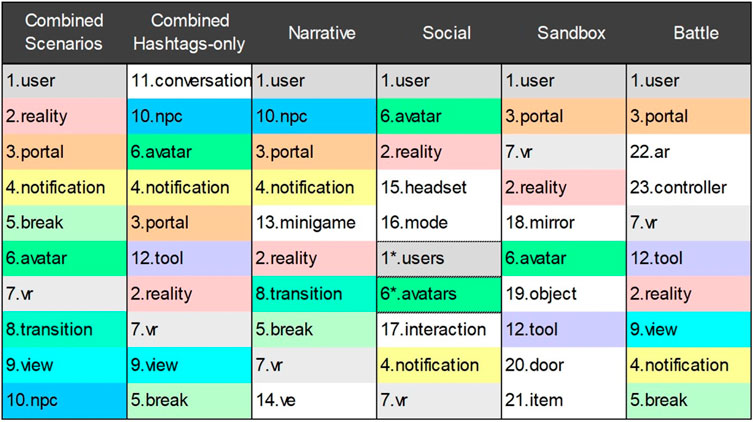

FIGURE 1. Keywords extracted by the semantic folding algorithm. The 1st column was extracted from the data of all scenarios combined. The 2nd column considered only the explicit hashtag keywords. The 3rd to 6th columns show keywords extracted from the combined data for each scenario. Each unique keyword has been numbered and colour-coded for visibility.

Figure 1 shows a list of keywords extracted for six groups of text descriptions provided by the participants. The columns, from left to right, show the keywords derived for 1) technique descriptions combined from all scenarios (132 techniques), 2) hashtags only (132 techniques), and 3) each of the four scenarios (33 techniques each). Duplicate keywords across columns share the same number and colour code. The significance of the keywords decreases down the rows. These keywords guided our classification approach in the next subsection.

To better understand the design space of interactive techniques for disengagement, we classified the techniques guided by the extracted keywords from the previous step. Our procedure was to first examine each technique’s full description and hashtags. We then summarised its key concept in a short phrase using terms from the extracted keywords. Each technique could belong to at most two categories in each round. We repeated the procedure and generalised the grouping further using common characteristics for dimension reduction. As a result, we performed three rounds of classification, starting with 132 techniques and categorising them into 23, 13, and six categories in the first, second, and third rounds, respectively. The result is illustrated in Figure 2. In the end, we had six categories: Activities (30%), Cues (24%), Breaks (21%), Degradations (11%), Notifications (9%), and Virtual Agents or VAs (5%). The percentage following each category is the rounded proportion of all the elicited techniques. For the remainder of this subsection, we provide further descriptions and examples of the techniques in each category. In section 5, we provide examples of how techniques from different categories can be combined to potentially improve the effectiveness of disengagement.

Activities techniques are interactive experiences that demand that the user take action. Such techniques engage users through mini-games (e.g., hunting creatures, tending a virtual garden—Figure 3-1D), tasks with or without rewards (e.g., crafting, loot boxes—Figure 3-3B, getting power-ups—Figure 3-1B, retrieving items—Figure 3-3D), menu access (e.g., accessing inventories or skill trees), and socialization (e.g., chatting, conversing). These activities can take place in the real world (e.g., mundane real world activities mapped to the IVE—Figure 3-4A), Augmented Reality (e.g., chasing enemies from the IVE into the real world—Figure 3-1D, an AR pet coming into view in the IVE—Figure 3-2A), or Virtual Reality (e.g., opening a virtual door to the real world).

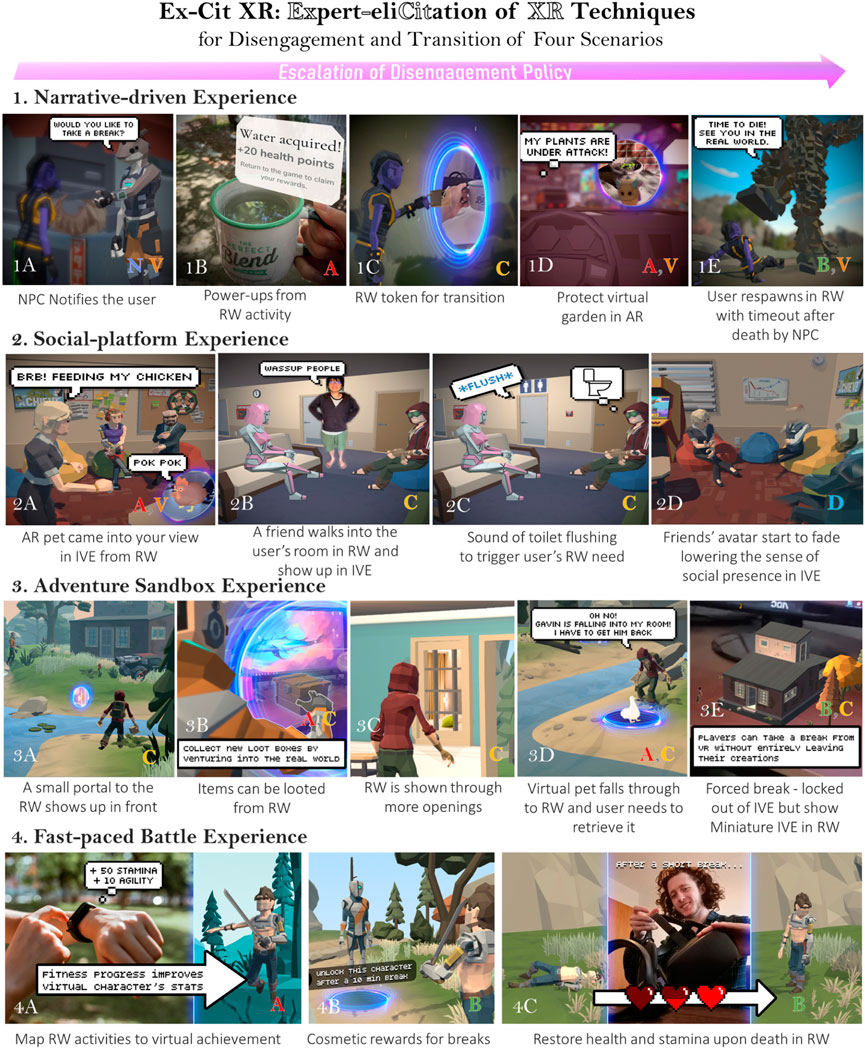

FIGURE 3. Exemplary use cases of Ex-Cit XR visualisation and interaction techniques for disengagement and transition in four unique IVE scenarios. Scenarios: 1-Narrative-driven experience: (1A) NPC notifies the user, (1B) power-ups from RW activity, (1C) RW token for transition, (1D) protect virtual garden in AR, and (1E) user respawns in RW with timeout after death by NPC; 2-Social-platform experience: (2A) AR pet comes into user’s view in IVE from RW, (2B) a friend walks into the user’s room in RW and shows up in IVE, (2C) sound of toilet flushing to trigger user’s RW need, (2D) friends’ avatars start to fade, lowering the sense of social presence in IVE; 3-Adventure sandbox experience: (3A) a small portal to the RW shows up in front, (3B) items can be looted from RW, (3C) RW is shown through more openings, (3D) virtual pet falls through to RW and user needs to retrieve it, (3E) forced break: locked out of IVE but shown miniature IVE in RW; 4-Fast-paced battle experience: (4A) map RW activities to virtual achievement, (4B) cosmetic rewards for breaks, (4C) restore health and stamina upon death in RW.

Breaks techniques offer the user a break, with or without conditions, which can be voluntary or involuntary. There are four distinct types: breaks with a timer (e.g., a coffee break after which the session resumes, a penalty for a violation such as friendly fire, time to unlock new quests/items—Figure 3-4B), breaks to replenish (e.g., restore energy, health—Figure 3-4C, remove deficiency), breaks with synchronized time (IVE and real world times are synchronised, e.g., night time to encourage users to go to bed, time taken to travel between places), and involuntary breaks (forcing users to break, e.g., in-game death and re-spawn in the real world—Figure 3-1E, locked in AR mode and shown a miniature IVE—Figure 3-3E, forced shut down).

Cues techniques demand the user’s attention. They afford interaction but do not require the user to engage immediately. The purpose of Cues is to establish and maintain connections between the IVE and the real world. They serve as reminders and as bridges that the user can effortlessly cross between worlds. The types of Cues are portals (George et al., 2020) (any openings in space, e.g., a small portal—Figure 3-3A, multiple windows—Figure 3-3C, holes that drop users or items into the real world—Figure 3-3D), real items/triggers [e.g., real world objects shown in the IVE as tokens that transport users back to the real world once they interact—Figure 3-1C, real persons or pets that come into proximity for the user to converse or interact with—Figure 3-2B (von Willich et al., 2019; Kudo et al., 2021)], virtual items/triggers (virtual objects offering a view of or access to the real world, e.g., virtual goggles, hand mirrors, or triggers that remind users of their real world needs, e.g., food, toilet—Figure 3-2C), and AR transitions (i.e., visual and audio effects that blend between the IVE and the real world, e.g., fade in/out, highlights).

Degradations techniques worsen the user experience in IVEs by reducing the sense of presence or the degree of immersion (Slater, 2009; Jung et al., 2018). Examples include limiting interaction (e.g., limit activities, depopulate IVE), lowering the quality of the IVE and its sensory feedback (e.g., lower graphics settings, model quality, visual effects—Figure 3-2D, ambient sounds), lowering presence by manipulating the viewpoint (e.g., disconnect the camera from self-avatar, narrowing field-of-view), and changing the difficulty (e.g., making the challenges extremely easy or difficult).

Notifications techniques aim to inform users without being too intrusive in their current experience in the IVE. Users can be notified in several ways, for example, UI notification (e.g., text messages, alert icons), voice notification (e.g., system voice messaging), and notification by virtual agents (e.g., an NPC delivers the message—Figure 3-1A).

These techniques utilise Virtual Agents (VAs) and need to be combined with other techniques. For example, a creature luring the user out to the real world combines Activities and VAs (Figure 3-1D), as does an AR pet momentarily coming into the IVE (Figure 3-2A). Likewise, an NPC delivering a message for the user to take a break combines Notifications and VAs (Figure 3-1A). More coercively, an enemy NPC can attack the user so that, upon death, the user is respawned in the real world, combining Breaks and VAs (Figure 3-1E).

We found that the intrinsic motivations were well spread, covering different types of motivation; only Narrative and Sandbox had distributions close to each other. Figure 4 illustrates the distribution of participants’ motivations for engaging in the four given scenarios. It is apparent that engagement in the Social experience is motivated by Social and Escapism, while the Battle experience evokes Self-validation and Social. The Narrative and Sandbox experiences are primarily influenced by Escapism and Self-driven. This result indicates that the chosen scenarios elicit a broad range of intrinsic motivations and are suitable for representing various experiences that IVEs offer.

On the 7-point Likert scale for the level of engagement, the results showed a high level of engagement for every scenario: Narrative (M = 6.6, SD = 0.6), Social (M = 6.3, SD = 0.8), Sandbox (M = 6.1, SD = 1.2), and Battle (M = 6.6, SD = 0.6).

We examined the effects of design attribute ratings on the preference of the techniques. For better statistical power, we combined data across all four scenarios for this analysis. Kruskal-Wallis tests were performed followed by Mann-Whitney tests with Bonferroni P-value adjustment for post-hoc pairwise comparisons (the p-value is corrected while the alpha level is kept at 0.05). The four design attributes were Effectiveness, Unobtrusiveness, Seamlessness, and Enjoyment. We introduced the overall ratings by calculating the average value of the four design attributes, which yielded the Goodness rating. We found significant effects for Enjoyment (χ2(2) = 15.2, p

The same test was applied to the positive and negative affect scores, but no significant difference was found. However, we found that the difference between the two affect scores, positive and negative affect, impacted the preference. We speculate that this measurement indicated how “enjoyable or engaging” the technique might be for the users, but this needs to be validated. Again, we used Kruskal-Wallis tests followed by Mann-Whitney tests with Bonferroni P-value adjustment. We found a significant difference for the differences of affect and preference of the technique (χ2(2) = 13.4, p = 0.001). The post-hoc pairwise comparisons yielded differences between 1st (M = 15.3, SD = 7.6) and 2nd (M = 9.2, SD = 9.0) ranked techniques (U = 1318.5, p
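The omnibus test used in these analyses can be sketched in plain Python. This is an illustrative implementation of the standard Kruskal-Wallis H statistic with tie correction, not the authors' analysis script, and the example data are made up. With three preference ranks the statistic has two degrees of freedom, for which the chi-square tail probability has the closed form exp(-H/2); the Bonferroni step multiplies each pairwise p-value by the number of comparisons and caps it at 1.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with the usual correction for ties."""
    pooled = [value for group in groups for value in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in Counter(pooled).values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


def chi2_p_value_df2(h):
    """Upper-tail chi-square probability, valid for 2 degrees of freedom only."""
    return math.exp(-h / 2)


def bonferroni(p_value, n_comparisons):
    """Bonferroni-adjusted p-value, compared against the unchanged alpha of 0.05."""
    return min(1.0, p_value * n_comparisons)


# Made-up scores grouped by preference rank (1st, 2nd, 3rd).
h = kruskal_wallis_h([[7, 8, 9], [4, 5, 6], [1, 2, 3]])
print(round(h, 2), round(chi2_p_value_df2(h), 4))
```

As a consistency check, `chi2_p_value_df2(13.4)` is about 0.0012, which agrees with the reported χ2(2) = 13.4, p = 0.001.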

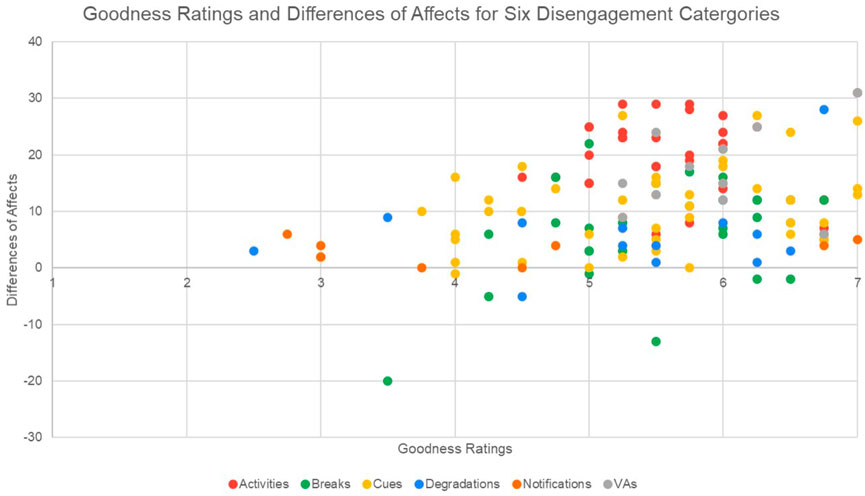

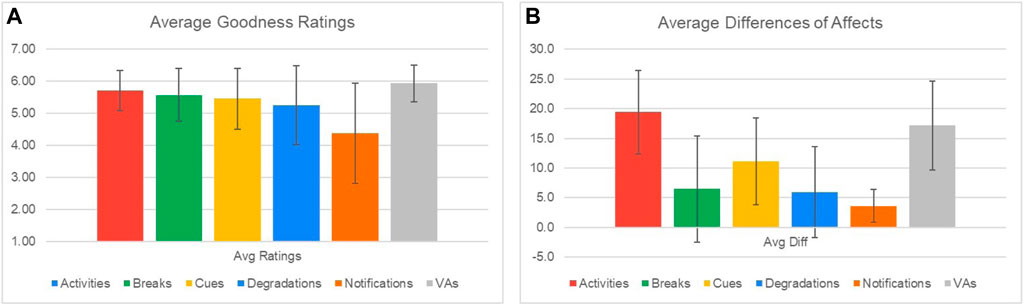

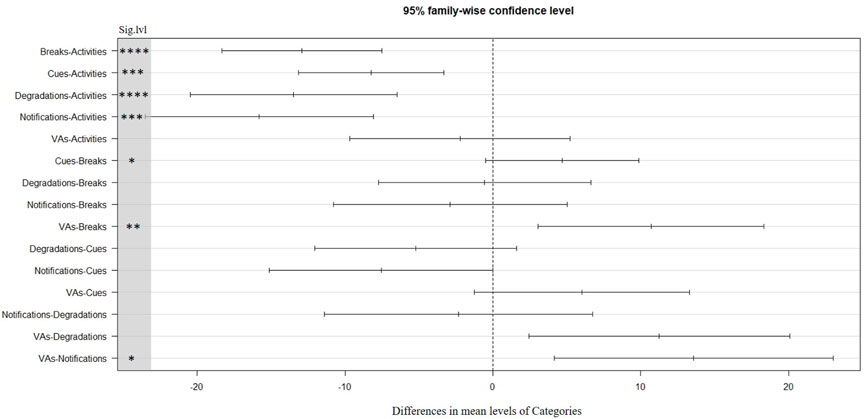

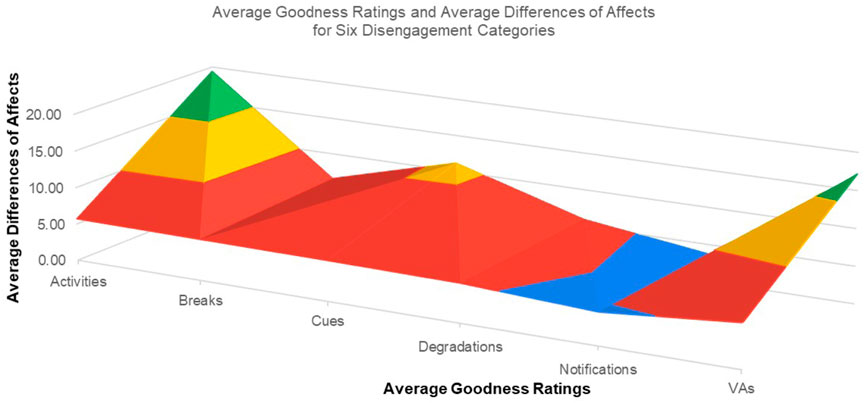

Based on the findings from sub-Sections 3.3.2 and 3.3.3, we learnt that Goodness Ratings and the differences between positive and negative affect scores, Differences of Affect for short, influenced user preference. We plotted the data points, as shown in Figure 5. The colour of each data point represents its Disengagement Category, and the Goodness Ratings and Differences of Affect values are plotted on the horizontal and vertical axes, respectively. In Figure 5, we could see multiple clusters emerge. Following this observation, we investigated the impacts of Disengagement Categories on the Average Goodness Ratings and the Average Differences of Affect. Figure 6 shows the Average Goodness Ratings and the Average Differences of Affect for the six Disengagement Categories. We performed a two-way ANOVA to analyse the effect of Disengagement Categories and Average Goodness Ratings on Average Differences of Affect. The result showed no significant interaction between the effects of Disengagement Categories and Average Goodness Ratings, F(5, 129) = 1.58, p = 0.17. However, simple main effects analysis showed that both Disengagement Categories and Average Goodness Ratings did have a statistically significant effect on Average Differences of Affect (p

FIGURE 5. The plot shows the goodness ratings and differences of affect for each disengagement technique for six classified categories.

FIGURE 6. Plot (A) shows the average goodness ratings for six disengagement categories. Plot (B) shows the average differences of affect for six disengagement categories. Error bars represent a standard deviation from a 95% confidence interval.

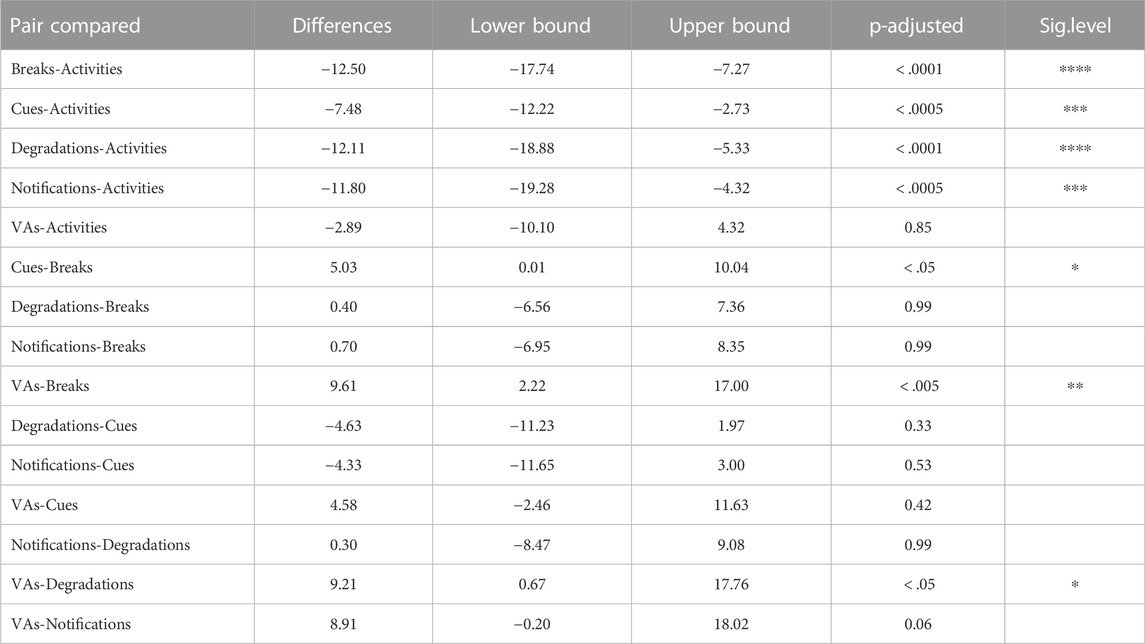

TABLE 1. The results of Tukey’s Honestly Significant Difference (Tukey’s HSD) post-hoc test for pairwise comparisons for the effect of Disengagement Categories and Average Goodness Ratings on Average Differences of Affect.

FIGURE 7. The plot shows the differences in mean levels of the difference of affect between pairs of disengagement categories.

This section discusses our findings from the study. We present four themes that emerged from our analyses, including the impacts of the proposed design attributes, the impacts of different scenarios of IVEs, the design pattern around disengagement strategies and policies, and the escalation design pattern.

The four design attributes proposed are Effectiveness, Unobtrusiveness, Seamlessness, and Enjoyment. From our analysis in sub-Section 4.3.2, we found that Enjoyment was the only attribute that significantly affected user preference. From the examination of the differences in the positive and negative affect scores (Differences of Affect) in sub-Section 4.3.3, we speculated that the Differences of Affect was a good indicator of how “enjoyable or engaging” the experience of such a disengagement technique would be. From sub-Section 4.3.4, we found that the Disengagement Categories and Goodness Ratings of techniques had a significant effect on the Differences of Affect, and the post-hoc pairwise comparisons showed that Activities and VAs generally yielded greater Differences of Affect than the other categories. Speculatively, this makes them more enjoyable, a quality our participants found to be paramount for a disengagement experience. Figure 8 plots the average goodness ratings against the average differences of affect scores for each disengagement category. This led us to postulate that experiences that evoke heightened emotions might have a higher chance of disengaging users, and that experiences evoking more positive than negative affect would be preferable. We also hypothesise that unobtrusiveness and seamlessness might not be as crucial to disengagement as we first thought, although we believe that they could improve or worsen the overall disengagement experience.

FIGURE 8. Average goodness ratings and average differences of positive and negative affect scores for the six classified categories.

Scenarios were essential factors in the variety of the disengagement techniques elicited. Figure 1 showed that a broad range of important keywords arose when describing techniques for different scenarios. These findings show that different scenarios demand suitable disengagement techniques to ensure success. There is unlikely to be a one-size-fits-all approach to disengagement, as different scenarios afford a diverse set of intrinsic motivations, as we found in sub-Section 4.3.2. For example, the Narrative scenario may benefit from NPC interaction as it suits the theme, while this may not be suitable for the Fast-paced Battle scenario. For Battle, it makes sense to use a Break disengagement technique to let the user rest and recover. In Sandbox, item rewards for completing an activity might be enticing, but these might not provide a strong incentive in the Social scenario, depending on the current activity and context, as the user’s motivation might rather be to socialise. For the Social scenario, it might be best to tone down the bustling and lively environment, e.g., reducing the number of avatars shown, dimming the light, and fading out the ambient noise/music to make users perceive that the party is winding down and it is time to leave. Therefore, it is crucial to apply suitable disengagement techniques for the right experience and time.

Throughout the study, we observed that the participants used several strategies to disengage the user, and these strategies followed common patterns. For example, some techniques were meant to entice users with rewards, while others tried to distract the user from the existing experience or provide greater engagement than the current one. Some went further to coerce the user into making decisions, and some entirely robbed the user of their autonomy. Such manipulative tactics for altering behaviour are well known in behavioural psychology as operant conditioning (Thorndike, 1898; Skinner, 2019), an associative learning process that uses reinforcements and punishments to strengthen or weaken behaviours (Miltenberger, 2015). Positive reinforcement strategies add rewarding stimuli, for example, providing regular rewards (e.g., accumulating points for unlocking rewards) or intermittent rewards (e.g., lootboxes). Negative reinforcement strategies remove aversive stimuli (e.g., removing RW portals that hinder immersion in the current experience, restoring health and stamina). Positive punishment strategies add aversive stimuli to the experience (e.g., RW portals showing up everywhere in the IVE, pests attacking the user’s virtual garden). Finally, negative punishment strategies remove rewarding stimuli (e.g., lowering the quality and quantity of rewards, increasing the interval between rewards, degrading experiences). Furthermore, we also observed strategies that utilised game design elements (Schell, 2008), which coincided with operant conditioning. Their main aim is to win the users’ engagement away from the current experience in the IVE, either by making activities in the real world more fun or by lowering the enjoyment of the experience in the IVE. For example, mini-games were commonly proposed. These can take the form of a continuing experience, where the current IVE experience extends into the real world (e.g., an NPC leads the user to the RW where the activity awaits), or an independent experience that runs in parallel to the current IVE (e.g., protecting your AR virtual garden from pests). Whatever the nature of these techniques, the takeaway is to consider how XR platforms can support these disengagement strategies and policies, which may require a paradigm shift in how XR operating systems are designed and implemented to support such cross-reality experiences.

Ultimately, the users (or their guardians) should have the autonomy to choose their preferred approach to disengagement. Suitable disengagement techniques will depend on the strategies devised under the chosen disengagement policies. From the analyses in sub-Section 4.3.4, we learned that different disengagement categories offer various levels of Goodness and Affect. For example, Notification via a text message might be neither interesting nor exciting, but if the user does not want any interruption to their current experience in the IVE, this is probably an ideal approach. However, combining Notification with VAs by using an NPC to notify the user would likely elevate the experience (see Figure 3-1A). Moreover, we found that techniques with more positive Differences of Affect are generally preferred over neutral or negative ones. Therefore, it is logical to employ these techniques earlier and leave negative experiences as a last resort. In practice, the strategy might be to first remind the users, by notifying them in various ways, that it is time to disengage without intruding on their current experience. If this does not work, the strategy shifts toward persuasion through positive or negative reinforcement. If this still does not work, the strategy might resort to coercion by imposing positive or negative punishment.
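The remind, persuade, coerce escalation described above can be sketched as a small state machine. The stage names, the `max_stage` cap (standing in for a user- or guardian-chosen policy), and the function names are hypothetical illustrations rather than part of any implemented system.

```python
from enum import IntEnum


class Stage(IntEnum):
    REMIND = 0    # notify without intruding on the current experience
    PERSUADE = 1  # positive or negative reinforcement
    COERCE = 2    # positive or negative punishment


def next_stage(current, user_disengaged, max_stage=Stage.COERCE):
    """Return the next stage, or None once the user has disengaged.

    max_stage is an assumed policy setting that caps how far the
    system is allowed to escalate.
    """
    if user_disengaged:
        return None
    return Stage(min(current + 1, max_stage))


def run_escalation(responses, max_stage=Stage.COERCE):
    """Replay attempts; each response says whether the user disengaged."""
    stage = Stage.REMIND
    history = [stage]
    for disengaged in responses:
        stage = next_stage(stage, disengaged, max_stage)
        if stage is None:
            break
        history.append(stage)
    return history


# The user ignores the reminder, then leaves after a persuasion attempt.
print([s.name for s in run_escalation([False, True])])  # ['REMIND', 'PERSUADE']
```

Capping `max_stage` at `Stage.PERSUADE` would model a policy in which the user never consents to coercive techniques.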

Following the discussion in the previous section, we created exemplary use cases of the Ex-Cit XR visualisation and interaction techniques as comic strips to illustrate possible escalating disengagement strategies. Figure 3 shows a sequence of escalating events for each scenario in IVEs. The four IVE scenarios are shown one per row. The sequence of events progresses from left to right as the system attempts to disengage the user from the IVE with escalating strategies. We provide further descriptions below.

Narrative-driven Experience—1A) NPC reminds the user to disengage through casual banter, 1B) Gain equivalent health benefits in the virtual world for fulfilling real world needs, 1C) Real world objects show up and can be retrieved to be used in the virtual world, 1D) Augmented Reality caretaker mini-game where the user has to periodically enter the real world to fend off pests from their farm, and 1E) An overpowered enemy knocks the user out and forces them to spend time in the real world.

Social-platform Experience—2A) Virtual augmented pet that distracts its owner for food when it is hungry, visible to everyone, 2B) People surrounding the user’s real world space are projected in VR, reminding the user about the real world, 2C) Subtle sound cues that increase the desire to fulfil daily needs, and 2D) People’s avatar features are removed for prolonged socialising, reducing their co-presence until a break is taken.

Adventure Sandbox Experience—3A) Portals to the real world intermittently open up, encouraging disengagement, 3B) Equipment and gear can be looted in AR mode to be used in the virtual world, 3C) Subtle visual cues of the real world, such as the real world being projected onto a window’s reflection, 3D) Virtual augmented pet that gets lost in the virtual world and has to be carried back into the real world, and 3E) Virtual world is projected onto the real world, reducing reluctance to leave the virtual world.

Fast-paced Battle Experience—4A) Fitness progress improving virtual character’s stats, incentivising disengagement via exercise, 4B) Cosmetic rewards for regular disengagement breaks, and 4C) Restore health and stamina upon death by exiting to the real world.

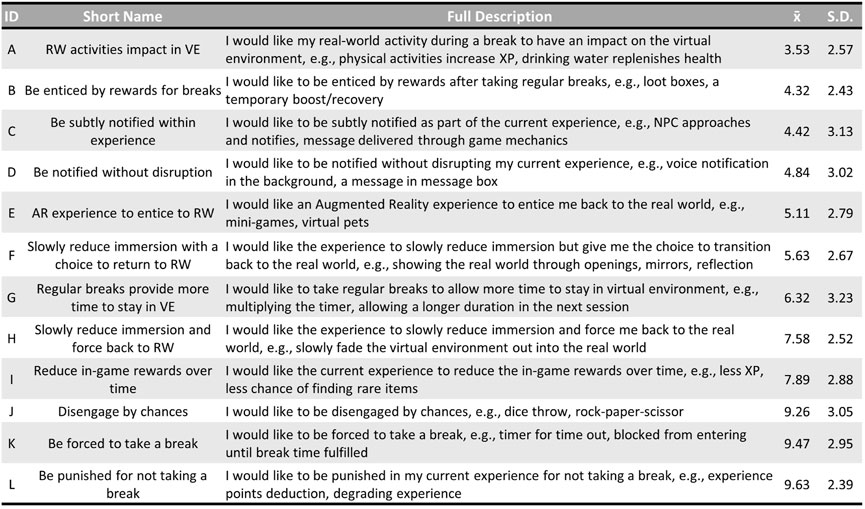

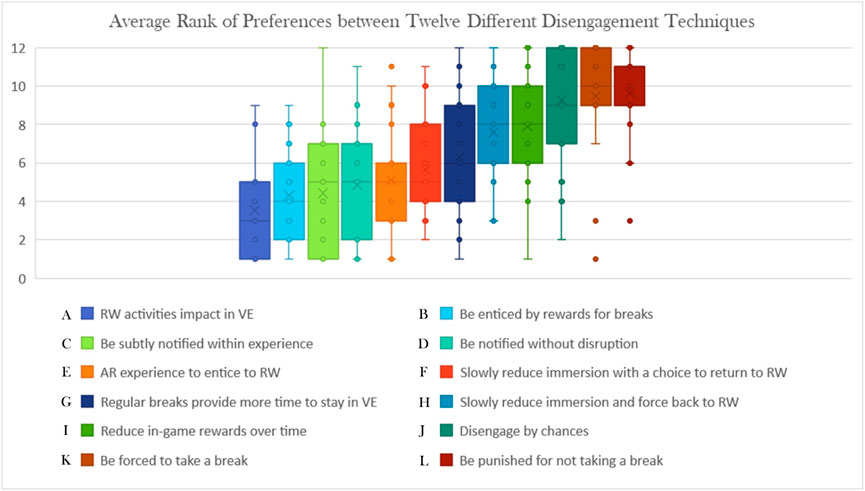

To preliminarily validate the proposed design patterns and learn more about user preferences for the disengagement techniques of Ex-Cit XR, we conducted an online survey with potential target users. We implemented our questionnaire using Qualtrics, an online survey tool. The questionnaire contained demographic questions, six ranking questions (lower is better), and one rating question (higher is better). We hypothesised that user preferences for disengagement techniques differ, which means that the ranking frequencies of the disengagement techniques would not be uniformly distributed. The resulting average ranking would therefore differ between disengagement techniques, and the most and least preferred techniques would emerge. To test our hypothesis, we asked the participants to rank the techniques for each of the four scenarios shown in Figure 3. Furthermore, we were interested in the general use of disengagement techniques, regardless of scenario, for game or non-game applications. Therefore, from the existing techniques, we derived more generic descriptions of the unique techniques, which resulted in 12 general (non-scenario-specific) disengagement techniques for the participants to rank according to their preference. The descriptions of all twelve techniques are listed in Figure 10.

In the online survey, we explained the concept of disengagement techniques and provided a description of this research. The estimated time for completing the survey was around 15 min. We advertised our survey on public forums, including two Reddit groups: Virtual Reality1 with 428 K members, and Extended Reality2 with 480 members; and two Facebook groups: Virtual Reality3 with 57.3 K members, and Mixed Reality Research4 with 14.5 K members. The survey comprised seven questions. Questions 1–4 (Q1–Q4) asked the participants to rank the techniques in the order that they should appear in the experience, “Over a long play time, users become increasingly immersed, and multiple disengagement techniques may be used to help the user disengage. Please rank the techniques in the order of how you think they should appear as your playtime and immersion increase (a lower rank number means this technique should start appearing while less immersed, and a higher rank number means this technique should start appearing while more immersed).” The same task was repeated for each of the four scenarios of Narrative, Social, Adventure, and Battle. The disengagement techniques that appeared for ranking were the same ones shown in Figure 3, but they were listed in a randomised order. Question 5 (Q5) asked the participants to rate their engagement level for the four scenarios on a 7-point Likert Scale, “Do you agree with the following statement? I could see myself highly engaged in the _________experience (1–strongly disagree to 7–strongly agree).” Question 6 (Q6) asked the participants to rank the four design attributes in terms of their impact on their preference for disengagement techniques, “Please rank the following attributes by their impacts on your consideration of preferable disengagement techniques (rank 1 is highest impact and rank 4 is lowest).” Finally, Question 7 (Q7) asked the participants to rank the 12 general (non-scenario-specific) disengagement techniques derived from the overall set of Ex-Cit XR, “Without any specific scenario, what would be the preferred order of strategies to disengage you from the immersive experience? Please rank the strategies in the order that you would like them to appear, where rank 1 will be experienced first to rank 12 will be experienced last.” Again, the order in which each technique appeared on the list was randomised. At the end of the questionnaire, the participants could leave their feedback.

At the end of the survey period, we had received 41 submissions. However, we had to discard 22 of them due to incompleteness, yielding 19 usable responses. Participation was entirely voluntary, without any compensation or reward. The nineteen participants comprised five females and 14 males with an average age of 30.1 years (SD = 7.7). They had used immersive technologies for an average of 4.1 years (SD = 2.6), ranging from a minimum of 1 to a maximum of 11 years. Regarding the number of hours spent with immersive technologies, the average was 11.6 h (SD = 6.2), with a minimum of 1 and a maximum of 25.

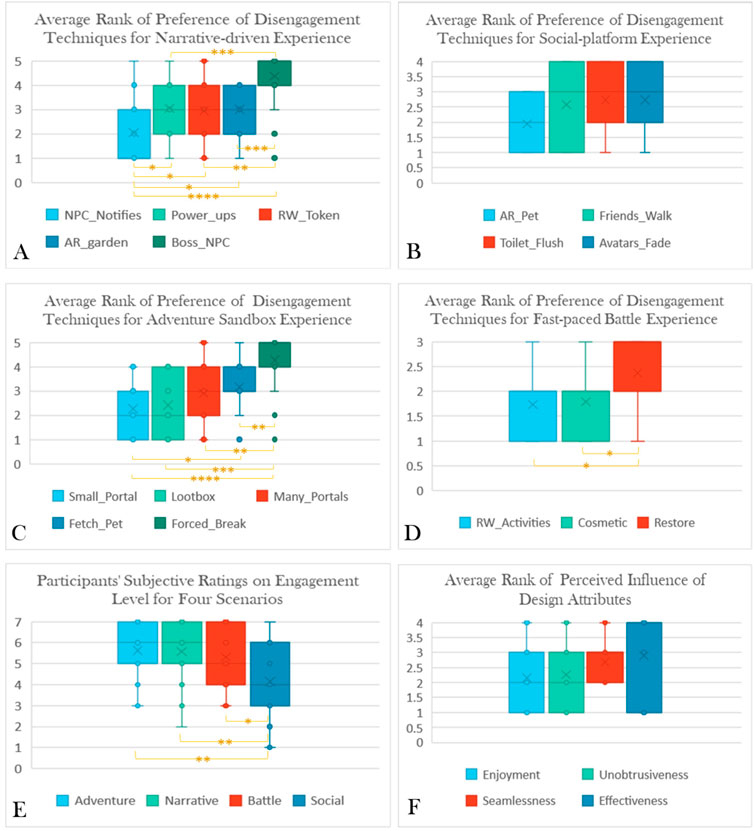

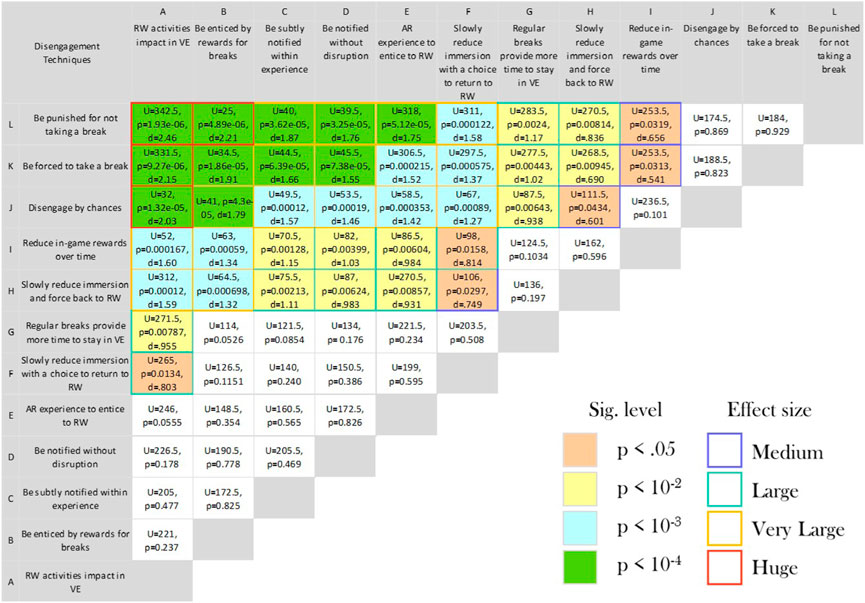

For our non-parametric data, we performed Kruskal-Wallis tests and, for post-hoc pairwise comparisons, applied Mann-Whitney tests with Bonferroni p-value adjustment (the p-value is corrected while the alpha level is kept at 0.05). We found significant results as shown below. We also calculated the effect size using Cohen’s d. Q1—Narrative (see Figure 9A): Kruskal-Wallis test yielded χ²(4) = 27.8, p

FIGURE 9. Plots for the results of Q1-Q6 with ranking [lower is better—(A–D,F)] and rating data [higher is better—(E)]: (A) Q1—Average rank of preference of disengagement techniques for narrative-driven experience, (B) Q2—Average rank for social-platform experience, (C) Q3—Average rank for adventure sandbox experience, (D) Q4—Average rank for fast-paced battle experience, (E) Q5—subjective ratings for engagement level for four scenarios, and (F) Q6—Average rank for design attributes (*p

Q3—Adventure (see Figure 9C): χ²(4) = 23.6, p

Q7—General Techniques: χ²(11) = 83.8, p

FIGURE 10. Information on the twelve general disengagement techniques, including their IDs, short names, long descriptions, average ranks, and standard deviations of rank.

FIGURE 11. A plot showing the average rank of preference (lower is better) for the twelve general disengagement techniques.

FIGURE 12. The results of post-hoc pairwise comparisons between the twelve general disengagement techniques using Mann-Whitney tests with Bonferroni p-value adjustment. The highlighted pairs indicate a significant difference.

Kruskal-Wallis tests did not yield any significant differences for Q2—Social (see Figure 9B), and Q6—Design Attributes (see Figure 9F).
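The analysis steps reported above (a Kruskal-Wallis omnibus test followed by Bonferroni-adjusted pairwise comparisons) can be sketched in plain Python as follows. This is an illustrative sketch rather than the authors' analysis script: the function names and the toy input are assumptions, and only the H statistic and its degrees of freedom are computed. Obtaining a p-value additionally requires the chi-square distribution, for which a statistics package such as SciPy (`scipy.stats.kruskal`, `scipy.stats.mannwhitneyu`) would normally be used.

```python
from itertools import combinations


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Return (tie-corrected H statistic, degrees of freedom = k - 1)."""
    pooled = [v for group in groups for v in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    tie_counts = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    correction = 1 - sum(c ** 3 - c for c in tie_counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction, len(groups) - 1


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Number of pairwise comparisons among twelve techniques.
n_pairs = len(list(combinations(range(12), 2)))
print(n_pairs)
```

The degrees of freedom equal the number of compared techniques minus one, which matches the reported χ²(4) for scenarios with five techniques and χ²(11) for the twelve general techniques. With twelve techniques there are 66 pairwise comparisons, so each raw p-value is multiplied by 66 before being compared with the alpha level of 0.05.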

At the end of the questionnaire, the participants could leave feedback on the overall impression of the concepts of disengagement techniques and strategies. Those who left their feedback were all in agreement that they would prefer to be subtly or diegetically notified or enticed by rewards over being forced or punished as part of the experience, for example:

Participant 2 (P2) explained that autonomy deprivation would have undesirable effects on the user’s perception of the experience, “I hate being forced or punished for enjoying my relaxation/fun time and anything that forces me to take breaks I rarely continue playing/engaging in.”

P4 also shared a similar view and disliked being coerced, “I prefer disengagement techniques that do not change my experience forcefully. Ex: if the immersive world locks me out for a specific time without any background story, it could affect my impression of the application.”

P10 thought that punishment should be reserved for critical situations and that rewards might be the best option for disengagement, “Punishing the player should only be used in extreme cases, awarding him is probably the best way.”

P3 preferred being reminded in a friendly and diegetic manner, “I liked the notion of this research, i.e., disengaging players from the metaverse to return to the real world. Overall, I would prefer to have in-game mechanics such as NPC or pets to come to me to remind me that it is time to go back or decrease the motivating factors such as rewards. On the other hand, I do not prefer the idea of losing the agency and the feeling of being quit forcibly or getting punished.”

P1 mentioned that subtle cues that kept some form of connection to the real world would be preferable and less obtrusive to the current experience, whereas any disengagement technique that negatively affects the experience would be undesirable, “As you go through time, some techniques can cause discomfort, but if you can leave some traces of the real world as time goes by, it will help to force the player out of the game and the experience will not be so annoying. Also, if the techniques affect the game, like experience and resources, they will only make the experience annoying because you already spent all that time trying to get those resources to be taken away so maybe a warning would be better than having them taken away or going out and somehow getting them back.”

The results from our preliminary validation study supported our hypothesis that there would be differences in user preferences among the twelve general disengagement techniques (see Figures 11, 12). In addition, they partially supported the hypothesis for the four scenarios: we found significant results for the Narrative-driven, Adventure Sandbox, and Fast-paced Battle experiences, but not for the Social-platform experience (see Figures 9A–D). We also found significant differences in engagement level between scenarios, where Narrative-driven, Adventure Sandbox, and Fast-paced Battle were rated significantly higher than Social-platform (see Figure 9E). This may explain the non-significant ranking result for Social-platform, as the participants would not be as engaged in such an experience. We share several takeaways from this study in the remainder of this subsection.

As can be observed from Figures 9A, C, D and Figure 11, two extremes emerged, with respect and reward on one end and punishment on the other. This was expected, as discussed in Section 5.3 on operant conditioning (Thorndike, 1898; Skinner, 2019). The rationale behind this emergence was clear from the participants’ feedback. The first explanation was that people do not like being forced, or worse, punished (P1-P4, and P10). The second explanation followed from the first: people want autonomy, i.e., the ability to be self-governed, which means the system should treat them with respect and allow them to make their own decision to leave (P1, P3, P4, and P10). The last explanation was that people like to be rewarded for compliance (P3 and P10). Between the two extremes, there was a grey area where the votes were not as unanimous. Disengagement techniques that fell in this range were ranked with less significant differences, yet subgroups could still be observed. From this observation, we propose in Section 7.6 an approach to determine where techniques may lie in relation to the two extremes based on their characteristics.

From further analyses on the desirable disengagement techniques, we found that participants preferred to remain present in the immersive experience, as “notifying by NPC” and “displaying a small portal” were the highest-ranked techniques for the Narrative-driven and Adventure Sandbox scenarios, respectively. This was also in agreement with the general techniques, where techniques C (be subtly notified within experience) and D (be notified without disruption) were highly ranked (see Figure 11). These findings also support design recommendations made by past research that the experience should avoid a break in presence (BIP) (O’Hagan et al., 2020; Putze et al., 2020; Gottsacker et al., 2021), as it is crucial to maintain place illusion (PI) and plausibility illusion (Psi) for the best immersive experience of the users (Slater, 2009). This was reinforced by P4’s comment that negative experiences might be bearable if there was a backstory and a rational explanation for them. P3 also stated that in-game mechanics, such as NPCs or pets, would be preferred to remind the users. Several works already provide design guidelines for notification without BIP in VR (Simeone, 2016; Ghosh et al., 2018; Zenner et al., 2018; O’Hagan et al., 2020; Gottsacker et al., 2021; Medeiros et al., 2021).

Although less favoured, yet still more desirable than being forced, real-world cues in AV and activities in AR were moderately ranked for the Narrative-driven and Adventure Sandbox scenarios. For general techniques, techniques E (AR experience to entice to RW) and F (slowly reduce immersion with a choice to return to RW) were upper-moderately ranked (see Figure 11). As P1 commented, keeping some traces of the real world would be less frustrating, so the users were constantly reminded that they would, eventually, need to return. Apart from reducing the likelihood of BIP, a smooth transition between environments could improve spatial awareness and the perceived interactivity of the system (Valkov and Flagge, 2017), lessen the stress from exiting an IVE (Knibbe et al., 2018), and potentially mitigate breakdowns of the system due to lack of real-world awareness (Dao et al., 2021). Recommendations exist for designing the bi-directional transition on the RV-Continuum, such as George et al. (2020), mapping the world from real to virtual with Substitutional Reality (SR) (Simeone et al., 2015), or procedurally generating such mappings (Sra et al., 2016, 2017).

From further analyses, we found that of all the twelve general techniques, technique A (RW activities impact in VE) was ranked significantly higher than techniques F to L (see Figure 12). The full description of technique A is “I would like my real-world activity during a break to have an impact on the virtual environment, e.g., physical activities increase XP, and drinking water replenishes health”. Based on its description, technique A can be considered a type of reward in which real-world activities are mapped to achievements in an IVE. Arguably, the ultimate technique for disengagement might be a combination of physical activities in the real world being translated to progress made or rewards being given in the virtual one. However, this finding could be interpreted beyond the obvious: users might no longer perceive the real and virtual environments as separate worlds but as a single multi-dimensional world. Actions in one have consequences in the other; for example, tangible interfaces (Ishii and Ullmer, 1997; Billinghurst et al., 2008) allow physical manipulation of the virtual representation, and teleoperation would support the manipulation of physical objects from the IVE (Saraiji et al., 2018). Several previous studies explored how such MR experiences can be enhanced beyond the physical limitations of the real world (Lindlbauer and Wilson, 2018; Hartmann et al., 2019; Fender and Holz, 2022).

Beyond gaming, productivity activities, such as sensemaking (Lisle et al., 2021) and training (Thanyadit et al., 2022), will also take advantage of IVEs for visualisation, interaction, and collaboration. As users are immersed and cut off from the real environment, the flow state is more likely to occur, causing the users to lose track of time (Kim and Hall, 2019). Therefore, disengagement techniques will likely be necessary and applicable for engaging experiences, even in a non-game context. The preliminary results from the validation study provided some indication that disengagement techniques would also work for non-game settings. Figures 11, 12 illustrate the significant differences in preferences for different techniques with a generic description and without a specific scenario. This meant that, regardless of the potential external stimuli from the application, the stimuli provided by disengagement techniques might be sufficient to influence the user’s response.