- Department of Psychology, Iowa State University, Ames, IA, United States

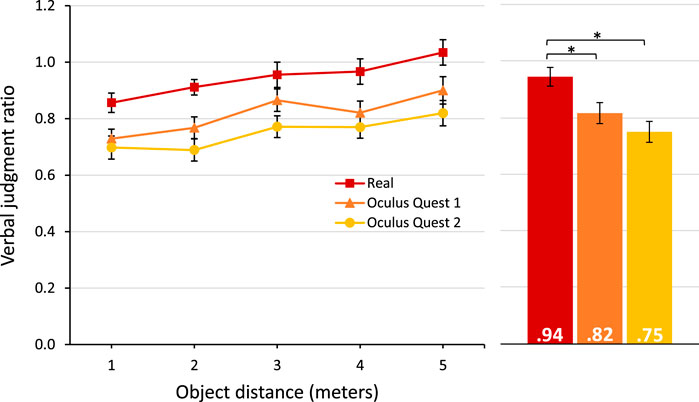

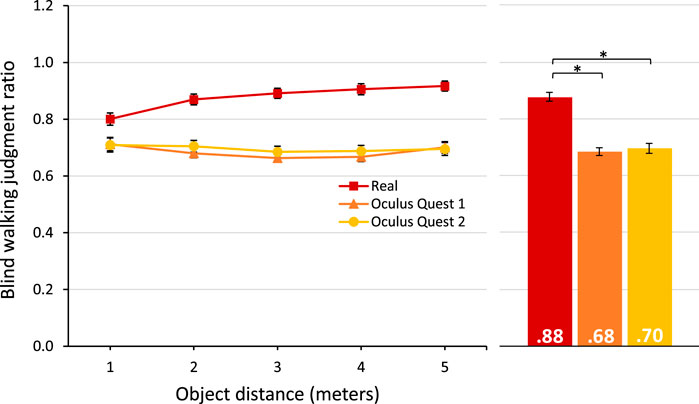

Distances in virtual environments (VEs) viewed on a head-mounted display (HMD) are typically underperceived relative to the intended distance. This paper presents an experiment comparing perceived egocentric distance in a real environment with that in a matched VE presented in the Oculus Quest and Oculus Quest 2. Participants made verbal judgments and blind walking judgments to an object on the ground. Both the Quest and Quest 2 produced underperception. Verbal judgments in the VE were 82% and 75% of the object distance, in contrast with real world judgments that were 94% of the object distance. Blind walking judgments were 68% and 70% of object distance in the Quest and Quest 2, respectively, compared to 88% in the real world. This project shows that significant underperception of distance persists even in modern HMDs.

Introduction

For virtual reality (VR) to be fully effective, the accuracy with which distance is perceived in virtual environments (VEs) should be similar to that in real environments. However, distance in VR is consistently underperceived compared to the real world, especially when viewed through a head-mounted display (HMD) (Witmer and Kline, 1998; Thompson et al., 2004; Kelly et al., 2017). For example, a 2013 review of 33 studies found that distance in VR was perceived to be 73% of intended distance, on average (Renner et al., 2013; see also Creem-Regehr et al., 2015a, for a review and synthesis).

Distance underperception in VR could have pervasive consequences for users. For example, real estate development stakeholders viewing a virtual walk-through of a planned structure (Ullah et al., 2018) will perceive the space to be smaller than intended, potentially leading to planning and decision errors. Likewise, a soldier learning to operate a vehicle or to coordinate spatially with other troops in VR will learn a set of perception-action associations based on underperceived distances, which might require recalibration in the real environment. Misperception of the spatial properties of a VE can undermine its value by introducing costly errors in decisions and actions based on the misperceived environment.

The phenomenon of distance underperception in VR has been documented for more than two decades (Witmer and Kline, 1998; Witmer and Sadowski, 1998). However, HMD technology has evolved considerably since that time, and distance perception has also improved (Feldstein et al., 2020). The 2016 release of the Oculus Rift and HTC Vive ushered in a new era of consumer-oriented VR, and subsequent years have seen a proliferation of HMDs available to consumers. Compared with earlier HMDs, modern HMDs generally offer a larger field of view (FOV), higher resolution, greater pixel density, lighter weight, brighter displays, and improved ergonomics, not to mention dramatically lower cost. Newer HMDs provide more accurate distance perception when directly compared with older HMDs (Creem-Regehr et al., 2015b; Kelly et al., 2017; Buck et al., 2018), although there is some evidence that even newer HMDs produce underperception compared to real environments (Kelly et al., 2017).

Many studies have compared perceived distance in a VE with that in a matched real environment (Aseeri et al., 2019; Ding et al., 2020; Feldstein et al., 2020; Grechkin et al., 2010; Kelly et al., 2017; Peer and Ponto, 2017; Ries et al., 2008; Sahm et al., 2005; Thompson et al., 2004; Willemsen and Gooch, 2002; Willemsen et al., 2009). This comparison is particularly useful because it removes the assumption that real world distance perception is 100% accurate. Although distance judgments in real environments are often found to be around 100% of the target distance [see Knapp and Loomis (2004) for a review], they are occasionally somewhat lower (e.g., Aseeri et al., 2019; Grechkin et al., 2010; Kelly et al., 2017; Peer and Ponto, 2017). Therefore, it is advisable that researchers hoping to contextualize the underperception of distance in VR also measure perception in a real environment. Although the dramatic underperception that characterizes some older VR headsets (Renner et al., 2013) is evident without a real world comparison, researchers using modern consumer-grade headsets commonly report distance judgments above 80% (Ahn et al., 2021; Buck et al., 2018; Ding et al., 2020; Masnadi et al., 2021; Kelly et al., 2017; Zhang et al., 2021). As distance perception in VR improves, real world comparison becomes more important to establish whether underperception still occurs.

There are currently no published data on absolute distance perception in the Oculus Quest or Oculus Quest 2, which represent two of the most popular HMDs among consumers (but see Arora et al. (2021) for an investigation of relative distance judgments in the Oculus Quest). The Oculus Quest and Oculus Quest 2 currently make up 4.4% and 39.6%, respectively, of HMDs connected to Steam VR (https://store.steampowered.com/hwsurvey/; retrieved on 3 January 2022). These numbers likely underestimate the popularity of the Quest and Quest 2, since Steam VR requires a connection to a gaming-capable PC and many users are drawn to purchase the Quest and Quest 2 HMDs precisely because they can also operate as stand-alone consoles. Thus, the primary contribution of this paper is to present new data from a carefully controlled experiment comparing perceived egocentric distance in a real environment with that in a matched VE presented on the Oculus Quest and Oculus Quest 2. Participants viewed an object placed on the ground plane and made two types of egocentric distance judgments: verbal judgments and blind walking judgments.

The study design and hypotheses were pre-registered on the Open Science Framework: https://osf.io/hq5fp/. The primary independent variable was the viewing condition: real world, Quest, or Quest 2. The primary hypothesis was that distance perception would be more accurate in the real world compared to the Quest and Quest 2, based on research showing that perceived distance is typically less than 100%, even in modern displays (Aseeri et al., 2019; Buck et al., 2018, 2021; Creem-Regehr et al., 2015b; Ding et al., 2020; Kelly et al., 2017; Li et al., 2015; Peer and Ponto, 2017). Comparison between the two HMDs was exploratory, since it was unclear whether technical differences between the displays should affect perceived distance. Likewise, analysis of the effect of object distance was exploratory.

Materials and Methods

Participants

The target sample size was 93 participants (31 per viewing condition), which was estimated by conducting a power analysis (G*Power v3.1) with the following parameters: independent samples comparison between two groups, Cohen’s d = 0.73, alpha = 0.05, minimum power needed to detect an effect = 0.80. Effect size was based on the verbal judgment data in Kelly et al. (2017), who used a similar design and reported a significant difference between real and virtual viewing conditions (they reported no difference between conditions for blind walking judgments, so only verbal data were used to estimate effect size).
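As a rough cross-check of the stated parameters, the sketch below applies the standard normal approximation for a two-tailed, two-group comparison. It is not the G*Power procedure itself, which uses the noncentral t distribution, and the function name is introduced here purely for illustration.

```python
import math
from statistics import NormalDist


def n_per_group_normal_approx(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-tailed, two-sample comparison.

    Uses n = 2 * (z_{1 - alpha/2} + z_{power})^2 / d^2, a normal approximation
    to the exact noncentral-t calculation.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


# Parameters taken from the text: d = 0.73, alpha = 0.05, power = 0.80.
print(n_per_group_normal_approx(0.73))  # 30
```

The approximation yields 30 per group. Exact t-based calculations generally require slightly more participants than the normal approximation, which is in line with the 31 per condition reported above.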

A total of 93 individuals participated in the experiment in exchange for a gift card. Thirty participants (15 men, 15 women; mean age = 20.27, SE = 0.35) experienced the real environment, 31 participants (17 men, 14 women; mean age = 21.23, SE = 0.53) experienced the Oculus Quest, and 32 participants (17 men, 15 women; mean age = 20.57, SE = 0.48) experienced the Oculus Quest 2. Participants were recruited through a mass e-mail to university students. To be eligible for the study, participants were required to be 18 years or older, be able to walk short distances, have normal or corrected-to-normal vision, and have no history of amblyopia (an imbalance between the two eyes that can lead to problems with stereo vision) or photosensitive seizures. Participants and experimenters were required to wear masks throughout the experiment.

Design

The study used a 3 (viewing condition: real world, Quest, and Quest 2) by 5 (object distance: 1, 2, 3, 4, and 5 m) mixed design. Viewing condition was manipulated between participants and object distance was manipulated within participants. Participants were assigned to the real classroom, Quest, or Quest 2 at the time of enrollment in the study. The real world condition was run first, followed by the Quest, followed by the Quest 2. All participants completed the study within a 1.5 month period. Participants completed two separate blocks of verbal distance judgments and blind walking distance judgments, and block order was counterbalanced. Each block consisted of 15 trials, corresponding to five egocentric distances (1, 2, 3, 4, and 5 m) repeated three times each in a random order that was held constant across all participants (i.e., the same random order was used for all participants).
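The trial structure described above can be sketched as follows. The seed value, the function names, and the alternating counterbalancing rule are assumptions made for illustration; the source states only that one random order was shared by all participants and that block order was counterbalanced.

```python
import random

DISTANCES_M = [1, 2, 3, 4, 5]  # object distances from the design
REPETITIONS = 3                # each distance appears three times per block
FIXED_SEED = 2022              # hypothetical seed, not taken from the study


def make_trial_order(seed: int = FIXED_SEED) -> list[int]:
    """Return the 15 trial distances, shuffled reproducibly from the seed."""
    rng = random.Random(seed)
    trials = DISTANCES_M * REPETITIONS
    rng.shuffle(trials)
    return trials


def block_order(participant_index: int) -> tuple[str, str]:
    """Alternate which block comes first (one simple counterbalancing rule)."""
    blocks = ("verbal", "blind walking")
    return blocks if participant_index % 2 == 0 else blocks[::-1]


# Every participant receives the same order because the seed is fixed.
print(make_trial_order())
print(block_order(0), block_order(1))
```

Fixing the seed is one straightforward way to hold a random order constant across participants; a hard-coded list would serve equally well.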

Stimuli

The real environment was a university classroom, 9.3 by 9.17 m. Tables and chairs were moved to the sides of the room, allowing for an open walking space to perform the distance judgment task. The VE was experienced through the Oculus Quest or Quest 2.

Each HMD was modified by removing the stock elastic head strap and replacing it with the HTC Vive Deluxe Audio Strap using custom 3D printed parts to make the connections. This was done because the Deluxe Audio Strap is easier to sanitize than the stock head strap and because the ratcheting size adjustment on the Deluxe Audio Strap is easier for participants to use. A silicone cover was added to the stock HMD face pad. The silicone cover contained a nose flap that blocked some of the light entering from below the display, although some light leakage probably occurred for most participants.

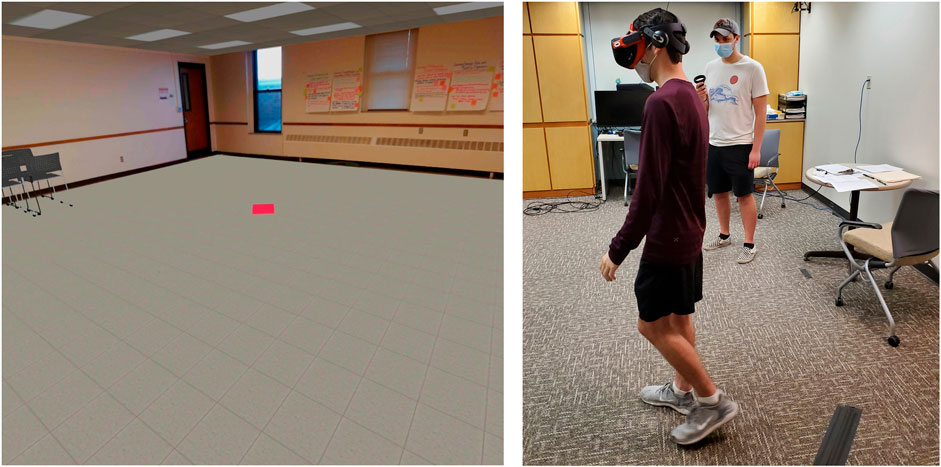

The VE was built in the Unity game engine and was designed to mimic the real classroom (see Figure 1, left). Photographic textures obtained from the real classroom were used for most surfaces, and spatial properties of the room and its contents were carefully matched. The VE was experienced while standing within a 6 by 7 m research lab (see Figure 1, right). The virtual environment did not contain shadows cast by virtual objects, nor did it include an avatar representing the participant’s body.

FIGURE 1. Left: Screenshot of the virtual classroom taken from the position of the viewing location. The red square is the target object used for verbal and blind walking distance judgments. Right: Participant (foreground) and researcher (background) during a blind walking trial.

The target object was a red square, 18 by 18 cm, placed on the floor of the environment. In the real classroom the target was made out of red cardboard. The viewing location in the real environment was marked by a strip of black tape on the floor, near the corner of the room. A similar black strip also appeared in the VE. The lab room in which the virtual conditions took place contained a raised rubber pad on the floor corresponding to the location of the virtual tape strip, so that participants could feel when they were standing at the viewing location.

A laser distance measure was used to measure walked distance on blind walking trials.

Procedure

Upon arrival at the research site, the participant completed the informed consent form as well as a COVID-19 screening form. The researcher then provided a basic description of the verbal and blind walking judgments.

In order to remind the participant of standard units of measurement used when making verbal judgments, the researcher led the participant into the hallway outside the classroom/lab to view tape markings on the floor corresponding to 1 foot and 1 m. The participant was given the choice of which measurement unit to use when making verbal distance judgments.

All distance judgments began with the participant standing at the viewing location with toes placed on the black tape. On blind walking trials in the real environment, the participant was instructed to close their eyes while the researcher placed the target object at the appropriate egocentric distance (faint marks guided the researcher but were not visible from the participant’s perspective). The researcher then instructed the participant to open their eyes. After 5 s of viewing, the participant closed their eyes, the researcher picked up the object, and the participant was instructed to walk to the previously seen object location with eyes closed.

Blind walking trials in the VE were similar to those in the real environment, except the timing of object presentation was controlled through the software, and the display turned uniformly black at times in which the participant was expected to close their eyes.

Regardless of environment (real or virtual), the researcher then measured and recorded walked distance. Measurement was accomplished with a laser measure positioned at the viewing location and aimed at the heel of the participant’s shoe. Walked distance was recorded in meters.

The procedure for verbal trials was similar to that for blind walking trials, except that the participant was instructed to verbally report the distance from their standing location to the location of the object, and the researcher recorded the judgment. Verbal judgments made in imperial units were later converted to metric units.
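The unit conversion for verbal judgments might look like the sketch below. The function name and the unit labels are hypothetical; the conversion factor (1 ft = 0.3048 m) is the exact international definition of the foot.

```python
FEET_TO_METERS = 0.3048  # exact by definition of the international foot


def to_meters(value: float, unit: str) -> float:
    """Convert a verbal distance judgment to meters.

    `unit` is "m" or "ft"; these labels are illustrative, not from the study.
    """
    if unit == "m":
        return value
    if unit == "ft":
        return value * FEET_TO_METERS
    raise ValueError(f"unsupported unit: {unit!r}")


# A judgment of 10 feet corresponds to roughly 3.05 m.
print(round(to_meters(10, "ft"), 3))
```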

Results

Prior to analysis, judged distance was converted to a ratio of judged distance relative to object distance. Verbal judgment ratios are presented in Figure 2 and blind walking judgment ratios are presented in Figure 3.
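A minimal sketch of this conversion is given below. The trial values are made up for illustration and are not data from the study; the function name is likewise an assumption.

```python
from statistics import mean


def judgment_ratio(judged_m: float, object_m: float) -> float:
    """Judged distance as a proportion of object distance (1.0 = accurate)."""
    if object_m <= 0:
        raise ValueError("object distance must be positive")
    return judged_m / object_m


# Made-up (object distance, judged distance) pairs in meters, illustration only.
example_trials = [(1.0, 0.8), (2.0, 1.5), (4.0, 3.0)]

ratios = [judgment_ratio(judged, obj) for obj, judged in example_trials]
print(ratios)
print(mean(ratios))  # values below 1.0 indicate underperception
```

Expressing judgments as ratios places all object distances on a common scale, so that responses at 1 m and 5 m can be averaged and compared directly.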

FIGURE 2. Average verbal distance judgments expressed as a ratio of object distance. Data are plotted as a function of object distance (line graph, left), and also averaged across object distance (bar graph, right). Error bars represent ± one SEM. *p < 0.05.

FIGURE 3. Average blind walking distance judgments expressed as a ratio of object distance. Data are plotted as a function of object distance (line graph, left), and also averaged across object distance (bar graph, right). Error bars represent ± one SEM. *p < 0.05.
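For readers reproducing error bars like those in Figures 2 and 3, the standard error of the mean (SEM) is the sample standard deviation divided by the square root of the sample size. The sketch below uses made-up per-participant ratios, not values from the study.

```python
import math
from statistics import stdev


def sem(values: list[float]) -> float:
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    if len(values) < 2:
        raise ValueError("SEM needs at least two values")
    return stdev(values) / math.sqrt(len(values))


# Made-up per-participant judgment ratios, for illustration only.
example_ratios = [0.70, 0.85, 0.90, 0.75, 0.80]

center = sum(example_ratios) / len(example_ratios)
print(f"{center:.3f} +/- {sem(example_ratios):.3f}")
```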

Verbal and blind-walking data were analyzed separately in 3 (viewing condition: real, Quest, or Quest 2) by 5 (object distance: 1–5 m) mixed-model ANOVAs. For verbal judgments, Mauchly’s test of sphericity was violated for the object distance variable so the analysis proceeded using a Greenhouse-Geisser correction. The main effect of viewing condition was significant, F (2, 90) = 7.749, p = 0.001,

For blind walking judgments, Mauchly’s test of sphericity was violated for the object distance variable, so the analysis proceeded using a Greenhouse-Geisser correction. The main effect of viewing condition was significant, F (2, 90) = 45.232, p < 0.001,

Discussion

Egocentric distance in a VE presented on the Oculus Quest and Oculus Quest 2 was underperceived relative to intended object distance, and also relative to perceived distance measured in a real environment upon which the VE was based. Evidence of underperception in both HMDs was found in verbal distance judgments as well as blind walking distance judgments. There were no significant differences between judgments made in the Quest and Quest 2.

The pattern of distance judgments as a function of object distance varied across response modality. Verbal distance judgment ratios increased linearly with object distance, regardless of viewing condition. Blind walking distance judgment ratios in the real environment also increased as a function of object distance (albeit non-linearly), but were mostly flat in the Quest and Quest 2, leading to a spreading interaction. The distinct patterns across the two dependent variables may reflect reliance on different distance cues. Whereas blind walking judgments are heavily dependent on angular declination of the target relative to the horizon (Ooi et al., 2001; Messing and Durgin, 2005), verbal judgments may depend more on contextual cues that help to scale the relevant units used in the verbal response.
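The geometry behind the angular declination cue can be sketched as follows. For a target on flat ground, distance d, eye height h, and declination below the horizon θ are related by d = h / tan(θ). The eye height used here is an assumed value for illustration, not a measurement from the study.

```python
import math

ASSUMED_EYE_HEIGHT_M = 1.6  # hypothetical standing eye height


def declination_deg(distance_m: float, eye_height_m: float = ASSUMED_EYE_HEIGHT_M) -> float:
    """Angle below the horizon (degrees) of a ground target at distance_m."""
    return math.degrees(math.atan2(eye_height_m, distance_m))


def distance_from_declination(angle_deg: float, eye_height_m: float = ASSUMED_EYE_HEIGHT_M) -> float:
    """Invert the relation: d = h / tan(theta)."""
    return eye_height_m / math.tan(math.radians(angle_deg))


# Declination for each object distance used in the experiment.
for d in (1, 2, 3, 4, 5):
    print(d, round(declination_deg(d), 1))
```

Under this relation, the declination angle changes less per meter as distance increases, so a fixed angular error corresponds to a larger distance error for farther targets.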

The VE used in this study lacked shadows cast by virtual objects, although the photographic wall textures did contain shadows. Shadows are particularly useful for indicating contact between objects and surfaces, such as the ground plane (Hu et al., 2000; Madison et al., 2001; Adams et al., 2021). The target object used in this study was flat and flush with the floor, but future studies may benefit from using a 3D object (e.g., a sphere) that casts shadows on the ground plane.

Perceived distance in the Oculus Quest and Oculus Quest 2 ranged from 68 to 82% of intended distance, depending on the specific HMD and the response modality, and averaged 74% across the two HMDs and the two response types. Although averaging across response modalities is not typical, it provides an overall value to compare with the 73% value representing judged distance combined across several studies reviewed by Renner et al. (2013). The lack of clear improvement in the Quest and Quest 2 compared to older displays reported by Renner et al. (2013), despite vast technological advancements in recent years, is concerning and suggests that further improvements in HMD technology (e.g., wider field of view, higher resolution, etc.) may not resolve the underlying causes of distance underperception. The similarity in distance judgments when using the Quest and Quest 2 further underscores the concern that technological advances may not foster better distance perception. Although there are many technological differences between the Quest and Quest 2, the most salient is the difference in resolution (1,440 by 1,600 in the Quest and 1,832 by 1,920 in the Quest 2). The current results suggest that resolution is not the limiting factor, corroborating similar conclusions from other research (Willemsen and Gooch, 2002; Thompson et al., 2004; Buck et al., 2021).
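The overall 74% figure follows from averaging the four VR percentages reported in this paper (verbal: 82% and 75%; blind walking: 68% and 70%). The short sketch below reproduces that arithmetic; the variable names are illustrative.

```python
from statistics import mean

# Mean judgment percentages in the two HMDs, as reported in the abstract.
vr_percentages = {
    "verbal": [82, 75],
    "blind walking": [68, 70],
}

all_values = [v for values in vr_percentages.values() for v in values]

print(min(all_values), max(all_values))  # 68 82
print(mean(all_values))                  # 73.75
print(round(mean(all_values)))           # 74
```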

There is a growing body of data on perceived distance using other modern consumer-grade HMDs, most notably the HTC Vive and the Oculus Rift (the consumer version, to distinguish from earlier development kits). Studies using the HTC Vive report distance judgments ranging from 66% (Buck et al., 2018) to 102% (Zhang et al., 2021) of actual distance, with several studies reporting intermediate values (Aseeri et al., 2019; Kelly et al., 2017; Maruhn et al., 2019; Peer and Ponto, 2017). Studies using the Oculus Rift report distance judgments from 75% (Peer and Ponto, 2017) to 104% (Ahn et al., 2021) of actual distance, with many in between (Aseeri et al., 2019; Buck et al., 2018; Ding et al., 2020). In this context, the current results from the Oculus Quest and Oculus Quest 2 are on the low end of the range established for other consumer-grade HMDs. Technological differences that would seem to favor the Quest and Quest 2 over the Oculus Rift and HTC Vive include the wireless capability of the Quest and Quest 2 compared to the typically tethered experience provided by the Rift and Vive, and the higher resolution of the Quest (1,440 by 1,600 pixels) and especially the Quest 2 (1,832 by 1,920 pixels) compared to the Rift (1,080 by 1,200 pixels) and Vive (1,080 by 1,200 pixels). On the other hand, the diagonal FOV of the HTC Vive (approximately 148°) is superior to that of the Quest (approximately 130°), Quest 2 and the Oculus Rift (both approximately 134°). The HMD weights are all very similar and much lighter than earlier displays, although the Quest (571 g) is slightly heavier than the Quest 2 (502 g), Oculus Rift (470 g), and HTC Vive (555 g). In terms of technical specifications, there is no clear reason why the Quest and Quest 2 would produce distance perception on the low end of the ranges established by the HTC Vive and Oculus Rift.
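To put the resolution figures above on a common footing, the sketch below computes total pixel counts from the listed resolutions and expresses each relative to the Oculus Rift. The resolutions are taken at face value from the text; the dictionary and function names are illustrative.

```python
# Resolutions (width, height) in pixels, as listed in the text.
RESOLUTIONS = {
    "Oculus Quest": (1440, 1600),
    "Oculus Quest 2": (1832, 1920),
    "Oculus Rift": (1080, 1200),
    "HTC Vive": (1080, 1200),
}


def pixel_count(name: str) -> int:
    """Total pixels for the named HMD at its listed resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


baseline = pixel_count("Oculus Rift")
for name in RESOLUTIONS:
    count = pixel_count(name)
    print(f"{name}: {count:,} pixels ({count / baseline:.2f}x Rift)")
```

By this measure the Quest 2 has well over twice the pixel count of the Rift and Vive, which makes the absence of a corresponding improvement in distance judgments more notable.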

Although modern HMDs such as the Oculus Quest and Oculus Quest 2 continue to cause underperception of egocentric distance, research has identified several techniques that can be leveraged to alleviate the problem. Recalibration by walking through the VE improves blind-walking judgments (Kelly et al., 2014, 2018; Richardson and Waller, 2007; Waller and Richardson, 2008) and, to a lesser extent, size judgments (Siegel and Kelly, 2017; Siegel et al., 2017), although it can also cause miscalibration of actions subsequently performed in the real world (Waller and Richardson, 2008). The widespread availability of room-scale tracking makes recalibration by walking trivial: simply walking around and exploring on foot, which naturally occurs in most VEs, leads to recalibration equivalent to more carefully controlled recalibration procedures (Waller and Richardson, 2008). Providing a self-avatar improves perceived distance (Aseeri et al., 2019; Leyrer et al., 2011; Ries et al., 2008), perhaps by providing additional familiar size cues. Displaying a replica of the actual environment in which the user is located also improves perceived distance (Interrante et al., 2006; Kelly et al., 2018), and this improvement may carry over into novel VEs (Steinicke et al., 2009). For simulations in which perceived distance is important, these tools remain the best ways to improve perceived distance until HMD technology supports perception of spatial properties on par with the real environments they intend to represent.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: Open Science Framework: https://osf.io/hq5fp/.

Ethics Statement

The studies involving human participants were reviewed and approved by the Iowa State University Institutional Review Board. The participants provided their written informed consent to participate in this study. Consent was obtained from the researchers for the publication of their images in this article.

Author Contributions

The research was conceptualized by JK, TD, MA, and LC. MA coded the experiment. TD supervised data collection and analyzed the data. JK drafted the paper. TD, MA, and LC provided feedback and revisions on the paper.

Funding

The open access publication fees for this article were covered by the Iowa State University Library.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

Thanks to Alex Gillet, Matthew Pink, and Jason Terrill for assistance with data collection.

References

Adams, H., Stefanucci, J., Creem-Regehr, S. H., Pointon, G., Thompson, W. B., and Bodenheimer, B. (2021). Shedding Light on Cast Shadows: An Investigation of Perceived Ground Contact in AR and VR. IEEE Trans. Vis. Comput. Graphics, 1. Available at: https://ieeexplore.ieee.org/document/9490310. doi:10.1109/TVCG.2021.3097978

Ahn, S., Kim, S., and Lee, S. (2021). Effects of Visual Cues on Distance Perception in Virtual Environments Based on Object Identification and Visually Guided Action. Int. J. Human-Computer Interaction 37, 36–46. doi:10.1080/10447318.2020.1805875

Arora, R., Li, J., Shi, G., and Singh, K. (2021). Thinking outside the Lab: VR Size & Depth Perception in the Wild. arXiv preprint.

Aseeri, S., Paraiso, K., and Interrante, V. (2019). Investigating the Influence of Virtual Human Entourage Elements on Distance Judgments in Virtual Architectural Interiors. Front. Robot. AI 6, 44. doi:10.3389/frobt.2019.00044

Buck, L. E., Young, M. K., and Bodenheimer, B. (2018). A Comparison of Distance Estimation in HMD-Based Virtual Environments with Different HMD-Based Conditions. ACM Trans. Appl. Percept. 15, 1–15. doi:10.1145/3196885

Buck, L., Paris, R., and Bodenheimer, B. (2021). Distance Compression in the HTC Vive Pro: A Quick Revisitation of Resolution. Front. Virtual Real. 2. doi:10.3389/frvir.2021.728667

Creem-Regehr, S. H., Stefanucci, J. K., Thompson, W. B., Nash, N., and McCardell, M. (2015b). “Egocentric Distance Perception in the Oculus Rift (DK2),” in Proceedings of the ACM Symposium on Applied Perception (New York City, NY: ACM), 47–50. doi:10.1145/2804408.2804422

Creem-Regehr, S. H., Stefanucci, J. K., and Thompson, W. B. (2015a). “Perceiving Absolute Scale in Virtual Environments: How Theory and Application Have Mutually Informed the Role of Body-Based Perception,” in The Psychology of Learning and Motivation. Editor B. Ross (Waltham, MA: Academic Press: Elsevier Inc.), 195–224. doi:10.1016/bs.plm.2014.09.006

Ding, F., Sepahyar, S., and Kuhl, S. (2020). “Effects of Brightness on Distance Judgments in Head Mounted Displays,” in ACM Symposium on Applied Perception 2020 (New York, NY, USA: Association for Computing Machinery). SAP ’20. doi:10.1145/3385955.3407929

Feldstein, I. T., Kölsch, F. M., and Konrad, R. (2020). Egocentric Distance Perception: A Comparative Study Investigating Differences between Real and Virtual Environments. Perception 49, 940–967. doi:10.1177/0301006620951997

Grechkin, T. Y., Nguyen, T. D., Plumert, J. M., Cremer, J. F., and Kearney, J. K. (2010). How Does Presentation Method and Measurement Protocol Affect Distance Estimation in Real and Virtual Environments? ACM Trans. Appl. Percept. 7, 1–18. doi:10.1145/1823738.1823744

Hu, H. H., Gooch, A. A., Thompson, W. B., Smits, B. E., Rieser, J. J., and Shirley, P. (2000). “Visual Cues for Imminent Object Contact in Realistic Virtual Environments,” in Proceedings Visualization 2000 (New York: Association for Computing Machinery), 179–185. (Cat. No.00CH37145). doi:10.1109/VISUAL.2000.885692

Interrante, V., Ries, B., and Anderson, L. (2006). “Distance Perception in Immersive Virtual Environments, Revisited,” in Proceedings of the IEEE Virtual Reality Conference (Washington, D.C.: IEEE Computer Society). doi:10.1109/VR.2006.52

Kelly, J. W., Cherep, L. A., Klesel, B., Siegel, Z. D., and George, S. (2018). Comparison of Two Methods for Improving Distance Perception in Virtual Reality. ACM Trans. Appl. Percept. 15, 1–11. doi:10.1145/3165285

Kelly, J. W., Cherep, L. A., and Siegel, Z. D. (2017). Perceived Space in the HTC Vive. ACM Trans. Appl. Percept. 15, 1–16. doi:10.1145/3106155

Kelly, J. W., Hammel, W. W., Siegel, Z. D., and Sjolund, L. A. (2014). Recalibration of Perceived Distance in Virtual Environments Occurs Rapidly and Transfers Asymmetrically across Scale. IEEE Trans. Vis. Comput. Graphics 20, 588–595. doi:10.1109/TVCG.2014.36

Knapp, J. M., and Loomis, J. M. (2004). Limited Field of View of Head-Mounted Displays Is Not the Cause of Distance Underestimation in Virtual Environments. Presence: Teleoperators & Virtual Environments 13, 572–577. doi:10.1162/1054746042545238

Leyrer, M., Linkenauger, S. A., Bülthoff, H. H., Kloos, U., and Mohler, B. (2011). “The Influence of Eye Height and Avatars on Egocentric Distance Estimates in Immersive Virtual Environments,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization. (New York, NY, USA: Association for Computing Machinery), 67–74. APGV ’11. doi:10.1145/2077451.2077464

Li, B., Zhang, R., Nordman, A., and Kuhl, S. A. (2015). “The Effects of Minification and Display Field of View on Distance Judgments in Real and HMD-Based Environments,” in Proceedings of the ACM Symposium on Applied Perception (New York City, NY: ACM), 55–58. doi:10.1145/2804408.2804427

Madison, C., Thompson, W., Kersten, D., Shirley, P., and Smits, B. (2001). Use of Interreflection and Shadow for Surface Contact. Perception & Psychophysics 63, 187–194. doi:10.3758/BF03194461

Maruhn, P., Schneider, S., and Bengler, K. (2019). Measuring Egocentric Distance Perception in Virtual Reality: Influence of Methodologies, Locomotion and Translation Gains. PLoS ONE 14, e0224651. doi:10.1371/journal.pone.0224651

Masnadi, S., Pfeil, K. P., Sera-Josef, J.-V. T., and LaViola, J. J. (2021). “Field of View Effect on Distance Perception in Virtual Reality,” in 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (Washington DC: IEEE Computer Society), 542–543. doi:10.1109/VRW52623.2021.00153

Messing, R., and Durgin, F. H. (2005). Distance Perception and the Visual Horizon in Head-Mounted Displays. ACM Trans. Appl. Percept. 2, 234–250. doi:10.1145/1077399.1077403

Ooi, T. L., Wu, B., and He, Z. J. (2001). Distance Determined by the Angular Declination below the Horizon. Nature 414, 197–200. doi:10.1038/35102562

Peer, A., and Ponto, K. (2017). “Evaluating Perceived Distance Measures in Room-Scale Spaces Using Consumer-Grade Head Mounted Displays,” in 2017 IEEE Symposium on 3D User Interfaces (3DUI), 83–86. doi:10.1109/3DUI.2017.7893321

Renner, R. S., Velichkovsky, B. M., and Helmert, J. R. (2013). The Perception of Egocentric Distances in Virtual Environments - A Review. ACM Comput. Surv. 46, 1–40. doi:10.1145/2543581.2543590

Richardson, A. R., and Waller, D. (2007). Interaction with an Immersive Virtual Environment Corrects Users' Distance Estimates. Hum. Factors 49, 507–517. doi:10.1518/001872007X200139

Ries, B., Interrante, V., Kaeding, M., and Anderson, L. (2008). “The Effect of Self-Embodiment on Distance Perception in Immersive Virtual Environments,” in Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology (New York, NY, USA: Association for Computing Machinery), 167–170. VRST ’08. doi:10.1145/1450579.1450614

Sahm, C. S., Creem-Regehr, S. H., Thompson, W. B., and Willemsen, P. (2005). Throwing versus Walking as Indicators of Distance Perception in Similar Real and Virtual Environments. ACM Trans. Appl. Percept. 2, 35–45. doi:10.1145/1048687.1048690

Siegel, Z. D., Kelly, J. W., and Cherep, L. A. (2017). Rescaling of Perceived Space Transfers across Virtual Environments. J. Exp. Psychol. Hum. Perception Perform. 43, 1805–1814. doi:10.1037/xhp0000401

Siegel, Z. D., and Kelly, J. W. (2017). Walking through a Virtual Environment Improves Perceived Size within and beyond the Walked Space. Atten Percept Psychophys 79, 39–44. doi:10.3758/s13414-016-1243-z

Steinicke, F., Bruder, G., Hinrichs, K., Lappe, M., Ries, B., and Interrante, V. (2009). “Transitional Environments Enhance Distance Perception in Immersive Virtual Reality Systems,” in Proceedings of the 6th Symposium on Applied Perception in Graphics and Visualization (New York, NY: ACM). doi:10.1145/1620993.1620998

Thompson, W. B., Willemsen, P., Gooch, A. A., Creem-Regehr, S. H., Loomis, J. M., and Beall, A. C. (2004). Does the Quality of the Computer Graphics Matter when Judging Distances in Visually Immersive Environments? Presence: Teleoperators & Virtual Environments 13, 560–571. doi:10.1162/1054746042545292

Ullah, F., Sepasgozar, S., and Wang, C. (2018). A Systematic Review of Smart Real Estate Technology: Drivers of, and Barriers to, the Use of Digital Disruptive Technologies and Online Platforms. Sustainability 10, 3142. doi:10.3390/su10093142

Waller, D., and Richardson, A. R. (2008). Correcting Distance Estimates by Interacting with Immersive Virtual Environments: Effects of Task and Available Sensory Information. J. Exp. Psychol. Appl. 14, 61–72. doi:10.1037/1076-898X.14.1.61

Willemsen, P., Colton, M. B., Creem-Regehr, S. H., and Thompson, W. B. (2009). The Effects of Head-Mounted Display Mechanical Properties and Field of View on Distance Judgments in Virtual Environments. ACM Trans. Appl. Percept. 6, 1–14. doi:10.1145/1498700.1498702

Willemsen, P., and Gooch, A. A. (2002). Perceived Egocentric Distances in Real, Image-Based, and Traditional Virtual Environments. Proc. IEEE Virtual Reality 2002, 275–276. doi:10.1109/VR.2002.996536

Witmer, B. G., and Kline, P. B. (1998). Judging Perceived and Traversed Distance in Virtual Environments. Presence 7, 144–167. doi:10.1162/105474698565640

Witmer, B. G., and Sadowski, W. J. (1998). Nonvisually Guided Locomotion to a Previously Viewed Target in Real and Virtual Environments. Hum. Factors 40, 478–488. doi:10.1518/001872098779591340

Keywords: distance perception, depth perception, virtual reality, head mounted display, oculus quest, oculus quest 2, blind walking, verbal report

Citation: Kelly JW, Doty TA, Ambourn M and Cherep LA (2022) Distance Perception in the Oculus Quest and Oculus Quest 2. Front. Virtual Real. 3:850471. doi: 10.3389/frvir.2022.850471

Received: 07 January 2022; Accepted: 16 February 2022; Published: 07 March 2022.

Edited by:

Frank Steinicke, University of Hamburg, Germany

Reviewed by:

Pete Willemsen, University of Minnesota Duluth, United States

Sarah H. Creem-Regehr, The University of Utah, United States

Lauren Buck, Trinity College Dublin, Ireland

Copyright © 2022 Kelly, Doty, Ambourn and Cherep. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonathan W. Kelly, jonkelly@iastate.edu

Jonathan W. Kelly, Taylor A. Doty, Morgan Ambourn