ORIGINAL RESEARCH article

Front. Virtual Real., 29 April 2022

Sec. Technologies for VR

Volume 3 - 2022 | https://doi.org/10.3389/frvir.2022.838720

This article is part of the Research Topic: Virtual Reality, Augmented Reality and Mixed Reality - Beyond Head Mounted Displays

The sensation of self-motion is essential in many virtual reality applications, from entertainment to training, such as flying and driving simulators. If the common approach used in amusement parks is to actuate the seats with cumbersome systems, multisensory integration can also be leveraged to get rich effects from lightweight solutions. In this paper, we introduce a novel approach called the “Kinesthetic HMD”: actuating a head-mounted display with force feedback in order to provide sensations of self-motion. We discuss its design considerations and demonstrate an augmented flight simulator use case with a proof-of-concept prototype. We conducted a user study assessing our approach’s ability to enhance self-motion sensations. Taken together, our results show that our Kinesthetic HMD provides significantly stronger and more egocentric sensations than a visual-only self-motion experience. Thus, by providing congruent vestibular and proprioceptive cues related to balance and self-motion, the Kinesthetic HMD represents a promising approach for a variety of virtual reality applications in which motion sensations are prominent.

With the blossoming of consumer-grade virtual reality (VR) devices in the last 5 years, numerous VR applications have emerged in the entertainment, healthcare and manufacturing industries (Kim, 2016). Impressive advances have been achieved in visual and audio quality, with audio spatialization now commonplace and human-eye resolution about to be reached. However, although VR experiences are often praised as “fully immersive”, bodily sensations are still largely missing, even though they are crucial for several aspects of user experience (Reiner, 2004).

The perception of self-motion, in particular, can be induced visually but relies on multisensory cues, and notably on vestibular cues, i.e. acceleration and motion applied to the head (Britton and Arshad, 2019). Although visual cues are usually more precise for perceiving displacement, in some cases they can be dominated by vestibular cues (Harris et al., 2000; Fetsch et al., 2009).

This perceptual discrepancy leads to cybersickness effects as well as user disinvestment (in particular for whole-body displacement such as vehicle driving, flying, falling, etc.), both of which are major issues in many VR applications. As a result, VR content producers tend to adapt to those technological limitations instead of aiming for the best possible user experience. The most striking example is probably the use of teleportation as a relatively standard locomotion system, despite huge design efforts for other metaphors with better realism and ecological agency (Boletsis, 2017). In order to address this lack of physical sensations, in recent years both researchers and industry practitioners have proposed a large number of haptic VR peripherals (see Wang D. et al., 2019, for a review).

Yet only a subset of them addressed motion sensations. If the common approach used in amusement parks is to actuate the seats with cumbersome systems, multisensory integration can also be leveraged to get rich effects from lightweight solutions (Danieau et al., 2012; Nilsson et al., 2012).

In this paper, we propose a novel approach called the “Kinesthetic HMD” to enhance self-motion sensations in immersive VR applications. It consists of actuating a head-mounted display (HMD) with force feedback in order to provide rich and versatile self-motion effects. Similarly to a motion platform, our approach provides motion cues to the user, but at the level of their head, so as to simulate whole-body accelerations and enhance illusory self-motion. In contrast with actuated seats, the Kinesthetic HMD stimulates the vestibular system rather than the whole body.

In the remainder of this paper, we first present related work on VR peripherals providing motion sensations. Then, the Kinesthetic HMD approach is introduced and its hardware, software and safety design are discussed. We demonstrate a flight simulator use case with a proof-of-concept prototype featuring a high-end grounded 6-DoF (degrees of freedom) haptic arm. Then we present a user study focusing on the haptic enhancement of visual self-motion sensations, before finally discussing our experimental results and possible future work.

Although “vection” (illusory self-motion) was historically considered a visual illusion, it can be defined more broadly as “a subjective conscious experience of self-motion” (Palmisano et al., 2015). Its correlations with presence and immersion have been studied, yet their relationship remains unclear (Prothero et al., 1995; Riecke et al., 2005a; Väljamäe et al., 2006). It was found that exposure to vection in VR modulates vestibular processing (Gallagher et al., 2019).

The vestibular system provides information about the angular rotation speed and linear acceleration of the head in space, which are crucial for self-motion estimation (Cullen, 2012). The relationship between visual and vestibular contributions to motion sensations is still not fully understood (Britton and Arshad, 2019), and they seem to be dynamically reweighted (Fetsch et al., 2009). Yet it can be said that the visual system is specialized for position and velocity estimation, while the vestibular system is optimized for acceleration processing.

This explains why the visual motion provided by an HMD, despite its ability to evoke illusory displacement, remains “incomplete” and not sufficient to provide compelling inertial sensations. In order to get physical sensations, there is a need for physical stimulation. Haptic devices provide kinesthetic and/or tactile stimulation. The vast majority of haptic solutions for VR are either wearable, holdable or grounded, and stimulate the finger or the hand in order to reproduce contact mechanics, material properties and physics of digital interaction (see Wang D. et al., 2019, for a review). Far fewer address self-motion sensations.

One approach to induce vection is to stimulate a large part of the skin, usually by integrating vibrators in a chair (Soave et al., 2020). Yet the most common technological solution is to provide actual motion to the seat: moving seats are usually found in amusement arcades for racing games and in theme parks for so-called 4D cinemas. As those systems are usually cumbersome and expensive, yet limited to a restricted amplitude, several researchers have proposed simplified versions relying on illusions or sensory substitution (Rietzler et al., 2018; Teng et al., 2019). In particular, Danieau et al. proposed to affix multiple force-feedback devices to a chair, stimulating specific parts of the body (hands and head), in order to provide inexpensive 6-DoF motion effects (Danieau et al., 2012).

Applying haptic stimulation to specific regions of the body (feet, hand or head) is indeed a promising approach to achieve rich effects with a minimalist setup. It was shown that haptic stimulation of the feet could induce self-motion sensations while standing still in various virtual reality scenes (Nilsson et al., 2012), and modulate vection even in a seated position (Farkhatdinov et al., 2013; Kruijff et al., 2016). Lécuyer et al. investigated the perception improvement of visual turns by reproducing the turn angle (or its opposite) through a haptic handle (Lécuyer et al., 2004). Ouarti et al. used a similar setup to show that haptic feedback in the hands could improve the duration and occurrence of visually-induced illusory self-motion for linear and curved trajectories (Ouarti et al., 2014). Bouyer et al. extended this approach to an interactive video game context (Bouyer et al., 2017).

HMD-embedded haptics is a quite recent research topic. Peng et al. showed that step-synchronized vibrotactile stimuli on the head could significantly reduce cybersickness (Peng et al., 2020), while Wolf et al. proposed to combine vibrotactile and thermal feedback inside the HMD for increased presence (Wolf et al., 2019). Gugenheimer et al. attached flywheels to an Oculus Rift DK2 to generate torque feedback on the head (Gugenheimer et al., 2016). A major drawback of this solution is the lack of transparency: when the flywheel turns faster, it builds up inertia against the user’s movements. Kon et al. suggested leveraging the intriguing so-called “hanger reflex” effect, which is an involuntary head rotation arising from a specific pressure distribution, in order to provide various illusory forces (Kon et al., 2017). However, this technique does not allow precise force rendering, as it relies on a muscular reflex that is likely to vary a lot among individuals. Chang et al. proposed a pulley-based mechanism to produce normal force on the HMD (Chang et al., 2018). This system could improve immersion in boxing or swimming simulations, but did not aim at generating self-motion sensations. Finally, Wang et al. integrated skin-stretch modules inside an HTC Vive Pro to provide haptic feedback on the user’s face (Wang C. et al., 2019). In a motorcycle racing simulation context, their system would simulate weight, bumping transients, inertial turns, and wind pressure. However, to our knowledge, grounded force feedback was never applied to a VR headset.

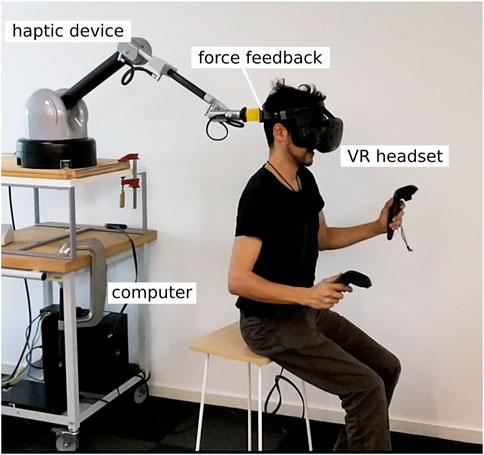

The Kinesthetic HMD is a novel approach to enhance self-motion sensations in virtual reality with head-based force feedback. It can be viewed as an augmentation of the HMD, adding precise forces and displacement to the images and sound provided to the head (see Figure 1). The generated vestibular and proprioceptive cues emphasize the visual motion to produce stronger and more compelling sensations of being accelerated, although the user, standing or seated, remains in the same place.

The force feedback should be congruent with visual motion, for instance being proportional to ego-acceleration. Yet the rendering algorithm can be adapted to the locomotion context. When the user walks virtually, the haptic device can simulate head movements in compliance with the walking pace. When the user gets into a virtual car and drives, the force feedback can push or pull to simulate acceleration and braking.

The Kinesthetic HMD approach requires at least four components: a force-feedback device, a VR headset, a head clamping part transmitting the forces, and a software platform for VR content and haptic rendering. Such a system implies hardware, software as well as safety considerations, detailed in the remainder of this section.

Applying force feedback to the head raises a number of technical challenges, especially as producing vestibular cues requires steady directional forces, able to move the head relative to the thorax in one to three directions. First, the actuator should benefit from adequate backing. Second, the system should be comfortable enough not to break immersion. Lastly, the forces should be correctly transmitted to the head.

Backing. Most force feedback devices require backing, either on the body (wearable) or on a fixed frame (grounded). Backing on the shoulders (or any body part other than the head) is complicated because of their mobility relative to the head. Holdable devices, which could be totally embedded in a helmet, come with significant limitations like motion impediment and saturation (reaction wheels and weight shifting devices), or buzzing (asymmetric vibrations). Moreover, their force capability is directly limited by their moving mass, and therefore their carrying weight.

Comfort. The head is more sensitive than other body parts to noise, motion restriction or extra weight. Therefore mechanical actuators should ideally be properly isolated, or located away from the point of application. Also, the mechanical transmission should not present any risk of wrong movements for the neck.

Point of application. The force feedback should not only be produced, but also properly transmitted, and choosing an adequate clamping system can be an issue. The clamping part should not slip on the head, yet it should remain comfortable. Pressure should be limited and therefore the area of contact maximized, while avoiding the ears or any sensitive part. Even with a capable device, producing a constant directional force on the head is challenging because when the head rotates, the alignment with its mass center is easily lost, resulting in unwanted counter torques.

Designing the proper self-motion rendering algorithms for head-based force feedback is not straightforward for several reasons, detailed in this subsection. First, the haptic stimulus should act as a metaphor, as we want the user to feel an illusory whole-body motion. Second, the provided forces and displacement should ensure safety, and not all values are acceptable. The consequent filtering can impede realism, especially for real-time interactive content that is not known in advance. Third, the effect of the force feedback might depend on the user’s position, which should be taken into account in the rendering loop.

Haptic rendering. Several authors used velocity-based vestibular feedback to study vection (Riecke, 2006; Vailland et al., 2020), but a comparative study suggested that acceleration is a better choice, producing stronger and more consistent illusory self-motion sensations (Ouarti et al., 2014). Another advantage of acceleration over velocity is that the summed amplitude over time is lower, and thus is less prone to workspace limitation issues. Among possible haptic metaphors, two were pointed out by previous works: the direct mode and the indirect mode (Lécuyer et al., 2004; Bouyer et al., 2017). In the direct mode, the haptic feedback is proportional to visual acceleration: this means that if the virtual vehicle speeds up, the haptic device will push forwards, simulating physical acceleration. In the indirect mode, the haptic feedback is inverted: when the virtual vehicle speeds up, the haptic device will pull backwards, simulating the physical body displacement.
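The two metaphors differ only in the sign of the mapping from visual acceleration to force. The following minimal Python sketch illustrates this for a single axis; the function name `haptic_force` and the `gain` parameter are hypothetical and not taken from the prototype.

```python
def haptic_force(visual_accel, gain, mode="direct"):
    """Map a visual acceleration (m/s^2) to a force command (N) on one axis.

    `gain` is a hypothetical positive coefficient (N per m/s^2).
    In direct mode the force has the same sign as the acceleration
    (push forwards when speeding up); in indirect mode it is inverted
    (pull backwards when speeding up).
    """
    if mode == "direct":
        sign = 1.0
    elif mode == "indirect":
        sign = -1.0
    else:
        raise ValueError("mode must be 'direct' or 'indirect'")
    return sign * gain * visual_accel
```

For a forward acceleration of 2 m/s² and a gain of 1.5, the direct mode yields a forward force of 3 N and the indirect mode a backward force of 3 N.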

Interactivity. Just like the visual content, the haptic effects can be fully interactive (i.e., generated from user input), pre-recorded (i.e., experienced passively), or a mix of both (i.e., actively triggered or modulated). Pre-recorded content can be analyzed and scaled to mitigate extreme values while preserving realism. Fully interactive experiences might require thorough real-time filtering, depending on the content. Trade-offs can be found with hybrid strategies, for instance triggering a pre-recorded sequence only when the user reaches the adequate part of the workspace.

Motion filtering. As our force feedback is expected to move the head, the user will lean (assuming they stay in the same position). The leaning amplitude will be limited either by the user’s anatomy or the haptic device’s capabilities. Just like with actuated seats, the rendering algorithms should avoid reaching the edges of the workspace, and eventually come back towards the center below perceptual thresholds, which can be achieved with so-called “washout filters” (Danieau et al., 2014). One potential issue in the context of head-based haptic rendering is to determine which perceptual thresholds (vestibular or proprioceptive) to consider.
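As an illustration of the washout idea, the sketch below applies a generic first-order high-pass filter to an acceleration signal: onsets pass through, while sustained values fade towards zero so that the device can drift back to its neutral position. This is a textbook filter chosen for illustration, not the algorithm of Danieau et al.; the function name and the `time_constant` parameter are assumptions, and a real washout would tune the decay against perceptual thresholds.

```python
def washout_high_pass(samples, dt, time_constant):
    """Apply a first-order high-pass filter to a list of acceleration samples.

    dt: sampling period (s); time_constant: hypothetical decay constant (s).
    Returns the filtered samples as a list of the same length.
    """
    alpha = time_constant / (time_constant + dt)
    filtered = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        # Keep the change in the input, let the previous output decay.
        y = alpha * (prev_out + x - prev_in)
        filtered.append(y)
        prev_in, prev_out = x, y
    return filtered
```

With a sustained step input, the first output sample is close to the input value and later samples decay geometrically towards zero.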

Respecting anatomical limits and avoiding any risk of injury is of course a major requirement for any haptic setup, and even more so when applied to the head. Although defining general-case safe limits is tricky, an order of magnitude of 100 N seems to be a low value both for neck muscular force in any direction for healthy adults (Lecompte, 2007) and for neck loadings involved in common non-injurious physical activities (Funk et al., 2011). Thus, values one order of magnitude lower (dozens of N) might be considered for the maximal applied forces in healthy and warmed-up adults. In addition to limiting the total applied force, we suggest limiting the applied jerk (to avoid false moves), having a hardware kill switch, and designing pre-session tests to adapt the gain to each individual. If the user is standing, the risk of imbalance and falling also has to be considered.
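A minimal software sketch of two of these safeguards is given below for a single axis: a cap on force magnitude and a cap on how fast the force command may change between control steps. Limiting the rate of change of the force is used here as a simple stand-in for jerk limiting; the function name and all parameter values are hypothetical, and such a limiter complements rather than replaces a hardware kill switch.

```python
def limit_force(target, previous, max_force, max_rate, dt):
    """Return a safe 1-D force command (N).

    target: desired force (N); previous: last commanded force (N);
    max_force: magnitude cap (N); max_rate: maximum change per second (N/s);
    dt: control period (s). All limits are assumed, application-specific values.
    """
    # Limit how much the command may change during one control step.
    max_step = max_rate * dt
    step = max(-max_step, min(max_step, target - previous))
    command = previous + step
    # Limit the absolute magnitude of the command.
    return max(-max_force, min(max_force, command))
```

Called once per control step with the previous output, the limiter makes the command ramp towards the target instead of jumping, and never lets it exceed the magnitude cap.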

In order to showcase our concept, we developed a prototype (see Figure 2 for the detailed components) based on a high-end grounded haptic arm and tuned it for a flight simulation scenario. That is, we wanted to provide the user with strong and compelling sensations of acceleration, deceleration, gravitational roll and turbulence. Also, we wanted our demo to be accessible without prior skills, so we chose a glider flight scenario, which requires minimal pilot skills. The full scenario is detailed in the accompanying video.

FIGURE 2. Flight simulation use case: the force feedback is negatively proportional to visual acceleration.

We used a Virtuose 6D 35–45 haptic arm, which provides strong forces and torques in a workspace of 1,200 × 515 × 915 mm. We placed its base about 1 m above the floor in order to have the user’s head in the middle of the workspace. By using a grounded device, we do not load the head with extra weight, and do not expose it to motor sound and vibrations.

For the HMD we used an HTC Vive headset with an unofficial “Rift S style” rigid headband found online. We designed and 3D-printed a mechanical connector replacing the Virtuose handle, plugged at the back of the headband and secured with a screw (see Figure 3). The use of a 6-DoF haptic arm allows for cancelling counter torques when applying steady 3-DoF forces.

We built upon a glider flight scenario of a standard software flight simulator (X-Plane 11). Flight simulation software outputs a series of real-time motion data, notably acceleration values intended for motion platforms. We used a Python script to receive the flight simulation acceleration data through UDP and to compute the haptic rendering. The applied forces were negatively proportional to visual acceleration, i.e., the force command was equal to the acceleration vector multiplied by a negative coefficient (adjusted heuristically). In order to cancel counter torques, when the force modulus exceeded 1 N the applied torques simulated a virtual cylinder joint along the direction of the applied force. In this case, the Virtuose opposes any rotation perpendicular to the defined direction, while translations remain free. When the forces were lower than 1 N, no torques were applied, in order to let the head move freely.
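The rendering rule described above can be sketched as follows. This is an illustrative reconstruction, not the original script: the UDP reception and the Virtuose API calls are omitted because their details are not given here, the function and constant names are hypothetical, and clamping the force modulus (rather than each axis) to the 10 N cap reported for the prototype is an assumption.

```python
import math

MAX_FORCE_N = 10.0        # total force cap reported for the prototype
TORQUE_THRESHOLD_N = 1.0  # above this modulus, the rotational constraint is on

def render_force(accel, gain):
    """Compute a 3-D force command from a simulator acceleration sample.

    accel: (ax, ay, az) in m/s^2; gain: hypothetical positive coefficient,
    applied with a negative sign as in the described rendering.
    Returns (force, constrain_rotation): force is a 3-tuple in N, and
    constrain_rotation tells whether the virtual cylinder joint is active.
    """
    force = [-gain * a for a in accel]
    modulus = math.sqrt(sum(f * f for f in force))
    if modulus > MAX_FORCE_N:
        # Scale the vector down so that its direction is preserved.
        scale = MAX_FORCE_N / modulus
        force = [f * scale for f in force]
        modulus = MAX_FORCE_N
    return tuple(force), modulus > TORQUE_THRESHOLD_N
```

In a real loop, each acceleration sample received from the simulator would be passed through such a function before sending the force and the constraint flag to the haptic device.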

Another advantage of flight simulation software is that it allows for recording and replaying scenarios. Therefore the user can choose between the intense experience of a fully interactive flight, or the comfort of a pre-recorded flight without the stress of manoeuvring the aircraft. In both cases, the force feedback is rendered in real-time from the acceleration values output. Moreover, as shown in the accompanying video, 3-DoF accelerations allow the user to experience various effects (push/pull, lateral drift, turbulence).

No “washout filter” was implemented as applied forces usually lasted only a few seconds and were limited to 10 N, so the user would not reach the edges of the workspace during our scenario.

The Virtuose arm has a hardware security switch usually controlled by a proximity sensor integrated into the handle, so that force feedback is disabled whenever the handle is not held. We replaced it with a mechanical button acting as a kill switch that could be held either by the user or the experimenter. We limited the total applied force to 10 N. The gain factor between visual acceleration and applied forces could also be refined upon user request to adjust experience intensity.

In order to evaluate our system’s ability to enhance visually-induced self-motion sensations, we conducted a user study comparing the motion sensations evoked by visual and visuo-haptic displacement stimuli. On the basis of Ouarti et al.’s results, we chose to focus on acceleration-based haptic rendering, and also to evaluate both direct mode and indirect mode, as Ouarti et al. suggested that half of the participants would prefer one and half the other (Ouarti et al., 2014).

In the context of virtual reality applications, we are not only interested in strengthening motion sensations, but also in making them more compelling. That is, providing an illusory self-motion experience rather than a “scrolling landscape” feeling. In other words, we want the motion to be felt as more egocentric (self-motion rather than landscape motion) as well as more intense (with vivid bodily sensations). Comfort should also be considered, as visual and haptic stimuli can produce immersion-breaking awkwardness if they are not congruent.

Therefore we designed several displacement stimuli and evaluated the sensations they produced as follows:

• Relative motion: am I moving in a fixed environment or am I at rest watching a moving environment?

• Acceleration: do I have bodily sensations similar to being in a moving vehicle with eyes closed?

• Likedness: how pleasant or unpleasant is the motion experience?

We designed our study to test the following hypotheses:

• H1: visuo-haptic stimuli induce more egocentric motion sensations than a similar visual stimulus

• H2: visuo-haptic stimuli induce stronger bodily sensations than a similar visual stimulus

• H3: some participants would have higher ratings (in relative motion, acceleration and/or comfort) for the direct mode, and some other for the indirect mode

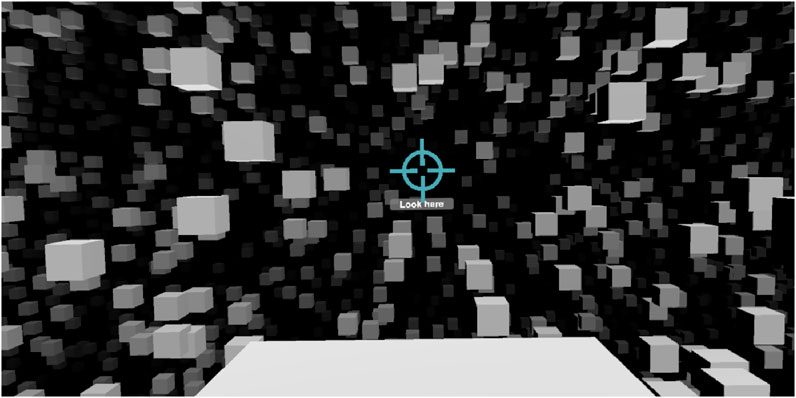

The visual context was designed so as to be as neutral as possible. The virtual environment was an empty space filled with a random spatial distribution of 20,000 white cubes, vanishing in a black fog at about 70 m distance. In order to mitigate the lack of embodiment and possible vertigo symptoms, a white 3 m × 4 m rectangle was used as a symbolic ground.

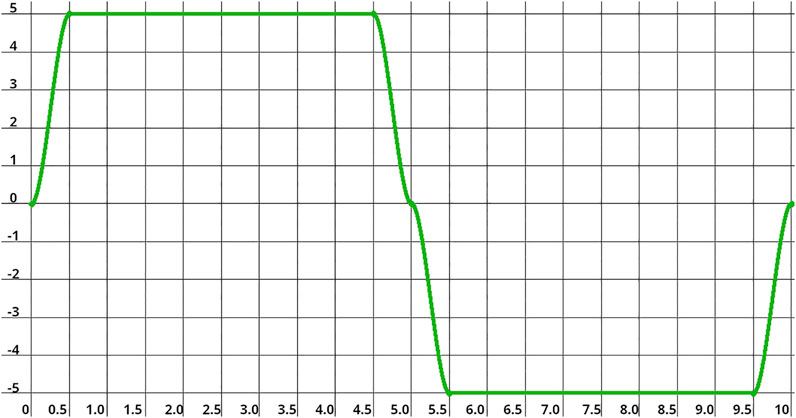

The displacement stimulus was based on a double step acceleration pattern (see Figure 4): a forward acceleration for 5 s followed by a deceleration for 5 s. In order to avoid transient effects, those two steps were eased with 0.5 s long sinusoidal curves, so the acceleration magnitude stayed at its maximum of 5 m s⁻² for 4 s.

FIGURE 4. The temporal acceleration pattern used for the stimuli. The curve shows acceleration (m s⁻²) over time (s).
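The double step pattern can be reproduced numerically with a short function. The sketch below is an approximation for illustration: the exact easing curve is not specified beyond being sinusoidal and 0.5 s long, so a raised-cosine ramp is assumed, and the function name and default parameters are hypothetical.

```python
import math

def acceleration_pattern(t, peak=5.0, step=5.0, ease=0.5):
    """Return the stimulus acceleration (m/s^2) at time t (s).

    A +peak step of `step` seconds is followed by a -peak step of the
    same length; each step ramps up and down over `ease` seconds with
    an assumed raised-cosine shape.
    """
    if t < 0.0 or t >= 2.0 * step:
        return 0.0
    sign = 1.0 if t < step else -1.0
    local = t % step  # time elapsed since the start of the current step
    if local < ease:
        envelope = 0.5 * (1.0 - math.cos(math.pi * local / ease))
    elif local > step - ease:
        envelope = 0.5 * (1.0 - math.cos(math.pi * (step - local) / ease))
    else:
        envelope = 1.0
    return sign * peak * envelope
```

With the default values, each step holds the peak magnitude from 0.5 s to 4.5 s after its onset, i.e. for 4 s, consistent with the description above.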

The visual stimulus was identical for all conditions: the cubes were accelerated according to the previously depicted pattern, creating an ambiguous relative displacement between them and the participant and ground. The three experimental conditions were as follows:

• H_NONE: no force feedback

• H_DIRECT: force feedback is proportional to the acceleration pattern: pushing during acceleration, pulling during deceleration

• H_INDIRECT: the force feedback is proportional to the opposite of the acceleration pattern

In both H_DIRECT and H_INDIRECT conditions, the haptic arm maintained head orientation constant during the stimulus to avoid any lever arm effect.

Seventeen participants (2 females, age 23–54, mean = 35.3, SD = 10.7) volunteered for the experiment. They were all recruited in the research center, had normal or corrected-to-normal vision, and no history of balance disorders. They all signed an informed consent form prior to participating in the experiment. In order to prevent cybersickness symptoms, participants were asked to hydrate well before participating, and were free to take a break at any time during the experiment. One extra participant could not finish the experiment because of cybersickness symptoms, thus their incomplete data was removed from the results.

Each participant attended a single session of about 50 min consisting of three phases: an introduction phase, an exploration phase, and an experimental phase.

In the introduction phase, the participant read and signed an informed consent form conforming to the Declaration of Helsinki. In order to clarify the vocabulary used during the experiment, the participant was orally given examples of different motion sensations arising from a displacement stimulus: estimated traveled distance, relative motion (which of me or the landscape is actually moving?), and “acceleration”, that is the non-visual sensation of being moved that can be felt when closing your eyes in a moving vehicle.

Then, the participant was introduced to the protocol through the exploration phase. The participant sat on a stool and adjusted the HMD for correct vision. The experimenter progressively explained the experimental procedure by running eight trials (four H_NONE, two H_DIRECT, two H_INDIRECT) and answering any questions (except about the nature of the stimuli). In order to avoid surprise effects, the haptic arm was not connected to the HMD before the third trial (the first visuo-haptic stimulus). On one of the visuo-haptic trials, the participant was asked to perform a “security break exercise”: they would say a safeword at any time during the stimulus, after which the experimenter immediately released the kill switch to disable haptic feedback and cancel the trial. The participant’s answers were not recorded during the introduction phase. After the eighth trial, the participant was offered a break, and the experimenter made sure they had no remaining questions about the protocol or the task, before moving to the experimental phase.

The experimental phase consisted of a randomized block of 30 trials (10 repetitions of each of the three conditions). All the trials followed the same structure. First, the participant validated the launch of the trial. Then, a visual target was displayed forward for 1.5 s (see Figure 5). Then the stimulus was played for 10 s. Finally, the participant rated the stimulus on Relative Motion, Acceleration and Likedness scales (see Figure 6).
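A randomized block of this kind can be generated in a few lines. The sketch below is only an illustration of the trial structure; how the order was actually randomized (for instance, whether any ordering constraints were applied) is not specified, and the function name and `seed` parameter are hypothetical.

```python
import random

CONDITIONS = ("H_NONE", "H_DIRECT", "H_INDIRECT")

def make_trial_block(repetitions=10, seed=None):
    """Return a shuffled list with `repetitions` trials of each condition.

    seed: optional value to make the order reproducible (an assumption,
    useful for logging the order presented to each participant).
    """
    rng = random.Random(seed)
    trials = [c for c in CONDITIONS for _ in range(repetitions)]
    rng.shuffle(trials)
    return trials
```

Calling `make_trial_block()` returns 30 condition labels in random order, with each condition appearing exactly 10 times.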

FIGURE 5. The visual stimulus used in the experiment. The target suggests looking forward and disappears when the stimulus starts.

Participants were asked to keep their gaze towards the direction indicated by the target at the beginning of the trial. They were asked to maintain their gaze direction and simply remain comfortable if the haptic stimulus modified their posture.

For each of the three evaluation criteria, as the normality assumption was violated (Shapiro–Wilk normality test, p < 0.05), a Friedman test was conducted independently and significant differences were found each time (p < 0.001 for Relative Motion, p < 0.001 for Acceleration and p < 0.001 for Likedness). Therefore, for the three criteria, the results for the H_NONE, H_DIRECT and H_INDIRECT conditions were compared pairwise with a Wilcoxon signed-rank test with a Bonferroni correction. We did not find any effect of age, gender or previous experience of virtual reality on any of the three variables.

The ratings for Relative Motion (see Figure 7) were mostly positive (egocentric motion) in the H_DIRECT and H_INDIRECT conditions, and mixed for the H_NONE condition. They were significantly lower for the H_NONE condition than for both the H_DIRECT (p < 0.001) and the H_INDIRECT (p < 0.001) conditions.

Acceleration (see Figure 8) was mostly rated above 3/5 in the H_DIRECT and H_INDIRECT conditions, while mostly under 3/5 in the H_NONE condition. Ratings were significantly lower for the H_NONE condition than for both the H_DIRECT (p < 0.001) and the H_INDIRECT (p < 0.001) conditions.

Likedness (see Figure 9) ratings were mostly weakly positive for the H_DIRECT and H_NONE conditions, and mixed for the H_INDIRECT condition. Significant differences were found between H_NONE and H_INDIRECT (p < 0.001), and to a lesser extent between H_NONE and H_INDIRECT (p = 0.0035).

Taken together, our results validate both H1 and H2, with significantly higher ratings of Relative Motion and Acceleration for visuo-haptic stimuli compared to the visual-only stimulus. Surprisingly, the rating distributions for both H_DIRECT and H_INDIRECT were very similar, and contrary to H3 we could not split the results into two populations preferring one or the other type of haptic feedback, as suggested by previous work (Ouarti et al., 2014). The only noticeable difference was the Likedness ratings, slightly more negative for the H_INDIRECT condition, leading to significant differences with the H_NONE condition, whose ratings were mostly positive (almost never judged as uncomfortable).

Taken together, the results of our study show that, as expected, the visuo-haptic stimuli induced more vivid and more compelling self-motion sensations than a similar visual-only stimulus. The enhanced motion sensations might arise from vestibular and/or proprioceptive cues. As the participants could resist the haptic feedback to varying degrees to maintain their posture, their leaning amplitude could vary a lot between the stimuli. However, we could not find, formally or informally, a clear difference in the accompanying sensations. On top of that, there was a significant difference in amplitude between individuals depending on their weight (which varied by a factor of two between participants). Yet even heavier individuals with no visible leaning might answer with high self-motion ratings. Further research should clarify the respective contributions of vestibular and proprioceptive cues.

The vestibular haptic cues might also alter the estimations of travel distance, as suggested by previous work (Harris et al., 2000). We initially planned to include this task in our study, but the pilot tests revealed a surprisingly high cognitive load for making both distance and velocity/acceleration estimations. After providing a traveled distance estimate, the participants could hardly make assessments about relative motion or non-visual sensations and vice versa, as if those two kinds of task had conflicting short-term memory processes. The perception of displacement given by visual and visuo-haptic stimuli remains to be studied.

As there is little difference between the outcomes of the two visuo-haptic conditions, it is not clear whether the chosen haptic metaphors were effective or whether simply having an extra physical stimulation would improve users’ ratings. Further studies should include a haptic-only condition, and/or vary the stimulus intensity in graded steps.

The informal reactions to our flight simulator prototype confirmed that the visuo-haptic experience was much more compelling than the visual-only one. After having tried the haptic-enhanced scenario, users felt that the visual-only scenario was “empty” or “flat”, and also more prone to dizziness. It seemed that both low-frequency and high-frequency haptic feedback contributed strongly to the quality of experience: the roaring take-off would lack power without the slow and strong pull, but would also lack realism without the swift turbulence.

Although the mismatch generated by the use of inertial cues only at the head might be a limitation of our approach, users did not mention it. Modulating the amplitude of the haptic stimuli (which was obviously lower than on a real flight) was accepted, and being able to adjust the coefficient was perceived as handy.

The rigid headband can be used separately from the haptic arm, but also from the HMD, which means we could use it with other visual conditions, from a simple screen to video-projected space or augmented reality glasses. A quick fastener on various haptic devices could allow for a versatile usage.

Depending on the application the displacement might be active or passive, and the user can be seated or standing. The design of head-based haptic feedback for active displacement might lead to novel locomotion techniques, improving user performance and/or quality of experience. When standing, the workspace can be limited depending on the chosen haptic device, and hardware should also be adapted to imbalance and falling issues.

The lack of bodily sensations is a major issue for virtual reality, limiting user quality of experience and agency, and increasing cybersickness occurrence. Our approach might open thrilling possibilities to apply haptic cinematography principles, as proposed by Guillotel et al. (2016). Beyond the realism of physical simulations, creators could make use of non-diegetic effects to increase dramatic intensity, and direct attention with staging or anticipation techniques. More generally, haptics could be seen as a medium in itself, able to improve storytelling and make better narratives, just like cinema sound design.

Head-based force feedback opens exciting possibilities in terms of haptic rendering, training simulation and virtual reality quality of experience. It also comes with various application-related challenges to address in future work.

Our prototype based on a 6-DoF haptic arm was able to provide stable forces in any of the three directions. Yet both visual and vestibular perceptual thresholds are anisotropic (Crane, 2014), thus directionality could be taken into account when elaborating head-based visuo-haptic stimuli, and the psychophysical thresholds could be investigated.

High individual variance, habituation and attentional phenomena, as well as influence from various top-down factors (Riecke et al., 2005b), need to be taken into account to achieve the rendering of bodily sensations. Control laws could be adjusted depending on the user as well as the virtual environment context, like getting in a virtual vehicle or flying. These adjustments might be achieved with offline tests to estimate user sensitivity, as well as tuned in real time depending on the user’s state, similarly to motion platform washout algorithms. The role of habituation and attention could also be further studied.
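As a rough illustration of what such a user-dependent control law might look like, the sketch below combines a per-user gain with a generic first-order high-pass (washout-style) filter, so that a sustained acceleration yields a force cue that fades back to zero. It is not the control law used in the prototype, nor the filter of any cited work; the class name `WashoutGain`, the parameters `user_gain` and `time_constant_s`, and all numeric values are assumptions chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class WashoutGain:
    """Hypothetical per-user force cue: gain times a first-order high-pass filter."""

    user_gain: float = 1.0        # assumed per-user sensitivity coefficient
    time_constant_s: float = 2.0  # assumed washout time constant, in seconds
    _prev_in: float = 0.0
    _prev_out: float = 0.0

    def step(self, accel: float, dt: float) -> float:
        """Return the scaled cue for one simulation step of duration dt (seconds)."""
        # Discrete first-order high-pass: onsets pass through, sustained input decays.
        alpha = self.time_constant_s / (self.time_constant_s + dt)
        out = alpha * (self._prev_out + accel - self._prev_in)
        self._prev_in = accel
        self._prev_out = out
        return self.user_gain * out


# Usage: a constant virtual acceleration applied for 10 s at 100 Hz.
cue_filter = WashoutGain(user_gain=0.5, time_constant_s=1.0)
cues = [cue_filter.step(1.0, 0.01) for _ in range(1000)]
```

In this sketch the cue is strongest at the onset of the acceleration and then washes out, while `user_gain` could be set from an offline sensitivity test or adjusted during the session.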

For specific scenarios, smaller haptic devices could be used. For instance, for a flight simulation altitude control exercise, the force feedback might only simulate gravitational tilt: when the plane pitches up, the user is pulled backwards, and when the plane is pointing down the user is pushed forwards.
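The gravitational tilt mapping described above can be sketched as follows, assuming a single longitudinal force axis on which negative values pull the head backwards and positive values push it forwards. The function name `tilt_force`, the gain, the nominal head mass and the force limit are hypothetical values for illustration, not parameters of the prototype.

```python
import math

G = 9.81             # gravitational acceleration, m/s^2
HEAD_MASS_KG = 5.0   # assumed nominal head mass (hypothetical value)


def tilt_force(pitch_rad: float, gain: float = 0.2, max_force_n: float = 5.0) -> float:
    """Longitudinal force in newtons for a given aircraft pitch angle.

    Positive pitch (nose up) gives a negative force (pull backwards);
    negative pitch (nose down) gives a positive force (push forwards).
    """
    raw = -gain * HEAD_MASS_KG * G * math.sin(pitch_rad)
    # Clamp to an assumed safety limit on the force applied to the head.
    return max(-max_force_n, min(max_force_n, raw))


# Usage: the plane pitches up by 10 degrees, then points down by 10 degrees.
pull_back = tilt_force(math.radians(10.0))
push_forward = tilt_force(math.radians(-10.0))
```

The `gain` parameter plays the role of an adjustable amplitude coefficient, and the clamp reflects the fact that forces applied at the head should stay well below those of a real flight.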

In this paper, we proposed a novel approach to make self-motion sensations stronger and more compelling in virtual reality applications, by means of head-based force feedback. The provided vestibular and proprioceptive cues enhance the perception of self-motion, making it more egocentric and more bodily, while preserving the user’s comfort. We discussed the technical and scientific challenges raised by head-based force feedback and demonstrated a flight simulator use case with a proof-of-concept prototype based on a high-end grounded haptic arm. We evaluated our system with a user study focused on the motion sensations provided by visuo-haptic displacement stimuli. Our results showed that the visuo-haptic rendering induced more vivid and more egocentric sensations of self-motion than a similar visual-only rendering.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Comité Opérationnel d’Evaluation des Risques Légaux et Ethiques Inria Rennes avis n° 2021–15. The patients/participants provided their written informed consent to participate in this study.

AC designed the prototype and the use case, conducted the study and wrote the paper. AL provided the original idea, helped design the experimental protocol, and provided corrections to the final writing. Both authors approved the final version of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The authors would like to thank Guillermo Andrade Barroso for his kind help with the 3D printing.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2022.838720/full#supplementary-material

1 Varjo website (accessed: 2021-11-29) https://varjo.com/products/vr-3

2 Wikipedia notice for 4D film (accessed: 2021-11-29) https://en.wikipedia.org/wiki/4D_film

Boletsis, C. (2017). The new era of Virtual Reality Locomotion: A Systematic Literature Review of Techniques and a Proposed Typology. Multimodal Tech. Interaction. 1, 24. doi:10.3390/mti1040024

Bouyer, G., Chellali, A., and Lécuyer, A. (2017). “Inducing Self-Motion Sensations in Driving Simulators Using Force-Feedback and Haptic Motion,” in 2017 IEEE Virtual Reality (VR) (IEEE), 84–90. doi:10.1109/vr.2017.7892234

Britton, Z., and Arshad, Q. (2019). Vestibular and Multi-Sensory Influences upon Self-Motion Perception and the Consequences for Human Behavior. Front. Neurol. 10, 63. doi:10.3389/fneur.2019.00063

Chang, H.-Y., Tseng, W.-J., Tsai, C.-E., Chen, H.-Y., Peiris, R. L., and Chan, L. (2018). “Facepush: Introducing normal Force on Face with Head-Mounted Displays,” in Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, 927–935.

Crane, B. T. (2014). Human Visual and Vestibular Heading Perception in the Vertical Planes. J. Assoc. Res Otolaryngol. 15, 87–102. doi:10.1007/s10162-013-0423-y

Cullen, K. E. (2012). The Vestibular System: Multimodal Integration and Encoding of Self-Motion for Motor Control. Trends Neurosciences. 35, 185–196. doi:10.1016/j.tins.2011.12.001

Danieau, F., Lécuyer, A., Guillotel, P., Fleureau, J., Mollet, N., and Christie, M. (2014). A Kinesthetic Washout Filter for Force-Feedback Rendering. IEEE Trans. Haptics. 8, 114–118. doi:10.1109/TOH.2014.2381652

Danieau, F., Fleureau, J., Guillotel, P., Mollet, N., Lécuyer, A., and Christie, M. (2012). “Hapseat: Producing Motion Sensation with Multiple Force-Feedback Devices Embedded in a Seat,” in Proceedings of the 18th ACM symposium on Virtual reality software and technology, 69–76.

Farkhatdinov, I., Ouarti, N., and Hayward, V. (2013). “Vibrotactile Inputs to the Feet Can Modulate Vection,” in 2013 World Haptics Conference (WHC) (IEEE), 677–681. doi:10.1109/whc.2013.6548490

Fetsch, C. R., Turner, A. H., DeAngelis, G. C., and Angelaki, D. E. (2009). Dynamic Reweighting of Visual and Vestibular Cues during Self-Motion Perception. J. Neurosci. 29, 15601–15612. doi:10.1523/jneurosci.2574-09.2009

Funk, J. R., Cormier, J. M., Bain, C. E., Guzman, H., Bonugli, E., and Manoogian, S. J. (2011). Head and Neck Loading in Everyday and Vigorous Activities. Ann. Biomed. Eng. 39, 766–776. doi:10.1007/s10439-010-0183-3

Gallagher, M., Dowsett, R., and Ferrè, E. R. (2019). Vection in Virtual Reality Modulates Vestibular‐evoked Myogenic Potentials. Eur. J. Neurosci. 50, 3557–3565. doi:10.1111/ejn.14499

Gugenheimer, J., Wolf, D., Eiriksson, E. R., Maes, P., and Rukzio, E. (2016). “Gyrovr: Simulating Inertia in Virtual Reality Using Head Worn Flywheels,” in Proceedings of the 29th Annual Symposium on User Interface Software and Technology, 227–232.

Guillotel, P., Danieau, F., Fleureau, J., Rouxel, I., and Christie, M. (2016). “Introducing Basic Principles of Haptic Cinematography and Editing,” in Proceedings of the Eurographics Workshop on Intelligent Cinematography and Editing, 15–21.

Harris, L. R., Jenkin, M., and Zikovitz, D. C. (2000). Visual and Non-visual Cues in the Perception of Linear Self Motion. Exp. Brain Res. 135, 12–21. doi:10.1007/s002210000504

Kim, K. (2016). Is Virtual Reality (Vr) Becoming an Effective Application for the Market Opportunity in Health Care, Manufacturing, and Entertainment Industry? Eur. Scientific J. 12(9), 14–22. doi:10.19044/esj.2016.v12n9p14

Kon, Y., Nakamura, T., and Kajimoto, H. (2017). “Hangerover: Hmd-Embedded Haptics Display with Hanger Reflex,” in ACM SIGGRAPH 2017 Emerging Technologies, 1–2.

Kruijff, E., Marquardt, A., Trepkowski, C., Lindeman, R. W., Hinkenjann, A., Maiero, J., et al. (2016). “On Your Feet! Enhancing Vection in Leaning-Based Interfaces through Multisensory Stimuli,” in Proceedings of the 2016 Symposium on Spatial User Interaction, 149–158.

Lecompte, J. (2007). Biomécanique du segment tête-cou in vivo & aéronautique militaire: Approches neuromusculaire et morphologique. Ph.D. thesis. Paris: Arts et Métiers ParisTech.

Lécuyer, A., Vidal, M., Joly, O., Mégard, C., and Berthoz, A. (2004). “Can Haptic Feedback Improve the Perception of Self-Motion in Virtual Reality?,” in 12th International Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, 2004. HAPTICS’04. Proceedings (IEEE), 208–215.

Nilsson, N. C., Nordahl, R., Sikström, E., Turchet, L., and Serafin, S. (2012). “Haptically Induced Illusory Self-Motion and the Influence of Context of Motion,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (Springer), 349–360. doi:10.1007/978-3-642-31401-8_32

Ouarti, N., Lécuyer, A., and Berthoz, A. (2014). “Haptic Motion: Improving Sensation of Self-Motion in Virtual Worlds with Force Feedback,” in 2014 IEEE Haptics Symposium (HAPTICS) (IEEE), 167–174. doi:10.1109/haptics.2014.6775450

Palmisano, S., Allison, R. S., Schira, M. M., and Barry, R. J. (2015). Future Challenges for Vection Research: Definitions, Functional Significance, Measures, and Neural Bases. Front. Psychol. 6, 193. doi:10.3389/fpsyg.2015.00193

Peng, Y.-H., Yu, C., Liu, S.-H., Wang, C.-W., Taele, P., Yu, N.-H., et al. (2020). “Walkingvibe: Reducing Virtual Reality Sickness and Improving Realism while Walking in Vr Using Unobtrusive Head-Mounted Vibrotactile Feedback,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–12. doi:10.1145/3313831.3376847

Prothero, J. D., Hoffman, H. G., Parker, D. E., Furness, T. A., and Wells, M. J. (1995). “Foreground/background Manipulations Affect Presence,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Los Angeles, CA: SAGE Publications), 1410–1414. doi:10.1177/154193129503902111

Reiner, M. (2004). The Role of Haptics in Immersive Telecommunication Environments. IEEE Trans. Circuits Syst. Video Technol. 14, 392–401. doi:10.1109/tcsvt.2004.823399

Riecke, B. E., Schulte-Pelkum, J., Avraamides, M. N., Von Der Heyde, M., and Bülthoff, H. H. (2005a). “Scene Consistency and Spatial Presence Increase the Sensation of Self-Motion in Virtual Reality,” in Proceedings of the 2nd Symposium on Applied Perception in Graphics and Visualization, 111–118. doi:10.1145/1080402.1080422

Riecke, B. E., Västfjäll, D., Larsson, P., and Schulte-Pelkum, J. (2005b). Top-down and Multi-Modal Influences on Self-Motion Perception in Virtual Reality. Proc. HCI Int., 1–10.

Riecke, B. E. (2006). “Simple User-Generated Motion Cueing Can Enhance Self-Motion Perception (Vection) in Virtual Reality,” in Proceedings of the ACM symposium on Virtual reality software and technology, 104–107. doi:10.1145/1180495.1180517

Rietzler, M., Hirzle, T., Gugenheimer, J., Frommel, J., Dreja, T., and Rukzio, E. (2018). “Vrspinning: Exploring the Design Space of a 1d Rotation Platform to Increase the Perception of Self-Motion in Vr,” in Proceedings of the 2018 Designing Interactive Systems Conference, 99–108.

Soave, F., Bryan-Kinns, N., and Farkhatdinov, I. (2020). “A Preliminary Study on Full-Body Haptic Stimulation on Modulating Self-Motion Perception in Virtual Reality,” in International Conference on Augmented Reality, Virtual Reality and Computer Graphics (Springer), 461–469. doi:10.1007/978-3-030-58465-8_34

Teng, S.-Y., Huang, D.-Y., Wang, C., Gong, J., Seyed, T., Yang, X.-D., et al. (2019). “Aarnio: Passive Kinesthetic Force Output for Foreground Interactions on an Interactive Chair,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 1–13.

Vailland, G., Gaffary, Y., Devigne, L., Gouranton, V., Arnaldi, B., and Babel, M. (2020). “Vestibular Feedback on a Virtual Reality Wheelchair Driving Simulator: A Pilot Study,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, 171–179.

Väljamäe, A., Larsson, P., Västfjäll, D., and Kleiner, M. (2006). Vibrotactile Enhancement of Auditory-Induced Self-Motion and Spatial Presence. J. Audio Eng. Soc. 54, 954–963.

Wang, C., Huang, D.-Y., Hsu, S.-w., Hou, C.-E., Chiu, Y.-L., Chang, R.-C., et al. (2019). “Masque: Exploring Lateral Skin Stretch Feedback on the Face with Head-Mounted Displays,” in Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology, 439–451.

Wang, D., Ohnishi, K., and Xu, W. (2019). Multimodal Haptic Display for Virtual Reality: A Survey. IEEE Trans. Ind. Electronics 67, 610–623.

Keywords: virtual reality, force feedback, haptics, vection, simulator

Citation: Costes A and Lécuyer A (2022) The “Kinesthetic HMD”: Inducing Self-Motion Sensations in Immersive Virtual Reality With Head-Based Force Feedback. Front. Virtual Real. 3:838720. doi: 10.3389/frvir.2022.838720

Received: 18 December 2021; Accepted: 06 April 2022;

Published: 29 April 2022.

Edited by:

Michael Zyda, University of Southern California, United States

Reviewed by:

Anderson Maciel, Federal University of Rio Grande do Sul, Brazil

Copyright © 2022 Costes and Lécuyer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anatole Lécuyer, anatole.lecuyer@inria.fr
