
ORIGINAL RESEARCH article

Front. Virtual Real., 13 August 2021

Sec. Virtual Reality and Human Behaviour

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.712378

This article is part of the Research Topic Immersive Reality and Personalized User Experiences

While studies have increasingly used virtual hands and objects in virtual environments to investigate various processes of psychological phenomena, conflicting findings have been reported even at the most basic level of perception and action. To reconcile this situation, the present study aimed 1) to assess biases in size perception of a virtual hand using a strict psychophysical method and 2) to provide firm and conclusive evidence of the kinematic characteristics of reach-to-grasp movements with various virtual effectors (whole hand or fingertips only, with or without tactile feedback of a target object). Experiments were conducted using a consumer immersive virtual reality device. In a size judgment task, participants judged whether a presented virtual hand or an everyday object was larger than the remembered size. The results showed the same magnitude of underestimation (approximately 5%) for the virtual hand and the object, and no influence of object location, visuo-proprioceptive congruency, or short-term experience of controlling the virtual hand. Furthermore, there was a moderate positive correlation between actual hand size and perception bias. Analyses of reach-to-grasp movements revealed longer movement times and larger maximum grip aperture (MGA) in a virtual, as opposed to a physical, environment, but the MGA did not differ from that in the physical environment when grasping was performed without tactile feedback. The MGA appeared earlier in the time course of grasping movements in all virtual reality conditions, regardless of the type of virtual effector. These findings confirm and corroborate previous evidence and may contribute to the field of virtual hand interfaces for interactions with virtual worlds.

An increasing number of studies use consumer immersive virtual reality (VR) devices to investigate human perception, cognition, and action (e.g., Keizer et al., 2016; Osumi et al., 2017; Freeman et al., 2018; Sawada et al., 2020). A major benefit of these devices is that they allow the experimenter to control environments and stimuli that are difficult to control in the real world. To accurately interpret cognitive or sensorimotor alterations in virtual environments (VEs), in psychological experiments as well as in practical applications, it is important to know the perceptual and motor characteristics of virtual objects and to take them into consideration. Many researchers have revealed human biases in the perception of the size, distance, and shape of virtual objects and spaces (see Loomis et al., 2003; Renner et al., 2013; Creem-Regehr et al., 2015). In particular, there is a long-standing concern that object size is subjectively underestimated in virtual worlds (Thompson et al., 2004). Although recent consumer VR devices have been shown to provide high accuracy even for experimental purposes (Hornsey et al., 2020), it has not yet been confirmed how the perception of the size of one’s own body parts differs between physical environments (PEs) and immersive VEs created by a current-generation consumer device (cf. Bhargava et al., 2021). To interact with virtual objects from a natural first-person perspective, virtual hands are especially important. While several studies have shown characteristic kinematic properties of reach-to-grasp movements with a virtual hand, such as longer movement time, wider maximum grip aperture (MGA), and delayed MGA timing (Magdalon et al., 2011; Levin et al., 2015), these patterns were not necessarily replicated by other studies (e.g., Furmanek et al., 2019).
Perceived virtual hand size is also crucial because it may influence the perception of the size of the whole body (Linkenauger et al., 2013; Mine et al., 2020). To accurately interpret VR studies and improve VR experience, the characteristics of virtual hands in VEs in terms of both perception and motor control need to be elucidated.

The first aim of this study was to assess biases in the size perception of a virtual hand in a VE and to determine the effects of three factors on size perception: 1) presented position, 2) visuo-proprioceptive congruency between virtual and real hands, and 3) motor control experience of the virtual hand. These three factors were selected because each is known or suspected to influence human perception in VEs. Presented position is important because spatial heterogeneity has been observed in distance perception: near distances are overestimated and far distances underestimated in VEs (see Loomis et al., 2003; Renner et al., 2013). Visuo-proprioceptive incongruency arises when the visual image of a virtual counterpart of a viewer’s body part is deliberately presented at a location where the real one does not exist; such disparity between physical and virtual hands in a VE influences multisensory integration processes (Fossataro et al., 2020), which may affect hand size perception. Motor control experience of the virtual hand and/or adaptation to the VE could change size perception because even minimal experience of reaching movements with a virtual arm induces some calibration of distance perception in a VE (Linkenauger et al., 2015). Understanding the effects of these factors on hand size perception is crucial for developing virtual experiences with deeper immersion and for the precise control of psychological experiments.

To examine hand size perception, the current study used a task in which participants judged whether a presented virtual hand or object was larger than the remembered hand or object. Using this task in a PE, Cardinali et al. (2019) investigated the estimation bias of actual hand size among children aged 6–10 years. In their experiment, 3D-printed hand models or novel objects of different sizes were presented using the method of limits, and the participants judged them based on their memory of their own hand size or the object size. The authors found a consistent underestimation bias only for memorized hand size, with the bias increasing with age; that is, older children perceived smaller hand models as the same size as their own hands, and hence they demonstrated an overestimation bias for the hand model in a PE. This bias is opposite to that usually observed in VEs, where objects are generally perceived to be smaller than expected (Thompson et al., 2004; Stefanucci et al., 2012; Creem-Regehr et al., 2015; but see also Stefanucci et al., 2015; de Siqueira et al., 2021). Previous research therefore suggests two possibilities for hand size perception in an immersive VE: the virtual hand may be overestimated, as the hand model was in a PE, or underestimated, as has long been reported for virtual objects.

Perception bias was assessed by a strict psychophysical method. The point of subjective equality (PSE) and the just noticeable difference (JND) of hand size perception in the VE were calculated using the method of constant stimuli. The PSE is the stimulus value that one perceives as equal to the standard stimulus, while the JND, also known as the difference threshold, is the minimum stimulus change that one can detect 50 percent of the time. Previous studies used verbal scaling (Linkenauger et al., 2013; Lin et al., 2019) and visual or physical matching (Jung et al., 2018; de Siqueira et al., 2021), which are easy to implement but also susceptible to cognitive or motor biases other than perceptual bias. While Stefanucci et al. (2012) and Stefanucci et al. (2015) used a two-alternative forced choice, which is one of the most reliable psychophysical methods, those studies focused on non-human objects on a 2D monitor and did not use psychophysical functions to calculate the PSE. In addition, most previous studies on size perception in VEs reported the PSE but not the JND, which indicates the range of stimulus change that observers cannot reliably detect and can therefore be used to evaluate whether a biased perception is noticeable to observers. Using the method of constant stimuli and a two-alternative forced choice, this study estimated the PSE and JND precisely for both body parts and non-human objects.

The second purpose of this study was to establish evidence for the kinematic characteristics of reach-to-grasp movements in a VE created by a consumer immersive VR device. Although some studies have shown kinematic alterations when visual or haptic information is changed using VR techniques, the results have been equivocal owing to differences in participant samples, methods of creating VEs, target objects, control methods of virtual hands, devices, and experimental conditions (Kuhlen et al., 2000; Bingham et al., 2007; Magdalon et al., 2011; Bozzacchi and Domini, 2015; Levin et al., 2015; Ozana et al., 2018; Furmanek et al., 2019). For example, wider MGAs in VEs than in PEs were reported across many studies (Kuhlen et al., 2000; Magdalon et al., 2011; Bozzacchi and Domini, 2015; Levin et al., 2015) but not in a recent one with an immersive consumer VR device (Furmanek et al., 2019). The profile of grip aperture (the distance between the tips of the thumb and index finger) is particularly important in the motor control of reach-to-grasp movements and is susceptible to changes in visual information about the target/effector and in the physical characteristics of the effector (Jakobson and Goodale, 1991; Gentilucci et al., 2004; Bongers, 2010; Itaguchi and Fukuzawa, 2014; Itaguchi, 2020). If a haptic-free VR system is to be used in neurorehabilitation for stroke patients, a comprehensive characterization of the aperture profiles in the two environments will provide important information regarding feasibility (Furmanek et al., 2019) and will also help clarify whether kinematic alterations in the movements are caused by the patient’s symptoms or by the VE itself. The current study thus compared four commonly used virtual hand conditions with a baseline PE condition; the grasping effector was represented as a standard hand model (VE-H) or just two spheres, the positions of which corresponded to the tips of the thumb and index finger (VE-S).
Furthermore, this study introduced conditions in which no tactile feedback from the target object was provided (VE-Hn and VE-Sn). The sphere condition was based on Bozzacchi and Domini (2015) and Furmanek et al. (2019), and the tactile feedback manipulation on the experimental conditions described in Bingham et al. (2007) and Furmanek et al. (2019).

This study adopted an exploratory approach and did not have specific hypotheses about size perception or the kinematic characteristics of reach-to-grasp movements in an immersive VE. Its focus was to provide firm and conclusive evidence on the above two topics, on which previous evidence has been inconclusive, using precise psychological methods and a current-generation immersive VR device. The first goal was to clarify how one perceives the size of a human hand and a non-human everyday object presented in a VE and to determine what factors influence this perception. The second goal was to clarify the differences in aperture control during grasping movements between the PE and VEs with/without visual and tactile information.

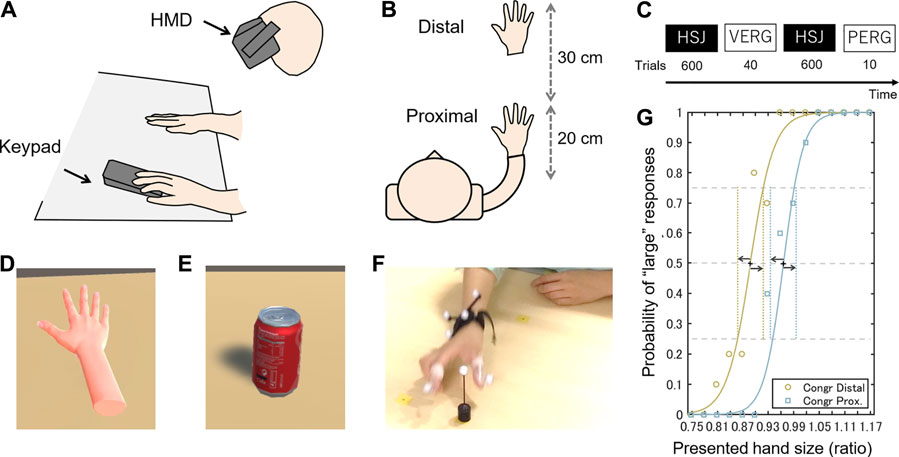

Sixteen right-handed young adults (mean age = 22.7 years, SD = 3.4) participated in the main experiment, which investigated hand size perception and the kinematics of reach-to-grasp movements in a VE. The participants wore a head-mounted display (HMD) (Oculus Rift, Facebook, Inc.) while sitting in a chair and putting their right hand on a desk (Figures 1A,B). In the main experiment, measurements of hand size perception were conducted both before and after participants performed the reach-to-grasp movement task to examine the effect of adaptation to the virtual hand on perception of hand size (Figure 1C). An example of a virtual hand (CG model) is shown in Figure 1D.

FIGURE 1. Experimental settings and stimuli. Side (A) and top (B) views of the experimental scene, the time course of the main experiment (C), the virtual hand presented in the main experiment (D), the virtual can presented in the reference experiment (E), the experimental scene of the reach-to-grasp movement task (F), and an example of psychophysical functions for the size judgment task (G). In panel (G), the crosses (+) indicate the PSE (50% probability of small/large responses) and the arrows indicate the JND. HSJ: hand size judgment task, VERG: reach-to-grasp movement task in a virtual environment, PERG: reach-to-grasp movement task in a real environment.

In addition, a reference experiment was performed with another group of 16 young adults (mean age = 23.0 years, SD = 4.2) to assess the bias in size perception of a remembered everyday object. In the reference experiment, size judgments were performed for a can of cola (120 mm in height and 66 mm in diameter, Figure 1E) instead of a virtual hand. Object size perception was measured only once, and the reach-to-grasp movement task was not performed by this group of participants. The other procedures were identical to those in the main experiment.

All participants provided written informed consent prior to participating in the experiment. This study was approved by the ethics committee of Shizuoka University (approval no. 19–45) and was conducted in accordance with institutional guidelines and regulations.

A virtual hand and desk were presented on the HMD. Unity (version 2019.1.8f1) was used to present visual stimuli and collect participants’ responses. Hand size perception was examined using the method of constant stimuli, with participants judging whether a presented virtual hand was larger or smaller than the remembered hand by pressing a key with their left hand (two-alternative forced choice). As soon as they responded to a stimulus, the next stimulus was presented. Response keys were counterbalanced across participants. Participants were instructed not to move their right hand and not to move their heads to look at their hands from a close distance.

The size of the virtual hands ranged from 0.75 to 1.17 times the participants’ actual hand size (in 0.03 increments, with 15 sizes in total; 1.0 corresponds to the actual size), and the presentation order was randomized. Size ratios rather than absolute lengths (cm) were used to control for individual differences in hand size among participants. The size range and interval were determined from the results of a pilot experiment. The participants in the main experiment performed 10 trials per size in each block (15 sizes × 10 trials = 150 trials); therefore, one judgment task consisted of 600 trials (150 trials × 2 positions × 2 congruency conditions).
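As a sketch of the trial structure described above, the following Python assumes that the 15 levels are equally spaced between 0.75 and 1.17 (which implies a step of 0.03) and that each block is an independently shuffled list of 10 repetitions per level. The function names and the per-block seeding are illustrative only, not taken from the study's Unity implementation.

```python
import itertools
import random


def size_levels(start=0.75, step=0.03, n_levels=15):
    """Stimulus sizes as ratios of the participant's actual hand size."""
    # round() removes floating-point drift such as 1.1700000000000002
    return [round(start + step * i, 2) for i in range(n_levels)]


def block_trials(levels, reps=10, seed=None):
    """One block: every size level repeated `reps` times, in random order."""
    rng = random.Random(seed)
    trials = [level for level in levels for _ in range(reps)]
    rng.shuffle(trials)
    return trials


levels = size_levels()
# 2 positions x 2 congruency conditions = 4 blocks of 15 sizes x 10 trials
blocks = {
    (position, congruency): block_trials(levels, seed=i)
    for i, (position, congruency) in enumerate(
        itertools.product(("proximal", "distal"), ("congruent", "incongruent"))
    )
}
n_trials = sum(len(trials) for trials in blocks.values())  # trials per judgment task
```

Shuffling within each block, rather than across the whole task, keeps the blocked position and congruency conditions intact while randomizing size order, as the text describes.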

Three factors were manipulated in the experiment: hand position (proximal and distal), visuo-proprioceptive congruency of hand position between the PE and VE (congruent and incongruent), and motor control experience (measurement phase: pre- and post-movement task). The proximal and distal hand positions were 20 and 50 cm away from the participant’s body, respectively (Figure 1B). The transverse position of the hand was chosen by each participant as the most comfortable position and was kept constant across the experiments; in most cases, the hand was positioned in front of the participant’s shoulder. In the congruent trials, the virtual hand was presented at the location of the actual hand (proximal–proximal and distal–distal), while in the incongruent trials, it was presented at the other location, where the actual hand was not (proximal–distal and distal–proximal). The order of these blocked conditions was counterbalanced across participants.
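The text states only that block order was counterbalanced, not how. One common scheme for four blocks is a balanced Latin square (Williams design), sketched below under that assumption; the condition labels and the `block_order` helper are hypothetical. Cycling through the four rows would assign each order to four of the 16 participants.

```python
def balanced_latin_square(n):
    """Williams design for an even number of conditions: each condition
    appears once in every serial position and immediately follows every
    other condition exactly once."""
    if n % 2:
        raise ValueError("this construction requires an even number of conditions")
    first, low, high, take_low = [0], 1, n - 1, True
    while len(first) < n:  # first row: 0, 1, n-1, 2, n-2, ...
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    # Each subsequent row shifts every condition index by one (mod n)
    return [[(c + r) % n for c in first] for r in range(n)]


# Hypothetical labels: (position of the virtual hand, visuo-proprioceptive congruency)
CONDITIONS = [
    ("proximal", "congruent"),
    ("proximal", "incongruent"),
    ("distal", "congruent"),
    ("distal", "incongruent"),
]
SQUARE = balanced_latin_square(len(CONDITIONS))


def block_order(participant_index):
    """Block order for one participant, cycling through the square's rows."""
    row = SQUARE[participant_index % len(SQUARE)]
    return [CONDITIONS[i] for i in row]
```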

The same CG hand model and color were used for all participants, with only its size changing. The skin color was adjusted to look natural for Japanese participants. The virtual hand size (finger length, finger width, and palm size) was customized for each participant based on their actual hand size: the length between the tip and the proximal joint of the index finger, the width of the index finger, and the length between the wrist and the proximal joint of the index finger were calculated from 3D positions measured with an optical motion tracking system (Flex 13, Optitrack, Inc.) before the experiment, and the resulting ratios were applied to the virtual CG hand. Participants could not move the virtual hand during the size judgment task. Unlike the real desk, the surface of the virtual desk was not patterned, to avoid providing any size cues for the hand size judgments.
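A minimal sketch of the customization step, assuming that each length is the Euclidean distance between two tracked 3D points and that the CG model has known default dimensions. The two-point measurement of finger width and the `MODEL_DIMS` values are hypothetical; the study does not report them.

```python
import math

# Hypothetical default dimensions (cm) of the unscaled CG hand model.
MODEL_DIMS = {"finger_length": 7.5, "finger_width": 1.5, "palm_length": 10.0}


def measured_dims(index_tip, index_proximal_joint, width_a, width_b, wrist):
    """Hand dimensions (cm) from tracked 3D points given as (x, y, z) tuples.

    width_a / width_b: two points on either side of the index finger
    (a hypothetical way of measuring finger width).
    """
    return {
        "finger_length": math.dist(index_tip, index_proximal_joint),
        "finger_width": math.dist(width_a, width_b),
        "palm_length": math.dist(wrist, index_proximal_joint),
    }


def model_scale(measured, size_ratio=1.0):
    """Per-dimension scale factors for the CG model.

    size_ratio is the stimulus level in the judgment task, e.g. 0.90
    presents a hand at 90% of the participant's actual size.
    """
    return {key: size_ratio * measured[key] / MODEL_DIMS[key] for key in MODEL_DIMS}
```

Keeping the per-participant scale separate from `size_ratio` mirrors the text's use of ratios rather than absolute lengths: the same stimulus levels mean the same relative size for every hand.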

The participants in the main experiment performed reach-to-grasp movements in the VE between the two hand size judgment tasks. The participants reached for, grasped, and lifted a cylindrical target (2 cm in height and 1.5 cm in diameter) in four virtual hand conditions: VE-H, VE-S, VE-Hn, and VE-Sn (H: hand, S: sphere, n: no feedback). In the hand condition, the grasping effector was represented as a hand model, the configuration of which was customized for each participant as in the hand size judgment task. In the sphere condition, the grasping effector was represented as only two spheres (1 cm in diameter) whose positions corresponded to the tips of the thumb and index finger; no other parts of the hand were presented. In the feedback condition, the participants grasped and transported an actual physical target, whereas in the no-feedback condition, they received no tactile feedback because the target existed only in the VE. Note that the virtual target could be grasped and transported in the VE using a custom-made touch detection algorithm: when one of the virtual fingers/spheres touched the target object, the object adhered to that finger/sphere.
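The touch detection algorithm is described only as custom-made. One plausible sketch, assuming a y-up coordinate frame, treats each fingertip (or sphere effector, 1 cm in diameter) as a sphere and the target as a solid upright cylinder (1.5 cm in diameter, 2 cm high): contact occurs when the distance from the sphere centre to the cylinder is within the sphere radius, after which the target follows the fingertip's displacement. All function names here are hypothetical.

```python
import math


def sphere_touches_cylinder(center, sphere_radius, base_center, cyl_radius, cyl_height):
    """True if a sphere overlaps a solid upright cylinder (y axis is up).

    center, base_center: (x, y, z) tuples in cm; base_center is the centre of
    the cylinder's bottom face.
    """
    dx = center[0] - base_center[0]
    dy = center[1] - base_center[1]
    dz = center[2] - base_center[2]
    # How far the sphere centre lies outside the cylinder radially and vertically
    radial_gap = max(math.hypot(dx, dz) - cyl_radius, 0.0)
    vertical_gap = max(-dy, dy - cyl_height, 0.0)
    return math.hypot(radial_gap, vertical_gap) <= sphere_radius


def follow(target_pos, prev_tip, tip):
    """Once attached, move the target by the fingertip's frame-to-frame displacement."""
    return tuple(p + (c - q) for p, q, c in zip(target_pos, prev_tip, tip))


# Dimensions from the text: 1 cm spheres, target 1.5 cm in diameter and 2 cm high
SPHERE_R, TARGET_R, TARGET_H = 0.5, 0.75, 2.0
```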

The start position was located at the proximal position, and the target object was located at the distal position. Passive reflective markers were attached to the tips of the participant’s thumb and index finger, to the wrist, and to the top of the target object (Figure 1F). Using the 3D positions of the fingers and target object and the six-degrees-of-freedom coordinates of the wrist, measured with the optical motion tracking system at 120 Hz, the participants could control the virtual hand and grasp the target in the VE. In the hand condition, the participants could control only the thumb and index finger, and they were thus asked to clench the other three fingers during the task to avoid inconsistencies between proprioception and visual information. Ten trials of reach-to-grasp movements in each condition (40 trials in total) were recorded for the analysis, followed by 1 min of unrecorded familiarization trials with virtual hand control in the VE-H condition, so that all participants experienced the same condition immediately before the second hand size judgment task. After the second hand size judgment task, the participants performed another 10 trials of reach-to-grasp movements in the PE without the HMD as baseline data.

The probabilities of judgment responses were plotted for each hand size and then fitted with a logistic function to create a psychophysical function (for details, see, e.g., Marks and Algom, 1998 or Gescheider, 2013). Figure 1G shows an example of a psychophysical function for one participant. The PSE (the size at which the probability of large/small judgments was 50%) and the JND (half the difference between the 25 and 75% points) were calculated for each participant.

To assess perception biases (overestimation or underestimation) in hand and object size perception, Welch’s t-tests against 1 (actual size) were conducted on the PSE data, with p-values adjusted using Holm’s method. PSE values larger/smaller than 1 indicate that participants perceived a virtual object to be larger (overestimation)/smaller (underestimation) than its actual size. A three-way within-participant ANOVA was then conducted on the PSE and JND data to test the effects of the three factors on size perception. For the reference task, which was conducted only once and did not include the visuo-proprioceptive congruency factor, Welch’s t-tests were used to examine the effect of the presented location on the measures. In addition, Pearson’s correlation coefficients between actual hand size and the two measures (PSE and JND) were calculated to examine a possible relationship that could be taken into account in VR studies.
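A dependency-free sketch of the two ingredients above, under stated assumptions. Comparing a condition's PSE values with the constant 1 is sketched here as a one-sample t-test (df = n − 1, which matches the t (15) values reported for the 16 participants). Holm's step-down procedure then adjusts a family of p-values. Converting t to a p-value requires a t-distribution CDF (for example, `scipy.stats.t.sf`), which is omitted here.

```python
import math
import statistics


def one_sample_t(values, mu0=1.0):
    """t statistic and degrees of freedom for testing mean(values) against mu0."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return (statistics.fmean(values) - mu0) / se, n - 1


def holm_adjust(pvals):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k); monotonicity is enforced
        running_max = max(running_max, min(1.0, (m - rank) * pvals[i]))
        adjusted[i] = running_max
    return adjusted
```

An adjusted p-value below 0.05 corresponds to rejecting that hypothesis at a familywise level of 0.05. This is why, with two tests, the larger raw p-value is compared against 0.05 and the smaller against 0.025.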

Four standard kinematic measures of the reach-to-grasp movements were calculated: movement time, MGA, relative timing of MGA (MGA timing), and plateau duration. Movement time was defined as the time between the onset of the movement and the end of the grasp. The MGA is the maximum aperture (the distance between the tips of the thumb and index finger) during the movement. MGA timing is the time at which the MGA occurs, relative to movement time. Plateau duration is the relative length of the period during which the aperture is larger than 90% of the MGA; it reflects the temporal coordination of the reach and grasp components. The analysis of the reach-to-grasp movements followed Itaguchi and Fukuzawa (2014) and Itaguchi (2020): movement onset was defined as the first time point at which tangential velocity exceeded 1 cm/s, and movement offset (the time of grasp) as the first time point after peak velocity at which tangential velocity fell below 5 cm/s and the change in aperture had ended. Each VE condition was compared with the PE condition, with p-values adjusted using Holm’s method. An ANOVA was not conducted because the present study focused on the difference between each VE condition and natural hand grasping performed in the physical environment.
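The kinematic definitions above can be sketched as follows. This sketch assumes that tangential velocity is computed from the wrist marker by central differences and that samples are uniformly spaced (120 Hz in the study). Plateau duration is approximated as the fraction of samples in the movement window where the aperture exceeds 90% of the MGA. For brevity, the additional offset criterion that the aperture change has ended is omitted.

```python
import math


def kinematic_measures(t, wrist, thumb, index, v_on=1.0, v_off=5.0):
    """Reach-to-grasp measures from sampled marker positions.

    t: sample times (s); wrist, thumb, index: lists of (x, y, z) in cm.
    Onset: first sample where wrist tangential speed exceeds v_on (cm/s).
    Offset: first sample after peak speed where speed drops below v_off.
    Raises StopIteration if either threshold is never crossed.
    """
    n = len(t)
    speed = [0.0] * n
    for i in range(1, n - 1):  # central differences; endpoints left at 0
        speed[i] = math.dist(wrist[i + 1], wrist[i - 1]) / (t[i + 1] - t[i - 1])
    aperture = [math.dist(a, b) for a, b in zip(thumb, index)]

    onset = next(i for i in range(n) if speed[i] > v_on)
    peak = max(range(onset, n), key=lambda i: speed[i])
    offset = next(i for i in range(peak, n) if speed[i] < v_off)
    window = range(onset, offset + 1)

    movement_time = t[offset] - t[onset]
    mga_idx = max(window, key=lambda i: aperture[i])
    mga = aperture[mga_idx]
    above = sum(1 for i in window if aperture[i] > 0.9 * mga)
    return {
        "movement_time": movement_time,
        "mga": mga,
        "mga_timing": (t[mga_idx] - t[onset]) / movement_time,  # 0 = onset, 1 = offset
        "plateau_duration": above / len(window),                # fraction of movement
    }
```

Normalizing MGA timing and plateau duration by movement time makes them comparable across conditions, which matters here because movement times were longer in the VE conditions.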

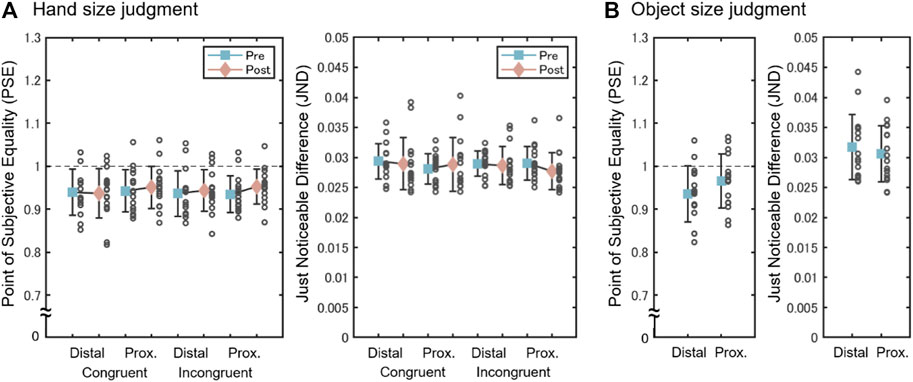

The results show that the average PSE in the hand size judgment was significantly smaller than 1 in all conditions, indicating that participants perceived the virtual hand to be smaller than their actual hand. Figure 2 shows the PSE and JND averaged for each experimental condition. The grand means of the PSE and the JND for the hand size judgment (main experiment, Figure 2A) were 0.94 (SD = 0.04) and 0.030 (SD = 0.002), respectively, and 0.95 (SD = 0.06) and 0.031 (SD = 0.005) for the object size judgment (reference experiment, Figure 2B). Welch’s t-tests on the hand size judgments showed that the PSE was significantly smaller than 1 in all eight comparisons [ts (15) = 4.52, 4.67, 4.78, 6.19, 4.35, 4.02, 4.63, and 4.71, adj. ps < 0.05]. The object size judgment data showed that the PSE was significantly smaller than 1 in both the distal [t (15) = 3.94, adj. p < 0.05] and the proximal [t (15) = 2.18, adj. p < 0.05] positions. The grand mean of the goodness-of-fit measure (R²) for the psychophysical functions was 0.81 (SD = 0.07) in the hand size judgment task and 0.79 (SD = 0.09) in the object size judgment task.

FIGURE 2. Averaged point of subjective equality (PSE) and just noticeable difference (JND) in the hand size judgment task (A) and the object size judgment task (B). PSE values smaller than 1 indicate that participants perceived a virtual object as smaller than its actual size (underestimation). Error bars indicate standard deviations, and small open circles indicate individual data. Prox.: proximal.

The ANOVA on the PSE data in the hand size judgment task did not reveal any statistically significant effects of hand position, congruency, or motor experience [F (1, 17) = 0.00, p = 0.99, ηp² = 0.00; F (1, 17) = 0.41, p = 0.53, ηp² = 0.02; F (1, 17) = 0.10, p = 0.76, ηp² = 0.01, respectively]. The ANOVA on the JND data did not reveal statistically significant effects of hand position, congruency, or motor experience either [F (1, 17) = 0.13, p = 0.72, ηp² = 0.01; F (1, 17) = 0.02, p = 0.90, ηp² = 0.00; F (1, 17) = 0.00, p = 0.95, ηp² = 0.00, respectively]. No interaction effects were statistically significant. Similarly, Welch’s t-tests on the object size judgment data did not reveal a statistically significant difference between the distal and proximal locations for either the PSE or the JND [t (30) = 1.34, p = 0.20, d = 0.33; t (29) = 0.65, p = 0.52, d = 0.16].

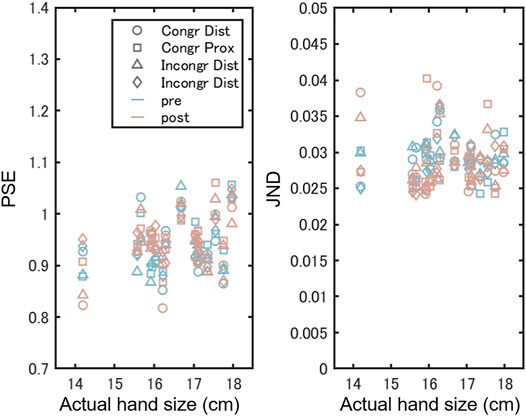

Figure 3 shows the relationships between actual hand size and the PSE/JND. The correlation coefficients were 0.32 [t (126) = 3.38, p < 0.001, 95% CI = 0.15 to 0.47] and −0.01 [t (126) = 0.14, p = 0.88, 95% CI = −0.18 to 0.16] for the PSE and JND, respectively, indicating a moderate positive correlation between actual hand size and the PSE; that is, participants with smaller hands showed a stronger underestimation bias for the virtual hand.
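The correlation statistics follow from their standard definitions. Assuming each of the 16 participants contributes one data point per condition (16 × 8 = 128 points, consistent with the reported df of 126), the sketch below computes Pearson's r, the corresponding t statistic with n − 2 degrees of freedom, and a 95% confidence interval via Fisher's z transformation. Fisher's z is a common choice for this interval, but the study does not state how its interval was computed.

```python
import math
import statistics


def pearson_r(xs, ys):
    """Pearson's correlation coefficient from paired samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing r against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def fisher_ci(r, n, level=0.95):
    """Confidence interval for r via Fisher's z transformation."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    crit = statistics.NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return math.tanh(z - crit * se), math.tanh(z + crit * se)
```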

FIGURE 3. Relation between actual hand size (cm) and point of subjective equality (PSE) and just noticeable difference (JND). A significant correlation was found only for the PSE (r = 0.32). Congr: congruent, Incongr: incongruent, Dist: distal, Prox: proximal.

Each kinematic measure was compared between each VE condition and the PE condition (baseline; the significance level was set to 0.05). Table 1 shows the descriptive statistics of the kinematic measures and the results of the multiple comparisons with the PE condition. Movement times were significantly longer in all VE conditions than in the PE condition. MGA was significantly larger in the conditions with tactile feedback (VE-H and VE-S) than in the PE condition, but not in the conditions without tactile feedback (VE-Hn and VE-Sn). The MGA occurred significantly earlier in all VE conditions than in the PE condition. Regarding plateau duration, none of the VE conditions differed significantly from the PE condition, although the observed effect sizes were not small overall.

The present study investigated the perception of the size of a hand and of an everyday object in a virtual environment by comparing them to remembered sizes. The current results reveal two new findings. First, the data show the same degree of underestimation for objects presented in a virtual environment regardless of the type of object (body part or everyday object); furthermore, object location, visuo-proprioceptive congruency, and short-term experience of controlling a virtual hand did not influence the bias or the precision of hand size perception. Second, there was a moderate positive correlation between actual hand size and the PSE: the smaller a participant’s hand, the smaller they perceived the virtual hand relative to its actual size.

The data show the same degree of underestimation for objects presented in a VE regardless of the type of object. While previous studies empirically showed changes in the size perception of non-human 3D objects (Stefanucci et al., 2012; Stefanucci et al., 2015; de Siqueira et al., 2021) or a human-like hand (Lin et al., 2019) in VEs, none of them investigated both types of objects in the same VE, and even in these studies, the estimation bias was not consistent. The present study, however, showed a PSE of approximately 0.95 (about 5% underestimation) on average for both the virtual hand and the everyday object (PSE = 0.94 and 0.95, respectively) presented with a consumer VR device. This result at least suggests that consistent underestimation would be observed with the same VR device and the same game engine. The consistent underestimation of virtual object sizes might be attributed to reduced environmental cues: reduced visual cues are known to alter perceived distance and to cause underestimation of object size in PEs (Leibowitz and Moore, 1966; Ono et al., 1974; Mon-Williams and Tresilian, 1999).

The underestimation bias of the virtual hand observed in this study corroborates evidence from previous VR reports (Thompson et al., 2004; Stefanucci et al., 2012; Creem-Regehr et al., 2015; Stefanucci et al., 2015) but is not consistent with an earlier report on a PE: Cardinali et al. (2019), using a task similar to the present one, showed an underestimation bias in children for their remembered own hand, which in turn suggests an overestimation of the size of the physical hand model. This apparent discrepancy could not arise if the PE and VE provided exactly the same visual experience, which suggests that the environments differ in ways critical for size perception, even with current-generation VR devices. Note, however, that other differences in methods and results between the studies do not allow a straightforward interpretation; for example, the earlier study used fixed hand models as stimuli for all participants and found underestimation of own hand size not only in visual but also in haptic judgments. In addition, their measure of perception bias was computed by subtraction using absolute sizes. Notably, the authors observed that the underestimation effect was stronger in older children. This age-dependent change in the underestimation of one’s own hand is worth considering when interpreting the positive correlation between actual hand size and the PSE in the current results. Although part of the age-dependent change in the perception bias could be explained by maturation of the brain’s multisensory integration system (Cardinali et al., 2019), hand size itself might also play an important role.

Note that the present study compared virtual objects with remembered objects. This method inevitably involves effects of memory on size judgment; that is, we cannot dissociate whether the virtual objects are perceived as smaller than the physical object or whether the remembered objects in participants’ memories are larger than the physical ones. These questions, which are crucial for understanding the size perception system in humans, should be addressed in future studies. Nevertheless, the current findings may have significant practical implications for the use of VR devices for consumer games or academic studies.

None of the three factors investigated in the present study had a statistically significant effect on the underestimation bias. Many studies indicate that distance estimation biases in VEs depend on the distance from the body: overestimation occurs in near space and underestimation in far space (Loomis et al., 2003; Renner et al., 2013). For example, a recent study found an underestimation bias starting at a distance of 30–40 cm from the body in a multisensory matching task (Hornsey et al., 2020). Usually, however, the distinction between “near space” and “far space” is based on the definition of a “reachable” versus an “out of reach” distance (Berti and Frassinetti, 2000; Gamberini et al., 2008; Linkenauger et al., 2015); therefore, it is not surprising that the virtual hands and objects presented in the current experiments were not influenced by the distance factor: since an object 50 cm away from the viewer is still reachable, both locations in the current experiment (20 and 50 cm) were within the participants’ “near space.” Encouragingly for applications, the same degree of underestimation was observed for virtual hands and objects, and the bias in hand size perception was not modulated by prior experience of virtual hand control. In addition, visuo-proprioceptive incongruency did not affect hand size perception, which suggests that hand size perception relies on visual information alone, unlike the enhancement in touch detection observed by Fossataro et al. (2020), which likely requires multisensory integration processes. This robustness of the size perception of virtual objects suggests that, at least in terms of size perception, a human hand is not a special entity in a VE and is not influenced by sensorimotor or multisensory factors other than visual input.

The perceptual bias and sensitivity observed in the present experiment suggest that developers and experimenters should pay attention to object size when creating a virtual world with a consumer VR device. The present psychophysical experiment revealed a stable underestimation bias for both the virtual hand and the everyday object: virtual objects presented at the same size as the actual object were perceived as approximately 5% smaller. This value exceeds the average JND (approximately 3% of the actual object size), suggesting that, at the default (unenlarged) built-in scale, participants can notice the size difference of a virtual hand and of everyday objects. This is consistent with academic studies (Thompson et al., 2004; Creem-Regehr et al., 2015) and with reports from consumers that virtual objects appear smaller than expected. Furthermore, the asymmetry in perceptual tolerance for changes in body size should also be taken into account. Pavani and Zampini (2007) reported that, in a fake-hand (rubber-hand) illusion paradigm using a video-based system, presenting an enlarged hand biased estimates of actual hand size, whereas presenting a downsized hand did not. The authors argued that individuals adapt easily to enlargements in body size but not to reductions, citing similar asymmetries in other lines of research (Mauguiere and Courjon, 1978; Gandevia and Phegan, 1999; de Vignemont et al., 2005). Thus, because enlargement appears to be tolerated more readily than reduction, developers may wish to compensate for the underestimation bias by increasing object size by approximately 5%, which could reduce the feeling of discomfort often reported in VEs. To create the best user experience, and keeping in mind that not all participants underestimated the virtual hand and object, this bias should be further assessed in strictly controlled psychophysical experiments.
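The arithmetic behind the suggested compensation can be made explicit. As a minimal sketch, assume the bias is multiplicative, i.e. perceived size = (1 − b) × rendered size, with b ≈ 0.05 (the average underestimation reported here), and treat a bias as noticeable when it exceeds the JND of about 3%. Under that assumption, the scale that exactly cancels the bias is 1/(1 − b) ≈ 1.053, slightly more than a flat 5% increase. The function names are hypothetical, and a single group-average factor ignores the individual differences noted above.

```python
def compensation_scale(bias: float) -> float:
    """Scale factor that cancels a multiplicative underestimation bias.

    Assumes perceived = (1 - bias) * rendered, so rendering at
    1 / (1 - bias) times the true size is perceived at the true size.
    """
    if not 0.0 <= bias < 1.0:
        raise ValueError("bias must be in [0, 1)")
    return 1.0 / (1.0 - bias)


def exceeds_jnd(bias: float, jnd: float) -> bool:
    """True if the relative size error is larger than the just-noticeable difference."""
    return abs(bias) > jnd


if __name__ == "__main__":
    bias, jnd = 0.05, 0.03  # group averages reported in the text
    scale = compensation_scale(bias)
    print(f"noticeable: {exceeds_jnd(bias, jnd)}")  # noticeable: True
    print(f"scale factor: {scale:.4f}")             # scale factor: 1.0526
    # An object with a true width of 10 cm would be rendered at about 10.53 cm
    print(f"render 10 cm object at {10 * scale:.2f} cm")
```

Because the bias is defined relative to the rendered size, a flat 5% enlargement leaves a small residual underestimation (about 0.25%), which is well below the reported JND.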

To further consolidate the current evidence regarding object manipulation in VEs, the kinematics of reach-to-grasp movements are discussed next. For conciseness, the discussion focuses on previous studies that used an immersive HMD. First, movement times were longer in the VE conditions than in the PE condition, which appears to hold across immersive VE systems regardless of whether tactile feedback from the target object is provided (Magdalon et al., 2011; Levin et al., 2015; Furmanek et al., 2019).
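Movement time is commonly derived from the transport velocity of the hand. The sketch below is an assumption, not the procedure used in this study: it defines movement onset and offset as the first and last samples at which wrist speed exceeds a fixed fraction of its peak (the 5% criterion and the function names are hypothetical), and it assumes 3-D wrist positions sampled at a constant interval `dt`.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def speeds(positions: Sequence[Point], dt: float) -> List[float]:
    """Finite-difference speed between consecutive 3-D samples (units per second)."""
    return [math.dist(a, b) / dt for a, b in zip(positions, positions[1:])]


def movement_time(positions: Sequence[Point], dt: float, frac: float = 0.05) -> float:
    """Time (s) from the first to the last sample moving faster than frac * peak speed.

    The threshold rule is a hypothetical choice for illustration.
    """
    v = speeds(positions, dt)
    threshold = frac * max(v)
    moving = [i for i, s in enumerate(v) if s > threshold]
    if not moving:
        raise ValueError("no movement above threshold")
    # v[i] spans samples i..i+1, so the movement covers samples moving[0]..moving[-1] + 1
    return (moving[-1] + 1 - moving[0]) * dt


if __name__ == "__main__":
    # Synthetic wrist trace (cm along x): at rest, a 9 cm reach, at rest again
    xs = [0, 0, 0, 1, 3, 6, 8, 9, 9, 9]
    wrist = [(float(x), 0.0, 0.0) for x in xs]
    print(f"MT = {movement_time(wrist, dt=0.01):.2f} s")  # MT = 0.05 s
```

A relative threshold keeps the criterion comparable across slower VE reaches and faster physical reaches; an absolute speed threshold would be an equally plausible alternative.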

Second, an increased MGA was observed only in the VE-H/S conditions, consistent with earlier experiments using tactile feedback (Magdalon et al., 2011; Levin et al., 2015). A larger MGA can be explained as a strategy to reduce the likelihood of collision by adding a safety margin in environments with greater uncertainty (Jakobson and Goodale, 1991; Magdalon et al., 2011). In addition, the underestimation of virtual hand size found in the hand size judgment task might contribute to the enlarged MGA, although subjective discomfort regarding hand size was not explicitly assessed in the reach-to-grasp task. In contrast, the MGA comparison between the PE and the VE-Hn/Sn conditions yielded no significant differences (and very small effect sizes). This seems to be in line with the findings of Furmanek et al. (2019), who used virtual fingertips without tactile feedback and observed an increased MGA only for their larger targets (7.2 and 5.4 cm) but not for the smallest one (3.6 cm). Because the current experiment used a target size of 1.5 cm, it is reasonable to presume that, without tactile feedback, the MGA does not change for relatively small targets. Although the reason for the target-size effect on the MGA in the previous study is unknown, one plausible explanation is that the absence of tactile feedback allows participants to forgo the extra safety margin for avoiding collision, resulting in a normal MGA amplitude for small to medium-sized targets even in a VE.
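To make the safety-margin interpretation concrete, the following sketch computes grip aperture as the thumb–index fingertip distance at each sample, takes its maximum as the MGA, and expresses the margin as MGA minus target width. The fingertip coordinates, the function names, and this margin definition are illustrative assumptions rather than the analysis pipeline of this study.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def grip_aperture(thumb: Sequence[Point], index: Sequence[Point]) -> List[float]:
    """Per-sample Euclidean distance between thumb and index fingertip markers."""
    return [math.dist(t, i) for t, i in zip(thumb, index)]


def max_grip_aperture(thumb: Sequence[Point], index: Sequence[Point]) -> Tuple[float, int]:
    """Return (MGA, sample index at which it occurs)."""
    aperture = grip_aperture(thumb, index)
    k = max(range(len(aperture)), key=aperture.__getitem__)
    return aperture[k], k


def safety_margin(mga: float, target_width: float) -> float:
    """Extra opening beyond the target width (same units as the inputs)."""
    return mga - target_width


if __name__ == "__main__":
    # Synthetic trace in cm: thumb fixed at the origin, index finger opening then closing
    thumb = [(0.0, 0.0, 0.0)] * 5
    index = [(0.0, y, 0.0) for y in (2.0, 5.0, 8.0, 6.0, 1.5)]
    mga, k = max_grip_aperture(thumb, index)
    # MGA = 8.0 cm at sample 2
    print(f"MGA = {mga:.1f} cm at sample {k}")
    # margin for a 1.5 cm target = 6.5 cm
    print(f"margin for a 1.5 cm target = {safety_margin(mga, 1.5):.1f} cm")
```

Under this reading, an enlarged MGA for the same 1.5 cm target shows up directly as a larger margin.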

Third, MGA occurred earlier in all VE conditions than in the PE condition, indicating that the relative timing of aperture closure was advanced regardless of the type of virtual effector. Previous studies, however, reported mixed results for this measure: MGA appeared earlier (in relative distance but not in relative time) in Furmanek et al. (2019) but was delayed in Magdalon et al. (2011) and Levin et al. (2015). The discrepancy might be explained by differences in the haptic systems used; the latter two studies used an exoskeleton device to provide tactile feedback from the target object. Although an earlier MGA typically appears in quick or easy grasping with both a hand and a tool (Itaguchi, 2020), it has also been suggested that MGA timing is strategically adjusted to grasping difficulty in a VE (Kuhlen et al., 2000). Thus, to better understand the time course of the aperture, it is important to consider individual differences in grasping strategy as well as the task requirements of each VE.
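Because studies differ in whether MGA timing is normalized by movement time or by distance travelled, the two normalizations can disagree for the same trial. The sketch below assumes uniformly sampled 3-D wrist positions and already known onset, offset, and MGA sample indices (hypothetical inputs and function names) and computes both measures. With a reach that decelerates strongly, an MGA at 60% of movement time already falls at about 94% of the path.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def relative_time(k_mga: int, onset: int, offset: int) -> float:
    """MGA time as a percentage of movement time (uniform sampling assumed)."""
    return 100.0 * (k_mga - onset) / (offset - onset)


def relative_distance(wrist: Sequence[Point], k_mga: int, onset: int, offset: int) -> float:
    """Percentage of the onset-to-offset wrist path already travelled at the MGA sample."""
    steps = [math.dist(a, b)
             for a, b in zip(wrist[onset:offset], wrist[onset + 1:offset + 1])]
    # steps[j] is the distance from sample onset + j to sample onset + j + 1
    return 100.0 * sum(steps[:k_mga - onset]) / sum(steps)


if __name__ == "__main__":
    # Synthetic wrist x-positions (cm): fast early transport, long deceleration
    xs = [0, 10, 25, 40, 50, 56, 60, 62, 63, 63.5, 64]
    wrist = [(float(x), 0.0, 0.0) for x in xs]
    print(relative_time(6, 0, 10))             # 60.0
    print(relative_distance(wrist, 6, 0, 10))  # 93.75
```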

Last, plateau durations (the period of static open aperture, whose length reflects the degree of dissociation between the reaching and grasping components) were longer in the VE conditions than in the PE condition, with moderate effect sizes (d = 0.44–0.53); however, the differences were not statistically significant. Previous studies have shown that plateau durations are longer in tool-use grasping (Gentilucci et al., 2004; Bongers et al., 2012; Itaguchi and Fukuzawa, 2014), possibly reflecting a strategy unconsciously employed to ensure successful grasping (Itaguchi, 2020). Given these findings, the largely unchanged plateau durations in the current study can likely be attributed to the use of the natural hand, free of exoskeletal constraints, to control the virtual effectors. These findings also suggest that plateau duration is more sensitive to the difficulty of controlling an effector than to uncertainty in visual or tactile information.
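Plateau duration can be operationalized in several ways. As one hypothetical definition (the tolerance rule and function name are assumptions, not the criterion used in this study), the sketch below treats the plateau as the contiguous run of samples around the MGA during which aperture stays within a tolerance of the MGA (here 5%, an arbitrary choice) and converts its length to seconds using the sampling interval `dt`.

```python
from typing import Sequence


def plateau_duration(aperture: Sequence[float], dt: float, tol: float = 0.05) -> float:
    """Duration (s) of the contiguous run around the MGA where aperture >= (1 - tol) * MGA.

    The tolerance rule is a hypothetical operationalization of the
    "static open aperture" period.
    """
    k = max(range(len(aperture)), key=aperture.__getitem__)  # sample index of the MGA
    floor = (1.0 - tol) * aperture[k]
    start = k
    while start > 0 and aperture[start - 1] >= floor:
        start -= 1
    end = k
    while end < len(aperture) - 1 and aperture[end + 1] >= floor:
        end += 1
    return (end - start) * dt


if __name__ == "__main__":
    # Synthetic aperture trace (cm) sampled at 100 Hz
    aperture = [2.0, 5.0, 7.7, 7.9, 8.0, 7.8, 7.7, 6.0, 3.0]
    print(f"plateau = {plateau_duration(aperture, dt=0.01):.2f} s")  # plateau = 0.04 s
```

A looser tolerance lengthens the measured plateau, so any comparison between PE and VE conditions should hold the criterion fixed.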

The present study showed the same amplitude of size underestimation for a virtual hand as for an everyday object in a VE. The effect was stable and was not influenced by object location, visuo-proprioceptive congruency, or the participant’s short-term experience of controlling a virtual hand. However, actual hand size was correlated with the perception bias. For practical and academic applications, both the underestimation bias and its dependence on hand size should be compensated for. Furthermore, analyses of reach-to-grasp movements with various virtual effectors revealed that longer movement times and larger MGAs are general characteristics of movements in VEs, although the larger MGA was not observed for “air grasping” without a physical target. MGA appeared earlier for all virtual effectors in the current VE setting, whereas changes in plateau duration varied across participants and were not statistically significant. These findings corroborate previous evidence and may contribute to the design of virtual hand interfaces for interaction with virtual worlds.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the ethics committee of Shizuoka University. The participants provided their written informed consent to participate in this study.

The author confirms being the sole contributor of this work and has approved it for publication.

This work was supported by the Japan Society for the Promotion of Science, KAKENHI (grant numbers 17H06345 and 20H01785).

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The author is grateful for the assistance provided by Tomoka Matsumura and Luna Ando in collecting the experimental data.

Berti, A., and Frassinetti, F. (2000). When Far Becomes Near: Remapping of Space by Tool Use. J. Cogn. Neurosci. 12 (3), 415–420. doi:10.1162/089892900562237

Bhargava, A., Venkatakrishnan, R., Venkatakrishnan, R., Solini, H., Lucaites, K. M., Robb, A., et al. (2021). Did I Hit the Door? Effects of Self-Avatars and Calibration in a Person-Plus-Virtual-Object System on Perceived Frontal Passability in VR. IEEE Trans. Visualization Comput. Graphics. doi:10.1109/tvcg.2021.3083423

Bingham, G., Coats, R., and Mon-Williams, M. (2007). Natural Prehension in Trials without Haptic Feedback but Only when Calibration Is Allowed. Neuropsychologia 45 (2), 288–294. doi:10.1016/j.neuropsychologia.2006.07.011

Bongers, R. M. (2010). “Do Changes in Movements after Tool Use Depend on Body Schema or Motor Learning?,” in International Conference on Human Haptic Sensing and Touch Enabled Computer Applications (Springer), 271–276.

Bongers, R. M., Zaal, F. T. J. M., and Jeannerod, M. (2012). Hand Aperture Patterns in Prehension. Hum. Move. Sci. 31 (3), 487–501. doi:10.1016/j.humov.2011.07.014

Bozzacchi, C., and Domini, F. (2015). Lack of Depth Constancy for Grasping Movements in Both Virtual and Real Environments. J. Neurophysiol. 114 (4), 2242–2248. doi:10.1152/jn.00350.2015

Cardinali, L., Serino, A., and Gori, M. (2019). Hand Size Underestimation Grows during Childhood. Sci. Rep. 9 (1), 1–8. doi:10.1038/s41598-019-49500-7

Creem-Regehr, S. H., Stefanucci, J. K., and Thompson, W. B. (2015). “Perceiving Absolute Scale in Virtual Environments: How Theory and Application Have Mutually Informed the Role of Body-Based Perception,” in Psychology of Learning and Motivation (Elsevier), 195–224. doi:10.1016/bs.plm.2014.09.006

de Siqueira, A. G., Venkatakrishnan, R., Venkatakrishnan, R., Bhargava, A., Lucaites, K., Solini, H., et al. (2021). “Empirically Evaluating the Effects of Perceptual Information Channels on the Size Perception of Tangibles in Near-Field Virtual Reality,” in IEEE Virtual Reality and 3D User Interfaces (VR) (IEEE), 1–10. doi:10.1109/vr50410.2021.00086

de Vignemont, F., Ehrsson, H. H., and Haggard, P. (2005). Bodily Illusions Modulate Tactile Perception. Curr. Biol. 15 (14), 1286–1290. doi:10.1016/j.cub.2005.06.067

Fossataro, C., Rossi Sebastiano, A., Tieri, G., Poles, K., Galigani, M., Pyasik, M., et al. (2020). Immersive Virtual Reality Reveals that Visuo-Proprioceptive Discrepancy Enlarges the Hand-Centred Peripersonal Space. Neuropsychologia 146, 107540. doi:10.1016/j.neuropsychologia.2020.107540

Freeman, D., Haselton, P., Freeman, J., Spanlang, B., Kishore, S., Albery, E., et al. (2018). Automated Psychological Therapy Using Immersive Virtual Reality for Treatment of Fear of Heights: a Single-Blind, Parallel-Group, Randomised Controlled Trial. The Lancet Psychiatry 5 (8), 625–632. doi:10.1016/S2215-0366(18)30226-8

Furmanek, M. P., Schettino, L. F., Yarossi, M., Kirkman, S., Adamovich, S. V., and Tunik, E. (2019). Coordination of Reach-To-Grasp in Physical and Haptic-free Virtual Environments. J. Neuroengineering Rehabil. 16 (1), 78. doi:10.1186/s12984-019-0525-9

Gamberini, L., Seraglia, B., and Priftis, K. (2008). Processing of Peripersonal and Extrapersonal Space Using Tools: Evidence from Visual Line Bisection in Real and Virtual Environments. Neuropsychologia 46 (5), 1298–1304. doi:10.1016/j.neuropsychologia.2007.12.016

Gandevia, S. C., and Phegan, C. M. L. (1999). Perceptual Distortions of the Human Body Image Produced by Local Anaesthesia, Pain and Cutaneous Stimulation. J. Physiol. 514 (Pt 2), 609–616. doi:10.1111/j.1469-7793.1999.609ae.x

Gentilucci, M., Roy, A., and Stefanini, S. (2004). Grasping an Object Naturally or with a Tool: Are These Tasks Guided by a Common Motor Representation?. Exp. Brain Res. 157 (4), 496–506. doi:10.1007/s00221-004-1863-8

Hornsey, R. L., Hibbard, P. B., and Scarfe, P. (2020). Size and Shape Constancy in Consumer Virtual Reality. Behav. Res. Methods 52 (4), 1587–1598. doi:10.3758/s13428-019-01336-9

Itaguchi, Y., and Fukuzawa, K. (2014). Hand-use and Tool-Use in Grasping Control. Exp. Brain Res. 232 (11), 3613–3622. doi:10.1007/s00221-014-4053-3

Itaguchi, Y. (2020). Toward Natural Grasping with a Tool: Effects of Practice and Required Accuracy on the Kinematics of Tool-Use Grasping. J. Neurophysiol. 123 (5), 2024–2036. doi:10.1152/jn.00384.2019

Jakobson, L. S., and Goodale, M. A. (1991). Factors Affecting Higher-Order Movement Planning: a Kinematic Analysis of Human Prehension. Exp. Brain Res. 86 (1), 199–208. doi:10.1007/BF00231054

Jung, S., Bruder, G., Wisniewski, P. J., Sandor, C., and Hughes, C. E. (2018). “Over My Hand: Using a Personalized Hand in VR to Improve Object Size Estimation, Body Ownership, and Presence,” in Proceedings of the Symposium on Spatial User Interaction, October 13–14, 2018, 60–68.

Keizer, A., van Elburg, A., Helms, R., and Dijkerman, H. C. (2016). A Virtual Reality Full Body Illusion Improves Body Image Disturbance in Anorexia Nervosa. PLoS One 11 (10), e0163921. doi:10.1371/journal.pone.0163921

Kuhlen, T., Kraiss, K.-F., and Steffan, R. (2000). How VR-Based Reach-To-Grasp Experiments Can Help to Understand Movement Organization within the Human Brain. Presence: Teleoperators & Virtual Environments 9 (4), 350–359. doi:10.1162/105474600566853

Leibowitz, H., and Moore, D. (1966). Role of Changes in Accommodation and Convergence in the Perception of Size. J. Opt. Soc. Am. 56 (8), 1120–1123. doi:10.1364/josa.56.001120

Levin, M. F., Magdalon, E. C., Michaelsen, S. M., and Quevedo, A. A. F. (2015). Quality of Grasping and the Role of Haptics in a 3-D Immersive Virtual Reality Environment in Individuals with Stroke. IEEE Trans. Neural Syst. Rehabil. Eng. 23 (6), 1047–1055. doi:10.1109/TNSRE.2014.2387412

Lin, L., Normoyle, A., Adkins, A., Sun, Y., Robb, A., Ye, Y., et al. (2019). “The Effect of Hand Size and Interaction Modality on the Virtual Hand Illusion,” in IEEE Conference on Virtual Reality and 3D User Interfaces (VR), March 23–27, 2019, 510–518. doi:10.1109/vr.2019.8797787

Linkenauger, S. A., Bülthoff, H. H., and Mohler, B. J. (2015). Virtual Arm's Reach Influences Perceived Distances but Only after Experience Reaching. Neuropsychologia 70, 393–401. doi:10.1016/j.neuropsychologia.2014.10.034

Linkenauger, S. A., Leyrer, M., Bülthoff, H. H., and Mohler, B. J. (2013). Welcome to Wonderland: The Influence of the Size and Shape of a Virtual Hand on the Perceived Size and Shape of Virtual Objects. PLoS One 8 (7), e68594. doi:10.1371/journal.pone.0068594

Loomis, J. M., and Knapp, J. M. (2003). “Visual Perception of Egocentric Distance in Real and Virtual Environments,” in Virtual and Adaptive Environments, 11, 21–46.

Magdalon, E. C., Michaelsen, S. M., Quevedo, A. A., and Levin, M. F. (2011). Comparison of Grasping Movements Made by Healthy Subjects in a 3-dimensional Immersive Virtual versus Physical Environment. Acta Psychol. 138 (1), 126–134. doi:10.1016/j.actpsy.2011.05.015

Marks, L. E., and Algom, D. (1998). Psychophysical Scaling. in Measurement, Judgment and Decision Making. Elsevier, 81–178. doi:10.1016/b978-012099975-0.50004-x

Mauguiere, F., and Courjon, J. (1978). Somatosensory Epilepsy. Brain 101 (2), 307–332. doi:10.1093/brain/101.2.307

Mine, D., Ogawa, N., Narumi, T., and Yokosawa, K. (2020). The Relationship between the Body and the Environment in the Virtual World: The Interpupillary Distance Affects the Body Size Perception. PLoS One 15 (4), e0232290. doi:10.1371/journal.pone.0232290

Mon-Williams, M., and Tresilian, J. R. (1999). The Size-Distance Paradox Is a Cognitive Phenomenon. Exp. Brain Res. 126 (4), 578–582. doi:10.1007/s002210050766

Ono, H., Muter, P., and Mitson, L. (1974). Size-distance Paradox with Accommodative Micropsia. Perception & Psychophysics 15 (2), 301–307. doi:10.3758/bf03213948

Osumi, M., Ichinose, A., Sumitani, M., Wake, N., Sano, Y., Yozu, A., et al. (2017). Restoring Movement Representation and Alleviating Phantom Limb Pain through Short-Term Neurorehabilitation with a Virtual Reality System. Eur. J. Pain 21 (1), 140–147. doi:10.1002/ejp.910

Ozana, A., Berman, S., and Ganel, T. (2018). Grasping Trajectories in a Virtual Environment Adhere to Weber's Law. Exp. Brain Res. 236 (6), 1775–1787. doi:10.1007/s00221-018-5265-8

Pavani, F., and Zampini, M. (2007). The Role of Hand Size in the Fake-Hand Illusion Paradigm. Perception 36 (10), 1547–1554. doi:10.1068/p5853

Renner, R. S., Velichkovsky, B. M., and Helmert, J. R. (2013). The Perception of Egocentric Distances in Virtual Environments - A Review. ACM Comput. Surv. 46 (2), 1–40. doi:10.1145/2543581.2543590

Sawada, Y., Itaguchi, Y., Hayashi, M., Aigo, K., Miyagi, T., Miki, M., et al. (2020). Effects of Synchronised Engine Sound and Vibration Presentation on Visually Induced Motion Sickness. Sci. Rep. 10 (1), 7553. doi:10.1038/s41598-020-64302-y

Stefanucci, J. K., Lessard, D. A., Geuss, M. N., Creem-Regehr, S. H., and Thompson, W. B. (2012). “Evaluating the Accuracy of Size Perception in Real and Virtual Environments,” in Proceedings of the ACM Symposium on Applied Perception, August 3–4, 2012, 79–82.

Stefanucci, J. K., Creem-Regehr, S. H., Thompson, W. B., Lessard, D. A., and Geuss, M. N. (2015). Evaluating the Accuracy of Size Perception on Screen-Based Displays: Displayed Objects Appear Smaller Than Real Objects. J. Exp. Psychol. Appl. 21 (3), 215–223. doi:10.1037/xap0000051

Thompson, W. B., Willemsen, P., Gooch, A. A., Creem-Regehr, S. H., Loomis, J. M., Beall, A. C., et al. (2004). Does the Quality of the Computer Graphics Matter when Judging Distances in Visually Immersive Environments?. Presence: Teleoperators & Virtual Environments 13 (5), 560–571. doi:10.1162/1054746042545292

Keywords: hand size perception, size estimation, reach-to-grasp movements, virtual reality, visuo-proprioceptive congruency

Citation: Itaguchi Y (2021) Size Perception Bias and Reach-to-Grasp Kinematics: An Exploratory Study on the Virtual Hand With a Consumer Immersive Virtual-Reality Device. Front. Virtual Real. 2:712378. doi: 10.3389/frvir.2021.712378

Received: 20 May 2021; Accepted: 05 August 2021;

Published: 13 August 2021.

Edited by:

Panagiotis Germanakos, SAP SE, Germany

Reviewed by:

Elham Ebrahimi, University of North Carolina at Wilmington, United States

Copyright © 2021 Itaguchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yoshihiro Itaguchi, itaguchi.y@gmail.com
