- 1Center for Immersive Experiences, Department of Geography, The Pennsylvania State University (PSU), University Park, PA, United States

- 2Department of Geosciences, The Pennsylvania State University, University Park, PA, United States

With the aim of incorporating immersive technologies into the educational curriculum, this article investigates the role of two affordances that are crucial in designing embodied interactive virtual learning environments (VLEs) to enhance students’ learning experience and performance: 1) the sense of presence as a subjective affordance of the VR system, and 2) bodily engagement as an embodied affordance and the associated sense of agency that is created through interaction techniques with three-dimensional learning objects. To investigate the impact of different design choices for interaction, and how they affect the associated sense of agency, learning experience, and performance, we designed two VLEs in the context of penetrative thinking in a critical 3D task in geosciences education: understanding the cross-sections of earthquakes’ depth and geometry in subduction zones around the world. Both VLEs were web-based desktop VR applications containing 3D data that participants ran remotely on their own computers using a normal screen. In the drag and scroll condition, we facilitated bodily engagement with the 3D data through object manipulation. In the first-person condition, we provided the ability for the user to move in space. In other words, we compared moving the objects or moving the user in space as the interaction modalities. We found that students had a better learning experience in the drag and scroll condition, but we could not find a significant difference in the sense of presence between the two conditions. Regarding learning performance, we found a positive correlation between the sense of agency and knowledge gain in both conditions. For students with low prior knowledge of the field, exposure to the VR experience in both conditions significantly improved their knowledge gain. Regarding individual differences, we investigated the knowledge gain of students with a low penetrative thinking ability. We found that they benefited from the type of bodily engagement in the first-person condition and had a significantly higher knowledge gain than in the other condition. Our results encourage in-depth studies of embodied learning in VR to design more effective embodied virtual learning environments.

1 Introduction

Extended Reality (XR) technologies have become more accessible in terms of costs and required hardware and software and have gained attention and popularity in education (e.g., Dalgarno et al., 2011; Bulu, 2012; Merchant et al., 2014; Legault et al., 2019; Klippel et al., 2019). Recent advances in XR technologies have created an interest in investigating the role of cognitively motivated principles in designing virtual learning environments (VLEs) for education (e.g., Dalgarno and Lee, 2010; Lee et al., 2010; Johnson-Glenberg et al., 2014; Clifton et al., 2016; Yeonhee, 2018). There have been numerous efforts from various communities (e.g., IEEE ICICLE1 and The Immersive Learning Research Network (iLRN)2) to incorporate the technology-enhanced educational curriculum into classrooms, to overcome the limitations of learning technologies, and to design engaging and compelling learning experiences. The learning efficacy of these experiences is a product of their design, which in turn predicts the experiences of users (Dalgarno and Lee, 2010; Clifton et al., 2016; Jerald, 2016; Czerwinski et al., 2020). Among the various aspects that should be considered when designing an interactive virtual environment for learning, embodiment is argued to be one of the main contributors (Biocca, 1999; Johnson-Glenberg, 2018; Johnson-Glenberg et al., 2020). Within a rich body of research on the role of embodiment in spatial learning, thinking, and reasoning (e.g., Mou and McNamara, 2002; Wilson, 2002; Hegarty et al., 2006; Hostetter and Alibali, 2008; Kelly and McNamara, 2008; Kelly and McNamara, 2010; Paas and Sweller, 2012; Shapiro, 2014; Plummer et al., 2016), there is a growing interest in investigating the role of embodiment in the design of VLEs as an essential factor influencing immersive learning (e.g., Kilteni et al., 2012; Lindgren and Johnson-Glenberg, 2013; Johnson-Glenberg et al., 2014; Lindgren et al., 2016; Clifton et al., 2016; Johnson-Glenberg, 2018; Skulmowski and Rey, 2018; Legault et al., 2019; Johnson-Glenberg et al., 2020; Southgate, 2020; Bagher, 2020).

This growing body of research examines the extent to which embodied learning in a virtual environment would enhance learning outcomes and improve learners’ spatial memory. Researchers in various fields have defined embodiment in different ways (Kilteni et al., 2012) and focused on numerous aspects, from body representation to the type of bodily engagement or the degree of embodiment. One common goal is to find out what type or degree of embodiment is beneficial in designing engaging and effective learning experiences in XR, especially virtual reality (Kilteni et al., 2012; Repetto et al., 2016; Johnson-Glenberg et al., 2016; Skulmowski and Rey, 2018; Johnson-Glenberg, 2018; Southgate, 2020; Johnson-Glenberg et al., 2020).

In this article, our focus is not the degree of embodiment but one of the affordances that play a key role in inducing the sense of embodiment (SOE) in VR. We investigate the extent to which bodily engagement (as an embodied affordance) contributes to SOE in VLEs and can affect learning experience and performance. Affordances are defined as “potential interactions with the environment” (Wilson, 2002, p.625). Different VR systems can afford different levels of sensorimotor contingencies depending on the system characteristics and the design choices for creating the learning environment. Sensorimotor contingencies refer to when we take certain actions to change our perception and interact with an environment, including but not limited to a virtual environment (Lee, 2004; Slater, 2009; Slater et al., 2010; Skulmowski and Rey, 2018). Johnson-Glenberg et al. (2014) refer to this as motor engagement. In this article, we use the term bodily engagement suggested by Skulmowski and Rey (2018) as this term entails a type of engagement that extends beyond the mind and considers the interaction between mind, body, and the environment (Wilson, 2002; Skulmowski and Rey, 2018). When the learning activities in a virtual environment are designed to engage the senses (i.e., vision) and motor engagement (i.e., body parts), the users experience higher engagement with those activities. As a result, they can be more embodied in the environment (Biocca, 1999; Jerald, 2016). The level of bodily engagement depends on the number of sensory systems engaged and whether the tasks are designed around meaningful activities. Bodily engagement can further affect memory trace and knowledge gain (Johnson-Glenberg et al., 2016; Skulmowski and Rey, 2018).

To examine the effect of bodily engagement on learning experience and performance, we focus on the design choices for bodily engagement in the same learning context with the same level of embodiment rather than evaluating the medium effect on learning. We have designed an experiment with two VLEs. These VLEs are web-based desktop VR applications. Web-based desktop VR refers to a desktop VR experience delivered via a web browser and viewed on a standard screen. We argue that the type of 3D interaction for manipulation of virtual objects matters (Weise et al., 2019). In a recent study comparing immersive VR and desktop VR with two levels of embodiment (low: passive video watching; high: interacting with the learning content), Johnson-Glenberg et al. (2021) found that the design is far more important than the platform. The critical finding is that the way a learning environment is designed, based on the presence or absence of interaction techniques, matters in learning.

To carry out this research, we first investigate the following questions: Does the type of interaction technique affect the level of bodily engagement and associated sense of agency? And does the type of interaction technique affect the sense of presence? To answer these questions, we look into 1) bodily engagement through two different interaction techniques and the associated sense of agency, and 2) the created sense of presence as the subjective or psychological affordance of the VR system (Slater and Wilbur, 1997; Ruscella and Obeid, 2021). We hypothesize that the design choices for the interaction technique influence the level of bodily engagement and the level of control over the learning environment that creates the sense of agency. This sense of agency can further affect the overall experienced sense of presence (Nowak and Biocca, 2003). Presence, in turn, has an effect on the level of bodily engagement and learning in VR (Johnson-Glenberg, 2018). Extensive research has been carried out on the sense of presence as a psychological affordance of a VR system (e.g., Slater and Wilbur, 1997; Witmer and Singer, 1998; Schuemie et al., 2001; Lee, 2004; Sanchez-Vives and Slater, 2005; Wirth et al., 2007; Schubert, 2009; Slater et al., 2010; Bulu, 2012; Bailey et al., 2012).

The goal of the VLEs used in this study is to support penetrative thinking in the “Discovering Plate Boundaries3” lab in an introductory physical geology course. In short, penetrative thinking is the ability to visualize a 2D profile of three-dimensional data. In designing and incorporating the VLEs into the plate boundaries lab exercise, we explore these research questions: Do interaction techniques affect learning experience and performance? And is one interaction technique superior to the other for students with a low penetrative thinking ability in terms of knowledge gain? We hypothesize that the interaction technique affects the learning experience and performance in the context of penetrative thinking in VR as a type of spatial learning. In a pilot study (Bagher et al., 2020) conducted in Fall 2019, we focused on the 3D visualization of the US Geological Survey’s Centennial Earthquake Catalog (Ritzwoller et al., 2002) as a case study and on immersive VR (IVR) using Head-Mounted Displays (HMDs) as an embodied and interactive learning experience. The pilot study compared IVR with the traditional teaching approach (using 2D maps) to determine whether IVR as an interactive 3D learning environment is superior to the traditional teaching methods. Due to the COVID-19 pandemic, physical attendance at the labs and the use of VR headsets (HMDs) were affected during Fall 2020. Therefore, we created two VLEs based on virtual web-based desktop applications that presented the 3D visualization of the earthquake locations on a 2D interface with different interaction techniques. Delivering the VLEs through a web browser allowed students to take part in the experiment from home. We incorporated the virtual learning environments into the curriculum to teach plate boundaries and earthquake locations, and they were the only method of learning available for the lab exercise. Therefore, this study explores whether the design of the interaction techniques used in the VLEs would affect learning experience and performance when VR is the established method of learning in the lab.

In the rest of the article, we first discuss the background of our research. Then, we discuss the design and implementation of the experiment. After reporting the results, we discuss their implications on learning experience, user experience, and learning performance. Then we address the limitations of the study and future directions for this research.

2 Background

2.1 Sense of Embodiment

Embodied learning theory (Stolz, 2015; Smyrnaiou et al., 2016), as a pedagogical approach rooted in embodied cognitive science, seeks to expand the application of embodied cognition into education. Embodiment is experiencing and interacting with the world through our bodies, suggesting that mind and body are linked (Wilson, 2002; Kilteni et al., 2012; Smyrnaiou et al., 2016). Therefore, in contrast to traditional cognitive science, embodied cognition explains how body and environment are related to cognitive processes (Barsalou, 1999; Barsalou, 2008; Shapiro, 2007; Shapiro, 2014; Skulmowski and Rey, 2018). Embodiment is rooted in human perception and motor systems and in the body’s interaction with the world, rather than relying only on abstract symbolic and internal representations (Barsalou, 1999; Wilson, 2002; Waller and Greenauer, 2007; Shapiro, 2007; Shapiro, 2014). In recent years, the design of embodied interfaces, including immersive experiences, has captured the attention of researchers in different fields in an attempt to improve embodied learning (e.g., Dalgarno and Lee, 2010; Johnson-Glenberg et al., 2014; Clifton et al., 2016; Yeonhee, 2018; Czerwinski et al., 2020). To conceptualize embodiment in the context of virtual reality, we should define how SOE is constructed based on embodied mental representations. SOE is a psychological response to being situated in the space in relation to other objects and the self. A virtual interface can be an extension of human senses linking the human to the virtual environment (Biocca, 1999; Kilteni et al., 2012). In other words, SOE in VR can be defined as the integration of our senses with our technology-extended bodies (Biocca, 1999).

Among research studies focused on embodiment in VR, some have focused on defining different contributing factors to embodiment. For instance, Kilteni et al. (2012) define the sense of embodiment as a result of the sense of self-location, the sense of agency, and the sense of body ownership. Some researchers (e.g., Gonzalez-Franco and Peck, 2018) focus on the role of the body as an avatar and its effect on the sense of body ownership and agency. In another example, Southgate (2020) conceptualizes embodiment in virtual learning from different angles, focusing on various representations of the body such as cyborg body, naturalistic body, political body, etc. Furthermore, several research studies focus on the role of bodily engagement in SOE in VR (e.g., Johnson-Glenberg, 2018; Skulmowski and Rey, 2018; Johnson-Glenberg et al., 2020; Johnson-Glenberg et al., 2021). Johnson-Glenberg et al. (2020) defined two affordances for designing VR for learning: 1) the sensation of presence, and 2) embodiment and the agency linked with manipulating objects in 3D. They define embodiment as a meaningful interaction with the learning content through bodily engagement. In another study, Johnson-Glenberg et al. (2016) found that embodiment and sensorimotor feedback can increase knowledge retention in some types of knowledge. Johnson-Glenberg et al. (2021) compared passive learning (watching a video) vs. active learning through embodied interactions on a 2D platform and an immersive VR (Oculus Go). In all conditions, users were seated. In the active learning scenario, using a mouse on a 2D desktop and controllers in an immersive VR platform is considered highly embodied. Watching a video on both platforms is considered low embodied. Therefore, the user has the same level of bodily engagement both in VR and on a 2D desktop when assigned to active learning. They found a significant main effect for embodiment regardless of the platform. Participants in high embodied conditions learned the most. Zielasko and Riecke (2021) carried out a systematic analysis with VR experts in a workshop to find out the effect of body posture and embodied interactions on various VR experiences such as engagement, enjoyment, comfort, and accessibility. They also found that embodied locomotion cues were rated higher for walking than for sitting. Among other research studies focusing on interaction techniques, locomotion, and embodiment (e.g., Zielasko et al., 2016; Weise et al., 2019; Di Luca et al., 2021), Lages and Bowman (2018) focused on the effect of manipulating objects vs. physically walking in the virtual environment on performance in demanding visual tasks. They found that in designing the learning environments, the creator should consider the user’s controller experience, past gaming experience, and spatial ability.

In a desktop VR, hand movements with a mouse or a keyboard simulate bodily engagement at a lower level, giving the user the sense of being situated in the virtual environment while sitting in front of a 2D interface. We consider this form of SOE to involve a lower level of bodily engagement than immersive VR, where the whole body can be moved and engaged. In this article, instead of comparing the degree of embodiment, we investigate the design choices for bodily engagement in two web-based desktop VR applications with the same level of embodiment. We posit that different design choices for interaction techniques would affect learning experience and performance. We hypothesize that various interaction techniques can generate different levels of agency over the learning materials and result in different learning outcomes in terms of knowledge gain. Two main interaction techniques with the learning contents introduced in the literature are 1) gesture, and 2) object manipulation (Paas and Sweller, 2012). Several studies have explored the role of gesture as an effective bodily engagement technique in learning spatial information and offloading mental tasks to the surrounding environment (e.g., Hostetter and Alibali, 2008; Lindgren and Johnson-Glenberg, 2013; Plummer et al., 2016; Johnson-Glenberg, 2018). We propose to add a third interaction technique, 3) moving the user in space. This interaction technique creates a sense of embodied locomotion and gives the user the ability to control the rotation of the viewpoint and to either step back along the x, y, and z directions to see an overview of the 3D objects or move closer to inspect the 3D objects in greater detail. We are interested in examining the role of object manipulation and moving the user in space as interaction techniques contributing to bodily engagement in enhancing learning and the associated sense of agency.

Bodily Engagement Through Object Manipulation

This interaction technique creates a sense of agency and control over the 3D objects in a three-dimensional environment. According to Paas and Sweller (2012), object manipulation is a source of primary knowledge that will not affect cognitive load during the learning process. The primary systems can further assist the user in acquiring secondary knowledge. Manipulating an environment can help us to solve a problem through mental structures that assist perception and action. Moreover, adding a modality like object manipulation in the immediate environment may increase the strength of memory trace and recall (Barsalou, 1999; Wilson, 2002; Johnson-Glenberg et al., 2016; Johnson-Glenberg et al., 2020). In the recall process, in the absence of physical activity, sensorimotor actions like object manipulation can later assist the processes of thinking and knowing by representing information or drawing inferences (Barsalou, 1999; Wilson, 2002). Working memory has a sensorimotor nature and benefits from off-loading information into perceptual and motor systems (Wilson, 2002). Therefore, we suggest using object manipulation to reduce cognitive load, which can free up working memory capacity. Object manipulation in a web-based desktop VR can be achieved through dragging, rotating, and scrolling using a mouse. Many 3D software programs use this technique to manipulate 3D content.

Bodily Engagement Through Moving the User in Space

Moving in space either physically in a virtual environment or through controller-based navigation in a web-based desktop VR is a cognitively demanding task. Changing perspective to create a different perception of the environment to perform a task or solve a problem is called epistemic action (Hostetter and Alibali, 2008). Epistemic actions are the result of sensorimotor contingencies (Slater, 2009; Slater et al., 2010) supported by a VR system. Even though physically walking is considered to be cognitively demanding, it is considered to be the most natural interaction technique (Lages and Bowman, 2018). Zielasko and Riecke (2021) carry out a survey in which participants rated higher embodied (non-visual) locomotion cues for walking, walking in place, and arm swinging than standing, sitting, or teleportation. In a web-based desktop VR, physical walking can be replicated using a controller. Moving in space can benefit from familiarity with controller-based games (Lages and Bowman, 2018) such as First Person Shooter (FPS) games. In these games, the player has an egocentric view and controls the movement in space in different directions using a game controller device or a mouse and keyboard.

2.2 Penetrative Thinking

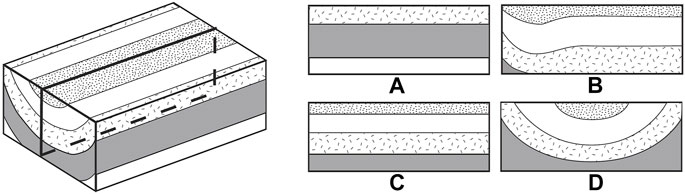

Spatial thinking is a fundamental part of many fields of science. One of the ways students can gain a better understanding of a spatial phenomenon is through visual-spatial thinking (Mathewson, 1999). Adequate visualization helps students to understand the spatial representation of information better. Spatial representations can be either extrinsic (e.g., locations) or intrinsic (e.g., shapes) to objects. One of the important spatial transformations related to intrinsic characteristics of objects is the ability to visualize penetrative views and to switch between two-dimensional and three-dimensional views. The ability to understand spatial relations inside an object and transform 3D data into a 2D profile is called penetrative thinking or cross-sectioning (Ormand et al., 2014; Newcombe and Shipley, 2015; Hannula, 2019). Figure 1 shows a penetrative thinking ability test to test students’ ability on mental slicing of a 3D geologic structure in a block diagram (Ormand et al., 2014).

FIGURE 1. Geologic Block Cross-sectioning Test for measuring students’ ability on mental slicing of a 3D geologic structure in a block diagram. The GBCT post-study test re-published from (Ormand et al., 2014).

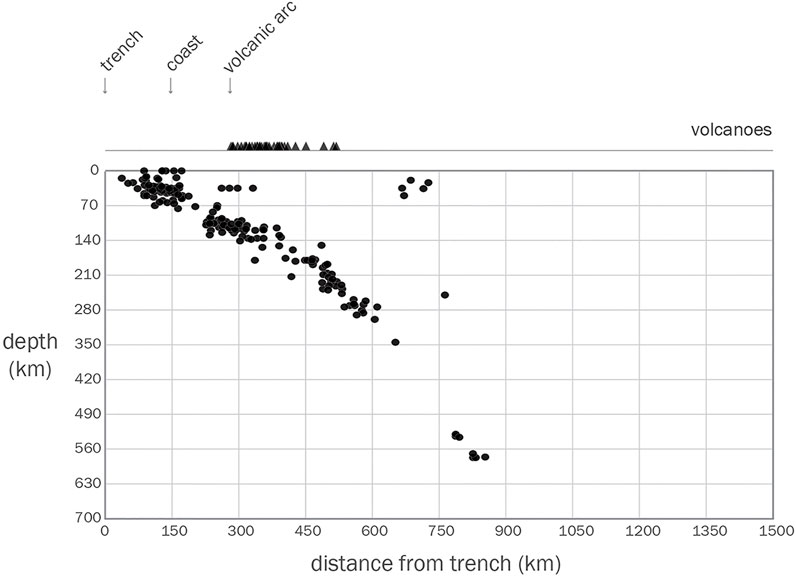

In domains such as geosciences, students usually visualize the 3D structure of objects presented on 2D interfaces (e.g., desktop computers) and then extract 2D profiles from the 2D representation of the data. For instance, phenomena and observations related to plate tectonics are inherently three-dimensional, yet are often plotted on 2D maps. In introductory geoscience courses, students are often trained to visualize 3D data by learning how to read 2D maps and block diagrams. However, this method of representation makes it difficult for some students to visualize the depth, extent, and geometry of earthquakes, as students have different levels of penetrative thinking ability. A 3D representation of the data can aid in better understanding the extent, shape, and cross-sections of the data. As an example, Figure 2 shows the cross-section of earthquakes and volcanoes across South America. Drawing a cross-section based on a 3D visualization of data can be much easier than seeing the 2D representation of data, imagining the 3D visualization, and then extracting the 2D profile.

FIGURE 2. An example of a plot drawn in an introductory geoscience course: cross-section of earthquakes and volcanoes in South America. Circles show the location of earthquakes and triangles show the location of volcanoes with distance from the trench.
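A cross-section such as the one in Figure 2 can be thought of as a projection of 3D earthquake locations onto a vertical plane. The following Python sketch illustrates that idea under simplifying assumptions that are ours and not part of the lab exercise: coordinates are already expressed in a local planar system in kilometers, the profile is a straight line, and the function name `project_to_profile`, the swath half-width, and the sample points are all hypothetical.

```python
import math


def project_to_profile(points, origin, azimuth_deg, half_width_km):
    """Project (x_km, y_km, depth_km) points onto a vertical profile.

    origin        -- (x_km, y_km) where the profile starts (assumed: on the trench)
    azimuth_deg   -- profile direction, clockwise from north (+y axis)
    half_width_km -- points farther than this from the profile line are dropped

    Returns (distance_along_profile_km, depth_km) pairs sorted by distance.
    """
    az = math.radians(azimuth_deg)
    ux, uy = math.sin(az), math.cos(az)  # unit vector along the profile
    section = []
    for x, y, depth in points:
        dx, dy = x - origin[0], y - origin[1]
        along = dx * ux + dy * uy        # distance from the profile start
        across = dx * uy - dy * ux       # signed perpendicular offset
        if abs(across) <= half_width_km:
            section.append((along, depth))
    return sorted(section)


# Hypothetical sample: two events near an east-pointing profile, one far away.
sample = [(100.0, 0.0, 50.0), (200.0, 5.0, 120.0), (150.0, 80.0, 30.0)]
section = project_to_profile(sample, origin=(0.0, 0.0),
                             azimuth_deg=90.0, half_width_km=20.0)
```

Plotting the returned pairs with distance on the horizontal axis and depth increasing downward would give a profile of the kind students are asked to draw; a real workflow would also need a map projection from longitude and latitude, which is omitted here.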

2.3 Sense of Embodiment in The Context of Penetrative Thinking

This research examines whether penetrative thinking as a topic in spatial learning can benefit from embodied learning. We incorporate embodied interactions with the 3D visualization of the data (earthquakes, volcanoes, and plate boundaries) to enhance students’ ability in visualizing penetrative views and better understand the cross-section or profile of the data in different regions around the world. To evaluate the role of bodily engagement through different interaction techniques introduced in Section 2.1, object manipulation and moving the user in space, in a penetrative thinking exercise, we compared the two design choices by providing two VLEs in the form of web-based desktop VR applications. These VLEs are designed to create an interactive environment to support penetrative thinking in an introductory physical geology course to facilitate visualization of the distribution and depth of earthquakes around the world. Full bodily engagement and a higher level of embodiment can be achieved in an immersive VR using Head-Mounted Displays (HMDs). In a web-based desktop VR application, a lower level of bodily engagement can be created through hand movements and the use of a device like a mouse or a keyboard.

In the first condition, where bodily engagement is induced through object manipulation, students do not actively move in the environment. They move and manipulate all the 3D objects together by dragging, rotating, or zooming in/out. This manipulation technique helps the students to get closer to a specific location along the x, y, and z directions, where they can observe a specific subduction zone. In this condition, students have complete control over manipulating all 3D objects at the same time. They can switch between different datasets, but they cannot manipulate each object individually (i.e., individual earthquake locations or volcanoes). We refer to this visualization as the drag and scroll condition (Supplementary Video S1). This interaction technique is similar to what is experienced in conventional 3D editors or geoscience software programs such as ArcScene4.

In the second condition, where bodily engagement is induced through moving the user in space and creating a sense of locomotion, students rotate the viewpoint to the desired direction (along the x, y, and z axes) and move farther from or closer to the 3D objects to inspect their spatial arrangement and their associated information. In this condition, the user can move in space and change the direction of the viewpoint in the virtual environment in a natural way (similar to what is experienced in conventional first-person camera views in games). We manipulate the position and rotation of the first-person camera in VR to create a sense of egocentric movement in space. The first-person camera manipulation uses the mouse to rotate the camera, which determines the direction of the viewpoint, and the arrow keys on the keyboard to translate in that direction. We refer to this condition as the first-person condition (see the Supplementary Video S1). This type of interaction technique in a web-based desktop VR is the closest simulation that we could create to induce the sense of locomotion compared to physical walking in an immersive VR using HMDs. Based on these definitions, the main difference between these two interaction techniques is the design choice of moving the 3D objects or moving the user.

3 The Experiment

This research examines the role of bodily engagement as an embodied affordance on users’ learning experience and performance. To conduct this research, two types of interaction techniques have been defined that can affect bodily engagement and the associated sense of agency. During the COVID-19 pandemic, when the use of HMDs became limited for safety reasons, designing web-based desktop VR applications that are accessible via web browsers gave students the flexibility of going through the exercise at home on their personal computers. We designed two web-based VLEs to explore how the design choices of interaction techniques can affect bodily engagement, agency, learning experience, and performance. As a case study, we visualized 3D earthquake locations around the world representing the USGS Centennial Earthquake Catalog (Ritzwoller et al., 2002) and Holocene volcanoes (Venzke, 2013) in the context of plate boundaries (Coffin et al., 1997).

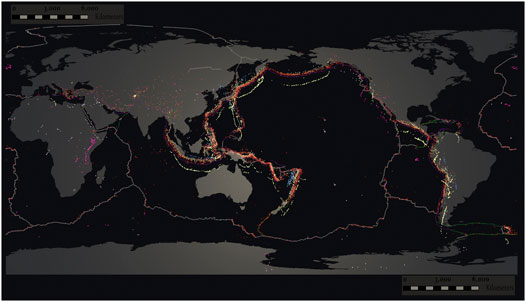

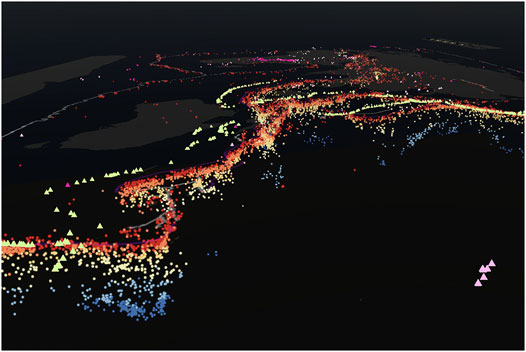

Figure 3 shows the top-down view of the web-based desktop VR applications and Figure 4 shows an egocentric view. The design of the two VLEs is the same in terms of data visualization. What makes the two different is how interaction with the datasets is realized, which can be shown in a recorded video but not in a figure.5 The first VLE uses a mouse to drag, rotate, and zoom in/out of the 3D visualization of the earthquakes and volcanoes. We refer to this visualization as the drag and scroll condition. The second VLE uses a mouse to define the direction of the viewpoint and the keyboard’s arrow keys to translate in the environment. We refer to this 3D visualization as the first-person condition.

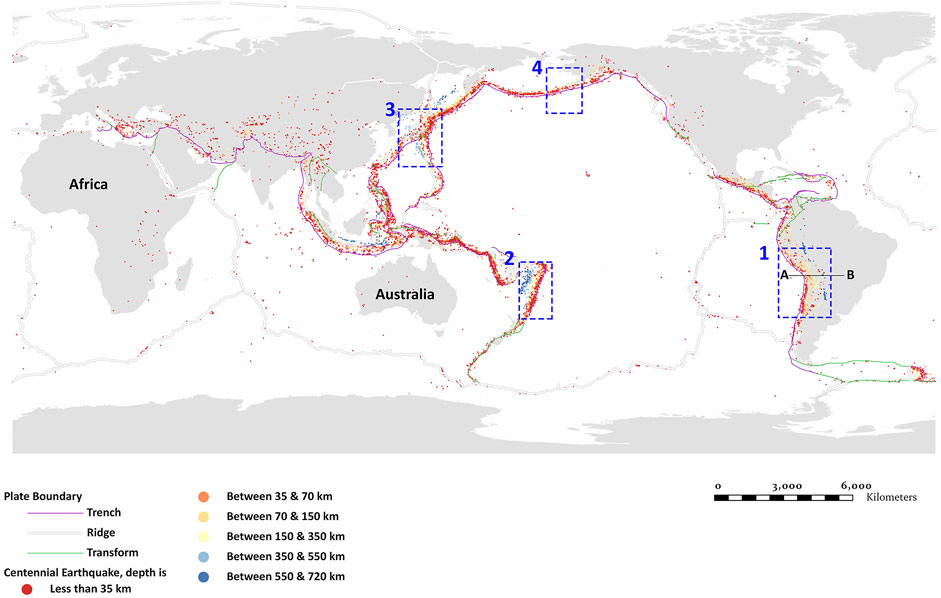

FIGURE 3. Top-down view of the web-based desktop VR application showing the world map, plate boundaries, earthquakes and volcanoes. Figure 5 shows the legend.

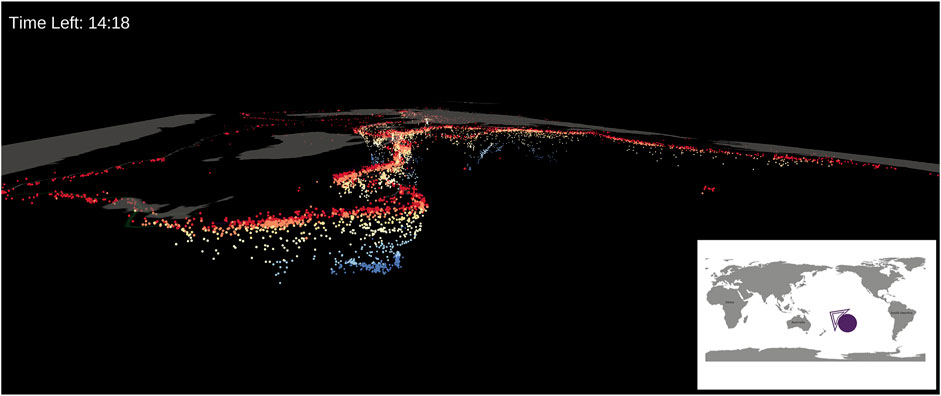

FIGURE 4. Egocentric view of the USGS Centennial Earthquake Catalog and Holocene Volcanoes. Figure 5 shows the legend.

Considering these two experimental conditions, this study investigates the following hypotheses in two areas of interest: learning experience and learning performance.

Learning Experience:

H1. Students in the first-person condition experience a higher sense of presence.

H2. Students in the drag and scroll condition have higher control over the learning materials and as a result experience more agency.

H3. Students report a higher level of perceived learning in the drag and scroll condition.

H4. Students with a higher level of Visual Spatial imagery ability experience a higher sense of presence regardless of the condition.

Learning Performance:

H5. The learning performance of students with low prior knowledge of the field improves after going through the experiences regardless of the condition.

H6. Students’ level of control positively affects their learning performance regardless of the condition.

H7. Students with higher penetrative thinking ability show higher learning performance regardless of the condition.

H8. Students with lower penetrative thinking ability perform better in the first-person condition.

3.1 System Design

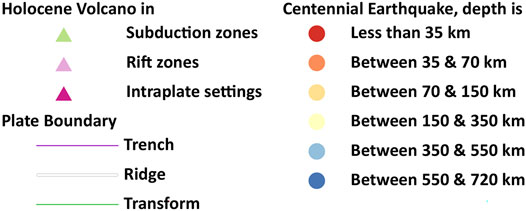

The data used to realize the visualizations in both conditions is the USGS Centennial Earthquake Catalog, which is a global catalog of well-located earthquakes from 1900 to 2008 that allows for the investigation of the depth and lateral extent of seismicity at plate boundaries (Coffin et al., 1997). To complement the earthquake locations and further connect the exercise to plate tectonics and plate boundary zones, maps of the current plate boundaries and the location of Holocene (i.e., < 10,000 years) volcanoes are also provided. Figure 5 shows the information provided in both conditions: 1) the three main plate boundary types; 2) the horizontal scale in km; 3) the depth of the earthquakes in six classes: less than 35 km, 35 to 70 km, 70 to 150 km, 150 to 350 km, 350 to 550 km, and 550 to 720 km; and 4) volcanoes in subduction zones, in rift zones, and in intraplate settings. The original format of the USGS Centennial Earthquake Catalog was a text file, and the original format of the Holocene volcano dataset was an Excel XML file, both containing several values including X, Y, and Z. The coordinates stored in the tables were imported into ArcGIS Pro6 as XY point data using the XY Table to Point tool.
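The six depth classes in the legend amount to a simple binning of each earthquake’s depth. As an illustration only, the Python sketch below assigns a depth in km to one of these classes. The names `DEPTH_EDGES_KM`, `DEPTH_LABELS`, and `depth_class` are hypothetical, and the treatment of values that fall exactly on a class boundary (assigned to the deeper class here) is our assumption, since the legend does not specify it.

```python
from bisect import bisect_right

# Class boundaries taken from the legend (Figure 5), in km.
DEPTH_EDGES_KM = [35, 70, 150, 350, 550, 720]
DEPTH_LABELS = [
    "< 35 km", "35-70 km", "70-150 km",
    "150-350 km", "350-550 km", "550-720 km",
]


def depth_class(depth_km):
    """Return the legend class for an earthquake depth given in km.

    Assumption: a depth exactly on a boundary (e.g. 35 km) goes to the
    deeper class; depths outside 0-720 km are rejected.
    """
    if depth_km < 0 or depth_km > DEPTH_EDGES_KM[-1]:
        raise ValueError(f"depth {depth_km} km is outside the legend range")
    # Count how many interior boundaries lie at or above the depth.
    index = bisect_right(DEPTH_EDGES_KM[:-1], depth_km)
    return DEPTH_LABELS[index]
```

In a visualization, the returned label could then be mapped to a color or point style, one per legend entry.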

FIGURE 5. Legend of the data visualized in the application, including plate boundaries, earthquakes and volcanoes.

The shapefiles were imported into Blender (Community, 2018) using a Blender importer called BlenderGIS7. Then they were imported to Unity3D®8 as FBX files. The earthquakes and volcanoes were visualized in the form of point clouds and were properly georeferenced. To overcome the performance limitation of rendering a large dataset (a total of 13,077 points for earthquakes) in VR, we used the particle system of Unity3D to generate points to have a more efficient and performant experience. Plate boundaries were visualized in the form of lines overlaid on the world map. Using these datasets, students can examine different subduction zone plate tectonics in terms of the locations and depths of the earthquakes.

The two different interaction techniques (one per condition) with the datasets were implemented in Unity3D. In both conditions, the users can switch between the earthquake and volcano datasets or enable both at the same time. Furthermore, they can access the labels and other information about the data by showing or hiding a legend of the dataset. There is a scale bar next to the map to help users with the perception of distances. In the drag and scroll condition, the view of the users (i.e., the camera) orbits around a pivot point (starting at the center of the scene) using a common drag and movement functionality with the right mouse button, allowing the user to rotate the viewpoint. In addition, the pivot point can be moved within the 3D space of the scene along the X, Y, and Z axes using the drag and movement functionality with the left mouse button. Doing so enables the users to move along these axes and consequently orbit around the new pivot position. In the first-person condition, the users use a combination of mouse and keyboard: the WASD (or arrow) keys produce a smooth translation along the X, Y, and Z axes, while the mouse rotates the camera and thereby sets the direction of movement (i.e., the mouse steers and the keyboard keys apply the force in that direction). The locomotion techniques in the two conditions are very similar in nature (virtual travel and viewpoint manipulation), but the conditions differ in the mechanics of interaction used for locomotion. The drag and scroll condition simulates the interaction mechanics in software like ArcGIS, and the first-person condition simulates the interaction mechanics found in typical first-person shooter games.
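The geometric difference between the two interaction techniques can be illustrated with a short Python sketch. This is not the Unity3D implementation used in the study; the function names, the Y-up axis convention, and the yaw/pitch parameterization are assumptions chosen only to show that one technique places the camera on a sphere around a pivot, while the other moves the camera along its own viewing direction.

```python
import math

def orbit_camera_position(pivot, radius, yaw_deg, pitch_deg):
    """Drag and scroll style: camera sits on a sphere around the pivot.

    Assumes a Y-up coordinate system; yaw rotates about Y, pitch tilts
    the camera above or below the horizontal plane.
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    x = pivot[0] + radius * math.cos(pitch) * math.sin(yaw)
    y = pivot[1] + radius * math.sin(pitch)
    z = pivot[2] + radius * math.cos(pitch) * math.cos(yaw)
    return (x, y, z)

def first_person_step(position, yaw_deg, pitch_deg, forward_input, speed, dt):
    """First-person style: the mouse sets the look direction (yaw, pitch)
    and the keyboard input moves the camera along that direction."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    direction = (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )
    step = forward_input * speed * dt
    return tuple(p + d * step for p, d in zip(position, direction))

if __name__ == "__main__":
    # Orbit: rotating the view changes the camera position, not the pivot.
    print(orbit_camera_position((0, 0, 0), 10, yaw_deg=45, pitch_deg=30))
    # First person: holding "W" (forward_input=1) moves along the view direction.
    print(first_person_step((0, 0, 0), 90, 0, forward_input=1, speed=2, dt=0.5))
```

In the orbit case the distance to the pivot stays fixed while the viewpoint rotates; in the first-person case the position itself accumulates small steps along the current viewing direction.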

3.2 Participants

236 students from two separate sections of an introductory physical geology course were invited to participate in this study in the Fall of 2020. The experience was embedded into the course as a lab assignment. Using a web page, students selected whether they would like to take part in the research or only do the exercise as a lab assignment. Of the 177 students who agreed to participate in the study, 96 students were randomly assigned to the drag and scroll condition and 81 students to the first-person condition. The section enrollment of participants was anonymized during the condition assignment to control for environmental factors. All participants were compensated with extra course credit for their participation. 29.94% of the participants were female, 67.79% were male, and less than 3% identified as non-binary or gender-nonconforming. The average age of the students was 19.45, with a maximum age of 21 and a standard deviation of 0.83. Also, 73.44% of the participants were majoring in Engineering.

3.3 Measures and Tests

To measure learning experience and knowledge gain, two types of questions were used in this study: 1) standardized measures, and 2) knowledge tests. Several existing standardized measures were incorporated into the pre-, and post-study questionnaires. Except for the demographic and background questions, all measures were of the type Likert-scale (ranging from 1 to 5 with 5 being the most positive), open-ended or multiple choice.

The pre-study questionnaire was comprised of the following measures:

• Demographics and background-related questions about gender, age, major and minor fields of study, and the year of study.

• A self-report measure of individual differences in terms of visual imagery: using the Visual Spatial Imagery (VSI) from MEC Spatial Presence questionnaire (Vorderer et al., 2004), with each item measured on a 1 to 5 Likert-scale. VSI is one of the spatial abilities that measures the ability to create clear spatial images and later access them from memory. People with higher VSI ability find it easier to access those spatial images from their memory (Wirth et al., 2007).

The post-study questionnaire was used to assess the learning experience of participants in light of the sense of presence and the sense of agency. Furthermore, the perceived learning experience of participants was measured.

• For measuring the sense of presence, we used the 6-item metric of Spatial Situation Model (SSM) from the MEC Spatial Presence Questionnaire (Vorderer et al., 2004). According to Wirth et al. (2007), a sense of presence can be built based on the Spatial Situation Model (SSM).

• For measuring the sense of agency, we used a combination of measures including Possible Actions from the MEC Spatial Presence Questionnaire (Vorderer et al., 2004) and measures suggested by Lee et al. (2010) including immediacy of control, perceived ease of use, and control and active learning.

• To measure perceived learning experience, we used three measures by Lee et al. (2010): reflective thinking, perceived learning effectiveness, and satisfaction. Perceived learning gives us feedback on the learning experience of students.

• Two open-ended questions were used to capture the general impression of participants about what they would change in the experiment and the advantages and disadvantages of this method of learning compared to classical teaching methods in classrooms.

For the knowledge tests, a pre-study and a post-study test were designed. Besides, a test that measured the participants’ mental slicing and penetrative thinking ability was used:

• The pre-study knowledge test contained six multiple-choice questions that tested students’ pre-knowledge of subduction zones and plate boundaries before going through the main experience.

• The post-study knowledge test contained seven multiple-choice questions that tested students’ knowledge of the subject based on their penetrative thinking ability. In the pilot study (Bagher et al., 2020), we asked the students to draw cross-sections by hand, plotting the depth of the earthquakes against distance from a subduction zone trench for segments of South America and Japan. Drawing a cross-section is a straightforward technique to test the students’ penetrative thinking ability in the field. In this research, due to remote participation, we could not include the same exercise. Therefore, we curated questions that test not only students’ knowledge of the subduction zones but also their penetrative thinking ability in the context of earthquake depth and distribution. For instance, we asked the students: “Below are cross-sections of seismicity versus depth for four different subduction zones. Which cross-section is most similar to the South America subduction zone?” The students had to use their VSI and penetrative thinking abilities to recall the cross-section of the South America subduction zone from their observations and choose one plot from multiple choices.

• The Geologic Block Cross-sectioning Test (GBCT) (Ormand et al., 2014) contains sixteen multiple-choice questions assessing the students’ ability to understand three-dimensional relationships by determining the correct vertical cross-section from a geologic block diagram.

3.4 Procedure

In both conditions, students filled out the pre-study questionnaire and then answered the pre-study knowledge test to establish their prior knowledge about the learning topic. Then, they were given information on the types of datasets they were going to explore in the VR experience and instructions on what areas to focus on. Figure 6 shows the areas of interest, including boxes 1–4 and cross-section A-B.

Region 1: South America

Region 2: Tonga-Kermadec

Region 3: Japan

Region 4: Eastern Alaska

Cross-section A-B: A cross-section across South American convergent margin.

FIGURE 6. Areas of interest for the virtual experience. Students were asked to focus on these areas during the virtual experience.

Students were asked to explore and pay attention to the distribution of the earthquakes and volcanoes, and the depth range of the earthquakes in these regions while reflecting on the following questions: What do you observe with respect to these different subduction zones? Are the geometries of the subducting oceanic lithosphere the same (i.e., the distribution and geometry of the earthquakes) or are they different? Now, look specifically at the western margin of the South American Plate (Region 1). Is the Wadati-Benioff zone (i.e., the zone of seismicity that defines the subducting plate) the same north to south along the margin? They were informed that after the experience, they would be asked to answer several questions about these regions and the cross-section. In both conditions, they were given 15 min to explore the datasets and memorize the distribution of earthquakes in the defined regions. A two-dimensional guide map on the lower right side of the screen showed the position and the direction of the user on the world map. A timer on the upper left side reminded them of the remaining time (Figure 7). In both conditions, students could hide or show the legend and the instructions.

FIGURE 7. Guide map and the time counter to help the students keep track of time and navigate in the learning environment.

After the experience, students first answered the post-study questionnaire, and then the penetrative thinking ability test. Finally, they answered the post-study knowledge test. Placing the post-study knowledge test at the end introduced a period between the experience and the post-study knowledge test. This way, we could test the effect of various embodied interactions on knowledge retention. The session, from start to end, took around 40 min.

3.5 Analysis

For the learning experience assessment, we first identified outliers using the Interquartile Range (IQR) method and carefully checked the dataset before removing any of them. Then, we used Welch’s two-sample t-tests to compare the first-person condition with the drag and scroll condition based on the learning experience measures. For the learning performance measures, when Z-scores of the pre- and post-study knowledge tests were compared regardless of the condition, Welch’s two-sample t-test was calculated. When we compared the post-study grades among the conditions, since the grades were ranked data, the Wilcoxon signed-rank test was used. To predict students’ sense of presence based on Visual Spatial Imagery and post-study grades based on their penetrative thinking ability, regression equations were calculated. As the number of participants in the two groups was different, Hedges’ g (Hedges and Olkin, 2014) was calculated instead of Cohen’s d as the measure of effect size. A qualitative analysis of the two open-ended questions was performed to gain a better understanding of the participants’ opinions and experiences. Based on the approach proposed by Schreier (2012), two independent coders went over the responses of participants and inductively generated codes that would capture their content. Following consensus meetings, the codes were grouped or rearranged into the final schema. Inter-rater reliability based on Cohen’s Kappa was then calculated for the finalized results.
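The quantitative steps named above (IQR-based outlier screening, Welch's two-sample t-test, and Hedges' g) can be sketched with the Python standard library. This is an illustration under stated assumptions, not the analysis script used in the study: the 1.5 × IQR fence, the quartile method of `statistics.quantiles`, and all function names are assumptions, and the sample values in the usage section are made up.

```python
import math
import statistics

def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR]; k = 1.5 is an assumed fence."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lo or v > hi]

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    sa = statistics.variance(a) / len(a)
    sb = statistics.variance(b) / len(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(sa + sb)
    df = (sa + sb) ** 2 / (sa ** 2 / (len(a) - 1) + sb ** 2 / (len(b) - 1))
    return t, df

def hedges_g(a, b):
    """Cohen's d with the small-sample correction J = 1 - 3 / (4*(na+nb) - 9)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    d = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(pooled_var)
    return d * (1 - 3 / (4 * (na + nb) - 9))

if __name__ == "__main__":
    # Hypothetical Likert-scale means for two groups of unequal size.
    group_a = [3.0, 3.5, 2.5, 3.0, 4.0, 3.5]
    group_b = [3.5, 4.0, 3.0, 3.5, 4.5]
    print(iqr_outliers(group_a + [9.0]))
    print(welch_t(group_a, group_b))
    print(hedges_g(group_a, group_b))
```

Converting the t statistic to a p-value requires the t distribution (for example from a statistics package); that step is omitted here to keep the sketch dependency-free.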

4 Results

4.1 Learning Experience Assessment

Table 1 presents an overview of the mean, standard deviation, p-value, and effect size of the experience measures in the drag and scroll and the first-person conditions. As mentioned in the measures section, we measured the sense of presence, sense of agency, and perceived learning experience. There was no significant difference between the two conditions in terms of the sense of presence. Therefore, the first hypothesis (students in the first-person condition experience a higher sense of presence) is rejected. In terms of sense of agency, we measured possible actions, immediacy of control, perceived ease of use, and control and active learning, introduced in Section 3.3. There is a significant group difference in the ease of use scores between the first-person (M = 3.14, SD = 0.63) and the drag and scroll (M = 3.32, SD = 0.50) conditions in favor of the drag and scroll condition, [t (153.12) = −1.98, p = 0.04]. The immediacy of control measures the students’ agency to change the view position and manipulate spatial objects. The difference for immediacy of control approaches significance [t (174) = −1.77, p = 0.07] in favor of the drag and scroll condition (M = 4.07, SD = 0.88). We could not find any significant difference between the two conditions in terms of possible actions, and control and active learning. Based on these results, we have found some evidence in favor of the second hypothesis: students in the drag and scroll condition have higher control over the learning materials and as a result experience more agency. However, the differences were not significant for all measures related to this affordance, and as a result, we cannot conclude that the second hypothesis can be entirely accepted. In terms of perceived learning, students in the drag and scroll condition (M = 3.41, SD = 0.56) were significantly more satisfied [t (146) = 1.76, p = 0.04] than in the first-person condition (M = 3.20, SD = 0.75). We could not find any significant difference between the conditions in terms of reflective thinking and perceived learning effectiveness. Therefore, the only evidence that we could find in favor of the third hypothesis (students report a higher level of perceived learning in the drag and scroll condition) was satisfaction. Consequently, we cannot conclude that the third hypothesis can be entirely accepted.

To conclude briefly, based on the discussed results, students in the drag and scroll condition had a better learning experience in terms of ease of use, immediacy of control, and satisfaction.

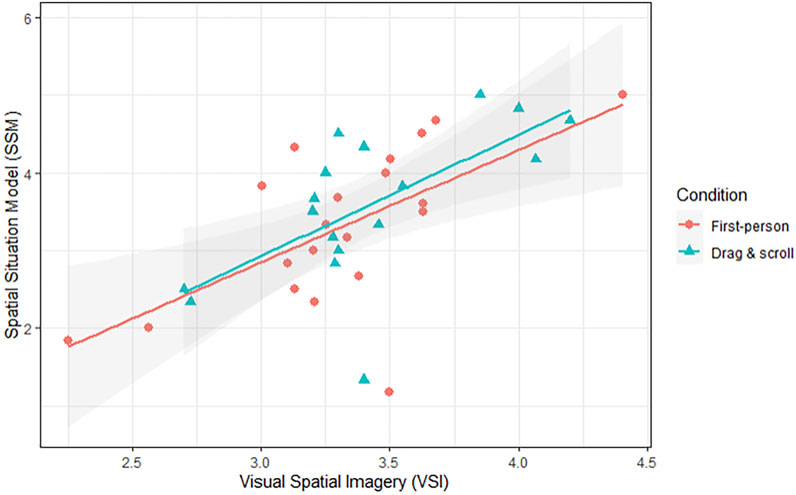

A simple linear regression was calculated to predict the sense of presence (SSM) from Visual Spatial Imagery (VSI) as a spatial ability. Independent of the condition, a significant regression equation was found [F (1,175) = 53.04, p < 0.001] with an adjusted R2 of 0.228. Students’ sense of presence increased by 0.64 for each unit increase in VSI. Therefore, hypothesis 4 can be accepted: students with a higher level of VSI experience a higher sense of presence. Figure 8 shows that in both the drag and scroll and the first-person conditions, the level of presence is dependent on the VSI spatial ability. A significant regression equation was found for the first-person condition [F (1,79) = 28.64, p < 0.001] with an adjusted R2 of 0.256. Students’ sense of presence increased by 0.66 for each unit increase in VSI. For the drag and scroll condition, a significant regression equation was also found [F (1,94) = 22.83, p < 0.001] with an adjusted R2 of 0.186. Students’ sense of presence increased by 0.61 for each unit increase in VSI.
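For readers who want to see how the reported quantities relate, the sketch below fits a simple linear regression by ordinary least squares and shows how an adjusted R2 follows from an F statistic with (1, df) degrees of freedom. It is an illustration only: the function names and the toy data are assumptions, and the study's raw VSI and SSM scores are not reproduced here.

```python
import statistics

def simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_tot = sum((yi - my) ** 2 for yi in y)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r2 = 1 - ss_res / ss_tot
    return {
        "slope": slope,
        "intercept": intercept,
        "r2": r2,
        "adj_r2": 1 - (1 - r2) * (n - 1) / (n - 2),
        # F statistic with (1, n - 2) degrees of freedom.
        "f": (ss_tot - ss_res) / (ss_res / (n - 2)),
    }

def adj_r2_from_f(f_stat, df_resid):
    """Adjusted R2 implied by F(1, df_resid) in a one-predictor model."""
    r2 = f_stat / (f_stat + df_resid)
    n = df_resid + 2
    return 1 - (1 - r2) * (n - 1) / df_resid

if __name__ == "__main__":
    # Toy data (hypothetical), not the study's measurements.
    fit = simple_linear_regression([1, 2, 3, 4], [2, 4, 5, 8])
    print(fit)
    # Consistency check against the pooled model reported above:
    # F(1,175) = 53.04 implies an adjusted R2 of about 0.228.
    print(round(adj_r2_from_f(53.04, 175), 3))
```

The slope plays the role of the "increase per unit of VSI" reported in the text, and the second function makes explicit that the reported F value and adjusted R2 of the pooled model agree with each other.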

4.2 Learning Performance Assessment

Before going through the experience, students answered six questions about subduction zones to test their knowledge of the subject in terms of the ability to understand the extent and geometry of the subduction zones based on their interpretation of the earthquakes, volcanoes, and plate boundaries. The total possible score was 8. The result of the test indicated an average score of 3.21 (SD = 1.24) with a minimum score of 1 and a maximum score of 7. 56.49% of the students who attended the study obtained a score below the average, which indicates that they had lower knowledge of the field compared to average performance. The post-study knowledge test contained seven questions with a total possible score of 14. The post-study knowledge test examined the same knowledge concepts with different types of questions to evaluate whether students’ understanding of the subject had improved after going through the experience. The result of the test indicated an average score of 7.9 (SD = 2.32) with a minimum score of 3 and a maximum score of 13.

Comparing the Z-scores of the pre- and post-study knowledge tests, regardless of the condition, shows that students’ performance improved by 0.05. However, the difference is not statistically significant: [t (176) = 0.55, p = 0.58]. We had assumed that we could detect the presence or absence of students’ knowledge gain by studying the whole sample. However, students with higher prior knowledge show a different level of improvement than students with lower knowledge of the field. Consequently, we decided to analyze the learning performance of students with low prior knowledge of the subject compared to the average performance (pre-test Z-score ≤ 0). Based on our analysis, the performance of students with low prior knowledge of the field improved significantly regardless of the conditions: [t (167) = −5.86, p < 0.001]. The improvement was also significant within each condition: for the drag and scroll condition, [t (52) = −3.34, p < 0.001], and for the first-person condition, [t (46) = −5.41, p < 0.001]. Therefore, hypothesis 5 is accepted: both conditions have a significantly positive effect on students with low prior knowledge of the subject, and the exposure to the VLEs improved their learning performance in terms of understanding earthquakes’ distribution and depth. In other words, when students with low prior knowledge of the field were exposed to the 3D representation of the epicenters of earthquakes from the USGS Centennial Earthquake Catalog and locations of Holocene volcanoes, they better understood the locations, depth, and geometry of the earthquakes in subduction zones in different regions. Yet, we could not find a significant difference between the conditions in terms of knowledge gain in students with low prior knowledge of the field: [t (97.2) = 0.94, p = 0.34].
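Because the pre- and post-study tests have different maximum scores (8 and 14), the comparison relies on standardized scores. A minimal sketch of that standardization and of the low-prior-knowledge selection (pre-test Z-score ≤ 0) is given below. The function names are hypothetical, the use of the sample standard deviation is an assumption, and the scores in the usage example are invented.

```python
import statistics

def z_scores(scores):
    """Standardize scores to mean 0 and (sample) standard deviation 1."""
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # assumption: sample SD (n - 1)
    return [(s - mean) / sd for s in scores]

def low_prior_knowledge_indices(pre_scores):
    """Indices of students whose pre-test Z-score is at or below zero."""
    return [i for i, z in enumerate(z_scores(pre_scores)) if z <= 0]

if __name__ == "__main__":
    pre = [2, 3, 3, 5, 1, 4]     # hypothetical pre-test scores (max 8)
    post = [6, 8, 7, 10, 5, 9]   # hypothetical post-test scores (max 14)
    pre_z, post_z = z_scores(pre), z_scores(post)
    for i in low_prior_knowledge_indices(pre):
        print(i, round(pre_z[i], 2), round(post_z[i], 2))
```

Standardizing each test separately puts both on a common scale, so a student's position relative to the group can be compared before and after the experience.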

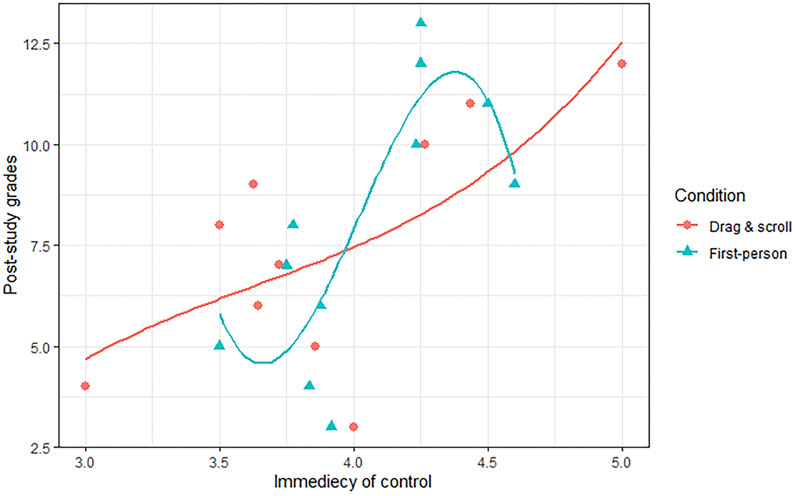

We also analyzed the impact of the immediacy of control, as one of the important measures of the sense of agency, on students’ learning performance (post-study grades). The students’ post-study grades were dependent on their evaluation of the immediacy of control in both conditions. In both conditions, the more a student felt in control, the higher their post-study grades were (Figure 9). In the drag and scroll condition, a significant non-linear regression equation was found [F (1,92) = 3.406, p = 0.02] with an adjusted R2 of 0.07. In the first-person condition, a significant regression equation was found [F (1,77) = 3.007, p = 0.03] with an adjusted R2 of 0.069. Although the adjusted R2 values for both equations are very low and show that the immediacy of control is not a strong contributing factor, it is worth mentioning that there is a significant correlation. Accordingly, hypothesis 6 is accepted: a higher level of control positively affects students’ learning performance.

To measure students’ penetrative thinking ability, students were asked to take the Geologic Block Cross-sectioning Test (GBCT) (Ormand et al., 2014). A simple linear regression was calculated to predict the post-study grades based on the GBCT score (penetrative thinking ability). Independent of the condition, a significant regression equation was found [F (1,175) = 21.87, p < 0.001] with an adjusted R2 of 0.106. Therefore, hypothesis 7 about learning performance is accepted: students with higher penetrative thinking ability show higher learning performance. This shows that for students who understand the spatial relations between the objects, this penetrative thinking ability enables them to better understand the location, direction, and shape of earthquake events around the world. In terms of students with lower penetrative thinking ability (hypothesis 8), there is a significant difference between the post-study knowledge grades of the first-person condition (M = 7.84, SD = 2.07) and the drag and scroll condition (M = 6.63, SD = 2.5) in favor of the first-person condition, [t (67) = 2.36, p = 0.02]. We can conclude that the first-person condition, with the freedom of moving in space and inspecting earthquake locations by moving closer to the objects in a first-person view, has a positive effect on students with a low penetrative thinking ability. Therefore, hypothesis 8 is accepted: students with lower penetrative thinking ability perform better in the first-person condition. Interestingly, in the drag and scroll condition, there is a significant difference between the pre- and post-study grades (Z-scores) of students with a low penetrative thinking ability (mean of the differences = 0.45) [t (37) = 2.11, p = 0.04]. Although students with a low penetrative thinking ability in the drag and scroll condition had lower post-study grades compared to the first-person condition, they showed a significant improvement over their pre-study grades. This result indicates that even though the drag and scroll condition is not as effective as the first-person condition in terms of the knowledge gained by students with a low penetrative thinking ability, it is still an effective medium and improved students’ knowledge after they were exposed to the VR experience.

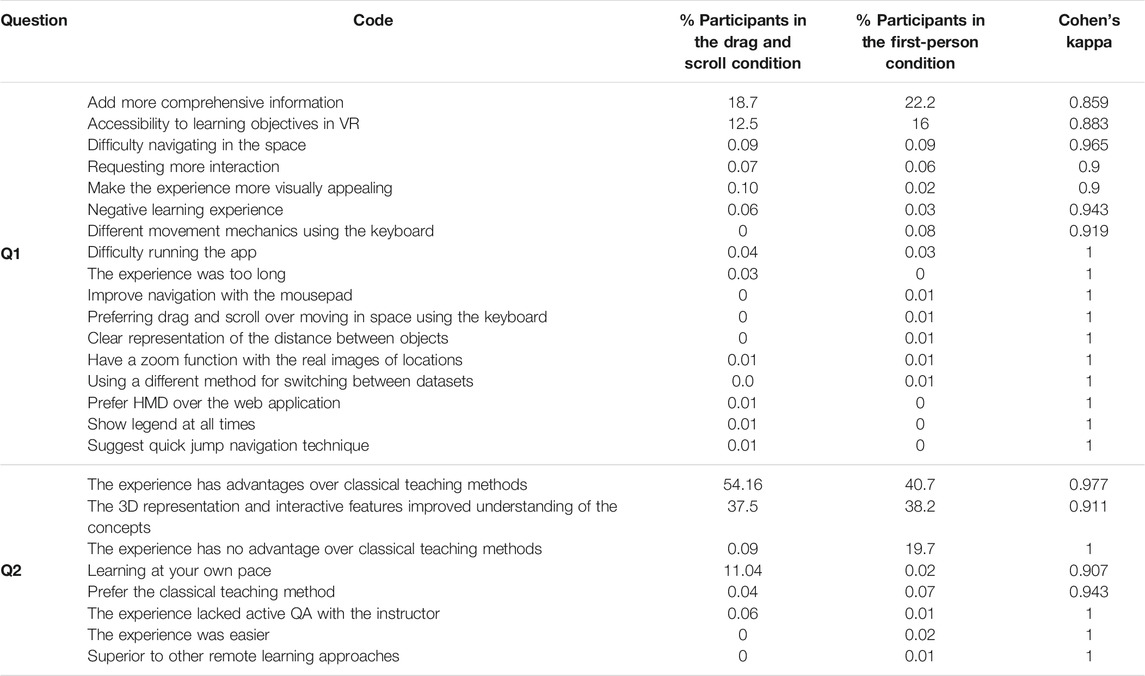

4.3 Qualitative Analysis of the Open-Ended Feedback of The Experience

As part of the post-study questionnaire, participants were asked two open-ended questions about their experiences:

Q1: If you could have changed something in the experience what would it have been and why?

Q2: If any, did this current method of instruction have advantages over classical methods of teachings used in classrooms?

Along with the quantitative analysis, the conducted qualitative analysis provides insights into the experiences of users after going through each condition. The extracted codes, capturing the content of the comments by participants, the percentage of participants talking about a code, and Cohen’s Kappa inter-rater reliability coefficient are reported in Table 2. Some of the codes are generally applicable to the experience regardless of the conditions and some are specific to the design choices based on the condition.
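Cohen's Kappa corrects the raw agreement between two coders for the agreement expected by chance. The following sketch shows the computation for one code applied to a set of responses; it is not the script used in this study, and the function name and the example labels are hypothetical.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's Kappa for two coders labeling the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty item set")
    n = len(coder_a)
    # Observed agreement: share of items with identical labels.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_chance = sum(freq_a[k] * freq_b[k] for k in set(freq_a) | set(freq_b)) / n ** 2
    if p_chance == 1:
        return 1.0  # both coders used a single identical label throughout
    return (p_observed - p_chance) / (1 - p_chance)

if __name__ == "__main__":
    # 1 = code applies to the response, 0 = it does not (hypothetical labels).
    coder_1 = [1, 1, 0, 0, 1, 0, 0, 1]
    coder_2 = [1, 0, 0, 0, 1, 0, 1, 1]
    print(cohens_kappa(coder_1, coder_2))
```

A value of 1 indicates perfect agreement, and a value of 0 indicates agreement no better than chance.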

For the first question, the most frequent code was requiring more comprehensive information. Examples of this code include requesting an interactive legend (e.g., audio feedback), a detailed description of features, and additional features (e.g., mountains or continent names). In terms of accessibility to learning objectives in VR, before the experience, instructions and learning objectives were given to the students, including the highlighted areas to focus on and questions to have in mind while exploring the datasets. However, many students felt the need to see these learning objectives in the VR experience, to be able to turn the highlighted areas on and off, and to receive more educational explanations of various subduction zones in the form of audio or text instead of self-exploration. Some students felt that there was no need for a change in the application, whereas others mentioned difficulty in navigating in the space, a negative learning experience, and difficulties running the app. In the first-person condition, some suggested that different movement mechanics be designed to improve the experience. They suggested that instead of using the mouse to define the direction of movement, two keys on the keyboard should allow for up and down movement. No one in the first-person condition complained that the experience was too long, while three people in the drag and scroll condition complained about the length of the experience. Since the method of interaction in the first-person condition was new and students were not familiar with this method of movement in space, they might have spent a considerable amount of time learning how to navigate in space and did not feel the time passing. By contrast, the method of interaction in the drag and scroll condition is similar to geoscience software programs that many are familiar with.

In response to the second question, almost half of the students found this method of teaching superior to the classical methods of teaching. The advantages cited for this experience included learning at one’s own pace, greater ease, and an improved understanding of the concepts through the 3D representation and interactive features. 11.04% of the students in the drag and scroll condition declared that this method helped them to learn at their own pace, while only 0.02% in the first-person condition felt that way. As mentioned in the analysis of the first question, students in the first-person condition might have spent a considerable amount of time learning how to navigate in space, and that might have affected their learning pace. On the other hand, 19.7% of the students in the first-person condition found this type of experience to have no advantages over classical methods of teaching, while only 0.09% in the drag and scroll condition felt that way. This indicates that although 40.7% of the students in the first-person condition found this method advantageous, 19.7% disagreed. One piece of negative feedback about this method of teaching was the absence of active Q&A with the instructor while learning.

Overall, the insights from the first question show that students enrolled in the physical geology course are not used to memorization tasks; they would typically plot the locations and depths of the earthquakes by directly observing the data. In the exercise we designed, they first observed the data and then recalled the cross-sections based on memorization and memory trace. The second question gives the insight that half of the students are open to technology-integrated teaching methods. Perhaps by improving their experience regarding the issues mentioned in the coding of the first question, more students might be open to this method of teaching.

5 Discussion

This study investigated the impact of bodily engagement on the learning experience and performance in the context of penetrative thinking in a critical 3D task in geosciences education: understanding the cross-section of the depth and geometry of earthquakes with distance from the trench. Since we have used the same platform (web-based desktop VR) for the design of VLEs, this study is not focusing on the effect of different mediums or degrees of embodiment on learning but the impact of interaction techniques on learning experience and performance.

5.1 The Effect of Bodily Engagement on Learning Experience

Our quantitative evaluation of the learning experience utilized established self-reported measures. We were anticipating a significant difference in the sense of presence between the two conditions. Although the sense of presence is not significantly different between the two conditions, we found that students with higher Visual Spatial Imagery (VSI) ability experience a higher sense of presence in both conditions. In terms of perceived learning, we found that students are significantly more satisfied with the drag and scroll condition, but we could not find any difference in other measures related to perceived learning. Concerning the sense of agency, students reported that the drag and scroll condition is significantly easier to use than the first-person condition. They also found the drag and scroll condition to have a higher level of immediacy of control compared to the first-person condition. This evaluation indicates that students are more comfortable and familiar with data manipulation as an interaction method, that is, dragging, rotating, and zooming in and out of the 3D data. This made us curious to see whether the drag and scroll condition being rated as the easier interaction technique would translate into superior knowledge gain as well. The results of the structured content analysis show that almost the same percentage of students in both conditions felt that the 3D representation and the method of interaction improved their understanding of the subject.

5.2 The Effect of Bodily Engagement on Learning Performance

Overall, all students gained some knowledge by going through the experience, but we aimed to investigate the impact of interaction techniques on knowledge gain for students with low prior knowledge of the field. Our analysis showed that the knowledge of students with low prior knowledge of the field improved significantly after going through the virtual experience in both conditions. We also found that when students felt more in control, in both conditions, they performed significantly better in terms of knowledge gain. This demonstrates that having control can be a contributing factor in knowledge gain. It also suggests that some students are more comfortable with moving in the three-dimensional environment and inspecting objects by changing their viewpoint, whereas some students are more comfortable with data manipulation. With this result in mind, we looked into the penetrative thinking ability of the students to find out whether it would play a role in knowledge gain in different conditions. In the next section, we discuss our findings regarding students with lower penetrative thinking ability.

5.3 The Overall Effect of Bodily Engagement on Students With Lower Penetrative Thinking Ability

Weise et al. (2019) advise that the characteristics of the users should be considered in choosing an interaction technique. They suggest that users’ abilities can affect the performance and usability of the interaction technique. In this study, we used the Geologic Block Cross-sectioning Test (GBCT) to evaluate students’ penetrative thinking ability. We assessed whether this ability might affect their performance using either of the interaction techniques. Regardless of the conditions, we observed that the higher the penetrative thinking ability of the students, the higher the knowledge gain was. We hypothesized that students with higher spatial ability would better understand spatial relations of 3D objects and would perform better in either condition. One goal of designing interactive and embodied VLEs in 3D is to help students with lower spatial abilities visualize data in 3D and better understand spatial relations between 3D objects. We found that students with a lower penetrative thinking ability benefited more from the interaction of the first-person condition. They had a significantly higher knowledge gain than students with a lower penetrative thinking ability in the drag and scroll condition. This result indicates that students with lower penetrative thinking ability benefit from active movement in space that facilitates adjusting their viewpoints. In other words, manipulating objects and trying to rotate them to get the desired viewpoint might be more complex for students with lower penetrative thinking ability than naturally moving in space. Even though students with a lower penetrative thinking ability performed significantly better in the first-person condition in terms of knowledge gain, students with a low penetrative thinking ability in the drag and scroll condition improved significantly compared to their pre-test Z-score. This result suggests that even though the drag and scroll condition is not as ideal as the first-person condition for these students in terms of post-study knowledge gain, being exposed to a 3D representation of the data and interacting with the data would improve students’ penetrative view and result in a better understanding of the locations and depths of earthquakes when they have low penetrative thinking ability.

6 Conclusion, Limitations, and Future Work

In this article, we explored students’ penetrative thinking ability to interpret subduction zone plate tectonics from observations of the locations and depths of earthquakes. We argued that embodied learning could promote students’ learning experience and performance in visual-spatial thinking tasks such as penetrative thinking. To examine the role of bodily engagement as an embodied affordance on students’ learning experience and performance in an introductory physical geology course, we designed two VLEs based on two different interaction techniques: 1) object manipulation (drag and scroll) and 2) moving the user in space (first-person). Analyses of the data concerning learning experience and performance provided us with insights into students’ perception of learning and the actual performance. Overall, we argue that both interaction techniques have pros and cons regarding learning experience and performance. The goal of the VLE and the students’ spatial ability can further define which condition is a more suitable choice for teaching earthquake locations and depths.

One limitation of this study is its gender composition: participants were primarily male. Although gender differences in spatial abilities were not our focus, we are aware that studies conflict regarding differences in spatial abilities between male and female participants (Yuan et al., 2019). Unfortunately, most studies focusing on spatial abilities compare the performance of male and female participants and do not include non-binary participants. Another limitation is that although our population comes from different fields and backgrounds, participants were examined only in the context of geosciences. In future studies, we plan to investigate the role of bodily engagement in other courses concerning visual-spatial learning. Furthermore, to measure the effect of bodily engagement on knowledge retention, we would have needed to ask the students to take the post-study knowledge test after a delay of a couple of hours to a day. However, due to time constraints during data collection, we could only delay the post-study knowledge test by approximately 15 min. We introduced this period between the experience and the post-study knowledge test by placing the test at the end of the post-study survey. Another limitation of this research pertains to the setup of the experiment. Like most research in this domain, our conclusions are based on a single exposure to the VLE. In a longitudinal study with multiple exposures, the observed effects of bodily engagement between the two conditions could be either amplified or diminished. Therefore, in future research we will perform a longitudinal study over several weeks to further explore the lasting effect of different interaction techniques.

Due to the COVID-19 pandemic, we could not compare the effects of different mediums (IVR vs. web-based desktop VR) on bodily engagement and embodied learning. Therefore, as part of future work, we are devising methods for sending Oculus Quest headsets to students for remote VR data collection. We intend to investigate the effect of a higher level of bodily engagement in IVR on learning. Furthermore, although we designed this experiment with the utmost care, we plan to implement improvements for future studies. For instance, in the design of the VLEs, we did not include audio feedback for gaining information on earthquake depth or types of volcanoes. Students asked for this feature, and it will be included in future versions of the tool. Future studies will also aim to understand why students reported the drag and scroll condition to be easier to use. We hypothesize that familiarity with this method of interaction due to prior experience with geological software might be a key predictor. However, it is also pertinent to investigate whether using Quest controllers for object manipulation in IVR while physically walking in the environment is considered easier than object manipulation using a drag and scroll technique (web-based desktop VR). Furthermore, when comparing IVR with web-based desktop VR, we plan to investigate the level of control experienced by the students in each condition to explore how much sense of agency they would experience.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the IRB Program (Office of Research Protection) at Penn State University (Study ID: STUDY00008293). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

MB: first author, conducted research, designed the VR experiment and the survey, carried out the analysis, and writing. PS: designed the VR experiment, carried out the qualitative analysis, and writing. JW: research design, quantitative analysis, and writing. PLF: advisor on research design and implementation, writing. AK: advisor on research design and implementation, writing.

Funding

This work was supported through a Penn State Strategic Planning award (proposal #1685_TE_Cycle2).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.695312/full#supplementary-material

Footnotes

1 https://sagroups.ieee.org/icicle/

2 https://immersivelrn.org/about-us/what-is-ilrn/

3 Plate boundaries are the edges of plates created when the lithosphere is broken into multiple pieces (Tarbuck et al., 1997).

4 https://desktop.arcgis.com/en/arcmap/latest/extensions/3d-analyst/choosing-the-3d-display-environment.htm

5 Please refer to the video of the interaction techniques provided as the supplemental material.

6 https://www.esri.com/en-us/arcgis/products/arcgis-pro/resources

7 https://github.com/domlysz/BlenderGIS

References

Ai-Lim Lee, E., Wong, K. W., and Fung, C. C. (2010). How Does Desktop Virtual Reality Enhance Learning Outcomes? a Structural Equation Modeling Approach. Comput. Edu. 55, 1424–1442. doi:10.1016/j.compedu.2010.06.006

Bagher, M. M. (2020). “Immersive Vr and Embodied Learning: The Role of Embodied Affordances in the Long-Term Retention of Semantic Knowledge,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (IEEE), 537–538. doi:10.1109/vrw50115.2020.00120

Bagher, M. M., Sajjadi, P., Carr, J., La Femina, P., and Klippel, A. (2020). “Fostering Penetrative Thinking in Geosciences through Immersive Experiences: A Case Study in Visualizing Earthquake Locations in 3d,” in 2020 6th International Conference of the Immersive Learning Research Network (iLRN) (IEEE), 132–139. doi:10.23919/ilrn47897.2020.9155123

Bailey, J., Bailenson, J. N., Won, A. S., Flora, J., and Armel, K. C. (2012). “Presence and Memory: Immersive Virtual Reality Effects on Cued Recall,” in Proceedings of the International Society for Presence Research Annual Conference (Citeseer) (IEEE), 24–26.

Barsalou, L. W. (2008). Grounded Cognition. Annu. Rev. Psychol. 59, 617–645. doi:10.1146/annurev.psych.59.103006.093639

Barsalou, L. W. (1999). Perceptual Symbol Systems. Behav. Brain Sci. 22, 577–660. doi:10.1017/s0140525x99002149

Biocca, F. (1999). The Cyborg's Dilemma. Hum. Factors Inf. Tech. 13, 113–144. doi:10.1016/s0923-8433(99)80011-2

Bulu, S. T. (2012). Place Presence, Social Presence, Co-presence, and Satisfaction in Virtual Worlds. Comput. Edu. 58, 154–161. doi:10.1016/j.compedu.2011.08.024

Clifton, P. G., Chang, J. S., Yeboah, G., Doucette, A., Chandrasekharan, S., Nitsche, M., et al. (2016). Design of Embodied Interfaces for Engaging Spatial Cognition. Cogn. Res. Princ Implic. 1, 24–15. doi:10.1186/s41235-016-0032-5

Coffin, M. F., Gahagan, L. M., and Lawver, L. A. (1997). Present-day Plate Boundary Digital Data Compilation. Austin, TX: Tech. rep., Institute for Geophysics.

Community, B. O. (2018). Blender - a 3D Modelling and Rendering Package. Amsterdam: Blender Foundation, Stichting Blender Foundation.

Czerwinski, E., Goodell, J., Sottilare, R., and Wagner, E. (2020). “Learning Engineering@ Scale,” in Proceedings of the Seventh ACM Conference on Learning@ Scale (ACM), 221–223. doi:10.1145/3386527.3405934

Dalgarno, B., Lee, M. J., Carlson, L., Gregory, S., and Tynan, B. (2011). An Australian and New Zealand Scoping Study on the Use of 3d Immersive Virtual Worlds in Higher Education. Australas. J. Educ. Tech. 27, 1–15. doi:10.14742/ajet.978

Dalgarno, B., and Lee, M. J. W. (2010). What Are the Learning Affordances of 3-d Virtual Environments?. Br. J. Educ. Tech. 41, 10–32. doi:10.1111/j.1467-8535.2009.01038.x

Di Luca, M., Seifi, H., Egan, S., and Gonzalez-Franco, M. (2021). “Locomotion Vault: the Extra Mile in Analyzing Vr Locomotion Techniques,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (IEEE), 1–10. doi:10.1145/3411764.3445319

Gonzalez-Franco, M., and Peck, T. C. (2018). Avatar Embodiment. Towards a Standardized Questionnaire. Front. Robot. AI 5, 74. doi:10.3389/frobt.2018.00074

Hannula, K. A. (2019). Do Geology Field Courses Improve Penetrative Thinking? J. Geosci. Edu. 67, 143–160. doi:10.1080/10899995.2018.1548004

Hegarty, M., Montello, D. R., Richardson, A. E., Ishikawa, T., and Lovelace, K. (2006). Spatial Abilities at Different Scales: Individual Differences in Aptitude-Test Performance and Spatial-Layout Learning. Intelligence 34, 151–176. doi:10.1016/j.intell.2005.09.005

Hostetter, A. B., and Alibali, M. W. (2008). Visible Embodiment: Gestures as Simulated Action. Psychon. Bull. Rev. 15, 495–514. doi:10.3758/pbr.15.3.495

Jerald, J. (2016). The VR Book: Human-Centered Design for Virtual Reality. Williston, VT: Morgan & Claypool.

Johnson-Glenberg, M. C., Bartolomea, H., and Kalina, E. (2021). Platform Is Not Destiny: Embodied Learning Effects Comparing 2d Desktop to 3d Virtual Reality Stem Experiences. J. Comp. Assist. Learn. 37, 1263–1284. doi:10.1111/jcal.12567

Johnson-Glenberg, M. C., Birchfield, D. A., Tolentino, L., and Koziupa, T. (2014). Collaborative Embodied Learning in Mixed Reality Motion-Capture Environments: Two Science Studies. J. Educ. Psychol. 106, 86–104. doi:10.1037/a0034008

Johnson-Glenberg, M. C. (2018). Immersive Vr and Education: Embodied Design Principles that Include Gesture and Hand Controls. Front. Robot. AI 5, 1–19. doi:10.3389/frobt.2018.00081

Johnson-Glenberg, M. C., Ly, V., Su, M., Zavala, R. N., Bartolomeo, H., and Kalina, E. (2020). “Embodied Agentic Stem Education: Effects of 3d Vr Compared to 2d Pc,” in 2020 6th International Conference of the Immersive Learning Research Network (iLRN) (IEEE), 24–30. doi:10.23919/ilrn47897.2020.9155155

Johnson-Glenberg, M. C., Megowan-Romanowicz, C., Birchfield, D. A., and Savio-Ramos, C. (2016). Effects of Embodied Learning and Digital Platform on the Retention of Physics Content: Centripetal Force. Front. Psychol. 7, 1819. doi:10.3389/fpsyg.2016.01819

Kelly, J. W., and McNamara, T. P. (2010). Reference Frames during the Acquisition and Development of Spatial Memories. Cognition 116, 409–420. doi:10.1016/j.cognition.2010.06.002

Kelly, J. W., and McNamara, T. P. (2008). Spatial Memories of Virtual Environments: How Egocentric Experience, Intrinsic Structure, and Extrinsic Structure Interact. Psychon. Bull. Rev. 15, 322–327. doi:10.3758/PBR.15.2.322

Kilteni, K., Groten, R., and Slater, M. (2012). The Sense of Embodiment in Virtual Reality. Presence: Teleoperators and Virtual Environments 21, 373–387. doi:10.1162/pres_a_00124

Klippel, A., Zhao, J., Jackson, K. L., La Femina, P., Stubbs, C., Wetzel, R., et al. (2019). Transforming Earth Science Education through Immersive Experiences: Delivering on a Long Held Promise. J. Educ. Comput. Res. 57, 1745–1771. doi:10.1177/0735633119854025

Lages, W. S., and Bowman, D. A. (2018). Move the Object or Move Myself? Walking vs. Manipulation for the Examination of 3d Scientific Data. Front. ICT 5, 15. doi:10.3389/fict.2018.00015

Lee, K. M. (2004). Presence, Explicated. Commun. Theor. 14, 27–50. doi:10.1111/j.1468-2885.2004.tb00302.x

Legault, J., Zhao, J., Chi, Y.-A., Chen, W., Klippel, A., and Li, P. (2019). Immersive Virtual Reality as an Effective Tool for Second Language Vocabulary Learning. Languages 4, 13. doi:10.3390/languages4010013

Lindgren, R., and Johnson-Glenberg, M. (2013). Emboldened by Embodiment. Educ. Res. 42, 445–452. doi:10.3102/0013189x13511661

Lindgren, R., Tscholl, M., Wang, S., and Johnson, E. (2016). Enhancing Learning and Engagement through Embodied Interaction within a Mixed Reality Simulation. Comput. Edu. 95, 174–187. doi:10.1016/j.compedu.2016.01.001

Mathewson, J. H. (1999). Visual-spatial Thinking: An Aspect of Science Overlooked by Educators. Sci. Ed. 83, 33–54. doi:10.1002/(sici)1098-237x(199901)83:1<33:aid-sce2>3.0.co;2-z

Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., and Davis, T. J. (2014). Effectiveness of Virtual Reality-Based Instruction on Students' Learning Outcomes in K-12 and Higher Education: A Meta-Analysis. Comput. Edu. 70, 29–40. doi:10.1016/j.compedu.2013.07.033

Mou, W., and McNamara, T. P. (2002). Intrinsic Frames of Reference in Spatial Memory. J. Exp. Psychol. Learn. Mem. Cogn. 28, 162–170. doi:10.1037/0278-7393.28.1.162

Newcombe, N. S., and Shipley, T. F. (2015). “Thinking about Spatial Thinking: New Typology, New Assessments,” in Studying Visual and Spatial Reasoning for Design Creativity (Springer), 179–192. doi:10.1007/978-94-017-9297-4_10

Nowak, K. L., and Biocca, F. (2003). The Effect of the Agency and Anthropomorphism on Users' Sense of Telepresence, Copresence, and Social Presence in Virtual Environments. Presence: Teleoperators & Virtual Environments 12, 481–494. doi:10.1162/105474603322761289

Ormand, C. J., Manduca, C., Shipley, T. F., Tikoff, B., Harwood, C. L., Atit, K., et al. (2014). Evaluating Geoscience Students' Spatial Thinking Skills in a Multi-Institutional Classroom Study. J. Geosci. Edu. 62, 146–154. doi:10.5408/13-027.1

Paas, F., and Sweller, J. (2012). An Evolutionary Upgrade of Cognitive Load Theory: Using the Human Motor System and Collaboration to Support the Learning of Complex Cognitive Tasks. Educ. Psychol. Rev. 24, 27–45. doi:10.1007/s10648-011-9179-2

Plummer, J. D., Bower, C. A., and Liben, L. S. (2016). The Role of Perspective Taking in How Children Connect Reference Frames when Explaining Astronomical Phenomena. Int. J. Sci. Edu. 38, 345–365. doi:10.1080/09500693.2016.1140921

Repetto, C., Serino, S., Macedonia, M., and Riva, G. (2016). Virtual Reality as an Embodied Tool to Enhance Episodic Memory in Elderly. Front. Psychol. 7, 1839. doi:10.3389/fpsyg.2016.01839