ORIGINAL RESEARCH article

Front. Virtual Real., 04 June 2021

Sec. Augmented Reality

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.679902

This article is part of the Research Topic Editors Showcase: Embodiment in Virtual Reality

This paper studies the sense of embodiment of virtual avatars in Mixed Reality (MR) environments visualized with an Optical See-Through display. We investigated whether the content of the surrounding environment could impact the user’s perception of their avatar, when embodied from a first-person perspective. To do so, we conducted a user study comparing the sense of embodiment toward virtual robot hands in three environment contexts which included progressive quantities of virtual content: real content only, mixed virtual/real content, and virtual content only. Taken together, our results suggest that users tend to accept virtual hands as their own more easily when the environment contains both virtual and real objects (mixed context), allowing them to better merge the two “worlds”. We discuss these results and raise research questions for future work to consider.

In Virtual Reality (VR), humans were shown to have the ability to experience ownership toward virtual bodies, also called self-avatars (Bainbridge, 2004). Such avatars have been increasingly used in Mixed Reality (MR) where users can see virtual content embedded into the real world. When embodied in MR, self-avatars allow users to see themselves in their own environment, but inside a body with a different shape, size, or appearance. This ability not only finds applications in the entertainment and education fields (Javornik et al., 2017; Hoang et al., 2018), but also in the psycho-medical areas. For example, virtual embodiment was previously used in MR to simulate medical prostheses (Lamounier Jr et al., 2012; Nishino et al., 2017) and to investigate non-intrusive therapies of chronic pain and mental disorders (Eckhoff et al., 2019; Kaneko et al., 2019).

The present study explores how the Sense of Embodiment (SoE) of virtual self-avatars is expressed in MR environments and whether the mixing of virtuality and reality impacts it. Indeed, by applying coherent sensory feedback, MR technologies can create virtual Body Ownership Illusions (BOIs) similar to the ones previously witnessed in virtual environments (Maselli and Slater, 2013; Wolf and Mal, 2020).

Such illusions were widely studied in VR (Kilteni et al., 2012a; Spanlang et al., 2014; Kilteni et al., 2015), but little is known about them in MR contexts where the apprehension of virtual content is considerably different. In particular, Optical See-Through (OST) systems such as Microsoft HoloLens 2 or Magic Leap are MR displays that usually produce partially transparent renderings (Evans et al., 2017). This transparency is inherent to their optical components, whose opacity varies depending on the lighting conditions (Kress and Starner, 2013). As a consequence, the real body of the user generally remains (partly) visible during first-person virtual embodiment experiences. Given that OST headsets are gaining in popularity and that more and more applications provide virtual hand overlays, studying the embodiment process in such visual experiences seems of increased importance.

Most of the previous research on the SoE was focused on the avatar itself or on the means to induce ownership (Kilteni et al., 2012b; Kokkinara and Slater, 2014; Hoyet et al., 2016). Our work, on the other hand, focuses on the role of environmental context in the SoE. More specifically, we aim to determine whether the mixing of real and virtual contents can impact the ability to embody virtual avatars in OST systems. Indeed, the visual feedback that VR and OST MR produce is significantly different: one displays fully coherent virtual graphics, while the other mixes reality and virtuality (Azuma, 1997). BOIs were already evidenced in both situations (Maselli and Slater, 2013; Wolf and Mal, 2020), but the differences in the perception of one’s avatar between these contexts have hardly been studied. Our main motivation is to clarify whether environmental conditions could play a part in the SoE. This information is valuable for future studies as it could mean that the context of the embodiment has to be taken into account during analysis. Conversely, if environmental conditions do not affect the SoE, then it would mean that the scope of BOI experimental possibilities could be extended to various mixed reality contexts.

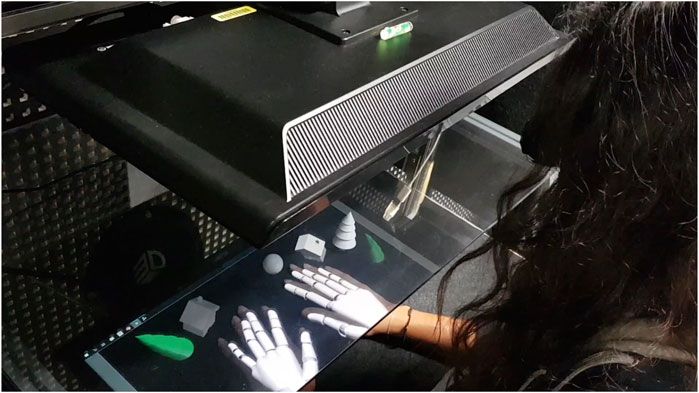

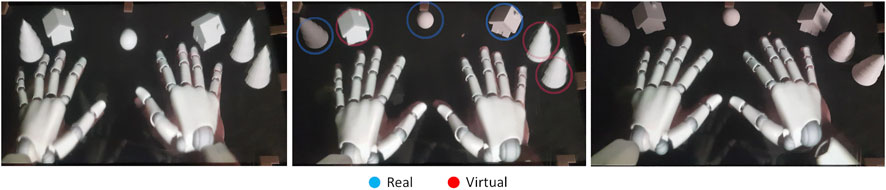

In this paper, we report an experiment that aimed to assess the influence of the mixing of real and virtual contents on virtual embodiment. Twenty-six participants experienced 3D virtual robot hands overlaid on their own hands (Figure 1). The SoE was induced through the completion of a sequence of visuomotor tasks repeated in three environments with different amounts of real/virtual objects: only virtual, mixed, and only real objects (Figure 2). Upon finishing the task, for each environment, a subjective questionnaire assessed the participants’ SoE.

FIGURE 1. The experimental setup. A user equipped with tracked shutter glasses looks at her virtual hands under the semi-transparent mirror. The rendered perspective of the holograms is adapted to her point of view in real-time. For illustration clarity, we colored two of the virtual trees in a distinct color (green) from the real objects (white). All objects were white during the experiment.

FIGURE 2. The three experimental conditions represented from the participant’s point of view. (Left) The “VIRTUAL” condition, where all the objects were virtual. (Middle) The “MIXED” condition, where real and virtual objects were mixed. (Right) The “REAL” condition, where all objects were real. The 3D rendering allowed virtual objects to appear to have the same 3D volume as their real counterparts.

In a nutshell, our results provide original insights regarding the perception of virtual avatars in MR environments. We could notably observe significant differences in the body ownership induced by the various environmental conditions, suggesting that the content of the environment, or its “level of virtuality”, is a new factor of influence over the SoE in MR applications.

Providing avatars that respond to gestures in real-time exposes users to visuomotor stimulation (Kokkinara and Slater, 2014). When congruent with the user, such stimulation can sometimes give rise to a “sense of embodiment” of the avatar corresponding to the illusion of owning, controlling, and being located inside the virtual body (Kilteni et al., 2012a; Jeunet et al., 2018). Avatars, in return, influence their users too: they can improve user experience (Yee and Bailenson, 2007), but also boost their performance (Banakou et al., 2018) and change the perception of themselves and their environment (Bailey et al., 2016). VR research has shown these effects to be particularly beneficial in the medical field (Kurillo et al., 2011; Mölbert et al., 2018), in social studies (Bailey et al., 2016; Banakou et al., 2016), or collaboration (Fribourg et al., 2018). One can easily imagine the benefits of transposing such scenarios to MR, where the real world remains visible.

Studies have shown that multisensory stimulation could successfully evoke the SoE of avatars in Augmented Reality (AR) (Suzuki et al., 2013; Škola and Liarokapis, 2016; Gilbers, 2017). However, Feuchtner and Müller (2017) showed that the real world’s visibility is double-edged since it includes by default the visibility of our own body, significantly hindering the strength of the SoE. Experiencing virtual embodiment while seeing one’s real body is nevertheless possible in cases where the avatar is realistically inserted into the environment, albeit in a weaker manner. Several works demonstrated this possibility through coherent visuomotor stimulation applied to partly transparent avatars (Wang et al., 2017) and avatars disconnected from the user’s body (Nimcharoen et al., 2018; Rosa et al., 2019; Wolf and Mal, 2020).

Aside from these findings, the SoE in MR remains a very young field of study, and not much is known about the validity of VR experimental results in MR environments. Indeed, comparing the outcomes of other experiments seems difficult as SoE measurement methods are often context-specific. Beyond that, it is uncertain whether two experiments replicated and evaluated identically in MR and VR will generate identical results. When real and virtual contents are displayed together, one can perceive a higher contrast between virtual rendering and real-life vision that does not appear in fully real or fully virtual environments. Perhaps there could be a difference in the experienced SoE due to the disruption of graphical coherence between the observed objects.

Among the studies that attempt to answer this question, Škola and Liarokapis (2016) examined the differences between the classical Rubber Hand Illusion and the Virtual Hand Illusion in both VR and AR. Their results suggest that the usage of AR and VR as a medium of virtual hand embodiment has an effect of similar strength, but lesser than with a real rubber hand. Earlier on, IJsselsteijn et al. (2006) investigated the same illusion in the framework of mediated and unmediated experiments. Their study replaced the rubber hand with 2D images of a fake hand projected onto a tabletop and compared the resulting SoE to the one obtained in a reproduction of the experiment’s original paradigm. Although the SoE was found to be positive in both conditions, IJsselsteijn et al. found a significantly weaker sense of ownership in the 2D projection of the hand. One explanation is that the visuotactile stimulation applied with a real brush on the 2D projection created a visual conflict between the real and the projected content, perceived as geometrically inconsistent.

A more recent study by Wolf and Mal (2020) investigated the differences between body weight perception in head-mounted VR displays and AR video see-through displays. During their experiment, subjects observed a photorealistic generic avatar through an interactive virtual mirror that provided visuomotor feedback. The VR condition showed a faithful reproduction of the physical environment observed in the AR condition. Contrary to their expectations, participants did not report significantly different feelings of ownership and presence in AR than in VR. They explain that this might be because the perceived immersion of their AR and VR systems was too similar.

Most of the studies mentioned above let their participants experience embodiment in the third person. Although not mandatory to induce the SoE, being able to visualize an avatar in first-person along with other virtual objects is of interest for MR applications. We are not aware of papers that have investigated the specific impact of mixing different amounts of real and virtual contents during embodiment experiences. However, the study of the differences in the perception of MR environments is not exclusive to the SoE field: computer display research, for instance, has contributed to identifying dissimilarities between AR and VR in terms of distance estimation (El Jamiy and Marsh, 2019), presence (Tang et al., 2004) or in application-related efficiency (Azhar et al., 2018). These results are beyond the scope of our study, but they illustrate the global inconsistency in human perception.

To explore the potential influence of MR environments on the SoE, we conducted an experiment in which participants embodied the hands of an avatar in first-person within three environmental conditions (see Figure 2). In each condition, the user embodied robot hands overlaid on their own hands and performed a visuomotor task aiming to induce an SoE over the avatar. This task was completed while observing a scene composed of real and/or virtual elements. The conditions differed only in the nature of these objects (i.e. virtual or real) which were placed in identical positions and preserved the same appearance.

Twenty-six participants from age 21 to 61 (

Among them, 11 reported having no prior experience with AR or VR, 10 had little prior experience, and five had used these technologies many times. All had normal or corrected-to-normal vision, and those with glasses could keep them on if this did not cause discomfort (one participant removed her glasses). Lastly, this study was approved by a local ethics committee.

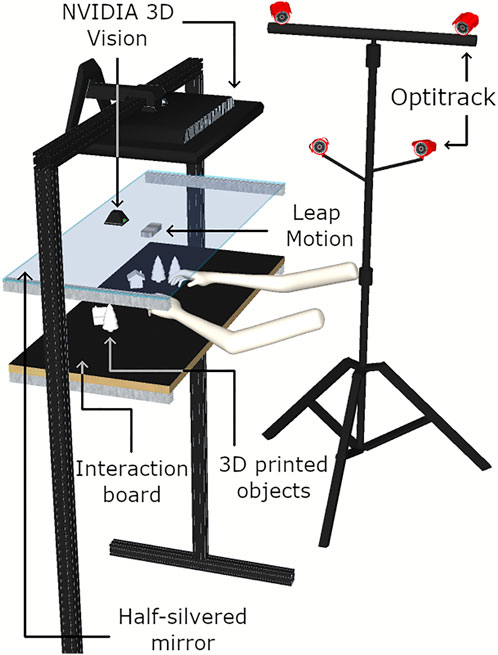

To control the visualization of both real and virtual contents in a seamless space, we built a setup inspired by previous VR fish-tank installations (Hachet et al., 2011; Hilliges et al., 2012). Our experimental setup is composed of a 3D display (NVIDIA 3D Vision, 120 Hz), a semi-transparent mirror, and a board for object placement. When equipped with shutter glasses, it allows subjects to observe semi-transparent “holograms” in the interactive volume located between the board and the mirror. These holograms are in fact the reflection of the 3D images displayed on the stereoscopic screen suspended above (see Figure 3).

FIGURE 3. The experimental setup in front of which the participants stood. Reflective markers were attached to the shutter glasses worn by the participants for head tracking.

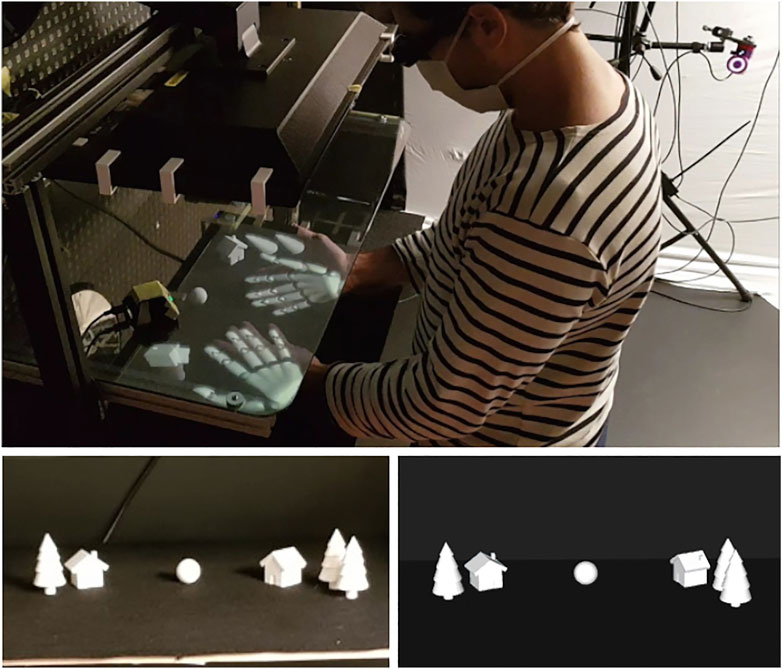

The experiment was developed using Unity 3D 2019.2. When positioning their forearms in the interaction volume, participants could see the forearms of an avatar co-located with their own and following their movements (see Figure 4). We used robot hand models extracted from the 2.3.1 version of the Leap Motion Unity SDK that was also used to track and animate the avatar’s hands.

FIGURE 4. (Top) A participant performing the task of turning his hands over during the MIXED condition. (Bottom) Illustration of the object configuration that was seen by the user: (Left) real 3D-printed versions and (Right) their virtual counterparts.

Secondly, to ensure a physically correct parallax, we implemented head tracking with Optitrack cameras. Indeed, the rendering of the 3D objects had to take into account the user’s point of view in real-time for volumetric cues to be displayed correctly (e.g. the user should see the side of an object when moving their head to the side). This adaptation was achieved through a custom shader implementing 3D anamorphic projections that distorts the rendering according to head tracking inputs.
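The head-coupled rendering described above can be illustrated with the standard off-axis (asymmetric frustum) projection. The sketch below is not the custom shader used in the experiment, and it ignores the reflection in the semi-transparent mirror; it only shows how a tracked eye position changes the frustum extents for a fixed image plane. The function name `off_axis_frustum` and the coordinate conventions are assumptions made for illustration.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return tuple(x / length for x in v)


def off_axis_frustum(lower_left, lower_right, upper_left, eye, near):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    The three corners describe the image plane in world coordinates and
    `eye` is the tracked head position (hypothetical inputs).
    """
    right_axis = _normalize(_sub(lower_right, lower_left))
    up_axis = _normalize(_sub(upper_left, lower_left))
    normal = _normalize(_cross(right_axis, up_axis))

    # Vectors from the eye to the corners of the image plane.
    to_ll = _sub(lower_left, eye)
    to_lr = _sub(lower_right, eye)
    to_ul = _sub(upper_left, eye)

    # Perpendicular distance from the eye to the image plane.
    distance = -_dot(to_ll, normal)
    if distance <= 0:
        raise ValueError("eye must be in front of the image plane")

    scale = near / distance
    return (_dot(right_axis, to_ll) * scale,
            _dot(right_axis, to_lr) * scale,
            _dot(up_axis, to_ll) * scale,
            _dot(up_axis, to_ul) * scale)


# A centered eye yields a symmetric frustum; moving the head sideways
# skews it, which is what produces the motion parallax.
centered = off_axis_frustum((-1, -1, 0), (1, -1, 0), (-1, 1, 0), (0, 0, 2), 1.0)
shifted = off_axis_frustum((-1, -1, 0), (1, -1, 0), (-1, 1, 0), (0.5, 0, 2), 1.0)
```

In a full renderer these extents would feed a perspective matrix, followed by a view transform that moves the eye to the origin; both steps are omitted here.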

The experimental device was installed in a closed room where reflective objects were masked to reduce infrared interference. To reinforce the illusion of presence of the 3D content, we simulated the lighting conditions of the room, illuminated with a single projector to simplify the simulation. As the semi-transparent mirror reflects only 70% of the incident light, the projector lighting makes the user’s real hands slightly visible underneath the mirror, resulting in a rendering close to the one proposed in commercialized OST headsets.

The environment observed during the experiment consisted of empty space with six virtual and/or real miniature objects (houses, trees, balls), modeled and printed in 3D (see Figure 4). Depending on the experimental condition, a number of these real or virtual objects were positioned on the interaction support, in front of where the participant would place their hands. As the system did not provide haptic feedback for the virtual objects, the participants were instructed not to touch any of the objects in order not to introduce a difference in perception between the real and virtual objects. Once the experiment started, instructions were given to the participants by playing an audio recording on a speaker placed at the back of the board.

Participants started by completing a consent form containing the written instructions of the experiment. After verbal explanations and a video demonstration of the task, participants were asked to fill in a questionnaire assessing their Locus of Control (LoC) for further analysis (cf. Section 3.5). They were then invited to stand in front of the experimental setup and to place their hands flat on the interaction board to proceed with a short calibration, lasting approximately 2 min. This calibration aimed to align the virtual hands with the real ones by moving them (if needed) in the direction expressed by the participant. While participants acclimatized to the system, the experimenter invited them to ask questions and asked them to focus on their virtual hands.

The experiment was divided into three blocks that ran sequentially, each displaying different amounts of real/virtual content. Depending on the block, miniature houses, trees, and a small ball appeared either really or virtually (or both) laid ahead of the participant’s hands, on top of the interaction board. The experiment then involved a visuomotor task, as this kind of stimulation has been shown to be more effective than visuotactile stimulation at inducing ownership (Kokkinara and Slater, 2014). This task consisted of successively reproducing simple gestures dictated by audio instructions and presented beforehand. More specifically, participants had to i) drum with their fingers, ii) flip their hands, iii) adduct/abduct their fingers, and iv) position their right/left hand in front of a given item. These gestures, repeated 3 times each, had to be executed during a time delimited by a start “beep” and a stop “beep” for a total duration of about 4 min.

At the end of the audio recording, participants left the experimental setup to complete an SoE questionnaire adapted from the 7-point scale questionnaire of Gonzalez-Franco and Peck (2018) (cf. Section 3.5). They were then invited to proceed to the next experimental block and to repeat the same steps. The same avatar, task, and object configurations were maintained, but some virtual objects were replaced by their real version (or vice versa) depending on the condition tested. After going through all blocks and answering their respective questionnaires, participants were asked to fill in one last questionnaire assessing their post-experiment perception of the avatar and the different environments seen. The average total time per participant, including instructions, questionnaires, experiment, breaks, and debriefing, was 1 h 30 min.

We tested three conditions where we varied the level of physicality/virtuality of the MR environment (Figure 2). Previous research indicates that there are considerable differences between individuals in their ability to experience BOIs (IJsselsteijn et al., 2006; Dewez et al., 2019). As between-subject designs are very sensitive to inter-individual differences, we chose to use a within-subject design to monitor these variations and increase the sensitivity of our experiment. The conditions were preceded by an acclimation phase of about 1 min and counterbalanced with a Latin Square to avoid order effects.

• Condition 1 (VIRTUAL) The environment contained only virtual items laid on the interaction support in front of the participant’s hands.

• Condition 2 (MIXED) Some of the virtual items (half of them) were replaced by their real version.

• Condition 3 (REAL) Except for the avatar hands, all content viewed by the participant was real.

One must note that all of these conditions take part in a real environment as we are interested in comparing OST experiences of embodiment. Therefore, the term “VIRTUAL” for condition one does not refer to a Virtual Reality condition, but to the virtual objects and hands that are featured in the (real) scene.
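The Latin Square counterbalancing of the three conditions can be sketched as follows. The text does not state which square was used or how participants were assigned to its rows, so the cyclic square and the round-robin assignment below are assumptions, and `latin_square` and `assign_orders` are hypothetical helper names.

```python
CONDITIONS = ["VIRTUAL", "MIXED", "REAL"]


def latin_square(items):
    """Cyclic Latin square: row i is the item list rotated left by i."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def assign_orders(participant_ids, items=CONDITIONS):
    """Give each participant one row of the square, cycling through rows."""
    square = latin_square(items)
    return {pid: square[index % len(square)]
            for index, pid in enumerate(participant_ids)}


square = latin_square(CONDITIONS)
orders = assign_orders(range(1, 27))  # 26 participants, numbered 1 to 26
```

Every condition appears once in each row and once in each position. With 26 participants the three orders cannot be used equally often, so a small imbalance is unavoidable under this scheme.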

The choice of the avatar’s robotic appearance was based on the recommendations of Gilbers (2017), who found that human virtual hands embodied in AR provoked strong criticism from participants regarding their realism and likeness. Several studies have similarly observed that Virtual Hand Illusion (VHI) experiences should provide gender-matching models (Schwind et al., 2017) and color-matching skins (Lira et al., 2017) to avoid user self-comparison with the embodied avatar. Our experiment being focused on the influence of mixing realities and not of the avatar’s appearance, we did not want to provide personalized avatars that would potentially create inter-individual variations. Therefore, we chose a generic non-gendered robot model to avoid bias and increase result comparability.

For consistency, objects were arranged in the same way for all participants, and replaced objects were always the following: the sphere in the middle, the tree on the left, and the house on the right (see Figure 4). The experimenter created the condition arrangements during the completion of the embodiment questionnaires, without the participant witnessing this change. One must note that, unlike the virtual hands, the virtual objects were not superimposed over their real counterparts. We decided to proceed this way because it is the most common situation encountered in head-mounted OST experiences: holograms are displayed in vacant space while virtual hands (when provided) are textures overlaid on the user’s hands.

Regarding the task, the drumming and adduction/abduction gestures were inspired by the experiment of Hoyet et al. (2016) which used them to induce SoE toward a six-fingered hand in VR. The third gesture used in their study (opening/clenching the fists) was replaced by the gesture of turning/flipping the hands because it caused fewer tracking artifacts. The fourth gesture is of our design: it aims to momentarily (5 s) shift the participant’s attention to the displayed environment so that they could take it into account when evaluating how they felt about the avatar.

To limit potential habituation and practice effects, each of these four gestures was repeated in a random order, renewed at each condition. The duration of the task (4 min) was chosen so that the stimulation would be long enough to induce an SoE (Lloyd, 2007) and short enough not to cause weariness.
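One way to read this randomization is that the twelve gesture instances (four gestures, three repetitions each) are shuffled anew for every condition. The sketch below follows that reading, which is an assumption: the text does not say whether repetitions were interleaved or grouped. The gesture labels and function names are illustrative.

```python
import random

GESTURES = ["drum fingers", "flip hands", "adduct/abduct fingers", "point at item"]
REPETITIONS = 3


def gesture_sequence(rng):
    """Return the 12 gesture instances of one block in random order."""
    sequence = GESTURES * REPETITIONS
    rng.shuffle(sequence)
    return sequence


def sequences_for_session(conditions, seed=None):
    """Draw a fresh random gesture order for each condition of a session."""
    rng = random.Random(seed)
    return {condition: gesture_sequence(rng) for condition in conditions}


session = sequences_for_session(["VIRTUAL", "MIXED", "REAL"], seed=1)
```

Passing a seed makes a session reproducible, which helps when an audio recording has to be prepared ahead of time for each order.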

Out of the 26 participants, the data of four was excluded from further analysis due to technical issues compromising the avatar’s perception. For the rest, we analyzed the data from the questionnaires presented in the following subsections.

The LoC is a personality trait corresponding to the “degree to which people believe that they have control over the outcome of events in their lives as opposed to external forces beyond their control” (Rotter, 1966; Dewez et al., 2019). This trait was shown by Dewez et al. (2019) to be correlated positively with the sense of ownership and agency. Its measurement therefore provides an additional tool to get a sense of the variability in the obtained results. A translated version (Rossier et al., 2002) of the 24-item IPC scale of Lefcourt (1981) was administered before the experiment’s start. This scale provides scores between 0 and 48 for three dimensions of the LoC: “Internality”, “Powerful Others” and “Chance”.
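The 0 to 48 range is consistent with a common way of scoring the IPC scale: eight items per dimension, each answered from -3 to +3, with a constant of 24 added to the sum. The sketch below assumes that scheme. The item-to-dimension mapping in the usage example is a placeholder, not the real assignment, which should be taken from the published scale.

```python
def ipc_scores(responses, subscale_items):
    """Score each LoC dimension as the sum of its eight items plus 24.

    responses: dict mapping item number to an answer in -3..+3 (assumed coding).
    subscale_items: dict mapping a dimension name to its eight item numbers.
    """
    scores = {}
    for name, items in subscale_items.items():
        if len(items) != 8:
            raise ValueError(f"{name}: expected 8 items, got {len(items)}")
        answers = [responses[item] for item in items]
        if any(not -3 <= answer <= 3 for answer in answers):
            raise ValueError(f"{name}: answers must lie between -3 and +3")
        scores[name] = sum(answers) + 24
    return scores


# Placeholder mapping for illustration only (NOT the real item assignment).
placeholder_items = {
    "Internality": list(range(1, 9)),
    "Powerful Others": list(range(9, 17)),
    "Chance": list(range(17, 25)),
}
all_agree = {item: 3 for item in range(1, 25)}
all_disagree = {item: -3 for item in range(1, 25)}
highest = ipc_scores(all_agree, placeholder_items)
lowest = ipc_scores(all_disagree, placeholder_items)
```

Under this coding, answering +3 everywhere gives 48 on each dimension and answering -3 everywhere gives 0, matching the range stated above.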

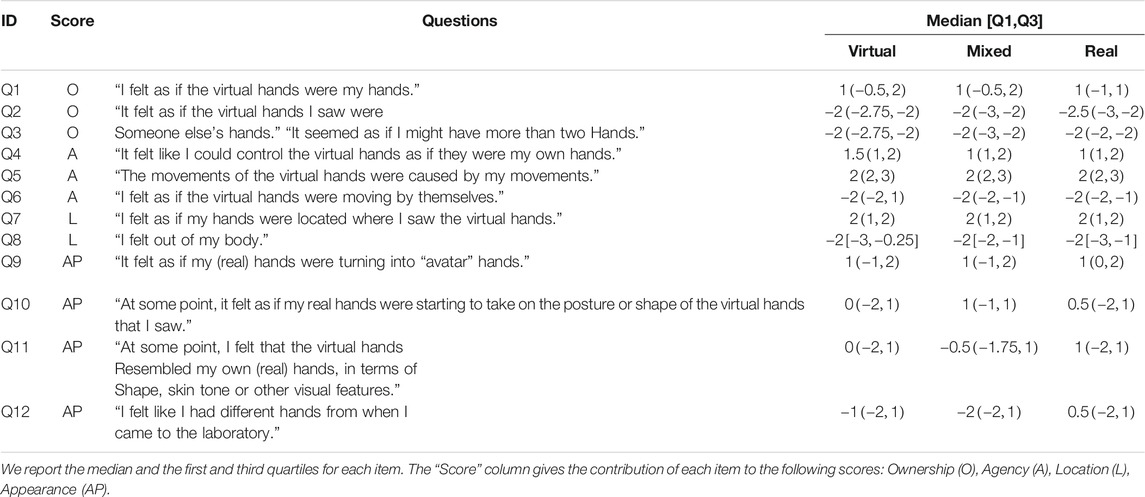

Despite a large number of studies on the sense of embodiment, there still is no gold standard to evaluate this feeling. We chose the widely used 7-point Likert scale questionnaire proposed by Gonzalez-Franco and Peck (2018), built with the most prevalent questions found in the literature. This questionnaire covers six areas of interest: body ownership, agency, tactile sensations, location, external appearance, and response to external stimuli. We removed the questions unrelated to our experimental settings (e.g. statements on mirrors, haptic feedback, non-collocated virtual bodies, etc.) to keep a total of 12 questions (see Table 1). Their answers can be computed into sub-scores by group of interest and into a global embodiment score.

TABLE 1. The embodiment questionnaire, adapted from Gonzalez-Franco and Peck (2018).

Given our interest to explore the impacts of blending real and virtual contents, we designed a post-experiment questionnaire to assess the perceptual differences between the environments that we tested. These questions were tailored to address aspects we felt could influence the SoE, such as the feeling of presence of the objects, the realism of the observed content, the feeling of being “immersed”, and the mental workload demanded by the task. The participants were asked to remember each condition and then to rate them on a 6-point Likert Scale, ranging from “strongly disagree” to “strongly agree”.

We also added two open-ended questions in order to get feedback in the participants’ own words. The first one asked the participants to describe their feelings regarding their virtual hands and whether there were differences between the three conditions. Because user appreciation is important in the design of such experiences, the second question asked the participants to indicate if they had a preferred condition and to rank the conditions from most preferred to least preferred. The full 8-item questionnaire is detailed in Table 2.

Each of the experimental interests surveyed in the embodiment questionnaire (i.e., ownership, agency, location, and appearance) was calculated into a separate score as described in the original questionnaire (Gonzalez-Franco and Peck, 2018): the textual ordinal answers were first converted to numerical data (“strongly disagree”

The LoC was also calculated into three separate scores corresponding to the Internality, Powerful Others, and Chance dimensions of Levenson’s scale (Lefcourt, 1981). As for the post-experiment questionnaire, the questions were analyzed one by one without resorting to scores. Their answers (on a 6-point Likert Scale) were converted to numerical data (“strongly disagree”

In order to have an overview of our population’s LoC profiles, we produced descriptive statistics on the LoC scores. The medians for each dimension (i.e. Internality, Powerful Others and Chance) were

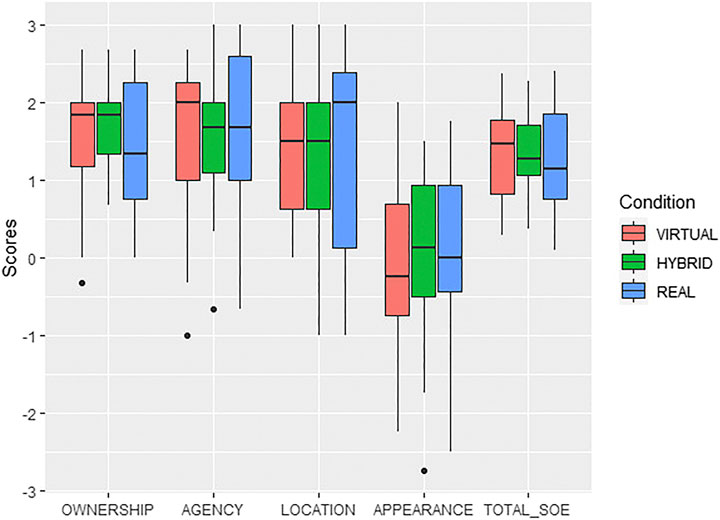

Generally speaking, all conditions appear to have successfully evoked a positive SoE. A summary of the descriptive analysis of each score is presented in Figure 5 and Table 3.

FIGURE 5. Boxplots representing the averaged embodiment scores for each condition. The scores all range from

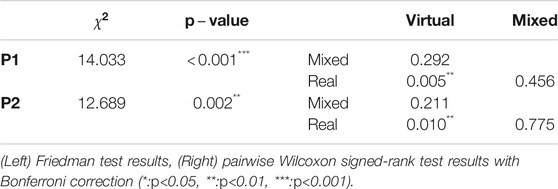

To identify potential differences between the SoE of each condition, we ran separate statistical tests on the embodiment scores. Shapiro-Wilk and Mauchly’s tests showed that none of the scores met the assumptions required for an ANOVA. Therefore, we applied Friedman’s tests as they take into account the ordinal nature of Likert scales (Table 4). Only the ownership score came out as significantly different across the tested environments (

Subsequently, we applied a posthoc test (Wilcoxon Signed-rank) with Bonferroni correction to determine pairwise differences. We found that this significant result was issued from the comparison between the MIXED and the REAL conditions (

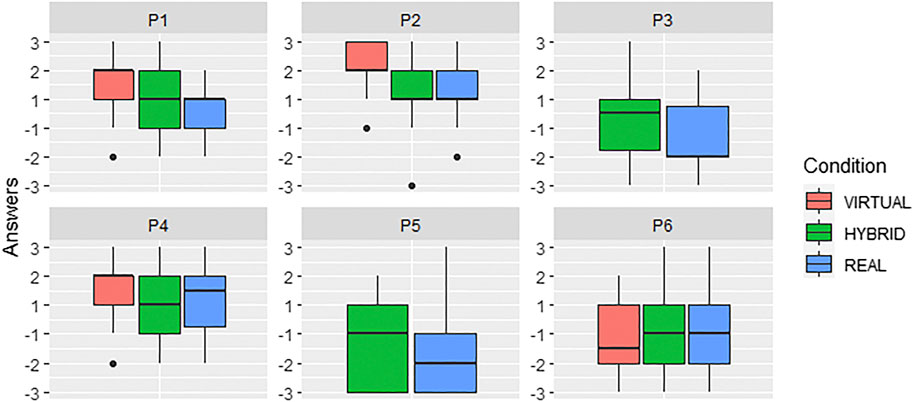

To get a first idea of the post-experiment questionnaire results, we made a descriptive analysis summarizing the main features of the data (see Table 2). The distribution of answers for questions P1 to P6 is illustrated in Figure 6.

FIGURE 6. Boxplots of the post-experiment questionnaire answers, evaluated on a 6-point (forced) Likert scale. Answers were coded from

Next, we analyzed whether these six statements were significantly different across conditions. To do so, we ran Friedman tests on each of them (all questions were non-normally distributed) and found significant differences in questions P1 (

TABLE 5. Significant differences found in the post-experiment questionnaire results across tested environments.

Posthoc tests with Bonferroni correction revealed that the significant differences resulted from the VIRTUAL-REAL pair in both cases (

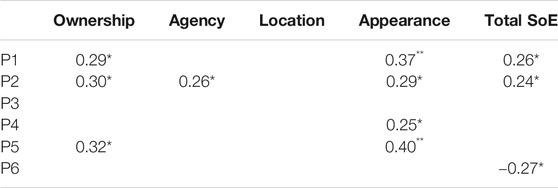

To identify a potential link between the perception of the environment and the SoE, we applied Spearman correlation tests between the SoE scores (all conditions together) and questions from P1 to P6. Positive correlations appeared with P1 (immersion), P2 (avatar integration), P4 (object presence), P5 (mix discomfort) and the embodiment scores detailed in Table 6. There was also a negative correlation between P6 (mental workload) and the total SoE score, but no significant difference was found between the conditions regarding this question.

TABLE 6. Spearman correlations between the embodiment scores and the post-experiment questionnaire (*:p<0.05, **:p<0.01, ***:p<0.001).
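For readers unfamiliar with the statistic, Spearman's coefficient is the Pearson correlation computed on ranks, with tied values sharing their mean rank. The sketch below shows the computation on made-up numbers; it does not reproduce the study's data, and the significance levels reported in Table 6 would need an additional test that is not shown.

```python
import math


def mean_ranks(values):
    """Rank values from 1 upward; tied values share their mean rank."""
    ordered = sorted(values)
    rank_of = {}
    start = 0
    while start < len(ordered):
        end = start
        while end + 1 < len(ordered) and ordered[end + 1] == ordered[start]:
            end += 1
        rank_of[ordered[start]] = (start + end) / 2 + 1
        start = end + 1
    return [rank_of[value] for value in values]


def spearman_rho(x, y):
    """Spearman rank correlation between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = mean_ranks(x), mean_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return covariance / math.sqrt(var_x * var_y)


# Illustrative values only, e.g. an SoE score against a Likert answer.
rho = spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
```

Because only ranks enter the computation, the coefficient suits ordinal Likert answers, where the distance between response options is not assumed to be equal.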

Regarding the P7 open-ended question, several topics came out as prominent: the ownership/disconnection of the avatar, the evolution of this feeling, the system’s quality, the separation/merge of virtual and real worlds, and the enjoyment of the experiment. Participants ordered their most preferred to least preferred conditions as follows:

(i) VIRTUAL

(ii) MIXED

(iii) Other rankings appeared 3 times or less.

(iv) One participant could not rank the conditions, being undecided.

Overall, VIRTUAL seemed to be the most preferred condition (half ranked it as their first choice) and REAL the least preferred one (half ranked it as their last choice), but we could not confirm this preference statistically (Friedman

To put the previously presented results to the test, we conducted several posthoc analyses. Their results are presented in this section.

Although it is of reduced power, the analysis of the first trials of each participant can be interesting to check if the results would have been the same in a between-subjects design. Indeed, first trials can be grouped by condition to simulate independent measures. We applied such grouping and performed a Kruskal-Wallis test to compare the distributions of the three groups. No significant results were found for group sizes of 8, 7, and 7 for VIRTUAL, MIXED, and REAL respectively.
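A minimal sketch of the Kruskal-Wallis computation for three independent groups is given below. It uses the usual chi-square approximation, for which the p-value with two degrees of freedom has the closed form exp(-H/2). The data are illustrative, and for groups as small as seven or eight participants an exact test may be preferable to this approximation.

```python
import math


def kruskal_wallis_three_groups(groups):
    """Return (H, p) for three independent groups of ordinal scores."""
    if len(groups) != 3 or any(len(group) == 0 for group in groups):
        raise ValueError("expected three non-empty groups")
    pooled = sorted(value for group in groups for value in group)
    total = len(pooled)

    # Mean rank of each distinct value, plus the tie-correction term.
    rank_of, tie_term, start = {}, 0, 0
    while start < total:
        end = start
        while end + 1 < total and pooled[end + 1] == pooled[start]:
            end += 1
        rank_of[pooled[start]] = (start + end) / 2 + 1
        ties = end - start + 1
        tie_term += ties ** 3 - ties
        start = end + 1

    weighted = sum(sum(rank_of[v] for v in group) ** 2 / len(group)
                   for group in groups)
    h = 12 / (total * (total + 1)) * weighted - 3 * (total + 1)
    correction = 1 - tie_term / (total ** 3 - total)
    if correction == 0:
        raise ValueError("all observations are identical")
    h /= correction
    return h, math.exp(-h / 2)  # chi-square survival function, df = 2


# Illustrative, fully separated groups (not the study's data).
h_stat, p_value = kruskal_wallis_three_groups([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
```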

As mentioned in Section 3.5.2, the 12-item SoE questionnaire we used was adapted from the questionnaire of Gonzalez-Franco and Peck (2018). This questionnaire was updated after the completion of our study (Peck and Gonzalez-Franco, 2021): 9 items were removed from the original version and a new score computation method was proposed.

As the 16 items of this updated version are a subset of the previous questionnaire, we could run a second analysis with this revised version’s methodology. The goal of this recalculation was to extend the validity of our initial analysis by making it backward compatible with papers that used the 2018 questionnaire and forward compatible with papers that adopted the 2021 questionnaire. To do so, we removed from analysis the questions that were not present in the new questionnaire and recomputed the scores following the newly described method. Using this method, we could not observe the significant difference that we had found between REAL and MIXED in terms of Ownership scores. We discuss these different outcomes in Section 6.7.

To understand better how the previously used items were involved in the significant results we had found, we performed a per-item analysis comparing each item of the embodiment questionnaire across the three conditions. Through this process, we aimed to identify which questions contributed to the significant difference in the Ownership score (Section 4.2).

The per-item analysis showed that Q1 had significantly different answers across the conditions (Friedman chi-squared = 9, p-value = 0.011). A posthoc test (Wilcoxon signed-rank with Bonferroni) revealed that this difference occurred between the conditions MIXED and REAL, matching with the Ownership score analysis. Other questions did not provide a significant difference.
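The reported pair of values can be checked by hand: with three conditions the Friedman statistic has two degrees of freedom, and the chi-square survival function then reduces to exp(-Q/2), so Q = 9 gives p ≈ 0.011. The sketch below implements the statistic with the usual tie correction for exactly three repeated measures. The example ratings are made up and the function names are illustrative.

```python
import math


def chi2_sf_df2(statistic):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-statistic / 2)


def mean_ranks(values):
    """Rank values from 1 upward; tied values share their mean rank."""
    ordered = sorted(values)
    rank_of, start = {}, 0
    while start < len(ordered):
        end = start
        while end + 1 < len(ordered) and ordered[end + 1] == ordered[start]:
            end += 1
        rank_of[ordered[start]] = (start + end) / 2 + 1
        start = end + 1
    return [rank_of[value] for value in values]


def friedman_three_conditions(rows):
    """rows: one (cond_a, cond_b, cond_c) triple of ratings per participant."""
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        row = list(row)
        if len(row) != k:
            raise ValueError("each row needs exactly three ratings")
        for j, rank in enumerate(mean_ranks(row)):
            rank_sums[j] += rank
        for value in set(row):
            ties = row.count(value)
            tie_term += ties ** 3 - ties
    correction = 1 - tie_term / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("every participant gave three identical ratings")
    q = 12 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3 * n * (k + 1)
    q /= correction
    return q, chi2_sf_df2(q)


# Four hypothetical participants who all rank the conditions the same way.
q_stat, p_value = friedman_three_conditions([(1, 2, 3)] * 4)
reported_p = chi2_sf_df2(9)  # the statistic reported above
```

Perfect agreement among n participants gives the largest possible statistic, n(k - 1), which is 8 for the four made-up rows.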

To our knowledge, this study is the first to compare the SoE of virtual hands in environments with different amounts of virtual/real content. Our goal was to explore how the blending of realities experienced in OST systems can modulate virtual embodiment sensations. Our results reveal a potential difference in virtual body ownership linked to the amount of virtual/real content seen:

• Participants perceived the virtual hands significantly more as their own in the condition where both kinds of objects were mixed (MIXED) than in the one where only real objects (REAL) were in their field of view (Table 4).

• On the other hand, displaying unmixed virtual objects (VIRTUAL) created similar body ownership scores as in the MIXED condition (Figure 5).

• Meanwhile, the difference that could be expected between the VIRTUAL and REAL conditions did not appear (Table 4).

The other embodiment factors we evaluated (i.e., agency, self-location, appearance, and total SoE) did not emerge as significantly different. The fact that body ownership evolved separately is not without precedent. Indeed, previous studies have shown that SoE sub-components could be dissociated and elicited independently but that their co-occurrence would lead them to strengthen each other (Kalckert and Ehrsson, 2012; Braun et al., 2014). On the other hand, the differences we observed between the MIXED and REAL conditions raise novel questions to which we attempt to provide an explanation.

As the same protocol, tracking system, and avatar were used for the three conditions, the origin of the ownership differences we witnessed would logically be related to the variations in the objects set up in the environment. The first avenue we explored therefore consisted in evaluating how each environment was perceived and in identifying potential differences in their cognition.

From the post-experiment questionnaire, the VIRTUAL condition appeared as significantly superior to REAL in terms of i) feeling of immersion and ii) feeling of “integration” of the virtual hands in the real environment (Table 5, P1 and P2 respectively). More generally speaking, these two feelings were stronger when the virtual objects were present in larger quantities. This is reflected by the percentages of participants who agreed or strongly agreed with these statements: 59.1 and 77.3% did in VIRTUAL for P1 and P2 respectively, 45.5% did in MIXED (for both), against 22.7 and 31.8% in REAL.

Given these decreasing ratings, it seems that replacing a part of the real items with virtual ones helped provide immersion and coherence to the avatar. We investigated a potential relationship with the SoE by applying Spearman correlation tests between the P1/P2 answers and each of the embodiment scores (all conditions taken together). P2 showed moderate positive correlations for all scores except location, whereas P1 did for all except location and agency (Table 6). In other words, regardless of the condition, participants who thought the virtual hands were well-integrated also tended to score higher on these specific SoE dimensions. Similarly, participants who felt more immersed also scored higher on these embodiment scores (and vice versa).
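For readers less familiar with the Spearman test used here, a minimal standard-library sketch is given below: both variables are converted to ranks (ties share their mean rank) and the Pearson correlation of the ranks is returned. The function names and the example values are hypothetical and are not taken from the study's data. Significance testing is omitted.

```python
import math

def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the ranked data."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical Likert answers (e.g., a P2-style item) and ownership scores.
integration_answers = [1, 2, 2, 4, 5, 3]
ownership_scores = [-1.0, 0.5, 0.0, 1.5, 2.0, 1.0]
rho = spearman_rho(integration_answers, ownership_scores)
```

Because the coefficient depends only on ranks, it suits ordinal questionnaire answers, for which the distance between "agree" and "strongly agree" is not assumed to be equal to the other steps of the scale.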

“Immersion” is a psychological state linked to the awareness of one’s own environment and physical state (Slater, 2018). As the entire experiment was visualized in OST, participants were certainly more aware of the real world than in previous SoE studies in VR. Nevertheless, the conditions appeared to be different enough for participants to express a preference for VIRTUAL in P1 and P2. The fact that this condition had higher immersion ratings seems logical, as the virtual content occupied considerably more visual space in it. This visual occupation could have led participants to be more distracted from their real hands, and therefore to be more prone to develop body ownership in this condition. This would be in line with previous studies that found immersion to improve the SoE in VR (Waltemate et al., 2018).

On the other hand, it remains unclear as to why the ownership scores of VIRTUAL and REAL did not emerge as significantly different when the P1 and P2 ratings were found to be significantly lower in REAL than in VIRTUAL. This is especially puzzling since MIXED and REAL did show a significant difference in their ownership scores, but not in P1 and P2 answers. In this regard, it should be kept in mind that the correlations mentioned above are only moderate and that other factors of influence could be at play.

A starting point to clarify these intricate results can be found in the answers to the P7 open-ended question. They suggest that participants had remarkably varying feelings about the REAL condition. This disparity is also reflected in an inter-quartile range considerably larger than in the SoE scores of the two other conditions (Figure 5). On the one hand, many reported a weaker SoE when no virtual object was around: e.g., “The virtual hands felt less like my hands when it was all real”, “The fully real environment introduced greater distance between the robotic hands and the objects”. On the other hand, some participants appreciated REAL for its visual uniformity and often compared it to VIRTUAL: e.g., “It seemed more ‘real’ when everything was virtual or real”, or “It felt easier when everything was either all virtual or all real”.

Despite the relatively different distributions of REAL and VIRTUAL’s embodiment scores, such comments suggest that there are similar aspects in the perception of these two conditions. One possibility is that the unmixed objects of both conditions conveyed a shared feeling of homogeneity which was partly disrupted in MIXED (cf. Section 6.3). This uniformity in the objects is perhaps what participants referred to when comparing the REAL and VIRTUAL conditions. The proportion of participants who were sensitive to it might have led VIRTUAL not to stand out as significantly different from REAL in this particular experiment. However, at this point, we cannot determine whether this observation is specific to our experiment. A larger study will have to be conducted in order to draw conclusions on the differences between the SoE in environments with the properties of VIRTUAL and REAL.

Like in the REAL condition, the MIXED condition generated a large spectrum of responses to P7. Some expressed their sensitivity to the visual contrast between real and virtual contents: e.g., “I was much more aware of the distinction between real and virtual objects.” These participants often described that they saw the objects as belonging to “separate worlds” or saw “superimposed levels of reality” that disturbed them or that they disliked. Meanwhile, other participants were not bothered by the mix and even appreciated it: e.g. “It took me a moment to notice that there were both real and virtual objects”; “Seeing a mixture of real and virtual objects helps to merge the two. It is harder to do the merge without it”.

In the face of these diametrically opposed comments, it seems that some participants could be categorized as “responders” or “rejecters” of the MIXED condition. Rejecters would correspond to users who were bothered by the mix of objects and who resented the contrast between the real and virtual contents. These participants may have related to the real content undividedly, which would have prevented them from strongly connecting with the avatar. The VIRTUAL condition could have been experienced as less disruptive than the MIXED and REAL conditions for them. For responders, on the other hand, the virtual content of VIRTUAL may have appeared as belonging to a separate layer, overlaid on the real environment but not mixing with it. Similarly, the virtual hands of the REAL condition could also have stood out as extraneous or out of place, being the only virtual content present in the scene. Mixing objects in MIXED could therefore have helped them to make the real and virtual layers “miscible”.

Altogether, the perception of the objects seems to divide the participants into three separate groups: those who found the mix helpful for their embodiment experience, those who found it disruptive, and those who found the absence of mix helpful (cf. Section 6.2). These groups seem to match the groups of rankings that came out of question P8, which asked participants to rank the conditions by order of preference (cf. Section 4.3). We attempted to investigate the significance and intersections of these groups with cluster analysis, but the small number of participants in our experiment did not allow us to identify them reliably. A larger study will be required to verify these hypotheses.

So far, our results show that participants had considerably varying reactions to all three conditions. The dominant reaction led MIXED to produce stronger body ownership than REAL, but its existence seems to have multiple origins that are difficult to define precisely. Among them, we previously identified that the perception of the virtual-real mix had a key role in the strength of the illusion. One possibility is that this perception has something to do with subjective expectations of the technology. Indeed, participants who reported a preference for VIRTUAL or REAL often commented that they experienced technical inconsistencies more strongly in MIXED (e.g., “The textures and the brightness seemed unnatural”). Meanwhile, the ones who preferred the MIXED condition shared their appreciation of the lighting simulation and tracking at the same level as the other conditions (e.g., “The shadows of virtual objects made the experience quite realistic”).

Surprisingly, however, most of them (indistinctly) expressed that the mix of objects in MIXED did not influence the perception of their avatar. This suggests that they were not conscious that their answers were influenced by the mix of content they observed.

As previous research showed correlations between personality traits and the SoE (Jeunet et al., 2018; Dewez et al., 2019), we suspected that such inner judgment could be related to the LoC profiles described in Section 4.1. The LoC was also formerly identified as linked to control beliefs when dealing with technology and therefore could have influenced the agency and acceptance of the avatar (Beier, 1999). We investigated whether the profiles of our participants could be predictors of the “rejecter” and “responder” categories by applying a Spearman correlation test between the LoC dimensions and the embodiment scores. While we did find several significant correlations between locus scores and embodiment scores, we could not collect enough evidence to support such a classification or to draw conclusions on the influence of the LoC.

In our experiment, high appearance scores reflected a strong personal identification with the avatar’s visual traits. This visual identification is usually not explicitly cited as one of the principal sub-components of the SoE (Kilteni et al., 2012a). However, it is most often recognized as an important top-down influence factor of the feeling of body ownership (Lugrin et al., 2015; Waltemate et al., 2018). Several studies in fact found that BOIs tended to be weaker when the embodied object was morphologically too different or in too inconsistent a pose (Tsakiris and Haggard, 2005; Costantini and Haggard, 2007; Lin and Jörg, 2016). In our case, the embodied hands had a human morphology and were aligned with the participant’s hands. Yet, of all scores, appearance was the one with the lowest outcomes in all conditions. Its median value hovered around 0 and spread out between −1 and 1 for about half of the population, hinting at some hesitation.

This is very likely related to the texture of the virtual hands that gave them a robotic appearance. We chose a non-human avatar to avoid bias linked to user gender, ethnicity, or general criticism caused by self-comparison (Gilbers, 2017; Schwind et al., 2017; Lira et al., 2017). However, this choice led several participants to verbally report feeling estranged from the virtual hands and having the sensation of “wearing” them as if they were “gloves”. Indeed, personalized textures that appear natural-looking or match the user’s skin were previously shown to induce a stronger embodiment than generic textures (Haans et al., 2008; Lira et al., 2017). The lack of resemblance with the robotic skin therefore probably limited the extent of the self-identification with the avatar hands. The conformity in pose and morphology, on the other hand, seems to have positively moderated the appearance scores. This would be in line with the work of Lin and Jörg (2016) showing that robot hands produced a weaker visual identification than realistic human or zombie hands, but that they could still evoke an SoE.

On another note, appearance was found to be correlated with several aspects of the environment perception: participants who were able to relate to the avatar’s appearance tended to be more immersed (P1), to accept the virtual content more easily (P2, P4), but were also more bothered by the presence of real content (P5). This last correlation occurred with the ownership scores as well. We believe that these correlations denote limitations regarding the realism of the virtual content produced by our experimental setup. Indeed, as mentioned in Section 6.3, several participants described that their experience of the avatar was affected by the objects: the mix of virtual and real items raised identifiable differences in the perspective or lighting they displayed. The presence of real objects probably emphasized that the avatar was not real, as it was intuitively perceived as being of the same nature as the virtual objects. Perhaps the enhanced awareness of this virtuality was all the more disturbing when the appropriation of and identification with the hands were strong, being somehow contradictory to these feelings.

We found a moderate negative correlation between the total SoE score and the workload required by the visuomotor task. However, no significant difference appeared between the three conditions in terms of mental effort, evaluated by P6. This would be in line with previous research by Škola and Liarokapis (2016), who compared the cognitive workload induced by Rubber Hand Illusions in VR, MR, and real settings with electroencephalography and the NASA Task Load Index questionnaire. This is encouraging for future work intending to explore virtual embodiment scenarios in real environments, as the mix of real and virtual objects is often a desired feature of MR applications.

As described in Section 5, we could not strengthen the impact of our paper by reanalyzing our data with the updated version of the embodiment questionnaire (Peck and Gonzalez-Franco, 2021) or with the analysis of the first trials. The outcomes of our separate analyses can be summarized as follows: i) the 2018 questionnaire score evaluation shows there is a significant difference between REAL and MIXED in terms of Ownership sensations, ii) the per-item analysis of the 2018 and 2021 questionnaires confirms this result through a significant difference found in Q1, and iii) the 2021 questionnaire score evaluation and first trials analysis revealed no significant differences.

The lack of significant results in (iii) can perhaps be explained by the score computation method of the updated questionnaire and by the small number of participants. Indeed, the 2021 version of the questionnaire differs considerably from its 2018 version:

• Nine questions were removed from the original 2018 questionnaire, four of which were used in our initial analysis (Q2, Q3, Q5, Q6).

• Among these four questions, two were assessing body ownership in our questionnaire (Q2, Q3), which initially used three items to evaluate this dimension.

• Instead of each question contributing to a single dimension, all questions contribute to several dimensions, making their scores highly inter-dependent. Ownership is now computed with items Q1, Q4, Q7, Q10, and Q11.

• Four dimensions are evaluated instead of six: Ownership, Response, Appearance, and Multi-Sensory. The Tactile Sensations (not calculated here) and Location dimensions were merged into the “Multi-Sensory” dimension, and Agency was integrated into the “Response” dimension.

• The Total Embodiment score is no longer calculated with a weighted coefficients formula, but with a simple average of all scores.

Although Q1 was not removed from the Ownership scoring of the 2021 version, its combination with items Q4, Q7, Q10, and Q11 seems to have buried the information that it was yielding, which was not the case when it was combined with control statements Q2 and Q3.
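The dilution effect described above can be illustrated with a small arithmetic sketch. Both scoring formulas below are simplifying assumptions made for illustration only: the 2018-style score is assumed to be Q1 minus the control statements Q2 and Q3, and the 2021-style score is assumed to be the plain mean of Q1, Q4, Q7, Q10, and Q11. The item values are hypothetical and are not the study's data.

```python
from statistics import mean

def ownership_2018_style(answers):
    """Assumed form: main item minus the two control statements."""
    return answers["Q1"] - answers["Q2"] - answers["Q3"]

def ownership_2021_style(answers):
    """Assumed form: plain mean of the five items listed in the text."""
    return mean(answers[item] for item in ("Q1", "Q4", "Q7", "Q10", "Q11"))

# Hypothetical answers of one participant on a -3..3 scale.
real = {"Q1": 0, "Q2": -1, "Q3": -1, "Q4": 1, "Q7": 1, "Q10": 0, "Q11": 0}
# Same participant in MIXED: only Q1 changes, by two points.
mixed = dict(real, Q1=2)

diff_2018 = ownership_2018_style(mixed) - ownership_2018_style(real)  # 2
diff_2021 = ownership_2021_style(mixed) - ownership_2021_style(real)  # about 0.4
```

Under these assumptions, a two-point shift on Q1 carries over entirely to the 2018-style score but is divided by five in the 2021-style score, so the same between-condition variation on Q1 becomes harder to detect once it is averaged with items that do not vary.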

In their paper, the authors of the original questionnaire found that the new version is more sensitive to SoE variations but gave embodiment results similar to those of the previous one (Peck and Gonzalez-Franco, 2021). This led them to conclude positively on the forward and backward compatibility of their versions. However, unlike them, we found that the two versions could give different results.

There appear to be multiple explanations for this discrepancy. We mainly suspect the increased sensitivity of the updated questionnaire combined with our small number of participants to be the reason for this change in outcome. Peck and Gonzalez-Franco report that their revision amplified the dynamic range of the scale by reducing the number of items and embodiment dimensions. This reduction successfully maximized the inter-individual variability, but it is also possible that it smoothed out the intra-individual variability across the different dimensions.

From the revised questionnaire’s paper, it is not clear whether the sensitivity of the scale to intra-variability has become more critical. Regardless, reporting the differences we found seems of great importance for the community as they call for further discussion on the compatibility of the questionnaires. Future reviews of the literature on this scale will also need to be careful when examining results obtained with the 2018 and 2021 questionnaires as they may produce different conclusions.

Our study provided a consistent and replicable way to study environmental factors of the SoE and has raised the possibility that the world content may impact embodiment experiences in OST. However, the extent of this impact could not be addressed in its entire complexity and our analysis has to be read in the light of several limitations. First, as raised by several participants, the realism of the virtual content seems to have been limited by our rendering system. We attempted to provide physically correct parallax and reproduction of the lighting with custom implementations, but inaccuracies may have impacted the general embodiment experience. To avoid bias, we recommend that future studies pay special attention to the lighting coherence of the virtual rendering. Physics simulation of real-world settings is still at an experimental stage in most MR frameworks, but taking advantage of it could be an efficient solution for this.

Secondly, most of the aspects measured in our post-experiment questionnaire (e.g., immersion, workload) lacked control items. We decided to limit the number of items to reduce the length of our experiment, but we acknowledge that including separate questionnaires to evaluate these dimensions individually would have increased the robustness of our analysis. Similarly, the secondary analysis we made following the new guidelines of Peck and Gonzalez-Franco (2021) shows that our results depend on evaluation methods and we could not confirm their validity. We believe that it is important for the community to be aware of what such changes put at stake and to investigate their impacts in future work.

Regarding our experiment’s design, we would like to draw the reader’s attention to the scope of this study. The purpose of our experiment was not to make a comparison of the SoE in immersive and non-immersive settings (e.g., VR vs. MR) but to examine its variations within the specific context of OST experiences. This choice led us to design conditions where participants were in the real world at all times and could partly see their real hands when interacting. This situation is usual in most widespread OST headsets such as the Microsoft HoloLens or Magic Leap, as they render virtual content with transparency and latency.

Studies comparing the SoE in MR and VR were previously conducted by Škola and Liarokapis (2016) and Wolf and Mal (2020), but the impact of aspects specific to OST displays has yet to be evaluated. We looked at the impact of displaying different amounts of real and virtual objects together, which is a common situation in MR experiences. In the continuation of this study, it would be interesting to analyze how the SoE evolves during direct manipulation of real and virtual objects with virtual hands and to investigate the influence of the real body’s visibility. Reproducing this experiment with different kinds of objects (e.g., realistic, animated, tangible) could also be an interesting avenue to obtain more complete insight into the influence of their presence.

This paper presents an experiment exploring the influence of the presence of virtual/real content on the SoE in MR. We evaluated differences of SoE within three MR environments visualized with an Optical See-Through setup: one displaying only virtual objects, one displaying only real objects, and one mixing both kinds of objects. We found that users tended to get stronger ownership of virtual hands when they were viewed in the presence of both virtual and real objects mixed, as opposed to when the virtual hands were the only virtual content visible. Additionally, we identified potential correlations between the ownership of the avatar’s hands and user immersion as well as the perception of the virtual content integration in the real world. Our results suggest that the content of mixed environments should be taken into account during embodiment experiences. However, we could not conclude with confidence on the origins of the observed differences and their extent to larger populations. We believe that this experiment should nevertheless encourage the community to further investigate the idea that the avatar itself is not the only moderator of the SoE and that environmental context could also play a role in MR. Extended research is therefore needed to clarify the environment’s influence on the SoE, but also to exploit it for stronger ownership illusions. The creation of such illusions in MR finds great potential in psycho-social studies as well as in the medical field, notably for prosthesis simulation or psychotherapy. We hope our study will have raised questions and brought inspiration for future work to evaluate the environmental factors of the SoE.

The raw data supporting the conclusions of this article will be made available by the authors upon request, without undue reservation.

Written informed consent was obtained from the individuals for the publication of any potentially identifiable images or data included in this article.

All authors contributed to the conception and design of the user study. AG wrote the first draft of the manuscript, wrote the sections of the manuscript, and performed the data analysis. AL and MH reviewed and contributed to the final manuscript. All authors approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors wish to thank all the participants who kindly volunteered.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.679902/full#supplementary-material

Azhar, S., Kim, J., and Salman, A. (2018). “Implementing Virtual Reality and Mixed Reality Technologies in Construction Education: Students’ Perceptions and Lessons Learned,” in 11th Annual International Conference of Education, Research and Innovation, Seville, Spain, 3720–3730. doi:10.21125/iceri.2018.0183

Azuma, R. T. (1997). A Survey of Augmented Reality. Presence: Teleoperators & Virtual Environments 6, 355–385.

Bailey, J. O., Bailenson, J. N., and Casasanto, D. (2016). When Does Virtual Embodiment Change Our Minds?. Presence: Teleoperators and Virtual Environments 25, 222–233.

Bainbridge, W. S. (2004). Berkshire Encyclopedia of Human-Computer Interaction, Vol. 1. Great Barrington: Berkshire Publishing Group LLC.

Banakou, D., Hanumanthu, P. D., and Slater, M. (2016). Virtual Embodiment of white People in a Black Virtual Body Leads to a Sustained Reduction in Their Implicit Racial Bias. Front. Hum. Neurosci. 10, 601. doi:10.3389/fnhum.2016.00601

Banakou, D., Kishore, S., and Slater, M. (2018). Virtually Being Einstein Results in an Improvement in Cognitive Task Performance and a Decrease in Age Bias. Front. Psychol. 9, 917. doi:10.3389/fpsyg.2018.00917

Braun, N., Thorne, J. D., Hildebrandt, H., and Debener, S. (2014). Interplay of Agency and Ownership: The Intentional Binding and Rubber Hand Illusion Paradigm Combined. PLOS ONE 9, e111967. doi:10.1371/journal.pone.0111967

Costantini, M., and Haggard, P. (2007). The Rubber Hand Illusion: Sensitivity and Reference Frame for Body Ownership. Conscious. Cogn. 16, 229–240. doi:10.1016/j.concog.2007.01.001

Dewez, D., Fribourg, R., Argelaguet, F., Hoyet, L., Mestre, D., Slater, M., et al. (2019). “Influence of Personality Traits and Body Awareness on the Sense of Embodiment in Virtual Reality,” in 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 123–134. doi:10.1109/ISMAR.2019.00-12

Eckhoff, D., Cassinelli, A., and Sandor, C. (2019). Exploring Perceptual and Cognitive Effects of Extreme Augmented Reality Experiences. Beijing, China, 4.

El Jamiy, F., and Marsh, R. (2019). Survey on Depth Perception in Head-Mounted Displays: Distance Estimation in Virtual Reality, Augmented Reality, and Mixed Reality. IET Image Process. 13, 707–712.

Evans, G., Miller, J., Pena, M. I., MacAllister, A., and Winer, E. (2017). Evaluating the Microsoft HoloLens through an Augmented Reality Assembly Application.

Feuchtner, T., and Müller, J. (2017). Extending the Body for Interaction with Reality. In ACM CHI. Denver: ACM Press, 5145–5157. doi:10.1145/3025453.3025689

Fribourg, R., Argelaguet, F., Hoyet, L., and Lecuyer, A. (2018). Studying the Sense of Embodiment in VR Shared Experiences. In IEEE VR (Reutlingen: IEEE), 273–280. doi:10.1109/VR.2018.8448293

Gilbers, C. (2017). The Sense of Embodiment in Augmented Reality. Master’s Thesis. Utrecht (Netherlands): Utrecht University.

Gonzalez-Franco, M., and Peck, T. C. (2018). Avatar Embodiment. Towards a Standardized Questionnaire. Front. Robotics AI 5, 74. doi:10.3389/frobt.2018.00074

Haans, A., IJsselsteijn, W. A., and de Kort, Y. A. W. (2008). The Effect of Similarities in Skin Texture and Hand Shape on Perceived Ownership of a Fake Limb. Body Image 5, 389–394. doi:10.1016/j.bodyim.2008.04.003

Hachet, M., Bossavit, B., Cohé, A., and de la Rivière, J.-B. (2011). Toucheo: Multitouch and Stereo Combined in a Seamless Workspace. In ACM UIST. Santa Barbara: ACM Press, 587. doi:10.1145/2047196.2047273

Hilliges, O., Kim, D., Izadi, S., Weiss, M., and Wilson, A. (2012). HoloDesk: Direct 3d Interactions with a Situated See-Through Display. In Proceedings of the 2012 ACM annual conference on Human Factors in Computing Systems - CHI ’12. Austin: ACM Press, 2421. doi:10.1145/2207676.2208405

Hoang, T. N., Ferdous, H. S., Vetere, F., and Reinoso, M. (2018). Body as a Canvas: An Exploration on the Role of the Body as Display of Digital Information. In Proceedings of the 2018 on Designing Interactive Systems Conference 2018 - DIS ’18 (Hong Kong). China: ACM Press, 253–263. doi:10.1145/3196709.3196724

Hoyet, L., Argelaguet, F., Nicole, C., and Lécuyer, A. (2016). “Wow! I Have Six Fingers!”: Would You Accept Structural Changes of Your Hand in VR? Front. Robotics AI 3, 27. doi:10.3389/frobt.2016.00027

IJsselsteijn, W. A., de Kort, Y. A. W., and Haans, A. (2006). Is This My Hand I See before Me? the Rubber Hand Illusion in Reality, Virtual Reality, and Mixed Reality. Presence: Teleoperators and Virtual Environments 15, 455–464. doi:10.1162/pres.15.4.455

Javornik, A., Rogers, Y., Gander, D., and Moutinho, A. (2017). Magicface: Stepping into Character through an Augmented Reality Mirror. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 4838–4849.

Jeunet, C., Albert, L., Argelaguet, F., and Lécuyer, A. (2018). “Do You Feel in Control?”: towards Novel Approaches to Characterise, Manipulate and Measure the Sense of agency in Virtual Environments. IEEE Trans. visualization Comput. graphics 24, 1486–1495.

Kalckert, A., and Ehrsson, H. H. (2012). Moving a Rubber Hand that Feels like Your Own: A Dissociation of Ownership and Agency. Front. Hum. Neurosci. 6. doi:10.3389/fnhum.2012.00040

Kaneko, F., Shindo, K., Yoneta, M., Okawada, M., Akaboshi, K., and Liu, M. (2019). A Case Series Clinical Trial of a Novel Approach Using Augmented Reality that Inspires Self-Body Cognition in Patients with Stroke: Effects on Motor Function and Resting-State Brain Functional Connectivity. Front. Syst. Neurosci. 13, 76. doi:10.3389/fnsys.2019.00076

Kilteni, K., Groten, R., and Slater, M. (2012a). The Sense of Embodiment in Virtual Reality. Presence: Teleoperators and Virtual Environments 21, 373–387. doi:10.1162/PRES_a_00124

Kilteni, K., Maselli, A., Kording, K. P., and Slater, M. (2015). Over My Fake Body: Body Ownership Illusions for Studying the Multisensory Basis of Own-Body Perception. Front. Hum. Neurosci. 9, 141. doi:10.3389/fnhum.2015.00141

Kilteni, K., Normand, J.-M., Sanchez-Vives, M. V., and Slater, M. (2012b). Extending Body Space in Immersive Virtual Reality: A Very Long Arm Illusion. PLOS ONE 7, 1–15. doi:10.1371/journal.pone.0040867

Kokkinara, E., and Slater, M. (2014). Measuring the Effects through Time of the Influence of Visuomotor and Visuotactile Synchronous Stimulation on a Virtual Body Ownership Illusion. Perception 43, 43–58. doi:10.1068/p7545

Kress, B., and Starner, T. (2013). “A Review of Head-Mounted Displays (Hmd) Technologies and Applications for Consumer Electronics,” in Photonic Applications for Aerospace, Commercial, and Harsh Environments IV, 8720 (Baltimore, MD: International Society for Optics and Photonics), 87200A. doi:10.1117/12.2015654

Kurillo, G., Koritnik, T., Bajd, T., and Bajcsy, R. (2011). Real-time 3d Avatars for Tele-Rehabilitation in Virtual Reality. Med. Meets Virtual RealityNextMed 163 18, 290.

Lamounier, E., Lopes, K., Cardoso, A., and Soares, A. (2012). Using Augmented Reality Techniques to Simulate Myoelectric Upper Limb Prostheses. J. Bioengineer Biomed. Sci S 1, 2.

Lefcourt, H. M. (1981). Differentiating Among Internality, Powerful Others, and Chance. Research with the Locus of Control Construct 1. Publisher: Elsevier, 15–59.

Lin, L., and Jörg, S. (2016). Need a Hand?: How Appearance Affects the Virtual Hand Illusion. In Proceedings of the ACM Symposium on Applied Perception (Anaheim, California: ACM), 69–76. doi:10.1145/2931002.2931006

Lira, M., Egito, J. H., Dall’Agnol, P. A., Amodio, D. M., Gonçalves, s. F., and Boggio, P. S. (2017). The Influence of Skin Colour on the Experience of Ownership in the Rubber Hand Illusion. Scientific Rep. 7, 15745. doi:10.1038/s41598-017-16137-3

Lloyd, D. M. (2007). Spatial Limits on Referred Touch to an Alien Limb May Reflect Boundaries of Visuo-Tactile Peripersonal Space Surrounding the Hand. Brain Cogn. 64, 104–109. doi:10.1016/j.bandc.2006.09.013

Lugrin, J.-L., Latt, J., and Latoschik, M. E. (2015). Anthropomorphism and Illusion of Virtual Body Ownership. Geneva (Switzerland): The Eurographics Association. doi:10.2312/egve.20151303

Maselli, A., and Slater, M. (2013). The Building Blocks of the Full Body Ownership Illusion. Front. Hum. Neurosci. 7, 83. doi:10.3389/fnhum.2013.00083

Mölbert, S. C., Thaler, A., Mohler, B. J., Streuber, S., Romero, J., Black, M. J., et al. (2018). Assessing Body Image in Anorexia Nervosa Using Biometric Self-Avatars in Virtual Reality: Attitudinal Components rather Than Visual Body Size Estimation Are Distorted. Psychol. Med. 48, 642–653. doi:10.1017/S0033291717002008

Nimcharoen, C., Zollmann, S., Collins, J., and Regenbrecht, H. (2018). Is that Me? — Embodiment and Body Perception with an Augmented Reality Mirror. In IEEE ISMAR-Adjunct (Munich: IEEE), 158–163. doi:10.1109/ISMAR-Adjunct.2018.00057

Nishino, W., Yamanoi, Y., Sakuma, Y., and Kato, R. (2017). Development of a Myoelectric Prosthesis Simulator Using Augmented Reality. In 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (IEEE), 1046–1051.

Peck, T. C., and Gonzalez-Franco, M. (2021). Avatar Embodiment. A Standardized Questionnaire. Front. Virtual Reality 1. doi:10.3389/frvir.2020.575943

Rosa, N., van Bommel, J.-P., Hurst, W., Nijboer, T., Veltkamp, R. C., and Werkhoven, P. (2019). “Embodying an Extra Virtual Body in Augmented Reality,” in 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Osaka, Japan: IEEE), 1138–1139. doi:10.1109/VR.2019.8798055

Rossier, J., Rigozzi, C., and Berthoud, S. (2002). Validation de la version française de l’échelle de contrôle de Levenson (IPC), influence de variables démographiques et de la personnalité. Ann. Médico-psychologiques, revue psychiatrique 160, 138–148. doi:10.1016/S0003-4487(01)00111-1

Rotter, J. B. (1966). Generalized Expectancies for Internal versus External Control of Reinforcement. Psychol. Monogr. 80 (1), 1–28.

Schwind, V., Knierim, P., Tasci, C., Franczak, P., Haas, N., and Henze, N. (2017). “These Are Not My Hands!”: Effect of Gender on the Perception of Avatar Hands in Virtual Reality. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems - CHI ’17 (Denver, Colorado, USA: ACM Press), 1577–1582. doi:10.1145/3025453.3025602

Škola, F., and Liarokapis, F. (2016). Augmenting the Rubber Hand Illusion. Vis. Comp. 32, 761–770. doi:10.1007/s00371-016-1246-8

Slater, M. (2018). Immersion and the Illusion of Presence in Virtual Reality. Br. J. Psychol. 109, 431–433. doi:10.1111/bjop.12305

Spanlang, B., Normand, J.-M., Borland, D., Kilteni, K., Giannopoulos, E., Pomés, A., et al. (2014). How to Build an Embodiment Lab: Achieving Body Representation Illusions in Virtual Reality. Front. Robotics AI 1, 9. doi:10.3389/frobt.2014.00009

Suzuki, K., Garfinkel, S. N., Critchley, H. D., and Seth, A. K. (2013). Multisensory Integration across Exteroceptive and Interoceptive Domains Modulates Self-Experience in the Rubber-Hand Illusion. Neuropsychologia 51 (13), 2909–2917. doi:10.1016/j.neuropsychologia.2013.08.014

Tang, A., Biocca, F., and Lim, L. (2004). “Comparing Differences in Presence during Social Interaction in Augmented Reality versus Virtual Reality Environments: An Exploratory Study,” in Proceedings of the 7th Annual International Workshop on Presence, Valencia, Spain, 204–208.

Tsakiris, M., and Haggard, P. (2005). The Rubber Hand Illusion Revisited: Visuotactile Integration and Self-Attribution. J. Exp. Psychol. Hum. Perception Perform. 31 (1), 80–91. doi:10.1037/0096-1523.31.1.80

Waltemate, T., Gall, D., Roth, D., Botsch, M., and Latoschik, M. E. (2018). The Impact of Avatar Personalization and Immersion on Virtual Body Ownership, Presence, and Emotional Response. IEEE Trans. Visualization Comp. Graphics 24, 1643–1652. doi:10.1109/TVCG.2018.2794629

Wang, K., Iwai, D., and Sato, K. (2017). Supporting Trembling Hand Typing Using Optical See-Through Mixed Reality. IEEE Access 5, 10700–10708. doi:10.1109/ACCESS.2017.2711058

Wolf, E., and Mal, D. (2020). Body Weight Perception of Females Using Photorealistic Avatars in Virtual and Augmented Reality. Proc. IEEE ISMAR.

Keywords: sense of embodiment, augmented reality, user study, optical see-through, mixed reality

Citation: Genay A, Lécuyer A and Hachet M (2021) Virtual, Real or Mixed: How Surrounding Objects Influence the Sense of Embodiment in Optical See-Through Experiences? Front. Virtual Real. 2:679902. doi: 10.3389/frvir.2021.679902

Received: 12 March 2021; Accepted: 20 May 2021; Published: 04 June 2021.

Edited by:

Stephan Lukosch, Human Interface Technology Lab New Zealand (HIT Lab NZ), New Zealand

Reviewed by:

Tabitha C. Peck, Davidson College, United States

Copyright © 2021 Genay, Lécuyer and Hachet. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adélaïde Genay, adelaide.genay@inria.fr

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
