- ¹Department of Psychology, University of Utah, Salt Lake City, UT, United States

- ²Department of Electrical Engineering and Computer Science, Vanderbilt University, Nashville, TN, United States

Measures of perceived affordances—judgments of action capabilities—are an objective way to assess whether users perceive mediated environments similarly to the real world. Previous studies suggest that judgments of stepping over a virtual gap using augmented reality (AR) are underestimated relative to judgments of real-world gaps, which are generally overestimated. Across three experiments, we investigated whether two factors associated with AR devices contributed to the observed underestimation: weight and field of view (FOV). In the first experiment, observers judged whether they could step over virtual gaps while wearing the HoloLens (virtual gaps) or not (real-world gaps). The second experiment tested whether weight contributes to underestimation of perceived affordances by having participants wear the HoloLens during judgments of both virtual and real gaps. We replicated the effect of underestimation of step capabilities in AR as compared to the real world in both Experiments 1 and 2. The third experiment tested whether FOV influenced judgments by simulating a narrow (similar to the HoloLens) FOV in virtual reality (VR). Judgments made with a reduced FOV were compared to judgments made with the wider FOV of the HTC Vive Pro. The results showed relative underestimation of judgments of stepping over gaps in narrow vs. wide FOV VR. Taken together, the results suggest that there is little influence of weight of the HoloLens on perceived affordances for stepping, but that the reduced FOV of the HoloLens may contribute to the underestimation of stepping affordances observed in AR.

1. Introduction

Augmented reality (AR) displays have significant potential for improving task performance in large-scale, real-world spaces because they superimpose computer-generated objects onto the real environment. In navigational tasks, virtual objects could serve as cues for wayfinding in an unfamiliar city or enhance memory for locations previously encountered; in architectural design, AR could allow for the virtual placement of large features or structures within a space without physically building or moving them. Importantly, the utility of these applications relies on the ability of users to perceive the scale (i.e., absolute size or distance) of the virtual objects as if they were real objects in order to interact naturally and accurately with them. There are multiple ways to assess the perception of scale in mediated or mixed-reality environments. Numerous studies have measured distance perception to virtual targets, typically finding some underestimation relative to the real world (Dey et al., 2018). Another approach, and the focus of this paper, is to measure an observer's perceived affordances of the virtual target. Affordances are perceived action capabilities of environmental features that are directly related to properties of the observer's body (Gibson, 1979). For example, an object is graspable if it fits within the constraints of the span of the hand.

There is a growing body of literature using perceived affordances as objective measures of perception of virtual spaces, much of which has suggested good similarity or perceptual fidelity between perception of real and virtual targets, but also has identified some systematic differences (Geuss et al., 2010; Lin et al., 2012, 2013, 2015; Regia-Corte et al., 2013; Stefanucci et al., 2015; Pointon et al., 2018a,b; Creem-Regehr et al., 2019; Bhargava et al., 2020b; Gagnon et al., 2020). Pointon et al. (2018b) found that observers' judgments of passing through apertures did not differ for AR and real-world targets, but that judgments of stepping over an AR gap were underestimated relative to the real world. One notable difference between stepping over gaps and passing through apertures is the region of space in which the action is performed. Whereas passing through an aperture typically involves viewing and acting on an environmental feature that is farther from the viewer in action space, stepping over a gap requires judging a feature in personal space (Cutting and Vishton, 1995) while looking directly down at one's feet. In the current series of three experiments, we examined two possible reasons for the underestimation in AR relative to the real world that relate to perceiving AR gaps on the ground, close to the viewer. First, we consider the impact of wearing the HoloLens, given that its weight could affect the head rotations needed to view the gap on the ground. Most, if not all, previous experiments using head-worn AR displays face the confound that the display is worn in AR but not in the real world. Second, we ask whether differences in estimates could be attributed to the restricted field of view (FOV), which limits the extent of the AR feature that can be viewed at one time, particularly at close distances. The need to integrate the small visible portions of the gap to perceive its full extent could affect perceived capabilities to cross.
Given that the FOV in AR cannot be widened in current technologies to test whether restricted FOV affects estimates, we use virtual reality (VR) to manipulate FOV. This approach is not intended to simulate the AR experience, but rather to compare the gap estimation task performance across wide and restricted fields of view.

Affordances are judgments of action capabilities that are scaled to an observer's body dimensions and the surrounding environment (Gibson, 1979). By evaluating affordances in the real world and in mediated environments, we can assess the perceptual fidelity of mediated environments such as AR. Since the introduction of the theory of affordances by Gibson (1979), there has been extensive research using affordance judgments as an action-based measure of perception both in the real world and in virtual environments. Two common affordances are judgments of being able to pass through an aperture and stepping over a gap. For the passing-through affordance, viewers are asked to judge whether they think they could walk through an aperture without turning their shoulders. Warren and Whang (1987) established that, in real-world settings, judgments are scaled to viewers' shoulder widths in a consistent way. Viewers generally allow for a margin of safety when making these judgments, such that they judge the smallest passable aperture to be 10–20% greater than their shoulder width (Stefanucci and Geuss, 2009; Geuss et al., 2010; Franchak and Adolph, 2012). When making a gap-crossing judgment, viewers are asked to judge whether they think they could successfully step over a gap while keeping their back foot on the ground and without falling or jumping. This affordance can be reliably scaled to body dimensions such as leg length, step length, and eye height (Jiang and Mark, 1994). Studies in the real world generally show some overestimation of the maximum gap width that is crossable in children and adults when scaled to their actual step (Plumert and Schwebel, 1997; Creem-Regehr et al., 2019), but underestimation was found in an early study when judging crossing at a height (Jiang and Mark, 1994).
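As a worked illustration of the margin of safety described above, the following Python sketch converts a shoulder width into the smallest aperture that would be judged passable. The function name and the 0.45 m shoulder width are hypothetical; only the 10–20% range comes from the cited studies.

```python
def smallest_passable_aperture(shoulder_width_m: float, margin: float) -> float:
    """Aperture width judged just passable, given a fractional safety margin.

    A margin of 0.10 means the aperture must be 10% wider than the shoulders.
    """
    return shoulder_width_m * (1.0 + margin)


# Hypothetical observer with 0.45 m shoulders, spanning the reported 10-20% range
shoulder = 0.45
low = smallest_passable_aperture(shoulder, 0.10)
high = smallest_passable_aperture(shoulder, 0.20)
print(f"{low:.3f} m to {high:.3f} m")
```

Expressing the judgment as a ratio to a body dimension is what makes affordance thresholds comparable across observers of different sizes.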

The majority of research comparing affordance judgments in the real world to virtual environments indicates that judgments do not differ between the real world and VR when stereoscopic vision is enabled (Geuss et al., 2010, 2015; Lin et al., 2015; Stefanucci et al., 2015; Bhargava et al., 2020a). These results were consistent across different types of affordances including passing through an aperture (Geuss et al., 2010, 2015; Bhargava et al., 2020a), object graspability and reaching through an aperture (Stefanucci et al., 2015), and stepping over a pole and stepping off a ledge (Lin et al., 2015), and across different display types (Geuss et al., 2015; Stefanucci et al., 2015). However, some studies have found differences in affordance judgments between the real world and VR. For example, in Ebrahimi et al. (2018) reachability judgments were closer to actual reach in VR compared to the real world. Recently, Bhargava et al. (2020b) investigated passability judgments in VR and the real world and found that aperture widths had to be larger in VR before they were judged to be passable compared to the real world. However, judgments were made after participants walked to the aperture rather than from a static viewpoint.

Research on affordances in AR is relatively new (Pointon et al., 2018a,b; Wu et al., 2019; Gagnon et al., 2020). Pointon et al. (2018a) assessed whether the passing-through affordance could be used as a measure of perceptual fidelity in AR. Participants completed one block of trials where they made verbal yes/no judgments on whether they thought they could pass through virtual poles presented with the Microsoft HoloLens and a second block of trials where they made distance judgments to the same poles with a blind walking task. These results were compared to previous work that used the same poles and affordance judgment task in VR and in the real world (Geuss et al., 2010). Consistent with previous work (Jones et al., 2016), blind walking judgments of distance were fairly accurate. Moreover, the passing-through affordance judgments were similar to those found in VR and the real world (Geuss et al., 2010). These results provide support for using affordance judgments as a measure of perceptual fidelity in AR.

Pointon et al. (2018b) extended their prior work to investigate two types of affordance judgments in AR: passing through an aperture and stepping over a gap. They directly compared the AR affordance judgments to judgments of the same tasks in the real world. The passing-through task was similar to Pointon et al. (2018a). In the AR condition, virtual poles were presented with the HoloLens. In the real-world condition, the aperture was created by real poles that resembled those modeled in AR. For the stepping-over affordance, in the AR condition, a virtual gap was presented on the ground using the HoloLens. In the real-world condition, a gap was created by adjusting fabric on the ground. Results from the passing-through experiment indicated that there was no difference in affordance judgments in AR compared to the real world. However, in the stepping-over experiment, participants judged the maximum gap that they could cross to be smaller in AR than in the real world. That is, participants underestimated their ability to cross a gap in AR compared to the real world.

A number of factors could contribute to gap-crossing affordance judgments differing between AR and the real world while passing-through affordances did not differ. One factor could be the area of space in which the target affordance is located. The aperture for passing-through judgments was located in action space but the gap for the crossing judgments was located in personal space, and different visual depth cues are available in these different regions (Cutting and Vishton, 1995). Another factor is the limited FOV of the HoloLens. The distance to the target affordance is related to the amount of visual information available within the FOV. Because the aperture for the passing-through judgment was located 3 meters from the viewer, the width of the aperture was always contained within the HoloLens' FOV. However, the gap was located at the viewers' feet, and wider gaps did not always fit within the HoloLens' FOV. This resulted in the need to integrate visual information through scanning of the gap.
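The FOV argument above can be made concrete with simple viewing geometry. The sketch below, assuming an idealized point eye, compares the horizontal visual angle of an aperture seen at 3 m with the vertical visual angle spanned by a gap on the floor. The aperture width, eye height, and distance from the eye to the near edge of the gap are hypothetical values chosen for illustration, not measurements from the studies. Because the angle between two lines of sight does not change when the head rotates, a gap whose angular extent exceeds the vertical FOV cannot be seen all at once with the head upright, no matter where the viewer looks.

```python
import math


def aperture_horizontal_angle_deg(width_m, distance_m):
    """Horizontal visual angle of an aperture of a given width seen head-on."""
    return math.degrees(2 * math.atan((width_m / 2) / distance_m))


def gap_vertical_angle_deg(eye_height_m, near_edge_m, gap_width_m):
    """Vertical visual angle between the near and far edges of a floor gap.

    Edge positions are horizontal distances from the eye; the angle is the
    difference between the declinations of the two edges below eye level.
    """
    near = math.atan2(eye_height_m, near_edge_m)
    far = math.atan2(eye_height_m, near_edge_m + gap_width_m)
    return math.degrees(near - far)


HOLOLENS_FOV_H, HOLOLENS_FOV_V = 30.0, 17.0  # approximate values, see Section 2.2

# Hypothetical: 0.8 m aperture at 3 m; eye height 1.6 m; near edge 0.2 m ahead of the eye
print(round(aperture_horizontal_angle_deg(0.8, 3.0), 1))
for w in (0.60, 1.50):
    print(w, round(gap_vertical_angle_deg(1.6, 0.2, w), 1))
```

Under these assumed values, the aperture spans roughly 15°, well inside the 30° horizontal FOV, whereas even a 0.60 m gap already exceeds the 17° vertical FOV, so the gap has to be scanned and integrated.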

It is also possible that the weight of the HoloLens contributed to the different findings. In Pointon et al. (2018b), participants did not wear the HoloLens for the real-world conditions. This might not have affected the passing-through affordance because making the passability judgment did not require much head movement since the aperture always fit within the FOV. However, due to the limited FOV of the HoloLens, making the gap-crossing judgment usually required the participant to look down and move their head to scan the entire gap width. Differences in head rotations with and without the HoloLens could have led to different visual or proprioceptive feedback about the gap. In addition to the effects on head movements, the added weight of the HoloLens could have reduced the perceived capability of stepping in AR because of the additional energetic demands on the body (Proffitt, 2006).

We draw on the VR literature to consider whether the weight of the HoloLens and/or the restricted FOV contribute to the perception of gap-crossing judgments in AR, as this question has not yet been explored with AR. A large body of work has investigated the perception of distance in virtual environments; typically, distances are underestimated in virtual environments compared to the real world (see Renner et al., 2013; Creem-Regehr et al., 2015 for reviews). A number of studies have explored possible reasons for this underestimation, including physical properties of the head-mounted display (HMD) such as restricted FOV and weight and inertial properties of the headset. Willemsen et al. (2009) created a mock HMD that mimicked the mass and moments of inertia and FOV of a real HMD but allowed for real-world viewing. They found that, compared to normal real-world viewing, the mock HMD resulted in underestimation of distance. However, wearing a headband with the same distribution of mass but that allowed for full FOV did not differ from real-world viewing, suggesting there may be an interaction between the weight of the device and the restricted FOV. Previous literature investigating the effects of FOV on space perception has mainly focused on distance perception. Restricting FOV in the real world often leads to the underestimation of distance to real targets (Wu et al., 2004; Lin et al., 2011; Li et al., 2015), although this effect was not found in Knapp and Loomis (2004). Comparing different FOV extents in VR HMDs also indicates that a larger FOV results in more accurate distance judgments (Jones et al., 2012, 2016; Li et al., 2015; Buck et al., 2018), but some work (Creem-Regehr et al., 2005) suggests that this might depend on whether or not head movement is restricted.

The limited FOV experience in optical see-through AR devices like the HoloLens is different from the FOV experience in VR HMDs and in real-world simulated FOV conditions. In optical see-through devices, the viewer still has access to their full visual field; visual information from the real world is still available in the periphery. The restricted FOV only applies to the virtual objects projected by the device. In contrast, VR HMDs and common real-world FOV restriction methods limit the visual information to only what is visible within the FOV aperture. Thus, it is possible that FOV effects in optical see-through AR might differ from the previous effects found in VR because the AR experience provides a more cue-rich environment. Jones et al. (2011) investigated this possibility by comparing distance judgments to a virtual target viewed against a real-world environment in two optical see-through HMD conditions. In one condition, the HMD was worn as normal, with peripheral information available. In the other condition, the periphery of the HMD was blocked. They found that distance judgments were underestimated in the occluded HMD condition but not in the normal viewing condition, indicating that the availability of visual information in the periphery may impact distance judgments in AR optical see-through devices.

There is little research on how FOV might impact affordance judgments, particularly in AR. Recent work by Gagnon et al. (2020) examined whether the amount of visual information available in the HoloLens FOV affected passing-through affordance judgments. The amount of visual information available was manipulated by judging affordances at two different distances. At a far distance (similar to Pointon et al., 2018a,b), the aperture was always visible within the FOV. When making judgments at a closer distance (less than 1 m from the aperture), the width of the aperture did not always fit within the FOV. Therefore, at the close distance, viewers would need to rotate their head to view the extent of the aperture and integrate the visual information. Distance from the aperture (correlated with visibility of the aperture) had an effect on passability judgments. Viewers judged that they could pass through smaller apertures (closer to shoulder width) at the close distance, when the aperture did not completely fit within the FOV. In Gagnon et al. (2020), the FOV manipulation was tied to viewing distance. We take a different approach in the current study with the affordance of gap-stepping, directly manipulating FOV with a VR HMD that could vary the FOV to resemble the restriction in AR, but also widen it to fully test effects of FOV on stepping judgments in the same technology.

In the present study we conducted three experiments to investigate two factors—weight of the HoloLens and restricted FOV—that may have contributed to the underestimation of gap-crossing judgments in AR relative to the real world. First, in Experiment 1, we set out to replicate the gap-crossing results from Pointon et al. (2018b) in a different laboratory. We hypothesized that observers would underestimate gap-stepping abilities with AR gaps presented through the HoloLens relative to matched real-world gaps, given prior work. Then, in Experiment 2, we explored whether the weight of the HoloLens contributed to the affordance differences by having participants wear the HoloLens during the real-world trials as well as during the AR trials. We hypothesized that wearing the HoloLens in the real world would reduce the difference between AR and real-world estimates. In Experiment 3, we used immersive virtual reality (as displayed through the HTC Vive Pro) to manipulate FOV, given it is not possible to increase the FOV in the HoloLens. We compared gap-stepping estimates with the full FOV of the HMD to a restricted FOV HMD condition. We hypothesized that gap-stepping estimates would be lower with restricted VR FOV compared to the wider VR FOV.

2. Experiment 1

In the first experiment, we attempted to replicate the findings of Pointon et al. (2018b) in a different laboratory. Judgments of gap-crossing were collected within participants with the HoloLens on and displaying virtual gaps in one condition and with no HoloLens while viewing real-world gaps in the other condition. Given the findings of Pointon et al. (2018b), we hypothesized that participants would underestimate their ability to step over gaps in the AR condition compared to the real world.

2.1. Participants

Twenty participants were recruited from an introductory psychology course at the University of Utah and were compensated with course credit. One participant was excluded due to missing data. The remaining 19 participants (13 female, with ages ranging from 18 to 27, M = 20.47, SD = 2.22) were included in the analyses. All participants had normal or corrected-to-normal vision and gave consent for their participation.

2.2. Materials

Participants used the Microsoft HoloLens (version 1) to view the AR-generated gaps. The FOV of the HoloLens is approximately 30° × 17° and the device weighs 579 g. The experimenter controlled the progression of the AR trials using the HoloLens' wireless controller. Participants performed the experimental tasks in a windowless room (4 × 9.5 m). In the real-world condition, white theater fabric was placed on the ground and folded to match the various gap widths of the experiment. A self-retracting tape measure was used by the experimenter to fold the fabric to the designated trial gap width.

2.3. Procedure

Upon arrival, participants provided informed consent and completed a random-dot stereogram test to ensure they had functioning stereo vision. They were then given an overview of the experiment and the task instructions.

Participants completed two blocks of trials where they made gap-crossing judgments in a real-world (RW) condition block and an AR condition block. The order of the blocks was counterbalanced across participants. Each block consisted of 21 trials. Gap widths ranged from 0.60 to 1.50 m in 0.15 m increments, resulting in 7 gap widths. Each gap width was presented to the participant three times. Gap width presentation order was randomized such that the same width never occurred twice in a row. Participants completed one block of trials at one end of the room and walked to the other end of the room to complete the other block of trials.
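The randomization constraint above (7 widths × 3 repetitions = 21 trials, with no width presented twice in a row) can be satisfied in several ways. The following is a minimal sketch using rejection sampling, which simply reshuffles until the constraint holds; the function name and the seeded generator are illustrative assumptions, not the procedure the authors necessarily used.

```python
import random


def make_trial_order(widths, repeats, rng):
    """Shuffle widths * repeats trials so that no width appears twice in a row.

    Rejection sampling: reshuffle until no two adjacent trials share a width.
    """
    trials = [w for w in widths for _ in range(repeats)]
    while True:
        rng.shuffle(trials)
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)


# 0.60 to 1.50 m in 0.15 m steps gives 7 widths; round() removes float drift
widths = [round(0.60 + 0.15 * i, 2) for i in range(7)]
order = make_trial_order(widths, repeats=3, rng=random.Random(1))
print(len(order), order[:5])
```

With 21 trials, roughly one random shuffle in seven or eight satisfies the constraint, so rejection sampling terminates quickly and, unlike greedy placement, never gets stuck with only repeated widths left at the end.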

In the RW condition, participants did not wear the HoloLens. For each trial, participants faced away from a piece of white theater fabric while the experimenter(s) folded the fabric to the designated gap width (see Figure 1). Once ready, the experimenter signaled the participant to turn around and view the gap. To make a gap-crossing judgment, participants decided whether they would be able to cross the gap (with a complete step that cleared the gap) without jumping or falling, and then gave a verbal “yes” or “no” response to the experimenter.¹ After making their judgment, they turned around to face the wall while the experimenter adjusted the fabric for the next trial. In the AR condition, participants were fitted with the HoloLens, which projected a virtual white gap on the floor for each trial (see Figures 2, 3). At the start of each trial, a 6-digit number was presented on the AR display when the participant looked straight ahead. Once they had read the number to the experimenter, they could look at the ground to view the AR gap and make their judgment. We did this to ensure that the participant looked away from the ground between trials. After making their judgment, they were instructed to look straight ahead while the experimenter advanced to the next trial using the wireless clicker.

Figure 1. Participant making a gap affordance judgment in the Experiment 2 real-world condition. The HoloLens was not worn in the Experiment 1 real-world condition.

Figure 3. A composite image depicting the AR condition in Experiments 1 and 2 from a third-person point of view.

After completing both conditions, the following measurements were collected: standing height, eye height, leg length, and step length. Leg length was measured from the ground to the pelvic bone. Step length was measured by instructing participants to take the largest step they could without jumping or falling while keeping their back foot on the ground. Each participant's step length was measured three times, from trailing toe to leading heel, and then averaged. Finally, participants were asked debriefing questions to gauge their experience and their perception of the purpose of the study.

2.4. Results and Discussion

Gap distances were scaled to participants' average step length (by dividing the trial gap distance by step length), and then centered at zero. Thus, a scaled gap width of 0 is the largest gap participants would physically be able to cross (gap width equal to step length). A scaled gap width greater than zero indicates a gap that would not be crossable, while scaled gap widths less than zero indicate crossable gaps.
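A minimal sketch of this scaling, assuming that "centered at zero" means subtracting 1 from the gap-to-step ratio (so that a gap equal to the mean step length maps to 0). The step-length measurements below are hypothetical.

```python
def scaled_gap_width(gap_m, step_lengths_m):
    """Gap width scaled to mean step length and centered at zero.

    Assumption: centering subtracts 1 from the ratio, so 0 means the gap
    equals the mean step length; negative values are physically crossable.
    """
    mean_step = sum(step_lengths_m) / len(step_lengths_m)
    return gap_m / mean_step - 1.0


steps = [1.18, 1.20, 1.22]  # hypothetical three step-length measurements (m)
for gap in (0.60, 1.20, 1.50):
    print(gap, round(scaled_gap_width(gap, steps), 3))
```

For this hypothetical observer, a 0.60 m gap is half their step length (scaled width −0.5), while a 1.50 m gap lies beyond their actual capability (scaled width 0.25).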

We analyzed our data with a binomial mixed model, which is a form of generalized regression that is appropriate for non-normally distributed outcomes and repeated-measures designs (Raudenbush and Bryk, 2002). Specifically, we used the glmer function in the lme4 package (Bates et al., 2015) for R to conduct a logistic regression model using a binomial random component and logit link function. In our model, condition (RW vs. AR), order (AR first vs. AR second), the interaction between condition and order, and scaled gap width were included as fixed-effect predictors; condition and scaled gap width varied within participants, whereas order varied between participants. A random intercept was included to account for differences between participants. The outcome measure was whether participants said they could step over a given gap. Thus, positive effects indicate an increased likelihood of judging a gap as crossable, whereas negative effects indicate a decreased likelihood of judging a gap as crossable. The resulting model was

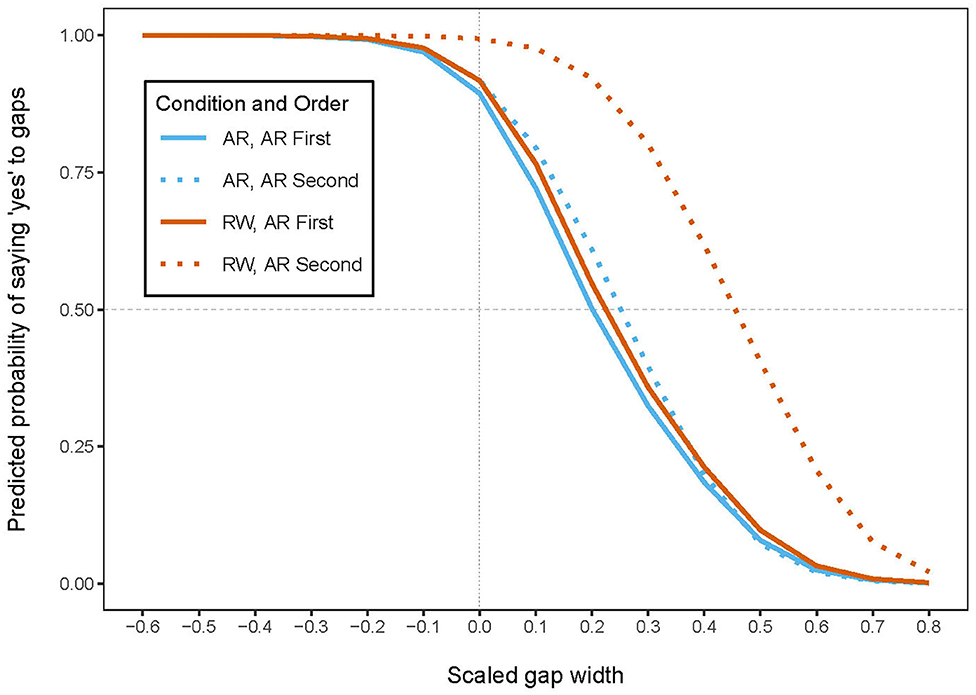

where p is the predicted probability that the gap is crossable. For each condition, we can straightforwardly transform this into an equation that indicates the predicted probability of crossing in terms of the scaled gap width. These curves are plotted in Figure 4.
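To show how a fitted logit equation becomes a psychometric curve and a point of subjective equality (PSE), the sketch below applies the inverse logit and solves for the scaled gap width at which p = 0.50. For a single condition and order, the model reduces to logit(p) = b0 + b1·x, so the PSE is −b0/b1. The slope is the scaled-gap-width coefficient reported for Experiment 1; the intercept is purely hypothetical, since the combined condition-specific intercepts are contained in the model equation above and are not restated in the text.

```python
import math


def predicted_probability(intercept, slope, x):
    """Inverse logit: probability of a "yes" response at scaled gap width x."""
    return 1.0 / (1.0 + math.exp(-(intercept + slope * x)))


def pse(intercept, slope):
    """Scaled gap width at which p = 0.50, i.e., where the logit equals zero."""
    return -intercept / slope


SLOPE = -14.07  # scaled-gap-width coefficient reported for Experiment 1
b0_example = 1.0  # HYPOTHETICAL combined intercept for one condition/order cell

x_pse = pse(b0_example, SLOPE)
print(round(x_pse, 3), predicted_probability(b0_example, SLOPE, x_pse))
```

A positive PSE means the observer would say "yes" to gaps slightly wider than their actual step (overestimation); a negative PSE indicates underestimation. Because the slope is shared across conditions in this model, differences in PSE between conditions come entirely from the intercept terms.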

Figure 4. Experiment 1 results. Predicted probability of saying “yes” to gap crossing as a function of scaled gap width for the AR condition (blue lines) and real-world condition (RW; orange lines) at two condition orders [AR experienced first (solid lines) and AR experienced second (dotted lines)]. The dotted line at x = 0.0 indicates when gap width = actual step length. The point of subjective equality (PSE) is the value of scaled gap width (on the x-axis) at which the curve intersects with y = 0.50.

We found a main effect of condition such that participants in the RW condition had an increased likelihood of judging gaps as crossable (B = 1.59, SE = 0.33, p < 0.001). However, this was qualified by an interaction between condition and order such that the difference between the RW and AR conditions was larger when participants experienced the AR condition second (B = 2.57, SE = 0.64, p < 0.001). More specifically, when participants experienced the AR condition first, there was no significant difference in their judgments between the AR and RW conditions (B = 0.30, SE = 0.39, p = 0.438). When participants experienced the AR condition second, their likelihood of judging a gap as crossable was significantly greater in the RW condition than in the AR condition (B = 2.87, SE = 0.52, p < 0.001). Lastly, we found that participants' likelihood of judging a gap as crossable decreased as gap width increased (B = −14.07, SE = 1.25, p < 0.001). The results suggest that participants did, on average, judge real-world gaps as more crossable, but this effect was driven mostly by the order in which the participants experienced the conditions (see Figure 4).
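Because the coefficients are on the log-odds scale, exponentiating them gives odds ratios, which can be easier to interpret. The sketch below does this for the Experiment 1 estimates and checks that the reported interaction equals the difference between the two simple effects. It also checks that the main effect is close to the average of the simple effects, which would be expected if order was effect-coded; the coding scheme is an assumption, as it is not stated in the text.

```python
import math

# Log-odds coefficients reported for Experiment 1
b_condition = 1.59           # RW vs. AR main effect
b_interaction = 2.57         # condition x order interaction
b_rw_vs_ar_ar_first = 0.30   # simple effect of condition, AR first
b_rw_vs_ar_ar_second = 2.87  # simple effect of condition, AR second


def odds_ratio(b):
    """Multiplicative change in the odds of answering "yes"."""
    return math.exp(b)


for label, b in [("main effect", b_condition),
                 ("AR first", b_rw_vs_ar_ar_first),
                 ("AR second", b_rw_vs_ar_ar_second)]:
    print(f"{label}: OR = {odds_ratio(b):.2f}")

# The interaction is the difference between the two simple effects
print(round(b_rw_vs_ar_ar_second - b_rw_vs_ar_ar_first, 2), b_interaction)
```

Read this way, the odds of judging a gap crossable were several times higher in the RW condition overall, but this difference was concentrated in the group that experienced AR second.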

3. Experiment 2

In the first experiment, we replicated the findings of Pointon et al. (2018b): on average, participants underestimated their ability to step over gaps when the gaps were presented in AR as compared to the real world. This difference in estimates could be due to many factors. In this experiment, we directly test whether the weight and inertial properties of the HoloLens may have contributed to the difference by having participants wear it in both conditions. The HoloLens was powered off for real-world viewing. Examination of this question in VR suggests a possible influence of combined HMD properties of FOV, weight, and inertial properties on distance estimation that could be due to an influence on head rotations or scanning patterns (Willemsen et al., 2009; Grechkin et al., 2010). If these mechanical properties of the HoloLens influence perceptual estimations in AR, then we would expect to see a reduction in the difference between RW and AR judgments when wearing the HoloLens in both conditions.

3.1. Participants

Twenty-four participants were recruited for course credit in an introductory psychology course at the University of Utah. Three participants were not included in the final analyses because they experienced technical issues (N = 2) or did not understand the task instructions (N = 1). The remaining 21 participants (10 female, with ages ranging from 18 to 30, M = 20, SD = 2.63) were included in the analyses. All participants had normal or corrected-to-normal vision and gave consent for their participation.

3.2. Materials and Procedure

The materials were identical to Experiment 1. The Microsoft HoloLens (version 1) was used to present AR gaps, and the real-world gaps were presented with fabric placed on the ground. The procedure was also identical to Experiment 1, except that participants wore the HoloLens in both the AR and RW conditions (see Figures 1–3).

3.3. Results and Discussion

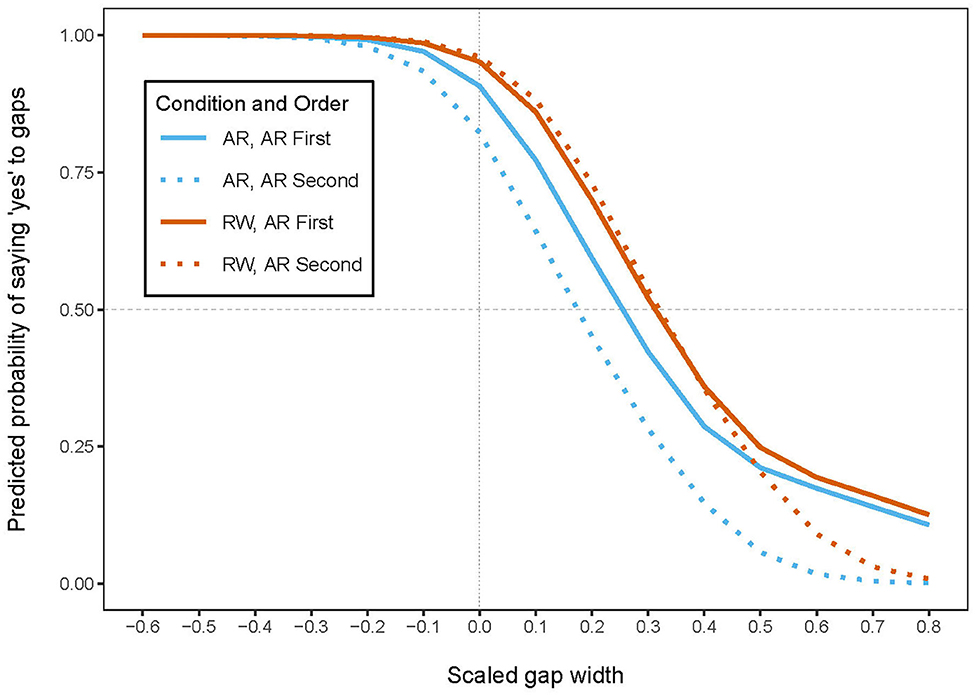

Our analysis method was identical to that of Experiment 1. The logit link function was found to be

and the resulting psychometric curves are shown in Figure 5. We replicated the main effect of condition: participants' likelihood of judging a gap as crossable was greater in the RW condition than in the AR condition (B = 1.35, SE = 0.28, p < 0.001). The interaction between condition and order was also significant, indicating that the difference between the RW and AR conditions was larger when participants experienced the AR condition second (B = 1.15, SE = 0.53, p = 0.031). Further inspection indicated that when participants experienced the AR condition first, they showed a significantly greater likelihood of judging gaps as crossable in the RW condition than in the AR condition (B = 0.77, SE = 0.37, p = 0.037). When participants experienced the AR condition second, this RW advantage was even larger (B = 1.93, SE = 0.40, p < 0.001). Finally, we replicated the effect of gap width, such that increases in gap width were associated with decreases in the likelihood that participants judged gaps as crossable (B = −13.26, SE = 1.12, p < 0.001). Overall, the results suggest that participants judged gaps as more crossable in the RW condition than in the AR condition, and that experiencing the AR condition second exaggerated this difference (see Figure 5). The replication of the underestimation in AR relative to the real world, even when participants wore the HoloLens in both conditions, suggests that the weight and inertial properties of the HoloLens are not a strong explanation for the relative underestimation.

Figure 5. Experiment 2 results. Predicted probability of saying “yes” to gap crossing as a function of scaled gap width for the AR condition (blue lines) and real-world condition (RW; orange lines) at two condition orders [AR experienced first (solid lines) and AR experienced second (dotted lines)]. The dotted line at x = 0.0 indicates when gap width = actual step length. The point of subjective equality (PSE) is the value of scaled gap width (on the x-axis) at which the curve intersects with y = 0.50.

4. Experiment 3

Experiment 2 indicates that the weight and inertial properties of the HoloLens do not seem to play a large role in the underestimation observed in gap-crossing estimates made in the AR condition. Given the narrow FOV of the HoloLens, participants could have had trouble integrating the full extent of the gap when it was not able to be seen in its entirety. This inability to fully evaluate the size of the gap could have led to more conservative (relatively underestimated) judgments of crossing capability. To test whether restricted FOV is a factor in the underestimation of gap crossing, we manipulated the FOV for viewing gaps in VR. We acknowledge that testing for an effect of FOV in VR does not completely address whether the effects observed in the previous experiments were due to the restricted FOV in AR. However, current AR technologies do not allow for a widening of the FOV to adequately test its effects on gap-crossing estimates. So, we decided to test FOV in VR because it allowed for a comparison between a wider FOV and a restricted FOV. Using the HTC Vive Pro, we implemented a restricted FOV in a “narrow” viewing condition and compared crossing estimates made in that condition to a “wide” viewing condition, in which gaps were viewed with the full FOV (100° horizontal by 110° vertical) available in the Vive Pro. If the underestimation of gap affordances found in Pointon et al. (2018b) and Experiments 1 and 2 herein is partially due to a restriction of FOV, then we should observe a difference between the narrow and wide FOV in VR, with lower estimations for stepping capabilities in the narrow FOV condition.

4.1. Participants

Eight participants were recruited from the University of Utah and nine from Vanderbilt University, for a total of 17 participants (8 female; ages 19 to 48, M = 30.94, SD = 9.10). All participants were volunteers, had normal or corrected-to-normal vision, and gave consent for their participation.

4.2. Materials

This experiment was presented in a virtual environment using the HTC Vive Pro. As in Experiments 1 and 2, we manipulated the width of the gap that participants judged whether they could step across, and we added a manipulation of FOV. The HTC Vive Pro has an AMOLED display with a resolution of 2880 × 1600 pixels (1440 × 1600 pixels per eye) and a 100° horizontal by 110° vertical FOV. In a within-subjects manipulation, participants viewed the virtual environment through the normal FOV of the Vive Pro in one condition and through a restricted FOV in the other. The restricted FOV was 50° horizontal by 20° vertical, slightly larger than that of the HoloLens, and the periphery of the display outside this window was black in the narrow FOV condition. Gap widths varied from 0.45 to 1.50 m in 0.15 m increments, similar to Experiment 1.

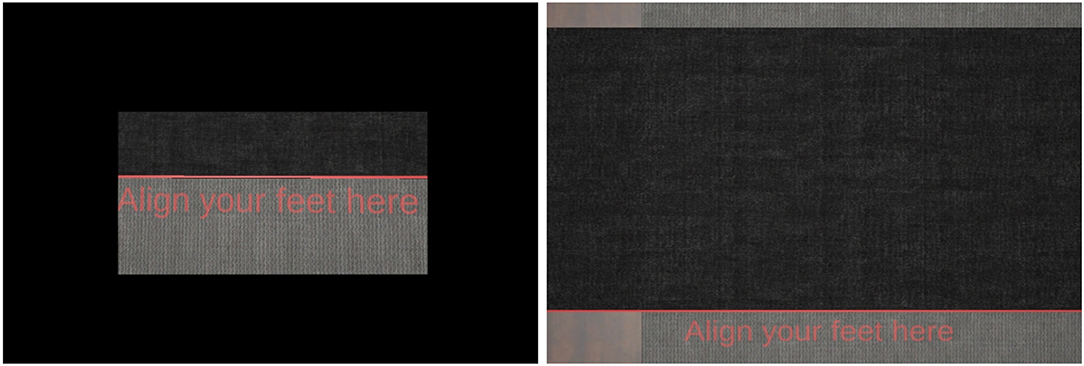

The virtual environment was built with Unity 2019.2.21f. The High Definition Render Pipeline was used to support the materials of some models downloaded from the Unity Asset Store and to provide high-end graphics. A dark gray carpet of variable width represented the gap, and a virtual red line at one edge of the gap indicated where the participant should stand. We did not track participants' feet or render virtual avatar feet, but participants found it intuitive to stand at the edge of the carpet without seeing their feet, consistent with our previous work (Creem-Regehr et al., 2019) (see Figure 6).

Figure 6. The virtual environment used in Experiment 3. Participants aligned their feet to the red line without visual self-representation.

4.3. Procedure

Upon arrival, participants provided informed consent. Next, the standing height, eye height, leg length, and maximum step length of each participant were measured and recorded. Step length was measured three times, from the toe of the trailing foot to the heel of the leading foot, and averaged. Participants were then given an overview of the experiment and procedure.
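Because the psychometric curves are plotted against scaled gap width, with 0.0 where gap width equals step length, each participant's averaged step length serves as the scaling factor. The sketch below assumes the scaling (gap width − step length) / step length; this formula is our assumption and agrees with the figure captions only in placing 0.0 at gap width = step length. The step-length measurements are hypothetical.

```python
from statistics import mean

# Gap widths used in Experiment 3: 0.45 to 1.50 m in 0.15 m steps (8 levels).
GAP_WIDTHS_M = [round(0.45 + 0.15 * i, 2) for i in range(8)]


def mean_step_length(measurements_m: list[float]) -> float:
    """Average of repeated trailing-toe-to-leading-heel step measurements (m)."""
    if not measurements_m:
        raise ValueError("at least one step-length measurement is required")
    return mean(measurements_m)


def scaled_gap_width(gap_m: float, step_m: float) -> float:
    """Assumed scaling: 0.0 when the gap equals the step length,
    negative for shorter gaps and positive for longer ones."""
    return (gap_m - step_m) / step_m


if __name__ == "__main__":
    step_m = mean_step_length([1.02, 0.98, 1.00])  # hypothetical measurements
    for gap_m in GAP_WIDTHS_M:
        print(f"{gap_m:.2f} m -> scaled {scaled_gap_width(gap_m, step_m):+.3f}")
```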

Participants completed four blocks of gap-crossing judgments. Two factors were varied across these blocks: the FOV with which they viewed the gap (wide vs. narrow) and the direction from which they viewed the gap within the virtual environment (0° vs. 180°). Figure 7 shows the participant's view in the Wide and Narrow FOV conditions. Viewing direction was varied to reduce the possibility that participants used their memory of prior trials, a potential landmark, or an object in the room to make their estimates; it was not a primary variable of interest. Viewing direction was changed in software, so participants did not have to physically turn around during the experiment, and they were not explicitly told that it changed.

Figure 7. Experiment 3 FOV conditions. The image on the left displays an example of a gap viewed in the Narrow FOV condition. The image on the right shows an example of a gap viewed in the Wide FOV condition.

Trials were blocked by FOV, forming two orders: (i) Wide FOV (Wide FOV × Direction 0°, Wide FOV × Direction 180°) then Narrow FOV (Narrow FOV × Direction 0°, Narrow FOV × Direction 180°); or (ii) Narrow FOV (Narrow FOV × Direction 0°, Narrow FOV × Direction 180°) then Wide FOV (Wide FOV × Direction 0°, Wide FOV × Direction 180°). Nine participants completed the Wide FOV blocks first and eight completed the Narrow FOV blocks first. On each trial, participants decided whether they could step across the gap using the procedure outlined in Experiments 1 and 2. They indicated their decision by using the Vive hand controller to select either “Yes” or “No” on a virtual screen presented at eye level in the same environment as the gap (see Figure 6). Each participant made 24 gap-crossing judgments per block (8 gap widths × 3 repetitions, in random order), for a total of 96 trials.
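The blocked, counterbalanced design described above can be expressed as a trial schedule. In this sketch, the function names, the seed, and the rule of alternating FOV order by participant index are hypothetical; the structure (FOV blocked, two viewing directions per FOV, 8 gap widths × 3 repetitions randomized within each block, 96 trials) follows the description in the text.

```python
import random
from typing import NamedTuple

GAP_WIDTHS_M = [round(0.45 + 0.15 * i, 2) for i in range(8)]  # 8 widths, 0.45-1.50 m
REPETITIONS = 3            # each width shown 3 times per block
DIRECTIONS_DEG = (0, 180)  # virtual viewing directions within each FOV condition


class Trial(NamedTuple):
    block: int
    fov: str
    direction_deg: int
    gap_m: float


def build_schedule(fov_order: tuple[str, str], seed: int) -> list[Trial]:
    """Four blocks: FOV is blocked, and each FOV is shown at both viewing directions.
    Gap widths are shuffled independently within every block."""
    rng = random.Random(seed)
    trials: list[Trial] = []
    block = 0
    for fov in fov_order:
        for direction in DIRECTIONS_DEG:
            block += 1
            gaps = GAP_WIDTHS_M * REPETITIONS  # 24 trials per block
            rng.shuffle(gaps)
            trials.extend(Trial(block, fov, direction, g) for g in gaps)
    return trials


def fov_order_for(participant_index: int) -> tuple[str, str]:
    """Hypothetical counterbalancing rule: alternate FOV order across participants."""
    return ("wide", "narrow") if participant_index % 2 == 0 else ("narrow", "wide")


if __name__ == "__main__":
    schedule = build_schedule(fov_order_for(0), seed=42)
    print(len(schedule), schedule[:3])
```

Alternating assignment across 17 participants (indices 0 to 16) yields nine Wide-first and eight Narrow-first schedules, the same split reported here, although the text does not state how order was assigned.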

4.4. Results and Discussion

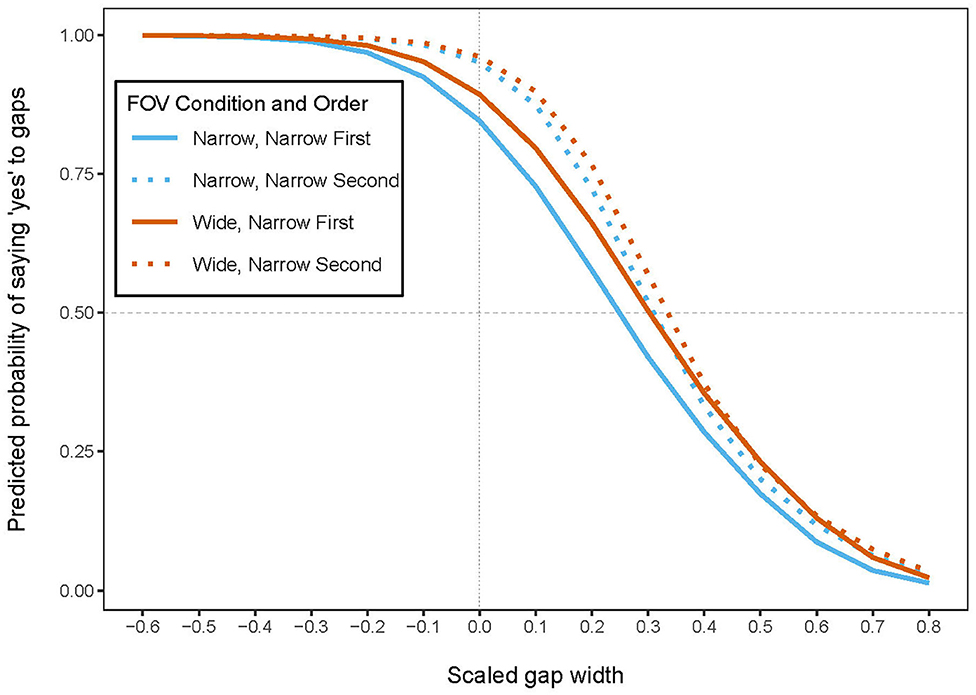

The analysis method was the same as in Experiments 1 and 2. However, in this experiment, condition refers to a simulated FOV that was either narrow or wide. The logit link function was found to be

and the resulting psychometric curves are shown in Figure 8. We found a main effect of FOV condition, such that participants judged gaps as more crossable in the Wide FOV condition than in the Narrow FOV condition (B = 0.42, SE = 0.19, p = 0.028). Unlike in Experiments 1 and 2, we did not find an interaction between condition and order (p = 0.390). We did replicate the effect of gap width: participants' likelihood of judging a gap as crossable decreased with increasing gap width (B = −10.81, SE = 0.64, p ≤ 0.001). These results suggest that a narrow FOV reduces perceived gap-crossing ability.
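The PSE in the figure captions is the scaled gap width at which the predicted probability of a “yes” response is 0.50, that is, where the linear predictor of the logit model equals zero. The sketch below illustrates this for a simplified fixed-effects model, logit(p) = b0 + b_fov · wide + b_gap · x, assuming dummy coding (Narrow = 0, Wide = 1) and ignoring order, interaction terms, and random effects. The intercept is hypothetical because it is not reported in this section; the FOV and gap-width values are the reported estimates, used here only for illustration.

```python
import math


def inv_logit(eta: float) -> float:
    """Logistic function, written to avoid overflow for large |eta|."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    z = math.exp(eta)
    return z / (1.0 + z)


def p_yes(x: float, wide: int, b0: float, b_fov: float, b_gap: float) -> float:
    """Predicted P("yes") at scaled gap width x; wide is an assumed 0/1 dummy code."""
    return inv_logit(b0 + b_fov * wide + b_gap * x)


def pse(wide: int, b0: float, b_fov: float, b_gap: float) -> float:
    """Scaled gap width where P("yes") = 0.5, i.e. where the linear predictor is zero."""
    return -(b0 + b_fov * wide) / b_gap


if __name__ == "__main__":
    B0 = 1.0        # hypothetical intercept (not reported in this section)
    B_FOV = 0.42    # reported FOV estimate, treated here as a 0/1 dummy effect
    B_GAP = -10.81  # reported gap-width estimate
    pse_narrow, pse_wide = pse(0, B0, B_FOV, B_GAP), pse(1, B0, B_FOV, B_GAP)
    print(f"PSE narrow {pse_narrow:.3f}, PSE wide {pse_wide:.3f}, shift {pse_wide - pse_narrow:.3f}")
```

Under these assumptions, the PSE shift between conditions equals b_fov / |b_gap| ≈ 0.42 / 10.81 ≈ 0.039 scaled units regardless of the intercept; a different coding scheme or a model with interactions would change this value.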

Figure 8. Experiment 3 results. Predicted probability of saying “yes” to gap crossing as a function of scaled gap width for the Narrow FOV condition (blue lines) and Wide FOV condition (orange lines) at two condition orders [Narrow FOV experienced first (solid lines) and Narrow FOV experienced second (dotted lines)]. The dotted line at x = 0.0 indicates when gap width = actual step length. The point of subjective equality (PSE) is the value of scaled gap width (on the x-axis) at which the curve intersects with y = 0.50.

5. Discussion

Across the three experiments presented here, we found an overall overestimation of gap-stepping ability. However, judgments made in AR or with a narrow FOV in VR were underestimated relative to the real-world or wide-FOV VR comparison. Specifically, Experiment 1 replicated the findings of Pointon et al. (2018b) by showing an underestimation of stepping-over judgments in AR (presented via the HoloLens) relative to the real world. Experiments 2 and 3 extended this finding by testing potential mechanisms that could underlie the effect. In Experiment 2, we tested whether the weight and inertial properties of the HoloLens contributed to the underestimation of stepping ability in AR compared to the real world. We hypothesized that the weight of the HoloLens could have affected head rotations and the associated proprioceptive feedback, or possibly the perceived effort of stepping, reducing estimates of capability in the AR condition in Experiment 1 and in Pointon et al. (2018b). To test this hypothesis, participants in Experiment 2 also wore the HoloLens in the real-world condition, which equated the weight of the device across the AR and real-world conditions. Nevertheless, stepping-over judgments in AR were still underestimated relative to the real world. These findings suggest that the weight and inertial properties of the HoloLens are unlikely to explain the observed underestimation, even though the weight of head-mounted displays has been shown to have some influence on distance estimates in VR (Willemsen et al., 2009; Grechkin et al., 2013; Buck et al., 2018). Given the lack of an effect of the weight of the HoloLens, Experiment 3 tested our second hypothesis: that the severely limited FOV of the HoloLens contributes to the relatively lower estimates of step capabilities.
We predicted that the restricted FOV limits participants' ability to integrate the full extent of the gap, because it allows only small areas of the gap to be viewed at one time. Because no current technology allows narrow and wide FOVs to be compared in AR, we compared a restricted FOV in VR to a wider FOV. Here, our findings were consistent with the AR results of Experiments 1 and 2 as well as those of Pointon et al. (2018b): estimates were lower with a narrow FOV (similar to the HoloLens) than with the widest FOV possible in the HTC Vive Pro. These findings suggest that a restricted FOV contributes to lower gap-crossing estimates and point to a potential mechanism for the underestimation observed across our experiments and in prior work. However, because our FOV manipulation was not performed in an AR device, we cannot claim that the FOV in AR is the sole contributor to the underestimation. We discuss this limitation and directions for future work on this question below.

Despite some limitations, the effect of FOV observed in Experiment 3 is consistent with differences found in VR between wide and narrow FOVs in studies of distance perception. In this literature, distance estimates generally increase with increasing FOV, as evidenced by improvements in accuracy with newer commodity-level HMDs and by direct manipulations of the available FOV (Buck et al., 2018; Li et al., 2018). Our Experiment 3 is the first to demonstrate a FOV effect on perceived affordances in VR. An important question is whether the mechanisms underlying the FOV effect observed here are the same as those in the prior distance perception experiments. For the large-scale spaces typically tested in distance estimation studies, a restricted FOV could remove peripheral environmental context or cues that provide a sense of the scale of the space. However, these cues may be less important for stepping judgments, which are made in the space close to the observer. One account that could explain both the earlier distance estimates to targets in near action space (3 to 10 m) and the current judgments in personal space is the importance of viewing the ground plane at one's feet. In other words, the larger vertical extent of the HTC Vive Pro's wider FOV may have allowed participants to see more of the ground plane surrounding their feet with less need for head rotation or movement. The near-ground surface is important for distance perception (Gibson, 1950; Sinai et al., 1998; Wu et al., 2004) and essential for determining the extent of a crossable gap. The wider FOV of the HTC Vive Pro used in Experiment 3 provided a more continuous view of the ground plane, likely requiring fewer head rotations and providing an experience more similar to the real world. 
Future work could aim to directly manipulate ground scanning patterns (Wu et al., 2004; Lin et al., 2011), assess looking patterns with measurements of head and eye movements, and also conduct a matched comparison between the FOV in VR and the real world.
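The ground-plane account can be illustrated by asking how close to the observer the floor becomes visible for a given vertical FOV. The sketch below is a simplified geometric illustration that assumes a flat floor, a FOV centered on the line of sight, no eye rotation, and a hypothetical eye height of 1.6 m; it ignores the lens geometry of real HMDs.

```python
import math


def nearest_visible_ground_m(eye_height_m: float, vertical_fov_deg: float,
                             head_pitch_down_deg: float = 0.0) -> float:
    """Horizontal distance to the closest floor point inside the vertical FOV.

    Assumes a flat floor, a FOV centered on the line of sight, and no eye rotation.
    Returns 0.0 if the lower FOV edge reaches straight down, inf if it is at or above the horizon.
    """
    lower_edge_deg = head_pitch_down_deg + vertical_fov_deg / 2.0  # depression of the lower FOV edge
    if lower_edge_deg >= 90.0:
        return 0.0
    if lower_edge_deg <= 0.0:
        return math.inf
    return eye_height_m / math.tan(math.radians(lower_edge_deg))


if __name__ == "__main__":
    EYE_HEIGHT_M = 1.6  # hypothetical eye height
    for fov in (20.0, 110.0):  # restricted vs. full vertical FOV from Experiment 3
        print(f"{fov:.0f} deg, head level: floor visible from "
              f"{nearest_visible_ground_m(EYE_HEIGHT_M, fov):.2f} m")
```

With the head level, a 20° vertical window shows no floor closer than about 9.1 m, whereas a 110° window shows the floor from about 1.1 m. With the 20° window, the head would have to pitch down by roughly 76° before the lower edge of the FOV reaches a point 0.1 m ahead of the point below the eyes.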

In addition to replicating the underestimation of judgments in AR and finding that FOV may contribute to the effect, we observed an order effect: the relative underestimation in AR was stronger when participants made AR judgments after making judgments in the real world. This order effect appeared in both Experiments 1 and 2, which suggests that it is reliable. Previous work did not test for an order effect, so this finding is novel and warrants further investigation. We can only speculate as to why order may play a role in the magnitude of the underestimation observed in AR. It is possible that viewing the gap with the full FOV of the real world first makes the restricted viewing in AR more noticeable, exaggerating the difference between the two conditions. Alternatively, when participants complete the AR condition first, they may develop a strategy for dealing with the reduced FOV, which they then carry over to the real world, as reflected in the somewhat reduced real-world estimates observed here. Overall, our results suggest that the order in which participants experience conditions, at least when testing in AR, could bias estimates in subsequent conditions. The reasons for this order effect should be the focus of future work. Prior work in VR has also demonstrated order effects when comparing VR and the real world. For example, viewing a real space before a virtual space has been shown to improve distance estimation in the virtual space (Interrante et al., 2006), and differences between real and virtual world judgments have been found only in the blocks of trials performed first (Ziemer et al., 2009).

Further work investigating the effect of FOV on judgments of action capabilities in AR is also needed. Although we found underestimation of stepping-over judgments with the simulated narrow FOV in VR compared to a wider FOV in Experiment 3, we acknowledge that a simulated reduced FOV in VR differs from the experience of the reduced FOV in AR. Specifically, in AR there is still a nearly full natural FOV of the real world outside the window in which augmented graphical objects are displayed. Participants can see through the display at the periphery and gain visual information about the surrounding real-world environment. In contrast, VR removes all peripheral information about the real environment, and previous VR research shows that manipulating peripheral stimulation influences space perception and action (Jones et al., 2012, 2013, 2016). VR also removed observers' ability to see their feet, which prior work has shown may influence affordance judgments (Jun et al., 2015). Further, the AR and VR experiments presented here differed in other ways, including the graphical displays and environmental contexts. Our intention is not to make direct comparisons between VR and AR, but rather to demonstrate with VR that FOV influences estimates of gap-stepping capabilities. The narrow FOV in VR (compared to the wide FOV) led to underestimation qualitatively similar to that observed with AR gaps relative to the real world. Future work could explore other ways of restricting FOV in VR to better simulate the AR experience; alternatively, future AR devices may offer a wider graphical FOV.

This work has potential applications in the medical domain. Bernhardt et al. (2017) review AR methods in laparoscopic surgery, including depth perception problems that arise when virtual objects or augmentations are misaligned with the real surface image in the endoscopic display. AR methods have been used, for example, to extend the limited field of view of an endoscope in surgical applications (Bong et al., 2018). Registration of virtual overlays remains an ongoing concern, although progress is being made in this area (Chen et al., 2017; Vassallo et al., 2017; Pepe et al., 2019).

6. Conclusion

Establishing differences between action capabilities judged in AR and real environments can give us valuable information about the perceptual fidelity of virtual targets within AR systems. However, to improve the utility of AR applications effectively, it is critical to probe the mechanisms that might underlie these differences. We took this approach in the current series of experiments, testing two potential explanations for reduced estimates of stepping ability over AR gaps that focused on properties common to head-worn AR devices: weight and field of view. Together, we found more support for the influence of field of view than for the weight of the AR device. Our studies are the first to show objective evidence that a reduced field of view influences judgments of action capabilities, providing support for the restricted field of view as an explanation for differences found between AR and the real world. These findings emphasize the importance of studying the interaction of display technology and perceptual experience in order to advance the use of AR for applications that rely on accurate perceptions.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/6ezq4/.

Ethics Statement

The studies involving human participants were reviewed and approved by University of Utah Institutional Review Board and Vanderbilt University Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

HG wrote and edited the manuscript and analyzed data. JS, SC-R, and BB conceptualized the project and wrote and edited the manuscript. MR and YZ collected data and edited the manuscript. GP analyzed data. All authors participated in writing and editing the manuscript.

Funding

This work was supported in part by the Office of Naval Research under Grant No. ONR-N00014-18-1-2964.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Kiana Shams for her help in collecting data for Experiments 1 and 2.

Footnotes

1. The yes/no response is a traditional response measure for the psychophysical method of constant stimuli used here. However, it could introduce bias if participants are more likely to respond “no” when they are uncertain (Steinicke et al., 2009).

References

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Bernhardt, S., Nicolau, S. A., Soler, L., and Doignon, C. (2017). The status of augmented reality in laparoscopic surgery as of 2016. Med. Image Anal. 37, 66–90. doi: 10.1016/j.media.2017.01.007

Bhargava, A., Lucaites, K. M., Hartman, L. S., et al. (2020a). Revisiting affordance perception in contemporary virtual reality. Virt. Real. 24, 713–724. doi: 10.1007/s10055-020-00432-y

Bhargava, A., Solini, H., Lucaites, K., Bertrand, J. W., Robb, A., Pagano, C. C., et al. (2020b). “Comparative evaluation of viewing and self-representation on passability affordances to a realistic sliding doorway in real and immersive virtual environments,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 519–528. doi: 10.1109/VR46266.2020.00073

Bong, J. H., Song, H. J., Oh, Y., Park, N., Kim, H., and Park, S. (2018). Endoscopic navigation system with extended field of view using augmented reality technology. Int. J. Med. Robot. Comput. Assist. Surg. 14:e1886. doi: 10.1002/rcs.1886

Buck, L. E., Young, M. K., and Bodenheimer, B. (2018). A comparison of distance estimation in HMD-based virtual environments with different HMD-based conditions. ACM Trans. Appl. Percept. 15, 21:1–21:15. doi: 10.1145/3196885

Chen, L., Day, T. W., Tang, W., and John, N. W. (2017). “Recent developments and future challenges in medical mixed reality,” in 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (Nantes), 123–135. doi: 10.1109/ISMAR.2017.29

Creem-Regehr, S. H., Gill, D. M., Pointon, G. D., Bodenheimer, B., and Stefanucci, J. (2019). Mind the gap: Gap affordance judgments of children, teens, and adults in an immersive virtual environment. Front. Robot. AI 6:96. doi: 10.3389/frobt.2019.00096

Creem-Regehr, S. H., Stefanucci, J. K., and Thompson, W. B. (2015). “Perceiving absolute scale in virtual environments: how theory and application have mutually informed the role of body-based perception,” in Psychology of Learning and Motivation, Volume 62 of Psychology of Learning and Motivation, ed B. H. Ross (Academic Press: Elsevier Inc.), 195–224. doi: 10.1016/bs.plm.2014.09.006

Creem-Regehr, S. H., Willemsen, P., Gooch, A. A., and Thompson, W. B. (2005). The influence of restricted viewing conditions on egocentric distance perception: Implications for real and virtual indoor environments. Perception 34, 191–204. doi: 10.1068/p5144

Cutting, J. E., and Vishton, P. M. (1995). “Perceiving layout and knowing distance: the integration, relative potency and contextual use of different information about depth,” in Perception of Space and Motion, eds W. Epstein and S. Rogers (New York, NY: Academic Press), 69–117. doi: 10.1016/B978-012240530-3/50005-5

Dey, A., Billinghurst, M., Lindeman, R. W., and Swan, J. E. (2018). A systematic review of 10 years of augmented reality usability studies: 2005 to 2014. Front. Robot. AI 5:37. doi: 10.3389/frobt.2018.00037

Ebrahimi, E., Robb, A., Hartman, L. S., Pagano, C. C., and Babu, S. V. (2018). “Effects of anthropomorphic fidelity of self-avatars on reach boundary estimation in immersive virtual environments,” in Proceedings of the 15th ACM Symposium on Applied Perception (Vancouver, BC: ACM), 1–8. doi: 10.1145/3225153.3225170

Franchak, J. M., and Adolph, K. E. (2012). What infants know and what they do: Perceiving possibilities for walking through openings. Dev. Psychol. 48, 1254–1261. doi: 10.1037/a0027530

Gagnon, H. C., Na, D., Heiner, K., Stefanucci, J., Creem-Regehr, S., and Bodenheimer, B. (2020). “The role of viewing distance and feedback on affordance judgments in augmented reality,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 922–929. doi: 10.1109/VR46266.2020.00112

Geuss, M., Stefanucci, J., Creem-Regehr, S., and Thompson, W. B. (2010). “Can I pass?: using affordances to measure perceived size in virtual environments,” in Proceedings of the 7th Symposium on Applied Perception in Graphics and Visualization, APGV '10 (New York, NY: ACM), 61–64. doi: 10.1145/1836248.1836259

Geuss, M. N., Stefanucci, J. K., Creem-Regehr, S. H., Thompson, W. B., and Mohler, B. J. (2015). Effect of display technology on perceived scale of space. Hum. Fact. 57, 1235–1247. doi: 10.1177/0018720815590300

Grechkin, T. Y., Chihak, B. J., Cremer, J. F., Kearney, J. K., and Plumert, J. M. (2013). Perceiving and acting on complex affordances: how children and adults bicycle across two lanes of opposing traffic. J. Exp. Psychol. Hum. Percept. Perform. 39, 23–36. doi: 10.1037/a0029716

Grechkin, T. Y., Nguyen, T. D., Plumert, J. M., Cremer, J. F., and Kearney, J. K. (2010). How does presentation method and measurement protocol affect distance estimation in real and virtual environments? ACM Trans. Appl. Percept. 7, 26:1–26:18. doi: 10.1145/1823738.1823744

Interrante, V., Anderson, L., and Ries, B. (2006). “Distance perception in immersive virtual environments, revisited,” in IEEE Virtual Reality Conference (VR 2006) (Alexandria, VA: IEEE), 3–10. doi: 10.1109/VR.2006.52

Jiang, Y., and Mark, L. S. (1994). The effect of gap depth on the perception of whether a gap is crossable. Percept. Psychophys. 56, 691–700. doi: 10.3758/BF03208362

Jones, J. A., Krum, D. M., and Bolas, M. T. (2016). Vertical field-of-view extension and walking characteristics in head-worn virtual environments. ACM Trans. Appl. Percept. 14, 9:1–9:17. doi: 10.1145/2983631

Jones, J. A., Suma, E. A., Krum, D. M., and Bolas, M. (2012). “Comparability of narrow and wide field-of-view head-mounted displays for medium-field distance judgments,” in Proceedings of the ACM Symposium on Applied Perception, SAP '12 (New York, NY: ACM), 119. doi: 10.1145/2338676.2338701

Jones, J. A., Swan, J. E., Singh, G., and Ellis, S. R. (2011). “Peripheral visual information and its effect on distance judgments in virtual and augmented environments,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization (Toulouse: ACM), 29–36. doi: 10.1145/2077451.2077457

Jones, J. A., Swan, J. E. II, and Bolas, M. (2013). Peripheral stimulation and its effect on perceived spatial scale in virtual environments. IEEE Trans. Visual. Comput. Graph. 19, 701–710. doi: 10.1109/TVCG.2013.37

Jun, E., Stefanucci, J. K., Creem-Regehr, S. H., Geuss, M. N., and Thompson, W. B. (2015). Big foot: Using the size of a virtual foot to scale gap width. ACM Trans. Appl. Percept. 12, 16:1–16:12. doi: 10.1145/2811266

Knapp, J. M., and Loomis, J. M. (2004). Limited field of view of head-mounted displays is not the cause of distance underestimation in virtual environments. Presence Teleoperat. Virt. Environ. 13, 572–577. doi: 10.1162/1054746042545238

Li, B., Walker, J., and Kuhl, S. A. (2018). The effects of peripheral vision and light stimulation on distance judgments through HMDs. ACM Trans. Appl. Percept. 15, 1–14. doi: 10.1145/3165286

Li, B., Zhang, R., Nordman, A., and Kuhl, S. (2015). “The effects of minification and display field of view on distance judgments in real and HMD-based environments,” in Proceedings of the ACM Symposium on Applied Perception, SAP '15 (New York, NY: ACM). doi: 10.1145/2804408.2804427

Lin, Q., Rieser, J., and Bodenheimer, B. (2012). “Stepping over and ducking under: the influence of an avatar on locomotion in an HMD-based immersive virtual environment,” in Proceedings of the ACM Symposium on Applied Perception, SAP '12 (New York, NY: ACM), 7–10. doi: 10.1145/2338676.2338678

Lin, Q., Rieser, J., and Bodenheimer, B. (2015). Affordance judgments in HMD-based virtual environments: stepping over a pole and stepping off a ledge. ACM Trans. Appl. Percept. 12, 6:1–6:21. doi: 10.1145/2720020

Lin, Q., Rieser, J. J., and Bodenheimer, B. (2013). “Stepping off a ledge in an HMD-based immersive virtual environment,” in Proceedings of the ACM Symposium on Applied Perception, SAP '13 (New York, NY: ACM), 107–110. doi: 10.1145/2492494.2492511

Lin, Q., Xie, X., Erdemir, A., Narasimham, G., McNamara, T., Rieser, J., et al. (2011). “Egocentric distance perception in real and HMD-based virtual environments: the effect of limited scanning method,” in Proceedings of the Symposium on Applied Perception in Graphics and Visualization (Toulouse). doi: 10.1145/2077451.2077465

Pepe, A., Trotta, G. F., Mohr-Ziak, P., Gsaxner, C., Wallner, J., Bevilacqua, V., et al. (2019). A marker-less registration approach for mixed reality-aided maxillofacial surgery: a pilot evaluation. J. Digit. Imaging 32, 1008–1018. doi: 10.1007/s10278-019-00272-6

Plumert, J. M., and Schwebel, D. C. (1997). Social and temperamental influences on children's overestimation of their physical abilities: links to accidental injuries. J. Exp. Child Psychol. 67, 317–337. doi: 10.1006/jecp.1997.2411

Pointon, G., Thompson, C., Creem-Regehr, S., Stefanucci, J., and Bodenheimer, B. (2018a). “Affordances as a measure of perceptual fidelity in augmented reality,” in 2018 IEEE VR 2018 Workshop on Perceptual and Cognitive Issues in AR (PERCAR) (Reutlingen: IEEE), 1–6.

Pointon, G., Thompson, C., Creem-Regehr, S., Stefanucci, J., Joshi, M., Paris, R., et al. (2018b). “Judging action capabilities in augmented reality,” in Proceedings of the 15th ACM Symposium on Applied Perception (Vancouver, BC: ACM), 1–8. doi: 10.1145/3225153.3225168

Proffitt, D. R. (2006). Embodied perception and the economy of action. Perspect. Psychol. Sci. 1, 110–122. doi: 10.1111/j.1745-6916.2006.00008.x

Raudenbush, S. W., and Bryk, A. S. (2002). Hierarchical Linear Models: Applications and Data Analysis Methods, Vol. 1, Thousand Oaks, CA: Sage Publications.

Regia-Corte, T., Marchal, M., Cirio, G., and Lécuyer, A. (2013). Perceiving affordances in virtual reality: influence of person and environmental properties in perception of standing on virtual grounds. Virt. Real. 17, 17–28. doi: 10.1007/s10055-012-0216-3

Renner, R. S., Velichkovsky, B. M., and Helmert, J. R. (2013). The perception of egocentric distances in virtual environments – a review. ACM Comput. Surv. 46, 23:1–23:40. doi: 10.1145/2543581.2543590

Sinai, M. J., Ooi, T. L., and He, Z. J. (1998). Terrain influences the accurate judgment of distance. Nature 395, 497–500. doi: 10.1038/26747

Stefanucci, J. K., Creem-Regehr, S. H., Thompson, W. B., Lessard, D. A., and Geuss, M. N. (2015). Evaluating the accuracy of size perception on screen-based displays: displayed objects appear smaller than real objects. J. Exp. Psychol. Appl. 21, 215–223. doi: 10.1037/xap0000051

Stefanucci, J. K., and Geuss, M. N. (2009). Big people, little world: the body influences size perception. Perception 38, 1782–1795. doi: 10.1068/p6437

Steinicke, F., Bruder, G., Jerald, J., Frenz, H., and Lappe, M. (2009). Estimation of detection thresholds for redirected walking techniques. IEEE Trans. Visual. Comput. Graph. 16, 17–27. doi: 10.1109/TVCG.2009.62

Vassallo, R., Rankin, A., Chen, E. C. S., and Peters, T. M. (2017). “Hologram stability evaluation for Microsoft HoloLens,” in Medical Imaging 2017: Image Perception, Observer Performance, and Technology Assessment, eds M. A. Kupinski and R. M. Nishikawa (Orlando, FL: International Society for Optics and Photonics; SPIE), 295–300. doi: 10.1117/12.2255831

Warren, W. H., and Whang, S. (1987). Visual guidance of walking through apertures: body scaled information for affordances. J. Exp. Psychol. Hum. Percept. Perform. 13, 371–383. doi: 10.1037/0096-1523.13.3.371

Willemsen, P., Colton, M. B., Creem-Regehr, S. H., and Thompson, W. B. (2009). The effects of head-mounted display mechanical properties and field of view on distance judgments in virtual environments. ACM Trans. Appl. Percept. 6:1–14. doi: 10.1145/1498700.1498702

Wu, B., Ooi, T. L., and He, Z. J. (2004). Perceiving distance accurately by a directional process of integrating ground information. Nature 428, 73–77. doi: 10.1038/nature02350

Wu, H., Adams, H., Pointon, G., Stefanucci, J., Creem-Regehr, S., and Bodenheimer, B. (2019). “Danger from the deep: a gap affordance study in augmented reality,” in 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Osaka: IEEE), 1775–1779. doi: 10.1109/VR.2019.8797965

Keywords: affordances, augmented reality, virtual reality, perception, field of view

Citation: Gagnon HC, Zhao Y, Richardson M, Pointon GD, Stefanucci JK, Creem-Regehr SH and Bodenheimer B (2021) Gap Affordance Judgments in Mixed Reality: Testing the Role of Display Weight and Field of View. Front. Virtual Real. 2:654656. doi: 10.3389/frvir.2021.654656

Received: 17 January 2021; Accepted: 22 February 2021;

Published: 15 March 2021.

Edited by:

Frank Steinicke, University of Hamburg, Germany

Reviewed by:

Jan Egger, Graz University of Technology, Austria
Aryabrata Basu, Emory University, United States

Copyright © 2021 Gagnon, Zhao, Richardson, Pointon, Stefanucci, Creem-Regehr and Bodenheimer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Holly C. Gagnon, holly.gagnon@psych.utah.edu
