- 1 Department of Physical Therapy, Movement and Rehabilitation Sciences, Northeastern University, Boston, MA, United States

- 2 Institute of Sport Sciences, Academy of Physical Education, Katowice, Poland

- 3 Department of Electrical and Computer Engineering, Northeastern University, Boston, MA, United States

- 4 Department of Bioengineering, Northeastern University, Boston, MA, United States

Technological advancements and increased access have prompted the adoption of head-mounted display-based virtual reality (VR) for neuroscientific research, manual skill training, and neurological rehabilitation. Applications that focus on manual interaction within the virtual environment (VE), especially haptic-free VR, critically depend on virtual hand-object collision detection. Knowledge about how multisensory integration related to hand-object collisions affects perception-action dynamics and reach-to-grasp coordination is needed to enhance the immersiveness of interactive VR. Here, we explored whether and to what extent sensory substitution for haptic feedback of hand-object collision (visual, audio, or audiovisual) and collider size (size of spherical pointers representing the fingertips) influence reach-to-grasp kinematics. In Study 1, visual, auditory, or combined feedback were compared as sensory substitutes to indicate the successful grasp of a virtual object during reach-to-grasp actions. In Study 2, participants reached to grasp virtual objects using spherical colliders of different diameters to test whether virtual collider size impacts reach-to-grasp. Our data indicate that collider size, but not sensory feedback modality, significantly affected the kinematics of grasping. Larger colliders led to a smaller size-normalized peak aperture. We discuss this finding in the context of a possible influence of spherical collider size on the perception of the virtual object’s size and hence effects on motor planning of reach-to-grasp. Critically, reach-to-grasp spatiotemporal coordination patterns were robust to manipulations of sensory feedback modality and spherical collider size, suggesting that the nervous system adjusted the reach (transport) component commensurately to the changes in the grasp (aperture) component. These results have important implications for research, commercial, industrial, and clinical applications of VR.

1 Introduction

Natural hand-object interactions are critical for a fully immersive virtual reality (VR) experience. In the real world, reach-to-grasp coordination is facilitated by congruent visual and proprioceptive feedback of limb position and orientation and haptic feedback of object properties (Bingham et al., 2007; Coats et al., 2008; Bingham and Mon-Williams, 2013; Bozzacchi et al., 2014; Whitwell et al., 2015; Bozzacchi et al., 2016; Hosang et al., 2016; Volcic and Domini, 2016; Bozzacchi et al., 2018). In virtual environments (VE), visual feedback of the avatar hand may be incongruent with proprioceptive feedback from the biological hand. This discrepancy can arise from technological limitations (e.g., latency, rendering speed, and tracking accuracy) related to how the scene is calibrated (Stanney, 2002) or how the VR task is manipulated (Groen and Werkhoven, 1998; Prachyabrued and Borst, 2013). Moreover, the virtual representation of the limb may be distorted in appearance (Argelaguet et al., 2016; Liu et al., 2019) in a similar manner to the use of a cursor to represent hand position in traditional computer displays. For example, visualization of the index finger and thumb as simple spherical colliders to allow pincer grasping of objects in VE is often employed (Furmanek et al., 2019; van Polanen et al., 2019; Mangalam et al., 2021). The colliders’ size is often arbitrarily chosen by researchers but can have profound effects on behavior, especially for dexterous and accuracy-demanding tasks. Finally, when not combined with haptic devices, haptic information about whether and how a given object has been grasped is absent, creating additional uncertainty. 
The lack of haptic feedback about object properties may be compensated for through sensory substitution. Terminal visual feedback, such as a change in the object's color, or auditory feedback, such as a sound, can signal that the virtual object has been contacted or grasped and help minimize hand-object interpenetration (Zahariev and MacKenzie, 2003; Zahariev and MacKenzie, 2007; Castiello et al., 2010; Sedda et al., 2011; Prachyabrued and Borst, 2012; Prachyabrued and Borst, 2014; Canales and Jörg, 2020).

One of the most common and well-studied forms of hand-object interaction is reaching and grasping an object. Reach-to-grasp movements involve a reach component describing the transport of the hand toward the object and a grasp component describing the preshaping of the fingers to the object. Traditionally, the end of a “reach-to-grasp” movement is defined by contact with the object. The reach component is quantified through analysis of hand transport kinematics (e.g., trajectory and velocity of the wrist motion), and the grasp component is quantified through analysis of aperture kinematics (e.g., interdigit distance over time) (Jeannerod, 1981; Jeannerod, 1984). Planning and execution of successful reach-to-grasp movements require both spatial and temporal coordination between the reach and grasp components (Rand et al., 2008; Furmanek et al., 2019; Mangalam et al., 2021). Whether the transport and aperture components represent information flow in independent neural channels remains an open question (Culham et al., 2006; Vesia and Crawford, 2012; Schettino et al., 2017); however, several kinematic features of coordination between the two components have been well described (Haggard and Wing, 1991; Paulignan et al., 1991a; Paulignan et al., 1991b; Gentilucci et al., 1992; Haggard and Wing, 1995; Dubrowski et al., 2002). For instance, peak transport velocity tends to occur at 30

There is growing interest in contrasting the performance of dexterous actions, such as reach-to-grasp, when executed in the physical environment (PE) and in VE. In our previous work, we showed that temporal features of reach-to-grasp coordination and the control law governing closure (Mangalam et al., 2021) were preserved in a VE that utilized a reductionist spherical collider representation of the index finger and thumb and audiovisual feedback-based sensory substitution. However, we noted that movement speed and maximum grip aperture differed between the PE and VE (Furmanek et al., 2019). These studies utilized only a single set of parameters for the presentation of feedback in the VE, and therefore, the influence of different parameters for representation of the virtual fingers and substitution of haptic feedback is unknown. The goal of this investigation was to test the extent to which the selection of feedback parameters influences behavior in the VE. In two studies, we systematically varied parameters related to the sensory modality of haptic sensory substitution (Study 1) and the size of the spherical colliders representing the index-tip and thumb-tip (Study 2) to better understand the influence of these parameters on features of reach-to-grasp performance in VR. In both studies, participants reached to grasp virtual objects at a natural pace in an immersive VE presented via a head-mounted display (HMD).

Study 1 was designed to test whether visual, auditory, or audiovisual sensory substitution for haptic feedback of the object properties significantly affects reach-to-grasp kinematics. Participants grasped virtual objects of different sizes placed at different distances, and a change in the color of the object (visual), a tone (auditory), or both (audiovisual) provided terminal feedback that the grasp had been completed successfully. A previous study using spherical colliders to reach to grasp virtual objects reported that audio and audiovisual terminal feedback of the object being grasped resulted in shorter movement times than visual or absent terminal feedback, though there was no effect of terminal feedback on peak aperture (Zahariev and MacKenzie, 2007). While this study had a similar design to our Study 1, it was conducted using stereoscopic glasses to obtain a 3D view of images presented on a 2D display, and the results may not transfer to HMD-based VR, which provides a more immersive experience and is more commonly used today. Furthermore, no analysis of temporal or spatial reach-to-grasp kinematics was provided, limiting interpretations about the effects of terminal feedback on reach-to-grasp coordination. A more recent study using a robotic-looking virtual hand avatar to reach to grasp and transport virtual objects in an HMD immersive VR setup found that movement time was shorter for visual, compared to auditory or absent, terminal feedback (Canales and Jörg, 2020). Interestingly, participants subjectively preferred audio terminal feedback to other sensory modalities despite the fact that audio feedback produced the slowest movements. The Canales and Jörg study did not measure the kinematics of the movement, and therefore, interpretation about movement coordination is limited.
Based on these studies and our previous work (Furmanek et al., 2019), we expected that the modality of terminal feedback used to signal successful grasp would affect reach-to-grasp kinematics because of uncertainty about contact with the object. Specifically, we hypothesized that, with multimodal (audiovisual) feedback, participants would show (H1.1) greater scaling of aperture to object width and (H1.2) faster completion of the reach-to-grasp task, but that (H1.3) the spatiotemporal coordination between the reach and the grasp components of the movement would remain preserved across terminal feedback conditions.

To date, no study has systematically examined the impact of the size of the virtual effector on reach-to-grasp kinematics. Study 2 was designed to fill this gap in the literature. Participants used spherical colliders of different diameters to reach to grasp virtual objects of different sizes placed at different distances. Ogawa and coworkers (Ogawa et al., 2018) reported that the size of a virtual avatar hand affects participants’ perception of object size in an HMD-based VE, but they did not study reach-to-grasp movements or analyze movement kinematics. Extrapolating from their results, we hypothesized that the size of the spherical collider would affect maximum grip aperture, with smaller colliders predicted to result in larger maximum grip aperture (H2). We specifically used a reduced version of the avatar hand (just two dots representing the thumb and index fingertips) to reduce the number of factors that can potentially affect reach-to-grasp kinematics, such as differences in the shape, color, and texture of a more biological-looking hand avatar (Lok et al., 2003; Ogawa et al., 2018). Moreover, the spherical colliders allowed for more precise localization of the fingertips in VE than is typical of anthropomorphic hand avatars (Vosinakis and Koutsabasis, 2018) and eliminated the influence of visuoproprioceptive discrepancies caused by potential tracking or joint angle calibration errors inherent in sensor gloves. Similar reductionist effectors have been successfully used in multiple previous studies for similar reasons (Zahariev and MacKenzie, 2007; Zahariev and Mackenzie, 2008; Furmanek et al., 2019; Mangalam et al., 2021). Furthermore, a recent study that varied the degree of hand anthropomorphism (e.g., 2-point, point-dot hand, and full hand), with only the target and the hand model visible to participants, reported that kinematic performance was best when either the minimal (2-point) or an enriched hand-like model (skeleton, full) was provided (Sivakumar et al., 2021).
Therefore, in the present study, we used simple spheres representing the fingertips to systematically test the effect of collider size on reach-to-grasp behavior.

Study 1 and Study 2 were designed to increase knowledge about how choices for haptic sensory substitution and collider size may affect reach-to-grasp performance in HMD-based VR. This work has the potential to directly impact the design of VR platforms used for commercial, industrial, research, and rehabilitation applications.

2 Materials and Methods

2.1 Participants

Ten adults [seven men and three women; M

2.2 Reach-to-Grasp Task, Virtual Environment, and Kinematic Measurement

Each participant reached to grasp 3D-printed physical objects in the PE and their exact virtual renderings in the haptic-free virtual environment (hf-VE) of three different sizes, small (width

A commercial HTC Vive Pro, comprising an HMD and an infrared laser emitter unit, was used. The virtual scene was created and rendered in Unity (ver. 5.6, 64 bits, Unity Technologies, San Francisco, CA) with C# as the programming language, running on a computer with a 64-bit Windows 7 Ultimate operating system, an Intel(R) Xeon(R) CPU E5-1630 v3 3.7 GHz, 32 GB RAM, and an NVIDIA Quadro M6000 graphics card. Given the power of the PC and simplicity of the VE, scenes were rendered in less than one frame time (see below). The interpupillary distance in the HMD was individually adjusted to each participant. Objects were displayed stereoscopically, giving the perception that they were 3D. Participants were asked to confirm that they perceived the object as 3D and that they could distinguish the object’s edges, though we did not formally test for stereopsis. Motion tracking of the head was achieved by streaming data from an IMU and laser-based photodiodes embedded in the headset. A detailed description of the HTC Vive’s head tracking system is published elsewhere (Niehorster et al., 2017). Position and orientation data provided by the Vive were acquired through Unity at

2.3 Procedure and Instructions to Participants

Each participant was seated on a chair with the right arm and hand placed on a table in front of them. At the start position, the thumb and index finger straddled a 1.5 cm wide plastic peg located 12 cm in front and 24 cm to the right of the sternum, with the thumb depressing a switch. Lifting the thumb off the switch marked movement onset. Upon an auditory tone (“beep” signal), the participant reached to grasp the virtual object presented in the HMD, lifted it, held it until it disappeared (3.5 s from movement onset, i.e., the moment the switch was released), and returned their hand to the starting position. The timing of each auditory tone was jittered around 1 s after trial start (i.e., after the start switch was activated), with a standard deviation of 0.5 s, to avoid adaptation to a fixed cue time. A custom collision detection algorithm was used to determine when the virtual object was grasped. Each finger was represented by a sphere. When any point on a sphere made contact with any point on the object, that finger was considered “attached.” Once both fingers were “attached” to the object, the object was considered “grasped,” and translational movement of the fingers would also move the object. A 1.2 cm error margin, imposed on the distance between the spheres, was used to maintain grasp. If the distance between the spheres increased by more than 1.2 cm from its value at the time the object was “grasped” (e.g., if the fingers opened), the object was no longer considered grasped, the color changed to white, and it would drop to the table. Conversely, if the distance between the spheres decreased by more than 1.2 cm from its value at the time the object was “grasped,” the object was considered “overgrasped.” An “overgrasped” object would turn white and would remain frozen.
If neither error occurred, the object was considered to be grasped successfully, and its color changed to red (visual condition), a tone sounded (auditory condition), or both (audiovisual condition); see below for details about terminal feedback conditions. The 1.2 cm error margin was chosen after extensive piloting of the experiment. In the future, we plan to systematically test the effect of the error margin on reach-to-grasp behavior.
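The grasp-maintenance rule described above behaves like a small state machine. The Python sketch below is a hypothetical reconstruction for illustration, not the authors' Unity/C# code. The class and state names are invented. Per-finger contact is assumed to arrive as booleans from an upstream sphere-object collision test, and positions are in centimeters. The dropped and overgrasped states are assumed to be final for the trial.

```python
import math
from enum import Enum


class GraspState(Enum):
    FREE = "free"                # both fingers not yet attached
    GRASPED = "grasped"          # both attached; object follows finger translation
    DROPPED = "dropped"          # spheres opened past the margin; object falls
    OVERGRASPED = "overgrasped"  # spheres closed past the margin; object freezes


class GraspTracker:
    """Hypothetical per-trial tracker applying a symmetric error margin (cm)
    to the inter-sphere distance recorded at the moment of grasp."""

    def __init__(self, margin_cm=1.2):
        self.margin_cm = margin_cm
        self.state = GraspState.FREE
        self.reference_cm = None  # inter-sphere distance when the grasp was registered

    def update(self, thumb_attached, index_attached, thumb_pos, index_pos):
        distance = math.dist(thumb_pos, index_pos)
        if self.state is GraspState.FREE:
            if thumb_attached and index_attached:
                self.state = GraspState.GRASPED
                self.reference_cm = distance
        elif self.state is GraspState.GRASPED:
            change = distance - self.reference_cm
            if change > self.margin_cm:
                self.state = GraspState.DROPPED
            elif change < -self.margin_cm:
                self.state = GraspState.OVERGRASPED
        # DROPPED and OVERGRASPED are treated as terminal in this sketch.
        return self.state
```

Suppose a grasp is registered at a 5.0 cm sphere separation. It survives opening to 6.0 cm, but becomes `DROPPED` at 6.5 cm because the 1.5 cm increase exceeds the 1.2 cm margin.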

Before data collection, each participant was familiarized with the setup and procedure. Familiarization consisted of 30 trials of grasping virtual and physical objects (five trials

To wash out any effect of sensory feedback (Study 1) or collider size (Study 2) on reach-to-grasp coordination, each participant performed a block of reach-to-grasp movements in PE prior to each hf-VE block. The rendering in the virtual scene showed two spheres, representing the thumb and index fingertips, which were visible to the participant. To make the PE condition comparable with regard to what a participant saw, the room was darkened so that the participants could see only the glow-in-the-dark object and the illuminated IRED markers on their fingertips. Overhead lights were turned on and off (after every five trials) to prevent adaptation to the dark. PE trials were used strictly for washout, and although data were recorded during these trials, the data were neither analyzed nor presented in this manuscript.

2.4 Study 1: Manipulations of Sensory Feedback

Each participant was tested in a single session consisting of 270 trials evenly spread across six blocks of 45 trials, alternating between PE and hf-VE with the first block performed in PE. The participant was given a 2 min break between consecutive blocks. In the three hf-VE blocks, visual (V), auditory (A), or both visual and auditory [audiovisual (AV)] feedback was provided to indicate that the virtual object had been grasped. In the visual condition, the object turned from blue to red. In the auditory condition, the sound of a click (875 Hz, 50 ms duration) was presented. In the audiovisual condition, the object turned from blue to red together with the sound of the click (Figure 1, top) and remained red until the object disappeared or was released/overgrasped. The collider size remained constant (diameter = 0.8 cm) across feedback conditions. The order of feedback conditions was pseudorandomized across participants. Each condition was collected in a single block that contained 45 trials (three object sizes, three object distances, and five trials per size-distance pair). Objects in each block were presented in the same order [small-near (five trials), small-middle (five trials), and small-far (five trials); medium-near (five trials), medium-middle (five trials), and medium-far (five trials); large-near (five trials), large-middle (five trials), and large-far (five trials)]. Each block of virtual grasping was preceded by an identical block of grasping physical objects to wash out possible carryover effects from the previous hf-VE block.
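The block structure above can be generated programmatically, which makes the trial counts easy to check. This is a minimal sketch and the function names are hypothetical. Seeded shuffling stands in for the pseudorandomization scheme, whose exact rules are not described here.

```python
import random
from itertools import product

SIZES = ("small", "medium", "large")
DISTANCES = ("near", "middle", "far")
REPS = 5


def block_trials():
    """45 trials in the fixed order given in the text: size-major, then distance,
    with five consecutive repetitions of each size-distance pair."""
    return [(size, dist) for size, dist in product(SIZES, DISTANCES) for _ in range(REPS)]


def session_schedule(conditions, seed=None):
    """Alternate a PE washout block with each hf-VE block, starting with PE.

    Shuffling with a per-participant seed is an assumed stand-in for the
    paper's (unspecified) pseudorandomization of condition order.
    """
    order = list(conditions)
    random.Random(seed).shuffle(order)
    schedule = []
    for condition in order:
        schedule.append(("PE", None, block_trials()))
        schedule.append(("hf-VE", condition, block_trials()))
    return schedule
```

The same function covers Study 2. Calling `session_schedule([0.2, 0.4, 0.8, 1.2, 1.4])` yields the ten-block, 450-trial layout described in the next section.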

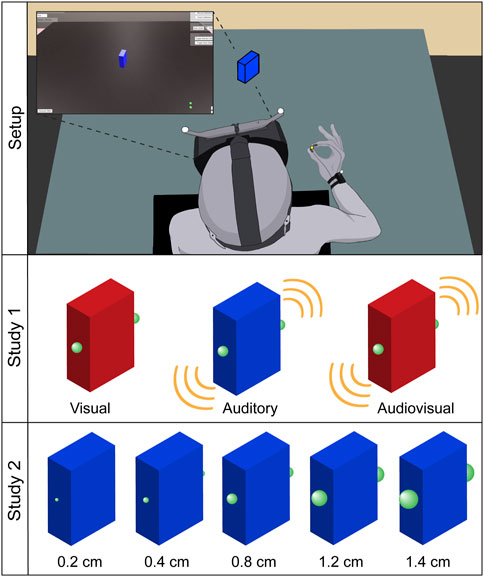

FIGURE 1. Schematic illustration of the experimental setup and procedure. After putting on an HTC Vive™ head-mounted display (HMD), the participants sat on a chair in front of the experimental rig, with their thumb pressing a start switch (indicated in yellow). IRED markers were attached to the participant’s wrist and the tips of the thumb and index finger. An auditory cue (a beep) signaled the participant to reach to grasp the object (small: 3.6 × 2.5 × 8 cm; medium: 5.4 × 2.5 × 8 cm; large: 7.2 × 2.5 × 8 cm), placed at three different distances relative to the switch (near: 24 cm; middle: 30 cm; far: 36 cm). An inset presents the first-person scene that appeared in the HMD. Translucent panels containing text in the visual scene were only visible to the experimenter. In Study 1, participants grasped the object with 0.8 cm colliders, and visual, auditory, or audiovisual feedback was provided to signal that the object had been grasped. In Study 2, audiovisual feedback was provided to signal that the object had been grasped, and participants grasped the object with 0.2, 0.4, 0.8, 1.2, and 1.4 cm colliders. In the middle and bottom panels, the medium object is presented with the accurate scaling relationship between object dimensions and collider size.

2.5 Study 2: Manipulations of Collider Size

Each participant was tested in a single session consisting of 450 trials evenly spread across ten blocks of 45 trials, alternating between PE and hf-VE with the first block performed in PE. The participant was given a 2 min break between consecutive blocks. In the five hf-VE blocks, we manipulated the collider size to be 0.2, 0.4, 0.8, 1.2, or 1.4 cm (Figure 1, bottom). Collider size was constant for all trials within a block. The order in which collider size blocks were presented was pseudorandomized across participants. Each block contained 45 trials (three object sizes, three object distances, and five trials per size-distance pair). Objects in each block were presented in the same order [small-near (five trials), small-middle (five trials), and small-far (five trials); medium-near (five trials), medium-middle (five trials), and medium-far (five trials); large-near (five trials), large-middle (five trials), and large-far (five trials)]. Each block of virtual grasping was preceded by an identical block of grasping physical objects to wash out possible carryover effects from the previous hf-VE block.

2.6 Kinematic Processing

All kinematic data were analyzed offline using custom MATLAB routines (Mathworks Inc., Natick, MA). For each trial, time series data for the planar motion of the markers in the x- and y-coordinates were cropped from movement onset (the moment the switch was released) to movement offset (the moment the collision detection criterion was met). Transport distance (i.e., the straight-line distance of the wrist marker from the starting position in the transverse plane) and aperture (the straight-line distance between the thumb and index finger markers in the transverse plane) trajectories were computed for each trial. The first derivatives of transport distance and aperture were computed to obtain the velocity profiles for kinematic feature extraction. All time series were filtered at 6 Hz using a fourth-order low-pass Butterworth filter. In line with our past data processing protocols, trials in which participants did not move, or lifted their fingers off the start switch without making a goal-directed movement toward the object, were excluded from the analysis. Excluded trials comprised
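The processing steps above were implemented in the authors' MATLAB routines, which are not shown. The Python sketch below illustrates the same steps under several labeled assumptions:

- The sampling rate is 100 Hz. The actual marker sampling rate is not given in this section.
- The "fourth-order" response is obtained by running a second-order Butterworth section forward and then backward. This zero-lag design is one common biomechanics convention. The text does not say whether this or a single-pass fourth-order filter was used.
- The derived distance signals are filtered, not the raw coordinates.

```python
import math

import numpy as np


def butter2_lowpass(fc_hz, fs_hz):
    """(b, a) of a 2nd-order Butterworth low-pass via the bilinear transform."""
    k = math.tan(math.pi * fc_hz / fs_hz)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def _single_pass(x, b, a):
    y = np.empty(len(x))
    # Seed filter memory with the first sample so a constant input stays constant.
    x1 = x2 = y1 = y2 = x[0]
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def lowpass_zero_lag(x, fc_hz=6.0, fs_hz=100.0):
    """Forward-backward pass of the 2nd-order section (assumed design, no phase lag)."""
    b, a = butter2_lowpass(fc_hz, fs_hz)
    forward = _single_pass(np.asarray(x, dtype=float), b, a)
    return _single_pass(forward[::-1], b, a)[::-1]


def transport_and_aperture(wrist_xy, thumb_xy, index_xy, fs_hz=100.0):
    """Planar transport distance and aperture (cm) plus their velocities (cm/s)."""
    wrist = np.asarray(wrist_xy, dtype=float)
    thumb = np.asarray(thumb_xy, dtype=float)
    index = np.asarray(index_xy, dtype=float)
    transport = np.linalg.norm(wrist - wrist[0], axis=1)  # distance from start position
    aperture = np.linalg.norm(thumb - index, axis=1)      # thumb-index distance
    transport = lowpass_zero_lag(transport, 6.0, fs_hz)
    aperture = lowpass_zero_lag(aperture, 6.0, fs_hz)
    dt = 1.0 / fs_hz
    return transport, aperture, np.gradient(transport, dt), np.gradient(aperture, dt)
```

With a dual-pass design the -3 dB point shifts below the nominal 6 Hz. Some labs correct the cutoff for this, and the choice should match whatever the original routines did.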

We also computed the time series for size-normalized aperture. The rationale for this normalization was twofold. First, markers were attached to the dorsum of the digits (on the nail) to avoid interference with grasping. Second, in hf-VE, the relative sizes of the collider and the target object might influence the grasp. For instance, a larger collider might lead to a small object being perceived as disproportionately smaller than a large object. Normalizing peak aperture by object size allowed us to examine any effect of such perceptual discrepancy on the grasp.
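The normalization itself is a simple ratio. The size dependence it is meant to expose can be made concrete with a little geometry. The sketch below assumes, hypothetically, that each sphere is centered on its tracked fingertip and that the object is grasped across its width. Under that assumption, the sphere centers must be at least one object width plus one collider diameter apart when both spheres touch. Object widths come from the Figure 1 caption and collider diameters from Study 2. The function names are illustrative.

```python
# Object widths (cm) from the Figure 1 caption; collider diameters (cm) from Study 2.
OBJECT_WIDTHS_CM = {"small": 3.6, "medium": 5.4, "large": 7.2}
COLLIDER_DIAMETERS_CM = (0.2, 0.4, 0.8, 1.2, 1.4)


def size_normalized_peak_aperture(peak_aperture_cm, object_width_cm):
    """Peak aperture divided by the target object's width (dimensionless)."""
    return peak_aperture_cm / object_width_cm


def contact_separation_cm(object_width_cm, collider_diameter_cm):
    """Center-to-center sphere distance when both spheres just touch opposite faces.

    Hypothetical geometry: each center sits one radius outside a face,
    so the separation is width + 2 * (diameter / 2).
    """
    return object_width_cm + collider_diameter_cm


if __name__ == "__main__":
    for name, width in OBJECT_WIDTHS_CM.items():
        ratios = [contact_separation_cm(width, d) / width for d in COLLIDER_DIAMETERS_CM]
        print(name, [round(r, 3) for r in ratios])
```

Under these assumptions, 1.4 cm colliders must be 5.0 cm apart to touch the small object, about 1.39 times its 3.6 cm width. For the large object they must be 8.6 cm apart, about 1.19 times its 7.2 cm width. A given collider therefore adds proportionally more to small objects. This is arithmetic only, not a claim about the observed behavior.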

For each trial, the following kinematic features, units in parentheses, were extracted using the filtered time series data:

• Movement time (ms): duration from movement onset to movement offset.

• Peak aperture (cm): maximum distance between the fingertip markers. Peak aperture also marked the initiation of closure or closure onset (henceforth, CO), which we refer to as aperture at CO.

• Size-normalized peak aperture: peak aperture normalized by the target object width.

• Time to peak aperture (ms): time from movement onset to peak aperture.

• Closure distance (cm): distance between the wrist’s position at CO and the object’s center.

• Peak transport velocity (cm/s): maximum velocity of the wrist marker.

• Time to peak transport velocity (ms): time from movement onset to maximum velocity of the wrist marker.

• Transport velocity at CO (cm/s): velocity of the wrist marker at the time of CO.
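Each feature in the list above is an index lookup on the processed time series. The sketch below makes several assumptions. The trial has already been cropped from movement onset to offset and filtered, samples are uniformly spaced at `fs_hz`, and the object center is expressed in the same planar coordinates as the wrist marker. The function and dictionary key names are hypothetical.

```python
import numpy as np


def extract_features(wrist_xy, aperture_cm, transport_vel_cm_s,
                     object_center_xy, object_width_cm, fs_hz):
    """Hypothetical per-trial feature extraction from cropped, filtered series.

    Index 0 is movement onset and the last index is movement offset.
    Peak aperture is taken as closure onset (CO), as defined in the text.
    """
    wrist_xy = np.asarray(wrist_xy, dtype=float)
    aperture_cm = np.asarray(aperture_cm, dtype=float)
    vel = np.asarray(transport_vel_cm_s, dtype=float)
    center = np.asarray(object_center_xy, dtype=float)
    ms_per_sample = 1000.0 / fs_hz

    i_co = int(np.argmax(aperture_cm))  # sample of peak aperture = CO
    i_pv = int(np.argmax(vel))          # sample of peak transport velocity

    return {
        "movement_time_ms": (len(aperture_cm) - 1) * ms_per_sample,
        "peak_aperture_cm": float(aperture_cm[i_co]),
        "size_normalized_peak_aperture": float(aperture_cm[i_co] / object_width_cm),
        "time_to_peak_aperture_ms": i_co * ms_per_sample,
        "closure_distance_cm": float(np.linalg.norm(wrist_xy[i_co] - center)),
        "peak_transport_velocity_cm_s": float(vel[i_pv]),
        "time_to_peak_transport_velocity_ms": i_pv * ms_per_sample,
        "transport_velocity_at_co_cm_s": float(vel[i_co]),
    }
```

If the aperture profile has a flat plateau, `np.argmax` returns the first maximal sample. That tie-breaking choice is part of this sketch, not something the text specifies.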

Movement time was used to examine the global effect of condition manipulations on reach-to-grasp movements. Peak aperture, time to peak aperture, and size-normalized peak aperture were used to examine the effect on the grasp component. Likewise, peak transport velocity and time to peak transport velocity were used to examine the effect on the transport component. Finally, time to peak transport velocity and time to peak aperture as well as transport velocity at CO and closure distance were used to examine the effects of task manipulations on reach-to-grasp coordination (Furmanek et al., 2019; Mangalam et al., 2021).

2.7 Statistical Analysis

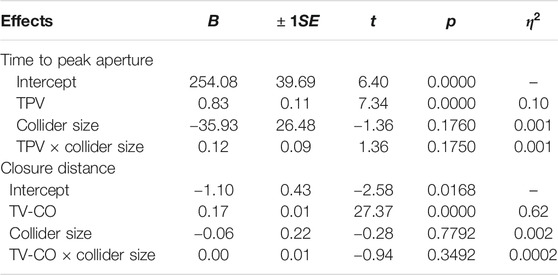

All analyses were initially performed at the trial level to compute means for each subject. Subjects’ means were then submitted to analysis of variance for group-level statistics. 3
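The two-stage approach (trial-level values averaged into one value per subject and condition cell, then an ANOVA on those means) can be sketched as follows. The record layout, with a `subject` key, factor keys, and the variable name, is a hypothetical choice for illustration.

```python
from collections import defaultdict
from statistics import mean


def subject_cell_means(trials, variable, factors):
    """Average one kinematic variable over trials within each subject x condition cell.

    trials: iterable of dicts holding a 'subject' key, every key in `factors`,
            and `variable` (hypothetical record layout).
    Returns {(subject, level_1, ..., level_k): mean_value}.
    """
    cells = defaultdict(list)
    for trial in trials:
        key = (trial["subject"],) + tuple(trial[f] for f in factors)
        cells[key].append(trial[variable])
    return {key: mean(values) for key, values in cells.items()}
```

The result has exactly one value per subject and cell. That is what a repeated-measures ANOVA routine such as statsmodels' `AnovaRM` expects unless it is given an `aggregate_func`.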

We used linear mixed-effects (LME) models to test the relationship between time to peak transport velocity and time to peak aperture and between transport velocity at CO and closure distance, in both Studies 1 and 2. The same LMEs also tested whether and how each relationship was influenced by sensory feedback in Study 1 and collider size in Study 2. In LMEs for Study 1, sensory feedback served as a categorical independent variable with three levels: visual, auditory, and audiovisual. The “visual” feedback served as the reference level. In LMEs for Study 2, collider size served as a continuous independent variable. In each model, participant identity was treated as a random effect. Both models were fit using the lmer() function in the package lme4 (Bates et al., 2014) for R (R Core Team, 2013). Approximate effect sizes for LMEs were computed using the omega_squared() function in the package effectsize (Ben-Shachar et al., 2021) for R. Coefficients were considered significant at the alpha level of 0.05.
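The LME specifications are described above only in words. One plausible reading assumes random intercepts only (no random slopes), and assumes the manipulation enters both as a main effect and as an interaction with the predictor. Under that reading, the temporal-coordination models for observation $i$ from participant $j$ could be written as:

$$
t^{\mathrm{PA}}_{ij} = \beta_0 + \beta_1 t^{\mathrm{PV}}_{ij} + \beta_2 A_{ij} + \beta_3 AV_{ij} + \beta_4\, t^{\mathrm{PV}}_{ij} A_{ij} + \beta_5\, t^{\mathrm{PV}}_{ij} AV_{ij} + u_{0j} + \varepsilon_{ij} \quad \text{(Study 1)}
$$

$$
t^{\mathrm{PA}}_{ij} = \gamma_0 + \gamma_1 t^{\mathrm{PV}}_{ij} + \gamma_2 d_{ij} + \gamma_3\, t^{\mathrm{PV}}_{ij} d_{ij} + u_{0j} + \varepsilon_{ij} \quad \text{(Study 2)}
$$

The symbols are defined as follows:

- $t^{\mathrm{PA}}$ is time to peak aperture and $t^{\mathrm{PV}}$ is time to peak transport velocity.
- $A$ and $AV$ are 0/1 indicators for auditory and audiovisual feedback. Visual feedback is the reference level, so it has no indicator.
- $d$ is collider diameter in cm.
- $u_{0j} \sim \mathcal{N}(0, \sigma_u^2)$ is the participant random intercept, and $\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)$ is the residual.

The spatial-coordination models would take the same form, with closure distance and transport velocity at CO in place of $t^{\mathrm{PA}}$ and $t^{\mathrm{PV}}$. Which of the two serves as the outcome is our assumption. In lme4 formula syntax, with hypothetical column names and `feedback` coded as a factor whose reference level is visual, the Study 1 temporal model corresponds to `tPA ~ tPV * feedback + (1 | participant)`.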

3 Results

3.1 Study 1: Effects of Sensory Feedback on Reach-to-Grasp Movements

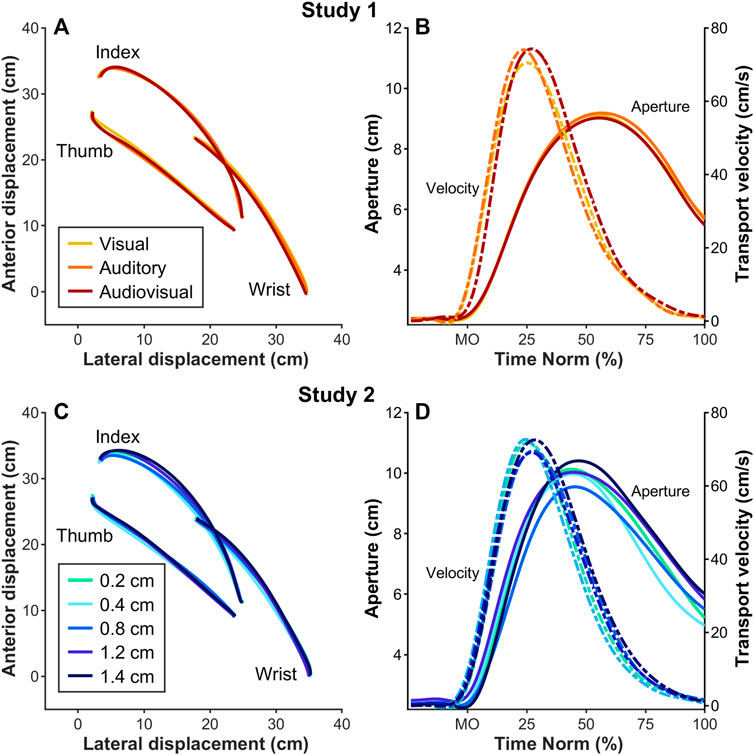

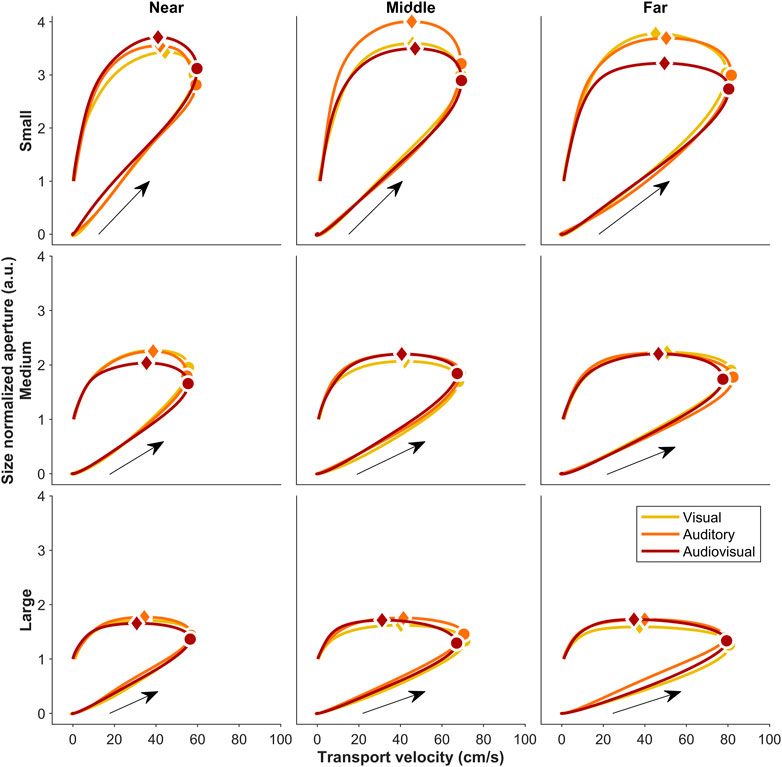

Figure 2A shows the trajectories of the mean 2D position of the wrist, thumb, and index finger corresponding to each sensory feedback condition for a representative participant (averaged across all trials) for the medium object placed at the middle distance. Figure 2B shows the mean transport velocity and aperture profiles obtained from the trajectories shown in Figure 2A. Notice that, in both figures, the curves for the three feedback conditions overlap completely, indicating that sensory feedback affected neither the wrist, thumb, and index finger trajectories nor the transport velocity and aperture profiles. Figure 3 shows the phase relationship between transport velocity and size-normalized aperture (Furmanek et al., 2019). The almost invariant locations of peak transport velocity and peak aperture, which mark the onset of the shaping phase and the closure phase, respectively, indicate that this phase relationship did not vary across feedback conditions.

FIGURE 2. Mean trajectories for a representative participant showing reach-to-grasp kinematics for different sensory feedback conditions and collider sizes. Study 1. (A) Marker trajectories for the wrist, thumb, and index finger across different conditions of sensory feedback. (B) Time-normalized aperture (solid lines) and transport velocity (dash-dotted lines) profiles across different conditions of sensory feedback. Study 2. (C) Marker trajectories for the wrist, thumb, and index finger across different collider sizes. (D) Time-normalized aperture (solid lines) and transport velocity (dash-dotted lines) profiles across different collider sizes.

FIGURE 3. Study 1. Phase plots of size-normalized aperture vs. transport velocity for each condition of sensory feedback for a representative participant. Diamonds and circles indicate size-normalized peak aperture and peak transport velocity, respectively. Black arrows indicate the progression of reach-to-grasp movement.

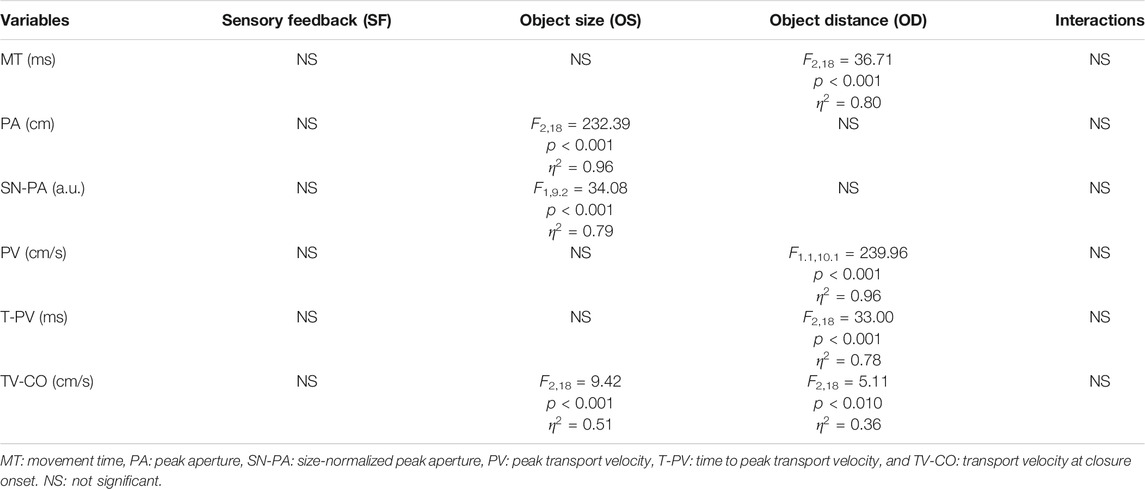

An rm-ANOVA revealed that movement time did not differ among the three types of sensory feedback (p

TABLE 1. Outcomes of 3 × 3 × 3 rm-ANOVAs examining the effects of sensory feedback (visual, auditory, and audiovisual), object size (small, medium, and large), and object distance (near, middle, and far) on each kinematic variable in Study 1.

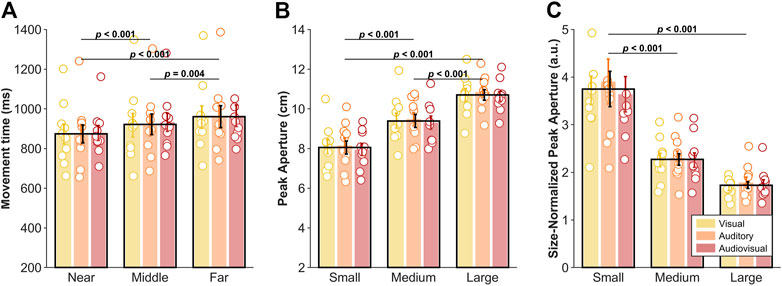

FIGURE 4. Study 1. Effects of (A) object distance on movement time, (B) object size on peak aperture, and (C) object size on size-normalized peak aperture. Error bars indicate ±1 SEM (n = 10). Data were calculated across all levels of sensory feedback for each participant.

Neither sensory feedback nor object distance affected any kinematic variable related to the grasp component: peak aperture and size-normalized peak aperture (p

Sensory feedback did not affect any variable related to the transport component: peak transport velocity, time to peak transport velocity, and transport velocity at CO (p

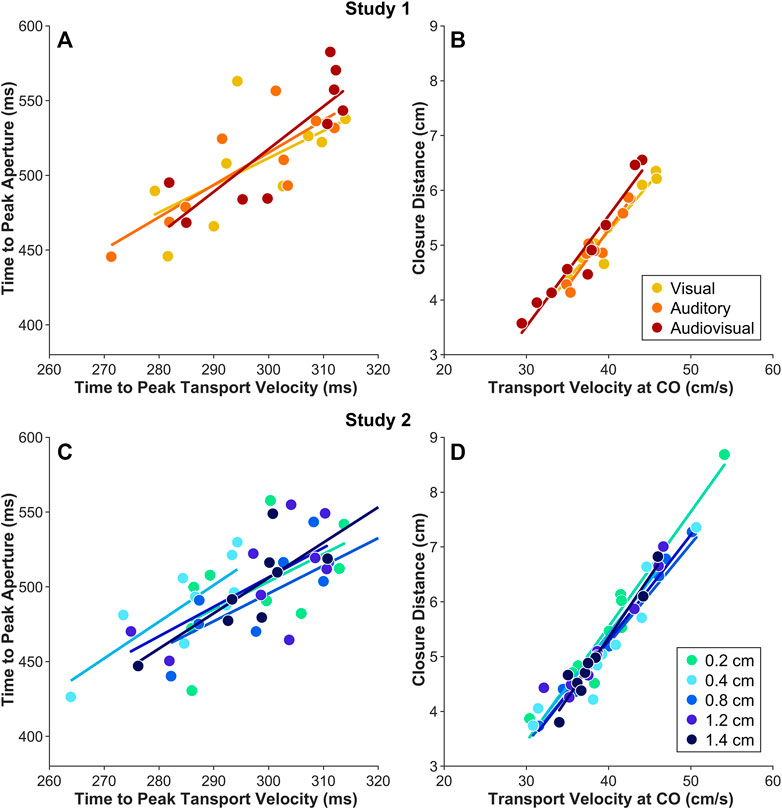

To investigate whether reach-to-grasp coordination was influenced by visual, auditory, and audiovisual feedback, LMEs were used to test the relationship between time to peak transport velocity and time to peak aperture and between transport velocity at CO and closure distance, and how these relationships were influenced by sensory feedback. Time to peak aperture increased with time to peak transport velocity (B

In summary, these results confirm the known effects of object size and object distance on variables related to the aperture and transport components, respectively (Paulignan et al., 1991a; Paulignan et al., 1991b). However, all three types of sensory feedback (visual, auditory, and audiovisual) were equally effective in supporting successful reach-to-grasp.

3.2 Study 2: Effects of Collider Size on Reach-to-Grasp Movements

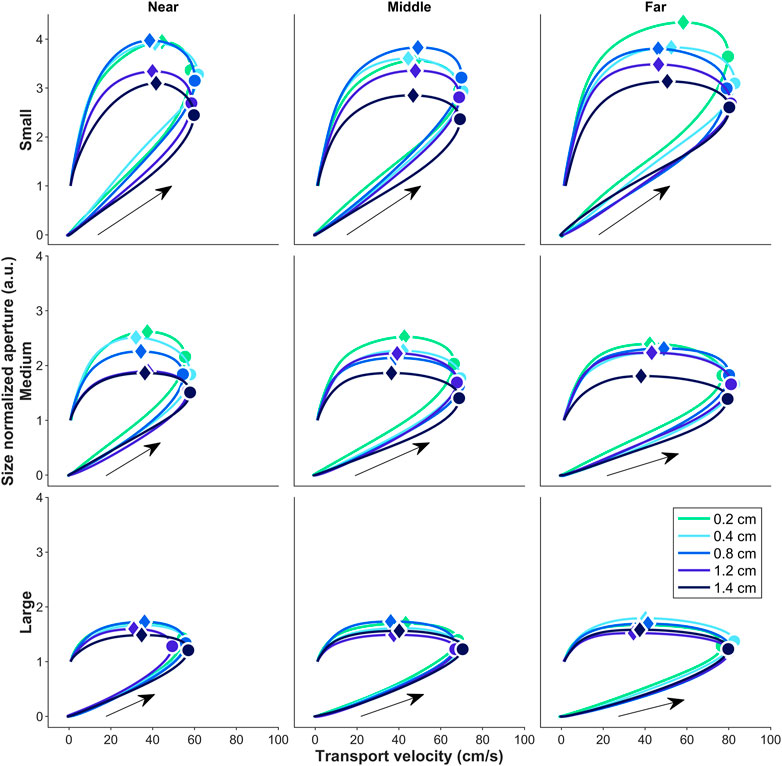

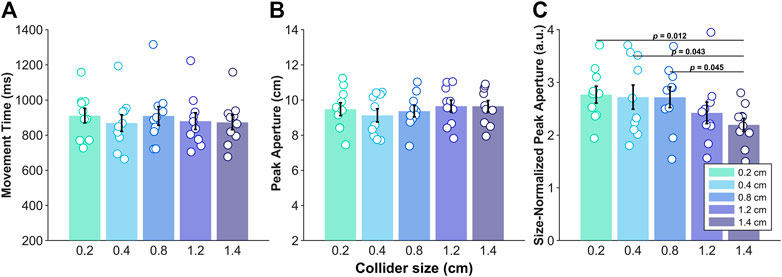

Figure 2C shows the trajectories of the mean 2D position of the wrist, thumb, and index finger corresponding to each collider size condition for a representative participant (averaged across all trials) for the medium object placed at the middle distance. Figure 2D shows the mean transport velocity and aperture profiles obtained from the trajectories shown in Figure 2C. Notice that, in both figures, the curves for the five collider sizes show noticeable differences. Figure 5 shows the phase relationship between transport velocity and size-normalized aperture. Notice that size-normalized peak aperture decreases with increasing collider size, disproportionately more for smaller and more distant objects, but that it occurs at about the same transport velocity.

FIGURE 5. Study 2. Phase plots of size-normalized aperture vs. transport velocity for each collider size for a representative participant. Diamonds and circles indicate size-normalized peak aperture and peak transport velocity, respectively. Black arrows indicate the progression of reach-to-grasp movement.

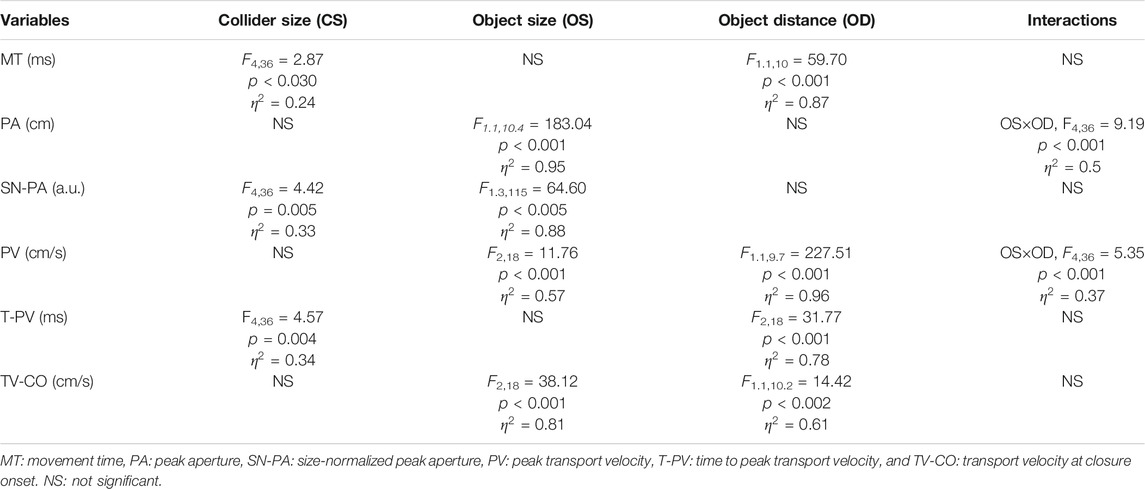

An rm-ANOVA revealed a significant main effect of collider size on movement time (F4,36

TABLE 3. Outcomes of 5 × 3 × 3 rm-ANOVAs examining the effects of collider size (0.2, 0.4, 0.8, 1.2, and 1.4 cm), object size (small, medium, and large), and object distance (near, middle, and far) on each kinematic variable in Study 2.

FIGURE 6. Study 2. Effects of collider size on (A) movement time, (B) peak aperture, and (C) size-normalized peak aperture. Error bars indicate

Neither collider size nor object distance affected peak aperture (p

Size-normalized peak aperture differed across collider sizes (F4, 36

The only significant main effect of collider size was observed on time to peak transport velocity (F4, 36

To investigate whether reach-to-grasp coordination was influenced by collider size, LMEs were used to test the relationship between time to peak transport velocity and time to peak aperture and between transport velocity at CO and closure distance, and how these relationships were influenced by collider size. Time to peak aperture increased with time to peak transport velocity (B

FIGURE 7. Effects of sensory feedback (Study 1, A & B) and collider size (Study 2, C & D) on reach-to-grasp coordination. (A,C) Temporal coordination: time to peak transport velocity vs. time to peak aperture. (B,D) Spatial coordination: transport velocity at CO vs. closure distance. Manipulation of sensory feedback and collider size did not alter reach-to-grasp coordination, indicating that the state-of-the-art hf-VE can support stable reach-to-grasp movement coordination patterns.

In summary, these results further confirm the known effects of object size and object distance on variables related to the aperture and transport components, respectively (Paulignan et al., 1991a; Paulignan et al., 1991b). Most importantly, we show that collider size also affects properties of the grasp relative to the object; specifically, a larger collider prompts a proportionally smaller aperture. Nonetheless, collider size appears to have no bearing on reach-to-grasp coordination.

4 Discussion

We investigated the effects of sensory feedback mode (Study 1) and collider size (Study 2) on the coordination of reach-to-grasp movements in hf-VE. Contrary to our expectation (H1), visual, auditory, and audiovisual feedback did not differentially affect key features of reach-to-grasp kinematics in the absence of terminal haptic feedback. In Study 2, larger colliders led to a smaller size-normalized peak aperture (H2), suggesting a possible influence of spherical collider size on the perception of virtual object size and on motor planning of reach-to-grasp. Critically, reach-to-grasp spatiotemporal coordination patterns were robust to manipulations of both the sensory modality used for haptic sensory substitution and spherical collider size.

4.1 Manipulations of Sensory Substitution

In Study 1, we did not observe any changes in transport and aperture kinematics or in reach-to-grasp coordination as a function of the type of sensory substitution (visual, auditory, or audiovisual) provided to indicate that the object had been grasped in the absence of haptic feedback about object properties. Our data did confirm the known effects of object size and object distance on variables related to the aperture and transport components, respectively (Paulignan et al., 1991a; Paulignan et al., 1991b). This indicates that variation in reach-to-grasp patterns with respect to object properties in our hf-VE is comparable to that found in the real world, as previously reported by Furmanek et al. (2019).

While many studies have explored the role of sensory substitution of haptic feedback in VR (Sikström et al., 2016; Cooper et al., 2018), few have investigated it specifically in the context of reach-to-grasp movements. One study that used simple spherical colliders for grasping reported shorter movement times when sensory substitution for haptic feedback was provided with audio or audiovisual cues than with visual cues or no cue that the object had been grasped (Zahariev and MacKenzie, 2007). Our finding that movement kinematics did not differ across haptic sensory substitution conditions does not support these past findings. However, the difference in outcomes may be explained, in part, by the VR technology used. For example, in Zahariev and MacKenzie (2007), participants grasped mirror reflections of computer-generated projections of objects. Such setups render objects with lower fidelity than is typical of HMD-VR and might increase the salience of auditory feedback.

In a more recent study using HMD-VR, participants performed reach-to-grasp movements as part of a pick-and-place task. They completed these movements in less time with visual than with auditory sensory substitution but, interestingly, indicated a preference for auditory cues that the object had been grasped (Canales and Jörg, 2020). Notably, differences between audio, visual, and audiovisual feedback were small. Because reach-to-grasp kinematics were not reported, it was not possible to determine why movements were slower with audio feedback. In an immersive hf-VE like ours, participants might not have needed to rely on one sensory modality over another and hence did not show differences in reach-to-grasp coordination across visual, auditory, and audiovisual feedback. Furthermore, the absence of differences in movement kinematics and spatiotemporal reach-to-grasp coordination (Figures 7A,B) suggests that, in a high-fidelity VR environment, the choice of modality for sensory substitution of haptic feedback may have relatively little bearing on behavior. We speculate that, with high-fidelity feedback of the hand-object interaction, visual feedback of the hand-object collision may govern behavior, rather than explicit feedback in the form of overt sensory substitution.

The finding that visual information may be sufficient for haptic-free grasping agrees with research on haptic-free robotic surgical systems. For instance, Meccariello et al. (2016) showed that, when only visual information was available, experienced surgeons performed conventional suturing faster and more accurately than nonexperts. It has been proposed that experienced surgeons may create a perception of haptic feedback during haptic-free robotic surgery based on visual information and previously learned haptic sensations (Hagen et al., 2008). This suggests that haptic feedback may be needed during skill acquisition but not for well-practiced movements.

Another parsimonious explanation for the absence of between-condition differences in grasp kinematics across sensory feedback types relates to the study design. Unlike Zahariev and MacKenzie (2007, 2008), who randomized the order of object size trials, our participants performed reach-to-grasp actions to each object in a blocked manner (i.e., all trials for each object size-distance pair were completed consecutively within each block). Thus, in our study, participants’ prior experience (specifically, the proprioceptively perceived final aperture) might have made reliance on explicit feedback of grasp less necessary. Indeed, the calibration of the current reach-to-grasp movement based on past movements is well documented (Gentilucci et al., 1995; Säfström and Edin, 2004; Säfström and Edin, 2005; Bingham et al., 2007; Mon-Williams and Bingham, 2007; Coats et al., 2008; Säfström and Edin, 2008; Foster et al., 2011). Finally, the availability of continuous online feedback of the target object and colliders might also have reduced reliance on explicit sensory feedback (Zahariev and MacKenzie, 2007; Zahariev and MacKenzie, 2008; Volcic and Domini, 2014). The present study was not designed to test this hypothesis. Future work could explicitly investigate whether reliance on different modalities of terminal sensory feedback is stronger in a randomized design, in which anticipation and planning are less dependable.
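The difference between blocked and randomized trial orders can be made concrete by counting how often a trial repeats the object of the preceding trial. The sketch below assumes a 3 × 3 grid of object sizes and distances and an arbitrary number of trials per pair (`n_reps`, hypothetical). It generates both schedules. Shuffling the order of blocks in the blocked schedule is also an assumption, not a detail reported here. The helper names are hypothetical.

```python
import itertools
import random


def make_schedule(sizes, distances, n_reps, blocked, seed=None):
    """Return a list of (size, distance) trials (hypothetical helper).

    blocked=True: all n_reps trials for each pair run consecutively, with the
    order of blocks shuffled (assumption). blocked=False: fully randomized.
    """
    rng = random.Random(seed)
    pairs = list(itertools.product(sizes, distances))
    if blocked:
        rng.shuffle(pairs)
        return [p for p in pairs for _ in range(n_reps)]
    trials = [p for p in pairs for _ in range(n_reps)]
    rng.shuffle(trials)
    return trials


def repeat_rate(trials):
    """Fraction of trials whose object matches the previous trial's object."""
    same = sum(a == b for a, b in zip(trials, trials[1:]))
    return same / (len(trials) - 1)


sizes, distances = ["small", "medium", "large"], ["near", "middle", "far"]
blocked = make_schedule(sizes, distances, n_reps=10, blocked=True, seed=0)
randomized = make_schedule(sizes, distances, n_reps=10, blocked=False, seed=0)
print(round(repeat_rate(blocked), 3), round(repeat_rate(randomized), 3))
```

With 10 trials per pair, the blocked schedule repeats the previous object on 81 of 89 transitions. The randomized schedule does so only about one time in ten. This illustrates why proprioceptive memory of the previous grasp is far more informative in a blocked design.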

4.2 Manipulations of Collider Size

In Study 2, there was a significant main effect of collider size on movement time, time to peak transport velocity, and size-normalized peak aperture, indicating that collider size modified key features of the reach-to-grasp movement. It is likely that collider size altered the perception of object size (i.e., an object might be perceived as smaller when grasped with a larger collider) and that this altered perception might have affected the planning of reach-to-grasp movements. Indeed, previous studies have shown that the hand avatar may act as a metric to scale intrinsic object properties such as object size (Linkenauger et al., 2011; Linkenauger et al., 2013; Ogawa et al., 2017; Ogawa et al., 2018; Lin et al., 2019). Interestingly, Ogawa et al. (2017) found that perception of object size was affected by the realism of the avatar. A biological avatar had a greater effect on object size perception than an abstract avatar such as the one used in our study. However, in that study, participants did not grasp the object; the task was simply to carry a virtual cube on the avatar's open palm. It may therefore be that the effect of avatar size on perception is mediated by task requirements. Using avatar size to scale intrinsic object properties may also be more sensitive when the avatar is used to actually grasp the object. One caveat to our finding is that a collider size by object size interaction was not observed. If collider size caused a linear scaling of the perception of object size, a collider size by object size interaction would be expected, because the change in the ratio of collider size to object size differs across object sizes.
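The interaction argument rests on simple arithmetic. The sketch below uses the collider sizes listed in Table 3, with units assumed here to be centimeters because they are not stated in this section. It pairs them with three hypothetical object sizes and computes how much the collider-to-object ratio changes between the smallest and largest collider.

```python
# Collider diameters from Table 3; units are ASSUMED to be cm here.
COLLIDER_SIZES = [0.2, 0.4, 0.8, 1.2, 1.4]
# Hypothetical object sizes (cm), for illustration only.
OBJECT_SIZES = {"small": 4.0, "medium": 6.0, "large": 8.0}


def ratio_change(collider_sizes, object_size):
    """Change in the collider-to-object-size ratio from the smallest to the
    largest collider."""
    return (max(collider_sizes) - min(collider_sizes)) / object_size


for name, size in OBJECT_SIZES.items():
    lo = min(COLLIDER_SIZES) / size
    hi = max(COLLIDER_SIZES) / size
    print(f"{name}: ratio {lo:.3f} -> {hi:.3f}, "
          f"change {ratio_change(COLLIDER_SIZES, size):.3f}")
```

With these illustrative sizes, the same collider manipulation changes the ratio twice as much for the 4-cm object as for the 8-cm object. A purely ratio-based linear scaling would therefore predict a collider effect that depends on object size, which is the interaction that was not observed.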
Hand size manipulations do not affect the perceived size of objects that are too big to be grasped, suggesting that hand size may only be used as a scaling mechanism when the object affords the relevant action, in this case, grasping (Linkenauger et al., 2011), providing further evidence of nonlinearities in the use of the hand avatar as a “perceptual ruler.” Therefore, our findings indicate that either the scaling of perception of object size by collider size is nonlinear or the changes we observed arise from different explicit strategies for different colliders independent of perception. Future research will test these competing hypotheses.

Assuming that collider size did in fact influence the perception of object size, the size of the colliders might similarly have altered the perceptual scaling of object distance. This interpretation offers a possible explanation for the significant main effect of collider size on time to peak transport velocity. However, the ratio of collider size to object distance was much smaller than the ratio of collider size to object size. We therefore think that perceptual effects on distance were probably negligible, at least relative to the perceptual effects on object size. We instead offer an alternative explanation for the scaling of time to peak transport velocity and the associated movement time with collider size. If collider size affected the planning of aperture overshoot, as evidenced by the main effect of collider size on size-normalized peak aperture, then this was presumably also incorporated into the planning of transport to maintain the spatiotemporal coordination of reach-to-grasp. Our data suggest that this may be the case. Collider size influenced neither the temporal aspect of coordination (the relationship between time to peak transport velocity and time to peak aperture) nor the spatial aspect (the relationship between transport velocity at CO and closure distance) (Figures 7C,D).

Regardless of whether the effects of the colliders on aperture profiles were perceptual or strategic, we surmise that these effects were present from the beginning of the movement, so that the coordination of the reach and grasp components was not disrupted. We have observed the preservation of reach-to-grasp coordination as a primary goal of reach-to-grasp movements in our previous work (Furmanek et al., 2019; Mangalam et al., 2021). The blocked nature of our design likely facilitated the described effect on planning; however, we do not believe that proprioceptive memory had a large influence on the effects observed in Study 2. Had proprioceptive memory influenced behavior, its influence would presumably be equal across all collider sizes and therefore could not explain behavioral differences across collider sizes. Future research should test whether these observations hold when object size and distance are randomized.

Our result that larger colliders led to a smaller size-normalized peak aperture can also be framed using the equilibrium-point hypothesis (EPH) (Feldman, 1986). In this framework, the peak aperture at a location near the object may be considered a key point in the referent trajectory driving the limb and finger movements (Weiss and Jeannerod, 1998). There is evidence that the referent configuration for a reach-to-grasp action is specified according to object shape, location, and orientation, and that it is defined by a position-dimensional variable, the threshold muscle length (Yang and Feldman, 2010). It is therefore possible that collider size also influences the referent configuration. One possibility is that collider size influences the perceived force needed to grasp the object (Pilon et al., 2007), even though the virtual object has no physical properties. Future studies could be designed specifically to test this hypothesis in hf-VE.

4.3 Limitations

Our studies had several limitations. Data were collected from only ten participants, which limits the generalizability of our findings and increases the risk of type II error for outcome measures with small effect sizes. The sample included only three female participants, making it difficult to determine whether there are sex-dependent differences in reach-to-grasp performance. This is particularly relevant in light of recent evidence that VR may be experienced differently by male and female participants (Munafo et al., 2017; Curry et al., 2020). We used a simple hand avatar consisting of spheres representing only the tips of the thumb and index finger, and the results of this study may not extrapolate to more anthropomorphic avatars. Our VE was simple, comprising only the table, the object to be grasped, and the hand avatar. Use of the hand avatar as a “perceptual ruler” for objects in the scene may differ in richer environments, especially those containing objects with strong connotations of their size (e.g., a soda can). Finally, the degree of stereopsis, presence, and immersion and symptoms of cybersickness were not recorded; therefore, the influence of these factors on individual participant behavior is unknown.
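The type II error concern can be quantified. The sketch below estimates, by Monte Carlo simulation, the power of a two-sided paired t-test with ten participants for a few hypothetical standardized effect sizes (Cohen's d_z). It is a simplified stand-in: it models a single paired contrast rather than the rm-ANOVAs and LMEs used in these studies. The critical value t(9) = 2.262 for α = 0.05 is hard-coded. The function name `paired_power` is hypothetical.

```python
import numpy as np

N_PARTICIPANTS = 10
T_CRIT = 2.262  # two-sided alpha = 0.05 critical t for df = N_PARTICIPANTS - 1 = 9


def paired_power(d_z, n_sims=20000, seed=0):
    """Monte Carlo power of a two-sided paired t-test with 10 participants.

    d_z: hypothetical standardized mean of within-participant differences.
    """
    rng = np.random.default_rng(seed)
    diffs = rng.normal(loc=d_z, scale=1.0, size=(n_sims, N_PARTICIPANTS))
    t = diffs.mean(axis=1) / (diffs.std(axis=1, ddof=1) / np.sqrt(N_PARTICIPANTS))
    return float(np.mean(np.abs(t) > T_CRIT))


for d in (0.0, 0.2, 0.5, 0.8, 1.2):
    print(f"d_z = {d:.1f}: power ~ {paired_power(d):.2f}")
```

With d_z = 0, the rejection rate approximates the nominal α. For small effects, power with ten participants stays well below conventional targets, which is why null results for such measures should be interpreted cautiously.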

5 Concluding Remarks

Together, the results of our studies suggest that spatiotemporal coordination of reach-to-grasp in a high-fidelity immersive hf-VE is robust to two factors. The first is the sensory modality (visual, auditory, or audiovisual) used to substitute for the absence of haptic feedback. The second is the size of the avatar that represents the fingertips. Avatar size may modify the magnitude of size-normalized peak aperture in hf-VE when spheres represent the fingertips, but this change did not affect spatiotemporal coordination between the reach and grasp components of the movement. We suggest that the modulation of aperture associated with avatar size may be rooted in the use of the avatar as a “perceptual ruler” for intrinsic properties of virtual objects. These results have implications for commercial and clinical use of hf-VE and should be evaluated in relation to technological limitations of the VR system (i.e., tracking accuracy, update rate, and display latency) (Stanney, 2002). Specifically, when VR is used for manual skill training or neurorehabilitation (Adamovich et al., 2005; Adamovich et al., 2009; Massetti et al., 2018), future work should consider the implications of avatar size for the transfer of learning from the VE to the real world, especially in populations with deficits in multisensory integration.
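Display latency can be expressed in the same units as the colliders. The back-of-the-envelope sketch below assumes that the rendered fingertip lags the real one by hand speed × end-to-end latency. This simplification ignores any prediction or filtering in the tracking pipeline. The hand speeds and latencies are hypothetical. The collider diameters are the Table 3 values, with units assumed to be centimeters. The function name `render_lag_cm` is hypothetical.

```python
def render_lag_cm(speed_cm_per_s, latency_ms):
    """Distance the real hand travels before the display catches up
    (simplified constant-velocity model, hypothetical helper)."""
    return speed_cm_per_s * latency_ms / 1000.0


collider_diameters_cm = [0.2, 0.4, 0.8, 1.2, 1.4]  # Table 3 values; units assumed
for speed in (10.0, 50.0, 100.0):                  # hypothetical hand speeds, cm/s
    for latency in (10.0, 20.0):                    # hypothetical latencies, ms
        lag = render_lag_cm(speed, latency)
        n_smaller = sum(d < lag for d in collider_diameters_cm)
        print(f"{speed:>5.0f} cm/s, {latency:.0f} ms: lag {lag:.2f} cm, "
              f"exceeds {n_smaller} of 5 collider diameters")
```

Under these assumptions, a fast movement with modest latency can produce a positional lag comparable to or larger than the smaller collider diameters. This is one reason avatar-size effects should be interpreted alongside the tracking and display characteristics of a given system.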

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation. Please contact the corresponding author by e-mail.

Ethics Statement

The studies involving human participants were reviewed and approved by the Institutional Review Board (IRB) at Northeastern University. The participants provided their written informed consent to participate in this study.

Author Contributions

MF, MM, MY, and ET conceived and designed research; MF and AS performed experiments; MF, MM, KL, AS, and MY analyzed data; MF, MM, KL, MY, and ET interpreted results of experiments; MF prepared figures; MF, MY, and ET drafted manuscript; MF, MM, KL, AS, MY, and ET edited and revised manuscript; MF, MM, KL, AS, MY, and ET approved the final version of the manuscript.

Funding

This work was supported in part by NIH-2R01NS085122 (ET), NIH-2R01HD058301 (ET), NSF-CBET-1804540 (ET), NSF-CBET-1804550 (ET), and NSF-CMMI-M3X-1935337 (ET, MY).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors thank Alex Huntoon and Samuel Berin for programming the VR platform used for this study.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.648529/full#supplementary-material

References

Adamovich, S. V., Fluet, G. G., Tunik, E., and Merians, A. S. (2009). Sensorimotor Training in Virtual Reality: a Review. NeuroRehabilitation 25, 29–44. doi:10.3233/nre-2009-0497

Adamovich, S. V., Merians, A. S., Boian, R., Lewis, J. A., Tremaine, M., Burdea, G. S., et al. (2005). A Virtual Reality-Based Exercise System for Hand Rehabilitation Post-Stroke. Presence: Teleoperators & Virtual Environments 14, 161–174. doi:10.1162/1054746053966996

Argelaguet, F., Hoyet, L., Trico, M., and Lécuyer, A. (2016). The Role of Interaction in Virtual Embodiment: Effects of the Virtual Hand Representation, 2016 IEEE Virtual Reality (VR) (IEEE), 19-23 March 2016, Greenville, SC, USA, 3–10. doi:10.1109/vr.2016.7504682

Barrett, J. (2004). Side Effects of Virtual Environments: A Review of the Literature. Command and control division information sciences laboratory. Defense science and technology organization Canberra, Australia, May 2004.

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2014). Fitting Linear Mixed-Effects Models Using lme4. arXiv preprint arXiv:1406.5823.

Bingham, G., Coats, R., and Mon-Williams, M. (2007). Natural Prehension in Trials without Haptic Feedback but Only when Calibration Is Allowed. Neuropsychologia 45, 288–294. doi:10.1016/j.neuropsychologia.2006.07.011

Bingham, G. P., and Mon-Williams, M. A. (2013). The Dynamics of Sensorimotor Calibration in Reaching-To-Grasp Movements. J. Neurophysiol. 110, 2857–2862. doi:10.1152/jn.00112.2013

Bozzacchi, C., Brenner, E., Smeets, J. B., Volcic, R., and Domini, F. (2018). How Removing Visual Information Affects Grasping Movements. Exp. Brain Res. 236, 985–995. doi:10.1007/s00221-018-5186-6

Bozzacchi, C., Volcic, R., and Domini, F. (2014). Effect of Visual and Haptic Feedback on Grasping Movements. J. Neurophysiol. 112, 3189–3196. doi:10.1152/jn.00439.2014

Bozzacchi, C., Volcic, R., and Domini, F. (2016). Grasping in Absence of Feedback: Systematic Biases Endure Extensive Training. Exp. Brain Res. 234, 255–265. doi:10.1007/s00221-015-4456-9

Canales, R., and Jörg, S. (2020). Performance Is Not Everything: Audio Feedback Preferred over Visual Feedback for Grasping Task in Virtual Reality. In MIG '20: Motion, Interaction and Games. 16–18 October 2020, Virtual Event, SC, USA. doi:10.1145/3424636.3426897

Castiello, U., Giordano, B. L., Begliomini, C., Ansuini, C., and Grassi, M. (2010). When Ears Drive Hands: the Influence of Contact Sound on Reaching to Grasp. PloS one 5, e12240. doi:10.1371/journal.pone.0012240

Castiello, U. (2005). The Neuroscience of Grasping. Nat. Rev. Neurosci. 6, 726–736. doi:10.1038/nrn1744

Coats, R., Bingham, G. P., and Mon-Williams, M. (2008). Calibrating Grasp Size and Reach Distance: Interactions Reveal Integral Organization of Reaching-To-Grasp Movements. Exp. Brain Res. 189, 211–220. doi:10.1007/s00221-008-1418-5

Cooper, N., Milella, F., Pinto, C., Cant, I., White, M., and Meyer, G. (2018). The Effects of Substitute Multisensory Feedback on Task Performance and the Sense of Presence in a Virtual Reality Environment. PloS one 13, e0191846. doi:10.1371/journal.pone.0191846

Culham, J. C., Cavina-Pratesi, C., and Singhal, A. (2006). The Role of Parietal Cortex in Visuomotor Control: what Have We Learned from Neuroimaging? Neuropsychologia 44, 2668–2684. doi:10.1016/j.neuropsychologia.2005.11.003

Curry, C., Li, R., Peterson, N., and Stoffregen, T. A. (2020). Cybersickness in Virtual Reality Head-Mounted Displays: Examining the Influence of Sex Differences and Vehicle Control. Int. J. Human-Computer Interaction 36, 1161–1167. doi:10.1080/10447318.2020.1726108

Dubrowski, A., Bock, O., Carnahan, H., and Jüngling, S. (2002). The Coordination of Hand Transport and Grasp Formation during Single- and Double-Perturbed Human Prehension Movements. Exp. Brain Res. 145, 365–371. doi:10.1007/s00221-002-1120-y

Feldman, A. G. (1986). Once More on the Equilibrium-Point Hypothesis (λ Model) for Motor Control. J. Mot. Behav. 18, 17–54. doi:10.1080/00222895.1986.10735369

Foster, R., Fantoni, C., Caudek, C., and Domini, F. (2011). Integration of Disparity and Velocity Information for Haptic and Perceptual Judgments of Object Depth. Acta Psychologica 136, 300–310. doi:10.1016/j.actpsy.2010.12.003

Furmanek, M. P., Schettino, L. F., Yarossi, M., Kirkman, S., Adamovich, S. V., and Tunik, E. (2019). Coordination of Reach-To-Grasp in Physical and Haptic-free Virtual Environments. J. Neuroengineering Rehabil. 16, 78. doi:10.1186/s12984-019-0525-9

Gentilucci, M., Daprati, E., Toni, I., Chieffi, S., and Saetti, M. C. (1995). Unconscious Updating of Grasp Motor Program. Exp. Brain Res. 105, 291–303. doi:10.1007/BF00240965

Gentilucci, M., Chieffi, S., Scarpa, M., and Castiello, U. (1992). Temporal Coupling between Transport and Grasp Components during Prehension Movements: Effects of Visual Perturbation. Behav. Brain Res. 47, 71–82. doi:10.1016/s0166-4328(05)80253-0

Groen, J., and Werkhoven, P. J. (1998). Visuomotor Adaptation to Virtual Hand Position in Interactive Virtual Environments. Presence 7, 429–446. doi:10.1162/105474698565839

Hagen, M. E., Meehan, J. J., Inan, I., and Morel, P. (2008). Visual Clues Act as a Substitute for Haptic Feedback in Robotic Surgery. Surg. Endosc. 22, 1505–1508. doi:10.1007/s00464-007-9683-0

Haggard, P., and Wing, A. (1995). Coordinated Responses Following Mechanical Perturbation of the Arm during Prehension. Exp. Brain Res. 102, 483–494. doi:10.1007/BF00230652

Haggard, P., and Wing, A. M. (1991). Remote Responses to Perturbation in Human Prehension. Neurosci. Lett. 122, 103–108. doi:10.1016/0304-3940(91)90204-7

Hosang, S., Chan, J., Davarpanah Jazi, S., and Heath, M. (2016). Grasping a 2D Object: Terminal Haptic Feedback Supports an Absolute Visuo-Haptic Calibration. Exp. Brain Res. 234, 945–954. doi:10.1007/s00221-015-4521-4

Jeannerod, M. (1981). Intersegmental Coordination during Reaching at Natural Visual Objects. In Attention and Performance IX, eds. J. Long and A. Baddeley (Hillsdale, NJ: Erlbaum), 153–169.

Jeannerod, M. (1984). The Timing of Natural Prehension Movements. J. Mot. Behav. 16, 235–254. doi:10.1080/00222895.1984.10735319

Lin, L., Normoyle, A., Adkins, A., Sun, Y., Robb, A., Ye, Y., et al. (2019). The Effect of Hand Size and Interaction Modality on the Virtual Hand Illusion. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (IEEE). 23-27 March 2019, Osaka, Japan, 510–518. doi:10.1109/vr.2019.8797787

Linkenauger, S. A., Leyrer, M., Bülthoff, H. H., and Mohler, B. J. (2013). Welcome to Wonderland: The Influence of the Size and Shape of a Virtual Hand on the Perceived Size and Shape of Virtual Objects. PloS one 8, e68594. doi:10.1371/journal.pone.0068594

Linkenauger, S. A., Witt, J. K., and Proffitt, D. R. (2011). Taking a Hands-On Approach: Apparent Grasping Ability Scales the Perception of Object Size. J. Exp. Psychol. Hum. Perception Perform. 37, 1432–1441. doi:10.1037/a0024248

Liu, H., Zhang, Z., Xie, X., Zhu, Y., Liu, Y., Wang, Y., et al. (2019). High-fidelity Grasping in Virtual Reality Using a Glove-Based System. In 2019 International Conference on Robotics and Automation (ICRA) (IEEE). 20-24 May 2019, Montreal, QC, Canada, 5180–5186. doi:10.1109/icra.2019.8794230

Lok, B., Naik, S., Whitton, M., and Brooks, F. P. (2003). Effects of Handling Real Objects and Self-Avatar Fidelity on Cognitive Task Performance and Sense of Presence in Virtual Environments. Presence: Teleoperators & Virtual Environments 12, 615–628. doi:10.1162/105474603322955914

Luckett, E. (2018). A Quantitative Evaluation of the HTC Vive for Virtual Reality Research. Ph.D. thesis. Oxford, MS: The University of Mississippi.

Mangalam, M., Yarossi, M., Furmanek, M. P., and Tunik, E. (2021). Control of Aperture Closure during Reach-To-Grasp Movements in Immersive Haptic-free Virtual Reality. Exp. Brain Res. 239, 1651–1665. doi:10.1007/s00221-021-06079-8

Massetti, T., Da Silva, T. D., Crocetta, T. B., Guarnieri, R., De Freitas, B. L., Bianchi Lopes, P., et al. (2018). The Clinical Utility of Virtual Reality in Neurorehabilitation: a Systematic Review. J. Cent. Nerv. Syst. Dis. 10, 1179573518813541. doi:10.1177/1179573518813541

Meccariello, G., Faedi, F., AlGhamdi, S., Montevecchi, F., Firinu, E., Zanotti, C., et al. (2016). An Experimental Study about Haptic Feedback in Robotic Surgery: May Visual Feedback Substitute Tactile Feedback? J. Robotic Surg. 10, 57–61. doi:10.1007/s11701-015-0541-0

Meulenbroek, R., Rosenbaum, D., Jansen, C., Vaughan, J., and Vogt, S. (2001). Multijoint Grasping Movements. Exp. Brain Res. 138, 219–234. doi:10.1007/s002210100690

Mon-Williams, M., and Bingham, G. P. (2007). Calibrating Reach Distance to Visual Targets. J. Exp. Psychol. Hum. Perception Perform. 33, 645–656. doi:10.1037/0096-1523.33.3.645

Munafo, J., Diedrick, M., and Stoffregen, T. A. (2017). The Virtual Reality Head-Mounted Display Oculus Rift Induces Motion Sickness and Is Sexist in its Effects. Exp. Brain Res. 235, 889–901. doi:10.1007/s00221-016-4846-7

Niehorster, D. C., Li, L., and Lappe, M. (2017). The Accuracy and Precision of Position and Orientation Tracking in the HTC Vive Virtual Reality System for Scientific Research. i-Perception 8, 2041669517708205. doi:10.1177/2041669517708205

Ogawa, N., Narumi, T., and Hirose, M. (2017). Factors and Influences of Body Ownership over Virtual Hands, International Conference on Human Interface and the Management of Information. July 9-14, 2017, Vancouver, BC, Canada. Springer, 589–597. doi:10.1007/978-3-319-58521-5_46

Ogawa, N., Narumi, T., and Hirose, M. (2018). Object Size Perception in Immersive Virtual Reality: Avatar Realism Affects the Way We Perceive. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (IEEE). 18-22 March 2018, Tuebingen/Reutlingen, Germany, 647–648. doi:10.1109/vr.2018.8446318

Oldfield, R. C. (1971). The Assessment and Analysis of Handedness: the Edinburgh Inventory. Neuropsychologia 9, 97–113. doi:10.1016/0028-3932(71)90067-4

Paulignan, Y., Jeannerod, M., MacKenzie, C., and Marteniuk, R. (1991b). Selective Perturbation of Visual Input during Prehension Movements. 2. The Effects of Changing Object Size. Exp. Brain Res. 87, 407–420. doi:10.1007/BF00231858

Paulignan, Y., MacKenzie, C., Marteniuk, R., and Jeannerod, M. (1991a). Selective Perturbation of Visual Input during Prehension Movements. 1. The Effects of Changing Object Position. Exp. Brain Res. 83, 502–512. doi:10.1007/BF00229827

Pilon, J.-F., De Serres, S. J., and Feldman, A. G. (2007). Threshold Position Control of Arm Movement with Anticipatory Increase in Grip Force. Exp. Brain Res. 181, 49–67. doi:10.1007/s00221-007-0901-8

Prachyabrued, M., and Borst, C. W. (2013). Effects and Optimization of Visual-Proprioceptive Discrepancy Reduction for Virtual Grasping. In 2013 IEEE Symposium on 3D User Interfaces (3DUI) (IEEE). 16-17 March 2013, Orlando, FL, USA, 11–14. doi:10.1109/3dui.2013.6550190

Prachyabrued, M., and Borst, C. W. (2014). Visual Feedback for Virtual Grasping. In 2014 IEEE symposium on 3D user interfaces (3DUI) (IEEE). 29-30 March 2014, Minneapolis, MN, USA, 19–26. doi:10.1109/3dui.2014.6798835

Prachyabrued, M., and Borst, C. W. (2012). Visual Interpenetration Tradeoffs in Whole-Hand Virtual Grasping. In 2012 IEEE Symposium on 3D User Interfaces (3DUI) (IEEE). 4-5 March 2012, Costa Mesa, CA, USA, 39–42. doi:10.1109/3dui.2012.6184182

Rand, M. K., Shimansky, Y. P., Hossain, A. B. M. I., and Stelmach, G. E. (2008). Quantitative Model of Transport-Aperture Coordination during Reach-To-Grasp Movements. Exp. Brain Res. 188, 263–274. doi:10.1007/s00221-008-1361-5

Säfström, D., and Edin, B. B. (2008). Prediction of Object Contact during Grasping. Exp. Brain Res. 190, 265–277. doi:10.1007/s00221-008-1469-7

Säfström, D., and Edin, B. B. (2005). Short-term Plasticity of the Visuomotor Map during Grasping Movements in Humans. Learn. Mem. 12, 67–74. doi:10.1101/lm.83005

Säfström, D., and Edin, B. B. (2004). Task Requirements Influence Sensory Integration during Grasping in Humans. Learn. Mem. 11, 356–363. doi:10.1101/lm.71804

Schettino, L. F., Adamovich, S. V., and Tunik, E. (2017). Coordination of Pincer Grasp and Transport after Mechanical Perturbation of the index finger. J. Neurophysiol. 117, 2292–2297. doi:10.1152/jn.00642.2016

Sedda, A., Monaco, S., Bottini, G., and Goodale, M. A. (2011). Integration of Visual and Auditory Information for Hand Actions: Preliminary Evidence for the Contribution of Natural Sounds to Grasping. Exp. Brain Res. 209, 365–374. doi:10.1007/s00221-011-2559-5

Sikström, E., Høeg, E. R., Mangano, L., Nilsson, N. C., De Götzen, A., and Serafin, S. (2016). Shop 'til You Hear It Drop: Influence of Interactive Auditory Feedback in a Virtual Reality Supermarket. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology. November 2-4, 2016, Munich, Germany. 355–356.

Sivakumar, P., Quinlan, D. J., Stubbs, K. M., and Culham, J. C. (2021). Grasping Performance Depends upon the Richness of Hand Feedback. Exp. Brain Res. 239, 835–846. doi:10.1007/s00221-020-06025-0

Stanney, K. M. (2002). Handbook of Virtual Environments: Design, Implementation, and Applications. 1st Edition. Boca Raton, FL: Lawrence Erlbaum Associates.

van Polanen, V., Tibold, R., Nuruki, A., and Davare, M. (2019). Visual Delay Affects Force Scaling and Weight Perception during Object Lifting in Virtual Reality. J. Neurophysiol. 121, 1398–1409. doi:10.1152/jn.00396.2018

Vesia, M., and Crawford, J. D. (2012). Specialization of Reach Function in Human Posterior Parietal Cortex. Exp. Brain Res. 221, 1–18. doi:10.1007/s00221-012-3158-9

Volcic, R., and Domini, F. (2016). On-line Visual Control of Grasping Movements. Exp. Brain Res. 234, 2165–2177. doi:10.1007/s00221-016-4620-x

Volcic, R., and Domini, F. (2014). The Visibility of Contact Points Influences Grasping Movements. Exp. Brain Res. 232, 2997–3005. doi:10.1007/s00221-014-3978-x

Vosinakis, S., and Koutsabasis, P. (2018). Evaluation of Visual Feedback Techniques for Virtual Grasping with Bare Hands Using Leap Motion and Oculus Rift. Virtual Reality 22, 47–62. doi:10.1007/s10055-017-0313-4

Weiss, P., and Jeannerod, M. (1998). Getting a Grasp on Coordination. Physiology 13, 70–75. doi:10.1152/physiologyonline.1998.13.2.70

Whitwell, R. L., Ganel, T., Byrne, C. M., and Goodale, M. A. (2015). Real-time Vision, Tactile Cues, and Visual Form Agnosia: Removing Haptic Feedback from a “natural” Grasping Task Induces Pantomime-like Grasps. Front. Hum. Neurosci. 9, 216. doi:10.3389/fnhum.2015.00216

Yang, F., and Feldman, A. G. (2010). Reach-to-grasp Movement as a Minimization Process. Exp. Brain Res. 201, 75–92. doi:10.1007/s00221-009-2012-1

Zahariev, M. A., and MacKenzie, C. L. (2008). Auditory Contact Cues Improve Performance when Grasping Augmented and Virtual Objects with a Tool. Exp. Brain Res. 186, 619–627. doi:10.1007/s00221-008-1269-0

Zahariev, M. A., and MacKenzie, C. L. (2003). Auditory, Graphical and Haptic Contact Cues for a Reach, Grasp, and Place Task in an Augmented Environment. In Proceedings of the 5th international conference on Multimodal interfaces. November 5-7, 2003, Vancouver, BC, Canada. 273–276. doi:10.1145/958432.958481

Keywords: visual feedback, auditory feedback, haptic feedback, collision detection, prehension, virtual environment, virtual reality

Citation: Furmanek MP, Mangalam M, Lockwood K, Smith A, Yarossi M and Tunik E (2021) Effects of Sensory Feedback and Collider Size on Reach-to-Grasp Coordination in Haptic-Free Virtual Reality. Front. Virtual Real. 2:648529. doi: 10.3389/frvir.2021.648529

Received: 31 December 2020; Accepted: 05 July 2021;

Published: 19 August 2021.

Edited by:

Maxime T. Robert, Laval University, Canada

Reviewed by:

Mary C. Whitton, University of North Carolina at Chapel Hill, United States

Nikita Aleksandrovich Kuznetsov, Louisiana State University, United States

Copyright © 2021 Furmanek, Mangalam, Lockwood, Smith, Yarossi and Tunik. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mariusz P. Furmanek, m.furmanek@northeastern.edu
