- 1Department of Computer Science and Applied Mathematics, Weizmann Institute of Science, Rehovot, Israel

- 2Department of Molecular Cell Biology, Weizmann Institute of Science, Rehovot, Israel

Many distinct spaces surround our bodies. Most schematically, the key division is between peripersonal space (PPS), the close space surrounding our body, and extrapersonal space, which is the space out of one’s reach. The PPS is considered an action space, which allows us to interact with our environment by touching and grasping. In the current scientific literature, visual representations of PPS appear as mere bubbles of even dimensions wrapped around the body. Although more recent investigations of PPS around the upper body (trunk, head, and hands) and lower body (legs and feet) have provided new representations, no investigation has yet estimated the overall representation of PPS in 3D. Previous findings have demonstrated how the relationship between tactile processing and the location of sound sources in space is modified along a spatial continuum. These findings suggest that similar methods can be used to localize the boundaries of the subjective individual representation of PPS. Hence, we designed a behavioral paradigm in virtual reality based on audio-tactile interactions, which has enabled us to infer a detailed individual 3D audio-tactile representation of PPS. Considering that inadequate body-related multisensory integration processes can produce incoherent spatio–temporal perception, a virtual reality setup and a method to estimate the subjective volumetric boundaries of PPS will be a valuable addition for understanding the mismatches that occur between the physical boundaries of the body and body schema representations in 3D.

Introduction

In the last two decades, we have witnessed a rising interest in neuroscience regarding cross-modal and multisensory body representations (Maravita et al., 2003; Holmes et al., 2004) and their influences on the mental division of external spaces and 3D spatial interactions (Grüsser, 1983; Previc, 1990; Previc, 1998; Cutting and Vishton, 1995; Maravita et al., 2004; De Vignemont and Iannetti, 2015; Postma et al., 2016). Many definitions evoking body spatial representations exist and as a result, much confusion arises between the concept of body schemas (Head and Holmes, 1911; Bonnier, 2009) and body image representations (Gallagher, 1986).

Originally, peripersonal space (PPS) was defined mainly on the basis of visual–tactile neurons, first observed in electrophysiological studies in monkeys (Macaca fascicularis) (Rizzolatti, 1981; Graziano and Gross, 1993). In our study, we chose to focus on egocentric body schema representations, insofar as the body schema represents how the body dictates the movements it performs (De Vignemont, 2010; De Vignemont, 2018) and is an unconscious experience of spatiality that relies on multisensory integration mechanisms closely involved in the dynamic representations of PPS spatial encoding (Spence et al., 2008; Brozzoli et al., 2012), which are our main interest. PPS, the close space surrounding the body (Brain, 1941; Previc, 1988; Rizzolatti et al., 1997; Noel et al., 2015a; Di Pellegrino and Làdavas, 2015; Graziano, 2017; Hunley et al., 2018), can be traced back in visual representation all the way to the drawing of the “Vitruvian man” by Leonardo da Vinci (1490), which depicted the anatomical configuration and proportions of the human body. Since then, the topic of PPS spatial representation has been explored over the centuries in different domains of the visual arts (sculpting, painting, and drawing), the performing arts (dance, music, theater, fencing, etc.), and the sciences. For instance, we could trace the origin of PPS to sixteenth century Spain, in the fencing discipline named “destreza,” which applies geometrical principles to determine an imaginary sphere in order to conceptualize distances and movements between the opponents. More recently, at the turn of the twentieth century, the choreographer Rudolph Laban, in his choreutics theory (Von Laban, 1966), linked his studies of movement with Pythagorean mathematics and formulated the concept of a kinesphere in order to characterize the space surrounding one’s body “within reaching possibilities of the limbs without changing one’s place” (Dell et al., 1977).

In our view, the kinesphere indicates a deep understanding of the interactive and enactive properties inherent to embodied perception and cognition. Enactive refers to situations in which the mere perception or recollection of a bodily motor action activates motor cortical areas (Keysers et al., 2004; Gallese et al., 2009). Interactive refers to situations in which the body acts as an interface for planning and executing motor actions in its environment. One could even guess the origin of the rounded visual shape of PPS from the etymology of the word “sur-round-ing” as built upon the sphere of action of the human body. Indeed, in our view, the kinesphere could be considered a forerunner model of PPS, as it exhibits dynamic and plastic spatial boundaries that can either extend or shrink (Von Laban, 1966), much like those of PPS. Thus, PPS size can be modified and remapped according to a long inventory of factors such as a subject’s arm length (Longo et al., 2007; Lourenco et al., 2011), a subject’s handedness (Hobeika et al., 2018), and the choice of the stimulated body parts (Serino et al., 2015a). Its size can also be modified by tool use (Maravita and Iriki, 2004; Làdavas and Serino, 2008), with tools as diverse as a rake (Farnè and Làdavas, 2000; Bonifazi et al., 2007; Farnè et al., 2007), a stick (Làdavas, 2002; Gamberini et al., 2008), a laser pointer (Gamberini et al., 2008), a computer mouse (Bassolino et al., 2010), a rubber hand (Lloyd, 2007), a dummy hand (Makin et al., 2008), a 3D virtual hand (D’Angelo et al., 2018), an avatar disconnected from the subject body (Mine and Yokosawa, 2020), a mirror (Holmes et al., 2004; Làdavas et al., 2008), body shadows (Pavani et al., 2004), and a wheelchair (Galli et al., 2015). The integration of external objects within PPS boundaries highlights the flexibility of the brain’s multisensory spatial representations of PPS (De Vignemont and Iannetti, 2015; Dijkerman, 2017).
Furthermore, PPS spatial boundaries can also be modulated without tool use (Berti and Frassinetti, 2000; Serino et al., 2015b). They can change when performing walk-and-reach movements (Berger et al., 2019) and according to physical factors such as gravitational cues (Bufacchi et al., 2015); personality traits such as anxiety (Sambo and Iannetti, 2013; Iachini et al., 2015a); the social perception of other persons’ body and facial postures (Ruggiero et al., 2017; Cartaud et al., 2018); the presence of others’ bodies (Fini et al., 2014; Pellencin et al., 2018); and the social perception of others’ behaviors (Iachini et al., 2015b) and attitudes (Teneggi et al., 2013). Even certain phobias and psychiatric conditions can modulate PPS shapes and dimensions, for example, claustrophobia (Lourenco et al., 2011), cynophobic fear (Taffou et al., 2014), anorexia nervosa (Nandrino et al., 2017), schizophrenia (Delevoye-Turrell et al., 2011; Noel et al., 2017), autism spectrum disorder (Noel et al., 2017), and various apraxic syndromes such as mirror apraxia (Binkofski et al., 2003). All the factors listed above, which are not exhaustive, give rise to particular shapes of PPS spatial representations.

To investigate the distinct spatial configurations of PPS, recent studies in neuroscience use a wide range of methods. Some examine the neural basis of PPS, paying particular attention to the firing rates and receptive fields (RF) of multisensory neurons located in the fronto–parietal network that includes the premotor cortex (Graziano et al., 1994; Fogassi et al., 1996; Lloyd et al., 2003a; Ehrsson et al., 2004), together with the intraparietal sulcus and the lateral occipital complex (Makin et al., 2007) as well as the posterior parietal cortex (Bremmer et al., 2001). Although the premotor, intraparietal, and parietal associative areas have been found to be the functional regions most specifically involved in PPS multisensory representations (Serino et al., 2011; Clery et al., 2015), other studies have used a more psychophysical computational approach (Noel et al., 2018) or have designed neuropsychological and psychophysical studies (Canzoneri et al., 2012; Teneggi et al., 2013; Noel et al., 2018) to assess PPS proxy limits and flexibility using experimental setups in real and virtual reality (Iachini et al., 2016; Lee et al., 2016) as well as in a mixed reality environment (Serino et al., 2018). Although these setups have proved to be valid techniques in neurophysiological studies, only the last one uses real subjects, and not only avatars, in its virtual setup. However, the 3D description of PPS remains unexplored because the method involves visual stimuli.

When reviewing the psychophysical and behavioral tasks already used to measure and identify the spatial signature of PPS, our motivation was to find the best method that could be applied in a VR setup. Until now, behavioral tasks such as the hand-blink reflex to map defensive peripersonal space (Sambo et al., 2012; De Vignemont and Iannetti, 2015; Bufacchi and Iannetti, 2016), line bisection tasks (Halligan and Marshall, 1991; Longo and Lourenco, 2006), cross-modal congruency tasks (Spence et al., 2000; Lloyd et al., 2003b), and visuo-tactile tasks (Brozzoli et al., 2010; Noel et al., 2015b) have been carried out. We chose to adapt an audio-tactile interaction task paradigm (Canzoneri et al., 2012; Teneggi et al., 2013) to a VR setup in order to infer PPS boundaries around the subject’s body in 360°. The VR setup allowed us to use anechoic conditions to eliminate unwanted acoustic effects (i.e., reflection, reverberation, and Doppler shift) from our virtual binaural audio space and enabled the design of specific sound localizations (elevation, azimuth, and distance). In ambient (real) space, it is challenging to record a sound object for every direction (Yairi et al., 2009); a virtual setup therefore suits our aim of defining the 3D boundaries of PPS.

Our goal in this study is to provide a phenomenological description of the three-dimensional audio-tactile boundaries of PPS in relation to its egocentric body schema–related representation. Audio-tactile stimuli were preferred over visuo-tactile ones (Kandula et al., 2017). We used flat and dynamic sound stimuli instead of receding and looming sound stimuli because our aim was to acquire PPS 3D boundaries for dynamic and non-dynamic sound stimuli and not to deduce the boundaries from a comparative approach of reaction times (RT). Therefore, receding sounds were replaced by flat sounds (Ferri et al., 2015; Ardizzi and Ferri, 2018).

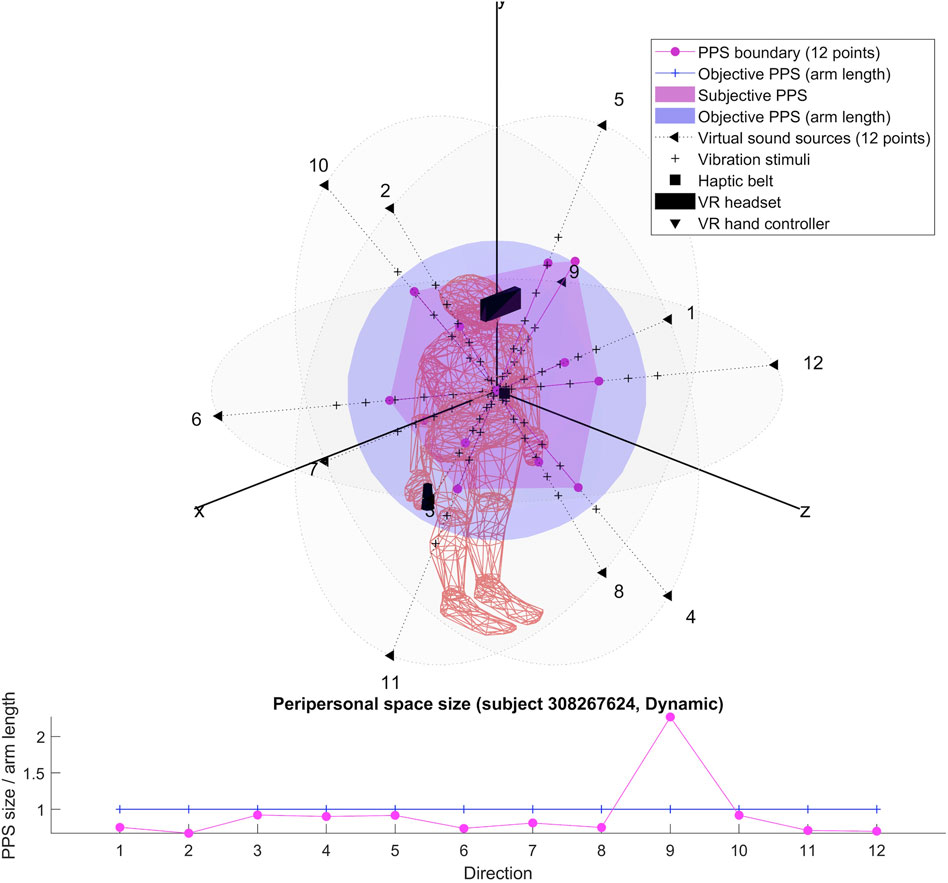

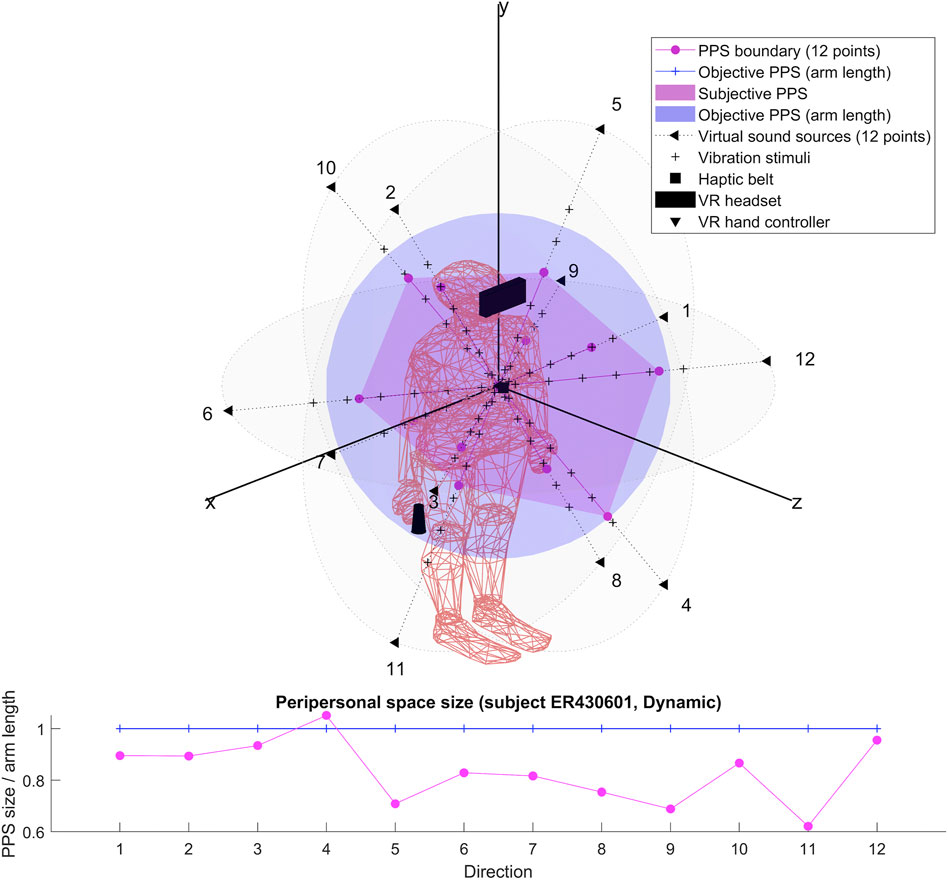

The PPS obtained from RT thresholds will be referred to as the subjective PPS and set against an objective PPS, defined as the subject’s reachable space from a static position based on the subject’s arm length.

Materials and Methods

Participants

Eight healthy participants took part in the study (3 males: average age 27 ± 1 years and 5 females: 24 ± 2 years). We chose a small sample for our pilot in order to ease its implementation. The participants were recruited among students of the Weizmann Institute of Science and the Faculty of Agriculture in Rehovot and were paid 50 ₪ per hour. All the participants gave their written informed consent to participate in the study. The study was performed in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (IRB) of the Weizmann Institute. By self-report, none of the participants had hearing, touch, or visual impairments (none needed glasses or contact lenses), and none had a known history of neurological or psychiatric disorders.

Material

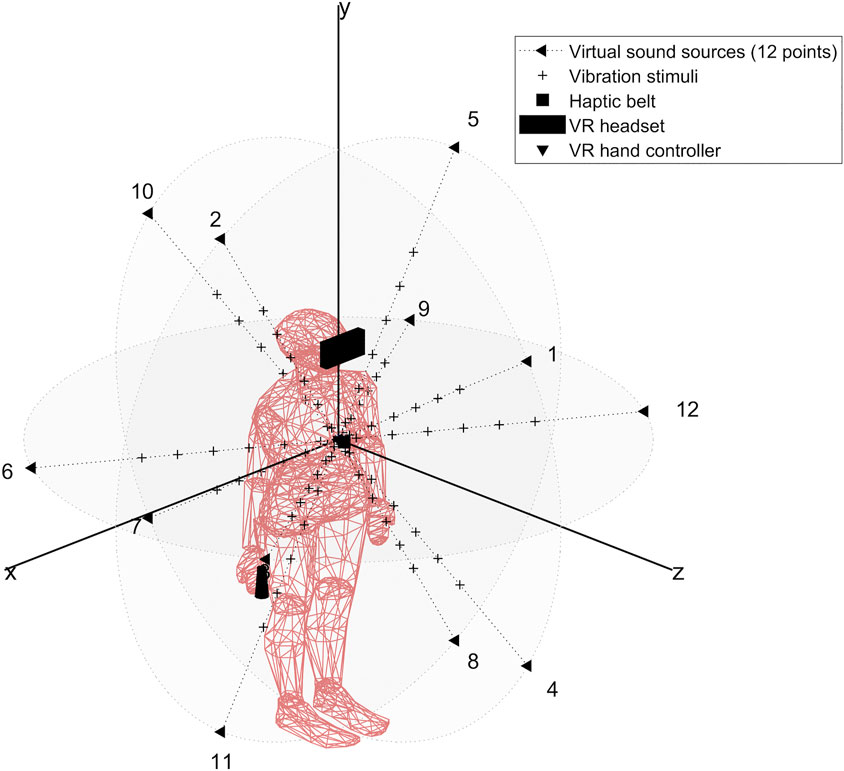

Our setup (see Figure 1) is based on previous studies (Canzoneri et al., 2012; Noel et al., 2015a; Serino et al., 2015a; Pfeiffer et al., 2018), which have demonstrated how the relationship between tactile processing and the location of sound sources in space, modified along a spatial continuum, can be used to localize the boundaries of peripersonal space representation. Relying on these findings, we designed an audio-tactile interaction task in virtual reality using the Unity 3D game engine (version 2017.4.40), run with the HTC Vive System (HTC Vive Virtual Reality System, 2017). The Vive System was set to track an area of 4 × 4 m. It includes the HTC Vive headset, with a refresh rate of 90 Hz, a 110-degree field of view, and a display resolution of 1,080 × 1,200 per eye (2,160 × 1,200 combined pixels). It also includes a pair of hand controllers (dual-stage trigger) and uses a TPCast wireless adapter (wireless signal at 60 GHz with less than 2 ms latency).

Stimuli

The audio stimuli were displayed using the ambisonic features of the HTC Vive headset to render a spherical soundscape around the subject’s body, and the tactile stimuli were delivered using the Woojer haptic strap (https://www.woojer.com/technology/). To create, process, and control the directionality, intensity, and frequency of each axis of our audio input signal, we used the recently developed open-source 3D Tune-in Toolkit to render binaural spatialization (Cuevas-Rodriguez et al., 2019) and a custom MATLAB script to automatically create the audio stimuli for each subject based on the subject’s head circumference. The files were imported into the Unity 3D game engine in wav format. The toolkit was developed to support people using hearing aid devices; it aims at optimal accuracy in the spatialization of the sound (angle and distance) and allows the customization of the subject’s interaural time difference (ITD). The ITD was simulated separately from the HRIR and calculated with the specific user-inputted head circumference for each of the eight subjects.
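How an interaural time difference can follow from a head circumference can be illustrated with a short sketch. It uses Woodworth’s classical spherical-head approximation, which is an assumption made here for illustration; the model actually implemented in the 3D Tune-in Toolkit may differ. The function names, the speed of sound, and the example circumference are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C


def head_radius_from_circumference(circumference_m: float) -> float:
    """Treat the head as a sphere, so that r = C / (2 * pi)."""
    return circumference_m / (2.0 * math.pi)


def woodworth_itd(circumference_m: float, azimuth_deg: float) -> float:
    """Interaural time difference in seconds (Woodworth approximation).

    azimuth_deg is 0 straight ahead and +/-90 directly to one side.
    The formula ITD = (r / c) * (theta + sin(theta)) is only meant
    for azimuths between -90 and 90 degrees.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie in [-90, 90] degrees")
    radius_m = head_radius_from_circumference(circumference_m)
    theta = math.radians(azimuth_deg)
    return (radius_m / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


if __name__ == "__main__":
    # Hypothetical head circumference of 57 cm, source fully lateral.
    print(f"{woodworth_itd(0.57, 90.0) * 1e6:.0f} microseconds")
```

Under these assumptions, a larger head circumference gives a proportionally larger ITD at every azimuth, which is why a per-subject measurement matters for spatialization.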

Audio Stimuli

The audio stimuli were broadcast from twelve virtual sound sources around the subject’s head and arranged in a random combination of flat (4) and dynamic (4) repetitions of the sound (pink noise, 35 Hz, in wav format, with a velocity of 22 cm/s and a duration of 5.5 s). The flat sounds were of constant intensity, while the dynamic sounds were of increasing intensity in order to simulate sounds looming toward the xiphoid process of the subject’s sternum. The intensity level of the virtual audio stimuli surrounding the subject’s head was automatically generated by the 3D Tune-in Toolkit, starting from twelve virtual sound source positions located at 1.2 m from the subject’s head. The coordinates were selected to represent the surface of a sphere with a radius R = 1.2 m centered on the xiphoid process of the subject’s sternum (see Table 1 for the spatial positions of the virtual sounds).
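The stated velocity, duration, and starting radius are mutually consistent: at 22 cm/s, a source travels 1.21 m in 5.5 s, which is about the 1.2 m radius of the sphere. A minimal sketch of the simulated distance of a looming source over time follows; it assumes straight-line motion at constant speed toward the sphere center, and the function name is hypothetical.

```python
RADIUS_M = 1.2        # starting distance of the virtual sources
VELOCITY_M_S = 0.22   # 22 cm/s
DURATION_S = 5.5      # duration of one sound


def source_distance(t_s: float,
                    radius_m: float = RADIUS_M,
                    velocity_m_s: float = VELOCITY_M_S) -> float:
    """Distance (m) between a looming source and the sphere center at time t.

    Assumes constant-speed, straight-line motion toward the center;
    the distance is clamped at zero once the source has arrived.
    """
    if t_s < 0:
        raise ValueError("time must be non-negative")
    return max(0.0, radius_m - velocity_m_s * t_s)


if __name__ == "__main__":
    print(f"travelled in one sound: {VELOCITY_M_S * DURATION_S:.2f} m")
    for t in (0.0, 1.0, 2.5, 5.5):
        print(f"t = {t:.1f} s -> {source_distance(t):.2f} m")
```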

The Tactile Stimuli

We ran a Unity 3D calibration scene (10 trials of 60 trials) to calibrate the tactile stimuli based on the subjects’ perceptive vibration thresholds. A MATLAB script was developed to analyze the subject’s perceptive level of vibration intensity (we chose the smallest number that scored 10 in the MATLAB summary). Each subject obtained this score with the maximum level of intensity. The signal decomposes into the sum of an attack signal, which is a 200 Hz sinusoidal wave of amplitude +4 dB, and a decay signal of frequency 50 Hz and amplitude −22 dB. The stimulus onset asynchrony (SOA) between audio and tactile stimuli was based on the individual subject’s arm length, and the tactile stimuli were delivered at 6 distances (timepoints) around the subject’s body, D1: 0.7, D2: 0.9, D3: 1.1, D4: 1.3, D5: 1.5, and D6: 1.7 arm lengths from the subject’s chest, for a duration of 100 ms. The tactile vibrations were delivered at the xiphoid process of the subject’s sternum using the Woojer strap haptic belt, which was connected to a sound card (USB 2.0 7.1 AUDIO SOUND BOX CM6206) enabling the display of the audio stimuli through the HTC Vive headset (the computer sound setting was set to the maximum).
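One way to turn the six arm-length distances into tactile onset delays is sketched below. It assumes that the sound starts at 1.2 m and approaches at a constant 22 cm/s, and that the vibration is triggered when the sound reaches the target distance; this mapping is an assumption for illustration, not a formula stated by the authors. Distances that lie beyond the starting radius cannot be reached and are returned as `None`.

```python
DISTANCE_FACTORS = (0.7, 0.9, 1.1, 1.3, 1.5, 1.7)  # D1..D6, in arm lengths


def tactile_delays(arm_length_m: float,
                   start_radius_m: float = 1.2,
                   velocity_m_s: float = 0.22):
    """Delay (s) from sound onset to vibration for each of D1..D6.

    Hypothetical mapping: delay = (start radius - target distance) / speed.
    A target farther away than the starting radius yields None.
    """
    if arm_length_m <= 0 or velocity_m_s <= 0:
        raise ValueError("arm length and velocity must be positive")
    delays = []
    for factor in DISTANCE_FACTORS:
        target_m = factor * arm_length_m
        if target_m > start_radius_m:
            delays.append(None)
        else:
            delays.append((start_radius_m - target_m) / velocity_m_s)
    return delays


if __name__ == "__main__":
    # Hypothetical arm length of 0.60 m.
    for name, delay in zip(("D1", "D2", "D3", "D4", "D5", "D6"),
                           tactile_delays(0.60)):
        print(name, "unreachable" if delay is None else f"{delay:.2f} s")
```

Because the delays depend on arm length, the same six relative distances translate into different timepoints for each subject, with the farthest distance (D6) occurring earliest in the sound.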

Procedure

On their arrival, subjects were asked to fill in their personal details (identity number, date of birth, gender, and level of fitness). We then manually took several body measurements, which were recorded on the subject’s personal form as we went along. The measurements were taken in the following set order: weight and height, head circumference (for the parametrization of the 3D Tune-in Toolkit), arm length (from the acromioclavicular joint to the tip of the middle finger), interpupillary distance (IPD) (for the calibration of the headset), pupillary height from the floor, and the distance from the root of the nose to the xiphoid process of the sternum (which later served to position the haptic belt on the subject’s upper body).

Before the start of the experiment, we ran a Unity 3D calibration scene to assess the perceptive level of vibration intensity of each subject. To do so, the subject was equipped with the Woojer haptic belt (which was placed on the lower part of his/her sternum according to the measurement previously taken) and, while the subject was standing still, we delivered different intensities of vibration through the haptic belt. The subject’s task was to press the HTC Vive hand controller trigger every time he/she felt a vibration. The data were saved in a MATLAB folder and analyzed using a custom MATLAB script, and later served to configure the intensity parameters of the tactile stimuli in the Unity 3D game engine based on each subject’s perceptive level of vibration intensity.

The experiment was performed in a room without a window, with the air-conditioning set at a temperature of 22°C. Before the start of the experiment, the investigator explained the task instructions, the workflow, and the duration of the experiment to the subject. Subjects were instructed to stand still at the same marked position in the center of the room, and motor actions were limited to responding manually, as fast as possible, using one of the HTC Vive hand controller triggers (the right one for right-handed subjects and the left one for left-handed subjects) to a tactile stimulus administered on the lower sternum by a haptic belt at different delays from the onset of task-irrelevant dynamic sounds, which gave the impression of a sound source looming toward him/her. Results were derived from the subject’s reaction time (RT), which was taken as a proxy for the subject’s PPS. The workflow of the experiment included a preliminary training session to familiarize the subject with the equipment and the task, followed by the experiment, which consisted of four separate blocks with 24 trials per block (total 144 trials; the total duration was 1.30 min at the most, with a 15 min break after the first two blocks).

The subject was then equipped with the HTC Vive headset, which was adjusted according to his/her interpupillary distance, and was given the HTC Vive hand controllers; the haptic belt strap was attached at the lower sternum location (based on our previous measurements). The subject had to stand still in the middle of a square room of 4 × 4 m with his/her feet parallel and 40 cm apart, as specified by two lines of masking tape on the floor, and was told to keep this position during the entire experiment. Subsequently, the investigator customized the block parameters of the Unity 3D experiment using the subject’s previously acquired measurements (subject identity number, arm length, nose to sternum distance, eye height, and vibration calibration). The virtual scene consisted of an infinite black background in which the subject had to fixate a red cross target located in the middle of the virtual scene at a distance of 3 m, which was visible for 0.5 s. When the subject’s head was aligned correctly (less than 9.5 degrees of deviation from the target center), the red cross disappeared and only a black background remained. Thereafter, the experiment was initiated with the training session (10 trials) followed by the four blocks of the experiment, run as two sets of two blocks. The raw data of the experiment were saved in a MATLAB folder for analysis.
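The head-alignment criterion can be illustrated as an angle test between the direction in which the head points and the direction from the head to the fixation cross. The sketch below is a plain-Python illustration with hypothetical function names; it is not the Unity code used in the experiment, and the axis convention (x right, y up, z forward) is an assumption.

```python
import math


def angular_deviation_deg(head_forward, to_target):
    """Angle in degrees between the head's forward vector and the target direction."""
    dot = sum(a * b for a, b in zip(head_forward, to_target))
    norm_forward = math.sqrt(sum(a * a for a in head_forward))
    norm_target = math.sqrt(sum(b * b for b in to_target))
    if norm_forward == 0 or norm_target == 0:
        raise ValueError("vectors must be non-zero")
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_forward * norm_target)))
    return math.degrees(math.acos(cos_angle))


def is_head_aligned(head_forward, to_target, max_deviation_deg=9.5):
    """True when the deviation is below the 9.5 degree criterion."""
    return angular_deviation_deg(head_forward, to_target) < max_deviation_deg


if __name__ == "__main__":
    target = (0.0, 0.0, 3.0)  # cross 3 m straight ahead
    print(is_head_aligned((0.1, 0.0, 1.0), target))  # about 5.7 degrees off
    print(is_head_aligned((0.3, 0.0, 1.0), target))  # about 16.7 degrees off
```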

Analysis

We ran a preliminary analysis using a MATLAB script on the 60 trials of the tactile stimuli calibration to ensure that the stimuli delivered were felt by all subjects.

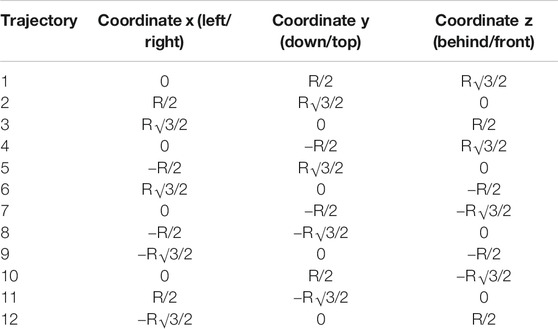

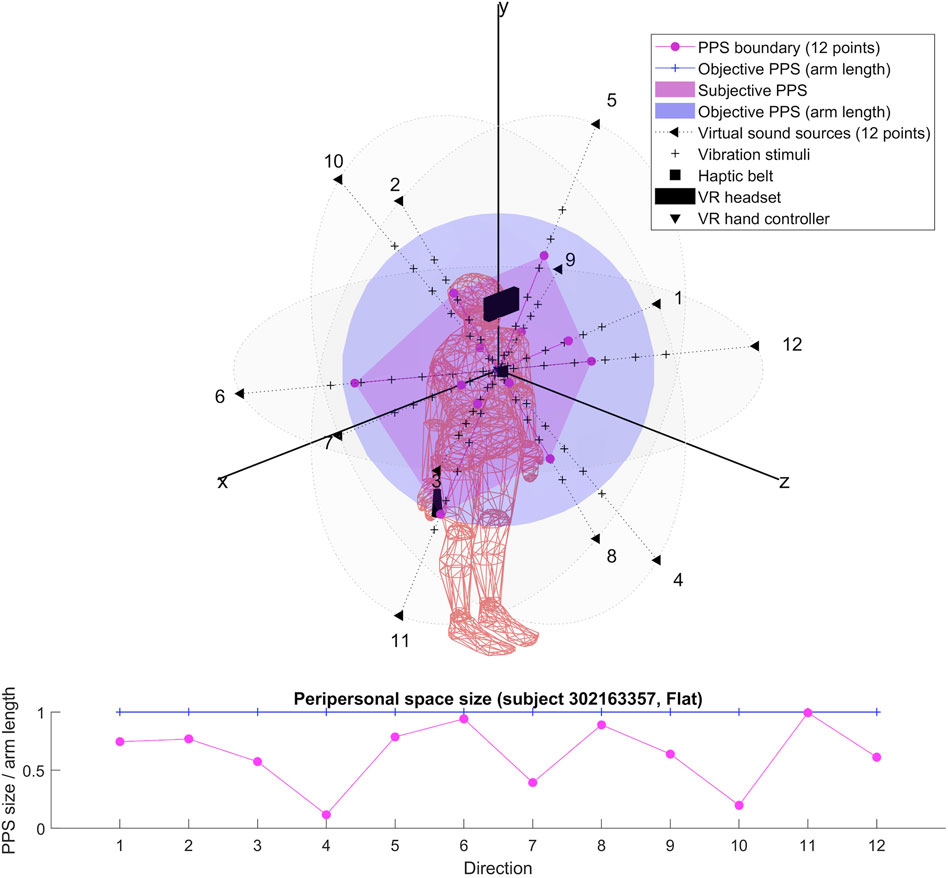

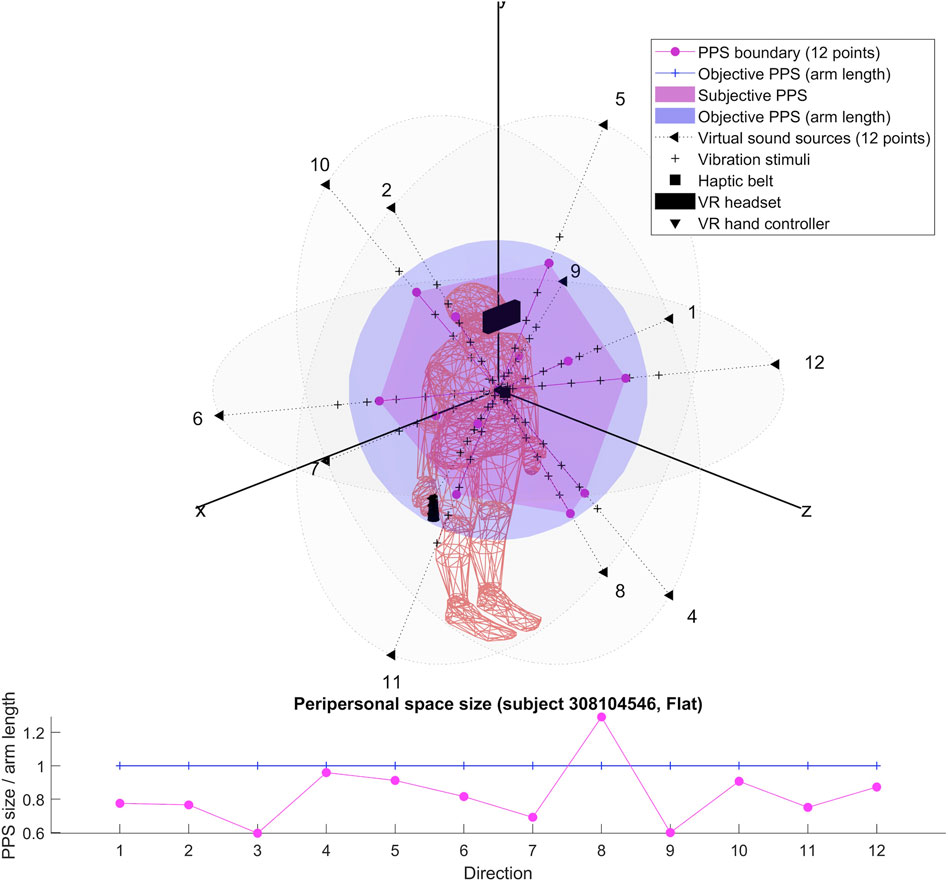

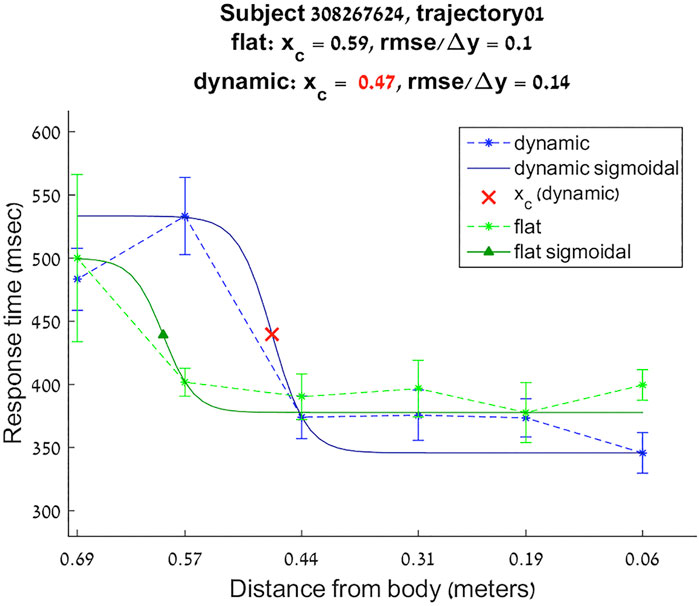

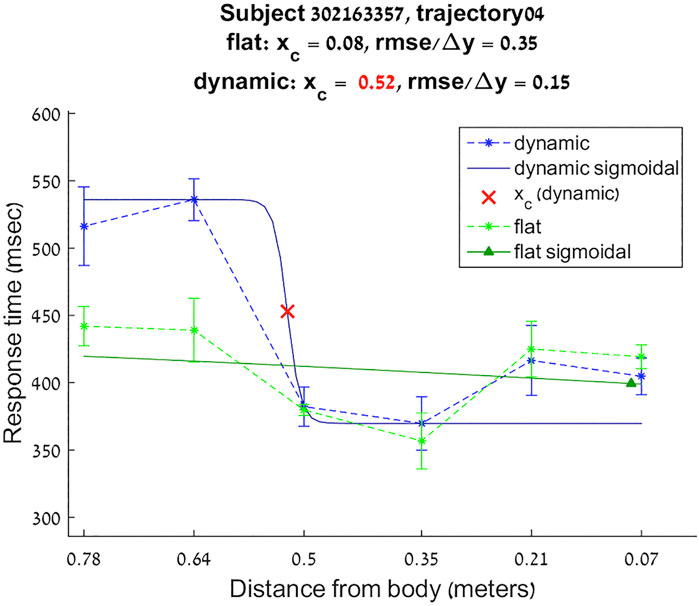

For each of the twelve directions of the virtual flat and dynamic sounds, the reaction times for the different distances were approximated with a sigmoidal function:

Here,

FIGURE 2. Reaction times sigmoidal approximation for subject 308267624 trajectory 1 flat and dynamic conditions.

FIGURE 3. Reaction times sigmoidal approximation for subject 302163357 trajectory 4 flat and dynamic conditions.
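A sigmoidal approximation of reaction times against distance can be sketched as follows. The four-parameter logistic form used here, RT(x) = ymin·(1 − s) + ymax·s with s = 1 / (1 + e^(−(x − xc)/b)), is a common choice in audio-tactile PPS studies and is an assumption; the authors’ exact parametrization and fitting routine may differ. The sketch avoids external libraries: it searches a grid of central points `xc` and slopes `b`, and solves `ymin` and `ymax` by linear least squares for each candidate. All names and the synthetic data are hypothetical.

```python
import math


def sigmoid_weight(x, xc, b):
    """Logistic weight in (0, 1), written to avoid overflow in exp."""
    z = (x - xc) / b
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_sigmoid(distances, rts, xc_grid, b_grid):
    """Grid search over (xc, b); ymin and ymax come from a 2x2 least-squares solve."""
    best = None
    for xc in xc_grid:
        for b in b_grid:
            s = [sigmoid_weight(x, xc, b) for x in distances]
            # Model is linear in (ymin, ymax): rt = ymin * (1 - s) + ymax * s
            a11 = sum((1 - si) ** 2 for si in s)
            a12 = sum((1 - si) * si for si in s)
            a22 = sum(si * si for si in s)
            r1 = sum((1 - si) * y for si, y in zip(s, rts))
            r2 = sum(si * y for si, y in zip(s, rts))
            det = a11 * a22 - a12 * a12
            if abs(det) < 1e-12:
                continue  # the two basis columns are nearly collinear
            ymin = (r1 * a22 - r2 * a12) / det
            ymax = (a11 * r2 - a12 * r1) / det
            sse = sum((ymin * (1 - si) + ymax * si - y) ** 2
                      for si, y in zip(s, rts))
            if best is None or sse < best["sse"]:
                best = {"sse": sse, "xc": xc, "b": b,
                        "ymin": ymin, "ymax": ymax}
    if best is None:
        raise ValueError("no valid fit found on the given grids")
    return best


if __name__ == "__main__":
    # Synthetic reaction times (ms) at the six distances, in arm lengths.
    distances = [0.7, 0.9, 1.1, 1.3, 1.5, 1.7]
    rts = [300 + 100 * sigmoid_weight(x, 1.1, 0.1) for x in distances]
    xc_grid = [0.7 + 0.05 * i for i in range(21)]
    fit = fit_sigmoid(distances, rts, xc_grid, [0.05, 0.1, 0.2])
    print(f"central point xc = {fit['xc']:.2f} arm lengths")
```

In this sketch, the fitted central point `xc` plays the role of the RT threshold. With only six distances per direction, a fit can place `xc` outside the tested range, in which case no threshold is really supported by the data.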

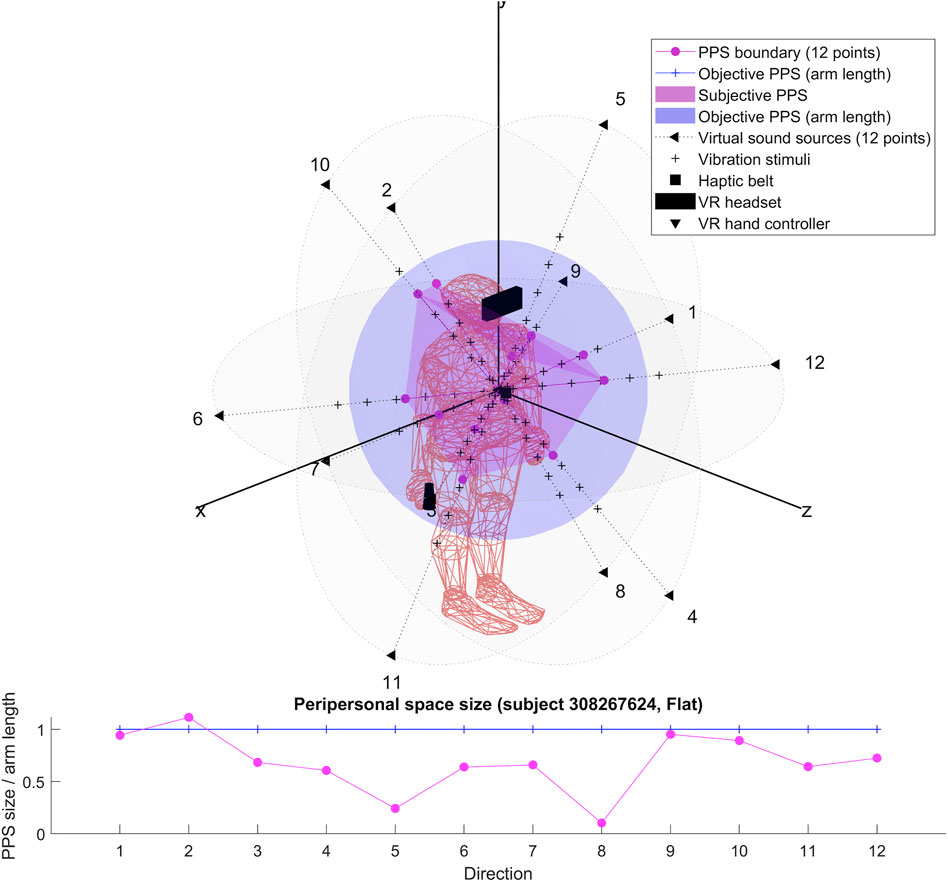

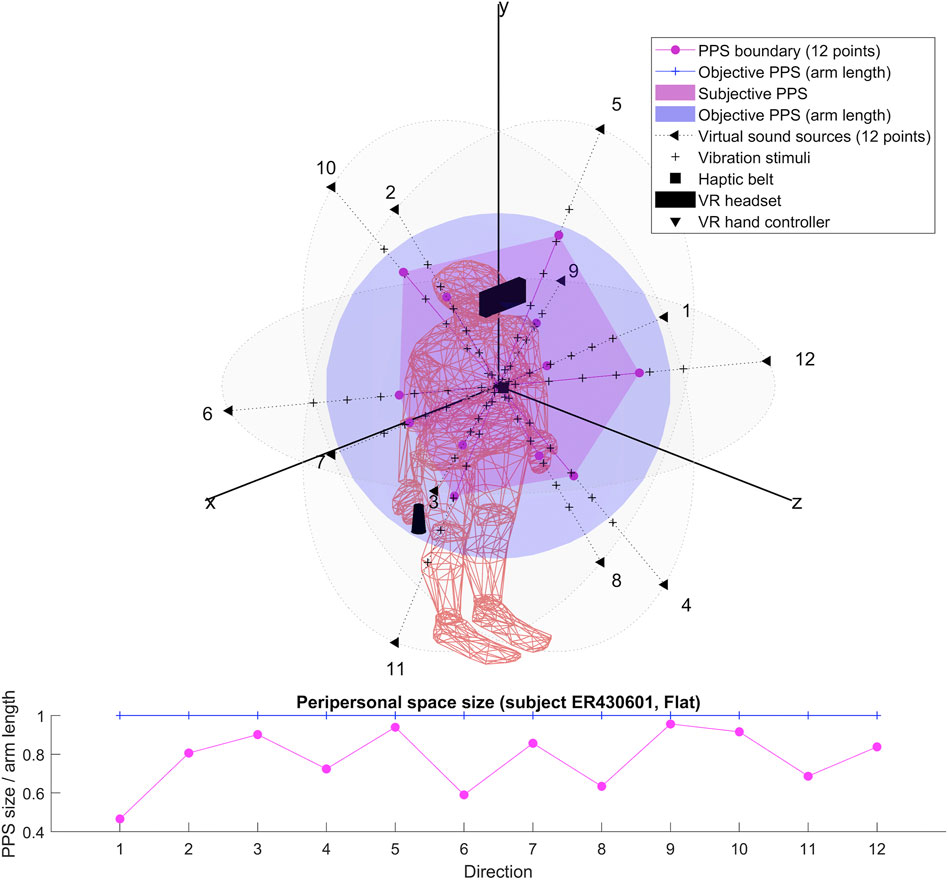

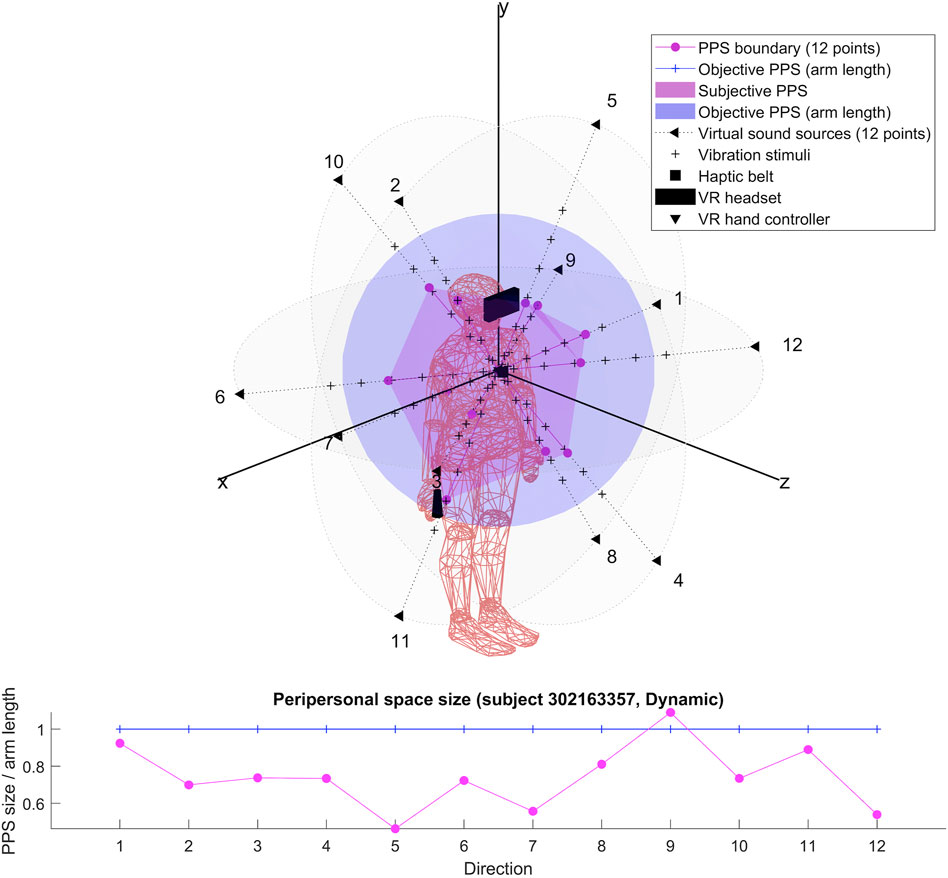

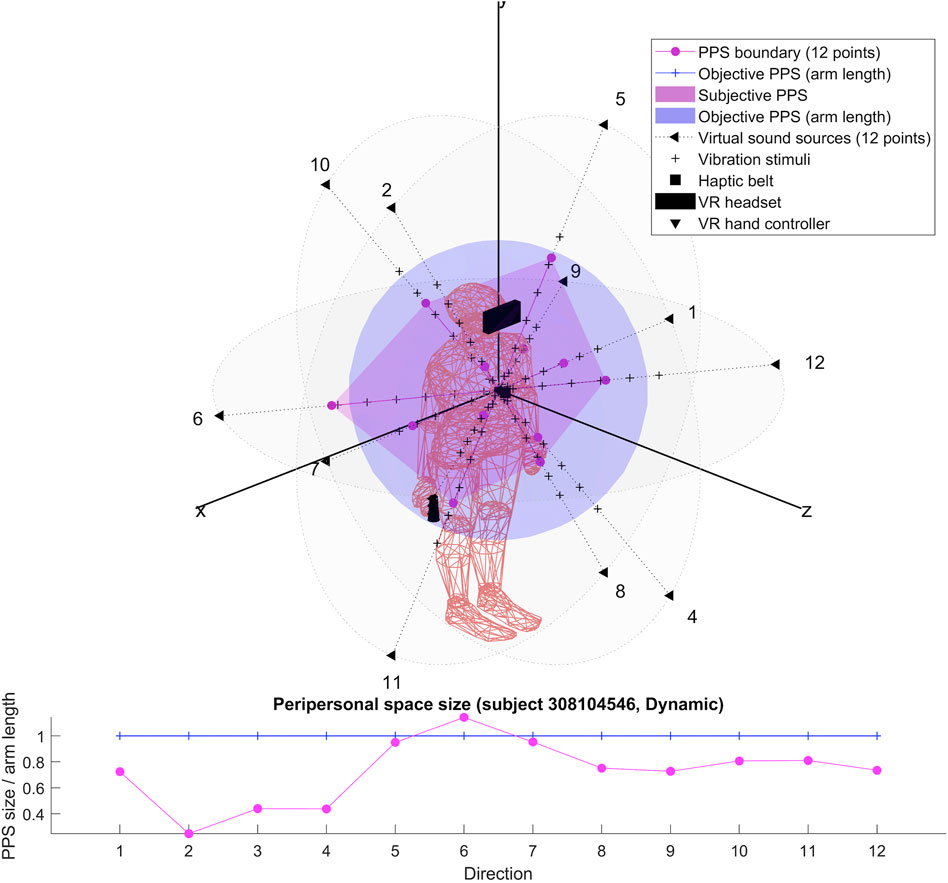

For each participant, we produced two 3D representations; one relying on the RT of dynamic sound stimuli and one on the RT of flat sound stimuli. We describe the obtained shapes and focus specifically on their possible anisotropy in both cases.

Results

The results for each participant are encompassed within the 3D representations we obtained for both flat and dynamic stimuli. By connecting the RT thresholds in each of the twelve directions, we draw a spatial polyhedron which serves as an approximation of PPS and its boundaries in 3D. This polyhedron does not display symmetric properties. Furthermore, the anisotropy of the polyhedra of the eight subjects does not obey any systematic rule. This might reflect the small sample of our pilot.
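The construction of the polyhedron vertices can be sketched by placing each threshold along its sound direction. The code below assumes that each direction is given as an (azimuth, elevation) pair in degrees and uses an axis convention of x right, y up, z forward; both are assumptions, as are the example directions. The max/min ratio is a hypothetical descriptive index of anisotropy, not a measure used by the authors.

```python
import math


def threshold_vertices(directions_deg, thresholds):
    """Cartesian vertex for each (azimuth, elevation) direction and threshold radius."""
    if len(directions_deg) != len(thresholds):
        raise ValueError("one threshold is needed per direction")
    vertices = []
    for (az_deg, el_deg), r in zip(directions_deg, thresholds):
        az, el = math.radians(az_deg), math.radians(el_deg)
        vertices.append((r * math.cos(el) * math.sin(az),   # x: right
                         r * math.sin(el),                  # y: up
                         r * math.cos(el) * math.cos(az)))  # z: forward
    return vertices


def anisotropy_ratio(thresholds):
    """Largest over smallest threshold; 1.0 means equal reach in all directions."""
    if not thresholds or min(thresholds) <= 0:
        raise ValueError("thresholds must be positive")
    return max(thresholds) / min(thresholds)


if __name__ == "__main__":
    # Three hypothetical directions: front, right, and straight up.
    directions = [(0, 0), (90, 0), (0, 90)]
    thresholds = [1.0, 2.0, 0.5]  # in arm lengths
    for vertex in threshold_vertices(directions, thresholds):
        print(tuple(round(c, 3) for c in vertex))
    print("anisotropy ratio:", anisotropy_ratio(thresholds))
```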

To our knowledge, the rendering of the phenomenological components of PPS in 3D has never been visually highlighted (see Figures 4–7 for flat stimuli responses and Figures 8–11 for dynamic stimuli responses). The totality of the results for each subject is in the Supplementary Material. No evident regularity was found between the shapes obtained using dynamic and flat stimuli for the different subjects.

Discussion

Virtual audio stimuli can be modified according to many experimental parameters: type (flat or dynamic and pink noise or white noise), velocity, distance, and direction.

One of the major problems in the use of an audio setup to estimate the borders of PPS is sound reverberation. Reverberation energy ratio depends on the shape, size, and physical material of the room. By using the 3D Tune-in Toolkit where the signal is anechoic, these effects were nullified.

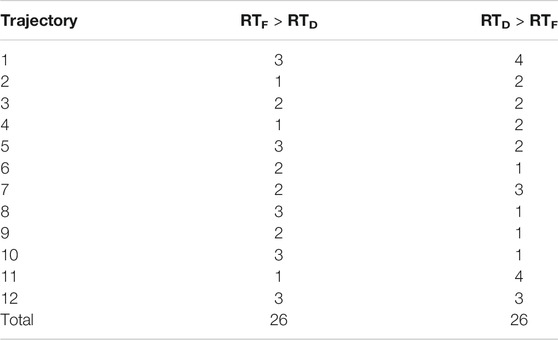

The comparison of the subjective boundaries of PPS for flat and dynamic audio stimuli shows that, across 52 subject/trajectory pairs, the RT threshold was the closer one for flat stimuli 26 times and the closer one for dynamic stimuli 26 times (see Table 2). Unlike several authors (Noel et al., 2015b; Serino et al., 2015b), we did not observe that dynamic incoming sounds affected audio-tactile interactions more than flat ones.

TABLE 2. Comparison of reaction time thresholds for each direction in flat and dynamic conditions (values below 25% and over 150% are removed, RTF is reaction time in flat condition, and RTD is reaction time dynamic condition).
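The filtering and counting behind such a comparison can be sketched as follows. It assumes that thresholds are expressed in percent of arm length, that the 25% and 150% limits are inclusive, and that “closer” means the smaller threshold; these are assumptions, and the example values are invented for illustration only.

```python
def keep_valid(threshold_pct, low=25.0, high=150.0):
    """True when a threshold lies within the retained range (limits inclusive)."""
    return low <= threshold_pct <= high


def count_closer(pairs):
    """Count, over (flat, dynamic) threshold pairs, which condition is closer.

    Pairs with at least one threshold outside the retained range are
    counted as removed.
    """
    counts = {"flat_closer": 0, "dynamic_closer": 0, "tie": 0, "removed": 0}
    for flat_pct, dynamic_pct in pairs:
        if not (keep_valid(flat_pct) and keep_valid(dynamic_pct)):
            counts["removed"] += 1
        elif flat_pct < dynamic_pct:
            counts["flat_closer"] += 1
        elif dynamic_pct < flat_pct:
            counts["dynamic_closer"] += 1
        else:
            counts["tie"] += 1
    return counts


if __name__ == "__main__":
    # Invented example values, not data from the study.
    example = [(80, 100), (120, 90), (10, 100), (100, 100), (160, 90)]
    print(count_closer(example))
```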

In the literature, it appears that PPS responses rely not only on stimulus proximity but also on stimulus velocity. The multisensory neural adaptation mechanism involved in PPS responses may not work for stimuli above 100 cm/s (Noel et al., 2020) because of a lack of rapid recalibration. The velocity of the audio stimuli in our setup was 22 cm/s, well below this limit, and therefore cannot explain the inconclusive results between flat and dynamic audio stimuli.

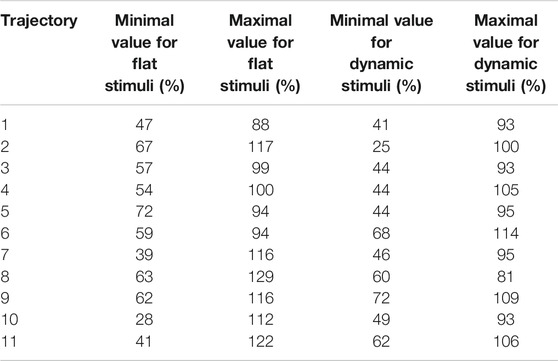

Boundaries of subjective PPS are obtained as thresholds in sigmoidal approximations of points corresponding to tactile stimuli distances (see Figures 2, 3). These distances represent between 25% and 129% of the arm length of the subject, and the threshold should lie between these two extreme values (see Table 3). In some cases, the threshold is above or below these values (see Figure 2). The interpretation is that the sigmoidal approximation does not reveal a threshold for these data. This might relate to the methodology of some studies, which remove from their results the participants with a bad sigmoidal fit (Holmes et al., 2020). Furthermore, since we used stimuli that belong to the far category (130 cm), this could also be an effect of the variability of multisensory responses between close and far audio-tactile stimuli, which Serino has shown to be higher for far stimuli than for close ones (Serino, 2016).

TABLE 3. Extremal values of the reaction time threshold in each direction (in percent of the subject’s arm length and values below 25% and over 150% being removed).

Individual variability in PPS might be explained by various cultural and ethnic factors. Our subjects were recruited among Weizmann international students, and the impact of ethnic background on peripersonal space size and shape has recently been acknowledged (Yu et al., 2020).

Stimulus proximity has been widely explored, in contrast to the direction of stimulus movement (Bufacchi and Iannetti, 2018). Thus, we introduced a setup that tests virtual audio stimuli in various directions. We observed that the representations computed from these results did not feature a systematic anisotropy of the PPS 3D boundary in one direction relative to the others. Sometimes, anisotropic properties can be the result of gravitational forces (Bufacchi et al., 2018). By implementing our setup in a more systematic experiment than our experimental pilot, it should be possible to confirm or refute the isotropy of subjective PPS.

In further studies, this question could also be refined by testing other sound directions, reorienting the virtual sound sources, and shifting the location of the tactile stimuli. Previous studies have already examined peripersonal space boundaries around the trunk, face (Serino et al., 2015a), feet (Stone et al., 2018), and the soles of the feet (Amemiya et al., 2019). In this experiment, the virtual audio stimuli were oriented toward the sternum. In prospective studies, we could test reaction times with the virtual audio stimuli oriented toward other body centers. We could then expect various anisotropic properties of PPS boundaries to be associated with other body centers.

Conclusion

The originality of this phenomenological and behavioral approach was to provide representations of the audio-tactile boundaries of PPS in 3D for each participant using a VR setup. 3D peripersonal space had been investigated around the hand, face, and trunk but, to our knowledge, comprehensive 3D spatial representations of PPS around the subject’s body had not yet been rendered.

Although we could not establish a clear distinction between RT responses to flat and dynamic stimuli, we have to keep in mind that this setup is an experimental pilot run on a limited number of participants. Nevertheless, the benefit of this setup is its high flexibility, which could allow the experimental conditions to be extended further in the future. For instance, not only the parametrization of the audio stimuli (velocity and type of sound) could be modified but also their localization in 3D, in any direction and toward any body center, which could motivate an in-depth study.

Prospectively, our apparatus could serve the purpose of setting up comfort distances in a social VR platform (e.g., AltspaceVR, High Fidelity, and NEOS Metaverse Engine). Indeed, avatar embodiment can heighten the feeling of space violation. This would enable a tailor-made configuration of interpersonal spaces, which in effect would facilitate nonverbal social interactions through body gestures and the spatial positioning of avatars in a social VR platform. This setup could be imported into social VR apps to customize the personal space of the gamer’s avatar, which would improve the user experience (UX) and prevent virtual harassment issues.

Additionally, in the present literature on mental pathologies (e.g., anorexia nervosa, autism spectrum disorder, and schizophrenia), the limits of PPS have been evaluated mainly frontally. Therefore, a finer 3D topographical definition of PPS could provide a more precise understanding of the distorted body schemas involved in the production of these altered PPS spatial representations.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Institutional Review Board (IRB) of the Weizmann Institute. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

FL created the experimental design of the setup and conducted the experiments. AB wrote the MATLAB script for the experiment and OK processed the data. FL and GT analyzed and discussed the results. TF supervised the research.

Funding

All the authors are supported by the Feinberg Institute of the Weizmann Institute of Science, Rehovot, Israel. The first author has additional funding from the Braginsky Center of Art and Science of WIS. The second author is supported by the Israel Science Foundation (grant No 1167/17) and the European Research Council (ERC) under the European Union Horizon 2020 research innovation program (grant agreement No. 802107).

Disclaimer

Copyright notice of ‘nancy_body.m’. This collection of body segments and polygons was modified from an original VRML file by Cindy Ballreich, copyright 1997 3Name3D. This MATLAB version carries the restriction set by the original author (i.e., Cindy Ballreich) to noncommercial usage, as long as the original copyright remains and proper credit is given.

The original file in VRML was found at: http://www.ballreich.net/vrml/h-anim/nancy_h-anim.wrl.

This file can be located at http://www.robots.ox.ac.uk/~wmayol/3D/nancy_matlab.html.

MATLAB version by Ben Tordoff and Walterio Mayol, Robotics Research Group, University of Oxford. The main difference with the VRML version is that all polygons were manually converted to triangles. More information about H-Anim humanoid specifications can be found at http://www.h-anim.org.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Johannes Lohmann (University of Tübingen) and Prof Giandomenico Iannetti (Italian Institute of Technology, University College London) for their valuable remarks.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.644214/full#supplementary-material

References

Amemiya, T., Ikei, Y., and Kitazaki, M. (2019). Remapping Peripersonal Space by Using Foot-Sole Vibrations without Any Body Movement. Psychol. Sci. 30 (10), 1522–1532. doi:10.1177/0956797619869337

Ardizzi, M., and Ferri, F. (2018). Interoceptive Influences on Peripersonal Space Boundary. Cognition 177, 79–86. doi:10.1016/j.cognition.2018.04.001

Bassolino, M., Serino, A., Ubaldi, S., and Làdavas, E. (2010). Everyday Use of the Computer Mouse Extends Peripersonal Space Representation. Neuropsychologia 48 (3), 803–811. doi:10.1016/j.neuropsychologia.2009.11.009

Berger, M., Neumann, P., and Gail, A. (2019). Peri-Hand Space Expands Beyond Reach in the Context of Walk-and-Reach Movements. Sci. Rep. 9 (1), 1–12. doi:10.1038/s41598-019-39520-8

Berti, A., and Frassinetti, F. (2000). When Far Becomes Near: Remapping of Space by Tool Use. J. Cogn. Neurosci. 12 (3), 415–420. doi:10.1162/089892900562237

Binkofski, F., Butler, A., Buccino, G., Heide, W., Fink, G., Freund, H.-J., et al. (2003). Mirror Apraxia Affects the Peripersonal Mirror Space. A Combined Lesion and Cerebral Activation Study. Exp. Brain Res. 153 (2), 210–219. doi:10.1007/s00221-003-1594-2

Bonifazi, S., Farnè, A., Rinaldesi, L., and Làdavas, E. (2007). Dynamic Size-Change of Peri-Hand Space through Tool-Use: Spatial Extension or Shift of the Multi-Sensory Area. J. Neuropsychol. 1 (1), 101–114. doi:10.1348/174866407x180846

Bonnier, P. (2009). Asomatognosia P. Bonnier. L’aschématie. Revue Neurol 1905;13:605-9. Epilepsy Behav. 16 (3), 401–403. doi:10.1016/j.yebeh.2009.09.020

Brain, W. R. (1941). Visual Orientation with Special Reference to Lesions of the Right Cerebral Hemisphere. Brain A J. Neurol. 64, 244–272. doi:10.1093/brain/64.4.244

Bremmer, F., Schlack, A., Shah, N. J., Zafiris, O., Kubischik, M., Hoffmann, K.-P., et al. (2001). Polymodal Motion Processing in Posterior Parietal and Premotor Cortex. Neuron 29 (1), 287–296. doi:10.1016/s0896-6273(01)00198-2

Brozzoli, C., Cardinali, L., Pavani, F., and Farnè, A. (2010). Action-Specific Remapping of Peripersonal Space. Neuropsychologia 48 (3), 796–802. doi:10.1016/j.neuropsychologia.2009.10.009

Brozzoli, C., Makin, T. R., Cardinali, L., Holmes, N. P., and Farnè, A. (2012). “PPS: A Multisensory Interface for Body-Object Interactions,” in The Neural Bases of Multisensory Processes. (Boca Raton, FL: CRC Press/Taylor & Francis), Chap. 23.

Bufacchi, R. J., Liang, M., Griffin, L. D., and Iannetti, G. D. (2015). A Geometric Model of Defensive Peripersonal Space. J. Neurophysiol. 115 (1), 218–225. doi:10.1152/jn.00691.2015

Bufacchi, R. J., and Iannetti, G. D. (2016). Gravitational Cues Modulate the Shape of Defensive Peripersonal Space. Curr. Biol. 26 (21), R1133–R1134. doi:10.1016/j.cub.2016.09.025

Bufacchi, R. J., and Iannetti, G. D. (2018). An Action Field Theory of Peripersonal Space. Trends Cogn. Sci. 22 (12), 1076–1090. doi:10.1016/j.tics.2018.09.004

Canzoneri, E., Magosso, E., and Serino, A. (2012). Dynamic Sounds Capture the Boundaries of Peripersonal Space Representation in Humans. PloS One 7 (9), e44306. doi:10.1371/journal.pone.0044306

Cartaud, A., Ruggiero, G., Ott, L., Iachini, T., and Coello, Y. (2018). Physiological Response to Facial Expressions in Peripersonal Space Determines Interpersonal Distance in a Social Interaction Context. Front. Psychol. 9, 657. doi:10.3389/fpsyg.2018.00657

Cléry, J., Guipponi, O., Wardak, C., and Ben Hamed, S. (2015). Neuronal Bases of Peripersonal and Extrapersonal Spaces, Their Plasticity and Their Dynamics: Knowns and Unknowns. Neuropsychologia 70, 313–326. doi:10.1016/j.neuropsychologia.2014.10.022

Cuevas-Rodríguez, M., Picinali, L., González-Toledo, D., Garre, C., de la Rubia-Cuestas, E., Molina-Tanco, L., et al. (2019). 3D Tune-In Toolkit: An Open-Source Library for Real-Time Binaural Spatialisation. PloS One 14 (3). doi:10.1371/journal.pone.0211899

Cutting, J. E., and Vishton, P. M. (1995). “Perceiving Layout and Knowing Distances: The Integration, Relative Potency, and Contextual Use of Different Information about Depth,” in Perception of Space and Motion. Academic Press, 69–117. doi:10.1016/b978-012240530-3/50005-5

D’Angelo, M., Di Pellegrino, G., Seriani, S., Gallina, P., and Frassinetti, F. (2018). The Sense of Agency Shapes Body Schema and Peripersonal Space. Sci. Rep. 8, 13847. doi:10.1038/s41598-018-32238-z

De Vignemont, F. (2010). Body Schema and Body Image-Pros and Cons. Neuropsychologia 48 (3), 669–680. doi:10.1016/j.neuropsychologia.2009.09.022

De Vignemont, F. (2018). Mind the Body: An Exploration of Bodily Self-Awareness. London: Oxford University Press.

De Vignemont, F., and Iannetti, G. D. (2015). How Many PPSs? Neuropsychologia 70, 327–334. doi:10.1016/j.tics.2003.12.008

Delevoye-Turrell, Y., Vienne, C., and Coello, Y. (2011). Space Boundaries in Schizophrenia. Soc. Psychol. 42, 193–204. doi:10.1027/1864-9335/a000063

Dell, C., Crow, A., and Bartenieff, I. (1977). Space Harmony: Basic Terms. New York, NY: Dance Notation Bureau Press.

Di Pellegrino, G., and Làdavas, E. (2015). Peripersonal Space in the Brain. Neuropsychologia 66, 126–133. doi:10.1016/j.neuropsychologia.2014.11.011

Dijkerman, H. C. (2017). On Feeling and Reaching: Touch, Action, and Body Space, Neuropsychology of Space: Spatial Functions of the Human Brain. (London: Academic Press), 77–122.

Ehrsson, H. H., Spence, C., and Passingham, R. E. (2004). That’s My Hand! Activity in Premotor Cortex Reflects Feeling of Ownership of a Limb. Science 305 (5685), 875–877. doi:10.1126/science.1097011

Farnè, A., and Làdavas, E. (2000). Dynamic Size-Change of Hand Peripersonal Space Following Tool Use. Neuroreport. 11 (8), 1645–1649. doi:10.1097/00001756-200006050-00010

Farnè, A., Serino, A., and Làdavas, E. (2007). Dynamic Size-Change of Peri-Hand Space Following Tool-Use: Determinants and Spatial Characteristics Revealed through Cross-Modal Extinction. Cortex. 43 (3). doi:10.1016/s0010-9452(08)70468-4

Ferri, F., Tajadura-Jiménez, A., Väljamäe, A., Vastano, R., and Costantini, M. (2015). Emotion-Inducing Approaching Sounds Shape the Boundaries of Multisensory Peripersonal Space. Neuropsychologia 70, 468–475. doi:10.1016/j.neuropsychologia.2015.03.001

Fini, C., Costantini, M., and Committeri, G. (2014). Sharing Space: The Presence of Other Bodies Extends the Space Judged as Near. PloS One 9 (12), e114719. doi:10.1371/journal.pone.0114719

Fogassi, L., Gallese, V., Fadiga, L., Luppino, G., Matelli, M., and Rizzolatti, G. (1996). Coding of Peripersonal Space in Inferior Premotor Cortex (area F4). J. Neurophysiol. 76 (1), 141–157. doi:10.1126/science.7973661

Gallagher, S. (1986). Body Image and Body Schema: A Conceptual Clarification. J. Mind Behav., 541–554.

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (2009). Action Recognition in the Premotor Cortex. Brain. 132, 1685–1689.

Galli, G., Noel, J. P., Canzoneri, E., Blanke, O., and Serino, A. (2015). The Wheelchair as a Full-Body Tool Extending the PPS. Front. Psychol. 6, 639. doi:10.3389/fpsyg.2015.00639

Gamberini, L., Seraglia, B., and Priftis, K. (2008). Processing of Peripersonal and Extrapersonal Space Using Tools: Evidence from Visual Line Bisection in Real and Virtual Environments. Neuropsychologia 46 (5), 1298–1304. doi:10.1016/j.neuropsychologia.2007.12.016

Graziano, M. (2017). The Spaces between Us: A Story of Neuroscience, Evolution, and Human Nature. London: Oxford University Press. doi:10.4324/9781351314442

Graziano, M. S. A., and Gross, C. G. (1993). A Bimodal Map of Space: Somatosensory Receptive Fields in the Macaque Putamen with Corresponding Visual Receptive Fields. Exp. Brain Res. 97, 96–109. doi:10.1007/bf00228820

Graziano, M., Yap, G., and Gross, C. (1994). Coding of Visual Space by Premotor Neurons. Science 266 (5187), 1054–1057. doi:10.1126/science.7973661

Grüsser, O.-J. (1983). “Multimodal Structure of the Extrapersonal Space,” in Spatially Oriented Behavior. New York, NY: Springer, 327–352. doi:10.1007/978-1-4612-5488-1_18

Halligan, P. W., and Marshall, J. C. (1991). Left Neglect for Near but Not Far Space in Man. Nature. 350 (6318), 498–500. doi:10.1038/350498a0

Head, H., and Holmes, G. (1911). Sensory Disturbances from Cerebral Lesions. Brain 34 (2–3), 102–254. doi:10.1093/brain/34.2-3.102

Hobeika, L., Viaud-Delmon, I., and Taffou, M. (2018). Anisotropy of Lateral Peripersonal Space is Linked to Handedness. Exp. Brain Res. 236 (2), 609–618. doi:10.1007/s00221-017-5158-2

Holmes, N. P., Martin, D., Mitchell, W., Noorani, Z., and Thorne, A. (2020). Do Sounds Near the Hand Facilitate Tactile Reaction Times? Four Experiments and a Meta-Analysis Provide Mixed Support and Suggest a Small Effect Size. Exp. Brain Res. 238 (4), 995–1009. doi:10.1007/s00221-020-05771-5

Holmes, N. P., and Spence, C. (2004). The Body Schema and Multisensory Representation(s) of Peripersonal Space. Cogn. Process. 5 (2), 94–105. doi:10.1007/s10339-004-0013-3

Hunley, S. B., and Lourenco, S. F. (2018). What is PPS? An Examination of Unresolved Empirical Issues and Emerging Findings. Wiley Interdiscip. Rev. Cogn. Sci. 9 (6), e1472. doi:10.1002/wcs.1472

Iachini, T., Coello, Y., Frassinetti, F., Senese, V. P., Galante, F., and Ruggiero, G. (2016). Peripersonal and Interpersonal Space in Virtual and Real Environments: Effects of Gender and Age. J. Environ. Psychol. 45, 154–164. doi:10.1016/j.jenvp.2016.01.004

Iachini, T., Pagliaro, S., and Ruggiero, G. (2015a). Near or Far? It Depends on My Impression: Moral Information and Spatial Behavior in Virtual Interactions. Acta Psychologica 161, 131–136. doi:10.1016/j.actpsy.2015.09.003

Iachini, T., Ruggiero, G., Ruotolo, F., di Cola, A. S., and Senese, V. P. (2015b). The Influence of Anxiety and Personality Factors on Comfort and Reachability Space: A Correlational Study. Cogn. Process. 16 (1), 255–258. doi:10.1007/s10339-015-0717-6

Kandula, M., Van der Stoep, N., Hofman, D., and Dijkerman, H. C. (2017). On the Contribution of Overt Tactile Expectations to Visuo-Tactile Interactions within the Peripersonal Space. Exp. Brain Res. 235 (8), 2511–2522. doi:10.1007/s00221-017-4965-9

Keysers, C., Wicker, B., Gazzola, V., Anton, J.-L., Fogassi, L., and Gallese, V. (2004). A Touching Sight. Neuron. 42 (2), 335–346. doi:10.1016/s0896-6273(04)00156-4

Làdavas, E. (2002). Functional and Dynamic Properties of Visual Peripersonal Space. Trends Cogn. Sci. 6 (1), 17–22. doi:10.1016/s1364-6613(00)01814-3

Làdavas, E., and Serino, A. (2008). Action-dependent Plasticity in PPS Representations. Cogn. Neuropsychol. 25 (7–8), 1099–1113. doi:10.1080/02643290802359113

Lee, J., Cheon, M., Moon, S. E., and Lee, J. S. (2016). “Peripersonal Space in Virtual Reality: Navigating 3D Space with Different Perspectives,” in Proceedings of the 29th Annual Symposium on User Interface Software and Technology. ACM, 207–208.

Lloyd, D. M., Merat, N., Mcglone, F., and Spence, C. (2003a). Crossmodal Links between Audition and Touch in Covert Endogenous Spatial Attention. Perception Psychophys. 65 (6), 901–924. doi:10.3758/bf03194823

Lloyd, D. M., Shore, D. I., Spence, C., and Calvert, G. A. (2003b). Multisensory Representation of Limb Position in Human Premotor Cortex. Nat. Neurosci. 6 (1), 17–18. doi:10.1038/nn991

Lloyd, D. M. (2007). Spatial Limits on Referred Touch to an Alien Limb May Reflect Boundaries of Visuo-Tactile Peripersonal Space Surrounding the Hand. Brain Cogn. 64 (1), 104–109. doi:10.1016/j.bandc.2006.09.013

Longo, M. R., and Lourenco, S. F. (2006). On the Nature of Near Space: Effects of Tool Use and the Transition to Far Space. Neuropsychologia 44 (6), 977–981. doi:10.1016/j.neuropsychologia.2005.09.003

Longo, M. R., and Lourenco, S. F. (2007). Space Perception and Body Morphology: Extent of Near Space Scales with Arm Length. Exp. Brain Res. 177 (2), 285–290. doi:10.1016/s0896-6273(04)00156-4

Lourenco, S. F., Longo, M. R., and Pathman, T. (2011). Near Space and its Relation to Claustrophobic Fear. Cognition 119 (3), 448–453. doi:10.1016/j.cognition.2011.02.009

Makin, T. R., Holmes, N. P., and Ehrsson, H. H. (2008). On the Other Hand: Dummy Hands and Peripersonal Space. Behav. Brain Res. 191 (1), 1–10. doi:10.1016/j.bbr.2008.02.041

Makin, T. R., Holmes, N. P., and Zohary, E. (2007). Is that Near My Hand? Multisensory Representation of Peripersonal Space in Human Intraparietal Sulcus. J. Neurosci. 27 (4), 731–740. doi:10.1523/jneurosci.3653-06.2007

Maravita, A., and Iriki, A. (2004). Tools for the Body (schema). Trends Cogn. Sci. 8 (2), 79–86. doi:10.1016/j.tics.2003.12.008

Maravita, A., Spence, C., and Driver, J. (2003). Multisensory Integration and the Body Schema: Close to Hand and within Reach. Curr. Biol. 13 (13), R531–R539. doi:10.1016/s0960-9822(03)00449-4

Mine, D., and Yokosawa, K. (2020). Disconnected Hand Avatar Can Be Integrated into the Peripersonal Space. Exp. Brain Res., 1–8. doi:10.1007/s00221-020-05971-z

Nandrino, J.-L., Ducro, C., Iachini, T., and Coello, Y. (2017). Perception of Peripersonal and Interpersonal Space in Patients with Restrictive-type Anorexia. Eur. Eat. Disord. Rev. 25 (3), 179–187. doi:10.1002/erv.2506

Noel, J.-P., Blanke, O., Magosso, E., and Serino, A. (2018). Neural Adaptation Accounts for the Dynamic Resizing of Peripersonal Space: Evidence from a Psychophysical-Computational Approach. J. Neurophysiol. 119 (6), 2307–2333. doi:10.1152/jn.00652.2017

Noel, J.-P., Cascio, C. J., Wallace, M. T., and Park, S. (2017). The Spatial Self in Schizophrenia and Autism Spectrum Disorder. Schizophrenia Res. 179, 8–12. doi:10.1016/j.schres.2016.09.021

Noel, J.-P., Grivaz, P., Marmaroli, P., Lissek, H., Blanke, O., and Serino, A. (2015a). Full Body Action Remapping of Peripersonal Space: the Case of Walking. Neuropsychologia 70, 375–384. doi:10.1016/j.neuropsychologia.2014.08.030

Noel, J.-P., Pfeiffer, C., Blanke, O., and Serino, A. (2015b). Peripersonal Space as the Space of the Bodily Self. Cognition 144, 49–57. doi:10.1016/j.cognition.2015.07.012

Noel, J. P., Bertoni, T., Terrebonne, E., Pellencin, E., Herbelin, B., Cascio, C., et al. (2020). Rapid Recalibration of Peri-Personal Space: Psychophysical, Electrophysiological, and Neural Network Modeling Evidence. Cereb. Cortex. 30, 5088–5106. doi:10.1093/cercor/bhaa103

Pavani, F., and Castiello, U. (2004). Binding Personal and Extrapersonal Space through Body Shadows. Nat. Neurosci. 7 (1), 14–16. doi:10.1038/nn1167

Pellencin, E., Paladino, M. P., Herbelin, B., and Serino, A. (2018). Social Perception of Others Shapes One’s Own Multisensory Peripersonal Space. Cortex 104, 163–179. doi:10.1016/j.cortex.2017.08.033

Pfeiffer, C., Noel, J. P., Serino, A., and Blanke, O. (2018). Vestibular Modulation of Peripersonal Space Boundaries. Eur. J. Neurosci. 47 (7), 800–811. doi:10.1111/ejn.13872

Postma, A., and van der Ham, I. J. (2016). Neuropsychology of Space: Spatial Functions of the Human Brain. London: Academic Press.

Previc, F. H. (1990). Functional Specialization in the Lower and Upper Visual Fields in Humans: Its Ecological Origins and Neurophysiological Implications. Behav. Brain Sci. 13 (3), 519–542. doi:10.1017/s0140525x00080018

Previc, F. H. (1998). The Neuropsychology of 3-D Space. Psychol. Bull. 124 (2), 123–164. doi:10.1037/0033-2909.124.2.123

Rizzolatti, G., Fadiga, L., Fogassi, L., and Gallese, V. (1997). NEUROSCIENCE: Enhanced: The Space Around Us. Science 277 (5323), 190–191. doi:10.1126/science.277.5323.190

Rizzolatti, G., Scandolara, C., Matelli, M., and Gentilucci, M. (1981). Afferent Properties of Periarcuate Neurons in Macaque Monkeys. II. Visual Responses. Behav. Brain Res. 2, 147–163. doi:10.1016/0166-4328(81)90053-x

Ruggiero, G., Frassinetti, F., Coello, Y., Rapuano, M., Di Cola, A. S., and Iachini, T. (2017). The Effect of Facial Expressions on Peripersonal and Interpersonal Spaces. Psychol. Res. 81 (6), 1232–1240. doi:10.1007/s00426-016-0806-x

Sambo, C. F., Forster, B., Williams, S. C., and Iannetti, G. D. (2012). To Blink or Not to Blink: Fine Cognitive Tuning of the Defensive Peripersonal Space. J. Neurosci. 32 (37), 12921–12927. doi:10.1523/jneurosci.0607-12.2012

Sambo, C. F., and Iannetti, G. D. (2013). Better Safe Than Sorry? The Safety Margin Surrounding the Body Is Increased by Anxiety. J. Neurosci. 33 (35), 14225–14230. doi:10.1523/jneurosci.0706-13.2013

Serino, A., Canzoneri, E., and Avenanti, A. (2011). Fronto-parietal Areas Necessary for a Multisensory Representation of Peripersonal Space in Humans: An rTMS Study. J. Cogn. Neurosci. 23 (10), 2956–2967. doi:10.1162/jocn_a_00006

Serino, A., Canzoneri, E., Marzolla, M., Di Pellegrino, G., and Magosso, E. (2015a). Extending PPS Representation without Tool-Use: Evidence from a Combined Behavioral-Computational Approach. Front. Behav. Neurosci. 9, 4. doi:10.3389/fnbeh.2015.00004

Serino, A., Noel, J. P., Galli, G., Canzoneri, E., Marmaroli, P., Lissek, H., et al. (2015b). Body Part-Centered and Full Body-Centered PPS Representations. Sci. Rep. 5, 18603. doi:10.1038/srep18603

Serino, A. (2016). Variability in Multisensory Responses Predicts the Self-Space. Trends Cogn. Sci. 20 (3), 169–170. doi:10.1016/j.tics.2016.01.005

Serino, A., Noel, J. P., Mange, R., Canzoneri, E., Pellencin, E., Ruiz, J. B., et al. (2018). PPS: an Index of Multisensory Body–Environment Interactions in Real, Virtual, and Mixed Realities. Front. ICT 4, 31. doi:10.3389/fict.2017.00031

Spence, C., Pavani, F., and Driver, J. (2000). Crossmodal Links between Vision and Touch in Covert Endogenous Spatial Attention. J. Exp. Psychol. Hum. Perception Perform. 26 (4), 1298–1319. doi:10.1037/0096-1523.26.4.1298

Spence, C., Pavani, F., Maravita, A., and Holmes, N. (2008). Multi-sensory Interactions. Haptic rendering: Foundations, algorithms, Appl., 21–52. doi:10.1201/b10636-4

Stone, K. D., Kandula, M., Keizer, A., and Dijkerman, H. C. (2018). Peripersonal Space Boundaries Around the Lower Limbs. Exp. Brain Res. 236 (1), 161–173. doi:10.1007/s00221-017-5115-0

Taffou, M., and Viaud-Delmon, I. (2014). Cynophobic Fear Adaptively Extends Peri-Personal Space. Front. Psychiatry 5, 122. doi:10.3389/fpsyt.2014.00122

Teneggi, C., Canzoneri, E., di Pellegrino, G., and Serino, A. (2013). Social Modulation of Peripersonal Space Boundaries. Curr. Biol. 23 (5), 406–411. doi:10.1016/j.cub.2013.01.043

Yairi, S., Iwaya, Y., Kobayashi, M., Otani, M., Suzuki, Y., and Chiba, T. (2009). “The Effects of Ambient Sounds on the Quality of 3D Virtual Sound Space,” in 2009 Fifth International Conference on Intelligent Information Hiding and Multimedia Signal Processing. IEEE, 1122–1125.

Keywords: peripersonal space, virtual reality, 3D boundary, audio-tactile representation, body schemas, reaction time

Citation: Lerner F, Tahar G, Bar A, Koren O and Flash T (2021) VR Setup to Assess Peripersonal Space Audio-Tactile 3D Boundaries. Front. Virtual Real. 2:644214. doi: 10.3389/frvir.2021.644214

Received: 20 December 2020; Accepted: 27 April 2021;

Published: 13 May 2021.

Edited by:

Doron Friedman, Interdisciplinary Center Herzliya, Israel

Reviewed by:

Tomohiro Amemiya, The University of Tokyo, Japan

Ivan Patané, INSERM U1028 Centre de Recherche en Neurosciences de Lyon, France

Copyright © 2021 Lerner, Tahar, Bar, Koren and Flash. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: France Lerner, RnJhbmNlLkxlcm5lckB3ZWl6bWFubi5hYy5pbA==
