REVIEW article

Front. Virtual Real., 11 March 2021

Sec. Virtual Reality in Medicine

Volume 2 - 2021 | https://doi.org/10.3389/frvir.2021.641650

This article is part of the Research Topic: Exploring Human-Computer Interactions in Virtual Performance and Learning in the Context of Rehabilitation.

Dynamic systems theory transformed our understanding of motor control by recognizing the continual interaction between the organism and the environment. Movement could no longer be visualized simply as a response to a pattern of stimuli or as a demonstration of prior intent; movement is context dependent and is continuously reshaped by the ongoing dynamics of the world around us. Virtual reality is one methodological variable that allows us to control and manipulate that environmental context. A large body of literature exists to support the impact of visual flow, visual conditions, and visual perception on the planning and execution of movement. In rehabilitative practice, however, this technology has been employed mostly as a tool for motivation and enjoyment of physical exercise. The opportunity to modulate motor behavior through the parameters of the virtual world is often ignored in practice. In this article we present the results of experiments from our laboratories and from others demonstrating that presenting particular characteristics of the virtual world through different sensory modalities will modify balance and locomotor behavior. We will discuss how movement in the virtual world opens a window into the motor planning processes and informs us about the relative weighting of visual and somatosensory signals. Finally, we discuss how these findings should influence future treatment design.

Virtual reality (VR) is a compelling and motivating tool that can be used to modulate neural behavior for rehabilitation purposes. Virtual environments can be developed as simple two-dimensional visual experiences and as more complex three-dimensional gaming and functional environments that can be integrated with haptics, electromyography, electroencephalography, and fMRI. These environments can then be used to address a vital need for rehabilitative training strategies that improve functional abilities and real-world interaction. There has been a concerted effort to determine whether motor learning in VR transfers to the physical world (Levac et al., 2019). Although this is important for determining measurable goals for intervention with VR, the sole focus on diminishing a motor deficit without controlling the perceptual factors within the virtual environment could actually interfere with task transfer and the rehabilitation process. Mounting evidence suggests that VR contributes to the complex integration of information from multiple sensory pathways and incorporates the executive processing needed to perceive this multimodal information (Keshner and Fung, 2019). Thus, VR is a rehabilitation tool that can be designed to address the perception-action system required for motor planning, a vital part of motor learning and performance, as well as motor execution.

In humans, common neural activation during action observation and execution has been well documented. A variety of functional neuroimaging studies, using fMRI, positron emission tomography, and magnetoencephalography, have demonstrated that a motor resonance mechanism in the premotor and posterior parietal cortices occurs when participants observe or produce goal-directed actions (Grèzes et al., 2003; Hamzei et al., 2003; Ernst and Bülthoff, 2004). Mirror neurons in the ventral premotor and parietal cortices of the macaque monkey, which fire both when the animal carries out a goal-directed action and when it observes the same action performed by another individual, also provide neurophysiological evidence for a direct matching between action perception and action production (Rizzolatti and Craighero, 2004).

The concept of perception-action coupling has been accepted since Gibson (Gibson, 1979) who argued that when a performer moves relative to the environment, a pattern of optical flow is generated that can then be used to regulate the forces applied to control successive movements (Warren, 1990). In other words, we organize the parameters of our movement in relation to our perception of the signals we are receiving from the environment, and the change resulting from our action will then change the environment we must perceive for any subsequent action. Thus, how we perceive the environmental information will always affect how we organize and execute an action. Not taking into account the environmental factors that influence perception during training may well confound any assessments of performance and transfer of training (Gorman et al., 2013).

The essence of VR is the creation of the environment. Environments are created for many purposes ranging from industrial to entertainment and gaming to medical (Rizzo and Kim, 2005; Levin et al., 2015; Garrett et al., 2018; Keshner et al., 2019). Environments have been developed to overlay virtual objects on the physical world (i.e., augmented reality) or to present a fully artificial digital environment (i.e., VR). Rarely, however, is the motor ability of the performer considered in the design of these environments. In this study we will present work from our laboratories in which we specifically focused on coupling of the environmental and motion parameters.

In a seminal paper initially published in 1958, Gibson formulated the foundations of what would become an influential theory on the visual control of locomotion (Gibson, 2009). Among key aspects of this theory was the role of visual kinaesthesis, or optic flow, in the perception of egomotion and control of locomotion (Warren, 2009). Since the early 2000s, VR technology has undoubtedly contributed to our understanding of the role of optic flow and other sources of visual information in the control of human posture and locomotion (Warren et al., 2001; Wilkie and Wann, 2003).

Several psychophysical phenomena are attributed to the impact of optic flow on perception. Presence and immersion describe the user’s belief in the reality of the environment (Slater, 2003). These terms have been used interchangeably, but they should be distinguished from the perspective of the measurement tool. According to Slater (Slater, 2003), immersion is a measure of the objective level of sensory fidelity provided by a VR system; presence is a measure of the subjective psychological response of a user experiencing that VR system.

Vection is the sensation of body motion in space produced purely by visual stimulation. This illusory motion of the whole body or of body parts is induced in stationary observers viewing environmental motion (Dichgans and Brandt, 1972; Dichgans et al., 1972; Palmisano et al., 2015). An example of this illusion occurs in daily life when watching a moving train and sensing that it is you, and not the train, that is moving (Burr and Thompson, 2011). It is generally agreed that this illusion of self-motion results from a sensory conflict or mismatch that cannot be resolved by the CNS. Vection has also been defined more broadly as the conscious subjective experience of self-motion (Ash et al., 2013) that is crucial for successful navigation and the prevention of disorientation in the real world (Riecke et al., 2012).

Lastly, perception of self-motion is a challenging problem in the interpretation of multiple sensory inputs, requiring the neural combination of visual signals (e.g., optic flow), vestibular signals regarding head motion, and also somatosensory and proprioceptive cues (Deangelis and Angelaki, 2012). To perform successfully, we need to link sensory information to the context of the movement and determine whether there is a match between the visual motion and our vestibular and somatosensory afference and then shape our movement to accurately match the demands of the environment (Hedges et al., 2011). Consistent multisensory information about self-motion, rather than visual-only information, has been shown to reduce vection and improve both heading judgment and steering accuracy (Telford et al., 1995). Subjects demonstrated no compensation for self-motion that was defined solely by vestibular cues, partial compensation (47%) for visually defined self-motion, and significantly greater compensation (58%) during combined visual and vestibular self-motion (Dokka et al., 2015). Body posture will orient to a visual, somatosensory, or vestibular reference frame depending on the task, behavioral goals, and individual preference (Streepey et al., 2007b; Lambrey and Berthoz, 2007). Development across the lifespan and damage to the CNS may produce a shift in sensory preferences and thereby alter the responsiveness to any of the sensory pathways resulting in altered motor behavior (Slaboda et al., 2009; Yu et al., 2020). Thus, understanding how virtual environment parameters influence motor planning and execution is essential if we are to use virtual reality effectively for training and intervention.
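One common way to formalize the relative weighting of sensory signals described above is reliability-weighted (inverse-variance) cue combination. The sketch below is a minimal illustration of that standard model, not a method reported in the studies cited here; the function name `combine_cues` and every heading and variance value are hypothetical.

```python
def combine_cues(estimates, variances):
    """Combine single-cue estimates with weights proportional to 1/variance.

    Returns the combined estimate, its variance, and the normalized weights.
    """
    reliabilities = [1.0 / v for v in variances]
    total_reliability = sum(reliabilities)
    weights = [r / total_reliability for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_variance = 1.0 / total_reliability
    return combined, combined_variance, weights


# Hypothetical heading estimates in degrees (illustrative values only).
visual_heading, visual_variance = 10.0, 4.0
vestibular_heading, vestibular_variance = 4.0, 12.0

heading, variance, weights = combine_cues(
    [visual_heading, vestibular_heading],
    [visual_variance, vestibular_variance],
)
print(f"weights (visual, vestibular): {weights[0]:.2f}, {weights[1]:.2f}")
print(f"combined heading: {heading:.2f} deg, variance: {variance:.2f}")
```

In this toy case the less variable visual cue receives three times the weight of the vestibular cue, and the combined estimate is less variable than either cue alone. Under such a model, a shift in sensory preference with development or CNS damage would correspond to a change in the relative variances.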

Through the combination of VR and neuroimaging tools, key brain regions involved in the perception and use of optic flow during simulated “locomotor tasks” were unveiled. Human motion area hMT+ and ventral intraparietal cortex (VIP) play a role in the perception of egomotion from optic flow (Morrone et al., 2000; Dukelow et al., 2001; Wall and Smith, 2008), while a region of the intraparietal sulcus (IPS) would be responsible for identifying heading from optic flow information (Peuskens et al., 2001; Liu et al., 2013). PET and MRI studies indicate that when both retinal and vestibular inputs are processed, there are changes in the medial parieto-occipital visual area and parietoinsular vestibular cortex (Brandt et al., 1998; Dieterich and Brandt, 2000; Brandt et al., 2002), as well as cerebellar nodulus (Xerri et al., 1988; Kleinschmidt et al., 2002), suggesting a deactivation of the structures processing object-motion when there is a perception of physical motion. When performing VR-based steering tasks, additional regions such as the premotor cortex and posterior cerebellum are recruited (Field et al., 2007; Billington et al., 2010; Liu et al., 2013). The latter two brain regions would contribute to the planning and online monitoring of the observer’s perceived position in space, while also contributing to the generation of appropriate motor responses (Field et al., 2007; Liu et al., 2013). Interestingly, a study that combined EEG with a VR setup during Lokomat-supported locomotion also showed an enhancement in premotor cortex activation when performing a steering task in first or third person view compared to conditions where no locomotor adaptations were required, which the authors also attributed to an enhanced need for motor planning (Wagner et al., 2014).

In most recent VR-based neuroimaging studies, individuals are immersed in more realistic environments and perform tasks of increasing complexity such as attending to or avoiding moving objects during simulated self-motion, where both perceived self-motion and object motion are at play (Calabro and Vaina, 2012; Huang et al., 2015; Pitzalis et al., 2020). Collectively, the fundamental knowledge acquired through VR-based neuroimaging experiments is key as it has allowed rehabilitation scientists to pose hypotheses and explain impaired locomotor behaviors and the heterogeneity thereof in clinical populations with brain disorders such as stroke or Parkinson’s disease. Existing VR-based neuroimaging studies, however, remain foremost limited by their lack of integration of actual locomotor movements and nonvisual self-motion cues (Chaplin and Margrie, 2020). Multisensory convergence takes place at multiple levels within the brain. As an example, animal research has shown that MSTd and the parietoinsular vestibular cortex contribute to a coherent percept of heading by responding both to vestibular cues and optic flow (Duffy, 1998; Angelaki et al., 2011), an observation that was made possible by exposing the animal to a combination of optic flow manipulation and actual body translation in space. In human research, the emergence of mobile neuroimaging tools (e.g., fNIRS, EEG) and more robust analysis algorithms now makes it possible to examine the neural substrates of actual locomotion (Gramann et al., 2011; Brantley et al., 2018; Gennaro and De Bruin, 2018; Nordin et al., 2019; Wagner et al., 2019). Studies combining VR and other technologies (e.g., motion platform, robotic devices) with mobile neuroimaging can be expected, in the near future, to flourish and advance our understanding of locomotor control in complex, comprehensive yet controlled multisensory environments.

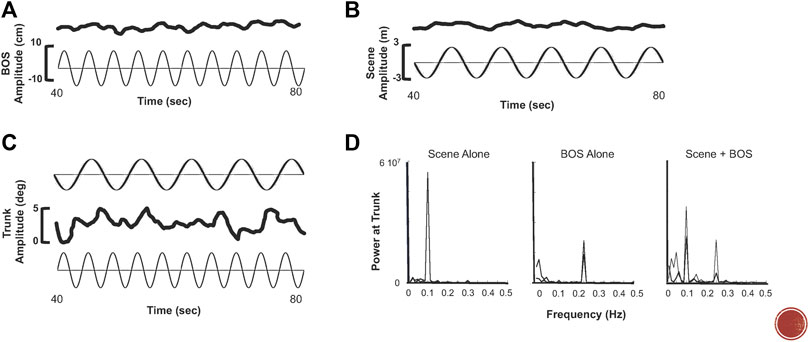

Our studies in immersive VR environments (using both projection and head-mounted display (HMD) technology) reveal that it is nearly impossible for a performer to ignore the dynamic visual stimulus (Keshner and Kenyon, 2000, 2009; Cleworth et al., 2012). As shown in a seminal paper by Dichgans et al. (1972), sensitivity to a virtual visual stimulus is greatly increased when there is a combination of meaningful inputs. Measures of head, trunk, and lower limb excursions revealed that the majority of participants compensated in the opposite direction but at the same frequency for motion of a translating platform in the dark (Keshner et al., 2004). When on a stationary platform with a translating visual scene, participants matched the frequency and direction of the scene motion with their head and trunk but at much smaller amplitudes. Combining platform and visual scene motion produced the greatest amplitudes of motion in all body segments. Additionally, the frequency content of that movement reflected both the frequencies of the platform and the visual scene, suggesting that the sense of presence was greatly intensified when producing self-motion within a dynamic visual environment (Figure 1).

FIGURE 1. (A) Trunk excursion (top trace) to sinusoidal a-p translation (bottom trace) of the base of support (BOS) at 0.25 Hz. (B) Trunk excursion (top trace) to sinusoidal a-p optic flow (scene) at 0.1 Hz. (C) Trunk excursion (middle trace) when 0.25 Hz motion of the BOS (bottom trace) and 0.1 Hz of the scene (top trace) occur simultaneously. (D) FFT analysis demonstrating power at the trunk reflects frequency of the stimulus, i.e., the scene (left), the BOS (middle), and simultaneous BOS and scene motion (right).
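The frequency analysis summarized in panel (D) can be illustrated with a short sketch. It builds a synthetic "trunk excursion" containing the 0.25 Hz platform frequency and the 0.1 Hz scene frequency from the caption, then recovers both from the amplitude spectrum. This is only a hedged illustration with a direct discrete Fourier transform in plain Python; the amplitudes, sampling rate, recording duration, and function names are assumptions, not the authors' data or analysis pipeline.

```python
import math


def amplitude_spectrum(signal, sample_rate_hz, max_freq_hz):
    """Direct DFT amplitude spectrum for bins from 1 up to max_freq_hz (DC skipped)."""
    n = len(signal)
    resolution_hz = sample_rate_hz / n
    freqs, amps = [], []
    k = 1
    while k * resolution_hz <= max_freq_hz + 1e-9 and k < n // 2:
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        freqs.append(k * resolution_hz)
        amps.append(2 * math.hypot(re, im) / n)  # scale to sinusoid amplitude
        k += 1
    return freqs, amps


def dominant_frequencies(freqs, amps, count):
    """Return the `count` frequencies with the largest amplitude, in ascending order."""
    ranked = sorted(zip(amps, freqs), reverse=True)[:count]
    return sorted(round(f, 3) for _, f in ranked)


# Assumed recording parameters (hypothetical): 40 s sampled at 20 Hz.
SAMPLE_RATE_HZ = 20.0
DURATION_S = 40.0
PLATFORM_HZ = 0.25  # base-of-support translation frequency from the caption
SCENE_HZ = 0.1      # optic flow frequency from the caption

n_samples = int(SAMPLE_RATE_HZ * DURATION_S)
times = [i / SAMPLE_RATE_HZ for i in range(n_samples)]

# Synthetic trunk excursion: sum of both stimulus frequencies (amplitudes are made up).
trunk = [
    1.0 * math.sin(2 * math.pi * PLATFORM_HZ * t)
    + 0.6 * math.sin(2 * math.pi * SCENE_HZ * t)
    for t in times
]

freqs, amps = amplitude_spectrum(trunk, SAMPLE_RATE_HZ, max_freq_hz=1.0)
peaks = dominant_frequencies(freqs, amps, count=2)
print("dominant frequencies (Hz):", peaks)
```

With a 40 s window the frequency resolution is 0.025 Hz, so both stimulus frequencies fall exactly on a spectral bin; shorter or misaligned recordings would smear power across neighboring bins.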

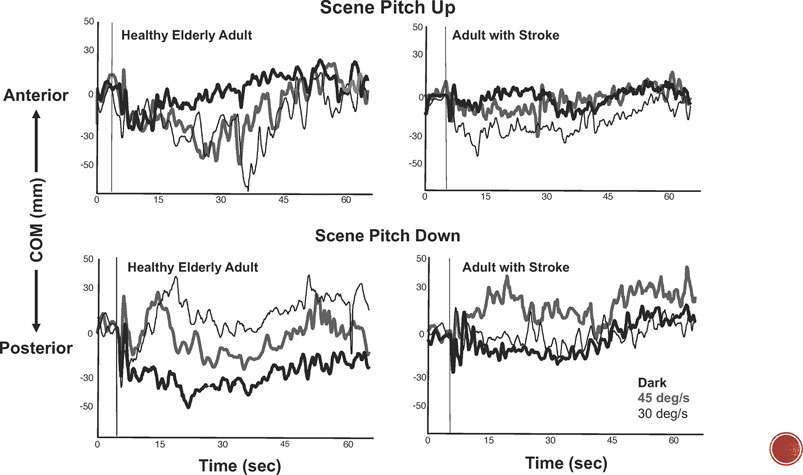

These results suggest that the postural response was modulated by all of the available sensory signals. In fact, the data strongly establish that kinematic variables of postural behavior are responsive to the metrics of the multimodal inputs. In particular, postural behavior has been shown to be influenced by the velocity, direction, and frequency parameters of the optic flow (Figure 2). For example, healthy young adults standing on a tilting platform in a 3-wall projection environment (Dokka et al., 2010; Wang et al., 2010) modified the direction, velocity, and amplitude of their COM motion in relation to the velocity of a visual scene rotating in the pitch direction. When standing on a stable surface, healthy young adults matched the direction of their head and trunk swaying to the direction of visual motion in both pitch and roll.

FIGURE 2. Center of mass (COM) excursions during a-p translations of a platform at 0.25 Hz while standing in the dark (bold black line) and while viewing continuous pitch rotations of optic flow at 30 deg/sec (thin black line) and 45 deg/sec (bold gray line). Top graphs: responses to pitch-up rotations of the scene in a healthy 62-year-old adult (left) and a 65-year-old adult with right hemiplegia (right). Bottom graphs: responses to pitch-down rotations of the scene in a healthy elderly adult (left) and an elderly adult with stroke (right). Vertical thin line indicates start of optic flow field.

Although velocity and direction may be governed by optic flow, magnitude of the response does vary across individuals (Keshner et al., 2004; Streepey et al., 2007a; Dokka et al., 2009). Healthy young adults in front of a wide field of view virtual scene that translated in the anterior-posterior (a-p) direction stood upon a rod that supported 100% or 45% of their foot length; thus, the base of support was whole or narrowed. Even in these healthy, young adults, success at maintaining a vertical orientation was compromised when standing on the narrowed base of support; however, the sway of about half the participants matched the frequency of the visual scene whereas the other half did not demonstrate a predominant frequency. This suggests a preferential sensory referencing in some participants to the sinusoidal visual signals and in others to the proprioceptive signals from the body. Intraindividual variability and task dependency that is demonstrated in the virtual environment (Keshner and Kenyon, 2000; Streepey et al., 2007b) imply that postural control is both task and organism dependent and should not be treated as a stereotypical, automatic behavior.

A developmental impact on the ability to process optic flow was revealed during a functional sit-to-stand task (Slaboda et al., 2009). Healthy children (8–12 years) and adults (21–49 years) were seated in a virtual environment that rotated in the pitch and roll directions. Participants were told to stand under one of three conditions: (1) concurrent with onset of visual motion, (2) after an immersion period in the moving visual environment, or (3) without visual input. Both adults and children reduced head and trunk angular velocity after immersion in the moving visual environment. Unlike adults, children demonstrated significant differences in displacement of the head center of mass during the immersion and concurrent trials when compared to trials without visual input. These data support previous reports (Keshner and Kenyon, 2000; Keshner et al., 2004) of a time-dependent effect of vision on response kinematics in adults. Responses in children are more influenced by the initial presence or absence of vision, from which we might infer poorer error correction in the course of an action.

Optic flow in the virtual environment robustly influences the organization of postural kinematics. This influence, however, fluctuates with the integrity of the CNS and the perceptual experiences of each individual. Sensory signals are often reweighted in individuals as they age and with neurological disability, which then alters the postural response to optic flow (Slaboda et al., 2009; Yu et al., 2018). Thus, the success of any therapeutic intervention employing VR needs to consider the parameters of visual motion of the virtual environment. There are, however, some global precepts that can guide the deployment of any VR intervention. Specifically, studies have consistently demonstrated that (1) the direction of full-field optic flow will regulate the direction of postural sway (Keshner and Kenyon, 2009); (2) increasing velocity will increase the magnitude of postural sway (Dokka et al., 2009; Wang et al., 2010); (3) multiple sensory frequencies will be reflected in the body segment response frequencies (Keshner et al., 2004; Slaboda et al., 2011a); and (4) the influence of optic flow becomes more substantial during self-motion (Dokka et al., 2010).

Training individuals who have instability and sensory avoidance to produce effective postural behaviors has obvious value, and there are some studies demonstrating carryover to the functional postural behavior of individuals with labyrinthine loss (Haran and Keshner, 2008; Bao et al., 2019), Parkinson’s disease (Bryant et al., 2016; Nero et al., 2019; Rennie et al., 2020), and stroke (Van Nes et al., 2006; Madhavan et al., 2019; Saunders et al., 2020). The very strong directional effect of optic flow on posture and spatial orientation (Keshner and Kenyon, 2000) would support incorporating this technology into any balance rehabilitation program.

The ability to change response magnitudes relative to visual velocity has been demonstrated in young healthy adults and in individuals diagnosed with dizziness (Keshner et al., 2007), stroke (Slaboda and Keshner, 2012), and cerebral palsy (Yu et al., 2018; Yu et al., 2020) when support surface tilts were combined with sudden rotations of the visual field. Both of these variables are time dependent and require further clinical trials to determine appropriate dosage of these interventions. Sensory reweighting, however, has been shown to be frequency dependent and requires control of multimodal stimuli. Angular displacements of the head, trunk, and head with respect to the trunk consistently revealed that healthy individuals linked their response parameters to visual inputs and those with visual sensitivity as measured with a Rod and Frame test could not use the visual information to appropriately modulate their responses. Instead, individuals with visual dependence, with or without a history of labyrinthine dysfunction, tended to produce longer duration and larger magnitude angular velocities of the head than healthy individuals in all planes of motion and at all scene velocities (Keshner and Dhaher, 2008; Wright et al., 2013).

These findings could be explained by an inability to adapt the system to the altered gains resulting from the neurological damage so that they could not accommodate to sensory signals with which they had no prior experience (i.e., constant motion of the visual world). A similar outcome was observed in healthy young adults who received vibrotactile noise on the plantar surface of the foot during quiet stance. Stochastic resonant vibration of the lower limbs in older adults and patients with stroke has been shown to reduce postural instability (Van Nes et al., 2004; Guo et al., 2015; Lu et al., 2015; Leplaideur et al., 2016). Although vibration does not shorten the time to react to instability, it can decrease the amplitude of fluctuation between the controlled body segment and unstable surface thereby increasing the likelihood that a corrective response will be effective. While viewing visual field rotations, however, magnitude and noise of their center of mass (COM) and center of pressure (COP) responses increased rather than decreased with vibration (Keshner et al., 2011) suggesting that, by increasing noise in the system, individuals were unable to fully compensate for the disturbances. The use of noise and sensory mismatch to encourage desensitization or compensation is currently being explored for the treatment of dizziness and postural instability (Pavlou et al., 2011; Pavlou et al., 2012; Sienko et al., 2017; Bao et al., 2019). Individuals with dizziness from concussion or labyrinthine dysfunction have also been exposed to erroneous or conflicting visual cues (visual-vestibular mismatch) while attempting to maintain balance (Bronstein and Pavlou, 2013; Pavlou et al., 2013). Results suggest that exposure to unpredictable and noisy environments can be a valuable tool for motor rehabilitation. Dosages (e.g., timeframe and range of stimulation) of the intervention need to be further explored with controlled trials.

An extensive body of literature has examined the role of visual self-motion in the control of locomotion by selectively manipulating the direction or speed of the optic flow provided through the virtual environment. Our work and that of others have shown that one’s walking speed is affected by changing optic flow speeds and shows an out-of-phase modulation pattern. In other words, slower walking speeds are adopted at faster optic flow speeds while faster walking speeds are observed at slower optic flows (Pailhous et al., 1990; Konczak, 1994; Prokop et al., 1997; Varraine et al., 2002). Such a strategy would reduce the incongruity that arises from the mismatch between proprioceptive information from the legs and the visual flow presented in the virtual simulation (Prokop et al., 1997; Lamontagne et al., 2007). The presence of optic flow during treadmill walking also influences one’s ability to correct small stepping fluctuations (Salinas et al., 2017). Compelling evidence also supports the role of optic flow in the control of locomotor steering (Jahn et al., 2001; Warren et al., 2001; Mulavara et al., 2005; Turano et al., 2005; Bruggeman et al., 2007). In the latter body of literature, a shift in the focus of expansion of the optic flow is externally induced and this causes the participants to perceive a shift in their heading direction. As a result, the participants correct the perceived shift by altering their walking trajectory in the opposite direction. Our team has also shown that depending on whether the shift in the focus of expansion is induced through rotational vs. translational flow, different steering strategies emerge (Sarre et al., 2008). In the former scenario, a steering strategy characterized by head, trunk, and foot reorientation is observed, while the latter scenario rather induces a typical “crab walk pattern” characterized by a change of walking trajectory with very little body segment reorientation. Such a crab walking pattern has also been reported in other VR studies that used translational optic flow (Warren et al., 2001; Berard et al., 2009).
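The link between the focus of expansion and heading can be made concrete with the standard pinhole-camera description of translational optic flow. The sketch below is an illustration under stated assumptions (pure translation, a static scene, unit focal length, hypothetical walking speed and shift angle) and is not the rendering method used in the studies above; the function names are invented for this example.

```python
import math


def translational_flow(x, y, depth, velocity, focal_length=1.0):
    """Image-plane flow (u, v) at image point (x, y) for pure observer translation.

    velocity is (tx, ty, tz) with x to the right, y up, and z straight ahead.
    """
    tx, ty, tz = velocity
    u = (x * tz - focal_length * tx) / depth
    v = (y * tz - focal_length * ty) / depth
    return u, v


def focus_of_expansion(velocity, focal_length=1.0):
    """Image point where translational flow vanishes; it marks the heading direction."""
    tx, ty, tz = velocity
    return focal_length * tx / tz, focal_length * ty / tz


def heading_angle_deg(velocity):
    """Horizontal heading angle relative to straight ahead, in degrees."""
    tx, _, tz = velocity
    return math.degrees(math.atan2(tx, tz))


WALK_SPEED = 1.2   # m/s, hypothetical
SHIFT_DEG = 20.0   # hypothetical shift of the simulated heading to the right

straight = (0.0, 0.0, WALK_SPEED)
shifted = (
    WALK_SPEED * math.sin(math.radians(SHIFT_DEG)),
    0.0,
    WALK_SPEED * math.cos(math.radians(SHIFT_DEG)),
)

foe_straight = focus_of_expansion(straight)
foe_shifted = focus_of_expansion(shifted)
flow_at_foe = translational_flow(foe_shifted[0], foe_shifted[1], 5.0, shifted)

print("FOE, straight heading:", foe_straight)
print("FOE, shifted heading:", foe_shifted)
print("simulated heading (deg):", heading_angle_deg(shifted))
```

In this model, displacing the focus of expansion in the display is equivalent to simulating a different heading, which is consistent with participants steering in the opposite direction to cancel the perceived shift.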

Interestingly, if the same rotational optic flow is generated via a simulated head yaw rotation (camera rotation in VR) vs. an actual head rotation, a different locomotor behavior also emerges, whereby the simulated but not the actual head rotation results in a trajectory deviation (Hanna et al., 2017). Such findings support the potential contribution of the motor command (here neck and oculomotor muscles) in heading estimation (Banks et al., 1996; Crowell et al., 1998). These findings also corroborate the presence of multisensory integration of both visual and nonvisual information (e.g., vestibular, proprioceptive, and somatosensory) to generate a single representation of self-motion and orientation in space (De Winkel et al., 2015; Acerbi et al., 2018).

Collectively, the above-mentioned observations demonstrate that while locomotor adaptations rely on multisensory integration, vision and here, more specifically, optic flow exert a powerful influence on the observed behavior. Findings presented also provide concrete examples as to how optic flow information can be selectively manipulated to alter locomotor behavior. Thus, not only is the replication of reality in VR not a necessity, but the selective manipulation of the sensory environment can and should, as needed, be capitalized on to promote the desired outcome. To allow for such manipulations to be effective in a given clinical population, however, the latter must show a residual capacity to perceive and utilize optic flow information while walking.

The perception of optic flow and its use in locomotion have been examined in several clinical populations such as older adults (Chou et al., 2009; Lalonde-Parsi and Lamontagne, 2015) and Parkinson’s disease patients (Schubert et al., 2005; Davidsdottir et al., 2008; Young et al., 2010; Van Der Hoorn et al., 2012), but let us use stroke as an example to demonstrate applications in rehabilitation. Following stroke, the perception of optic flow often is preserved (Vaina et al., 2010; Ogourtsova et al., 2018) but becomes affected when the lesion is located in rostrodorsal parietal and occipitoparietal areas of the brain, which are involved in global motion perception (Vaina et al., 2010). In the presence of unilateral spatial neglect (USN), the bilateral perception of optic flow (e.g., optic flow direction and coherence) becomes dramatically altered (Ogourtsova et al., 2018). In fact, altered optic flow perception along with USN severity as measured by clinical tests explains 58% of the variance in locomotor heading errors in individuals with poststroke USN (Ogourtsova et al., 2018). Such observations emphasize the need to consider the role of visual-perceptual disorders in poststroke locomotor impairments.

Beyond studies examining the perception of optic flow, our group has also examined the use of optic flow during locomotion by manipulating the direction or speed of the virtual environment (Lamontagne et al., 2007; Lamontagne et al., 2010; Berard et al., 2012; Aburub and Lamontagne, 2013). From these experiments emerged three main observations: (1) globally, the ability to utilize optic flow information during walking is altered following stroke; (2) there is, however, a large heterogeneity across individuals, ranging from no alterations to profound alterations in locomotor responses to optic flow manipulations; and (3) most individuals show some degree of modulation (albeit incomplete or imperfect) of their locomotor behavior in response to optic flow manipulation. Thus, one can infer that there is potential to induce the desired locomotor adaptations through optic flow manipulation in stroke survivors. However, integration of such manipulations in intervention studies for locomotor rehabilitation is scarce and evidence of effectiveness is lacking.

In 2012, Kang and collaborators combined treadmill training with optic flow speed manipulation for 4 weeks and examined the effects on balance and locomotion following stroke (Kang et al., 2012). Unfortunately, although the study showed larger posttraining gains in walking speed and endurance in the optic flow manipulation group vs. control groups receiving either conventional treadmill training or a stretching program, the study design did not allow the contribution of VR itself to be dissociated from that of the optic flow manipulation. Furthermore, it is unclear if any online walking speed adaptation took place during training given the absence of a self-paced mode on the treadmill. A study from Bastian’s lab also showed that combining split-belt walking with an incongruent optic flow that predicted the belt speed of the next step enhanced the rate of learning during split-belt locomotor adaptations in healthy individuals (Finley et al., 2014). To date, however, the integration of such a paradigm as part of an intervention to reduce poststroke gait asymmetry remains to be examined.

In recent years, and thanks to technological development that allows tracking and displaying body movements in real-time in a virtual environment, the development of avatar-based paradigms in rehabilitation has emerged. Unlike virtual humans or agents which are controlled by computer algorithms, avatars are controlled by the users and “mimic” their movements in real-time. The avatar can represent either selected body parts (e.g., arms or legs) or the full body. They can also be viewed from a first-person perspective (1 PP) or third-person perspective (3 PP). In the paragraphs below, we are mainly concerned with exploring the impact of avatar-based feedback as a paradigm to enhance postural control and locomotion in clinical populations, but literature on upper extremity research that explores mechanisms is also examined.

Potential principles of action of avatar-based feedback are multiple and, as stated in a recent expert review on virtual reality, they open a “plethora of possibilities for rehabilitation” (Tieri et al., 2018). When exposed to virtual simulations representing body parts or the full body, a phenomenon referred to as virtual embodiment can develop. This sense of embodiment translates as the observer experiencing a sense of owning the virtual body simulation (ownership) and of being responsible for its movement (agency) (Longo et al., 2008; Pavone et al., 2016). While such sense of embodiment is subjectively reported as higher for 1 PP vs. 3 PP (Slater et al., 2010; Petkova et al., 2011; Pavone et al., 2016), we argue that the latter perspective remains very useful for postural and locomotor rehabilitation (as one does not necessarily look down at their feet, for instance, when standing or walking). The similarity between the virtual vs. real body part(s) (Tieri et al., 2017; Kim et al., 2020; Pyasik et al., 2020), the real-time attribute or synchrony of the simulation with actual movements (Slater et al., 2009; Kim et al., 2020), and the combination of sensory modalities (e.g., visuotactile (Slater et al., 2008) or visuovestibular (Lopez et al., 2008; Lopez et al., 2012)) are factors that enhance the illusory sensation.

Neuroimaging experiments indicate that the premotor areas (pre-SMA and BA6) are involved in the sense of agency (Tsakiris et al., 2010), while ownership would be mediated through multimodal integration that involves multiple brain areas including the somatosensory cortex, intraparietal cortex, and the ventral portion of the premotor cortex (Blanke, 2012; Guterstam et al., 2019; Serino, 2019). Mirror neurons located in the ventral premotor cortex and parietal areas, but also in other regions such as visual cortex, cerebellum, and regions of the limbic system, also fire when an individual observes someone else’s action (Molenberghs et al., 2012) and are likely activated when exposed to avatar-based feedback. Passively observing modified (erroneous) avatar-based feedback also leads to activation of brain regions associated with error monitoring (Pavone et al., 2016; Spinelli et al., 2018), which is a process essential for motor learning.

During actual locomotion, the performance of a steering task while exposed to avatar feedback provided in 1 PP or 3 PP was shown to induce larger activation in premotor and parietal areas compared to movement-unrelated feedback or mirror feedback (Wagner et al., 2014). While such enhanced activation appears primarily caused by the motor planning and visuomotor demands associated with gait adaptations (Wagner et al., 2014), it may also have been potentiated by a sense of embodiment and/or mirror neuron activations. More recently, another study reported an event-related synchronization in central-frontal (likely SMA) and parietal areas during both actual and imagined walking while exposed to 1 PP avatar-based feedback (Alchalabi et al., 2019). The authors attributed this event-related synchronization to the high sense of agency experienced during these conditions. Together, the latter two locomotor studies provide preliminary evidence that the body of knowledge on avatar-based feedback gathered primarily via upper extremity experiments can be extended, at least in part, to locomotion. Most importantly, observations from neuroimaging experiments as a whole indicate that avatar-based feedback does modulate brain activation. Through repeated exposure, such a paradigm could thus support neuronal reorganization and recovery following a neurological insult.

From a more pragmatic perspective, avatar-based feedback also capitalizes on the remarkable ability of the human brain to perceive and interpret biological motion information (Johansson, 1973). This ability makes it possible to recognize features such as the nature of the activity being performed (e.g., walking), gender, and emotion, even when exposed to impoverished visual simulations such as point-light displays (Johansson, 1973; Troje, 2002; Atkinson et al., 2004; Schouten et al., 2010). For similar reasons, we as humans can easily identify even the most subtle limp when observing a walking pattern, which makes avatar-based feedback a potentially powerful approach to give and receive feedback on complex tasks such as locomotion. Avatars further make it possible to provide real-time feedback on the quality of movement (knowledge of performance) (Liu et al., 2020b), which is especially challenging for clinicians to do. In line with the literature on embodiment presented earlier, avatar-based feedback may also impact recovery by enhancing movement awareness, which is affected in clinical populations such as stroke (Wutzke et al., 2013; Wutzke et al., 2015).

Avatar-based feedback can be manipulated in different ways (e.g., view, available sensory modality, modified vs. unmodified feedback, etc.), yet the optimal parameters to obtain the desired responses remain unclear. In a recent study from our laboratory, we posed the question “which avatar view between the front, back and side view, yields the best instantaneous improvement in poststroke gait asymmetry?” (Liu et al., 2020b). Participants were tested while exposed to 3 PP full-body avatars presented either in the front, back, or paretic side view and resulting changes in gait symmetry were examined. The side view, which likely provides the best perspective on the temporal-distance parameters of gait, was the only view that induced enhanced spatial symmetry but only in those participants who initially presented a larger step on the paretic side. This finding was caused by the participants increasing their step length on the nonparetic side when exposed to the avatar, which resulted in improved symmetry only in those with a large paretic step. Such an observation suggests that the initial profile of the participant matters and, by extension, that avatar-based feedback may not be suitable for all individuals. Of note, manipulating 3 PP viewing angle of a virtual arm was also found to alter kinematic outcomes during a reaching task performed while standing (Ustinova et al., 2010). Avatar view thus emerges as a factor to consider in the design of an intervention.

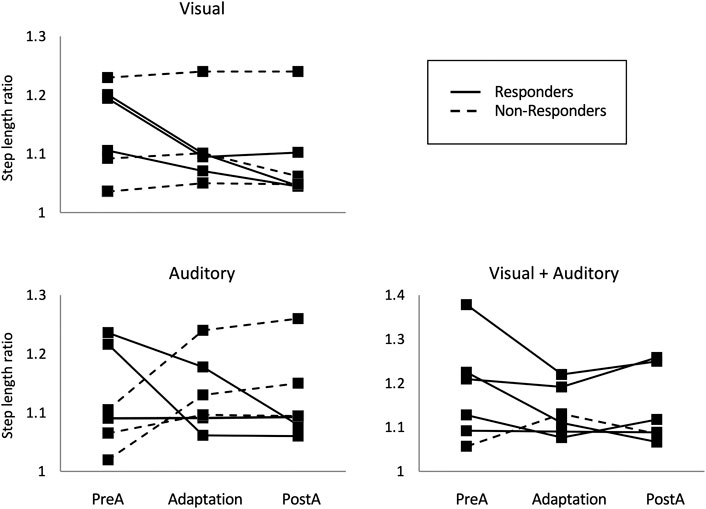

In a second series of experiments, we examined the impact of modulating the sensory modality of avatar-based feedback on poststroke gait asymmetry. The feedback consisted of a 3 PP visual avatar in the side view (visual), footstep sounds (auditory), or a combination of visual avatar and footstep sounds (combined modality) (Liu et al., 2020a). Although these results are preliminary, they suggest that combining sensory modalities yields the largest improvements in spatial symmetry (Figure 3). These results are in agreement with prior studies on other types of multimodal simulation, such as the combination of a visual avatar with tactile or haptic feedback, which was found to have additional beneficial effects on the performance of healthy individuals performing a stepping task (Koritnik et al., 2010) and on the ability of individuals with spinal cord injury to integrate virtual legs into their body representation (Shokur et al., 2016).

FIGURE 3. Step length ratio values exhibited by stroke survivors walking on a self-paced treadmill while exposed to avatar-based feedback in the visual, auditory, and combined (visual + auditory) sensory modalities. Values are presented for the preadaptation (no avatar for 30 s), adaptation (avatar present for 1 min), and postadaptation (avatar removed for 1 min) periods. Responders, that is, individuals showing a reduction of their step length ratio during the adaptation period, are represented by a solid line, while non-responders are represented by a dotted line. Note the larger number of responders to the combined vs. individual sensory modalities.
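The responder criterion in the caption can be illustrated with a short sketch. The caption does not state how the step length ratio is computed, so the convention below (longer step divided by shorter step, giving 1.0 for perfect symmetry) is an assumption, as are the function names and the use of a simple mean over each period. It is not the analysis code used in the study.

```python
from statistics import mean


def step_length_ratio(paretic, nonparetic):
    """Return a symmetry ratio >= 1 (assumed convention: longer / shorter step)."""
    longer, shorter = max(paretic, nonparetic), min(paretic, nonparetic)
    if shorter <= 0:
        raise ValueError("step lengths must be positive")
    return longer / shorter


def mean_ratio(steps):
    """Average the ratio over (paretic, nonparetic) step-length pairs, in metres."""
    return mean(step_length_ratio(p, n) for p, n in steps)


def is_responder(pre_steps, adapt_steps):
    """A responder shows a lower mean ratio during adaptation than before it."""
    return mean_ratio(adapt_steps) < mean_ratio(pre_steps)


# Hypothetical step lengths for one participant with a larger paretic step.
pre = [(0.50, 0.40), (0.52, 0.40)]
adapt = [(0.50, 0.46), (0.50, 0.48)]
print(is_responder(pre, adapt))
```

In this made-up example the nonparetic step lengthens during adaptation, so the ratio moves toward 1.0 and the participant is classified as a responder.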

The evidence supports the use of multimodal feedback to modulate or train functional locomotion from a rehabilitation perspective. In upper extremity rehabilitation research, a well-studied approach consists of artificially increasing the perceived performance error through visual or haptic feedback (i.e., error augmentation paradigm) (Israely and Carmeli, 2016; Liu et al., 2018). Similarly, manipulating avatar-based feedback offers an opportunity to modify locomotor behavior. In 2013, Kannape and Blanke manipulated the temporal delay of avatar-based feedback and found that, while gait agency decreased with longer delays, participants “systematically modulated their stride time as a function of the temporal delay of the visual feedback”, making faster steps in the presence of incongruous temporal feedback (Kannape and Blanke, 2013). More recently, a preliminary study examined the impact of stride length manipulation through hip angle modifications and found a clear trend toward larger step lengths when exposed to larger avatar step lengths (Willaert et al., 2020). Such experiments provide preliminary evidence that modified avatar-based feedback can lead to locomotor adaptations in either the temporal or the spatial domain. Avatar-based feedback can further be augmented with visual biofeedback on specific kinematic or kinetic features of the gait cycle. In children with cerebral palsy, for instance, avatar-based feedback was augmented with biofeedback on knee or hip excursion, as well as step length, resulting in further improvements in those parameters compared to avatar-based feedback alone (Booth et al., 2019).
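The two kinds of manipulation described above, amplifying a performance error and delaying the displayed movement, can be sketched generically. The code below is an illustration under stated assumptions, not the method of any cited study: the linear gain formula is one common way to express error augmentation, and the names `augment_error` and `DelayedFeedback`, as well as the frame-based delay, are hypothetical.

```python
from collections import deque


def augment_error(actual, target, gain):
    """Displayed value with the error (actual - target) scaled by `gain`.

    gain = 1 shows the true movement; gain > 1 exaggerates the error.
    """
    return target + gain * (actual - target)


class DelayedFeedback:
    """Replay tracked poses a fixed number of frames late.

    Until the buffer fills, the first recorded pose is held on screen.
    """

    def __init__(self, delay_frames):
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        # Keep only the poses needed to look `delay_frames` into the past.
        self._buffer = deque(maxlen=delay_frames + 1)

    def push(self, pose):
        """Store the newest pose and return the pose to display now."""
        self._buffer.append(pose)
        return self._buffer[0]


# A 0.40 m step against a 0.50 m target, shown with the error doubled.
print(augment_error(0.40, 0.50, gain=2.0))

# A two-frame delay applied to a stream of frame indices.
feedback = DelayedFeedback(delay_frames=2)
print([feedback.push(frame) for frame in range(5)])
```

In a real system the delay would be specified in milliseconds and converted using the tracking frame rate, and the pose would be a full set of joint angles rather than a single number.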

Collectively, findings in this section demonstrate that avatar-based feedback can be effectively manipulated to modify locomotor behavior and target specific features of gait. It can also be used as a means to enhance the control of movement through brain-computer interfaces (Wang et al., 2012; King et al., 2013; Nierula et al., 2019). Further research is needed, however, to understand how it can be optimized to promote the desired outcome. At this point in time, intervention studies that specifically focus on repeated exposure to avatar-based feedback as an intervention for postural or locomotor rehabilitation in populations with sensorimotor disorders are crucially lacking.

Inclusion of external agents (i.e., virtual humans) in virtual scenarios has emerged as a means to modulate locomotion in the context of rehabilitation. Such an approach stems in part from a large body of research on the use of external sensory cueing (e.g., visual or auditory) to modulate the temporal-distance factors of gait both in healthy individuals (Rhea et al., 2014; Terrier, 2016) and individuals with gait disorders (Roerdink et al., 2007; Spaulding et al., 2013). It also stems from the fact that when two individuals walk together (i.e., when exposed to biological sensory cues), the locomotor behavior is modulated as a result of a mutual interaction between the two walkers (Ducourant et al., 2005) and a phenomenon of “gait synchronization”, whereby a follower matches the gait pattern of the leader, can be observed (Zivotofsky and Hausdorff, 2007; Zivotofsky et al., 2012; Marmelat et al., 2014; Rio et al., 2014). Such gait synchronization can be fostered through different sensory channels (e.g., visual, tactile, and auditory) and is enhanced with multimodal simulations (Zivotofsky et al., 2012). In postural tasks, a similar synchronization of postural sway is observed when individuals are standing in physical contact (Reynolds and Osler, 2014), looking at each other (Okazaki et al., 2015), or sharing a cooperative verbal task (Shockley et al., 2003). Given the flexibility and control afforded by VR, virtual humans can also be used to “cue” and modulate behavior, as demonstrated by studies that have examined instantaneous effects on locomotion (Meerhoff et al., 2017; Meerhoff et al., 2019; Koilias et al., 2020). While external cueing through virtual humans is promising as a tool for rehabilitation, evidence of its effectiveness as an intervention for either posture or locomotion remains to be established.

Virtual humans can also be used for the assessment and training of complex locomotor tasks such as avoiding collisions with other pedestrians, which is a task essential for independent community walking (Patla and Shumway-Cook, 1999; Shumway-Cook et al., 2003). Collision avoidance relies more heavily on vision than on other senses such as audition (Souza Silva et al., 2018). For this reason, most of the literature has focused on the visual modality to infer the control variables involved (Cutting et al., 1995; Gérin-Lajoie et al., 2008; Olivier et al., 2012; Fajen, 2013; Darekar et al., 2018; Pfaff and Cinelli, 2018). VR has brought major contributions to our understanding of collision avoidance, with some elements that are especially relevant to rehabilitation. A first key element is that different collision avoidance strategies emerge when avoiding virtual objects vs. virtual humans. The latter were shown to lead to smaller obstacle clearances, which were interpreted as the use of less conservative avoidance strategies (Lynch et al., 2018; Souza Silva et al., 2018). Factors that may explain such a difference include the level of familiarity with the task (i.e., avoiding pedestrians is far more common than avoiding an approaching cylinder/sphere), the social attributes of the virtual humans (Souza Silva et al., 2018), as well as the local motion cues arising from the limb movements that were shown to shape some aspects of the avoidance strategy (Lynch et al., 2018; Fiset et al., 2020). A combination of real-world and VR studies has also shown that the collision avoidance strategy in response to a human interferer is modulated by factors such as the static vs. moving nature of the interferer (Basili et al., 2013) as well as its direction (Huber et al., 2014; Knorr et al., 2016; Buhler and Lamontagne, 2018; Souza Silva et al., 2018) and speed of approach (Huber et al., 2014; Knorr et al., 2016). All these factors can easily and effectively be manipulated in VR to promote the desired behavior and expose users to the diversity of scenarios they would encounter while walking in the community. Whether personal attributes of the interferers affect collision avoidance strategies, however, is still unclear (e.g., Knorr et al., 2016; Bourgaize et al., 2020) and deserves further investigation.
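Obstacle clearance, the outcome used above to compare avoidance strategies, is commonly summarized as the smallest distance between the walker and the interferer over a trial. The sketch below assumes that both trajectories are sampled synchronously as 2D positions in metres and that clearance is measured centre to centre, ignoring body dimensions. The function name and the example data are hypothetical, and individual studies may define clearance differently.

```python
import math


def minimum_clearance(walker_path, interferer_path):
    """Return (smallest distance, sample index) between two synchronized paths.

    Each path is a sequence of (x, y) positions in metres, one per sample.
    """
    if not walker_path or len(walker_path) != len(interferer_path):
        raise ValueError("paths must be non-empty and of equal length")
    distances = [
        math.dist(walker, interferer)
        for walker, interferer in zip(walker_path, interferer_path)
    ]
    index = min(range(len(distances)), key=distances.__getitem__)
    return distances[index], index


# Walker moving along the x axis past a static interferer offset by 1 m.
walker = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
interferer = [(2.0, 1.0)] * len(walker)
clearance, sample = minimum_clearance(walker, interferer)
print(clearance, sample)
```

Because the interferer's path is just another list of positions, the same function applies to static, approaching, or diagonally crossing virtual humans.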

VR-based studies on pedestrian interactions and collision avoidance, including recent work from our laboratory, have proven to be useful in unveiling the altered collision avoidance strategies experienced by several populations such as healthy older adults (Souza Silva et al., 2019; Souza Silva et al., 2020), individuals with mild traumatic brain injury (Robitaille et al., 2017), and individuals with stroke with (Aravind and Lamontagne, 2014; Aravind et al., 2015; Aravind and Lamontagne, 2017a; b) and without USN (Darekar et al., 2017b; a). We and others have also shown that simultaneously performing a cognitive task alters the collision avoidance behavior and can compromise safety by generating additional collisions (Aravind and Lamontagne, 2017a; Robitaille et al., 2017; Lamontagne et al., 2019a; Souza Silva et al., 2020; Deblock-Bellamy et al., 2021). In parallel to those clinical investigations, other studies carried out in healthy individuals have demonstrated that similar obstacle avoidance strategies are used when avoiding virtual vs. physical humans, although with subtle differences in walking speed and obstacle clearance (Sanz et al., 2015; Buhler and Lamontagne, 2018; Olivier et al., 2018; Bühler and Lamontagne, 2019). Such results support the use of virtual humans as a valid approach to evaluate and train pedestrian interactions as experienced in daily life. Pedestrian interactions can be facilitated by the use of omnidirectional treadmills that allow speed and trajectory changes (Lamontagne et al., 2019b; Soni and Lamontagne, 2020) and should be added as an essential dimension of community walking to complement existing VR-based interventions that focus on locomotor adaptations (e.g., Yang et al., 2008; Mirelman et al., 2011; Mirelman et al., 2016; Peruzzi et al., 2017; Richards et al., 2018).

A recent review (Tieri et al., 2018) of the contributions of VR to cognitive and motor rehabilitation suggests that the most promising feature of VR is the ability to multitask in a virtual environment that can replicate the demands of a physical environment, i.e., it is an ecologically valid rehabilitation tool. Our data and those of others indicate that the sensory environment can be effectively manipulated to promote a desired motor outcome so that engagement with the task is encouraged and the process of active motor control is facilitated even if the VR environment deviates from physical reality. In order to accomplish this, however, we need to understand the properties of VR technology that create meaningful task constraints such as sensory conflict and error augmentation. One of the greatest obstacles to identifying the value of VR for rehabilitation is the application of the term “VR” to a myriad of paradigms that do not meet the requirements to truly be considered virtual reality. In order for a VR-guided rehabilitation program to be successful, immersion in an environment that produces presence and embodiment is necessary if the user is to respond in a realistic way (Kenyon et al., 2004; Keshner and Kenyon, 2009; Tieri et al., 2018). Thus, only by activating the perception-action pathways for motor behavior will appropriate emotional reactions, incentives to act, and enhanced performance take place.

Results from the studies presented here clearly demonstrate that one of the primary contributions of VR to physical rehabilitation interventions is the ability to engage the whole person in the processes of motor learning and control (Sveistrup, 2004; Adamovich et al., 2009). A principal strength of using VR for rehabilitation is that it encourages motor learning through practice and repetition without inducing the boredom that often arises during conventional exercise programs. With this technology, interventions can be designed to address the particular needs of each individual, activity can be induced through observation, and intensity of practice can be modified in response to individual needs. To accomplish any of these goals, however, it is essential that clinicians understand how and why they are choosing VR to meet their treatment goals and how to optimally tailor treatments for a desired outcome. Factors to consider when choosing to incorporate VR into a treatment intervention include whether (1) the donning of devices such as goggles alters motor performance (Almajid et al., 2020); (2) the manipulation of objects in the environment will alter the sense of presence; (3) certain populations are more susceptible to the virtual environment and, therefore, will respond differently than predicted (Slaboda et al., 2011b; Almajid and Keshner, 2019); and (4) a visual or multimodal presentation of the environment and task will be best to obtain the desired behavior. In addition, significant weaknesses remain in our understanding of the impact of VR on physical rehabilitation because of the dearth of well-designed clinical trials that consider dosages and technological equivalencies (Weiss et al., 2014).

In this article, we have focused on research demonstrating how multisensory signals delivered within a virtual environment will modify locomotor and postural control mechanisms. Studies using motor learning principles and complex models of sensorimotor control demonstrate that all sensory systems are involved in a complex integration of information from multiple sensory pathways. This more sophisticated understanding of sensory processing and its impact on the multisegmental body has altered our understanding of the causality and treatment of instability during functional movements. Therefore, incorporating VR and other applied technologies such as robotics has become essential to exploiting the impact of multisensory processing on motor control (Saleh et al., 2017).

Motivation and enjoyment are essential components of a rehabilitation program, and we are in no way suggesting that computer gaming, exercise, and augmented reality technologies should be ignored because they do not necessarily deliver all components of a virtual reality environment. Rather, we are contending that there are additional pathways for training and modifying postural and locomotor behaviors in an immersive and multimodal virtual environment that will facilitate transfer of training of the neurophysiological and musculoskeletal mechanisms underlying functional motor behavior.

Both authors have contributed equally to this work and share first authorship.

EK has received funding from the NIH National Institute on Aging and National Institute on Deafness and Other Communication Disorders for her research. AL has received funding from the Canadian Institutes of Health Research (PJT-148917) and the Natural Sciences and Engineering Research Council of Canada (RGPIN-2016-04471).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Aburub, A. S., and Lamontagne, A. (2013). Altered steering strategies for goal-directed locomotion in stroke. J. NeuroEng. Rehabil. 10, 80. doi:10.1186/1743-0003-10-80

Acerbi, L., Dokka, K., Angelaki, D. E., and Ma, W. J. (2018). Bayesian comparison of explicit and implicit causal inference strategies in multisensory heading perception. PLoS Comput. Biol. 14, e1006110. doi:10.1371/journal.pcbi.1006110

Adamovich, S. V., Fluet, G. G., Tunik, E., and Merians, A. S. (2009). Sensorimotor training in virtual reality: a review. NeuroRehabilitation 25, 29–44. doi:10.3233/NRE-2009-0497

Alchalabi, B., Faubert, J., and Labbe, D. R. (2019). EEG can be used to measure embodiment when controlling a walking self-avatar. 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019. doi:10.1109/VR.2019.8798263

Almajid, R., and Keshner, E. (2019). Role of gender in dual-tasking timed up and go tests: a cross-sectional study. J. Mot. Behav. 51, 681–689. doi:10.1080/00222895.2019.1565528

Almajid, R., Tucker, C., Wright, W. G., Vasudevan, E., and Keshner, E. (2020). Visual dependence affects the motor behavior of older adults during the Timed up and Go (TUG) test. Arch. Gerontol. Geriatr. 87, 104004. doi:10.1016/j.archger.2019.104004

Angelaki, D. E., Gu, Y., and Deangelis, G. C. (2011). Visual and vestibular cue integration for heading perception in extrastriate visual cortex. J. Physiol. (Lond.) 589, 825–833. doi:10.1113/jphysiol.2010.194720

Aravind, G., Darekar, A., Fung, J., and Lamontagne, A. (2015). Virtual reality-based navigation task to reveal obstacle avoidance performance in individuals with visuospatial neglect. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 179–188. doi:10.1109/TNSRE.2014.2369812

Aravind, G., and Lamontagne, A. (2017a). Dual tasking negatively impacts obstacle avoidance abilities in post-stroke individuals with visuospatial neglect: task complexity matters! Restor. Neurol. Neurosci. 35, 423–436. doi:10.3233/RNN-160709

Aravind, G., and Lamontagne, A. (2017b). Effect of visuospatial neglect on spatial navigation and heading after stroke. Ann. Phys. Rehabil. Med. 61, 197–206. doi:10.1016/j.rehab.2017.05.002

Aravind, G., and Lamontagne, A. (2014). Perceptual and locomotor factors affect obstacle avoidance in persons with visuospatial neglect. J. NeuroEng. Rehabil. 11, 38. doi:10.1186/1743-0003-11-38

Ash, A., Palmisano, S., Apthorp, D., and Allison, R. S. (2013). Vection in depth during treadmill walking. Perception 42, 562–576. doi:10.1068/p7449

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746. doi:10.1068/p5096

Banks, M. S., Ehrlich, S. M., Backus, B. T., and Crowell, J. A. (1996). Estimating heading during real and simulated eye movements. Vis. Res. 36, 431–443. doi:10.1016/0042-6989(95)00122-0

Bao, T., Klatt, B. N., Carender, W. J., Kinnaird, C., Alsubaie, S., Whitney, S. L., et al. (2019). Effects of long-term vestibular rehabilitation therapy with vibrotactile sensory augmentation for people with unilateral vestibular disorders—a randomized preliminary study. J. Vestib. Res. 29, 323–334. doi:10.3233/VES-190683

Basili, P., Sağlam, M., Kruse, T., Huber, M., Kirsch, A., and Glasauer, S. (2013). Strategies of locomotor collision avoidance. Gait Posture 37, 385–390. doi:10.1016/j.gaitpost.2012.08.003

Berard, J., Fung, J., and Lamontagne, A. (2012). Visuomotor control post stroke can be affected by a history of visuospatial neglect. J. Neurol. Neurophysiol. S8, 1–9. doi:10.4172/2155-9562.S8-001

Berard, J. R., Fung, J., Mcfadyen, B. J., and Lamontagne, A. (2009). Aging affects the ability to use optic flow in the control of heading during locomotion. Exp. Brain Res. 194, 183–190. doi:10.1007/s00221-008-1685-1

Billington, J., Field, D. T., Wilkie, R. M., and Wann, J. P. (2010). An fMRI study of parietal cortex involvement in the visual guidance of locomotion. J. Exp. Psychol. Hum. Percept. Perform. 36, 1495–1507. doi:10.1037/a0018728

Blanke, O. (2012). Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571. doi:10.1038/nrn3292

Booth, A. T., Buizer, A. I., Harlaar, J., Steenbrink, F., and Van Der Krogt, M. M. (2019). Immediate effects of immersive biofeedback on gait in children with cerebral palsy. Arch. Phys. Med. Rehabil. 100, 598–605. doi:10.1016/j.apmr.2018.10.013

Bourgaize, S. M., Mcfadyen, B. J., and Cinelli, M. E. (2020). Collision avoidance behaviours when circumventing people of different sizes in various positions and locations. J. Mot. Behav. 53, 166–175. doi:10.1080/00222895.2020.1742083

Brandt, T., Bartenstein, P., Janek, A., and Dieterich, M. (1998). Reciprocal inhibitory visual-vestibular interaction. Visual motion stimulation deactivates the parieto-insular vestibular cortex. Brain 121 (Pt 9), 1749–1758. doi:10.1093/brain/121.9.1749

Brandt, T., Glasauer, S., Stephan, T., Bense, S., Yousry, T. A., Deutschlander, A., et al. (2002). Visual-vestibular and visuovisual cortical interaction: new insights from fMRI and PET. Ann. N. Y. Acad. Sci. 956, 230–241. doi:10.1111/j.1749-6632.2002.tb02822.x

Brantley, J. A., Luu, T. P., Nakagome, S., Zhu, F., and Contreras-Vidal, J. L. (2018). Full body mobile brain-body imaging data during unconstrained locomotion on stairs, ramps, and level ground. Sci. Data 5, 180133. doi:10.1038/sdata.2018.133

Bronstein, A. M., and Pavlou, M. (2013). Balance. Handb. Clin. Neurol. 110, 189–208. doi:10.1016/B978-0-444-52901-5.00016-2

Bruggeman, H., Zosh, W., and Warren, W. H. (2007). Optic flow drives human visuo-locomotor adaptation. Curr. Biol. 17, 2035–2040. doi:10.1016/j.cub.2007.10.059

Bryant, M. S., Workman, C. D., Hou, J. G., Henson, H. K., and York, M. K. (2016). Acute and long-term effects of multidirectional treadmill training on gait and balance in Parkinson disease. PM R 8, 1151–1158. doi:10.1016/j.pmrj.2016.05.001

Buhler, M. A., and Lamontagne, A. (2018). Circumvention of pedestrians while walking in virtual and physical environments. IEEE Trans. Neural Syst. Rehabil. Eng. 26, 1813–1822. doi:10.1109/TNSRE.2018.2865907

Bühler, M. A., and Lamontagne, A. (2019). Locomotor circumvention strategies in response to static pedestrians in a virtual and physical environment. Gait Posture 68, 201–206.

Burr, D., and Thompson, P. (2011). Motion psychophysics: 1985-2010. Vis. Res. 51, 1431–1456. doi:10.1016/j.visres.2011.02.008

Calabro, F. J., and Vaina, L. M. (2012). Interaction of cortical networks mediating object motion detection by moving observers. Exp. Brain Res. 221, 177–189. doi:10.1007/s00221-012-3159-8

Chaplin, T. A., and Margrie, T. W. (2020). Cortical circuits for integration of self-motion and visual-motion signals. Curr. Opin. Neurobiol. 60, 122–128. doi:10.1016/j.conb.2019.11.013

Chou, Y. H., Wagenaar, R. C., Saltzman, E., Giphart, J. E., Young, D., Davidsdottir, R., et al. (2009). Effects of optic flow speed and lateral flow asymmetry on locomotion in younger and older adults: a virtual reality study. J. Gerontol. B Psychol. Sci. Soc. Sci. 64, 222–231. doi:10.1093/geronb/gbp003

Cleworth, T. W., Horslen, B. C., and Carpenter, M. G. (2012). Influence of real and virtual heights on standing balance. Gait Posture 36, 172–176. doi:10.1016/j.gaitpost.2012.02.010

Crowell, J. A., Banks, M. S., Shenoy, K. V., and Andersen, R. A. (1998). Visual self-motion perception during head turns. Nat. Neurosci. 1, 732–737. doi:10.1038/3732

Cutting, J. E., Vishton, P. M., and Braren, P. A. (1995). How we avoid collisions with stationary and moving objects. Psychol. Rev. 102, 627–651.

Darekar, A., Lamontagne, A., and Fung, J. (2017a). Locomotor circumvention strategies are altered by stroke: I. Obstacle clearance. J. NeuroEng. Rehabil. 14, 56. doi:10.1186/s12984-017-0264-8

Darekar, A., Lamontagne, A., and Fung, J. (2017b). Locomotor circumvention strategies are altered by stroke: II. postural coordination. J. NeuroEng. Rehabil. 14, 57. doi:10.1186/s12984-017-0265-7

Darekar, A., Goussev, V., Mcfadyen, B. J., Lamontagne, A., and Fung, J. (2018). Modeling spatial navigation in the presence of dynamic obstacles: a differential games approach. J. Neurophysiol. 119, 990–1004. doi:10.1152/jn.00857.2016

Davidsdottir, S., Wagenaar, R., Young, D., and Cronin-Golomb, A. (2008). Impact of optic flow perception and egocentric coordinates on veering in Parkinson’s disease. Brain 131, 2882–2893. doi:10.1093/brain/awn237

De Winkel, K. N., Katliar, M., and Bülthoff, H. H. (2015). Forced fusion in multisensory heading estimation. PLoS One 10, e0127104. doi:10.1371/journal.pone.0127104

Deangelis, G. C., and Angelaki, D. E. (2012). “Visual-vestibular integration for self-motion perception,” in The neural bases of multisensory processes. Editors M. M. Murray, and M. T. Wallace (Boca Raton, FL, United States: CRC Press).

Deblock-Bellamy, A., Lamontagne, A., Mcfadyen, B. J., Ouellet, M. C., and Blanchette, A. K. (2021). Virtual reality-based assessment of cognitive-locomotor interference in healthy young adults. J. NeuroEng. Rehabil. (accepted for publication in 2021). doi:10.1186/s12984-021-00834-2

Dichgans, J., and Brandt, T. (1972). Visual-vestibular interaction and motion perception. Bibl. Ophthalmol. 82, 327–338.

Dichgans, J., Held, R., Young, L. R., and Brandt, T. (1972). Moving visual scenes influence the apparent direction of gravity. Science 178, 1217–1219. doi:10.1126/science.178.4066.1217

Dieterich, M., and Brandt, T. (2000). Brain activation studies on visual-vestibular and ocular motor interaction. Curr. Opin. Neurol. 13, 13–18. doi:10.1097/00019052-200002000-00004

Dokka, K., Kenyon, R. V., and Keshner, E. A. (2009). Influence of visual scene velocity on segmental kinematics during stance. Gait Posture 30, 211–216. doi:10.1016/j.gaitpost.2009.05.001

Dokka, K., Kenyon, R. V., Keshner, E. A., and Kording, K. P. (2010). Self versus environment motion in postural control. PLoS Comput. Biol. 6, e1000680. doi:10.1371/journal.pcbi.1000680

Dokka, K., Macneilage, P. R., Deangelis, G. C., and Angelaki, D. E. (2015). Multisensory self-motion compensation during object trajectory judgments. Cereb. Cortex 25, 619–630. doi:10.1093/cercor/bht247

Ducourant, T., Vieilledent, S., Kerlirzin, Y., and Berthoz, A. (2005). Timing and distance characteristics of interpersonal coordination during locomotion. Neurosci. Lett. 389, 6–11. doi:10.1016/j.neulet.2005.06.052

Duffy, C. J. (1998). MST neurons respond to optic flow and translational movement. J. Neurophysiol. 80, 1816–1827. doi:10.1152/jn.1998.80.4.1816

Dukelow, S. P., Desouza, J. F., Culham, J. C., Van Den Berg, A. V., Menon, R. S., and Vilis, T. (2001). Distinguishing subregions of the human MT+ complex using visual fields and pursuit eye movements. J. Neurophysiol. 86, 1991–2000. doi:10.1152/jn.2001.86.4.1991

Ernst, M. O., and Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends Cognit. Sci. 8, 162–169. doi:10.1016/j.tics.2004.02.002

Fajen, B. R. (2013). Guiding locomotion in complex, dynamic environments. Front. Behav. Neurosci. 7, 85. doi:10.3389/fnbeh.2013.00085

Field, D. T., Wilkie, R. M., and Wann, J. P. (2007). Neural systems in the visual control of steering. J. Neurosci. 27, 8002–8010. doi:10.1523/JNEUROSCI.2130-07.2007

Finley, J. M., Statton, M. A., and Bastian, A. J. (2014). A novel optic flow pattern speeds split-belt locomotor adaptation. J. Neurophysiol. 111, 969–976. doi:10.1152/jn.00513.2013

Fiset, F., Lamontagne, A., and Mcfadyen, B. J. (2020). Limb movements of another pedestrian affect crossing distance but not path planning during virtual over ground circumvention. Neurosci. Lett. 736, 135278. doi:10.1016/j.neulet.2020.135278

Garrett, B., Taverner, T., Gromala, D., Tao, G., Cordingley, E., and Sun, C. (2018). Virtual reality clinical research: promises and challenges. JMIR Serious Games 6, e10839. doi:10.2196/10839

Gennaro, F., and De Bruin, E. D. (2018). Assessing brain-muscle connectivity in human locomotion through mobile brain/body imaging: opportunities, pitfalls, and future directions. Front. Public Health 6, 39. doi:10.3389/fpubh.2018.00039

Gérin-Lajoie, M., Richards, C. L., Fung, J., and Mcfadyen, B. J. (2008). Characteristics of personal space during obstacle circumvention in physical and virtual environments. Gait Posture 27, 239–247. doi:10.1016/j.gaitpost.2007.03.015

Gibson, J. J. (2009). Reprinted from the British Journal of Psychology (1958), 49, 182-194: visually controlled locomotion and visual orientation in animals. Br. J. Psychol. 100, 259–271. doi:10.1348/000712608X336077

Gibson, J. J. (1979). The ecological approach to visual perception. Hove, JI, United Kingdom: Psychology Press.

Gorman, A. D., Abernethy, B., and Farrow, D. (2013). The expert advantage in dynamic pattern recall persists across both attended and unattended display elements. Atten. Percept. Psychophys. 75, 835–844. doi:10.3758/s13414-013-0423-3

Gramann, K., Gwin, J. T., Ferris, D. P., Oie, K., Jung, T. P., Lin, C. T., et al. (2011). Cognition in action: imaging brain/body dynamics in mobile humans. Rev. Neurosci. 22, 593–608. doi:10.1515/RNS.2011.047

Grèzes, J., Armony, J. L., Rowe, J., and Passingham, R. E. (2003). Activations related to “mirror” and “canonical” neurones in the human brain: an fMRI study. Neuroimage 18, 928–937. doi:10.1016/s1053-8119(03)00042-9

Guo, C., Mi, X., Liu, S., Yi, W., Gong, C., Zhu, L., et al. (2015). Whole body vibration training improves walking performance of stroke patients with knee hyperextension: a randomized controlled pilot study. CNS Neurol. Disord. Drug Targets 14, 1110–1115. doi:10.2174/1871527315666151111124937

Guterstam, A., Collins, K. L., Cronin, J. A., Zeberg, H., Darvas, F., Weaver, K. E., et al. (2019). Direct electrophysiological correlates of body ownership in human cerebral cortex. Cerebr. Cortex 29, 1328–1341. doi:10.1093/cercor/bhy285

Hamzei, F., Rijntjes, M., Dettmers, C., Glauche, V., Weiller, C., and Büchel, C. (2003). The human action recognition system and its relationship to Broca’s area: an fMRI study. Neuroimage 19, 637–644. doi:10.1016/s1053-8119(03)00087-9

Hanna, M., Fung, J., and Lamontagne, A. (2017). Multisensory control of a straight locomotor trajectory. J. Vestib. Res. 27, 17–25. doi:10.3233/VES-170603

Haran, F. J., and Keshner, E. A. (2008). Sensory reweighting as a method of balance training for labyrinthine loss. J. Neurol. Phys. Ther. 32, 186–191. doi:10.1097/NPT.0b013e31818dee39

Hedges, J. H., Gartshteyn, Y., Kohn, A., Rust, N. C., Shadlen, M. N., Newsome, W. T., et al. (2011). Dissociation of neuronal and psychophysical responses to local and global motion. Curr. Biol. 21, 2023–2028. doi:10.1016/j.cub.2011.10.049

Huang, R. S., Chen, C. F., and Sereno, M. I. (2015). Neural substrates underlying the passive observation and active control of translational egomotion. J. Neurosci. 35, 4258–4267. doi:10.1523/JNEUROSCI.2647-14.2015

Huber, M., Su, Y. H., Krüger, M., Faschian, K., Glasauer, S., and Hermsdörfer, J. (2014). Adjustments of speed and path when avoiding collisions with another pedestrian. PLoS One 9, e89589. doi:10.1371/journal.pone.0089589

Israely, S., and Carmeli, E. (2016). Error augmentation as a possible technique for improving upper extremity motor performance after a stroke—a systematic review. Top. Stroke Rehabil. 23, 116–125. doi:10.1179/1945511915Y.0000000007

Jahn, K., Strupp, M., Schneider, E., Dieterich, M., and Brandt, T. (2001). Visually induced gait deviations during different locomotion speeds. Exp. Brain Res. 141, 370–374. doi:10.1007/s002210100884

Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Percept. Psychophys. 14, 201–211. doi:10.3758/BF03212378

Kang, H. K., Kim, Y., Chung, Y., and Hwang, S. (2012). Effects of treadmill training with optic flow on balance and gait in individuals following stroke: randomized controlled trials. Clin. Rehabil. 26, 246–255. doi:10.1177/0269215511419383

Kannape, O. A., and Blanke, O. (2013). Self in motion: sensorimotor and cognitive mechanisms in gait agency. J. Neurophysiol. 110, 1837–1847. doi:10.1152/jn.01042.2012

Kenyon, R. V., Leigh, J., and Keshner, E. A. (2004). Considerations for the future development of virtual technology as a rehabilitation tool. J. NeuroEng. Rehabil. 1, 13. doi:10.1186/1743-0003-1-13

Keshner, E. A., and Dhaher, Y. (2008). Characterizing head motion in three planes during combined visual and base of support disturbances in healthy and visually sensitive subjects. Gait Posture 28, 127–134. doi:10.1016/j.gaitpost.2007.11.003

Keshner, E. A., and Fung, J. (2019). Editorial: current state of postural research—beyond automatic behavior. Front. Neurol. 10, 1160. doi:10.3389/fneur.2019.01160

Keshner, E. A., and Kenyon, R. V. (2000). The influence of an immersive virtual environment on the segmental organization of postural stabilizing responses. J. Vestib. Res. 10, 207–219.

Keshner, E. A., and Kenyon, R. V. (2009). Postural and spatial orientation driven by virtual reality. Stud. Health Technol. Inf. 145, 209–228. doi:10.3233/978-1-60750-018-6-209

Keshner, E. A., Kenyon, R. V., and Langston, J. (2004). Postural responses exhibit multisensory dependencies with discordant visual and support surface motion. J. Vestib. Res. 14, 307–319.

Keshner, E. A., Streepey, J., Dhaher, Y., and Hain, T. (2007). Pairing virtual reality with dynamic posturography serves to differentiate between patients experiencing visual vertigo. J. NeuroEng. Rehabil. 4, 24. doi:10.1186/1743-0003-4-24

Keshner, E. A., Slaboda, J. C., Buddharaju, R., Lanaria, L., and Norman, J. (2011). Augmenting sensory-motor conflict promotes adaptation of postural behaviors in a virtual environment. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2011, 1379–1382. doi:10.1109/IEMBS.2011.6090324

Keshner, E. A., Weiss, P. T., Geifman, D., and Raban, D. (2019). Tracking the evolution of virtual reality applications to rehabilitation as a field of study. J. NeuroEng. Rehabil. 16, 76. doi:10.1186/s12984-019-0552-6

Kim, C. S., Jung, M., Kim, S. Y., and Kim, K. (2020). Controlling the sense of embodiment for virtual avatar applications: methods and empirical study. JMIR Serious Games 8, e21879. doi:10.2196/21879

King, C. E., Wang, P. T., Chui, L. A., Do, A. H., and Nenadic, Z. (2013). Operation of a brain-computer interface walking simulator for individuals with spinal cord injury. J. NeuroEng. Rehabil. 10, 77. doi:10.1186/1743-0003-10-77

Kleinschmidt, A., Thilo, K. V., Büchel, C., Gresty, M. A., Bronstein, A. M., and Frackowiak, R. S. (2002). Neural correlates of visual-motion perception as object- or self-motion. Neuroimage 16, 873–882. doi:10.1006/nimg.2002.1181

Knorr, A. G., Willacker, L., Hermsdörfer, J., Glasauer, S., and Krüger, M. (2016). Influence of person- and situation-specific characteristics on collision avoidance behavior in human locomotion. J. Exp. Psychol. Hum. Percept. Perform. 42, 1332–1343. doi:10.1037/xhp0000223

Koilias, A., Nelson, M., Gubbi, S., Mousas, C., and Anagnostopoulos, C. N. (2020). Evaluating human movement coordination during immersive walking in a virtual crowd. Behav. Sci. 10, 130. doi:10.3390/bs10090130

Konczak, J. (1994). Effects of optic flow on the kinematics of human gait: a comparison of young and older adults. J. Mot. Behav. 26, 225–236. doi:10.1080/00222895.1994.9941678

Koritnik, T., Koenig, A., Bajd, T., Riener, R., and Munih, M. (2010). Comparison of visual and haptic feedback during training of lower extremities. Gait Posture 32, 540–546. doi:10.1016/j.gaitpost.2010.07.017

Lalonde-Parsi, M. J., and Lamontagne, A. (2015). Perception of self-motion and regulation of walking speed in young-old adults. Mot. Contr. 19, 191–206. doi:10.1123/mc.2014-0010

Lambrey, S., and Berthoz, A. (2007). Gender differences in the use of external landmarks versus spatial representations updated by self-motion. J. Integr. Neurosci. 6, 379–401. doi:10.1142/s021963520700157x

Lamontagne, A., Fung, J., Mcfadyen, B., Faubert, J., and Paquette, C. (2010). Stroke affects locomotor steering responses to changing optic flow directions. Neurorehabilitation Neural Repair 24, 457–468. doi:10.1177/1545968309355985

Lamontagne, A., Fung, J., Mcfadyen, B. J., and Faubert, J. (2007). Modulation of walking speed by changing optic flow in persons with stroke. J. NeuroEng. Rehabil. 4, 22. doi:10.1186/1743-0003-4-22

Lamontagne, A., Bhojwani, T., Joshi, H., Lynch, S. D., Souza Silva, W., Boulanger, M., et al. (2019a). Visuomotor control of complex locomotor tasks in physical and virtual environments. Neurophysiol. Clin./Clin. Neurophysiol. 49, 434. doi:10.1016/j.neucli.2019.10.077

Lamontagne, A., Blanchette, A. K., Fung, J., Mcfadyen, B. J., Sangani, S., Robitaille, N., et al. (2019b). Development of a virtual reality toolkit to enhance community walking after stroke. IEEE Proceedings of the International Conference on Virtual Rehabilitation (ICVR), Tel Aviv, Israel, 21-24 July 2019, 2. doi:10.1109/ICVR46560.2019.8994733

Leplaideur, S., Leblong, E., Jamal, K., Rousseau, C., Raillon, A. M., Coignard, P., et al. (2016). Short-term effect of neck muscle vibration on postural disturbances in stroke patients. Exp. Brain Res. 234, 2643–2651. doi:10.1007/s00221-016-4668-7

Levac, D. E., Huber, M. E., and Sternad, D. (2019). Learning and transfer of complex motor skills in virtual reality: a perspective review. J. NeuroEng. Rehabil. 16, 121. doi:10.1186/s12984-019-0587-8

Levin, M. F., Weiss, P. L., and Keshner, E. A. (2015). Emergence of virtual reality as a tool for upper limb rehabilitation: incorporation of motor control and motor learning principles. Phys. Ther. 95, 415–425. doi:10.2522/ptj.20130579

Liu, A., Hoge, R., Doyon, J., Fung, J., and Lamontagne, A. (2013). Brain regions involved in locomotor steering in a virtual environment. IEEE Conf Proc International Conference on Virtual Rehabilitation, Philadelphia, PA, United States, 26-29 August 2013. 8. doi:10.1109/ICVR.2013.6662070

Liu, L. Y., Li, Y., and Lamontagne, A. (2018). The effects of error-augmentation versus error-reduction paradigms in robotic therapy to enhance upper extremity performance and recovery post-stroke: a systematic review. J. NeuroEng. Rehabil. 15, 65. doi:10.1186/s12984-018-0408-5

Liu, L. Y., Sangani, S., Patterson, K. K., Fung, J., and Lamontagne, A. (2020a). Application of visual and auditory biological cues in post-stroke gait training – the immediate effects of real-time virtual avatar and footstep sound on gait symmetry. Proceedings of the 11th World Congress of the WFNR, Lyon, France, October 2020.

Liu, L. Y., Sangani, S., Patterson, K. K., Fung, J., and Lamontagne, A. (2020b). Real-time avatar-based feedback to enhance the symmetry of spatiotemporal parameters after stroke: instantaneous effects of different avatar views. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 878–887. doi:10.1109/TNSRE.2020.2979830

Longo, M. R., Schüür, F., Kammers, M. P., Tsakiris, M., and Haggard, P. (2008). What is embodiment? A psychometric approach. Cognition 107, 978–998. doi:10.1016/j.cognition.2007.12.004

Lopez, C., Bieri, C. P., Preuss, N., and Mast, F. W. (2012). Tactile and vestibular mechanisms underlying ownership for body parts: a non-visual variant of the rubber hand illusion. Neurosci. Lett. 511, 120–124. doi:10.1016/j.neulet.2012.01.055

Lopez, C., Halje, P., and Blanke, O. (2008). Body ownership and embodiment: vestibular and multisensory mechanisms. Neurophysiol. Clin. 38, 149–161. doi:10.1016/j.neucli.2007.12.006

Lu, J., Xu, G., and Wang, Y. (2015). Effects of whole body vibration training on people with chronic stroke: a systematic review and meta-analysis. Top. Stroke Rehabil. 22, 161–168. doi:10.1179/1074935714Z.0000000005

Lynch, S. D., Kulpa, R., Meerhoff, L. A., Pettre, J., Cretual, A., and Olivier, A. H. (2018). Collision avoidance behavior between walkers: global and local motion cues. IEEE Trans. Visual. Comput. Graph. 24, 2078–2088. doi:10.1109/TVCG.2017.2718514

Madhavan, S., Lim, H., Sivaramakrishnan, A., and Iyer, P. (2019). Effects of high intensity speed-based treadmill training on ambulatory function in people with chronic stroke: a preliminary study with long-term follow-up. Sci. Rep. 9, 1985. doi:10.1038/s41598-018-37982-w

Marmelat, V., Delignières, D., Torre, K., Beek, P. J., and Daffertshofer, A. (2014). “Human paced” walking: followers adopt stride time dynamics of leaders. Neurosci. Lett. 564, 67–71. doi:10.1016/j.neulet.2014.02.010

Meerhoff, L. A., De Poel, H. J., Jowett, T. W. D., and Button, C. (2019). Walking with avatars: gait-related visual information for following a virtual leader. Hum. Mov. Sci. 66, 173–185. doi:10.1016/j.humov.2019.04.003

Meerhoff, L. R. A., De Poel, H. J., Jowett, T. W. D., and Button, C. (2017). Influence of gait mode and body orientation on following a walking avatar. Hum. Mov. Sci. 54, 377–387. doi:10.1016/j.humov.2017.06.005

Mirelman, A., Maidan, I., Herman, T., Deutsch, J. E., Giladi, N., and Hausdorff, J. M. (2011). Virtual reality for gait training: can it induce motor learning to enhance complex walking and reduce fall risk in patients with Parkinson’s disease? J. Gerontol. A Biol. Sci. Med. Sci. 66, 234–240. doi:10.1093/gerona/glq201

Mirelman, A., Rochester, L., Maidan, I., Del Din, S., Alcock, L., Nieuwhof, F., et al. (2016). Addition of a non-immersive virtual reality component to treadmill training to reduce fall risk in older adults (V-TIME): a randomised controlled trial. Lancet 388, 1170–1182. doi:10.1016/S0140-6736(16)31325-3

Molenberghs, P., Cunnington, R., and Mattingley, J. B. (2012). Brain regions with mirror properties: a meta-analysis of 125 human fMRI studies. Neurosci. Biobehav. Rev. 36, 341–349. doi:10.1016/j.neubiorev.2011.07.004

Morrone, M. C., Tosetti, M., Montanaro, D., Fiorentini, A., Cioni, G., and Burr, D. C. (2000). A cortical area that responds specifically to optic flow, revealed by fMRI. Nat. Neurosci. 3, 1322–1328. doi:10.1038/81860