- 1 School of Optometry and Vision Science, University of New South Wales, Sydney, NSW, Australia

- 2 School of Psychology, University of Wollongong, Wollongong, NSW, Australia

- 3 Department of Otolaryngology, Graduate School of Medical Sciences, Nagoya City University, Nagoya, Japan

Humans rely on multiple senses to perceive their self-motion in the real world. For example, a sideways linear head translation can be sensed either by lamellar optic flow of the visual scene projected on the retina or by stimulation of vestibular hair cell receptors in the otolith macula of the inner ear. Mismatches between visual and vestibular information can induce cybersickness during head-mounted display (HMD) based virtual reality (VR). In this pilot study, participants were immersed in a virtual environment using two recent consumer-grade HMDs: the Oculus Go (3DOF, angular-only head tracking) and the Oculus Quest (6DOF, angular and linear head tracking). On each trial, they generated horizontal linear head oscillations along the interaural axis at a rate of 0.5 Hz. This head movement should generate greater sensory conflict when viewing the virtual environment on the Oculus Go (compared to the Quest) due to the absence of linear tracking. We found that perceived scene instability always increased with the degree of linear visual-vestibular conflict. However, 7/14 participants experienced no cybersickness, whereas the remaining seven experienced it in at least one of the stereoscopic viewing conditions (six of whom also reported cybersickness in monoscopic viewing conditions). No statistical difference in spatial presence was found across conditions, suggesting that participants could tolerate considerable scene instability while retaining the feeling of being there in the virtual environment. Levels of perceived scene instability, spatial presence and cybersickness were similar between the Oculus Go and the Oculus Quest with linear tracking disabled. The limited effect of linear coupling on cybersickness, compared with its strong effect on perceived scene instability, suggests that perceived scene instability may not always be associated with cybersickness. However, perceived scene instability does appear to provide explanatory power over the cybersickness observed in stereoscopic viewing conditions.

Introduction

Over the past decade, consumer uptake of head-mounted displays (HMDs) for virtual reality (VR) has expanded rapidly across numerous applications, including education (Polcar and Horejsi, 2015), entertainment (Roettl and Terlutter, 2018), telehealth (Riva and Gamberini, 2000), and anatomy and diagnostic medicine (Jang et al., 2017; Chen et al., 2020). The recent success of consumer-grade HMDs for VR is attributable not only to their increasing affordability, but also to their operational enhancements (e.g., larger field of view, relatively low system latency). These enhancements help to generate compelling experiences of spatial presence: the feeling of being “there” in the virtual environment, as opposed to here in the physical world (Skarbez et al., 2017). However, symptoms of cybersickness (nausea, oculomotor discomfort, and disorientation) can still occur during HMD VR, particularly when angular head rotation generates display lag (e.g., Feng et al., 2019; Palmisano et al., 2019, 2020; Kim et al., 2020). Here, we examine whether cybersickness in these HMDs can also be attributed to the visual-vestibular conflicts generated during linear head translation.

Previous research on HMD VR has primarily been concerned with the effects of visual-vestibular conflicts on vection, the illusory perception of self-motion that occurs when stationary observers view visual simulations of self-motion (Palmisano et al., 2015). In one vection study, Kim et al. (2015) used the Oculus Rift DK1 HMD to systematically vary the synchronization between visual simulations of angular head rotation and actual yaw rotations of the head performed at 1.0 Hz in time with a metronome. They found that vection was strongest when the visually simulated viewing direction was synchronized with the actual head rotation (i.e., when the display correctly compensated for the user's physical head motion). Vection was weaker when no compensation was generated (i.e., when head tracking was disabled), and weaker still when inverse compensation was generated (i.e., when the compensatory visual motion in the display was in the opposite direction to normal for the user's head movement). These findings suggest that synchronizing visual and vestibular signals concerning angular head rotation improves vection.

In a follow-up study assessing whether angular visual-vestibular interactions are also critical for cybersickness, Palmisano et al. (2017) systematically varied visual-vestibular conflict using the Oculus Rift DK1 HMD during sinusoidal yaw head rotations. They measured cybersickness with the Simulator Sickness Questionnaire (SSQ; Kennedy et al., 1993) and found that full-field inverse display compensation generated the greatest cybersickness. The mean display lag of the HMD VR system they used to generate their virtual environment was ~72 ms. This latency is quite high compared to that of more recent systems such as the Oculus Rift CV1 and S, which use Asynchronous Time Warp (ATW) to effectively eliminate angular latency. Indeed, Feng et al. (2019) and Palmisano et al. (2019) both reported that cybersickness during yaw head movements was considerably reduced when using these more recent HMDs with very low display lags. They measured cybersickness using the Fast Motion Sickness (FMS) scale (Keshavarz and Hecht, 2011) and found that increasing display lag above baseline latency monotonically increased reported cybersickness severity from low to moderate levels. Even at baseline levels of lag (<5 ms), participants tended to report a very small level of discomfort consistent with cybersickness.

One potential explanation for this effect of display lag on cybersickness is the level of sensory conflict it generates (e.g., Reason and Brand, 1975; Reason, 1978). It is often assumed that cybersickness arises when one or more senses provide information that is incongruent with information provided by other senses (i.e., intersensory conflict). We have recently proposed that DVP, the magnitude of the Difference between the orientation of the Virtual head and that of the Physical head, can be used to quantify the overall amount of sensory conflict generated by a stimulus. In the first study to examine this proposal, Kim et al. (2020) experimentally manipulated display lag during active HMD VR. They instructed their participants to make oscillatory 1.0 or 0.5 Hz head rotations in pitch while viewing a simulated wireframe ground plane, and found that increasing display lag increased the magnitude of DVP. Critically, as DVP increased, participants' perceptions of scene instability and cybersickness both increased, and their feelings of presence decreased. These findings suggest that sensory conflict (as operationalised by DVP) can offer diagnostic leverage in accounting for cybersickness severity. However, conscious perceptions of scene instability and feelings of presence may also contribute to the severity of these symptoms (see Weech et al., 2019).
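To make DVP concrete for a display-lag manipulation, the sketch below assumes a single rotation axis, purely sinusoidal physical head motion θ(t) = A sin(2πft), and a virtual head that simply replays the physical orientation delayed by a fixed lag τ. Under these assumptions DVP(t) = |θ(t) − θ(t − τ)|, and trigonometry gives its peak in closed form as 2A sin(πfτ). The function names are hypothetical, and this is an illustration of the idea rather than the procedure used by Kim et al. (2020).

```python
import math


def physical_orientation(t, amplitude_deg, freq_hz):
    """Physical head angle (deg) for a pure sinusoidal oscillation."""
    return amplitude_deg * math.sin(2 * math.pi * freq_hz * t)


def peak_dvp_numeric(amplitude_deg, freq_hz, lag_s, duration_s=4.0, dt=1e-4):
    """Largest |physical - virtual| angle over a sampled window, where the
    virtual head is assumed to lag the physical head by lag_s seconds."""
    peak = 0.0
    for i in range(int(duration_s / dt)):
        t = i * dt
        dvp = abs(physical_orientation(t, amplitude_deg, freq_hz)
                  - physical_orientation(t - lag_s, amplitude_deg, freq_hz))
        peak = max(peak, dvp)
    return peak


def peak_dvp_closed_form(amplitude_deg, freq_hz, lag_s):
    """sin(wt) - sin(w(t - tau)) has amplitude 2*sin(w*tau/2), with w = 2*pi*f."""
    return 2 * amplitude_deg * math.sin(math.pi * freq_hz * lag_s)


if __name__ == "__main__":
    for lag_ms in (5, 72, 200):
        lag = lag_ms / 1000
        print(lag_ms, round(peak_dvp_closed_form(20, 0.5, lag), 2),
              round(peak_dvp_numeric(20, 0.5, lag), 2))
```

With a ±20° oscillation at 0.5 Hz, the closed form gives a peak DVP of about 4.5° for a 72 ms lag, which shrinks toward zero as the lag approaches the near-zero values achieved with ATW.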

In early research, Allison et al. (2001) found that human observers could tolerate substantial system latencies before the virtual environment became perceptually unstable. In that study, significant scene instability was only perceived when observers executed high-velocity head movements that revealed the inconsistency between head and display motion. However, other researchers have proposed that moderate head-display lags (40–60 ms) can impair perception of simulator fidelity (Adelstein et al., 2003), and that even shorter lags (<20 ms) can be perceptible to well-trained observers (Mania et al., 2004). Most previous studies have only considered the effects of angular sensory conflicts on perception; studies have yet to examine how linear sensory conflict caused by head translation affects cybersickness, spatial presence and perceived scene instability. Ash et al. (2011) found that linear visual-vestibular conflicts can influence perceptual experiences of self-motion generated by external visual motion displays (cf. Kim and Palmisano, 2008, 2010). Hence, such linear visual-vestibular conflicts in HMD VR could plausibly affect perceived scene instability, presence and cybersickness.

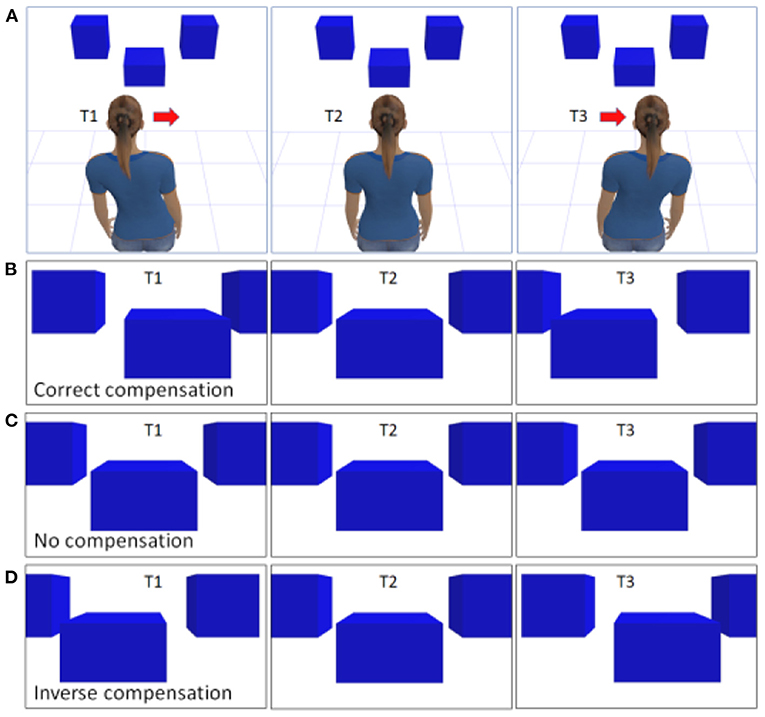

New HMDs offer portable VR solutions (e.g., Oculus Go and Oculus Quest), but differ significantly in how they respond to changes in angular and linear head position. Whereas the Oculus Quest provides six degrees of freedom (6DOF) head tracking and compensates for both angular and linear head displacement, the Oculus Go provides only 3DOF tracking, which compensates for angular rotations of the head (with no linear head tracking). Hence, the Oculus Quest generates “Correct Compensation” during linear head translation, whereas the Oculus Go generates “No Compensation” during the same head translation (see Figure 1). We can describe linear compensation in the Oculus Quest and Go with linear gains of 1.0 and 0.0, respectively; the further the gain departs from 1.0, the greater the potential visual-vestibular sensory conflict. Using this convention, a gain of −1.0 represents “Inverse Compensation” and should generate the greatest level of visual-vestibular sensory conflict. We predict that cybersickness should be less likely and less severe when using the Oculus Quest than when using the Oculus Go. We further predict that reducing the gain of linear tracking in the Quest to zero should generate user experiences similar to those with the Oculus Go, and that inverse compensation (i.e., negative gain) should generate the greatest cybersickness and perceived scene instability, and the lowest presence. Given that stereoscopic viewing might exacerbate cybersickness (Palmisano et al., 2019), we compared these attributes for displays viewed stereoscopically and monoscopically.

Figure 1. Environmental appearances during linear head translation. (A) Physical relationship between stationary 3D objects and the point of regard during a linear translation of the head from left to right over time (T1–T3). (B) Correct compensation results in a retinal image that presents the correct perspective on the scene at each point in time. (C) No compensation generates no change in the visual image during changes in linear head position. (D) Inverse compensation presents visually simulated head motion in the opposite direction to that expected for physical head movement.
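The linear gain convention can be illustrated with a one-dimensional sketch. It assumes that the rendered (virtual) head displacement equals the physical interaural displacement multiplied by the gain g, so the virtual-physical difference is (1 − g) times the physical displacement: zero for correct compensation (g = 1, Quest default), one full head displacement for no compensation (g = 0, as on the Go), and two for inverse compensation (g = −1). The ±10 cm peak displacement and the function names are illustrative assumptions.

```python
GAINS = (-1.0, -0.5, -0.25, 0.0, 0.25, 0.5, 1.0)


def virtual_displacement(physical_cm, gain):
    """Rendered head displacement under the linear gain convention (assumed)."""
    return gain * physical_cm


def linear_conflict(physical_cm, gain):
    """Magnitude of the virtual-physical head position difference (cm)."""
    return abs(physical_cm - virtual_displacement(physical_cm, gain))


if __name__ == "__main__":
    peak_head_cm = 10.0  # assumed peak interaural head displacement
    for g in GAINS:
        print(f"gain {g:+.2f}: peak linear conflict "
              f"{linear_conflict(peak_head_cm, g):.1f} cm")
```

Because the conflict scales with |1 − g|, the seven Quest gains span conflicts from 0 to twice the head displacement, with the Go expected to sit at the same point as the Quest's zero-gain condition.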

Materials and Methods

Participants

A total of 14 healthy adults (age range 19–36 years) participated in this study. None had any neurological impairment, and all had good visual acuity without needing correction for refractive errors. All procedures were approved by the Human Research Ethics Advisory panel (HREA) at the University of New South Wales (UNSW Sydney).

Head Mounted Displays (HMDs)

We used two different devices, the Oculus Go and the Oculus Quest. These mobile HMDs are both completely portable but have quite different manufacturer specifications (developer.oculus.com/design/oculus-device-specs/). Both systems use ATW to minimize the effective/perceived angular display lag during head rotation.

The Oculus Go uses a single fast-switching LCD with a total resolution of 2,560 × 1,440 pixels. It supports two refresh rates (60 or 72 Hz) with natural color reproduction (sRGB, 2.2 gamma, and CIE standard D65 white illuminant). The binocular field of view is ~100°. The Oculus Go's head tracking system offers only 3DOF tracking of angular head rotation (not of linear head displacement).

The Oculus Quest uses dual OLED displays, each with a resolution of 1,440 × 1,600 pixels, which is somewhat higher than that of the Oculus Go. The Oculus Quest operates at a 72 Hz refresh rate for each eye with default SDK color reproduction (native RGB, 2.2 gamma, but still with a CIE standard D65 white illuminant). Like the Oculus Go, its binocular field of view is ~100°, but the Oculus Quest uses an inside-out optical head tracking system to offer 6DOF tracking (of both angular and linear head position).

We configured both devices after pairing the hand remote(s) with the respective HMDs using an Apple iPhone running the Oculus App. This app showed the view being presented in the HMD on the phone's display in real time. Developer mode was enabled on both devices so that new Android applications could be uploaded to the HMDs to run our experiment.

The Virtual Environment

We adapted the Native Mobile SDK application “NativeCubeWorldDemo” from the Oculus developer code examples on the Oculus website (https://developer.oculus.com/). We configured the build in Android Studio using the settings recommended by the Oculus developer website. The experimental application code was compiled into an Android application package (APK), which was then pushed to the Oculus Quest and Go using the Android Debug Bridge (ADB) over a direct USB connection to the development PC.

We modified the default behavior of the example application by setting the color of the 3D generated cubes to a darker bluish hue, sRGB (0.0, 0.0, 0.2–0.4), with two opposing faces given slightly different blue intensities (0.2 and 0.4). This ensured that the chromaticity of the simulated visual elements was comparable to that in similar traditional research studies on the perception of self-motion in virtual environments (e.g., Kim and Palmisano, 2008, 2010, both of which examined display lag manipulations during physical head movements using large external displays).

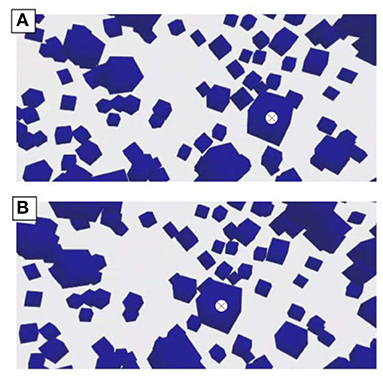

Static screenshots of the virtual environment are shown in Figure 2. Because the cubes surround the user within a ±4 m perimeter, many of them would never be seen. To increase the depth of the display beyond the default behavior of the sample code, we shifted all the cubes in front of the participant to generate an 8 m deep display. A sample code snippet shows the method we used to preserve stereopsis or determine the cyclopean view (for monoscopic viewing), while still supporting motion parallax as a function of linear gain (see Appendix A). Essentially, the gain served as a multiplier on the simulated head displacement along the three cardinal axes. All rotational mappings of head movements were preserved (i.e., correct angular compensation was applied at every level of linear gain).

Figure 2. Sample screenshots of the virtual environment. These two screenshots show the same environment viewed from two different vantage points, produced by a roughly linear head displacement of ~30 cm from the left horizontal position (A) to the right horizontal position (B). Individual cube orientations are maintained between views to illustrate the motion parallax capabilities of this system. Corresponding foreground cubes in the two images are marked with an ‘×’ for reference. Note that the black background has been set to white for print reproduction.
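The gain-as-multiplier idea described above can be sketched as follows. This is not the authors' Appendix A code (which modifies the Oculus native sample); it is a hypothetical Python illustration assuming that (i) the gain scales head displacement measured from a reference origin, (ii) the interaural axis comes from the tracked head orientation, so rotations pass through unchanged, and (iii) monoscopic viewing renders both eyes from the cyclopean midpoint.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def eye_positions(tracked_head: Vec3, origin: Vec3, right_axis: Vec3,
                  ipd_m: float, gain: float, stereo: bool) -> Tuple[Vec3, Vec3]:
    """Hypothetical per-eye camera positions under a linear gain.

    tracked_head: tracked cyclopean head position (m).
    origin: reference position from which displacement is measured (assumption).
    right_axis: unit interaural vector from the tracked orientation, so angular
        compensation stays correct whatever the linear gain.
    """
    # Scale only the translational displacement: 1 = correct, 0 = none, -1 = inverse.
    virtual_head = _add(origin, _scale(_sub(tracked_head, origin), gain))
    if not stereo:
        # Monoscopic: both eyes rendered from the same cyclopean viewpoint.
        return virtual_head, virtual_head
    half_ipd = _scale(right_axis, ipd_m / 2.0)
    return _sub(virtual_head, half_ipd), _add(virtual_head, half_ipd)


if __name__ == "__main__":
    head, start, right = (0.10, 1.60, 0.0), (0.0, 1.60, 0.0), (1.0, 0.0, 0.0)
    for g in (1.0, 0.0, -1.0):
        print(g, eye_positions(head, start, right, 0.064, g, stereo=True))
```

Keeping the interocular offset outside the gain means binocular disparity is unaffected by the manipulation; only the motion parallax produced by head translation changes.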

Procedure

Prior to participation, all participants provided written informed consent. Participants were instructed to stand upright wearing one of the HMDs and to perform interaural head translations at a rate of 0.5 Hz, paced by an audible metronome running continuously on a separate host PC with speakers. Each participant was given a short period to practice the head movements, with feedback from an experimenter trained in assessing head movements. This ensured that participants understood the instructions and had a sufficient range of mobility to generate the required interaural head movements with minimal head rotation. No further feedback on performance was provided during the experiment. Participants were instructed to keep their gaze on one of the farthest targets in the distance while viewing each simulation. No fixation target was used, so that viewing would be comparable to natural viewing conditions.

In each test session, participants viewed 14 conditions on the Oculus Quest: viewing type (stereoscopic vs. monoscopic; two levels) × translational gain (−1.0, −0.5, −0.25, 0.0, +0.25, +0.5, +1.0; seven levels). Participants also performed two conditions on the Oculus Go: stereoscopic and monoscopic viewing at 0.0 translational gain. Participants viewed simulations on the Oculus Quest and Oculus Go in counterbalanced order (e.g., 14 trials on the Quest followed by 2 trials on the Go for one participant, and 2 trials on the Go followed by 14 trials on the Quest for the next participant). After participants had viewed each display condition for 30 s, the simulation ceased and the display faded to complete darkness. Participants then verbally reported their perceived scene instability, spatial presence and cybersickness. A rest period was provided before each subsequent trial to mitigate the build-up of cybersickness and contamination between trials. The minimum delay between trials was 30 s, and the experimenter could extend the pause if participants requested a break. Participants were told not to proceed to the next trial until their cybersickness symptoms had dissipated (i.e., their FMS score had returned to 0). On a few occasions, a break of up to ~90 s was needed before the participant reported that their symptoms had resolved.
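The trial structure above (2 × 7 Quest conditions plus 2 Go conditions, with device order alternated across participants) can be sketched as a schedule generator. The ordering of conditions within each device block is not reported, so shuffling within blocks is an assumption here, as are the function and constant names; the 30 s exposure and 30 s minimum rest come from the text.

```python
import random

QUEST_GAINS = (-1.0, -0.5, -0.25, 0.0, 0.25, 0.5, 1.0)
VIEWING_TYPES = ("stereo", "mono")
EXPOSURE_S = 30   # viewing duration per trial (from the text)
MIN_REST_S = 30   # minimum delay between trials (from the text)


def build_schedule(participant_index, seed=None):
    """Return one participant's 16 trials as (device, viewing, gain) tuples."""
    rng = random.Random(seed)
    quest = [("Quest", v, g) for v in VIEWING_TYPES for g in QUEST_GAINS]  # 2 x 7 = 14
    go = [("Go", v, 0.0) for v in VIEWING_TYPES]                            # 2
    # Assumption: order within each device block is shuffled (not reported).
    rng.shuffle(quest)
    rng.shuffle(go)
    # Counterbalancing: alternate which device block comes first.
    return quest + go if participant_index % 2 == 0 else go + quest


def minimum_session_seconds(n_trials, exposure_s=EXPOSURE_S, rest_s=MIN_REST_S):
    """Lower bound on testing time: all exposures plus the minimum rests between them."""
    return n_trials * exposure_s + (n_trials - 1) * rest_s


if __name__ == "__main__":
    schedule = build_schedule(participant_index=1, seed=42)
    for trial in schedule:
        print(trial)
    print(minimum_session_seconds(len(schedule)), "s minimum")
```

Under these assumptions, 16 trials require at least 930 s (15.5 min) of testing, before any extended breaks.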

Perceived scene instability was reported as a subjective 0–20 rating of how much the simulated cubes in the virtual environment appeared to move with the participant as they translated their head interaurally (0 = remained stationary independent of head movement, like objects in the real world; 10 = moved as much as the participant's own head; 20 = moved twice as much as the participant's head). Spatial presence was reported on a 0–20 rating scale, where 0 indicated that the participant “feels completely here in the physical environment” and 20 indicated that the participant “feels completely there in the virtual environment”. This rating system is based on those used in previous studies (IJsselsteijn et al., 2001; Clifton and Palmisano, 2019). Cybersickness was measured using the Fast Motion Sickness (FMS) scale (Keshavarz and Hecht, 2011). The FMS provides a single discrete value per trial and is therefore a convenient method for making inter-trial comparisons. It was originally validated against the Kennedy Simulator Sickness Questionnaire (SSQ; Kennedy et al., 1993). Although the FMS indicates only the severity of cybersickness, not its symptoms, it requires far less time than the SSQ for participants to complete (Keshavarz and Hecht, 2011). To gain insight into the overall level of cybersickness generated by participation in this study, and the symptoms experienced, we did, however, have participants complete the SSQ before and after their HMD VR testing.
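Because the instability scale anchors (0, 10 and 20) correspond to scene motion of zero, one and two head displacements, a hypothetical reference rating can be derived from the linear gain alone. Assuming the rendered head displacement is g times the physical displacement, the scene moves with the head by (1 − g) head displacements, giving a geometric rating of 10 × (1 − g). This is an illustrative reference line for comparison with observed ratings, not a model fitted in the study, and it ignores the angular and off-axis head movements that also occurred.

```python
QUEST_GAINS = (-1.0, -0.5, -0.25, 0.0, 0.25, 0.5, 1.0)


def geometric_instability_rating(gain: float) -> float:
    """Rating on the 0-20 scale implied by the rendered geometry alone.

    Assumes rendered head displacement = gain * physical displacement, so the
    scene moves with the head by (1 - gain) head displacements; the anchors
    0, 10 and 20 correspond to 0, 1 and 2 head displacements.
    """
    rating = 10.0 * (1.0 - gain)
    return min(20.0, max(0.0, rating))  # keep within the 0-20 response range


if __name__ == "__main__":
    for g in QUEST_GAINS:
        print(f"gain {g:+.2f} -> {geometric_instability_rating(g):.1f}")
```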

Statistical Analysis

For data obtained using the Oculus Quest, participant reports of perceived scene instability and spatial presence were analyzed using repeated-measures ANOVAs. A Poisson mixed model was used to test the effects of linear gain and viewing type on cybersickness. We also correlated these perceptual outcome measures against one another to identify any perceptual interrelationships. For data obtained using the Oculus Go, we used repeated-measures t-tests to assess whether our outcome measures differed from the means of the Correct Compensation and No Compensation conditions obtained using the Oculus Quest. For the Oculus Quest, we also assessed the overall amplitudes of participants' 6DOF head movements to determine how consistent they were across viewing conditions. We also verified that angular head rotation was minimal and comparable between tasks performed on the Oculus Quest and Go HMDs.

Results

Oculus Quest

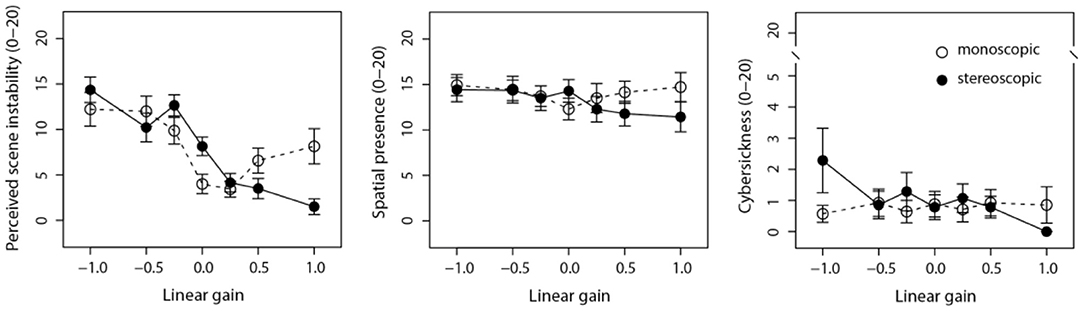

Each of our three outcome metrics is plotted as a function of the linear gain imposed on the Oculus Quest in Figure 3. Results from monoscopic viewing are represented by open points and dashed lines, and results from stereoscopic viewing by dark points and solid lines.

Figure 3. Outcome measures plotted as a function of linear gain on the Oculus Quest HMD. Mean perceived scene instability plotted as a function of linear gain (Left). Mean spatial presence plotted as a function of linear gain (Middle). Mean cybersickness ratings plotted as a function of linear gain (Right). Dark points and solid lines show data for stereoscopic viewing, open points and dashed lines show data for monoscopic viewing. Error bars show standard errors of the mean.

Perceived scene stability improved as the linear gain increased from negative to positive (inverse to correct) compensation (i.e., perceived scene instability was reduced). A repeated-measures ANOVA found a significant main effect of linear gain on perceived scene instability (F(6, 78) = 19.71, p < 0.001). There was no main effect of viewing type (stereoscopic or monoscopic) on perceived scene instability (F(1, 13) = 0.04, p = 0.84). However, there was a significant interaction between linear gain and viewing type on perceived scene instability (F(6, 78) = 7.29, p < 0.001). This interaction reflects greater perceived scene instability for monoscopic than for stereoscopic viewing in the correct-compensation condition, but lower perceived scene instability for monoscopic viewing in the no-compensation condition.

Spatial presence did not vary significantly with linear gain. A repeated-measures ANOVA found no main effect of linear gain on spatial presence (F(6, 78) = 1.41, p = 0.22). There was also no main effect of viewing type on spatial presence (F(1, 13) = 1.68, p = 0.22), and no interaction between linear gain and viewing type (F(6, 78) = 1.66, p = 0.14). These results suggest that spatial presence was robust to changes in linear gain.

Many of our participants consistently reported zero cybersickness across all levels of gain. Seven participants reported cybersickness in at least one stereoscopic viewing condition, six of whom also reported cybersickness in monoscopic viewing conditions; the other seven did not report any cybersickness during the study. Because of the large number of zero ratings, we used a Poisson mixed model with viewing type and gain as fixed-effect factors and trial order as a separate time-varying covariate. For this analysis, monoscopic viewing was coded as 0 and stereoscopic viewing as 1. We treated linear gain as a numeric variable, based on the assumption that the overall trend is linear, as suggested by Figure 3 (Right). Neither linear gain (β = −0.78, SE = 1.24, p = 0.53) nor viewing type (β = +2.73, SE = 1.62, p = 0.09) had a significant fixed effect on cybersickness. However, there was a significant interaction between viewing type and gain (β = −0.88, SE = 0.29, p = 0.002). Trial order also had a significant effect on reported cybersickness (β = +0.06, SE = 0.02, p = 0.004). Because monoscopic viewing was coded as 0, the gain coefficient describes monoscopic viewing, so we could not detect any significant effect of linear gain on cybersickness under monoscopic viewing conditions. However, the significant interaction indicates that the effect of linear gain on cybersickness was significantly different under stereoscopic viewing conditions.
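Because the model is on the log-rate scale and monoscopic viewing is coded 0, the reported point estimates can be combined to read off the gain slope for each viewing type. The sketch below does this arithmetic with the coefficients from the text; it uses point estimates only, ignores the random effects, and cannot give a standard error for the combined stereoscopic slope (that would require the coefficient covariance, which is not reported). The names are illustrative.

```python
import math

# Reported fixed-effect estimates (log-rate scale).
B_GAIN = -0.78         # gain slope when viewing is coded 0 (monoscopic)
B_VIEW = 2.73          # stereoscopic vs. monoscopic at gain 0
B_VIEW_X_GAIN = -0.88  # change in the gain slope for stereoscopic (coded 1)
B_TRIAL = 0.06         # per additional trial


def gain_slope(stereo: bool) -> float:
    """Log-rate change in FMS per unit increase in linear gain."""
    return B_GAIN + (B_VIEW_X_GAIN if stereo else 0.0)


def rate_ratio(log_effect: float) -> float:
    """Multiplicative change in the expected FMS count."""
    return math.exp(log_effect)


if __name__ == "__main__":
    for stereo in (False, True):
        s = gain_slope(stereo)
        print("stereo" if stereo else "mono", round(s, 2), round(rate_ratio(s), 2))
    print("per trial", round(rate_ratio(B_TRIAL), 3))
```

On these point estimates, each unit increase in gain multiplies the expected FMS rating by about 0.46 under monoscopic viewing but by about 0.19 under stereoscopic viewing (slope −1.66), and each additional trial multiplies it by about 1.06.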

To assess other possible order effects, we correlated each of the two remaining outcome metrics with the temporal order of all conditions performed by participants, irrespective of viewing condition or linear gain. Neither perceived scene instability (r = −0.01, p = 0.99) nor spatial presence (r = −0.06, p = 0.43) was significantly correlated with trial order.

We assessed whether the small average amounts of reported cybersickness could be accounted for by perceived scene instability. A Pearson product-moment correlation found a significant linear relationship between perceived scene instability and cybersickness severity during stereoscopic viewing (r = +0.81, p = 0.028). No significant correlation was detected between perceived scene instability and cybersickness during monoscopic viewing (r = −0.27, p = 0.55). These findings suggest that variations in perceived scene instability account for about 66% (r² = 0.81² ≈ 0.66) of the variance in cybersickness, but only under stereoscopic viewing.
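The variance-explained figure and the significance of these correlations can be checked with the standard t test for a Pearson correlation, t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. The text does not state n; the sketch assumes each correlation was computed across the seven gain-level means (n = 7), which is consistent with the reported p-values.

```python
import math


def r_squared(r: float) -> float:
    """Proportion of variance shared by the two variables."""
    return r * r


def t_from_r(r: float, n: int) -> float:
    """t statistic for testing a Pearson correlation (df = n - 2)."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


if __name__ == "__main__":
    n = 7  # assumption: seven gain-level means
    for label, r in (("stereo", 0.81), ("mono", -0.27)):
        print(label, round(r_squared(r), 3), round(t_from_r(r, n), 2))
```

For the stereoscopic correlation, t(5) ≈ 3.09 lies between the two-tailed critical values for p = 0.05 (2.571) and p = 0.02 (3.365), in line with p = 0.028; for the monoscopic correlation, |t(5)| ≈ 0.63 is well below 2.571.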

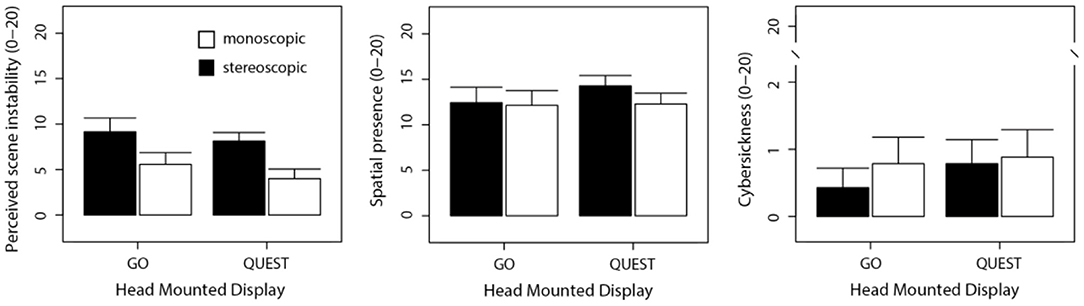

Oculus Go

The bar graphs in Figure 4 show the mean outcome metrics for the Oculus Go compared with the equivalent zero-gain (i.e., no linear compensation) conditions on the Oculus Quest. Repeated-measures ANOVAs were used to examine the effects of device type and viewing type on perception and well-being in these zero-gain conditions. For perceived scene instability, there was no main effect of device type (F(1, 13) = 2.61, p = 0.13). However, there was a significant main effect of viewing type (F(1, 13) = 19.02, p < 0.001), with stereoscopic viewing resulting in greater scene instability than monoscopic viewing. There was also a significant interaction between device type and viewing type for perceived scene instability (F(1, 13) = 19.02, p < 0.001). For spatial presence, no significant main effect of device type (F(1, 13) = 0.097, p = 0.76) or viewing type (F(1, 13) = 2.69, p = 0.13) was detected, and the interaction between device type and viewing type was not statistically significant (F(1, 13) = 2.69, p = 0.13). No device type or viewing type effects were significant for cybersickness (none of the conditions examined generated mean FMS scores that were statistically greater than zero). Of the 14 participants, 6 reported some cybersickness in the zero-gain conditions on the Oculus Quest and 4 on the Oculus Go.

Figure 4. Bar graphs of the outcome measures for the zero-gain conditions on the Oculus Go and Oculus Quest HMDs. Mean perceived scene instability (Left). Mean spatial presence (Middle). Mean cybersickness ratings (Right). Dark bars show data for stereoscopic viewing, white bars show data for monoscopic viewing. Error bars show standard errors of the mean.

Head Movements

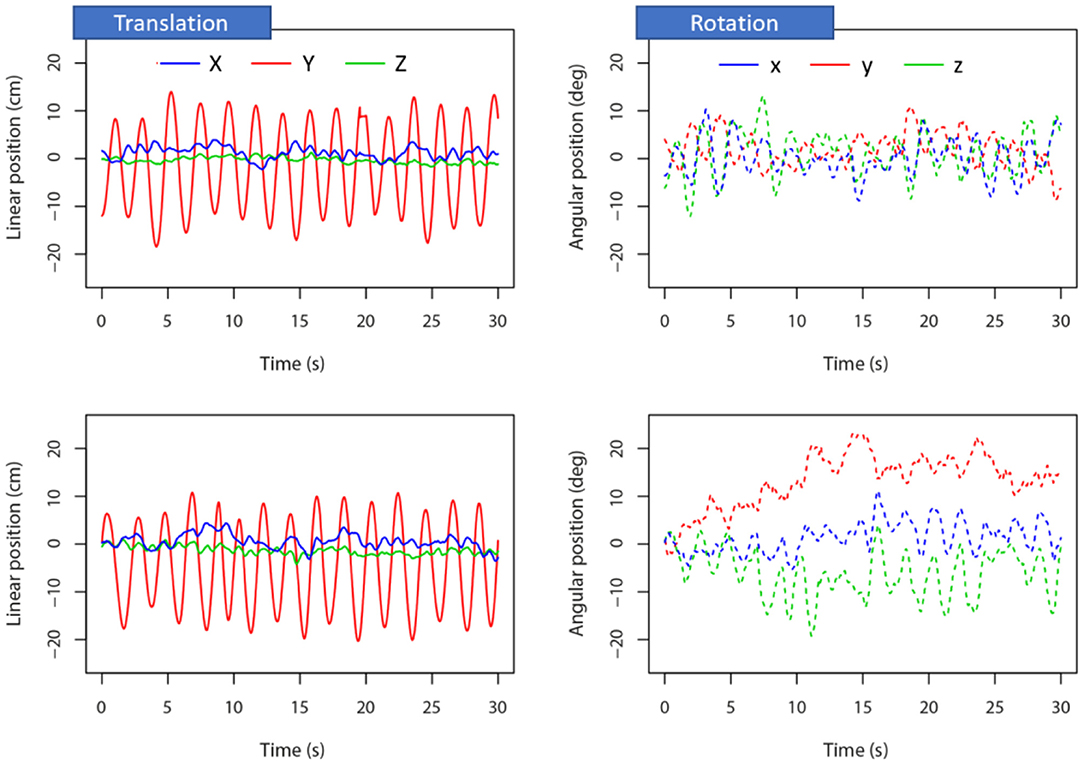

Typical head movements generated by a representative participant are shown in Figure 5, which plots time-series data for changes in linear and angular head position in the no-compensation condition under stereoscopic (top) and monoscopic (bottom) viewing. Further analysis of the overall peak-to-peak change in head displacement confirmed that there were no consistent differences in linear head movement across gain conditions. A three-way ANOVA found no significant main effect of viewing condition (F(1, 5) = 1.01, p = 0.36) or linear gain (F(6, 30) = 0.64, p = 0.70) on the amplitude of linear head displacement along the cardinal axes. However, there was a significant main effect of axis on the peak-to-peak amplitude of linear head displacement (F(2, 10) = 14.70, p = 0.001). Linear displacement of the head along the interaural axis (M = 20.9 cm, SD = 5.8 cm) was greater than naso-occipital (M = 4.0 cm, SD = 4.1 cm) and dorso-ventral (M = 8.0 cm, SD = 9.6 cm) head movements.

Figure 5. Head movements from a representative participant in the zero-gain condition on the Oculus Quest. Left panels show linear head position and right panels show angular head position. Upper panels show results from stereoscopic viewing and lower panels show results from monoscopic viewing. X corresponds to translation along the naso-occipital axis, Y to translation along the interaural axis, and Z to translation along the dorso-ventral axis (x, y, and z show rotations around the same axes).

Another three-way ANOVA detected no significant main effect of viewing condition (F(1, 5) = 0.087, p = 0.78) or linear gain (F(6, 30) = 0.75, p = 0.62) on the amplitude of angular head rotation around the cardinal axes. There was a significant main effect of axis on the peak-to-peak amplitude of angular head rotation (F(2, 10) = 9.87, p = 0.004). Mean angular displacement of the head around the vertical dorso-ventral axis (M = 11.5°, SD = 6.19°) was significantly greater than head rotation around the naso-occipital axis (M = 5.95°, SD = 2.42°) and the interaural axis (M = 5.73°, SD = 2.44°).
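The text does not describe exactly how peak-to-peak amplitudes were extracted from the tracking data. One simple reading, sketched below under that assumption, is the maximum minus the minimum of each axis over a trial; the same function applies to linear (cm) or angular (deg) traces. The synthetic 0.5 Hz interaural oscillation is illustrative only, not study data, and a range-based measure like this is sensitive to single outlying samples.

```python
import math


def peak_to_peak(samples):
    """Range of a sampled trace: maximum minus minimum."""
    return max(samples) - min(samples)


def per_axis_peak_to_peak(trace):
    """trace: sequence of (X, Y, Z) samples; returns peak-to-peak for each axis."""
    return tuple(peak_to_peak([sample[axis] for sample in trace]) for axis in range(3))


def synthetic_trace(amp_cm=10.0, freq_hz=0.5, fs_hz=72.0, seconds=4.0):
    """Illustrative interaural (Y) oscillation only; NOT study data."""
    n = int(seconds * fs_hz)
    return [(0.0, amp_cm * math.sin(2 * math.pi * freq_hz * k / fs_hz), 0.0)
            for k in range(n)]


if __name__ == "__main__":
    print(per_axis_peak_to_peak(synthetic_trace()))
```

A ±10 cm oscillation yields a peak-to-peak amplitude of 20 cm, the same order as the mean interaural value reported above.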

Discussion

When using the Oculus Quest (with linear head tracking), perceived scene instability increased as linear display gain was reduced from correct compensation toward inverse compensation. However, this manipulation only altered cybersickness in stereoscopic viewing conditions, and spatial presence did not differ significantly across changes in linear display gain. When using the Oculus Go (without linear head tracking), levels of perceived scene instability, presence and cybersickness were similar to those obtained with the Quest under comparable (i.e., zero-gain linear compensation) conditions. While monoscopic viewing in these zero-gain conditions improved perceived scene stability on both devices, it also appeared to reduce spatial presence (compared to stereoscopic viewing), although this difference was not statistically significant. Conversely, under correct compensation conditions, perceived scene stability was higher for stereoscopic viewing.

Our past research has shown that cybersickness is increased by brief exposures to angular visual-vestibular conflicts (produced by artificially inflating display lag; Feng et al., 2019; Palmisano et al., 2019, 2020; Kim et al., 2020). These increases in cybersickness were found even with exposures as brief as ~12 s. In these studies, participants were instructed to make active angular head movements over a comfortable functional range (similar in amplitude to those they would normally use when exploring virtual environments in most use cases). In the current study, instructing participants to make purely linear head movements over a comfortable biomechanical range did generate cybersickness, but its severity was lower, and it only varied significantly with linear gain under stereoscopic viewing conditions.

One potential explanation for the difference between the findings of our active linear conflict study and those of previous angular conflict studies is the salience of the vestibular stimulation involved. The angular range of 0.5 Hz head rotations in previous studies was typically about ±20°, which can produce peak angular accelerations of up to ~200°/s². These levels of angular head acceleration were sufficient to generate compelling cybersickness during head rotations in yaw (Feng et al., 2019; Palmisano et al., 2019, 2020) and pitch (Kim et al., 2020). In the current study, participants generated head movements over a 20 cm range on average. Hence, a ±10 cm head translation at 0.5 Hz should have generated brief peak accelerations of ~1.0 m/s². This vestibular stimulation is shorter and less intense than many of the linear head accelerations encountered in the real world (e.g., when driving a car; Bokare and Maurya, 2016). So, it is possible that longer-lasting and more intense linear visual-vestibular conflicts may be more provocative for cybersickness. However, an alternative interpretation of the current findings is that humans may be inherently more resistant to cybersickness generated by linear conflicts (at least compared to angular conflicts).
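The acceleration estimates above follow from differentiating a sinusoid twice: for x(t) = A sin(2πft), the peak acceleration is A(2πf)². The sketch below applies this to both cases, assuming the head motion is a pure sinusoid (real head movements are not, so actual peaks could differ).

```python
import math


def peak_sinusoid_acceleration(amplitude, freq_hz):
    """Peak acceleration of x(t) = amplitude * sin(2*pi*freq_hz*t).

    Differentiating twice gives -amplitude * (2*pi*f)**2 * sin(...), so the
    peak magnitude is amplitude * (2*pi*f)**2, in amplitude units per s^2.
    """
    return amplitude * (2.0 * math.pi * freq_hz) ** 2


if __name__ == "__main__":
    print(f"+/-20 deg at 0.5 Hz: {peak_sinusoid_acceleration(20.0, 0.5):.0f} deg/s^2")
    print(f"+/-0.10 m at 0.5 Hz: {peak_sinusoid_acceleration(0.10, 0.5):.2f} m/s^2")
```

At 0.5 Hz the factor (2πf)² equals π² ≈ 9.87, giving ≈197°/s² for ±20° rotations and ≈0.99 m/s² for ±10 cm translations, matching the ~200°/s² and ~1.0 m/s² figures in the text.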

Otolithic Contributions to Linear Sensory Conflict

As noted above, even with significant stimulation of the vestibular system, conditions of linear conflict might be less provocative than angular conflicts. The general lack of cybersickness found with linear visual-vestibular conflict could be attributed to functional differences between the otolith system and the semicircular canal (SCC) system. Eye-movement responses to angular head acceleration have a latency of around 10 ms (Collewijn and Smeets, 2000). Latencies can be longer in response to linear head accelerations: the latency of the otolith-ocular reflex is about 10 ms for high-acceleration linear head translations (Iwasaki et al., 2007), but can be as long as 34 ms for low-acceleration linear head translations (Bronstein and Gresty, 1988). The relatively low translational head accelerations generated by our participants would likely have engaged this longer-latency, low-frequency otolith-ocular system, which may be more tolerant of sensory conflict.

Neurological evidence further suggests that endogenous otolith-mediated conflicts might be less provocative than conflicts associated with SCC dysfunction. Neurologists routinely assess vestibular evoked myogenic potentials (VEMPs), which measure either short-latency click-evoked responses of the cervical muscles (cVEMPs; Colebatch et al., 1994) or short-latency ocular responses (oVEMPs) to high-frequency head vibrations administered to the hairline at Fz (e.g., Iwasaki et al., 2007). Neurophysiological studies in guinea pigs have verified that these clicks and vibrations selectively stimulate primary otolithic neurons (Murofushi et al., 1995; Curthoys et al., 2006). Surveys of hospital records have identified vestibular patients with normal vestibulo-ocular responses to angular head impulses, indicative of normal SCC function, but abnormal VEMPs, indicative of otolith dysfunction (Iwasaki et al., 2015; Fujimoto et al., 2018). Fujimoto et al. (2018) found that a subset (~14%) of these patients with otolith organ-specific vestibular dysfunction (OSVD) reported rotary vertigo attributed to dislodged otoconia in one of the SCCs, a condition known as benign paroxysmal positional vertigo (BPPV). Non-rotary disturbances were generally not reported, either by those diagnosed with BPPV or by the 47% of OSVD patients who were not formally diagnosed with a specific vestibular disorder. These findings suggest that (real or simulated) otolith dysfunctions per se are less likely than SCC dysfunctions to generate noteworthy subjective disturbances.

Based on this neurophysiological evidence, participants may be more perceptually tolerant of visual-vestibular sensory conflict generated by linear head motion during HMD VR. This may account for the limited cybersickness in the current study, compared with previous studies that found angular sensory conflicts generate compelling cybersickness (Palmisano et al., 2017, 2019; Feng et al., 2019; Kim et al., 2020). Combined with the brief, low-intensity translational accelerations imposed in the present study, such tolerance could explain why no significant cybersickness was reported. More salient linear conflicts may ultimately be required to generate provocative experiences of cybersickness in HMD VR. However, healthy people can find low-frequency, large-amplitude vertical or horizontal linear body translations highly sickening, so our predictions do not extend to these types of otolith-mediated cases, which can occur in transportation and laboratory settings (e.g., Vogel et al., 1982).

Functional Comparison of the Oculus Quest and Oculus Go HMDs

Significant cybersickness was not consistently reported on either the Quest or the Go in any of the linear head movement and viewing conditions examined in this study. In the zero-gain condition on the Oculus Quest and on the Oculus Go, stereoscopic viewing generated significantly greater presence than monoscopic viewing. Perceived scene instability was also significantly greater in the stereoscopic condition than in the monoscopic condition. However, under correct compensation on the Oculus Quest, perceived scene instability was lower for stereoscopic than for monoscopic viewing (as shown by the significant interaction between viewing condition and linear gain). We did not observe any main effects of linear gain or stereoscopic viewing on spatial presence when using the Oculus Quest. Overall, levels of perceived scene instability, presence and cybersickness were quite similar across the two displays when they were matched for functional limitations (i.e., with linear tracking disabled on the Quest), but functional advantages were achievable when using the Oculus Quest with a correct-compensation linear gain.

Dependence on Properties of the Visual Environment

In the current study, perceived scene instability was found to depend on the level of linear gain on the Oculus Quest. The steep decline in these ratings as linear gain increased from −0.25 through zero to +0.25 suggests that participants were more sensitive to scene instability inferred from a head-centric rather than a world-centric coordinate frame. Hence, participants appear to rely on the velocity of retinotopic motion to assess visual-vestibular compatibility when judging scene instability. The findings also suggested that perceived scene instability accounted for the cybersickness observed in stereoscopic viewing conditions; this relationship could depend on a retinotopic assessment of the conflict between visual and vestibular signals about lateral linear head velocity. These findings are somewhat similar to the perceived "angular" scene instability and cybersickness reported by Kim et al. (2020). However, spatial presence was generally robust to changes in linear gain, unlike the strong dependence on angular conflicts observed by Kim et al. (2020). These differences between the two studies are likely to reflect differences in the salience of the visual-vestibular conflicts involved, although properties of the displays may also account for them.
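To make the linear gain manipulation concrete, the sketch below assumes a convention that is not spelled out in this section: the virtual camera is translated by gain × the physical head translation, so that a gain of 1 is taken as correct compensation and a gain of 0 corresponds to disabled linear tracking (as on the Oculus Go). Under that assumption, a world-fixed scene appears to be carried along with the head by (1 − gain) × the head displacement. The function names are hypothetical.

```python
def virtual_camera_offset(head_offset_m: float, linear_gain: float) -> float:
    """Lateral virtual-camera offset for a physical head offset.

    ASSUMED (hypothetical) convention: camera = gain * head, so gain 1.0 is
    correct compensation and gain 0.0 disables linear tracking.
    """
    return linear_gain * head_offset_m


def apparent_scene_shift(head_offset_m: float, linear_gain: float) -> float:
    """Apparent displacement of a world-fixed scene relative to the room.

    A scene point p is drawn at (p - camera offset) relative to the head, but a
    stable world would place it at (p - head offset). The difference is
    (1 - gain) * head offset; positive values mean the scene moves with the head.
    """
    return head_offset_m - virtual_camera_offset(head_offset_m, linear_gain)


for gain in (-0.25, 0.0, 0.25, 1.0):
    shift_cm = 100 * apparent_scene_shift(0.10, gain)  # 10 cm head offset
    print(f"gain {gain:+.2f}: scene shifts {shift_cm:.1f} cm with the head")
```

Under this convention, negative gains (inverse compensation) make the scene move further than the head itself, while zero gain leaves it head-fixed.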

One major difference between these studies was the previous emphasis on display lag. Our past research on perceived scene instability has focussed on the effects of adding display lag (on both DVP and cybersickness) during angular head rotations (Palmisano et al., 2019; Kim et al., 2020). In the current study, no additional display lag was imposed; only the direction and velocity of visual motion relative to active linear head movements were changed. Display lag appeared to be important for generating cybersickness in that previous work. However, display lag per se may not be critical for the compelling experience of cybersickness; what matters instead may be the simulation of significant visual motion during angular (and possibly linear) head displacements.

Other research has shown that cybersickness tends to be higher when viewing displays with angular inverse compensation (Arcioni et al., 2019), a difference found to be significant when viewing full-field visual motion (Palmisano et al., 2017). During a head rotation, all display elements move at the same angular velocity. By contrast, linear head translations (like those used in the current study) generate motion parallax: nearer simulated visual features are displaced more than those simulated in the distance. The relatively stable visual elements simulated in the background could therefore serve as a rest frame (Prothero, 1998), constraining the generation of cybersickness. Although our participants were instructed to rate perceived scene instability, these ratings may have been based on any subset of the visual elements distributed in depth. After the experiment, some observers noted that in the monoscopic conditions the virtual environment appeared larger, but less stable (because it appeared less rigid). Nearer/larger objects appeared more unstable than smaller/farther objects. Future work should consider whether reducing the simulated depth of the scene increases perceived scene instability and generates cybersickness during conditions of inverse linear display compensation.
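The contrast between rotation and translation can be illustrated with simple pinhole geometry. The sketch below assumes a cube initially straight ahead, a 10 cm lateral head translation (matching the mean ±10 cm range reported above) and a few hypothetical cube depths; for a pure rotation about the eye, by contrast, every element shifts by the rotation angle regardless of its depth.

```python
import math

HEAD_SHIFT_M = 0.10               # lateral head translation
CUBE_DEPTHS_M = (0.5, 2.0, 10.0)  # hypothetical depths of cubes in the cloud
HEAD_YAW_DEG = 5.0                # hypothetical head rotation, for comparison


def translation_shift_deg(head_shift_m: float, depth_m: float) -> float:
    """Change in visual direction of a point straight ahead at depth_m
    after a lateral head translation (motion parallax)."""
    return math.degrees(math.atan2(head_shift_m, depth_m))


for depth in CUBE_DEPTHS_M:
    parallax = translation_shift_deg(HEAD_SHIFT_M, depth)
    # For a rotation about the eye, the shift is the same at every depth.
    print(f"depth {depth:4.1f} m: translation {parallax:5.2f} deg, "
          f"rotation {HEAD_YAW_DEG:.2f} deg")
```

The nearest cube shifts by about 11 degrees while the farthest shifts by well under 1 degree, which is why distant elements could act as a comparatively stable background during translation.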

In the present study, we rendered 3D cubes distributed in depth to create a volumetric cloud with geometric perspective and size cues to the depth of the scene. Stereoscopic viewing also enhanced the appearance of depth in the display. Although we did not assess the apparent size of the environment, some participants informally reported after the experiment that the scene appeared larger in scale when viewed monoscopically. The "no linear compensation" displays may have appeared more stable with monoscopic viewing because the elements appeared farther away and provided less information about their organization in depth. Using an environmental simulation with intrinsic perspective (e.g., a textured ground plane) may therefore increase visual sensitivity to scene instability under these conditions.

Ultimately, industry developments in optimizing render times are expected to further improve user experiences in a variety of VR applications by enhancing image quality and minimizing cybersickness. In this study, linear conflicts appeared to be better tolerated than the angular conflicts generated by display lag in our previous studies. Render quality (and hence render time) could therefore be adjusted dynamically during the simulation, based on the amount of linear or angular head movement made by users. This may have critical benefits for GPU rendering architectures when near-photorealistic rendering is preferred for AR or VR applications and planet-scale XR (Xie et al., 2019, 2020).

Suggested Design Guidelines

It is important to consider the implications of the current findings for the future design of HMD VR hardware and software. Our collective findings across studies suggest that the linear visual-vestibular sensory conflicts examined in the present study may be less provocative than the self-generated angular conflicts we examined during VR use in Kim et al. (2020). This remains to be confirmed by a direct within-study comparison with a larger sample, additional controls for carryover effects, tighter control of linear and angular head movements, and an assessment of the contribution of angular versus linear movement in the absence of artificially introduced VR conflict. One possible interpretation of these findings is that users are more tolerant of linear conflicts than of angular conflicts. It would therefore be strategic to prioritize innovations that reduce angular conflicts over those that reduce linear conflicts. For example, software methods used to reduce render time (e.g., foveated rendering or reduced rendering quality) could be applied dynamically depending on the instantaneous angularity or linearity of head movements. Such dynamic rendering may also need to depend on scene content. Users' tolerance of linear sensory conflict may decline when a structured ground plane is used, and this decline could be exacerbated by rendering diffuse or specular reflectance properties that are informative of surface shape and gloss (Honson et al., 2020; Ohara et al., 2020). In these situations, it may be necessary to rely on rest frames that provide users with a stable, world-centric frame of reference (Prothero, 1998). This may help to reduce perceived scene instability, which was found to be positively correlated with cybersickness in a recent study (Kim et al., 2020).
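One way the guideline above could be expressed in software is as a per-frame policy that trades render quality for render time when head movement is predominantly angular. The sketch below is purely illustrative: the thresholds, the two quality levels and the ground-plane flag are hypothetical choices, not values derived from this or previous studies, and a real system would obtain head velocities from its own tracking runtime.

```python
from enum import Enum

# HYPOTHETICAL thresholds, for illustration only.
ANGULAR_SPEED_LIMIT_DEG_S = 30.0
LINEAR_SPEED_LIMIT_M_S = 0.3


class RenderQuality(Enum):
    FULL = "full"        # highest image quality, longer render time
    REDUCED = "reduced"  # e.g. stronger foveation, shorter render time


def choose_render_quality(angular_speed_deg_s: float,
                          linear_speed_m_s: float,
                          structured_ground_plane: bool = False) -> RenderQuality:
    """Pick a render quality for the next frame from instantaneous head speeds."""
    # Angular conflicts appear more provocative, so prioritize low latency first.
    if angular_speed_deg_s > ANGULAR_SPEED_LIMIT_DEG_S:
        return RenderQuality.REDUCED
    # Linear conflicts may become less tolerable with a structured ground plane.
    if structured_ground_plane and linear_speed_m_s > LINEAR_SPEED_LIMIT_M_S:
        return RenderQuality.REDUCED
    return RenderQuality.FULL


print(choose_render_quality(45.0, 0.05))       # fast head rotation
print(choose_render_quality(5.0, 0.31))        # mostly linear, point cloud
print(choose_render_quality(5.0, 0.31, True))  # mostly linear, ground plane
```

Keeping the scene-content check separate from the angular check reflects the suggestion that tolerance of linear conflict, unlike angular conflict, may depend on whether a structured ground plane is present.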

Potential Limitations

The large number of zero scores for cybersickness may reflect statistical censoring (a floor effect) in the reported magnitude of cybersickness experienced by our participants. However, we believe that these results indicate that linear visual-vestibular conflicts are less likely to generate cybersickness. In previous work, we found that angular conflicts during head rotation generated significant levels of cybersickness, reaching ~20% of the FMS maximum of 20 (Feng et al., 2019; Palmisano et al., 2019; Kim et al., 2020). The minimal cybersickness reported in the present study was obtained with considerably longer HMD VR exposures (30 s) than those used in these previous angular conflict studies (12 s). Even if each censored zero score were treated as the smallest reportable non-zero value (a rating of 1), the magnitude of the effect would be no greater than 5% of the FMS range (1/20). Hence, even after allowing for statistical censoring, linear sensory conflicts still do not appear to be as provocative of cybersickness as angular conflicts (at least for the virtual environment used in this study). Future research using an articulated ground plane will help to determine whether this finding generalizes beyond 3D point-cloud virtual environments.

Researchers should take care to mitigate carryover effects between trials, caused either by cybersickness building from trial to trial or, conversely, by adaptation to the stimuli reducing cybersickness overall (compared with what would have been present without such adaptation). The current study did not allow pauses long enough to confidently rule out carryover effects, and our verification that symptoms had subsided between trials does not guarantee that sickness sensitization in one trial did not carry over to the next. Nevertheless, the reported symptoms in this study were infrequent and low in severity when felt, which implies there was little overt sickness to carry over from trial to trial. However, we observed a significant effect of trial order, which is consistent with a build-up of cybersickness over successive trials. To address these potential limitations, future studies should allow more time between trials to reduce the likelihood of cybersickness persisting or even accumulating across conditions. It would also be valuable to consider to what extent variations in cybersickness across successive trials could be subject to learning and sensorimotor recalibration (Wilke et al., 2013). The oscillatory head movements used in our study were also very unusual; our findings may therefore have limited generalizability to the linear (and angular) head movements that typically occur in everyday VR use.
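Counterbalancing cannot remove carryover, but it can balance first-order carryover across participants. As one illustration (not the procedure used in this study), the sketch below builds a Williams-style balanced Latin square, in which every condition immediately follows every other condition exactly once across the set of orders. It handles an even number of conditions only; odd numbers require a doubled design that is not shown.

```python
def balanced_latin_square(n_conditions: int) -> list[list[int]]:
    """Williams balanced Latin square for an even number of conditions.

    Row r is the presentation order for participant r (mod n); entries are
    condition indices 0..n-1.
    """
    n = n_conditions
    if n < 2 or n % 2:
        raise ValueError("this sketch supports an even number of conditions only")
    # First row follows the pattern 0, 1, n-1, 2, n-2, 3, ...
    first = [(j + 1) // 2 if j % 2 else (n - j // 2) % n for j in range(n)]
    # Remaining rows are cyclic shifts of the first row.
    return [[(c + r) % n for c in first] for r in range(n)]


for order in balanced_latin_square(4):
    print(order)
```

With four conditions this yields the orders [0, 1, 3, 2], [1, 2, 0, 3], [2, 3, 1, 0] and [3, 0, 2, 1], so each condition also appears once in every serial position.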

It should be noted that angular self-motion can elicit symptoms even when no artificial sensory conflict, such as ours, has been introduced. Research by Bouyer and Watt (1996a,b,c) showed that torso rotation can generate motion sickness over a period of 30 min. This motion sickness was found to habituate over 3–4 days (Bouyer and Watt, 1996a). The habituation-related decline in motion sickness was not associated with changes in the gain of the angular vestibulo-ocular reflex (aVOR) during active oscillatory head rotation at 1–2 Hz (Bouyer and Watt, 1996a). However, the amplitude of these active head movements was found to increase with measured declines in aVOR (Bouyer and Watt, 1996b,c). This suggests that participants may unintentionally generate different active head movements under conditions that alter vestibular function. In the current study, the amplitude of head movements was consistent across conditions, despite the imposed changes in visual-vestibular coupling. The 30 s duration of our head-displacement task was also much shorter than the torso rotations used by Bouyer and Watt, reducing the likelihood of any significant adaptation. This evidence appears to support the view that linear visual-vestibular conflicts are less provocative than angular conflicts. Although the literature offers evidence that comparable angular motion in a normal room can be sickening, no such evidence has been reported for comparable linear motion. It will therefore be important for future studies to compare our experimental angular and linear self-motion conditions with identical movements made in a normal room without any virtual conflict.

Another potential limitation is the lack of feedback on head movements made during the linear head displacement tasks. Overall, linear head translation along the interaural axis was the dominant component of the head movements generated by our participants. However, these head movements also contained small linear translations in other directions and small amounts of 3D angular rotation. Some of these extraneous head movements could be responsible for the cybersickness reported here. Future studies could reduce these undesired head movements by providing real-time feedback about tracked head movements, which should help users control their head movements more precisely. However, such feedback might introduce attentional effects, which we chose to avoid in the design of the current study. Although small inadvertent angular head rotations might have been more visually salient in the zero-gain conditions, angular head movements were correctly compensated at all times. This arrangement should have mitigated any effects of these angular head rotations on the generation of cybersickness.

Given the potential role of the linear VOR and gaze holding, future studies should also consider the role of gaze. Although we asked participants to look deep into the virtual environment, it is difficult to ensure that gaze was constrained in depth without eye tracking. Eye movements may influence experiences of the virtual environment by modifying the pattern of retinal motion generated by optic flow (Kim and Khuu, 2014; Fujimoto and Ashida, 2020). It would therefore be advantageous to assess whether vestibular-mediated gaze holding in depth also influences the effect of linear gain on perceived scene instability, presence and cybersickness.
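The potential importance of gaze depth follows from geometry: to hold gaze on a target during lateral head translation, the eye must rotate at roughly the head's linear velocity divided by the fixation distance. The sketch below applies this small-angle approximation to the ±10 cm, 0.5 Hz movements described earlier, assuming sinusoidal motion (peak velocity = amplitude × 2πf) and a target near straight ahead; the fixation distances are hypothetical.

```python
import math


def peak_velocity(amplitude_m: float, frequency_hz: float) -> float:
    """Peak velocity of x(t) = A*sin(2*pi*f*t), i.e. A * 2*pi*f."""
    return amplitude_m * 2.0 * math.pi * frequency_hz


def eye_velocity_for_gaze_holding(head_velocity_m_s: float,
                                  fixation_distance_m: float) -> float:
    """Eye rotation speed (deg/s) needed to keep a near-central target foveated.

    Small-angle approximation: omega = v / d (rad/s), converted to deg/s.
    """
    return math.degrees(head_velocity_m_s / fixation_distance_m)


v_peak = peak_velocity(0.10, 0.5)  # about 0.31 m/s
for distance in (0.5, 2.0, 10.0):  # hypothetical fixation distances
    speed = eye_velocity_for_gaze_holding(v_peak, distance)
    print(f"{distance:4.1f} m: {speed:5.1f} deg/s")
```

This gives roughly 36, 9 and 1.8 deg/s at 0.5, 2 and 10 m, so whether participants fixated near or far elements would substantially change the compensatory eye movements, and hence the retinal motion, produced by the same head movement.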

Our sample was predominantly male (11 vs. 3), but because the comparison between HMD devices was made within subjects, this imbalance should have been appropriately controlled for. Previous studies have reported sex differences when using HMDs, whereby females tend to experience more severe cybersickness, or to experience it sooner, than males (Munafo et al., 2017; Curry et al., 2020). However, such sex differences were not supported by recent systematic reviews of the literature on cybersickness (Grassini and Laumann, 2020) and motion sickness more generally (Lawson, 2014). Stanney et al. (2020) showed that this effect is principally attributable to HMDs having fixed disparities that accommodate the average interpupillary distance of males better than that of females. The fixed disparities of mobile VR devices like the Oculus Go could therefore be an enduring, systemic contributor to cybersickness onset and severity. However, given that we compared cybersickness between devices in a counterbalanced order, we propose that the limited effect we observed is not due to the participant pool being primarily male. In our recent study on angular sensory conflict, all participants (male and female) experienced compelling cybersickness when short-duration angular visual-vestibular conflicts were imposed for 12 s. The limited cybersickness reported here with longer viewing times (30 s) suggests that linear conflicts do not generate compelling cybersickness, at least under the stimulus conditions we imposed. Future studies should consider whether other displays (e.g., a simulated ground plane) amplify any effects of linear conflict on cybersickness.

Conclusion

While linear visual-vestibular conflicts (produced by desynchronising visual and vestibular cues to linear head displacement) can generate perceived scene instability, they do not appear to significantly reduce presence or increase the likelihood or severity of cybersickness. Linear conflicts on the Oculus Go produced experiences very similar to those on the Oculus Quest with linear head tracking disabled. These findings suggest that the visual system is neurophysiologically tolerant of visual-otolith conflicts generated by brief, low-acceleration head movements. This could explain why positional time warping algorithms have not been prioritized to date: active linear head movements are less likely than angular ones to induce sensory conflicts that generate significant cybersickness (angular conflicts are known to be provocative). Future studies will hopefully identify the visual-otolithic constraints under which linear sensory conflicts might contribute to cybersickness during active and passive exploration of virtual environments in HMD VR.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Human Research Ethics Advisory Panel, University of New South Wales, Australia. The participants provided their written informed consent to participate in this study.

Author Contributions

JK created the stimuli and WL and JK collected the data. JK analyzed the data. All authors interpreted the findings and wrote the article. All authors contributed to the design of the study.

Funding

This work was supported by Australian Research Council (ARC) Discovery Project (DP210101475).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2021.582156/full#supplementary-material

References

Adelstein, B., Lee, T., and Ellis, S. (2003). Head tracking latency in virtual environments: psychophysics and a model, in Proceedings of the Human Factors and Ergonomics Society 47th Annual Meeting (Santa Monica, CA: HFES), 2083–2087. doi: 10.1177/154193120304702001

Allison, R., Harris, L., Jenkin, M., Jasiobedzka, U., and Zacher, J. (2001). Tolerance of temporal delay in virtual environments. Proc. IEEE Virtual Real. 2001, 247–254. doi: 10.1109/VR.2001.913793

Arcioni, B., Palmisano, S., Apthorp, D., and Kim, J. (2019). Postural stability predicts the likelihood of cybersickness in active HMD-based virtual reality. Displays 58, 3–11. doi: 10.1016/j.displa.2018.07.001

Ash, A., Palmisano, S., and Kim, J. (2011). Vection in depth during consistent and inconsistent multisensory stimulation. Perception 40, 155–174. doi: 10.1068/p6837

Bokare, P. S., and Maurya, A. K. (2016). Acceleration-deceleration behaviour of various vehicle types, in World Conference on Transport Research—WCTR 2016 (Shanghai), 10–15.

Bouyer, L. J., and Watt, D. G. (1996a). Torso rotation experiments; 1: adaptation to motion sickness does not correlate with changes in VOR gain. J. Vestib. Res. 6, 367–375. doi: 10.3233/VES-1996-6505

Bouyer, L. J., and Watt, D. G. (1996b). Torso rotation experiments; 2: gaze stability during voluntary head movements improves with adaptation to motion sickness. J. Vestib. Res. 6, 377–385. doi: 10.3233/VES-1996-6506

Bouyer, L. J., and Watt, D. G. D. (1996c). Torso rotation experiments; 3: effects of acute changes in vestibular function on the control of voluntary head movements. J. Vestib. Res. 6, 387–393. doi: 10.3233/VES-1996-6507

Bronstein, A. M., and Gresty, M. A. (1988). Short latency compensatory eye movement responses to transient linear head acceleration: a specific function of the otolith-ocular reflex. Exp. Brain Res. 71, 406–410. doi: 10.1007/BF00247500

Chen, E., Luu, W., Chen, R., Rafik, A., Ryu, Y., Zangerl, B., et al. (2020). Virtual reality improves clinical assessment of the optic nerve. Front. Virtual Real. 1:4. doi: 10.3389/frvir.2020.00004

Clifton, J., and Palmisano, S. (2019). Effects of steering locomotion and teleporting on cybersickness and presence in HMD-based virtual reality. Virtual Real. 24, 453–468. doi: 10.1007/s10055-019-00407-8

Colebatch, J. G., Halmagyi, G. M., and Skuse, N. F. (1994). Myogenic potentials generated by a click evoked vestibulocollic reflex. J. Neurol. Neurosurg. Psychiatry 57, 190–197.

Collewijn, H., and Smeets, J. B. (2000). Early components of the human vestibulo-ocular response to head rotation: latency and gain. J. Neurophysiol. 84, 376–389. doi: 10.1152/jn.2000.84.1.376

Curry, C., Li, R., Peterson, N., and Stoffregen, T. A. (2020). Cybersickness in virtual reality head-mounted displays: examining the influence of sex differences and vehicle control. Int. J. Hum.–Comput. Interact. 36, 1161–1167. doi: 10.1080/10447318.2020.1726108

Curthoys, I. S., Kim, J., McPhedran, S. K., and Camp, A. J. (2006). Bone conducted vibration selectively activates irregular primary otolithic vestibular neurons in the guinea pig. Exp. Brain Res. 175, 256–267. doi: 10.1007/s00221-006-0544-1

Feng, J., Kim, J., Luu, W., and Palmisano, S. (2019). Method for estimating display lag in the Oculus Rift S and CV1. SA '19: SIGGRAPH Asia 2019 Posters 39, 1–2. doi: 10.1145/3355056.3364590

Fujimoto, C., Suzuki, S., Kinoshita, M., Egami, N., Sugasawa, K., and Iwasaki, S. (2018). Clinical features of otolith organ-specific vestibular dysfunction. Clin. Neurophysiol. 129, 238–245. doi: 10.1016/j.clinph.2017.11.006

Fujimoto, K., and Ashida, H. (2020). Roles of the retinotopic and environmental frames of reference on vection. Front. Virtual Real. 1:581920. doi: 10.3389/frvir.2020.581920

Grassini, S., and Laumann, K. (2020). Are modern head-mounted displays sexist? A systematic review on gender differences in HMD-mediated virtual reality. Front. Psychol. 11:1604. doi: 10.3389/fpsyg.2020.01604

Honson, V., Huynh-Thu, Q., Arnison, M., Monaghan, D., Isherwood, Z. J., and Kim, J. (2020). Effects of shape, roughness and gloss on the perceived reflectance of colored surfaces. Front. Psychol. 11:485. doi: 10.3389/fpsyg.2020.00485

IJsselsteijn, W., de Ridder, H., Freeman, J., Avons, S. E., and Bouwhuis, D. (2001). Effects of stereoscopic presentation, image motion, and screen size on subjective and objective corroborative measures of presence. Presence 10, 298–311. doi: 10.1162/105474601300343621

Iwasaki, S., Fujimoto, C., Kinoshita, M., Kamogashira, T., Egami, N., and Yamasoba, T. (2015). Clinical characteristics of patients with abnormal ocular/cervical vestibular evoked myogenic potentials in the presence of normal caloric responses. Ann. Otol. Rhinol. Laryngol. 124, 458–465. doi: 10.1177/0003489414564997

Iwasaki, S., McGarvie, L. A., Halmagyi, G. M., Burgess, A. M., Kim, J., Colebatch, J. G., et al. (2007). Head taps evoke a crossed vestibulo-ocular reflex. Neurology 68, 1227–1229. doi: 10.1212/01.wnl.0000259064.80564.21

Jang, S., Vitale, J. M., Jyung, R. W., and Black, J. B. (2017). Direct manipulation is better than passive viewing for learning anatomy in a three-dimensional virtual reality environment. Comput. Educ. 106, 150–165. doi: 10.1016/j.compedu.2016.12.009

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3, 203–220. doi: 10.1207/s15327108ijap0303_3

Keshavarz, B., and Hecht, H. (2011). Validating an efficient method to quantify motion sickness. Hum. Fact. 53, 415–426. doi: 10.1177/0018720811403736

Kim, J., Chung, C. Y. L., Nakamura, S., Palmisano, S., and Khuu, S. K. (2015). The oculus rift: a cost-effective tool for studying visual-vestibular interactions in self-motion perception. Front. Psychol. 6:248. doi: 10.3389/fpsyg.2015.00248

Kim, J., Luu, W., and Palmisano, S. (2020). Multisensory integration and the experience of scene instability, presence and cybersickness in virtual environments. Comput. Hum. Behav. 113:106484. doi: 10.1016/j.chb.2020.106484

Kim, J., and Palmisano, S. (2008). Effects of active and passive viewpoint jitter on vection in depth. Brain Res. Bull. 77, 335–342. doi: 10.1016/j.brainresbull.2008.09.011

Kim, J., and Palmisano, S. (2010). Visually mediated eye movements regulate the capture of optic flow in self-motion perception. Exp. Brain Res. 202, 355–361. doi: 10.1007/s00221-009-2137-2

Lawson, B. (2014). Motion sickness symptomatology and origins, in Handbook of Virtual Environments: Design, Implementation, and Applications, eds K. S. Hale and K. M. Stanney (New York, NY: CRC Press), 1043–1055. doi: 10.1201/b17360-29

Mania, K., Adelstein, B., Ellis, S., and Hill, M. (2004). Perceptual sensitivity to head tracking latency in virtual environments with varying degrees of scene complexity, in Proceedings of the ACM APGV; 1st Symposium (Los Angeles, CA), 39–48. doi: 10.1145/1012551.1012559

Munafo, J., Diedrick, M., and Stoffregen, T. A. (2017). The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Exp. Brain Res. 235, 889–901. doi: 10.1007/s00221-016-4846-7

Murofushi, T., Curthoys, I. S., Topple, A. N., Colebatch, J. G., and Halmagyi, G. M. (1995). Responses of guinea pig primary vestibular neurons to clicks. Exp. Brain Res. 103, 174–178. doi: 10.1007/BF00241975

Ohara, M., Kim, J., and Koida, K. (2020). The effect of material properties on the perceived shape of three-dimensional objects. i-Perception 11, 1–14. doi: 10.1177/2041669520982317

Palmisano, S., Allison, R. S., and Kim, J. (2020). Cybersickness in head-mounted displays is caused by differences in the user's virtual and physical head pose. Front. Virtual Real. 1:587698. doi: 10.3389/frvir.2020.587698

Palmisano, S., Allison, R. S., Schira, M. M., and Barry, R. J. (2015). Future challenges for vection research: definitions, functional significance, measures, and neural bases. Front. Psychol. 6:193. doi: 10.3389/fpsyg.2015.00193

Palmisano, S., Mursic, R., and Kim, J. (2017). Vection and cybersickness generated by head-and-display motion in the Oculus Rift. Displays 46, 1–8. doi: 10.1016/j.displa.2016.11.001

Palmisano, S., Szalla, L., and Kim, J. (2019). Monocular viewing protects against cybersickness produced by head movements in the oculus rift, in Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST) (Hong Kong). doi: 10.1145/3359996.3364699

Polcar, J., and Horejsi, P. (2015). Knowledge acquisition and cyber sickness: a comparison of VR devices in virtual tours. MM Sci. J. 613–616. doi: 10.17973/MMSJ.2015_06_201516

Prothero, J. D. (1998). The Role of Rest Frames in Vection, Presence and Motion Sickness (Ph.D. thesis), University of Washington, Seattle, WA.

Reason, J. (1978). Motion sickness: some theoretical and practical considerations. Appl. Ergon. 9, 163–167. doi: 10.1016/0003-6870(78)90008-X

Riva, G., and Gamberini, L. (2000). Virtual reality in telemedicine. Telemed. J. E-Health 6, 327–340. doi: 10.1089/153056200750040183

Roettl, J., and Terlutter, R. (2018). The same video game in 2D, 3D or virtual reality—how does technology impact game evaluation and brand placements? PLoS ONE 13:e0200724. doi: 10.1371/journal.pone.0200724

Skarbez, R., Brooks, F. Jr., and Whitton, M. (2017). A survey of presence and related concepts. ACM Comput. Surv. 50, 1–39. doi: 10.1145/3134301

Stanney, K., Fidopiastis, C., and Foster, L. (2020). Virtual reality is sexist: But it does not have to be. Front. Robot. AI 7:4. doi: 10.3389/frobt.2020.00004

Vogel, H., Kohlhaas, R., and von Baumgarten, R. J. (1982). Dependence of motion sickness in automobiles on the direction of linear acceleration. Eur. J. Appl. Physiol. 48, 399–405. doi: 10.1007/BF00430230

Weech, S., Kenny, S., and Barnett-Cowan, M. (2019). Presence and cybersickness in virtual reality are negatively related: a review. Front. Psychol. 10:158. doi: 10.3389/fpsyg.2019.00158

Wilke, C., Synofzik, M., and Lindner, A. (2013). Sensorimotor recalibration depends on attribution of sensory prediction errors to internal causes. PLoS ONE 8:e54925. doi: 10.1371/journal.pone.0054925

Xie, C., Fu, X., Chen, M., and Song, S. L. (2020). OO-VR: NUMA friendly object-oriented VR rendering framework for future NUMA-based multi-GPU systems, in Proceedings of the 46th International Symposium on Computer Architecture (ISCA '19) (New York, NY: Association for Computing Machinery) 53–65. doi: 10.1145/3307650.3322247

Keywords: virtual reality, presence, cybersickness, motion sickness, vestibular, head-mounted displays

Citation: Kim J, Palmisano S, Luu W and Iwasaki S (2021) Effects of Linear Visual-Vestibular Conflict on Presence, Perceived Scene Stability and Cybersickness in the Oculus Go and Oculus Quest. Front. Virtual Real. 2:582156. doi: 10.3389/frvir.2021.582156

Received: 10 July 2020; Accepted: 21 January 2021;

Published: 29 April 2021.

Edited by:

Kay Marie Stanney, Design Interactive, United States

Reviewed by:

Philip Lindner, Karolinska Institutet (KI), Sweden

Lihan Chen, Peking University, China

Copyright © 2021 Kim, Palmisano, Luu and Iwasaki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Juno Kim, juno.kim@unsw.edu.au
