- Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany

Latency is a key characteristic inherent to any computer system. Motion-to-Photon (MTP) latency describes the time between the movement of a tracked object and the corresponding movement rendered and depicted by computer-generated images on a graphical output screen. High MTP latency can degrade performance in interactive graphics applications and, even worse, can provoke cybersickness in Virtual Reality (VR) applications. Cybersickness can degrade VR experiences or render them completely unusable, and it can confound the findings of an otherwise sound experiment. Latency as a contributing factor to cybersickness therefore needs to be properly understood: its effects need to be analyzed, its sources identified, good measurement methods developed, and proper countermeasures designed in order to reduce potentially harmful impacts of latency on the usability and safety of VR systems. Research shows that latency can exhibit intricate timing patterns with various spiking and periodic behavior. These timing behaviors vary, yet most have been found to provoke cybersickness. Overall, latency can differ drastically between systems, which hinders the generalization of measurement results. This review article describes the causes and effects of latency with regard to cybersickness and reports on existing approaches to measure and report latency. The article thus provides readers with the knowledge to understand and report latency for their own applications, evaluations, and experiments. It should also help them to measure, identify, and finally control and counteract latency, and hence gain confidence in the soundness of empirical data collected during VR exposures. Low latency increases the usability and safety of VR systems.

1. Introduction

Cybersickness is a severe problem for the usage and safety of VR technology. It hinders both the broader adoption of VR technology and its overall usability. Cybersickness is closely related to simulator sickness and motion sickness; early research describes cybersickness as motion sickness in virtual environments (McCauley and Sharkey, 1992). Cybersickness is usually defined by a set of specific adverse symptoms that occur in combination with the use of certain technologies. These symptoms include disorientation, apathy, fatigue, dizziness, headache, increased salivation, dry mouth, difficulty focusing, eye strain, vomiting, stomach awareness, pallor, sweating, and postural instability (LaViola, 2000; Stone III, 2017; McHugh, 2019). They are shared with related definitions of sickness, even though their severity might vary. Stanney et al. (1997) argue that cybersickness is associated with more symptoms in the disorientation cluster of the Simulator Sickness Questionnaire (SSQ) (Kennedy et al., 1993) than simulator sickness. Note that the disorientation cluster contains several symptoms that do not all carry the explicit meaning of disorientation. Gavgani et al. (2018) show that motion sickness and cybersickness exhibit the same severity of symptoms in extreme cases. Bockelman and Lingum (2017) distinguish cybersickness from other definitions of sickness by its “cyber” source. We use the term cybersickness to describe sickness with the aforementioned symptoms induced by Virtual Reality or Augmented Reality applications that do not apply external forces on the user. External forces are exerted by motion platforms or other motor-actuated methods that move a user without the user's own effort. These VR or AR applications provide stimuli predominantly through visual perception.

Chang et al. (2020) review experiments that measure cybersickness and describe how often different subjective measurements are used. Out of 76 experiments, 58 (≈76%) use the SSQ (Kennedy et al., 1993). Less frequently used questionnaires are the Fast Motion Sickness scale (FMS, 6 experiments ≈ 8%, Keshavarz and Hecht, 2011), a forced-choice question (5 experiments ≈ 6.5%, Chen et al., 2011), the Misery Scale (MISC, 4 experiments ≈ 5%, Bos et al., 2010), the Motion Sickness Assessment Questionnaire (MSAQ, 3 experiments ≈ 4%, Gianaros and Stern, 2010), and the Virtual Environment Performance Assessment Battery (VEPAB, 3 experiments ≈ 4%, Lampton et al., 1994). In contrast, Davis et al. (2014) state that the Pensacola Diagnostic Index (Graybiel et al., 1968) is the “most widely used measure in motion sickness studies” (Davis et al., 2014, p. 6). They name the Nausea Profile (Muth et al., 1996) as another widely used questionnaire besides the SSQ and further list the Virtual Reality Symptom Questionnaire (Ames et al., 2005). Another questionnaire in use is the Motion Sickness Susceptibility Questionnaire (MSSQ) (Golding, 1998). Here again, it becomes apparent how close cybersickness is to simulator sickness and motion sickness, since questionnaires are often used for multiple sickness definitions. The listed questionnaires are in use for research on cybersickness, but care must be taken to understand their different uses and purposes. Many, like the SSQ, assess the sickness experienced at the time of answering the questionnaire, while others, like the MSSQ, ask about past experiences to gauge a person's susceptibility to sickness, which can influence the experience. The Nausea Profile is a scale for measuring nausea due to any cause rather than a motion sickness-specific scale, whereas the MSAQ from the same group targets motion sickness and describes subscales that further differentiate aspects of motion sickness.

There are different explanations of how cybersickness arises, and multiple factors influence it. Explanations for cybersickness often precede the term cybersickness itself: they were created for motion sickness or simulator sickness and then adopted for cybersickness. Rebenitsch and Owen (2016) and LaViola (2000) list and discuss the following theories of cybersickness: the sensory mismatch theory (Reason and Brand, 1975; Oman, 1990), the poison theory (Treisman, 1977), the postural instability theory (Riccio and Stoffregen, 1991), and the rest frame theory (Virre, 1996). Oman (1990) describes the sensory mismatch theory as possibly underlying multiple sickness definitions such as motion sickness and simulator sickness. Bles et al. (1998) adapt this statement to describe postural stability as underlying multiple sickness definitions.

Factors that evoke cybersickness are “rendering modes, visual display systems and application design” (Rebenitsch and Owen, 2016, p. 102) as well as hardware-specific factors. Rebenitsch and Owen (2016) describe the former factors but leave hardware-specific factors such as latency to be discussed in other publications. This review focuses on the contribution of latency to cybersickness. Other hardware-specific factors, such as tracking accuracy (Chang et al., 2016), are not covered. Latency describes the processing time incurred by the computer system used for the VR application. VR requires complex hardware and software to deliver the desired experience, and each part of the system contributes to the overall latency, both by itself and through its interaction with other parts.

Latency is an inherent property of computer system processing, is easily introduced into complex architectures, and is therefore the subject of many evaluations. Research on latency in virtual environments approaches the topic from different angles that mutually influence each other. Observed effects of latency on cybersickness necessitate research into the measurement and control of latency. Experiments that simulate latency provide further insight into its effects on cybersickness and user performance. Last but not least, latency in experiments performed in virtual environments needs to be reported in research articles. This review is thus organized as follows: First, we discuss experiments showing that latency contributes to cybersickness. Then, we describe ways to measure latency, which is essential for developing applications with consistent latency behavior. Finally, we show how measured latency is reported in research articles to illustrate latency patterns in experiments.

2. Effects

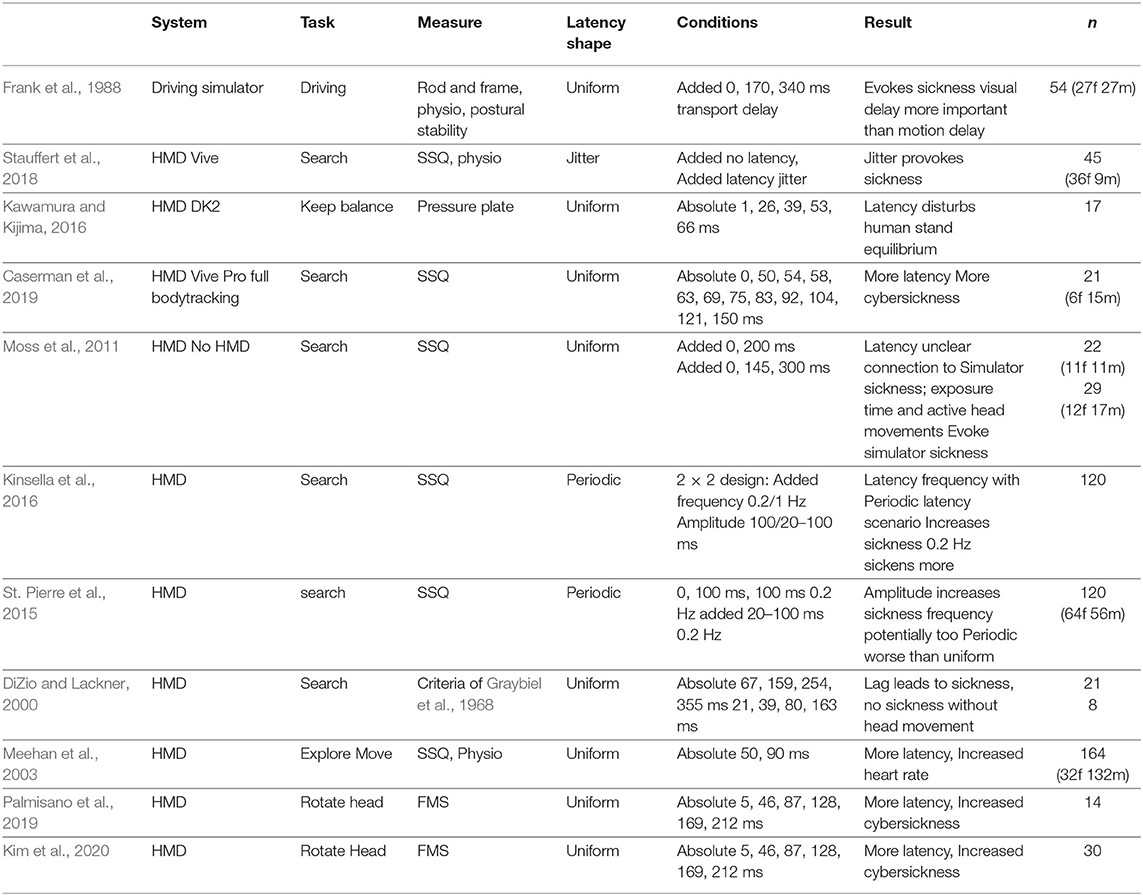

Table 1 gives an overview of studies showing that latency contributes to cybersickness. The researchers conducted experiments with latency as the independent variable and cybersickness as the dependent variable. Latency is manipulated to create conditions with different motion-to-photon latencies in the employed systems, and cybersickness is measured in each condition to compare sickness values between conditions. Researchers measure cybersickness with subjective questionnaires or objective physiological measurements. The most frequently used questionnaire in the listed papers is the SSQ, used in six of 11 papers (Meehan et al., 2003; Moss et al., 2011; St. Pierre et al., 2015; Kinsella et al., 2016; Stauffert et al., 2018; Caserman et al., 2019). Physiological measurements include postural stability or postural sway, heart rate, sweating, and galvanic skin response. We list postural stability separately from the other physiological measurements to distinguish the different cases of usage, as do Frank et al. (1988). Kawamura and Kijima (2016) only observe postural stability. Postural instability often correlates with visually-induced motion sickness (Riccio and Stoffregen, 1991), and some studies have found it to be predictive of visually-induced motion sickness (Arcioni et al., 2019). As physiological measurements, Meehan et al. (2003) and Stauffert et al. (2018) use only heart rate and galvanic skin response; their measurements showed an effect of increased latency on heart rate. Gavgani et al. (2018) argue that forehead sweating is the best physiological indicator of motion sickness, which shows the same symptoms as cybersickness in extreme cases. Their rollercoaster experiment finds only minor or moderate effects for heart rate and galvanic skin response. While heart rate may not be the best indicator of latency-induced cybersickness, the heart rate findings support the research that evaluates cybersickness with the SSQ.

Most research on latency and cybersickness tests only the effect of added static latency (Frank et al., 1988; DiZio and Lackner, 2000; Meehan et al., 2003; Moss et al., 2011; Kawamura and Kijima, 2016; Caserman et al., 2019; Palmisano et al., 2019; Kim et al., 2020). These studies introduce a fixed delay into the system and compare several such latencies against each other. This is based on the assumption that most observed latencies are close to one mean latency, which one fixed added latency per condition approximates. Experiments using this simple latency model consistently show that increased latency in the VR simulation leads to increased cybersickness or more disturbed postural equilibrium.
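Adding a fixed delay can be sketched as a first-in, first-out buffer of tracking samples. This is a minimal Python illustration under the assumption that latency is added in whole rendered frames; the class name `FixedDelay` and its parameters are hypothetical and not taken from any of the cited studies.

```python
from collections import deque


class FixedDelay:
    """Hypothetical helper that adds a constant delay of whole frames.

    Each call to push() takes the newest tracking sample and returns the
    sample that arrived `delay_frames` frames earlier.
    """

    def __init__(self, delay_frames: int, initial_sample: float) -> None:
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        # Pre-fill the buffer so that an output exists from the first frame on.
        self._buffer = deque([initial_sample] * delay_frames)

    def push(self, sample: float) -> float:
        self._buffer.append(sample)      # newest sample enters at the back
        return self._buffer.popleft()    # oldest sample leaves at the front


# Two frames of added delay at 90 Hz add roughly 2 * 11.1 ms = 22.2 ms.
delay = FixedDelay(delay_frames=2, initial_sample=0.0)
outputs = [delay.push(x) for x in [1.0, 2.0, 3.0, 4.0]]
print(outputs)  # [0.0, 0.0, 1.0, 2.0]
```

Because the delay is quantized to whole frames in this sketch, finer-grained latency conditions would need timestamped samples and interpolation.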

Movement itself is important for experiencing latency-induced cybersickness (DiZio and Lackner, 2000): without movement, the user might not notice the discrepancy between the real world and the virtual world that latency widens. An increase in head movement can increase cybersickness (Palmisano et al., 2019; Kim et al., 2020), and studies often involve a search task that requires head movement. However, Moss et al. (2011) found no influence of latency in an experiment with a lot of head movement. They report that the head movement itself evoked sickness. It may be that sickness from other sources was stronger than the latency-induced sickness, thereby masking it.

Taking into account that measured latency often shows irregular spikes, Stauffert et al. (2018) showed that not only uniform latency but also occasional latency spikes provoke cybersickness. St. Pierre et al. (2015) and Kinsella et al. (2016) show that periodic latency, such as that measured by Wu et al. (2013), contributes to cybersickness. They describe latency as consisting of a time-invariant and a periodic part, with the periodic part modeled as a sine wave. St. Pierre et al. (2015) argue that the sine's amplitude has more influence than its frequency, whereas Kinsella et al. (2016) observe the opposite.
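The latency models described above can be expressed as simple functions of time or frame index. The sketch below assumes a purely sinusoidal periodic part and, for simplicity, spikes at a fixed frame interval; the function names and parameter values are illustrative assumptions, not values from St. Pierre et al. (2015), Kinsella et al. (2016), or Stauffert et al. (2018).

```python
import math


def periodic_latency_ms(t_s: float, base_ms: float, amplitude_ms: float,
                        frequency_hz: float) -> float:
    """Time-invariant part plus a sinusoidal part, evaluated at time t_s."""
    return base_ms + amplitude_ms * math.sin(2.0 * math.pi * frequency_hz * t_s)


def spiking_latency_ms(frame: int, base_ms: float, spike_ms: float,
                       spike_every: int) -> float:
    """Constant latency with an additional spike every `spike_every` frames."""
    is_spike = frame > 0 and frame % spike_every == 0
    return base_ms + (spike_ms if is_spike else 0.0)


# Hypothetical parameters: 50 ms base latency, +/-10 ms sine at 0.5 Hz.
print(periodic_latency_ms(0.5, 50.0, 10.0, 0.5))  # 60.0
# Hypothetical parameters: 20 ms base latency, 100 ms spike every 3rd frame.
print([spiking_latency_ms(f, 20.0, 100.0, 3) for f in range(7)])
# [20.0, 20.0, 20.0, 120.0, 20.0, 20.0, 120.0]
```

In an experiment, the value returned for each frame would set the delay applied to that frame's tracking data.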

3. Measuring Latency

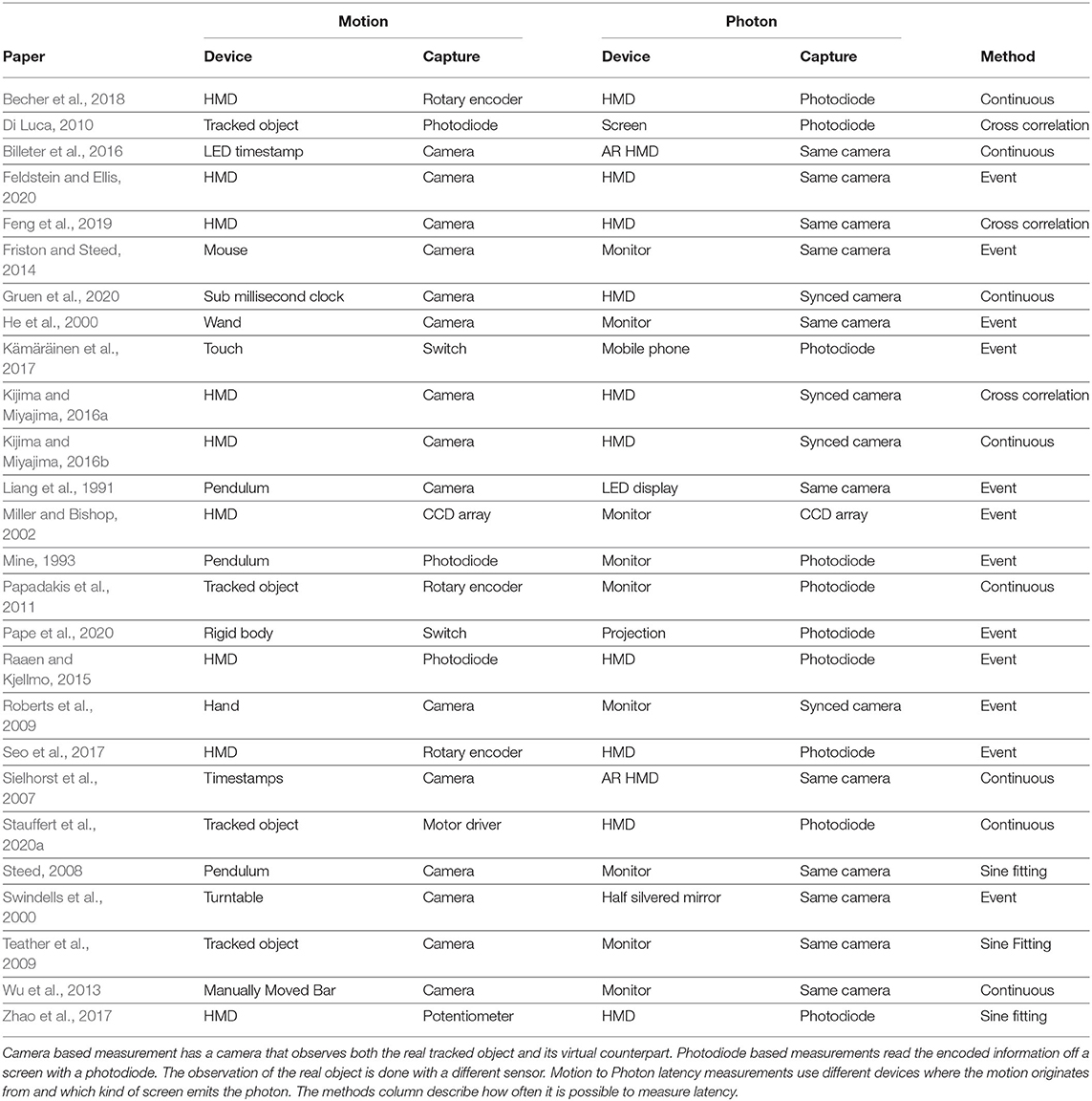

The contribution of latency to cybersickness necessitates controlling latency in every VR or AR application. High latency and especially latency spikes can often only be detected by measurements, which in turn indicate to researchers and other developers whether and where interventions are needed during the development process. Approaches to measure latency are numerous and differ in the amount of instrumentation they need and in the kind of latency they measure. Most approaches measure motion-to-photon latency, which is the time between a movement of some tracked object and the corresponding effect shown on a screen, conveyed by photons emitted from the screen. Different tracked objects can be used to signify movement in the measurement of motion-to-photon latency, such as motion controllers or Head-Mounted Displays (HMDs). The employed screens may be computer monitors, mobile phone screens, or AR/VR HMD screens. Motion-to-photon latency is also called end-to-end latency. Table 2 gives an overview of approaches.

Measurements determine the time difference between the motion of a tracked object and the resulting response on a screen. The observed motion can be the onset of a motion (Feldstein and Ellis, 2020), special characteristics during a motion such as the peak of acceleration (Friston and Steed, 2014), the end of a motion (Chang et al., 2016), arrival at a predetermined position (He et al., 2000), or a predetermined motion (Di Luca, 2010). A predetermined motion is usually a sinusoidal movement of a pendulum (Steed, 2008) or the circular movement of a turntable (Swindells et al., 2000). A motion can also be the passing of time in the form of timestamps (Sielhorst et al., 2007; Billeter et al., 2016; Gruen et al., 2020).

The screen shows either a copy of the motion (Roberts et al., 2009) or an encoded version of it (Becher et al., 2018). The system needs to track the tracked object, integrate it into its simulation, and show a generated image on the screen (Mine, 1993; Feldstein and Ellis, 2020). The necessary processing time means that the image on the screen is always delayed relative to the original, real motion. Additional steps, such as Remote Graphics Rendering (Kämäräinen et al., 2017) or using additional computers to process tracking information, lead to increased latency (Roberts et al., 2009).

A straightforward approach uses a camera to observe both the real and the virtual motion and determines the delay between the chosen motion feature in both. The analysis can be done by hand (Liang et al., 1991) or automated (Friston and Steed, 2014). Cameras trade spatial resolution for temporal resolution: high spatial resolution is needed to capture the real motion accurately, but high temporal resolution is needed to determine a precise latency value. One way around this dilemma is to fit the mathematical function of the known movement to the tracking data (Steed, 2008), which reduces the uncertainty caused by limited resolution.
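Fitting a known motion model can be sketched as follows, assuming the pendulum motion is a pure sinusoid of known frequency. The sinusoid is fitted by linear least squares on sine and cosine components, and the latency is read from the phase difference between the fitted real and virtual motion. This is an illustrative reformulation, not the exact procedure of Steed (2008); the function names are hypothetical.

```python
import numpy as np


def fit_phase(t: np.ndarray, x: np.ndarray, omega: float) -> float:
    """Least-squares fit of x(t) ~ a*sin(omega*t) + b*cos(omega*t) + c.

    Since a*sin(wt) + b*cos(wt) = A*sin(wt + phi) with phi = atan2(b, a),
    the fitted phase phi is returned.
    """
    design = np.column_stack([np.sin(omega * t), np.cos(omega * t), np.ones_like(t)])
    (a, b, _offset), *_ = np.linalg.lstsq(design, x, rcond=None)
    return float(np.arctan2(b, a))


def sine_fit_latency_s(t, real, virtual, frequency_hz: float) -> float:
    """Latency as the phase lag of the virtual motion behind the real motion."""
    t, real, virtual = (np.asarray(v, dtype=float) for v in (t, real, virtual))
    omega = 2.0 * np.pi * frequency_hz
    dphi = fit_phase(t, real, omega) - fit_phase(t, virtual, omega)
    # Wrap into [-pi, pi): only delays shorter than half a period are unambiguous.
    dphi = (dphi + np.pi) % (2.0 * np.pi) - np.pi
    return dphi / omega


# Synthetic example: 1 Hz pendulum-like motion, virtual copy lagging by 50 ms,
# both with measurement noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 4.0, 2000)
real = np.sin(2 * np.pi * t) + rng.normal(0.0, 0.05, t.size)
virtual = np.sin(2 * np.pi * (t - 0.050)) + rng.normal(0.0, 0.05, t.size)
print(round(sine_fit_latency_s(t, real, virtual, 1.0) * 1000, 1))  # close to 50 ms
```

Because the fit uses all samples at once, noise and limited resolution in individual samples largely average out, which illustrates why function fitting helps.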

Camera-based measurements do not work well with HMDs, because the HMD lenses distort the image such that the camera has to be placed very close to the lens. In this position, it can no longer record the real tracked object. Camera-based approaches therefore usually use a computer monitor as the observed screen. Some researchers remove the HMD lenses (Feldstein and Ellis, 2020) or use additional lenses that reverse the distortion (Becher et al., 2018).

An alternative is to observe the real motion separately from its virtual counterpart. The obvious extension is to use two synchronized cameras (Kijima and Miyajima, 2016b). More often, the real motion is observed by a photodiode that is covered by the moving object (Mine, 1993) or by a rotary encoder (Seo et al., 2017) that reports the orientation of the platform on which the tracked object is placed. The screen is monitored by one (Pape et al., 2020) or more photodiodes (Becher et al., 2018; Stauffert et al., 2020a). A photodiode has a high temporal resolution but can only measure one brightness value per measurement. To use photodiodes, the application under test needs to display its tracking information as brightness levels.
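An event-based photodiode measurement can be sketched as a search for threshold crossings in two channels: one observing the real motion (for example, a photodiode that is covered by the moving object) and one observing the screen. The sketch assumes that both channels were digitized synchronously on a common clock and that the application switches a screen patch from dark to bright in response to the motion; the thresholds, sample rate, and function names are hypothetical.

```python
from typing import Optional, Sequence


def first_index_at_or_above(signal: Sequence[float], threshold: float,
                            start: int = 0) -> Optional[int]:
    """Index of the first sample at or above `threshold`, or None."""
    for i in range(start, len(signal)):
        if signal[i] >= threshold:
            return i
    return None


def event_latency_ms(motion: Sequence[float], photodiode: Sequence[float],
                     sample_rate_hz: float, motion_threshold: float,
                     photo_threshold: float) -> Optional[float]:
    """Delay between a real-motion event and the screen brightness change.

    Assumes both channels were sampled synchronously on one clock.
    """
    i_motion = first_index_at_or_above(motion, motion_threshold)
    if i_motion is None:
        return None
    # Only search for the screen response after the motion event.
    i_photo = first_index_at_or_above(photodiode, photo_threshold, start=i_motion)
    if i_photo is None:
        return None
    return 1000.0 * (i_photo - i_motion) / sample_rate_hz


# Synthetic traces sampled at 10 kHz: motion event at sample 100, screen at 145.
motion = [0.0] * 100 + [1.0] * 900
screen = [0.0] * 145 + [1.0] * 855
print(event_latency_ms(motion, screen, 10_000, 0.5, 0.5))  # 4.5
```

A method like this yields one value per event; the setup then has to be reset (screen dark, photodiode uncovered) before the next event can be measured.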

The chosen method determines how many latency values are measured. Sine fitting (Steed, 2008; Teather et al., 2009; Zhao et al., 2017) and cross-correlation (Di Luca, 2010; Kijima and Miyajima, 2016b; Feng et al., 2019) report only one latency per measurement run. If the latency between an event and its effect on the screen is measured, the number of latency values that can be reported depends on the approach. Some methods need to provoke an event and then wait for the result before it is possible to measure again (Liang et al., 1991; He et al., 2000; Swindells et al., 2000; Miller and Bishop, 2002; Roberts et al., 2009; Friston and Steed, 2014; Raaen and Kjellmo, 2015; Kämäräinen et al., 2017; Seo et al., 2017; Feldstein and Ellis, 2020; Pape et al., 2020). Some approaches allow measuring the latency of every frame shown on the screen (Sielhorst et al., 2007; Papadakis et al., 2011; Wu et al., 2013; Billeter et al., 2016; Kijima and Miyajima, 2016b; Becher et al., 2018; Gruen et al., 2020; Stauffert et al., 2020a). Some approaches that measure only the latency of an event can be used for continuous measurement, while others cannot. We distinguish methods in Table 2 depending on the reported usage.
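Cross-correlation yields one latency per run because it aligns the whole recorded real and virtual signals at once. The sketch below assumes that both signals were resampled onto a common, uniform time base and searches integer sample lags only, so its resolution equals one sample period; it is a simplified illustration, not the procedure of Di Luca (2010), and the function name is hypothetical.

```python
import numpy as np


def xcorr_latency_s(real, virtual, sample_rate_hz: float, max_lag_s: float) -> float:
    """Single latency estimate: the lag at which the normalized cross-correlation
    between the real and the (delayed) virtual signal is largest.

    Only non-negative lags are searched, i.e. the virtual signal is assumed
    to trail the real one.
    """
    real = np.asarray(real, dtype=float)
    virtual = np.asarray(virtual, dtype=float)
    real = (real - real.mean()) / real.std()
    virtual = (virtual - virtual.mean()) / virtual.std()
    max_lag = int(round(max_lag_s * sample_rate_hz))
    best_lag, best_score = 0, -np.inf
    for lag in range(max_lag + 1):
        n = len(real) - lag
        # Pair real[i] with virtual[i + lag].
        score = float(np.dot(real[:n], virtual[lag:lag + n])) / n
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate_hz


# Synthetic broadband signal sampled at 1 kHz; the virtual copy trails by 30 samples.
rng = np.random.default_rng(1)
signal = rng.normal(size=3030)
real, virtual = signal[30:], signal[:-30]  # virtual[i + 30] == real[i]
print(xcorr_latency_s(real, virtual, 1000.0, 0.1))  # 0.03
```

The single value summarizes the whole run, so latency jitter within the run is not visible in the result.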

4. Description

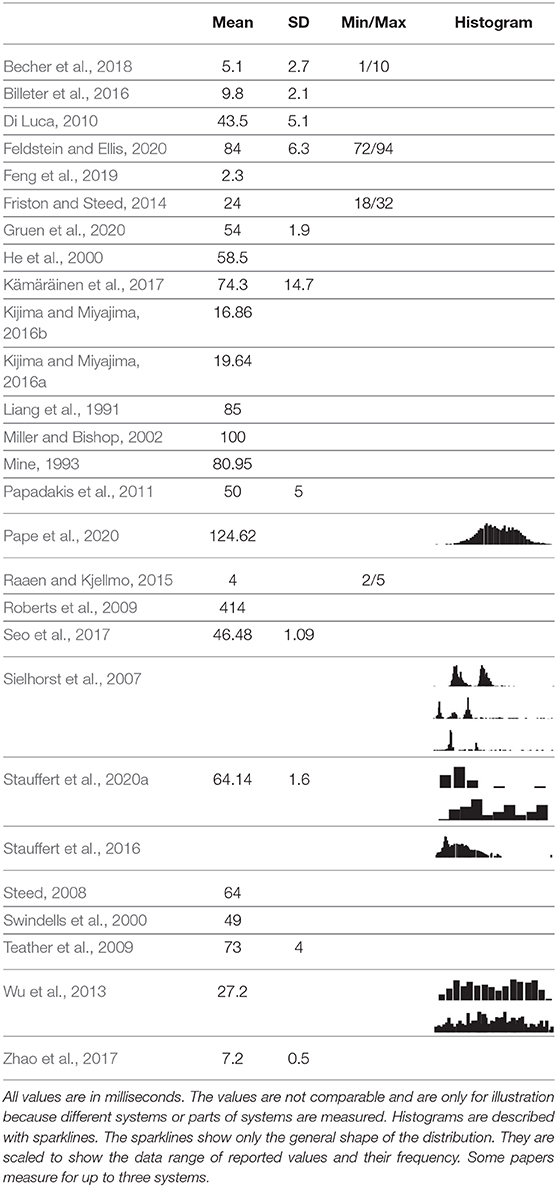

Looking at the approaches to measure latency, we see that latency is reported in different ways. The reported values are often not comparable, as different papers use different systems of varying complexity. A less complex system is expected to show lower and more deterministic latency than a more complex system. Newer hardware often has lower latency but reduced determinism (McKenney, 2008). Some papers report multiple measurements of different systems. Table 3 lists only a subset of the numbers reported in the respective research papers; interested readers are referred to the original publications.

Table 3. Summary of how latency is reported in papers that propose latency measurement approaches.

One observation is that latency is not constant. Latency differs between devices (Mine, 1993), software configurations (Friston and Steed, 2014), and input methods (Kämäräinen et al., 2017). Different usage patterns, such as a change of the movement direction, can influence latency (He et al., 2000). Even small changes in the measurement setup can make a difference: latency measured in the upper part of a screen can be lower than latency measured in the lower part, due to the scan-out sequence (Papadakis et al., 2011). The problem with latency measurements is that they are often performed “under optimized and artificial conditions that may not represent latency conditions in realistic application-oriented scenarios” (Feldstein and Ellis, 2020).
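The size of the scan-out effect can be estimated with back-of-the-envelope arithmetic. The sketch assumes a display that scans rows out from top to bottom at a constant rate over one full refresh period and ignores blanking intervals; actual panels, especially low-persistence HMD displays, may behave differently. The function name is hypothetical.

```python
def scanout_offset_ms(row: int, total_rows: int, refresh_hz: float) -> float:
    """Additional delay until `row` is scanned out, relative to the first row.

    Simplifying assumption: rows are scanned out top to bottom at a constant
    rate spread over the full refresh period (blanking intervals ignored).
    """
    frame_ms = 1000.0 / refresh_hz
    return frame_ms * row / total_rows


# Middle row of a 1080-row screen refreshed at 60 Hz.
print(round(scanout_offset_ms(540, 1080, 60.0), 2))  # 8.33
```

Under these assumptions, a photodiode placed halfway down a 60 Hz screen would report roughly 8 ms more latency than one placed at the top.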

Latency is usually reported at least as a mean value. Standard deviation and minimum/maximum values provide more insight into its variability, and histograms show even more information about which latencies are to be expected. We focus on these reporting methods here as a basis for understanding the connection between latency and cybersickness, since the different ways to describe latency are mirrored in the different latency conditions simulated in the cybersickness experiments of Table 1.
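The reporting options above can be computed from a list of per-measurement latencies with the Python standard library alone. This is a minimal sketch: the bin width and the function name are arbitrary choices, and the example values are made up for illustration.

```python
import statistics
from collections import Counter


def summarize_latency(latencies_ms, bin_ms: float = 1.0) -> dict:
    """Mean, standard deviation, extremes, and a histogram of latency samples."""
    # Map each sample to the lower edge of its histogram bin and count per bin.
    bins = Counter(int(x // bin_ms) * bin_ms for x in latencies_ms)
    return {
        "mean": statistics.fmean(latencies_ms),
        "sd": statistics.stdev(latencies_ms),
        "min": min(latencies_ms),
        "max": max(latencies_ms),
        "histogram": dict(sorted(bins.items())),
    }


summary = summarize_latency([40.2, 41.7, 42.1, 43.9, 100.4])
print(summary["mean"], summary["histogram"])
```

In this made-up example the mean is about 53.7 ms, a value that no single measurement actually had; only the histogram (or the maximum) shows that one outlier near 100 ms drives it.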

The sparklines in Table 3 give an impression of the shape of the latency distributions. Each sparkline is stretched to fill the available space in x and y direction and shows only the x-axis segment that contains data. Sparklines are supposed to give only a general idea of the shape (Tufte, 2001). Stauffert et al. (2016) and Stauffert et al. (2020a) use a logarithmic y axis; the other papers use a linear y axis. Every sparkline shows the measured latency in x direction and its probability in y direction. We exclude the systems of Stauffert et al. (2020a) into which artificial latency was introduced, but include systems with artificially high system load that mimic real-world scenarios.

A key difference between the representations given in publications is whether they include rare outliers. Some researchers show no outliers (Wu et al., 2013; Pape et al., 2020) while others do (Sielhorst et al., 2007; Stauffert et al., 2016, 2020a). Latencies usually cluster around one or multiple values. Wu et al. (2013) system 2 and Stauffert et al. (2020a) system 1 show one cluster. Pape et al. (2020), Sielhorst et al. (2007) systems 1 and 3, Wu et al. (2013) system 1, and Stauffert et al. (2016) show two clusters. Sielhorst et al. (2007) system 2 shows three clusters and Stauffert et al. (2020a) system 2 shows nine clusters, each indicated by higher probabilities surrounded by lower probabilities in the histogram.

The distribution within each cluster appears to follow a normal distribution, though Sielhorst et al. (2007) system 1, Stauffert et al. (2016), and Stauffert et al. (2020a) system 2 show a more skewed distribution with a longer tail toward larger latencies, more closely resembling a gamma distribution. Pape et al. (2020) propose to describe the distribution with a Gaussian mixture model, i.e., a weighted superposition of multiple normal distributions. Stauffert et al. (2018) argue for using an empirical distribution derived from the measurements. Multiple clusters presumably originate from the interplay of two or more parts of the observed system running in decoupled loops. Feldstein and Ellis (2020) list processing stages such as simulation or rendering that contribute to the final latency pattern through their runtime and communication behavior. Antoine et al. (2020) show how latency jitter emerges when the sampling frequencies of the input device and the display differ.
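How decoupled loops produce jitter can be illustrated with a small simulation: when an input device is polled at one rate and the display refreshes at another, the age of the newest input sample at each display refresh varies from frame to frame. The sketch assumes perfectly regular loops and no prediction, and it models only this one stage; the rates (125 Hz input, 60 Hz display) and the function name are example assumptions, not values from Antoine et al. (2020).

```python
import math


def sample_age_at_vsync_ms(input_hz: float, display_hz: float, n_frames: int,
                           input_phase_s: float = 0.0) -> list:
    """Age of the newest input sample at the start of each display frame.

    Simplifying assumptions: both loops run at perfectly constant rates, and
    each frame uses the most recent input sample without any prediction.
    """
    ages = []
    for k in range(n_frames):
        t_vsync = k / display_hz
        # Index of the last input sample taken at or before this vsync.
        n = math.floor((t_vsync - input_phase_s) * input_hz)
        t_sample = input_phase_s + n / input_hz
        ages.append(1000.0 * (t_vsync - t_sample))
    return ages


ages = sample_age_at_vsync_ms(input_hz=125.0, display_hz=60.0, n_frames=60)
print(round(min(ages), 2), round(max(ages), 2))
```

With these rates, the age cycles through twelve values between 0 and about 7.3 ms, so even perfectly regular loops add up to one input period of jitter on top of the remaining pipeline latency.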

Besides the general distribution, there may be temporal patterns. Stauffert et al. (2020a) found recurring latency spikes with regular interarrival times. Wu et al. (2013) found a sinusoidal latency pattern.
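Regularly recurring spikes can be checked by extracting the times of frames whose latency exceeds a threshold and looking at the gaps between them; a nearly constant gap hints at a periodic source. The threshold, the function name, and the synthetic trace below are assumptions for illustration, not the analysis of Stauffert et al. (2020a).

```python
def spike_interarrival_s(frame_times_s, latencies_ms, threshold_ms: float) -> list:
    """Time between consecutive frames whose latency exceeds `threshold_ms`."""
    spike_times = [t for t, lat in zip(frame_times_s, latencies_ms) if lat > threshold_ms]
    return [later - earlier for earlier, later in zip(spike_times, spike_times[1:])]


# Synthetic trace at 100 Hz with a 60 ms spike every 50th frame.
times = [i / 100.0 for i in range(200)]
lat = [60.0 if i % 50 == 25 else 20.0 for i in range(200)]
print([round(d, 3) for d in spike_interarrival_s(times, lat, threshold_ms=40.0)])
# [0.5, 0.5, 0.5]
```

Real traces will show some spread in the gaps, so a histogram of interarrival times is a natural next step.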

5. Discussion

We have shown how latency is measured. The necessary instrumentation ranges from simple observations of the VR equipment (Steed, 2008), to running specific software (Friston and Steed, 2014), to required modifications of the hardware (Stauffert et al., 2020a). The motion may be evoked manually (Wu et al., 2013), with a pendulum (Mine, 1993), or with a turntable (Chang et al., 2016). Latency is observed by one distant observer with one camera (He et al., 2000), by multiple distant observers with synchronized cameras (Gruen et al., 2020), or by close observers that are attached to the moved device and the screen (Di Luca, 2010).

Most researchers who measure latency report a mean latency value, optionally with a standard deviation. Some additionally report minimum and maximum values. More insight is provided by histograms and plots showing the temporal behavior (Wu et al., 2013). Research into whether latency influences cybersickness mostly compares one latency condition with another condition that has a time-invariant increased latency (Frank et al., 1988; DiZio and Lackner, 2000; Meehan et al., 2003; Moss et al., 2011; Kawamura and Kijima, 2016; Caserman et al., 2019; Palmisano et al., 2019; Kim et al., 2020). This mirrors the most often reported measure, the mean latency. Latency jitter, as described in latency histograms, and periodic latency patterns have been shown to contribute to cybersickness as well (St. Pierre et al., 2015; Kinsella et al., 2016; Stauffert et al., 2018). Thus, each way of reporting latency has a counterpart in which latency is simulated and shown to influence cybersickness.

There is more research into latency for VR systems than for AR systems, mainly because VR technology is often easier to handle. Many AR systems are simulated with VR systems until AR technology makes the simulated features possible. While less researched, AR systems show similar problems (Sielhorst et al., 2007).

5.1. Limitations on Latency Comparability

Many factors can influence latency and its predictability. Kijima and Miyajima (2016a) show that HMD prediction and timewarp (van Waveren, 2016) make a difference. Asynchronous timewarp uses a shortcut to update the displayed image after it has been rendered, so a measurement based on head motion yields different values than a measurement based on motion controller movement, which is only updated in the simulation of the virtual world. A sequential scan-out process means that the two eyes receive the information at different points in time, so it can make a difference which screen is used for the measurement (Papadakis et al., 2011). He et al. (2000) found different latencies depending on the movement direction of the tracked object. Manufacturers reduce latency with prediction, which may fail (Gach, 2019).

Latency reporting depends on the observed system. The values in Table 3 are not comparable to one another because some do not measure certain stages of computation or use other hardware. Even though the values are not comparable, they are often reported in a similar fashion with one mean value and a standard deviation.

Spatial jitter can resemble latency jitter, as both offset tracked positions in unexpected ways. By design, some measurement methods cannot distinguish between latency jitter and spatial jitter. 2D pointing performance suffers from spatial jitter (Teather et al., 2009). Spatial jitter is likely to evoke cybersickness as well, and its effects may already be partially captured in the latency jitter studies. That some measurement methods capture related phenomena further complicates the comparison.

5.2. Latency Variability

VR and AR applications require substantial computational power to create virtual environments. The computer systems that provide these experiences are optimized for performance rather than real-time behavior, i.e., guaranteed response times (McKenney, 2008). Some applications, such as robotics and space exploration, require such deterministic runtime behavior of software. Modern operating systems do not provide real-time capabilities, and even the Linux PREEMPT_RT patches cannot provide reliable real-time runtimes (Mayer, 2020). Without a real-time operating system, unforeseeable latency spikes may occur that can harm VR experiences, even if latency was previously acceptable.

Researchers agree that “the delays vary substantially” (Kämäräinen et al., 2017) and often try to “illustrate the variations in latency of real systems” (Friston and Steed, 2014) by reporting more than one mean latency value. As a caveat, the “latency testing on isolated virtual reality systems under optimized and artificial conditions may not represent latency conditions in realistic application-oriented scenarios” (Feldstein and Ellis, 2020). Care must be taken to measure as close to the use case as possible to best represent the expected latencies. The best case would be to measure during exposure.

Rare latency outliers can be much larger than the average latency (Stauffert et al., 2020a). Analyses of networked applications often look only at the 95th, 99th, and 99.9th percentiles (Vulimiri et al., 2013) to estimate response times. Teather et al. (2009) use the 95th percentile to describe their motion-to-photon latency measurements. Stauffert et al. (2018) provide a first study of latency spiking behavior that includes the top one percent of latencies, but more research is needed to understand whether considering only the 95th or 99th percentile is sufficient. Some web applications found it necessary to include the remaining one percent of latencies in their analyses (Hsu, 2015).
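The example below shows why the choice of percentile matters for spiking latency. It uses the nearest-rank definition of a percentile (other definitions interpolate and can give slightly different values) on a synthetic trace in which one percent of frames are spikes; the numbers and the function name are made up for illustration.

```python
import math


def percentile_nearest_rank(values, p: float) -> float:
    """Nearest-rank percentile: smallest sample such that at least p percent
    of all samples are less than or equal to it."""
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# 1000 synthetic frames: 99 % at 20 ms, 1 % spikes at 120 ms.
frames = [20.0] * 990 + [120.0] * 10
for p in (95, 99, 99.9):
    print(p, percentile_nearest_rank(frames, p))
# 95 20.0
# 99 20.0
# 99.9 120.0
```

In this trace, the 99th percentile hides the spikes entirely, and only the 99.9th percentile reveals them.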

Latency jitter can be reduced with prediction (Jung et al., 2000). Incorporating latency jitter into the prediction model improves prediction performance (Tumanov et al., 2007). Prediction, however, introduces its own side effects, such as over-anticipation (Nancel et al., 2016).
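A very simple form of prediction is constant-velocity extrapolation, sketched below for a single position coordinate. This is a deliberately minimal stand-in, not the predictors of Jung et al. (2000) or Tumanov et al. (2007); the function name and the example values are hypothetical.

```python
def predict_constant_velocity(t_prev: float, x_prev: float, t_now: float,
                              x_now: float, latency_s: float) -> float:
    """Extrapolate a 1-D tracked position `latency_s` into the future,
    assuming the velocity between the last two samples stays constant."""
    velocity = (x_now - x_prev) / (t_now - t_prev)
    return x_now + velocity * latency_s


# Steady motion at 2 m/s, compensating 50 ms of latency.
print(round(predict_constant_velocity(0.00, 0.00, 0.01, 0.02, 0.05), 3))  # 0.12
```

If the tracked object reverses direction abruptly, the extrapolation places it beyond the turning point, a simple instance of the over-anticipation mentioned above.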

5.3. Desirable Latency Values

How much latency is tolerable for a good VR experience? Carmack (2013) states that latency should be below 50 ms to feel responsive and recommends less than 20 ms. Attig et al. (2017) review HCI experiments without VR that report no impact on usability when latency is below 100 ms. Humans can detect visual variations at 500 Hz (Davis et al., 2015) and latency below 17 ms (Ellis et al., 1999, 2004; Adelstein et al., 2003). However, Feldstein and Ellis (2020) indicate that perceivable latency does not necessarily cause cybersickness. Jerald (2010) measured a minimum latency threshold of 3.2 ms for one participant, but adds that the exact perceivable latency may depend on the virtual environment.

5.4. Need to Measure Latency

Measuring latency helps to uncover bottlenecks in the employed hardware and software (Swindells et al., 2000; Di Luca, 2010). Without measurement, such problems may never be detected and may compromise an otherwise sound experiment. Many researchers, however, do not report latency. At the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 104 papers were published. Of these, 85 conducted a user study in virtual reality, but only 6 reported the latency of the employed VR system. Although a reported mean latency strengthens trust that the system performed as expected, latency jitter may still have occurred during the experiments and may have impaired individual measurements.

Which approach to use depends on the application and the possibilities of the researchers. A detailed analysis helps to judge the application's performance, but any measurement is better than none. Every researcher should be able to perform manual frame counting (He et al., 2000), as shown by Feldstein and Ellis (2020), who compare the results of different evaluators. Sine fitting (Steed, 2008) reduces imprecision in the video analysis; even though it is more involved than manual frame counting, software can help with the analysis (Stauffert et al., 2020b). Beyond these basic approaches, the choice of how to measure latency depends on the specific hardware and software used. Design your measurement system to fit your VR system, guided by the approaches in Table 2. Research should strive toward measuring latency for every frame shown on the employed screen to assure the validity of observations and to maximize insight. Measuring latency can hint at problems; the latency values then have to be interpreted to find an intervention if needed.
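The arithmetic behind manual frame counting is simple but worth making explicit, because the camera frame rate limits the precision. The sketch assumes a camera with a constant frame rate and an evaluator who counts whole frames between the first frame showing the real event and the first frame showing the on-screen response; the function name and numbers are examples.

```python
def frame_count_latency_ms(frames_between: int, camera_fps: float):
    """Latency estimate from counting camera frames between the real event and
    its on-screen counterpart, together with the frame duration, which is the
    approximate quantization error of the estimate (about one frame)."""
    frame_ms = 1000.0 / camera_fps
    return frames_between * frame_ms, frame_ms


estimate, resolution = frame_count_latency_ms(frames_between=12, camera_fps=240.0)
print(f"{estimate:.1f} ms +/- {resolution:.1f} ms")  # 50.0 ms +/- 4.2 ms
```

A faster camera shrinks the uncertainty proportionally, which is one reason high-speed cameras are preferred for this method.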

6. Conclusion

Latency is one of the characteristics of a computer system that is often discussed as having a major impact on the system's usability. Research shows that larger latencies and latency jitter can negatively affect well-being in the form of cybersickness. Yet few VR studies check and report the latency behavior of their computer systems: only 7% of the papers published at the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) that conducted user studies in virtual reality reported their motion-to-photon latency. If no latency value is reported, latency may introduce unwanted effects that are not obvious to researchers and reviewers.

Latency is not restricted to one value but changes over time and with the usage pattern of the VR system. More elaborate test setups are required to capture these dynamics. Research is only beginning to understand the implications of time-variant latency. Even occasional latency spikes contribute to cybersickness. Measuring latency is important to better understand its influence on cybersickness and to identify cases in which latency might not be the main cause of cybersickness.

Author Contributions

J-PS conducted the literature review, took the lead in writing the manuscript, and collectively discussed and developed concepts to measure and report latency. FN worked on the manuscript and supervised the project. ML conceived the original idea, collectively discussed and developed concepts from their own research on latency, and supervised the project. All authors provided critical feedback and helped shape the research, analysis, and manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adelstein, B. D., Lee, T. G., and Ellis, S. R. (2003). “Head tracking latency in virtual environments: psychophysics and a model,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 47 (Los Angeles, CA: SAGE Publications), 2083–2087. doi: 10.1177/154193120304702001

Ames, S. L., Wolffsohn, J. S., and McBrien, N. A. (2005). The development of a symptom questionnaire for assessing virtual reality viewing using a head-mounted display. Optometry Vis. Sci. 82, 168–176. doi: 10.1097/01.OPX.0000156307.95086.6

Antoine, A., Nancel, M., Ge, E., Zheng, J., Zolghadr, N., and Casiez, G. (2020). “Modeling and reducing spatial jitter caused by asynchronous input and output rates,” in UIST 2020-ACM Symposium on User Interface Software and Technology (New York, NY). doi: 10.1145/3379337.3415833

Arcioni, B., Palmisano, S., Apthorp, D., and Kim, J. (2019). Postural stability predicts the likelihood of cybersickness in active HMD-based virtual reality. Displays 58, 3–11. doi: 10.1016/j.displa.2018.07.001

Attig, C., Rauh, N., Franke, T., and Krems, J. F. (2017). “System latency guidelines then and now–is zero latency really considered necessary?” in Engineering Psychology and Cognitive Ergonomics: Cognition and Design, ed D. Harris, Vol. 10276 (Cham: Springer International Publishing), 3–14. doi: 10.1007/978-3-319-58475-1_1

Becher, A., Angerer, J., and Grauschopf, T. (2018). Novel approach to measure motion-to-photon and mouth-to-ear latency in distributed virtual reality systems. arXiv preprint arXiv:1809.06320.

Billeter, M., Rothlin, G., Wezel, J., Iwai, D., and Grundhofer, A. (2016). “A LED-based IR/RGB end-to-end latency measurement device,” in 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct) (Merida), 184–188. doi: 10.1109/ISMAR-Adjunct.2016.0072

Bles, W., Bos, J. E., De Graaf, B., Groen, E., and Wertheim, A. H. (1998). Motion sickness: only one provocative conflict? Brain Res. Bull. 47, 481–487. doi: 10.1016/S0361-9230(98)00115-4

Bockelman, P., and Lingum, D. (2017). “Factors of cybersickness,” in HCI International 2017-Posters' Extended Abstracts, ed C. Stephanidis, Vol. 714 (Cham: Springer International Publishing), 3–8. doi: 10.1007/978-3-319-58753-0_1

Bos, J. E., de Vries, S. C., van Emmerik, M. L., and Groen, E. L. (2010). The effect of internal and external fields of view on visually induced motion sickness. Appl. Ergon. 41, 516–521. doi: 10.1016/j.apergo.2009.11.007

Carmack, J. (2013). Latency mitigation strategies. Available online at: https://danluu.com/latency-mitigation/

Caserman, P., Martinussen, M., and Gobel, S. (2019). “Effects of end-to-end latency on user experience and performance in immersive virtual reality applications,” in Entertainment Computing and Serious Games, eds E. van der Spek, S. Gobel, E. Y.-L. Do, E. Clua, and J. Baalsrud Hauge, Vol. 11863 (Cham: Springer International Publishing), 57–69. doi: 10.1007/978-3-030-34644-7_5

Chang, C.-M., Hsu, C.-H., Hsu, C.-F., and Chen, K.-T. (2016). Performance Measurements of Virtual Reality Systems: Quantifying the Timing and Positioning Accuracy. New York, NY: ACM Press. doi: 10.1145/2964284.2967303

Chang, E., Kim, H. T., and Yoo, B. (2020). Virtual reality sickness: a review of causes and measurements. Int. J. Hum. Comput. Interact. 36, 1658–1682. doi: 10.1080/10447318.2020.1778351

Chen, Y.-C., Dong, X., Hagstrom, J., and Stoffregen, T. A. (2011). Control of a virtual ambulation influences body movement and motion sickness. BIO Web Conf. 1:00016. doi: 10.1051/bioconf/20110100016

Davis, J., Hsieh, Y.-H., and Lee, H.-C. (2015). Humans perceive flicker artifacts at 500 Hz. Sci. Rep. (London) 5:7861. doi: 10.1038/srep07861

Davis, S., Nesbitt, K., and Nalivaiko, E. (2014). A Systematic Review of Cybersickness. ACM Press. doi: 10.1145/2677758.2677780

Di Luca, M. (2010). New method to measure end-to-end delay of virtual reality. Presence 19, 569–584. doi: 10.1162/pres_a_00023

DiZio, P., and Lackner, J. R. (2000). Motion sickness side effects and aftereffects of immersive virtual environments created with helmet-mounted visual displays. Waltham, MA: Ashton Graybiel Spatial Orientation Lab, Brandeis University, 5.

Ellis, S. R., Mania, K., Adelstein, B. D., and Hill, M. I. (2004). “Generalizeability of latency detection in a variety of virtual environments,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 48 (Los Angeles, CA: SAGE Publications), 2632–2636. doi: 10.1177/154193120404802306

Ellis, S. R., Young, M. J., Adelstein, B. D., and Ehrlich, S. M. (1999). “Discrimination of changes of latency during voluntary hand movement of virtual objects,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 43 (Los Angeles, CA: SAGE Publications), 1182–1186. doi: 10.1177/154193129904302203

Feldstein, I. T., and Ellis, S. R. (2020). A simple, video-based technique for measuring latency in virtual reality or teleoperation. IEEE Trans. Visual. Comput. Graph. doi: 10.1109/TVCG.2020.2980527

Feng, J., Kim, J., Luu, W., and Palmisano, S. (2019). “Method for estimating display lag in the Oculus Rift S and CV1,” in SIGGRAPH Asia 2019 Posters (Brisbane, QLD: ACM), 1–2. doi: 10.1145/3355056.3364590

Frank, L. H., Casali, J. G., and Wierwille, W. W. (1988). Effects of visual display and motion system delays on operator performance and uneasiness in a driving simulator. Hum. Fact. 30, 201–217. doi: 10.1177/001872088803000207

Friston, S., and Steed, A. (2014). Measuring latency in virtual environments. IEEE Trans. Visual. Comput. Graph. 20, 616–625. doi: 10.1109/TVCG.2014.30

Gach, E. (2019). Valve Updates Steam VR Because Beat Saber Players Are Too Fast. Available online at: kotaku.com

Gavgani, M. A., Walker, F. R., Hodgson, D. M., and Nalivaiko, E. (2018). A comparative study of cybersickness during exposure to virtual reality and “classic” motion sickness: are they different? J. Appl. Physiol. 125, 1670–1680. doi: 10.1152/japplphysiol.00338.2018

Gianaros, P. J., and Stern, R. M. (2010). A questionnaire for the assessment of the multiple dimensions of motion sickness. Aviat. Space Environ. Med. 72:115.

Golding, J. F. (1998). Motion sickness susceptibility questionnaire revised and its relationship to other forms of sickness. Brain Res. Bull. 47, 507–516. doi: 10.1016/S0361-9230(98)00091-4

Graybiel, A., Wood, C. D., and Cramer, D. B. (1968). Diagnostic criteria for grading the severity of acute motion sickness. Aerosp. Med. 39, 453–455.

Gruen, R., Ofek, E., Steed, A., Gal, R., Sinclair, M., and Gonzalez-Franco, M. (2020). “Measuring System Visual Latency through Cognitive Latency on Video See-Through AR devices,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 791–799.

He, D., Liu, F., Pape, D., Dawe, G., and Sandin, D. (2000). “Video-based measurement of system latency,” in International Immersive Projection Technology Workshop (Ames, IA).

Hsu, R. (2015). Who Moved my 99th Percentile Latency? Available online at: https://engineering.linkedin.com/performance/who-moved-my-99th-percentile-latency

Jerald, J. J. (2010). “Relating scene-motion thresholds to latency thresholds for head-mounted displays,” in 2009 IEEE Virtual Reality Conference (Lafayette, LA), 211–218. doi: 10.1109/VR.2009.4811025

Jung, J. Y., Adelstein, B. D., and Ellis, S. R. (2000). “Discriminability of prediction artifacts in a time-delayed virtual environment,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 44 (Los Angeles, CA: SAGE Publications), 499–502. doi: 10.1177/154193120004400504

Kämäräinen, T., Siekkinen, M., Ylä-Jääski, A., Zhang, W., and Hui, P. (2017). “Dissecting the end-to-end latency of interactive mobile video applications,” in Proceedings of the 18th International Workshop on Mobile Computing Systems and Applications - HotMobile '17 (Sonoma, CA: ACM Press), 61–66. doi: 10.1145/3032970.3032985

Kawamura, S., and Kijima, R. (2016). “Effect of head mounted display latency on human stability during quiescent standing on one foot,” in 2016 IEEE Virtual Reality (VR) (Greenville, SC) 199–200. doi: 10.1109/VR.2016.7504722

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3, 203–220. doi: 10.1207/s15327108ijap0303_3

Keshavarz, B., and Hecht, H. (2011). Validating an efficient method to quantify motion sickness. Hum. Fact. 53, 415–426. doi: 10.1177/0018720811403736

Kijima, R., and Miyajima, K. (2016a). “Looking into HMD: a method of latency measurement for head mounted display,” in 2016 IEEE Symposium on 3D User Interfaces (3DUI) (Greenville, SC), 249–250. doi: 10.1109/3DUI.2016.7460064

Kijima, R., and Miyajima, K. (2016b). “Measurement of Head Mounted Display's latency in rotation and side effect caused by lag compensation by simultaneous observation–an example result using Oculus Rift DK2,” in 2016 IEEE Virtual Reality (VR) (Greenville, SC), 203–204. doi: 10.1109/VR.2016.7504724

Kim, J., Luu, W., and Palmisano, S. (2020). Multisensory integration and the experience of scene instability, presence and cybersickness in virtual environments. Comput. Hum. Behav. 113:106484. doi: 10.1016/j.chb.2020.106484

Kinsella, A., Mattfeld, R., Muth, E., and Hoover, A. (2016). Frequency, not amplitude, of latency affects subjective sickness in a head-mounted display. Aerosp. Med. Hum. Perform. 87, 604–609. doi: 10.3357/AMHP.4351.2016

Lampton, D. R., Knerr, B. W., Goldberg, S. L., Bliss, J. P., Moshell, J. M., and Blau, B. S. (1994). The virtual environment performance assessment battery (VEPAB): development and evaluation. Presence Teleoperat. Virt. Environ. 3, 145–157. doi: 10.1162/pres.1994.3.2.145

LaViola, J. J. Jr. (2000). A discussion of cybersickness in virtual environments. ACM SIGCHI Bull. 32, 47–56. doi: 10.1145/333329.333344

Liang, J., Shaw, C., and Green, M. (1991). “On temporal-spatial realism in the virtual reality environment,” in Proceedings of the 4th Annual ACM Symposium on User Interface Software and Technology (Hilton Head, SC), 19–25. doi: 10.1145/120782.120784

Mayer, B. (2020). SpaceX: Linux in den Rechnern, Javascript in den Touchscreens [SpaceX: Linux in the computers, JavaScript in the touchscreens]. Available online at: www.golem.de

McCauley, M. E., and Sharkey, T. J. (1992). Cybersickness: perception of self-motion in virtual environments. Presence Teleoperat. Virt. Environ. 1, 311–318. doi: 10.1162/pres.1992.1.3.311

McHugh, N. (2019). Measuring and minimizing cybersickness in virtual reality. Dissertation, University of Canterbury. doi: 10.26021/1316

McKenney, P. E. (2008). “‘Real time’ vs. ‘real fast’: how to choose?” in Ottawa Linux Symposium (Ottawa), 57–65.

Meehan, M., Razzaque, S., Whitton, M., and Brooks, F. (2003). “Effect of latency on presence in stressful virtual environments,” in IEEE Virtual Reality, 2003 (Los Angeles, CA), 141–148.

Miller, D., and Bishop, G. (2002). “Latency meter: a device end-to-end latency of VE systems,” in Stereoscopic Displays and Virtual Reality Systems IX (San Jose, CA), Vol. 4660, 458–464. doi: 10.1117/12.468062

Mine, M. (1993). Characterization of End-to-End Delays in Head-Mounted Display Systems. The University of North Carolina at Chapel Hill.

Moss, J. D., Austin, J., Salley, J., Coats, J., Williams, K., and Muth, E. R. (2011). The effects of display delay on simulator sickness. Displays 32, 159–168. doi: 10.1016/j.displa.2011.05.010

Muth, E. R., Stern, R. M., Thayer, J. F., and Koch, K. L. (1996). Assessment of the multiple dimensions of nausea: the Nausea Profile (NP). J. Psychosom. Res. 40, 511–520. doi: 10.1016/0022-3999(95)00638-9

Nancel, M., Vogel, D., De Araujo, B., Jota, R., and Casiez, G. (2016). “Next-point prediction metrics for perceived spatial errors,” in Proceedings of the 29th Annual Symposium on User Interface Software and Technology (Tokyo), 271–285. doi: 10.1145/2984511.2984590

Oman, C. M. (1990). Motion sickness: a synthesis and evaluation of the sensory conflict theory. Can. J. Physiol. Pharmacol. 68, 294–303. doi: 10.1139/y90-044

Palmisano, S., Szalla, L., and Kim, J. (2019). “Monocular viewing protects against cybersickness produced by head movements in the Oculus Rift,” in 25th ACM Symposium on Virtual Reality Software and Technology (Parramatta, NSW: ACM), 1–2. doi: 10.1145/3359996.3364699

Papadakis, G., Mania, K., and Koutroulis, E. (2011). “A system to measure, control and minimize end-to-end head tracking latency in immersive simulations,” in Proceedings of the 10th International Conference on Virtual Reality Continuum and Its Applications in Industry - VRCAI '11 (Hong Kong: ACM Press), 581. doi: 10.1145/2087756.2087869

Pape, S., Kruger, M., Muller, J., and Kuhlen, T. W. (2020). “Calibratio: a small, low-cost, fully automated motion-to-photon measurement device,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (Atlanta, GA), 234–237. doi: 10.1109/VRW50115.2020.00050

Raaen, K., and Kjellmo, I. (2015). “Measuring latency in virtual reality systems,” in Entertainment Computing - ICEC 2015, eds K. Chorianopoulos, M. Divitini, J. Baalsrud Hauge, L. Jaccheri, and R. Malaka, Vol. 9353 (Cham: Springer International Publishing), 457–462.

Rebenitsch, L., and Owen, C. (2016). Review on cybersickness in applications and visual displays. Virt. Real. 20, 101–125. doi: 10.1007/s10055-016-0285-9

Riccio, G. E., and Stoffregen, T. A. (1991). An ecological theory of motion sickness and postural instability. Ecol. Psychol. 3, 195–240. doi: 10.1207/s15326969eco0303_2

Roberts, D., Duckworth, T., Moore, C., Wolff, R., and O'Hare, J. (2009). “Comparing the end to end latency of an immersive collaborative environment and a video conference,” in 2009 13th IEEE/ACM International Symposium on Distributed Simulation and Real Time Applications (Singapore: IEEE), 89–94. doi: 10.1109/DS-RT.2009.43

Seo, M.-W., Choi, S.-W., Lee, S.-L., Oh, E.-Y., Baek, J.-S., and Kang, S.-J. (2017). Photosensor-based latency measurement system for head-mounted displays. Sensors 17:1112. doi: 10.3390/s17051112

Sielhorst, T., Sa, W., Khamene, A., Sauer, F., and Navab, N. (2007). “Measurement of absolute latency for video see through augmented reality,” in 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality (Nara), 215–220. doi: 10.1109/ISMAR.2007.4538850

Stanney, K. M., Kennedy, R. S., and Drexler, J. M. (1997). Cybersickness is not simulator sickness. Proc. Hum. Fact. Ergon. Soc. Annu. Meet. 41, 1138–1142. doi: 10.1177/107118139704100292

Stauffert, J.-P., Niebling, F., and Latoschik, M. E. (2016). Towards Comparable Evaluation Methods and Measures for Timing Behavior of Virtual Reality Systems. Munich: ACM Press. doi: 10.1145/2993369.2993402

Stauffert, J.-P., Niebling, F., and Latoschik, M. E. (2018). “Effects of latency jitter on simulator sickness in a search task,” in 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Reutlingen: IEEE), 121–127. doi: 10.1109/VR.2018.8446195

Stauffert, J.-P., Niebling, F., and Latoschik, M. E. (2020a). “Simultaneous run-time measurement of motion-to-photon latency and latency jitter,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Atlanta, GA: IEEE), 636–644. doi: 10.1109/VR46266.2020.1581339481249

Stauffert, J.-P., Niebling, F., Lugrin, J.-L., and Latoschik, M. E. (2020b). “Guided sine fitting for latency estimation in virtual reality,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 707–708. doi: 10.1109/VRW50115.2020.00204

Steed, A. (2008). “A simple method for estimating the latency of interactive, real-time graphics simulations,” in Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology, VRST '08 (New York, NY: ACM), 123–129. doi: 10.1145/1450579.1450606

Stone III, W. B. (2017). Psychometric evaluation of the Simulator Sickness Questionnaire as a measure of cybersickness (Doctor of Philosophy). Iowa State University, Digital Repository, Ames, IA.

St. Pierre, M. E., Banerjee, S., Hoover, A. W., and Muth, E. R. (2015). The effects of 0.2 Hz varying latency with 20–100 ms varying amplitude on simulator sickness in a helmet mounted display. Displays 36, 1–8. doi: 10.1016/j.displa.2014.10.005

Swindells, C., Dill, J. C., and Booth, K. S. (2000). “System lag tests for augmented and virtual environments,” in Proceedings of the 13th Annual ACM Symposium on User Interface Software and Technology (San Diego, CA: ACM), 161–170. doi: 10.1145/354401.354444

Teather, R. J., Pavlovych, A., Stuerzlinger, W., and MacKenzie, I. S. (2009). “Effects of tracking technology, latency, and spatial jitter on object movement,” in 2009 IEEE Symposium on 3D User Interfaces (Lafayette, LA), 43–50. doi: 10.1109/3DUI.2009.4811204

Treisman, M. (1977). Motion sickness: an evolutionary hypothesis. Science 197, 493–495. doi: 10.1126/science.301659

Tufte, E. R. (2001). The Visual Display of Quantitative Information, 2nd Edn. Cheshire, CT: Graphics Press.

Tumanov, A., Allison, R., and Stuerzlinger, W. (2007). “Variability-aware latency amelioration in distributed environments,” in 2007 IEEE Virtual Reality Conference (Charlotte, NC), 123–130. doi: 10.1109/VR.2007.352472

van Waveren, J. M. P. (2016). “The asynchronous time warp for virtual reality on consumer hardware,” in Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology - VRST '16 (Munich: ACM Press), 37–46. doi: 10.1145/2993369.2993375

Virre, E. (1996). Virtual reality and the vestibular apparatus. IEEE Eng. Med. Biol. Mag. 15, 41–43. doi: 10.1109/51.486717

Vulimiri, A., Godfrey, P. B., Mittal, R., Sherry, J., Ratnasamy, S., and Shenker, S. (2013). Low latency via redundancy. arXiv preprint arXiv:1306.3707. doi: 10.1145/2535372.2535392

Wu, W., Dong, Y., and Hoover, A. (2013). Measuring digital system latency from sensing to actuation at continuous 1-ms resolution. Presence Teleoperat. Virt. Environ. 22, 20–35. doi: 10.1162/PRES_a_00131

Keywords: virtual reality, latency, cybersickness, jitter, simulator sickness

Citation: Stauffert J-P, Niebling F and Latoschik ME (2020) Latency and Cybersickness: Impact, Causes, and Measures. A Review. Front. Virtual Real. 1:582204. doi: 10.3389/frvir.2020.582204

Received: 10 July 2020; Accepted: 30 October 2020;

Published: 26 November 2020.

Edited by:

Kay Marie Stanney, Design Interactive, United States

Reviewed by:

Pierre Bourdin, Open University of Catalonia, Spain

Wolfgang Stuerzlinger, Simon Fraser University, Canada

Juno Kim, University of New South Wales, Australia

Copyright © 2020 Stauffert, Niebling and Latoschik. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jan-Philipp Stauffert, jan-philipp.stauffert@uni-wuerzburg.de
