- 1Department of Internal Medicine, Leiden University Medical Center, Leiden, Netherlands

- 2Department of Anatomy & Embryology, Leiden University Medical Center, Leiden, Netherlands

- 3Center for Innovation in Medical Education, Leiden University Medical Center, Leiden, Netherlands

- 4Centre for Innovation, Leiden University, The Hague, Netherlands

- 5Department of Pulmonology, Leiden University Medical Center, Leiden, Netherlands

- 6Department of Nephrology, Leiden University Medical Center, Leiden, Netherlands

Introduction: Augmented Reality is a technique that enriches the real-life environment with 3D visuals and audio. It offers possibilities to expose medical students to a variety of clinical cases. It provides unique opportunities for active and collaborative learning in an authentic but safe environment. We developed an Augmented Reality application on the clinical presentation of shortness of breath (dyspnea), grounded on a theoretical instructional design model.

Methods: A team of various stakeholders (including medical teachers and students) was formed to design the application and corresponding small group learning session, grounded on principles of instruction as described by Merrill. Evaluation was performed by an explorative questionnaire, consisting of open and closed questions (Likert scales), covering user experience, content and physical discomfort.

Results: Multiple interactive cases of dyspnea were designed. The application plays back audio samples of abnormal lung sounds corresponding to a specific clinical case of dyspnea and displays a 3D model of the related pulmonary pathologies. It was implemented in the medical curriculum as an obligatory small group learning session scheduled preceding clinical clerkships. Prior knowledge was activated before the learning session. New knowledge was acquired with the application by solving an authentic problem based on a real patient case. In total 110 students participated in the study and 104 completed the questionnaire. 85% of the students indicated that the virtually auscultated lung sounds felt natural. 90% reported that the augmented reality experience helped them to better understand the clinical case. The majority of the students (74%) indicated that the experience improved their insight into the portrayed illness. 94.2% reported limited or no physical discomfort.

Discussion: An Augmented Reality application on the presentation of dyspnea was successfully designed and implemented in the medical curriculum. Students confirm the value of the application in terms of content and usability. The extension of this Augmented Reality application for education of other healthcare professionals is currently under consideration.

Introduction

Medical students need exposure to a wide variety of authentic clinical cases to prepare for clinical activities. Until recently, the preparation of medical students for the clinical clerkships at Leiden University Medical Center (LUMC) comprised mainly e-learning modules, small group learning, physical examination of peer students, interpretation of 2D radiology images and practicing with simulated patients. However, authenticity in these learning environments is often limited. Learning activities with simulated patients cannot expose students to abnormalities on physical examination such as pathological lung sounds, which can be important clues in the diagnostic process (Cleland et al., 2009). E-learning can offer virtual patients with ample examples of authentic clinical cases, but has limitations in practicing communication (Edelbring et al., 2011) and psychomotor skills, such as auscultation. However, these competencies will also be necessary once the students encounter real patients. Finally, neither simulated patients nor e-learning can demonstrate the relationship between auscultated lung sounds and 3D anatomy very well, which is one of the major challenges for students.

A possible technology to help students bridge the gap between virtual or simulated patients and clinical practice is Augmented Reality (AR). AR is an innovative digital technique that enriches the real-life environment by adding computer generated perceptual information, such as 3D visuals, audio and/or (haptic) feedback. It can promote active learning by literally making students walk around a 3D hologram and stimulating relevant motion patterns, and it is suitable for collaborative learning by synchronizing experiences among users (Kaufmann and Schmalstieg, 2003; Barmaki et al., 2019; Bogomolova et al., 2019, 2020). In order to add virtual content to the real-life environment, various hardware devices can be used for AR ranging from handheld devices such as smart phones or tablets to head-mounted transparent displays (Kamphuis et al., 2014). Examples of the latter are Google Glass® and Microsoft HoloLens®. An advantage of AR applications with a head-mounted display is that, in contrast to virtual reality (VR) headsets, learners can continue interacting with each other and their teachers because of the transparency of the AR headset. In this way, an authentic and safe learning environment for small group learning can be created.

Not surprisingly, the field of AR in medicine is growing rapidly (Eckert et al., 2019). There are many publications on this topic, and it should be noted that one should be careful interpreting literature data as AR technology changed markedly over time. Many of the published AR applications are on the topics of surgery and radiology, and described prototypes that needed further development (Eckert et al., 2019). When zooming in on AR specifically for medical education, most studies focus on surgical or anatomy education (Zhu et al., 2014; Sheik-Ali et al., 2019; Tang et al., 2020; Uruthiralingam and Rea, 2020). Studies on other medical fields are underrepresented (Nischelwitzer et al., 2007; Rosenbaum et al., 2007; Nilsson and Johansson, 2008; Pretto et al., 2009; Sakellariou et al., 2009; Lamounier et al., 2010; Rasimah et al., 2011; Kotranza et al., 2012; von Jan et al., 2012; Gerup et al., 2020). Interestingly, only a few AR applications incorporated a collaborative aspect (e.g., Kaufmann and Schmalstieg, 2003; Barmaki et al., 2019; Bogomolova et al., 2019), perhaps because of technical reasons, such as a lack of widely available network coverage or the computational ability to allow for shared or multiuser applications. In many studies, both learners and experts responded positively toward AR and the possibility of implementing AR in training programs. The current quality and range of AR research in medical education may not yet be sufficient to recommend the adoption of AR technologies into medical curricula (Tang et al., 2020). There are relatively few studies focusing on AR with head-mounted displays, and specifically in the field of lung diseases and auscultation, there are no AR applications described yet.

In this study we designed and developed an AR application for head-mounted display in the field of pulmonary medicine, to complement the diagnostic process on the presentation of dyspnea (shortness of breath). This application provides users with a synchronized experience comprising a virtual stethoscope and positional lung sounds, and visualizes relevant anatomy and pathology in an interactive setup. It is grounded on a theoretical instructional design model based on the First principles of instruction as described by Merrill (2002). The first implementation in our medical curriculum was evaluated by an explorative questionnaire amongst learners.

Materials and Methods

Learning Experience Design and Design Process of the AR Application

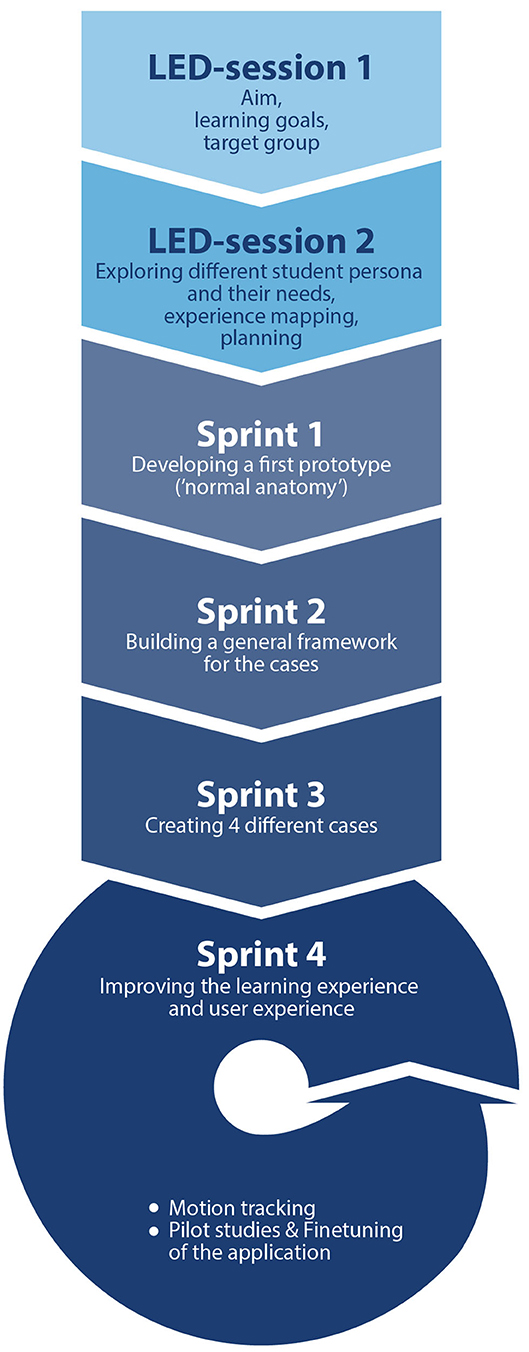

In November 2017, a collaboration between Leiden University Medical Center (LUMC) and Leiden University's Center for Innovation was formed in order to co-create the AR application and small group learning sessions with this application. The team consisted of various stakeholders: clinical teachers, anatomy experts, medical students, developers, project managers, educational experts and a medical illustrator. The total design process had a duration of ~22 months and is visualized in Figure 1. It started with a so-called “Learning Experience Design (LED) session” which involved all stakeholders. During this session, the common goal was set, the target group was defined and the project process was outlined. The project was carried out using the SCRUM method (Schwaber and Beedle, 2008) working in short timeframes (sprints). Once every 2 months the project team held sprint meetings in which the progress was discussed and project activities were prioritized. This design process resulted in several functional requirements. The application should offer:

• An immersive authentic AR experience for use with a head-mounted display (the HoloLens®)

• A 3D thorax model that is anatomically correct, and several 3D thorax models with authentic pulmonary pathologies

• The possibility to auscultate lung sounds within the AR application corresponding to a clinical case in an authentic manner, and practice as long and as frequently as needed

• The possibility to visualize site-specific pathologies corresponding to localized lung sounds

• The possibility of augmentation of a real-life simulated patient with the digital models, so communication skills could be practiced in an authentic manner

• A synchronized experience by multiple users.

It was decided to use a modular design for the AR application, so extra cases could be easily added in the future.

The first LED session was followed by a second LED session and multiple sprints with cycles of design, evaluation and redesign (Figure 1). In the second LED session, the team focused on learning experience mapping. Specifically, distinct learner personas were created and the learner journey was outlined. A design goal was that the AR application would optimally serve the different student personas. One student persona was a motivated learner with an affinity for ICT; another was a less motivated learner who required more assistance with ICT. The learner journey was translated into a series of modular steps in the learning process and within the AR application, which were further explored and developed in the sprints. In the first sprint, a first prototype of the 3D model was created and evaluated: a 3D thorax with normal anatomy and the possibility to listen to lung sounds. After testing the prototype with multiple team members (students and teachers), the feedback was used as input for the second sprint. The main suggestions for improvement were a feature to move the model up or down (the default position was too low and could not yet be adjusted) and a longer or resizable virtual stethoscope (with a relatively short stethoscope, users had to come quite close to the model, which limited their overview of the model because of the decreased field of view). The second sprint resulted in a prototype with a general framework of a user interface with the possibility to adjust the position and the size of the model. Again, the prototype was evaluated by team members (both students and teachers). Their feedback included that the audio files did not start and stop in a natural way, which was fixed in a later sprint. In the third sprint, the content for four clinical cases was created and incorporated in the application. 
The 3D models corresponding to the cases were designed by a medical illustrator based on radiology images (mainly CT scans) of patients with corresponding diagnoses. Previously recorded audio samples of lung sounds of actual patients were used, and for each case the spatial positioning of the appropriate lung sounds was programmed to correspond to the location(s) of pulmonary abnormalities. The fourth sprint comprised further finetuning of the application to improve both learning and user experience and refining a system for motion tracking of the virtual stethoscope. After the fifth sprint, pilot studies with medical students were performed and evaluated. The prototype was tested by several medical students that were not involved in designing the application. The students were observed by several team members whilst using the application, and were asked to provide their feedback. One suggestion for improvement was providing feedback whether they listened on an appropriate or inappropriate location (e.g., it is not possible to listen to lung sounds if you place the virtual stethoscope on a bony surface such as the scapula). This feedback resulted in an extra feature: the virtual stethoscope changes in color from blue to orange if a student listens on an inappropriate location.

Instructional Design of the Small Group Learning Session

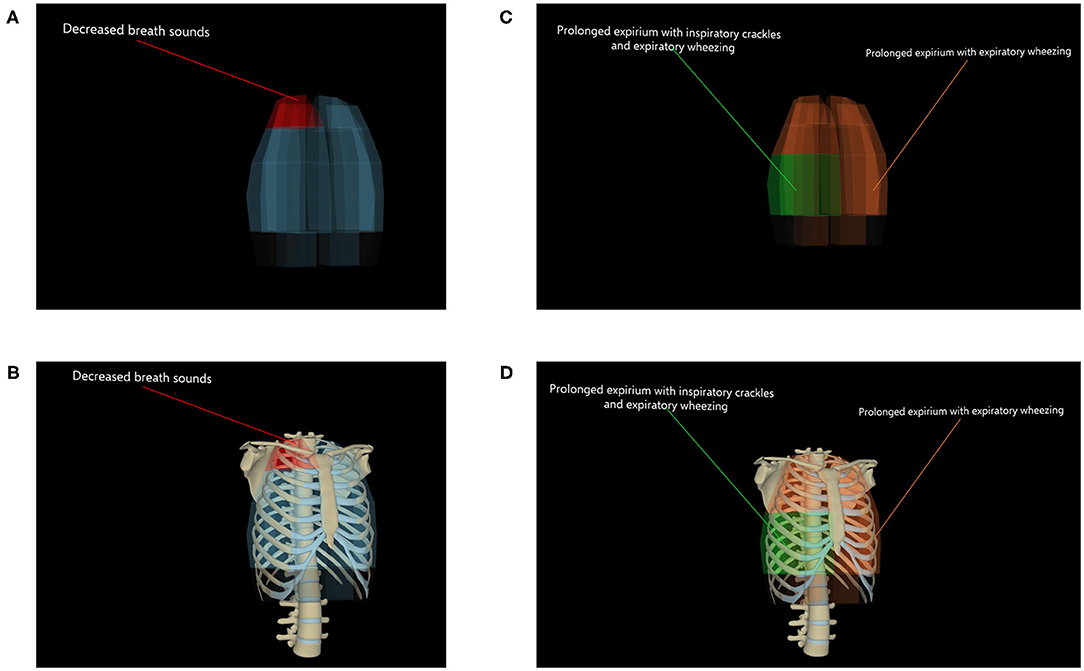

Merrill's First Principles of Instruction were used as theoretical framework for designing the small group learning session (Merrill, 2002). The incorporation of these five instructional design principles is shown in Table 1. The core principle is to engage learners in relevant, real-world problems. The presenting complaint shortness of breath was selected, because interns will encounter many patients with this symptom at the Emergency Department and Internal Medicine ward. The other principles of Merrill relate to consecutive phases of instruction. The second principle is that activation of previous knowledge or experience promotes learning. In order to stimulate this, students are provided with online assignments before entering the learning session, to recall knowledge relating to diagnosing patients with dyspnea. Because a simple recall of knowledge is rarely effective, the teacher refers to these assignments at the start of the session and allows opportunities for discussion. The third principle is that learning is promoted by demonstrating what needs to be learned. In order to do this, the teacher will demonstrate the consecutive steps of the diagnostic process and problem-solving strategies. The fourth principle is to promote learning by solving problems (“the application phase”). The vast part of the small group learning session is dedicated to this phase in which students collaboratively solve a patient case, including the parts in which the AR application is used. The fifth and final principle is the integration of knowledge. This is a natural step, because students will have ample opportunities to utilize the obtained knowledge and skills on new (real-world) patient cases during the Internal Medicine clerkship, which in our hospital starts within 2 weeks after the learning session. 
Because the students will be regularly assessed in the clinical clerkships, the subject of diagnosing cases with dyspnea was not formally assessed during this phase of the course.

Table 1. Use of Merrill's First Principles of Instruction in the educational process with the AR application.

Evaluation of the Implementation of the AR Application

The application and learning session have been developed for students in the preparative course of the Internal Medicine clerkship. In our curriculum, this clerkship is the first in a 3-year period of clinical rotations. The first experiences of students with the AR application in the small group learning session were obtained by a voluntary questionnaire. This explorative questionnaire, provided as Supplementary Material, covered the following topics:

• General information

• Use of AR application

• Content of the AR application

• Personal goal of the students

• Physical discomfort.

All medical students at LUMC participating in the obligatory small group learning sessions during the preparative course of the Internal Medicine clerkship were included in the study. Students that participated previously (e.g., students that had to repeat the clerkship including the preparative course) were excluded.

It was planned to study 10 groups of ~10–12 students over a period of 5 months to reach at least 45 responses, a representative 15% sample of the full cohort of 300 students per year. If 45 responses could not be reached after 5 months, the study period would be extended until 45 responses had been reached.
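The target of 45 responses follows directly from the cohort size and sampling fraction stated above. A minimal sketch of that arithmetic is given here; the variable names are illustrative, and the "minimum response rate" line is our own derived reading of the planning figures rather than a number reported in the study.

```python
# Planning figures taken from the text above.
cohort_per_year = 300        # full cohort of students per year
sample_percent = 15          # intended sample, as a percentage of the cohort
planned_groups = 10          # number of groups to be studied
group_size_range = (10, 12)  # approximate students per group

# Integer arithmetic avoids floating-point rounding: 15% of 300 students.
target_responses = cohort_per_year * sample_percent // 100

# Number of students expected to be invited (lower and upper bound).
planned_students = tuple(planned_groups * n for n in group_size_range)

# Derived (not reported): response rate in percent needed to reach the target.
min_response_rate = tuple(
    round(100 * target_responses / n, 1) for n in planned_students
)

print(target_responses, planned_students, min_response_rate)
```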

Participants were invited by a team member that was not involved in assessing or grading them to fill out a paper questionnaire, immediately after the small group learning session. All the participants received a letter with detailed information about the study objectives and data safety, and signed an informed consent form. After collecting the questionnaires, the data were pseudonymized and provided as such to the research team. The data have been stored for further research purposes according to the “Nederlandse Gedragscode Wetenschapsbeoefening” of the VSNU (The Association of Universities The Netherlands). The study protocol has been reviewed and approved by the Educational Research Review Board of the Leiden University Medical Center (OEC/ERRB/20191008/1).

The answers to the evaluative questions with a 4- or 5-point Likert scale were quantitatively analyzed. The answers to open ended questions were qualitatively analyzed by a thematic analysis. The answers were coded in vivo, overarching themes were selected in an inductive approach, and the quotations were assigned to overarching themes by the principal investigator (Miles et al., 2014). Illustrative quotations per theme were selected. The questions related to the type and extent of physical discomfort that students experienced during the use of the AR application were descriptively analyzed.

Results

Short Description of the AR Application

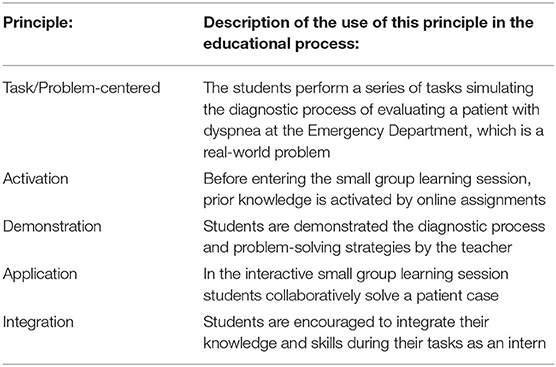

The design process resulted in an interactive multiuser AR application: AugMedicine: Lung cases [see online videos: Demonstration of AugMedicine: Lung Cases (2020); HoloLens App AugMedicine: Lung Cases (short) (2020)]. The application comprises a multilayer 3D anatomical model of a human thorax combined with 3D spatialized audio. When entering a session, users (either student or teacher) are assigned one of two possible roles: host or client. The host can control the application and select different cases of dyspnea (COPD + pneumonia, pulmonary embolism, pneumothorax or pleural effusion) or a 3D model with normal anatomy. The models are textured surface models, which limits the amount of (synchronized) data and improves performance. Since no cross sections are implemented in this application, solid models would have no added value. The device uses a coordinate system to place the model into the room, and the users can walk around to change their perspective on the 3D model. Users can manipulate a virtual stethoscope to listen to the lung sounds at different locations and from different angles, exactly as they would do when examining a real patient. The sounds are played back by the AR glasses and correspond to the selected case (see Figures 2A,B). The host can display the 3D model on a real person's body (e.g., a simulated patient) so the auscultation process becomes more authentic (Figure 2A). The host can also choose which anatomical layers are visible to the learners (bones, diaphragm, heart and blood vessels, trachea, lungs, pleurae; see Figure 2B). The host can eventually show a 3D visual representation of the actual diagnosis.

Figure 2. Impressions of the use of the AR application. (A) Auscultation with the AR application on a standardized patient. (B) User interface of the AR application.

Technology Used for Developing the AR Application

Development Framework

The application was developed using the Unity game engine, with C# as scripting language. The open source Mixed Reality Toolkit was used for basic features, such as rotating, moving, resizing the 3D model, and basic user interface (UI) components such as buttons, sliders, input handlers, screen reticle, shaders. All other functionalities and app logic were custom developed for this application.

Multi-User Synchronizing

Synchronizing the application across multiple HoloLens® devices was done through connecting the devices over a wireless local area network (WLAN). To achieve this, custom networking functionality was developed for the application, using the UNet networking High Level Application Programming Interface (HLAPI) from Unity. Upon starting a group session, one of the users chooses to start the application in “host mode.” This starts a new networked session in which this user is both host and server. Once the other users start the application, they are automatically added to the session as “clients.” The HoloLens® running in “host mode” displays, in addition to the virtual torso, a user interface that can be used to control the session. The user interface includes the following options: changing the case, selecting which elements of the 3D model are visible, changing stethoscope length, and resizing, moving and rotating the 3D model. Only the host can perform these actions. Changes in the application are automatically synchronized with the clients.
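The host and client roles described above can be illustrated with a small in-memory sketch. This is not the UNet HLAPI code used in the application (which is written in C#) and it involves no real networking; the class names, fields and default values are hypothetical and only show the idea that the host owns the session state and pushes every change to all connected clients.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class SessionState:
    """Settings that the host controls (defaults are illustrative only)."""
    case: str = "normal anatomy"
    visible_layers: Tuple[str, ...] = ("bones", "lungs")
    stethoscope_length_cm: float = 10.0
    model_scale: float = 1.0


class Client:
    """A headset that only displays the state it receives from the host."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = SessionState()

    def receive(self, state: SessionState) -> None:
        self.state = state

    def request_change(self, **changes) -> None:
        # Clients cannot alter the session; only the host can.
        raise PermissionError("only the host can change the session")


class Host:
    """The headset in 'host mode': owns the state and acts as server."""

    def __init__(self) -> None:
        self.state = SessionState()
        self.clients: List[Client] = []

    def join(self, client: Client) -> None:
        # A newly joined client immediately receives the current state.
        self.clients.append(client)
        client.receive(self.state)

    def change(self, **changes) -> None:
        # Apply the change locally, then synchronize it to every client.
        self.state = replace(self.state, **changes)
        for client in self.clients:
            client.receive(self.state)


host = Host()
student = Client("student-1")
host.join(student)
host.change(case="pneumothorax", visible_layers=("bones", "lungs", "pleurae"))
print(student.state.case)
```

Keeping the state immutable and replacing it as a whole makes it easy to see that every client ends up with exactly the host's view after each change.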

Aligned Spatial Positioning

The aim was to achieve a multi-user experience that enables users to communicate about the virtual content in a way that is similar to communicating about a real world object, e.g., a user can direct the attention of other users toward a specific part of the 3D model by simply pointing a finger at it. To enable such an experience, the virtual content must be displayed at the same position and orientation relative to the real-world space for all the users. This was achieved by implementing image recognition functionality in the application through the Vuforia® software development kit (SDK), and using it to enable the HoloLens® to recognize the position and orientation of a printed visual marker. Before the start of a session, a printed marker (a QR code) is placed on a non-moving surface, such as a table. During startup of the application, the user is asked to aim the HoloLens®' camera at the marker by looking at it. Once the marker is recognized, the application sets a spatial anchor on the position of the marker and displays the virtual content on top of the marker for the rest of the session. Since all users make use of the same printed marker, the virtual content is aligned for all users. Over time, it may happen that a user's model loses alignment. A button was incorporated in the UI that enables the host of the session to trigger a realignment request for users. This prompts them with a message to rescan the printed marker for realigning the virtual content to the marker's position and orientation.
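Why a single shared marker is enough to align the content for everyone can be shown with a small geometric sketch. It is a simplification under stated assumptions: poses are 2D (position plus heading) instead of full 3D, the marker detection itself (done by Vuforia® in the application) is replaced by a computed pose, and all names and numbers are hypothetical. Each device has its own coordinate frame, yet placing the model at a fixed offset from the marker puts it at the same real-world spot for all devices.

```python
import math
from typing import NamedTuple, Tuple

Point = Tuple[float, float]


class Pose(NamedTuple):
    """Rigid 2D pose: translation (x, y) and heading theta in radians."""
    x: float
    y: float
    theta: float


def apply(pose: Pose, point: Point) -> Point:
    """Map a point from the pose's local frame into its parent frame."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    px, py = point
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)


def inverse(pose: Pose) -> Pose:
    """Inverse of p -> R p + t, which is p -> R^T p - R^T t."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return Pose(-(c * pose.x + s * pose.y), s * pose.x - c * pose.y, -pose.theta)


def compose(a: Pose, b: Pose) -> Pose:
    """Pose equivalent to applying b first and then a."""
    x, y = apply(a, (b.x, b.y))
    return Pose(x, y, a.theta + b.theta)


# Ground truth in a common "room" frame (unknown to the devices themselves).
marker_in_room = Pose(2.0, 1.0, 0.3)
model_offset_from_marker: Point = (0.0, 0.5)  # hypothetical placement offset


def room_position_seen_by(device_in_room: Pose) -> Point:
    """Where the model ends up in the room when this device anchors it."""
    # What the device's camera would report: the marker in device coordinates.
    marker_in_device = compose(inverse(device_in_room), marker_in_room)
    # The device anchors the model at a fixed offset from the marker.
    model_in_device = apply(marker_in_device, model_offset_from_marker)
    # Convert back to room coordinates to compare across devices.
    return apply(device_in_room, model_in_device)


device_a = Pose(0.0, 0.0, 0.0)
device_b = Pose(5.0, -1.0, math.pi / 2)  # different origin and orientation
print(room_position_seen_by(device_a), room_position_seen_by(device_b))
```

The same reasoning explains the realignment button: rescanning the marker simply recomputes the marker pose in the device's own frame after drift.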

Virtual Stethoscope

Our aim was to simulate working with a stethoscope as accurately as possible. Therefore, the application enables users to control the position and orientation of the virtual stethoscope through operating a real stethoscope (or any other physical object). This requires 6-DOF tracking of the real stethoscope, which was achieved through making use of the image recognition functionality of the Vuforia® SDK. We created a printable custom “multi-target” cube, which is a foldable 3D object that can be recognized by the Vuforia® engine from all angles. Multiple shapes and sizes of the multi-target were tested: cube-, cylinder- and cone-shaped. A cube of 6 cm by 6 cm provided the best balance between tracking stability and size. Using tape, the cube can be attached to a real stethoscope (or any other object). Upon holding the stethoscope with attached cube in front of the HoloLens®' cameras, it is recognized and a virtual stethoscope is rendered over it. The tip of the virtual stethoscope consists of a 3D sphere with a collider, which is used to detect collisions with the virtual torso. The distance between the tip of the virtual stethoscope and the cube target, the “stethoscope length,” can be set from within the application. When set to a short length, users have to place the virtual stethoscope directly on the outside of the virtual torso to hear sounds, like in a real-world setting. Setting a longer “stethoscope length” allows users to also aim the virtual stethoscope at the virtual torso from a distance to auscultate. This was implemented for a better user experience. When working with larger groups, it enables multiple people to listen to the sounds while keeping personal distance. Given the HoloLens®' Field of View limitations, a longer stethoscope length also allows users to see the full torso when listening to the lung sounds.
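The relation between the tracked cube, the adjustable “stethoscope length” and the position of the tip can be sketched as simple vector arithmetic. The function below is an illustrative assumption about how such a tip position could be computed (the tip is placed along the direction in which the cube points); it is not taken from the application's source code, and the function and parameter names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def tip_position(cube_position: Vec3,
                 pointing_direction: Vec3,
                 stethoscope_length: float) -> Vec3:
    """Place the tip at `stethoscope_length` from the cube along its direction.

    `pointing_direction` does not need to be normalized; it is scaled to
    unit length here so that the length setting is in the same units as
    the cube position (e.g., meters).
    """
    norm = math.sqrt(sum(c * c for c in pointing_direction))
    if norm == 0.0:
        raise ValueError("pointing_direction must be a non-zero vector")
    return tuple(
        p + stethoscope_length * d / norm
        for p, d in zip(cube_position, pointing_direction)
    )


# A short setting keeps the tip close to the hand; a long setting lets the
# user reach the torso from further away with the same hand position.
short_tip = tip_position((0.0, 1.2, 0.0), (0.0, 0.0, 2.0), 0.1)
long_tip = tip_position((0.0, 1.2, 0.0), (0.0, 0.0, 2.0), 0.8)
print(short_tip, long_tip)
```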

Lung Sounds

A custom method was developed to simulate the positioning of sounds for an area of the torso that roughly corresponds with the torso area on which a sound can be heard in reality. This method makes use of audio recordings that are played back in mono. No audio spatialization effects are used, since a real stethoscope also simply provides mono audio. However, like in a real-world situation, the type of sound heard is determined by the position of the stethoscope. The application simulates this by using the location on which the virtual stethoscope collides with the virtual torso to control the type of audio sample that is played back and its volume. This is achieved in the following way.

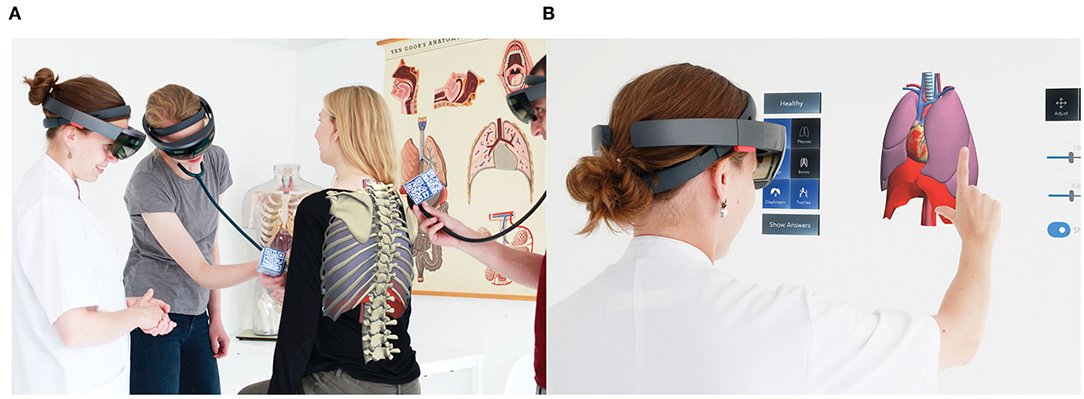

An invisible 3D model that follows the general outline of the visible 3D torso (functioning as a sort of shell) was used to detect collisions between the torso and the virtual stethoscope. We call this invisible model “Audio Collision Model” (ACM). The ACM is divided into 50 segments that are used to distinguish between different collision areas of the model (see Figure 3). Each segment has its own “collider” to detect collisions with the virtual stethoscope. A custom script was written to map each segment to a sound file from our database of lung sounds. A unique mapping was created for each of the clinical cases in the app. A mapping was made by first identifying, for each clinical case, the torso areas on which a collision with the virtual stethoscope should trigger playback of a particular sound. Then, the segments of the ACM covering that part of the torso area were selected and mapped to the corresponding audio file. This approach allowed us to reuse one ACM for each of the clinical cases in the application. Hence, when switching to a different case, the ACM remains unchanged, but the mapping between the ACM's segments and the sound files is updated. Figure 3 shows differences in the mapping of audio files to the ACM between two clinical cases (Figures 3A,B: pneumothorax; Figures 3C,D: COPD + pneumonia). Each color corresponds to one unique sound file. This shows that multiple segments of the ACM can be mapped to the same audio file. Together with a medical specialist it was decided that dividing the ACM into 50 segments provided sufficient resolution for making mappings that simulate a real-world situation. When moving the virtual stethoscope from one segment of the ACM to another, a cross-fade between the volume of the previous and the new audio sample is used to create a smooth transition.
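The per-case mapping from ACM segments to sound files, and the cross-fade between segments, can be sketched as follows. Everything specific in this sketch is a placeholder: the file names, the assignment of segments to sounds and the linear shape of the cross-fade are assumptions made for illustration, not the mappings or fade curve used in the application.

```python
from typing import Dict, Tuple

SEGMENT_COUNT = 50  # number of ACM segments, as described in the text

# One mapping per clinical case; the ACM itself is shared by all cases.
# File names and segment ranges below are placeholders, not clinical data.
CASE_MAPPINGS: Dict[str, Dict[int, str]] = {
    "pneumothorax": {
        **{seg: "sound_a.wav" for seg in range(0, 25)},
        **{seg: "sound_b.wav" for seg in range(25, SEGMENT_COUNT)},
    },
    "COPD + pneumonia": {
        **{seg: "sound_c.wav" for seg in range(0, 40)},
        **{seg: "sound_d.wav" for seg in range(40, SEGMENT_COUNT)},
    },
}


def sound_for(case: str, segment: int) -> str:
    """Look up which audio file a collision with `segment` should play."""
    return CASE_MAPPINGS[case][segment]


def crossfade_volumes(progress: float) -> Tuple[float, float]:
    """Return (previous_volume, new_volume) for a linear cross-fade.

    `progress` runs from 0.0 (only the previous sample is audible) to
    1.0 (only the new sample is audible); values outside are clamped.
    """
    t = min(1.0, max(0.0, progress))
    return (1.0 - t, t)


# Switching case changes only the lookup table, not the segments themselves.
print(sound_for("pneumothorax", 30), sound_for("COPD + pneumonia", 30))
print(crossfade_volumes(0.25))
```

Because several segments share one file, a cross-fade is only needed when the looked-up file actually changes between the previous and the new segment.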

Figure 3. Screenshots of the “Audio Collision Model” (ACM). (A) ACM with mapping of audio files of the case “Pneumothorax.” (B) ACM with mapping of audio files of the case “Pneumothorax,” with bones layer visible. (C) ACM with mapping of audio files of the case “COPD + pneumonia.” (D) ACM with mapping of audio files of the case “COPD + pneumonia,” with bones layer visible.

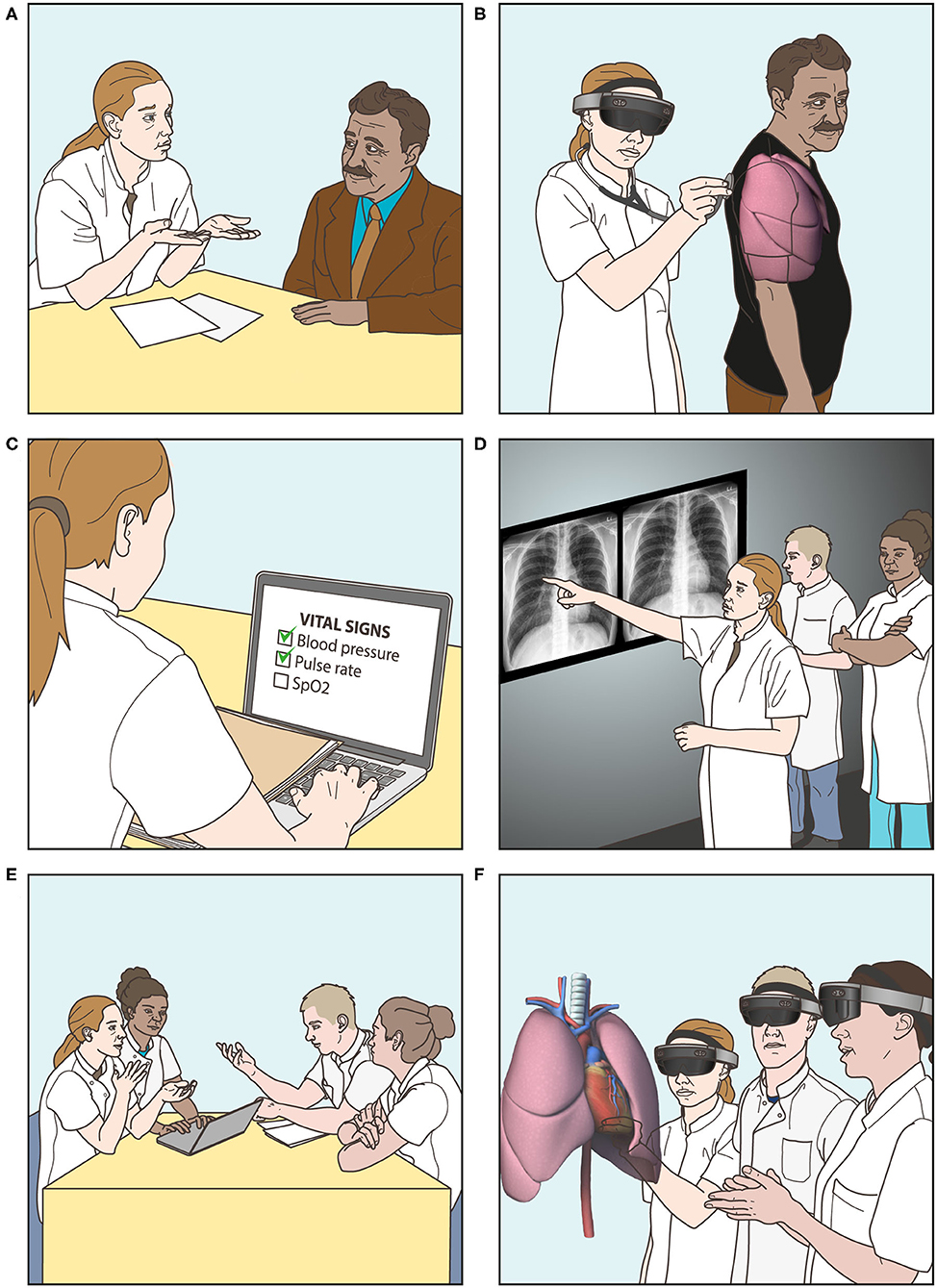

Description of the Small Group Learning Session

The small group learning session for 10–12 students is guided by a clinical teacher and is aimed at diagnosing one clinical case presenting with dyspnea. An illustrative overview of the session is shown in Figure 4. In two small subgroups (5–6 students each), the students follow sequential diagnostic steps: taking a patient history with a simulated patient, auscultating lung sounds using the AR application (displayed on the simulated patient), gathering and interpreting information from the physical examination, ordering and interpreting relevant laboratory tests and radiology exams, and finally formulating a working diagnosis as well as a differential diagnosis. Students are encouraged to think aloud and discuss their findings and thoughts with their peers at each step. After the last step, a 3D hologram of the underlying illness is displayed by the AR application and is discussed under guidance of a teacher. Because of the use of a simulated patient, students also train their communication skills. This offers the possibility of patient feedback, in addition to peer and teacher feedback. The duration of the session is ~2 h, during which the students use the AR application twice: at the introduction phase while auscultating the model with the virtual stethoscope, and at the evaluation phase, each time for an estimated 10–15 min (total of 20–30 min per session). There are two short breaks during the session.

Figure 4. Overview of small group learning session with the AR application. (A) Taking a patient history with a standardized patient. (B) Auscultating lung sounds using the virtual stethoscope. (C) Gathering and interpreting information of the physical examination. (D) Ordering and interpreting laboratory tests and radiology examinations. (E) Formulating a differential and working diagnosis. (F) Observing a 3D hologram of the underlying illness, displayed by the AR application, and discussing the case.

Implementation of the Small Group Learning Session With the AR Application

First, two pilot sessions were held in summer 2019. These sessions were used to refine logistics and to improve the study questionnaire. After these pilot studies, the small group learning session was implemented in our medical curriculum. The learning session is now an integral part of the preparative course for the Internal Medicine clerkship. Every 4 weeks two new groups start (total of 20–24 new students) and participate in the obligatory small group learning session. During the study period, four different teachers guided the small group learning sessions. One of the teachers (AP) was involved in designing the AR application and in evaluating the AR experience. The other teachers received instructions about the AR application and evaluation prior to the session. Sometimes technical difficulties occurred during the learning sessions, e.g., problems connecting a HoloLens® to the group session, drifting of the model and crashing of the application. These technical problems appeared to increase when more students were using the AR application simultaneously, although we did not formally test this in this study. At the end of the study period, adjustments were made to prevent these technical problems in the future.

Evaluation of the AR Application

During the study period between October 2019 and February 2020, all students who participated in the obligatory small group learning session (n = 110) were asked to participate in the study. A total of 104 completed questionnaires were received (response rate 94.5%). None of the students that were involved in designing the application participated in the study. The general information of the study participants is provided in Table 2. The average participant age was 23 years, with a range of 21–47 years. 42.3% of the participants were male. Many of the responders reported that they had glasses (25%) or contact lenses (29%). Only some of the students actually wore their glasses during the AR experience (11 out of 25 students), although it is no problem to wear glasses while using the AR application with the head-mounted display. Almost none of the students reported hearing problems. Most students did not have any prior experience with an AR headset (84%). Two different clinical cases were used in the study period: Pneumothorax and COPD (Chronic Obstructive Pulmonary Disease) plus pneumonia.
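The reported response rate can be reproduced from the two counts given above, and compared with the minimum of 45 responses that was planned in the Methods. A minimal sketch, with illustrative variable names:

```python
# Counts reported in the text.
invited = 110          # students asked to participate
completed = 104        # completed questionnaires received
planned_minimum = 45   # minimum number of responses planned in the Methods

# Response rate in percent, rounded to one decimal as in the text.
response_rate = round(100 * completed / invited, 1)

# Whether the planned minimum was reached within the study period.
minimum_reached = completed >= planned_minimum

print(response_rate, minimum_reached)
```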

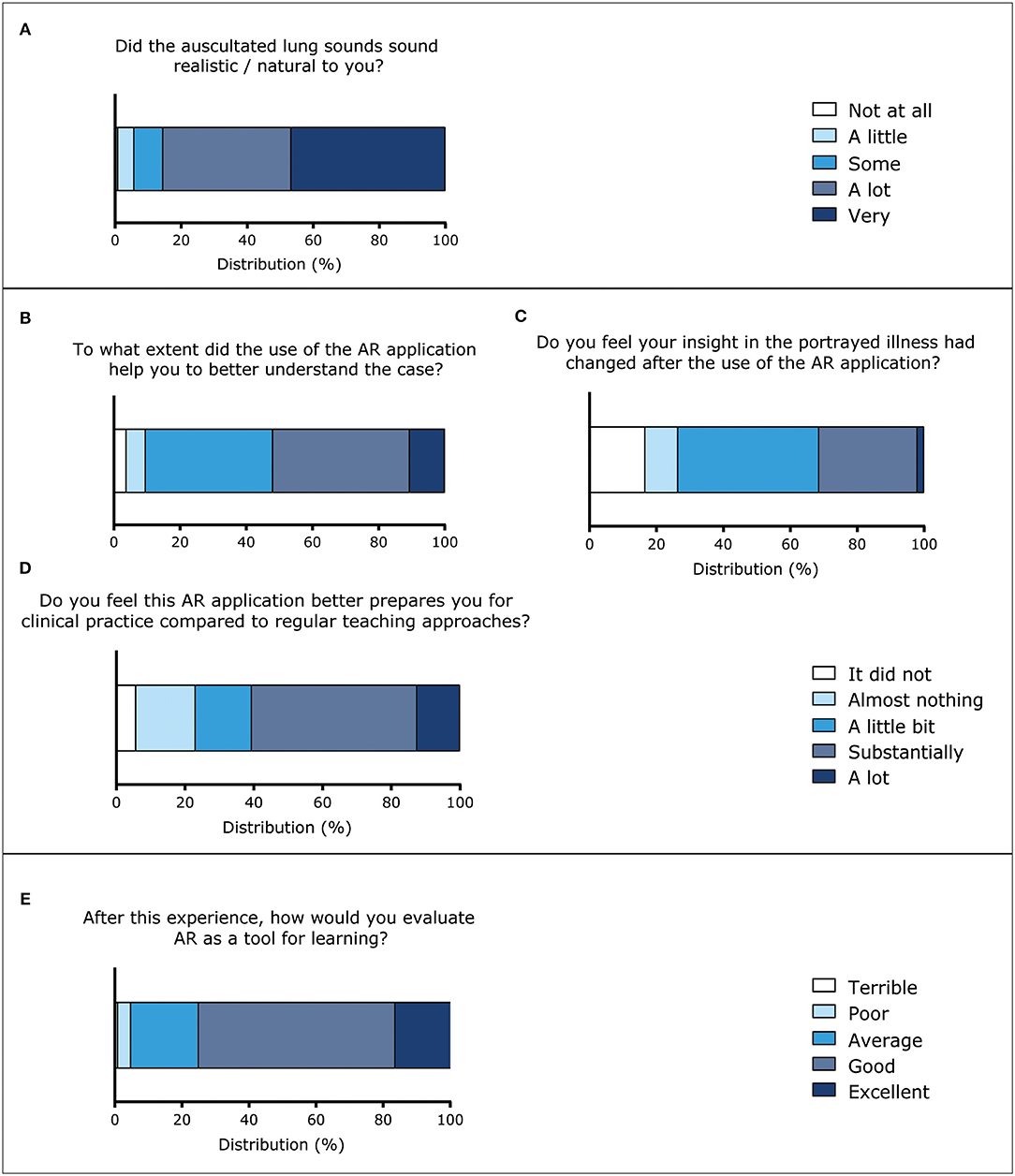

33% of the respondents reported that they had previously experienced a situation in the real world similar to the AR experience, e.g., examining a (simulated) patient with shortness of breath. The majority of the students indicated that the virtual lungs sounded natural (Figure 5A).

Figure 5. Evaluation of the AR application and AR as a tool for learning (five-point Likert scales). (A) Responses to the question “Did the auscultated lung sounds sound realistic / natural to you?” (n = 103). (B) Responses to the question “To what extent did the use of the AR application help you to better understand the case?” (n = 104). (C) Responses to the question “Do you feel your insight in the portrayed illness had changed after the use of the AR application?” (n = 102). (D) Responses to the question “Do you feel this AR application better prepares you for clinical practice compared to regular teaching methods?” (n = 104). (E) Responses to the question “After this experience, how would you evaluate AR as a tool for learning?” (n = 104).

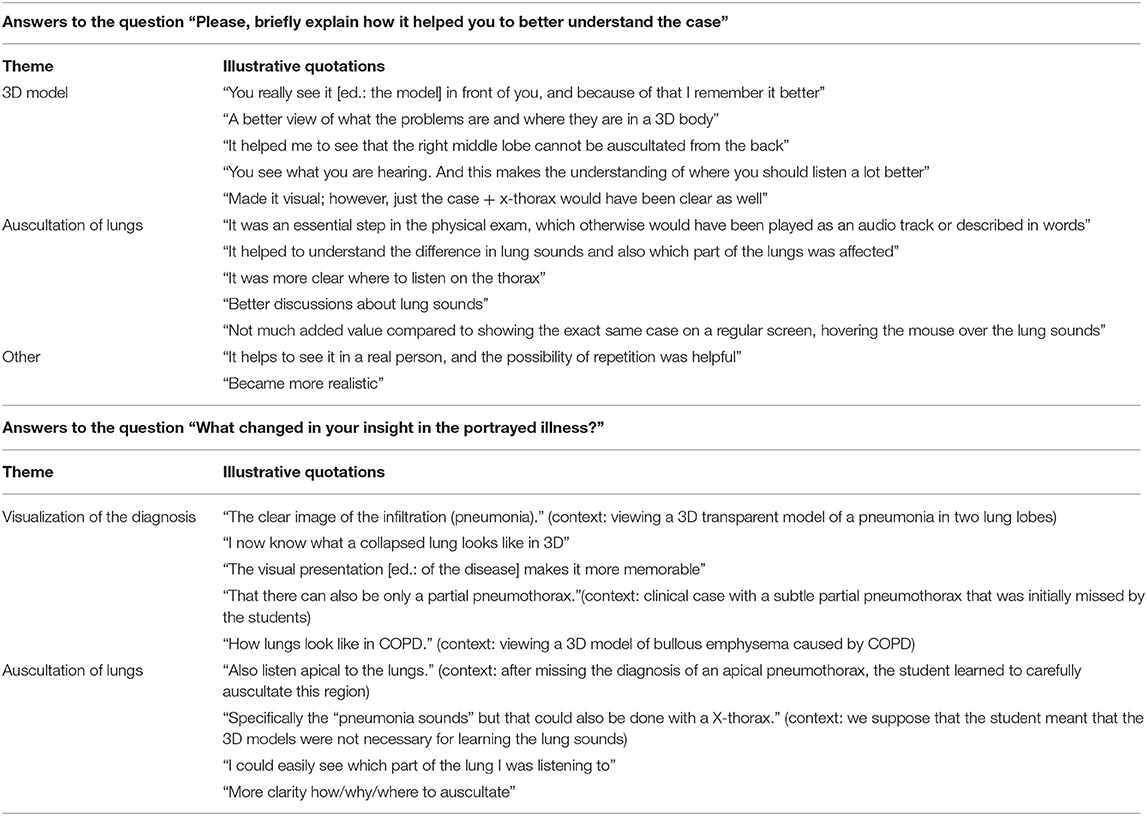

For most students, the AR experience helped them to understand the case a little bit or substantially better (Figure 5B). A qualitative analysis of how the AR experience helped students to better understand the case is shown in Table 3. Answers to the open-ended question “Please, briefly explain how it helped you to better understand the case” were explored and assigned to one of three categories: “3D models,” “auscultation of lungs,” and “other.” Many students reported that the virtual auscultation helped them: they indicated, for instance, that the lung sounds provided additional information and that it was useful that there were different lung sounds on different spots. In addition, they mentioned that they had better discussions about lung sounds. Students remarked they liked the “Real physical examination with the case” and “The combination of seeing realistic pictures 3D, realistic sounds and the fun and interactive setting.” A few students were critical regarding the auscultation process, e.g., “There was not much added value compared to showing the exact same case on a regular screen, hovering the mouse over the lung sounds.” Students also reported being supported by the 3D visuals in multiple ways, e.g., “You really see it in front of you, and because of that I remember it better,” “It helped me to see that the right middle lobe cannot be auscultated from the back.” Only a few students reported aspects that were not related to the first two themes: one student mentioned “It helps to see it in a real person, and the possibility of repetition was helpful.” More quotations are displayed in Table 3.

Table 3. Illustrative quotations of answers to the questions “Please, briefly explain how it helped you to better understand the case” (divided into three themes) and “What changed in your insight in the portrayed illness?” (divided into two themes).

In addition to reporting that the AR experience helped them to understand the case, students indicated that their insight into the portrayed illness had changed a little bit (42.2%) to substantially (29.4%) (Figure 5C). Answers to the question “What changed in your insight in the portrayed illness?” were qualitatively analyzed. Again, the main themes were the 3D models (visualization of the diagnosis) and the auscultation of the lungs. Students learned for instance from the 3D visuals “[…] what a collapsed lung looks like in 3D” or “That it can be a really small area with a subtle abnormality” (pneumothorax case). Students also reported insights regarding the auscultation process, for instance “Also listen apical to the lungs,” after a learning session with a case of an apical pneumothorax which many students missed at first examination. More quotations are provided in the second half of Table 3.

More than half of the students reported that the application better prepared them for clinical practice than regular teaching approaches, such as e-learning modules, small group learning, physical examination of peer students, and practicing with simulated patients (Figure 5D). After the first use of this AR application, students generally felt somewhat more confident about diagnosing lung cases.

The questionnaire also explored what students particularly liked about the AR experience, which yielded a range of answers. One student answered: “Novel way of learning. I love learning by interacting in a more ‘physical’ manner.” Another student liked “To be able to walk around the case, hear all the different parts of the lung and discuss with peers.” Another illustrative quotation is: “To see anatomy and pathology and how they interact. Also the fact that all observers see the same makes discussing more fruitful.”

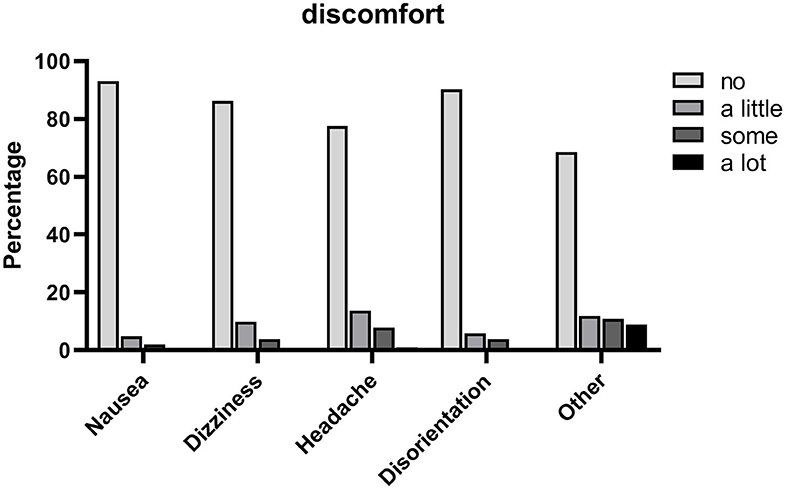

44.2% of the studied population reported no physical discomfort at all, and 50% reported a little to some physical discomfort. Only four participants (3.8%) experienced a lot of physical discomfort. The most commonly reported complaints were headache, symptoms that could be attributed to the weight of the head-mounted display (such as “heaviness on the head,” “pressure on the nose,” “pain on nose,” “tight headset”) and dizziness. The experience of different types of physical discomfort is displayed in Figure 6. In response to the general question “What did you dislike?”, 21.2% of the participants provided an answer related to physical discomfort, mainly due to the head-mounted display, e.g., “The AR glasses were very heavy, had to lift them with my hand,” “Annoying headset” and “The headset isn't so comfortable.”

Figure 6. Experience of different types of physical discomfort during the AR experience (four-point Likert scale), n = 102.

Students were asked how they would evaluate AR as a tool for learning, based on this specific experience. Most evaluated it as an excellent (16.3%), good (58.7%) or average (20.2%) tool for learning (Figure 5E). Many students (39.4%) indicated that they would like to see more AR applications for medical education on the topic of anatomy. Other topics that were suggested include developmental biology, surgery, cardiology, interactive pharmacology and emergency care. Students were also asked “What did you dislike?” in order to identify areas of improvement. Approximately one-third of the respondents to this question (31.7%) mentioned technical difficulties or problems with the use of the AR application, e.g., “Problems starting the AR” and “We had to reconnect sometimes.” A few students (4.8%) mentioned the limited field of view of the HoloLens® as a disadvantage. Remarks that provided input for future improvements included: “[…] would've been nice if you could actually see the lungs expand with breathing,” “sounds etc in the room were a little distractive,” “Took quite a while with two groups, we had to wait quite a bit,” “Small [learning] space.”
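The percentages for the question on AR as a tool for learning can be reproduced from per-category counts. The sketch below is illustrative only: the counts are back-calculated from the reported percentages under the assumption of n = 104 (the n given for Figure 5E), they are not taken from the study dataset, and the label `other` is a hypothetical placeholder that pools the remaining response categories, whose wording is not given in the text.

```python
from collections import Counter


def likert_distribution(responses):
    """Map each response label to its share of all responses, in percent."""
    counts = Counter(responses)
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Assumed counts, back-calculated from the reported percentages with n = 104.
# "other" is a placeholder for the categories not named in the text.
responses = (
    ["excellent"] * 17
    + ["good"] * 61
    + ["average"] * 21
    + ["other"] * 5
)

distribution = likert_distribution(responses)
for label, share in distribution.items():
    print(f"{label}: {share}%")
```

Working from counts rather than rounded percentages avoids compounding rounding errors when categories are combined, for example when summing the "excellent" and "good" responses.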

Discussion

In this paper, the design and implementation of the AR application AugMedicine: Lung cases, on patient cases with dyspnea, is described. Our application was developed over a period of 22 months by a large team of stakeholders. A small group learning session was designed around the AR application, based on instructional design principles of Merrill (Merrill, 2002), and this session was successfully implemented in our clerkship curriculum. Until now, various AR applications for medical education have been developed, mainly in the fields of surgery and anatomy (Zhu et al., 2014; Sheik-Ali et al., 2019; Gerup et al., 2020; Tang et al., 2020; Uruthiralingam and Rea, 2020). This study contributes to the exploration of the potential of AR in health care education beyond those fields.

Integration of AR technology into the medical curriculum should be carefully designed to avoid focus on the technology itself. In an integrative review it was proposed that appropriate learning theories should be identified in order to guide the integration of AR in medical education (Zhu et al., 2014). In line with this recommendation, the LED has been a prime focus in our design process resulting in successful integration in a small group learning session following the instructional design principles of Merrill (2002). These principles were not only applied for integrating this novel technology into learning sessions, but also in the concept of the AR application itself (first and fourth principle). There was a particular focus on creating a more authentic learning environment for diagnosing dyspnea rather than merely an e-learning or practicing with a simulated patient, which is in line with Merrill's first principle of instruction, engaging learners in relevant and real-world problems.

Kamphuis et al. also suggested that AR technology has the potential to create a situated and authentic learning experience that may better facilitate transfer of learning into the workplace (Kamphuis et al., 2014). In the past, other technologies have been described to increase authenticity while training chest auscultation. Examples are web-based auscultation (Ward and Wattier, 2011), mannequin-based simulators / auscultation torsos (Ward and Wattier, 2011; McKinney et al., 2013; Bernardi et al., 2019), multimedia presentation of acoustic and graphic characteristics of lung sounds (Sestini et al., 1995), “hybrid simulators,” a combination of simulated patients with hardware and software of auscultation torsos (Friederichs et al., 2014) and auscultation sound playback devices or stethoscopes (Ward and Wattier, 2011). Although some of these tools improved learning of lung or heart sounds compared to traditional teaching methods, all of these examples have in common that they lack the combination with 3D visuals of the underlying diagnosis. This combination is a major advantage of AugMedicine: Lung cases, as students reported that the combination of auscultation and 3D visuals was helpful to them. The results suggest that most students recognize and confirm the authenticity of the learning setting, although some reported that it felt a bit unnatural. This effect may have been caused or aggravated by technical difficulties such as problems with manipulating the virtual stethoscope or drifting of the model. This could be further explored by conducting focus groups or interviews to analyze the answers in more depth.

The results of our study indicate that the AR experience helped students to better understand a case of dyspnea and gain more insight into the portrayed illness. An explanation for these effects could be the stimulation of active learning by the application. In the past decades, medical education has shifted from traditional lecture-based teaching to more active and collaborative learning. In science education, active learning was shown to be more effective than lecture-based learning in the study of Freeman et al. (2014). It was defined in this study as: “Active learning engages students in the process of learning through activities and/or discussion in class, as opposed to passively listening to an expert. It emphasizes higher-order thinking and often involves group work.” The small group learning session with the AR application has multiple learning activities that relate to active learning: the students walk around a 3D hologram, auscultate the virtual lungs, and discuss the case with their peers and teacher. The better understanding of the case might also be explained by the effect of Embodied Cognition. The concept of Embodied Cognition is described as “Cognition is embodied when it is deeply dependent upon features of the physical body of an agent, that is, when aspects of the agent's body beyond the brain play a significant causal or physically constitutive role in cognitive processing.” (Website Stanford Encyclopedia of Philosophy, 2020). While learning with the studied AR application, students use their physical body to examine the 3D models and to manipulate the virtual stethoscope through a touch-free gestural interface in order to listen to the lung sounds. These are relevant motion patterns for diagnosing patients in the real-life environment and therefore could support students in constructing a mental model of the learned concept.

Although various clinical cases are available in the AR application, the students explored only one within the context of the formal learning session. This could explain why students generally did not feel substantially more confident about diagnosing lung cases after the AR experience. However, the other cases can be further explored independently at a later moment in time.

A possible disadvantage of introducing AR/VR technologies into education is the perception of simulator sickness or cybersickness, which is a form of motion sickness with adverse symptoms and observable signs that are caused by elements of visual display and visuo-vestibular interaction (Vovk et al., 2018). Although there is extensive literature on this phenomenon in virtual reality (e.g., Gavgani et al., 2017; Gallagher and Ferrè, 2018; Weech et al., 2019; Saredakis et al., 2020), publications in the context of AR are scarce, especially regarding AR with head-mounted displays. However, visuo-vestibular interactions differ considerably between VR and AR environments. In a study by Moro et al., participants were allocated to use of a VR, AR, or tablet-based application for learning skull anatomy (Moro et al., 2017). Adverse physical symptoms were compared, and were significantly higher in the VR group. Vovk et al. investigated simulator sickness symptoms in AR training with the HoloLens® with a validated 16-item simulator sickness questionnaire (SSQ), with three subscales relating to gastrointestinal symptoms, oculomotor symptoms, and disorientation. They concluded that interactive augmented interaction is less likely to cause simulator sickness than interaction with virtual reality. Although some of the major symptoms were encountered in our study as well, we did not explore this in a structured way. Some of the students reported symptoms, such as heaviness on the nose or pain on the nose, that may be caused by the weight of the HoloLens®. Perhaps the recently released HoloLens® 2 could solve some of these physical discomfort issues, as it is designed to be more comfortable by shifting most of the weight from the front to the back of the headset. However, this remains to be studied.

Limitations

A limitation of this study is that an isolated questionnaire was used, instead of a questionnaire in combination with, e.g., a focus group or structured interviews. Sometimes students provided answers which could be interpreted in multiple ways. Focus groups or interviews with students could have helped, but were not part of this research. Possible confounders in this study were variable learning spaces, variable occurrence of technical incidents, variable teachers (a total of four teachers) and use of two different cases (pneumothorax and COPD plus pneumonia). We cannot definitively know if the other clinical cases in the application would result in comparable user experiences. Educational spaces that provided the HoloLens®'s positional tracking sensors with more environmental landmarks seemed to result in fewer technical problems, although we did not formally test this.

Practical Implications

Institutions that consider using AR in their medical curriculum should be aware that, besides the costs for developing and maintaining an AR application and the purchase of hardware devices, additional resources should be allocated. In our experience, this type of technology requires a dedicated infrastructure, including training and allocating technical support (for maintenance and repair of the head-mounted displays and software updates), arranging a group of teaching assistants for supporting teachers, and training of several dedicated teachers. For the described AR application, the applied group size for the learning session was a maximum of 12 students (of whom six simultaneously wear a head-mounted display). It is not (yet) possible to increase the group size, because of limited availability of head-mounted displays. The scalability of comparable AR applications is also likely to be limited, in contrast to VR applications for which in our experience the group size can more easily be increased.

Future Directions

The applicability and extension of this AR application for other healthcare professionals, such as residents, medical specialists, general practitioners, physician assistants and paramedics, is currently under consideration. Although now applied as a teacher-led small group learning session, it is planned to extend the application to facilitate asynchronous learning. By adapting the AR application to a standalone version, it could be used without teacher presence, offering students the option to practice as frequently as needed and experience more cases as part of a more individualized learning process. In terms of researching AR in medical education, a recent systematic review proposed an analytical model to evaluate the potential for an AR application to be integrated into the medical curriculum, in order to result in both higher quality study designs and formal validity assessments (Tang et al., 2020). The model consists of four components: quality, application content, outcome, and feasibility. Although the current study provided relevant insights on the application content and feasibility, the study design is observational and outcomes in terms of efficacy remain to be studied. In alignment with the model, a next step to provide higher grade evidence for the implementation of this AR application could be performing a randomized controlled trial to compare the effectiveness of this AR experience to traditional learning methods alone.

Conclusions

Until now various AR applications for medical education have been developed mainly in the field of surgery and anatomy. This study contributes to the exploration of the potential use of AR in health care education beyond those fields. We integrated AugMedicine: Lung cases into the curriculum through a specially designed small group learning session. In general, students reported that the AR experience helped them to better understand the clinical case and most students value AR as a good tool for learning. The user evaluation was predominantly positive, although physical discomfort seemed to be an issue for some students.

Data Availability Statement

A limited dataset (without qualitative data) is available as Supplementary Material. The complete dataset is available on request. Requests should be directed to: Arianne D. Pieterse, a.d.Pieterse@lumc.nl.

Ethics Statement

The studies involving human participants were reviewed and approved by the Educational Research Review Board of the Leiden University Medical Center. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

AP, BH, JK, and MR were part of the team that designed and developed the AR application. AP, BH, PJ, and MR designed the study. AP and BH analyzed the data. AP wrote the first draft of the manuscript. BH, PJ, JK, and MR provided further first-hand writing and edited the manuscript. JK and LW contributed in reviewing the manuscript. All authors have approved the final version of the manuscript and agree to be accountable for all aspects of the work.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank all members of the AugMedicine: Lung cases team for their contribution to the design process. We thank Argho Ray, Leontine Bakker, and Soufian Meziyerh for teaching small group learning sessions with the AR application. We thank Franka Luk and Manon Zuurmond for their contributions to this study and paper.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2020.577534/full#supplementary-material

Abbreviations

ACM, Audio Collision Model; AR, Augmented Reality; COPD, Chronic Obstructive Pulmonary Disease; HLAPI, High Level Application Programming Interface; LED, learning experience design; LUMC, Leiden University Medical Center; SDK, software development kit; UI, user interface; VR, Virtual Reality; WLAN, wireless local area network.

References

Barmaki, R., Yu, K., Pearlman, R., Shingles, R., Bork, F., Osgood, G. M., et al. (2019). Enhancement of anatomical education using augmented reality: an empirical study of body painting. Anat. Sci. Educ. 12, 599–609. doi: 10.1002/ase.1858

Bernardi, S., Giudici, F., Leone, M. F., Zuolo, G., Furlotti, S., Carretta, R., et al. (2019). A prospective study on the efficacy of patient simulation in heart and lung auscultation. BMC Med. Educ. 19:275. doi: 10.1186/s12909-019-1708-6

Bogomolova, K., Hierck, B. P., Looijen, A. E. M., et al. (2020). Stereoscopic three-dimensional visualization technology in anatomy learning: a meta-analysis. Med. Educ. doi: 10.1111/medu.14352. [Epub ahead of print].

Bogomolova, K., van der Ham, I. J. M., Dankbaar, M. E. W., van den Broek, W. W., Hovius, S. E. R., van der Hage, J. A., et al. (2019). The effect of stereoscopic augmented reality visualization on learning anatomy and the modifying effect of visual-spatial abilities: a double-center randomized controlled trial. Anat. Sci. Educ. 13, 558–567. doi: 10.1002/ase.1941

Cleland, J. A., Abe, K., and Rethans, J. J. (2009). The use of simulated patients in medical education: AMEE guide No 42. Med. Teach. 31, 477–486. doi: 10.1080/01421590903002821

Demonstration of AugMedicine: Lung Cases (2020). Available online at: https://youtu.be/GGiigVMKPTA (accessed September 14, 2020).

Eckert, M., Volmerg, J. S., and Friedrich, C. M. (2019). Augmented reality in medicine: systematic and bibliographic review. JMIR Mhealth Uhealth 7:e10967. doi: 10.2196/10967

Edelbring, S., Dastmalchi, M., Hult, H., Lundberg, I. E., and Dahlgren, L. O. (2011). Experiencing virtual patients in clinical learning: a phenomenological study. Adv. Health Sci. Educ. Theory Pract. 16, 331–345. doi: 10.1007/s10459-010-9265-0

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

Friederichs, H., Weissenstein, A., Ligges, S., Moller, D., Becker, J. C., and Marschall, B. (2014). Combining simulated patients and simulators: pilot study of hybrid simulation in teaching cardiac auscultation. Adv. Physiol. Educ. 38, 343–347. doi: 10.1152/advan.00039.2013

Gallagher, M., and Ferrè, E. (2018). Cybersickness: a multisensory integration perspective. Multisensory Res. 31, 645–674. doi: 10.1163/22134808-20181293

Gavgani, A. M., Nesbitt, K. V., Blackmore, K. L., and Nalivaiko, E. (2017). Profiling subjective symptoms and autonomic changes associated with cybersickness. Auton. Neurosci. 203, 41–50. doi: 10.1016/j.autneu.2016.12.004

Gerup, J., Soerensen, C. B., and Dieckmann, P. (2020). Augmented reality and mixed reality for healthcare education beyond surgery: an integrative review. Int. J. Med. Educ. 11, 1–18. doi: 10.5116/ijme.5e01.eb1a

HoloLens App AugMedicine: Lung Cases (short) (2020). Available online at: https://www.youtube.com/watch?v=feiOz7UAxbs (accessed June 25, 2020).

Kamphuis, C., Barsom, E., Schijven, M., and Christoph, N. (2014). Augmented reality in medical education? Perspect. Med. Educ. 3, 300–311. doi: 10.1007/s40037-013-0107-7

Kaufmann, H., and Schmalstieg, D. (2003). Mathematics and geometry education with collaborative augmented reality. Comput. Graph. 27, 339–345. doi: 10.1016/S0097-8493(03)00028-1

Kotranza, A., Lind, D. S., and Lok, B. (2012). Real-time evaluation and visualization of learner performance in a mixed-reality environment for clinical breast examination. IEEE Trans. Vis. Comput. Graph. 18, 1101–1114. doi: 10.1109/TVCG.2011.132

Lamounier, E., Bucioli, A., Cardoso, A., Andrade, A., and Soares, A. (2010). On the use of augmented reality techniques in learning and interpretation of cardiologic data. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2010, 610–613. doi: 10.1109/IEMBS.2010.5628019

McKinney, J., Cook, D. A., Wood, D., and Hatala, R. (2013). Simulation-based training for cardiac auscultation skills: systematic review and meta-analysis. J. Gen. Intern. Med. 28, 283–291. doi: 10.1007/s11606-012-2198-y

Miles, M. B., Huberman, A. M., and Saldana, J. (2014). Qualitative Data Analysis: A Methods Sourcebook, 3rd edn. Thousand Oaks, CA: SAGE Publications, Inc.

Moro, C., Stromberga, Z., Raikos, A., and Stirling, A. (2017). The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat. Sci. Educ. 10, 549–559. doi: 10.1002/ase.1696

Nilsson, S., and Johansson, B. (2008). “Acceptance of augmented reality instructions in a real work setting,” in Conference on Human Factors in Computing Systems, C. H. I (Florence). doi: 10.1145/1358628.1358633

Nischelwitzer, A., Lenz, F., Searle, G., and Holzinger, A. (2007). “Some aspects of the development of low-cost augmented reality learning environments as examples for future interfaces in technology enhanced learning,” in Universal Access in HCI, Part III, HCII 2007, LNCS 4556, ed C. Stephanidis (Berlin; Heidelberg), 728–737. doi: 10.1007/978-3-540-73283-9_79

Pretto, F., Manssour, I., Lopes, M., da Silva, E., and Pinho, M. (2009). “Augmented reality environment for life support training,” in SAC '09: Proceedings of the 2009 ACM symposium on Applied Computing, eds S. Y. Shin, and S. Ossowski (Honolulu, HI: Association for Computing Machinery), 836–841. doi: 10.1145/1529282.1529460

Rasimah, C., Ahmad, A., and Zaman, H. (2011). Evaluation of user acceptance of mixed reality technology. AJET 27, 1369–1387. doi: 10.14742/ajet.899

Rosenbaum, E., Klopfer, E., and Perry, J. (2007). On location learning: authentic applied science with networked augmented realities. J. Sci. Educ. Technol. 16, 31–45. doi: 10.1007/s10956-006-9036-0

Sakellariou, S., Ward, B., Charissis, V., Chanock, D., and Anderson, P. (2009). “Design and implementation of augmented reality environment for complex anatomy training: inguinal canal case study”, in Virtual and Mixed Reality, Third International Conference, VMR 2009, ed R. Shumaker (San Diego, CA), 605–614. doi: 10.1007/978-3-642-02771-0_67

Saredakis, D., Szpak, A., Birckhead, B., Keage, H. A. D., Rizzo, A., and Loetscher, T. (2020). Factors associated with virtual reality sickness in head-mounted displays: a systematic review and meta-analysis. Front. Hum. Neurosci. 14:96. doi: 10.3389/fnhum.2020.00096

Schwaber, K., and Beedle, M. (2008). Agile Software Development With Scrum. London: Pearson Education (Us).

Sestini, P., Renzoni, E., Rossi, M., Beltrami, V., and Vagliasindi, M. (1995). Multimedia presentation of lung sounds as a learning aid for medical students. Eur. Respir. J. 8, 783–788.

Sheik-Ali, S., Edgcombe, H., and Paton, C. (2019). Next-generation virtual and augmented reality in surgical education: a narrative review. Surg. Technol. Int. 35, 27–35.

Tang, K. S., Cheng, D. L., Mi, E., and Greenberg, P. B. (2020). Augmented reality in medical education: a systematic review. Can. Med. Educ. J. 11, e81–e96. doi: 10.36834/cmej.61705

Uruthiralingam, U., and Rea, P. M. (2020). Augmented and virtual reality in anatomical education - a systematic review. Adv. Exp. Med. Biol. 1235, 89–101. doi: 10.1007/978-3-030-37639-0_5

von Jan, U., Noll, C., Behrends, M., and Albrecht, U. (2012). mARble – augmented reality in medical education. Biomed. Tech. 57(Suppl. 1), 67–70. doi: 10.1515/bmt-2012-4252

Vovk, A., Wild, F., Guest, W., and Kuula, T. (2018). “Simulator sickness in augmented reality training using the microsoft hololens”, in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18), eds R. Mandryk and M. Hancock (New York, NY; Montréal, QC), 1–9. doi: 10.1145/3173574.3173783

Ward, J. J., and Wattier, B. A. (2011). Technology for enhancing chest auscultation in clinical simulation. Respir. Care 56, 834–845. doi: 10.4187/respcare.01072

Website Stanford Encyclopedia of Philosophy (2020). Available online at: https://plato.stanford.edu/entries/embodied-cognition/ (accessed June 25, 2020).

Weech, S., Kenny, S., and Barnett-Cowan, M. (2019). Presence and cybersickness in virtual reality are negatively related: a review. Front. Psychol. 10:158. doi: 10.3389/fpsyg.2019.00158

Keywords: augmented reality, mixed reality, medical education, technology enhanced learning, active learning, collaborative learning, pulmonary medicine, internal medicine

Citation: Pieterse AD, Hierck BP, de Jong PGM, Kroese J, Willems LNA and Reinders MEJ (2020) Design and Implementation of “AugMedicine: Lung Cases,” an Augmented Reality Application for the Medical Curriculum on the Presentation of Dyspnea. Front. Virtual Real. 1:577534. doi: 10.3389/frvir.2020.577534

Received: 29 June 2020; Accepted: 15 September 2020;

Published: 28 October 2020.

Edited by:

Georgios Tsoulfas, Aristotle University of Thessaloniki, Greece

Reviewed by:

Randy Klaassen, University of Twente, Netherlands

Alexandros Sigaras, Weill Cornell Medicine, Cornell University, United States

Copyright © 2020 Pieterse, Hierck, de Jong, Kroese, Willems and Reinders. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Arianne D. Pieterse, a.d.pieterse@lumc.nl
