TECHNOLOGY AND CODE article

Front. Virtual Real. , 03 November 2020

Sec. Technologies for VR

Volume 1 - 2020 | https://doi.org/10.3389/frvir.2020.561558

Mar Gonzalez-Franco1*, Eyal Ofek1, Ye Pan2, Angus Antley1, Anthony Steed1,3, Bernhard Spanlang4, Antonella Maselli5, Domna Banakou6,7, Nuria Pelechano8, Sergio Orts-Escolano9, Veronica Orvalho10,11,12, Laura Trutoiu13, Markus Wojcik14, Maria V. Sanchez-Vives15,16, Jeremy Bailenson17, Mel Slater6,7, Jaron Lanier1

As part of the open sourcing of the Microsoft Rocketbox avatar library for research and academic purposes, here we discuss the importance of rigged avatars for the Virtual and Augmented Reality (VR, AR) research community. Avatars, virtual representations of humans, are widely used in VR applications. Furthermore, many research areas ranging from crowd simulation to neuroscience, psychology, or sociology have used avatars to investigate new theories or to demonstrate how they influence human performance and interactions. We divide this paper into two main parts: the first one gives an overview of the different methods available to create and animate avatars. We cover the current main alternatives for face and body animation as well as introduce upcoming capture methods. The second part presents the scientific evidence of the utility of using rigged avatars for embodiment but also for applications such as crowd simulation and entertainment. All in all, this paper attempts to convey why rigged avatars will be key to the future of VR and its wide adoption.

When representing users or computer-controlled agents within computer graphics systems we have a range of alternatives from abstract and cartoon-like, through human-like to fantastic creations from our imagination. However, in this paper we focus on anthropomorphically correct digital human representations: avatars. These digital avatars are a collection of geometry (meshes, vertices) and textures (images) combined to look like real humans in three dimensions (3D). When these avatars are rigged they have a skeleton system, can walk and be animated to resemble people. A human-like avatar is defined by its morphology and behavior. The morphology of an avatar refers to the definition of the shape and structure of the geometry of the 3D model, and it usually complies with the anatomical structure of the human body. The behavior of an avatar is defined by the movements the 3D model can perform. Avatars created with computer graphics can reach such a level of realism that they can substitute real humans inside Virtual Reality (VR) or Augmented Reality (AR). An avatar can represent a real live participating person, or an underlying software agent. When digital humans are controlled by algorithms they are referred to as embodied agents (Bailenson and Blascovich, 2004).

When people enter an immersive virtual environment through a VR/AR system they may experience an illusion of being in the place depicted by the virtual environment, typically referred to as “presence” (Sanchez-Vives and Slater, 2005). Presence has been decomposed into two different aspects, the illusion of “being there,” referred to as “Place Illusion,” and the illusion that the events that are occurring are really happening, referred to as “Plausibility” (Slater, 2009). These illusions and their consequences occur in spite of the person knowing that nothing real is happening. However, typically, the stronger these illusions the more realistically people will respond to the events inside the VR and AR (Gonzalez-Franco and Lanier, 2017).

A key contributor to plausibility is the representation and behavior of the avatars in the environment (Slater, 2009). Are those avatars realistic? Do their appearance, behavior, and actions match with the plot? Do they behave and move according to expectations in the given context? Do they respond appropriately and according to expectations with the participant? Do they initiate interactions with the participant of their own accord? A simple example is that a character should smile back, or at least react, when a person smiles toward it. Another example is that a character moves out of the way, or acknowledges the participant in some way, as she or he walks by.

Avatars are key to every social VR and AR interaction (Schroeder, 2012). They can be used to recreate social psychology scenarios that would be very hard or impossible to recreate in reality to evaluate human responses. Avatars have helped researchers in further studying bystander effects during violent scenarios (Rovira et al., 2009; Slater et al., 2013) or paradigms of obedience to authority (Slater et al., 2006; Gonzalez-Franco et al., 2019b), to explore the effects of self-compassion (Falconer et al., 2014, 2016), crowd simulation (Pelechano et al., 2007), or even experiencing the world from the embodied viewpoint of another (Osimo et al., 2015; Hamilton-Giachritsis et al., 2018; Seinfeld et al., 2018). In many cases of VR social interaction, researchers use embodied agents (i.e., procedural avatars). Note that in this paper we do not use the term “procedural” to refer to how they were created, but rather how they are animated to represent agents in the scene, for example, following a series of predefined animations potentially driven by AI tools.

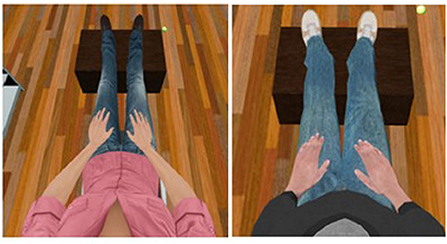

A particular case of avatars inside VR are self-avatars, or embodied avatars. A self-avatar is a 3D representation of a human model that is co-located with the user's body, as if it were to replace or hide the real body. When wearing a VR Head Mounted Display (HMD) the user cannot see the real environment around her and in particular, cannot see her own body. The same is true in some AR configurations. Self-avatars provide users with a virtual body that can be visually coincident with their real body (Figure 1). This substitution of the self-body with a self-avatar is often referred to as embodiment (Longo et al., 2008; Kilteni et al., 2012a). We use the term “virtual embodiment” (or just “embodiment”) to describe the physical process that employs the VR hardware and software to substitute a person's body with a virtual one. Embodiment under a variety of conditions may give rise to the subjective illusions of body ownership and agency (Slater et al., 2009; Spanlang et al., 2014). Body ownership is enabled by multisensory processing and plasticity of body representation in the brain (Kilteni et al., 2015). For example, if we see a body from a first-person perspective that moves as we move (i.e., synchronous visuo-motor correlations) (Gonzalez-Franco et al., 2010), or is touched with the same spatio-temporal pattern as our real body (i.e., synchronous visuo-tactile correlations) (Slater et al., 2010b), or is just static but co-located with our own body (i.e., congruent visuo-proprioceptive correlation) (Maselli and Slater, 2013), then an embodiment experience is generated. In fact, even if the participant is not moving, nor being touched, in some setups, the first-person co-location will be sufficient to generate embodiment (González-Franco et al., 2014; Maselli and Slater, 2014; Gonzalez-Franco and Peck, 2018), to result in the perceptual illusion that this is our body (even though we know for sure that it is not). 
Interestingly, and highly useful as a control, if there is asynchrony or incongruence between sensory inputs (either in space or in time) or in sensorimotor correlations, the illusion breaks (Berger et al., 2018; Gonzalez-Franco and Berger, 2019). This body ownership effect, which was first demonstrated with a rubber hand (Botvinick and Cohen, 1998), has now been replicated in a large number of instances with virtual avatars (for a review see Slater and Sanchez-Vives, 2016; Gonzalez-Franco and Lanier, 2017).

Figure 1. Two of the Microsoft Rocketbox avatars being used for a first-person substitution of gender matched bodies in an embodiment experiment at University of Barcelona by Maselli and Slater (2013).

This means that self-avatars not only allow us to interact with others as we would do in the real world, but are also critical for non-social VR experiences. The body is a basic aspect required for perceptual, cognitive, and bodily interactions. Inside VR, the avatar becomes our body, our “self”. Indeed, participants who have a virtual body show better perceptual ability when estimating distances than non-embodied participants in VR (Mohler et al., 2010; Phillips et al., 2010; Gonzalez-Franco et al., 2019a). Self-avatars also change how we perceive touch inside VR (Maselli et al., 2016; Gonzalez-Franco and Berger, 2019). Even more interestingly, self-avatars can help users to better perform cognitive tasks (Steed et al., 2016b; Banakou et al., 2018), modify implicit racial bias (Groom et al., 2009; Peck et al., 2013; Banakou et al., 2016; Hasler et al., 2017; Salmanowitz, 2018) or change, for example, their body weight perception (Piryankova et al., 2014).

Such examples of research are just the tip of the iceberg, but they show the importance of controllable avatars for developing VR/AR experiments, games, and applications. Hence, multiple commercial and non-commercial tools (such as the Microsoft Rocketbox library, Autodesk Character Generator, Mixamo/Adobe Fuse or iClone Character Creator, to name a few) aim to democratize and extend the use of avatars among developers and researchers.

Avatars can be created in different ways and in this paper we will detail how they can be fitted to a skeleton, and animated. We give an overview of previous work as well as future avenues for avatar creation. We also describe the particularities of the use and creation of the Microsoft Rocketbox avatar library and we discuss the consequences of the open source release (Mic, 2020).

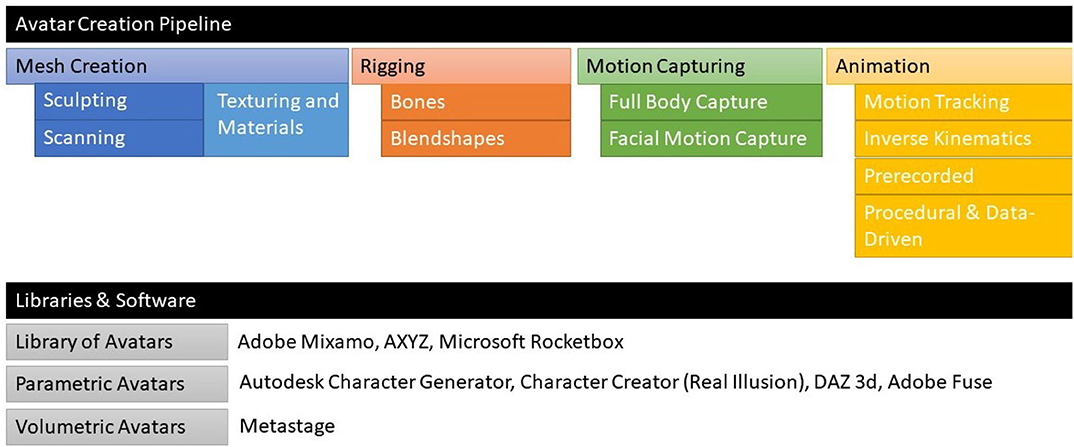

The creation of avatars that can move and express emotions is a complex task and relies on the definition of both the morphology and behavior of its 3D representation. The generation of believable avatars requires a technical pipeline (Figure 2) that can create the geometry, textures, control structure (rig) and movements of the avatar (Roth et al., 2017). Anthropomorphic avatars might be sculpted by artists, scanned from real people or a combination of both. Microsoft Rocketbox avatars are based on sculpting. With current technological advances it is also possible to create avatars automatically from a set of input images or through manipulation of a small set of parameters. At its core, rigging is the set of control structures attached to selected areas of the avatar, allowing its manipulation and animation. A rig is usually represented by a skeleton (bone structure). However, rigs can be attached to any structure that is useful for the task needed (animators may use much more complex rigs than a skeleton). The main challenge when rigging an avatar is to accurately mimic the deformation of an anthropomorphic shape, so artists and developers can manipulate and animate the avatar. Note that non-anthropomorphic avatars, such as those lacking legs or other body parts, are widely used in social VR. However, although previous research has shown that non-anthropomorphic avatars can also be quite successful in creating self-identification, there are more positive effects to using full anthropomorphic avatars (Aymerich-Franch, 2012).

Figure 2. Pipeline for the creation of Microsoft Rocketbox Rigged Avatars and main libraries and software available with pre-created avatars of different types. The mesh creation produces a basic sculpted humanoid form. The rigging attaches the vertices to different bones corresponding to the skeleton of the digital human.

Much like the ancient artists sculpted humans in stone, today digital artists can create avatars by combining and manipulating digital geometric primitives and deforming the resulting collection of vertices or meshes. Manually sculpting, texturing, and rigging using 3D content creation tools, such as Autodesk 3ds Max (3dm, 2020), Maya (May, 2020), or Blender (Ble, 2020), has been the traditional way to achieve high-quality avatars.

This work requires artists specializing in character design and animation, and though this can be a long and tedious process, the results can be optimized for high-level quality output. Most of the avatars currently used for commercial applications, such as AAA games and most VR/AR applications, are based on a combination of sculpted and scanned work with a strong artistic involvement.

In fact, at the time of writing, specialized artistic work is generally still used to fine tune avatar models to avoid artifacts produced with the other methods listed here, even though some of these avatars can still suffer from the uncanny valley effect (Mori, 1970), where extreme but not perfect realism can cause a negative reaction to the avatar.

In many applications, geometry scanning is used to generate an avatar that can be rigged and animated later (Kobbelt and Botsch, 2004). Geometry scanning is the acquisition of physical topologies by imaging techniques and accurate translation of this surface information to digital 3D geometry; that is, a mesh (Thorn et al., 2016).

That later animation might itself use a motion capture system to provide data to animate the avatar. The use of one-time scanning enables the artist to stylize the scanned model and optimize it for animation and the application. Scanning is often a pre-processing step where the geometry and potentially animation is recorded and used for reference or in the direct production of the final mesh and animation.

Scanning is particularly useful if an avatar needs to be modeled on existing real people (Waltemate et al., 2018). Indeed, the creation of avatars this way is extremely common in special effects rendering, for sports games or for content around celebrities.

Depth cameras or laser techniques allow developers and researchers to capture their own avatars, and create look-alike avatars (Gonzalez-Franco et al., 2016). These new avatars can later be rigged in the same way as sculpted ones. Scanning is a fast alternative to modeling of avatars. But depending on the quality, it comes with common flaws that need to be tweaked afterwards.

Indeed, special care needs to be taken to scan the model from all directions to minimize the number and size of occluded regions. To enable future relighting of the avatar, textures have to be scanned under known natural and sometimes varying illuminations to recover the original albedo or bi-directional reflectance function (BRDF) of the object (Debevec et al., 2000).

To capture the full body (Figure 3), the scanning requires a large set of images to be taken around the model by a moving camera (Aitpayev and Gaber, 2012). Alternatively, a large number of cameras in a capture stage can collect the required images at one instant (Esteban and Schmitt, 2004). Surface reconstruction using multi-view stereo infers depth by finding matching neighborhoods in the multiple images (Steve et al., 2006). When there is a need to capture both the geometry and textures of the avatar, as well as the motion of the model, a large set of cameras can also capture a large set of images that covers most, but not all, of the model surface at each time frame; this is sometimes referred to as Fusion4D or volumetric capture (Dou et al., 2016; Orts-Escolano et al., 2016). More details on volumetric performance capture are presented in section 4.

Figure 3. A Microsoft Rocketbox avatar mesh with less than 10,000 triangle polygons with two texture modes: only diffuse and diffuse, specular and normal (bump mapping). The models include multiple Levels of Detail (LODs) which can be optionally used for performance optimization (on the right).

Special care should be taken when modeling avatar hair. While hair may be an important recognizable feature of a person, it is notoriously hard to scan and model due to its fine structure and reflectance properties. Tools such as those suggested by Wei et al. (2005) use visible hair features as seen by multiple images capturing the head from multiple directions, to fill a volume similar to the image. However, hair and translucent clothing remain a research challenge.

Recent deep learning methods, also using retrieved data, can be used at many stages of the avatar creation process. Some methods have been successfully used to create avatars from pictures by recreating full 3D meshes from a photo (Hu et al., 2017; Saito et al., 2019), to build meshes from multiple cameras (Collet et al., 2015; Guo et al., 2019), to reduce the generated artifacts (Blanz and Vetter, 1999; Ichim et al., 2015), as well as to improve rigging (Weng et al., 2019). Deep learning methods can also generate completely new avatars that are not representations of existing people, by using adversarial networks (Karras et al., 2019).

Another way to reduce the effort needed to model an avatar of an actual person is to use a parametric avatar and fit the parameters to images or scans of the person.

Starting from an existing avatar guarantees that the fitting process will end up with a valid avatar model that can be rendered and animated correctly. Such methods are able to reduce the modeling process to a few images or even a single 2D image (Saito et al., 2016; Shysheya et al., 2019). They can be used to recover physically correct models that can be animated, including finer details and even fine and semi-transparent objects such as hair (Wei et al., 2019). This is at the cost of some differences compared to the actual person's geometry. There are many things these systems still cannot do well without additional artistic work: hair, teeth, and fine lines in the face, to name a few.

There are some commercial and non-commercial applications for parametric avatars that provide some of these features, including Autodesk Character Generator, Mixamo/Adobe Fuse or iClone Character Creator.

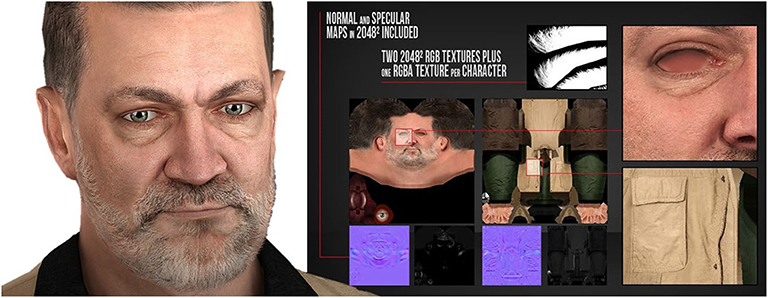

At the time of rendering, each vertex and face of the mesh is assigned a value. That value is computed from retrieving the textures in combination with the shaders that might incorporate enhancements and GPU mixing of multiple layers with information about specular, albedo, normal mapping, and transparency among other material properties. While the specular map states where the reflection should and should not appear, the albedo is very similar to a diffuse map, but with one extra benefit: all the shadows and highlights have been removed. The normal map is used to add details without using more polygons; the computer graphics simulates high-detail bumps and dents using a bitmap image.

Therefore, texturing the avatar is another challenge in the creation process (Figure 4). Adding textures requires specific skills as the vertices and unwrapping are often based on real images that might also need additional processing, but on some occasions this can be automated.

Figure 4. A close-up of an avatar face from Microsoft Rocketbox and corresponding set of textures that are mapped to the avatar mesh to produce the best possible appearance. Textures need to be mapped per each vertex and can contain information about the diffuse, specular, normal, and transparency colors of each vertex.

When captured independently, each image covers only part of the object, and there is a need to fuse all sources to a single coherent texture. One possibility is to blend available images into a merged texture (Wang et al., 2001; Baumberg, 2002). However, any misalignment or inaccurate geometry (such as the case of fitting a parametric model) might lead to ghosting and blurring artifacts when the textures are geometrically misaligned.

To reduce such artifacts, texturing has been addressed as an image stitching problem (Lempitsky and Ivanov, 2007; Gal et al., 2010). This approach targets each surface triangle that is then projected onto the images from which it is visible, and the final texture is assigned entirely from one image in this set. The goal is to select the best texture source and to penalize mismatches across triangle boundaries. Lighting variations are corrected at post-processing using a piece-wise continuous function over the triangles.

A shader program runs in the graphics pipeline and determines how the computer will render each pixel of the screen based on the different texture images and the material and physical properties associated with the different objects. Shader programs are often used to control lighting and shading effects, and are programmed using shading languages such as GLSL (OpenGL Shading Language).

Shader programming also plays a very important role in applying all the additional textures (albedo, specular, bump mapping etc.). Most of the rendering engines will provide some basic shaders that will map the textures of the material assigned to the avatar.
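As a rough illustration of how a shader might combine the albedo, specular, and normal textures described above, the following sketch shades a single pixel with the Blinn-Phong model. It is written in Python rather than a shading language purely for readability, and it is not the shader shipped with any particular engine or with the Rocketbox library. The function names, the single white light, and the assumption that the normal map is already expressed in the same space as the light and view directions are all simplifications introduced here.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length (a zero vector is returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0 else tuple(v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def decode_normal(rgb):
    """Map a normal-map texel from the [0, 1] color range to a [-1, 1] direction."""
    return normalize(tuple(2.0 * c - 1.0 for c in rgb))

def shade(albedo, specular, normal_rgb, light_dir, view_dir, shininess=32.0):
    """Blinn-Phong shading of one pixel from its texture samples (single white light)."""
    n = decode_normal(normal_rgb)
    l, v = normalize(light_dir), normalize(view_dir)
    diffuse = max(dot(n, l), 0.0)
    if diffuse == 0.0:
        return (0.0, 0.0, 0.0)  # surface faces away from the light
    half = normalize(tuple(a + b for a, b in zip(l, v)))
    # The specular map scales the highlight, so it only appears where the map allows it.
    highlight = specular * max(dot(n, half), 0.0) ** shininess
    return tuple(min(1.0, c * diffuse + highlight) for c in albedo)

flat = (0.5, 0.5, 1.0)  # texel encoding the unperturbed normal (0, 0, 1)
skin = (0.8, 0.6, 0.5)  # hypothetical albedo sample
matte = shade(skin, 0.0, flat, light_dir=(0, 0, 1), view_dir=(0, 0, 1))
glossy = shade(skin, 0.2, flat, light_dir=(0, 0, 1), view_dir=(0, 0, 1))
```

With the specular sample set to zero the pixel keeps its albedo color under head-on lighting, while a non-zero specular sample adds a highlight on top of it.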

A key step in most animation systems is to attach a hierarchy of bones to the mesh in a process called rigging. The intention is that animations are defined by moving the bones, not the individual vertices. Therefore during rigging, each vertex of the mesh is attached to one or more bones. If a vertex is attached to one bone, it is either rigidly attached to that bone or has a fall-off region of influence. Alternatively, if a vertex is attached to multiple bones, then the effect of each bone on the vertex's position is defined by weights. This allows a mesh to interpolate smoothly as the bones move. Vertices affected by multiple bones are typical in the joint sections.
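One common way to realize this weighted attachment is linear blend skinning, in which each vertex is moved by every bone that influences it and the results are averaged by weight. The sketch below is a minimal 2D version under that assumption; the bone names, the `rotation_about` helper, and the toy arm are hypothetical and are not taken from any specific rigging tool.

```python
import math

def rotation_about(pivot, angle):
    """Return a function that rotates a 2D point about `pivot` by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    px, py = pivot

    def apply(p):
        x, y = p[0] - px, p[1] - py
        return (px + c * x - s * y, py + s * x + c * y)

    return apply

def skin_vertex(rest_position, bone_transforms, weights):
    """Linear blend skinning: weighted sum of the vertex as moved by each bone."""
    assert abs(sum(weights.values()) - 1.0) < 1e-9, "weights must sum to 1"
    x = y = 0.0
    for bone, w in weights.items():
        bx, by = bone_transforms[bone](rest_position)
        x += w * bx
        y += w * by
    return (x, y)

# Hypothetical two-bone arm: the upper arm stays still while the
# forearm bends 90 degrees around the elbow located at (1, 0).
bones = {
    "upper_arm": rotation_about((0.0, 0.0), 0.0),
    "forearm": rotation_about((1.0, 0.0), math.pi / 2),
}

# A vertex far from the joint follows a single bone rigidly.
rigid_vertex = skin_vertex((2.0, 0.0), bones, {"forearm": 1.0})
# A vertex near the elbow is shared by both bones and lands in between.
joint_vertex = skin_vertex((1.2, 0.0), bones, {"upper_arm": 0.5, "forearm": 0.5})
```

The rigid vertex ends up at roughly (1, 1), while the shared vertex lands halfway between the positions the two bones would give it, which is what lets the mesh bend smoothly around the joint.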

Animators may also use much more complex rigs than a skeleton by combining, for example, different expressions (i.e., blendshapes) and movements into a combined trigger (e.g., happy expression).

Artists typically structure the bones to follow, crudely, the skeletal structure of the human or animal (Figure 5).

There are several pipelines available today for rigging a mesh so that it can be imported into a game engine such as Unreal or Unity. One common way is using the CATS plugin for Blender (Cat, 2020) that can export in a compatible manner with the Mecanim retargeting system used in Unity. Another method is to import the mesh into Maya or another 3D design program and rig it by hand, or alternatively, use Adobe Fuse (Fus, 2020) to rig the mesh. Nonetheless, each of these methods has its advantages and disadvantages. As with any professional creation tool, they have steep learning curves in becoming familiar with the user interfaces and with all of the foibles of misplacing bones in the mesh that can lead to unnatural poses. Although with the CATS plugin and Fuse the placement of bones is automated, if they are not working initially it can be difficult to fix issues.

Mixamo (Mix, 2020) makes rigging and animation easier for artists and developers. Users upload the mesh of a character and then place joint locators (wrists, elbows, knees, and groin) by following the onscreen instructions. Mixamo is not only an auto-rigging software but also provides a character library.

Independently of the tool used, the main problems usually encountered during the animation of avatars are caused by various interdependent design steps in the 3D character creation process. For example, poor deformation can come from bad placement of the bones/joints, badly structured mesh polygons, incorrect weighting of mesh vertices to their bones or non-fitting animations. Even for an avatar model that looks extremely realistic in a static pose, bad deformation/rigging would lead to a significant loss of plausibility and immersion in the virtual scenario as soon as the avatar moves its body. In fact, if rigging is not done carefully, avatars can exhibit pinched joints or unnatural body poses when animated. This depends on the level of sophistication of the overall process, weight definition, deformation specs, and kinematic solver. The solver software can also create such effects.

Overall, creating a high quality rig is not trivial. There is a trend toward easier tools and automatic systems for rigging meshes and avatars (Baran and Popović, 2007; Feng et al., 2015). They will be easier and more accessible as the technology evolves but having access to professional libraries of avatars, such as Microsoft Rocketbox, can simplify production and allow researchers to focus on animating and controlling avatars at a high level rather than at the level of mesh movement.

While bones are intuitive control systems for bodies, for the face, bones are not created analogously to real bones, but rather to pull and push on different mesh parts of the face. While bones can be used for face animation on their own, a common alternative to bone-based facial animation is to use blendshape animation (Vinayagamoorthy et al., 2006; Lewis et al., 2014).

Blendshapes are variants of an original mesh with each variant representing a different non-rigid deformation or, in this context, a different isolated facial expression. The meshes have the same number of vertices and the same topology. Facial animation is created as a linear combination of blendshapes. At key instances in time, blendshapes are combined as a weighted sum into a keypose mesh. Different types of algebraic methods are used to interpolate between keyposes for all frames of the animation (Lewis et al., 2014). For example, one can select 10% of a smile and 100% of a left eye blink, and the system would combine both as in Figure 6. Blendshapes can then be considered as the units of facial expressions and, although they are seldom followed, standards have been proposed, such as the Facial Action Coding System (FACS) by Ekman and Friesen (1976). The number of blendshapes in facial rigs varies. For example, some facial rigs use 19 blendshapes plus neutral (Cao et al., 2013). However, if facial expressions have more complex semantics the sliders can reach much higher numbers, and 45 or more poses are often used (Orvalho et al., 2012). For reference, high-end cinematic quality facial rigs often require hundreds of blendshapes to fully represent subtle deformations (the Gollum character in the Lord of the Rings movies had over 600 blendshapes for the face).
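The weighted combination described above can be sketched in a few lines. The example below uses the delta formulation, where each blendshape contributes its offset from the neutral mesh scaled by its weight; this is one common convention and is assumed here rather than taken from the paper. The three-vertex "face" and the shape names are hypothetical toy data.

```python
def blend(neutral, blendshapes, weights):
    """Return neutral + sum_k w_k * (shape_k - neutral), vertex by vertex."""
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        shape = blendshapes[name]
        assert len(shape) == len(neutral), "blendshapes must share the neutral topology"
        for i, (sv, nv) in enumerate(zip(shape, neutral)):
            for axis in range(3):
                result[i][axis] += w * (sv[axis] - nv[axis])
    return [tuple(v) for v in result]

def lerp_weights(key_a, key_b, t):
    """Linearly interpolate two keypose weight sets for an in-between frame."""
    names = set(key_a) | set(key_b)
    return {n: (1.0 - t) * key_a.get(n, 0.0) + t * key_b.get(n, 0.0) for n in names}

# Toy mesh with three vertices: mouth corner, left upper eyelid, chin.
neutral = [(1.0, 0.0, 0.0), (0.5, 2.0, 0.0), (0.0, -1.0, 0.0)]
blendshapes = {
    "smile": [(1.2, 0.5, 0.0), (0.5, 2.0, 0.0), (0.0, -1.0, 0.0)],
    "blink_left": [(1.0, 0.0, 0.0), (0.5, 1.8, 0.0), (0.0, -1.0, 0.0)],
}

# 10% of a smile combined with 100% of a left eye blink.
face = blend(neutral, blendshapes, {"smile": 0.10, "blink_left": 1.0})

# Halfway between a neutral keypose and a full-smile keypose.
halfway = lerp_weights({"smile": 0.0}, {"smile": 1.0}, 0.5)
```

Because every blendshape shares the topology of the neutral mesh, the combination reduces to per-vertex arithmetic, and interpolating the weights between keyposes yields the in-between frames. Linear interpolation is only one of the possible schemes.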

Figure 6. One of the Microsoft Rocketbox avatars—Blendshapes by Pan et al. (2014).

Blendshapes are mostly used for faces because (i) they capture well non-rigid deformations common for the face as well as small details that might not be represented by a skeleton model, and (ii) the physical range of motion in the face is limited, and in many instances can be triggered by emotions or particular facial expressions that can be later merged with weights (Joshi et al., 2006).

Blendshapes can be designed manually by artists but also captured using camera tracking systems (RGB-D) from an actor and then blended through parameters at the time of rendering (Casas et al., 2016). In production settings, hybrid facial rigs based on both blendshapes and bones are sometimes used.

At run-time, rigged avatars can be driven using pre-recorded animations or procedural programs to create movements during the VR/AR experience (Roth et al., 2019). A self-avatar can be animated to follow real-time motion tracking of the participants. The fidelity of this type of animation depends on the number of joints being captured and the techniques used to do extrapolations and heuristics between those joints (Spanlang et al., 2014).

VR poses a unique challenge for animating avatars that are user driven, as this needs to happen at low latency, so there is little opportunity for sophisticated animation control. It is particularly tricky if the avatar is used as a replacement for the user's unseen body. In such cases, to maximize the feeling of body ownership of the user, VR should use a low latency motion capture of the user's body and animate the avatar in the body's place. Due to limitations of most commercial VR systems, the capture of the body is typically restricted to measuring the 6 degrees of freedom of two controllers and the HMD. From these, the locations of the two hands and head can be determined, and other techniques, such as inverse kinematics, can be used to infer the movements of body parts that are not actually tracked.

In recent years some systems have offered additional sensors such as finger tracking around the hand-held controllers, eye tracking in the HMD, and optional stand-alone trackers. Research is examining the use of additional sensors and tracking systems such as external (Spanlang et al., 2014; Cao et al., 2017; Mehta et al., 2017) or wearable cameras (Ahuja et al., 2019) for sampling more information about the user's pose and perhaps in the future we may see full-body sensing.

Motion capture is the process of sensing a person's pose and movement. Pose is usually represented by defining a skeletal structure, and then giving for each joint its 3 or 6 degrees of freedom transformation (rotations and sometimes position) from its parent in the skeletal structure. A common technique for motion capture has the actor wearing easily recognized markers such as retro-reflective markers or active markers that are observed by multiple high-speed cameras (Ma et al., 2006). Optitrack (Opt, 2020) and Vicon (Vic, 2020) are two commercial motion capture systems that are commonly used in animation production and human-computer interaction research (Figure 7).
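To make the parent-relative pose representation concrete, the sketch below stores one rotation per joint relative to its parent and accumulates these down the hierarchy to obtain world positions (forward kinematics). It is a planar simplification with one rotational degree of freedom per joint; the skeleton layout, bone lengths, and joint names are hypothetical and do not correspond to any capture system's format.

```python
import math

# Hypothetical planar skeleton: joint -> (parent, offset from parent in the parent's frame).
# Parents must be listed before their children.
SKELETON = {
    "shoulder": (None, (0.0, 0.0)),
    "elbow": ("shoulder", (0.3, 0.0)),
    "wrist": ("elbow", (0.25, 0.0)),
}

def world_positions(skeleton, local_angles):
    """Accumulate each joint's local rotation down the hierarchy (forward kinematics)."""
    world = {}  # joint -> (x, y, accumulated rotation)
    for joint, (parent, offset) in skeleton.items():
        if parent is None:
            px, py, parent_angle = 0.0, 0.0, 0.0
        else:
            px, py, parent_angle = world[parent]
        c, s = math.cos(parent_angle), math.sin(parent_angle)
        # The offset is expressed in the parent's frame, so rotate it by the parent's rotation.
        x = px + c * offset[0] - s * offset[1]
        y = py + s * offset[0] + c * offset[1]
        world[joint] = (x, y, parent_angle + local_angles.get(joint, 0.0))
    return {joint: (x, y) for joint, (x, y, _) in world.items()}

# Pose: raise the upper arm by 90 degrees, then bend the elbow back by 90 degrees.
pose = {"shoulder": math.pi / 2, "elbow": -math.pi / 2}
positions = world_positions(SKELETON, pose)
```

In this pose the elbow sits directly above the shoulder and the forearm points forward again, so the wrist ends up at roughly (0.25, 0.3). A full 3D skeleton would store a rotation matrix or quaternion per joint instead of a single angle, but the accumulation from parent to child works the same way.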

Figure 7. Body motion capture via OptiTrack motion capture system (Opt, 2020) and the corresponding mapping to one of the Microsoft Rocketbox avatars in a singing experiment by Steed et al. (2016a).

Another set of techniques uses computer vision to track a person's movement without markers, such as using the Microsoft Kinect sensor (Wei et al., 2012), and more recently RGB cameras (Shiratori et al., 2011; Ahuja et al., 2019), RF reflection (Zhao et al., 2018a,b), and capacitive sensing (Zheng et al., 2018). A final set of techniques uses worn sensors to recover the user's motion with no external sensors. Examples include inertial measurement units (IMUs) (Ha et al., 2011), wearable cameras (Shiratori et al., 2011) or mechanical suits such as METAmotion's Gypsy (Met, 2020).

For facial motion capture we have similar options to the ones proposed in the full body setup: either marker-less with a camera and computer vision tracking algorithms, such as Faceshift (Bouaziz et al., 2013) (Figure 8), or using marker-based systems such as Optitrack.

Figure 8. One of the Microsoft Rocketbox avatars animated with markerless facial motion capture via Faceshift by Steed et al. (2016a).

However, the problem with facial animation is that, when it is needed in real time, users might be wearing HMDs that occlude their real face, and therefore capturing their expressions is very hard (Lou et al., 2019). Some researchers have shown that it is possible to add sensors to the HMDs to record facial expressions (Li et al., 2015). The BinaryVR face tracking device attaches to the HMD and tracks facial expressions (Bin, 2020).

For animating an avatar, translations and rotations of each of the bones are changed to match the desired pose at an instant in time. If the motions have been prerecorded, then this constitutes a sort of playback. However, data-driven approaches can be much more sophisticated than simple playback.

Independently of whether the animation is performed in real-time or using pre-recorded animations, in both cases at some point there has been a motion capture that either sensed the full body and created prerecorded animations, or a subset of body parts to later do motion retargeting. If it is done offline, the animations are then saved and accessed during runtime. Alternatively, if the motion capture is done in real-time then this can be used directly to animate the avatars that match the users' motions.

When each joint of the participant can be recorded in real-time through a full body motion capturing system (Spanlang et al., 2014), the joint data can be transferred directly to the avatar (Spanlang et al., 2013). For best performance this assumes that the avatar is resized to the size of the participant. In that situation participants achieve the maximum level of agency over the self-avatar and rapidly embody it (Slater et al., 2010a). However, this requires wearing a motion capturing suit or having outside sensors monitoring the motions of the users, which can be expensive and/or hard to set up (Spanlang et al., 2014). Since direct mapping between the tracking and avatar skeletons can sometimes be non-trivial, intermediate solvers are typically put in place in most approaches today (Spanlang et al., 2014).

Most commercial VR systems capture only the rotation and position of the HMD and two hand controllers. In some cases the hands are well-tracked with finger capacitive sensing around the controller. Some new systems are introducing more sensors such as eye tracking. However, in most systems, most of the body degrees of freedom are not monitored. Even if only using partial tracking (Badler et al., 1993) it is possible to infer a good approximation of the full body, but the end-effectors need to be well-tracked. Nevertheless, many VR applications limit the rendering of the user's body to hands only. Although such representations may suffice for many tasks, this lowers the realism of the virtual scenario and may reduce the level of embodiment of the user (De Vignemont, 2011) and even have a further impact on their cognitive load (Steed et al., 2016b).

A common technique to generate a complete avatar motion given the limited sensing of the body motion is Inverse Kinematics (IK). The position and orientations of avatar joints that are not monitored are estimated given the known degrees of freedom. IK originated from the field of robotics and exploits the inherent movement limitations of each of the skeleton's joints to retrieve a plausible pose (Roth et al., 2016; Aristidou et al., 2018; Parger et al., 2018). The pose fits the position and direction of the sensed data and lies within the body's possible poses. IK has to deal with the fact that many joint configurations can satisfy the same sensed data, so the mapping from tracked points to a pose is not always one-to-one. Additionally, it can do a reasonable job in reconstructing the motions of joints that lie between ground truth nodes, but does a worse job in extrapolating to other joints; for example, inferring the leg motions from the hand trackers is harder than inferring the elbow motions.
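To make the idea concrete, the sketch below solves the simplest textbook case: an analytic two-bone chain (for instance shoulder, elbow, hand) in a plane, using the law of cosines. It is an illustrative assumption rather than the solver used in any of the cited works; real full-body solvers operate in 3D, handle per-joint limits, and must pick among several valid solutions. The function names and the choice of bend direction are hypothetical.

```python
import math

def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Planar two-bone IK for a chain rooted at the origin.

    Returns (shoulder_angle, elbow_bend) in radians, where elbow_bend = 0
    means a fully straight arm. Only one of the two mirror solutions is
    returned (an arbitrary choice in this sketch).
    """
    dist = math.hypot(target_x, target_y)
    # Clamp the distance to what the chain can physically reach.
    eps = 1e-9
    dist = max(abs(upper_len - lower_len) + eps,
               min(dist, upper_len + lower_len - eps))
    # Law of cosines: interior angle of the triangle at the elbow.
    cos_elbow = (upper_len ** 2 + lower_len ** 2 - dist ** 2) / (2 * upper_len * lower_len)
    elbow_interior = math.acos(max(-1.0, min(1.0, cos_elbow)))
    elbow_bend = math.pi - elbow_interior
    # Angle between the upper bone and the root-to-target line.
    cos_root = (upper_len ** 2 + dist ** 2 - lower_len ** 2) / (2 * upper_len * dist)
    root_offset = math.acos(max(-1.0, min(1.0, cos_root)))
    shoulder_angle = math.atan2(target_y, target_x) - root_offset
    return shoulder_angle, elbow_bend

def forward(shoulder_angle, elbow_bend, upper_len, lower_len):
    """Forward kinematics, used here to check the IK result."""
    ex = upper_len * math.cos(shoulder_angle)
    ey = upper_len * math.sin(shoulder_angle)
    hx = ex + lower_len * math.cos(shoulder_angle + elbow_bend)
    hy = ey + lower_len * math.sin(shoulder_angle + elbow_bend)
    return (ex, ey), (hx, hy)
```

The elbow here sits between two known points (shoulder and hand), which is the favorable case described above. No comparable closed form exists for inferring the legs from hand trackers alone.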

Most applications of avatars in games and movie productions use a mix of prerecorded motion captures of actors and professionals (Moeslund et al., 2006) and artists' manual animations, together with AI, algorithmic and procedural animations. The main focus of these approaches is the generation of performances that are believable, expressive, and effective. Added animation aims at minimizing artifacts that can result due to a lack of small motions that may exist during a real person's motions, such as clothes bending, underlying facial movements (Li et al., 2015) and others.

Prerecorded animations alone can be a bit limiting in the interactivity delivered by the VR, especially if they are driving a first-person embodied avatar where the user wants to retain agency over the body. For that reason, prerecorded animations are generally not used for self-avatars; however, recent research has shown that they can be used to trigger enfacement illusions and realistic underlying facial movements (Gonzalez-Franco et al., 2020b).

Indeed, the recombination of existing animations can become quite complex and procedural or deep learning systems can be in charge of generating the correct animations for each scene.

A combination of procedural animation with data-driven animation is a common strategy for real-time systems. This approach also allows compensation for incomplete sensing data in real-time animation, especially for self-avatars.

In some scenarios the procedural animation systems can use the context of the application itself to animate the avatars. For example, in a driving application the legs can be rendered sitting in the driver seat and performing actions consistent with the driving inputs, even if the user is not actually in a correct driving position.

In other scenarios the approach is more interactive and depends on the user input. It can incorporate tracking information from the user motion capture and start to complete parts of the self-avatar or their motions if they are not complete. This could be an improvement over traditional IK, in which a data-driven animation can incorporate the popularity of the sampled data and retrieve as a result the most likely pose within the whole range of possible motions of the IK (Lee et al., 2010).

Using data-driven machine learning algorithms the system can pick the most likely pose out of the known data given the limited capture information. In many cases this might result in a motion retargeting or blending scenario.
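A very reduced sketch of this idea is a nearest-neighbor lookup: the tracked features (for example, HMD and controller positions flattened into one vector) are compared against a database of previously captured poses, and the closest entries are blended. The database layout, the joint names, and the inverse-distance weighting are assumptions made for illustration. Blending joint angles linearly is itself a simplification, and the cited systems use considerably richer models.

```python
import math

def feature_distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def most_likely_pose(database, tracked, k=2):
    """Blend the k database poses whose features best match `tracked`.

    `database` is a list of (features, pose) pairs, where `pose` maps a
    (hypothetical) joint name to an angle in radians.
    """
    ranked = sorted(database, key=lambda entry: feature_distance(entry[0], tracked))[:k]
    # Closer samples get larger weights; the small constant avoids division by zero.
    weights = [1.0 / (feature_distance(features, tracked) + 1e-6) for features, _ in ranked]
    total = sum(weights)
    blended = {}
    for weight, (_, pose) in zip(weights, ranked):
        for joint, angle in pose.items():
            blended[joint] = blended.get(joint, 0.0) + (weight / total) * angle
    return blended
```

With `k=1` this reduces to picking a single stored pose, while larger `k` corresponds to the blending scenario mentioned above.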

More recently, we have seen rigged avatars that can be trained through deep learning to perform certain motion actions, such as dribbling (Liu and Hodgins, 2018), adaptive walking and running (Holden et al., 2017), or physics behaviors (Peng et al., 2018). These are great tools for procedural driven avatars and can also solve some issues with direct manipulation.

However, with any such procedural animation, care needs to be taken that the animation doesn't stray too far from other plausible interpretations of the tracker input as discrepancies in representation of the user's body may reduce their sense of body ownership and agency (Padrao et al., 2016; Gonzalez-Franco et al., 2020a).

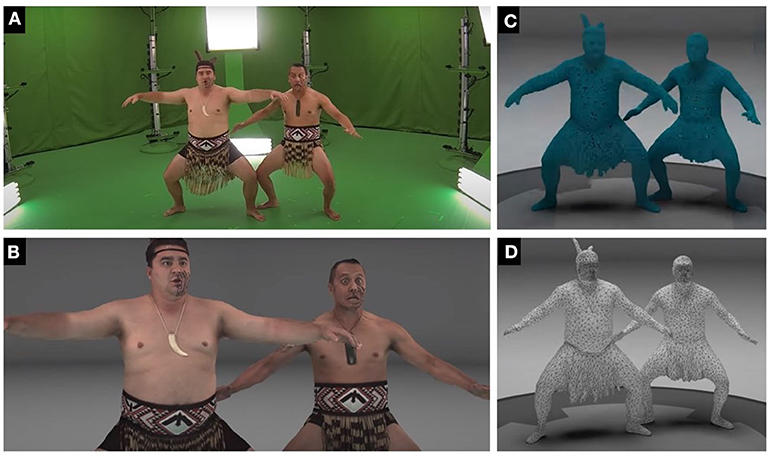

There are other forms of avatar creation beyond the pipeline defined previously. For example using volumetric capture, we can capture meshes and their animations without the need for rigging (Figure 9). Volumetric capturing can be considered an advanced form of scanning in which the external optical sensors (often numerous) allow a reconstruction of the whole point cloud and mesh of a real person and can record the movement of the person over time, essentially leading to a 3D video.

Figure 9. Two volumetric avatars captured in the Microsoft Mixed Reality studio (Collet et al., 2015). (A) Input setup. (B) Avatar output. (C) Scanned point cloud. (D) Processed mesh.

When we talk about volumetric avatars, we refer to different types of 3D representations, such as point clouds, meshes (Orts-Escolano et al., 2016), voxel grids (Loop et al., 2016), or light fields (Wang et al., 2017; Overbeck et al., 2018). However, the 3D triangle mesh is still one of the most common 3D representations that is used in current volumetric capture systems, mostly because GPUs are traditionally designed to render triangulated surface meshes. Moreover, from a temporal point of view, we can also distinguish between capturing a single frame, and then performing rigging on the single frame, or capturing a sequence of these 3D representations, which is usually known as volumetric video. Finally, volumetric avatars can also be categorized as those that are captured offline (Collet et al., 2015; Guo et al., 2019) and played back as volumetric streams of data or those that are being captured and streamed in real-time (Orts-Escolano et al., 2016).

Either real-time or offline, volumetric video capture is a complex process that involves many stages, from low-level hardware setups and camera calibration, to sophisticated machine learning and computer vision processing pipelines. A traditional volumetric capture pipeline includes the following steps: image acquisition, image preprocessing, depth estimation, 3D reconstruction, texture UV atlas parameterization, and data compression. Some additional steps are usually performed to remove flickering effects in the final volumetric video, such as mesh tracking (Guo et al., 2019) in the case of offline approaches or non-rigid fusion (Dou et al., 2016, 2017) in the case of real-time ones. More detailed information about state-of-the-art volumetric performance capture systems can be found in Guo et al. (2019), Collet et al. (2015), and Orts-Escolano et al. (2016).

The latest frontier in volumetric avatars has been for the computation to be performed in real-time, and this was achieved in the Holoportation system (Orts-Escolano et al., 2016). When these real-time systems are combined with mixed reality displays such as HoloLens, this technology also allows users to see, hear, and interact with remote participants in 3D as if they are actually present in the same physical space.

Both pre-recorded and real-time volumetric avatars offer a different level of realism that cannot be currently achieved by using rigging techniques. For example, volumetric avatars automatically capture object interaction, extremely realistic cloth motion capture and accurate facial expressions.

Real-time volumetric avatars can enable a more interactive communication with remote users, also allowing the user to interact and make eye contact with the other participants. However, these systems have to deal with a huge amount of computing, sacrificing the quality of the reconstructed avatar for a more interactive experience, which can also affect the perception of eye gaze or other facial cues (MacQuarrie and Steed, 2019). A good rigged avatar with facial animation can do an equal or better job for communication (Garau et al., 2003; Gonzalez-Franco et al., 2020b). Moreover, it is important to note that if the user is wearing an HMD while being captured, either a VR or an AR device, it will require additional processing to remove the headset in real-time (Frueh et al., 2017). For this purpose, eye-tracking cameras are often mounted within the headset, which allows in-painting of occluded areas of the face in a realistic way in the final rendered 3D model. Another common problem that real-time capture systems need to solve is latency. Volumetric captures tend to involve heavy computational processing and result in larger data sets. Thus, they require high bandwidth and this increases the latency between the participants in the experience.

Most recent techniques for generating real-time volumetric avatars are focusing on learning-based approaches. By leveraging neural rendering techniques these novel methods have achieved unprecedented results, enabling the modeling of view-dependent effects such as specularity and also correcting for imperfect geometry (Lombardi et al., 2018, 2019; Pandey et al., 2019). Compared to traditional graphics pipelines, these new methods require less computation and also can deal with a coarser geometry, which is usually used as a proxy for rendering the final 3D model. Most of these approaches are able to achieve compelling results, with substantially less infrastructure than previously required.

The Microsoft Rocketbox avatar library creation process deployed a lot of research and prototyping work into “base models” for male and female character meshes (Figure 10). These base models were already rigged and tested with various (preferably extreme) animations in an early stage of development. The base models were used as a starting point for creation of all character types later on so as to guarantee a high-quality standard and consistent specifications throughout the whole library. Optimization opportunities identified during the production phase of the library also flowed back into these base models. In order to be able to mix different heads with different bodies of the avatars more easily, one specific polygon edge around the neck area of the characters is identical in all avatars of the same gender.

Figure 10. Several of the 115 Microsoft Rocketbox rigged avatars released in the library that show a diversity in race, gender, and age, as well as attire and occupation.

UV Mapping was also predefined in the base meshes already. UV Mapping is the process of “unfolding” or “unwrapping” the mesh to a 2D coordinate system that maps onto the texture. To allow for mixing and matching texture elements of different characters from the library, many parts of the character UV coordinates were standardized; for example, the hands or the face. Therefore, it is possible to exchange face textures across different characters of the library with some knowledge of image editing and retouching. The UV Mapping of polygons that move or stretch significantly when animation is applied achieves a higher texture resolution to avoid blur effects (Figure 4).

Another important set of source data for the creation of the Microsoft Rocketbox library were photographs of real people that were taken in a special setup with photo studio softbox lights, a whitebox, and a turntable to guarantee neutral lighting of the photo material, which was used as a reference for modeling the meshes and as source material for creating the textures. Photos of people from many different ethnic groups, clothing styles, age classes, and so on, were taken in order to have high diversity across the library. However, different portions from the source material were mixed and strongly modified for the creation of the final avatars so that the avatars represent generic humans that do not exist in reality.

The models are available in multiple Levels of Detail (LODs) which can optionally be used for performance optimization for scenes with larger numbers of characters, or for mobile platforms. The LODs in the models include “hipoly” (10,000 triangles), “midpoly” (5,000 triangles), “lowpoly” (2,500 triangles), and “ultralowpoly” (500 triangles) levels (Figure 3). Textures are usually included with a resolution of 2048x2048 pixels, with one separate texture for the head and another one for the body. This means that the head texture has a higher detail level (pixel resolution per inch) than the body texture. A set of textures are then mapped to the avatar mesh to produce the best possible appearance. Textures need to be mapped to each vertex and can contain information about the diffuse, specular, or normal colors of each vertex (Figure 4).
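As an illustration of how the LODs could be used for crowded scenes, the sketch below picks the most detailed level whose total triangle count for all characters fits a given budget. The triangle counts are the ones listed above; the function name and the notion of a fixed per-scene triangle budget are assumptions for this example, not part of the library.

```python
# Triangle counts per character for each level of detail, highest detail first.
LOD_TRIANGLES = {
    "hipoly": 10_000,
    "midpoly": 5_000,
    "lowpoly": 2_500,
    "ultralowpoly": 500,
}

def pick_lod(num_characters, triangle_budget):
    """Return the most detailed LOD that keeps the scene within budget.

    `triangle_budget` is a hypothetical per-scene limit chosen by the
    application. If even the coarsest level exceeds it, that level is
    returned anyway.
    """
    # Dictionaries preserve insertion order, so this goes from fine to coarse.
    for name, triangles in LOD_TRIANGLES.items():
        if num_characters * triangles <= triangle_budget:
            return name
    return "ultralowpoly"
```

For example, with a budget of 300,000 triangles, ten characters can all use “hipoly”, whereas a crowd of one hundred would fall back to “lowpoly”. A per-character choice based on camera distance is a common alternative design.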

All in all, the Rocketbox avatars provide a reliable rigged skeletal system with 56 body bones, that despite not being procedurally generated can be used for procedural animation thanks to the rigged system.

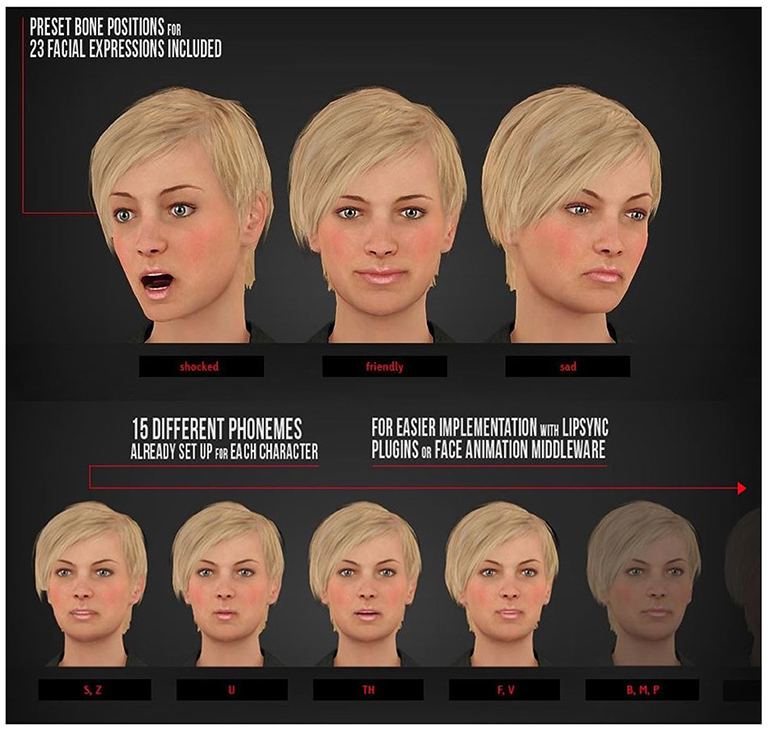

Facial animations for the Microsoft Rocketbox library animation sets (Figure 11) were created through generating different facial expressions manually by setting up and saving different face bone constellations (Figure 5). Additionally, visemes (face bone positions) for all important phonemes (speech-sounds) were created. These facial expressions were saved as poses and used for keyframe animation of the face adapted to the body movement of the respective body animation. The usage of animation sets across all characters of the library without retargeting the animations required some general conditions to be accounted for during creation of the Rocketbox HD library. The bones in the face only translate in x, y, z and do not rotate. Therefore, facial animation also looks correct on character variants with face elements such as eyebrows or lips positioned differently from the base mesh. The only exception is the bones for the eyeballs, which have rotation features only; this requires the eyeballs of all avatars that belong to the same class (e.g., adult female) to be at the same position in 3D space.
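One way to see why translation-only face bones transfer across character variants is to treat an expression as a set of per-bone offsets that are added to each avatar's own rest positions. The sketch below assumes such an offset representation; the bone names and the optional blending weight are hypothetical and do not reflect the internal storage of the Rocketbox animation files.

```python
def apply_expression(neutral_bones, expression_offsets, weight=1.0):
    """Pose translation-only face bones.

    `neutral_bones` maps a bone name to its rest position (x, y, z) for a
    given avatar. `expression_offsets` maps a bone name to the displacement
    (dx, dy, dz) that defines the expression. `weight` in [0, 1] blends
    between the neutral face (0) and the full expression (1).
    """
    posed = {}
    for bone, rest in neutral_bones.items():
        dx, dy, dz = expression_offsets.get(bone, (0.0, 0.0, 0.0))
        posed[bone] = (
            rest[0] + weight * dx,
            rest[1] + weight * dy,
            rest[2] + weight * dz,
        )
    return posed
```

Because only displacements are applied, the same offsets raise the eyebrows of an avatar whose brows sit slightly higher or lower than on the base mesh without pulling them back to the base positions.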

Figure 11. (Top) 3 of the 23 facial expressions included in the Microsoft Rocketbox avatars together with (Bottom) 5 of the 15 phonemes already set up in each character for easier implementation with lipsync plugins and face animation software. Underlying these are 28 facial bones that allow researchers to create their own expressions.

When compared to the other available avatar libraries and tools, the Microsoft Rocketbox avatars present some limitations (Table 1).

The Rocketbox avatars do not use blendshapes for facial animation but bones, meaning that it is up to the users to create their own blendshapes on top, as shown in Figure 11. However, creating blendshapes is a process that needs skill and cannot be replicated easily to other avatars; it has to be performed manually for each avatar (or scripted).

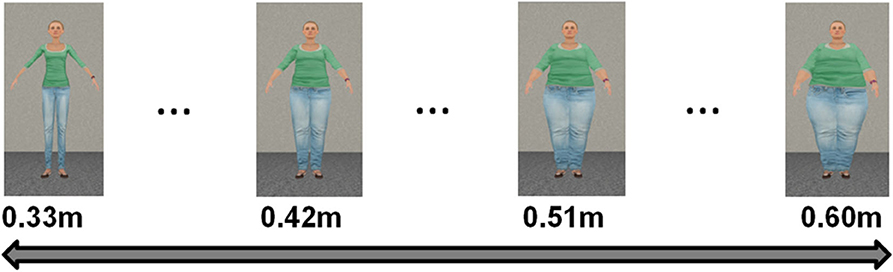

The library also shows limitations for extreme scaling, which can result in errors regarding the predefined skeleton skin weights and additionally result in unrealistic proportions (see Figure 12 where the texture stretches unrealistically). Other limitations are the exchangeability of clothes, hair styles, face looks, gender, and so on. Nevertheless, given that the avatars use a base model, this simplifies the access to and editing of avatar shapes and clothes. For cases where the avatars are required to look like the participants, or in which there is a need to adapt the body sizes of participants, there might be a way to either change the texture of the facial map through a transformation, modify the mesh, or directly stitch a different mesh to the face of a given library avatar. Initiatives like the virtual caliper (Pujades et al., 2019) could help in adapting the current avatars; however, these possibilities are not straightforward with the release. Future work on the library would also need to bring easier tools for animation for general use, as well as tools to facilitate the exchangeability of clothes based on the generic model, as well as easier blendshape creation.

Figure 12. A Microsoft Rocketbox avatar with modified mesh being used in a body weight study at Max Planck by Piryankova et al. (2014).

The first experiences with avatars and social VR were implemented in the 1980s in the context of the technology startup VPL Research. While many of the results were reported in the popular press (Lanier, 2001), that early work was also the germ of what later became a deep engagement from the academic community into exploring avatars and body representation illusions as well as their impact on human behavior (Lanier et al., 1988; Lanier, 1990). In that regard VPL Research not only did the initial explorations on avatar interactions but also provided crucial VR instrumentation for many laboratories and pioneered a whole industry in the headsets, haptics and computer graphics arenas. Many of the possibilities of avatars and VR for social and somatic interactions were explored (Blanchard et al., 1990) and were later formalized empirically by the scientific community as presented in this section.

Researchers in diverse fields have since explored the impact of avatars on human responses. The underlying aim of the research in many of the avatar scientific studies is to better understand the behavioral, neural, and multisensory mechanisms involved in shaping the facets of humanness, and to further explore potential applications for therapeutic treatments, training, education, and entertainment. A significant number of these studies have used the Microsoft Rocketbox avatars (with over 145 papers and 5307 citations, see the Supplementary Materials for the full list). In this paper, at least 49 citations (representing 25% of the citations) used the Rocketbox avatars, and all are referenced in the following section about applications, where they represent almost 50% of all the citations in this section. Considering that the Rocketbox avatars started being used around 2009 (see our list of papers using the avatars in Supplementary Materials) and that 23 of the cited papers in this section were published before 2009, we can consider that 60% of the research referenced in this section has been carried out with the Rocketbox avatars. The remaining citations are included to provide further context for the importance of the research in this area, as well as of the potential applications of the avatars that range from simulations to entertainment to embodiment.

Indeed, a substantial focus of interest has been on how using and embodying self-avatars can change human perception, cognition and behavior. In fact, the possibility of making a person, even if only temporarily, “live in the skin” of a virtual avatar has tremendously widened the limit of experimental research with people, allowing scientific questions to be addressed that would not have been possible otherwise.

In the past two decades there has been an explosion of interest in the field of cognitive neuroscience on how the brain represents the body, with a large part of the related research making use of body illusions. A one-page paper in Nature (Botvinick and Cohen, 1998), showed that a rubber hand can be incorporated into the body representation of a person simply by application of multisensory stimulation. This rubber-hand illusion occurs when a rubber hand in an anatomically plausible position is seen to be tactilely stimulated synchronously with the corresponding real out-of-sight hand. For most people, after about 1 minute of this stimulation and visuo-tactile correlation, proprioception shifts to the rubber hand, and if the rubber hand is threatened there is a strong physiological response (Armel and Ramachandran, 2003) and corresponding brain activation (Ehrsson et al., 2007). This research provided convincing evidence of body ownership illusions. The setup has since been reproduced with a virtual arm in virtual reality (Slater et al., 2009), also with appropriate brain activation to a threat (González-Franco et al., 2014).

Petkova and Ehrsson (2008) showed how the techniques used for the rubber-hand illusion could be applied to the whole body thus producing a “body ownership illusion” of a mannequin's body and this also has been reproduced in VR (Slater et al., 2010b; Yuan and Steed, 2010). First-person perspective over the virtual body that visually substitutes the real body in VR is a prerequisite for this type of body ownership illusion (Petkova et al., 2011; Maselli and Slater, 2014). The illusion induced by visuomotor synchrony, where the body moves synchronously with the movements of the person, is typically more vivid than those triggered by visuotactile synchrony, where the virtual body is passively touched synchronously with real touch (Gonzalez-Franco et al., 2010; Sanchez-Vives et al., 2010; Kokkinara and Slater, 2014). Illusory body ownership over a virtual avatar, however, can be also experienced in static conditions, when the virtual body is seen in spatial alignment with the real body, a condition that could be easily met using immersive stereoscopic VR displays (Maselli and Slater, 2013; Gonzalez-Franco et al., 2020a). The visuomotor route to body ownership is a powerful one, integrating visual and proprioceptive sensory inputs in correlation with motor outputs. This route is uniquely suited to self-avatars in VR, since it requires the technical capabilities of the forms of body tracking and rendering discussed in the animation section. Additional correlated modalities (passive or active touch, sound) can enhance the phenomenon.

Several studies have focused on casting the illusions of body ownership into a coherent theoretical framework. Most accounts have been discussed in the context of the rubber-hand illusion and point to multisensory integration as the main key underlying process (Graziano and Botvinick, 2002; Makin et al., 2008; Ehrsson, 2012). More recently, computational models developed in the framework of causal inference (Körding et al., 2007; Shams and Beierholm, 2010) described the onset of illusory ownership as the result of an “inference process” in which the brain associates all the available sensory information about the body (visual cues from the virtual body, together with somatosensory or proprioceptive cues from the physical body) to a single origin: the own body (Kilteni et al., 2015; Samad et al., 2015). According to these accounts, all visual inputs associated with the virtual body (e.g., its aspect, the control over it, the interactions that it has with the surrounding environment) are processed as if emanating from the own body. As such, these could have profound implications on perception and behavior.

What is even more interesting than the illusion of ownership over a virtual body is indeed the consequences it can have for changed physiology, behaviors, attitudes, and cognition.

Self-perception theory argues that people often infer their own attitudes and beliefs from observing themselves (for example, their facial expressions or styles of dress) as if from a third party (Bem, 1972). Could avatars have a similar effect? Early work focused on what Yee and Bailenson called “The Proteus Effect,” the notion that one's avatar would influence the behavior of the person embodied in it. The first study examined the consequences of embodying older avatars, where college students were embodied in either age-appropriate avatars or elderly ones (Yee and Bailenson, 2007). The results showed that negative stereotyping toward the elderly was reduced when participants were placed into older avatars compared with those placed into young avatars. Subsequent work extended this finding to other domains. For example, people embodied in taller avatars negotiated more aggressively than those embodied in shorter ones, and attractive avatars caused more self-disclosure and closer interpersonal distance to a confederate than unattractive ones during social interaction (Yee and Bailenson, 2007). The embodiment effects of both height and attractiveness in VR extended to subsequent interactions outside of the laboratory, predicting players' performance in online games, but also face-to-face interactions (Yee et al., 2009). Another study from the same laboratory has also shown how embodying avatars of different races can modulate implicit racial prejudice (Groom et al., 2009). In this case, Caucasian participants embodying “Black” avatars demonstrated an increased level of racial prejudice favoring Whites, with respect to participants embodying “White” avatars, in the context of a job interview. These effects of embodying an avatar only occurred when the avatar's movements were controlled by the user; simply observing an avatar was not sufficient to cause changes in social behavior (Yee and Bailenson, 2009).

While these pioneering studies give a solid foundation to understanding the impact of self-avatars on social behavior, they relied on fairly simple technology at the time. The avatars were only tracked with head rotations and the body translated as a unit, while the latency and frame-rate were coarser than today's standards. Also, these studies entailed a third-person view of self-avatars, either reflected in a mirror from a “disembodied” perspective (i.e., participants could see the avatar body rendered in a mirror as from a first-person perspective, but looking down they would see no virtual body), or from a third-person perspective as in an on-line community. More recent work has demonstrated that embodying life-size virtual avatars from a first-person perspective, and undergoing the illusion of having one's own physical body (concealed from view) substituted by the virtual body seen in its place and moving accordingly, could further leverage the impact of self-avatars on implicit attitudes, social behavior, and cognition (Maister et al., 2015).

Several studies have shown that in neutral or positive social circumstances embodiment of White people in a Black virtual body results in a reduction in implicit racial bias, measured using the Implicit Association Test (Peck et al., 2013). Similar results had been found with the rubber-hand illusion over a black rubber hand (Maister et al., 2013), and these results and explanations for them were discussed in Maister et al. (2015). Banakou et al. (2016) explored the impact of the number of exposures and the duration of the effect. The results of Peck et al. (2013) were replicated, but in Banakou et al. (2016) the racial-bias measure was taken one week after the final exposure in VR, suggesting the durability of the effect. These results stand in contrast to Groom et al. (2009), which had found an increase in the racial-bias IAT, as discussed above. Recent evidence suggests, however, that when the surrounding social situation is a negative one (in the case of Groom et al., 2009, a job interview) then the effect reverses. These results have been simulated through a neural network model (Bedder et al., 2019).

When adults are embodied in the body of a 5-year-old child with visuomotor synchrony they experience strong body ownership over the child body. As a result, they self-identify more with child-like attributes and see the surrounding world as larger (Banakou et al., 2013; Tajadura-Jiménez et al., 2017). However, when there is body ownership over an adult body of the same size as the child, the perceptual effects are significantly lower, suggesting that it is the form of the body that matters, not only the height. This result was also replicated (Tajadura-Jiménez et al., 2017).

Virtual embodiment allows us not only to experience having a different body, but to live through situations from a different perspective. One of these situations is the experience of a violent situation. In Seinfeld et al. (2018), men who were domestic violence offenders experienced a virtual domestic violent confrontation from the perspective of the female victim. Such a perspective has been found to modulate the brain network that encodes the bodily self (de Borst et al., 2020). Violent offenders often have deficits in emotion recognition; in the case of male offenders, there is a deficit in recognizing fear in the faces of women. This deficit was found to be reduced after embodiment in the female subject to domestic abuse by a virtual man (Seinfeld et al., 2018). Similarly, mothers of young children tend to improve in empathy toward their children after spending some time embodied as a child in interaction with a virtual mother (Hamilton-Giachritsis et al., 2018).

Embodying virtual avatars can further influence the engagement and performance on a given task or situation, depending on the perceived appropriateness of the embodied avatar for the task. For example, in a drumming task, participants showed more complex and articulated movement patterns when embodying a casually dressed avatar than when embodying a business man in a suit (Kilteni et al., 2013). Effects at the cognitive level have also been found. For example, participants embodied as Albert Einstein tend to perform better on a cognitive test than when embodied in another “ordinary” virtual body (Banakou et al., 2018). It has been shown that people embodied as Sigmund Freud tend to offer themselves better counseling than when embodied in a copy of their own body, or embodied as Freud with visuomotor asynchrony (Osimo et al., 2015; Slater et al., 2019). Moreover, being embodied as Lenin, the leader of the October 1917 Russian Revolution, in a crowd scene leads to people being more likely to follow up on information about the Russian Revolution (Slater et al., 2018). All these studies form a body of accumulated evidence of the power of embodying virtual avatars not only in modifying physiological responses and the perception of the world and of others, but also in modifying behavior and cognitive performance.

It has been largely demonstrated that embodying a virtual avatar affects the way bodily related stimuli are processed. Experimental research based on the rubber hand illusion paradigm (Botvinick and Cohen, 1998) provided robust evidence for the impact of illusory body ownership on the perception of bodily related stimuli (Folegatti et al., 2009; Mancini et al., 2011; Zopf et al., 2011). Following this tradition, the embodiment of virtual avatars was shown to affect different facets of perception, from tactile processing to pain perception and own body image.

When in an immersive VR scenario, embodying a life-size virtual avatar enhances the perception of touch delivered on a held object, with respect to having no body in VR (Gonzalez-Franco and Berger, 2019). It was also shown that experiencing ownership toward a virtual avatar modulates the temporal constraints for associating two independent sensory cues, visual and tactile, to the same coherent visuo-tactile event (Maselli et al., 2016; Gonzalez-Franco and Berger, 2019). Virtual embodiment could therefore grant a larger flexibility to spatiotemporal offsets with respect to the constraints that apply in the physical world or when having an embodied self-avatar in VR.

Embodiment can modify not only the perceived timing of sensory inputs (Berger and Gonzalez-Franco, 2018; Gonzalez-Franco and Berger, 2019), but also perception in other sensory modalities such as temperature (Llobera et al., 2013b) and pain (Llobera et al., 2013a). Several studies have demonstrated that virtual embodiment can modulate pain (Lenggenhager et al., 2010; Martini et al., 2014). In particular, it has been shown that pain perception is modulated by the visual appearance of the virtual body, including its color (Martini et al., 2013), shape (Matamala-Gomez et al., 2020), or level of transparency (Martini et al., 2015), as well as by the degree of spatial overlap between the real and the virtual bodies (Nierula et al., 2017). This is relevant for therapeutic applications in chronic pain (Matamala-Gomez et al., 2019b), although pain of different origins may require different manipulations of the embodied avatars (Matamala-Gomez et al., 2019a).

The embodiment of a virtual avatar further allows the temporal reshaping of peripersonal space, the space surrounding the body where external stimuli (e.g., visual or auditory) interact with the somatosensory system (Serino et al., 2006). This can be done by manipulating the visual perspective over an embodied avatar, as in the case of illusory out-of-body experiences (Lenggenhager et al., 2007; Blanke, 2012; Maselli and Slater, 2014), as well as by modulating the size and/or shape of the virtual body that interacts with this peripersonal space (Abtahi et al., 2019), for example by having an elongated virtual arm (Kilteni et al., 2012b; Feuchtner and Müller, 2017).

Such flexibility of the virtual body shape modification has been leveraged to study psychological phenomena such as body image in anorexia and other eating disorders (Piryankova et al., 2014; Mölbert et al., 2018) (Figure 12).

Agency is also an important element of the perceptual experience associated with the embodiment of self-avatars. Suppose you are embodied in a virtual body with visuomotor synchrony, and after a while the body does something that you did not do (in this case, talk). It was found that participants experience agency over the speaking, and that the way they themselves speak later is influenced by how their self-avatar spoke (Banakou and Slater, 2014). The rigged self-avatar, whose movements were driven by the movements of the participant, was crucial to this result: it was later found that if body ownership is induced through tactile synchrony, the subjective illusory agency still occurs but is not complemented by the behavioral after-effect (Banakou and Slater, 2017). Agency can also be induced when the real body does not move but the virtual body moves, after embodiment has been induced. This is the case when the virtual body is moved by means of a brain-computer interface (Nierula et al., 2019), or when seated participants have the illusion of walking based solely on the feedback from their embodiment in a walking virtual body (Kokkinara et al., 2016).

The uses and applications of avatars beyond basic scientific research are potentially as vast as those of VR and AR and, more generally, of computer graphics. In sum, avatars are the main way to realistically represent humans or, in some situations, computational agents inside digital content, even when that content is not displayed with immersive technologies; for example, they are often part of animated movies and console games. Avatars therefore have potential applications across many areas, including therapeutic treatments, training, education, and entertainment. In this section we explore only two areas of application that have traditionally placed a strong emphasis on avatars: the entertainment industry and simulations. We summarize why access to high-quality rigged avatars is important for these fields. For more possible applications of avatars and VR in general, we recommend the more in-depth review by Slater and Sanchez-Vives (2016).

Avatars or 3D characters can create a long-lasting emotional bond with the audience, and thus play an essential role in film-making, VR, and computer games. For example, characters must exhibit clearly recognizable facial expressions that are consistent with their emotional state in the storyline (Aneja et al., 2018). Manually creating character animation requires expertise and hours of work. To speed up the animation process, human actors can control and animate a character through a facial motion capture system (Li et al., 2015). Many of the techniques to create and animate avatars have been described above. However, this industry has its particularities: despite recent advances in modeling capabilities, motion capture, and control parameterization, most current animation studios still rely on artists manually producing high-quality frames. Most motion capture systems require skilled actors and laborious post-processing steps. In addition, the avatar must match or exaggerate the physiology of the performer, which limits the possible motions (e.g., an actor cannot perform exaggerated facial expressions).

Alleviating artist workload while still creating believable and compelling character motions is arguably the central challenge in animated storytelling. Some professionals are using VR-based interfaces to pose and animate 3D characters; the tools presented in (PoseVR, 2019) and (Pan and Mitchell, 2020), developed by Walt Disney Animation Studios, are recent examples in this direction. Meanwhile, a couple of VR-based research prototypes and commercial products have also recently been developed; however, these have mainly targeted non-professional users (Cannavò et al., 2019). Alternatively, some researchers have proposed methods for generating 3D character expressions from humans in a geometrically consistent and perceptually valid manner using machine learning models (Aneja et al., 2018).

By open sourcing the high-quality rigs of the Microsoft Rocketbox avatar library, we are providing opportunities for researchers, engineers, and artists to work together to discover new tools and techniques that will shape the future of animated storytelling.

No matter how realistic a virtual environment looks from the point of view of geometry and rendering, it is essential that the virtual environment appears populated by realistic people and crowds in order to bring those virtual environments to life.

The need for other people with whom a person interacts in VR/AR ranges from simulating one or two avatars to a whole crowd. Multiple studies have found that people behave realistically when interacting with avatars inside VR/AR. In recent years these realistic responses have gained the interest of sociologists and psychologists who want to scientifically explore increasingly complex scenarios. Inside VR, researchers have replicated obedience-to-authority paradigms such as the Milgram experiments, which became almost impossible to run in real setups due to the ethical considerations regarding the deception scheme underlying the learner and punishment mechanisms (Slater et al., 2006; Gonzalez-Franco et al., 2019b). The replication of famous results such as the Milgram studies further validates the use of avatars for social-psychological studies (Figure 13). Indeed, VR Milgram paradigms have recently been used to study empathy levels, predisposition and conformity to sexual harassment scenarios (Neyret et al., 2020).

Figure 13. One of the Microsoft Rocketbox avatars being used for a recreation of the Stanley Milgram experiment on obedience to authority at University College London by Gonzalez-Franco et al. (2019b).

Researchers have also used VR to create violent scenarios, for example by converting the avatar into a domestic abuser to see how a real offender responds when exposed to this role scenario (Seinfeld et al., 2018), or by studying what happens when a violent scenario has bystanders. In an experiment with soccer fans in a VR pub, researchers found a strong in-group/out-group effect for the victim of a soccer bullying interaction (Rovira et al., 2009). However, the response of the participants varied depending on whether there were other bystander avatars and whether or not they were fans of the same soccer team (Slater et al., 2013). Some of these scenarios recreated in VR would be impossible in reality.

Besides interactions with avatars in violent scenarios, researchers have also explored how users with different personalities interact with avatars. For example, Pan et al. (2012) studied how socially anxious and confident men interacted with a forward virtual woman, and Pan et al. (2016) studied how medical doctors respond to avatar patients who unreasonably insist on being treated with antibiotics.

Research in social psychology has also utilized avatars, for example to study moral dilemmas such as how people react when exposed to a shooting in a museum (Pan and Slater, 2011; Friedman et al., 2014), how different types of audiences affect public speaking anxiety (Pertaub et al., 2002), and phobias (Botella et al., 2017).

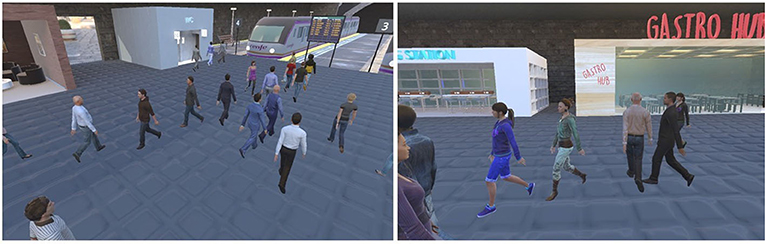

Simulations have evolved from a different angle in the field of crowd simulation (Figure 14). In that area researchers have spent a great deal of effort on improving the algorithms that move agents smoothly between two points while avoiding collisions. However, no matter how close the simulation gets to real data, it is essential that each agent's position is then represented with a natural-looking, fully rigged avatar. The crowd simulation field has focused on the development of a large number of algorithms based on social forces (Helbing et al., 2000), geometrical rules (Pelechano et al., 2007), vision-based approaches (López et al., 2019), velocity vectors (Van den Berg et al., 2008), or data-driven methods (Charalambous and Chrysanthou, 2014). Psychological and personality traits are often included to add heterogeneity to the crowd (Pelechano et al., 2016). The output of a crowd simulation model is typically limited to a position, the motion, and sometimes some limited pose data such as a torso orientation. This type of output is often rendered as a crowd of moving points, or as simple geometrical proxies such as 3D cylinders. Ideally, the crowd simulation output should be seamlessly fed into a system that provides fully animated avatars with animations that naturally match the crowd trajectories. Research in real-time animation is not yet at the stage of providing a good real-time solution to this problem, but having high-quality, fully rigged avatars is already a big step toward making crowd simulation more realistic and thus ready to enhance the realism of immersive virtual scenarios.
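To make the typical output of such a model concrete, the following is a minimal sketch of one update step in the spirit of a social-force approach. It is not the formulation of Helbing et al. (2000): the function name `step_agent`, the exponential repulsion term, and all parameter values are assumptions chosen for illustration. The point is that the step yields only a position, a velocity, and a heading, which an animation system would still have to turn into a fully animated avatar.

```python
import math

def step_agent(pos, vel, goal, neighbors, dt=0.1,
               desired_speed=1.3, relax_time=0.5,
               repulse_strength=2.0, repulse_range=0.5):
    """Advance one agent by dt seconds (all parameters are illustrative).

    Returns the new position, the new velocity, and a heading in radians.
    """
    # Driving term: relax the current velocity toward the desired velocity,
    # which points at the goal with magnitude desired_speed.
    gx, gy = goal[0] - pos[0], goal[1] - pos[1]
    dist = math.hypot(gx, gy)
    if dist > 1e-9:
        desired = (desired_speed * gx / dist, desired_speed * gy / dist)
    else:
        desired = (0.0, 0.0)
    fx = (desired[0] - vel[0]) / relax_time
    fy = (desired[1] - vel[1]) / relax_time

    # Repulsive term: push away from each neighbor, decaying with distance.
    for nx, ny in neighbors:
        dx, dy = pos[0] - nx, pos[1] - ny
        d = math.hypot(dx, dy)
        if d > 1e-9:
            mag = repulse_strength * math.exp(-d / repulse_range)
            fx += mag * dx / d
            fy += mag * dy / d

    # Explicit Euler integration of velocity, then position.
    new_vel = (vel[0] + fx * dt, vel[1] + fy * dt)
    new_pos = (pos[0] + new_vel[0] * dt, pos[1] + new_vel[1] * dt)

    # Heading that a rigged avatar could use as its torso orientation.
    heading = math.atan2(new_vel[1], new_vel[0])
    return new_pos, new_vel, heading
```

In a pipeline of this kind, the returned speed and heading would be the inputs used to select and orient a locomotion animation for the rigged avatar placed at the agent's position.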

Figure 14. Microsoft Rocketbox avatars being used for a recreation of a train station for studies of human behavior in crowded VR situations at the Universitat Politècnica de Catalunya, by Ríos and Pelechano (2020).

Research efforts into simulations of a few detailed humans and of large crowds are gradually converging. The simulation research community needs realistic-looking rigged avatars. For large crowds it also needs them to have flexibility in the number of polygons so that the cost of skinning and rendering does not become a bottleneck (Beacco et al., 2016). Natural-looking avatars are not only critical to small simulations but can also greatly enhance the appearance of a crowd simulation rendered in 3D on a computer screen. This effect is even more important when rendering in an HMD, where avatars are seen at eye level and from close distances.
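One common way to exploit this flexibility in polygon count is distance-based level of detail (LOD): avatars near the viewer use a detailed mesh and distant ones a coarser mesh. The sketch below illustrates the idea only; the triangle counts, distance thresholds, and function names are hypothetical and are not taken from the Rocketbox library or from Beacco et al. (2016).

```python
import math

# Hypothetical triangle counts per level of detail, finest first.
LOD_TRIANGLES = [20000, 5000, 1000]
# Hypothetical distance thresholds in metres: below the first value use
# level 0, below the second use level 1, otherwise the coarsest level.
LOD_DISTANCES = [5.0, 20.0]

def pick_lod(distance):
    """Return the LOD index for an avatar at the given viewer distance."""
    for level, limit in enumerate(LOD_DISTANCES):
        if distance < limit:
            return level
    return len(LOD_DISTANCES)

def crowd_triangles(camera, agents):
    """Total triangles to skin and render for agents at 2D positions."""
    total = 0
    for agent in agents:
        total += LOD_TRIANGLES[pick_lod(math.dist(camera, agent))]
    return total
```

For example, `crowd_triangles((0.0, 0.0), [(1.0, 0.0), (10.0, 0.0), (100.0, 0.0)])` sums one avatar at each level. In an HMD, where avatars are often seen up close, the first threshold would likely need to be chosen more conservatively than on a desktop screen.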

Having realistic and well-formed avatars is especially relevant if we are studying human behavior in crowded spaces using immersive VR. Such setups can be used, for example, to evaluate human decision making during emergencies based on the behavior of the crowd (Ríos and Pelechano, 2020), to explore cognitive models of locomotion in crowded spaces (Thalmann, 2007; Luo et al., 2008; Olivier et al., 2014), or to study one-to-one interactions with avatars inside VR while performing locomotion (Pelechano et al., 2011; Ríos et al., 2018).

While the use of facial expressions has been growing in one-to-one interactions (Vinayagamoorthy et al., 2006; Gonzalez-Franco et al., 2020b), this very important aspect is missing in current crowd simulations. Current simulated virtual crowds appear as non-expressive avatars that simply look straight ahead while maneuvering around obstacles and other agents. There have been some attempts to introduce gaze so that avatars appear to acknowledge the user's presence in the virtual environment, but without changes in facial expression (Narang et al., 2016).

The Microsoft Rocketbox avatars not only provide a large set of avatars that can be animated for simulations but also provide the possibility of including facial expressions, which can open the doors to achieving virtual simulations of avatars and crowds that appear more lively and that can show real emotions. This will have a massive impact on the overall credibility of the crowd and will enrich the heterogeneity and realism of the populated virtual worlds.