- Department of Environmental Science and Policy, George Mason University, Fairfax, VA, United States

Institutions of higher education have increasingly focused on data-driven decision-making and assessments of their sustainability goals. Yet, there is no agreement on what constitutes sustainability literacy and culture (SLAC) at colleges and universities, even though promoting these types of campus population-level changes is often seen as key to the greening of higher education. It remains unclear what motivates institutions to measure these constructs, the barriers they face in doing so, and how they use these assessments to improve sustainability outcomes. In order to understand how universities are conducting SLAC assessments and for what purpose, we carried out an analysis of a subgroup of institutions–doctoral universities with very high research activity (R1)–with respect to institutional organizational learning (OL). Semi-structured interviews were conducted with administrators of 20 R1 universities that reported SLAC assessments (2017–2020) in the Association for the Advancement of Sustainability in Higher Education's STARS rating system. As anticipated, R1 universities reported conducting SLAC assessments for STARS points, but they also are motivated by the potential for the data to inform campus programs. Challenges in conducting assessments included: lack of institutional prioritization, difficulty conducting the surveys, inadequate resources, institutional barriers, and perceived methodological inadequacies. While very few of the higher OL institutions pointed to lack of institutional prioritization as a problem, more than half of lower OL universities did. Institutional support, having a dedicated office, and using survey incentives served as facilitators. This is one of the first studies to relate higher education OL to sustainability assessments. OL is likely to be an important construct in furthering an understanding of the institutional capacities required for implementation of assessments and their effectiveness in evidence-based decision-making.

Introduction

Higher education institutions (HEIs) can play a critical role in attaining global sustainability goals by promoting pro-environmental knowledge, attitudes, and behaviors among the next generation of citizens and ensuring the education of a science-literate public (United Nations, 2013; Rivera and Savage, 2020). “Sustainability literacy” is often conceptualized as student learning outcomes from formal educational curricula while “sustainability culture” encompasses the awareness, behaviors, and lifestyle choices of the broader campus community, including its employees (Hopkinson and James, 2010; Marans et al., 2010, 2015; Callewaert, 2018). For decades, higher education institutions have committed to promoting sustainability (ULSF, 1990). As they have grappled with how to implement these goals, literatures have arisen on conducting sustainability assessments (Shriberg, 2002) and competencies for sustainability professionals that academic programs and tools should address (Wiek et al., 2011; Redman et al., 2021). Yet, there is no consensus on what constitutes sustainability literacy and culture at colleges and universities. It is largely unknown what motivates institutions to measure campus sustainability literacy and culture, what obstacles they experience in doing so, and how these assessments are used to enhance sustainability results.

Campuses have recognized the need for broad sustainability education for decades. The 1990 Talloires Declaration was the first official statement made by universities and colleges of their commitment to sustainability (ULSF, 1990). The plan required signatories to agree to take 10 actions, among them increasing awareness of sustainable development, creating institutional cultures of sustainability, educating for environmental citizenship, fostering environmental literacy, and facilitating interdisciplinary collaborations. Sustainable development (SD) itself became a dominant global discourse during approximately the same time period with the publication of the report Our Common Future by the Brundtland Commission in 1987 (Brundtland, 1987; Dryzek, 2005). Since then, many international frameworks and conventions have addressed education for sustainable development (ESD) (Calder and Clugston, 2003), with key recommendations for its promotion delivered at the 1992 United Nations Conference on Environment and Development (UNCED), often known as The Earth Summit (Wals, 2012; UNESCO, 2015). While this research study is focused only on sustainability literacy and culture and not ESD, the meaning of sustainability literacy and culture overlaps with ESD in terms of building knowledge, skills, values, and attitudes for a sustainable future.

Of the ~4,000 higher education institutions in the United States (NCES, 2021), only 4% assess and report the sustainability literacy and culture of their students within the largest higher education assessment database, the Association for the Advancement of Sustainability in Higher Education's (AASHE) Sustainability Tracking, Assessment and Rating System (STARS) (AASHE, 2020a). Attempts have been made to develop standardized tools for assessing sustainability literacy and culture, such as the Assessment of Sustainability Knowledge (ASK), Sustainability Attitude Scale (SAS), and Sulitest (Shephard et al., 2014; Décamps et al., 2017; Zwickle and Jones, 2018). However, the majority of institutions that report these data adopt their own definitions and systems of measurement (AASHE, 2020a). Further, it is unclear to what extent institutions that conduct the assessments use the data to inform programmatic development. The use of data-driven decision-making—e.g., leveraging standardized test scores and other evaluations to inform classroom and institutional practices—has become a priority within higher education in order to improve educational outcomes in an increasingly competitive environment (Mertler, 2014; Arum et al., 2016). Yet, in practice, it remains difficult for many organizations to use data within their decision-making routines. We hypothesize that organizational learning (OL) characteristics are related to the ways in which higher education institutions implement and use sustainability literacy and culture (SLAC) assessment data. This has not been previously explored in the literature, as we will subsequently elaborate in the “literature review” section.

Through interviews with representatives from R1 institutions that have opted to conduct the sustainability literacy and culture assessments, we seek to answer the following research questions: (1) what motivates institutions to implement the assessments; (2) what methods they use; (3) what challenges they face during the process; (4) what facilitates the assessments; (5) how institutions use the assessments; and (6) how organizational learning capability is related to these characteristics.

Very high research activity (R1) doctoral institutions in the United States (The Carnegie Classification of Institutions of Higher Education, 2020) are the focus of our investigation because of their similarities in research orientation and access to resources (physical, human, and financial). The Carnegie classification is based on factors such as research and development expenditures, science and engineering research staff, and doctoral conferrals (The Carnegie Classification of Institutions of Higher Education, 2022). By expanding our understanding of the current role of sustainability literacy and culture assessments and organizational learning in R1 institutions, we hope to assist other higher education institutions in making decisions about how to meet their own sustainability goals.

Literature review

Sustainability assessments

Sustainability assessments are procedures that “(direct) planning and decision-making toward sustainable development” in broad terms (Hacking and Guthrie, 2008, p. 73). Ideally, they should integrate natural and social systems, address both local and global dimensions, and account for both short- and long-term outcomes (Hou and O'Connor, 2020). Through these processes, institutions can determine whether an initiative is truly sustainable when evaluated against a set of sustainability principles. Higher education institutions have established campus programs to meet their institutional sustainability commitments primarily through: (1) reduced consumption of energy, water, and other resources; (2) sustainability education; (3) integration of teaching, research, and operations; (4) cross-institutional learning; and (5) promotion of incremental and systemic change (Shriberg, 2002). Numerous formalized assessments have arisen in recent decades to evaluate how well institutions meet sustainability goals (Alghamdi et al., 2017). Shriberg (2002), Alshuwaikhat and Abubakar (2008), and Sayed and Kamal (2013) each analyzed these sustainability assessments to identify their strengths and limitations. In this study, we focus on the Association for the Advancement of Sustainability in Higher Education's (AASHE) Sustainability Tracking, Assessment and Rating System (STARS) as it has become the best-known and most-supported campus sustainability assessment tool worldwide (Maragakis and Dobbelsteen, 2013). AASHE, a professional association for higher education sustainability, launched STARS in 2010 to help institutions measure and report on their sustainability performance and encourage them to adopt best practices (AASHE, 2019c).

The STARS self-reporting tool has become the benchmark standard for post-secondary sustainable performance initiatives in the United States, with 1,018 institutions reporting during its first 11 years (AASHE, 2019b). Universities and colleges have many ways of collecting the requisite documentation, but their final report must be approved by an executive-level college or university official. Most institutions say that they participate in STARS because of the benefits that accompany reporting, such as benchmarking, transparency, and publicity for continued progress (Buckley and Michel, 2020). Indeed, the STARS “silver, gold, platinum” ratings can be used by universities to advertise their commitments to sustainability, including to prospective students.

The STARS reporting tool evaluates the practice of sustainability in higher education across five categories: academics, engagement, operations, planning and administration, and innovation and leadership (AASHE, 2020b). The category of “academics” includes a “curriculum” subcategory under which sustainability literacy assessments can earn universities and colleges up to 4 points. Further, the “engagement” category includes a “campus engagement” subcategory under which institutions can earn another point for assessing campus culture. Thus, the SLAC assessments enable universities to earn 5 of 61 total points for “curriculum” and “campus engagement” (AASHE, 2020b). The assessments must be repeatedly administered to a representative sample of the predominant or entire student body (in the case of literacy assessments) or the entire campus community, including students, faculty, and staff (in the case of culture assessments) (AASHE, 2019a,b). AASHE does not define how institutions should measure these constructs, but literacy is generally described as knowledge, and culture as values and behaviors. Often, these assessments are combined and administered as a single instrument by the participating institutions (AASHE, 2020a).

There are varied definitions of sustainability literacy, some that emphasize knowledge and skills (Akeel et al., 2019), while others emphasize knowingness, attitudes, and behavior (Chen et al., 2022). AASHE materials point out that measures of sustainability literacy have right or wrong answers whereas sustainability culture assessments do not because they assess perceptions, beliefs, dispositions, behaviors, and awareness of campus sustainability initiatives (AASHE STARS, 2022). Unfortunately, there is not one standardized or universally accepted tool that measures both of these constructs. Yet, between 2017 and 2020 more than 160 institutions reported data for each of the assessments through STARS (AASHE, 2020b). We sought to understand their motivation for engaging in these assessments (Stough et al., 2021) and the methods universities are using to conduct them as the first two research questions.

Assessing student, staff, and faculty sustainability literacy and culture poses a challenge to many higher education institutions (Ceulemans et al., 2015). Yet, the assessments are essential for providing an understanding of campus sustainability knowledge, values, and behaviors, apart from accruing AASHE STARS points (Buckley and Michel, 2020). As campuses grapple with conducting SLAC assessments, understanding the ways other higher education institutions have effectively implemented them can facilitate more impactful adoption, learning, and institutional change. Hence, our third and fourth research questions ask what challenges and facilitators universities experience in conducting the SLAC assessments.

Lastly, researchers assert that evidence of the institutional impact of sustainability indicators, like the SLAC assessments, is broadly lacking. For example, Ramos and Pires wrote, “There are still no clear answers about the effective impact of these indicator initiatives in society, showing who really adopt and use these tools and at the end how valuable or irrelevant they are in practice” (2013, p. 82). In a study of 19 existing sustainability assessment tools, Findler et al. (2019) investigated whether these tools truly assess the impact of HEIs on sustainable development. They found that the tools do not assess impacts beyond the organization, but rather focus on internal operations. Hence, our final research question is about institutional change.

Organizational learning capability

According to Smith (2012), an organization's ability to achieve “triple bottom line” sustainability in its social, environmental, and financial performance depends on its ability to respond to new information by updating its assumptions, pre-existing mental models, norms, and policies. Organizational learning can be viewed as a complex, continuous, and dynamic process that occurs at all levels: individual, group, and organization (Huber, 1991). Jerez-Gómez et al. (2005) define organizational learning as the “capability of an organization to process knowledge—in other words, to create, acquire, transfer, and integrate knowledge, and to modify its behavior to reflect the new cognitive situation, with a view to improving its performance” (p. 716).

An organization is said to learn “if through its processing of information, the range of its potential behaviors is changed, and an organization learns if any of its units acquire knowledge that it recognizes as potentially useful to the organization” (Huber, 1991, p. 89). Organizations that do not easily learn from new information may suffer from weak data systems, the inability to turn information into knowledge, limited knowledge, poor team structures or cultures, narrow organizational attitudes, information silos, hierarchy, and a plethora of other institutional characteristics (Argyris and Schön, 1978). Psychological factors also may inhibit learning. For example, in a multi-institutional initiative, fear of being judged served as one of the barriers to organizational learning (Kezar and Holcombe, 2020). While there is a considerable push for campus decision-makers to move toward data-driven management, studies indicate that campuses first need to build their human and data infrastructures in ways that will facilitate these processes (Kezar et al., 2015).

The relationship between organizational learning and sustainability performance is typically studied in the private sector (Alegre and Chiva, 2013; Zgrzywa-Ziemak and Walecka-Jankowska, 2020; Martinez-Lozada and Espinosa, 2022). Ramos and Pires (2013) assert that higher education should learn from the private sector in order to align with data-informed decision-making models (Arum et al., 2016). In the educational sector, organizational learning has been found to have a significant and positive effect on teaching competence and lecturer performance (Hartono et al., 2017). However, to our knowledge, there is no research relating organizational learning to how universities use sustainability assessments. Dee and Leišytė (2016) claim that research on organizational learning in higher education suffers from few empirical studies and a functionalist approach focused on institutional change and effectiveness that assumes power flows from the top down within management structures modeled on corporations. They argue that colleges and universities are unique institutions because of the degree of independence of faculty and departments, which may not have a shared vision of their institution's future. As a result, they encourage researchers to also take interpretive and critical approaches to explore the process of meaning-making and the exercise of power across all levels. Therefore, we hypothesize that organizational learning (OL) capability likely relates to how higher education institutions implement and use sustainability literacy and culture assessments.

In a 2005 study, Jerez-Gómez et al. proposed a measurement scale for organizational learning capability and examined its reliability and validity with a sample of 111 Spanish chemical manufacturing firms. The scale treats organizational learning as a latent construct with four dimensions identified in their literature review, and incorporates both previous items and new ones. The composite reliability index for the whole scale was 0.94; further, it demonstrated internal consistency, and convergent and discriminant validity. According to the authors, four conditions are necessary for organizational learning:

1. Managerial commitment. Leadership must support organizational learning and promote it as part of the institution's culture. Spearheading the process should be the responsibility of management, but all staff should be engaged.

2. Systems perspective. Organizational learning requires a collective institutional identity that enables the organization to be seen as a system in which every member contributes to achieving a successful outcome. If there is a lack of a collective goal, or shared language and perspectives, individual acts will be less likely to lead to organizational learning.

3. Knowledge transfer and integration. The structure of the organization must facilitate the transfer and integration of individually acquired knowledge to groups within the institution, and then to a collective corpus of knowledge.

4. Openness and experimentation. Organizations must be open to questioning existing practices and experimenting with alternatives. This type of generative learning requires an open attitude toward new ideas and a tolerance for risk and making mistakes.

Each of the four dimensions is positively related to organizational learning capability; the highest learning organizations score well on each (Jerez-Gómez et al., 2005). In this study, we focus on sustainability literacy processes with respect to organizational learning capabilities.

Methods

The online STARS reports database (AASHE, 2020b) for U.S. colleges and universities provided the sampling frame from which we selected R1 institutions, and their sustainability administrators, for semi-structured interviews and a short set of organizational learning measures.

Sample selection and characteristics

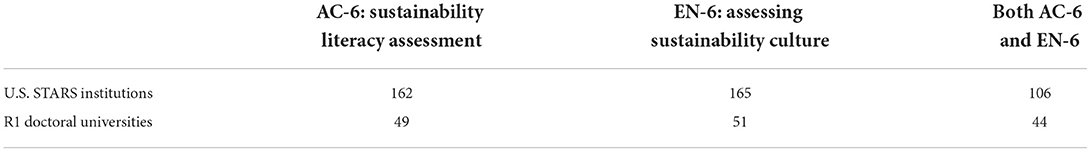

We initially downloaded the United States STARS dataset on October 1, 2020, which included 394 higher education institutions that had submitted reports between 2017 and 2020. We then filtered the dataset to find those that had completed the sustainability literacy (162) and sustainability culture (165) assessments; 106 had completed both SLAC assessments. We further filtered the dataset to include only the doctoral universities with very high research activity (R1) as per the Carnegie Classification of Institutions of Higher Education (The Carnegie Classification of Institutions of Higher Education, 2020) that had scored some points for both the sustainability literacy and culture assessments. Thus, of the 106 institutions that conducted both sustainability literacy and culture assessments, 44 were R1 universities (see Table 1). We focused on universities' STARS report sections titled “AC-6: Sustainability Literacy Assessment” and “EN-6: Assessing Sustainability Culture,” termed sustainability literacy and culture (SLAC) assessments henceforth in the paper.
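The filtering steps described above can be illustrated with a minimal Python sketch. The record layout, field names, and toy values below are assumptions made for illustration only; they are not the STARS export schema and not the data used in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StarsReport:
    # All field names are hypothetical, not the actual STARS export schema.
    institution: str
    carnegie_class: str      # e.g. "R1"
    literacy_points: float   # points earned under AC-6
    culture_points: float    # points earned under EN-6


def select_r1_slac(reports):
    """Keep reports that scored points on both assessments and are R1."""
    both = [r for r in reports
            if r.literacy_points > 0 and r.culture_points > 0]
    return [r for r in both if r.carnegie_class == "R1"]


# Toy records for illustration only; not real STARS data.
toy_reports = [
    StarsReport("University A", "R1", 4.0, 1.0),
    StarsReport("University B", "R1", 2.0, 0.0),           # no culture points
    StarsReport("College C", "Baccalaureate", 4.0, 1.0),   # not R1
    StarsReport("University D", "R1", 1.5, 0.5),
]

selected = select_r1_slac(toy_reports)
print([r.institution for r in selected])
```

Applying the two conditions in sequence mirrors the order of the narrative: first institutions with both assessments, then the R1 subset.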

Table 1. U.S. institutions reporting sustainability literacy and culture (SLAC) assessments from 2017 to 2020 (AASHE, 2020b).

Recruitment emails were sent to the sustainability liaisons, as listed on the AASHE website, of all 44 R1 institutions. Of the 44, 20 R1 university liaisons agreed to be interviewed (response rate, 45.5%). Eleven (55.0%) of the interviewees served as a director/assistant director of sustainability, five (25.0%) were managers, and the rest (4; 20.0%) were either post-doctoral researchers or sustainability coordinators/specialists. Job tenure varied from 1 to 15 years with an average of 5.9 years in the position. According to Boddy (2016), qualitative sample sizes of 10 may be sufficient for sampling among a homogeneous population. Hence, our sample size of 20 from the homogeneous R1 institutions is considered sufficient for this study.

The websites and STARS reports of each university were reviewed prior to each interview to provide background and information regarding the methods used by every participating institution. This information was verified during the interviews. Semi-structured interviews with around 28 questions were carried out with the university administrative representatives between February 22 and March 12, 2021. They ranged in length from 35 to 50 minutes and were recorded and transcribed. The research was approved by the Institutional Review Board for the Protection of Human Subjects at George Mason University (IRB# 1687212-1). The interview sample included both public and private universities across the northeastern, southern, western, and central regions of the United States. The interview questions were developed based on the STARS report and the research questions of the study and were pre-tested with our university's Office of Sustainability before conducting the interviews. The interview questions, survey scale, and institutional characteristics (Supplementary Table A) are provided in the Supplementary Materials.

Measures

The study combines survey and qualitative (semi-structured interview) data in a mixed-methods approach. Organizational learning capability survey questions were asked to provide insight on the context for assessment practices (Jerez-Gómez et al., 2005). The 16 questions of the OL scale were grouped into four subscales that represent each of the dimensions (5 questions for management commitment to learning—MC; 3 questions for systems perspective—SP; 4 questions for openness and experimentation—EX; and 4 questions for knowledge transfer and integration—TR) with response options from 1 representing “totally disagree” to 7 “totally agree.” Based on the survey responses, a follow-up probe was carried out with a couple of open-ended questions. The 28 interview questions and the survey scale are provided in the Supplementary Materials. Ten questions gathered demographic and office information, 4 questions probed institutional motivations for engaging in SLAC assessments, 4 questions requested assessment methodology information, 3 main questions related to the challenges and/or facilitators experienced in conducting the assessments, and 6 questions addressed the implications of the assessments and using them as evidence for institutional change. A final question requested any other pertinent information that had not been covered in the interview.
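As a rough illustration of how the 16 item responses could be reduced to four subscale scores, the following Python sketch averages the items within each dimension. The item ordering (MC first, then SP, EX, and TR) and the example responses are assumptions for illustration; the actual item order is given in the Supplementary Materials.

```python
from statistics import mean

# Assumed item positions per subscale (5 + 3 + 4 + 4 = 16 items).
SUBSCALES = {
    "MC": range(0, 5),    # managerial commitment
    "SP": range(5, 8),    # systems perspective
    "EX": range(8, 12),   # openness and experimentation
    "TR": range(12, 16),  # knowledge transfer and integration
}


def subscale_means(responses):
    """Return the mean 1-7 rating for each of the four OL subscales."""
    if len(responses) != 16:
        raise ValueError("expected 16 item responses")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("responses must lie on the 1-7 scale")
    return {name: mean([responses[i] for i in idx])
            for name, idx in SUBSCALES.items()}


# Hypothetical responses from one respondent, for illustration only.
example = [6, 6, 5, 5, 6,  6, 6, 6,  5, 6, 6, 5,  4, 5, 5, 4]
print(subscale_means(example))
```

Averaging within subscales, rather than summing, keeps the four dimensions on the same 1 to 7 scale even though they contain different numbers of items.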

Categorizing institutions by measures of organizational learning

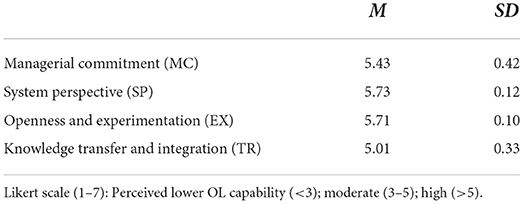

We calculated each organizational learning item's mean and standard deviation for the four organizational learning dimensions (Table 2). On each dimension, the overall mean was subtracted from each university's mean to identify which universities ranked higher or lower among the 20 universities on that type of organizational learning capability. The sum of these differences distinguished institutions that were “higher OL” (i.e., their positive score indicated they ranked predominantly above the mean, across three to four of the four dimensions) from those that were “lower OL” (they fell predominantly below the mean, again across three to four of the dimensions).
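The categorization can be sketched in Python as follows. This is a simplified illustration under stated assumptions: deviations are taken as each university's score minus the overall mean (so that a positive sum corresponds to ranking above the mean), only the sign of the summed deviations is used, a sum of exactly zero is treated as "lower OL", and the scores are hypothetical.

```python
from statistics import mean

DIMENSIONS = ("MC", "SP", "EX", "TR")


def classify_ol(scores):
    """Label each university by the sign of its summed deviations.

    `scores` maps a university name to its mean score per dimension.
    """
    overall = {d: mean([u[d] for u in scores.values()]) for d in DIMENSIONS}
    labels = {}
    for name, dims in scores.items():
        # Sum of (university mean - overall mean) across the four dimensions.
        total = sum(dims[d] - overall[d] for d in DIMENSIONS)
        # Assumption: a sum of zero is grouped with "lower OL".
        labels[name] = "higher OL" if total > 0 else "lower OL"
    return labels


# Hypothetical dimension scores, for illustration only.
toy_scores = {
    "University A": {"MC": 6, "SP": 6, "EX": 6, "TR": 6},
    "University B": {"MC": 5, "SP": 5, "EX": 5, "TR": 5},
    "University C": {"MC": 5, "SP": 5, "EX": 6, "TR": 5},
}
print(classify_ol(toy_scores))
```

In this toy example, University C is above the mean on one dimension but below it on the other three, so its summed deviation is negative and it is labeled "lower OL".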

Analysis of interview data

The interview responses were analyzed across 17 variables according to pre-defined themes and deductive codes based on the interview responses and university background from the AASHE STARS website. Coding categories were developed for each of six themes: institutional motivation for engaging in the assessments; the process of developing the assessments; challenges; facilitators; dissemination of assessment data; and use of data. The categories and definitions are included in Supplementary Table B. Responses to the interview questions on the SLAC assessments were grouped for analysis according to the universities' higher and lower organizational learning designations.

Limitations

There were some drawbacks to the methodological approach. First, the survey measures for organizational learning, asked during the semi-structured interviews, were subject to the interpretation of just one respondent per institution; others at each university may view the institution differently. This problem is prevalent in studies measuring individual perception (Jerez-Gómez et al., 2005). We attempted to mitigate this possible risk by following up on the respondent's answers with open-ended questions to ensure that we captured evidence of institutional activities that might be less perceptually influenced, such as examples of information dissemination or adoption of new programs.

Second, although focusing the study on a single type of institution (R1s) creates a more homogeneous sample for the purpose of analyzing differences in how organizational learning might influence university assessment implementation, it can also limit its external validity (Jerez-Gómez et al., 2005). We acknowledge that this research is restricted to U.S. R1 universities. Since many of the research findings have not been previously established, it would be worthwhile to expand the study to other higher education institutions to ascertain the wider generalizability of the findings. The findings should be viewed as illustrative of the current status of these assessments within the approximately half of R1 institutions that currently conduct them.

Results

Across the 20 universities, the mean scores for the four dimensions of organizational learning were 5.43 (managerial commitment, SD = 0.42), 5.73 (systems perspective, SD = 0.12), 5.71 (openness and experimentation, SD = 0.10), and 5.01 (knowledge transfer and integration, SD = 0.33), as shown in Table 2. While the universities' overall organizational learning capabilities were high, 9 institutions ranked higher on organizational learning and 11 ranked lower. Next, we describe the findings for how these institutions reported using the assessments to answer our research questions and to probe hypothesized differences by organizational learning capability.

Institutions' motivation for conducting the assessments

Our first research question asked why universities conduct the assessments. Our hypothesis was that universities at higher and lower levels of organizational learning would respond differently. We found—as expected—that universities conduct the assessments for STARS points, but there are other reasons as well. For example, one university administrator stated, “We feel that it's [SLAC] an important way for us to gauge the community's interest and inform our future programming.”

Higher OL universities reported conducting the assessments to understand office effectiveness, inform decision making, and meet sustainability goals, although most were also motivated by STARS points. According to the university administrators, the SLAC assessments are seen as low-hanging fruit that can be relatively inexpensive to conduct, but can aid in getting the campus leadership's attention, shed light on student body characteristics, or provide information on long-term campus trends. Most lower OL universities likewise indicated that they used the assessments both for STARS points and for other reasons.

Methods of conducting the assessments

When we asked how universities conduct the SLAC assessments (our second research question), three modalities were identified: universities used existing tools without any changes, modified those tools, or developed their own tools in-house. One interviewee explained: “We've looked at other institutions. We started a literature review, and we recreated the survey ourselves, and we've made it available on our website.”

More than half of the higher OL institutions modified available tools and they reported involving faculty. For instance, one administrator said: “We found what other universities have done, we thought about what we really wanted to ask our own students, and we discussed it with faculty and included questions that could be compared to other universities on some level, and include something more specific to us.”

A smaller group of higher OL institutions developed tools completely in-house: “So when we first started exploring the idea of a sustainability literacy assessment—just a very small working group of a couple faculty and a couple of representatives from the office of sustainability—to focus on if we were to implement a literacy survey what questions would we ask. So we did have that small working group. We had a few meetings we kind of refined the questions on the questionnaire. And that was how we initially, developed the survey within that working group.” Very few of the higher OL institutions used existing tools as-is.

Lower OL universities also took varied approaches to the selection and development of assessment tools, reporting that they modified existing tools, used the available tools as-is, or developed them in-house. Less than half said that they involved faculty in the SLAC assessments. As one administrator noted: “Specifically with the literacy assessment or with the cultural assessments, no, we don't typically involve faculty in that process.”

Challenges

In our third research question, we asked the interviewees open-ended questions about what hinders the assessment process, again hypothesizing that there are likely differences between higher and lower OL institutions. University administrators identified five challenges in conducting the assessments. They included:

1) The assessments are not an institutional priority.

2) Conducting the surveys themselves is difficult because of their longitudinal nature, problems with survey fatigue, and other challenges in implementation such as response rates, ensuring completion, and avoiding selection bias.

3) Working within large R1 institutions poses its own challenges: it is hard to get past the bureaucracy, coordination is difficult, it is hard to engage everyone at the university and/or get the right people, the institution is risk-averse, higher-level decision-makers are not accessible, and the assessments have never been embedded in policy or processes.

4) Resources to conduct the assessments may be lacking, including time, bandwidth, expertise/capacity, and the expertise to develop literacy goals.

5) While STARS requires repeated assessments to measure sustainability literacy and culture, some feel that other methodologies might be more suitable.

Administrators at universities with higher OL capability generally reported that their institutions prioritized the assessments. Some faced institutional challenges, and more than half didn't have the time and resources they needed. For example, one of the interviewees said, “I think our staff is so small that we just don't have time to do it thoughtfully. And the fact that we don't have faculty that are assigned to that part of it, I think is a challenge. I think that having dedicated people whose time is dedicated to the assessments, like a committee that convenes or something like that, reviews the survey information every year, would be helpful… I'm sure lots of institutions don't have that or have access to those people or that expertise and it's challenging to cultivate that and to keep it going.” Delivery of the survey itself was the largest hurdle. One university administrator said, “Not everybody in the campus community take surveys.”

For some higher OL institutions, surveys are not the best method. One interview respondent explained: “I think the survey maybe it's slightly more actionable but it still provides high-level information on why students do what they do and the truth of the matter is that student behavior is really complex and highly variable and that's really not the best way to get that information. It would be like a focus group, not a survey, in my opinion.” Another said, “Some of the challenges were in discussing what we were trying to do. We knew it had to be short because we didn't want to overload the students. But, the biggest discussion topic that we had in developing it was how much of it do we try to make like a well-rounded theory assessment versus how much do we really just have questions that we need to answer.”

Conversely, most lower OL universities said that the SLAC assessments were not an institutional priority, and that they were hindered by the lack of resources and challenges in survey implementation. As one administrator said, “I would say it's the lack of administrative support for the assessments. There are other stuff going on at the university and these assessments are constantly on the back burner... Nobody has pushed to make this a larger idea. Administration was not open as we're pushing so many initiatives with them, and the decision-maker did not want to push it as a mandatory assessment. This is seen as a social initiative, as a socially conscious thing by the administration.”

Institutional challenges at lower OL universities also contributed to difficulties in conducting the assessments: “We weren't able to get the permission to send it out to everyone and so we just had to send it out to a small cohort. I think that was probably our biggest challenge.” And, again, respondents posed the question of whether surveys are the best method: “I think the shortcoming is we've not had the ability or the thought to really be able to individually parse out responses from different stakeholder groups; that's the problem with these sorts of mailing surveys.”

Facilitators

Administrators noted that there are ways to make the process easier. Among the three themes, institutional support was the one most frequently described as important by both higher and lower OL institutions. A shared commitment to the process and supportive interdisciplinary collaboration/teamwork can make the assessments much easier. So, too, having dedicated staff or an office to handle the sampling and survey implementation can be critical. As one respondent described: “We construct a representative sample, we don't send it just to everybody, I think that's a difference, too, I think some institutions just will put their survey on a website and direct everyone to it. It all depends on how much resources and time you have, I think that's better than nothing, but we actually work with our Institute for XYZ and they build the sample for us, so it's representative.” Finally, having incentives for participation in the survey makes garnering high response rates easier, whether through providing gift cards, raffles, or other prizes.

Use of assessments for institutional change

Our last research question explored what effects the assessments have on institutions, with the hypothesis that they would be more impactful in organizations higher in organizational learning. In trying to understand the implications of the assessments, we also posed a question during the interview to find out who received the data at the university. Either the results were delivered to university upper management and students, or they remained within the Office of Sustainability (OOS). One interviewee said: “The assistant to the provost for sustainable initiatives would love to see the results.” Alternatively, another reported: “Right now, we don't really have a formal reporting structure for those, so we mostly use them internally within the Office of Sustainability to just kind of inform our own programs.” In terms of data dissemination, higher and lower OL institutions reported similar practices. Most delivered the SLAC assessment results to upper management/committee and/or through a website/listserv to the students, in addition to reporting to STARS. Few of the institutions delivered the data solely to STARS and their Office of Sustainability.

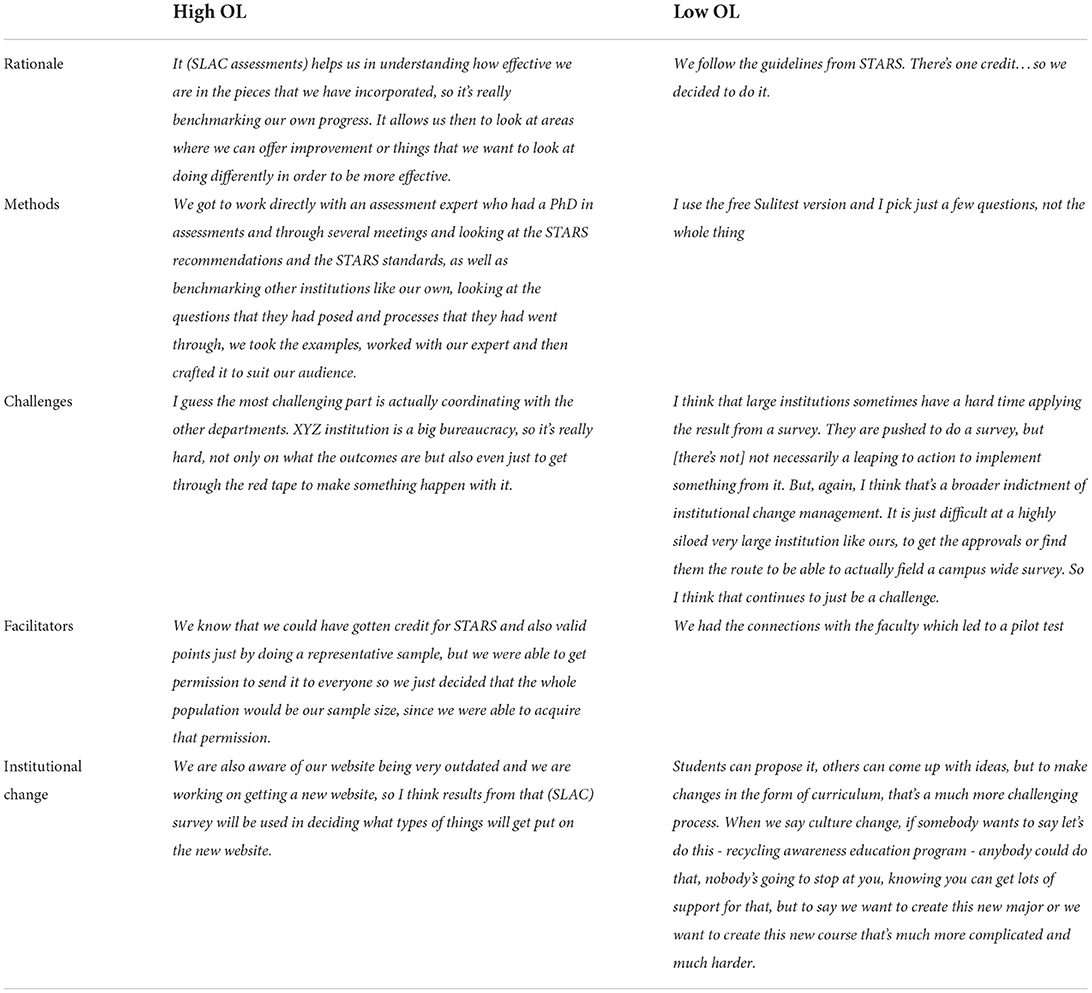

We anticipated that after disseminating the information, only a subset of the universities would use the data for institutional change. Table 3 provides a compilation of the illustrative quotes under each category. The majority of both higher and lower OL institutions stated that the SLAC data spurred change within their universities. Typically, these changes occurred in university programs or communication efforts. Examples included: developing course/gen-ed curriculum, new pedagogy, recycling/waste management, transportation, recruiting or marketing, residence life, new topics in new student orientation, hiring decisions, new seminars/outreach events, and new funding allocations. One administrator noted: “It's informed some of the recycling that's going on campus and some of the communication around recycling.” Another said: “We now have a new position, a new full-time position that is focused on student engagement, specifically, and so my guess is that the assessment made us realize, we need to do more engagement on campus which is why we're dedicating a full position to it.”

Discussion

In this study, we sought to understand why and how R1 universities conduct the SLAC assessments and with what results, hypothesizing that universities would perform differently in alignment with their organizational learning capability. Buckley and Michel's (2020) finding that most institutions conduct sustainability assessments to receive STARS points aligns with what we heard from university administrators. But we also found that majorities of both lower and higher organizational learning universities conduct the assessments for purposes other than STARS. Indeed, almost all higher OL institutions did so, for example in informing communication efforts or program design. Universities that are characterized as having greater organizational learning capability almost universally report these types of motivations.

In answering our second research question, we sought to understand how R1 universities conduct the SLAC assessments, hypothesizing that higher OL universities tailor the existing assessment tool requirements and involve faculty in the assessment process. Assessment tools such as the Sulitest, Assessing Sustainability Knowledge (ASK), Sustainability Attitude Scale (SAS), etc. were most frequently modified or tailored according to each campus' requirements and culture by both higher OL and lower OL capability universities. But the majority of higher OL university representatives reported that they included faculty during the assessment process. The “openness and experimentation” dimension of organizational learning suggests that institutions interrogate existing practices prior to adoption (Jerez-Gómez et al., 2005); Smith (2012) also viewed the ability to update or rework existing practices as an attribute of achieving organizational sustainability.

In our third and fourth research questions, we explored challenges and facilitators in conducting the SLAC assessment process. The universities cited a number of challenges: lack of institutional prioritization, difficulty conducting the surveys, inadequate resources, institutional barriers, and the perceived inadequacy of surveys to measure the constructs. While the vast majority of higher OL institutions did not cite the lack of institutional prioritization as a problem, more than half of lower OL universities did. These findings align with Jerez-Gómez et al. (2005) in their inclusion of “managerial commitment” as an important dimension of organizational learning. Further, the inadequacy of surveys for assessing SLAC is also consistent with Shriberg's (2002) findings that no one sustainability assessment tool can adequately capture all the required attributes. However, the current SLAC assessments may be particularly lacking in this regard due to the small number of tools available.

Institutional support was the most important facilitator in conducting the SLAC assessments, followed by having a dedicated office and using survey incentives. A dedicated office for survey administration made it easier for institutions to implement high quality survey sampling and data collection, especially longitudinally. Further, having an established tool, platform, or software ensured easier administration and follow-up. Respondents elaborated that institutional support manifested in having good connections with academic departments and faculty liaisons that made the process easier, increased the involvement of faculty with appropriate expertise, and widened the circle of people within the university who were aware of, and interested in, the data.

Lastly, we sought to understand if the SLAC assessments prompt institutional change, with the hypothesis that higher OL universities would be particularly effective in using the information for impact. This research question aligns with the literature on data-driven educational decision making (Mertler, 2014; Arum et al., 2016). Both the lower and higher OL universities delivered the assessment results to upper management and to their students, in addition to reporting to STARS and internally to their Office of Sustainability. The majority of both lower and higher OL institutions also reported using the data for institutional change, with almost all higher OL universities doing so. To ensure that data are effectively used for decision-making (Mertler, 2014), universities need to focus on developing processes for the consideration of data with regard to programmatic and institutional changes. This requires universities to prioritize organizational learning capabilities: management commitment to learning; keeping a systems perspective; openness and experimentation; and promotion of knowledge transfer and integration (Jerez-Gómez et al., 2005).

Conclusions

This research points to the critical role that institutional prioritization plays in organizations that conduct SLAC assessments. Interestingly, higher and lower OL institutions also demonstrated the biggest differences on this measure (not an institutional priority), which follows from Ramos and Pires' organizational learning theory. To ensure the institutional impact of assessments, university administrators should consider how to develop a conducive environment for data-based decision-making. While more empirical research is needed to understand how to broadly enhance organizational learning at universities in order to increase the impacts of SLAC assessments, our findings highlight current challenges universities face and ways to alleviate them.

Implications

The findings of this research can guide the practical implementation of the assessments by universities. Gathering institutional support, identifying dedicated staff or an office to conduct the surveys, and providing resources to incentivize survey responses may increase the likelihood of institutional success. Building organizational learning capability requires management's commitment to learning, the establishment of a collective sense of purpose and identity, willingness to be open to new ideas and experimentation, and the ability to facilitate knowledge transfer and integration. But, currently, with no one set of best practices or tools, it can be difficult to conduct the SLAC assessments, marshal institutional support for the practice, and find faculty and staff to design and implement them. Institutions that have higher OL capabilities:

• involve faculty in the process;

• perform the assessments for other purposes than just accruing STARS points;

• prefer not to use readily available tools;

• face significant challenges in implementing the surveys; and

• principally use the data for institutional changes.

Learning from these institutions may aid other universities as they decide either to begin SLAC assessments or revisit their university's implementation and use of them. In explicating the connections between organizational learning and sustainability assessments, this research contributes to societal understanding of how institutions gather and use data for the purpose of making adaptive management decisions. To our knowledge, no research has been previously done to relate the organizational learning capabilities of higher education institutions to their sustainability assessments, thereby advancing the suggestion of Gigauri et al. (2022). Applying this theoretical lens—and associated methodologies—opens the doors for broadly understanding sustainability assessments in terms of the effects of organizational structure on institutional impact. As such, we hope it helps further the work of practitioners and sustainability professionals across higher education.

Future directions

More research is recommended through studies that include a wider range of colleges and universities, not just R1 institutions. These studies should include interviews with more than one individual at each HEI in order to triangulate the data and ascertain its validity. These types of studies will build the foundation for a wider understanding of the use of sustainability literacy and culture assessments: institutions' rationales, methodologies, and challenges. Moreover, future research should focus on ways that HEIs can overcome these challenges and increase their organizational learning capability in order to generate actionable recommendations.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by the Institutional Review Board for the Protection of Human Subjects at George Mason University (IRB# 1687212-1). The patients/participants provided their written informed consent to participate in this study.

Author contributions

NL and KLA contributed to the conception of the study. NL designed, conducted, performed the analysis of the study, and wrote drafts of the manuscript. KLA contributed to manuscript revision, read, and approved the submitted version. All authors contributed to the article and approved the submitted version.

Acknowledgments

Publication of this article was funded in part by the George Mason University Libraries Open Access Publishing Fund.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frsus.2022.927294/full#supplementary-material

References

AASHE (2019a). STARS® 2.2 technical manual. Association for the Advancement of Sustainability in Higher Education (AASHE). Available online at: https://stars.aashe.org/resources-support/technical-manual/ (accessed June 30, 2019).

AASHE (2019b). STARS® 2.2 technical manual: EN 06 Assessing sustainability culture. Association for the Advancement of Sustainability in Higher Education (AASHE). Available online at: https://drive.google.com/file/d/1qm_-hXC4RN9x3FBeTDGDabL8uGZEnCOy/view?usp=embed_facebook (accessed June 30, 2019).

AASHE (2019c). How many total points are available in STARS? The Sustainability Tracking, Assessment and Rating System (STARS). Available online at: https://stars.aashe.org/resources-support/help-center/the-basics/how-many-total-points-are-available-in-stars/ (accessed May 15, 2022).

AASHE (2020a). Reports and data. The Sustainability Tracking, Assessment and Rating System (STARS). Available online at: https://stars.aashe.org/reports-data/ (accessed May 10, 2022).

AASHE (2020b). Stars participants and reports. Association for the Advancement of Sustainability in Higher Education. Available online at: https://reports.aashe.org/institutions/participants-and-reports/ (accessed February 01, 2021).

AASHE STARS (2022). Sustainability Literacy Assessment. The Sustainability Tracking, Assessment and Rating System. Available online at: https://stars.aashe.org/resources-support/help-center/academics/sustainability-literacy-assessment/ (accessed May 15, 2022).

About Carnegie Classification. Available online at: https://carnegieclassifications.acenet.edu/ (accessed May 17, 2022).

Akeel, U., Bell, S., and Mitchell, J. E. (2019). Assessing the sustainability literacy of the Nigerian engineering community. J. Clean. Product. 212, 666–676. doi: 10.1016/j.jclepro.2018.12.089

Alegre, J., and Chiva, R. (2013). Linking entrepreneurial orientation and firm performance: the role of organizational learning capability and innovation performance. J. Small Bus. Manage. 51, 491–507. doi: 10.1111/jsbm.12005

Alghamdi, N., den Heijer, A., and de Jonge, H. (2017). Assessment tools' indicators for sustainability in universities: an analytical overview. Int. J. Sustain. Higher Educ. 18, 84–115. doi: 10.1108/IJSHE-04-2015-0071

Alshuwaikhat, H. M., and Abubakar, I. (2008). An integrated approach to achieving campus sustainability: assessment of the current campus environmental management practices. J. Clean. Product. 16, 1777–1785. doi: 10.1016/j.jclepro.2007.12.002

Argyris, C., and Schön, D. A. (1978). Organizational Learning: A Theory of Action Perspective. Boston: Addison-Wesley Pub. Co.

Arum, R., Roksa, J., and Cook, A. (2016). Improving Quality in American Higher Education: Learning Outcomes and Assessments for the 21st Century. New York, NY: John Wiley and Sons.

Boddy, C. R. (2016). Sample size for qualitative research. Qual. Market Res. Int. J. 19, 426–432. doi: 10.1108/QMR-06-2016-0053

Brundtland, G. H. (1987). Report of the World Commission on Environment and Development: Our Common Future (document A/42/427). United Nations General Assembly. Available online at: https://sustainabledevelopment.un.org/content/documents/5987our-common-future.pdf (accessed May 24, 2021).

Buckley, J. B., and Michel, J. O. (2020). An examination of higher education institutional level learning outcomes related to sustainability. Innovat. High. Educ. 45, 201–217. doi: 10.1007/s10755-019-09493-7

Calder, W., and Clugston, R. M. (2003). International efforts to promote higher education for sustainable development. Sustain. Develop. 15, 9. doi: 10.1016/S0952-8733(02)00009-0

Callewaert, J. (2018). “Sustainability literacy and cultural assessments,” in Handbook of Sustainability and Social Science Research, eds W. Leal Filho, R. W. Marans, and J. Callewaert (New York, NY: Springer International Publishing), pp. 453–464. (accessed May 24, 2022).

Ceulemans, K., Molderez, I., and Van Liedekerke, L. (2015). Sustainability reporting in higher education: a comprehensive review of the recent literature and paths for further research. J. Clean. Product. 106, 127–143. doi: 10.1016/j.jclepro.2014.09.052

Chen, C., An, Q., Zheng, L., and Guan, C. (2022). Sustainability literacy: assessment of knowingness, attitude and behavior regarding sustainable development among students in China. Sustainability 14, 4886. doi: 10.3390/su14094886

Décamps, A., Barbat, G., Carteron, J.-C., Hands, V., and Parkes, C. (2017). Sulitest: A collaborative initiative to support and assess sustainability literacy in higher education. Int. J. Manage. Educ. 15, 138–152. doi: 10.1016/j.ijme.2017.02.006

Dee, J. R., and Leišytė, L. (2016). “Organizational learning in higher education institutions: Theories, frameworks, and a potential research agenda,” in Higher Education: Handbook of Theory and Research, ed M. B. Paulsen (Springer International Publishing), 275–348. doi: 10.1007/978-3-319-26829-3_6

Dryzek, J. S. (2005). “Environmentally benign growth: sustainable development,” in The Politics of the Earth, 2nd Edn. (Oxford: Oxford University Press). Available online at: http://staff.washington.edu/jhannah/geog270aut07/readings/SustainableDevt/Dryzek%20-%20The%20quest%20for%20Sustainability.pdf (accessed May 19, 2021).

Findler, F., Schönherr, N., Lozano, R., Reider, D., and Martinuzzi, A. (2019). The impacts of higher education institutions on sustainable development: a review and conceptualization. Int. J. Sustain. High. Educ. 20, 23–38. doi: 10.1108/IJSHE-07-2017-0114

Gigauri, I., Vasilev, V., and Mushkudiani, Z. (2022). In pursuit of sustainability: towards sustainable future through education. Int. J. Innovat. Technol. Econ. 1, 37. doi: 10.31435/rsglobal_ijite/30032022/7798

Hacking, T., and Guthrie, P. (2008). A framework for clarifying the meaning of triple bottom-line, integrated, and sustainability assessment. Environ. Impact Assess. Rev. 28, 73–89. doi: 10.1016/j.eiar.2007.03.002

Hartono, E., Wahyudi, S., and Harahap, P. (2017). Does organizational learning affect the performance of higher education lecturers in Indonesia? the mediating role of teaching competence. Int. J. Environ. Sci. Educ. 12, 865–878. Available online at: https://eric.ed.gov/?id=EJ1144864

Hopkinson, P., and James, P. (2010). Practical pedagogy for embedding ESD in science, technology, engineering and mathematics curricula. Int. J. Sustain. High. Educ. 11, 365–379. doi: 10.1108/14676371011077586

Hou, D., and O'Connor, D. (2020). “Green and sustainable remediation: concepts, principles, and pertaining research,” in Sustainable Remediation of Contaminated Soil and Groundwater (London: Elsevier), pp. 1–17.

Huber, G. P. (1991). Organizational learning: the contributing processes and the literatures. Organization Sci. 2, 88–115. doi: 10.1287/orsc.2.1.88

Jerez-Gómez, P., Céspedes-Lorente, J., and Valle-Cabrera, R. (2005). Organizational learning capability: a proposal of measurement. J. Bus. Res. 58, 715–725. doi: 10.1016/j.jbusres.2003.11.002

Kezar, A., Gehrke, S., and Elrod, S. (2015). Implicit theories of change as a barrier to change on college campuses: an examination of STEM reform. Rev. High. Educ. 38, 479–506. doi: 10.1353/rhe.2015.0026

Kezar, A. J., and Holcombe, E. M. (2020). Barriers to organizational learning in a multi-institutional initiative. High. Educ. 79, 1119–1138. doi: 10.1007/s10734-019-00459-4

Maragakis, A., and Dobbelsteen, A. V. D. (2013). Higher education: Features, trends and needs in relation to sustainability. J. Sustain. Educ. 4, 1–20.

Marans, R. W., Levy, B., Celia, H., Bennett, J., Bush, K., Doman, C., et al. (2010). Campus Sustainability Integrated Assessment: Culture Team Phase I Report. Michigan: University of Michigan. Available online at: https://graham.umich.edu/media/files/CampusIA-InterimReport.pdf (accessed May 24, 2022)

Marans, R. W., Callewaert, J., and Shriberg, M. (2015). “Enhancing and monitoring sustainability culture at the University of Michigan,” in Transformative Approaches to Sustainable Development at Universities, ed W. Leal Filho (New York, NY: Springer International Publishing), pp. 165–179.

Martinez-Lozada, A. C., and Espinosa, A. (2022). Corporate viability and sustainability: a case study in a Mexican corporation. Syst. Res. Behav. Sci. 39, 143–158. doi: 10.1002/sres.2748

Mertler, C. A. (2014). “Introduction to data-driven educational decision making,” in The Data-Driven Classroom: How Do I Use Student Data to Improve my Instruction? Alexandria, VA: ASCD.

NCES (2021). Digest of Education Statistics, 2020. Institute of Education Sciences, National Center for Education Statistics, U.S. Department of Education; National Center for Education Statistics. Available online at: https://nces.ed.gov/programs/digest/d20/tables/dt20_317.10.asp?current=yes (accessed November 03, 2020).

Ramos, T., and Pires, S. M. (2013). “Sustainability assessment: the role of indicators,” in Sustainability Assessment Tools in Higher Education Institutions, eds S. Caeiro, W. L. Filho, C. Jabbour, and U. M. Azeiteiro (New York, NY: Springer International Publishing), pp. 81–99.

Redman, A., Wiek, A., and Barth, M. (2021). Current practice of assessing students' sustainability competencies: a review of tools. Sustain. Sci. 16, 117–135. doi: 10.1007/s11625-020-00855-1

Rivera, C. J., and Savage, C. (2020). Campuses as living labs for sustainability problem-solving: trends, triumphs, and traps. J. Environ. Stud. Sci. 10, 334–340. doi: 10.1007/s13412-020-00620-x

Sayed, A., and Kamal Asmuss, M. (2013). Benchmarking tools for assessing and tracking sustainability in higher educational institutions: identifying an effective tool for the University of Saskatchewan. Int. J. Sustain. High. Educ. 14, 449–465. doi: 10.1108/IJSHE-08-2011-0052

Shephard, K., Harraway, J., Lovelock, B., Skeaff, S., Slooten, L., Strack, M., et al. (2014). Is the environmental literacy of university students measurable? Environ. Educ. Res. 20, 476–495. doi: 10.1080/13504622.2013.816268

Shriberg, M. (2002). Institutional assessment tools for sustainability in higher education: Strengths, weaknesses, and implications for practice and theory. Higher Educ. Policy 15, 153–167. doi: 10.1016/S0952-8733(02)00006-5

Smith, P. A. C. (2012). The importance of organizational learning for organizational sustainability. Learn. Organiz. 19, 4–10. doi: 10.1108/09696471211199285

Stough, T., Ceulemans, K., and Cappuyns, V. (2021). Unlocking the potential of broad, horizontal curricular assessments for ethics, responsibility and sustainability in business and economics higher education. Assess. Eval. High. Educ. 46, 297–311. doi: 10.1080/02602938.2020.1772718

The Carnegie Classification of Institutions of Higher Education (2020). Available online at: https://carnegieclassifications.iu.edu/lookup/srp.php?clq=%7B%22basic2005_ids%22%3A%2215%22%7D&start_page=standard.php&backurl=standard.php&limit=0,50 (accessed May 17, 2021)

ULSF (1990). Talloires Declaration. Association of University Leaders for a Sustainable Future. Available online at: http://ulsf.org/talloires-declaration/

UNESCO (2015). Sustainable Development. UNESCO. Available online at: https://en.unesco.org/themes/education-sustainable-development/what-is-esd/sd

United Nations (2013). Education for Sustainable Development. UNESCO. Available online at: https://en.unesco.org/themes/education-sustainable-development

Wals, A. E. J. (2012). “Learning our way out of unsustainability: the role of environmental education,” in The Oxford Handbook of Environmental and Conservation Psychology, ed S. D. Clayton (Oxford: Oxford University Press), p. 32. Available online at: http://www.oxfordhandbooks.com/abstract/10.1093/oxfordhb/9780199733026.001.0001/oxfordhb-9780199733026-e-32 doi: 10.1093/oxfordhb/9780199733026.013.0032

Wiek, A., Withycombe, L., and Redman, C. L. (2011). Key competencies in sustainability: a reference framework for academic program development. Sustain. Sci. 6, 203–218. doi: 10.1007/s11625-011-0132-6

Zgrzywa-Ziemak, A., and Walecka-Jankowska, K. (2020). The relationship between organizational learning and sustainable performance: an empirical examination. J. Workplace Learn. 33, 155–179. doi: 10.1108/JWL-05-2020-0077

Keywords: sustainability literacy, sustainability culture, evidence-based decision-making, institutional impact, organizational learning, higher education, AASHE, STARS

Citation: Lad N and Akerlof KL (2022) Assessing campus sustainability literacy and culture: How are universities doing it and to what end? Front. Sustain. 3:927294. doi: 10.3389/frsus.2022.927294

Received: 24 April 2022; Accepted: 28 June 2022;

Published: 19 July 2022.

Edited by:

Rodrigo Lozano, University of Gävle, Sweden

Reviewed by:

Núria Bautista-Puig, University of Gävle, Sweden

Subarna Sivapalan, University of Technology Petronas, Malaysia

Joy Kcenia Polanco O'Neil, University of Wisconsin–Stevens Point, United States

Copyright © 2022 Lad and Akerlof. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nikita Lad, nlad2@gmu.edu
