- 1Institute of Robotics, Bulgarian Academy of Sciences, Sofia, Bulgaria

- 2Department of Mathematics and Informatics, Sofia University, Sofia, Bulgaria

A mini-review of the literature, supporting the view on the psychophysical origins of some user acceptance effects of cyber-physical systems (CPSs), is presented and discussed in this paper. Psychophysics implies the existence of a lawful functional dependence between some aspect/dimension of the stimulation from the environment, entering the senses of the human, and the psychological effect that is being produced by this stimulation, as reflected in the subjective responses. Several psychophysical models are discussed in this mini-review, aiming to support the view that the observed effects of reactance to a robot or the uncanny valley phenomenon are essentially the same subjective effects of different intensity. Justification is provided that human responses to technologically and socially ambiguous stimuli obey some regularity, which can be considered a lawful dependence in a psychophysical sense. The main conclusion is based on the evidence that psychophysics can provide useful and helpful, as well as parsimonious, design recommendations for scenarios with CPSs for social applications.

1 Introduction

The introduction of cyber-physical systems (CPSs) has become ubiquitous, raising issues of adequate design and reliable interdependencies between their different components (technical and social), and is in itself of large societal impact (Losano and Vijayan, 2020; Wang et al., 2023; El-Haouzi et al., 2021). Some of these CPSs are especially designed with interfaces capable of conveying social communication (such as chat bots in various applications) and education, e.g., LEGO (Lawhead et al., 2002) or MIRO (Collins et al., 2015) robots, as well as performing psycho-social or pedagogical rehabilitation roles (Bachrach, 1992; Dimitrova et al., 2023; Robinson et al., 2019; Dimitrova et al., 2021a). The CPSs in focus in the present mini-review are mostly those used for social applications, as described in the book by Dimitrova and Wagatsuma (2019). A functional classification of the existing CPSs, consisting of 10 groups of the respective technological devices/systems, is proposed in Dimitrova et al. (2016). Eight of these are commonly referred to with respect to their functional roles, such as CPSs for manufacturing, agriculture, transport, energy, medicine, and disability. Two more types are added: “CPSs for creativity, art, social communication/media and companionship” and “CPSs for education and pedagogical rehabilitation” (which were further explored within the EU-funded research project “CybSPEED: Cyber-physical systems for pedagogical rehabilitation in special education,” 2017–2023)1. It is evident that these two types of CPSs have emerged in the lifetime of the most recent human generations; therefore, investigating their socio-cultural relevance from different scientific perspectives, including psychophysical and psychosocial, is still ahead.

The paper is an attempt to relate the “uncanny valley” effect, as described originally by Masahiro Mori in his seminal work, reproduced in Mori et al. (2012), to some laws in psychophysics, which can explain and predict the intensity of a human reaction to a mechanical device that is intended to perform social roles and is, therefore, perceived as a special category/ontology of “being,” sharing the features of sentient, living, and nonliving things (Prescott, 2017; Moore, 2012; Dimitrova and Wagatsuma, 2015).

A point of terminological departure in the present mini-review is the attempt to make (as much as possible) a clear distinction between metaphors and real entities/processes/objects, which are often used in human–robot interaction research. For example, a “social robot” is a metaphor, whereas a “social CPS” is a real entity: a CPS for a social application, such as a robot used in teaching children social skills. The “uncanny valley” is a metaphor, whereas “psychological reactance” is a real process, which is measured in the respective scientific discipline (psychometrics). In a similar manner, we refer to “user acceptance” of social CPSs as a real psychological effect, which differs and is measurable under various conditions and which may, or may not, correlate with other psychological effects such as trust, compliance, and liking.

Numerous factors of user acceptance of socially functioning CPSs (Horváth, 2014) have been intensively studied recently within frameworks like the technology acceptance model (TAM) and the unified theory of acceptance and use of technology (UTAUT) and summarized in previous studies (Wolbring et al., 2013; Ghazali et al., 2018; Ghazali et al., 2020). Furthermore, user acceptance of persuasive robots has been modeled within the recently proposed persuasive robot acceptance model (PRAM), which demonstrated that the user trusting and liking the robot added predictive power to the model. However, psychological reactance and compliance, being the processes of interest in the present mini-review, “were not found to contribute to the prediction of persuasive robot’s acceptance” (Ghazali et al., 2020, p. 1075). This outcome suggests that the perception of the persuasion from the robot (an explicit process) is not directly linked to the self-reflection of reactance to the robot (an implicit process) (Ghazali et al., 2018). Therefore, employing a methodology beyond psychometrics can be helpful in revealing important aspects that contribute to the user experiencing comfort with the CPS and in providing other useful guidelines for technology design.

Unlike numerous studies on factor similarity, contributing to user acceptance of robotic systems, our focus is on defining the inner psychological dynamics of a human encounter with a CPS in terms of its intensity (measured response to a range of stimulations) and its valence (type of emotional reaction, i.e., positive or negative). The main assumption in the analysis here is that the “uncanny valley” and “reactance” to the robot are the same psychological effect but differ in emotional depth: the former is deeper and the latter is shallower. In this way, different roles of robots can be allocated depending on how confident users are with different styles of interaction with mechanical, machine-looking, humanoid, or android devices.

Section 2 of this mini-review presents some classical psychophysical assumptions that are relevant to the issue of CPS acceptance as a psychological reality. Within this subjective realm, by applying Thurstone’s model (e.g., the “law of comparative judgement”) (Thurstone, 1927; Thurstone, 1928), the individual distance between the perceived agents on the human likeness dimension (x-axis) can be defined and facets of the reaction of the human toward the agents (y-axis) assessed, as described in Section 3. Section 3 also summarizes the main points of the paper, proposing the implementation of indirect scaling procedures to predict user confidence with specific CPSs/robots performing different social roles.

2 Classical psychophysical assumptions relevant to user acceptance of social CPSs as a psychological reality

Masahiro Mori defined the “uncanny valley” effect in psychophysical terms, writing that “the mathematical term monotonically increasing function2 describes a relation in which the function y = f(x) increases continuously with the variable x … I have noticed that, in climbing toward the goal of making robots appear like a human3 our affinity for them4 increases until we come to a valley … , which I call the uncanny valley” (Mori et al., 2012, p. 98).

The small increment of x, bringing the “uncanny valley” emotional response from liking to repulsion with the increase in “human likeness” beyond a certain degree, resembles the so-called “just noticeable difference” (JND) in psychophysics (Sanford and Halberda, 2023). This increment in x is commonly assumed as the quantitative basis for devising the so-called “psychophysics laws” of perception of Weber, Fechner, or Stevens (Laming, 2009; Fechner, 1948; Stevens, 1957). For example, Weber’s law assumes that the JND of a stimulus (Δx) is proportional to the intensity of this stimulus x, so under different conditions, the JNDs are different in magnitude (when x is big, Δx is big and vice versa, so that Δx/x = constant) (Laming, 2009). Fechner’s law postulates that, in strictly controlled experimental situations, the subjective experience is a logarithmic function of the stimulation (Fechner, 1948). Stevens proposed a power function to express the same relation, where the exponent can be greater or less than 1 (Stevens, 1957). In general, it was found that these laws are valid under strictly regulated experimental conditions and with respect to sets of intensities of “pure” stimuli like pitch, illumination, and amounts of a chemical substance for taste. Further studies demonstrated the complexity of the brain processing that underlies every sensation reported by the subject, including the processing of abstract concepts like “human likeness” (Deco and Rolls, 2006; Johnson et al., 2002).
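As an illustration only, the three classical relations can be written as short functions. The sketch below is not taken from the cited sources; the parameter names (k for the Weber fraction and for the Fechner scaling constant, x0 for a threshold intensity, a and c for the Stevens exponent and scaling constant) and all numerical values are assumptions chosen for the example.

```python
import math


def weber_jnd(x, k):
    """Weber's law: the just noticeable difference grows in proportion to x."""
    return k * x


def fechner_sensation(x, x0, k=1.0):
    """Fechner's law: sensation is a logarithmic function of intensity x,
    measured relative to a threshold intensity x0 (x and x0 must be > 0)."""
    return k * math.log(x / x0)


def stevens_sensation(x, a, c=1.0):
    """Stevens' power law: sensation is a power function of intensity x;
    the exponent a may be greater or less than 1."""
    return c * x ** a


if __name__ == "__main__":
    # Illustrative intensities and constants (assumed, not empirical).
    for x in (10.0, 100.0, 1000.0):
        print(x, weber_jnd(x, k=0.1),
              fechner_sensation(x, x0=1.0),
              stevens_sensation(x, a=0.5))
```

Under Weber's law the ratio of the JND to x stays constant, whereas under Fechner's law equal ratios of intensity produce equal increments of sensation.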

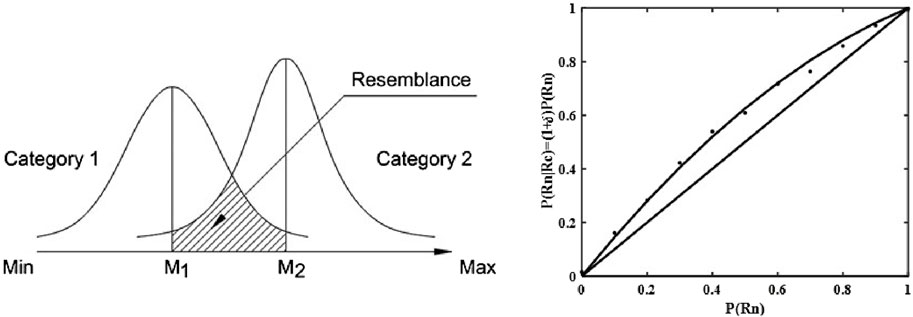

Of main interest in psychophysics are some boundary conditions in which the subject is not entirely sure of her/his assessment in each trial, yet over multiple trials, the threshold of sensitivity to the minimal increase (Δx) in the physical quantity of the stimulation can be determined (the so-called differential threshold) (Sanford and Halberda, 2023). That is, two different, but close, values of a given intensity x1 and x2 on the stimulus dimension x, where x2 − x1 = Δx, are represented by the means M1 and M2, respectively, of two different but close distributions of psychological effects, where ΔM = M2 − M1. The psychophysical methodology often uses methods of indirect measurement to evaluate the above subjective values, such as the “law of comparative judgement” proposed by Thurstone (1928) or the “law of categorical judgement” proposed by Torgerson (1958). Thurstone’s model is based on a scaling method for pairwise comparisons of stimulus intensities x1, x2, …, xn, resulting in the respective values of the means of the subjective effects’ distributions M1, M2, …, Mn. The distances between the means are plotted on an interval scale (after computing the probabilities of the relations of the pairwise comparisons and the respective z transformations, omitted here for simplicity) (Franceschini and Maisano, 2020).
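The z-transformation step can be sketched as follows, assuming Thurstone's Case V simplification (equal and uncorrelated dispersions of the subjective effects), which the text does not specify. The function name, the clipping constant eps, and the proportion matrix are hypothetical and serve only to show the mechanics.

```python
from statistics import NormalDist


def thurstone_case_v(p, eps=0.01):
    """Interval-scale values from a matrix of pairwise-choice proportions.

    p[i][j] is the proportion of trials in which stimulus j was judged
    greater than stimulus i (so p[i][j] + p[j][i] == 1 for i != j).
    Proportions are clipped to [eps, 1 - eps] so the z-transform stays finite.
    The smallest scale value is shifted to zero.
    """
    n = len(p)
    std_normal = NormalDist()
    z = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                clipped = min(max(p[i][j], eps), 1.0 - eps)
                z[i][j] = std_normal.inv_cdf(clipped)
    # Case V estimate: the scale value of stimulus j is the mean of column j.
    scale = [sum(z[i][j] for i in range(n)) / n for j in range(n)]
    lowest = min(scale)
    return [s - lowest for s in scale]


# Hypothetical proportions for four agents, ordered from least to most
# human-like (invented numbers, not data from any cited study).
proportions = [
    [0.50, 0.79, 0.95, 0.98],
    [0.21, 0.50, 0.79, 0.88],
    [0.05, 0.21, 0.50, 0.66],
    [0.02, 0.12, 0.34, 0.50],
]
scale_values = thurstone_case_v(proportions)
```

The returned values are positions on an interval scale, so only the differences between them are meaningful; the zero point is arbitrary.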

Our proposal is to use Thurstone’s scaling method in order to arrange a set of robots on an interval x scale of Mori’s “human likeness” dimension. By assessing the degree of overlap of the probability distributions with means M1, M2, …, the method can predict conditions in which psychological confusion may occur due to the possible overlap of the distributions of the effects generated by different stimuli (see Figure 1, left)5. We follow the theory of Moore (2012) in assuming the overlap of category distributions as the source of confusion between “human” and “non-human” categories. We propose differing distances between agents (human, robot, or android) on a single x-axis. We set as an aim for future research to study cases of different degrees of overlap between distributions of effects from different entities like mechanical robots, humanoid robots, and android robots within certain educational scenarios.
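The degree of overlap can be quantified in a few lines once the means are placed on an interval scale. The sketch below assumes normally distributed effects with a common standard deviation, which is an illustrative simplification and not a claim of the cited models; the function name and the example means are hypothetical. It relies on the overlap method of NormalDist from the Python standard library.

```python
from statistics import NormalDist


def category_overlap(m1, m2, sd=1.0):
    """Overlapping area (between 0 and 1) of two normal distributions of
    subjective effects with means m1 and m2 and a common standard deviation."""
    return NormalDist(m1, sd).overlap(NormalDist(m2, sd))


# Hypothetical positions on the human likeness scale (assumed values).
far_apart = category_overlap(0.0, 3.0)  # e.g., mechanical robot vs. human
close = category_overlap(2.5, 3.0)      # e.g., android vs. human
```

A larger value indicates that the two categories are harder to tell apart, which is the condition under which category confusion would be expected.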

Figure 1. Hypothetical representation of two “nearly independent” distributions of effects produced by the categories 1 (“non-human”) and 2 (“human”) (left). Reproduced from Dimitrova et al. (2021b), licensed under Creative Commons Attribution License (CC BY). A hypothetical plot of Tulving and Wiseman’s “near-independence” function of P(Rn) along the x axis and P(Rn|Rc) = (1+ δ)P(Rn) along the y axis (right).

Some accounts of user acceptance of CPSs in terms of the existence of possible higher overlap (due to a slight distortion of the underlying functions of representation) of the distributions of the psychological effects are formulated in recent theories of user interaction with a variety of humanoid or android robots, such as the theory of violation of predictive coding (Saygin et al., 2012; Urgen et al., 2013; Urgen et al., 2018), realism inconsistency theory (MacDorman and Chattopadhyay, 2016), distortion of categorical perception theory (Moore, 2012), and robot mediation in social control theory (Xu et al., 2023).

The attempts to formulate lawful dependencies in human cognition (based on postulating function distortions) go beyond perception. For example, an account of human memory performance is proposed by Tulving and Wiseman, who derived a function of a “near-independence” relation between the probability of recognizing a studied item, P(Rn), and that of recalling it, P(Rc), where P(Rn|Rc) = P(Rn)(1 + δ), or P(Rn|Rc)/P(Rn) = 1 + δ, called the “recognition failure of recallable words” phenomenon (Tulving and Wiseman, 1975, p. 79). This theory argues against the common assumption that the probabilities of recognizing and recalling studied items are highly correlated, i.e., P(Rc) = P(G)P(Rn), where P(G) is the probability of generating a previously studied item and recognizing it as such (Bahrick, 1970).

It is well known that when the conditional probability of one event given another, P(Rn|Rc), equals its unconditional probability, P(Rn), the two events are independent (George, 2004; Brereton, 2016), and the plot of P(Rn|Rc) against P(Rn) is the diagonal (Figure 1, right)6. In the theory proposed by Tulving and Wiseman (1975), this independence relation is slightly violated by a fraction δ, where δ = c[1 − P(Rn)], and c is a coefficient in the range (0, 1).
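For concreteness, the near-independence relation can be evaluated numerically. The sketch below simply implements the two expressions given above; the function name and the default value c = 0.5 are assumptions made for illustration, and any c in the range (0, 1) can be passed instead.

```python
def recognition_given_recall(p_rn, c=0.5):
    """P(Rn|Rc) = P(Rn) * (1 + delta), with delta = c * (1 - P(Rn))."""
    if not 0.0 <= p_rn <= 1.0:
        raise ValueError("p_rn must be a probability in [0, 1]")
    if not 0.0 < c < 1.0:
        raise ValueError("c must lie in the open interval (0, 1)")
    delta = c * (1.0 - p_rn)
    return p_rn * (1.0 + delta)


# Deviation from the diagonal (strict independence) at P(Rn) = 0.0, 0.1, ..., 1.0.
deviations = [recognition_given_recall(p / 10) - p / 10 for p in range(11)]
```

The deviation equals c·P(Rn)·[1 − P(Rn)], so it vanishes at P(Rn) = 0 and P(Rn) = 1 and is largest at P(Rn) = 0.5; the curve therefore bows slightly above the diagonal.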

Tulving and colleagues observed this slight distortion of the independence relation under numerous experimental conditions (Tulving and Wiseman, 1975; Tulving and Watkins, 1977). This finding seems logically linked to the discussed models of the “uncanny valley,” which postulate a slight distortion of the distributions of the subjective effects produced by a robot (with a mean M1) that strongly resembles a human (with a mean M2) on the x-axis. The distortion leads to a somewhat greater overlap (causing psychological effects above a certain subjective threshold) than in the case of effects produced by completely independent events, as discussed in Moore (2012).

These psychophysical “laws” operate on the abstract representation of the external stimulus in the human mental representation space, which may, or may not, be entirely mutually congruent (Osorina and Avanesyan, 2021; Momen et al., 2024), still aiming at creating a meaningful and truthful picture of the objective world. Such a possibility is supported by fMRI studies that map linear to nonlinear transformations of psychophysical processes onto neuronal activities, in support of the existence of the “uncanny valley” effect (Rosenthal-Von der Pütten et al., 2019).

A recent systematic review of the human acceptance of social robots provides evidence of humans having a generally positive attitude toward social robots, hypothesizing the following intriguing possibility, forwarded earlier by Prescott (2017)—to “acknowledge the qualities that mark social robots as not just another technological development but perhaps as an entire new social group7 with its own complexity” (Naneva et al., 2020). In the latter work, a new ontological category is being proposed—that of the robots as technological tools, which are perceived as more than just machines, i.e., as entities, possessing some distinctive features of being agents—initiative, autonomy, and reflection. The present paper considers the psychophysical nature of the inner, subjective response to this newly emergent complex stimulus—the CPS/robot—with its instantly presented perceptual features of physical, technical, technological, bio-physical, and social appearance.

Until recently, it was possible to assume that perceiving a robot was cognitively similar to perceiving variants of non-living things such as puppets, mechanical toys, and avatars (Dimitrova et al., 2020). However, in recent decades, the CPS/robot category has emerged as a new socio-cultural phenomenon (Prescott, 2017; Naneva et al., 2020; Dimitrova and Wagatsuma, 2015). Because this category exists on the verge of perception (the subjective level of processing the incoming information from the senses) and categorization (the subjective level of retrieving from memory the knowledge about known and unknown categories of entities), it is possible to expect certain dynamics in the mental representations of robots, tailored to the specific context of usage, as well as some shift toward higher psychological confidence with multifunctional technological devices compared with 50 years ago, when Masahiro Mori proposed his theory.

As pointed out by Mori, a CPS, or a robot, can be a physical entity with few, or no, biologically inspired features, or it can resemble biological tissue, or even non-living entities like zombies. His proposal is to find the balance between functional realism and aesthetics in the design of the features of the physical appearance of humanoid agents in order to avoid the “uncanny valley” effect (Mori et al., 2012). To a great extent, this advice was followed while designing the humanoid robot NAO at Aldebaran Robotics, Ltd., commonly used in, for example, interactive scenarios for children (Jackson and Williams, 2021).

Our approach asserts that the classical and modern psychophysical assumptions describe the regularities of the internal representation of the complex external environment in which the human exists (physical, technological, and social) on different conceptual levels of mental abstraction, reflecting the complexities of the attributes of the objects in the world. Justification for this view was given by Johnson et al. (2002), referring to the classics of psychophysics from a modern perspective: “Fechner seemed to have a clear notion of what had to be done to translate the study of outer psychophysics to the study of inner psychophysics (Fechner 1860, p. 56): ‘Quantitative dependence of sensation on the [outer] stimulus can eventually be translated into dependence on the [neural activity] that directly underlies sensation—in short, the psychophysical processes—and the measurement of sensation will be changed to one depending on the strength of these processes.’” When people coexist with CPSs in various social situations such as at work, home, and hospital, the attributes of the robot are subjectively processed from many facets, including the psychophysical facet.

3 A view on the psychophysical distance between the robot and human agent stimuli

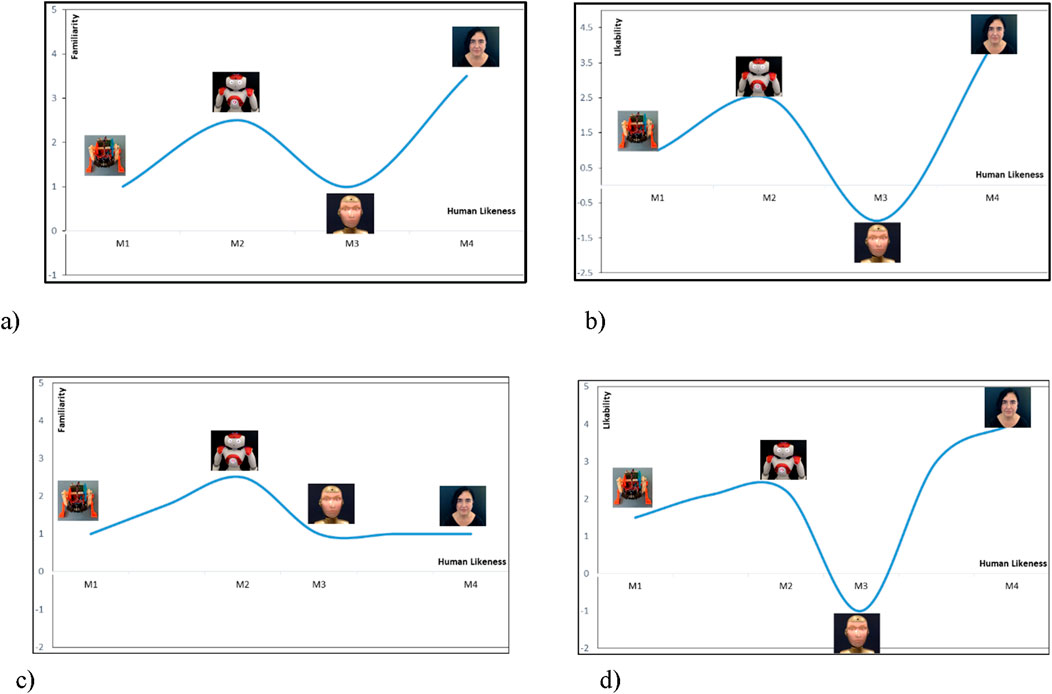

The classical “uncanny valley” function depicts the functional relation between two dimensions: human likeness and affinity (Mori et al., 2012). The horizontal axis x represents the human likeness, whereas the vertical axis y represents the affinity in Mori’s terms. Recent studies split the affinity attribute/feature into familiarity and likability since the original affinity feature is a complex attribute, which is easily understood in Japanese but cannot be translated unambiguously into English. Both familiarity and likability are used to complement or confirm the main assumptions in investigating various factors that may produce the psychological effect of the uncanny valley in an encounter with an artificial agent (Rosenthal-Von der Pütten et al., 2019).

In previous studies, we used four types of agents in various roles, such as a toy (the walking robot BigFoot; Nikolov et al., 2022), a zoology teacher (the humanoid robot NAO8, called Roberta; Dimitrova et al., 2019), and a counseling assistant (the android type of robot SociBot9, called Alice, which was previously used in the studies on psychological reactance to robots by Ghazali et al. (2018) and Ghazali et al. (2020)). In our study, the users’ reaction to videos of Alice and Roberta was compared to the reaction to a video of a human actress (by the name of Violina), along six characteristics (plotted on the y axis as a function of x), three positive (sociability, trust, and emotional stability) and three negative (weirdness, threat, and aggression) (Dimitrova et al., 2023). Viewers assessed the positive and negative features of the presented faces (human, android, or robotic, i.e., machine-looking) differently (main effect of the factor feature type). They tended to be cautious in negatively evaluating neutral faces and were inclined to see positive features in these faces to a large extent. This observation deserves further exploration, yet it suggests a certain asymmetry in user attribution of features to the robots, depending on the robots’ intended roles.

Figure 2 plots the hypothetical uncanny valley effect, expected at the encounter of each of the above agents, according to the proposed psychophysical account of robot acceptance by the human. The reactance effect is assumed to be identical to the uncanny valley effect in terms of its valence but different in intensity: it is manifested on the emotional level in the first case (reactance) and on the visceral level in the second (uncanny valley). Cases (a) and (b) of Figure 2 represent hypothetically the classical view of the uncanny valley effect, whereas cases (c) and (d) present the psychophysical view forwarded in the present paper.

Figure 2. Plots of the expected uncanny valley function in four different cases: (A) plot of familiarity as a function of human likeness in the classical case; (B) plot of likability as a function of human likeness in the classical case; (C) plot of familiarity as a function of human likeness in the proposed case; and (D) plot of likability as a function of human likeness in the proposed case.

The machine-looking toy robot BigFoot is expected to be placed at the left origin of the human likeness dimension in all four cases shown in Figure 2. The robot is the least human-looking (M1 on the x axis), least familiar, and, possibly, not liked (y axis). The position of the humanoid robot Roberta (M2 on the x axis), popular for being designed as cute and likable by children, will possibly be approximately the same in all four cases as well: more human-like than BigFoot, familiar as being quite frequent, and likable by design (y axis). The relative positions of the android Alice and the human Violina, however, are expected to differ in the classical and the proposed cases. In the classical case, the uncanny valley function would predict a decrease below the zero line of the y-axis with the increase in human-like features of the artificial agent Alice. This decrease below the zero line signifies the affective (negative emotional) reaction to the android, which perceptually closely resembles a human agent. Alice is unfamiliar (a) and possibly not much liked because of this (b). In both cases, the human face is expected to be the most familiar and likable (although never seen before).

In case (c), only NAO is familiar, whereas BigFoot, Alice (M3 on the x axis), and Violina (M4 on the x axis) are never seen before, and the familiarity effect will be similar for all of them (y axis). At the same time, the human likeness is distinctly different in all three robotic cases (x axis). In case (d), it is not quite possible to predict which agent will be most liked (y axis). All faces have neutral expressions, and the classical condition of the expected repulsion by the artificial agent will not hold to the full extent. Still, the characteristic form of the uncanny valley function—an increase with Roberta and decrease with Alice—will be observed as a law of perception, holding every time when robots are assessed by their appearance or action.

Most of the existing models of this phenomenon postulate the positions of the agents on the x axis in an ad hoc way. Our proposal is to implement the rating procedures for different characteristics on the y axis (trust, likability, familiarity, etc.) plus the indirect scaling procedure of Thurstone to determine the (non-arbitrary) interval values with respect to the “human likeness” dimension (x axis)—M1, M2, M3, and M4—as the means for the distributions of each category of agents—a mechanical robot, machine-looking humanoid, android, and a human. Moreover, for every role or scenario involving the CPS/robot, and even for each human, interacting with the robot, individual mapping can be performed so that for every user, the level of confidence with any of the robots can be defined. In this way, the proposed psychophysical approach can be used as an overall methodological framework, applicable for the design of CPSs, intended to support users in general and enhance accessibility via CPSs for users with special needs.

Overall, the current analysis is validated to a certain extent by Mathur and Reichling (2016). Users were presented with photos of the 80 most popular on-the-web robotic faces, ranging from 0 to max on the x-axis of “human likeness.” By fitting the data curves of the robot’s likability, a well-pronounced uncanny valley effect was observed. The authors assert that “a formidable android specific problem” exists (p. 22), which we call a lawful dependence of human reaction to CPSs/robots of different perceptual similarities to a human. The machine-looking robot, resembling NAO, obtained the highest score on “likability,” similar to the result that we observed and presented in Dimitrova et al. (2024). Mori’s intuition was supported in terms of a strong link between functionality and aesthetics when designing humanoid robots as a subclass of the class “social CPSs.” Mathur and Reichling (2016) revealed different effects of the factors likability and trust, which did not predict each other. The authors state the following: “These observations help locate the study of human-android robot interaction squarely in the sphere of human social psychology rather than solely in the traditional disciplines of human factors or human machine interaction” (p. 31). Therefore, if the interaction with social CPSs is governed by the laws of social psychology, the assumption about the newly emergent socially shaped ontology, involving mechanical entities, projecting their “inner states” to the human, gradually acquires more support.

Psychophysics is essential in providing a methodology for the indirect measurements and design of quantitative scales to represent the inner dynamics of perceiving objects in order to avoid negative emotions—a process largely dependent on the individual characteristics of the current user of the CPS. It is also proposed to map professional and other social roles to different types of agents at the stage of interaction design, rather than rely on a unified generalized conceptualization of all possible perceptual varieties of robots and androids engaged in social activities.

4 Conclusion

This mini-review considers several psychophysical laws from the point of view of their relevance to modeling the psychological processes underlying the user acceptance of complex CPSs for social applications. It is demonstrated that the laws of psychophysics are intrinsically related to the theory proposed by Masahiro Mori regarding the function of non-monotonic increase in user affinity for a robot with an increasing degree of its “human likeness.” It is worth investigating human responses to technologically and socially ambiguous stimuli by implementing the psychophysical methodology to reveal the internal dynamics of perceiving the newly designed social agents, performing various social roles. By implementing the indirect scaling methodology, useful scenarios for human–robot interaction can be designed that account for the individual user’s explicit or implicit preferences and are accessible and individualized, in order to better adapt to the sensory and learning needs of the users.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any identifiable images or data included in this article.

Author contributions

MD: conceptualization, funding acquisition, methodology, project administration, writing–original draft, and writing–review and editing. NC: investigation, validation, visualization, and writing–review and editing. AM: formal analysis, methodology, and writing–review and editing. AK: data curation, investigation, visualization, and writing–review and editing. IC: formal analysis, supervision, validation, and writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was funded by the Bulgarian National Science Fund, grant number KP-06-H42/4 of 08.12.2020, for the project “Digital Accessibility for People with Special Needs: Methodology, Conceptual Models, and Innovative Ecosystems” and the European Regional Development Fund under the Operational Program “Scientific Research, Innovation and Digitization for Smart Transformation 2021-2027”, Project CoC “Smart Mechatronics, Eco- and Energy Saving Systems and Technologies”, BG16RFPR002-1.014-0005.

Acknowledgments

The authors thank Dr. Chris Harper, Dr. Dan Withey, and Dr. Virginia Ruis Garate for insightful discussions of the model and their help in programming Alice at Bristol Robotics Laboratory, United Kingdom, within the TERRINet Project of the EC (2017–2021), and the actress Violina for her contribution to the present work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1 https://cordis.europa.eu/project/id/777720

2 The italics are ours.

3 Along the x-axis (added by us).

4 Along the y-axis (added by us).

5 Reproduced from Dimitrova et al. (2021b) under Creative Commons Attribution License (CC BY).

6 The simulated function and data were generated in MATLAB from the “recognition failure” function of Tulving and Wiseman (1975) to illustrate the “near-independence” probabilistic relation.

7 The italics are ours.

8 https://www.aldebaran.com/en/nao

9 https://wiki.engineeredarts.co.uk/SociBot

References

Bachrach, L. L. (1992). Psychosocial rehabilitation and psychiatry in the care of long-term patients. Am. J. Psychiatry 149, 1455–1463. doi:10.1176/ajp.149.11.1455

Bahrick, H. P. (1970). Two-phase model for prompted recall. Psychol. Rev. 77 (3), 215–222. doi:10.1037/h0029099

Brereton, R. G. (2016). Statistically independent events and distributions. J. Chemom. 30 (3), 90–92. doi:10.1002/cem.2773

Collins, E. C., Prescott, T. J., and Mitchinson, B. (2015). “Saying it with light: a pilot study of affective communication using the MIRO robot,” in Biomimetic and biohybrid systems: 4th international conference, living machines, Barcelona, Spain, July 28-31, proceedings 4 (Springer International Publishing), 243–255. doi:10.1007/978-3-319-22979-9_25

Deco, G., and Rolls, E. T. (2006). Decision-making and Weber's law: a neurophysiological model. Eur. J. Neurosci. 24 (3), 901–916. doi:10.1111/j.1460-9568.2006.04940.x

Dimitrova, M., Garate, V. R., Withey, D., and Harper, C. (2023). “Implicit aspects of the psychosocial rehabilitation with a humanoid robot,” in International conference in methodologies and intelligent systems for technology enhanced learning (Cham: Springer Nature Switzerland), 119–128.

Dimitrova, M., Kostova, S., Chavdarov, I., Krastev, A., Chehlarova, N., and Madzharov, A. (2024). “Psychosocial and psychophysical aspects of the interaction with humanoid robots: implications for education,” in Proceedings of CompSysTech ’24, Ruse, Bulgaria, ACM, 1–9. 979-8-4007-1684-3/24/06. doi:10.1145/3674912.3674951

Dimitrova, M., Kostova, S., Lekova, A., Vrochidou, E., Chavdarov, I., Krastev, A., et al. (2021a). Cyber-physical systems for pedagogical rehabilitation from an inclusive education perspective. BRAIN. Broad Res. Artif. Intell. Neurosci. 11 (2Suppl. 1), 186–207. doi:10.18662/brain/11.2sup1/104Available at: https://brain.edusoft.ro/index.php/brain/article/view/1135.

Dimitrova, M., Krastev, A., Zahariev, R., Vrochidou, E., Bazinas, C., Yaneva, T., et al. (2020). “Robotic technology for inclusive education: a cyber-physical system approach to pedagogical rehabilitation,” in Proceedings of the 21st international conference on computer systems and technologies, 293–299.

Dimitrova, M., Lekova, A., Kostova, S., Roumenin, C., Cherneva, M., Krastev, A., et al. (2016). “A multi-domain approach to design of CPS in special education: issues of evaluation and adaptation,” in Proceedings of the 5th workshop of the MPM4CPS COST action. Editors H. Vangheluwe, V. Amaral, H. Giese, J. Broenink, B. Schätz, A. Norta, et al. (Spain: Malaga), 196–205. doi:10.5281/zenodo.12935570

Dimitrova, M., and Wagatsuma, H. (2015). Designing humanoid robots with novel roles and social abilities. Lovotics 3 (112), 2. doi:10.4172/2090-9888.1000112. Available at: https://www.omicsonline.org/open-access/designing-humanoid-robots-with-novel-roles-and-social-abilities-2090-9888-1000112.php?aid=67398.

Dimitrova, M., and Wagatsuma, H. (Editors) (2019). Cyber-physical systems for social applications (Hershey, PA, United States: IGI Global). ISBN 9781522578796. doi:10.4018/978-1-5225-7879-6

Dimitrova, M., Wagatsuma, H., Krastev, A., Vrochidou, E., and Nunez-Gonzalez, J. D. (2021b). A review of possible EEG markers of abstraction, attentiveness, and memorisation in cyber-physical systems for special education. Front. Robotics AI 8, 715962. doi:10.3389/frobt.2021.715962

Dimitrova, M., Wagatsuma, H., Tripathi, G. N., and Ai, G. (2019). “Learner attitudes towards humanoid robot tutoring systems: measuring of cognitive and social motivation influences,” in Cyber-physical systems for social applications. Editors M. Dimitrova, and H. Wagatsuma (Hershey, PA, United States: IGI Global), 1–24. doi:10.4018/978-1-5225-7879-6.ch004

El-Haouzi, H. B., Valette, E., Krings, B. J., and Moniz, A. B. (2021). Social dimensions in CPS and IoT based automated production systems. Societies 11 (3), 98. doi:10.3390/soc11030098. Available at: https://www.mdpi.com/2075-4698/11/3/98.

Fechner, G. T. (1948). Elements of psychophysics, 1860. Available at: https://lru.praxis.dk/Lru/microsites/hvadermatematik/hem2download/kap4_projekt_4_9_ekstra_Fechners_originale_vaerk.pdf.

Fechner, G. T. (1860). Elements of psychophysics. Translated by H. E. Adler, 1966 (New York: Holt, Rinehart and Winston).

Franceschini, F., and Maisano, D. (2020). Adapting Thurstone’s law of comparative judgment to fuse preference orderings in manufacturing applications. J. Intelligent Manuf. 31 (2), 387–402. doi:10.1007/s10845-018-1452-5

George, G. (2004). 88.76 Testing for the independence of three events. Math. Gazette 88 (513), 568. doi:10.1017/S0025557200176363

Ghazali, A. S., Ham, J., Barakova, E., and Markopoulos, P. (2018). The influence of social cues in persuasive social robots on psychological reactance and compliance. Comput. Hum. Behav. 87, 58–65. doi:10.1016/j.chb.2018.05.016

Ghazali, A. S., Ham, J., Barakova, E., and Markopoulos, P. (2020). Persuasive robots acceptance model (PRAM): roles of social responses within the acceptance model of persuasive robots. Int. J. Soc. Robotics 12 (5), 1075–1092. doi:10.1007/s12369-019-00611-1

Horváth, I. (2014). “What the design theory of social-cyber-physical systems must describe, explain and predict?,” in An anthology of theories and models of design: philosophy, approaches and empirical explorations (London: Springer London), 99–120.

Jackson, R. B., and Williams, T. (2021). A theory of social agency for human-robot interaction. Front. Robotics AI 8, 687726. doi:10.3389/frobt.2021.687726

Johnson, K. O., Hsiao, S. S., and Yoshioka, T. (2002). Neural coding and the basic law of psychophysics. Neuroscientist 8 (2), 111–121. doi:10.1177/107385840200800207. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1994651/.

Laming, D. (2009). “Weber’s law,” in Inside psychology: a science over 50 years. Editor P. Rabbitt (Oxford University Press), 177–189. 9780199228768.

Lawhead, P. B., Duncan, M. E., Bland, C. G., Goldweber, M., Schep, M., Barnes, D. J., et al. (2002). A road map for teaching introductory programming using LEGO© mindstorms robots. ACM SIGCSE Bull. 35 (2), 191–201. doi:10.1145/782941.783002

Losano, C. V., and Vijayan, K. K. (2020). Literature review on cyber physical systems design. Procedia Manuf. 45, 295–300. doi:10.1016/j.promfg.2020.04.020

MacDorman, K. F., and Chattopadhyay, D. (2016). Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition 146, 190–205. doi:10.1016/j.cognition.2015.09.019. Available at: https://core.ac.uk/download/pdf/334499249.pdf.

Mathur, M. B., and Reichling, D. B. (2016). Navigating a social world with robot partners: a quantitative cartography of the Uncanny Valley. Cognition 146, 22–32. doi:10.1016/j.cognition.2015.09.008. Available at: https://www.sciencedirect.com/science/article/pii/S0010027715300640.

Momen, A., Hugenberg, K., and Wiese, E. (2024). Social perception of robots is shaped by beliefs about their minds. Sci. Rep. 14 (1), 5459. doi:10.1038/s41598-024-53187-w. Available at: https://www.nature.com/articles/s41598-024-53187-w.

Moore, R. K. (2012). A Bayesian explanation of the ‘Uncanny Valley’ effect and related psychological phenomena. Sci. Rep. 2 (1), 864. doi:10.1038/srep00864. Available at: https://www.nature.com/articles/srep00864.

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robotics and Automation Mag. 19 (2), 98–100. doi:10.1109/mra.2012.2192811. Available at: https://ieeexplore.ieee.org/document/6213238.

Naneva, S., Sarda Gou, M., Webb, T. L., and Prescott, T. J. (2020). A systematic review of attitudes, anxiety, acceptance, and trust towards social robots. Int. J. Soc. Robotics 12 (6), 1179–1201. doi:10.1007/s12369-020-00659-4

Nikolov, V., Dimitrova, M., Chavdarov, I., Krastev, A., and Wagatsuma, H. (2022). “Design of educational scenarios with BigFoot walking robot: a cyber-physical system perspective to pedagogical rehabilitation,” in International work-conference on the interplay between natural and artificial computation (Cham: Springer International Publishing), 259–269.

Osorina, M. V., and Avanesyan, M. O. (2021). Lev Vekker and his unified theory of mental processes. Eur. Yearb. Hist. Psychol. 7, 265–281. doi:10.1484/J.EYHP.5.127027

Prescott, T. J. (2017). Robots are not just tools. Connect. Sci. 29 (2), 142–149. doi:10.1080/09540091.2017.1279125

Robinson, N. L., Cottier, T. V., and Kavanagh, D. J. (2019). Psychosocial health interventions by social robots: systematic review of randomized controlled trials. J. Med. Internet Res. 21 (5), e13203. doi:10.2196/13203. Available at: https://www.jmir.org/2019/5/e13203/.

Rosenthal-Von der Pütten, A. M., Krämer, N. C., Maderwald, S., Brand, M., and Grabenhorst, F. (2019). Neural mechanisms for accepting and rejecting artificial social partners in the uncanny valley. J. Neurosci. 39 (33), 6555–6570. doi:10.1523/JNEUROSCI.2956-18.2019

Sanford, E. M., and Halberda, J. (2023). A shared intuitive (mis) understanding of psychophysical law leads both novices and educated students to believe in a just noticeable difference (JND). Open Mind 7, 785–801. doi:10.1162/opmi_a_00108

Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., and Frith, C. (2012). The thing that should not be: predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Soc. Cognitive Affect. Neurosci. 7 (4), 413–422. doi:10.1093/scan/nsr025

Stevens, S. S. (1957). On the psychophysical law. Psychol. Rev. 64 (3), 153–181. doi:10.1037/h0046162

Thurstone, L. L. (1927). A law of comparative judgment. Psychol. Rev. 34 (4), 273–286. doi:10.1037/h0070288. Available at: http://mlab.no/blog/wp-content/uploads/2009/07/thurstone94law.pdf.

Thurstone, L. L. (1928). Attitudes can be measured. Am. J. Sociol. 33 (4), 529–554. doi:10.1086/214483

Tulving, E., and Watkins, O. C. (1977). Recognition failure of words with a single meaning. Mem. and Cognition 5, 513–522. doi:10.3758/BF03197394

Tulving, E., and Wiseman, S. (1975). Relation between recognition and recognition failure of recallable words. Bull. Psychonomic Soc. 6 (1), 79–82. doi:10.3758/BF03333153

Urgen, B. A., Kutas, M., and Saygin, A. P. (2018). Uncanny valley as a window into predictive processing in the social brain. Neuropsychologia 114, 181–185. doi:10.1016/j.neuropsychologia.2018.04.027

Urgen, B. A., Plank, M., Ishiguro, H., Poizner, H., and Saygin, A. P. (2013). EEG theta and Mu oscillations during perception of human and robot actions. Front. Neurorobotics 7, 19. doi:10.3389/fnbot.2013.00019

Wang, S., Gu, X., Chen, J. J., Chen, C., and Huang, X. (2023). Robustness improvement strategy of cyber-physical systems with weak interdependency. Reliab. Eng. and Syst. Saf. 229, 108837. ISSN 0951-8320. doi:10.1016/j.ress.2022.108837

Wolbring, G., Diep, L., Yumakulov, S., Ball, N., and Yergens, D. (2013). Social robots, brain machine interfaces and neuro/cognitive enhancers: three emerging science and technology products through the lens of technology acceptance theories, models and frameworks. Technologies 1 (1), 3–25. doi:10.3390/technologies1010003. Available at: https://www.mdpi.com/2227-7080/1/1/3.

Xu, J., Zhang, C., Cuijpers, R. H., and Ijsselsteijn, W. A. (2023). How might robots change us? Mechanisms underlying health persuasion in human-robot interaction from a relationship perspective: a position paper. Available at: https://ceur-ws.org/Vol-3474/paper20.pdf.

Keywords: cyber-physical systems, robot acceptance, psychophysics, psychological reactance, categorical perception, uncanny valley effect, probability distribution, accessibility

Citation: Dimitrova M, Chehlarova N, Madzharov A, Krastev A and Chavdarov I (2024) Psychophysics of user acceptance of social cyber-physical systems. Front. Robot. AI 11:1414853. doi: 10.3389/frobt.2024.1414853

Received: 09 April 2024; Accepted: 24 September 2024; Published: 15 October 2024.

Edited by:

Livija Cveticanin, University of Novi Sad, Serbia

Reviewed by:

Tibor Farkas, Óbuda University, Hungary

Copyright © 2024 Dimitrova, Chehlarova, Madzharov, Krastev and Chavdarov. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maya Dimitrova

†ORCID: Ivan Chavdarov, orcid.org/0000-0002-3978-4821
