REVIEW article

Front. Robot. AI , 07 November 2023

Sec. Humanoid Robotics

Volume 10 - 2023 | https://doi.org/10.3389/frobt.2023.1223946

The incessant progress of robotic technology and the rationalization of human manpower induce high expectations in society, but also resentment and even fear. In this paper, we present a quantitative normalized comparison of performance to shed light on the pressing question, “How close is the current state of humanoid robotics to outperforming humans in their typical functions (e.g., locomotion, manipulation), and their underlying structures (e.g., actuators/muscles) in human-centered domains?” This is the most comprehensive comparison of the literature so far. Most state-of-the-art robotic structures required for visual, tactile, or vestibular perception outperform human structures at the cost of slightly higher mass and volume. Electromagnetic and fluidic actuation outperform human muscles w.r.t. speed, endurance, force density, and power density, excluding components for energy storage and conversion. Artificial joints and links can compete with the human skeleton. In contrast, the comparison of locomotion functions shows that robots are trailing behind in energy efficiency, operational time, and transportation costs. Robots are capable of obstacle negotiation, object manipulation, swimming, playing soccer, or vehicle operation. Despite the impressive advances of humanoid robots in the last two decades, current robots do not yet reach the dexterity and versatility needed to cope with more complex manipulation and locomotion tasks (e.g., in confined spaces). We conclude that state-of-the-art humanoid robotics is far from matching the dexterity and versatility of human beings. Despite the outperforming technical structures, robot functions are inferior to human ones, even with tethered robots that could place heavy auxiliary components off-board. The persistent advances in robotics let us anticipate that this gap will narrow.

During the last decades, society has been confronted with an incessant advance of robotic technologies in the human work environment. First, the production industry deployed robots for well-defined, heavy-lifting, and high-accuracy assembly tasks that are characterized by a high number of repetitions, e.g., in the automotive industry. Robots with more versatile functions did not exist or did not function reliably. Now, robots advance more and more into the field of service tasks that require more demanding behaviors with respect to perception, adaptation, and cooperation with humans, in human-centered domains such as private households or clinical facilities. Latest developments in humanoid and legged robotics demonstrate that technology advances to accomplish an increasing number of functions that are typical for humans (Miki et al., 2022; Boston Dynamics Support Center, 2021; Boston Dynamics, 2017). Furthermore, parathletes such as Heinrich Popow or Markus Rehm are using high-tech lower leg prostheses for sprint and broad jump disciplines, respectively. Their performances belong to the best among all athletic achievements, including those of athletes without amputations (Hassani et al., 2015; Beckman et al., 2017).

Humanoids and legged robots in general experienced impressive advancement in the last decades. The story of legged robots started, e.g., with Mark Raibert’s hopping machines in the 1980s (Raibert, 1986) or Honda’s Asimo in the 1990s (Sakagami and Watanabe). Since these first endeavors in the field, remarkable improvements have been made. Very powerful and skilled walking and running robots have been introduced in the last decade with robots such as Atlas, MIT Cheetah, ANYmal, Spot, or Cassie (Boston Dynamics, 2022; Seok et al., 2014; Hutter et al., 2016a; Boston Dynamics Support Center, 2021; Gong et al., 2019) and its commercially available successor Digit (C et al., 2021; NED Directory, 2023; Robots, 2019). Robots showcased at events such as the DARPA Robotics Challenge 2015 and 2021 (Johnson et al., 2015), the ANA Avatar XPRIZE (Luo et al., 2022), or the Cybathlon 2016 and 2020 (Riener, 2016; Wolf and Riener, 2018; CYBATHLON, 2022) demonstrate impressive technological achievements that are close to being applicable in daily life.

These impressive advancements in robotics, together with the progressive rationalization and outperformance of human labor by robotic technology, induce resentment or even fear in society (Nestik et al., 2018), while also stirring up hope to cede all dangerous and wearing tasks to machines. The image of robots as superior to humans has also been pushed by the entertainment industry with movies such as Terminator, Ironman, or I-Robot. This might foster high expectations for robotic technology, thereby creating the public belief that humanoid robots and artificial limbs are readily available, and that they can fully replace or even outperform the functions of our biological limbs.

So where are we today? Do robots already outperform human beings with respect to sensory, computational, and motor performance? Can robots now replace humans acting in typical human-centered domains? This paper attempts to shed light on these pressing questions by comparing state-of-the-art robotic performance with that of human beings.

The goal of this study is to perform an objective (i.e., normalized) comparison between existing humanoid robotic devices and human beings with respect to their structures and functions in human domains. Structures include sensory, computational, and motor components in both robots and humans. They are required to enable functions such as locomotion and haptic interactions within these domains.

Comparing robotic systems with biological ones is not a straightforward process, because technical systems are not built from biological cells that can regenerate, grow, and adapt to (slowly) changing conditions. On the other hand, technology can easily outperform a human when focused on a single and repetitive function, as the technology can be optimized or just enhanced with respect to the performance of only that function. For example, a robot can become stronger and faster when larger and more powerful actuators are chosen. A robotic arm can also reach higher when longer links are chosen. And a robot can work longer and thus in a highly repetitive manner, without the fatigue and endurance limitations of humans, when larger batteries with higher capacities are chosen or when external energy sources, e.g., solar energy, are harvested. However, all these enhancements come at a cost: the robotic system usually becomes much larger or heavier (or both) than a human, which limits other functions such as agility, portability, dexterity, or versatility.

Therefore, it is important when conducting any type of functional or structural comparison to relate a concrete, measurable feature (i.e., a physical quantity), against a respective normative base, a common “denominator,” such as size, mass, available (battery) power, or cost of transport (Tucker, 1975; Kashiri et al., 2018). With such a normalization, the relation of the feature to the denominator is approximated to be linear, which should be an accurate estimation in close proximity to the normalization point and is commonly performed to compare features at different scales, e.g., power to weight ratio. The comparison refers to environments and tasks that are made and/or accessible for human beings and serve them in their everyday leisure or work lives.

Furthermore, robot and human performance must be compared in similar environments, while doing similar tasks. For example, the performance of a vacuum cleaning task should not be assessed with measures relevant for outdoor locomotion or performance in the assembly line of a car manufacturer. We focus this review work on humanoid robots, to allow a “fair” (apples to apples) comparison and to limit the scope of the comparison. We assume that general structures, comprising legs and limbs in a slender appearance, can act within human-centered domains and interact with humans for a series of different human-like tasks.

Related work mostly focused on comparisons of specific structural components between technological and biological systems. Aubin et al. (2022) investigated embodied energy storage. Rothemund et al. (2021) discuss the benefits of flexible structures in biological systems and the potential of material science to provide technological counterparts. Yin et al. (2021) review control and perception methods for deformable object manipulation. Furthermore, many publications compare the performance of biological and technological actuation systems (Higueras-Ruiz et al., 2021; Liang et al., 2020; Acome et al., 2018). Skorka and Joseph (2011) compared visual sensors. Yamane and Murai (2016) discussed the similarities and differences between human and humanoid robot structures qualitatively. A comprehensive comparison of structures and functions between the human and humanoid robots is not known to the authors.

It is neither trivial nor meaningful to compare, for instance, the speed of an autonomous robotic car with that of a sprinting human; the costs of machine maintenance with the costs of sustaining human life; or aspects of technical deterioration with biological aging. Moreover, a robot devoid of feelings cannot be compared to the human experience of emotion. Robots, however, can move, perceive, and communicate, but at present they are incapable of experiencing an emotional spectrum, for example, feelings of happiness after successfully completing a task or frustration in times of failure. Since robots do not yet have an emotional framework, comparison in this context is not meaningful as the tasks and contexts differ.

Robots are technological systems developed with the purpose of serving humans, and in some cases, they do this by replacing human operators. Therefore, we compare “apples with apples”, i.e., similar “structures” that perform “functions” of similar activities (Figure 1) and take place in similar “domains”, shared by humans and robots. Such meaningful comparisons are those of walking or running (on legs) in human-centered domains, grasping, manipulating objects relevant for daily life, learning new movements, perceiving the environment, and processing this information with some level of “cognition,” computing a motor task, or consuming energy for comparable motor tasks to name just a few.

Comparing robots and humans with respect to their common structures and functions in similar tasks and contexts allows us to refer to applications, where a robot can support or replace humans or a part of the human body to serve society in any task that is relevant for aspects of our private or work lives. Therefore, we focus on “service functions” for humans and include movement and interaction tasks in human-centered domains, elaboration, and communication of information perceived from such domains. Our human domains have evolved in a way that we can function well within these domains with our structures including legs, arms, fingers, eyes, ears, etc. Therefore, we assume that robotic structures that serve to support humans in their domains must have humanoid structures, and consequently, we focus on robots with legs and hands, with all the sensors carried by the robot. Structures and functions that are required for self-sustaining, such as breathing, cardiovascular functions, eating, or digestion, are not taken into consideration (Figure 1).

In this paper, we first confront anatomical structures such as bones, joints, muscles, eyes, brain, etc. with corresponding technical systems like links, bearings, actuators, cameras, and processing units by comparing features such as Young’s modulus, friction, force, power generation, power consumption, or spatial and temporal resolution. Then we move toward humanoid functions that are based on the presented structures. For example, given the common function “locomotion,” we compare features such as walking or running speed.

The performance of humans and robots regarding their functions and structures must be compared with respect to defined domains and related tasks. The domains and tasks must allow a replacement of humans or parts of humans by robots. Examples of domains are indoor environments or rooms, outdoor environments, environments that involve objects, devices, and machines (e.g., cars to drive, buses to enter, buttons to press, or signs to read) or other humans with whom robots would need to interact (e.g., to listen, to talk, or to communicate).

We can distinguish natural and artificial conditions of domains. Typical natural environmental conditions to be considered are exposure time for service and resting, environmental temperature, inclination of the ground, nature of the ground (such as texture, gravel, or sand), friction between feet and natural ground (dry, wet, snow, or ice), exposure to radiation, water or any other media, brightness, etc. Artificial environmental conditions are room temperature, type and intensity of room lighting, and obstacles to be negotiated (e.g., slippery artificial floors, steps, escalators, or doors). Further, conditions are determined by the properties of objects and devices that require interaction.

In this paper, we target a generic comparison between robots and humans in natural and artificial domains that confront us in daily life conditions. Thus, the natural environmental conditions chosen are 24 h service and rest cycles with 8–16 h of service exposure, and temperatures that can vary between −10°C and 40°C depending on location, season, and time of the day. The walking grounds considered include flat and rough terrain that can be dry, sticky, or even slippery due to snow or ice. Brightness corresponds to typical outdoor light conditions: 100,000 lux on average during daytime (Kelley et al., 2006) and 0.0001 lux at nighttime (Engineering Toolbox, 2022a).

Artificial conditions considered in this study are typical indoor environments that comprise even terrains on flat floors but may also contain steps or stairs, barriers such as doors with handles, and objects such as chairs or tables. The human or robot can interact with different objects and interfaces of vehicles that are actively driven by the user, or that passively transport the user to different locations. Room temperature is in the range of about 18°C–32°C (World Health Organisation, 2018).

Our comparisons focus on robots and humans analyzed in usual conditions of industrialized societies. Therefore, we treat augmentative technologies such as shoes, clothes, and eyeglasses, which can enhance human performance in special indoor and outdoor environments, as “natural” parts that belong to the human. In contrast, powered devices that are worn or carried by humans to enhance their performance and that often include some level of technical intelligence, such as smartphones, exoskeletons, bikes, and other means of transportation, are excluded from this study.

The International Classification of Functioning, Disability and Health (ICF) released by the WHO (World Health Organisation, 2007) can be used as a starting point for collecting different functions and structures of both humans and robots. The ICF distinguishes between human body structures (joints, limbs, or organs, etc.), body functions (joint movement, leg movement, vision, etc.), activities related to tasks and actions of an individual (walking, using public transportation, or cooking), and participation as an involvement in a life situation (visiting lectures, participating in cultural events, or playing in a music band). These aspects are viewed as a complex interaction between the personal factors of the individual and the contextual factors of the domain.

The starting point for the choice of the compared functions and structures is a human being as classified by the ICF. Starting with the human helps us find the concrete robotic functions and structures out of a virtually infinite number of different robotic designs.

The ICF itself highlights the importance of environmental factors and lists the most relevant in the classification of human structures and functions.

To compare the functions and structures of robots and humans quantitatively, measurable “features” have been introduced whenever possible. Examples of features to evaluate functions are gait speed for walking, typing speed for writing on a keyboard, or words per minute for speaking. Examples of features to evaluate structures are mass for a body segment or the entire body, image resolution for the vision system, or power density (e.g., power/mass ratio) for an actuator.

Functions to be compared are derived from ICF functions and include aspects of activities and participation (World Health Organisation, 2007); they are applied to the environment and the robot representatives. In this study, we collected robots and their applications with respect to the following functions:

• Movement: Walking, running, climbing stairs, changing, and maintaining basic body positions, negotiating rough terrain, crawling, jumping, and swimming.

• Physical interaction: Driving a vehicle, lifting, and carrying objects with upper extremities, kicking objects with the legs, and use of hands and arms.

• Elaboration: Basic motor learning

In addition, the non-haptic interaction functions are presented in Supplementary Appendix SB:

• Elaboration: attention and multi-tasking functions, reading, and writing.

• Communication: Conversation, i.e., listening and producing verbal and non-verbal messages

These functions result from a complex interplay of sensory, motor, and cognitive components and their sub-functions, in both humans and robots. The execution of functions depends on the existence of physical structures. Structures were derived from the above-listed functions performed by the human or robot, as defined by the ICF, and include:

• Sensory structures: for vision (eye and cameras), tactile perception (skin and tactile sensors), proprioception, and balance (vestibular structures of the inner ear and inertial measurement units)

• Motor structures: for actuation (muscles and actuators)

In addition, the structures for non-haptic interaction and computational abilities are presented in Supplementary Appendix SB.

• Motor structures: speaking (organ of speech and speakers)

• Sensory structures: hearing (ears and microphones)

• Computational structures: for memory and computation (brain and CPU/storage devices).

We normalize the features by common denominators and make them comparable among different systems. For example, the payload of a large and heavy-lifting robot used for construction should be downscaled (i.e., normalized) by using a denominator that takes care of the size of the system. Like features, denominators should be measurable, so that they are applicable for normalization. Denominators can be picked among those features of functions and structures that are usually not the primary focus for the design of the robot or the evolution of the human. They are rather a consequence of the physical implementation of the primary design features that are required to obtain desired (e.g., service) functions. Measurable features of functions and structures were compared (i.e., normalized) with respect to the following denominators:

• Mass, in kg.

• Size, in m, for example, body height, main dimension, nominal length.

• Power consumption, usually in W, including dissipated power.

• The inverse of the operational time (under normal conditions), in s⁻¹. The inverse has been taken to get the same polarity of the respective feature to better compare the features in relation to different denominators.

• Cost of Transport (CoT), a dimensionless metric

• Material density, in kg m⁻³.

Cost of transport CoT (or specific resistance) is a common normalized metric that refers to energy consumption for locomotion (Kashiri et al., 2018; Tucker, 1975):

$$\mathrm{CoT} = \frac{E}{m\,g\,d} = \frac{P}{m\,g\,v}$$

where E is the energy inserted into the system, m the mass of the system, d the distance it travels, g the gravity acceleration, v the velocity, and P the input power.
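The cost of transport can be evaluated with a few lines of code. The following is a minimal sketch that assumes the standard definition CoT = E/(m g d) = P/(m g v), which matches the symbols defined above. The function names are hypothetical, the mass and power are the reference-man figures quoted in this article (70 kg, 300 W for walking), and the walking speed of 1.4 m/s is an assumption made only for illustration.

```python
G = 9.81  # standard gravitational acceleration in m/s^2


def cot_from_energy(energy_j: float, mass_kg: float, distance_m: float, g: float = G) -> float:
    """Dimensionless cost of transport from energy: CoT = E / (m * g * d)."""
    if mass_kg <= 0 or distance_m <= 0:
        raise ValueError("mass and distance must be positive")
    return energy_j / (mass_kg * g * distance_m)


def cot_from_power(power_w: float, mass_kg: float, speed_m_s: float, g: float = G) -> float:
    """Dimensionless cost of transport from power: CoT = P / (m * g * v)."""
    if mass_kg <= 0 or speed_m_s <= 0:
        raise ValueError("mass and speed must be positive")
    return power_w / (mass_kg * g * speed_m_s)


# Illustrative inputs: mass and power from the text, speed is an assumption.
mass_kg = 70.0
power_w = 300.0
speed_m_s = 1.4   # assumed walking speed, not taken from the article
duration_s = 60.0

cot_power = cot_from_power(power_w, mass_kg, speed_m_s)
# The energy form must agree: E = P * t and d = v * t over the same interval.
cot_energy = cot_from_energy(power_w * duration_s, mass_kg, speed_m_s * duration_s)

print(f"CoT from power:  {cot_power:.3f}")
print(f"CoT from energy: {cot_energy:.3f}")
```

Both forms give the same value because the duration cancels, which is why the metric can be computed from either logged energy and distance or from average power and speed.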

The dashed line drawn in many of the figures symbolizes a linear scaling of the feature (of certain functions or structures) with respect to a denominator, based on the values found for the human. A robot value placed above this dashed line means that the robot performs “better” than the human with respect to the single feature taken into account and the denominator chosen. Applying denominators to “normalize” the features in this way assumes that the feature scales linearly with the denominator. For many of the features, the relation to the denominator is non-linear. In these cases, the comparison of human and robot features by the linear approximation is only reliable in close proximity to denominator values typical for humans. Thus, we indicate the 2nd percentile female to 98th percentile male interval for each denominator as an indication of the magnitude of scaling.
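The linear normalization described above can be sketched as follows. This is an illustration under stated assumptions and not the authors’ implementation: the function names are hypothetical, a higher feature value is assumed to be better, and all numbers except the 70 kg human mass are made-up placeholders, including the bounds of the human-typical interval.

```python
def human_scaled_reference(human_feature: float, human_denominator: float,
                           denominator: float) -> float:
    """Value of the human reference line (through the origin) at a given denominator."""
    if human_denominator <= 0:
        raise ValueError("human denominator must be positive")
    return human_feature / human_denominator * denominator


def is_above_human_line(robot_feature: float, robot_denominator: float,
                        human_feature: float, human_denominator: float) -> bool:
    """True if the robot lies above the linearly scaled human value."""
    reference = human_scaled_reference(human_feature, human_denominator, robot_denominator)
    return robot_feature > reference


def within_human_range(denominator: float, low: float, high: float) -> bool:
    """True if the denominator lies in the interval where the linear scaling is trusted."""
    return low <= denominator <= high


# Hypothetical example: a feature of 1.4 for a 70 kg human,
# compared with a 30 kg robot that reaches a feature value of 1.0.
human_feature, human_mass_kg = 1.4, 70.0
robot_feature, robot_mass_kg = 1.0, 30.0

reference_at_robot_mass = human_scaled_reference(human_feature, human_mass_kg, robot_mass_kg)
robot_is_better = is_above_human_line(robot_feature, robot_mass_kg, human_feature, human_mass_kg)
# Placeholder bounds for the human-typical mass interval (not values from the article).
comparison_is_reliable = within_human_range(robot_mass_kg, low=45.0, high=100.0)

print(reference_at_robot_mass, robot_is_better, comparison_is_reliable)
```

In this made-up case the robot lies above the human line, but its mass falls outside the placeholder interval, so the linear comparison should be read with caution.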

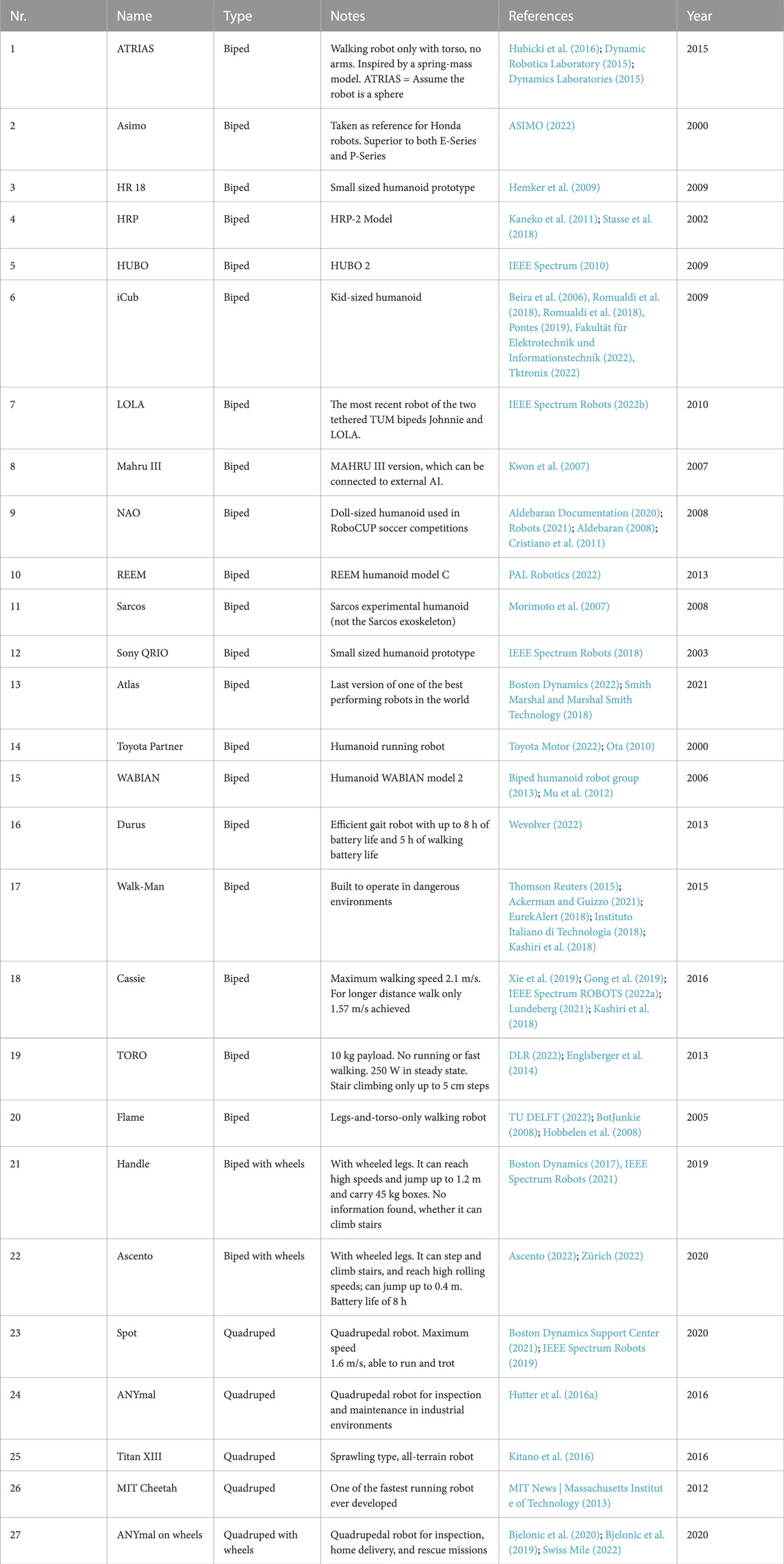

Out of a collection of thousands of different service robots in existence today, we have chosen those that fulfill the requirements to be in principle able to function in human-centered domains:

• Legged robots excel in negotiating rough terrain by leveraging their ability to select footholds and cross gaps (TheIHMC, 2019). Thereby, they can reach locations that similarly sized wheeled-only systems struggle with, while having less impact on the soil. Furthermore, the capability of stepping rather than rolling over obstacles is a significant advantage in narrow and cluttered home environments and environments that include steps and stairs. Therefore, we restrict the comparison to legged robots. This includes bipedal robots, such as Asimo (ASIMO, 2022) or Atlas (Boston Dynamics, 2022), four-legged robots, such as Spot (Boston Dynamics Support Center, 2021) or ANYmal (Hutter et al., 2016a), and even legged robots with wheels, such as Handle (Boston Dynamics, 2017; IEEE Spectrum Robots, 2021) or Ascento (Ascento, 2022). Robots with wheels or tracks only are excluded, e.g., Eve [by Halodi Robotics AS, (Halodi Robotics, 2021)] or autonomous wheeled vehicles.

• The robots must be of slender appearance to fit through doors or move in narrow spaces, e.g., in human crowds. Very broad and bulky robots are excluded, such as BigDog [by Boston Dynamics, (Playter et al., 2006)].

• The robots must have sufficient body height to be able to reach and grasp objects on tables and shelves. Assuming that an additional robotic arm can be added to reach higher, a minimum body height of 0.5 m might be sufficient. Smaller robots, such as Robotis OP 2 (Robotis, 2023) or JO-ZERO (ikinamo, 2009a), are excluded.

• The robots must be powered to perform energy demanding, daily tasks, such as opening a door and they must be strong enough to carry objects that are relevant for daily life activities. Therefore, weak or unpowered robots, such as passive walking machines (Collins et al., 2005) are excluded. Also flying drones are excluded due to the negative effects of the strong downwash.

• The robots must be able to perform simple manipulations. Therefore, they must be equipped with arms such as Atlas (Boston Dynamics, 2022), iCub (Beira et al., 2006), or Digit (C et al., 2021) or be able to get equipped with an arm or any manipulative device such as Cassie (Gong et al., 2019).

• The robots must have sensors to perceive the environment to perform locomotion and manipulation tasks robustly in dynamic environments.

• The robots must not produce too much noise or exhaust gases, as this would not be acceptable in human-centered indoor environments. Thus, machines such as BigDog are excluded (Playter et al., 2006).
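The inclusion criteria listed above can be expressed as a simple filter. The sketch below is only an illustration: the `Candidate` fields and the three entries are hypothetical placeholders and do not describe any real robot. Only the 0.5 m height threshold is taken from the criteria.

```python
from dataclasses import dataclass

MIN_BODY_HEIGHT_M = 0.5  # minimum body height named in the criteria


@dataclass(frozen=True)
class Candidate:
    name: str
    legged: bool           # has legs (additional wheels are allowed)
    slender: bool          # fits through doors and narrow spaces
    body_height_m: float
    powered: bool          # strong enough for daily tasks
    arm_capable: bool      # has arms or can be equipped with one
    has_sensors: bool      # perceives the environment
    quiet_and_clean: bool  # no excessive noise or exhaust gases


def meets_criteria(c: Candidate) -> bool:
    """Apply all inclusion criteria; a candidate must satisfy every one of them."""
    return (
        c.legged
        and c.slender
        and c.body_height_m >= MIN_BODY_HEIGHT_M
        and c.powered
        and c.arm_capable
        and c.has_sensors
        and c.quiet_and_clean
    )


# Hypothetical entries, invented for illustration only.
candidates = [
    Candidate("hypothetical_biped", True, True, 1.6, True, True, True, True),
    Candidate("hypothetical_small_biped", True, True, 0.4, True, True, True, True),
    Candidate("hypothetical_wheeled_base", False, True, 1.7, True, True, True, True),
]

selected = [c.name for c in candidates if meets_criteria(c)]
print(selected)
```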

In this study, we analyze 27 robots that match these criteria, such as iCub, Atlas, ASIMO, or Sarcos (see Table 1). Not all robots have a human appearance, as they might have no trunk, shortened arms, small or no heads, etc. The robots can also comprise structures that do not exist in mammals, such as shaft-hub connections or prismatic joints. A nice example of a (nameless) bipedal robot with prismatic knee joints was presented by SCHAFT, Japan, some years ago (Ackerman and Guizzo, 2016).

TABLE 1. Compared robots. List of robots considered in this study. The robots have been selected among those currently existing as of 2021. All are potentially capable of taking over human-centered service tasks. The numbers on the far right indicate the year of release of the respective robots.

To the best of our knowledge, none of these robots has been used in ordinary real-life scenarios, with perhaps one exception: the robot Digit was recently released as the successor of Cassie (Gong et al., 2019). It is commercialized by the spin-off Agility Robotics. The company seems to lead the commercial development of humanoid robots with the aim of relieving humans from certain tasks and compensating for the increasing decline in qualified workers as a result of demographic change. Most of the other robots are only available as lab devices that require extra effort to be used outside the lab. Others do not comprise a trunk and arms, which limits their functions. Even those robots that are commercially available have not been tested in random real-life situations, but only in standardized “fake-like” settings, e.g., Atlas within the DARPA challenge. Others were used for entertainment shows and soccer challenges, e.g., Asimo or iCub. Tesla’s Optimus Robot (Youtube - Tesla Bot Update, 2023) is another promising humanoid released recently. However, not many technical specifications have been published yet, and despite the remarkable development efforts and results, experts assessed the robot’s performance as not particularly spectacular (Ackerman and Guizzo, 2022). Substantial improvements were nevertheless introduced with the updated version (Youtube - Tesla Bot Update, 2023).

The human representative, the so-called “reference man”, was defined by the International Commission on Radiological Protection in 1974 as someone between 20 and 30 years of age, 170 cm in height, weighing 70 kg, and living in a climate with an average temperature of 10°C–20°C (Snyder et al., 1974). We have chosen this source because it provides a large amount of data and is used and cited in many other projects. More recent models of the standard person confirmed similar values for average weight and height, with only slightly larger values for males (Ellis, 1990). An average adult consumes about 300 W–450 W for walking (Radhakrishnan, 1998; Das Gupta et al., 2019) and between 700 W and 1500 W for running, depending on the speed (Riddick and Kuo, 2022). These values include the power consumption of both mechanical and metabolic activity. The parameter operational time is defined as the time an average adult can operate without needing a rest for recreation, sleep, or food intake. We estimate the operational time of an average human to be about 8 h for walking and about 1 h for running, which is a reasonable duration for a healthy adult who is jogging or engaging in moderate exercise.

The human eyeball has a diameter of 21–27 mm (Bekerman et al., 2014) and weighs about 7.5 g (Todd et al., 1940). Power consumption due to retinal, metabolic, and muscular activities is less than 10 mW (Skorka and Joseph, 2011). The monocular visual field of a healthy adult extends maximally 135° in the vertical and maximally 160° in the horizontal direction (Skorka and Joseph, 2011). The spatial resolution of the eye is 576 MPX (Syawaludin et al., 2019), with the highest resolution at the center and gradually declining resolution toward the periphery. The highest resolution is at least 1 arc minute, i.e., (1/60)°, which corresponds to a visual acuity of 1 or 100%. The shortest duration required to detect unknown images is 13 ms (Potter et al., 2014), which translates to a detection frequency of 77 Hz. Other publications estimate that in typical office conditions, the human eye can detect temporal changes at 65 Hz, and at frequencies greater than 80–90 Hz at high luminance levels (Skorka and Joseph, 2011). The perceived light is in the visible spectrum, characterized by wavelengths in the range of 380 nm–740 nm.

The human eye can adapt to different brightness conditions, yielding a very large dynamic range, or luminance, reaching from 10⁻⁶ cd/m² in the dark (scotopic threshold) to 10⁸ cd/m² during daylight (damage threshold) (Spillmann and Werner, 2012). Hence, over different scenes, a luminance range of 10¹⁴ can be detected by the human eye. Other sources state a luminance range of 10¹⁰ (Ferwerda, 2001). However, it requires several seconds to minutes until the human vision system adjusts to a new illumination condition. For a specific scene, the contrast ratio approaches 120 dB, which corresponds to a luminance ratio of 10⁶ (Rosenthal et al., 2004; Durini and Arutinov, 2020). Color vision, or photopic vision, occurs at luminance greater than 3 cd/m². Vision at a luminance lower than 0.001 cd/m², in which only rods are operative, is called dark vision, or scotopic vision; the corresponding luminance value is called “dark limit” (Skorka and Joseph, 2011).
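The relation between a luminance ratio and a dynamic range in decibels can be checked numerically. The sketch below assumes the 20·log10 convention commonly used for image sensors, which is the convention under which the paragraph’s pairing of 120 dB with a luminance ratio of one million holds. The function names are hypothetical.

```python
import math


def ratio_to_db(luminance_ratio: float) -> float:
    """Dynamic range in dB for a luminance ratio, assuming DR = 20 * log10(ratio)."""
    if luminance_ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20.0 * math.log10(luminance_ratio)


def db_to_ratio(dynamic_range_db: float) -> float:
    """Inverse conversion: luminance ratio for a dynamic range given in dB."""
    return 10.0 ** (dynamic_range_db / 20.0)


# Within one scene: a luminance ratio of one million.
intra_scene_db = ratio_to_db(1e6)

# Across scenes: from the scotopic threshold to the damage threshold (cd/m^2).
scotopic_threshold = 1e-6
damage_threshold = 1e8
adaptive_db = ratio_to_db(damage_threshold / scotopic_threshold)

print(f"intra-scene: {intra_scene_db:.0f} dB, with adaptation: {adaptive_db:.0f} dB")
```

Under this convention, the fourteen orders of magnitude covered through adaptation correspond to roughly 280 dB, which illustrates how much of the eye’s range comes from adaptation over time rather than from a single scene.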

Human depth perception is possible due to a variety of different cues, including static and dynamic monocular cues, oculomotor cues (accommodation and convergence), and binocular cues (stereopsis, i.e., binocular disparity) (Schiller et al., 2011; Riener and Harders, 2012). Based on the human ability to detect depth differences at 30 arcseconds disparity (Coutant and Westheimer), we estimate the resolution for depth perception at 10 m distance to be around 0.25 m using basic trigonometry.
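The depth estimate above can be reproduced with a small-angle approximation. For a stereo baseline b and viewing distance z, the vergence angle is about b/z, so a disparity threshold δ maps to a depth difference of roughly Δz ≈ z²·δ/b. The sketch below assumes a baseline (interpupillary distance) of 0.06 m to 0.065 m; this value is not stated in the text and is an assumption, as is the function name.

```python
import math

ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)  # one arcsecond in radians


def stereo_depth_resolution(distance_m: float, disparity_arcsec: float,
                            baseline_m: float) -> float:
    """Smallest resolvable depth difference: dz ≈ z^2 * delta / b (small angles)."""
    if distance_m <= 0 or baseline_m <= 0:
        raise ValueError("distance and baseline must be positive")
    delta_rad = disparity_arcsec * ARCSEC_TO_RAD
    return distance_m ** 2 * delta_rad / baseline_m


# 30 arcseconds disparity threshold at 10 m, for two assumed baselines.
for baseline_m in (0.060, 0.065):
    dz = stereo_depth_resolution(10.0, 30.0, baseline_m)
    print(f"baseline {baseline_m:.3f} m -> depth resolution {dz:.2f} m")
```

With these assumed baselines, the result is on the order of 0.2 m to 0.25 m, consistent with the estimate given in the text. Because the resolution grows with the square of the distance, halving the distance improves it by a factor of four.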

Traditional RGB cameras based on charged-coupled device (CCD) or complementary metal-oxide-semiconductor (CMOS) chip technologies have a narrower dynamic range than the human eye, although the gap is closing. Skorka and Joseph (2011) have published a comparison between the human vision system and artificial vision sensors in 2011. At that time, some CCD image sensors achieved higher spatial resolutions and temporal resolutions than the human eye. On the other hand, dynamic ranges and dark limits were still significantly less than in the human eye with a difference of 1.6–4.5 orders of magnitude. Denominator values of power consumption, mass, and size were significantly higher than those of the human eye. Even when checking the data sheets of the newest CCD and CMOS cameras, these values have not improved.

The work from Skorka and Joseph did not include lens systems and sensors with extremely wide field of view (FOV) or very high framerate. “Fish-eye lenses” on modern devices can provide a 360° FOV by adding only a little mass (less than 150 g) and volume (less than 0.16 L) (Insta360, 2022). Ultra-high-speed cameras can reach up to 1 million frames per second; however, with a rather large mass (about 8 kg), size (about 10 L), and power consumption (about 300 W) (Phantom, 2022). Most camera sensors easily cover the color range of the visible light spectrum, some specialized sensors can detect images even in the infrared or ultraviolet light range.

Latest technologies are event-based cameras, which offer attractive properties compared to traditional cameras: they come with high temporal resolutions (in the order of kHz to MHz), high dynamic ranges, and rather low power consumption (Gallego et al., 2017). Embedded event-camera systems, where the sensor is directly interfaced to a processor, have shown system-level power consumption (i.e., sensing plus processing) of 100 mW and even less. At the die level, most event-based cameras use about 10 mW, and some prototypes achieve less than 10 μW, thus, reaching or even outperforming the values of the human eye. The shortest detectable event of these devices lies in the range of microseconds. Therefore, event-based cameras are used in applications that require high refresh rates and low latencies. Image information from event-based cameras can be fused with signals from depth and RGB perception sensors to obtain accurate depth information (Gallego et al., 2017). Dynamic ranges of event-based cameras are above 120 dB, notably exceeding the 60 dB of high-quality, frame-based cameras, making them able to acquire information from moonlight to daylight, comparable to the performance of the human eye. Chip size is in the range of only 1 cm², not including other hardware components (Gallego et al., 2017). Consequently, with the advent of event-based cameras, we experience artificial vision that is indeed starting to measure up to the human eye.

Lightweight 3D camera systems with time-of-flight or stereoscopic sensors (e.g., Kinect V2 or Intel RealSense D415) have a depth perception uncertainty of around 1.5 mm and a bias of 2–5 mm at a distance of 1 m (Giancola et al., 2018). These sensors have a restricted sensing range of up to a couple of meters, while the sampling rate goes up to 90 Hz (Intel Realsense, 2021). The portable LIDAR sensor Ouster OS2 can measure with a precision of 80 mm at up to 240 m distance, which clearly outperforms human depth perception (Ouster, 2021). However, the measurement system does not allow simultaneous measurement in the whole field of view, and the update rate for a single line of sight is limited, e.g., to 20 Hz for the Ouster OS2.

The density of human tactile receptors varies among different areas of the body and is highest on the tongue. Most relevant is the tactile perception on the fingertips, as the fingers are important for manipulation tasks. At the fingertips, humans can perceive a spatial resolution of 1.8 mm, a sensitivity of 0.2 g/mm², a response threshold range of 0–100 g/mm², and a frequency response of up to 100 Hz (Dargahi and Najarian, 2004). Assuming signal propagation speeds between 2 m/s and 100 m/s and nerve lengths of maximally 1 m, signal latencies are about 10–500 ms (Dargahi and Najarian, 2004). The complete coverage of the skin with tactile sensors allows the human to perceive and interact with the environment in a highly versatile way by including arbitrary body parts in interactions with the environment.
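The latency range follows directly from dividing the nerve length by the propagation speed. The short sketch below reproduces that arithmetic with the values given above; the helper name `latency_ms` is hypothetical, and the calculation ignores synaptic and processing delays.

```python
import math


def latency_ms(nerve_length_m: float, speed_m_per_s: float) -> float:
    """Pure conduction delay in milliseconds (no synaptic or processing delay)."""
    return nerve_length_m / speed_m_per_s * 1000.0


NERVE_LENGTH_M = 1.0                              # maximal nerve length assumed in the text
fastest_ms = latency_ms(NERVE_LENGTH_M, 100.0)    # fastest propagation speed in the text
slowest_ms = latency_ms(NERVE_LENGTH_M, 2.0)      # slowest propagation speed in the text

print(f"latency range: {fastest_ms:.0f} ms to {slowest_ms:.0f} ms")
```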

The latest artificial pressure sensor matrices can easily outperform all these biological values. Sensing devices reach localization accuracies of up to 0.1 mm at a sampling frequency of 100 Hz (Yan et al., 2021). Some of them are inspired by the human vibrotactile sensing principle (Chun et al., 2021; Dahiya et al., 2009). Visual-tactile sensors were successfully used to detect slip (Yuan et al., 2015; Xia et al., 2022). Li et al. present a comprehensive recent review of the state of the art in tactile perception and application (Li et al., 2020a). The progress in tactile sensor developments leads to increased functionality and performance for robotic manipulation (Kappassov et al., 2015; Luo et al., 2017; Yin et al., 2021; Xia et al., 2022).

Standard kinematic sensors can measure angles and distances proprioceptively in the range of angular seconds and micrometers, respectively (Azibil Corporation, 2023). Inertial measurement units can measure angles with 0.6° accuracy and 0.1° precision (Leah et al., 2017), while sensor fusion approaches combine the high precision and accuracy of proprioceptive and visual sensors with the robustness and reliability of vestibular sensors (Wisth et al., 2023). Thus, they easily match or exceed the performance of the human proprioceptive or vestibular systems. For example, conventional position sensors and inertial measurement units provide high-quality signals about posture, movement, and balance. Technical systems are rather compact and lightweight and do not need a lot of power, so that they can be easily implemented in humanoid robots.

Next to tactile sensing, proprioceptive force perception also plays an essential role for humans in controlling haptic interactions with the environment. Although the human body does not have any receptors that detect force directly, it can sense different amounts of forces or weights by integrating tactile and proprioceptive receptor information. Proprioceptive force perception is used to robustly track interaction forces, e.g., when polishing objects, dancing with a partner, or steering a vehicle. As in humans, force perception can be realized using the intrinsic properties of artificial actuators; e.g., with series-elastic or quasi-direct electric motor actuation, a perception of joint loads that is comparable to or even more accurate than that of humans can be achieved. Forces and torques can be measured even more accurately by commercially available force/torque sensors that function on resistive, capacitive, or optical measurement principles. Typical force sensors used in robotics can have accuracies better than 1% of the measurement range (Cao et al., 2021). They easily outperform the human sense.

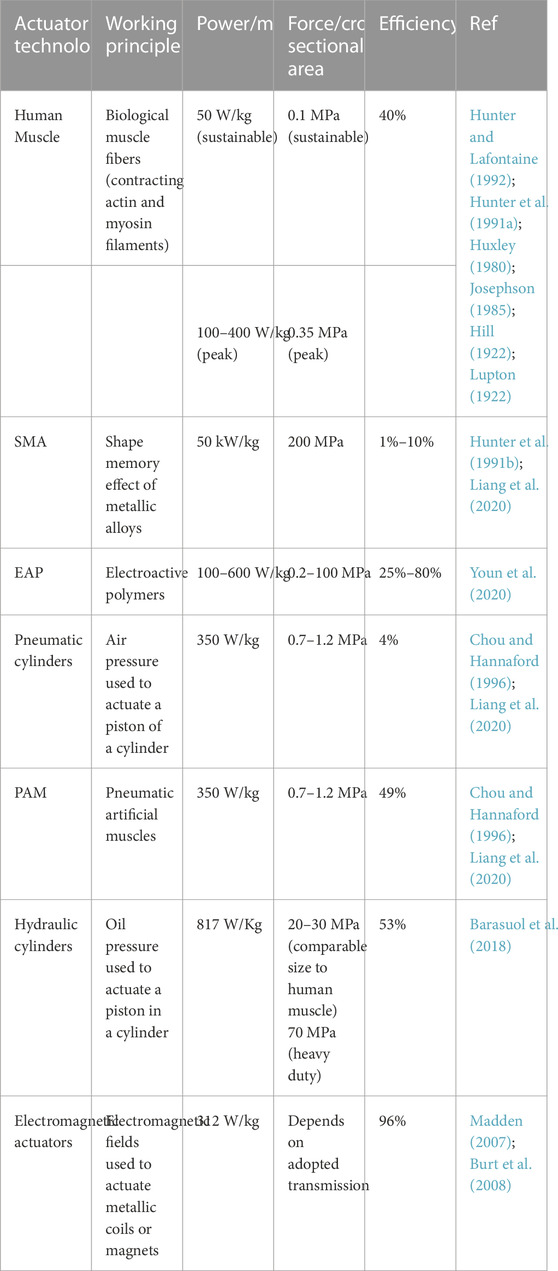

The human muscle is a powerful and efficient organ that can produce high muscle forces requiring little energy supply (see Table 2). For example, the biceps brachii muscle of an average adult human produces a continuous mechanical power output of 50 W/kg (Hunter and Lafontaine, 1992; Hunter et al., 1991a; Huxley, 1980) and peak power to mass values of 100–400 W/kg (Hunter and Lafontaine, 1992; Josephson, 1985; Hill, 1922; Lupton, 1922). Mammalian muscle force normalized by cross-sectional area as a denominator for size can reach values up to 0.35 MPa for peak forces and about 0.1 MPa for sustainable muscle force (Hunter and Lafontaine, 1992; Huxley, 1980). Thus, the sustainable muscle power and force is about 30% (Schoenfeld et al., 2021) to 40% (Leng et al., 2021) or less of the peak value. Mechanical output power in relation to power consumption yields an efficiency of 40% for the biceps muscle (Leng et al., 2021) which is similar for other mammals (Curtin et al., 2018), as work is produced not only mechanically but also metabolically resulting in heat. A specialty of mammalian muscles is their capability to regenerate after injuries and to adapt their dynamic properties (speed, amount of force, fatigue behavior) to slowly varying conditions. The deformable nature of muscles allows a tight packaging spanning one or multiple joints, such as at the shoulder or vertebrae. Further, the series-elasticity introduced by the tendons as well as the antagonistic system allows storage of mechanical energy and selective stiffening up of a joint. For instance, the Achilles tendon can store energy during the stance phase that is released during toe push-off by leveraging the remarkable eccentric strengths of muscles. Moreover, the structure and selective recruitment of muscles enables the impressive versatility from highly delicate interaction to application of large forces and high power.

TABLE 2. Actuator Principles. Comparison of different actuation technologies in power density, efficiency (excluding transmission), and force density.

Most humanoid robots are equipped with electromechanical motors, while for instance Atlas is actuated by hydraulics. Further actuation technologies applied in robotics are pneumatic actuators, such as pneumatic artificial muscles (PAMs such as McKibben actuators), shape memory alloys (SMAs), and electroactive polymers (EAPs). EAPs include dielectric elastomer actuators (DEAs) and relaxor ferroelectric polymers (RFPs). The latest devices also include ionic polymer metal composites (IPMCs) or hydraulically amplified self-healing electrostatic (HASEL) actuators.

Depending on the type and ratio of the accompanying transmission, electromagnetic actuators, also called electromechanical motors, can produce quasi-arbitrarily high forces. Power density values range from 312 W/kg, when using rare earth magnets, to twice that value when the motors are actively cooled (Madden, 2007). Power efficiencies of motors can reach up to 96% (Burt et al., 2008). Thus, modern electromagnetic actuators have a slightly higher power density compared to human muscles.

High-pressure hydraulic cylinders for heavy-duty applications, e.g., the RC10010 (by Enerpac Ltd., United States), can be operated with 70 MPa. However, smaller cylinders and valves built for mobile robotic applications at a scale that is comparable to humans are typically rated for operation with 20 MPa–30 MPa (Barasuol et al., 2018), e.g., Model E081-115 by Moog uses 21 MPa according to the company data sheet. When considering the outer cylinder diameter, the force per area can be substantially lower, e.g., by a factor of 0.53 for RC1514 (by Enerpac Ltd., United States). Thereby, this actuator achieves a power density of 817 W/kg based on the company data sheet and when using the weight reported for the academic equivalent ISA V5 (Barasuol et al., 2018). Thus, hydraulic cylinders achieve force densities that are two orders of magnitude higher than those of human muscles and around double the power density. Pneumatic cylinders and PAMs can generate forces in the range of 0.7 MPa–1.2 MPa and about 3.5-times higher power densities compared to the human muscle (Chou and Hannaford, 1996; Liang et al., 2020). Efficiencies of PAMs are up to 49%, whereas those of pneumatic cylinders are below 4% (Chou and Hannaford, 1996). However, these numbers found for hydraulic and pneumatic actuators do not include the weight of the means for pressure generation and storage.
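As a rough cross-check of these comparisons, the sketch below relates the hydraulic and pneumatic figures to the muscle values reported earlier in this section. It is only an illustration under stated assumptions: the operating pressure is read as the force density, and the muscle reference is taken as the peak stress (0.35 MPa) and the upper end of the peak power range (400 W/kg). The function name `ratio_to_muscle` is hypothetical.

```python
import math

# Muscle reference values quoted earlier in this section (assumed here to be the
# relevant baseline: peak stress and the upper end of the peak power range).
MUSCLE_PEAK_STRESS_MPA = 0.35
MUSCLE_PEAK_POWER_W_PER_KG = 400.0


def ratio_to_muscle(actuator_value: float, muscle_value: float) -> float:
    """How many times larger the actuator value is than the muscle value."""
    return actuator_value / muscle_value


# Hydraulic cylinder: 21 MPa operating pressure, 817 W/kg (values from the text).
hydraulic_force_ratio = ratio_to_muscle(21.0, MUSCLE_PEAK_STRESS_MPA)
hydraulic_power_ratio = ratio_to_muscle(817.0, MUSCLE_PEAK_POWER_W_PER_KG)

# Pneumatic cylinders and PAMs: 0.7 MPa to 1.2 MPa (values from the text).
pneumatic_force_ratios = (
    ratio_to_muscle(0.7, MUSCLE_PEAK_STRESS_MPA),
    ratio_to_muscle(1.2, MUSCLE_PEAK_STRESS_MPA),
)

print(f"hydraulic force density: {hydraulic_force_ratio:.0f}x muscle")
print(f"hydraulic power density: {hydraulic_power_ratio:.1f}x muscle")
print(f"pneumatic force density: {pneumatic_force_ratios[0]:.1f}x to "
      f"{pneumatic_force_ratios[1]:.1f}x muscle")
```

With these reference values, the hydraulic force density comes out at roughly 60 times that of muscle and the power density at roughly twice the muscle value; other baselines (e.g., sustainable instead of peak values) would shift the ratios accordingly.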

SMAs produce forces of 200 MPa and power densities of 50 kW/kg, with an efficiency of only about 1%–10% (Hunter et al., 1991b; Liang et al., 2020). EAPs generate very high force densities of 0.2 MPa–100 MPa (Youn et al., 2020). Power densities are between 100 W/kg and 600 W/kg and efficiencies between 25% and 80% (Youn et al., 2020; Higueras-Ruiz et al., 2021). HASEL actuators produce comparable force densities (0.3 MPa) and higher power densities (614 W/kg), but smaller efficiencies when compared to human muscle (Acome et al., 2018; Liang et al., 2020). IPMC actuators produce higher force densities, but smaller power densities and efficiencies when compared to human muscle (Kim and Shahinpoor, 2003; Liang et al., 2020). So far, these actuation principles are mainly used in nano- and micro-robotics, because of their good performance at small scale. Successful application in human-scaled multi-DOF robots has not yet been demonstrated.

Combustion machines are rarely used in robotics (and were excluded for the use in human-centered domains in this study), although the continuous power density is about 10 times greater than the continuous power output of muscles (Madden, 2007) and the forces can be high using transmissions with high gear ratios. Furthermore, gasoline has a high energy per unit mass that is about 20 times higher than that of a good battery, even after accounting for the approximately 30% efficiency that is typical in combustion processes.

All these actuation technologies (except for IPMCs) outperform human skeletal muscles with respect to force and power densities, when neglecting the weight of energy storage and pressure generation. Besides pneumatic cylinders and SMAs, they are also providing higher power efficiency.

Most artificial actuators outperform the human muscle concerning speed and endurance because the generation of muscular force is bound to rather slow electrochemical processes and the force levels cannot be kept constant over long time periods due to muscular fatigue.

Efficiency values were obtained primarily from Higueras-Ruiz et al. (2021); they consider the total system including off-board energy storage to output work. However, the force and power density data of the technical actuator systems do not include components that store and provide electric, fluidic, or thermal power (e.g., battery, compressor, pressure tank, heating system, respectively). They also do not include components that are required to change actuator speeds (e.g., transmissions and clutches), that take away the heat (e.g., air ventilation or liquid cooling systems), or any other components such as sensors, cables, and electric, thermal, or acoustic insulation. As many of those components can be rather bulky and heavy, they would weaken the force and power density values, which likely gives the human actuator an advantage over artificial ones. Unfortunately, the weight of those auxiliary components is largely unreported in the literature, and it varies a great deal between different solutions and applications. Other studies investigating methods for embodied energy strive towards more lightweight and untethered energy supply and conversion methods (Aubin et al., 2022). For most of these concepts, demonstrations were performed either at a very small, micro-robotic scale, on isolated actuator systems, or on robots with the primary purpose of showcasing the actuation method.

Series-elastic actuation is frequently used in walking systems (Hutter et al., 2016a) or in robots that interact with humans (Vallery et al., 2008; Zimmermann et al., 2019). The energy storage and release capability of these actuators has often been envisioned but has so far barely been exploited successfully by state-of-the-art systems. Series-elastic actuators have further advantages that can be exploited in robotic applications, e.g., the ability to withstand shocks due to mechanical compliance. However, the design and control of robots with such actuators is challenging, because of the additional mechanical components that lead to higher compliance. Many demonstrators with novel series-elastic actuators including variable impedance control exist in the scientific literature (Ham et al., 2009; Vanderborght et al., 2013; Verstraten et al., 2016). There have been a number of tendon-driven series-elastic actuation approaches for bipedal humanoid robot locomotion that aim to utilize the passive synchronization properties of the mono- and biarticular muscle groups, as in the human leg (Seyfarth et al., 2009; Sharbafi et al., 2016; Sharbafi et al., 2016).

Systems realizing more efficient use of materials by embedded energy storage (i.e., combining energy storage with actuation) were demonstrated in less versatile robots applied to less complex cases than humanoid robots (Aubin et al., 2022). Soft actuation principles promise to close the gap in power density and more optimized system integration due to the flexible structures. However, these technologies are to date still in an early phase where prototypes are mostly investigated in isolated settings and not incorporated in full robotic systems (Rothemund et al., 2021).

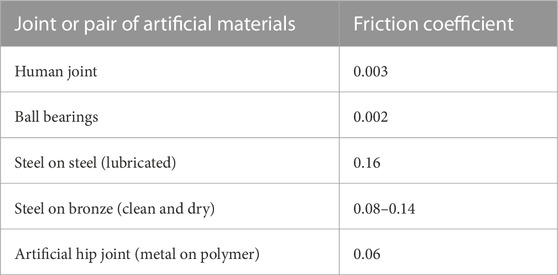

The skeletal systems of the human and the robot consist of links (i.e., bones) and joints that connect the links and allow relative movement. Human bones can transfer rather high mechanical loads and have impressive self-healing capabilities and other relevant functions, such as blood cell production. Most joints in the human body are synovial joints. Ligaments, tendons, and other connective tissues form the articular capsule with a synovial cavity between the bones. Synovial joints are self-lubricating, almost frictionless, and able to withstand heavy loads in the magnitude of the body weight (and higher), while still executing smooth and precise movements. Human synovial joints are difficult to outperform by artificial joints (Table 3). Only very dedicated pairs of materials or ball bearings can compete with the friction properties of human joints. In contrast, robotic joints can have larger ranges of motion, even up to 360° or more, which is not possible with physiological joints.

TABLE 3. Friction coefficients of different joints and pairs of materials and a human joint (Serway and Vuille, 2014; Sagbas, 2016; Koyo Bearings/JTEKT CORPORATION, 2022; Engineering ToolBox, 2022b; Copper, 2022).

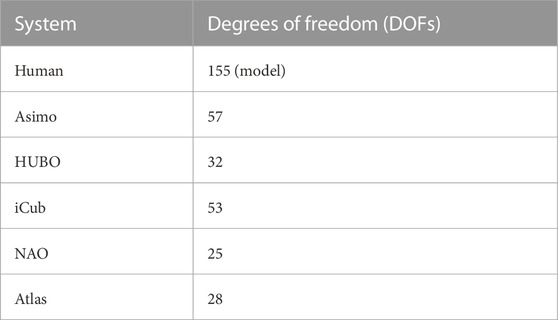

The adult human body comprises 206 bones that are connected by joints. Each of these joints has negligible, one, or multiple degrees of freedom. The number of degrees of freedom per joint depends on the complexity of the kinematic model. For example, the knee joint not only flexes in the sagittal plane of the body, but it can also move outside the sagittal plane (“varus-valgus”) or rotate around its longitudinal axis. Due to the mechanical compliance of the joint capsule and cartilage, it can even move in translational directions. Thus, knee joint models can vary between one and six DOF. Even simpler models count about 155 DOF (Venture et al., 2007) in the human skeleton. This high number of DOF enables a large range of motion at certain body regions such as the shoulder or the trunk, allows manifold, dexterous grips of the hand, and generates vast kinematic redundancy of the extremities when interacting with objects, humans, or other robots. Even the most advanced humanoid robots have at most one-third of the DOF of the human skeleton (Table 4).

TABLE 4. The number of degrees of freedom (DOF) of different robots in comparison to a simplified model of the human (Takiyama et al., 2020; Yamane et al., 2005; Li, 2006; ROBOTIS, 2022; ikinamo, 2009b; Samson et al., 2001b; Hutter et al., 2016b).

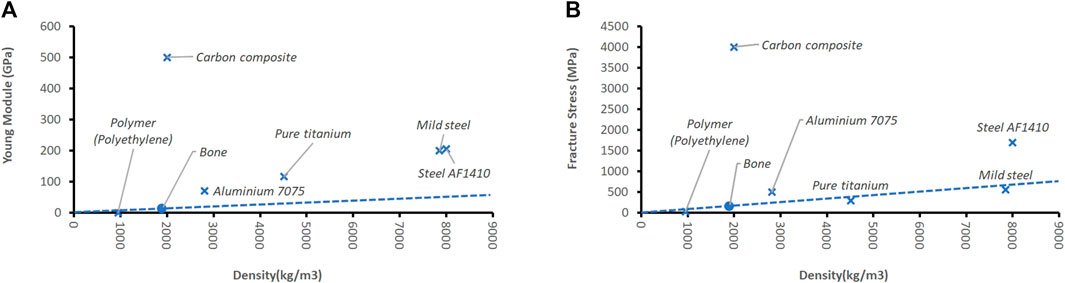

In robots, bones are represented by rigid links (bars) made of metal, polymers, or carbon composites. Many metals and carbon composites do have better stiffness and fracture properties than human bones (Figure 2).

FIGURE 2. Two features to describe the properties of bones are elasticity expressed by Young’s modulus (A) and fracture stress (B). Features are normalized by the denominator bone density, which correlates to the mass of the material. (The world material, 2022; Cameron et al., 2000; HABA, 2021; Garrison, 1990; Cao et al., 2009; HABA, 2021; Garrison, 1990; Reed and Brown, 2001). The dotted line indicates the linear extrapolation of the normalized average human performance.

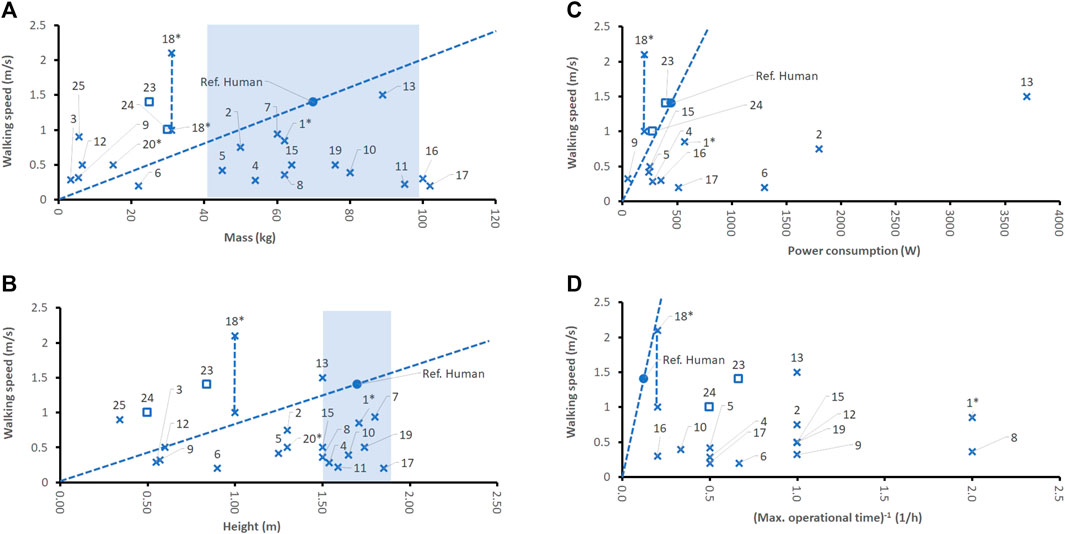

Normal walking is one of the most developed functions of humanoid robots. The preferred natural walking speed of humans is about 1.4 m/s (Samson et al., 2001a). We compared the natural human walking speed with those of the 22 bipedal robots found in this study. Walking speed has been related to the denominators mass, size, power consumption and inverse of the operational time.

When relating natural human walking speed to mass or size (Figures 3A, B), one can see that many of the robots cannot compete with humans, because their walking speeds in relation to their physical dimensions (expressed by robot mass and robot height) are lower than those of humans. In contrast, Cassie clearly outperformed the human test case with respect to natural gait stepping speed. However, Cassie has neither a torso nor arms or a head, giving it an advantage with respect to its mass. The quadruped robots Spot, ANYmal, and Titan clearly outperformed the human test case with respect to natural gait stepping speed. Other robots, such as HR 18, NAO, QRIO, Asimo, LOLA, and Atlas, show similar walking speed performances to humans. Flame performs about as well as humans when relating gait speed to its mass, but not when relating it to its height, because this robot is rather “skinny,” thus, lightweight, and tall (only around 16 kg at heights of more than 1.2 m). From a pure kinematic point of view, a tall robot has an easier job performing large steps leading to a higher gait speed. Also, when relating natural walking speed to power consumption, not many robots can outperform humans (Figure 3C). Human power consumption during walking was assumed to be 450 W. Only Cassie, NAO and Robotis outperform human walking speed when relating it to power consumption. All these robots have very small masses and heights, making it obviously easier to realize an efficient energy concept. Cassie, a robot without even a torso and one of the newest bipedal robots, reaches a maximum walking speed of 2.1 m/s (Michigan Robotics, 2020); it consumes about 200 W when walking at a speed of 1 m/s (Kashiri et al., 2018). In contrast, Atlas requires more than ten times more power to reach about the same walking speed as Cassie. Digit (C et al., 2021; NED Directory, 2023; Robots, 2019), the successor of Cassie, has a maximum walking speed of only 1.5 m/s.
Furthermore, Digit is heavier than Cassie (42.2 kg–48 kg, depending on source, vs. 31 kg) and taller than Cassie (1.55–1.58 m vs. 1.15 m), which worsens the robot’s features when normalizing them with respect to denominators mass and size. Digit’s run time is equal to the one of Cassie (light duty run time: 3 h, heavy duty run time: 1.5 h). It seems that Digit’s additional trunk and arms (despite the great additional functions) reduces its performance in our metrics compared to Cassie.
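The normalization used in this comparison can be sketched in a few lines. The example below divides the maximum walking speed by mass and by height for Cassie and Digit, using the values quoted above; for Digit, the lower bounds of the reported mass and height ranges are used, which is an assumption that favors Digit. The class name `Walker` and its methods are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Walker:
    """A walking system with the three quantities needed for the normalization."""
    name: str
    speed_m_s: float
    mass_kg: float
    height_m: float

    def speed_per_mass(self) -> float:
        """Walking speed normalized by mass, in (m/s)/kg."""
        return self.speed_m_s / self.mass_kg

    def speed_per_height(self) -> float:
        """Walking speed normalized by height, in (m/s)/m."""
        return self.speed_m_s / self.height_m


# Values from the text; Digit uses the lower bounds of the reported ranges.
cassie = Walker("Cassie", speed_m_s=2.1, mass_kg=31.0, height_m=1.15)
digit = Walker("Digit", speed_m_s=1.5, mass_kg=42.2, height_m=1.55)

for robot in (cassie, digit):
    print(f"{robot.name}: {robot.speed_per_mass():.3f} (m/s)/kg, "
          f"{robot.speed_per_height():.2f} (m/s)/m")
```

Even with the most favorable values for Digit, both normalized speeds are roughly half of those of Cassie, in line with the observation that the additional trunk and arms reduce performance in these metrics.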

FIGURE 3. Comparison of robots’ walking speeds for bipeds (x), quadrupeds (□), and legged robots with wheels (o) with respect to denominators mass (A), height (B), power consumption (C), and the inverse of the maximum operational time (D). Bipeds without humanoid upper bodies (without torso, or without functional hands or head) are marked by a star (x*). Maximum operational time and power consumption refer to common activities, such as walking. Note that not all robot sources provided data about all features studied. For the human test case, the so-called “preferred walking speed” (Samson et al., 2001a) was chosen. For Cassie (no. 18) two different walking speeds have been reported in the literature. Both are displayed and connected by a vertical line. The dotted line indicates the linear extrapolation of the normalized average human performance. The blue shade indicates a typical range of the denominator for humans (2nd percentile female to 98th percentile male).

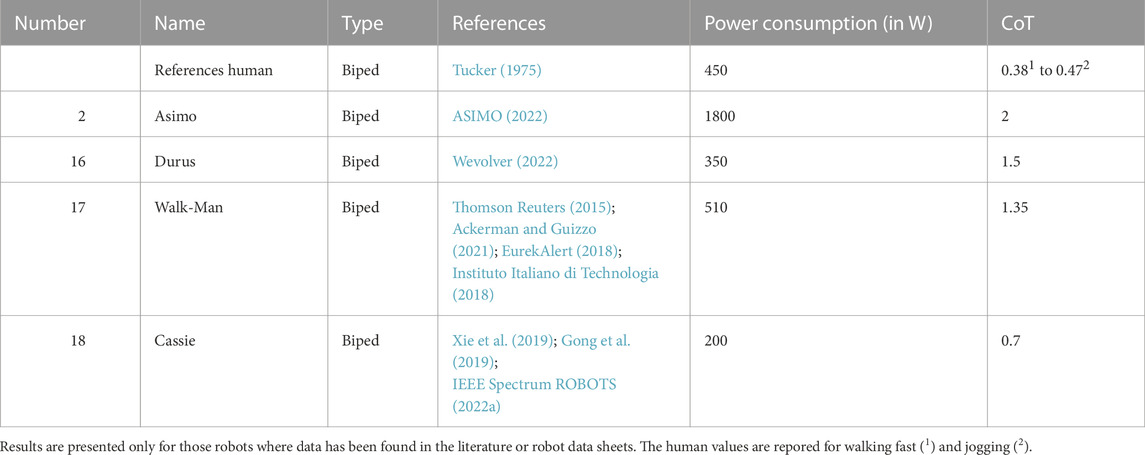

Instead of power consumption, the Cost of Transport (CoT) can also be used to normalize walking speed and compare it among different robots and the human. CoT, also called “specific resistance,” expresses power consumption in relation to mass and locomotion speed. The CoT of biological systems is far superior to that of robots (see Table 5). The reported CoT values of humans and robots are sample values taken from the mentioned references. The CoT is a function of the gait type, walking speed conditions, the kinematic characteristics of the individual, etc. Thus, reported CoT values might vary between references.
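A minimal sketch of the calculation is given below, assuming the common dimensionless definition CoT = P / (m · g · v). The power and speed values are those quoted in this section (450 W for human walking at 1.4 m/s; about 200 W for Cassie at 1 m/s with a mass of 31 kg). The human body mass of 70 kg is an assumption introduced here for illustration, so the results are not expected to reproduce the entries of Table 5 exactly.

```python
G = 9.81  # gravitational acceleration in m/s^2


def cost_of_transport(power_w: float, mass_kg: float, speed_m_s: float) -> float:
    """Dimensionless cost of transport, CoT = P / (m * g * v)."""
    if mass_kg <= 0 or speed_m_s <= 0:
        raise ValueError("mass and speed must be positive")
    return power_w / (mass_kg * G * speed_m_s)


# Human: 450 W at 1.4 m/s (from the text); 70 kg body mass is an assumption.
human_cot = cost_of_transport(power_w=450.0, mass_kg=70.0, speed_m_s=1.4)

# Cassie: about 200 W at 1 m/s with a mass of 31 kg (from the text).
cassie_cot = cost_of_transport(power_w=200.0, mass_kg=31.0, speed_m_s=1.0)

print(f"human CoT  ~ {human_cot:.2f}")
print(f"Cassie CoT ~ {cassie_cot:.2f}")
```

Because the CoT divides by both mass and speed, a light robot that walks slowly can still show a higher CoT than a heavier human, even if its absolute power consumption is lower.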

TABLE 5. Comparison of Cost of Transport (CoT) and power consumption of different robots for walking.

The data found on operational time refer to normal usage conditions and not to any extreme condition. All robots functioning with an autonomous energy supply (i.e., portable batteries) exhibit performance inferior to the human test case when relating their walking speed to the operational time (Figure 3D). Although robots such as Cassie and Atlas reach rather high walking speeds, their operational time is significantly lower compared to humans.

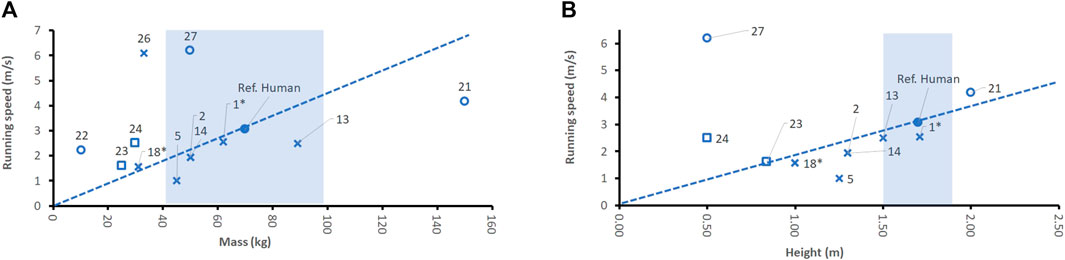

Running is defined as a legged locomotion pattern that includes a short flight phase in which both feet do not touch the ground. Running can be characterized by running speed and endurance. In the human-centered domain, there is a high variability of performance among individuals. The world’s best athletes can finish a marathon distance at an average pace of about 21 km/h or run 160 km with 10 km elevation gain in little more than 1 day (26.4 h) (Swiss Alps 100, 2021; World Athletics, 2022). For an average human, we estimate the operational time for running to be at least 1 h. Assuming an average jogging performance, a healthy young adult can run at an average pace of 3.06 m/s for quite long times (Barreira et al., 2010), with an optimal, energy-efficient running speed of 3.7 m/s for males (Steudel-Numbers and Wall-Scheffler, 2009).
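Since the figures above mix km/h and m/s, a short conversion helps to put them on one scale. The sketch below uses only the values quoted in this paragraph; the helper name `kmh_to_ms` is illustrative, and the ultramarathon pace is a simple distance-over-time average that ignores the elevation gain.

```python
def kmh_to_ms(speed_km_h: float) -> float:
    """Convert a speed from km/h to m/s."""
    return speed_km_h / 3.6


marathon_ms = kmh_to_ms(21.0)    # elite marathon pace (from the text)
ultra_km_h = 160.0 / 26.4        # 160 km in 26.4 h, average over the whole race
ultra_ms = kmh_to_ms(ultra_km_h)
jogging_ms = 3.06                # average jogging pace (from the text)

print(f"elite marathon: {marathon_ms:.2f} m/s")
print(f"160 km ultra:   {ultra_ms:.2f} m/s ({ultra_km_h:.2f} km/h)")
print(f"average jogger: {jogging_ms:.2f} m/s ({jogging_ms * 3.6:.1f} km/h)")
```

The average jogging pace thus lies between the ultramarathon average and the elite marathon pace.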

Some legged robots can also perform a running pattern. One of the newest and best performing humanoid robots, Atlas, has a battery cycle time of about 1 h for running and reaches a maximum running speed of only 2.5 m/s, which is inferior to the human. The robots ATRIAS, Asimo, and Toyota Partner jog with a performance similar to that of humans when referring to their mass and size (Figure 4). For most robots, there are no data available about running endurance and power consumption.

FIGURE 4. Comparison of running speeds for bipeds (x) and quadrupeds (□) with respect to denominators mass (A) and height (B). Biped robot ATRIAS (no. 1) has no arms and no head and is, therefore, marked by x*. Note that not all robot sources provided data about all features studied. The running speed of the human refers to a human jogger running at a pace of 3.06 m/s (Barreira et al., 2010). The dotted line indicates the linear extrapolation of the normalized average human performance. The blue shade indicates a typical range of the denominator for humans (2nd percentile female to 98th percentile male).

The CoT of MIT Cheetah (0.52) came closest of all robots to the CoT of a human (0.2–0.47). Further, MIT Cheetah outperforms a jogging human: it runs at a pace of 6.1 m/s and weighs only 33 kg (Figure 4A). Obviously, four legs enable much faster running speeds than two legs. Its drawbacks, however, are that its power consumption is high [973 W (Seok et al., 2014)] and that its operational time cannot compete with that of a jogging human. Moreover, Cheetah did not achieve this performance while transporting means for manipulation.

Adding trunk and arms for functions other than locomotion, such as perception and manipulation, would further downgrade the running performance of the robots. One of the reasons why it is so difficult to outperform human running performance could be that mammals such as humans possess elastic components (tendons) that store and release potential energy and compensate for shocks when the foot touches the ground. This makes running very energy efficient, keeps the foot on the ground, and avoids too much shaking of the other parts of the body. Most humanoid robots do not contain such components yet.

Legged robots rolling on wheels can outperform humans when referring the feature running speed to the denominators mass and/or size. Some recent examples of such robots are Handle and the version of ANYmal equipped with wheels connected to its feet (Bjelonic et al., 2020; IEEE Spectrum Robots, 2021), while the idea of combining wheels with legs is much older and shares a blurry border with active suspensions (Smith et al., 2006). ANYmal on wheels achieves a locomotion speed of 6.2 m/s using the wheels for propulsion (Swiss Mile, 2022). However, comparing this with the running speed of an average human might not be a fair comparison, as the human could also use rolling means of transport (e.g., roller blades).

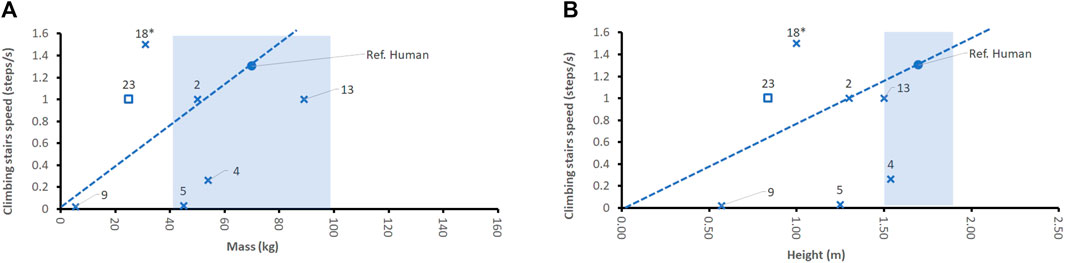

The average stair climbing speed of an adult human male or female is about 0.66 m/s or 0.48 m/s, respectively (Choi et al., 2014), which corresponds to an average of 1.3 steps per second (Hinman et al., 2014). Also, most legged robots can ascend and descend stairs. The stepping speed of the robots has been estimated from public video material and displayed in relation to mass or height (Figure 5), see Supplementary Appendix, as there was no quantitative information available in the literature or in the data sheets of the devices.

FIGURE 5. Comparison of robots’ stair climbing speeds for bipeds (x) and quadruped Spot (□, no. 23) with respect to denominators mass (A) and height (B). Cassie (no. 18) has no torso and no arms and head and is, therefore, marked by x*. Note that not all robot sources provided data about all features studied. The dotted line indicates the linear extrapolation of the normalized average human performance. The blue shade indicates a typical range of the denominator for humans (2nd percentile female to 98th percentile male).

Except NAO, all the stepping robots considered in this paper can overcome step sizes that are comparable to steps in human-centered domains, or even higher. Asimo and Atlas perform stepping speeds that are comparable to the human test case. These two robots have body masses and heights that are like those of an average human. Only Cassie and Spot outperform a human, as they can reach about the same numbers of steps per second, as a human, at human-sized steps. However, Cassie has a body mass that is less than half of an average human. It seems to have again an advantage, thanks to its missing torso and arms. However, this prevents its application for other tasks such as carrying and manipulating objects. And operational time is probably lower than that of a human walking.

A company video (Smith Marshal and Marshal Smith Technology, 2018) shows the quadrupedal robot Spot climbing a normally sized staircase in a human laboratory environment. The robot climbs 7 steps in about 7 s, thus achieving a stair climbing speed of 1 step/s (see Figure 5).

There are robots that are designed to specialize in mimicking human gestures, e.g., the RASA (Meghdari et al., 2019). Other devices can manipulate their environment, while optimizing the posture for improved load transfer in confined workspaces (The Verge, 2015). These robots perform well in tracking precise end-effector trajectories if the attached load is constant. However, for tasks involving heavy interaction, e.g., cutting with power tools or determining precise and accurate position trajectory, tracking is a challenge (Gawel et al., 2019; The Verge, 2015). Further relevant human body functions listed by the International Classification of Functioning, Disability and Health (ICF) are lying down, squatting, kneeling, sitting, standing, bending, and shifting the body’s center of gravity (World Health Organisation, 2007). Those functions can be performed by some, but not many humanoid robots (Tektronics Expert, 2016; Fakultät für Elektrotechnik und Informationstechnik, 2022; Romualdi et al., 2018; Beira et al., 2006; Pontes, 2019). iCub, for example, is instrumented with very precise position and inertial sensors. Consequently, iCub can track trajectories and perform its postures with a very high repetition accuracy, perhaps comparable to those of Tai-Chi athletes (Poppy Mosbacher, 2020). Thus, iCub outperforms the average human with respect to posture accuracy, as many other robots do, even if their repertoire of implemented postures is much smaller. Furthermore, robots have a better postural endurance than humans. The ability to control body postures in a humanoid robot (as well as posturally stable walking and running) is closely related to two key functions: the robot’s artificial vestibular sensing system (i.e., an inertial measurement unit providing sufficiently fast and accurate 3D linear acceleration and 3D rotational angular velocities, and/or visual sensors) and the actuators being able to perform sufficiently fast enough motions. 
In addition to the actuation system (e.g., electric motor), brake systems can be deployed that use form fits or friction to lock the robot’s joints. While these systems require little to no power to hold the position, they add weight and volume to the system and consume energy to change the state. Dexterity and velocity in performing such postures and movements, however, remain superior in humans.

Comparing capable robots with the average human can provide valuable information on the agility of these robots in rough natural terrains. The very dexterous platform, Atlas, can jump as high as an adult human, do backflips, and negotiate highly challenging artificial terrains (Boston Dynamics Support Center, 2021). Crawling on the ground can be performed by the small humanoid, iCub. With an estimated crawling speed of 0.03 m/s (i. HumanoidRobot, 2010) it is relatively slow with a body height of 1 m compared to humans crawling up to 1.47 m/s (Gallagher et al., 2011). The very specialized humanoid robot Swumanoid (Plastic Pals, 2022) can perform a crawl swim at 0.12 m/s (Nakashima and Kuwahara, 2016), while average human swimmers reach 0.96 m/s (Seifert et al., 2010). The bipedal rolling robot Handle can jump up to 1.2 m (Boston Dynamics, 2017; IEEE Spectrum Robots, 2021). Quadrupedal walking robots like ANYmal and Spot recently have shown great performance in negotiating rough terrain robustly. ANYmal was shown to negotiate rough terrain matching the speed expected for an average human on a 2.2 km hike and 120 m elevation gain in a total time of 78 min, while being lighter than a human (Miki et al., 2022). Further, the ANYmal robot demonstrated robust performance in narrow subterranean tunnels (Tranzatto et al., 2022).
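The speeds quoted above can be set side by side with a short calculation. The sketch below computes the robot-to-human speed ratio for crawling and swimming and the average speed of the ANYmal hike; all numbers are taken from this paragraph, the hike speed is a simple distance-over-time average that ignores the elevation gain, and the helper names are illustrative.

```python
def speed_ratio(robot_m_s: float, human_m_s: float) -> float:
    """Robot speed expressed as a fraction of the human reference speed."""
    return robot_m_s / human_m_s


def average_speed_m_s(distance_km: float, duration_min: float) -> float:
    """Average speed in m/s for a distance in km covered in a time in minutes."""
    return distance_km * 1000.0 / (duration_min * 60.0)


crawl_ratio = speed_ratio(0.03, 1.47)          # iCub vs. human crawling
swim_ratio = speed_ratio(0.12, 0.96)           # Swumanoid vs. average human swimmer
anymal_hike = average_speed_m_s(2.2, 78.0)     # ANYmal hike: 2.2 km in 78 min

print(f"iCub crawls at {crawl_ratio:.1%} of human crawling speed")
print(f"Swumanoid swims at {swim_ratio:.1%} of average human swimming speed")
print(f"ANYmal hike average speed: {anymal_hike:.2f} m/s")
```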

The DARPA Robotics Challenge required the participating humanoids to drive a small vehicle a short distance by interacting with a steering wheel and one pedal. Many platforms have consequently been programmed to drive autonomously or under teleoperation. As shown by Goswami and Vadakkepat (2019), the robot HRP2 could successfully perform this task by driving a small buggy. A similar performance was observed for the IIT robot Walk-Man (Instituto Italiano di Technologia, 2015) and other robots that participated in the DARPA Challenge. However, the vehicle was specially designed for this challenge, allowing simplified access and control, and the driving was relatively slow. The dexterity of the driving robot was far inferior to that of an average human driver (Signe Brewster, 2015).

The Swiss State Secretariat for Economic Affairs outlines the maximum allowed loads carried by (human) workers, depending on their age and gender, and ranging from 10 kg to 25 kg (Swiss State Secretariat for Economic Affairs, 2016). However, the weights carried by a well-trained human can be much higher. The robot Atlas can successfully lift and carry 5 kg boxes, while the payload of Toro was 10 kg. The Handle robot can carry weights of up to 45 kg, but only when it is rolling, not stepping (Boston Dynamics, 2017; IEEE Spectrum Robots, 2021).

In the Humanoid League of the RoboCup, autonomous robots with human-like bodies and senses play soccer against each other in a competition. Unlike for robots outside of the Humanoid League, walking, running, kicking the ball, balancing, visual perception, self-localization, localization of the overall soccer scenario, team play, strategy planning, etc. must be human-like. Several of the best autonomous humanoid robots in the world, such as NAO, compete in this competition. While these humanoid robots fulfill structural demands, such as the right anatomy to trap and shoot the ball and locomote between other (robotic) soccer players, they still lack dexterous motor functions, such as dribbling, sensory functions, such as an accurate localization of the ball and other players, and higher cognitive functions, such as strategy planning. Consequently, soccer capabilities, especially game speed and dexterity, are still far below those of human soccer players. When establishing the RoboCup initiative in the 1990s, the organizers envisioned building humanoid robots that are capable of beating the human world champion team, playing according to the FIFA rules, by 2050 (Gerndt et al., 2015).

The human hand has approximately 27 degrees of freedom (DOF), with 6 actuators and 4 DOF for each finger (Goswami and Vadakkepat, 2019). The spatial accuracy of the hand of a human surgeon can reach values of less than 1 mm (Kwartowitz et al., 2007; Choi et al., 2009), while the same hand is capable of generating fist forces of more than 484 N (male, mean + 1SD) (Hogrel et al., 2007). Humans are highly capable of exploring and manipulating objects due to their efficient sensory and motor functions. Human sensation employs sensor fusion of tactile, proprioceptive, visual, and auditory modalities with high spatial and temporal resolution, leading to the rich information required to interact with the environment. Furthermore, human muscles are actuated in a highly selective way with respect to space and time, due to the presence of many small motor units that can be recruited independently. Therefore, humans are highly efficient in bimanual manipulation and task execution. One of the most agile robotic hands integrated into a humanoid is that of iCub, which has 9 DOF in total. Humanoid hands that are detached from a robotic trunk and mounted to a rigid frame can have a higher number of DOF (Szkopek and Redlarski, 2019). For example, the Gifu Hand III has 16 DOF (Kawasaki and Mouri, 2019), and the Shadow Hand even has 20 DOF (Rothling et al.). Recently published review articles present the current state of the art and future trends of robotic grasping and manipulation, including sensing, actuation, and control (Billard and Kragic, 2019; Cui and Trinkle, 2021; Sleiman et al., 2019; Yin et al., 2021).

Purpose-built robots such as the surgical tele-manipulator DaVinci achieve accuracies of about 1 mm (Kwartowitz et al., 2007) in dexterous multi-DOF robotic manipulation. Thus, they can compete with human surgeons regarding accuracy. However, the DaVinci has a fixed base, is 175 cm tall, and has a mass of 545 kg (intuitive, 2022). Similarly, robots built for pick-and-place exceed human performance in speed [e.g., YF003N (Kawasaki, 2023)]. However, these robots do not meet our inclusion criteria regarding mobility.

Human-centered domains challenge robots with a large variety of shapes, softness, and surface properties of objects to be manipulated. To cope with this large variety of objects, novel machine learning methods applied to sensor fusion and control seem to be promising (Cui and Trinkle, 2021). Rakita et al. (2019) introduced a method to transfer human bimanual manipulation strategies to a humanoid robot. A shared-control policy allows the user to telemanipulate the robot, while being supported by an assistance controller that identifies typical patterns from a bimanual action library in the human commands (Rakita et al., 2019). Understanding the tactile perception of shapes, stiffnesses, and contact states (e.g., slip) is crucial to advance manipulation capabilities in robots. Recent developments in visual perception processing have improved vision-based object localization, tracking, and semantic characterization. Many of the methods developed for visual perception also find applications in the processing of tactile sensor signals (Luo et al., 2017). The fusion of tactile, visual, and proprioceptive sensing modalities leads to versatile and robust object and contact state perception (Xia et al., 2022). Li et al. (2020b) demonstrated the use of a multi-modal tactile sensor to classify objects based on pressure, temperature, and thermal conductivity information. This progress has also advanced the manipulation of deformable objects, which is still one of the biggest challenges for robot manipulation (Yin et al., 2021). Because of the highly efficient sensory and motor functions in humans, robots still perform worse in dexterous manipulation tasks, particularly when complex tactile perception is demanded. Some robotic systems are capable of choosing and connecting different robotic hands to their most distal limb to allow a higher versatility of tasks, e.g., to switch between precision and power grip (Billard and Kragic, 2019).

Despite the recent advancements in the development of robotic hands and arms for mobile robots, mobile robotic manipulation lags far behind average human skills. One eminent difference is the lack of versatility in robotic hands, which prevents them from performing both precise finger movements and powerful grasping and manipulation tasks using a large number of DOF. In addition to the mechanical challenges, control and perception also pose challenges to mobile robotic manipulation. Arguably, the biggest discrepancy between human and mobile robotic manipulation is the semantic and physical understanding of the environment the robot is interacting with (Billard and Kragic, 2019). This is likely why work environments for robots are still structured to allow robots that are less versatile than humans to successfully perform manipulative tasks.

Motor learning performance is difficult to quantify in numbers. Human and machine learning follow different paradigms but are becoming more and more comparable as the performance of CPUs and artificial intelligence algorithms increases. Human motor learning follows a learning curve that is estimated to lead to acceptable performance within 20 h of practice; however, more than 10,000 h are required to become fully proficient in a complex motor skill, such as surgery (Hirschl, 2015; Kaufman, 2013). Machine learning algorithms can be much faster than humans in reinforcement learning of motor abilities, as multiple scenarios can be evaluated in parallel physics simulations (Miki et al., 2022; Hwangbo et al., 2019).

Artificial vision sensors based on traditional CCD and CMOS chip technology can outperform the eye in temporal and spatial resolution. However, comparisons must be interpreted with care, because biological and artificial vision systems are based on different design principles and functional paradigms. While digital cameras work with discrete frame rates and pixel numbers, the human eye follows the laws of neural activation, resulting in a fusion of continuous stimulations (Scharnowski et al., 2007). Human vision works with higher-level interpretations and sensor fusion to augment the perceived image information.

CCD- and CMOS-based digital camera systems are inferior to the eye in other features: they have smaller dynamic ranges and higher dark limits than the human eye, and the normalization denominators (mass, size, and power consumption) are larger. Specialized RGB sensors can outperform the eye when dealing with non-visible light or in very dark environments, where the human eyes do not perform at all. However, these specialized sensors tend to trail behind nature regarding other specifications.

At the cost of relatively high mass and volume, LIDAR sensors can perceive depth with high accuracy, clearly superior to human eyesight. However, due to the measurement principle, the latency of measurement updates for a field of view is higher, thus inferior to the human eye. Therefore, structured light and stereoscopic sensor technologies, which are characterized by low latency, but less accurate depth perception, are often used in combination with LIDAR sensors for the analysis of the environment close to the robot where fast reactions are needed.

The latest event camera technologies do finally compete with the performance of the human eye with respect to latency, dynamic range, motion blur, and power consumption (Gallego et al., 2017). However, there are different trade-offs involved, such as latency vs. power consumption, sensitivity vs. bandwidth and processing capacity, or camera size vs. spatial resolution. Overall performance could be further improved by pairing an event camera with depth sensor technologies or an RGB camera to provide 3D depth information and a larger color range, respectively.

Conventional vision systems on board humanoid robots might be sufficient for most human-like tasks in human-centered domains, as for most tasks, optical challenges are not extreme. Furthermore, only one or two vision sensors are required, and not hundreds of units (as for the joints and actuators), so that additional mass, size, and power consumption may not overly compromise the entire robotic system. Last, but not least, the visual perception of the environment can be augmented by scans obtained from a set of stationary camera systems and/or cameras mounted on other robots and unmanned aerial vehicles (UAVs) sharing the same domain.

Artificial microphones outperform the human hearing system in terms of perceived frequency range and detection threshold sensitivity. Sound localization accuracy, however, is only better when using larger microphone setups that may not be carried by mobile platforms.

Artificial pressure sensor matrices can outperform human tactile perception. Other sensor technologies outperform proprioceptive and vestibular senses.

The power efficiency of human muscles is better than that of SMAs and pneumatic cylinders, but most electromechanical and hydraulic systems, PAMs, and EAPs outperform human muscle efficiency, even when considering off-board energy storage. Most artificial actuator technologies also outperform the human muscle with respect to maximum speed and endurance.

It seems that many technical actuator systems also outperform the human muscle with respect to generated force and power density, but only when excluding the mass of the power supply and other technical components that are required to provide pressure (compressor, pump, pressure tank/reservoir), heat, or high voltage, i.e., only for a tethered system. Many actuators require heavy active cooling systems. Electromechanical actuators require heavy transmissions to reduce the high rotational speed that they generate (Madden, 2007). Some kinds of EAP technologies require high voltages, which makes handling difficult or even dangerous (Chen et al., 2020). Those auxiliary components need to be carried by the robot if it is made completely untethered, as in the hydraulically driven (and heavy) Atlas robot (Ackerman, 2015).

Unlike artificial actuators’ metrics, the metrics for biological muscles account for the weight of auxiliary components such as the power source (organic compounds, such as adenosine triphosphate (ATP), that store and provide energy to drive many cellular processes). Normalizing human and robot actuation so that the weight of all components required to run the actuation is included can improve the specific metrics of biological muscles relative to those of artificial actuators (Higueras-Ruiz et al., 2021).
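The effect of this normalization can be illustrated with a minimal Python sketch. It assumes the simplest possible form, in which specific power is output power divided by the total mass counted; the function name and all numerical values are hypothetical placeholders chosen for illustration and are not measurements of any actuator discussed here.

```python
def specific_power(power_w, actuator_mass_kg, auxiliary_mass_kg=0.0):
    """Power per unit mass (W/kg), optionally counting auxiliary components."""
    total_mass_kg = actuator_mass_kg + auxiliary_mass_kg
    if total_mass_kg <= 0:
        raise ValueError("total mass must be positive")
    return power_w / total_mass_kg


# Hypothetical placeholder values, for illustration only (not measured data).
POWER_W = 1000.0           # mechanical output power
ACTUATOR_MASS_KG = 1.0     # actuator alone
AUXILIARY_MASS_KG = 4.0    # e.g., pump, reservoir, cooling, power supply

# Tethered case: auxiliary components are off-board and not counted.
tethered = specific_power(POWER_W, ACTUATOR_MASS_KG)

# Untethered case: auxiliary components are carried and therefore counted.
untethered = specific_power(POWER_W, ACTUATOR_MASS_KG, AUXILIARY_MASS_KG)

print(f"tethered:   {tethered:.0f} W/kg")
print(f"untethered: {untethered:.0f} W/kg")
```

In this made-up case, counting the auxiliary mass reduces the specific power by a factor of five, which shows why a comparison against muscle depends strongly on what is included in the denominator.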

Internal combustion engines seem to be an attractive option for use onboard a robot because of the high energy density of gasoline. However, according to Madden (2007), combustion engines have two main drawbacks: first, the engine operates only over a narrow range of speeds, requiring heavy transmissions and slip clutches; and second, the machines produce unwanted side effects such as heat, noise, and fumes. For these reasons, we have excluded such engines from this comparative study.