
BRIEF RESEARCH REPORT article

Front. Robot. AI, 10 April 2023

Sec. Human-Robot Interaction

Volume 10 - 2023 | https://doi.org/10.3389/frobt.2023.1112986

This article is part of the Research Topic Human-in-the-Loop Paradigm for Assistive Robotics.

A crucial aspect of human-robot collaboration is robot acceptance by human co-workers. Drawing on previous interactions with other people, humans are able to recognize the natural movements of their companions and associate them with trust and acceptance. Throughout this process, judgment is influenced by several percepts, first and foremost visual similarity to the companion, which triggers a process of self-identification. When the companion is a robot, the lack of these percepts hinders self-identification and inevitably lowers the level of acceptance. Hence, while the robotics industry is moving towards manufacturing robots that visually resemble humans, it remains an open question whether robot acceptance can be increased by virtue of the movements robots exhibit, regardless of their exterior appearance. To help answer this question, this paper presents two experimental setups for Turing tests, in which an artificial agent performs human-recorded and artificial movements, and a human subject judges the human likeness of each movement under two conditions: by observing the movement replicated on a screen and by physically interacting with a robot executing it. The results reveal that humans are more likely to recognize human movements through interaction than through observation, and that, under the interaction condition, artificial movements can be designed to resemble human ones so that future robots are more easily accepted by human co-workers.

The human acceptance of robots’ behavior is key to designing suitable human-robot interaction schemes and related control algorithms (Kim et al., 2012). From the human standpoint, robots should mimic human motor functions. Possible strategies are to design robots with anthropomorphic aspects (Averta et al., 2020) and/or to assign them a specific behavior, concerning movement, speech and visual/facial gestures, shaping their interaction with humans (Kelley et al., 2010).

Focusing on the latter, one of the main differences between human and classical robotic arm movements lies in their velocity profiles. The Kinematic Theory of rapid human movements (Plamondon, 1995) and the sigma-lognormal model derived from it (O’Reilly and Plamondon, 2009) suggest that complex human movements result from the temporal superposition of elementary movements, each commanded by the central nervous system and exhibiting a lognormal velocity profile. Over the years, the sigma-lognormal model has proved effective at reproducing human-like movements in 2D (Ferrer et al., 2020; Parziale et al., 2020; Laurent et al., 2022) and 3D (Fischer et al., 2020). Typical robotic/artificial velocity profiles, on the other hand, are constant or trapezoidal (Kavraki and LaValle, 2016).
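To make the lognormal building block concrete, the following is a minimal Python sketch (not the authors' implementation) of the speed of a single elementary stroke, v(t) = D / (σ√(2π)(t − t0)) · exp(−(ln(t − t0) − μ)² / (2σ²)), and of the superposition of several strokes. As a simplifying assumption, each stroke moves along a fixed direction θ, whereas the full sigma-lognormal model lets the direction vary along an arc during the stroke; the stroke parameters in the usage part are hypothetical.

```python
import math


def lognormal_speed(t, D, t0, mu, sigma):
    """Speed of one elementary stroke (lognormal law of the Kinematic Theory).

    D: stroke amplitude, t0: onset time, mu: log-time delay, sigma: log-response time.
    """
    if t <= t0:
        return 0.0  # the stroke has not started yet
    dt = t - t0
    return (D / (sigma * math.sqrt(2.0 * math.pi) * dt)
            * math.exp(-((math.log(dt) - mu) ** 2) / (2.0 * sigma ** 2)))


def superimposed_velocity(t, strokes):
    """Vector sum of overlapping strokes, each given as (D, t0, mu, sigma, theta).

    Simplification (assumption): every stroke keeps a fixed direction theta.
    """
    vx = vy = 0.0
    for D, t0, mu, sigma, theta in strokes:
        s = lognormal_speed(t, D, t0, mu, sigma)
        vx += s * math.cos(theta)
        vy += s * math.sin(theta)
    return vx, vy


if __name__ == "__main__":
    # Two hypothetical, time-overlapping strokes (units: mm and s).
    strokes = [(50.0, 0.00, -1.6, 0.25, 0.0),
               (40.0, 0.15, -1.5, 0.30, math.pi / 2)]
    for k in range(11):
        t = 0.05 * k
        vx, vy = superimposed_velocity(t, strokes)
        print(f"t = {t:.2f} s   speed = {math.hypot(vx, vy):7.2f} mm/s")
```

Each stroke's speed integrates to D and peaks at t0 + exp(μ − σ²), so overlapping strokes produce smooth, asymmetric bell-shaped speed profiles, in contrast with the constant or trapezoidal profiles typical of robots.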

Given this difference, questions arise as to whether humans are able to discriminate between human and artificial movements, and whether the modality through which humans perceive a movement affects their judgment of its human likeness. Chamberlain et al. (2022) designed experiments based on the observation of videos and reported that human-like drawing movements with bell-shaped velocity profiles are perceived as more natural and pleasant than movements with a uniform profile. In contrast, Quintana et al. (2022) showed that humans have no clear preference between movements at constant and at lognormal speed, both when watching videos of a robotic arm executing trajectories in space and when interacting with the robot by tracking its tip with their finger.

Other works have focused on designing control strategies that give a robot more comfortable movements during human-robot interaction tasks. Tamantini et al. (2022) presented a patient-tailored control architecture for upper-limb robot-aided orthopedic rehabilitation, based on a learning-by-demonstration approach using Dynamic Movement Primitives (DMP). Their controller adapts the rehabilitation workspace and the assistance forces according to the patient’s performance. Similarly, Lauretti et al. (2019) proposed a new DMP formulation that enables anthropomorphic robots to perform movements similar to those of the human demonstrator, in both joint and Cartesian space, while avoiding obstacles. The approach was compared with a method from the literature based on Cartesian DMP and inverse kinematics (IK), and questionnaire results showed that users prefer the anthropomorphic motion planned with the proposed approach over the non-anthropomorphic one planned with Cartesian DMP and IK.
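Since DMPs recur in the works cited above, a minimal one-dimensional sketch may help readers unfamiliar with them. It follows the common discrete formulation: a critically damped spring-damper pulled towards the goal g, plus a forcing term learned from one demonstration by locally weighted regression over Gaussian basis functions of a decaying phase x. It is neither the controller of Tamantini et al. (2022) nor the hybrid formulation of Lauretti et al. (2019), and the gains and the number of basis functions are illustrative assumptions.

```python
import math


def _gradient(values, dt):
    """Finite-difference derivative: central inside, one-sided at the ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


class DMP1D:
    """Minimal 1-D discrete DMP (illustrative gains, not from the cited works).

    tau*dz = alpha_z*(beta_z*(g - y) - z) + f(x),  tau*dy = z,  tau*dx = -alpha_x*x
    f(x) = (sum_i psi_i(x)*w_i / sum_i psi_i(x)) * x * (g - y0)
    """

    def __init__(self, n_basis=30, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, alpha_z / 4.0, alpha_x
        # Basis centres evenly spaced in time, expressed in the phase variable x.
        self.c = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        gaps = [self.c[i] - self.c[i + 1] for i in range(n_basis - 1)]
        self.h = [1.0 / (gap * gap) for gap in gaps + gaps[-1:]]  # basis widths
        self.w = [0.0] * n_basis
        self.y0, self.g, self.tau = 0.0, 1.0, 1.0

    def _psi(self, x):
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def fit(self, y_demo, dt):
        """Learn forcing-term weights from one demonstration sampled every dt."""
        self.y0, self.g = y_demo[0], y_demo[-1]
        self.tau = dt * (len(y_demo) - 1)
        yd = _gradient(y_demo, dt)
        ydd = _gradient(yd, dt)
        num = [0.0] * len(self.w)
        den = [0.0] * len(self.w)
        for k, y in enumerate(y_demo):
            x = math.exp(-self.alpha_x * k * dt / self.tau)
            f_target = (self.tau ** 2 * ydd[k]
                        - self.alpha_z * (self.beta_z * (self.g - y) - self.tau * yd[k]))
            s = x * (self.g - self.y0)
            for i, p in enumerate(self._psi(x)):
                num[i] += p * s * f_target  # locally weighted regression
                den[i] += p * s * s
        self.w = [a / b if b > 1e-12 else 0.0 for a, b in zip(num, den)]

    def rollout(self, dt, duration=None):
        """Explicit-Euler integration of the transformation and canonical systems."""
        duration = self.tau if duration is None else duration
        y, z, x = self.y0, 0.0, 1.0
        path = [y]
        for _ in range(int(round(duration / dt))):
            psi = self._psi(x)
            f = (sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-10)
                 * x * (self.g - self.y0))
            dz = (self.alpha_z * (self.beta_z * (self.g - y) - z) + f) / self.tau
            y, z, x = y + z / self.tau * dt, z + dz * dt, x - self.alpha_x * x / self.tau * dt
            path.append(y)
        return path
```

Because the forcing term is scaled by the decaying phase x, it fades out over time and the rollout converges to g; scaling by (g − y0) and by τ lets the learned shape be replayed towards a new goal or at a different speed, which is what makes DMPs attractive for learning by demonstration.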

Our contribution is to investigate to what extent the modality through which humans perceive motion affects their judgment of the human likeness of movements. Our results show that physical interaction with the robot makes human movements easier to detect than mere observation of the same movements. In our view, this supports the thesis that, depending on the task, robots can be given different degrees of human likeness to foster acceptance. Likewise, not all artificial trajectories are equivalent: some are perceived as human more frequently than others. This paves the way for the design of robots whose behavior resembles that of humans, which is expected to improve robot acceptance in human-robot collaboration.

The remainder of the paper is organized as follows: Section 2 describes two Turing tests, each featuring a different experimental setup; Section 3 presents the results of these experiments; and Section 4 discusses the results and provides concluding remarks.

With the focus on robot acceptance, we designed our experiments to stimulate the visual and the proprioceptive systems separately and to analyze which of the two is more effective from the standpoint of human-robot collaboration. The experiments take the form of Turing tests: the stimulus provided to a human subject is a handwriting motion, and the subject must decide whether it was produced by a human or by an artificial agent.

The participants were recruited among members of the Natural Computation and Robotics Laboratories and students enrolled in the M.Eng. programs at our department. All participants took part on a voluntary basis and formally gave their consent by reading and signing a consent form. The participants were divided into two disjoint groups, referred to as G1 and G2: the nine subjects of G1 provided the human trajectories, while the 36 subjects of G2 took part in the tests. None of the subjects of G2 had previous experience of physical interaction with a robot. Details about the participants in G1 and G2 are reported in Supplementary Table S1.

In general, studies on human movements are characterized by the equipment used to record the movements and by the motor task under investigation. Recording systems (motion trackers, tablets, smartwatches, etc.) and motor tasks (gait, reaching movements, handwriting, etc.) are selected according to the final application or to the particular aspect of the movements to be investigated. Pen-tip movements during signing, drawing or writing, acquired with graphic tablets and smartpads, have been widely adopted (Diaz et al., 2019; Parziale et al., 2021; Cilia et al., 2022), but video recordings of gait (Kumar et al., 2021) and food manipulation during feeding (Bhattacharjee et al., 2019) have also been proposed.

In our work, the motor task we chose is the drawing of the simple shapes illustrated in the top panel of Figure 1, because they do not trigger the processing of semantic information in the subjects involved in the experiments. The advantage of these shapes over characters, words, signatures or other goal-driven actions is that they do not activate cortical regions devoted to integrating semantic information, as would happen if the task had semantic content (Harrington et al., 2009), thus avoiding cognitive biases that could affect the experimental results. Nevertheless, the tasks are not simple from a motor perspective, since their execution requires the proper synchronization of many elementary movements.

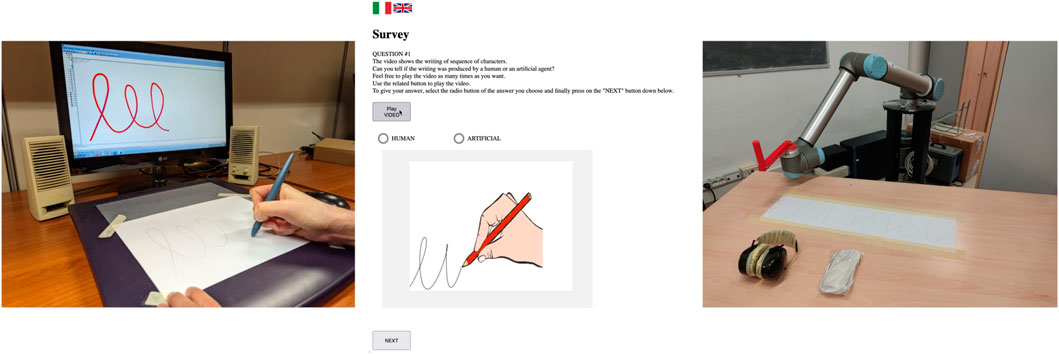

The subjects of G1 were asked to draw the proposed shapes with a ballpoint pen on a sheet of paper placed on an ink-and-paper WACOM Intuos 2 digitizing tablet with a 100 Hz sampling rate. The motion of the ballpoint pen over the 2D plane of the tablet was recorded with the MovAlyzeR® v6.1 software (Teulings, 2021). The subjects were instructed to draw each pattern 10 times, using as much of the A4 sheet as possible. They were free to write at their own pace, and only the on-paper movements were recorded. The acquisition setup is shown in Figure 2.

FIGURE 2. Acquisition setup (left), observation experiment setup (center), interaction experiment setup (right).

From the recorded human trajectories, we extracted the geometrical features, i.e., the path in planar coordinates, and assigned to each path two different time laws from the robotics literature, referred to as MethodA (Kavraki and LaValle, 2016) and MethodB (Slotine and Yang, 1989). In particular, MethodA assumes that the velocity is uniform, while MethodB makes the velocity depend on curvature, according to a time-optimal profile, as happens in human movements. Thus, for each pattern, there are three different execution modalities, which we term classes, one human and two artificial, denoted in the sequel as Human, MethodA and MethodB. We use the term Human for trajectories that, although executed by an artificial agent, are not obtained from any model but are a mere replication of the human movements acquired through the graphic tablet. For more information about MethodB trajectories, refer to the Supplementary Material.
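The idea of assigning different time laws to the same recorded path can be illustrated with a short Python sketch. The uniform law keeps the speed constant, as MethodA does. For the curvature-dependent law we substitute a deliberately simplified scheme that is not the authors' MethodB (whose formulation, based on Slotine and Yang (1989), is given in the Supplementary Material): the speed at each sample is capped by a lateral-acceleration bound, v ≤ √(a_lat/κ), and a forward/backward pass enforces a tangential-acceleration bound so that the motion starts and ends at rest. The parameters v_max, a_lat and a_tan are hypothetical.

```python
import math


def arc_length(points):
    """Cumulative arc length along a polyline of (x, y) samples."""
    s = [0.0]
    for p, q in zip(points, points[1:]):
        s.append(s[-1] + math.dist(p, q))
    return s


def uniform_time_law(points, duration):
    """Constant-speed timestamps (MethodA-like): t_i = s_i / (L / duration)."""
    s = arc_length(points)
    speed = s[-1] / duration
    return [si / speed for si in s]


def menger_curvature(p0, p1, p2):
    """Curvature of the circle through three samples (0 if they are collinear)."""
    cross = (p1[0] - p0[0]) * (p2[1] - p0[1]) - (p1[1] - p0[1]) * (p2[0] - p0[0])
    denom = math.dist(p0, p1) * math.dist(p1, p2) * math.dist(p0, p2)
    return 0.0 if denom == 0.0 else 2.0 * abs(cross) / denom


def curvature_time_law(points, v_max, a_lat, a_tan):
    """Simplified curvature-dependent timing (not the authors' MethodB).

    Requires at least three samples with distinct consecutive points.
    Returns (timestamps, speeds); the motion starts and ends at rest.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three samples")
    s = arc_length(points)
    limit = [v_max] * n
    for i in range(1, n - 1):  # lateral-acceleration bound: v^2 * kappa <= a_lat
        kappa = menger_curvature(points[i - 1], points[i], points[i + 1])
        if kappa > 0.0:
            limit[i] = min(v_max, math.sqrt(a_lat / kappa))
    v = [0.0] * n
    for i in range(1, n):  # forward pass: accelerate from rest within a_tan
        v[i] = min(limit[i], math.sqrt(v[i - 1] ** 2 + 2.0 * a_tan * (s[i] - s[i - 1])))
    v[-1] = 0.0
    for i in range(n - 2, -1, -1):  # backward pass: decelerate to rest within a_tan
        v[i] = min(v[i], math.sqrt(v[i + 1] ** 2 + 2.0 * a_tan * (s[i + 1] - s[i])))
    t = [0.0]
    for i in range(1, n):  # constant acceleration on each segment
        t.append(t[-1] + 2.0 * (s[i] - s[i - 1]) / (v[i - 1] + v[i]))
    return t, v
```

In both functions the geometry is left untouched and only the timestamps change, mirroring how the artificial classes share the path of the Human class but not its timing. The curvature-dependent law slows down in tight turns, qualitatively like human handwriting.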

In this experiment, only visual stimuli are provided to the subjects for making a decision. To this end, we generated animations of the trajectories following the human and artificial time laws. Assuming that context information affects the judgment of human likeness, we encouraged self-identification with the execution of a handwriting task by including an icon of a human hand holding a pen and by drawing the animated trajectory as the hand moves, so as to simulate a pen releasing ink on a sheet. This is the major difference with respect to the experiments reported by Chamberlain et al. (2022) and Quintana et al. (2022): we removed from the videos the visual cues that could divert the individual's attention from the movement (such as the structure of a robotic manipulator), and included elements that help the human subject in the self-identification process.

The visual stimuli are presented as a sequence of 10 instances of the aforementioned patterns, generated by randomly selecting three human trajectories, one for each pattern, together with the corresponding trajectories generated by MethodA and MethodB, plus one more artificial trajectory generated by MethodB. During the test, one trajectory animation at a time is presented on a screen, and the participant is asked to decide whether the movements in the video were produced by a human or by an artificial agent. The test is designed so that a participant cannot skip or pause a video, go back to already answered questions or repeat the entire survey. A screenshot of the survey is provided in Figure 2, while Supplementary Video S1 is the screen recording of a subject completing the observation experiment.
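The composition of the observation test can be sketched as follows. The pattern names, the trajectory identifiers, the choice of pattern and recording for the tenth (extra MethodB) stimulus, and the shuffling of the presentation order are assumptions made for illustration; the text only fixes the counts (three Human, three MethodA and four MethodB stimuli).

```python
import random

PATTERNS = ("shape_1", "shape_2", "shape_3")  # hypothetical names for the Figure 1 shapes


def build_observation_stimuli(human_pool, rng):
    """Compose the 10-stimulus observation test.

    human_pool maps each pattern to the ids of its recorded human trajectories
    (hypothetical ids). Each stimulus is (class, pattern, source_trajectory_id):
    artificial stimuli re-time the path of their source human trajectory.
    """
    stimuli = []
    for pattern in PATTERNS:
        source = rng.choice(human_pool[pattern])
        for cls in ("Human", "MethodA", "MethodB"):
            stimuli.append((cls, pattern, source))
    # Tenth stimulus: one more MethodB trajectory. Assumption: it re-times a
    # different human recording of a randomly chosen pattern.
    pattern = rng.choice(PATTERNS)
    used = {src for _, p, src in stimuli if p == pattern}
    extra = rng.choice([src for src in human_pool[pattern] if src not in used])
    stimuli.append(("MethodB", pattern, extra))
    rng.shuffle(stimuli)  # assumption: presentation order is randomized
    return stimuli


if __name__ == "__main__":
    pool = {p: [f"{p}_subject{s}_rep{r}" for s in range(1, 10) for r in range(1, 11)]
            for p in PATTERNS}
    for stimulus in build_observation_stimuli(pool, random.Random(42)):
        print(stimulus)
```

Passing a seeded random.Random instance makes each participant's sequence reproducible, which matters if, as in the repeated visual test, the same trajectories must later be shown again in the same order.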

In this experiment, subjects only receive proprioceptive stimuli for making a decision. To this end, the subject stays in touch with the robot tip while it performs the movements, as described below. In order to isolate proprioception, and hence preserve the purpose of the experiment, vision and hearing are inhibited with a sleep mask and ear muffs.

The interaction experiment is performed with a velocity-controlled UR10 robot fed with both human and artificial trajectories. Velocity-based control, i.e., the tracking of velocity references, is preferred over position-based control, i.e., the tracking of position references, because a characteristic of human movements is that their tangential velocity profiles can be decomposed into a sequence of submovements with an invariant velocity profile (Morasso and Mussa Ivaldi, 1982; Plamondon and Guerfali, 1998). Preserving this characteristic should therefore be key to generating human-like movements with a robot. Since human behavior is expected to be encoded in the velocity information, removing (or reducing) undesired effects of the control loop is paramount for this experiment.
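A minimal sketch of how velocity references might be derived from the 100 Hz tablet samples is shown below; it is not the authors' pipeline. It assumes the samples have already been scaled to metres on the robot's virtual plane, and it uses forward differences so that integrating the references sample by sample reproduces the recorded path exactly (up to rounding). On the real robot, the controller's tracking error is what perturbs this ideal.

```python
import math


def velocity_references(points, fs=100.0):
    """Forward-difference velocity references from planar samples taken at fs Hz.

    Assumption: points are (x, y) positions already expressed in metres on the
    robot's virtual plane; reference k is held during the k-th sampling interval.
    """
    dt = 1.0 / fs
    return [((x1 - x0) / dt, (y1 - y0) / dt)
            for (x0, y0), (x1, y1) in zip(points, points[1:])]


def integrate_references(start, refs, fs=100.0):
    """Path followed by an ideal velocity tracker (no tracking error)."""
    dt = 1.0 / fs
    x, y = start
    path = [(x, y)]
    for vx, vy in refs:
        x, y = x + vx * dt, y + vy * dt
        path.append((x, y))
    return path


def tangential_speed(refs):
    """Speed profile |v| that the velocity controller tracks directly."""
    return [math.hypot(vx, vy) for vx, vy in refs]


if __name__ == "__main__":
    # Hypothetical samples: a short arc drawn with increasing speed.
    samples = [(0.05 * math.cos(0.002 * k * k), 0.05 * math.sin(0.002 * k * k))
               for k in range(40)]
    refs = velocity_references(samples)
    print([round(v, 3) for v in tangential_speed(refs)[:5]])
```

In this sketch the quantity handed to the controller is the sampled velocity itself, so the recorded tangential-velocity profile, and with it the submovement structure, is what the robot is asked to reproduce.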

As for the workspace, the robot performs the handwriting trajectories in free space (without interacting with surrounding objects) on a virtual horizontal plane. The human participant holds the robot end-effector, which was specifically designed and 3D-printed to be grasped like a pen. For safety reasons, a physical barrier is placed between the robot and the human, preventing the latter from entering the robot workspace with their entire body. In addition, the robot is programmed to operate close to its workspace boundary, so that its extension towards the human is limited. The end-effector is stiff, so as not to introduce artificial dynamics, but also fragile enough, for safety reasons, to break in a collision before injuring the participant’s hand.

For each participant, the robot executes nine handwriting trajectories (3 Human, 3 MethodA, 3 MethodB) selected as in the previous experiment. To avoid a drop in the participants' attention or the onset of fatigue, which could affect the experimental results, we shorten the experiment by executing the three trajectories of the same class one after the other before asking the participant to make a decision. Each participant thus interacts with three different sequences of three trajectories each. The order of the three sequences is randomized across participants, so that the human trajectories are presented as the first, the second or the third sequence of movements. After each sequence, the participant is asked to decide whether the movements performed by the robot were generated by a human or by an artificial agent.
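The grouping of the nine trajectories into three single-class sequences, with a per-participant random order, can be sketched as follows. The trajectory identifiers, the uniform random permutation and the seed are assumptions; the text states only that the order is randomized across participants so that the Human sequence can come first, second or third.

```python
import random

CLASSES = ("Human", "MethodA", "MethodB")


def interaction_schedule(trajectories, rng):
    """Return three (class, [ids]) sequences in a random order.

    trajectories maps each class to its three trajectory ids (hypothetical
    identifiers, one per pattern). The three trajectories of a class are
    executed back to back before the participant answers.
    """
    order = list(CLASSES)
    rng.shuffle(order)  # assumption: uniform random order per participant
    return [(cls, list(trajectories[cls])) for cls in order]


def human_position_counts(n_participants, trajectories, seed=0):
    """Tally how often the Human sequence is presented 1st, 2nd or 3rd."""
    rng = random.Random(seed)
    counts = [0, 0, 0]
    for _ in range(n_participants):
        schedule = interaction_schedule(trajectories, rng)
        counts[[cls for cls, _ in schedule].index("Human")] += 1
    return counts


if __name__ == "__main__":
    trajs = {cls: [f"{cls}_p{p}" for p in (1, 2, 3)] for cls in CLASSES}
    print(human_position_counts(36, trajs))
```

With free randomization the three positions need not occur equally often across participants; a balanced (Latin-square) assignment would be the alternative if equal counts per position were required.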

Before the experiment, the operator describes the protocol (see the Supplementary Material) and shows the participant the three movement patterns, printed in their original size and placed on a desk whose top is immediately below and parallel to the virtual plane where the robot movements are executed. This way, the participant is aware of the geometrical features of the trajectories and, during the experiment, can focus solely on the proprioception of the movement. This should prevent participants from building a mental image of the movements they are experiencing, a process that could disturb their decision-making or cause a drop in their attention.

A picture of the interaction experiment setup is shown in Figure 2, while the Supplementary Video S2 shows a human subject interacting with the UR10 robot.

Each participant was asked to complete the visual test and, a week later, was called back for the interaction test. The visual test was available on a website for 1 week, and participants could take it at their convenience. For the interaction test, the participants were admitted one at a time to the room where the experiment was performed, so that their judgment could not be affected by the opinions of other participants.

Because of this protocol, the two experiments differ significantly: while the visual test is performed with no previous experience of the movement patterns (the participants had never seen the patterns before taking the test and were completely unaware of the purpose of the experiment), the movements proposed in the interaction experiment had already been experienced, albeit only through observation.

Finally, in order to estimate the possible interaction between experiments, we asked the subjects to take the visual test again (with the same trajectories in the same order) immediately after taking part in the interaction experiment. The goal was to determine whether human judgment is affected by experience.

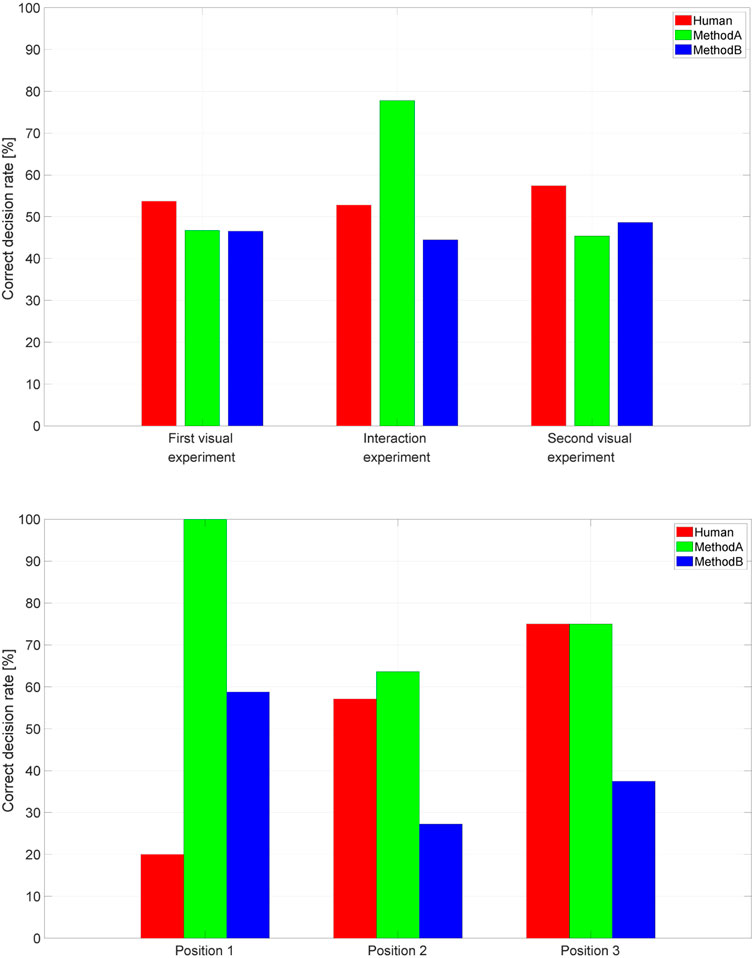

The results of the three experiments, in terms of correct decision percentage, for the three trajectory classes, are summarized in Figure 3 (top).

FIGURE 3. Experimental results over all the performed experiments (top) and accuracy vs. position in the interaction experiment (bottom).

The results of the first experiment show no evidence that human subjects are able to discriminate between human and artificial trajectories by only observing the movement itself, even when their velocity profiles are quite different, as for the trajectories generated by MethodA.

The results of the second experiment reveal some important facts. First, the correct decision rate for MethodA trajectories increases considerably compared to the observation case. This confirms that the proprioceptive sensory system is more sensitive than the visual one in recognizing movements whose velocity profiles differ from those of humans. Second, the correct decision rates for Human and MethodB trajectories in the interaction experiment are similar, indicating that MethodB is a better candidate than MethodA for designing human-like trajectories. Indeed, 55.56% of MethodB trajectories are classified as human.

Figure 3 (bottom) reports, for the interaction experiment, the correct decision rate in discriminating human and artificial trajectories as a function of their position in the sequence. The histogram shows that the later the human trajectories appear in the sequence, the higher the correct decision rate: it is equal to 20%, 57.14% and 75% when they are presented first, second and third, respectively. For artificial trajectories, the loss of performance when either MethodA or MethodB trajectories are executed in the second position seems to depend on which trajectories were executed first. However, this observation could only be confirmed by experiments involving a larger number of subjects.

These results suggest that the subjects use the first sequence to set a reference and then evaluate the following ones with respect to it. Moreover, when evaluating the first sequence, they seem to exhibit some bias towards the artificial category, possibly because they have never experienced a direct interaction with a robot, so the whole experience is perceived as “artificial”, and this feeling translates into their judgment. As the experiment proceeds, they adjust to the setting: the bias towards the artificial category is reduced, they concentrate more on the task and their performance increases accordingly.

This interpretation is also confirmed by analyzing the results as a function of the number of times the subject asked for the robot movements to be repeated before answering the question. We found opposite trends for human and artificial trajectories:

• In the case of human trajectories, the correct decision rate is 57.69% for those executed only once, while it drops to 40.00% for those executed twice;

• In the case of MethodA trajectories, the correct decision rate is 72.41% for those executed only once, and it reaches 100.00% for those executed twice;

• In the case of MethodB trajectories, the correct decision rate is 34.62% for those executed only once, and it reaches 70.00% for those executed twice.
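As an arithmetic cross-check, these percentages can be reconciled with the overall interaction results reported elsewhere in the paper. Since each of the 36 participants judged exactly one sequence per class, the counts below are our reconstruction (inferred from the percentages, not stated in the text), under the further assumption that no sequence was executed more than twice. Pooling them reproduces 77.78% for MethodA and 44.44% for MethodB (hence 55.56% of MethodB sequences judged human), and gives 52.78% for Human sequences.

```python
# (correct decisions, sequences) by number of executions, reconstructed from the
# reported percentages under the assumptions stated above (not raw data).
COUNTS = {
    "Human": {"once": (15, 26), "twice": (4, 10)},
    "MethodA": {"once": (21, 29), "twice": (7, 7)},
    "MethodB": {"once": (9, 26), "twice": (7, 10)},
}


def pct(correct, total):
    """Percentage rounded to two decimals, as reported in the text."""
    return round(100.0 * correct / total, 2)


def pooled(by_repetition):
    """Sum correct decisions and totals over the repetition groups."""
    correct = sum(c for c, _ in by_repetition.values())
    total = sum(n for _, n in by_repetition.values())
    return correct, total


if __name__ == "__main__":
    for cls, groups in COUNTS.items():
        rates = {rep: pct(c, n) for rep, (c, n) in groups.items()}
        c, n = pooled(groups)
        print(cls, rates, "pooled:", pct(c, n), f"({c}/{n})")
```

That every class sums to 36 sequences is consistent with the protocol, which gives some confidence in the reconstruction, but the individual counts remain an inference.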

The results of the second visual test, carried out right after the interaction experiment, show no evidence that previous experience of observation and interaction increases the human ability to distinguish human from artificial movements. Indeed, the results are in line with those of the first test. Furthermore, although participants were able to correctly detect MethodA artificial movements during the interaction experiment, they could not identify the same movements in the second visual test. This suggests that, in our experiments, the difficulty in recognizing human movements may be unrelated to a lack of experience, and rather related to the fact that movement features are more easily perceived through the proprioceptive system than visually.

The correct decision rate for each participant and experiment is reported in Figure 4. The histogram of each experiment has 36 bars, each showing the correct decision rate achieved by one participant in that experiment. The mean and standard deviation over all participants are overlaid on each histogram. A Wilcoxon signed-rank test with a significance level of 0.05 is used to compare the results of the two visual experiments: the null hypothesis that the median of the paired differences between the two samples is zero cannot be rejected (p = 0.68).
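For readers who want to reproduce this kind of paired comparison, the test can be sketched in standard-library Python. This is our illustration, not the authors' analysis code: it discards zero differences, assigns average ranks to ties, and computes a two-sided p-value from the normal approximation with a tie correction and no continuity correction. Library implementations such as scipy.stats.wilcoxon may use an exact distribution or different zero-handling options depending on their settings, so p-values can differ slightly. The inputs are paired per-participant scores (e.g., correct decisions out of 10), and the data in the usage part are hypothetical.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test for paired samples a and b.

    Zero differences are dropped, tied |differences| get average ranks, and the
    p-value uses the normal approximation with a tie correction.
    Returns (W_plus, p_value).
    """
    d = [y - x for x, y in zip(a, b) if y != x]
    n = len(d)
    if n == 0:
        return 0.0, 1.0  # no non-zero differences: no evidence against H0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg = (i + j + 2) / 2.0  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4.0
    var = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term / 48.0
    if var == 0.0:
        return w_plus, 1.0
    z = (w_plus - mean) / math.sqrt(var)
    return w_plus, math.erfc(abs(z) / math.sqrt(2.0))  # = 2 * (1 - Phi(|z|))


if __name__ == "__main__":
    first_visual = [5, 6, 4, 5, 7, 5, 6, 4]   # hypothetical scores out of 10
    second_visual = [6, 5, 5, 4, 7, 6, 5, 5]
    print(wilcoxon_signed_rank(first_visual, second_visual))
```

Because the per-participant scores take only a few distinct values, ties are frequent, which is why the tie correction in the variance matters here.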

Robot acceptance by human operators is a complex issue that is tightly connected to the human perception of motion. In order to assess how different senses perform in this respect, we ran experiments in which human subjects were asked to observe and to interact with an artificial agent in two different experimental setups, each stimulating only one sense at a time. For practicality, the selected movements were extracted from handwriting tasks. In particular, we assessed the validity of an experimental method that stimulates only the visual system and, in view of acceptance, compared it with a different method that stimulates only the proprioceptive system.

In the first test, the handwriting motion was reproduced in a video, whereas, in the second test, the participant, blindfolded and wearing ear muffs, was asked to hold the robot end-effector while complying with its motion. In both scenarios, the participants were asked to judge the human likeness of each movement, which could embed a human or an artificial time law (derived with one of two different methods from the robotics literature). Finally, we repeated the first test to assess the influence of experience on judgment.

The outcomes of this research can be summarized as follows:

• participants are not able to reliably distinguish between human and artificial movements shown in a video, even when visual elements that hamper the self-identification process (e.g., a physical robot) are removed and elements that help it (e.g., a hand holding a pen) are added: whatever the class of movements shown, the correct decision percentage is about 50%;

• during the interaction with a robot executing handwriting movements, participants are able to identify artificial movements with uniform velocity (77.78%), but not those with curvature-dependent velocity, which resemble human ones (44.44%);

• the experience of observation and interaction does not seem to help detect movements in subsequent observation experiments;

• the experience of interaction does seem to help in subsequent interactions: the correct decision rate for each movement class also depends on the order in which trajectories are executed in the interaction experiment (human movements executed first are correctly identified in 20.00% of cases, while those executed last are correctly identified in 75.00% of cases).

From the last observation, a new research question arises: does a human need a comparison to correctly distinguish between human and artificial movements? In view of investigating robot acceptance, a related question is whether the experimental method should instead ask “which of these two movements made you feel more comfortable?”. When we talked to the participants after the tests, some of them reported, about human movements they had not recognized as such, that “the movement was too perfect to be performed by a human”. We observed that participants hold expectations about robotic/artificial movement that clearly affect their judgment. This also explains why the correct decision rate tends to increase after gaining some experience of interaction. In light of these observations, we believe that formulating the question in terms of comfort rather than human likeness is more appropriate in view of robot acceptance, as it would move the focus to the quality of the motion itself rather than to identifying the motion with a robot or a human. This is a research direction we intend to investigate in future work.

In addition, the experimental setup could be improved with additional tools: for example, a motion tracker could be used to record more general 3D movements from human subjects, while a 3D simulation environment featuring a human-like avatar reproducing the motion could strengthen the self-identification process and provide further insights into the role and power of observation in the acceptance of artificial movements.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

AM and PC designed the outline of the project and the experimental methodologies and supervised the project. GM and AP designed, implemented and supervised the execution of the observation experiments, while GM and EF designed, implemented and supervised the execution of the interaction experiments. GM carried out the experiments with the participants and processed the data. GM, EF, AP, and AM contributed to the interpretation of the results. GM, AP, and EF drafted the manuscript and designed the figures, and AM did the final editing. All authors provided critical feedback to shape the research, analyze the data and edit the manuscript draft.

GM was funded by the scholarship grant “Pianificazione e controllo per la movimentazione robotica human-like” from the Department of Information Engineering, Electrical Engineering, and Applied Mathematics at the University of Salerno, Italy.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2023.1112986/full#supplementary-material

Averta, G., Della Santina, C., Valenza, G., Bicchi, A., and Bianchi, M. (2020). Exploiting upper-limb functional principal components for human-like motion generation of anthropomorphic robots. J. NeuroEngineering Rehabil. 17, 63. doi:10.1186/s12984-020-00680-8

Bhattacharjee, T., Lee, G., Song, H., and Srinivasa, S. S. (2019). Towards robotic feeding: Role of haptics in fork-based food manipulation. IEEE Robotics Automation Lett. 4, 1485–1492. doi:10.1109/LRA.2019.2894592

Chamberlain, R., Berio, D., Mayer, V., Chana, K., Leymarie, F. F., and Orgs, G. (2022). A dot that went for a walk: People prefer lines drawn with human-like kinematics. Br. J. Psychol. 113, 105–130. doi:10.1111/bjop.12527

Cilia, N. D., De Gregorio, G., De Stefano, C., Fontanella, F., Marcelli, A., and Parziale, A. (2022). Diagnosing Alzheimer’s disease from on-line handwriting: A novel dataset and performance benchmarking. Eng. Appl. Artif. Intell. 111, 104822. doi:10.1016/j.engappai.2022.104822

Diaz, M., Ferrer, M. A., Impedovo, D., Malik, M. I., Pirlo, G., and Plamondon, R. (2019). A perspective analysis of handwritten signature technology. ACM Comput. Surv. 51, 1–39. doi:10.1145/3274658

Ferrer, M. A., Diaz, M., Carmona-Duarte, C., and Plamondon, R. (2020). iDeLog: Iterative dual spatial and kinematic extraction of sigma-lognormal parameters. IEEE Trans. Pattern Analysis Mach. Intell. 42, 114–125. doi:10.1109/TPAMI.2018.2879312

Fischer, A., Schindler, R., Bouillon, M., and Plamondon, R. (2020). Modeling 3D movements with the kinematic theory of rapid human movements (WORLD SCIENTIFIC), chap. 15, 327–342. doi:10.1142/9789811226830_0015

Harrington, G. S., Farias, D., and Davis, C. H. (2009). The neural basis for simulated drawing and the semantic implications. Cortex 45, 386–393. doi:10.1016/j.cortex.2007.10.015

Kavraki, L. E., and LaValle, S. M. (2016). “Motion planning,” in Springer handbook of robotics. Editors B. Siciliano, and O. Khatib (Springer International Publishing), 139–162. doi:10.1007/978-3-319-32552-1_7

Kelley, R., Tavakkoli, A., King, C., Nicolescu, M., and Nicolescu, M. (2010). “Understanding activities and intentions for human-robot interaction,” in Human-robot interaction. Editor D. Chugo (London: InTech), 1–18. doi:10.5772/8127

Kim, A., Kum, H., Roh, O., You, S., and Lee, S. (2012). “Robot gesture and user acceptance of information in human-robot interaction,” in Proceedings of the seventh annual ACM/IEEE international conference on Human-Robot Interaction (HRI ’12) (Boston: ACM Press), 279–280. doi:10.1145/2157689.2157793

Kumar, M., Singh, N., Kumar, R., Goel, S., and Kumar, K. (2021). Gait recognition based on vision systems: A systematic survey. J. Vis. Commun. Image Represent. 75, 103052. doi:10.1016/j.jvcir.2021.103052

Laurent, A., Plamondon, R., and Begon, M. (2022). Reliability of the kinematic theory parameters during handwriting tasks on a vertical setup. Biomed. Signal Process. Control 71, 103157. doi:10.1016/j.bspc.2021.103157

Lauretti, C., Cordella, F., and Zollo, L. (2019). A hybrid joint/cartesian DMP-based approach for obstacle avoidance of anthropomorphic assistive robots. Int. J. Soc. Robotics 11, 783–796. doi:10.1007/s12369-019-00597-w

Morasso, P., and Mussa Ivaldi, F. A. (1982). Trajectory formation and handwriting: A computational model. Biol. Cybern. 45, 131–142. doi:10.1007/BF00335240

O’Reilly, C., and Plamondon, R. (2009). Development of a sigma–lognormal representation for on-line signatures. Pattern Recognit. 42, 3324–3337. doi:10.1016/j.patcog.2008.10.017

Parziale, A., Carmona-Duarte, C., Ferrer, M. A., and Marcelli, A. (2021). “2D vs 3D online writer identification: A comparative study,” in Document analysis and recognition – ICDAR 2021. Editors J. Lladós, D. Lopresti, and S. Uchida (Springer International Publishing), 307–321. doi:10.1007/978-3-030-86334-0_20

Parziale, A., Parisi, R., and Marcelli, A. (2020). Extracting the motor program of handwriting from its lognormal representation (WORLD SCIENTIFIC), chap. 13, 289–308. doi:10.1142/9789811226830_0013

Plamondon, R. (1995). A kinematic theory of rapid human movements: Part I. movement representation and generation. Biol. Cybern. 72, 295–307. doi:10.1007/BF00202785

Plamondon, R., and Guerfali, W. (1998). The generation of handwriting with delta-lognormal synergies. Biol. Cybern. 78, 119–132. doi:10.1007/s004220050419

Quintana, J. J., Ferrer, M. A., Diaz, M., Feo, J. J., Wolniakowski, A., and Miatliuk, K. (2022). Uniform vs. lognormal kinematics in robots: Perceptual preferences for robotic movements. Appl. Sci. 12, 12045. doi:10.3390/app122312045

Slotine, J.-J., and Yang, H. (1989). Improving the efficiency of time-optimal path-following algorithms. IEEE Trans. Robotics Automation 5, 118–124. doi:10.1109/70.88024

Tamantini, C., Cordella, F., Lauretti, C., di Luzio, F. S., Bravi, M., Bressi, F., et al. (2022). “Patient-tailored adaptive control for robot-aided orthopaedic rehabilitation,” in 2022 international conference on robotics and automation (ICRA) (IEEE), 5434–5440. doi:10.1109/ICRA46639.2022.9811791

Teulings, H. L. (2021). MovAlyzeR. version 6.1 https://www.neuroscript.net

Keywords: collaborative robots, human-robot collaboration, human-robot interaction, robot acceptance, Turing test

Citation: Mignone G, Parziale A, Ferrentino E, Marcelli A and Chiacchio P (2023) Observation vs. interaction in the recognition of human-like movements. Front. Robot. AI 10:1112986. doi: 10.3389/frobt.2023.1112986

Received: 30 November 2022; Accepted: 21 March 2023;

Published: 10 April 2023.

Edited by:

Francesca Cordella, Campus Bio-Medico University, Italy

Reviewed by:

Christian Tamantini, Università Campus Bio-Medico di Roma, Italy

Copyright © 2023 Mignone, Parziale, Ferrentino, Marcelli and Chiacchio. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Giovanni Mignone, gmignone@unisa.it; Antonio Parziale, anparziale@unisa.it; Enrico Ferrentino, eferrentino@unisa.it
