
TECHNOLOGY AND CODE article

Front. Robot. AI, 28 September 2022

Sec. Biomedical Robotics

Volume 9 - 2022 | https://doi.org/10.3389/frobt.2022.875845

The percutaneous biopsy is a critical intervention for diagnosis and staging in cancer therapy. Robotic systems can improve the efficiency and outcome of such procedures while alleviating stress for physicians and patients. However, the high complexity of operation and the limited possibilities for robotic integration in the operating room (OR) decrease user acceptance and the number of deployed robots. Collaborative systems and standardized device communication may provide approaches to overcome these problems. Based on the medical device system terminology of the IEEE 11073 SDC standard, we designed and validated a medical robotic device system (MERODES) to access and control a collaborative setup of two KUKA robots for ultrasound-guided needle insertions. The system is based on a novel standard for service-oriented device connectivity and utilizes collaborative principles to enhance user experience. Separate workflow applications allow for flexible system setup and configuration. The system was validated in three separate test scenarios to measure accuracies for 1) co-registration, 2) needle target planning in a water bath, and 3) needle target planning in an abdominal phantom. The co-registration accuracy averaged 0.94 ± 0.42 mm. The positioning errors ranged from 0.86 ± 0.42 to 1.19 ± 0.70 mm in the water bath setup and from 1.69 ± 0.92 to 1.96 ± 0.86 mm in the phantom. The presented results serve as a proof of concept and add to the current state of the art in facilitating system deployment and fast configuration for percutaneous robotic interventions.

Image-guided percutaneous biopsies provide the basis for diagnosis and staging in cancer therapy by sampling tumor tissue. Traditionally, a physician inserts a needle into the target organ (typically liver, kidney, breast, or lymph nodes) based on preoperative computed tomography (CT) or magnetic resonance imaging (MRI). Target deformations and needle deflections caused by variations in tissue density often require intraoperative image guidance. Depending on the pathology and target location, CT and MRI offer high imaging quality and tissue differentiation even during interventions. However, the radiation exposure of CT, the limited available space, and the long image acquisition times of MRI often impede their use for routine biopsies. Interventional ultrasound (US) imaging provides more flexible image guidance, despite its lower resolution (Roberts et al., 2020). Given these constraints, robotic systems can improve the efficiency and outcome of percutaneous interventions while lowering the burden for the patient.

In the last 5 years, robots with different actuation technologies (electric, pneumatic, cable-actuated, etc.) and degrees of freedom (2–7 DOF) have been developed that provide high precision and repeatability for needle insertions (Siepel et al., 2021). These systems represent successfully deployed solutions, but despite their advantages, they have not yet become established in the surgical domain. This is often attributed to a lack of user acceptance, owing to the high complexity of operation and the difficulty of integrating robotic systems into the operating room (OR). Besides the lack of full OR integration, the high costs further limit the number of deployed robots. Today, only maximum-care hospitals can provide the infrastructure and resources to acquire modern robots. Restricting individual systems to a single or specific type of use case aggravates this problem even more (Hoeckelmann et al., 2015; Schleer et al., 2019).

In industrial settings, similar challenges have been discussed since the early 2010s, and service-oriented architectures (SOA) were proposed as an effective solution. By providing system functionalities as services to all relevant participants in a network, this approach was found not only to enable the integration of different systems but also to improve reusability, scalability, and setup time (Veiga et al., 2009; Cesetti et al., 2010; Oliveira et al., 2013; Cai et al., 2016). A variety of tools and frameworks to implement this approach in industrial settings has been published, e.g., the Robot Operating System (ROS) or the Open Robot Control Software (OROCOS). Although these tools can help overcome the highlighted problems, their effective use in medicine still lags behind.

In 2001, Cleary and Nguyen discussed the necessity of flexible system architectures for “[…] medical robotics to evolve as its own field […]” (Cleary and Nguyen, 2001). In response, research was conducted to realize standardized, vendor-independent medical device communication (Arney et al., 2014; Okamoto et al., 2018). Promising solutions were presented to improve interoperability in the OR, resulting in the approval of the IEEE 11073 SDC standard for service-oriented device connectivity (Kasparick et al., 2015, 2018; Rockstroh et al., 2017). This standardized approach has already shown benefits, such as technical context awareness that enables intelligent and cooperative behavior of medical devices (Franke et al., 2018). The SDC standard may also improve the modularity and interoperability of surgical robotic systems and thereby support efforts toward wider adoption.

The principles of collaborative robotics have provided additional approaches to increase usability in industrial settings where humans and robots share a workspace. The optimized fluency and synchronization of task transitions between the user and the assisting robot in collaborative setups may also yield high precision during percutaneous interventions while alleviating stress in the surgical team (Ajoudani et al., 2018; Hoffman, 2019).

In previous work, the application of novel collaborative approaches to robot-assisted US-guided biopsies showed promising results (Berger et al., 2018a; Berger et al., 2018b). The implementation of force-based touch gesture interaction in an experimental setup with a KUKA robot provided the basis for a preliminary user validation. The study involved nine participants with technical and/or medical backgrounds, who performed biopsies of two lesions in an abdominal phantom both manually and with robotic assistance. The setup comprised a single robot moving a US device with an integrated guide for manual needle advancement, and optical tracking tools for target planning. In a questionnaire, the users rated the intuitiveness of needle guidance with touch gestures and hand-guided robot movement, as well as how much it eased the task, resulting in an overall positive evaluation of the interaction concept and of the support for the needle insertion tasks. In all biopsies performed with robot assistance, the target lesions were hit on the first attempt.

To build upon these findings and extend the preliminary system, this work explores the potential of two KUKA arms deployed in a collaborative setup for US-guided needle insertions. It is intended to add to the current state of the art and to prove the concept of a flexible robotic setup supporting image-guided biopsies. The underlying control software incorporates multiple robots and medical devices to demonstrate that complex technical setups can be deployed within a standardized, dynamic device network. It aims to promote information exchange between commercially available collaborative systems in the OR and to provide reusability across varying use cases. The introduction of collaborative interaction principles is further intended to increase acceptance of robot assistance in percutaneous interventions.

The main goal of this work was to implement a robot control system that utilizes the advantages of the SDC standard and collaborative interaction principles for robot-assisted needle insertions. It should incorporate existing robotic systems to improve reusability and flexibility. The targeted robots must, therefore, support control and information exchange via SDC.

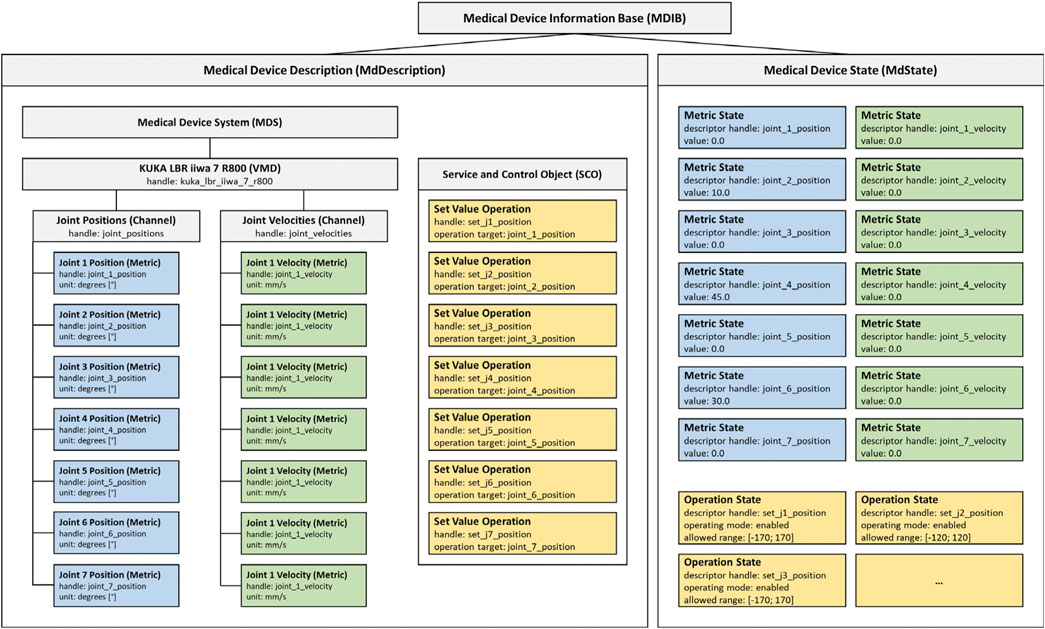

The SDC standard implements a service provider and consumer architecture in which medical devices act as providers, consumers, or both. Providers publish medical device capabilities, and consumers interact with them as needed, either via request and response or via subscription. The communication between provider and consumer supports dynamic device discovery with unique device identifiers (UDI) and is realized via a standard Ethernet connection. In the SDC standard family, a medical device information base (MDIB) represents the device as a pair of medical device description (MdDescription) and medical device state (MdState). The MdDescription contains the supported conditions and variables, stored as metrics of distinct types (e.g., numeric, string, enumeration). Metrics are grouped into channels to categorize the device capabilities, and channels are in turn assigned to a virtual medical device (VMD). Multiple VMDs can be further combined into a single medical device system (MDS). All VMDs, channels, and metrics are identifiable by unique handles. The MdState contains the actual values of the available metrics and device characteristics. A consumer can remotely control a provider (and thereby the medical device) via a service and control object (SCO), which defines set operations on the metrics or activation operations that trigger specific behavior. Kasparick et al. (2015, 2018) describe the structure of SDC in more detail.

The MDIB structure allows for the representation of all essential characteristics of any robotic device, such as the available degrees of freedom and their current positions and velocities. For example, implementing a KUKA LBR iiwa 7 R800 as an SDC medical device has been shown before (Berger et al., 2019). Figure 1 visualizes a possible MDIB with joint positions and velocities.

FIGURE 1. Example MDIB for a KUKA LBR iiwa 7 R800. The MdDescription includes the KUKA VMD with joint positions (blue) and velocities (green). The SCO provides operations to set position values (yellow) and the MdState contains exemplary values and ranges for the metrics and operations.
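To make the MDIB hierarchy more concrete, the following Python sketch models the description (a VMD with channels of metrics, each with a unique handle and an allowed range), the state (current value per handle), and SCO set operations for a KUKA-like arm with joint positions and velocities. It is a simplified, hypothetical data model, not the SDCLib/C API or the schema defined by IEEE 11073; the handle names (e.g., `iiwa.a1.pos`) and the joint limits are placeholders.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified MDIB model; it only mirrors the hierarchy
# MDS > VMD > channel > metric and is not the SDCLib/C API.


@dataclass
class Metric:
    handle: str      # unique handle within the MDIB
    unit: str
    lower: float     # allowed range, part of the description
    upper: float


@dataclass
class Channel:
    handle: str
    metrics: list = field(default_factory=list)


@dataclass
class Vmd:
    handle: str
    channels: list = field(default_factory=list)


@dataclass
class Mdib:
    vmds: list = field(default_factory=list)            # description side
    state: dict = field(default_factory=dict)           # MdState: handle -> value
    set_operations: dict = field(default_factory=dict)  # SCO: op handle -> metric handle

    def metric(self, handle):
        for vmd in self.vmds:
            for channel in vmd.channels:
                for m in channel.metrics:
                    if m.handle == handle:
                        return m
        raise KeyError(handle)

    def invoke_set(self, op_handle, value):
        """Apply an SCO set operation; reject values outside the described range."""
        target = self.set_operations[op_handle]
        m = self.metric(target)
        if not (m.lower <= value <= m.upper):
            return False
        self.state[target] = value
        return True


def make_iiwa_mdib(n_joints=7, pos_limit_deg=170.0, vel_limit_deg_s=85.0):
    # Placeholder limits for illustration only, not manufacturer data.
    positions = Channel("iiwa.positions", [
        Metric(f"iiwa.a{i}.pos", "deg", -pos_limit_deg, pos_limit_deg)
        for i in range(1, n_joints + 1)])
    velocities = Channel("iiwa.velocities", [
        Metric(f"iiwa.a{i}.vel", "deg/s", -vel_limit_deg_s, vel_limit_deg_s)
        for i in range(1, n_joints + 1)])
    mdib = Mdib(vmds=[Vmd("iiwa", [positions, velocities])])
    for channel in (positions, velocities):
        for m in channel.metrics:
            mdib.state[m.handle] = 0.0
    # One set operation per joint position metric (cf. the yellow SCO entries in Figure 1).
    for m in positions.metrics:
        mdib.set_operations[m.handle + ".set"] = m.handle
    return mdib
```

Calling `make_iiwa_mdib().invoke_set("iiwa.a1.pos.set", 45.0)` updates the state of the first joint, while a value outside the described range is rejected and leaves the state unchanged.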

To exploit the features of SDC as fully as possible, this work presents a flexible software framework with interchangeable manipulators and configurations for deployment in different use cases. The following sections describe the design of this Medical Robotic Device System (MERODES), based on the SDC MDIB architecture, and its deployment with two robotic arms for US-guided biopsies.

The core component of MERODES is a central MDS that manages all involved robotic devices, each represented by its own VMD. This enables the system to dynamically include or exclude any proprietary robot on initialization (provided an SDC-conformant VMD is available). Depending on the use case, the system hides or publishes the respective services of each included robot in the network.
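The include/exclude and publish/hide behavior of the central MDS can be sketched as a small registry. The class and service names below (`CentralMds`, `move_to_pose`, `hand_guidance`, `set_tcp`) are hypothetical; the sketch only illustrates that a robot without an SDC-conformant VMD is rejected and that the use case decides which of the included services become visible.

```python
class CentralMds:
    """Hypothetical sketch of a central MDS aggregating robot VMDs."""

    def __init__(self):
        self.vmds = {}       # VMD handle -> all services the robot offers
        self.published = {}  # VMD handle -> services visible to consumers

    def include(self, handle, services, sdc_conform=True):
        # Robots without an SDC-conformant VMD cannot be included.
        if not sdc_conform:
            return False
        self.vmds[handle] = set(services)
        self.published[handle] = set()  # hidden until a use case asks for them
        return True

    def exclude(self, handle):
        self.vmds.pop(handle, None)
        self.published.pop(handle, None)

    def configure_use_case(self, visible):
        """Publish only the requested services that a robot actually offers."""
        for handle, services in self.vmds.items():
            self.published[handle] = services & set(visible.get(handle, ()))
```

A use case that requests a service a robot does not offer simply does not see it, so the same registry can serve differently equipped robots.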

Operating multiple robotic devices requires a shared coordinate space. MERODES therefore additionally acts as a consumer of SDC-compatible tracking devices and thereby supports tracked surgical navigation principles. The active tracking device can be selected on initialization. The system includes an additional, comprehensive VMD that provides services and metrics for overall system control (e.g., the selected tracking device, activation commands for starting the registration process, and transformations between the robot coordinate systems).
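The transformations provided by the system control VMD link each robot to the tracking device. A minimal sketch, assuming that each robot base pose is known as a 4 × 4 homogeneous matrix in tracker coordinates (the numeric poses are placeholders), maps a point from iiwa1 to iiwa2 coordinates via iiwa1 → tracker → iiwa2. In the DAS this role is filled by tf2; the plain-Python matrices here only illustrate the composition.

```python
import math


def mat_mul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid transform [R p; 0 1], i.e. [R^T  -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    return [sum(t[i][k] * p[k] for k in range(3)) + t[i][3] for i in range(3)]


def pose_z(deg, x, y, z):
    """Pose with a rotation of `deg` degrees about z and a translation in mm."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Placeholder registration results: pose of each robot base in tracker coordinates.
T_tracker_iiwa1 = pose_z(0.0, 1000.0, 0.0, 0.0)
T_tracker_iiwa2 = pose_z(90.0, 1000.0, 800.0, 0.0)

# Robot-to-robot transform: iiwa1 base -> tracker -> iiwa2 base.
T_iiwa2_iiwa1 = mat_mul(rigid_inverse(T_tracker_iiwa2), T_tracker_iiwa1)
```

For these placeholder poses, the point (100, 0, 0) mm in iiwa1 coordinates maps to approximately (−800, −100, 0) mm in iiwa2 coordinates. Because both robots are expressed relative to the tracker, moving one platform only requires updating its own tracker pose.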

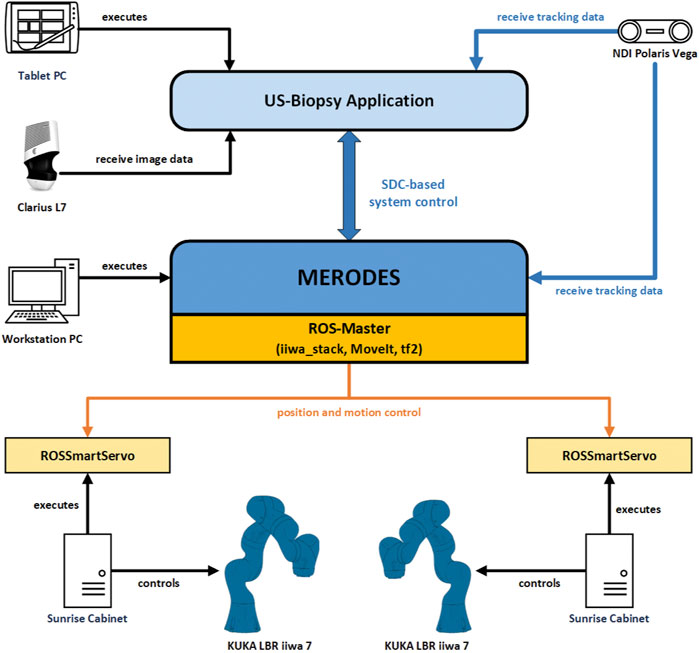

Many robotic systems support interchangeable end-effectors or are built solely to control proprietary tools (e.g., KUKA). These end-effectors may themselves be medical devices and can be included in the MDS if their services are required in the network (e.g., starting/stopping diagnostic or therapeutic functions). All remote capabilities of the included devices and robots are stored in the SCO when a VMD is added to the system. MERODES separates the workflow logic from the incorporated robots. Depending on the intervention, use case-specific workflow applications act as consumers, access the robot services, and implement the robotic behavior and user interactions. This allows for a flexible setup: changing the consumer application lets the same robots be used for different tasks, and vice versa. Figure 2 shows the MERODES architecture.
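The separation of workflow logic from the robots follows the subscription pattern of SDC. The in-process sketch below is hypothetical: it replaces the SDC network with a dictionary of callbacks and shows two different consumer applications (`BiopsyWorkflow` and an invented `TeachingWorkflow`) reacting differently to the same robot metric (`iiwa2.gesture`, an assumed handle).

```python
from collections import defaultdict


class Provider:
    """Hypothetical in-process stand-in for an SDC provider (no networking)."""

    def __init__(self):
        self.state = {}                       # metric handle -> current value
        self.subscribers = defaultdict(list)  # metric handle -> callbacks

    def subscribe(self, handle, callback):
        self.subscribers[handle].append(callback)

    def update(self, handle, value):
        # Store the new value and notify every consumer subscribed to it.
        self.state[handle] = value
        for callback in self.subscribers[handle]:
            callback(handle, value)


class BiopsyWorkflow:
    """Consumer for US-guided biopsies: a right push starts the planned move."""

    def __init__(self, provider):
        self.actions = []
        provider.subscribe("iiwa2.gesture", self.on_gesture)

    def on_gesture(self, handle, value):
        if value == "right_push":
            self.actions.append("move_to_planned_target")


class TeachingWorkflow:
    """Hypothetical second consumer reusing the same robot for another task."""

    def __init__(self, provider):
        self.actions = []
        provider.subscribe("iiwa2.gesture", self.on_gesture)

    def on_gesture(self, handle, value):
        if value == "right_push":
            self.actions.append("store_waypoint")
```

Swapping the consumer changes what a gesture means without touching the provider, which is the reuse argument made above.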

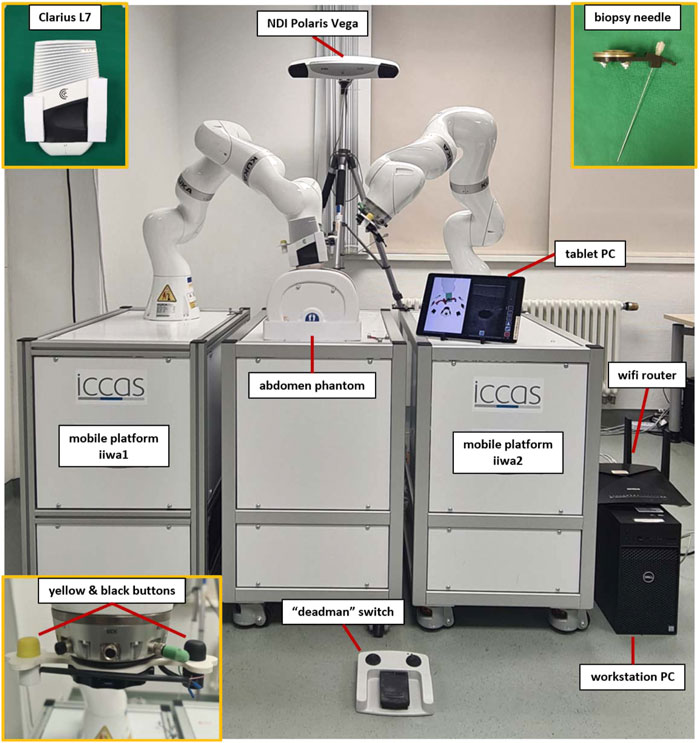

To validate the feasibility of deploying MERODES for US-guided needle insertion, the robotic setup in this work comprised two KUKA LBR iiwa 7 R800 robotic arms (KUKA AG, Germany). Both robots and their respective cabinet PCs were mounted on custom-built mobile platforms. The biopsy workflow was based on one robot (iiwa1) providing US image guidance and target positions for the second robot (iiwa2), which steered the needle (see Section 2.2.3). A Clarius L7 (Clarius Mobile Health Corp., Canada) served as the diagnostic US device and was attached to the flange of iiwa1 via a 3D-printed mount. A US-compatible biopsy needle (length 180 mm) was similarly mounted to iiwa2. An NDI Polaris Vega (Northern Digital Inc., Canada) acted as an SDC provider and enabled the use of both robots in a shared coordinate space by co-registration. Both robot platforms were equipped with rigid markers for optical tracking, attached at the back of the setup. The markers were not integrated with the end-effectors, to avoid line-of-sight problems when working in human-robot collaboration and to allow tools to be exchanged without affecting tracking. This approach also allowed the placement of both arms to be changed after the initial registration. A central workstation PC (Dell Precision 3630, Intel Core i7-8700K CPU @ 3.70 GHz, 32 GB RAM) served as the control unit executing the MERODES software and other dependencies. The collaborative nature of the KUKA robots allows for the use of human-machine interaction principles, such as shared target manipulation and fluent changes between automated and manual tasks (Hoffman, 2019). Both robots were therefore augmented with two interactive buttons (black and yellow), installed between the flanges and the respective tools, to perform hand guiding operations or other workflow-dependent actions.
The electric flange interfaces of the KUKA robots routed the button input signals directly through the manipulators to a USB port of the workstation. A separate tablet (iPad Pro, model A1652) allowed remote access to the system while working in collaboration with the robots. All devices and computers were connected to a Wi-Fi router (NETGEAR Nighthawk X10 AD7200) to form a closed network. Figure 3 provides an overview of the experimental dual-arm setup (DAS).

FIGURE 3. The experimental dual-arm setup with two KUKA LBR iiwa 7 R800 robots mounted on mobile platforms. The manipulators are positioned at an abdominal phantom and the tablet PC displays a workflow application for US-guided biopsies (described in more detail in Section 2.2.3).

The software in the DAS incorporated a shared planning environment for both robots using the MoveIt framework and ROS. In this instance, MERODES accessed and controlled the robots using the iiwa_stack application (Hennersperger et al., 2017) and translated between ROS topics and SDC messages. The iiwa_stack application was extended to include multiple robots rooted in the coordinate system of the utilized tracking device, allowing for path planning with collision avoidance between the robots, the patient, and other peripherals. The tf2 library provided the transformations between both robots and the tracking space. On initialization, MERODES dynamically mirrored all available ROS services and generated the associated VMDs for each robot. Hence, the DAS encompassed two VMDs, one each for iiwa1 and iiwa2. Besides the ROS services, additional metrics for the buttons attached at the robot flanges were included in the robot VMDs to publish their interactive states (pressed/released) to the SDC network. Based on previous work, access to the forces applied to the manipulators allowed touch gestures on the end-effectors to be mapped to specific commands (Berger et al., 2018b). The system also published the received gestures (e.g., left push, right push) to the SDC network. MERODES and all ROS dependencies were implemented in C++ and compiled and executed on a virtual machine (OracleVM, version 5.2.26) running Ubuntu 16.04. Since ROS and SDC are not real-time capable, the ROSSmartServo application included in iiwa_stack managed all real-time-dependent motion control on the KUKA cabinets. For additional safety, a foot pedal (connected directly to the cabinets) served as a “deadman switch” to enable or disable robot movement. A consumer application for US-guided biopsies (written in C++ with Qt5 and built for Windows 10 with the Visual Studio 2019 toolset) provided a graphical user interface (GUI) that was streamed to the tablet.
It managed the configuration and control of the robotic system via SDC (see Section 2.2.3). All SDC dependencies were implemented using the SDCLib/C library. The DAS components are visualized in Figure 4.
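How button states and touch gestures could be turned into SDC metric updates is sketched below. The threshold classifier is an assumption made for illustration (the gesture recognition of Berger et al. (2018b) is not reproduced here): the dominant lateral force component decides the gesture if it exceeds a hypothetical 10 N threshold. The metric handles and the `up_push`/`down_push` names are likewise invented.

```python
def classify_gesture(fx, fy, threshold_n=10.0):
    """Map a lateral external force (N, tcp frame) to a discrete gesture.

    Hypothetical rule: the larger of |fx| and |fy| decides, provided it
    exceeds the threshold; otherwise no gesture is reported.
    """
    if abs(fy) >= abs(fx):
        axis, value = "y", fy
    else:
        axis, value = "x", fx
    if abs(value) < threshold_n:
        return None
    if axis == "y":
        return "right_push" if value > 0 else "left_push"
    return "up_push" if value > 0 else "down_push"


def to_metric_updates(robot, buttons, gesture):
    """Build metric updates (handle -> value) for button states and a gesture."""
    updates = {
        f"{robot}.button.{name}": "pressed" if down else "released"
        for name, down in buttons.items()
    }
    if gesture is not None:
        updates[f"{robot}.gesture"] = gesture
    return updates
```

A real implementation would also need debouncing and a rest period between gestures so that a single push is not reported several times.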

FIGURE 4. The communication and information exchange between the components of the dual-arm setup. Blue arrows represent SDC-based communication; orange arrows represent ROS-based communication.

The workflow for needle insertion incorporated treatment planning in US images and switching between motion-control modes of the manipulators (i.e., hand guidance and automated positioning). On initial connection to MERODES, the consumer application configured the system settings to assign the tool center points (tcp) for each robot (iiwa1—Clarius tcp, iiwa2—needle tcp). The button signals (sent via SDC while pressing the buttons) were mapped to activate different hand guiding modes as follows:

• Translation hand guidance (iiwa1 and iiwa2—yellow buttons): The end-effector can move only in translational DOF. Any rotational movement of the tcp is constrained.

• Rotation hand guidance (iiwa1—black button): The end-effector can move only in rotational DOF. Any translational movement of the tcp is constrained.

• Trajectory hand guidance (iiwa2—black button): The end-effector can move only along the trajectory axis (i.e., the effective direction of the tool). Any translation along the orthogonal axes and any rotation of the tcp is constrained.
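The three hand guiding modes can be read as masks on the six Cartesian DOF of the tcp. The sketch assumes a velocity twist `(vx, vy, vz, wx, wy, wz)` in the tcp frame and that the tool's effective direction is the tcp z-axis; it is a kinematic illustration of the constraints listed above, not the controller-side implementation used in the DAS.

```python
# Button-to-mode mapping as listed above.
MODES = {
    ("iiwa1", "yellow"): "translation",
    ("iiwa2", "yellow"): "translation",
    ("iiwa1", "black"): "rotation",
    ("iiwa2", "black"): "trajectory",
}


def constrain_twist(mode, twist):
    """Zero out the DOF that a hand guiding mode does not allow.

    twist = (vx, vy, vz, wx, wy, wz) in the tcp frame; the tool axis is
    assumed to be the tcp z-axis.
    """
    vx, vy, vz, wx, wy, wz = twist
    if mode == "translation":
        return (vx, vy, vz, 0.0, 0.0, 0.0)
    if mode == "rotation":
        return (0.0, 0.0, 0.0, wx, wy, wz)
    if mode == "trajectory":
        return (0.0, 0.0, vz, 0.0, 0.0, 0.0)
    return (0.0, 0.0, 0.0, 0.0, 0.0, 0.0)  # no button pressed: no hand guiding
```

Defaulting to a zero twist when no button is held mirrors the idea that hand guiding is only active while a button is pressed.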

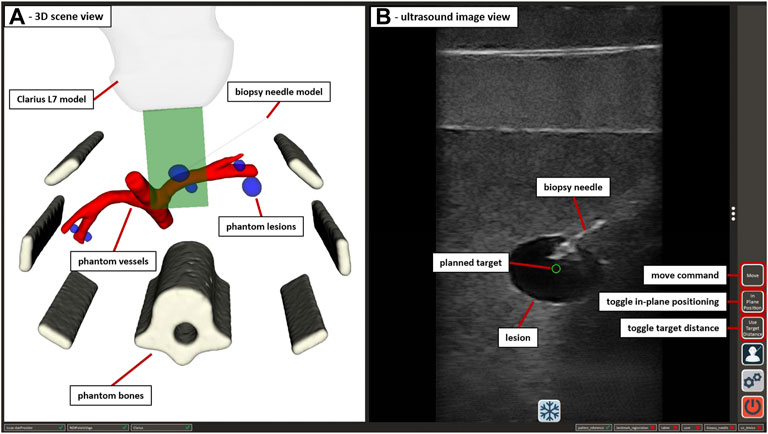

The application received 2D image data from the US device via the Clarius Cast API and displayed it in an interactive view, as shown in Figure 5. Treatment planning was performed on the tablet PC by clicking or touching the target position for the needle tip in the US image. Using the transformations of the System Control VMD (see Figure 2), the 2D click position was converted into the corresponding 3D position in the coordinate space of iiwa2. The application supported two options for the orientation (rotation around the tcp) of the needle at the target point: perpendicular to the imaging plane, or parallel to the imaging plane with an adjustable angle for the in-plane position (see Section 2.3.2). When the application sent the target position and needle rotation to MERODES via SDC, the system calculated the robot path from the current position to the target using MoveIt and returned a success message if the target was reachable. Reachable targets were represented by a green circle at the click position in the US image. A button in the GUI and/or a right push gesture on the needle manipulator activated the automated movement to the target. Since the insertion of the needle is the most critical part of the procedure, an option was included to keep the clinical personnel in charge of all invasive tasks by positioning the needle at a non-invasive distance from the target, subsequently called the pre-position. When path planning was executed with this option, the pre-position was obtained by shifting the user-selected target position 200 mm away from the target along the needle trajectory. Subsequently, the user could move iiwa2 with trajectory hand guidance to advance the needle manually while staying on target. For better orientation, a separate scene view provided the option to visualize CT- and MRI-based 3D patient models and the robotic end-effectors (see Figure 5).
The application was also connected to the tracking device to perform patient registration and, if desired, to include additional optical tools (e.g., pointers) for planning purposes.
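The click-to-target conversion and the pre-position can be sketched as follows. The sketch assumes a known pixel spacing, image coordinates with x = 0 at the horizontal image center and y = 0 at the upper border, an image plane at z = 0 of the image frame, and a given 4 × 4 transform from the image frame to iiwa2 coordinates (in the DAS this would be assembled from the System Control VMD transformations). The 200 mm offset is the value stated above; all function names are hypothetical.

```python
import math


def click_to_image_mm(u_px, v_px, width_px, spacing_mm):
    """Pixel click -> image-plane coordinates in mm.

    x: lateral, 0 at the horizontal image center; y: depth, 0 at the top border.
    """
    x = (u_px - width_px / 2.0) * spacing_mm
    y = v_px * spacing_mm
    return x, y


def image_to_robot(t_robot_image, x_mm, y_mm):
    """Map an in-plane point (z = 0 in the image frame) into robot coordinates."""
    p = (x_mm, y_mm, 0.0)
    return tuple(
        sum(t_robot_image[i][k] * p[k] for k in range(3)) + t_robot_image[i][3]
        for i in range(3))


def pre_position(target, needle_dir, offset_mm=200.0):
    """Back the needle tip off along its trajectory, staying on the same line."""
    norm = math.sqrt(sum(c * c for c in needle_dir))
    d = [c / norm for c in needle_dir]  # unit vector pointing toward the target
    return tuple(t - offset_mm * c for t, c in zip(target, d))
```

Because the pre-position lies on the same line as the planned target, trajectory hand guidance only has to restore the 200 mm along the needle axis.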

FIGURE 5. The GUI of the US-guided biopsy application. (A) displays the 3D scene view containing the segmented CT/MRI data of the abdominal phantom used in Section 2.3.3 and 3D representations of the used end-effectors. (B) shows the US image view with the planned target position (green circle) and the image of an inserted biopsy needle. Interactive GUI elements for movement commands and switching between positioning modes are listed in a toolbar at the right border.

Using the US-guided biopsy application, the needle insertion workflow involved the following three steps:

1. Locating the target structures (e.g., tumor tissue) with US imaging, using translation and/or rotation hand guidance with iiwa1 and the 3D scene view for orientation

2. Planning the target needle position in the US image and performing automated movement of iiwa2 to the pre-position

3. Advancing the needle under US image monitoring, using trajectory hand guidance with iiwa2, until the needle is visible in the target position
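The three steps can be expressed as a small state machine that a workflow application might track. The event names and the fallback from planning back to locating when a target is unreachable are assumptions for illustration, not part of the described application.

```python
from enum import Enum, auto


class Step(Enum):
    LOCATE = auto()   # hand-guide iiwa1 to find the target in the US image
    PLAN = auto()     # click the target; automated move of iiwa2 to the pre-position
    ADVANCE = auto()  # trajectory hand guidance of iiwa2 under US monitoring
    DONE = auto()


# Hypothetical events; each one only advances the workflow from a specific step.
TRANSITIONS = {
    (Step.LOCATE, "target_visible"): Step.PLAN,
    (Step.PLAN, "pre_position_reached"): Step.ADVANCE,
    (Step.PLAN, "target_unreachable"): Step.LOCATE,  # assumed: reposition the probe
    (Step.ADVANCE, "needle_at_target"): Step.DONE,
}


def next_step(step, event):
    """Return the next step, ignoring events that do not apply to the current one."""
    return TRANSITIONS.get((step, event), step)
```

Ignoring out-of-order events keeps, for example, an early "pre-position reached" signal from skipping the planning step.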

MERODES and the depicted application are designed to allow the interventional radiologist and/or surgeon to perform all tasks without additional assistance.

The validation of MERODES and the biopsy workflow application comprised three test sets: 1) measuring the co-registration accuracy of both robots and the corresponding rigid markers with an optical tracking tool, 2) assessing the accuracy of positioning iiwa2 based on the US-guided targeting provided by iiwa1 in a water bath, and 3) performing the biopsy workflow and measuring the accuracy on an abdominal phantom.

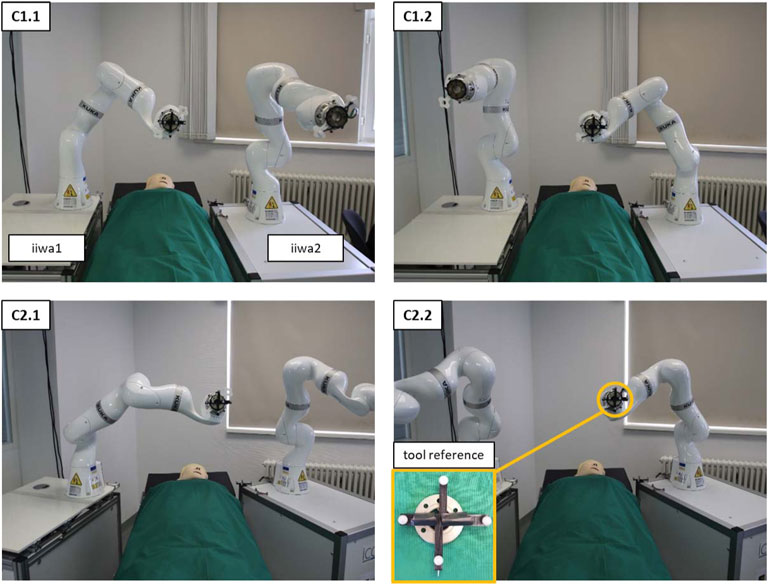

To achieve reliable accuracy measurements, a custom-made tool provided the reference end-effector for the interdependent positioning of both robots. As shown in Figure 6, the tool had a 3D cross shape carrying four infrared marker spheres, with an integrated nail tip serving as the tcp. The marker spheres were calibrated with the NDI Polaris Vega to define an optical tool reference (TR). The TR was attached to an FWS flat change system (SCHUNK GmbH & Co. KG, Germany) for fast exchange between the robots and added as an end-effector to the toolsets of the robot arms. MERODES supports landmark registration, which is common in tracked surgical navigation. This method was used to determine the transformations between the coordinate spaces of the robots and their respective rigid markers by moving the TR to five distinct positions (visible to the tracking camera) and recording the coordinates in both robot and tracking space. The resulting transformations were added to the tf2 tree of MERODES, enabling transformation between both robot coordinate systems.
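Landmark registration with five point pairs is commonly solved in closed form by a least-squares rigid fit. The NumPy sketch below uses the SVD-based solution (Arun/Kabsch) with a guard against reflections and reports the RMS fiducial registration error; the text does not state which solver MERODES uses, so this is a standard technique swapped in for illustration, and the test poses are synthetic.

```python
import numpy as np


def paired_point_registration(src, dst):
    """Least-squares rigid transform (R, t) such that dst ≈ src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding landmarks (N >= 3, not collinear),
    e.g. TR positions recorded in robot space and in tracking space.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    h = (src - c_src).T @ (dst - c_dst)     # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # -1 would indicate a reflection
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = c_dst - r @ c_src
    return r, t


def rms_fre(src, dst, r, t):
    """Root-mean-square fiducial registration error in the units of the input."""
    residual = np.asarray(src, dtype=float) @ r.T + t - np.asarray(dst, dtype=float)
    return float(np.sqrt((residual ** 2).sum(axis=1).mean()))
```

With noisy tracker data the residual error is no longer zero, and spreading the five positions widely across the workspace generally improves the conditioning of the fit.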

FIGURE 6. The setup of both robot platforms at an OR-table to measure the interdependent positioning accuracy. C1 shows the configuration in parallel to the table; C2 shows the angled configuration. C1.1 and C2.1 show examples of the 9 initial positions of iiwa1. C1.2 and C2.2 depict the corresponding positions of iiwa2 after solving the coordinate transformation. The pictures show the setup from the visual perspective of the tracking camera.

The accuracy measurements of the co-registration involved moving the robots to nine distinct positions in three repetitions. First, iiwa1 moved to the predefined positions to record the coordinates of the TR in tracking space (using the Vega camera) and in robot space (using the Cartesian position of the manipulator). Subsequently, the tool was mounted on iiwa2, which moved to the same positions by solving the coordinate transformation from iiwa1 space to iiwa2 space. The tracking camera likewise recorded the coordinates of the TR for iiwa2, and the Euclidean distance between corresponding positions was calculated. The procedure was conducted for two deployment configurations of the robot platforms (C1: robots deployed in parallel at an OR table; C2: robots deployed in an angled position). Figure 6 depicts the two configurations and the interdependent positioning.
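The error statistics follow directly from the tracked TR coordinates. The sketch below computes the Euclidean distance for each pair of corresponding positions and summarizes each position over its repetitions as mean ± standard deviation. The use of the sample standard deviation (n − 1) is an assumption, as the text does not specify it, and the values in the test are synthetic.

```python
import math
import statistics


def positioning_errors(reference, reached):
    """Euclidean distance (mm) between corresponding tracked TR positions."""
    if len(reference) != len(reached):
        raise ValueError("reference and reached must have the same length")
    return [math.dist(p, q) for p, q in zip(reference, reached)]


def mean_sd(errors):
    """Mean and sample standard deviation (n - 1) of a list of errors."""
    return statistics.mean(errors), statistics.stdev(errors)


def per_position(errors_by_repetition):
    """errors_by_repetition: one list of per-position errors per repetition."""
    return [mean_sd(list(column)) for column in zip(*errors_by_repetition)]
```

With three repetitions of nine positions, `per_position` yields nine (mean, SD) pairs per configuration, while `mean_sd` applied to all pooled errors gives the overall figure.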

The system must provide sufficient accuracy not only when directly transforming between tcp coordinates but also for target positions derived from US images. To measure the error introduced into the system by translating the clicked coordinates in the image view into a target position in robot coordinates, an additional validation was performed in a water bath. Performing the tests in water allowed the needle to be moved without introducing errors from deformation, e.g., deflections while advancing it through tissue (as addressed in Section 2.3.3). Consequently, the data from this setup will serve as a reference for improving system quality in future optimization steps.

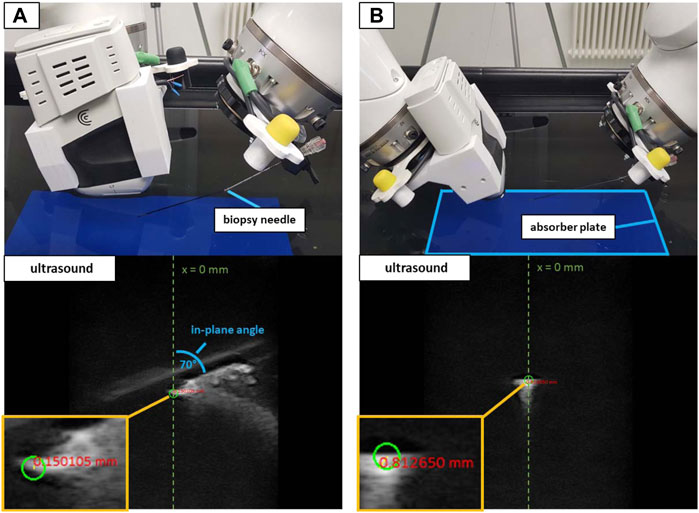

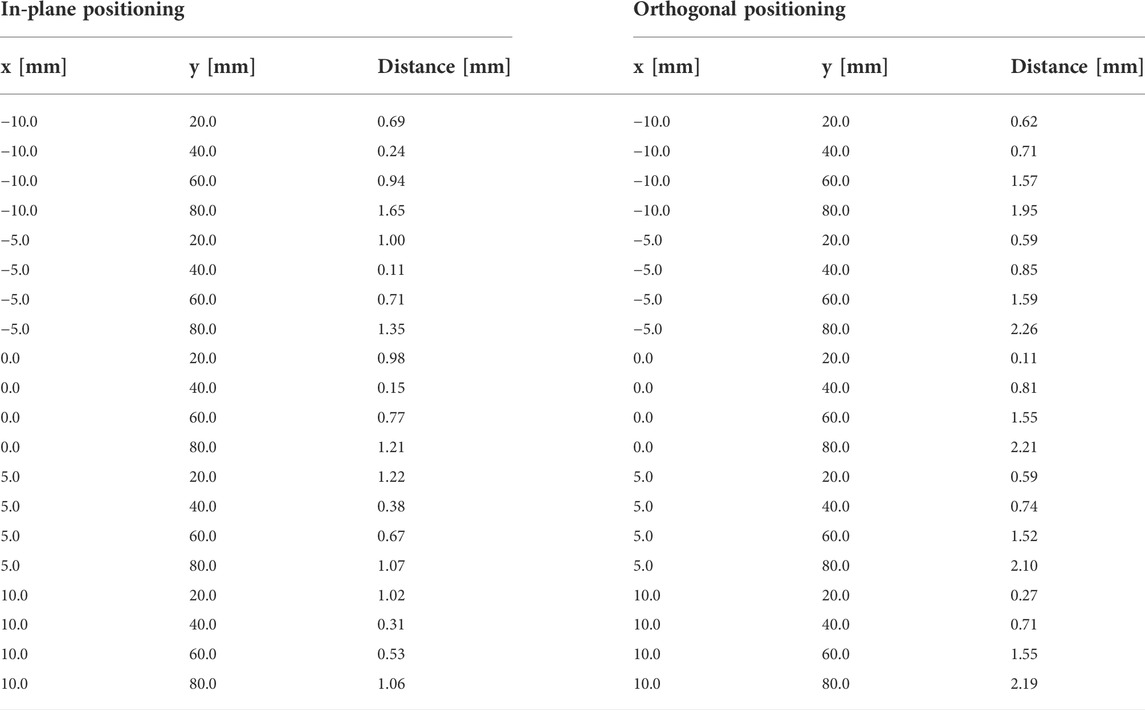

The imaging array of the Clarius device was submerged in water and directed down onto a US-absorbent plate (Aptflex F28) to minimize US reflections. The device was slightly angled relative to the water surface, giving the second robot more freedom of movement. First, the validation involved automated positioning, equivalent to the co-registration measurements in Section 2.3.1. Predefined 2D coordinates, laid out as a 5 × 4 grid in the imaging plane, served as the target positions. The 20 coordinates comprised values of −10, −5, 0, 5, and 10 mm horizontally (x-axis, with x = 0 at the horizontal center of the image) and 20, 40, 60, and 80 mm of depth (y-axis, with y = 0 at the upper border of the image). The 2D coordinates (given relative to the tcp of iiwa1) were translated into 3D, and iiwa2 placed the needle tip at the resulting target positions by applying the robot-to-robot transformation. Positioning the US-compatible biopsy needle inside the imaging plane provided sharp contrast, enabling visual assessment of whether the planned targets were reached. The targeting error was assessed manually in the US image by measuring the distance between the planned 2D coordinates (green circle) and the approximated position of the needle tip. Because only 2D imaging was available, the procedure was performed with the needle placed both orthogonal to the imaging plane and parallel to it at an angle of 70°, to gain information about the missing dimension. Figure 7 visualizes both orthogonal and in-plane positioning with the resulting US images for the x/y coordinate 0/40 mm.
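The target grid and the 2D targeting error can be written down directly. The mapping of an image point into the tcp frame of iiwa1 below uses an assumed convention (image x along the tcp x-axis, depth along the tcp z-axis, imaging plane at tcp y = 0, plus a hypothetical offset from the tcp to the transducer face); the actual calibration of the Clarius mount is not given in the text.

```python
import math

X_MM = (-10, -5, 0, 5, 10)   # lateral positions, x = 0 at the horizontal image center
DEPTH_MM = (20, 40, 60, 80)  # depths, y = 0 at the upper image border


def grid_targets():
    """The 5 x 4 grid of planned 2D targets (x, y) in mm, ordered by depth."""
    return [(x, y) for y in DEPTH_MM for x in X_MM]


def image_to_tcp(x_mm, y_mm, face_offset_mm=0.0):
    """Assumed convention: image x -> tcp x, depth -> tcp z, plane at tcp y = 0.

    face_offset_mm is a hypothetical distance from the tcp to the transducer face.
    """
    return (float(x_mm), 0.0, face_offset_mm + y_mm)


def targeting_error(planned, tip):
    """2D distance (mm) between the planned target and the observed needle tip."""
    return math.dist(planned, tip)
```

Since the error is measured in the image plane, out-of-plane deviations are invisible in this metric, which is why the procedure was repeated with two needle orientations.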

FIGURE 7. The water bath setup with in-plane positioning at 70° (A) and orthogonal positioning (B). White artifacts in the US images depict the reflections of the needle. The green circles show the planned target at x = 0 mm and a depth of 40 mm. The red numbers show the distance from the planned target to the approximated center of the needle tip in the US image.

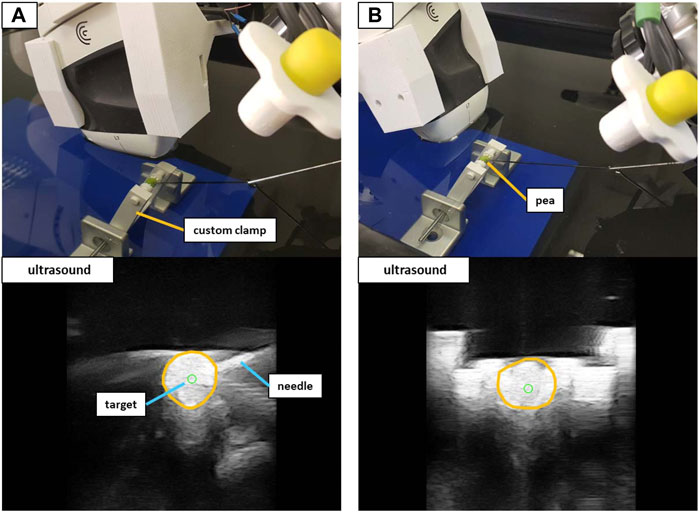

To validate that sufficiently small structures can be punctured when using trajectory hand guidance, peas (∼6 mm in diameter) served as the targets for a second water bath validation, as shown in Figure 8. A custom 3D-printed clamp held the submerged peas in place during needle insertion. The clamp could be rotated to adjust the height of the peas and was placed at five arbitrary positions on the absorber plate in the water bath. iiwa1 positioned the US device to visualize the center of the peas for both orthogonal and in-plane targeting. Clicking inside the area of a pea in the US image provided the target position. Movement planning positioned iiwa2 at a distance from the target (as described in Section 2.2.3), and the needle was advanced via hand guidance until it perforated the pea. The tip of the needle was not visible within the peas (see Figure 8). Therefore, instead of measuring the positioning error relative to the planned target, a binary analysis was performed (target perforated or not perforated).

FIGURE 8. The experimental setup to target peas in the water bath. Shown are the in-plane positioning (A) and orthogonal positioning (B) of the US device. The orange circles in the US images outline the peas.

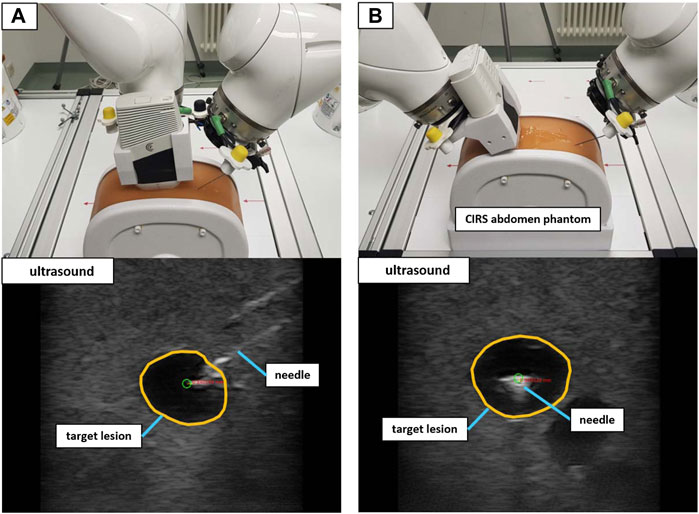

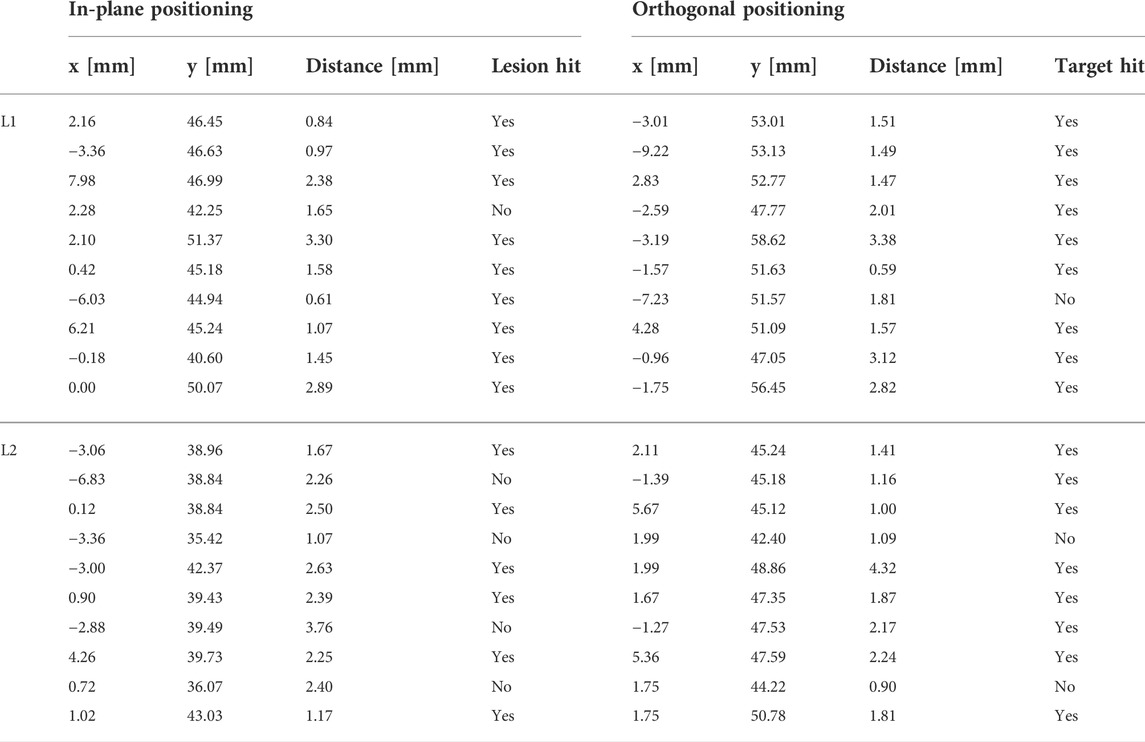

A validation setup with a triple-modality 3D abdominal phantom (CIRS Inc., United States) allowed for a performance test closer to reality. Following the needle insertion workflow described in Section 2.2.3, 40 needle insertions were performed on two distinct lesions inside the phantom. The first lesion (L1) was located ∼42 mm below the surface and measured ∼12 mm in diameter. Lesion 2 (L2) was located ∼35 mm below the surface and measured ∼9 mm in diameter. Again, the needle orientation was planned for both in-plane and orthogonal positioning (20 insertions for each orientation). Figure 9 illustrates the phantom setup with the corresponding US images for L1.

FIGURE 9. The validation setup to puncture lesions inside an abdominal phantom with trajectory hand guidance. Shown are the in-plane positioning (A) and orthogonal positioning (B) for the same target lesion. The green circle marks the planned needle tip position.

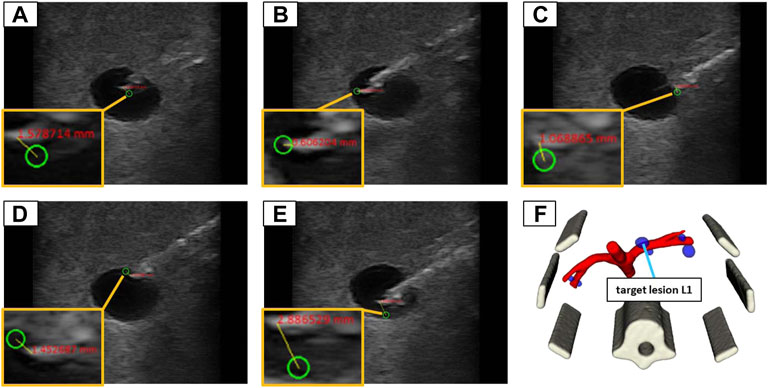

As in the water bath validation, the needle images allowed for manual assessment of the position error by measuring the 2D distance between the needle tip and the planned target. The needle insertions were conducted in eight iterations (four in-plane and four orthogonal). Each iteration comprised placing the US device to visualize the target structure and planning five target positions inside the lesion (at the left, right, top, and bottom borders as well as in the center), as depicted in Figure 10. The needle was advanced in hand guidance with the goal of minimizing the distance between the needle tip and the planned target. The insertion depth was determined based on the visual feedback in the US image view.
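The five planned targets per lesion and the binary hit/miss assessment can be sketched by approximating the lesion cross-section in the US image as a circle. This circular model and the example coordinates are assumptions; under it, border targets are more sensitive to error than center targets, because any outward deviation from a border target leaves the lesion.

```python
import math


def lesion_targets(cx, cy, diameter_mm):
    """Center plus four border targets of a lesion approximated as a circle.

    Image convention: x lateral, y depth (increasing downwards), so 'top'
    has the smaller y value.
    """
    r = diameter_mm / 2.0
    return {
        "center": (cx, cy),
        "left": (cx - r, cy),
        "right": (cx + r, cy),
        "top": (cx, cy - r),
        "bottom": (cx, cy + r),
    }


def is_hit(tip, cx, cy, diameter_mm):
    """Binary assessment: does the needle tip lie inside the circular lesion?"""
    return math.dist(tip, (cx, cy)) <= diameter_mm / 2.0
```

For a ∼12 mm lesion, a 1.5 mm error at the right border target misses when it points outward but hits when it points inward, whereas the same error at the center target always hits.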

FIGURE 10. The five target positions inside a lesion (L1) in the abdominal phantom for in-plane needle positioning: (A) in the center; (B) left border; (C) right border; (D) top border; (E) bottom border; (F) the 3D model of the phantom, giving an overview of where the lesion is located in 3D space.

The results of this work represent the performance of MERODES when controlled by an SDC consumer application for US-guided biopsies. All workflow-related tasks described in previous sections were performed solely by exchanging SDC messages and commands between the biopsy application and the dual-arm setup. The accuracy measurements of the three validation test sets yielded the following results.

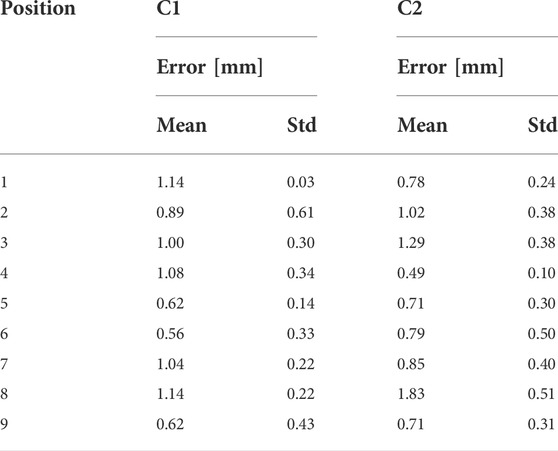

The mean error of the interdependent positioning for deployment configuration C1 was 0.93 ± 0.29 mm. Deployment configuration C2 resulted in a mean target deviation of 0.95 ± 0.38 mm. The overall positioning error of the dual-arm setup on average was 0.94 ± 0.42 mm. The measured mean errors and standard deviations for all 9 positions in both configurations are listed in Table 1.

TABLE 1. The mean position errors and standard deviations for all 9 positions in the co-registration setup for the deployment configuration C1 and C2. All values are provided in mm.

When positioning the Clarius device in a water bath, iiwa2 reached all pre-defined 2D targets, utilizing automated movement directly to the target positions. Measuring the target distances in the US images using in-plane positioning resulted in a mean 2D error of 0.86 ± 0.42 mm. The mean 2D positioning error in orthogonal needle placement was 1.19 ± 0.70 mm. Table 2 contains all target coordinates in the image (x, y) and the corresponding measured distance to the needle tip for in-plane and orthogonal positioning.

TABLE 2. The 2D distance measurements for in-plane and orthogonal positioning in the water bath setup. The click positions of the planned targets are provided as x- and y-coordinates in mm.

Advancing the needle with trajectory hand guidance was sufficiently accurate to target peas in water. At all five locations, the peas were punctured by the needle using in-plane and orthogonal positioning.
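Because only 2D images were available, each distance in Table 2 captures only the in-plane component of the needle-tip error. Denoting the tip-to-target offset by Δx and Δy in the image plane and by Δz along the unobserved elevational direction (symbols introduced here for illustration), the measured 2D error is a lower bound on the 3D error, with equality only if Δz = 0:

$$
e_{\mathrm{2D}} = \sqrt{\Delta x^{2} + \Delta y^{2}} \;\le\; \sqrt{\Delta x^{2} + \Delta y^{2} + \Delta z^{2}} = e_{\mathrm{3D}}
$$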

The needle advancement in tissue resulted in higher errors. In-plane positioning yielded a mean 2D target deviation of 1.96 ± 0.86 mm. The orthogonal approach resulted in a mean 2D positioning error of 1.69 ± 0.92 mm. Planning positions at the borders of the lesions led to missing the target volume in 8 out of 32 cases, whereas aiming at the lesion centers always resulted in a hit. The measured positioning errors for lesion 1 (L1) and lesion 2 (L2) are listed in Table 3.

TABLE 3. The 2D positioning errors for in-plane and orthogonal positioning for lesions L1 and L2 in the abdominal phantom. The click positions of the planned targets are provided as x and y coordinates within the US image. A binary assessment of hitting/missing the lesion is included.
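The hit/miss counts can be converted into hit rates. The short sketch below uses only counts stated in the text (eight iterations with one center and four border targets each, 8 of 32 border targets missed, no center target missed); the percentages are plain arithmetic on those counts, not additional measurements.

```python
# Counts taken from the described phantom protocol.
ITERATIONS = 8
BORDER_TARGETS_PER_ITERATION = 4
CENTER_TARGETS_PER_ITERATION = 1

border_total = ITERATIONS * BORDER_TARGETS_PER_ITERATION  # 32 border targets
center_total = ITERATIONS * CENTER_TARGETS_PER_ITERATION  # 8 center targets
border_misses = 8  # reported: 8 of 32 border targets missed
center_misses = 0  # reported: lesion centers were always hit


def hit_rate(total: int, misses: int) -> float:
    """Fraction of targets in which the lesion was hit."""
    return (total - misses) / total


border_rate = hit_rate(border_total, border_misses)
center_rate = hit_rate(center_total, center_misses)
overall_rate = hit_rate(border_total + center_total, border_misses + center_misses)
print(f"border {border_rate:.0%}, center {center_rate:.0%}, overall {overall_rate:.0%}")
# prints "border 75%, center 100%, overall 80%"
```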

This work presents the design and implementation of a collaborative robotic setup for US-guided needle insertions. The system's control software allows two KUKA LBR iiwa 7 R800 robotic arms to be used in a flexible deployment. In the described setup, the robots control a US imaging device and a US-compatible biopsy needle to perform interdependent target planning and positioning. The hand guidance control and touch-gesture inputs support fluent transitions between automated movements and hands-on interaction. Because encapsulated applications separate the workflow logic from the incorporated robots, the system can be quickly configured depending on the clinical environment or user preferences. The standardized integration via SDC additionally allows variable setups that exchange information with peripheral medical devices (e.g., tracking cameras or ultrasound imaging).
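The separation of workflow logic from the robots can be pictured as programming against a capability interface rather than a concrete device. The Python sketch below is a hypothetical illustration only: `RobotDevice`, `BiopsyWorkflow`, `SimulatedRobot`, and their methods are invented names and do not reflect the SDC standard, SDCLib, or the MERODES code base, in which the robots are accessed through SDC services.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class Pose:
    """Position in mm in a shared reference frame (orientation omitted)."""
    x: float
    y: float
    z: float


class RobotDevice(Protocol):
    """Hypothetical capability interface the workflow application relies on."""

    def move_to(self, pose: Pose) -> None: ...

    def set_hand_guidance(self, enabled: bool) -> None: ...


@dataclass
class SimulatedRobot:
    """Stand-in device that only records the commands it receives."""
    name: str
    log: list[str] = field(default_factory=list)

    def move_to(self, pose: Pose) -> None:
        self.log.append(f"{self.name}: move_to({pose.x}, {pose.y}, {pose.z})")

    def set_hand_guidance(self, enabled: bool) -> None:
        self.log.append(f"{self.name}: hand_guidance={enabled}")


class BiopsyWorkflow:
    """Workflow logic that knows only the RobotDevice interface."""

    def __init__(self, probe_robot: RobotDevice, needle_robot: RobotDevice) -> None:
        self.probe_robot = probe_robot
        self.needle_robot = needle_robot

    def run(self, probe_pose: Pose, needle_entry: Pose) -> None:
        self.probe_robot.move_to(probe_pose)       # place the US probe
        self.needle_robot.move_to(needle_entry)    # align the needle with the plan
        self.needle_robot.set_hand_guidance(True)  # user advances the needle


probe = SimulatedRobot("robot_a")
needle = SimulatedRobot("robot_b")
BiopsyWorkflow(probe, needle).run(Pose(400.0, 0.0, 200.0), Pose(450.0, 50.0, 250.0))
print(probe.log + needle.log)
```

Because `BiopsyWorkflow` depends only on the interface, replacing a simulated robot with another device implementation (for example, a smaller manipulator) leaves the workflow code unchanged, which mirrors the exchangeability described above.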

To validate its performance, the system accuracy was measured while the system was controlled by the US-guided biopsy application. The system behaved stably at all times while performing the interventional tasks under SDC-based workflow control. The achieved accuracies of 1.96 ± 0.86 mm (in-plane) and 1.69 ± 0.92 mm (orthogonal) in the phantom setup are within the range of currently available biopsy robots (Siepel et al., 2021). Since only 2D US imaging was available in the described setup, the positioning errors could only be measured in 2D. Because the out-of-plane dimension is not captured, the 3D positioning error is expected to be higher. However, the same limitation applies to hand-guided needle positioning, in which the user would assess whether a target was hit in a comparable way. With respect to accuracy, the system can therefore be rated as non-inferior to the current state of the art. The needle insertion was accomplished using a trajectory hand guidance mode in which the robot allows only a single degree of freedom during the motion. Ideally, the needle should follow the trajectory to the target structure exactly. The successful targeting of peas in water suggests sufficient accuracy for targets of ∼6 mm in size. However, hand guidance with the KUKA robots relies on an impedance mode that increases the stiffness of the robot with increasing deviation of the tool center point (TCP) from the specified path, i.e., with movement orthogonal to the needle trajectory. The maximum achievable stiffness is limited and therefore introduces inaccuracies when moving through tissue. Further inaccuracies in reaching the desired target are introduced by the needle itself: due to variations in tissue density, the needle deflects and bends during perforation. The described system does not provide needle steering. Although the positioning error can be detected using real-time US imaging, it is not corrected. The binary assessment of hitting and missing targets in the phantom reflects this limitation. In 8 out of 32 cases, the needle missed the targeted lesion due to additional deflections towards the surface of the phantom (negative y-direction in relation to the US image). Although lesion L1 was missed in only 2 cases when aiming for the upper or left borders, the deflections were especially problematic for the smaller lesion L2 (∼9 mm), for which most misses occurred. Active needle path correction plays an essential role in precise targeting, and recent works such as the closed-loop deflection compensation presented by Wartenberg et al. (2018) provide promising solutions. The capabilities of the proposed system should be enhanced by introducing a similar needle steering mode in the controlling workflow application to enable more precise targeting.
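The effect of the limited stiffness of the impedance-controlled hand guidance can be bounded with a simple spring model. Assume the restoring force against a lateral deviation d is F = k(d)·d, where the stiffness k(d) may grow with d but never exceeds a cap k_max. A sustained lateral tissue force F_lat is then balanced only once k(d)·d = F_lat, which implies d ≥ F_lat / k_max. The cap of 5000 N/m and the lateral forces below are assumed illustration values, not measured in this study or taken from the robot documentation.

```python
def min_lateral_deviation_mm(lateral_force_n: float, k_max_n_per_m: float) -> float:
    """Lower bound on the TCP deviation (mm) under a sustained lateral force.

    Assumes a restoring force k(d) * d with k(d) <= k_max, hence
    d >= F / k_max. Force in N, stiffness in N/m.
    """
    if k_max_n_per_m <= 0:
        raise ValueError("stiffness must be positive")
    return abs(lateral_force_n) / k_max_n_per_m * 1000.0  # m -> mm


K_MAX = 5000.0  # N/m, assumed stiffness cap (illustrative only)
for force in (0.5, 1.0, 2.0):  # N, hypothetical lateral tissue forces
    bound = min_lateral_deviation_mm(force, K_MAX)
    print(f"{force:.1f} N -> at least {bound:.2f} mm")
```

Under these assumptions, even moderate lateral forces shift the needle by a few tenths of a millimetre before needle bending is considered, which is consistent with the qualitative argument above.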

Positioning a US probe and a biopsy needle as described in this work is limited by the available space around the patient and the available degrees of freedom of the robots used. When using hand guidance, both robots have to operate very close to each other, as can be seen in Figure 9A. Reaching the desired target structure without robot collision is impeded by the target depth and the placement of the US device on the patient. Target planning with in-plane or orthogonal positioning introduces further restrictions on the freedom of movement. With the proposed workflow, the robot setup reliably reaches a needle depth of up to 60 mm (perpendicular to the skin surface) depending on the insertion angle, which is clinically acceptable for most percutaneous biopsies. Planning needle positions independently of the imaging plane should be investigated further to reduce these restrictions on the freedom of movement.
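The dependence of the reachable depth on the insertion angle follows from simple geometry. Assuming a straight needle advanced by a length ℓ at an angle θ to a locally flat skin surface (a simplification introduced here for illustration), the depth perpendicular to the skin is

$$
d_{\perp} = \ell \sin\theta \quad\Longrightarrow\quad \ell = \frac{d_{\perp}}{\sin\theta}.
$$

Reaching d⊥ = 60 mm therefore requires ℓ = 60 mm / sin 45° ≈ 84.9 mm at θ = 45° and ℓ = 60 mm / sin 30° = 120 mm at θ = 30°, so shallower insertion angles demand longer needle travel within the shared workspace of the two robots.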

This work aims to reduce the complexity in the OR and increase the acceptance of robotic interventions. The depicted system configuration introduces additional overhead that can impede reaching these goals, especially for relatively straightforward procedures such as biopsies. In its current state, the deployment of two KUKA arms can be considered too extensive, and the possibilities for miniaturization must be examined. The MERODES architecture inherently supports the exchange of robots and tools represented in the SDC standard (see Figure 2), easing the reengineering process to incorporate smaller manipulators or to reduce their number. Promoting standardized information exchange among manufacturers of medical robotic systems (e.g., via SDC) is crucial in this process. Implementing SDC communication for the KUKA LBR iiwa allows the presented setup to be adapted to other use cases. Introducing additional, exchangeable workflow applications facilitates adapting the system to more challenging interventions in cancer therapy, such as US-guided cryoablation or non-invasive treatment with high-intensity focused ultrasound (Kim et al., 2019; Ward et al., 2019; Guo et al., 2021).

With its flexible extension possibilities, the presented system adds to the current state of the art of robot-assisted percutaneous interventions. Additional treatment principles can be integrated, and the system configuration can be adjusted without much overhead. In future work, the possibilities of interchanging robotic devices and extending the usability of the robots in different use cases must be verified. The benefits of standardized device communication in robotic applications must be investigated further. Currently, only a base set of devices (OR tables, microscopes, etc.) is included in the SDC device network, and real-time capability for full robotic integration still poses challenges (Vossel et al., 2017; Schleer et al., 2019).

The data presented in the study are deposited in the “Frontiers MERODES Data” repository, accessible at https://git.iccas.de/iccas-public/frontiers-merodes-data. Further inquiries can be directed to the corresponding author.

JB and MU contributed to the conception and design of the work. JB, JK, CR, and MU contributed to the hardware and software design and implementation. JB and MU acquired and analyzed the data. JB wrote the manuscript. CR and MU edited the manuscript. TN and AM reviewed and edited the manuscript. All authors approved it for publication.

The work described in this article received funding from the German Federal Ministry of Education and Research (BMBF) under the Grant No. 03Z1L511 (SONO-RAY project) and the Eurostars-2 joint programme with co-funding from the European Union Horizon 2020 research and innovation programme (Grant number E!12491). This work received funding for open access publication fees from the German Research Foundation (DFG) and Universität Leipzig within the program of Open Access Publishing.

The author(s) acknowledge support from the German Research Foundation (DFG) and Universität Leipzig within the program of Open Access Publishing.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ROS: Home. Available at: https://www.ros.org/ [Accessed January 4, 2022].

2. The Orocos Project. Available at: https://orocos.org/ [Accessed January 4, 2022].

3. IFL-CAMP/iiwa_stack. Available at: https://github.com/IFL-CAMP/iiwa_stack [Accessed January 17, 2022].

4. tf2 - ROS Wiki. Available at: http://wiki.ros.org/tf2 [Accessed January 17, 2022].

5. Qt5 (2022). Qt. Available at: https://github.com/qt/qt5 [Accessed January 24, 2022].

6. Surgitaix (2021). SDCLib. Available at: https://github.com/surgitaix/sdclib [Accessed January 23, 2022].

7. Clarius Cast API (2022). Clarius Mobile Health - Developer Portal. Available at: https://github.com/clariusdev/cast [Accessed January 24, 2022].

8. High Frequency Acoustic Absorber. Precision Acoustics. Available at: https://www.acoustics.co.uk/product/aptflex-f28/ [Accessed January 26, 2022].

9. Triple Modality 3D Abdominal Phantom. CIRS. Available at: https://www.cirsinc.com/products/ultrasound/zerdine-hydrogel/triple-modality-3d-abdominal-phantom/ [Accessed January 26, 2022].

Ajoudani, A., Zanchettin, A. M., Ivaldi, S., Albu-Schäffer, A., Kosuge, K., and Khatib, O. (2018). Progress and prospects of the human–robot collaboration. Auton. Robots 42, 957–975. doi:10.1007/s10514-017-9677-2

Arney, D., Plourde, J., Schrenker, R., Mattegunta, P., Whitehead, S. F., and Goldman, J. M. (2014). “Design pillars for medical cyber-physical system middleware,” in 5th Workshop on Medical Cyber-Physical Systems, 9. doi:10.4230/OASICS.MCPS.2014.124

Berger, J., Unger, M., Keller, J., Bieck, R., Landgraf, L., Neumuth, T., et al. (2018a). “Kollaborative Interaktion für die roboterassistierte ultraschallgeführte Biopsie” [Collaborative interaction for robot-assisted ultrasound-guided biopsy], in CURAC Tagungsband (Leipzig). Available at: https://www.curac.org/images/advportfoliopro/images/CURAC2018/CURAC%202018%20Tagungsband.pdf (Accessed February 7, 2022).

Berger, J., Unger, M., Landgraf, L., Bieck, R., Neumuth, T., and Melzer, A. (2018b). Assessment of natural user interactions for robot-assisted interventions. Curr. Dir. Biomed. Eng. 4, 165–168. doi:10.1515/cdbme-2018-0041

Berger, J., Unger, M., Landgraf, L., and Melzer, A. (2019). Evaluation of an IEEE 11073 SDC connection of two KUKA robots towards the application of focused ultrasound in radiation therapy. Curr. Dir. Biomed. Eng. 5, 149–152. doi:10.1515/cdbme-2019-0038

Cai, Y., Tang, Z., Ding, Y., and Qian, B. (2016). Theory and application of multi-robot service-oriented architecture. IEEE/CAA J. Autom. Sin. 3, 15–25. doi:10.1109/JAS.2016.7373758

Cesetti, A., Scotti, C. P., Di Buo, G., and Longhi, S. (2010). “A Service Oriented Architecture supporting an autonomous mobile robot for industrial applications,” in 18th Mediterranean Conference on Control and Automation, MED’10, 604–609. doi:10.1109/MED.2010.5547736

Cleary, K., and Nguyen, C. (2001). State of the art in surgical robotics: Clinical applications and technology challenges. Comput. Aided Surg. 6, 312–328. doi:10.3109/10929080109146301

Franke, S., Rockstroh, M., Hofer, M., and Neumuth, T. (2018). The intelligent OR: Design and validation of a context-aware surgical working environment. Int. J. Comput. Assist. Radiol. Surg. 13, 1301–1308. doi:10.1007/s11548-018-1791-x

Guo, R.-Q., Guo, X.-X., Li, Y.-M., Bie, Z.-X., Li, B., and Li, X.-G. (2021). Cryoablation, high-intensity focused ultrasound, irreversible electroporation, and vascular-targeted photodynamic therapy for prostate cancer: A systemic review and meta-analysis. Int. J. Clin. Oncol. 26, 461–484. doi:10.1007/s10147-020-01847-y

Hennersperger, C., Fuerst, B., Virga, S., Zettinig, O., Frisch, B., Neff, T., et al. (2017). Towards MRI-based autonomous robotic US acquisitions: A first feasibility study. IEEE Trans. Med. Imaging 36, 538–548. doi:10.1109/TMI.2016.2620723

Hoeckelmann, M., Rudas, I. J., Fiorini, P., Kirchner, F., and Haidegger, T. (2015). Current capabilities and development potential in surgical robotics. Int. J. Adv. Robotic Syst. 12, 61. doi:10.5772/60133

Hoffman, G. (2019). Evaluating fluency in human–robot collaboration. IEEE Trans. Human-Mach. Syst. 49, 209–218. doi:10.1109/THMS.2019.2904558

Kasparick, M., Schlichting, S., Golatowski, F., and Timmermann, D. (2015). “New IEEE 11073 standards for interoperable, networked point-of-care Medical Devices,” in 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 1721–1724. doi:10.1109/EMBC.2015.7318709

Kasparick, M., Schmitz, M., Andersen, B., Rockstroh, M., Franke, S., Schlichting, S., et al. (2018). OR.NET: A service-oriented architecture for safe and dynamic medical device interoperability. Biomed. Eng. Biomed. Tech. 63, 11–30. doi:10.1515/bmt-2017-0020

Kim, D. K., Won, J. Y., and Park, S. Y. (2019). Percutaneous cryoablation for renal cell carcinoma using ultrasound-guided targeting and computed tomography-guided ice-ball monitoring: Radiation dose and short-term outcomes. Acta Radiol. 60, 798–804. doi:10.1177/0284185118798175

Okamoto, J., Masamune, K., Iseki, H., and Muragaki, Y. (2018). Development concepts of a smart cyber operating theater (SCOT) using ORiN technology. Biomed. Eng. Biomed. Tech. 63, 31–37. doi:10.1515/bmt-2017-0006

Oliveira, L. B. R., Osório, F. S., and Nakagawa, E. Y. (2013). “An investigation into the development of service-oriented robotic systems,” in Proceedings of the 28th Annual ACM Symposium on Applied Computing - SAC ’13, 223. doi:10.1145/2480362.2480410

Roberts, R., Siddiqui, B. A., Subudhi, S. K., and Sheth, R. A. (2020). “Image-guided biopsy/liquid biopsy,” in Image-guided interventions in oncology. Editors C. Georgiades, and H. S. Kim (Cham: Springer International Publishing), 299–318. doi:10.1007/978-3-030-48767-6_18

Rockstroh, M., Franke, S., Hofer, M., Will, A., Kasparick, M., Andersen, B., et al. (2017). OR.NET: Multi-perspective qualitative evaluation of an integrated operating room based on IEEE 11073 SDC. Int. J. Comput. Assist. Radiol. Surg. 12, 1461–1469. doi:10.1007/s11548-017-1589-2

Schleer, P., Drobinsky, S., de la Fuente, M., and Radermacher, K. (2019). Toward versatile cooperative surgical robotics: A review and future challenges. Int. J. Comput. Assist. Radiol. Surg. 14, 1673–1686. doi:10.1007/s11548-019-01927-z

Siepel, F. J., Maris, B., Welleweerd, M. K., Groenhuis, V., Fiorini, P., and Stramigioli, S. (2021). Needle and biopsy robots: A review. Curr. Robot. Rep. 2, 73–84. doi:10.1007/s43154-020-00042-1

Veiga, G., Pires, J. N., and Nilsson, K. (2009). Experiments with service-oriented architectures for industrial robotic cells programming. Robotics Computer-Integrated Manuf. 25, 746–755. doi:10.1016/j.rcim.2008.09.001

Vossel, M., Strathen, B., Kasparick, M., Müller, M., Radermacher, K., Fuente, M. de L., et al. (2017). “Integration of robotic applications in open and safe medical device IT networks using IEEE 11073 SDC,” in EPiC series in Health sciences (England: EasyChair), 254–257. doi:10.29007/fmqx

Ward, R. C., Lourenco, A. P., and Mainiero, M. B. (2019). Ultrasound-guided breast cancer cryoablation. Am. J. Roentgenol. 213, 716–722. doi:10.2214/AJR.19.21329

Keywords: surgical robotics, robotic biopsy, image-guided robot, collaborative robotics, IEEE 11073 SDC

Citation: Berger J, Unger M, Keller J, Reich CM, Neumuth T and Melzer A (2022) Design and validation of a medical robotic device system to control two collaborative robots for ultrasound-guided needle insertions. Front. Robot. AI 9:875845. doi: 10.3389/frobt.2022.875845

Received: 14 February 2022; Accepted: 31 August 2022;

Published: 28 September 2022.

Edited by:

Kim Mathiassen, Norwegian Defence Research Establishment, Norway

Reviewed by:

Marina Carbone, University of Pisa, Italy

Copyright © 2022 Berger, Unger, Keller, Reich, Neumuth and Melzer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andreas Melzer, andreas.melzer@medizin.uni-leipzig.de
