- 1Department of Electrical and Computer Engineering, Mississippi State University, Mississippi State, MS, United States

- 2Department of Electrical Engineering, National Taipei University, and Tainan National University of the Arts, Taipei, Taiwan

- 3Department of Civil and Mechanical Engineering, Purdue University Fort Wayne, Fort Wayne, IN, United States

With the introduction of autonomy into the precision agriculture process, environmental exploration, disaster response, and other fields, one of the global demands is to navigate autonomous vehicles to completely cover entire unknown environments. In the previous complete coverage path planning (CCPP) research, however, autonomous vehicles need to consider mapping, obstacle avoidance, and route planning simultaneously during operating in the workspace, which results in an extremely complicated and computationally expensive navigation system. In this study, a new framework is developed in light of a hierarchical manner with the obtained environmental information and gradually solving navigation problems layer by layer, consisting of environmental mapping, path generation, CCPP, and dynamic obstacle avoidance. The first layer based on satellite images utilizes a deep learning method to generate the CCPP trajectory through the position of the autonomous vehicle. In the second layer, an obstacle fusion paradigm in the map is developed based on the unmanned aerial vehicle (UAV) onboard sensors. A nature-inspired algorithm is adopted for obstacle avoidance and CCPP re-joint. Equipped with the onboard LIDAR equipment, autonomous vehicles, in the third layer, dynamically avoid moving obstacles. Simulated experiments validate the effectiveness and robustness of the proposed framework.

1 Introduction

In real-world applications such as environmental exploration (Rose and Chilvers, 2018), environmental sensing (Stolfi et al., 2021) and disaster response (Carrillo-Zapata et al., 2020), and other autonomous vehicle applications such as agricultural harvesting and forest surveillance, prospecting, search and rescue vehicles, concurrent complete coverage path planning (CCPP), and mapping are needed to navigate a vehicle to cover every part of the terrain in unknown environments (Wang et al., 2019; Iqbal et al., 2020; Cèsar-Tondreau et al., 2021; Meng, 2021). In previous CCPP research, the vehicle needs to concurrently consider mapping, obstacle avoidance, and route planning intractably while traversing in a workspace, which makes the entire navigation system fairly complicated and computationally expensive (Lee et al., 2014; Poonawala and Spong, 2017; Niyaz et al., 2019; Jiang et al., 2020). Particularly, in real-time navigation, re-planning with unforeseen moving obstacles may be computationally expensive. This study proposes a new framework that tackles issues of environment mapping, path generation, CCPP, and dynamic obstacle avoidance in a hierarchical manner.

1.1 Related Work

For decades, CCPP has undergone extensive research, and many algorithms have emerged, such as the bio-inspired neural network (BNN) approach, the Boustrophedon Cellular Decomposition (BCD) method, and the deep reinforcement learning approach (DRL). Luo and Yang (2008) developed the bio-inspired neural network (BNN) method to navigate robots to perform CCPP while avoiding obstacles within dynamic environments in real time (Zhu et al., 2017). The robot is attracted to unscanned areas and repelled by the accomplished areas or obstacles based on the neuron activity in the BNN given by the shunting equation (Yang and Luo, 2004; Li et al., 2018). Without any prior knowledge about the environment, the next position of the robot depends on the current position of the robot and neuron activity associated with its current position (Luo et al., 2016). However, it is time- and energy-consuming for the vehicles and requires high computing resources to process fine-resolution mapping (Sun et al., 2018). Unlike the BNN approach, the boundary representation method that defines the workspace is adopted by the Boustrophedon Cellular Decomposition (BCD) method and the deep reinforcement learning approach (DRL). The BCD method is proposed by Acar and Choset (2002), which decomposes the environment into many line scan partitions and is explored through a back-and-forth path (BFP) in the same direction. The BCD is an effective CCPP method with more diverse, non-polygonal obstacles in workspace. In trapezoidal decomposition as a cell, it is covered in back-and-forth patterns. For a complex configuration space with irregular-shaped obstacles, BCD needs to construct a graph that represents the adjacency connections of the cells in the boustrophedon decomposition. Therefore, a deep leaning-based method may promote it to a more efficient CCPP method (Sünderhauf et al., 2018; Valiente et al., 2020; Rawashdeh et al., 2021). Similarly, Nasirian et al. (2021) utilized traditional graph theory to segment the workspace and proposed a deep reinforcement learning approach to solve the CCPP problem in the complex workspace. However, the most common shape of the workspace is represented by polygons. As irregular areas of non-convex polygons, they can still be decomposed into multiple convex polygons (Li et al., 2011). Thus, the representation of polygons is also adopted in this study to express most workspace that needs to be explored. Such a method simplifies the complex environments and solves the covering irregularity for vehicles (Quin et al., 2021).

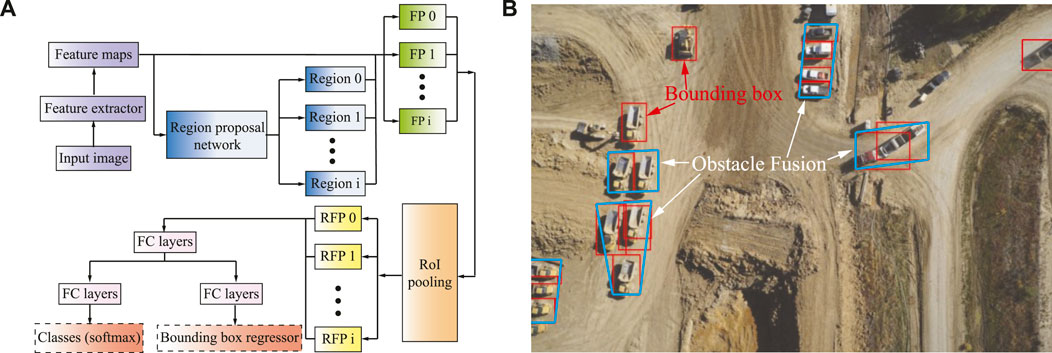

Faster R-CNN originated from R-CNN, and Fast CNN uses a unified neural network (NN) for object detection shown in Figure 4A. The faster R-CNN avoids using selective search, which accelerates region selection and further reduces computational costs. The faster R-CNN detector is mainly composed of a region proposal network (RPN), which generates region proposals, and a network that uses these generated feature patches (FP) for object detection. The region of interest (ROI) pooling layer is used to resize the feature patch (RFP), finally concatenated with a set of fully connected (FC) layers in our study. The two fully connected NN layers are utilized to refine the location of the bounding box and classify the objects. Faster R-CNN effectively uses the bounding box in our studies to identify and locate vehicles and obstacles in the images. This is also applied to the map obtained from farms, search, and rescue scenes to distinguish the vehicles, machines, and human beings on the image.

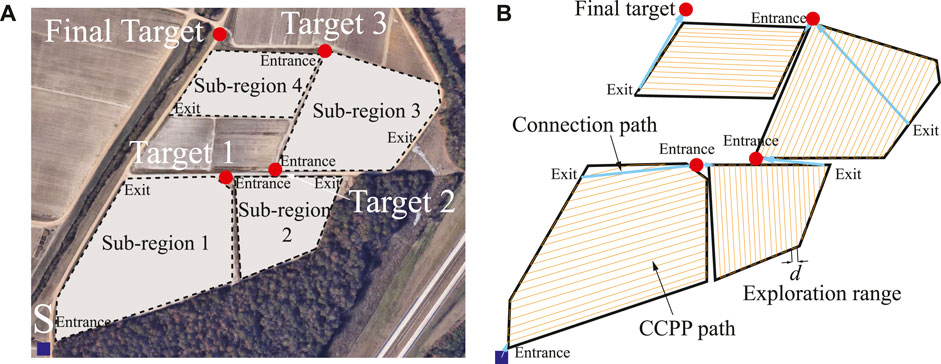

Although the above-mentioned CCPP approaches have achieved remarkable results, such approaches may still be sub-optimal when the starting and target positions required by the vehicle are included in the path. Especially for multiple sub-region exploration tasks shown in Figure 1A, the task is considered continuous to explore the four sub-regions, and the starting point of the next sub-region to be explored is the target point of the last sub-region as shown by the red circles in Figure 1. The selection of intermediate target points for multiple polygonal exploration areas is still an open problem because it needs to consider the shape and relative position of each sub-region, as well as the entrance and exit of the exploration area (Graves and Chakraborty, 2018). For simplicity, the entrances of the next sub-region are selected as target points here. The connection path length from the starting point to the target point should be considered, as shown in the blue lines in Figure 1B. In this case, ignoring the connection path may increase the complete path length of the overall exploration task (Xie et al., 2019). Thus, it is vital to consider the starting and target points of the vehicle, including the exploration task, and obtain a shorter path that effectively utilizes the limited onboard resources. Another challenging problem that arises in CCPP is obstacle avoidance (An et al., 2018; Wang et al., 2021). Based on the excellent optimization and search capabilities of nature-inspired algorithms, researchers have recently explored many nature-inspired computational approaches to solve vehicle collision-free navigation problems (Deng et al., 2016; Ewerton et al., 2019; Lei et al., 2019, 2021; Segato et al., 2019). For instance, a hybrid fireworks algorithm with LIDAR-based local navigation was developed by Lei et al. (2020a), capable of generating short collision-free trajectories in unstructured environments. Zhou et al. (2019) developed a modified firefly algorithm with the self-adaptive step factor to avoid the premature and improve the operational efficiency of autonomous vehicles. Lei et al. (2020b) proposed a graph-based model integrated with ant colony optimization (ACO) to navigate the robot under the robot’s kinematics constraints. Xiong et al. (2021) further improved ACO using the time Taboo strategy to improve the algorithm convergence speed and global search ability in a dynamic environment. Cèsar-Tondreau et al. (2021) proposed a human-demonstrated navigation system, which integrates the behavioral cloning model into an off-the-shelf navigation stack.

FIGURE 1. (A) Illustration of multiple sub-regions exploration task. (B) The entire CCPP trajectory of multiple sub-regions with connection paths.

1.2 Proposed Framework and Original Contributions

This study proposes a progressive three-layer framework for the CCPP navigation of autonomous vehicles. Initially, in the first layer, a new type of deep learning-based complete coverage path generation method is developed to generate complete coverage trajectories without considering obstacles. A feature learning-enabled fully convolutional deep neural network (FCNN) model is developed to identify the edges of the workspace to be explored, in combination with the starting and target positions of the vehicle to estimate waypoints given an occupancy grid map and generate the CCPP paths. The generated paths are references to guide the vehicle in the following layers to reset and continue CCPP with obstacle avoidance once traversing in the vicinity of obstacles, which improves the computational efficiency of vehicle re-planning.

In the second layer, the obstacles in the environment are considered in this stage. A nature-inspired path planning method is proposed to perform autonomous navigation of vehicles in the environment. Particularly, the vehicle deeply re-plans when it traverses in the vicinity of obstacles. In this study, the Bat algorithm is utilized to plan a collision-free trajectory in light of the size and shape of the obstacles. Once the vehicle completes the re-planning near the obstacles, a new re-joint mechanism is developed to enable the vehicle to re-join complete coverage trajectories. Additionally, an environment-based obstacle approximation and fusion paradigm is developed using image processing of feature extraction. Based on the proposed obstacle approximation and fusion method and the nature-inspired path planning method integrated with the re-joint mechanism, the autonomous vehicle takes less computational effort for optimal path planning on the map populated with obstacles.

Furthermore, a reactive local navigator in the third layer is developed to dynamically update the path and map in real time, so as to avoid moving obstacles and unknown obstacles in the dynamical environment. It dynamically adjusts the speed and direction based on onboard LIDAR sensors to navigate autonomous vehicles locally, thereby benefiting obstacle avoidance and safety assurance.

Overall, the framework composed of three layers advances accurately and is efficiently based on the environmental information layer by layer. Specifically, in the first layer, only the satellite images are needed to provide the size and shape of the searching area and the vehicle’s initial and final positions. In the second layer, the images obtained from the unmanned aerial vehicle (UAV) are required to gather detailed information of the obstacles in the environment, such as minecarts, planters, and vehicles. The third layer is based on onboard LIDAR sensors, used for real-time local reactive navigation of autonomous vehicles, avoiding moving obstacles, and building maps simultaneously. The contributions of this study are summarized as follows:

1) A hierarchical framework is proposed for the autonomous vehicle CCPP navigation in real-time environments;

2) A deep learning-based complete coverage path generation method is developed to generate complete coverage trajectories without considering obstacles;

3) For the problem of obstacle avoidance, an obstacle fusion paradigm and Bat algorithm-based path re-joint method is proposed;

4) Regarding avoiding dynamic and unknown obstacles in real-time environments, a local reactive navigator is introduced.

The rest of this study is organized as follows: in Section 2, the deep learning-based complete coverage path generation method is addressed. The second layer with regard to the nature-inspired algorithm and re-joint mechanism is explained in Section 3. Section 4 shows the reactive local navigator based on LIDAR sensors, which is the third layer in our proposed framework. Simulation and comparison studies are presented in Section 5. Several important properties of the presented framework are summarized in Section 6.

2 Deep Learning-Based Complete Coverage Path Planning

In the first layer, a deep learning-based method is proposed to generate a path with the starting and end positions while considering the shape of the explored areas for creating the optimal back-and-forth (BFP) coverage trajectories.

2.1 Preliminaries

In this section, the required assumptions are described for the proposed method. The region to be explored is assumed in a 2D environment, and the configuration space ℧ for autonomous vehicle Δ is formulated as

2.2 Search Direction

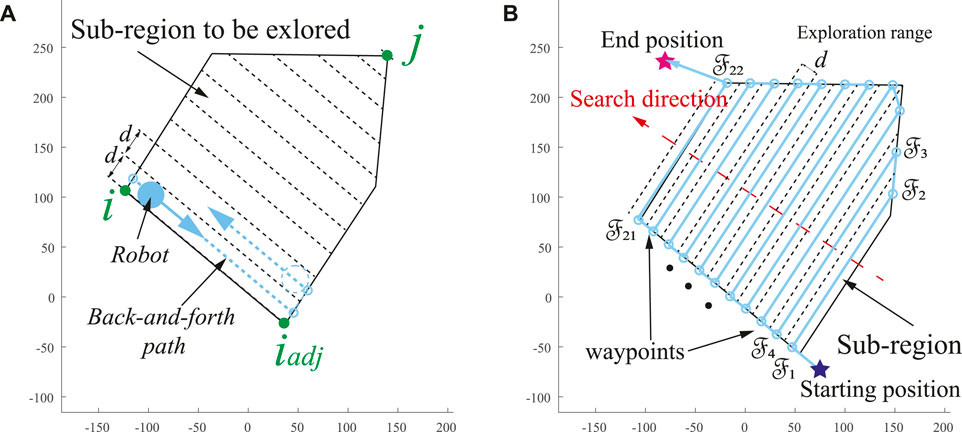

Previous research has mainly focused on the CCPP exploration in the workspace to be explored while ignoring the vehicle’s starting and end positions in real-world scenarios. However, based on energy optimization and constraint considerations, the entire trajectories need to be considered. Therefore, for multiple edges of the polygons, the starting and end points of the vehicle should be combined to determine the vehicle’s search direction. Meanwhile, in light of the properties of the BFP-based CCPP trajectories, the optimal trajectory lines are parallel to one of the edges of the area (Torres et al., 2016). The procedure of the search direction is developed in Algorithm 1, and the process details are discussed in the following sections. The algorithm requires searching the set of opposite vertex pairs η, such as vertices (i, j) in Figure 2A. One of the vertexes, such as i, finds its adjacent vertex iadj, and the BFP is formed in parallel to the line

FIGURE 2. (A) Construction of BFP path. (B) BFP search direction based on the vehicle’s starting and end position.

Then, the starting and end points of the autonomous vehicle are enclosed in the total length

Algorithm 1. Pseudo-code for search direction.

2.3 Deep Learning-Based Path Generation

Through the obtained BFP path segmentation line, we take the points that are intersections of the segmentation line and the workspace edge as a regression problem, and a fully convolutional deep neural network is utilized to estimate the positions of different points. In light of the turning radius of the vehicle, as shown in Figure 3B, the global CCPP trajectories are predicted by the neural network (NN) from the input image. The input image with resolution M × N is first divided into an IM × IN grid map (Figure 3A). The grid map IM × IN is h times smaller than the input image. Each grid contains h × h pixels, and the confidence probability

FIGURE 3. (A) The occupancy grid map of the workspace. (B) The computation of the CCPP path waypoint location based on vehicle turning radius. (C) Overview of the deep learning architecture.

The fully convolutional neural network (FCN) designed by an input tensor

3 Path Re-Joint and Obstacle Fusion

In the second layer, through the generated deep learning-based coverage paths, the obstacles in the environment are considered.

3.1 Obstacle Detection and Approximation

In the field of autonomous vehicles, map information is highly important, especially for global path planning, which determines the accuracy of the trajectory. However, for complex environments, such as disaster sites, many obstacles are scattered or gathered in various places, which bring safety and computational difficulties to the path planning of autonomous vehicles. When acquiring the disaster area or agricultural field map through drones, we need to retrieve map information to obtain specific locations of obstacles and approximate and merge a large number of obstacles into several large convex obstacles, thereby improving the efficiency of search and exploration tasks. Especially at the disaster site, the rescue time is limited, and it is important to quickly locate and approximate obstacles in the complex environment. Therefore, this section proposes an effective method for obstacle detection, obstacle approximation, and fusion.

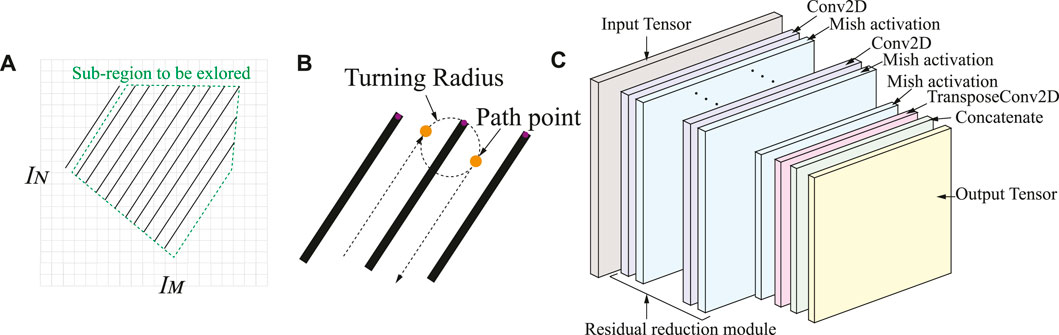

3.1.1 Object Detection With Bounding Box

Many methods have been developed for object detection. The most commonly used methods include single shot detector (SSD), region-based faster convolutional neural network (Faster R-CNN), region-based fully connected network (RCF). When these deep learning CNN models perform object detection and classification, they will obtain a bounding box based on the object’s shape. The bounding box provides us with the specific location of the object in the image and the object classification to be found. In this study, we use Faster R-CNN as our object detection method, which has been proven an efficient and accurate method in many fields (Alzadjali et al., 2021).

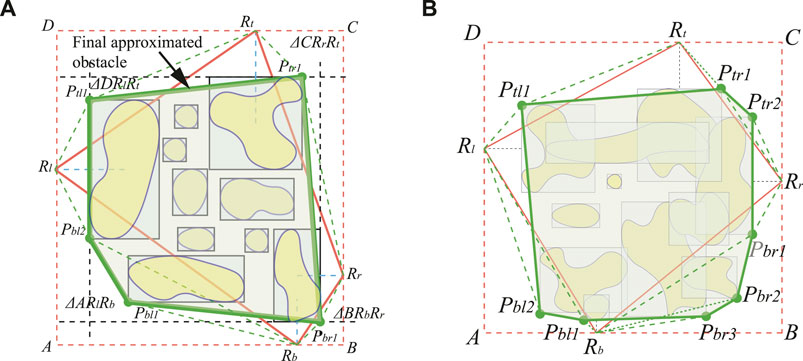

3.1.2 Obstacle Approximation and Fusion

In this section, obstacles are approximated and merged into larger convex-shaped obstacles. Through object detection, we can obtain a large amount of information in the pictures, such as inaccessible and dangerous areas. The formed map is of great assistance to the subsequent search and distribution of ground vehicles. However, excessively unorganized obstacle information on the map will cause computational costs to vehicle path planning, especially as overlapping obstacles and excessive tiny obstacles, which are very close to each other. Therefore, it is essential to integrate multiple tiny obstacles or overlapping obstacles into an approximation of the overall obstacle.

The method of finding the approximated obstacles is to find the obstacles to be integrated in the area. For example, in Figure 4B, the trucks parked in the mining site are considered a greater obstacle in the environment. The red bounding box is generated by the object detection method before the approximation method merges multiple bounding boxes into convex obstacles enclosed in the blue lines. Unmanned aerial vehicles (UAVs) are particularly suitable for searching large-scale farms and dangerous areas (Hassler and Baysal-Gurel, 2019). The detailed information of the scene generated through the photos of the drone’s onboard camera has made great contributions to agriculture, search, and rescue (Alzadjali et al., 2021). By merging a large number of scattered or even overlapping bounding boxes in the image to approximate as a convex obstacle, we need to select a set of suitable points from the bounding box.

FIGURE 4. (A) Schematic illustration of the faster region-based convolutional neural network (Faster R-CNN) object detection method. (B) The obstacle approximation for a real map. Red boxes are the bounding boxes for object detection, and the green boxes are the approximation of the objects.

Assuming that the image has been gathered and recognized, the selection of points that approximates the obstacles will not be internal of the bounding box; thus. only the corners of the bounding box need to be considered. We then define the four reference points as the leftmost

FIGURE 5. The illustration of obstacle fusion. (A) The final obstacle fusion for a set of non-overlapping obstacles. (B) The obstacle fusion for a set of overlapping obstacles\enleadertwodots.

The initial convex polygon

3.2 Bat Algorithm-Based Path Re-Joint

3.2.1 Bat Algorithm

The Bat algorithm (BA) is a nature-inspired population-based meta-heuristic optimization algorithm (Yang, 2010). The search strategy of the BA is inspired by the social behavior of bats and the use of echolocation in foraging and avoiding obstacles. The echolocation process of bats is addressed as follows: 1) All bats apply echolocation to sense the distance between the current position and different sources, in which all bats can distinguish food/prey and background barriers intelligently. 2) Bats automatically adjust the wavelength and frequency of their emitted ultrasonic pulses while foraging. They fly randomly at position

where ζ denotes a randomly generated number within the interval [0, 1] and

In order to achieve a balance between local search and global search capabilities, a random walk procedure is processed in local search under certain probability. The new solution

where ρ is the scaling factor which is confined to the random walk’s step size and ρ ∈ [ − 1, 1] is a random number.

where γ and η are positive constants.

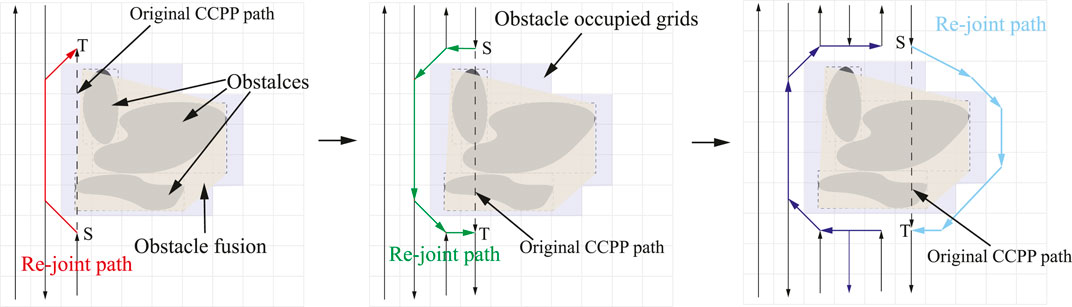

3.2.2 Obstacle Avoidance and Path Re-Joint

In order to fulfill a high degree of autonomy in autonomous vehicle navigation, environment modeling or map construction is necessary to enable autonomous vehicles to generate collision-free trajectories. Therefore, in this section, the BA is utilized to perform autonomous navigation of vehicles in the grid-based environment; especially, the vehicles deeply re-plan when they traverse in the vicinity of obstacles. The grid map is composed of equal-sized grids, referred to as the generated CCPP path in Section 2. It should be noted that the grid occupied as obstacles is an inaccessible area in Figure 6. When an obstacle is presented in front of the vehicle, the current grid is defined as the initial point

Each point is defined by its grid coordinates (x, y), and the center of the grid pixel is regarded as a grid point. Path length is defined by the sum of the Euclidean distance between two adjacent points on the trajectory:

where

Algorithm 2. Procedure of proposed deep learning-based CCPP.

4 Real-Time Navigation of Autonomous Vehicles

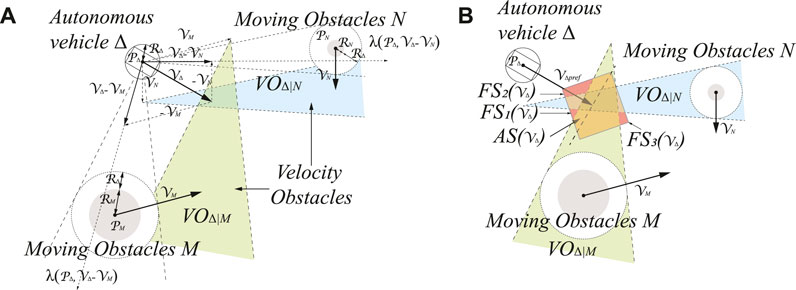

In the third layer, once the coverage trajectories are planned, a velocity-based local reactive navigator with mapping capability is considered to avoid moving obstacles while locally constructing an environmental map. The environment of autonomous vehicle navigation is dynamic, including static obstacles and moving obstacles. The local navigation only reacts to their local environment at any moment in time, aimed to create velocity commands of an autonomous vehicle to traverse towards a destination, such as the dynamic window approach of Fox et al. (1997) and Borenstein et al. (1991). Including a sequence of bread crumbs as local waypoints in the path planning, which decomposes the coverage trajectories into a sequence of segments, makes the model particularly efficient for the environment densely populated by obstacles. In this case, a velocity obstacle approach (VOA) for real-time autonomous vehicle navigation is utilized in this study as our LIDAR-based local navigator (Fiorini and Shiller, 1998). The required information is the other sensed agents’ current position, velocity, and exact shape. The definition of the VOA is defined as follows.

Δ represents the autonomous vehicle that needs to be navigated, and

FIGURE 7. The illustration of velocity obstacle approach (VOA) in our model. (A) Velocity obstacles

Let

As shown in Figure 7A, the

The current autonomous vehicle

Our approach uses both the current position and velocity of other moving obstacles to compute their future collision-free trajectories. Obstacles are also considered in the environments, uncertainty in radius, position, and velocity, as well as dynamics and kinematics of the vehicles. The proposed velocity-based local navigator avoids unforeseen moving obstacles on the planned trajectory, which re-joins the previously planned route after it traverses in the vicinity of the obstacle. Furthermore, each layer takes advantage of the results of the previous layer as a reference to decrease the computational effort.

5 Simulated Experiments and Results

In this section, two simulation studies are conducted to validate the feasibility and merit of the proposed framework. The first simulation investigates the CCPP obtained by the deep learning method. The second simulation, through more detailed images obtained by drones, undertakes obstacle avoidance and re-joint paths. Moreover, onboard LIDAR is utilized to identify moving obstacles in the environment. The parameters of the proposed framework are listed below. In the CCPP deep learning training, 3000 environment maps with polygonal shape areas are utilized for training, with a resolution of 1000 × 1000 and h = 10. The prediction made by the NN for the image is based on the spatial dimension of 100 × 100. Parameters of BA are set as the following:

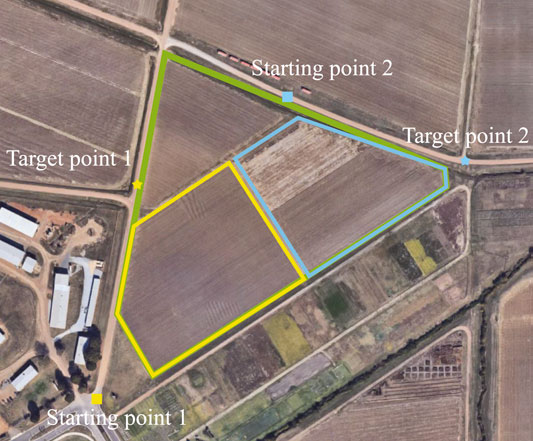

5.1 Simulation and Comparative Studies in CCPP Without Obstacles

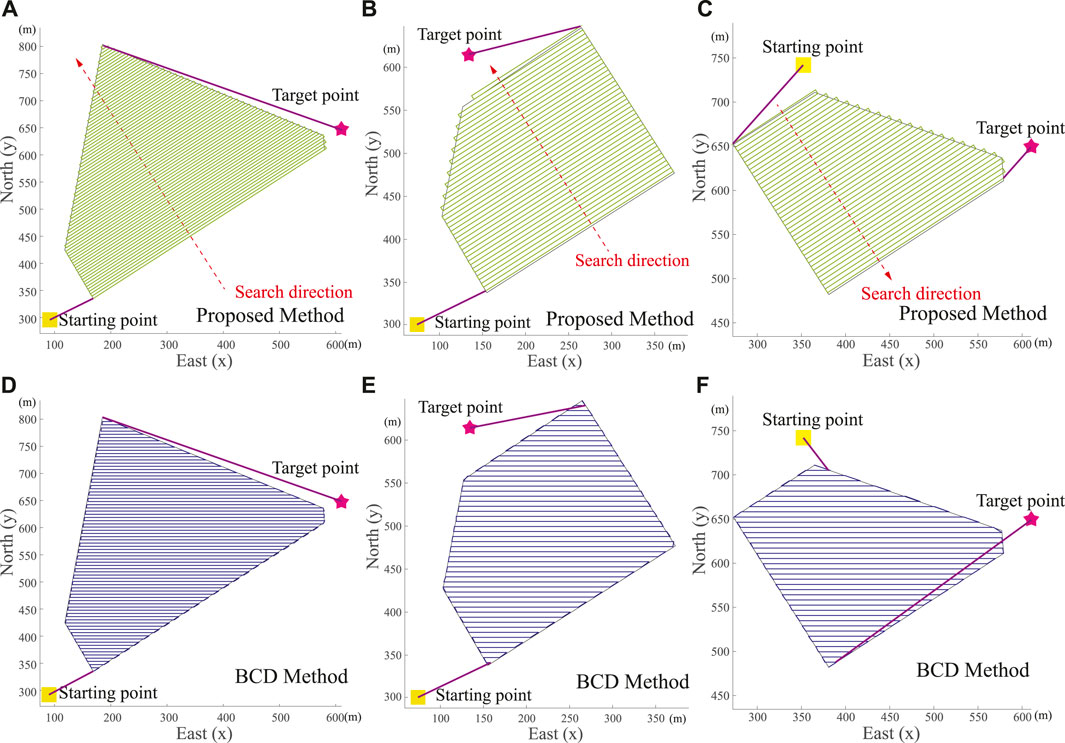

In order to compare the proposed model with others, we compare the proposed CCPP method with the well-known Boustrophedon Cellular Decomposition (BCD) method (Acar and Choset, 2002). In this section, the map is a satellite image from the North Farm of Mississippi State University as shown in Figure 8. The starting and target points are randomly selected. Different shapes of the targeted areas are selected to perform coverage searches. The edges of the targeted areas as three scenarios are highlighted in yellow, blue, and green in Figure 8. The starting and target points are represented by squares and stars, respectively. The exploration range d of the autonomous vehicle is set as 5 m. The shape of the targeted areas, the starting and target positions of the autonomous vehicle are considered. Then, the directions of the vehicle’s search of the proposed CCPP in these three scenarios are achieved in Figures 9A–C, respectively.

FIGURE 8. Real-world satellite images taken from Google Maps. Three targeted areas with specific starting and target points are assigned to CCPP (image from Mississippi State University North Farm).

FIGURE 9. Real-world scenarios of autonomous vehicle CCPP trajectories in Figure 8. (A–C) Trajectories generated by proposed CCPP method. (D–F) Trajectories generated by Boustrophedon Cellular Decomposition (BCD) method.

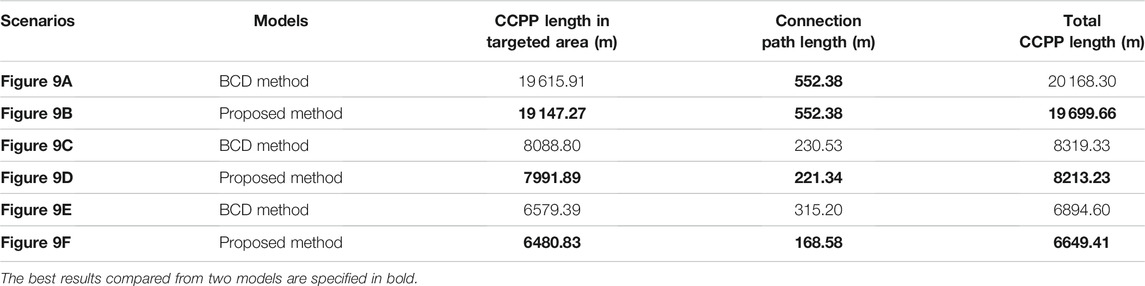

In three scenarios, the proposed CCPP method has a shorter path length regardless of the coverage path within the targeted areas, the path connecting the starting and target points, and the final total path. The trajectories of the proposed CCPP method are shown in Figures 9A–C, respectively. The trajectories of the BCD method are shown in Figures 9D–F, respectively. The comparative studies are summarized in Table 1.

TABLE 1. Performance analysis of the proposed CCPP method with Boustrophedon Cellular Decomposition (BCD) method (Acar and Choset, 2002) under different scenarios.

The average precision (AP) metric is utilized to evaluate the training results. A total of 3000 synthetic images with a resolution of 800 × 800 are utilized for training and h = 8. Then, the network is evaluated with 1000 synthetic images. The network is trained with 200 epochs using Adam optimizer. The learning rate is equal to 3e-4, and the batch size is 16. A tunning point prediction is within the selected area as a true positive (TP), while more predictions fall within the selected range. Only one is counted as TP and all others as false positive (FP). All ground-truths not covered by a prediction are counted as false negatives (FN). Because different confidence thresholds can obtain different recall and precision values and the AP calculation is obtained by the common definition of recall and precision, we change the threshold value from 0 to 1 with step size 0.1. Multiple results obtained by modifying the threshold show that recall and precision are inversely proportional. The final confidence threshold is set as 0.9. At a distance range of 8 pixels, the average precision equals 0.9735.

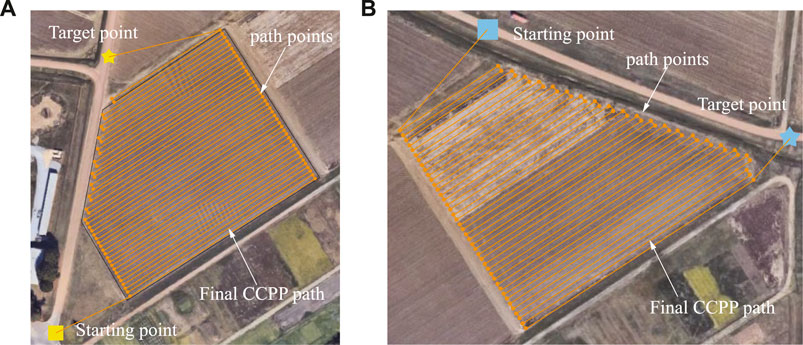

Consequently, through the deep learning method, the turning points of the autonomous vehicle are generated, and the final CCPP paths are obtained, as shown in Figures 10A,B. The neural network training and testing procedure are similar to the Deepway model (Mazzia et al., 2021). However, the Deepway model relies only on identifying row-based crops to manually sort the order of waypoints that generates the final CCPP result. It remarkably limits the usage scenarios of the model and requires additional labor time to sort the waypoints. Our proposed model extends the range of usage to random environments with arbitrary shape search areas and considers the relative positions of the autonomous vehicle to obtain the optimal CCPP path, as shown in Figure 10.

FIGURE 10. Illustration of the final trajectory in light of the deep learning-based CCPP. (A) The final deep learning-based CCPP path regarding the targeted area in Figure 9B. (B) The final deep learning-based CCPP path regarding the targeted area in Figure 9C.

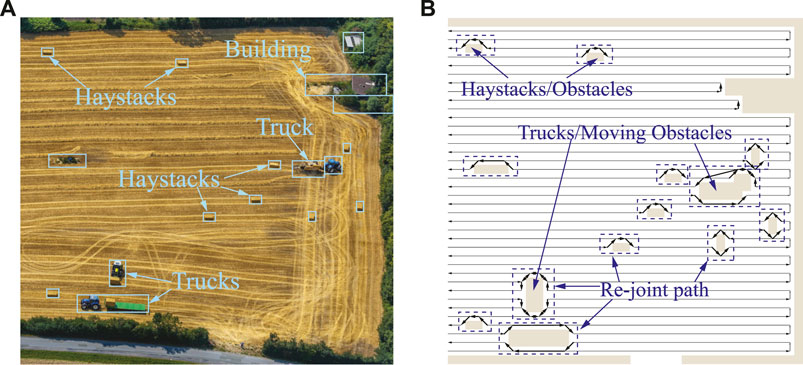

5.2 CCPP Amid Stationary and Dynamic Obstacles

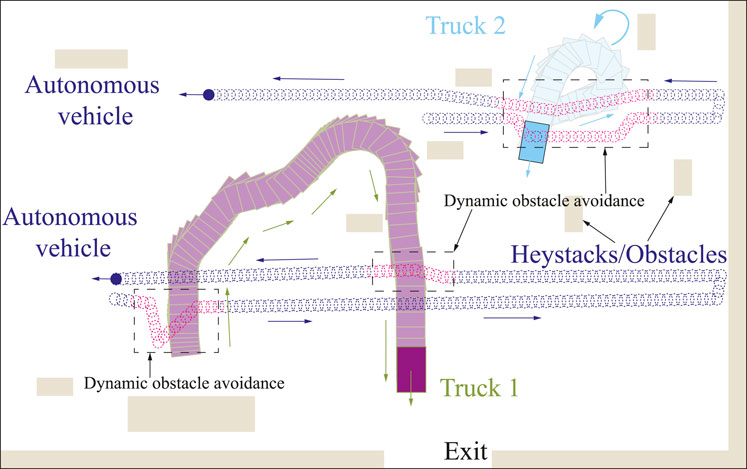

In this section, simulation studies are carried out to validate the second and third layers of the proposed framework, utilized for CCPP re-joint and obstacle avoidance in environments with stationary and dynamic obstacles. Due to the relatively large environment, in order to better show the re-joining path of the autonomous vehicle, a part of the map is truncated. More detailed information of the images is obtained from the drones, as shown in Figure 11A, in which haystacks and trucks are detected as obstacles. Obstacles are then approximated and merged into a grid-based map. The CCPP with obstacle avoidance function uses the CCPP path obtained in the first layer as a reference to decrease the computational effort. The proposed obstacle avoidance method based on the BA algorithm will only be triggered when an obstacle is presented in front of the vehicle. The grid of the current position is taken as the starting point, and the next grid of the CCPP reference path unoccupied by obstacles is regarded as the target point to plan a collision-free trajectory. The proposed method is unnecessarily to recalculate for the complete map, which can flexibly adapt to the alterations of obstacles in the map. The CCPP trajectory of static obstacle avoidance is shown in Figure 11B. When there are unknown and moving obstacles in the environment, such as the trucks in Figure 11, the autonomous vehicles can still rely on the onboard LIDAR to dynamically avoid obstacles and return to the original coverage path to search the entire environment. Two specific operations of the autonomous vehicle avoiding moving trucks are shown in Figure 12. The autonomous vehicle, the first truck, and the second truck are represented by dark blue circles, yellow rectangles, and light blue rectangles, respectively. The autonomous vehicle performs dynamic avoidance twice for the first truck. The vehicle successfully avoids obstacles and returns to the planned CCPP trajectory. The second truck first stops at the original position before the vehicle avoids obstacles according to the obstacle avoidance path planned by the second layer of the framework. During the returning process, the truck starts to move, and the vehicle can still avoid obstacles to the updated truck position. These results prove that the proposed CCPP framework is effective and efficient in coverage navigation under real-world applications.

FIGURE 11. (A) Real-world images taken from UAVs. (B) Re-joint and collision-free CCPP trajectories.

FIGURE 12. Illustration of autonomous vehicle CCPP navigation with unknown and moving obstacles in the real-world environment. The dashed boxes depict the avoidance of the moving trucks.

6 Conclusion and Future Work

A new framework to tackle issues of environment mapping, path generation, CCPP, and dynamic obstacle avoidance in a hierarchical manner has been proposed. The proposed framework comprises three layers that advance more accurately and efficiently based on environmental information. The framework adopts a layer-by-layer approach with the intention of each layer treating the results of the previous layer as a reference to reduce the computational effort. Simulation studies validated the effectiveness and robustness of the proposed framework. We are working on ROS-based sensor configuration and implementation of this proposed model on an actual mobile robot. Sensors being integrated include five components: a camera, a Hokuyo LIDAR, a differential global positioning system, a digital compass, and an inertial measurement unit.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank the editor-in-chief, the associate editor, and the reviewers for their valuable comments and suggestions, which improved the manuscript.

References

Acar, E. U., and Choset, H. (2002). Sensor-based Coverage of Unknown Environments: Incremental Construction of morse Decompositions. Int. J. Robotics Res. 21, 345–366. doi:10.1177/027836402320556368

Alzadjali, A., Alali, M. H., Sivakumar, A. N. V., Deogun, J. S., Scott, S., Schnable, J. C., et al. (2021). Maize Tassel Detection from UAV Imagery Using Deep Learning. Front. Robotics AI 8, 136. doi:10.3389/frobt.2021.600410

An, V., Qu, Z., Crosby, F., Roberts, R., and An, V. (2018). A Triangulation-Based Coverage Path Planning. IEEE Trans. Syst. Man, Cybernetics: Syst. 50, 2157–2169.

Arkin, E. M., Fekete, S. P., and Mitchell, J. S. B. (2000). Approximation Algorithms for Lawn Mowing and milling☆☆A Preliminary Version of This Paper Was Entitled "The Lawnmower Problem" and Appears in the Proc. 5th Canad. Conf. Comput. Geom., Waterloo, Canada, 1993, Pp. 461-466. Comput. Geometry 17, 25–50. doi:10.1016/s0925-7721(00)00015-8

Borenstein, J., and Koren, Y. (1991). The Vector Field Histogram-Fast Obstacle Avoidance for mobile Robots. IEEE Trans. Robot. Automat. 7, 278–288. doi:10.1109/70.88137

Carrillo-Zapata, D., Milner, E., Hird, J., Tzoumas, G., Vardanega, P. J., Sooriyabandara, M., et al. (2020). Mutual Shaping in Swarm Robotics: User Studies in Fire and rescue, Storage Organization, and Bridge Inspection. Front. Robot. AI 7, 53. doi:10.3389/frobt.2020.00053

Cèsar-Tondreau, B., Warnell, G., Stump, E., Kochersberger, K., and Waytowich, N. R. (2021). Improving Autonomous Robotic Navigation Using Imitation Learning. Front. Robotics AI 8, 46.

Deng, L., Ma, X., Gu, J., Li, Y., Xu, Z., and Wang, Y. (2016). Artificial Immune Network-Based Multi-Robot Formation Path Planning with Obstacle Avoidance. Int. J. Robotics Automation 31, 233–242. doi:10.2316/journal.206.2016.3.206-4746

Ewerton, M., Arenz, O., Maeda, G., Koert, D., Kolev, Z., Takahashi, M., et al. (2019). Learning Trajectory Distributions for Assisted Teleoperation and Path Planning. Front. Robot. AI 6, 89. doi:10.3389/frobt.2019.00089

Fiorini, P., and Shiller, Z. (1998). Motion Planning in Dynamic Environments Using Velocity Obstacles. Int. J. Robotics Res. 17, 760–772. doi:10.1177/027836499801700706

Fox, D., Burgard, W., and Thrun, S. (1997). The Dynamic Window Approach to Collision Avoidance. IEEE Robot. Automat. Mag. 4, 23–33. doi:10.1109/100.580977

Graves, R., and Chakraborty, S. (2018). A Linear Objective Function-Based Heuristic for Robotic Exploration of Unknown Polygonal Environments. Front. Robot. AI 5, 19. doi:10.3389/frobt.2018.00019

Hassler, S. C., and Baysal-Gurel, F. (2019). Unmanned Aircraft System (UAS) Technology and Applications in Agriculture. Agronomy 9, 618. doi:10.3390/agronomy9100618

Iqbal, J., Xu, R., Halloran, H., and Li, C. (2020). Development of a Multi-Purpose Autonomous Differential Drive mobile Robot for Plant Phenotyping and Soil Sensing. Electronics 9, 1550. doi:10.3390/electronics9091550

Jiang, M., Chi, G., Pan, G., Guo, S., and Tan, K. C. (2020). Evolutionary Gait Transfer of Multi-Legged Robots in Complex Terrains. arXiv preprint arXiv:2012.13320.

Lee, S.-M., Kim, H., Myung, H., and Yao, X. (2014). Cooperative Coevolutionary Algorithm-Based Model Predictive Control Guaranteeing Stability of Multirobot Formation. IEEE Trans. Control. Syst. Technol. 23, 37–51.

Lei, T., Luo, C., Ball, J. E., and Bi, Z. (2020a). “A Hybrid Fireworks Algorithm to Navigation and Mapping,” in Handbook of Research on Fireworks Algorithms and Swarm Intelligence (Pennsylvania, United States: IGI Global), 213–232. doi:10.4018/978-1-7998-1659-1.ch010

Lei, T., Luo, C., Ball, J. E., and Rahimi, S. (2020b). “A Graph-Based Ant-like Approach to Optimal Path Planning,” in 2020 IEEE Congress on Evolutionary Computation (CEC), 1–6. doi:10.1109/cec48606.2020.9185628

Lei, T., Luo, C., Jan, G. E., and Fung, K. (2019). “Variable Speed Robot Navigation by an ACO Approach,” in International Conference on Swarm Intelligence (Berlin, Germany: Springer), 232–242. doi:10.1007/978-3-030-26369-0_22

Lei, T., Luo, C., Sellers, T., and Rahimi, S. (2021). A Bat-pigeon Algorithm to Crack Detection-Enabled Autonomous Vehicle Navigation and Mapping. Intell. Syst. Appl. 12, 200053. doi:10.1016/j.iswa.2021.200053

Li, Y., Chen, H., Joo Er, M., and Wang, X. (2011). Coverage Path Planning for UAVs Based on Enhanced Exact Cellular Decomposition Method. Mechatronics 21, 876–885. doi:10.1016/j.mechatronics.2010.10.009

Li, Y., Cui, R., Li, Z., and Xu, D. (2018). Neural Network Approximation Based Near-Optimal Motion Planning with Kinodynamic Constraints Using RRT. IEEE Trans. Ind. Electron. 65, 8718–8729. doi:10.1109/tie.2018.2816000

Luo, C., and Yang, S. X. (2008). A Bioinspired Neural Network for Real-Time Concurrent Map Building and Complete Coverage Robot Navigation in Unknown Environments. IEEE Trans. Neural Netw. 19, 1279–1298. doi:10.1109/tnn.2008.2000394

Luo, C., Yang, S. X., Li, X., and Meng, M. Q.-H. (2016). Neural-dynamics-driven Complete Area Coverage Navigation through Cooperation of Multiple mobile Robots. IEEE Trans. Ind. Electron. 64, 750–760.

Mazzia, V., Salvetti, F., Aghi, D., and Chiaberge, M. (2021). Deepway: A Deep Learning Waypoint Estimator for Global Path Generation. Comput. Electron. Agric. 184, 106091. doi:10.1016/j.compag.2021.106091

Meng, M. Q.-H. (2021). Bridging AI to Robotics via Biomimetics. Biomimetic Intelligence and Robotics 1, 100006. doi:10.1016/j.birob.2021.100006

Nasirian, B., Mehrandezh, M., and Janabi-Sharifi, F. (2021). Efficient Coverage Path Planning for mobile Disinfecting Robots Using Graph-Based Representation of Environment. Front. Robotics AI 8, 4. doi:10.3389/frobt.2021.624333

Niyaz, S., Kuntz, A., Salzman, O., Alterovitz, R., and Srinivasa, S. S. (2019). Optimizing Motion-Planning Problem Setup via Bounded Evaluation with Application to Following Surgical Trajectories. Rep. U S 2019, 1355–1362. doi:10.1109/IROS40897.2019.8968575

Poma, X. S., Riba, E., and Sappa, A. (2020). “Dense Extreme Inception Network: Towards a Robust Cnn Model for Edge Detection,” in IEEE/CVF Winter Conference on Applications of Computer Vision, 1923–1932.

Poonawala, H. A., and Spong, M. W. (2017). Time-optimal Velocity Tracking Control for Differential Drive Robots. Automatica 85, 153–157. doi:10.1016/j.automatica.2017.07.038

Quin, P., Nguyen, D. D. K., Vu, T. L., Alempijevic, A., and Paul, G. (2021). Approaches for Efficiently Detecting Frontier Cells in Robotics Exploration. Front. Robot. AI 8, 1. doi:10.3389/frobt.2021.616470

Rawashdeh, N. A., Bos, J. P., and Abu-Alrub, N. J. (2021). “Drivable Path Detection Using CNN Sensor Fusion for Autonomous Driving in the Snow,” in Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2021. Vol. 11748, 1174806. doi:10.1117/12.2587993

Rose, D. C., and Chilvers, J. (2018). Agriculture 4.0: Broadening Responsible Innovation in an Era of Smart Farming. Front. Sustain. Food Syst. 2, 87. doi:10.3389/fsufs.2018.00087

Segato, A., Pieri, V., Favaro, A., Riva, M., Falini, A., De Momi, E., et al. (2019). Automated Steerable Path Planning for Deep Brain Stimulation Safeguarding Fiber Tracts and Deep gray Matter Nuclei. Front. Robot. AI 6, 70. doi:10.3389/frobt.2019.00070

Stolfi, D. H., Brust, M. R., Danoy, G., and Bouvry, P. (2021). UAV-UGV-UMV Multi-Swarms for Cooperative Surveillance. Front. Robotics AI 8, 5. doi:10.3389/frobt.2021.616950

Sun, B., Zhu, D., Tian, C., and Luo, C. (2018). Complete Coverage Autonomous Underwater Vehicles Path Planning Based on Glasius Bio-Inspired Neural Network Algorithm for Discrete and Centralized Programming. IEEE Trans. Cogn. Dev. Syst. 11, 73–84.

Sünderhauf, N., Brock, O., Scheirer, W., Hadsell, R., Fox, D., Leitner, J., et al. (2018). The Limits and Potentials of Deep Learning for Robotics. Int. J. Robotics Res. 37, 405–420.

Torres, M., Pelta, D. A., Verdegay, J. L., and Torres, J. C. (2016). Coverage Path Planning with Unmanned Aerial Vehicles for 3D Terrain Reconstruction. Expert Syst. Appl. 55, 441–451. doi:10.1016/j.eswa.2016.02.007

Valiente, R., Zaman, M., Fallah, Y. P., and Ozer, S. (2020). “Connected and Autonomous Vehicles in the Deep Learning Era: A Case Study on Computer-Guided Steering,” in Handbook of Pattern Recognition and Computer Vision (Singapore: World Scientific), 365–384. doi:10.1142/9789811211072_0019

Wagner, M. P., and Oppelt, N. (2020). Extracting Agricultural fields from Remote Sensing Imagery Using Graph-Based Growing Contours. Remote Sensing 12, 1205. doi:10.3390/rs12071205

Wang, C., Chi, W., Sun, Y., and Meng, M. Q.-H. (2019). Autonomous Robotic Exploration by Incremental Road Map Construction. IEEE Trans. Automat. Sci. Eng. 16, 1720–1731. doi:10.1109/tase.2019.2894748

Wang, J., Chi, W., Li, C., and Meng, M. Q.-H. (2021). “Efficient Robot Motion Planning Using Bidirectional-Unidirectional RRT Extend Function,” in IEEE Transactions on Automation Science and Engineering. doi:10.1109/tase.2021.3130372

Xie, J., Jin, L., and Garcia Carrillo, L. R. (2019). “Optimal Path Planning for Unmanned Aerial Systems to Cover Multiple Regions,” in AIAA Scitech 2019 Forum, 1794. doi:10.2514/6.2019-1794

Xiong, N., Zhou, X., Yang, X., Xiang, Y., and Ma, J. (2021). Mobile Robot Path Planning Based on Time Taboo Ant colony Optimization in Dynamic Environment. Front. Neurorobot 15, 642733. doi:10.3389/fnbot.2021.642733

Yang, S. X., and Luo, C. (2004). A Neural Network Approach to Complete Coverage Path Planning. IEEE Trans. Syst. Man. Cybern. B 34, 718–724. doi:10.1109/tsmcb.2003.811769

Yang, X.-S. (2010). “A New Metaheuristic Bat-Inspired Algorithm,” in Nature Inspired Cooperative Strategies for Optimization (NICSO 2010) (Berlin, Germany: Springer), 65–74. doi:10.1007/978-3-642-12538-6_6

Zhou, J., Chen, P., Liu, H., Gu, J., Zhang, H., Chen, H., et al. (2019). “Improved Path Planning for mobile Robot Based on Firefly Algorithm,” in 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), 2885–2889. doi:10.1109/robio49542.2019.8961442

Keywords: Deep learning-based path generation, complete coverage path planning, obstacle approximation and fusion, nature-inspired path planning, velocity-based local navigator, re-joint paradigm

Citation: Lei T, Luo C, Jan GE and Bi Z (2022) Deep Learning-Based Complete Coverage Path Planning With Re-Joint and Obstacle Fusion Paradigm. Front. Robot. AI 9:843816. doi: 10.3389/frobt.2022.843816

Received: 27 December 2021; Accepted: 11 February 2022;

Published: 22 March 2022.

Edited by:

Jason Gu, Dalhousie University, CanadaReviewed by:

Chaoqun Wang, Shandong University, ChinaGuoming Li, Iowa State University, United States

Copyright © 2022 Lei, Luo, Jan and Bi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chaomin Luo, Q2hhb21pbi5MdW9AZWNlLm1zc3RhdGUuZWR1

Tingjun Lei

Tingjun Lei Chaomin Luo

Chaomin Luo Gene Eu Jan2

Gene Eu Jan2 Zhuming Bi

Zhuming Bi