- Dipartimento di Ingegneria, Università degli Studi della Campania Luigi Vanvitelli, Aversa, Italy

Modern scenarios in robotics involve human-robot collaboration or robot-robot cooperation in unstructured environments. In human-robot collaboration, the objective is to relieve humans from repetitive and wearing tasks. This is the case of a retail store, where the robot could help a clerk to refill a shelf or an elderly customer to pick an item from an uncomfortable location. In robot-robot cooperation, automated logistics scenarios, such as warehouses, distribution centers and supermarkets, often require repetitive and sequential pick and place tasks that can be executed more efficiently by exchanging objects between robots, provided that they are endowed with object handover ability. Use of a robot for passing objects is justified only if the handover operation is sufficiently intuitive for the involved humans, fluid and natural, with a speed comparable to that typical of a human-human object exchange. The approach proposed in this paper strongly relies on visual and haptic perception combined with suitable algorithms for controlling both robot motion, to allow the robot to adapt to human behavior, and grip force, to ensure a safe handover. The control strategy combines model-based reactive control methods with an event-driven state machine encoding a human-inspired behavior during a handover task, which involves both linear and torsional loads, without requiring explicit learning from human demonstration. Experiments in a supermarket-like environment with humans and robots communicating only through haptic cues demonstrate the relevance of force/tactile feedback in accomplishing handover operations in a collaborative task.

1 Introduction

Recent studies testify to an increasing use of robots in retail environments with a number of objectives: to relieve staff from repetitive tasks with low added value; to reallocate clerks to customer-facing activities; to gather in-store data for real-time inventory to reduce out-of-stock losses (Retail Analytics Council, 2020). Commercial solutions already exist for automated inventory management, e.g., the Bossa Nova 2020, Tally 3.0 by Simberobotics, LoweBot by Fellow Robots or Stockbot by PAL robotics (Bogue, 2019). However, other in-store logistic processes, like product pre-sorting and shelf re-stocking, are still difficult to automate, even though retailers have a strong interest in automating them because of their high costs (Kuhn and Sternbeck, 2013). The most time-consuming task is shelf replenishment, and 50% of that time is devoted to finding the correct slot on the shelf. Only a few scientific contributions exist on this logistic automation problem (Ricardez et al., 2020; Sakai et al., 2020), mainly related to the Future Convenience Store robotic challenge launched by the World Robotic Summit. This is because the re-stocking task involves complex manipulation operations that are still a challenge for a robot. Dexterous manipulation of objects with unknown physical properties is very difficult, especially with simple grippers like parallel jaws, which are the most widespread devices for material handling. Adopting a fixed grasp with a parallel gripper can be very limiting when objects have to be handled in cluttered and narrow spaces. Changing the grasp configuration without re-grasping the object requires the ability to re-orient the object in hand, that is, a pivoting motion about the grasping axis of the parallel gripper. Recent papers demonstrated that such in-hand manipulation abilities allow a robot to autonomously refill a supermarket shelf (Costanzo et al., 2020b; Costanzo et al., 2020c; Costanzo et al., 2021).
Nevertheless, the whole re-stocking process requires execution of additional tasks that cannot be executed by robots, like opening cartons, removing packaging of multiple items before placing them on the shelf, or handling exceptions, like liquid spills. All these operations demand complex cognitive and/or manipulative skills that are still a human prerogative. Therefore, with the current technology a step toward automation of retail logistics can be taken through execution of such processes in a collaborative way, where robots and humans are able to exchange objects, the so-called handover operation. Availability of a robot able to receive objects from the human clerk (H2R operation) can significantly alleviate the human workload, since the robot can easily reach lower or higher shelf layers, which are outside the ergonomic golden zone. The dual robot-to-human operation (R2H) can be very useful in stores to help impaired or elderly people retrieve products from the shelves. Analogously, in fulfilment centers of large on-line stores, robots can retrieve products from shelves and pass them to a human operator who takes care of their packaging. In a more futuristic scenario where robots can fully replace human clerks, the robot-to-robot (R2R) handover operation can be envisaged during the whole in-store logistic process.

The handover task is commonly defined in the robotics community as a joint action between two agents, the giver and the receiver (Ortenzi et al., 2020). It is usually divided into two phases, the pre-handover and the physical handover. In each phase, a number of aspects should be considered. For a detailed review of each aspect, the reader is referred to the survey by Ortenzi et al. (2020); in the following only a short review is presented with the aim to frame the contribution of the present paper within the literature.

The communication mechanism is crucial for initiating the action and for coordinating it once it has started (Strabala et al., 2013). We address only the communication problem during the physical handover phase, and we adopt haptic cues as the sole means of communication between the two agents; even in the R2R handover, the robot controllers do not share any further communication channel. The cues are a simple code based on the interaction force perceived by the agents through tactile sensing at the fingertips. The code assumes that robots are able to measure all components of the force vector, thus the grippers are equipped with force/tactile sensors built in our laboratory (see the experimental setup in Figure 1).

FIGURE 1. Left picture: Experimental setup for the robot-to-human and human-to-robot handover, the frame

Grasp planning is another fundamental problem to tackle for an effective handover execution. Ideally, the giver should grasp the object in such a way that the receiver does not need to perform complex manipulation actions after the handover before using the object, as discussed, e.g., in Aleotti et al. (2014). In an R2H context, the robot should be capable of dexterous operations to hand the object over in a proper pose. Even parallel grippers are able to perform in-hand manipulation actions, as demonstrated by Costanzo et al. (2020a). Both object and gripper pivoting maneuvers are used in this paper to achieve this objective, for instance, choosing an object orientation so as to avoid high torsional loads at the receiver fingertips in case a precision grasp is needed to take the object. This can likely happen in a collaborative packaging task where the human operator has to arrange in a box the items passed by the robot. In the H2R context, as proved by many studies on human behavior, e.g., (Sartori et al., 2011), the giver grasps the object to hand over by considering the final goal of the receiver. If a desired orientation is required, the robot should apply a grasp force suitable to hold the torsional load as well. To the best of our knowledge, no other research deals with handover of objects subject to torsional load.

Still in the H2R operation, visual perception of the object location by the robot is challenging since it has to be performed with the object already grasped by the human, hence it is partially occluded. We adopt a texture-based approach that tracks 3D key points on the object surface detected by an eye-in-hand RGB-D camera. Hence, the robot directly tracks the object rather than the human hand, and no additional markers are needed, differently from the marker-based solutions proposed by Medina et al. (2016) and by Pan et al. (2018). Among the marker-less approaches, recent works are (Nemlekar et al., 2019; Yang et al., 2020; Rosenberger et al., 2021). Each of these works on H2R handover, as well as our paper, uses object and/or human tracking with a different aim. Nemlekar et al. (2019) focus on reaching the handover location by tracking the human skeleton and predicting the so-called Object Transfer Point, Yang et al. (2020) focus on the choice of the grasp orientation based on the human grasp type, while Rosenberger et al. (2021) focus on the choice of a grasp that ensures the safety of the human using eye-in-hand vision. Our approach, instead, aims at reaching a grasp selected beforehand based on the task that the robot has to perform after the handover, i.e., placing the object on the shelf, possibly using in-hand manipulation, which imposes specific constraints on the grasp. Differently from the solution by Yang et al. (2020), in our work the grasp motion is not planned but directly executed in a closed-loop fashion; this allows the robot to achieve a speed comparable to the human one while automatically reacting to the giver motion. The approach proposed by Rosenberger et al. (2021) is computationally demanding and it is still not suitable for real-time closed-loop control.
In contrast, our approach requires an object database containing the grasp poses (this is a fair assumption in a supermarket scenario) but enables a fast closed-loop control and can track the object even if the human changes the object pose during the handover execution. The main limit of the solution by Nemlekar et al. (2019) is the need to keep the entire skeleton of the human body within the field of view of the camera to allow the tracking algorithm to work properly; this limit has been overcome by Rosenberger et al. (2021) who can track only parts of the human body as well as our approach which tracks the object only.

A fast visual servoing loop is used in this paper to control the robot receiver motion so that the handover is fluid, which is a primary requirement as discussed by Medina et al. (2016). This way, during the H2R operation the handover location is chosen by the giver and the receiver moves toward it in real time. For instance, this is helpful if the human has a limited workspace and the robot has to reach a handover location compliant with the human constraints. When the robot approaches the object, the initial error between the actual and desired robot location could be high; in the classical algorithm by Marchand et al. (2005) this issue limits the dynamic performance of the visual servoing controller. This paper improves the dynamic performance of the visual controller in terms of speed by using a time-varying reference in the feature space, which keeps the tracking error low and allows higher control gains.
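The idea of a time-varying reference can be illustrated with a minimal sketch. It assumes, purely for illustration, a quintic blending profile between the features seen at the start and the target features; the trajectory actually used in the paper is not reproduced here, and the names `smooth_step`, `reference_features` and the numeric values are hypothetical.

```python
import numpy as np

def smooth_step(tau):
    """Quintic blend from 0 to 1 with zero velocity and acceleration at both ends."""
    tau = np.clip(tau, 0.0, 1.0)
    return 10 * tau**3 - 15 * tau**4 + 6 * tau**5

def reference_features(s_start, s_target, t, duration):
    """Reference that starts at the current features and reaches the target at t = duration."""
    return s_start + smooth_step(t / duration) * (s_target - s_start)

# Hypothetical feature vectors (one 3D point, in meters).
s_start = np.array([0.10, -0.05, 0.60])
s_target = np.array([0.00, 0.00, 0.30])

# At t = 0 the reference coincides with the measured features, so the tracking
# error starts from zero instead of from the full distance to the target.
initial_error = s_start - reference_features(s_start, s_target, 0.0, 4.0)
```

Because the controller tracks a reference that is always close to the measured features, the error it acts on stays small, which is what makes a higher gain tolerable in this kind of scheme.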

During the physical handover phase the object load is shared by the giver and the receiver. A number of studies on the forces exchanged by humans during this phase have been carried out. For instance, Mason and MacKenzie (2005) found that the grip force of both giver and receiver is modulated during the object exchange. The giver decreases its grip force, while the receiver increases it until the load is transferred. Another relevant aspect, highlighted by Chan et al. (2012), is the post-unloading phase, when the giver still applies a grasping force even though its sensed load is almost zero. This means that the giver is the agent responsible for the object safety during the passing. The main contribution of the present paper focuses on these two aspects by proposing grip force modulation algorithms, based solely on load perception, including both linear and torsional loads, for enabling safe load sharing and transfer. The building blocks are the slipping avoidance algorithm originally proposed by Costanzo et al. (2020b) and the in-hand pivoting abilities devised by Costanzo et al. (2020a). In the H2R operation the robot gripper is controlled using the slipping avoidance modality, so that the grip force is automatically computed based on the sensed load and on the slipping velocity estimated through the nonlinear observer proposed by Cavallo et al. (2020). However, the slipping avoidance strategy alone proved ineffective: a communication channel has to be established between the giver and the receiver. We adopt haptic cues applied by the robot to the giver to communicate that the contact with the object is established and that the robot is ready to hold the load. The load transfer takes place safely and effectively without any knowledge of the object inertial properties or of the center of gravity position with respect to the grasping points; only the friction parameters, mainly related to the object surface material, have to be known in advance.
Differently from the approach by Medina et al. (2016), no model learning phase is required.

In the R2H operation the grip release strategy is of paramount importance. Most of the works adopt a simplistic approach, namely, the robot releases the object as soon as a pull by the receiver is detected. However, taking the release decision based on the pulling force only might cause object falls if the receiver is not sharing the load. On the other hand, works deciding the grip release on the load sharing only might be wrong as well since without any pulling force cue there is no clear knowledge of the receiver intention. In this paper, we adopt a strategy based on both indicators, the pulling force and the load share. This greatly enhances the reliability of the R2H handover.
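A minimal sketch of a release decision that uses both indicators is given below. The threshold names and values are hypothetical placeholders, and the load share is computed here simply as the fraction of the initially sensed weight no longer carried by the robot; this is an assumed formulation, not the exact conditions of the paper.

```python
from dataclasses import dataclass

@dataclass
class ReleaseThresholds:
    min_pull_force: float  # N, pulling force that signals the receiver's intention (hypothetical)
    min_load_share: float  # fraction of the weight the receiver must already carry (hypothetical)

def receiver_load_share(initial_load, current_load):
    """Fraction of the initially sensed weight that the giver no longer carries."""
    if initial_load <= 0.0:
        raise ValueError("initial_load must be positive")
    return min(1.0, max(0.0, 1.0 - current_load / initial_load))

def should_release(pull_force, initial_load, current_load, thresholds):
    """Release only if the receiver both pulls and already holds enough of the load."""
    share = receiver_load_share(initial_load, current_load)
    return pull_force >= thresholds.min_pull_force and share >= thresholds.min_load_share

thresholds = ReleaseThresholds(min_pull_force=1.0, min_load_share=0.8)

pull_only = should_release(2.0, initial_load=2.8, current_load=2.7, thresholds=thresholds)
share_only = should_release(0.1, initial_load=2.8, current_load=0.2, thresholds=thresholds)
both = should_release(2.0, initial_load=2.8, current_load=0.2, thresholds=thresholds)
```

In this sketch a pull without load sharing, or load sharing without a pull, leaves the gripper closed; only the combination triggers the release.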

In the R2R operation reliability of the object passing is crucial. Assuming the adoption of parallel grippers only, if the receiver grasps the object in a location too far from the center of gravity, the grip force might exceed the gripper force capability to keep the initial object orientation. In this case the giver should foresee this situation and avoid the grip release. It should communicate to the receiver the need to change the grasp location. We present here a first method to address the problem, based on the anticipatory detection of the slipping velocity. This feature is enabled, in this paper, by a smart use of the slipping detection algorithm by the giver and the controlled sliding by the receiver. Remarkably, in our approach the two robots are independent agents and the controllers are not coordinated but they communicate via haptic cues only.

The rest of the paper is organized as follows. Section 2 describes the reactive control algorithms for visual servoing, slipping avoidance and controlled sliding. Section 3 presents the finite state machines (FSM) handling the whole handover task in the three scenarios R2H, H2R and R2R. Experimental results obtained in an in-store logistics collaborative scenario are described in Section 4, while conclusions are drawn in Section 5.

2 Reactive Controllers

In the handover execution, we assume that the receiver, whoever it is, has to adapt to the giver behavior. For instance, in all operation types, the giver shows the object to the receiver in a zone within its field of view, and the receiver has to reach such location even if the giver moves before giving the object. Even in the R2R case this should be achieved because we assume there is no communication between the two control units, apart from the haptic cues during the physical handover. Moreover, any handover task is characterized by a large degree of uncertainty affecting the handover location, object mass and grasp location. We address these issues by resorting to sensor-based reactive control. The first controller is based on visual feedback and is a fast visual servoing algorithm aimed at tracking the motion of the object carried by the giver. The second controller is based on force/tactile feedback and aims at modulating the grip force so as to avoid object slippage while transferring the load from the giver to the receiver. The two controllers are activated at different times and the scheduling logic is encoded in the state machines described in Section 3.

2.1 Visual Servoing Controller

The objective of the visual servoing controller is to generate a velocity of the receiver hand so as to grasp the object to exchange. This feature is certainly needed in the H2R operation, but it can be useful even in the R2R operation in case the base frames of the two robotic agents are not calibrated, as in our experiments.

During the H2R handover, the human giver presents an item to the robot by putting it within the field of view of the camera. Since the human is not able to keep the object fixed or because he/she would like to move the object before passing it, the handover location is time-variant. We address this problem by using a visual servoing controller that tracks the object and controls the robot velocity toward the object location.

The controller is based on the ViSP library (Marchand et al., 2005) and uses the images acquired from an RGB-D camera (RealSense D435i in Figure 1) to adjust the robot pose with respect to the object and correctly grasp it. We acquire offline a target image that corresponds to the desired grasp configuration, then the algorithm moves the robot to align the current image with the target one. The target image implicitly defines the end-effector pose relative to the object, i.e. the grasp pose. The grasp pose of each object is calibrated in a preliminary phase using the gripper with two calibration fingers specifically designed to achieve the desired accuracy of the grasping location. In this paper, for each object there is only one grasp pose, but the same procedure can be repeated to define multiple grasp poses as proposed by Costanzo et al. (2021) for a pick-and-place task. The images are synthetically represented by 3D feature points matched between the target image and the current image by means of a keypoint matching algorithm available in the ViSP library.
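For readers unfamiliar with visual servoing on 3D point features, the following sketch shows the classical control law in its textbook form: the camera velocity is the negative gain times the pseudo-inverse of the interaction matrix applied to the feature error. It is written with plain NumPy rather than ViSP, and the point coordinates and gain are hypothetical.

```python
import numpy as np

def skew(p):
    """Skew-symmetric matrix [p]x such that skew(p) @ w equals np.cross(p, w)."""
    x, y, z = p
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])

def interaction_matrix(points):
    """Stack the 3x6 blocks [-I, [X]x] relating camera velocity to 3D point velocity."""
    return np.vstack([np.hstack([-np.eye(3), skew(p)]) for p in points])

def camera_velocity(points, targets, gain):
    """Classical law v = -gain * pinv(L) @ (s - s*); returns (vx, vy, vz, wx, wy, wz)."""
    error = (points - targets).reshape(-1)
    return -gain * np.linalg.pinv(interaction_matrix(points)) @ error

# Hypothetical target features (camera frame, meters) and a camera that is
# displaced from the grasp pose by a pure translation.
targets = np.array([[0.05, 0.00, 0.30],
                    [-0.05, 0.02, 0.30],
                    [0.00, -0.04, 0.32],
                    [0.03, 0.03, 0.28]])
offset = np.array([0.02, -0.01, 0.10])
points = targets + offset

v = camera_velocity(points, targets, gain=2.0)
```

With a pure translational displacement the commanded velocity is a pure translation along the offset, scaled by the gain, so the feature error decays exponentially.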

The visual servoing algorithm minimizes the error between the target features

where

The algorithm minimizes the error (Eq. 1) by controlling the camera linear and angular velocity with the following law

where

Commonly,

being

The homogeneous transformation matrix

We adopt the Ceres solver to solve this optimization problem and, to ensure that

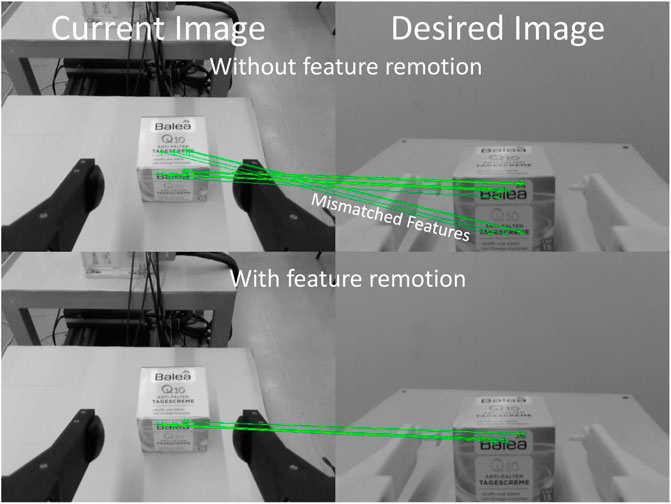

The keypoint matching algorithm of the ViSP library is used to find only the initial features

FIGURE 2. Example of matched features without (top) and with (bottom) the feature removing algorithm. The images on the right show the 3D printed calibration fingers mounted on the gripper grasping the object in the desired location.
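The feature removing step compared in Figure 2 can be sketched as an iterative loop: fit a rigid transformation between the initial and current 3D features, and while the largest residual exceeds a threshold, drop only that single feature and fit again. The sketch below swaps in a closed-form SVD (Kabsch) fit in place of the Ceres-based optimization, and the threshold, point set and function names are hypothetical.

```python
import numpy as np

def fit_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~ R @ src + t (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def remove_mismatches(src, dst, threshold, min_features=4):
    """Drop one worst-residual feature per iteration until all residuals are below threshold."""
    keep = np.arange(len(src))
    while True:
        R, t = fit_rigid_transform(src[keep], dst[keep])
        residuals = np.linalg.norm(dst[keep] - (src[keep] @ R.T + t), axis=1)
        worst = int(np.argmax(residuals))
        if residuals[worst] <= threshold or len(keep) <= min_features:
            return keep, R, t
        keep = np.delete(keep, worst)

# Hypothetical data: 22 features on a small object, two of them badly mismatched.
rng = np.random.default_rng(0)
src = rng.uniform(-0.02, 0.02, size=(22, 3))
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.01, 0.02, 0.30])
dst = src @ R_true.T + t_true + rng.normal(0.0, 1e-4, size=src.shape)
dst[3] += np.array([0.4, 0.0, 0.0])    # mismatched feature
dst[11] += np.array([0.0, -0.4, 0.0])  # mismatched feature

keep, R_est, t_est = remove_mismatches(src, dst, threshold=0.002)
```

Removing a single feature per iteration, instead of every feature above the threshold at once, avoids discarding good matches whose residual is inflated only because a gross mismatch is distorting the fit.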

If condition (Eq. 7) is detected, our algorithm does not remove all the features that meet the condition, but it removes only the feature with the highest residual and reiterates the optimization algorithm by solving (Eq. 6) again. This is helpful because in the next iteration, without the removed feature, the optimization algorithm will find a solution with a lower residual on the other features and the condition (Eq. 7) may not be satisfied anymore; in this case the algorithm is stopped. In the end, all the mismatched features are removed (see the bottom picture of Figure 2). The accuracy achieved by the algorithm is relevant to the successful execution of the task by the robot after the handover. For instance, if the robot needs to execute a pivoting maneuver, i.e., let the object rotate in-hand so as to reach a vertical orientation, the grasping point needs to be above the CoG, otherwise the object would not be vertical at the end of the maneuver. Based on our experience, we estimate that the grasping point should be in a 2 mm ball located on the vertical line above the CoG. For this reason, we set

2.2 Grip Force Control

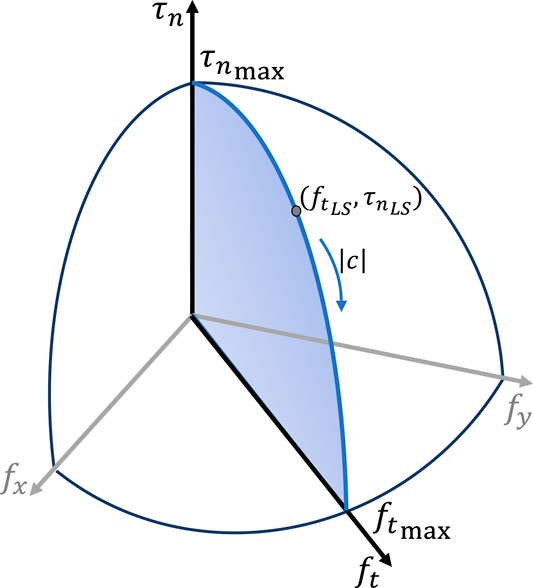

The control algorithm exploits the data provided by force/tactile sensors mounted on the fingertips, which can measure the 6D contact wrench (Costanzo et al., 2019). Each fingertip is a soft hemisphere with a stiffness and a curvature radius smaller than those of the handled object, hence the contact area is approximately a circle with radius ρ and the pressure distribution is axisymmetric. Under these assumptions, the grip force

where µ is the dry friction coefficient, depending on the object surface material, ξ is a parameter depending on the pressure distribution, while δ and γ are parameters depending on the soft pad material only. It is well-known that the radius is related to the grip force as

where ζ is the internal LuGre state (Canudas de Wit et al., 1995) and ω is the estimated slipping velocity. The function

where

FIGURE 3. Limit Surface with maximum tangential

The methods described above are used to design a grasp controller for computing the grip force to avoid object slippage, the so-called slipping avoidance (SA) control modality, used in the handover strategy described in Section 3. The grip force is the superposition of two components, namely,

where

In the pre-handover phase, another control modality can be useful to change the relative orientation between the gripper and the object in a configuration that is more suitable for the exchange. This modality is called pivoting and its aim is to bring and keep the object in a vertical position, i.e., with zero frictional torque. Again, the LS method can show that the grasp force to achieve this objective coincides with the Coulomb friction law, i.e.,

where the value α sets the time constant of the filter and

3 Handover Strategy

The assumptions underpinning the proposed approach and the requirements of the application tackled in this paper are summarized as follows:

• We do not investigate the signaling problem; we assume that the receiver has already agreed to get the object from the giver;

• The receiver already knows which object the giver is passing;

• Both the agents have the capability to withstand the weight of the object;

• The objects to exchange are texture-rich and with a cylinder-like or parallelepiped-like shape;

• A simple handover protocol is known to the agents based on haptic cues;

• We use parallel grippers;

• The handover location is decided by the giver;

• We are not allowed to instrument either the objects or the human, e.g., with ARTags;

• We are allowed to use only sensors on board the robot, with no external sensors;

• In the R2R case no communication between the robot control units is allowed.

Under these assumptions, which seem reasonable in the in-store logistics settings where performance and cost should be well balanced, with only visual and tactile information we perform natural handover operations.

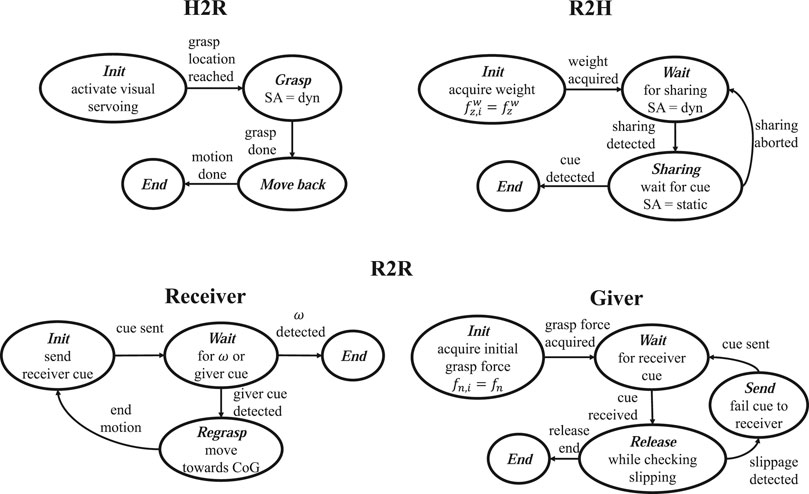

For each handover operation, H2R, R2H and R2R, we use a different strategy. Each one corresponds to an FSM represented in Figure 4 and described hereafter.

3.1 H2R Operation

In the H2R operation, the human holds an item and the robot has to grasp it from the human hand. The strategy is represented in the top-left FSM diagram of Figure 4. In the Init state the robot activates the visual servoing algorithm, described in Section 2.1, to reach the handled object in the grasp configuration corresponding to the target image. During this phase, the human can move and the robotic receiver tracks the object by complying with the dynamic behavior of the giver. When the time varying feature trajectory (Eq. 5) terminates and the visual servoing error norm is below the desired accuracy

3.2 R2H Operation

In the R2H operation, a robot intends to pass a held object to a human. The algorithm is described by the top-right FSM diagram of Figure 4. During the Init state the system is in the pre-handover phase: the gripper is controlled in SA dynamic mode and the robot feels the object weight by means of the sensorized fingertips; the weight is represented by the force component

where the scale factor

where the scale factor

where

3.3 R2R Operation

The handover strategy for the R2R case is executed by two FSMs, one for the giver and one for the receiver, depicted in the bottom diagram of Figure 4. One might think of reusing the strategy devised for the H2R case for the robot receiver and the strategy devised for the R2H case for the robot giver. However, this approach cannot handle the case in which the selected grasp configuration to be achieved via the visual servoing is not compatible with the maximum grip force of the receiving gripper. This might happen if the grasp pose is such that the gravity torsional load requires a grip force higher than the gripper capacity, i.e., the grasp location is too far from the center of gravity. When the giver is a human, this exception is easily handled by the giver exploiting his/her manipulation dexterity and the ability to coordinate visual and tactile feedback to anticipate the failure event. Therefore, in the R2R case we use the tactile feedback of the giver, in charge of the object safety, to anticipate the slipping event that would happen if the receiver grip force, required by the slipping avoidance, exceeded the gripper limit. Exploiting only the a priori knowledge contained in the soft contact model and the tactile perception data, the giver is able to foresee this failure event and reacts to avoid it, still using haptic communication only, as detailed in the following.

The giver in the Init state is grasping an object with the grasp control in SA mode, then it records the grip force

where the threshold

where

The robot receiver in the Init state, with the grasp control in SA mode, sends a haptic cue to the giver, again with the short backward and forward motion. Then, it comes to a Wait state where the following conditions are checked:

where

4 Experiments

The handover algorithms described so far have been tested in a number of experiments carried out on the setup described in Section 4.1, testing all three handover operations H2R, R2H and R2R.

4.1 Experimental Setup

The experimental setup is composed of two robots (Figure 1), a KUKA LBR iiwa 7 equipped with a WSG-50 gripper, and a Yaskawa Motoman SIA5F equipped with a WSG-32 gripper. The finger motion of both grippers is velocity controlled via a ROS interface that communicates with the grippers through a Lua script running on the gripper MCU. Because of the Lua interpreter, the velocity commands can be sent to the grippers only at a rate of 50 Hz; this limits the performance of the grasp force controller. Each gripper is equipped with two SUNTouch sensorized fingertips (Costanzo et al., 2019) that are able to measure the 6D contact wrench. The method to extract the external wrench applied to the object from the single finger contact wrench vectors is explained in (Costanzo et al., 2020b). The sensors communicate with the ROS network via a serial interface at a sampling rate of 500 Hz. The mean error on the measured force is 0.2 N, hence we set the lower bound of the SA algorithm to a conservative value of 0.5 N. The grip force is controlled via a low-level force loop that acts on the finger velocity to track a reference grip force with a typical response time of 0.15 s, hence the time constant of the filter in (Eq. 13) is set to 0.33 s, corresponding to the value of α reported in Table 1 considering the sampling rate of 500 Hz of the digital implementation. The iiwa robot is also equipped with an Intel D435i RGB-D camera, mounted in an eye-in-hand configuration, used for the visual servoing phase.
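The link between a filter time constant and its discrete coefficient can be illustrated as follows. The sketch assumes a first-order exponential smoothing filter of the form y[k] = α y[k-1] + (1 - α) x[k] with α = exp(-Ts/τ); the exact form of the filter in (Eq. 13) and the value of α in Table 1 are not reproduced here, so the names and the convention are assumptions.

```python
import math

def smoothing_factor(time_constant, sample_rate):
    """Coefficient alpha = exp(-Ts / tau) of a first-order discrete low-pass filter."""
    return math.exp(-1.0 / (time_constant * sample_rate))

def low_pass(samples, alpha, initial=0.0):
    """Apply y[k] = alpha * y[k-1] + (1 - alpha) * x[k] to a sequence of samples."""
    y = initial
    filtered = []
    for x in samples:
        y = alpha * y + (1.0 - alpha) * x
        filtered.append(y)
    return filtered

# Time constant of 0.33 s at the 500 Hz sampling rate quoted in the text.
alpha = smoothing_factor(0.33, 500.0)

# Unit step response over 165 samples, i.e., one time constant (0.33 s at 500 Hz).
step_response = low_pass([1.0] * 165, alpha)
```

Under this convention the coefficient is slightly below one, and the step response reaches about 63% of its final value after one time constant.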

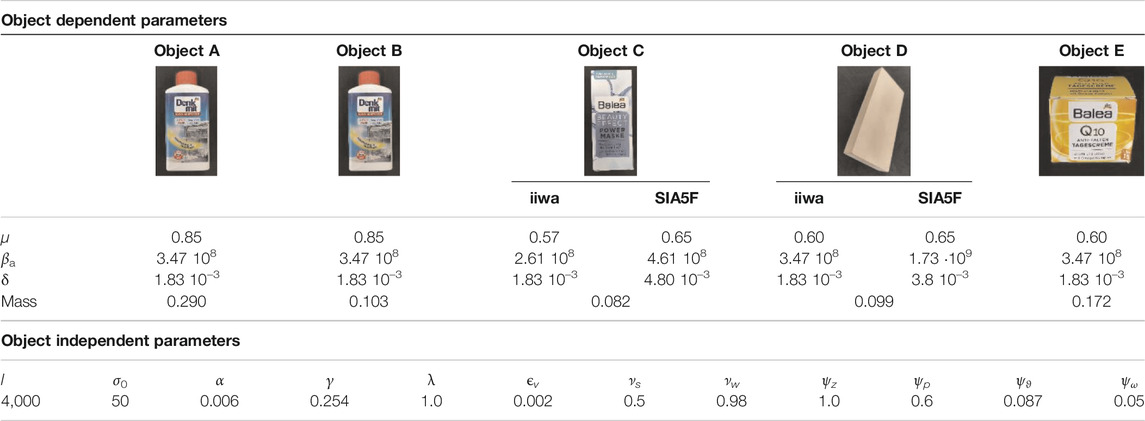

TABLE 1. Parameters of the algorithm (SI units). The friction model parameter μ, δ, and γ appear in Eq. 8 and

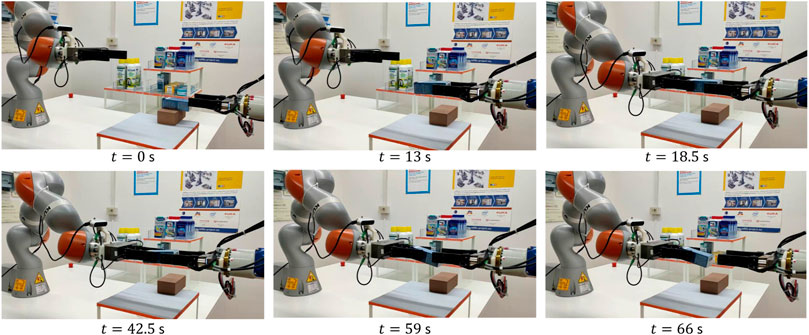

Two sets of experiments have been carried out. The first concerns H2R and R2H handover operations performed in a typical pick-and-place task in a collaborative shelf replenishment scenario of a retail store. The setting consists of shelf layers with a clearance of 15 cm. Each shelf has different facings to place the objects, separated by 5 cm high dividers. A human operator hands an object over to the iiwa robot (H2R), which then places it on a shelf layer. The placing motion is autonomously planned off-line using the method proposed by Costanzo et al. (2021), and the solution found to accomplish the task might include pivoting operations (pivoting the object inside the fingers or rotating the gripper about the grasping axis while keeping the object fixed). The method allows the robot to plan off-line even if the starting robot configuration is likely to be different at run time, since the object is passed by a human and is not picked from a fixed location. After the place task, the robot is asked to re-pick the same object and hand it over to the human (R2H). This sequence has been selected simply because it allows us to test both handover operations with a human.

The second set of experiments concerns the R2R handover operation. The SIA5F robot is commanded to pick an object in a given position and to pass it to the iiwa robot. The SIA5F is controlled according to the R2R Giver FSM, while the iiwa is controlled via the R2R Receiver one. The two robots communicate only via haptic feedback through the protocol defined in the FSMs. The R2R experiments have been carried out with two different objects.

The quantitative results reported in the next sections refer to experiments carried out with three different objects: a plastic bottle full of liquid (Object A), the same bottle but empty (Object B), and a cardboard box (Object C). Moreover, the Supplementary Video S1 shows an extra R2R experiment performed with a resin block (Object D) and an extra H2R experiment carried out with a cardboard cube (Object E). Object D has been used since it is fully rigid and is made of the same material used in the calibration of the tactile sensors, hence the experiment runs in ideal conditions, highlighting details of the physical handover phase. Object E is challenging to hand over, since it is small compared to the robot fingers and the human should present it in a way that depends on the pick-and-place task, in particular on the placing action the robot has to perform. Table 1 shows all the objects used in the experiments with their friction parameters, together with all the parameters necessary to run the algorithms that do not depend on the specific object. Note that different friction parameters have been used for the iiwa and SIA5F robots since the fingers are different.

4.2 H2R and R2H Experimental Results

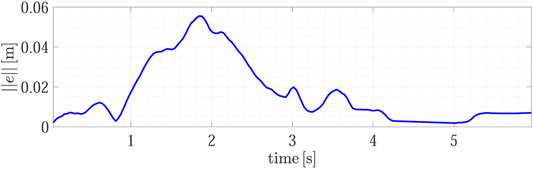

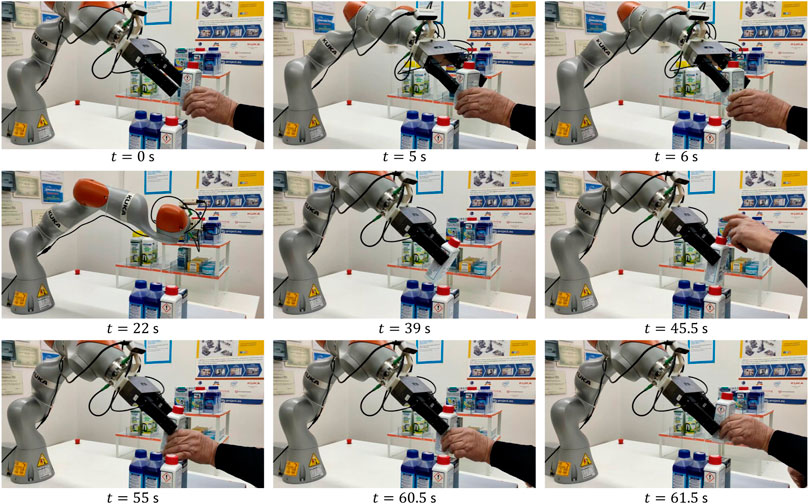

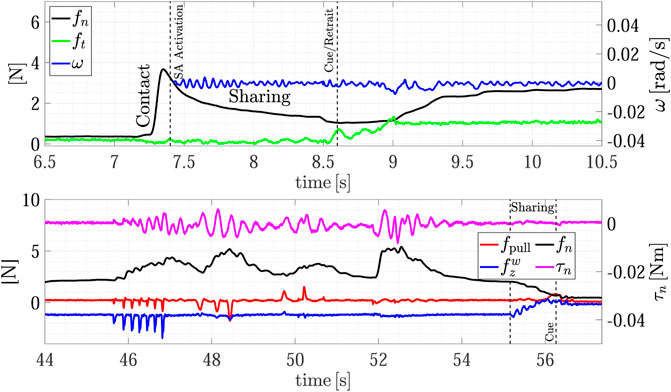

Figure 5 shows the snapshots of the first experiment execution. In the beginning, the human brings Object A inside the field of view of the robot camera and the robot moves toward it by using the visual servoing algorithm (t = 0 s). Before the grasp, the human rotates the object (t = 2 s), changing its pose while the robot is moving to reach it. We intentionally introduced this dynamic change to show the capability of the algorithm to reach the target grasp point (t = 6 s) even in case of rapid motions of the human giving the object to the robot. This feature alleviates the cognitive burden of the giver, who does not need to focus on holding still at the handover location while waiting for the robot. Figure 6 shows the visual servoing error during this phase; the error starts from zero with a bell-like shape thanks to the time-varying reference features

FIGURE 5. First experiment (object A): H2R handover operation (top row), object placing phase (middle row), R2H handover operation (bottom row).

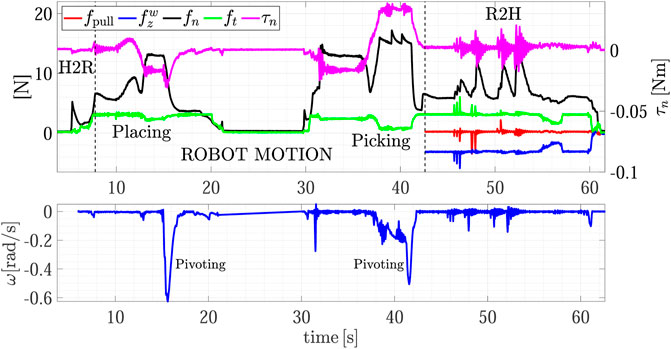

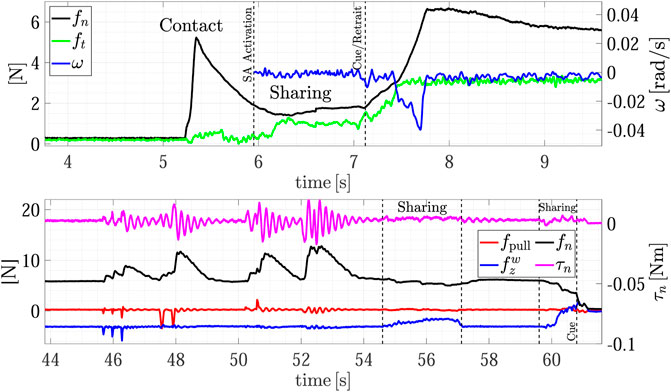

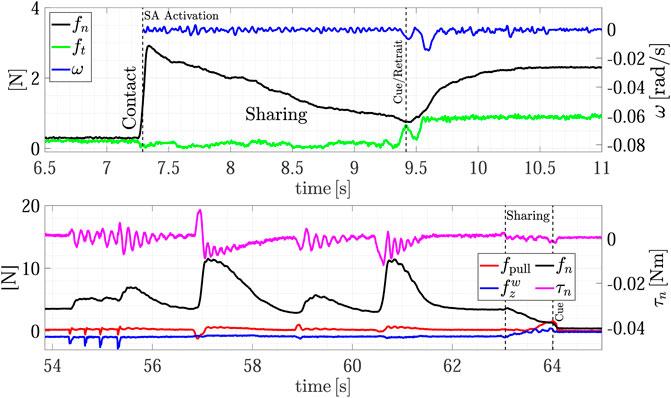

Figure 7 shows the forces and torsional moment as well as the estimated sliding velocity during the whole experiment, while the top plot of Figure 8 shows a detail of the H2R physical handover phase. The first grasp force peak depends on the impact velocity between the fingers and the object, then at t = 6 s the SA mode is activated and the receiver automatically chooses the grasp force. Here the load sharing phase starts, and both the agents hold the object weight. The weight of object A is 290 g, but in the sharing phase (around t = 6.5 s) the robot feels a tangential load

FIGURE 7. First experiment (Object A): forces and torsional moment measured by the robot during the whole task execution (top plot); estimated slipping velocity (bottom).

FIGURE 8. First experiment (Object A): detail of the H2R physical handover phase (top); detail of the R2H physical handover phase (bottom).

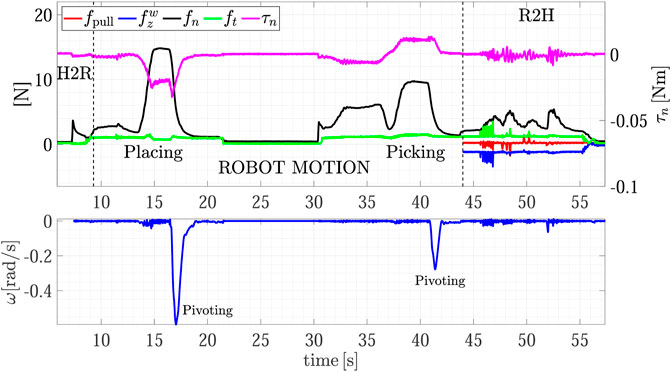

After the H2R phase, the robot moves to place the object on the top shelf from t = 7.5 s to t = 22 s (see also Figure 5). The robot motion causes a variation of the gravity torsional load on the fingertips, which in turn causes the slipping avoidance algorithm to increase the grasp force. As shown in Supplementary Video S1, at the end of the placing action the robot executes an autonomously planned pivoting maneuver to reorient the object into a vertical configuration and place it correctly. This event is visible in the estimated slipping velocity signal (see the bottom plot of Figure 7) at about t = 16 s, where the observer captures the velocity profile corresponding to the pivoting maneuver.

The same experiment also presents the dual case, where the robot takes an object from the shelf and gives it to a human. At about t = 30 s the robot picks the object from the shelf again and presents it to the human operator according to the strategy encoded in the R2H FSM. As shown in Supplementary Video S1, before activating the FSM the robot performs a pivoting maneuver to present the object in a vertical configuration; in this way, all the gravity torque accumulated during the robot motion vanishes (see Figure 7, t = 42 s).

The results of the R2H phase are detailed in the bottom plot of Figure 8. From t = 44 to t = 54 s the FSM is waiting for the Sharing state and counteracts the external disturbances intentionally applied by the human, who repeatedly touches the object held by the robot. The plot shows the two signals used in the FSM, i.e., the pulling force

The same experiment has been repeated with the same bottle, now empty (Object B). It is worth mentioning that no vision system is able to estimate the weight of a non-transparent closed bottle, and without force/tactile sensing it is not possible to distinguish between Object A and Object B. The experiment unfolds exactly as the previous one and the full task is reported in Figure 9. The top plot of Figure 10 shows the detail of the H2R phase. The plot is qualitatively similar to that of the previous experiment, but now the measured tangential force and the resulting grasping force are lower. In particular, at the end of the H2R phase the robot feels a tangential force of about 1 N, which corresponds to the object weight of 103 g. In turn, the grasping force

FIGURE 9. Second experiment (Object B): repetition of the first experiment using an empty bottle; forces and torsional moment measured by the robot during the whole task execution (top plot); estimated slipping velocity (bottom).

FIGURE 10. Second experiment (Object B): detail of the H2R physical handover phase (top); detail of the R2H physical handover phase (bottom).
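The correspondence between object mass and tangential load quoted for Objects A and B can be checked with a one-line conversion. The snippet below is only an arithmetic aid: it assumes standard gravity (9.81 m/s²) and that, once the giver has released the object, the receiver carries the full weight as tangential load; the function name `weight_newton` is illustrative.

```python
G = 9.81  # standard gravity in m/s^2 (assumed value)


def weight_newton(mass_g: float) -> float:
    """Gravity load in newtons for a mass expressed in grams."""
    return mass_g / 1000.0 * G


# Masses reported in the text for the full and the empty bottle.
masses_g = {"Object A (full bottle)": 290.0, "Object B (empty bottle)": 103.0}
for name, mass in masses_g.items():
    print(f"{name}: {weight_newton(mass):.2f} N")
```

The empty bottle gives about 1.01 N, consistent with the tangential force of about 1 N measured at the end of the H2R phase, while the full bottle corresponds to about 2.84 N.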

The third experiment of this subsection involves Object C and is reported in Figure 11. The H2R and R2H handover phases (Figure 12) are very similar to those of the previous experiments and we will not discuss them further. This experiment is presented because of its particular pick-and-place task: the handover grasp configuration is not compatible with the place location due to the tight clearance between two shelves. As shown in Supplementary Video S1, to execute the placing maneuver the robot uses the gripper pivoting ability, again autonomously planned, i.e., the object remains fixed while the gripper rotates about the grasping axis. This happens from t = 20 to t = 25 s (see the estimated sliding velocity in the zoom of the bottom plot of Figure 11). After placing the object, the robot retreats. It then begins a new pick-and-place task to perform the R2H operation. The robot picks the object again and, during its motion, the object hits the facing separator. This is detected by the slipping avoidance algorithm, which estimates a velocity peak at t = 36.4 s and, in turn, increases the grasping force to counteract the generated torsional moment

FIGURE 11. Third experiment (Object C): forces and torque measured by the robot during the whole task execution (top plot); estimated slipping velocity (bottom).

FIGURE 12. Third experiment (Object C): detail of the H2R physical handover phase (top); detail of the R2H physical handover phase (bottom).

4.3 R2R Experimental Result

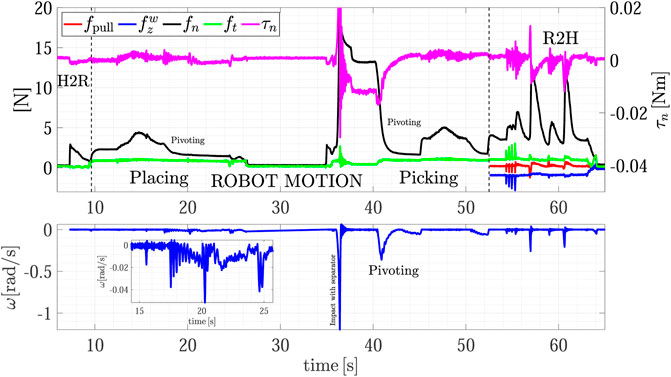

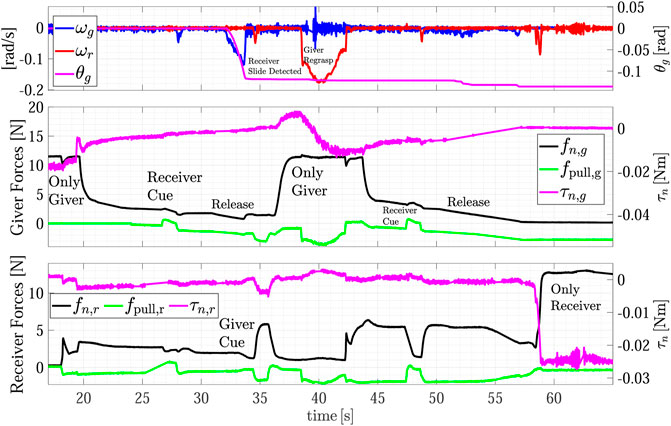

The R2R handover experiment has been carried out with Object C. Figure 13 shows the snapshots of the experiment execution, while the forces and torque as well as the estimated slipping velocities of the giver

FIGURE 14. Fourth experiment (Object C): estimated slipping velocities of the giver and the receiver (top); forces and torque measured by the giver (middle plot); forces and torque measured by the receiver (bottom plot).

After the sliding phase, the receiver goes back to SA mode and, at t = 47 s, it again sends the cue to the giver to grasp the object. This time, during the release phase from t = 50 to t = 55 s, the variation of the virtual sliding angle estimated by the giver

5 Conclusion

The experimental results reported in the paper provide evidence of the importance of haptic perception during handover operations between humans and robots, and also between robots that communicate only through physical interaction. Force/tactile perception enables reactive controllers to modulate the grip force during the physical handover phase, ensuring successful handovers without object slippage. The proposed methods, based on physics models of the soft contact, have been presented in the framework of an in-store logistic collaborative scenario with a set of requirements and assumptions, in particular the use of parallel grippers. Nevertheless, the satisfactory results encourage us to investigate a possible generalization to more complex robotic grippers, which could enlarge the set of objects that can be handled. We presented not only classical experiments of human-to-robot handovers and vice versa, but also a preliminary algorithm for robot-to-robot handover, which we envisage being useful in a future where robots collaborate with each other through simple communication channels, such as haptic cues. The current limitations are the assumption of objects with specific shapes and the required knowledge of the location of the re-grasp point. Overcoming these limitations requires methods to re-plan or learn the re-grasping strategy.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

Conception and design of the reactive control algorithms were mainly done by MC, who coded all the software in C++. The other authors contributed equally to the rest of the paper and its writing, and approved the final version of the manuscript.

Funding

This work has received funding from the European Commission under the H2020 Framework Programme, REFILLS project GA n. 731590.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2021.672995/full#supplementary-material

SUPPLEMENTARY VIDEO S1 | The experiments carried out to demonstrate the R2H, H2R, and R2R handover strategies.

Footnotes

1World Robotic Summit (2021). Future Convenience Store Challenge. Available at: https://worldrobotsummit.org/en/wrs2020/challenge/service/fcsc.html (Accessed April 20, 2021).

2Sameer Agarwal and Keir Mierle and Others (2021). Ceres Solver. Available at: http://ceres-solver.org (Accessed April 20, 2021).

References

Aleotti, J., Micelli, V., and Caselli, S. (2014). An Affordance Sensitive System for Robot to Human Object Handover. Int. J. Soc. Robotics 6, 653–666. doi:10.1007/s12369-014-0241-3

Bogue, R. (2019). Strong Prospects for Robots in Retail. Ir 46, 326–331. doi:10.1108/ir-01-2019-0023

Canudas de Wit, C., Olsson, H., Aström, K. J., and Lischinsky, P. (1995). A New Model for Control of Systems with Friction. IEEE Trans. Automat. Contr. 40, 419–425. doi:10.1109/9.376053

Cavallo, A., Costanzo, M., De Maria, G., and Natale, C. (2020). Modeling and Slipping Control of a Planar Slider. Automatica 115, 108875. doi:10.1016/j.automatica.2020.108875

Chan, W. P., Parker, C. A. C., Van der Loos, H. F. M., and Croft, E. A. (2012). “Grip Forces and Load Forces in Handovers: Implications for Designing Human-Robot Handover Controllers,” in 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boston, MA, March 5–8, 2012, 9–16. doi:10.1145/2157689.2157692

Costanzo, M., De Maria, G., Lettera, G., and Natale, C. (2021). Can Robots Refill a Supermarket Shelf?: Motion Planning and Grasp Control. IEEE Robot. Automat. Mag. 2021, 2–14. doi:10.1109/MRA.2021.3064754

Costanzo, M., De Maria, G., Lettera, G., and Natale, C. (2020a). “Grasp Control for Enhancing Dexterity of Parallel Grippers,” in 2020 IEEE International Conference on Robotics and Automation, Paris, France, May 31–August 31, 2020, 524–530.

Costanzo, M., De Maria, G., Natale, C., and Pirozzi, S. (2019). Design and Calibration of a Force/tactile Sensor for Dexterous Manipulation. Sensors 19, 966. doi:10.3390/s19040966

Costanzo, M., De Maria, G., and Natale, C. (2020b). Two-fingered In-Hand Object Handling Based on Force/tactile Feedback. IEEE Trans. Robot. 36, 157–173. doi:10.1109/tro.2019.2944130

Costanzo, M. (2020). Soft-contact Modeling For In-Hand Manipulation Control and Planning. PhD thesis. Aversa, Italy: Università degli Studi della Campania Luigi Vanvitelli

Costanzo, M., Stelter, S., Natale, C., Pirozzi, S., Bartels, G., Maldonado, A., et al. (2020c). Manipulation Planning and Control for Shelf Replenishment. IEEE Robot. Autom. Lett. 5, 1595–1601. doi:10.1109/LRA.2020.2969179

Endo, S., Pegman, G., Burgin, M., Toumi, T., and Wing, A. M. (2012). “Haptics in Between-Person Object Transfer,” in Haptics: Perception, Devices, Mobility, and Communication. Berlin Heidelberg: Springer, 103–111. doi:10.1007/978-3-642-31401-8_10

Howe, R. D., and Cutkosky, M. R. (1996). Practical Force-Motion Models for Sliding Manipulation. Int. J. Robotics Res. 15, 557–572. doi:10.1177/027836499601500603

Kuhn, H., and Sternbeck, M. G. (2013). Integrative Retail Logistics: An Exploratory Study. Oper. Manag. Res. 6, 2–18. doi:10.1007/s12063-012-0075-9

Marchand, E., Spindler, F., and Chaumette, F. (2005). ViSP for Visual Servoing: a Generic Software Platform With a Wide Class of Robot Control Skills. IEEE Robot. Automat. Mag. 12, 40–52. doi:10.1109/mra.2005.1577023

Mason, A. H., and MacKenzie, C. L. (2005). Grip Forces when Passing an Object to a Partner. Exp. Brain Res. 163, 173–187. doi:10.1007/s00221-004-2157-x

Medina, J. R., Duvallet, F., Karnam, M., and Billard, A. (2016). “A Human-Inspired Controller for Fluid Human-Robot Handovers,” in 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, November 15–17, 2016, 324–331. doi:10.1109/HUMANOIDS.2016.7803296

Nemlekar, H., Dutia, D., and Li, Z. (2019). “Object Transfer Point Estimation for Fluent Human-Robot Handovers,” in 2019 International Conference on Robotics and Automation, Montréal, QC, May 20–24, 2019 (Montréal, QC: ICRA), 2627–2633. doi:10.1109/ICRA.2019.8794008

Ortenzi, V., Cosgun, A., Pardi, T., Chan, W., Croft, E., and Kulic, D. (2020). Object Handovers: a Review for Robotics. arXiv:2007.12952

Pan, M. K. X. J., Croft, E. A., and Niemeyer, G. (2018). “Exploration of Geometry and Forces Occurring within Human-To-Robot Handovers,” in 2018 IEEE Haptics Symposium (HAPTICS), San Francisco, CA, March 25–28, 2018, 327–333. doi:10.1109/HAPTICS.2018.8357196

Retail Analytics Council (2020). Emerging Trends in Retail Robotics. Evanston, IL: Northwestern University, 1–24.

Ricardez, G. A. G., Okada, S., Koganti, N., Yasuda, A., Eljuri, P. M. U., Sano, T., et al. (2020). Restock and Straightening System for Retail Automation Using Compliant and Mobile Manipulation. Adv. Robotics 34, 235–249. doi:10.1080/01691864.2019.1698460

Rosenberger, P., Cosgun, A., Newbury, R., Kwan, J., Ortenzi, V., Corke, P., et al. (2021). Object-independent Human-To-Robot Handovers Using Real Time Robotic Vision. IEEE Robot. Autom. Lett. 6, 17–23. doi:10.1109/LRA.2020.3026970

Sakai, R., Katsumata, S., Miki, T., Yano, T., Wei, W., Okadome, Y., et al. (2020). A Mobile Dual-Arm Manipulation Robot System for Stocking and Disposing of Items in a Convenience Store by Using Universal Vacuum Grippers for Grasping Items. Adv. Robotics 34, 219–234. doi:10.1080/01691864.2019.1705909

Sartori, L., Straulino, E., and Castiello, U. (2011). How Objects Are Grasped: the Interplay between Affordances and End-Goals. PLoS One 6, e25203. doi:10.1371/journal.pone.0025203

Shkulipa, S. A., den Otter, W. K., and Briels, W. J. (2005). Surface Viscosity, Diffusion, and Intermonolayer Friction: Simulating Sheared Amphiphilic Bilayers. Biophysical J. 89, 823–829. doi:10.1529/biophysj.105.062653

Strabala, K. W., Lee, M. K., Dragan, A. D., Forlizzi, J. L., Srinivasa, S., Cakmak, M., et al. (2013). Towards Seamless Human-Robot Handovers. Jhri 2, 112–132. doi:10.5898/JHRI.2.1.Strabala

Xydas, N., and Kao, I. (1999). Modeling of Contact Mechanics and Friction Limit Surfaces for Soft Fingers in Robotics, with Experimental Results. Int. J. Robotics Res. 18, 941–950. doi:10.1177/02783649922066673

Keywords: object handover, tactile sensing, robotic manipulation, human-robot collaboration, cooperative robots

Citation: Costanzo M, De Maria G and Natale C (2021) Handover Control for Human-Robot and Robot-Robot Collaboration. Front. Robot. AI 8:672995. doi: 10.3389/frobt.2021.672995

Received: 26 February 2021; Accepted: 22 April 2021;

Published: 07 May 2021.

Edited by:

Perla Maiolino, University of Oxford, United Kingdom

Reviewed by:

Akansel Cosgun, Honda Research Institute Japan Co., Ltd., Japan

Paramin Neranon, Prince of Songkla University, Thailand

Copyright © 2021 Costanzo, De Maria and Natale. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marco Costanzo, marco.costanzo@unicampania.it
