- Department of Industrial Engineering and Management, Mobile Robotics Laboratory and HRI Laboratory, Ben-Gurion University of the Negev, Be’er Sheva, Israel

Unexpected robot failures are inevitable. We propose to leverage socio-technical relations within the human-robot ecosystem to support adaptable strategies for handling unexpected failures. The Theory of Graceful Extensibility is used to understand how characteristics of the ecosystem can influence its ability to respond to unexpected events. By expanding our perspective from Human-Robot Interaction to the Human-Robot Ecosystem, adaptable failure-handling strategies are identified, alongside technical, social and organizational arrangements that are needed to support them. We argue that robotics and HRI communities should pursue more holistic approaches to failure-handling, recognizing the need to embrace the unexpected and consider socio-technical relations within the human-robot ecosystem when designing failure-handling strategies.

Introduction

In 2016, a security robot at a shopping mall ran over a child and kept walking (Kircher, 2016). In 2017, a patrol robot rolled itself into a fountain (Swearingen, 2017). In 2020, a delivery robot got stuck on a sidewalk and needed to be rescued (Media, 2020). As robots become more common in public spaces (44% growth in 2019; IFR, 2020), questions of what to do when they fail become increasingly important. In most sci-fi movies, when the protagonist’s autonomous tool breaks down, they leave it and move on. In reality, the robot is its owner’s responsibility. Suppose 85-year-old Maggie was at the mall with her robot assistant, when suddenly it ran over a child, fell into a fountain, or got stuck. Maggie cannot ignore the incident, she cannot shut the robot off and leave it at the mall, and she cannot carry it home herself. What should she do?

Despite their grandiose portrayals in the media, robots still struggle to reliably perform tasks in nondeterministic environments. Failures, “degraded states of ability causing the behavior or service being performed by the system to deviate from the ideal, normal, or correct functionality” (Brooks, 2017, p.9), often occur. Countless autonomous fault-diagnosis and failure-handling methods have been developed (Ku et al., 2015; Hanheide et al., 2017; O’Keeffe et al., 2018; Kaushik et al., 2020). Yet, design improvements will never fully eliminate the potential for unexpected failures. Assistive robots will operate in many dynamic unstructured environments, among people with changing goals, abilities, and preferences (Jung and Hinds, 2018), so there will always be unexpected events, challenging the robot (Woods, 2018).

We propose to leverage socio-technical relations within the human-robot ecosystem (HRE) to develop strategies for handling unexpected failures. Social interactions with technical parts of the ecosystem can be the source of unexpected failures (Drury et al., 2003; Carlson and Murphy, 2005; Mutlu and Forlizzi, 2008), help detect unexpected failures (Giuliani et al., 2015; Mirnig et al., 2017) and facilitate resolutions (Steinbauer, 2013; Knepper et al., 2015). For example, bystanders can cause robots to freeze, but can also help robots identify and overcome technical obstacles. Customer service policy can dictate whether unexpected failures are resolved quickly or continue to escalate. Considering unexpected failures within the broader socio-technical ecosystem can help predict sources of failure and reveal novel methods of response.

When performance is challenged by extenuating circumstances, organized structures and processes in the HRE will change (Holling, 1996), influencing the robot's ability to respond. For example, if the robot sparked a fire, bystanders who would normally help the robot may refuse to assist. Social resources (like emergency services or specialized engineers) may become available to overcome the failure. Therefore, there are bilateral relationships between unexpected robot failures and socio-technical aspects of the HRE: unexpected robot failures can be caused by members of the ecosystem, and the robot's response to them can impact the structure of the ecosystem, which, in turn, may influence the robot's ability to further respond. Considering relationships between the robot and its surrounding social context becomes critical for understanding which failure-handling strategies are available.

To model socio-technical relations within the HRE, and understand how they impact robots’ abilities to respond to unexpected failures, we apply the Theory of Graceful Extensibility (TGE; Woods, 2018). TGE explains fundamental principles behind successful cases of sustained adaptability in systems. Sustained adaptability, the “ability to be poised to adapt,” is critical for systems to respond to and recover from unexpected disruptions (Woods, 2019). Most theoretical frameworks assume that certain components in the ecosystem remain fixed, focus on specific types of socio-technical relations or do not consider systemic changes that occur when the ecosystem is significantly challenged (e.g., Zieba et al., 2011; Ouedraogo et al., 2013; Ruault et al., 2013). TGE is flexible enough to account for different socio-technical relations, recognizing that entities within the ecosystem and their relations are constantly changing. It provides insight regarding how the ecosystem’s structure influences its ability to adapt to surprise events, like unexpected failures.

Prior studies viewed robots as part of larger technological ecosystems (Quan and Sanderson, 2018; Kim, 2019) or performed socio-technical analyses (Fiore et al., 2011; Lima et al., 2016; Shin, 2019; Morgan-Thomas et al., 2020). However, studies on the impact of social infrastructure on robots’ ability to handle unexpected failures are scarce (Honig and Oron-Gilad, 2018). Viewing human-robot relations within a socio-technical ecosystem using TGE offers new directions for research. In advancing this perspective, we respond to calls to identify new strategies for predicting, preventing and handling unexpected robot failures (Zhang et al., 2017). We highlight and demonstrate first and second order relations between the robot’s response to unexpected failures and the socio-technical ecosystem it resides in, frequently ignored in robotics literature. Our goal is to trigger the robotics and HRI communities to adopt holistic approaches to failure-handling, recognizing the need to embrace the unexpected and consider socio-technical relations within the HRE.

The Theory of Graceful Extensibility

TGE provides a formal base for characterizing how complex systems maintain or fail-to-maintain adaptability to changing environments, contexts, stakeholders, demands, and constraints. Complex systems are modeled by “Tangled Layered Networks” of adaptive units (UABs; Units of Adaptive Behavior). Each UAB has adaptive capacity - the potential for adjusting activities to handle future changes. This generates a range of adaptive behaviors allowing the UAB to respond to changing demands (termed Capacity for Maneuver; CfM). Since this range is finite, all UABs risk saturation (running out of CfM) when presented with surprises (events that fall near or outside boundaries). Consequently, units require ways to extend their adaptive capacity when they risk saturation.
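The vocabulary above can be made concrete with a small data structure. The following is a minimal sketch, not part of TGE itself: the class `UAB`, its fields, and the idea of expressing CfM and demand as single numbers in arbitrary units are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class UAB:
    """Hypothetical model of a Unit of Adaptive Behavior (UAB)."""

    name: str
    cfm: float           # Capacity for Maneuver, in arbitrary units (assumed)
    demand: float = 0.0  # current demand on the unit, same units (assumed)

    def saturation_risk(self) -> float:
        """Share of CfM currently in use, capped at 1.0 (saturated)."""
        if self.cfm <= 0:
            return 1.0
        return min(1.0, max(0.0, self.demand / self.cfm))

    def is_saturated(self) -> bool:
        """True once demand meets or exceeds the finite CfM."""
        return self.demand >= self.cfm

    def extend(self, extra_cfm: float) -> None:
        """Extend adaptive capacity, for example with outside help."""
        self.cfm += extra_cfm


robot = UAB("robot", cfm=10.0, demand=4.0)
robot.demand = 12.0  # a surprise pushes demand past the unit's range
robot.extend(5.0)    # extended capacity brings it back below saturation
```

In this toy form, a surprise is simply a demand that approaches or exceeds `cfm`, and `extend` stands in for any mechanism that enlarges the unit's range of adaptive behavior.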

Performance of any UAB as it approaches saturation differs from when it operates far from saturation, resulting in two forms of adaptive capacity: base and extended. Base adaptive capacity refers to the potential to adapt to well-modeled changes (far from saturation) and is more ubiquitous in contemporary robotic design. In this mode, the goal is efficiency (“faster/better/cheaper”). Extended adaptive capacity, or Graceful Extensibility, represents the ability to expand CfM when surprise events occur (when risk of saturation is increasing or high). Near saturation, UABs aim to maintain performance.

Layers in the network represent hierarchical functional relations between UABs: lower layers provide services to upper layers (Doyle and Csete, 2011). In networks with high graceful extensibility, UABs in upper layers continuously assess the risk of saturation of themselves, their neighbors, and UABs in lower layers, by monitoring the relationship between upcoming demands and response capacity. When risk is high, upper UABs act to increase CfM of lower UABs by changing priorities, invoking new processes, extending resources, removing potential restrictions, empowering decentralized initiatives, and rewarding reciprocity. UABs increase the CfM of their neighbors by providing assistance (e.g., sharing resources). A UAB’s ability to model and track CfM is limited, and its localized perspective in the network obscures its perception of the environment, so ongoing efforts and shifts in perspective are required to improve a unit’s estimation of its own and others’ capabilities and performance.
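The monitoring behavior described above can be sketched as a simple loop in which an upper-layer unit checks the units it supports and extends those at risk. This is an illustrative sketch only: the names `Unit` and `extend_lower_units`, the risk ratio, and the `threshold` and `margin` values are assumptions, and real units would have only a limited, local estimate of these quantities.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Unit:
    """Hypothetical adaptive unit; all fields are illustrative assumptions."""

    name: str
    cfm: float                    # Capacity for Maneuver (arbitrary units)
    upcoming_demand: float = 0.0  # demand the unit expects to face
    supports: List["Unit"] = field(default_factory=list)  # lower-layer units

    def risk(self) -> float:
        """Ratio of upcoming demand to response capacity."""
        if self.upcoming_demand <= 0:
            return 0.0
        if self.cfm <= 0:
            return float("inf")
        return self.upcoming_demand / self.cfm


def extend_lower_units(upper: Unit, threshold: float = 0.8,
                       margin: float = 1.5) -> List[str]:
    """Extend the CfM of each supported unit whose risk reaches the threshold.

    The CfM is raised to `margin` times the upcoming demand, an assumed
    stand-in for actions such as extending resources or lifting restrictions.
    Returns the names of the units that were helped.
    """
    helped = []
    for unit in upper.supports:
        if unit.risk() >= threshold:
            unit.cfm = unit.upcoming_demand * margin
            helped.append(unit.name)
    return helped


robot = Unit("robot", cfm=10.0, upcoming_demand=9.0)
maggie = Unit("Maggie", cfm=5.0, upcoming_demand=2.0)
engineer = Unit("engineer", cfm=20.0, supports=[robot, maggie])
helped = extend_lower_units(engineer)  # only the robot is near saturation
```

Here only the robot is extended, because Maggie's upcoming demand is well within her range.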

Woods (2018) identified three common patterns leading adaptive systems to break down. Decompensation, exhausting the capacity to adapt as challenges grow faster than solutions can be implemented, occurs when CfMs of UABs are mismanaged, UABs are not synchronized, or lower level UABs are unable to take actions to limit event escalation. Working at cross purposes occurs when one UAB increases its CfM while reducing CfM of others, i.e., locally adaptive but globally maladaptive, and is often caused by mis-coordination across UABs. The third pattern involves failing to ensure current strategies are still effective as system states change. To sustain adaptability, one should empower decentralized initiative at lower layers, reward reciprocity and coordinate activities between UABs to meet changing priorities.
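Two of these patterns lend themselves to simple checks. The sketch below is a rough illustration under stated assumptions, not a method from Woods (2018): the function names are hypothetical, demand and capacity are assumed to be observable as numeric series, and the effect of an action is assumed to be expressible as a change in CfM per unit.

```python
from typing import Dict, Sequence


def is_decompensating(demand: Sequence[float],
                      capacity: Sequence[float]) -> bool:
    """Heuristic: demand grew faster than capacity and has caught up with it."""
    if len(demand) != len(capacity) or len(demand) < 2:
        raise ValueError("need two equally long series with at least 2 points")
    demand_growth = demand[-1] - demand[0]
    capacity_growth = capacity[-1] - capacity[0]
    return demand_growth > capacity_growth and demand[-1] >= capacity[-1]


def works_at_cross_purposes(actor: str, cfm_change: Dict[str, float]) -> bool:
    """True if an action raises the actor's CfM while lowering another unit's."""
    gains = cfm_change.get(actor, 0.0) > 0
    harms_others = any(delta < 0
                       for name, delta in cfm_change.items()
                       if name != actor)
    return gains and harms_others


# Challenges outpace the response: demand rises by 7, capacity by only 2.
escalating = is_decompensating([2, 5, 9], [6, 7, 8])

# Locally adaptive but globally maladaptive action.
conflict = works_at_cross_purposes("robot", {"robot": 1.0, "escapees": -2.0})
```

The third pattern, failing to check whether current strategies still work, is harder to reduce to a formula, since it depends on how each strategy's effectiveness is judged as the system state changes.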

Applying the Theory of Graceful Extensibility to Failure Handling of Assistive Robots

The HRE’s ability to adapt and respond to unexpected failures can be modeled via TGE. Since we aim to improve service and understand socio-technical relations, we take a systems approach. We view the robot as one adaptive unit within a broader system, rather than delving into architectural components, consistent with other socio-technical networks (Mens, 2016). For clarity of presentation, we layered UABs by their ability to extend the CfM of lower UABs, rather than by their hierarchical functional relations. Although the models are abstracted representations of HREs, they are sufficient to showcase the importance of socio-technical relations to the design of failure-handling strategies for unexpected robot failures.

Base Adaptive Capacity

Imagine that a sensor on Maggie’s robot malfunctioned while at the mall, and the robot no longer recognizes obstacles or people in its environment. The robot runs its automatic diagnostic program, failing to find anomalies. Neither the robot nor Maggie know what caused the issue or how to resolve it. Maggie wants to complete her shopping, return home with her robot and belongings, and fix the malfunction as quickly as possible. The robot is carrying Maggie’s belongings, some of which she cannot carry herself.

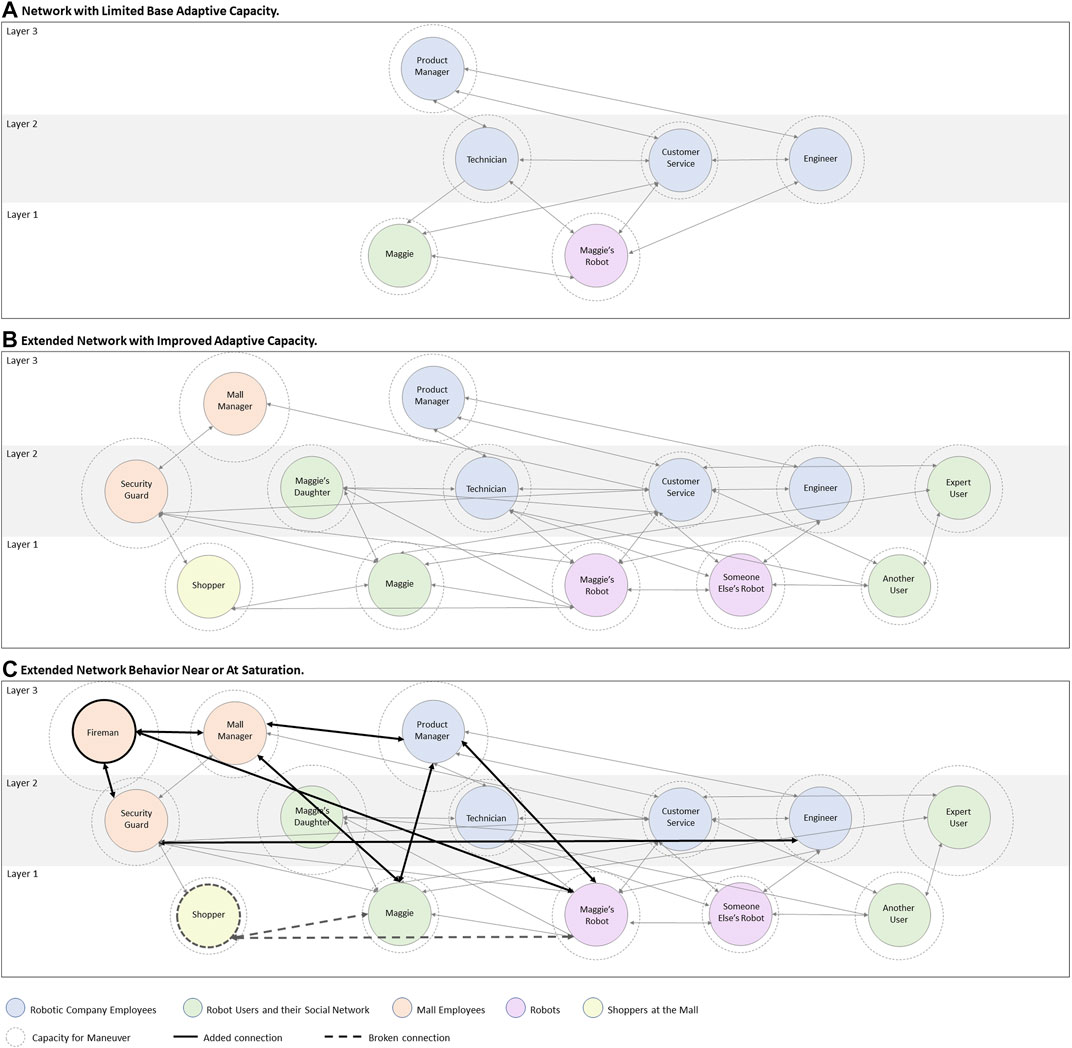

One possible network is modeled in Figure 1A. This network is representative of many robotic services today, which rely primarily on communications between the robot, the user and the service provider (through the robot, customer service, technicians or online) to resolve failures. Each UAB has its own CfM, indicated by the size of the dotted circle around it. Links between UABs are depicted by arrows. An engineer can send a push update to Maggie’s robot (extending its CfM), so it is in a higher layer than the robot.

FIGURE 1. Tangled Layered Networks of socio-technical relations in the Human-Robot Ecosystem (HRE), (A) with limited base Adaptive Capacity, (B) with improved base Adaptive Capacity, (C) adapting in response to an unexpected event that challenged the network.

Various social and socio-technical strategies for resolving the unexpected issue are supported by this network. For example, the robot could ask Maggie to guide it through a safe path with no obstacles (e.g., by holding its hand) and display a warning signal to alert people in the environment to stay clear. The robot could automatically flag the problem to an engineer to remotely run debugging tests; or to customer service, who could initiate a service call to help Maggie resolve the issue. The robot could guide Maggie through questions to help isolate the problem source.

Unexpected failures fall within base adaptive capacity when the existing HRE infrastructure is able to successfully help UABs adapt to achieve their goals efficiently, while managing their risk of saturation. Here, the sensor failure would fall within base adaptive capacity if the type of coordination needed to enable Maggie to complete her shopping and return home in a timely manner is well-supported within the ecosystem (e.g., if customer service managed to remotely fix the sensor failure, or if Maggie was able to guide the robot back home safely, despite the sensor failure).

The base adaptive capacity of this network is quite limited, as communications are distributed across few UABs. If the connections within the HRE were extended (e.g., in Figure 1B), various additional social and socio-technical strategies could be leveraged to resolve a wider variety of unexpected issues, increasing the base adaptive capacity of the network. For example, expert users could be rewarded for providing troubleshooting support to Maggie. The robot could call a security guard to move Maggie’s belongings to a shopping cart and store the robot until a technician arrives. Robots of the same model could help it identify the issue; sharing the outcomes of their learning algorithms or prior experiences (Arrichiello et al., 2017). The robot could call Maggie’s daughter to pick them up and wait for a technician elsewhere. If the socio-technical infrastructures that are needed to support these interactions have been put into place in advance, then the risk of saturation for Maggie, the robot, customer service agents, and other UABs in the network would remain low despite the unexpected issue.
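The effect of adding connections can be illustrated by counting how many units the robot can reach for help within a given number of links. This is a simplified sketch: the link lists below are assumptions loosely inspired by the examples in the text, not a transcription of Figure 1, and links are treated as undirected even though the figure uses arrows.

```python
from typing import Dict, Iterable, Set, Tuple

Network = Dict[str, Set[str]]


def build_network(links: Iterable[Tuple[str, str]]) -> Network:
    """Build an undirected adjacency map from pairs of linked units."""
    network: Network = {}
    for a, b in links:
        network.setdefault(a, set()).add(b)
        network.setdefault(b, set()).add(a)
    return network


def helpers_within(network: Network, start: str, max_hops: int) -> Set[str]:
    """Return every unit reachable from `start` in at most `max_hops` links."""
    seen = {start}
    frontier = {start}
    for _ in range(max_hops):
        frontier = {n for node in frontier
                    for n in network.get(node, set())} - seen
        seen |= frontier
    return seen - {start}


# Assumed links for a sparse network (in the spirit of Figure 1A).
LINKS_A = [
    ("robot", "Maggie"),
    ("robot", "customer service"),
    ("robot", "engineer"),
    ("Maggie", "customer service"),
    ("customer service", "technician"),
]
# Assumed extra links for a richer network (in the spirit of Figure 1B).
LINKS_B = LINKS_A + [
    ("robot", "security guard"),
    ("robot", "Maggie's daughter"),
    ("robot", "same-model robots"),
    ("Maggie", "expert users"),
]

direct_a = helpers_within(build_network(LINKS_A), "robot", 1)
direct_b = helpers_within(build_network(LINKS_B), "robot", 1)
```

Under these assumed links, the robot has three direct helpers in the sparse network and six in the richer one. A count like this says nothing about whether each helper has spare CfM, so it is at best a rough proxy for base adaptive capacity.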

The robot’s technical design will influence the ability of solutions to take place. For the security guard to take the robot to storage, it must be easily moveable. For Maggie’s daughter to pick up her mother and the robot, it must be light enough to be lifted into a car. For inexperienced users to help the robot identify and/or resolve unknown issues, there must be a user-friendly interface that guides them. Social and socio-technical failure-handling solutions require social and technical infrastructures to support them.

Behavior Near or At Saturation

Imagine that Maggie's robot accidentally sparked a fire at the mall. How will the network in Figure 1B respond? If the robot alerts people who are already aware of the fire or are not receptive to listening, or starts its usual diagnostics while ignoring the fire altogether, it is failing to ensure its current strategies are still effective. The robot can alert an engineer, who can then alert the product manager, initiating crisis management processes, but by the time this feedback loop closes, the situation in the mall may escalate. This would be an example of decompensation. The robot can attempt to direct people toward an emergency exit; however, if it is programmed to walk at a slow speed, it may block other escapees. This would be an example of the robot working at cross purposes.

Maggie’s goal would no longer be to return home quickly with her belongings and the robot, but to return home safely. To provide good service in such situations, the entire ecosystem will adapt in unexpected ways to meet this new goal. For example, the robot may need to obtain permission to walk faster than its normal speed range. An engineer who was previously developing new features may need to send the robot’s last location to emergency services. Customer service agents may need to contact owners of similar robots to prevent additional occurrences.

Examples of possible ad-hoc network changes due to the fire are modeled in Figure 1C in bold. These changes can impact the ability of UABs to further respond to this event. For example, if firefighters are using the robot to locate the fire, it may not be able to lead Maggie to an emergency exit. If the call center is overwhelmed by concerned customers, Maggie may not be able to reach them to explain how the fire began. New resources will become available, e.g., firefighters can locate and/or extinguish the fire. Conversely, some resources in the ecosystem that are leveraged under normal circumstances may no longer be available, e.g., people at the mall may become reluctant or unavailable to help. The CfM of UABs in the network could change based on changes to the network that occurred as a result of the failure. For example, the product manager’s CfM could increase as a result of the fire, because they would be justified in setting aside all non-urgent responsibilities and using/recruiting additional resources to respond to the extenuating circumstances. It is therefore important for the robot and service providers to consider the immediate consequences of attempted failure-handling measures and the unintentional outcomes that occur due to socio-technical changes within the ecosystem.

Graceful Extensibility: Preparing for Unexpected Robot Failures That Challenge the Ecosystem

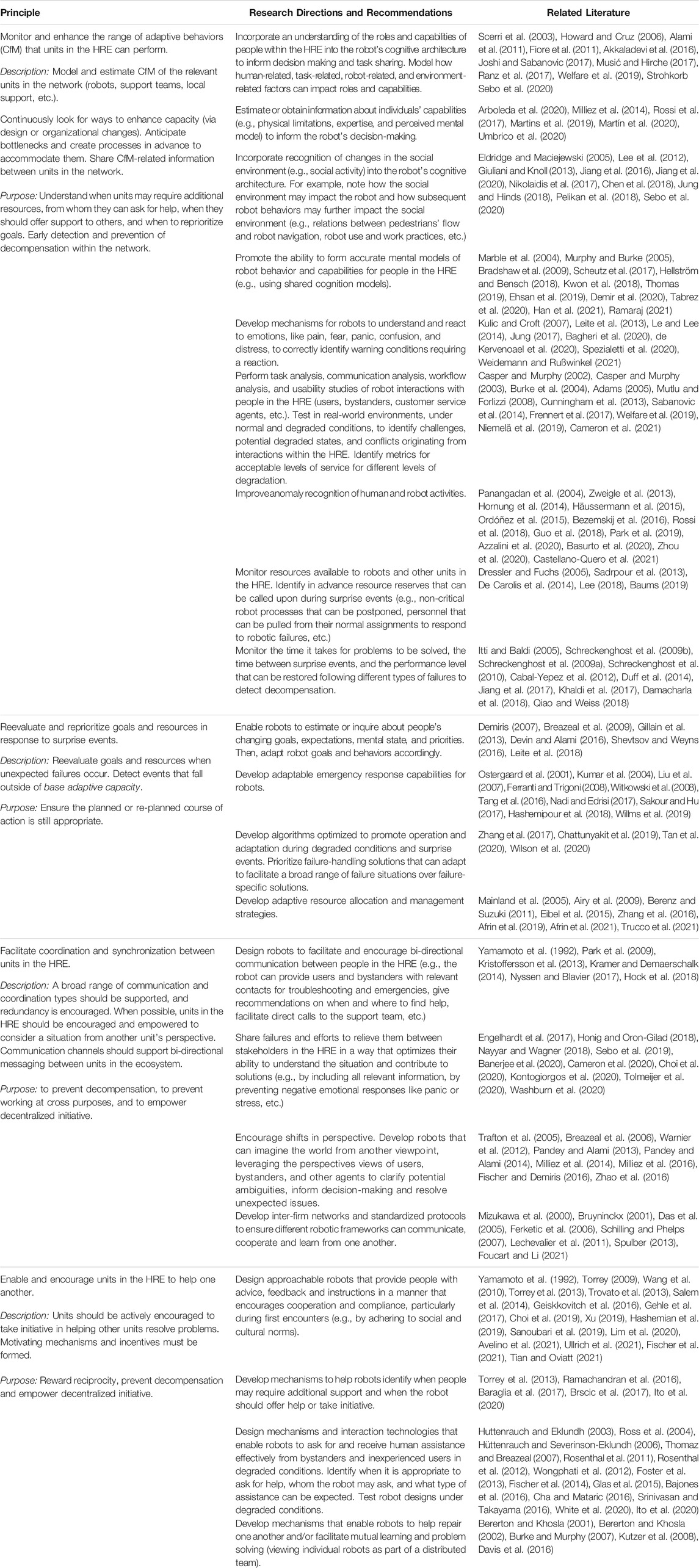

While it is impossible to eliminate the risk of robotic failures or to predict how the HRE will change during surprise events, it is possible to design robots that promote graceful extensibility, allowing the HRE to make required adjustments to accommodate new contingencies. Various strategies can be developed, in advance, to manage risk of saturation and increase the range of adaptive behaviors for better preparedness for unexpected failures. In Table 1, we describe principles based on TGE that support better sustained adaptability in the HRE, and specify recommendations for how robot developers, designers and robot companies can act on them. The result is a set of guidelines for what could be done, in advance, to provide better handling of unexpected robot failures.

TABLE 1. Socio-technical considerations for handling unexpected failures that challenge the human-robot ecosystem.

Discussion

Existing robotic failure-handling techniques struggle to create appropriate responses to predictable failures, let alone unexpected ones that challenge the Human-Robot Ecosystem (HRE). The Theory of Graceful Extensibility provides a framework within which the HRE and its ability to adapt to unexpected failures can be modeled, evaluated, and improved. By expanding from Human-Robot Interaction (HRI) to HRE, adaptable failure-handling strategies can be identified, alongside social and technical infrastructure requirements needed to support them.

Investing in responses to unexpected failures is a fine balancing act (Woods, 2018). Resources that improve performance near saturation may undermine performance far from saturation. Encouraging people to contact customer service will inevitably lead to increased demand for customer service, costing money that could have gone toward improving the robot’s failure-prevention systems. Similarly, resources that support graceful extensibility challenge the ecosystem's desire for efficiency during normal operations. Sustained adaptability requires the ecosystem to continuously search for the balance between improving base adaptive capacity and supporting graceful extensibility.

The ability to handle unexpected failures requires shared acknowledgement that unexpected failures are natural and unavoidable. Robotic companies today seem to prioritize perception of perfection over facilitating open communication and collaboration within their ecosystems. It is currently difficult to obtain information regarding the types of failures robots experience and possible resolutions. This is problematic for all failure types, but particularly for unexpected ones, as it often takes additional effort to differentiate between known and unknown problems. Grassroots efforts to overcome poor socio-technical relations such as YouTube instructional videos are less effective as complexity increases. Forewarning people of robot imperfections can improve evaluations of the robot and quality of service following failures (Lee et al., 2010). Taking steps to improve communication, cooperation, and collaboration between people in the ecosystem is likely to improve customer acceptance of robots.

Issues of information overload, control management and privacy arise from many of the strategies suggested to support adaptive capacity. How do we facilitate communication and collaboration between people in the ecosystem without annoying or overwhelming them? Who decides with whom the robot can share information and what it can share? How do we protect Maggie’s privacy? Many social, legal and ethical questions are raised from this approach and remain unresolved (Scheutz and Arnold, 2016; Santoni de Sio and van den Hoven, 2018). Close collaborations with government agencies, regulators, and related service corporations and organizations (e.g., malls, hospitals, etc.) will be needed to answer these questions. However, we strongly believe in the importance of extending adaptive capacity through socio-technical means in order to handle unexpected failures in robots.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

The first author, SH, is supported by a scholarship from The Helmsley Charitable Trust through the Agricultural, Biological, Cognitive Robotics Center; by Ben-Gurion University of the Negev through the High-tech, Bio-tech and Chemo-tech Scholarship; and by the George Shrut Chair in human performance management.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adams, J. A. (2005). Human-Robot Interaction Design: Understanding User Needs and Requirements. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 49, 447–451. doi:10.1177/154193120504900349

Akkaladevi, S. C., Plasch, M., Pichler, A., and Rinner, B. (2016). “Human Robot Collaboration to Reach a Common Goal in an Assembly Process,” in STAIRS 2016: Proceedings of the Eighth European Starting AI Researcher Symposium, The Hague, the Netherlands, 23 August 2016, Editors D. Pearce, and H.S. Pinto (IOS Press, 2016), 3–14. doi:10.3233/978-1-61499-682-8-3

Alami, R., Warnier, M., Guitton, J., Lemaignan, S., and Sisbot, E. A. (2011). “When the Robot Considers the Human,” in The 15th International Symposium on Robotics Research (ISRR), Flagstaff, Arizona, USA, December 9-12, 2011, Editors Henrik I. Christensen, and Oussama Khatib, (Springer International Publishing Switzerland: Flagstaff). hal-01979210.

Arboleda, S. A., Pascher, M., Lakhnati, Y., and Gerken, J. (2020). “Understanding Human-Robot Collaboration for People with Mobility Impairments at the Workplace, a Thematic Analysis,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 Aug.-4 Sept. 2020, (New Jersey: IEEE), 561–566. doi:10.1109/RO-MAN47096.2020.9223489

Arrichiello, F., Marino, A., and Pierri, F. (2017). Distributed Fault-Tolerant Control for Networked Robots in the Presence of Recoverable/Unrecoverable Faults and Reactive Behaviors. Front. Robot. AI 4, 2. doi:10.3389/frobt.2017.00002

Avelino, J., Garcia-Marques, L., Ventura, R., and Bernardino, A. (2021). Break the Ice: a Survey on Socially Aware Engagement for Human-Robot First Encounters. Int. J. Soc. Rob., 1–27. doi:10.1007/s12369-020-00720-2

Azzalini, D., Castellini, A., Luperto, M., Farinelli, A., and Amigoni, F. (2020). “HMMs for Anomaly Detection in Autonomous Robots,” in Proceedings of the 19th International Conference on Autonomous Agents and MultiAgent Systems AAMAS ’20, Auckland, New Zealand, May 9–13, Editors B. An, N. Yorke-Smith, A. El Fallah Seghrouchni, and G. Sukthankar, (Richland, SC: International Foundation for Autonomous Agents and Multiagent Systems), 105–113. doi:10.5555/3398761.3398779

Bagheri, E., Esteban, P. G., Cao, H.-L., Beir, A. D., Lefeber, D., and Vanderborght, B. (2020). An Autonomous Cognitive Empathy Model Responsive to Users' Facial Emotion Expressions. ACM Trans. Interact. Intell. Syst. 10, 1–23. doi:10.1145/3341198

Bajones, M., Weiss, A., and Vincze, M. (2016). “Help, Anyone? A User Study for Modeling Robotic Behavior to Mitigate Malfunctions with the Help of the User,” in 5th International Symposium on New Frontiers in Human-Robot Interaction 2016. Christchurch, New Zealand, 7-10 March 2016, (New Jersey: IEEE). doi:10.1109/hri.2016.7451874. Available at: http://arxiv.org/abs/1606.02547

Banerjee, S., Gombolay, M., and Chernova, S. (2020). “A Tale of Two Suggestions: Action and Diagnosis Recommendations for Responding to Robot Failure,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 Aug.-4 Sept. 2020, (New Jersey: IEEE), 398–405. doi:10.1109/RO-MAN47096.2020.9223545

Baraglia, J., Cakmak, M., Nagai, Y., Rao, R. P., and Asada, M. (2017). Efficient Human-Robot Collaboration: When Should a Robot Take Initiative?. Int. J. Rob. Res. 36, 563–579. doi:10.1177/0278364916688253

Basurto, N., Cambra, C., and Herrero, Á. (2020). Improving the Detection of Robot Anomalies by Handling Data Irregularities. Neurocomputing. doi:10.1016/j.neucom.2020.05.101

Bererton, C., and Khosla, P. (2002). “An Analysis of Cooperative Repair Capabilities in a Team of Robots,” in Proceedings 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292) 1, 476–482. doi:10.1109/ROBOT.2002.1013405

Bererton, C., and Khosla, P. K. (2001). “Towards a Team of Robots with Reconfiguration and Repair Capabilities,” in Proceedings 2001 ICRA. IEEE International Conference on Robotics and Automation (Cat. No.01CH37164), Washington, DC, USA, 11-15 May 2002, (New Jersey: IEEE), 2923–2928. doi:10.1109/ROBOT.2001.933065

Bezemskij, A., Loukas, G., Anthony, R. J., and Gan, D. (2016). “Behaviour-Based Anomaly Detection of Cyber-Physical Attacks on a Robotic Vehicle,” in 2016 15th International Conference on Ubiquitous Computing and Communications and 2016 International Symposium on Cyberspace and Security (IUCC-CSS), Granada, Spain, 14-16 Dec. 2016, (New Jersey: IEEE), 61–68. doi:10.1109/IUCC-CSS.2016.017

Bradshaw, J. M., Feltovich, P., Johnson, M., Breedy, M., Bunch, L., Eskridge, T., Jung, H., Lott, J., Uszok, A., and van Diggelen, J. (2009). “From Tools to Teammates: Joint Activity in Human-Agent-Robot Teams,” in International conference on human centered design, San Diego, CA, USA, July 19-24, Editors Masaaki Kurosu, (Berlin, Heidelberg: Springer), 935–944. doi:10.1007/978-3-642-02806-9_107

Breazeal, C., Berlin, M., Brooks, A., Gray, J., and Thomaz, A. L. (2006). Using Perspective Taking to Learn from Ambiguous Demonstrations. Rob. Autonomous Syst. 54, 385–393. doi:10.1016/j.robot.2006.02.004

Breazeal, C., Gray, J., and Berlin, M. (2009). An Embodied Cognition Approach to Mindreading Skills for Socially Intelligent Robots. Int. J. Rob. Res. 28, 656–680. doi:10.1177/0278364909102796

Brooks, D. J. (2017). A Human-Centric Approach to Autonomous Robot Failures. Ph.D. dissertation. Lowell, MA: Department of Computer Science, University.

Brscic, D., Ikeda, T., and Kanda, T. (2017). Do You Need Help? A Robot Providing Information to People Who Behave Atypically. IEEE Trans. Robot. 33, 500–506. doi:10.1109/TRO.2016.2645206

Burke, J., Murphy, R., Coovert, M., and Riddle, D. (2004). Moonlight in Miami: Field Study of Human-Robot Interaction in the Context of an Urban Search and Rescue Disaster Response Training Exercise. Human-comp. Interact. 19, 85–116. doi:10.1080/07370024.2004.9667341; doi:10.1207/s15327051hci1901&2_5

Burke, J., and Murphy, R. (2007). “Rsvp,” in 2007 2nd ACM/IEEE International Conference on Human-Robot Interaction (HRI), Arlington, Virginia, USA, 10 March, 2007- 12 March, 2007, Editors Cynthia Breazeal, and Alan C. Schultz, (New York, NY, USA: Association for Computing Machinery), 161–168. doi:10.1145/1228716.1228738

Cameron, D., de Saille, S., Collins, E. C., Aitken, J. M., Cheung, H., Chua, A., et al. (2021). The Effect of Social-Cognitive Recovery Strategies on Likability, Capability and Trust in Social Robots. Comput. Hum. Behav. 114, 106561. doi:10.1016/j.chb.2020.106561

Carlson, J., and Murphy, R. R. (2005). How UGVs Physically Fail in the Field. IEEE Trans. Robot. 21, 423–437. doi:10.1109/TRO.2004.838027

Casper, J. L., and Murphy, R. R. (2002). “Workflow Study on Human-Robot Interaction in USAR,” in Proceedings 2002 IEEE International Conference on Robotics and Automation (Cat. No.02CH37292), Washington, DC, USA, 11-15 May 2002, (New Jersey: IEEE), 2, 1997–2003. doi:10.1109/ROBOT.2002.1014834

Casper, J., and Murphy, R. R. (2003). Human-robot Interactions during the Robot-Assisted Urban Search and rescue Response at the World Trade Center. IEEE Trans. Syst. Man. Cybern. B 33, 367–385. doi:10.1109/TSMCB.2003.811794

Castellano-Quero, M., Fernández-Madrigal, J.-A., and García-Cerezo, A. (2021). Improving Bayesian Inference Efficiency for Sensory Anomaly Detection and Recovery in mobile Robots. Expert Syst. Appl. 163, 113755. doi:10.1016/j.eswa.2020.113755

Cha, E., and Mataric, M. (2016). “Using Nonverbal Signals to Request Help during Human-Robot Collaboration,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea (South), 9-14 Oct. 2016, (New Jersey: IEEE), 5070–5076. doi:10.1109/IROS.2016.7759744

Chattunyakit, S., Kobayashi, Y., Emaru, T., and Ravankar, A. (2019). Bio-Inspired Structure and Behavior of Self-Recovery Quadruped Robot with a Limited Number of Functional Legs. Appl. Sci. 9, 799. doi:10.3390/app9040799

Chen, Z., Jiang, C., and Guo, Y. (2018). “Pedestrian-Robot Interaction Experiments in an Exit Corridor,” in 2018 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26-30 June 2018, (New Jersey: IEEE), 29–34. doi:10.1109/URAI.2018.8441839

Choi, S., Liu, S. Q., and Mattila, A. S. (2019). "How May I Help You?" Says a Robot: Examining Language Styles in the Service Encounter. Int. J. Hospitality Manage. 82, 32–38. doi:10.1016/j.ijhm.2019.03.026

Choi, S., Mattila, A. S., and Bolton, L. E. (2020). To Err Is Human(-Oid): How Do Consumers React to Robot Service Failure and Recovery?. J. Serv. Res., 109467052097879. doi:10.1177/1094670520978798

Cunningham, S., Chellali, A., Jaffre, I., Classe, J., and Cao, C. G. L. (2013). Effects of Experience and Workplace Culture in Human-Robot Team Interaction in Robotic Surgery: A Case Study. Int. J. Soc. Rob. 5, 75–88. doi:10.1007/s12369-012-0170-y

Davis, J. D., Sevimli, Y., Kendal Ackerman, M., and Chirikjian, G. S. (2016). “A Robot Capable of Autonomous Robotic Team Repair: The Hex-DMR II System,” in Advances in Reconfigurable Mechanisms and Robots II, Editors Xilun Ding, Xianwen Kong, and Jian S. Dai, (Springer, Cham: Springer), 619–631. doi:10.1007/978-3-319-23327-7_53

de Kervenoael, R., Hasan, R., Schwob, A., and Goh, E. (2020). Leveraging Human-Robot Interaction in Hospitality Services: Incorporating the Role of Perceived Value, Empathy, and Information Sharing into Visitors' Intentions to Use Social Robots. Tourism Manage. 78, 104042. doi:10.1016/j.tourman.2019.104042

Demir, M., McNeese, N. J., and Cooke, N. J. (2020). Understanding Human-Robot Teams in Light of All-Human Teams: Aspects of Team Interaction and Shared Cognition. Int. J. Human-Computer Stud. 140, 102436. doi:10.1016/j.ijhcs.2020.102436

Demiris, Y. (2007). Prediction of Intent in Robotics and Multi-Agent Systems. Cogn. Process. 8, 151–158. doi:10.1007/s10339-007-0168-9

Devin, S., and Alami, R. (2016). “An Implemented Theory of Mind to Improve Human-Robot Shared Plans Execution,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7-10 March 2016, (New Jersey: IEEE), 319–326. doi:10.1109/HRI.2016.7451768

Doyle, J. C., and Csete, M. (2011). Architecture, Constraints, and Behavior. Proc. Natl. Acad. Sci. 108, 15624–15630. doi:10.1073/pnas.1103557108

Drury, J. L., Scholtz, J., and Yanco, H. A. (2003). “Awareness in Human-Robot Interactions,” in SMC’03 Conference Proceedings. 2003 IEEE International Conference on Systems, Man and Cybernetics. Conference Theme - System Security and Assurance (Cat. No.03CH37483), Washington, DC, USA, 8-8 Oct. 2003, (New Jersey: IEEE), 912–918. doi:10.1109/ICSMC.2003.1243931

Ehsan, U., Tambwekar, P., Chan, L., Harrison, B., and Riedl, M. O. (2019). “Automated Rationale Generation,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, Marina del Rey, California, March 17 - 20, 2019, Editors Wai-Tat Fu, and Shimei Pan, (New York, NY, USA: ACM), 263–274. doi:10.1145/3301275.3302316

Eldridge, B. D., and Maciejewski, A. A. (2005). Using Genetic Algorithms to Optimize Social Robot Behavior for Improved Pedestrian Flow. IEEE Int. Conf. Syst. Man Cybernetics 1, 524–529. doi:10.1109/ICSMC.2005.1571199

Engelhardt, S., Hansson, E., and Leite, I. (2017). “Better Faulty Than Sorry: Investigating Social Recovery Strategies to Minimize the Impact of Failure in Human-Robot Interaction,” in WCIHAI 2017 Workshop on Conversational Interruptions in Human-Agent Interactions: Proceedings of the First Workshop on Conversational Interruptions in Human-Agent Interactions co-located with 17th International Conference on Intelligent Virtual Agents (IVA 2017), Stockholm, Sweden, August 27, 2017, 19–27.

Ferranti, E., and Trigoni, N. (2008). “Robot-assisted Discovery of Evacuation Routes in Emergency Scenarios,” in 2008 IEEE International Conference on Robotics and Automation, 2824–2830. doi:10.1109/ROBOT.2008.4543638

Fiore, S. M., Badler, N. L., Boloni, L., Goodrich, M. A., Wu, A. S., and Chen, J. (2011). Human-Robot Teams Collaborating Socially, Organizationally, and Culturally. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 55, 465–469. doi:10.1177/1071181311551096

Fischer, K., Naik, L., Langedijk, R. M., Baumann, T., Jelínek, M., and Palinko, O. (2021). “Initiating Human-Robot Interactions Using Incremental Speech Adaptation,” in Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, March 8 - 11, 2021, Editors Cindy Bethel, and Ana Paiva, (New York, NY, USA: ACM), 421–425. doi:10.1145/3434074.3447205

Fischer, K., Soto, B., Pantofaru, C., and Takayama, L. (2014). “Initiating Interactions in Order to Get Help: Effects of Social Framing on People's Responses to Robots' Requests for Assistance,” in The 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25-29 Aug. 2014, (Edinburgh, UK: IEEE), 999–1005. doi:10.1109/ROMAN.2014.6926383

Fischer, T., and Demiris, Y. (2016). “Markerless Perspective Taking for Humanoid Robots in Unconstrained Environments,” in 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16-21 May 2016, (New Jersey: IEEE), 3309–3316. doi:10.1109/ICRA.2016.7487504

Foster, M. E., Gaschler, A., and Giuliani, M. (2013). “How Can I Help You',” in Proceedings of the 15th ACM on International conference on multimodal interaction - ICMI ’13, Sydney, Australia, December 9 - 13, 2013, Editors Julien Epps, Fang Chen, Sharon Oviatt, and Kenji Mase, (New York, New York, USA: ACM Press), 255–262. doi:10.1145/2522848.2522879

Frennert, S., Eftring, H., and Östlund, B. (2017). Case Report: Implications of Doing Research on Socially Assistive Robots in Real Homes. Int. J. Soc. Rob. 9, 401–415. doi:10.1007/s12369-017-0396-9

Gehle, R., Pitsch, K., Dankert, T., and Wrede, S. (2017). “How to Open an Interaction between Robot and Museum Visitor?,” in Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, March 6 - 9, 2017, Editors Bilge Mutlu, and Manfred Tscheligi, (New York, NY, USA: ACM), 187–195. doi:10.1145/2909824.3020219

Geiskkovitch, D. Y., Cormier, D., Seo, S. H., and Young, J. E. (2016). Please Continue, We Need More Data: an Exploration of Obedience to Robots. J. Human-robot Interact. 5, 82–99. doi:10.5555/3109939.3109943

Giuliani, M., and Knoll, A. (2013). Using Embodied Multimodal Fusion to Perform Supportive and Instructive Robot Roles in Human-Robot Interaction. Int. J. Soc. Rob. 5, 345–356. doi:10.1007/s12369-013-0194-y

Giuliani, M., Mirnig, N., Stollnberger, G., Stadler, S., Buchner, R., and Tscheligi, M. (2015). Systematic Analysis of Video Data from Different Human-Robot Interaction Studies: a Categorization of Social Signals during Error Situations. Front. Psychol. 6, 931. doi:10.3389/fpsyg.2015.00931

Glas, D. F., Kamei, K., Kanda, T., Miyashita, T., and Hagita, N. (2015). “Human-Robot Interaction in Public and Smart Spaces,” in Intelligent Assistive Robots: Recent Advances in Assistive Robotics for Everyday Activities. Editors S. Mohammed, J. C. Moreno, K. Kong, and Y. Amirat (Cham: Springer International Publishing), 235–273. doi:10.1007/978-3-319-12922-8_9

Guo, P., Kim, H., Virani, N., Xu, J., Zhu, M., and Liu, P. (2018). “RoboADS: Anomaly Detection Against Sensor and Actuator Misbehaviors in Mobile Robots,” in 2018 48th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN) (IEEE), 574–585. doi:10.1109/DSN.2018.00065

Han, Z., Giger, D., Allspaw, J., Lee, M. S., Admoni, H., and Yanco, H. A. (2021). Building the Foundation of Robot Explanation Generation Using Behavior Trees. ACM Trans. Hum.-Robot Interact. 10, 26. doi:10.1145/3457185

Hanheide, M., Göbelbecker, M., Horn, G. S., Pronobis, A., Sjöö, K., Aydemir, A., et al. (2017). Robot Task Planning and Explanation in Open and Uncertain Worlds. Artif. Intelligence 247, 119–150. doi:10.1016/j.artint.2015.08.008

Hashemian, M., Paiva, A., Mascarenhas, S., Santos, P. A., and Prada, R. (2019). “The Power to Persuade: a Study of Social Power in Human-Robot Interaction,” in 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Luxembourg, Luxembourg, 25-28 June 2018, (New Jersey: IEEE), 1–8. doi:10.1109/RO-MAN46459.2019.8956298

Hashemipour, M., Stuban, S., and Dever, J. (2018). A Disaster Multiagent Coordination Simulation System to Evaluate the Design of a First-Response Team. Syst. Eng. 21, 322–344. doi:10.1002/sys.21437

Häussermann, K., Zweigle, O., and Levi, P. (2015). A Novel Framework for Anomaly Detection of Robot Behaviors. J. Intell. Rob. Syst. 77, 361–375. doi:10.1007/s10846-013-0014-5

Hellström, T., and Bensch, S. (2018). Understandable Robots - What, Why, and How. Paladyn, J. Behav. Robot. 9, 110–123. doi:10.1515/pjbr-2018-0009

Hock, P., Oshima, C., and Nakayama, K. (2018). “CATARO,” in Proceedings of the Genetic and Evolutionary Computation Conference Companion, Kyoto, Japan, July 15 - 19, 2018, Editor Hernan Aguirre, (New York, NY, USA: ACM), 1841–1844. doi:10.1145/3205651.3208264

Holling, C. S. (1996). “Engineering Resilience versus Ecological Resilience,” in Engineering Within Ecological Constraints. Editor Peter Schulze (Washington, DC: National Academies Press), 32–44.

Honig, S., and Oron-Gilad, T. (2018). Understanding and Resolving Failures in Human-Robot Interaction: Literature Review and Model Development. Front. Psychol. 9, 861, 1-21. doi:10.3389/fpsyg.2018.00861

Hornung, R., Urbanek, H., Klodmann, J., Osendorfer, C., and van der Smagt, P. (2014). “Model-free Robot Anomaly Detection,” in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14-18 Sept. 2014, (New Jersey: IEEE), 3676–3683. doi:10.1109/IROS.2014.6943078

Howard, A. M., and Cruz, G. (2006). “Adapting Human Leadership Approaches for Role Allocation in Human-Robot Navigation Scenarios,” in 2006 World Automation Congress, Budapest, Hungary, 24-26 July 2006, (New Jersey: IEEE), 1–8. doi:10.1109/WAC.2006.376028

Huttenrauch, H., and Eklundh, K. S. (2003). “To Help or Not to Help a Service Robot,” in The 12th IEEE International Workshop on Robot and Human Interactive Communication, 2003. Proceedings. ROMAN 2003, Millbrae, CA, USA, 2-2 Nov. 2003, (New Jersey: IEEE), 379–384. doi:10.1109/ROMAN.2003.1251875

Hüttenrauch, H., and Severinson-Eklundh, K. (2006). To Help or Not to Help a Service Robot. Interact. Stud. 7, 455–477. doi:10.1075/is.7.3.15hut

IFR (2020). Executive Summary World Robotics 2020 Service Robots. Int. Fed. Robot. Available at: https://ifr.org/img/worldrobotics/Executive_Summary_WR_2020_Service_Robots.pdf (Accessed May 5, 2021).

Ito, K., Kong, Q., Horiguchi, S., Sumiyoshi, T., and Nagamatsu, K. (2020). “Anticipating the Start of User Interaction for Service Robot in the Wild,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May-31 Aug. 2020, (New Jersey: IEEE), 9687–9693. doi:10.1109/ICRA40945.2020.9196548

Jiang, C., Ni, Z., Guo, Y., and He, H. (2020). Pedestrian Flow Optimization to Reduce the Risk of Crowd Disasters through Human-Robot Interaction. IEEE Trans. Emerg. Top. Comput. Intell. 4, 298–311. doi:10.1109/TETCI.2019.2930249

Jiang, C., Ni, Z., Guo, Y., and He, H. (2016). “Robot-assisted Pedestrian Regulation in an Exit Corridor,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), (IEEE), 815–822. doi:10.1109/IROS.2016.7759145

Joshi, S., and Sabanovic, S. (2017). “A Communal Perspective on Shared Robots as Social Catalysts,” in 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Daejeon, Korea (South), 9-14 Oct. 2016, (New Jersey: IEEE), 732–738. doi:10.1109/ROMAN.2017.8172384

Jung, M. F. (2017). “Affective Grounding in Human-Robot Interaction,” in Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, March 6 - 9, 2017, Editors Bilge Mutlu, and Manfred Tscheligi, (New York, NY, USA: ACM), 263–273. doi:10.1145/2909824.3020224

Jung, M., and Hinds, P. (2018). Robots in the Wild. J. Hum.-Rob. Interact. 7, 1–5. doi:10.1145/3208975

Kaushik, R., Desreumaux, P., and Mouret, J.-B. (2020). Adaptive Prior Selection for Repertoire-Based Online Adaptation in Robotics. Front. Rob. AI 6, 151. doi:10.3389/frobt.2019.00151

Kim, D. (2019). “Understanding the Robot Ecosystem: Don't Lose Sight of Either the Trees or the forest,” in Proceedings of the 52nd Hawaii International Conference on System Sciences. Grand Wailea, Hawaii, USA, 08 - 11 Jan 2019, (BY-NC-ND 4.0), doi:10.24251/HICSS.2019.760

Kircher, M. M. (2016). 300-Pound Robot Security Guard Topples Toddler. New York Mag. Available at: https://nymag.com/intelligencer/2016/07/robot-mall-security-guard-topples-onto-toddler.html (Accessed October 4, 2020).

Knepper, R. A., Tellex, S., Li, A., Roy, N., and Rus, D. (2015). Recovering from Failure by Asking for Help. Auton. Rob. 39, 347–362. doi:10.1007/s10514-015-9460-1

Kontogiorgos, D., Pereira, A., Sahindal, B., van Waveren, S., and Gustafson, J. (2020). “Behavioural Responses to Robot Conversational Failures,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, United Kingdom, March 23 - 26, 2020, Editors Tony Belpaeme, and James Young, (New York, NY, USA: ACM), 53–62. doi:10.1145/3319502.3374782

Kramer, N. M., and Demaerschalk, B. M. (2014). A Novel Application of Teleneurology: Robotic Telepresence in Supervision of Neurology Trainees. Telemed. e-Health 20, 1087–1092. doi:10.1089/tmj.2014.0043

Kristoffersson, A., Coradeschi, S., and Loutfi, A. (2013). A Review of Mobile Robotic Telepresence. Adv. Human-Computer Interact. 2013, 1–17. doi:10.1155/2013/902316

Ku, L. Y., Ruiken, D., Learned-Miller, E., and Grupen, R. (2015). “Error Detection and surprise in Stochastic Robot Actions,” in 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Korea (South), 3-5 Nov. 2015, (New Jersey: IEEE), 1096–1101. doi:10.1109/HUMANOIDS.2015.7363505

Kulic, D., and Croft, E. A. (2007). Affective State Estimation for Human-Robot Interaction. IEEE Trans. Rob. 23, 991–1000. doi:10.1109/TRO.2007.904899

Kumar, V., Rus, D., and Singh, S. (2004). Robot and Sensor Networks for First Responders. IEEE Pervasive Comput. 3, 24–33. doi:10.1109/MPRV.2004.17

Kutzer, M. D. M., Armand, M., Scheid, D. H., Lin, E., and Chirikjian, G. S. (2008). Toward Cooperative Team-Diagnosis in Multi-Robot Systems. Int. J. Rob. Res. 27, 1069–1090. doi:10.1177/0278364908095700

Kwon, M., Huang, S. H., and Dragan, A. D. (2018). “Expressing Robot Incapability,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, March 5 - 8, 2018, Editors Takayuki Kanda, and Selma Šabanović, (New York, NY, USA: ACM), 87–95. doi:10.1145/3171221.3171276

Le, B. V., and Lee, S. (2014). “Adaptive Hierarchical Emotion Recognition from Speech Signal for Human-Robot Communication,” in Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IEEE), 807–810. doi:10.1109/IIH-MSP.2014.204

Lee, M. K., Kiesler, S., Forlizzi, J., and Rybski, P. (2012). “Ripple Effects of an Embedded Social Agent,” in Proceedings of the 2012 ACM annual conference on Human Factors in Computing Systems - CHI ’12, Austin, TX, USA, May 5 - 10, 2012, Editor Joseph A. Konstan, (New York, New York, USA: ACM Press), 695. doi:10.1145/2207676.2207776

Lee, M. K., Kiesler, S., Forlizzi, J., Srinivasa, S., and Rybski, P. (2010). “Gracefully Mitigating Breakdowns in Robotic Services,” in 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Osaka, Japan, 2-5 March 2010, (New Jersey: IEEE), 203–210. doi:10.1109/HRI.2010.5453195

Leite, A., Pinto, A., and Matos, A. (2018). A Safety Monitoring Model for a Faulty Mobile Robot. Robotics 7, 32. doi:10.3390/robotics7030032

Leite, I., Pereira, A., Mascarenhas, S., Martinho, C., Prada, R., and Paiva, A. (2013). The Influence of Empathy in Human-Robot Relations. Int. J. Human-Computer Stud. 71, 250–260. doi:10.1016/j.ijhcs.2012.09.005

Lim, V., Rooksby, M., and Cross, E. S. (2020). Social Robots on a Global Stage: Establishing a Role for Culture during Human-Robot Interaction. Int. J. Soc. Rob. doi:10.1007/s12369-020-00710-4

Lima, T., Santos, R. P. d., Oliveira, J., and Werner, C. (2016). The Importance of Socio-Technical Resources for Software Ecosystems Management. J. Innovat. Digital Ecosyst. 3, 98–113. doi:10.1016/j.jides.2016.10.006

Marble, J. L., Bruemmer, D. J., Few, D. A., and Dudenhoeffer, D. D. (2004). “Evaluation of Supervisory vs. Peer-Peer Interaction with Human-Robot Teams,” in 37th Annual Hawaii International Conference on System Sciences, 2004. Proceedings of the, Big Island, HI, USA, 5-8 Jan. 2004, (New Jersey: IEEE), 9. doi:10.1109/HICSS.2004.1265326

Martín, A., Pulido, J. C., González, J. C., García-Olaya, Á., and Suárez, C. (2020). A Framework for User Adaptation and Profiling for Social Robotics in Rehabilitation. Sensors 20, 4792. doi:10.3390/s20174792

Martins, G. S., Santos, L., and Dias, J. (2019). User-Adaptive Interaction in Social Robots: A Survey Focusing on Non-physical Interaction. Int. J. Soc. Robotics 11, 185–205. doi:10.1007/s12369-018-0485-4

Media, J. (2020). Delivery Robot Gets Stuck while Moving on Sidewalk. Yahoo News. Available at: https://uk.news.yahoo.com/delivery-robot-gets-stuck-while-221930892.html?guccounter=1&guce_referrer=aHR0cHM6Ly93d3cuZ29vZ2xlLmNvbS8&guce_referrer_sig=AQAAADFWoFZ3oEZtKVUtS3k610uRzKFrCGnhc2b2DuTR3a8BpcLMNqoVSSXPjOKkcMDWbHwJgsn73y_yQpdTmqEbvHxlaieZBuFi2vJYM.

Mens, T. (2016). “An Ecosystemic and Socio-Technical View on Software Maintenance and Evolution,” in 2016 IEEE International Conference on Software Maintenance and Evolution (ICSME), Raleigh, NC, USA, 2-7 Oct. 2016, (New Jersey: IEEE), 1–8. doi:10.1109/ICSME.2016.19

Milliez, G., Lallement, R., Fiore, M., and Alami, R. (2016). “Using Human Knowledge Awareness to Adapt Collaborative Plan Generation, Explanation and Monitoring,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7-10 March 2016, (New Jersey: IEEE), 43–50. doi:10.1109/HRI.2016.7451732

Milliez, G., Warnier, M., Clodic, A., and Alami, R. (2014). “A Framework for Endowing an Interactive Robot with Reasoning Capabilities about Perspective-Taking and Belief Management,” in The 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25-29 Aug. 2014, (New Jersey: IEEE), 1103–1109. doi:10.1109/ROMAN.2014.6926399

Mirnig, N., Stollnberger, G., Miksch, M., Stadler, S., Giuliani, M., and Tscheligi, M. (2017). To Err Is Robot: How Humans Assess and Act toward an Erroneous Social Robot. Front. Rob. AI 4, 1–15. doi:10.3389/frobt.2017.00021

Morgan-Thomas, A., Dessart, L., and Veloutsou, C. (2020). Digital Ecosystem and Consumer Engagement: A Socio-Technical Perspective. J. Business Res. 121, 713–723. doi:10.1016/j.jbusres.2020.03.042

Murphy, R. R., and Burke, J. L. (2005). Up from the Rubble: Lessons Learned about HRI from Search and Rescue. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 49, 437–441. doi:10.1177/154193120504900347

Musić, S., and Hirche, S. (2017). Control Sharing in Human-Robot Team Interaction. Annu. Rev. Control. 44, 342–354. doi:10.1016/j.arcontrol.2017.09.017

Mutlu, B., and Forlizzi, J. (2008). “Robots in Organizations,” in Proceedings of the 3rd international conference on Human robot interaction - HRI ’08, Amsterdam, The Netherlands, March 12 - 15, 2008, Editors Terry Fong, and Kerstin Dautenhahn, (New York, New York, USA: ACM Press), 287. doi:10.1145/1349822.1349860

Nayyar, M., and Wagner, A. R. (2018). “When Should a Robot Apologize? Understanding How Timing Affects Human-Robot Trust Repair,” in International Conference on Social Robotics, Editors Shuzhi Sam Ge, John-John Cabibihan, Miguel A. Salichs, Elizabeth Broadbent, Hongsheng He, Alan R. Wagner, and Álvaro Castro-González, (Cham: Springer), 265–274. doi:10.1007/978-3-030-05204-1_26

Niemelä, M., van Aerschot, L., Tammela, A., Aaltonen, I., and Lammi, H. (2019). Towards Ethical Guidelines of Using Telepresence Robots in Residential Care. Int. J. Soc. Rob. 13, 1–9. doi:10.1007/s12369-019-00529-8

Nikolaidis, S., Nath, S., Procaccia, A. D., and Srinivasa, S. (2017). “Game-Theoretic Modeling of Human Adaptation in Human-Robot Collaboration,” in Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, March 6 - 9, 2017, Editors Bilge Mutlu, and Manfred Tscheligi, (New York, NY, USA: ACM), 323–331. doi:10.1145/2909824.3020253

O'Keeffe, J., Tarapore, D., Millard, A. G., and Timmis, J. (2018). Adaptive Online Fault Diagnosis in Autonomous Robot Swarms. Front. Rob. AI 5, 131. doi:10.3389/frobt.2018.00131

Okal, B., and Arras, K. O. (2014). “Towards Group-Level Social Activity Recognition for mobile Robots,” in IROS Assistance and Service Robotics in a Human Environments Workshop, Chicago, IL, USA, 14 - 18 Sep, 2014.

Ordóñez, F. J., de Toledo, P., and Sanchis, A. (2015). Sensor-based Bayesian Detection of Anomalous Living Patterns in a home Setting. Pers Ubiquit Comput. 19, 259–270. doi:10.1007/s00779-014-0820-1

Ostergaard, E. H., Mataric, M. J., and Sukhatme, G. S. (2001). “Distributed Multi-Robot Task Allocation for Emergency Handling,” in Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the Next Millennium (Cat. No.01CH37180), Maui, HI, USA, 29 Oct.-3 Nov. 2001, (New Jersey: IEEE), 821–826. doi:10.1109/IROS.2001.976270

Ouedraogo, K. A., Enjalbert, S., and Vanderhaegen, F. (2013). How to Learn from the Resilience of Human-Machine Systems? Eng. Appl. Artif. Intelligence 26, 24–34. doi:10.1016/j.engappai.2012.03.007

Panangadan, A., Mataric, M., and Sukhatme, G. (2004). “Detecting Anomalous Human Interactions Using Laser Range-Finders,” in 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), Sendai, Japan, 28 Sept.-2 Oct. 2004, (New Jersey: IEEE), 2136–2141. doi:10.1109/IROS.2004.1389725

Pandey, A. K., and Alami, R. (2013). “Affordance Graph: A Framework to Encode Perspective Taking and Effort Based Affordances for Day-To-Day Human-Robot Interaction,” in 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3-7 Nov. 2013, (New Jersey: IEEE), 2180–2187. doi:10.1109/IROS.2013.6696661

Pandey, A. K., and Alami, R. (2014). Towards Human-Level Semantics Understanding of Human-Centered Object Manipulation Tasks for HRI: Reasoning About Effect, Ability, Effort and Perspective Taking. Int. J. Soc. Rob. 6, 593–620. doi:10.1007/s12369-014-0246-y

Park, D., Kim, H., and Kemp, C. C. (2019). Multimodal Anomaly Detection for Assistive Robots. Auton. Robot 43, 611–629. doi:10.1007/s10514-018-9733-6

Park, K.-H., Kim, J.-C., Jung, S.-T., Koo, M.-W., and Ahn, H.-J. (2009). “Robot Based Videophone Service System for Young Children,” in 2009 IEEE Workshop on Advanced Robotics and its Social Impacts, Tokyo, Japan, 23-25 Nov. 2009, (New Jersey: IEEE), 93–97. doi:10.1109/ARSO.2009.5587067

Pelikan, H. R. M., Cheatle, A., Jung, M. F., and Jackson, S. J. (2018). Operating at a Distance - How a Teleoperated Surgical Robot Reconfigures Teamwork in the Operating Room. Proc. ACM Hum.-Comput. Interact. 2, 1–28. doi:10.1145/3274407

Quan, X. I., and Sanderson, J. (2018). Understanding the Artificial Intelligence Business Ecosystem. IEEE Eng. Manag. Rev. 46, 22–25. doi:10.1109/EMR.2018.2882430

Ramachandran, A., Litoiu, A., and Scassellati, B. (2016). “Shaping Productive Help-Seeking Behavior during Robot-Child Tutoring Interactions,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7-10 March 2016, (New Jersey: IEEE), 247–254. doi:10.1109/HRI.2016.7451759

Ramaraj, P. (2021). “Robots that Help Humans Build Better Mental Models of Robots,” in Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, CO, Boulder, USA, March 8 - 11, 2021, Editors Cindy Bethel, and Ana Paiva, (New York, NY, USA: ACM), 595–597. doi:10.1145/3434074.3446365

Ranz, F., Hummel, V., and Sihn, W. (2017). Capability-based Task Allocation in Human-Robot Collaboration. Proced. Manufact. 9, 182–189. doi:10.1016/j.promfg.2017.04.011

Rosenthal, S., Veloso, M., and Dey, A. K. (2012). Is Someone in This Office Available to Help Me? J. Intell. Rob. Syst. 66, 205–221. doi:10.1007/s10846-011-9610-4

Rosenthal, S., Veloso, M. M., and Dey, A. K. (2011). “Task Behavior and Interaction Planning for a Mobile Service Robot that Occasionally Requires Help,” in Automated Action Planning for Autonomous Mobile Robots. San Francisco, California, August 7–8, 2011, (AAAI Press), doi:10.5555/2908675.2908678

Ross, R., Collier, R., and O’Hare, G. M. P. (2004). “Demonstrating Social Error Recovery with AgentFactory,” in 3rd International Joint Conference on Autonomous Agents and Multi-agent Systems (AAMAS04), New York City, New York, USA, July 19 - 23 2004, (Los Alamitos, CA, USA: IEEE Computer Society), 1424–1425. doi:10.1109/AAMAS.2004.103

Rossi, S., Bove, L., Di Martino, S., and Ercolano, G. (2018). “A Two-Step Framework for Novelty Detection in Activities of Daily Living,” in International Conference on Social Robotics, Qingdao, China, November 28 - 30, 2018, Editors Shuzhi Sam Ge, John-John Cabibihan, Miguel A. Salichs, Elizabeth Broadbent, Hongsheng He, Alan R. Wagner, and Álvaro Castro-González, (Cham: Springer), 329–339. doi:10.1007/978-3-030-05204-1_32

Rossi, S., Ferland, F., and Tapus, A. (2017). User Profiling and Behavioral Adaptation for HRI: A Survey. Pattern Recognition Lett. 99, 3–12. doi:10.1016/j.patrec.2017.06.002

Ruault, J.-R., Vanderhaegen, F., and Kolski, C. (2013). Sociotechnical Systems Resilience: a Dissonance Engineering point of View. IFAC Proc. Volumes 46, 149–156. doi:10.3182/20130811-5-US-2037.00042

Sabanovic, S., Reeder, S., and Kechavarzi, B. (2014). Designing Robots in the Wild: In Situ Prototype Evaluation for a Break Management Robot. J. Human-Rob. Interact. 3, 70–88. doi:10.5898/JHRI.3.1.Sabanovic

Sakour, I., and Hu, H. (2017). Robot-Assisted Crowd Evacuation under Emergency Situations: A Survey. Robotics 6, 8. doi:10.3390/robotics6020008

Salem, M., Ziadee, M., and Sakr, M. (2014). “Marhaba, How May I Help You?,” in Proceedings of the 2014 ACM/IEEE international conference on Human-robot interaction, Bielefeld, Germany, March 3 - 6, 2014, Editors Gerhard Sagerer, and Michita Imai, (New York, NY, USA: ACM), 74–81. doi:10.1145/2559636.2559683

Sanoubari, E., Seo, S. H., Garcha, D., Young, J. E., and Loureiro-Rodriguez, V. (2019). “Good Robot Design or Machiavellian? An In-The-Wild Robot Leveraging Minimal Knowledge of Passersby's Culture,” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea (South), 11-14 March 2019, (New Jersey: IEEE), 382–391. doi:10.1109/HRI.2019.8673326

Santoni de Sio, F., and van den Hoven, J. (2018). Meaningful Human Control over Autonomous Systems: A Philosophical Account. Front. Rob. AI 5, 15. doi:10.3389/frobt.2018.00015

Scerri, P., Pynadath, D., Johnson, L., Rosenbloom, P., Si, M., Schurr, N., and Tambe, M. (2003). “A Prototype Infrastructure for Distributed Robot-Agent-Person Teams,” in Proceedings of the second international joint conference on Autonomous agents and multiagent systems - AAMAS ’03, Melbourne, Australia, July 14 - 18, 2003, Editors Jeffrey S. Rosenschein, and Michael Wooldridge, (New York, New York, USA: ACM Press), 433. doi:10.1145/860575.860645

Scheutz, M., and Arnold, T. (2016). Feats without Heroes: Norms, Means, and Ideal Robotic Action. Front. Rob. AI 3, 32. doi:10.3389/frobt.2016.00032

Scheutz, M., DeLoach, S. A., and Adams, J. A. (2017). A Framework for Developing and Using Shared Mental Models in Human-Agent Teams. J. Cogn. Eng. Decis. Making 11, 203–224. doi:10.1177/1555343416682891

Sebo, S. S., Krishnamurthi, P., and Scassellati, B. (2019). “"I Don't Believe You": Investigating the Effects of Robot Trust Violation and Repair,” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea (South), 11-14 March 2019, (New Jersey: IEEE), 57–65. doi:10.1109/HRI.2019.8673169

Sebo, S., Stoll, B., Scassellati, B., and Jung, M. F. (2020). Robots in Groups and Teams. Proc. ACM Hum.-Comput. Interact. 4, 1–36. doi:10.1145/3415247

Shevtsov, S., and Weyns, D. (2016). “Keep it SIMPLEX: Satisfying Multiple Goals with Guarantees in Control-Based Self-Adaptive Systems,” in Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Seattle, WA, USA, November 13 - 18, 2016, Editor Thomas Zimmermann, (New York, NY, USA: ACM), 229–241. doi:10.1145/2950290.2950301

Shin, D. (2019). A Living Lab as Socio-Technical Ecosystem: Evaluating the Korean Living Lab of Internet of Things. Government Inf. Q. 36, 264–275. doi:10.1016/j.giq.2018.08.001

Spezialetti, M., Placidi, G., and Rossi, S. (2020). Emotion Recognition for Human-Robot Interaction: Recent Advances and Future Perspectives. Front. Rob. AI 7, 145. doi:10.3389/frobt.2020.532279

Srinivasan, V., and Takayama, L. (2016). “Help Me Please,” in Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, May 7 - 12, 2016, Editors Jofish Kaye, and Allison Druin, (New York, NY, USA: ACM), 4945–4955. doi:10.1145/2858036.2858217

Steinbauer, G. (2013). “A Survey about Faults of Robots Used in RoboCup,” in Lecture Notes in Computer Science. Editors X. Chen, P. Stone, L. E. Sucar, and T. van der Zant (Berlin, Heidelberg: Springer), 344–355. doi:10.1007/978-3-642-39250-4_31

Strohkorb Sebo, S., Dong, L. L., Chang, N., and Scassellati, B. (2020). “Strategies for the Inclusion of Human Members within Human-Robot Teams,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, United Kingdom, March 23 - 26, 2020, Editors Tony Belpaeme, and James Young, (New York, NY, USA: ACM), 309–317. doi:10.1145/3319502.3374808

Swearingen, J. (2017). Robot Security Guard Commits Suicide in Public Fountain. New York Mag. Available at: https://nymag.com/intelligencer/2017/07/robot-security-guard-commits-suicide-in-public-fountain.html (Accessed October 4, 2020).

Tabrez, A., Luebbers, M. B., and Hayes, B. (2020). A Survey of Mental Modeling Techniques in Human-Robot Teaming. Curr. Rob. Rep. 1, 259–267. doi:10.1007/s43154-020-00019-0

Tan, N., Hayat, A. A., Elara, M. R., and Wood, K. L. (2020). A Framework for Taxonomy and Evaluation of Self-Reconfigurable Robotic Systems. IEEE Access 8, 13969–13986. doi:10.1109/ACCESS.2020.2965327

Tang, B., Jiang, C., He, H., and Guo, Y. (2016). Human Mobility Modeling for Robot-Assisted Evacuation in Complex Indoor Environments. IEEE Trans. Human-Mach. Syst. 46, 694–707. doi:10.1109/THMS.2016.2571269

Thomas, J. (2019). Autonomy, Social Agency, and the Integration of Human and Robot Environments. Ph.D. thesis. Applied Sciences: School of Computing Science, Simon Fraser University. doi:10.1109/iros40897.2019.8967862

Thomaz, A. L., and Breazeal, C. (2007). “Robot Learning via Socially Guided Exploration,” in 2007 IEEE 6th International Conference on Development and Learning, London, UK, 11-13 July 2007, (New Jersey: IEEE), 82–87. doi:10.1109/DEVLRN.2007.4354078

Tian, L., and Oviatt, S. (2021). A Taxonomy of Social Errors in Human-Robot Interaction. J. Hum.-Rob. Interact. 10, 1–32. doi:10.1145/3439720

Tolmeijer, S., Weiss, A., Hanheide, M., Lindner, F., Powers, T. M., Dixon, C., and Tielman, M. L. (2020). “Taxonomy of Trust-Relevant Failures and Mitigation Strategies,” in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, United Kingdom, March 23 - 26, 2020, Editors Tony Belpaeme, and James Young, (New York, NY, USA: ACM), 3–12. doi:10.1145/3319502.3374793

Torrey, C., Fussell, S. R., and Kiesler, S. (2013). “How a Robot Should Give Advice,” in 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3-6 March 2013, (New Jersey: IEEE), 275–282. doi:10.1109/HRI.2013.6483599

Torrey, C. (2009). How Robots Can Help: Communication Strategies that Improve Social Outcomes. Dissertation. Carnegie Mellon University, USA. Advisor(s) Sara Kiesler and Susan R. Fussell. Order Number: AAI3455972. https://dl.acm.org/doi/10.5555/2231207.

Trafton, J. G., Cassimatis, N. L., Bugajska, M. D., Brock, D. P., Mintz, F. E., and Schultz, A. C. (2005). Enabling Effective Human-Robot Interaction Using Perspective-Taking in Robots. IEEE Trans. Syst. Man. Cybern. A. 35, 460–470. doi:10.1109/TSMCA.2005.850592

Trovato, G., Zecca, M., Sessa, S., Jamone, L., Ham, J., Hashimoto, K., et al. (2013). Cross-cultural Study on Human-Robot Greeting Interaction: Acceptance and Discomfort by Egyptians and Japanese. Paladyn, J. Behav. Robot. 4, 83–93. doi:10.2478/pjbr-2013-0006

Ullrich, D., Butz, A., and Diefenbach, S. (2021). The Development of Overtrust: An Empirical Simulation and Psychological Analysis in the Context of Human-Robot Interaction. Front. Robot. AI 8, 44. doi:10.3389/frobt.2021.554578

Umbrico, A., Cesta, A., Cortellessa, G., and Orlandini, A. (2020). A Holistic Approach to Behavior Adaptation for Socially Assistive Robots. Int. J. Soc. Rob. 12, 617–637. doi:10.1007/s12369-019-00617-9

Wang, L., Rau, P.-L. P., Evers, V., Robinson, B. K., and Hinds, P. (2010). “When in Rome: The Role of Culture & Context in Adherence to Robot Recommendations,” in 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Osaka, Japan, 2-5 March 2010, (IEEE), 359–366. doi:10.1109/HRI.2010.5453165

Warnier, M., Guitton, J., Lemaignan, S., and Alami, R. (2012). “When the Robot Puts Itself in Your Shoes. Managing and Exploiting Human and Robot Beliefs,” in 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9-13 Sept. 2012, (New Jersey: IEEE), 948–954. doi:10.1109/ROMAN.2012.6343872

Washburn, A., Adeleye, A., An, T., and Riek, L. D. (2020). Robot Errors in Proximate HRI. J. Hum.-Rob. Interact. 9, 1–21. doi:10.1145/3380783

Weidemann, A., and Rußwinkel, N. (2021). The Role of Frustration in Human-Robot Interaction - What Is Needed for a Successful Collaboration? Front. Psychol. 12, 707. doi:10.3389/fpsyg.2021.640186

Welfare, K. S., Hallowell, M. R., Shah, J. A., and Riek, L. D. (2019). “Consider the Human Work Experience when Integrating Robotics in the Workplace,” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea (South), 11-14 March 2019, (Piscataway, NJ: IEEE), 75–84. doi:10.1109/HRI.2019.8673139

White, S. S., Bisland, K. W., Collins, M. C., and Li, Z. (2020). “Design of a High-Level Teleoperation Interface Resilient to the Effects of Unreliable Robot Autonomy,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 Oct.-24 Jan. 2021, (Piscataway, NJ: IEEE), 11519–11524. doi:10.1109/IROS45743.2020.9341322

Willms, C., Houy, C., Rehse, J.-R., Fettke, P., and Kruijff-Korbayova, I. (2019). “Team Communication Processing and Process Analytics for Supporting Robot-Assisted Emergency Response,” in 2019 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Würzburg, Germany, 2-4 Sept. 2019, (IEEE), 216–221. doi:10.1109/SSRR.2019.8848976

Wilson, N. J., Ceron, S., Horowitz, L., and Petersen, K. (2020). Scalable and Robust Fabrication, Operation, and Control of Compliant Modular Robots. Front. Robot. AI 7, 44. doi:10.3389/frobt.2020.00044

Wongphati, M., Kanai, Y., Osawa, H., and Imai, M. (2012). “Give Me a Hand — How Users Ask a Robotic Arm for Help with Gestures,” in 2012 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Bangkok, Thailand, 27-31 May 2012, (IEEE), 64–68. doi:10.1109/CYBER.2012.6392528

Woods, D. D. (2019). “Essentials of Resilience, Revisited,” in Handbook on Resilience of Socio-Technical Systems. Editors Matthias Ruth, and Stefan Goessling-Reisemann, (Northampton, Massachusetts, USA: Edward Elgar Publishing), 52–65. doi:10.4337/9781786439376.00009

Woods, D. D. (2018). The Theory of Graceful Extensibility: Basic Rules that Govern Adaptive Systems. Environ. Syst. Decis. 38, 433–457. doi:10.1007/s10669-018-9708-3

Xu, K. (2019). First Encounter with Robot Alpha: How Individual Differences Interact with Vocal and Kinetic Cues in Users' Social Responses. New Media Soc. 21, 2522–2547. doi:10.1177/1461444819851479

Yamamoto, Y., Sato, M., Hiraki, K., Yamasaki, N., and Anzai, Y. (1992). “A Request of the Robot: an experiment with the Human-Robot Interactive System HuRIS,” in [1992] Proceedings IEEE International Workshop on Robot and Human Communication, Tokyo, Japan, 1992, (IEEE), 204–209. doi:10.1109/ROMAN.1992.253887

Zhang, T., Zhang, W., and Gupta, M. (2017). Resilient Robots: Concept, Review, and Future Directions. Robotics 6, 22. doi:10.3390/robotics6040022

Zhao, X., Cusimano, C., and Malle, B. F. (2016). “Do People Spontaneously Take a Robot's Visual Perspective?,” in IEEE International Conference on Human-Robot Interaction (HRI) (IEEE), 335–342. doi:10.1109/HRI.2016.7451770

Zhou, X., Wu, H., Rojas, J., Xu, Z., and Li, S. (2020). “Nonparametric Bayesian Method for Robot Anomaly Monitoring,” in Nonparametric Bayesian Learning for Collaborative Robot Multimodal Introspection. Singapore: Springer Singapore, 51–93. doi:10.1007/978-981-15-6263-1_4

Zieba, S., Polet, P., and Vanderhaegen, F. (2011). Using Adjustable Autonomy and Human-Machine Cooperation to Make a Human-Machine System Resilient - Application to a Ground Robotic System. Inf. Sci. 181, 379–397. doi:10.1016/j.ins.2010.09.035

Zweigle, O., Keil, B., Wittlinger, M., Häussermann, K., and Levi, P. (2013). “Recognizing Hardware Faults on Mobile Robots Using Situation Analysis Techniques,” in Intelligent Autonomous Systems 12: Volume 1 Proceedings of the 12th International Conference IAS-12, Jeju Island, Korea, held June 26-29, 2012. Editors S. Lee, H. Cho, K.-J. Yoon, and J. Lee (Berlin, Heidelberg: Springer Berlin Heidelberg), 397–409. doi:10.1007/978-3-642-33926-4_37

Keywords: unexpected failures, human-robot ecosystem, social robots, non-expert user, resilience engineering, resilient robots, user-centered, failure handling

Citation: Honig S and Oron-Gilad T (2021) Expect the Unexpected: Leveraging the Human-Robot Ecosystem to Handle Unexpected Robot Failures. Front. Robot. AI 8:656385. doi: 10.3389/frobt.2021.656385

Received: 20 January 2021; Accepted: 21 June 2021; Published: 26 July 2021.

Edited by:

Siddhartha Bhattacharyya, Florida Institute of Technology, United States

Reviewed by:

Emmanuel Senft, University of Wisconsin-Madison, United States

Matthew Studley, University of the West of England, United Kingdom

Copyright © 2021 Honig and Oron-Gilad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shanee Honig, shaneeh@post.bgu.ac.il
