- Music Department, Mason Gross School of the Arts, Rutgers University, New Brunswick, NJ, United States

The field of musical robotics presents an interesting case study of the intersection between creativity and robotics. While the potential for machines to express creativity represents an important issue in the field of robotics and AI, this subject is especially relevant in the case of machines that replicate human activities that are traditionally associated with creativity, such as music making. There are several different approaches that fall under the broad category of musical robotics, and creativity is expressed differently based on the design and goals of each approach. By exploring elements of anthropomorphic form, capacity for sonic nuance, control, and musical output, this article evaluates the locus of creativity in six of the most prominent approaches to musical robots, including: 1) nonspecialized anthropomorphic robots that can play musical instruments, 2) specialized anthropomorphic robots that model the physical actions of human musicians, 3) semi-anthropomorphic robotic musicians, 4) non-anthropomorphic robotic instruments, 5) cooperative musical robots, and 6) individual actuators used for their own sound production capabilities.

Introduction

The field of musical robotics presents an interesting case study of the intersection between creativity and robotics. While the potential for machines to express creativity represents an important issue in the field of robotics and AI, this subject is especially relevant in the case of machines that replicate human activities that are traditionally associated with creativity, such as music making. Several recent studies have explored the history and current state of musical robotics. While these present an overview of the field, they tend to focus primarily on issues related to functional design, with little discussion of creativity. Musical robots are categorized based on how they produce sound (Kapur 2005), how they function as interactive multimodal systems (Solis and Ng 2011), how they developed over history (Murphy et al., 2012; Long et al., 2017), and the ways that they engage in “Robotic Musicianship” (Bretan and Weinberg, 2016).1

Based on a review of existing literature as well as the author’s experience designing and composing music for musical robots, this article proposes a new classification framework based on the ways that musical robots express creativity through anthropomorphic form, capacity for sonic nuance, control, and musical output. By exploring the field of musical robotics through this lens, we are able to better understand the ways that specific approaches lead to both technical and artistic goals.

Definitions and Criteria for Evaluation

Defining Musical Robotics

Both designers and audiences use the term “musical robots” or “robotic musical instruments” to refer to a broad range of musical machines. From an engineering perspective, approaches that lack autonomy could be more accurately described as “musical mechatronics” (Bretan and Weinberg, 2016). However, the popular conception of robots, rooted in mythology, includes any machines that can mimic human actions (Jones 2017; Szollosy, 2017). Therefore, this discussion will consider “musical robotics” as any approach where an electromechanical actuator produces a visible, physical action that models the human act of music making, regardless of autonomous control.

By modeling the human act of music making, musical robots may be considered inherently anthropomorphic. Fink describes the important connection between anthropomorphism and robotics, as expressed through anthropomorphic form (appearance), behavior, and interaction with humans (Fink, 2012). While not all musical robots possess an anthropomorphic form, modeling the physical actions of music making represents anthropomorphic behavior. The ways that designers and audiences experience anthropomorphism significantly impact how these machines express creativity. With this idea in mind, I identify six approaches that express creativity in different ways. These include: 1) nonspecialized anthropomorphic robots that can play musical instruments, 2) specialized anthropomorphic robots that model the physical actions of human musicians, 3) semi-anthropomorphic robotic musicians, 4) non-anthropomorphic robotic instruments, 5) cooperative musical robots, and 6) individual actuators used for their own sound production capabilities.

Defining Creativity

Several different fields currently focus on creativity, including esthetics, psychology, and artificial intelligence (Götz 1981; Bailin 1983; Boden, 1996; Boden, 2004; Cope 2005; Runco and Jaeger, 2012). Runco and Jaeger distinguish two fundamental criteria of creativity: originality and effectiveness (Runco and Jaeger 2012, 92). Originality, or creative insight, emerges from what Cope describes as, “The initialization of connections between two or more multifaceted things, ideas, or phenomenon hitherto not otherwise considered actively connected” (Cope 2005, 11). Effectiveness is determined through evaluation of creative insight by the creator, as well as related communities (Boden, 1996, 268).

Evaluative Criteria for Creativity in Musical Robotics

Musical robots tend to be viewed as creative machines due to their connection to music, which is understood to be an inherently creative endeavor. While studies of creativity in musical robots should focus on the music they produce, originality and effectiveness are also expressed through anthropomorphic form, capacity for sonic nuance, control, and musical output.

Anthropomorphic Form

The physical appearance of musical robots as well as the ways they model the human actions of music making are extremely important for designers and audiences. According to Fink, “the physical shape of a robot strongly influences how people perceive it and interact with it…” (Fink, 203). Fink also describes the importance of anthropomorphic behavior from the observer’s perspective. “If a system behaves much like a human being (e.g., emits a human voice), people’s mental model of the system’s behavior may approach their mental model of humans,” based on the estimation of the robot’s capabilities (Fink, 201). Some approaches to musical robotics focus on modeling human appearance and movement while others explore mechatronic sound production techniques that do not possess anthropomorphic form. Evaluating creativity in terms of anthropomorphic form requires an understanding of the ways that designers and audiences ascribe human qualities to a musical robot’s form and behavior.

Capacity for Sonic Nuance

Much of the existing literature in the field of musical robotics focuses on robots’ ability to model the sonic capabilities of human performers. The benchmark for success in this area is often described as the ability to play music expressively (e.g., Murphy, 2014). While designers often describe how advancements in sound control parameters and their resolution enable expressivity, the concept of expression tends to be loosely defined (Kemper and Cypess, 2019). Therefore, it is more accurate to describe these features as increasing the capacity for sonic nuance (Kemper and Barton, 2018). While greater capacity for sonic nuance allows musical robots to more accurately model the dynamics, articulations, and phrasing of human performers, it can also create novel sonic and musical possibilities that differ from the ways that humans perform (Kemper, 2014). Thus, creativity in this domain refers to novel approaches to sonic nuance either for the purposes of modeling human performance or exploring new sonic and musical possibilities that are unique to musical robots.

Control

Musical robots can be controlled in a variety of ways, ranging from autonomous modes that enable interaction with human performers to modes where the movement of every actuator is preprogrammed. One of the challenges of assessing creativity in musical robotics is that control systems are often separable from the robot itself. Research in the areas of artificial musical generation and listening algorithms tends to focus on note generation in a generic way (e.g., as MIDI data), rather than being tailored to the mechanical requirements of a specific robot (e.g., Cope 2005; Xia and Dannenberg, 2015). For example, Solis and Ng’s Musical Robots and Interactive Multimodal Systems is divided into two separate sections that describe control and output respectively (Solis and Ng, 2011). While control determines the ways that actuators operate and thus how the robot produces sound, it is important to distinguish these instructions from the actual musical output.
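
The separation between generic note data and robot-specific control can be illustrated with a minimal sketch. The names below (`NoteEvent`, `ActuatorCommand`, `to_actuator_commands`), the one-actuator-per-semitone layout, and the travel-time and pulse-length figures are all hypothetical and do not describe any particular robot. The sketch only suggests why the same note list, handed to different machines, would need a different translation layer for each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NoteEvent:
    """Generic, robot-agnostic note data (comparable to a MIDI note-on)."""
    onset_ms: int   # when the note should sound
    pitch: int      # MIDI note number, 0-127
    velocity: int   # MIDI velocity, 1-127


@dataclass(frozen=True)
class ActuatorCommand:
    """Hypothetical robot-specific instruction for one actuator."""
    fire_ms: int    # when the actuator must be triggered
    actuator: int   # index of the actuator assigned to this pitch
    pulse_ms: int   # how long the actuator is energized


def to_actuator_commands(notes, lowest_pitch, n_actuators, travel_ms,
                         min_pulse_ms=2, max_pulse_ms=12):
    """Translate generic notes into commands for one imagined robot.

    Assumes one actuator per semitone starting at lowest_pitch, a fixed
    travel time between trigger and sound, and loudness set by pulse length.
    Notes outside the robot's range are skipped.
    """
    commands = []
    for note in notes:
        index = note.pitch - lowest_pitch
        if not 0 <= index < n_actuators:
            continue  # this imagined robot cannot play the pitch
        # Trigger early so the sound lands on the intended onset.
        fire_ms = note.onset_ms - travel_ms
        # Scale MIDI velocity (1-127) linearly onto the pulse range.
        span = max_pulse_ms - min_pulse_ms
        pulse_ms = min_pulse_ms + round(span * (note.velocity - 1) / 126)
        commands.append(ActuatorCommand(fire_ms, index, pulse_ms))
    return commands


melody = [NoteEvent(1000, 60, 127), NoteEvent(1500, 64, 1), NoteEvent(2000, 90, 64)]
commands = to_actuator_commands(melody, lowest_pitch=60, n_actuators=12, travel_ms=20)
```

In this toy case the third note falls outside the imagined twelve-actuator range and is dropped, which is one small way that identical control data can yield different musical output on different machines.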

Musical Output

The music that robots perform represents an important avenue for expressing creativity; however, this topic has received surprisingly little attention. Some robots use a single piece of music to demonstrate their capabilities, while others perform in a diverse array of styles, collaborate in real time with human performers, and are designed as creative tools for musical artists.2 Evaluating creativity in musical output should consider both the robot’s performative capabilities and musical decisions. Performative capabilities include the ability for robots to present a compelling performance, either by modeling human performers or exploring their own unique capabilities. Musical decisions include the specific musical pieces composed or arranged for the robot(s), musical decision-making by autonomous control systems, and the ways that new music created for (or by) robots engages with the unique capabilities of these machines.

Different Approaches to Musical Robotics

Nonspecialized Anthropomorphic Robots that Can Play Musical Instruments

Over the past two decades, several companies have developed general-purpose anthropomorphic bipedal robots that replicate human actions in a variety of areas, including musical performance (Goswami and Vadakkepat 2019). For example, Toyota modified versions of their Partner robot to play trumpet, violin, and an electronic drum kit (Doi and Nakajima 2019). Of these approaches, the trumpet robot approximates human performance most closely in terms of articulation, dynamics, and timing. Conversely, the violin playing robot is limited in its range, and struggles somewhat with intonation and tone compared to a trained violinist.3 This reflects the challenges of modeling the complex physical actions of bow pressure, bow speed, proper finger position, and vibrato.

In general, these demonstrations prioritize showing versatile, humanoid robots engaging in a quintessentially “human” activity over novel musical output. As Doi and Nakajima state, “We began the development of musical performance humanoid out of curiosity that we would like to make a humanoid robot realize such a human unique activity [sic]” (Doi and Nakajima, 218). This is emphasized by the fact that available videos show these robots performing easily recognizable versions of popular music, including “When You Wish Upon a Star” and “Pomp and Circumstance.”4,5 As robots are more specifically designed for musical performance, they become more specialized in their ability to produce sonic nuance, as described in the examples below.

Specialized Anthropomorphic Robots That Model the Physical Actions of Human Musicians

Several approaches have focused on building robots that model the physical actions involved in musical performance. These include pioneering work from Waseda University, including the WABOT-series piano robot, WF-series flutist robot, and the WAS-series saxophone robot (Roads 1986; Solis et al., 2006; Solis and Hashimoto 2010). Shibuya and Park have also created robotic models of violin performance (Shibuya et al., 2007; Park et al., 2016), and Chadefaux has created a robotic “finger” for harp plucking (Chadefaux et al., 2012).

While these approaches accurately model the actions of human performance, they can result in a lack of musical “efficiency” when compared to musical robots that do not model human actions (see Non-anthropomorphic Robotic Instruments). For example, the Waseda WF-4RII Flutist Robot possesses 43 DOF, and each robotic component is designed to replicate its human counterpart, including “humanoid organs” such as robotic lips, lungs, arms, neck, tongue, and oral cavity (Solis et al., 2006, 13). By modeling the human actions of performance, the robot helps us to understand how instrumental performers produce musical sounds. However, the complexity of the mechanical model limits the sonic possibilities that are available to machines, such as super-virtuosic speed and novel approaches to sonic nuance. This is evidenced by available videos of performance, such as that of the WF-4RII performing Rimsky-Korsakov’s “Flight of the Bumblebee” at a (humanly) comfortable tempo of c.150 BPM.6
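
To give a rough sense of what such a tempo demands, the note rate can be worked out directly. The short sketch below assumes the passage is played as continuous sixteenth notes (four notes per beat); that subdivision is an assumption made here for illustration, not a figure taken from the cited video, and the function names are hypothetical.

```python
def notes_per_second(bpm, notes_per_beat):
    """Return how many notes sound each second at a given tempo."""
    return bpm * notes_per_beat / 60


def inter_onset_ms(bpm, notes_per_beat):
    """Return the time between successive note onsets, in milliseconds."""
    return 60_000 / (bpm * notes_per_beat)


# Assumption: continuous sixteenth notes, i.e. four notes per beat.
rate = notes_per_second(150, 4)   # 10.0 notes per second
gap = inter_onset_ms(150, 4)      # 100.0 ms between onsets
```

Under that assumption, 150 BPM corresponds to about ten notes per second, a rate that skilled human performers can reach, which is consistent with describing the tempo as humanly comfortable.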

Semi-Anthropomorphic Robotic Musicians

The musical robots in this category assume an anthropomorphic form; however, they do not model the specific actions of human performance and are focused more on appearance and musical output. Over the past few decades several robotic “bands” have emerged, including the rock bands The Trons, Captured! By Robots, and Compressorhead, as well as a collaboration between Z-Machines7 and Squarepusher on the 2014 album “Music for Robots” (Snake-Beings, 2017; Gallagher, 2017; Davies and Crosby, 2016; Squarepusher x Z-Machines, 2014). MOJA features a drummer, harpist, and flutist performing in a style evocative of traditional Chinese music.8 In addition to these “bands,” the Robotic Musicianship Group at Georgia Tech has developed two well-documented semi-anthropomorphic musical robots: Haile, a robotic drummer, and Shimon, a robotic marimba player (Weinberg and Driscoll 2006; Weinberg et al., 2020).

The anthropomorphic nature of these robots is highlighted primarily by their stylized appearance, rather than an attempt to model the human actions of performance. For example, Compressorhead’s multi-armed drummer “Stickboy” has a mohawk made of metal spikes and is designed to headbang along with the music. MOJA’s robots are dressed in Tang-dynasty style garments. These design choices have nothing to do with sound production; however, they enhance the connection between the audience and robot performers.

Even though the sound-producing mechanisms of these robots do not model human performers, much of the music they play could be easily performed by humans. One exception to this is Squarepusher’s approach, which engages with the unique musical possibilities afforded by Z-Machines’s robots. In the song “Sad Robot Goes Funny” the double-necked “guitar-bot” instrument performs extremely rapid picking while at the same time dynamically changing the chords in a way that would be impossible for a human musician. This takes full advantage of that instrument’s 78 solenoid-based “fingers” and picks that can articulate each string individually. Similarly, while Shimon and Haile are designed to perform with human musicians, both have explored the extra-human musical capabilities of their designs with an emphasis on “play[ing] like a machine” (Weinberg et al., 2020, 95).

Non-Anthropomorphic Robotic Instruments

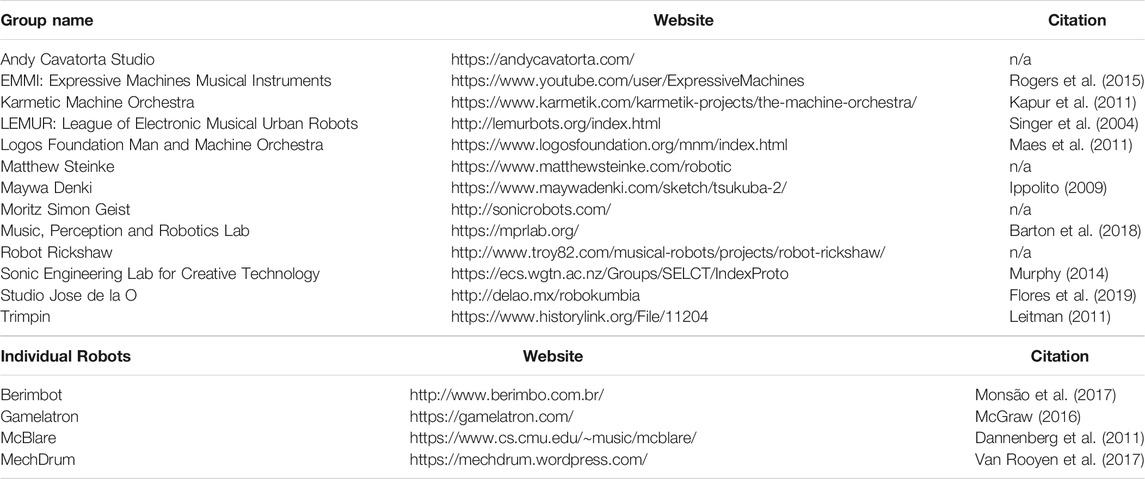

Non-anthropomorphic robotic instruments can either be mechatronic augmentations of existing acoustic instruments (e.g. Yamaha’s Disklavier),9 or newly designed instruments with no acoustic analog (e.g. Andy Cavatorta Studio’s Gravity Harp).10 Table 1 includes a selection of recently active groups and individuals producing collections of non-anthropomorphic robotic instruments, as well as well-documented individual robots.

TABLE 1. Selection of recently active groups and individuals producing collections of non-anthropomorphic robotic instruments, as well as recently designed, well-documented individual robots.

Non-anthropomorphic robotic instruments tend to focus more on sonic nuance than modeling the human actions of performance. For example, the Logos Foundation’s robotic vibraphone <Vibi> couples actuating and dampening solenoids to each bar of the instrument rather than designing robotic arms and hands with multiple degrees of freedom that would model a human performer (Maes et al., 2011, 41).11 This design allows <Vibi> to play much more rapidly than a human performer. It also enables complete polyphony of the instrument as well as individual control of the dampening mechanisms. <Vibi>’s unique capabilities open up a new world of musical possibilities when compared to a human performer on a traditional instrument.

One drawback of <Vibi>’s design is that since the solenoids are mounted below the striking bars it is difficult for the audience to see their movement, obscuring the connection between physical action and sound production. While this is a common issue in this category, some projects are designed to maximize the visibility of movement. For example, LEMUR’s GuitarBot features four vertically mounted strings where pitch is changed on each string with a belt-driven fret that travels over half a meter (Singer et al., 2003). Other approaches include using LEDs to visualize sound production (e.g. Rogers et al., 2015).
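
The musical consequence of pairing a striker and a damper with every bar, as described above for <Vibi>, can be suggested with a toy model. This is a hedged sketch rather than a description of the Logos Foundation's actual control software: the class `BarInstrument`, its methods, and the 37-bar count are hypothetical choices made for illustration.

```python
class BarInstrument:
    """Toy model of a bar instrument with one striker and one damper per bar."""

    def __init__(self, n_bars):
        self.n_bars = n_bars
        self.ringing = set()   # bars currently sounding
        self.log = []          # record of (time_ms, action, bar)

    def strike(self, time_ms, bars):
        """Strike any set of bars at the same instant (full polyphony)."""
        for bar in sorted(set(bars)):
            self._check(bar)
            self.ringing.add(bar)
            self.log.append((time_ms, "strike", bar))

    def damp(self, time_ms, bars):
        """Silence only the named bars, leaving the others ringing."""
        for bar in sorted(set(bars)):
            self._check(bar)
            self.ringing.discard(bar)
            self.log.append((time_ms, "damp", bar))

    def _check(self, bar):
        if not 0 <= bar < self.n_bars:
            raise ValueError(f"no such bar: {bar}")


# Hypothetical 37-bar instrument: a six-note chord struck at once,
# then two of its notes damped while the other four keep ringing.
vibes = BarInstrument(n_bars=37)
vibes.strike(0, [0, 4, 7, 12, 16, 19])
vibes.damp(250, [4, 16])
still_ringing = sorted(vibes.ringing)
```

A six-note simultaneous attack followed by selective damping of two notes is difficult to imagine for a player holding four mallets and sharing one damper pedal across all bars, which is the kind of possibility the per-bar design opens up.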

Cooperative Musical Robots

An emerging area of musical robotics combines human performance and robotic actuation on a single shared interface. Barton describes these devices as cooperative musical machines, differentiating between cooperative (electro)mechanical instruments that do not react to human input, and cooperative robotic instruments that respond and interact with human performers (Barton et al., 2017). Examples of cooperative (electro)mechanical instruments include Maywa Denki’s Ultra Folk acoustic guitar12 and Gurevich’s STRINGTREES (Gurevich, 2014). Examples of cooperative robotic instruments include Barton’s Cyther, a human-playable, self-tuning robotic zither, as well as the previously discussed Haile (Weinberg et al., 2020, 26).

Moving beyond a shared interface, Georgia Tech’s Robotic Drumming Prosthetic Arm robotically augments the capabilities of the body. This device consists of a prosthetic arm outfitted with brushless gimbal motors and a single stage timing belt drive connected to a drumstick (Weinberg et al., 2020, 219). An amputee drummer controls the stick through EMG sensors connected to muscles on the residual limb. Rather than simply serving as a replacement for a human arm, the capabilities of mechatronic design and robotic control, including the addition of a second stick, allow for humanly impossible virtuosity and speed (Weinberg et al., 2020, 213, 226). Beyond musical possibilities, robotic augmentation of the body concretizes notions of posthumanism and the cyborg (Haraway 1991). It also causes observers to question the “humanness” of an augmented individual rather than evoking a sense of anthropomorphism (Swartz and Watermeyer 2008), though that may change as these technologies become more widely accepted.

Individual Actuators Used for Their Own Sound Production Capabilities

The final category in this discussion encompasses projects that focus on the sounds and movement of individual actuators. While some may not consider these approaches to be musical robots due to a lack of complexity in design or sonic output, I argue that they are important to consider in this discussion because 1) as with all of the other approaches described here, they possess electromechanical actuators that produce a visible, physical action resulting in sound production and 2) they distill sound produced by electromechanical actuators to its most basic form.

Several designers have created music using voice coil and stepper actuators from floppy disc and hard drives, as well as from individual stepper motors. These approaches tend to reproduce well-known music, such as Zadrożniak’s arrangement of the “Imperial March” from Star Wars for floppy disc drives.13 While these actuators produce a unique timbre, there is limited capacity for sonic nuance.

Other designers create work that produces sound using the simple actions of motors. For example, Zimoun builds large-scale sound sculptures that feature individual motors actuating resonant objects.14 One installation consists of 658 cardboard boxes that are hit by cotton balls connected to a DC motor by piano wire. As the motor spins, the ball hits the box and produces a resonant sound. In this approach, gravity, friction, and resonance, as well as the movement of the motor itself, produce variations in the sound that make the work compelling.

Discussion

This paper proposes a novel classification system that enables us to consider how different approaches to musical robotics express creativity in different ways. In general, the more overtly anthropomorphic the form, the more central the physical appearance of the project is to its creativity. For example, the Toyota Partner robot’s creative impact stems from the fact that a humanoid robot is performing a quintessentially human activity. As anthropomorphic form diminishes, originality and effectiveness are conveyed through the capacity for sonic nuance, as well as the ways that these machines either accurately model human performance or develop their own robotic performance practice. For an extreme case such as Zimoun’s work, the connection to anthropomorphic behavior in the process of sound production lies at the center of the work. By understanding the connections and divergent goals among different approaches to musical robotics, we can better evaluate the ways that these machines express originality and effectiveness for both designers and audiences. Though the classifications developed here are theoretical in nature, they will hopefully prove useful in developing future studies that explore the ways that musical machines can be considered creative.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. This article defines Robotic Musicianship as the intersection of musical mechatronics and machine musicianship. While the concepts of creativity and anthropomorphism are mentioned in passing, they are not used to classify different approaches to musical robotics.

2. E.g. https://www.patmetheny.com/orchestrioninfo/

3. Toyota Partner Violin Robot: https://www.youtube.com/watch?v=-yInphJdick

4. Toyota Partner Trumpet Robot: https://www.youtube.com/watch?v=6fctULDctuA

5. Toyota Partner Violin Robot (see n.3).

6. http://www.takanishi.mech.waseda.ac.jp/top/research/music/flute/wf_4rii/index.htm (Section IV)

7. https://www.yurisuzuki.com/design-studio/z-machines

8. https://news.tsinghua.edu.cn/en/info/1012/5231.htm

9. https://usa.yamaha.com/products/musical_instruments/pianos/disklavier/index.html

10. https://andycavatorta.com/gravityharps.html

11. https://logosfoundation.org/instrum_gwr/vibi.html

12. https://www.maywadenki.com/sketch/tsukuba-2/

13. https://www.youtube.com/watch?v=yHJOz_y9rZE

References

Bailin, S. (1983). On creativity as making: a reply to Götz. J. Aesthetics Art Criticism 41 (4), 437–442. doi:10.2307/429877

Barton, S., Prihar, E., and Carvalho, P. (2017). “Cyther: a human-playable, self-tuning robotic zither,” in Proceedings of the international conference on new interfaces for musical expression, Copenhagen, Denmark (NIME), 319–324.

Barton, S., Sundberg, K., Walter, A., Baker, L.S., Sane, T., and O'Brien, A. (2018). “A robotic percussive aerophone,” in Proceedings of the 18th international conference on new interfaces for musical expression. 409–412.

Boden, M. A. (1996). “Creativity,” in Artificial intelligence. Editor M. A. Boden (San Diego, CA: Academic Press), 267–291.

Boden, M. A. (2004). The creative mind: myths & mechanisms. 2nd Edn. London, United Kingdom: Routledge.

Bretan, M., and Weinberg, G. (2016). A survey of robotic musicianship. Commun. ACM 59 (5), 100–109. doi:10.1145/2818994

Chadefaux, D., Le Carrou, J-L., Vitrani, M-A., Billout, S., and Quartier, L. (2012). “Harp plucking robotic finger,” in 2012 IEEE/RSJ international conference on intelligent robots and systems (Vilamoura-Algarve, Portugal), 4886–4891. doi:10.1109/IROS.2012.6385720

Dannenberg, R. B., Brown, H. B., and Lupish, R. (2011). “McBlare: a robotic bagpipe player,” in Musical robots and interactive multimodal systems. Springer tracts in advanced robotics. Editors J. Solis, and K. Ng (Berlin: Springer).

Davies, A., and Crosby, A. (2016). “Compressorhead: the robot band and its transmedia storyworld,” in Cultural robotics. Editors J. T. K. V. Koh, B. J. Dunstan, D. Silvera-Tawil, and M. Velonaki (Cham: Springer International Publishing).

Doi, M., and Nakajima, Y. (2019). “Toyota partner robots,” in Humanoid robotics: a reference. Editors A. Goswami, and P. Vadakkepat (Dordrecht: Springer Nature).

Fink, J. (2012). “Anthropomorphism and human likeness in the design of robots and human-robot interaction,” in Social robotics. Editors S. Sam Ge, O. Khatib, J. Cabibihan, R. Simmons, and M-A. Williams (Berlin, Heidelberg: Springer), 199–208.

Flores, R. I., Morán, R. M. L., and Ruano, D. S. (2019). Mexi-futurism. The transitorial path between tradition and innovation. Strateg. Des. Res. J. 12 (2), 222–234. doi:10.4013/sdrj.2019.122.08

Gallagher, D. (2017). Jay Vance may play metal with homemade robots, but he says it's not meant to be funny. Available at: https://www.dallasobserver.com/music/jay-vance-frontman-of-captured-by-robots-on-how-he-turned-his-novelty-robot-band-into-a-serious-one-10081744 (Accessed December 10, 2020).

Goswami, A., and Vadakkepat, P. (Editors) (2019). Humanoid robotics: a reference. Dordrecht, Netherlands: Springer.

Götz, I. L. (1981). On defining creativity. J. Aesthetics Art Criticism 39 (3), 297–301. doi:10.2307/430164

Gurevich, M. (2014). “Distributed control in a mechatronic musical instrument,” in Proceedings of the international conference on new interfaces for musical expression, 487–490. London, United Kingdom: Zenodo.

Haraway, D. (1991). “A cyborg manifesto: science, technology, and socialist-feminism in the late twentieth century,” in Simians, cyborgs, and women: the reinvention of nature (New York, NY: Routledge).

Ippolito, J. (2009). Art commodities from Japan: propagating art and culture via the internet. Int. J. Arts Soc. 4 (4), 109–116. doi:10.18848/1833-1866/cgp/v04i04/35682

Jones, R. (2017). Archaic man meets a marvellous automaton: posthumanism, social robots, archetypes. J. Anal. Psychol. 62, 338–355. doi:10.1111/1468-5922.12316

Kapur, A. (2005). “A history of robotic musical instruments,” in Proceedings of the 2005 international computer music conference, ICMC 2005. September 4-10, 2005, Barcelona, Spain. Available at: http://hdl.handle.net/2027/spo.bbp2372.2005.162.

Kapur, A., Darling, M., Diakopoulos, D., Murphy, J. W., Hochenbaum, J., Vallis, O., et al. (2011). The machine orchestra: an ensemble of human laptop performers and robotic musical instruments. Comp. Music J. 35 (4), 49–63. doi:10.1162/comj_a_00090

Kemper, S., and Barton, S. (2018). “Mechatronic expression: reconsidering expressivity in music for robotic instruments,” in Proceedings of the 18th international conference on new interfaces for musical expression. Blacksburg, Virginia, USA: Zenodo, 84–87. doi:10.5281/zenodo.1302689

Kemper, S. (2014). Composing for musical robots: aesthetics of electromechanical music. Emille: J. Korean Electro-Acoustic Music Soc. 12, 25–31.

Kemper, S., and Cypess, R. (2019). Can musical machines be expressive? Views from the Enlightenment and today. Leonardo 52 (5), 448–454. doi:10.1162/LEON_a_01477

Long, J., Murphy, J., Carnegie, D., and Kapur, A. (2017). Loudspeakers Optional: a history of non-loudspeaker-based electroacoustic music. Organised Sound 22 (2), 195–205. doi:10.1017/S1355771817000103

Maes, L., Raes, G-W., and Rogers, T. (2011). The man and machine robot orchestra at Logos. Comp. Music J. 35 (4), 28–48. doi:10.1162/comj_a_00089

McGraw, A. (2016). Atmosphere as a concept for ethnomusicology: comparing the gamelatron and gamelan. Ethnomusicology 60 (1), 125–147. doi:10.5406/ethnomusicology.60.1.0125

Monsão, I. C., Cerqueira, J. D. J. F., and da Costa, A. C. P. L. (2017). The berimbot: a robotic musical instrument as an outreach tool for the popularization of science and technology. Int. J. Soc. Robotics 9, 251–263. doi:10.1007/s12369-016-0386-3

Murphy, J. (2014). Expressive musical robots: building, evaluating, and interfacing with an ensemble of mechatronic instruments. PhD dissertation. Wellington, NZ: Victoria University of Wellington.

Murphy, J., Kapur, A., and Carnegie, D. (2012). Musical robotics in a loudspeaker world: developments in alternative approaches to localization and spatialization. Leonardo Music J. 22, 41–48. doi:10.1162/lmj_a_00090

Park, H., Lee, B., and Kim, D. (2016). Development of anthropomorphic robot finger for violin fingering. ETRI J. 38, 1218–1228. doi:10.4218/etrij.16.0116.0129

Rogers, T., Kemper, S., and Barton, S. (2015). “MARIE: monochord-aerophone robotic instrument ensemble,” in Proceedings of the international conference on new interfaces for musical expression. Baton Rouge, Louisiana, USA: Zenodo, 408–411. doi:10.5281/zenodo.1179166

Runco, M. A., and Jaeger, G. J. (2012). The standard definition of creativity. Creativity Res. J. 24 (1), 92–96. doi:10.1080/10400419.2012.650092

Shibuya, K., Matsuda, S., and Takahara, A. (2007). “Toward developing a violin playing robot - bowing by anthropomorphic robot arm and sound analysis,” in RO-MAN 2007 - the 16th IEEE international symposium on robot and human interactive communication. Jeju, Korea (South), 763–768. doi:10.1109/ROMAN.2007.4415188

Singer, E., Feddersen, J., Redmon, C., and Bowen, B. (2004). “LEMUR’s musical robots,” in Proceedings of the international conference on new interfaces for musical expression. Hamamatsu, Japan: Zenodo. doi:10.5281/zenodo.1176669

Singer, E., Larke, K., and Bianciardi, D. (2003). “LEMUR GuitarBot: MIDI robotic string instrument,” in Proceedings of the international conference on new interfaces for musical expression, 188–191.

Snake-Beings, E. (2017). The do-it-yourself (DiY) craft aesthetic of the trons − robot garage band. Craft Res. 8 (1), 55–77. doi:10.1386/crre.8.1.55_1

Solis, J., Chida, K., Taniguchi, K., Hashimoto, S. M., Suefuji, K., and Takanishi, A. (2006). The Waseda flutist robot WF-4RII in comparison with a professional flutist. Comp. Music J. 30 (4), 12–27. doi:10.1162/comj.2006.30.4.12

Solis, J., and Hashimoto, A. K. (2010). “Development of an anthropomorphic saxophone-playing robot,” in Brain, body and machine proceedings of an international symposium on the occasion of the 25th anniversary of McGill university centre for intelligent machines. Editors B. Boulet, J. J. Clark, J. Kovecses, and K. Siddiqi (Berlin: Springer), 175–186. doi:10.1007/978-3-642-16259-6

Swartz, L., and Watermeyer, B. (2008). Cyborg anxiety: oscar Pistorius and the boundaries of what it means to be human. Disabil. Soc. 23 (2), 187–190. doi:10.1080/09687590701841232

Szollosy, M. (2017). Freud, Frankenstein and our fear of robots: projection in our cultural perception of technology. AI Soc. 32, 433–439. doi:10.1007/s00146-016-0654-7

Van Rooyen, R., Schloss, A., and Tzanetakis, G. (2017). Voice coil actuators for percussion robotics. doi:10.5281/zenodo.1176149

Weinberg, G., and Driscoll, S. (2006). Toward robotic musicianship. Comp. Music J. 30 (4), 28–45. doi:10.1162/comj.2006.30.4.28

Weinberg, G., Bretan, M., Hoffman, G., and Driscoll, S. (2020). Robotic musicianship: embodied artificial creativity and mechatronic musical expression. Switzerland: Springer Nature.

Keywords: robotic musical instruments, creativity, anthropomorphism, music generation, musical robotics

Citation: Kemper S (2021) Locating Creativity in Differing Approaches to Musical Robotics. Front. Robot. AI 8:647028. doi: 10.3389/frobt.2021.647028

Received: 28 December 2020; Accepted: 11 February 2021;

Published: 23 March 2021.

Edited by:

Amy LaViers, University of Illinois at Urbana-Champaign, United States

Reviewed by:

Myounghoon Jeon, Virginia Tech, United States

Richard Savery, Georgia Institute of Technology, United States

Copyright © 2021 Kemper. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Steven Kemper, skemper@mgsa.rutgers.edu
