94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Robot. AI, 12 April 2021

Sec. Computational Intelligence in Robotics

Volume 8 - 2021 | https://doi.org/10.3389/frobt.2021.562524

This article is part of the Research TopicBiological and Robotic Inter-Limb CoordinationView all 23 articles

There are currently many quadruped robots suited to a wide range of applications, but traversing some terrains, such as vertical ladders, remains an open challenge. There is still a need to develop adaptive robots that can walk and climb efficiently. This paper presents an adaptive quadruped robot that, by mimicking feline structure, supports several novel capabilities. We design a novel paw structure and several point-cloud-based sensory structures incorporating a quad-composite time-of-flight sensor and a dual-laser range finder. The proposed robot is equipped with physical and cognitive capabilities which include: 1) a dynamic-density topological map building with attention model, 2) affordance perception using the topological map, and 3) a neural-based locomotion model. The novel capabilities show strong integration between locomotion and internal–external sensory information, enabling short-term adaptations in response to environmental changes. The robot performed well in several situations: walking on natural terrain, walking with a leg malfunction, avoiding a sudden obstacle, climbing a vertical ladder. Further, we consider current problems and future development.

Robots have become necessary to ease human tasks in many contexts such as industrial, military, entertainment, and disaster settings. Robots have different structures for different purposes. Arm-like robots feature in industrial contexts for performing hand-like functions. Humanoid robots with a wheeled base are often used in social and entertainment contexts. Likewise, robots with legs have an advantage on rough terrain, making them suitable for military and disaster contexts. From a broader perspective, legged robots are more versatile than wheeled robots simply because less than half of the world’s terrain can be accessed on wheels.

There are currently many varieties of legged robot exhibiting inspired designs and performance. Boston Dynamics has built many quadruped robots that have excellent capability on rough terrain (Ackerman, 2016). Quadruped robots developed at Waseda University have also demonstrated performance on rough terrain and in ladder-climbing (Hashimoto et al., 2019). Their movement, however, seems slow compared with existing quadruped robots. Most legged robot researchers implement biological structures of quadruped animals to benefit from the animal’s performance. MIT, for example, has built a Cheetah-like robot that moves at high speed (Hyun et al., 2014). BigDog (Raibert et al., 2008), Spotmini (Ackerman, 2016), HyQ (Semini et al., 2011), and Laikago (Spectrum, 2019) are inspired by dogs. They show flexibility of omni-directional movement on natural terrain. Ijspeert’s group took their inspiration from salamanders (Crespi et al., 2013). Animal-inspired robots, however, draw their mobility capabilities from the animals that they are designed after. In contrast to dogs and salamanders, cats are able to climb as well as walk, run and leap over rough terrain. Their claws allow agile climbing behaviors. We have therefore proposed a quadruped robot inspired by feline morphology. We propose a unique paw structure with a gripping mechanism.

The proposed robot is equipped with physical and cognitive capabilities, which include: 1) affordance perception for movement behavior, 2) path planning, 3) a dynamic locomotion generator, 4) stabilization behavior.

For the movement-related perception process, researchers have used different sensors and different strategies. LittleDog (Kolter et al., 2009) used stereo-vision to build the terrain model for the space in front of the robot. Then, it performs footstep planning for the next stepping movement (Kolter et al., 2008). Other researchers have done similar work in perception strategy (Diebel et al., 2004)(Gao et al., 2007). Havoutis et al. used an RGBD camera to perceive environmental conditions. Their robot then generates a motion pattern and undertakes foothold planning (Havoutis et al., 2013). Their subsequent work continues on to advanced implementation, such as stair-climbing (Winkler et al., 2015). The MIT Cheetah robot performs impressively while running and jumping to avoid an obstacle (Park et al., 2015). This robot uses LRF (laser range finder) sensors to detect upcoming obstacles, and identifies them using an iterative end-point fitting (IEPF) algorithm. Once an obstacle is perceived, the robot prepares the jump by controlling speed.

Manchester et al., used more complex external sensors such as vision, laser, and radar sensors. Their robot builds a terrain map model and then generates a sequence of footstep locations and associated joint trajectories. The perception is only effective on slow timescales. The footstep planning is updated in every footstep (Manchester et al., 2011). The high-rate timescale is used only for internal sensory response. Many researchers also conducted footstep planning, updated at every footstep, in both bipedal (Deits and Tedrake, 2014) (Maier et al., 2013) (Kuindersma et al., 2016) and hexapodal robots (Belter and Skrzypczyński, 2011). Taking a different approach, Hoffmann et al. use a closed-loop strategy for perception and action. They developed interaction between the robot’s embodiment and its environmental context. The robot adjusts its gait or speed when environmental changes are detected (Hoffmann et al., 2011). In this work, the robot reconstructs its map before generating motion plans that address only high-level motion (speed, step length, step height). Next, the stability model controls the low-level motion. The external sensory information is hence not directly used in low-level motion planning. In our proposal, the cognitive model plays a role in the lower-level locomotion model. Using external sensory information and a laser sensor costs less in computational processing to detect object shapes, than using a vision sensor.

The locomotion generator, as its name suggests, generates the movement behavior appropriate to particular conditions. There are many models for legged-robot locomotion. Most researchers implement trajectory-based locomotion for its simplicity; this has been done in bipedal (Manchester et al., 2011)(Zhang et al., 2014b)(Nandi et al., 2016)(Saputra et al., 2015a)(Khusainov et al., 2016)(Saputra et al., 2015c), quadrupedal (Winkler et al., 2015)(Kolter et al., 2008)(Mastalli et al., 2017)(Zhang et al., 2016)(Matsuzawa et al., 2016), and hexapodal robots (Qian and Goldman, 2015) (Zhu et al., 2016). Trajectory-based models control the motion planning in Cartesian coordinates using polynomial equations or Bézier curves (Manchester et al., 2011). Other researchers use center-of-gravity–based trajectory generation for quadrupedal robots (Winkler et al., 2015)(Mastalli et al., 2017). These center-of-gravity trajectory models have been successfully implemented for complex terrain. However, this approach has proven lacking on dynamic locomotion behavior. The trajectory-based approach needs to plan scenario motion planning in advance, and requires extensive parameter-tuning.

On the other hand, some researchers have tried other ways to develop dynamic locomotion patterns that can synchronize automatically with sensory feedback. They consider natural processes to develop locomotion models from human and animal gaits. Quadrupedal animals can generate gait patterns (walk, pace, amble, trot, gallop) automatically, depending on the animal’s intentions and environmental conditions. The animal’s body structure also regulates the gait pattern, which means every kind of animal has different gait efficiencies. Nakada et al. propose a neuromorphic locomotion model with a CMOS (Complementary Metal Oxide Semiconductor) controller for inter-limb coordination in quadrupedal robots (Nakada et al., 2003), while other researchers propose central pattern generation (CPG) for quadrupedal robot locomotion (Ijspeert and Cabelguen, 2006)(Asadi et al., 2015)(Maufroy et al., 2008)(Zhang et al., 2014a)(Sun et al., 2018)(Liu et al., 2018). Ijspeert’s group proposed CPG–based control of their salamander robot (Ijspeert and Cabelguen, 2006), which can transition dynamically from walking to swimming. Transitional movements in quadruped robot have also been proposed using CPG model by several researchers (Maufroy et al., 2010; Fukuoka et al., 2015; Owaki and Ishiguro, 2017). Other researchers have developed integration between CPG and ground reaction feedback to synchronize the gait with terrain conditions (Maufroy et al., 2008). Zhang et al., for example, designed a CPG-based controller for trotting (Zhang et al., 2014a). CPG gait generators can be implemented using a spiking neural network (Espinal et al., 2016) or a recurrent neural network (Tran et al., 2014). Sun et al. used a decoupled neural CPG circuit for adaptive locomotion (Sun et al., 2018). In our previous model, we combined the CPG with a Bézier curve model for efficiency. We implemented our ideas in a small quadrupedal robot, but it showed limitations on handling variant gait (Saputra et al., 2016)(Saputra et al., 2015b). The quadrupedal robot proposed in the present paper will be implemented as an efficient neural-based locomotion model using a single-rhythm generator-based CPG model, and will include a reflex system for synchronizing with locomotion events. Here, the reflex system is composed as the muscle reflex system explained in (Saputra et al., 2020b) and sensory afferent from force sensor in each leg explained in Affordance Detection for Grasping.

Our robot is equipped with external and internal sensors. We use point-cloud data information generated by a laser depth sensor as external sensory information. There are many robots that effectively detect and recognize obstacles using depth sensors (Park et al., 2015)(Hashimoto et al., 2019)(Camurri et al., 2015). The WAREC robot, for example, has a rotating laser range-finder array for scanning the surrounding environment (Hashimoto et al., 2019). Since depth sensors are limited in frequency rate, size, weight, and range, we propose a light-weight array of time-of-flight sensors which alleviates these limitations. To provide internal sensory information, we use an inertial measuring unit (IMU), four force sensors, and four grip-touch sensors.

This paper is organized as follows: In Design of Robot’s Hardware, we describe the robot’s mechanical and hardware design. Movement-Related Capabilities examines the robot’s unique capabilities. Robot Implementationtn shows the implementation of the robot and demonstrates its effectiveness. Finally, in Conclusions and Future Plans, we conclude the paper.

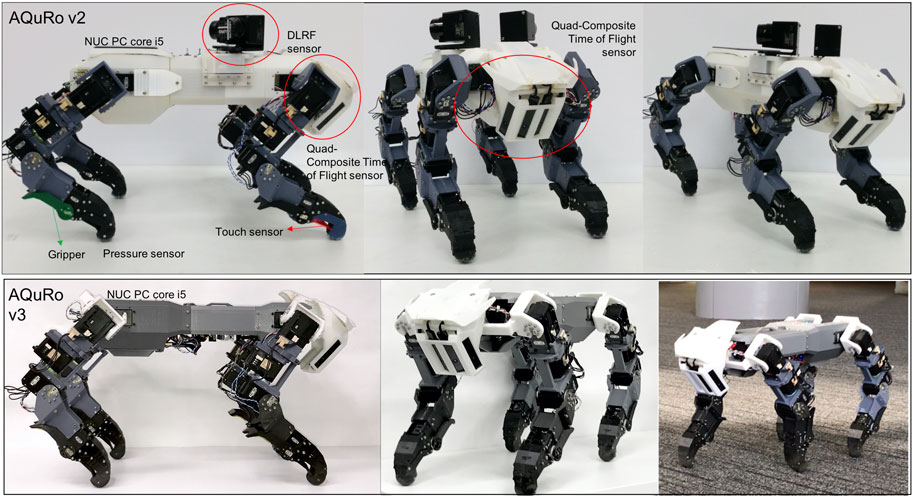

As stated in the Council on Competitiveness—Nippon (COCN) report, robots suitable for use in disaster situations must be able to move over all of rough, sloped and natural terrain (grass, uneven soil), through narrow spaces, and be able to climb stairs and vertical ladders (Council on Competitiveness Nippon (COCN), 2013). When we seek inspiration from the animal kingdom, the cat family (Felidae) stands out as able do all of these things. Cats can handle many complex environmental conditions. They can swim, are agile, and can climb trees. The cat offers a most appropriate archetype to imitate in agile quadrupedal robots. The feline robot that we developed is shown in Figure 1.

FIGURE 1. The quadruped robot. AQuRo v2 is attached with DLRF sensor and AQuRo v3 has slimmer body without DLRF sensor.

Our proposed quadrupedal robot is similar in size to a mature domestic cat: 25 cm (width) × 60 cm (length) × 30 cm (height). The robot has around 7 kg of weight. Figure 2 The robot’s foreleg imitates the cat’s forelimb structure minus the wrist joint. It has only two joints, the shoulder and elbow. There are three actuators associated with the ball joint structure of the shoulder, and one actuator associated with the hinge joint structure of the elbow. To design the robot’s hindleg, we considered the cat’s rhythmic motion, in which the proximal and distal leg segments maintain their relative angular orientation during most of the cycle, the deviation of angular joints differing only at the onset of toe-off (Witte et al., 2001). In the hindlegs, therefore, we simplified by eliminating the knee joint so the ankle and hip joints could be directly integrated. The leg can be seen in Figure 3. There are five degrees of freedom in each leg, one of which is used as the gripper joint. The tibia is 175 mm long, and the femur is 145 mm long. The robot’s Denavit-Hartenberg parameters are summarized in Table 1.

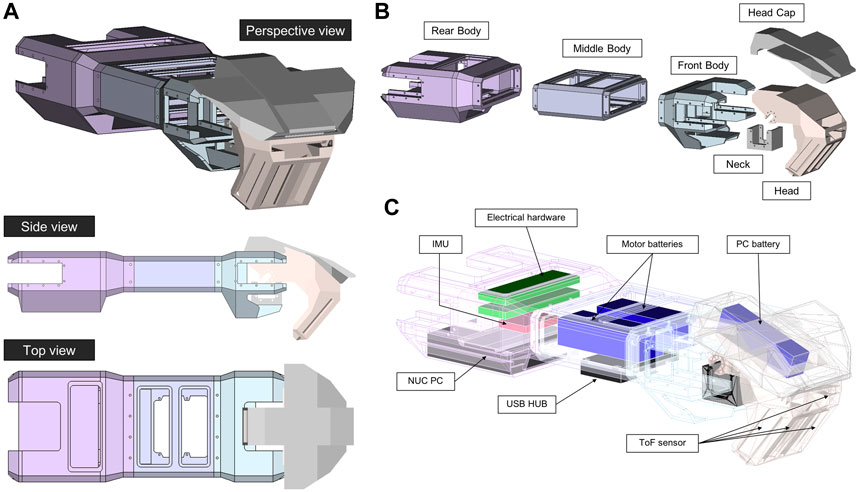

FIGURE 2. The robot body (torso and head). (A) Orthographic projections. (B) Interior hardware placements. (C) Exploded parts.

The robot’s overall body shape can be seen in Figure 1. The shell was 3D-printed in poly-(lactic acid) (PLA). The robot body comprises three parts: rear, middle, and front. The rear legs are attached to the rear part, which also holds the NUC PC, IMU sensor, and electrical hardware. The middle part holds two batteries for the motors and a USB Hub. The front part provides an attachment point for the neck and head. The head holds a battery for the PC.

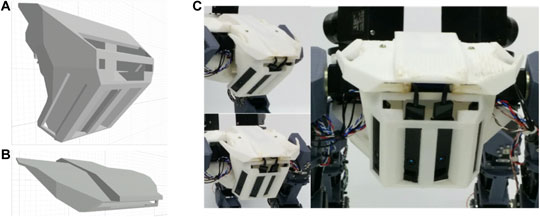

We designed the end effector to support agile movements such as walking on rough terrain and climbing vertical ladders. The end effectors must also measure the ground reaction force, and must satisfy size constraints. When climbing, cats use claws to grasp rocky walls, trees, poles, etc. Shiquan et al. developed an end effector with a dense array of micro-spines (Wang et al., 2016) for rock climbing. It needs a larger space, however, than is appropriate for our proposed robot. Furthermore, cats grasp by using two limbs in concert. Cats also find it difficult to climb vertical ladders.

In contrast, humans and monkeys have hands to hold and hang from ladder rungs. However, the hand mechanism for such hanging behavior needs a huge torque, which would require a correspondingly bigger servomotor. We simplified using a hook-shaped end effector that requires no actuator. The design can be seen in Figure 4. The end is rounded to simplify footing, eliminating the need for an actuator. Furthermore, in the sensory design, we put a force-sensitive resistor (FSR) between the upper and lower parts of the paw. A switch inside the hook cavity serves as a sensor to detect whether the paw is hooked over a rung. Behind the paw is a moveable claw for grasping and for supporting the hindleg to stand on a rung. The claw, moved by a low-torque servomotor, helps to avoid slippage.

We provided the cat robot with several sensors representing exteroceptors and interoceptors. To represent the exteroceptors, we built a quad-composite time-of-flight sensor for detecting the surroundings in front the robot, and a dual-laser range-finder for observing more widely. To represent interoceptors, we installed force sensors (force-sensitive resistors) and touch sensors (microswitches) in each leg, and an inertial measurement unit (IMU) inside the body. We use IMU module NG-IMU, as specified in Table 2.

This sensor will be installed in the head of the robot. It combines four CamBoard pico flexx ToF sensors made by pmd, as specified in Table 3. The composite sensor structure is depicted in Figure 5. This design, combined with the robot’s head shape, provides a wide field of view in order to minimize the number of actuators needed in the robot’s neck. The neck hence contains only one actuator, rotating in the sagittal plane. The CAD design drawings and photographs can be seen in Figure 6.

FIGURE 6. The quad-composite time-of-flight sensors. (A) Head housing; the sensors are mounted inside the downward-facing slits. (B) Head cap. (C) The head with installed sensors.

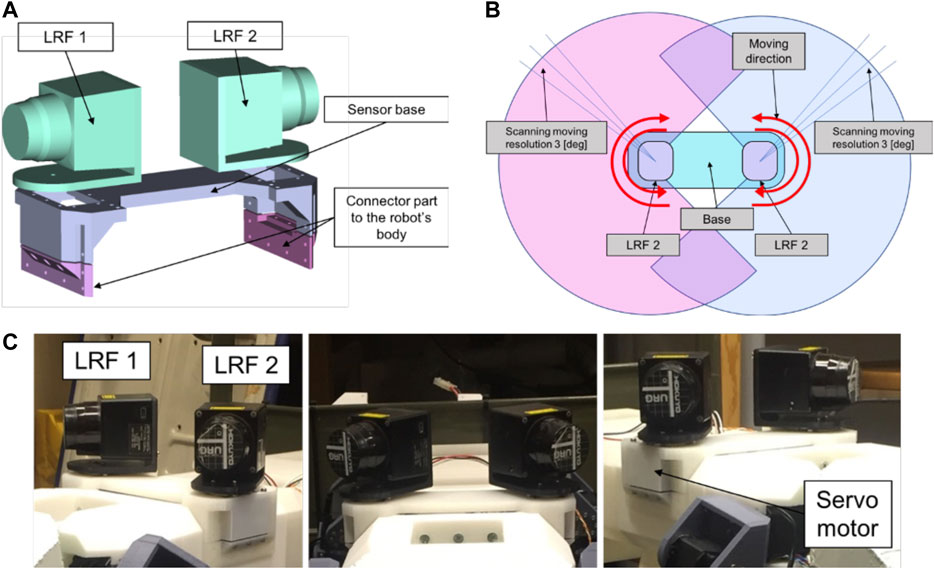

The DLRF is composed of two LRF sensors, with each LRF is attached to a Dynamixel MX-28 servomotor. This mechanism allows the sensors to measure distances. The design can be seen in Figure 7. Table 4 shows the specifications of the LRF sensor used. In Figure 7C we can see the moving mechanism of the sensors. The sensors will move symmetrically, where if the left sensor moving clockwise then the opposite sensor will move counterclockwise. Each sensor will move 240 [degree] of range. After reaching the limit degree, then the sensor will move the opposite direction.

FIGURE 7. Design of DLRF sensor. (A) CAD design. (B) The structure of the sensor (C) The appearance design of the Dual LRF sensor.

The robot has been equipped with a hardware configuration to handle both the internal and the external sensory information. The hardware structure can be seen in Figure 8. We use an ATmega 8 microcontroller as the sub-controller for pre-processing the internal sensory inputs from the force sensor, touch sensor, and IMU. A NUC PC core i3 serves as the main controller for processing several advanced systems such as perception, motion control, communication, and interfacing.

The sub-controller processes analog signals from the force sensor, touch sensor, and IMU sensor through its analog to digital converter input. The data stream is then transferred to the main controller via a USB connection. All external sensory information is also conveyed via USB connections. The main controller generates digital motor control signals for all of the servomotors. There are 12 servo motors Dynamixel MX-106, four servo motors Dynamixel MX-64, and seven servo motor Dynamixel MX-28.

The electrical system is powered by two 4–cell lithium–polymer batteries holding 2700 mAh (14.8 V) and one 4–cell lithium–polymer battery holding 2200 mAh (14.8 V). The batteries are expected to be power the robot for around 15 min.

We implemented the robot’s movement-related capabilities by integrating external and internal sensory information. This integration allows external sensory information to inform movement behaviors in short adaptation times, as happens in animals: when an obstacle suddenly appears during the walking swing phase, the swing is changed in response, bringing the foot into a safe area. This mechanism illustrates the importance of external sensory information (in this case, vision) for movement behavior.

To use depth information or a 3D point-cloud data as the external sensory information, our robot’s processing system includes 1) a dynamic-density topological map-builder with an attention model, 2) an affordance perceptor using the topological map, and 3) a neural network-based locomotion model.

We present a novel algorithm to realize an attention mechanism for robot movement, based on the dynamic density of a growing neural gas. The aim of this model is to reduce the data representation overhead associated with the 3D point-cloud data. The basic real-time Growing Neural Gas (GNG) technique has been implemented in our previous path planning model (Saputra et al., 2017). We extended the GNG by adding a dynamic-density model. The algorithm’s details are given in (Saputra et al., 2019b)(Saputra et al., 2019a). A comparison between the common GNG and the proposed GNG augmented with dynamic attention can be seen in the link of Video 1.

The concept of affordance originated from Gibson, in ecological psychology (Gibson, 1977). Turvey describes affordance as the environment’s dispositional properties. The actor’s effectivity or dispositional properties will supplement what the environment provides. Affordance provides important details governing the actor’s potential behavior and capability. A difference in the robot’s embodiment can therefore lead to different affordance perceptions (Turvey, 1992). The aim of our proposed affordance perception model is to find a suitable integration between environmental conditions and possible actions for the robot. We built affordance perception systems for the robot’s locomotion, ladder detection, and grasping.

In the locomotion system, the active behavior is regulated by perceiving the affordances. Prospective actions are therefore produced according to the affordance information obtained. In our model, affordances are detected by examining planes in the topological map generated by the dynamic-density growing neural gas (DD-GNG). The affordances of interest are horizontal (or nearly horizontal) surfaces that the robot can step on. These are found by calculating the plane’s slope. We calculated the normal vector of triangular facets in the topological structure using Eq. 1, as illustrated in Figure 9.

After that, the slope of plane facet (γi) in every surrounding surface needs to be calculated using Eq. 2. A safe-to-step-on factor can then be calculated by considering how vertical the plane is.

Where,

The model aims to present low-cost real-time vertical ladder-detection from 3D point-cloud data. The output from the DD–GNG is used as input to the affordance model. Feature-extraction is needed to identify suspected artifacts for the next stage of processing. Thereafter, vertical ladder-rung detection is processed using an inlier–outlier system. The ladder detection system thereby represents the ladder as a set of nodes and edges. Next, we detect the graspable locations by considering the robot’s embodiment. The details of the proposed detection system can be seen in (Saputra et al., 2019a).

This affordance detection process aims to detect possible gripping positions on the object. The process generates a seven-dimensional representation of grippable locations: (3D location, 3D rotation, and object diameter). We put an RGB camera above the robot’s quad-composite ToF sensor to detect the target object. Detection of target objects is performed by a computer vision algorithm. After that, the topological structure will be generated by using the proposed DD–GNG. The density of the topological structure is centralized on the desired object. Based on the inlier–outlier process, the possible gripping information is determined from topological map information and the robot gripper embodiment. Gripping possibilities can then be ranked from ‘best’ to ‘worst’ in any identified gripping solution. Details of this process can be seen in previous research (Saputra et al., 2019b).

Our locomotion model responds to current CPG development challenges in quadruped locomotion research. We present an efficient and solid CPG model that dynamically integrates with sensory feedback for generating various gaits, and allows for leg malfunction compensation without greatly increasing the number of parameters involved. The model has two feedback mechanisms based on sensorimotor coordination (Rossignol and Frigon, 2011)(Lam and Pearson, 2002). In the first feedback mechanism, sensory feedback is used to adjust CPG modulation. This is done by feeding proprioceptive signals representing the leg’s force exertion and swing phase back to the rhythm generator neurons (RG). This feedback is reduced by the second feedback mechanism, when legs are injured. A nociceptor neuron in the injured leg sends a signal to modify the effects of that same leg’s other sensory signals to the RG. Furthermore, we integrate the locomotion functions with supraspinal-level functions generated from cognitive information. Our overall model mimics the descent of influence from attention mechanisms driven by visual information down to muscle activation. Our model addresses the problem of providing short-term adaptation in response to perceiving a sudden obstacle Table 5.

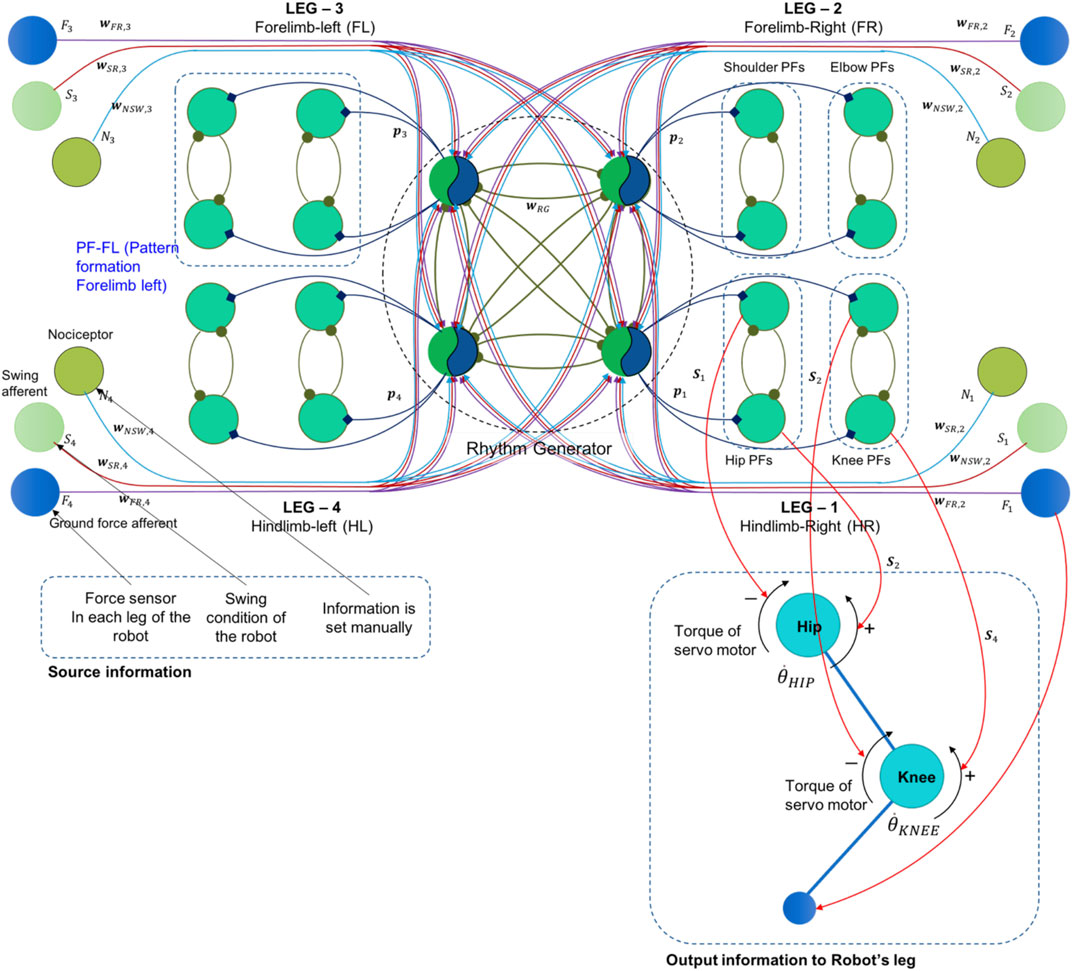

We designed a single-model CPG in which each RG neuron represents the movement pattern of one leg, and each pattern formation (PF) neuron represents the activation of one muscle. Since we use four muscles in one leg (flexor and extensor muscles of hip and knee joint), each limb structure in the CPG network comprises one RG neuron and four PF neurons. Our model uses two CPGs, one for the forelimbs, and one for the hindlimbs. The overall CPG design can be seen in Figure 10. We extend the CPG model from our previous model published in (Saputra et al., 2020b). We used the Matsuoka neural-oscillator model to generate a dynamic signal. The inner state of the RG neuron can be seen in this following equation:

FIGURE 10. The single-rhythm CPG model with a two-layered CPG. The rhythm generator neurons received feedback signals from a force sensor, a pain receptor, and a swing sensor in each leg.

The signal from RG neurons will be transmitted to the PF neurons. PF neuron will generate spike activation for swinging action in certain leg. The spike signal of PF neurons (Pi) is calculated in the Eq. 6, where the references signal (hiref) is calculated in Eq. 7, R is subtraction constant parameter, γref is discount rate of hiref, and q is the spike threshold. We also provide a source code of the CPG model in the attached link of Supplementary Material S3.

In the process, RG neurons have a rhythmic pattern signal and generate the spike signal to the PF neurons. The parameter PFi,k(t)in Eq. 8 is the signal generated by kth of PF neuron in ith leg. It will activate the muscle stimulation explained in the previous research (Saputra et al., 2020b)(Saputra et al., 2020a). The output of the muscle stimulation (Si) will be converted to the direction the torque of servo actuator in the robot’s leg. The connection information can be seen in the Figure 10. Torque of one servo motor is driven by two muscle stimulation for different direction, flexor muscle stimulation is for CW direction and extensor stimulation is for CCW direction. Regarding to the Figure 10, the total torque and the servo angular velocity are approached by Eqs. 9 Eqs. 10, where (r) is the attachment length of muscle assumption, defined as 0.03 m.

In order to test and demonstrate the robot’s capabilities, we had the robot move across natural terrain, walk with a leg malfunction, avoid a sudden obstacle while walking, and climb on a vertical ladder. The optimization process of CPG model and muscle activation function can be seen in our previous papers (Saputra et al., 2020b)(Saputra et al., 2020a).

We trialed the robot on natural terrain (grassed soil with varying slope) and flat terrain (a carpeted floor). The robot’s gait pattern was controlled using the proposed neural-network locomotion generator. The optimized parameter of CPG model as pattern generation can be seen in Table 6.

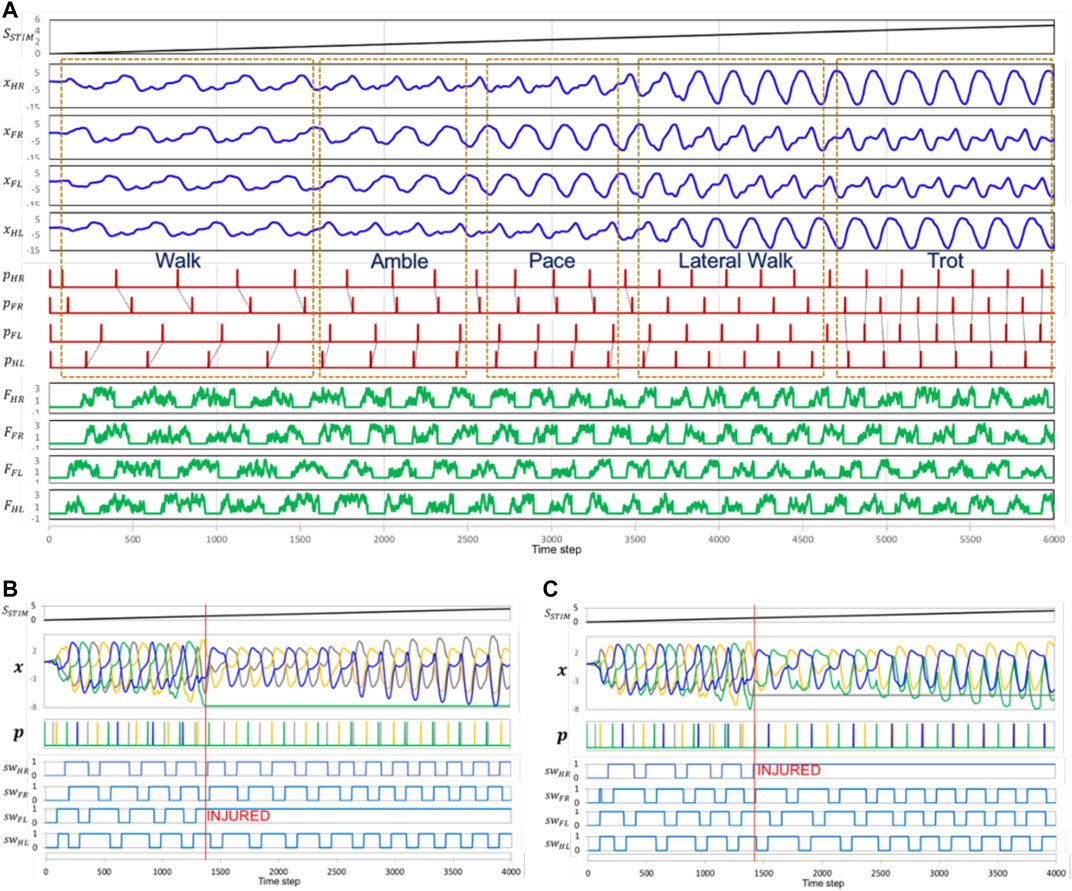

Sample snapshots of the robot’s performance can be seen in Figures 11A,B. We set SSTIM from zero and gradually increase along the value of time step (SSTIM = time step/1200). The result can be seen in Figure 12A. The CPG model can generate dynamic gait pattern. The robot can produce dynamic gait patterns to walk, amble, pace and trot successfully across both terrains.

FIGURE 11. The robot’s dynamic gait pattern (A) on natural terrain (B) on flat terrain. (C) Dynamic gait pattern on flat terrain with injured forelimb. (D) On flat terrain with injured hindlimb. (E) On natural terrain with injured forelimb. (F) On natural terrain with injured hindlimb. The video of robot’s performance can be seen in the link of Video 2.

FIGURE 12. (A) The locomotion model can generate dynamic gait pattern by giving different speed stimulation. It shows there are five different known gait patterns from slow speed to high speed. The CPG model can generate a dynamic gait pattern through differing speed stimulation SSTIM. This increases the frequency of the CPG outputs x of five different known gait patterns from slow speed to high speed. Parameter FHR, FFR, FHL, FFL shows the ground reaction force for every limb. (B) The generated gait patterns in malfunction conditions and the speed stimulation responses. The signal pattern p is changing to respond to the absence of CPG signals. The time phase decreases after a leg is injured. The malfunction of the right forelimb (NFL = 1) is at time step 1380. During injury, the model tends to generate a pattern with the same phase difference at a lower speed. At high speeds, left and right hindlimbs feature the same phase. (C) malfunction of the left hindlimb at time step 1400. In this condition, the left and right forelimbs feature the same phase at a higher speed.

We tested the robot in two conditions: 1) with an injured forelimb, and 2) with an injured hindlimb. Both tests began with the robot in normal gait. After a few seconds, we set one of the legs to its ‘injured’ state. In this case the locomotion model cannot generate signal to the injured leg. However, the torque force of the leg is still active. In both tests, the robot responded by appropriately transitioning its without falling down. These tests were conducted on both artificial and natural terrain. Snapshots of the robot’s performance can be seen in Figures 11C–F. The corresponding video is can be seen in the link of Video 2. Furthermore, the movement transition when leg got injured can be analyzed in Figures 12B,C.

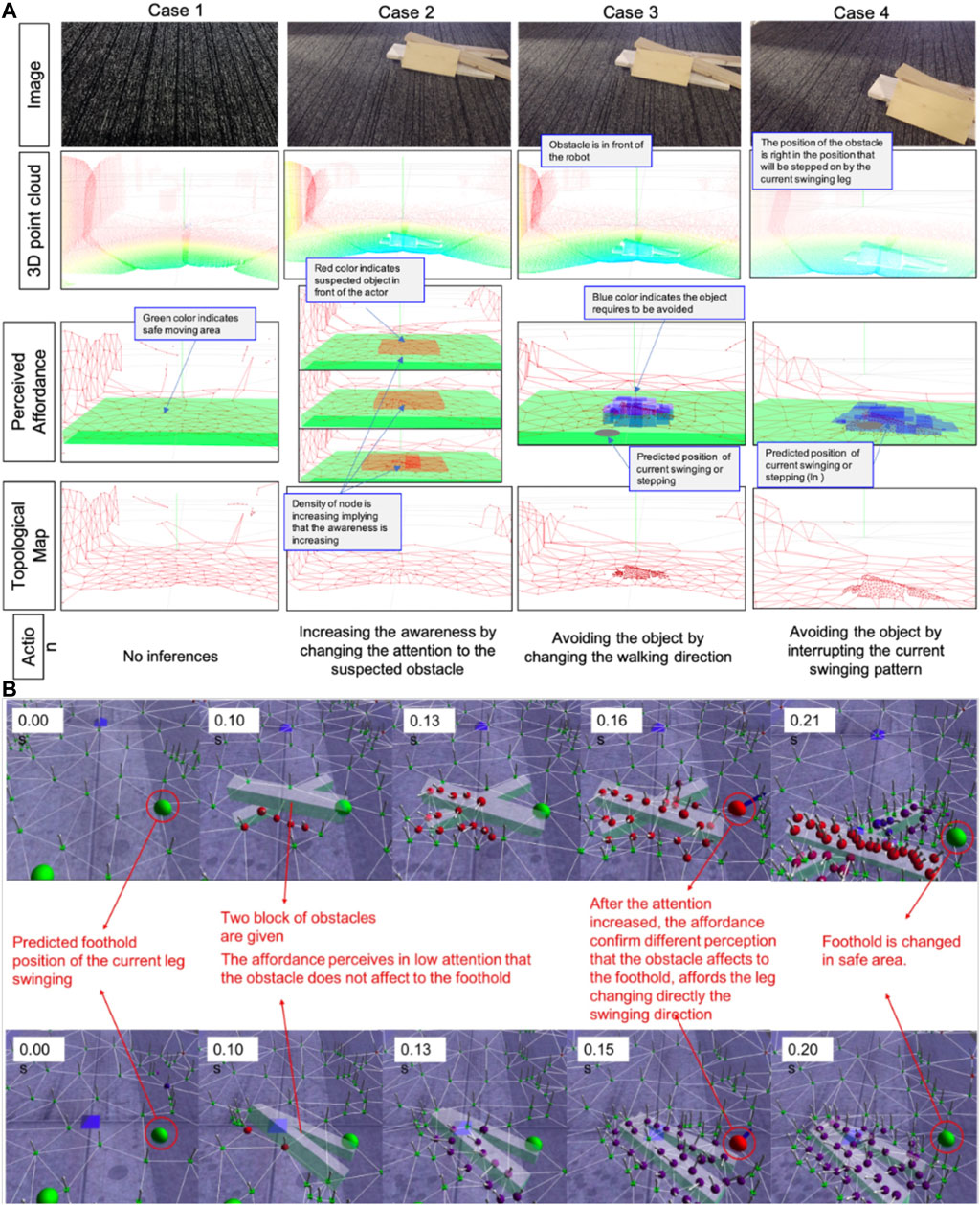

In this trial, we set the robot to travel straight ahead. Once it was moving, we suddenly put a few small pieces of woods in front of the robot’s front leg. This experiment tested how effectively the locomotion generator could produce short-term adaptations in response to external sensory information. The affordance process perceived the object before the robot took any action. The four columns in Figure 13A show affordance perception and adaptation in progress. An increase in map density (case 3) corresponds to the obstacle’s location. The robot performance avoiding sudden obstacle dropped into its path can be seen in the link of Video 3.

FIGURE 13. (A) The result of different condition perception in different levels of data (3D point-cloud being the raw data from the depth sensors, topological map structure represent the attention model, perceived affordance, and generated action) (B) The snapshots show the integration between affordance and attention in computer simulation.

In order to show the integration of affordance and attention in robot locomotion, we first analyze the attention and affordance result in simulation, as shown in this link Video 6 and Figure 13B. Simulation proved that the degree of attention may affect the accuracy of affordance detection. The topological structure (nodes and edges) represent the attentional model. The green ball represents the predicted foothold position for the current swinging movement. We suddenly put an obstacle around that intended foothold position 0.1 S after the leg starts swinging. A few nodes appear with non-homogeneous normal vectors (red color's nodes), meaning that the affordance detector has perceived some sudden obstacle with low accuracy. In this condition, the affordance system asks the attention process to focus on the obstacle. Then, the red-colored nodes promptly generate new nodes. After 0.11 S, the number of nodes has greatly increased around the obstacle, showing that the affordance detector has perceived the obstacle with high accuracy. The robot is then directed to change its swing to a safe area (green nodes).

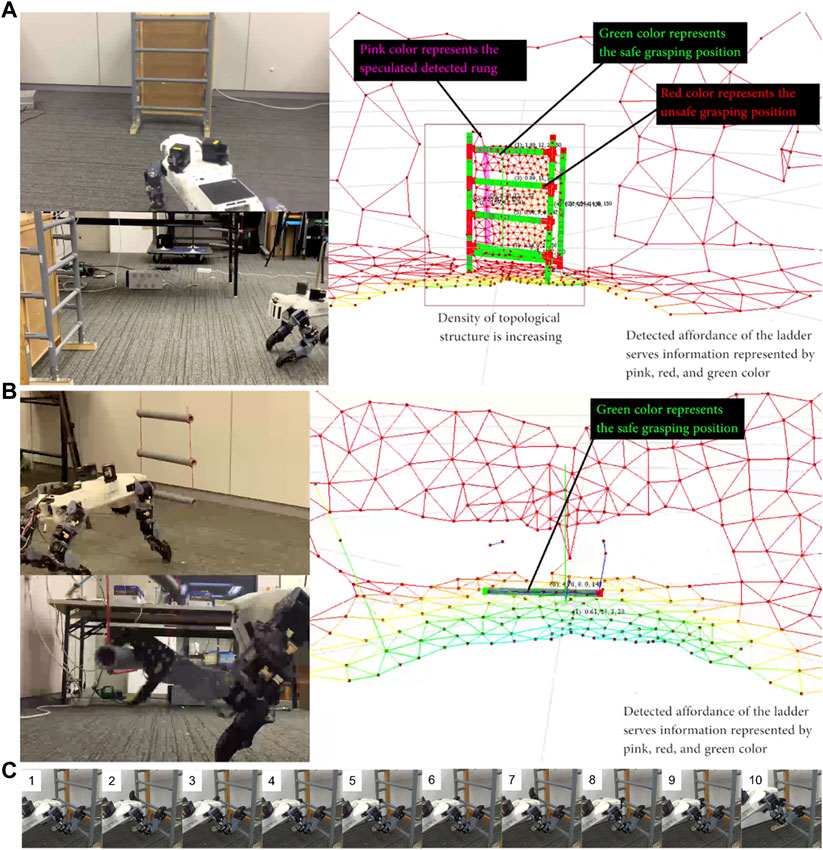

Before setting a climbing task, we tested the robot’s ability to detect and interpret a vertical ladder detection using an inlier–outlier method. Affordance detection, in this case, is directed toward finding feasibly graspable locations. The detail affordance detection is explained in the (Saputra et al., 2019b). Figure 14A shows the robot detecting and tracking the ladder structure in real time, identifying which parts it can safely grasp. The detail video can be seen in the link of Video 4.

FIGURE 14. (A) The robot detects the ladder’s affordances while approaching it. (B) Robot detects graspability of a swinging ladder and grasps a rung of the moving ladder. (C) The snapshot video of the robot performance detailed in the link of Video 5. The robot approaches and climbs a ladder, then transitions back to a horizontal posture to stand on the tabletop (frames 9 – 10).

The robot’s next task is to walk to the ladder and climb up it onto a higher floor, all without handrail support. This task entails transitions from horizontal motion to vertical motion, and then from vertical motion to horizontal motion. To tackle this problem, we propose an additional behavior generation model using independent stepping and pose control in the robot. Posture, safe movement areas, possible touch points, graspability, and target movement all need to be determined from the robot’s sensors. As noted in our earlier research, four kinds of behavior are required: approaching, body–placing, stepping, and grasping (Saputra et al., 2019c). The proposed model was first optimized through simulation. The robot, in turn, successfully moved from the lower level to the upper level, negotiating the ladder between them (Figure 14C). The video of the robot performance climbing the vertical ladder can be seen in the link of Video 5.

We developed a robot inspired by domestic feline morphology. The main contribution of the proposed robot is finding some benefit of biological morphology for robotics to tackle unsolved terrain. We imitate the morphology of the Cat animal in the robot structure and the paw mechanism. In the sensory system, we design the novel structure of 3D point cloud sensors for improving the efficiency. Then, the robot is built to show some novel bio-inspired model. The robot responds to both internal and external sensory information, processing the sensory input through several bio-inspired novel capabilities that enable the robot’s motion through complicated terrains. The robot, though built on a low-cost budget (estimated as 12.000 USD), has been successfully trialed in several environmental conditions. The locomotion model of the robot can generate a dynamic gait pattern by stimulated only one single speed parameter. There are five patterns generated in the robot performance, walk, amble, pace, symmetrical walk, and trot gait. There are, however, still some practical problems still to be solved. Our continuing research will focus on these three areas:

ߦ Improving stability: We will improve the robot’s use of its inertial sensor data in manipulating the current stability model.

ߦ Soften footfall: the robot’s step is currently heavy. We will add a damper mechanism to soften footfall, inspired by feline leg structure.

ߦ Improving durability: the robot needs to run for longer. This may be achieved by increasing battery capacity and body efficiency, for example by decreasing the robot’s weight.

ߦ Advanced terrain handling: further experimentation is required to develop the robot’s performance in more complex environments. We will design and build an artificial ruin in which to develop and test the robot.

Video 1: https://youtu.be/9MEojC5SjdA Shows 3D point clouds data generated by Quad ToF sensor, Comparison of the proposed dynamic density topological generator with other model, The proposed model can specified the density in the obstacle area automatically.

Video 2: https://youtu.be/4NeW1u3OfFo Shows the robot performance in natural and rough terrain, dynamic gait transition in different speed, and robot’s performance during malfunction condition.

Video 3: https://youtu.be/TYACHd9G88E Shows the Robot Performance Avoiding Sudden Obstacle While Moving

Video 4: https://youtu.be/4sZH1vKzNp0 Shows the performance of real time vertical ladder affordance detection while approaching the ladder and performance of moving ladder affordance detection.

Video 5: https://youtu.be/Y_lmzQf-3Lk Shows the novel capabilities of the robot moving through the vertical ladder without handrail support.

Video 6: https://youtu.be/hfL9vE847Es Shows the integration between affordance and attention in computer simulation.

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

AS, NT, KW contributed to design and development of mechanical and electrical hardware. AS and NK contributed to cognitive model development. AS and AJ contributed to neural-based robot controller. All authors contributed to writing and refining the manuscript.

This work was partially supported by a Grant-in-Aid for Scientific Research (18J21284) from Japan Society for the Promotion of Science.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frobt.2021.562524/full#supplementary-material.

Ackerman, E. (2016). Boston dynamics’ SpotMini is all electric, agile, and has a capable face-arm. IEEE Spectrum.

Asadi, F., Khorram, M., and Moosavian, S. A. A. (2015). “CPG-based gait transition of a quadruped robot,” in RSI International conference on robotics and mechatronics. (Concorezzo, Italy: ICROM), 210–215.

Belter, D., and Skrzypczyński, P. (2011). Rough terrain mapping and classification for foothold selection in a walking robot. J. Field Robotics 28, 497–528. doi:10.1002/rob.20397

Camurri, M., Bazeille, S., Caldwell, D. G., and Semini, C. (2015). “Real-time depth and inertial fusion for local SLAM on dynamic legged robots,” in IEEE International conference on multisensor Fusion and integration for Intelligent systems. San Diego, CA, September 14–16, 2015, (IEEE), 259–264.

Council on Competitiveness Nippon (COCN) (2013). COCN (Council on Competitiveness-Nippon) Project on Robot Technology Development and Management for Disaster Response,” in IEEE Region 10 Humanitarian Technology Conference 2013 (HTC 2013). Editors H. Asama, S. Tadokoro, and H. Setoya.

Crespi, A., Karakasiliotis, K., Guignard, A., and Ijspeert, A. J. (2013). Salamandra robotica II: An amphibious robot to study salamander-like swimming and walking gaits. IEEE Trans. Robot. 29, 308–320. doi:10.1109/tro.2012.2234311

Deits, R., and Tedrake, R. (2014). “Footstep planning on uneven terrain with mixed-integer convex optimization,” in IEEE-RAS international conference on humanoid robots, Madrid, Spain, November 18–20, 2014, (IEEE), 279–286.

Diebel, J., Reutersward, K., Thrun, S., Davis, J., and Gupta, R. (2004). “Simultaneous localization and mapping with active stereo vision,” in IEEE/RSJ International conference on Intelligent robots and systems (IROS), Sendai, Japan, September 28–October 2, 2014, (IEEE), 4, 3436–3443.

Espinal, A., Rostro-Gonzalez, H., Carpio, M., Guerra-Hernandez, E. I., Ornelas-Rodriguez, M., Puga-Soberanes, H. J., et al. (2016). Quadrupedal robot locomotion: a biologically inspired approach and its hardware implementation. Comput. Intell. Neurosci. 2016, 5615618. doi:10.1155/2016/5615618

Fukuoka, Y., Habu, Y., and Fukui, T. (2015). A simple rule for quadrupedal gait generation determined by leg loading feedback: a modeling study. Sci. Rep. 5, 8169–8211. doi:10.1038/srep08169

Gao, L.-F., Gai, Y.-X., and Fu, S. (2007). “Simultaneous localization and mapping for autonomous mobile robots using binocular stereo vision system,” in 2007 International Conference on Mechatronics and Automation, Harbin, China, August 5–8, 2007, (IEEE), 326–330.

Hashimoto, K., Matsuzawa, T., Sun, X., Fujiwara, T., Wang, X., Konishi, Y., et al. (2019). “WAREC-1--a four-limbed robot with advanced locomotion and manipulation capabilities,” in disaster robotics. Berlin, Germany: Springer, 327–397.

Havoutis, I., Ortiz, J., Bazeille, S., Barasuol, V., Semini, C., and Caldwell, D. G. (2013). “Onboard perception-based trotting and crawling with the hydraulic quadruped robot (HyQ),” in IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, November 3–7, 2013, (IEEE), 6052–6057.

Hoffmann, M., Schmidt, N., Nakajima, K., Iida, F., Pfeifer, R., and Ishiguro, A. (2011). “Perception, motor learning, and speed adaptation exploiting body dynamics: case studies in a quadruped robot,” in International symposium on adaptive motion of animals and machines (AMAM), Hyogo, Japan, October 11–14, 2011, 39–40.

Hyun, D. J., Seok, S., Lee, J., and Kim, S. (2014). High speed trot-running: implementation of a hierarchical controller using proprioceptive impedance control on the MIT cheetah. Int. J. Robotics Res. 33, 1417–1445. doi:10.1177/0278364914532150

Ijspeert, A., and Cabelguen, J.-M. (2006). “Gait transition from swimming to Walking: investigation of salamander locomotion control using nonlinear oscillators,” in In adaptive Motion of Animals and machines. Editors H. Kimura, K. Tsuchiya, A. Ishiguro, and H. Witte (New York: Springer Tokyo), 177–188.

Khusainov, R., Shimchik, I., Afanasyev, I., and Magid, E. (2016). “3D modelling of biped robot locomotion with walking primitives approach in Simulink environment,” in Informatics in control, automation and robotics 12th International conference, Colmar, France, July 21–23, 2016, 287–304.

Kolter, J. Z., Kim, Y., and Ng, A. Y. (2009). “Stereo vision and terrain modeling for quadruped robots,” in 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, May 12–17, 2009, 1557–1564.

Kolter, J. Z., Rodgers, M. P., and Ng, A. Y. (2008). “A control architecture for quadruped locomotion over rough terrain,” in 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, May 19–23, 2008, 811–818.

Kuindersma, S., Deits, R., Fallon, M., Valenzuela, A., Dai, H., Permenter, F., et al. (2016). Optimization-based locomotion planning, estimation, and control design for the atlas humanoid robot. Auton. Robot 40, 429–455. doi:10.1007/s10514-015-9479-3

Lam, T., and Pearson, K. G. (2002). The role of proprioceptive feedback in the regulation and adaptation of locomotor activity. Adv. Exp. Med. Biol. 508, 343–355. doi:10.1007/978-1-4615-0713-0_40

Liu, C., Xia, L., Zhang, C., and Chen, Q. (2018). Multi-layered CPG for adaptive walking of quadruped robots. J. Bionic Eng. 15, 341–355. doi:10.1007/s42235-018-0026-8

Maier, D., Lutz, C., and Bennewitz, M. (2013). “Integrated perception, mapping, and footstep planning for humanoid navigation among 3d obstacles,” in 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, November 3–7, 2013, 2658–2664.

Manchester, I. R., Mettin, U., Iida, F., and Tedrake, R. (2011). Stable dynamic walking over uneven terrain. Int. J. Robotics Res. 30, 265–279. doi:10.1177/0278364910395339

Mastalli, C., Focchi, M., Havoutis, I., Radulescu, A., Calinon, S., Buchli, J., et al. (2017). Trajectory and foothold optimization using low-dimensional models for rough terrain locomotion,” in 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, May 29–June 3, 2017, 1096–1103.

Matsuzawa, T., Koizumi, A., Hashimoto, K., Sun, X., Hamamoto, S., Teramachi, T., et al. (2016). Crawling gait for four-limbed robot and simulation on uneven terrain,” in 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, November 15–17, 2016, 1270–1275. doi:10.1109/humanoids.2016.7803433

Maufroy, C., Kimura, H., and Takase, K. (2008). Towards a general neural controller for quadrupedal locomotion. Neural Netw. 21, 667–681. doi:10.1016/j.neunet.2008.03.010

Maufroy, C., Kimura, H., and Takase, K. (2010). Integration of posture and rhythmic motion controls in quadrupedal dynamic walking using phase modulations based on leg loading/unloading. Auton. Robot 28, 331–353. doi:10.1007/s10514-009-9172-5

Nakada, K., Asai, T., and Amemiya, Y. (2003). An analog CMOS central pattern generator for interlimb coordination in quadruped locomotion. IEEE Trans. Neural Netw. 14, 1356–1365. doi:10.1109/TNN.2003.816381

Nandi, G. C., Semwal, V. B., Raj, M., and Jindal, A. (2016). Modeling bipedal locomotion trajectories using hybrid automata. IEEE region 10 conference (TENCON), Singapore, November 22–25, 2016, (IEEE), 1013–1018.

Owaki, D., and Ishiguro, A. (2017). A quadruped robot exhibiting spontaneous gait transitions from walking to trotting to galloping. Sci. Rep. 7, 277. doi:10.1038/s41598-017-00348-9

Park, H. W., Wensing, P. M., and Kim, S. (2015). “Online planning for autonomous running jumps over obstacles in high-speed quadrupeds,” in Robotics: Science and systems conference, Rome, Italy, July 13–17, 2015.

Qian, F., and Goldman, D. (2015). “Anticipatory control using substrate manipulation enables trajectory control of legged locomotion on heterogeneous granular media,” in Micro-and Nanotechnology sensors, systems, and applications VII, doi:10.1117/12.2177224

Raibert, M., Blankespoor, K., Nelson, G., and Playter, R. (2008). Bigdog, the rough-terrain quadruped robot. IFAC Proc. Volumes 41, 10822–10825. doi:10.3182/20080706-5-kr-1001.01833

Rossignol, S., and Frigon, A. (2011). Recovery of locomotion after spinal cord injury: some facts and mechanisms. Annu. Rev. Neurosci. 34, 413–440. doi:10.1146/annurev-neuro-061010-113746

Saputra, A. A., Chin, W. H., Toda, Y., Takesue, N., and Kubota, N. (2019a). Dynamic density topological structure generation for real-time ladder affordance detection. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, November 3–8, 2019, (IEEE), 3439–3444.

Saputra, A. A., Hong, C. W., and Kubota, N. (2019b). “Real-time grasp affordance detection of unknown object for robot-human interaction,” in IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, October 6–9, 2019, (IEEE), 3093–3098. doi:10.1109/smc.2019.8914202

Saputra, A. A., Toda, Y., Takesue, N., and Kubota, N. (2019c). A novel capabilities of quadruped robot moving through vertical ladder without handrail support,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, November 3–8, 2019, (IEEE), 1448–1453. doi:10.1109/IROS40897.2019.8968175

Saputra, A. A., Ijspeert, A. J., and Kubota, N. (2020a). “A neural primitive model with sensorimotor coordination for dynamic quadruped locomotion with malfunction compensation,” in IEEE/RSJ International conference on Intelligent robots and systems (IROS), Las Vegas, NV, October 24–January 24, 2020, (IEEE), 3783–3788.

Saputra, A. A., Wei Hong, C., Ijspeert, A. J., and Kubota, N. (2020b). A muscle-reflex model of forelimb and hindlimb of felidae family of animal with dynamic pattern formation stimuli. International Joint conference on neural networks (IJCNN), Glasgow, UK, July 19–24, 2020, (IEEE), 1–8. doi:10.1109/IJCNN48605.2020.9206784

Saputra, A. A., Khalilullah, A. S., Sulistijono, I. A., and Kubota, N. (2015a). “Adaptive motion pattern generation on balancing of humanoid robot movement,” in 2015 IEEE 28th Canadian Conference on Electrical and Computer Engineering (CCECE), Halifax, NS, May 3–6, 2015, (IEEE), 1479–1484.

Saputra, A. A., Takeda, T., Botzheim, J., and Kubota, N. (2015b). “Multi-objective evolutionary algorithm for neural oscillator based robot locomotion,” in IECON 2015-41st Annual conference of the IEEE Industrial Electronics Society, Yokohama, Japan, November 9–12, 2015, (IEEE), 2655–2660.

Saputra, A. A., Takeda, T., and Kubota, N. (2015c). “Efficiency energy on humanoid robot walking using evolutionary algorithm,” in 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, March 25–28, 2015, (IEEE), 573–578.

Saputra, A. A., Tay, N. N. W., Toda, Y., Botzheim, J., and Kubota, N. (2016). “Bézier curve model for efficient bio-inspired locomotion of low cost four legged robot,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea (South), October 9–14, 2016, (IEEE), 4443–4448.

Saputra, A. A., Toda, Y., Botzheim, J., and Kubota, N. (2017). Neuro-activity-based dynamic path planner for 3-d rough terrain. IEEE Trans. Cogn. Dev. Syst. 10, 138–150. doi:10.1109/TCDS.2017.2711013

Semini, C., Tsagarakis, N. G., Guglielmino, E., Focchi, M., Cannella, F., and Caldwell, D. G. (2011). Design of HyQ - a hydraulically and electrically actuated quadruped robot. Proc. Inst. Mech. Eng. J. Syst. Control. Eng. 225, 831–849. doi:10.1177/0959651811402275

Spectrum, I. (2019). Laikago. ROBOTS Available at: https://robots.ieee.org/robots/laikago/.

Sun, T., Shao, D., Dai, Z., and Manoonpong, P. (2018). “Adaptive neural control for self-organized locomotion and obstacle negotiation of quadruped robots, in 27th IEEE International Symposium on robot and human interactive communication RO-MAN, Nanjing, China, August 27–31, 2018, (IEEE), 1081–1086.

Tran, D. T., Koo, I. M., Lee, Y. H., Moon, H., Park, S., Koo, J. C., et al. (2014). Central pattern generator based reflexive control of quadruped walking robots using a recurrent neural network. Robotics Autonomous Syst. 62, 1497–1516. doi:10.1016/j.robot.2014.05.011

Turvey, M. T. (1992). Affordances and prospective control: an outline of the ontology. Ecol. Psychol. 4, 173–187. doi:10.1207/s15326969eco0403_3

Wang, S., Jiang, H., and Cutkosky, M. R. (2016). “A palm for a rock climbing robot based on dense arrays of micro-spines, in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, October 9–14, 2016, (IEEE), 52–59. doi:10.1109/iros.2016.7759034

Winkler, A. W., Mastalli, C., Havoutis, I., Focchi, M., Caldwell, D. G., and Semini, C. (2015). “Planning and execution of dynamic whole-body locomotion for a hydraulic quadruped on challenging terrain,” in IEEE Int. Conf. Robotics Automation (ICRA), Seattle, WA, May 26–30, 2015, (IEEE), 5148–5154.

Witte, H., Hackert, R., Lilje, K. E., Schilling, N., Voges, D., Klauer, G., et al. (2001). Transfer of biological principles into the construction of quadruped walking machines,” in Proceedings of the second International Workshop on robot motion and control RoMoCo’01: IEEE Cat. No. 01EX535, Bukowy Dworek, Poland, October 20, 2001, (IEEE), 245–249.

Zhang, G., Rong, X., Hui, C., Li, Y., and Li, B. (2016). Torso motion control and toe trajectory generation of a trotting quadruped robot based on virtual model control. Adv. Robotics 30, 284–297. doi:10.1080/01691864.2015.1113889

Zhang, J., Gao, F., Han, X., Chen, X., and Han, X. (2014a). Trot gait design and CPG method for a quadruped robot. J. Bionic Eng. 11, 18–25. doi:10.1016/s1672-6529(14)60016-0

Zhang, Y., Luo, J., Hauser, K., Park, H. A., Paldhe, M., Lee, C. S. G., et al. (2014b). “Motion planning and control of ladder climbing on DRC-Hubo for DARPA Robotics Challenge,” in Proceedings of the IEEE International conference on robotics and automation, Hong Kong, China. 2086–2086.

Keywords: quadruped robot, bio-inspired model, neural-based locomotion, internal-external sensory information, novel capabilities

Citation: Saputra AA, Takesue N, Wada K, Ijspeert AJ and Kubota N (2021) AQuRo: A Cat-like Adaptive Quadruped Robot With Novel Bio-Inspired Capabilities. Front. Robot. AI 8:562524. doi: 10.3389/frobt.2021.562524

Received: 15 May 2020; Accepted: 28 January 2021;

Published: 12 April 2021.

Edited by:

Dai Owaki, Tohoku University, JapanReviewed by:

Paolo Arena, University of Catania, ItalyCopyright © 2021 Saputra, Takesue, Wada, Ijspeert and Kubota. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Azhar Aulia Saputra, YXpoYXIuYXVsaWEuc0BnbWFpbC5jb20=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.