
ORIGINAL RESEARCH article

Front. Robot. AI, 16 November 2020

Sec. Robot Vision and Artificial Perception

Volume 7 - 2020 | https://doi.org/10.3389/frobt.2020.591827

This article is part of the Research Topic Current Trends in Image Processing and Pattern Recognition

Extracting significant information from geometrically distorted or transformed images is a mainstream procedure in image processing. When images are distorted by a geometric deformation, retrieving the relevant region becomes difficult. Hu's moments are helpful for extracting information from such distorted images because of their invariance properties. This work focuses on early detection and grading of knee osteoarthritis using Hu's invariant moments to capture the geometric transformation of the cartilage region in knee X-ray images. The seven invariant moments are computed for rotated versions of the test image. The results are competitive and promising and have been validated by orthopedic surgeons and rheumatologists.

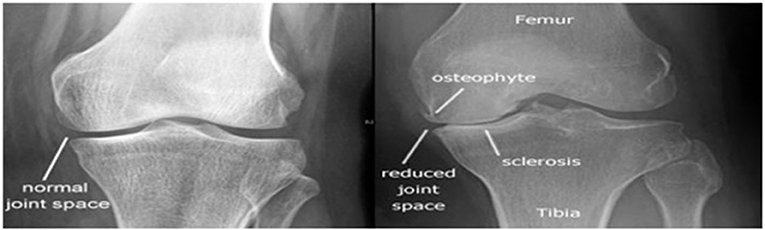

Knee Osteoarthritis (OA) is a condition of the human knee joint that primarily affects the cartilage. Cartilage plays a significant role in leg movement. In OA, the top layer of cartilage breaks down and wears away, resulting in intense pain (Gornale et al., 2020c). A patient suffering from knee pain consults a doctor, who examines the patient's clinical symptoms and advises radiographic imaging of the knee. Clinical symptoms play a crucial role in the treatment of osteoarthritis (de Graaff et al., 2015). A proper analysis of the illness is carried out by observing both clinical signs and radiological criteria. Relevant radiological parameters include joint space width, osteophytes, and sclerosis; the important radiological parameters are depicted in Figure 1. Based on these parameters, the severity of the ailment is assessed using the Kellgren and Lawrence (KL) grading system (Reichmann et al., 2011; Gornale and Patravali, 2017). The KL system is the most common method for classifying knee joint OA into five grades that describe the severity of the disease (Kellgren and Lawrence, 1957; Stemcellsdoc's Weblog, 2011). Knee radiographic images are very sensitive to unintended defects that can complicate the study of bone structures, and experts may then need more time to analyze the knee X-ray and infer the presence of OA. To overcome these problems, this work focuses on early detection and grading of knee OA using Hu's invariant moments to capture the geometric transformation of the cartilage region in knee X-ray images.

Figure 1. Radiological parameters of Knee Osteoarthritis (Stemcellsdoc's Weblog, 2011).

In this work, an extensive survey has been carried out, which indicates that many researchers have primarily worked on segmentation techniques for extracting the cartilage region either automatically or semi-automatically. Other researchers have focused solely on feature extraction methods applied to manually extracted regions of interest, and few experiments have evaluated the ailment according to the Kellgren and Lawrence grading system. The findings reported in the literature are considered satisfactory, but there is still room for a more effective and reliable automatic computer-aided algorithm for OA detection that can compete with state-of-the-art techniques.

Gornale et al. (2016b,c, 2017, 2019a,b,c, 2020a,b) used a semi-automated active contour method to extract the cartilage region and experimented on their own database of 500 knee X-ray images. Statistical features, geometric features, and Zernike moments were computed and classified, obtaining accuracies of 87.92% with a random forest classifier and 88.88% with a K-NN classifier (Gornale et al., 2016b,c). Subsequently, in an experiment on an extended dataset, gradient features were computed and classified with an accuracy of 95% (Gornale et al., 2017). Further, different segmentation methods were implemented for the extraction of cartilage, and basic mathematical features were computed and classified with an accuracy of 97.55% (Gornale et al., 2019a). Gornale et al. (2020a) then implemented a novel approach that identifies and extracts the region of interest based on pixel density. The extracted region was described using histogram of oriented gradients (HOG) and local binary pattern (LBP) features on a dataset of 1,173 knee X-ray images, giving accuracies of 97.86% and 97.16% with respect to the opinions of Medical Expert-I and Medical Expert-II. In Gornale et al. (2019b), with an expanded dataset of 1,650 knee X-ray images, an algorithm that calculates the cartilage thickness/area was developed; the calculated thickness yielded an accuracy of 99.81% using a K-NN classifier, as evaluated by a radiographic expert in compliance with the KL grading framework. To improve performance for denser regions, local phase quantization and projection profile features were computed and classified; grading with an artificial neural network achieved accuracies of 98.7% and 98.2% as per the opinions of Expert I and II, respectively (Gornale et al., 2019c).
Lastly, experiments using multi-resolution wavelet filters with varying filter orders and decomposition levels were performed, with classification by a decision tree classifier. Classification accuracies of 98.54% and 97.93% as per the opinions of Expert I and II were obtained for the Biorthogonal 1.5 wavelet filter at the 4th decomposition level (Gornale et al., 2020b).

According to earlier work, the key sources of distortion or noise in knee X-ray images are film processing and digitization, and extracting significant regions from geometrically distorted X-ray images is difficult. Hence, Hu's invariant moments are used in the present analysis to address these issues, as they are invariant to scale, translation, and rotation.

The proposed method begins with pre-processing that automatically detects the contours of the knee bones and eliminates unwanted distortions. The cartilage region is then identified and extracted. Hu's invariant moments are computed as features and classified to determine the OA grade. The flow diagram is shown in Figure 2.

The authors' own dataset of 2,000 digital knee X-ray images, collected from well-reputed local hospitals and health centers, was used for the experiments. The fixed-flexion digital knee X-rays were acquired using a PROTEC PRS-500E X-ray machine. The original images are 8-bit grayscale images of 1,350 × 2,455 pixels. For the experiments, two medical experts manually annotated each knee X-ray with its grade according to the KL grading framework, as summarized in Table 1. The data are publicly available in Gornale and Patravali (2020).

In the initial pre-processing of a knee X-ray image, the contours of the knee bone are detected, followed by filtering to eliminate noise without degrading the essential information in the image (Hall et al., 1971).

The cartilage region in the knee joint is the region of interest (ROI). The ROI is identified on the basis of pixel density: bone is denser in the X-ray and therefore yields higher pixel values (Semmlow, 2004; Nithya and Santhi, 2017). The detected ROI is then cropped and used as one of the inputs to the active contour algorithm for segmentation. The knee region is dynamically segmented using 3 × 3 masks (Caselles et al., 1995), and the object boundaries are located iteratively using this mask (Gornale et al., 2016b,c, 2017, 2019b).
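The pixel-density idea can be illustrated with a row-intensity profile. The sketch below is hypothetical and is not the procedure of Gornale et al. (2020a): it assumes a vertically oriented radiograph (femur above, tibia below), treats the less dense joint space as the darkest horizontal band in the middle of the image, and crops a fixed-height strip around it. The helper names and the values of `search`, `smooth`, and `half_height` are illustrative assumptions, not parameters from the paper.

```python
import numpy as np


def find_joint_row(img, search=(0.25, 0.75), smooth=5):
    """Return the row index of the darkest (least dense) band in the middle of the image.

    Hypothetical heuristic: assumes a vertically oriented knee radiograph, so the
    joint space between femur and tibia shows up as a low-intensity row band.
    """
    img = np.asarray(img, dtype=float)
    profile = img.mean(axis=1)                            # mean pixel value per row
    kernel = np.ones(smooth) / smooth
    profile = np.convolve(profile, kernel, mode="same")   # moving average to suppress noise
    lo, hi = int(search[0] * len(profile)), int(search[1] * len(profile))
    return lo + int(np.argmin(profile[lo:hi]))            # search only the central rows


def crop_roi(img, half_height=40):
    """Crop a horizontal strip of up to 2 * half_height rows centred on the joint row."""
    img = np.asarray(img)
    row = find_joint_row(img)
    top = max(row - half_height, 0)
    bottom = min(row + half_height, img.shape[0])
    return img[top:bottom, :], (top, bottom)
```

In a full pipeline, the cropped strip would be what is handed to the active contour segmentation step.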

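Hu's invariant moments are computed from the segmented region for the detection and classification of knee osteoarthritis. Based on algebraic invariants, Hu derived six absolute orthogonal invariants and one skew orthogonal invariant that are independent of position, size, orientation, and parallel projection (Hu, 1962). The basic principle is to describe objects by measurable quantities, called invariants, that have enough discriminative power to distinguish objects belonging to different classes (Li, 2010; Urooj and Singh, 2016). For a 2-D continuous function f(x,y), the moment of order (p+q) is defined in Equation (1):

$$m_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^{p} y^{q} f(x,y)\,dx\,dy \tag{1}$$

where p, q = 0, 1, 2, …. If f(x,y) is piecewise continuous and has non-zero values only in a finite portion of the xy-plane, moments of all orders exist, and the moment sequence {m_pq} is uniquely determined by f(x,y) (Gonzalez and Woods, 2002; Li, 2010). Conversely, {m_pq} uniquely determines f(x,y). The central moments are defined in Equation (2):

$$\mu_{pq} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x-\bar{x})^{p} (y-\bar{y})^{q} f(x,y)\,dx\,dy \tag{2}$$

where $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$ are the coordinates of the centroid of the image, and $m_{00}$ is the mass of the image.

Scale invariance is obtained by dividing the central moments by a suitable normalization factor, which is non-zero for all the test images (Hu, 1962; Huang and Leng, 2010). Lower-order moments are more robust to noise and easier to calculate (Li, 2010). The normalized central moments, denoted $\eta_{pq}$, are defined in Equation (3):

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\gamma}}, \qquad \gamma = \frac{p+q}{2} + 1 \tag{3}$$

for p + q = 2, 3, ….

In 1962, Hu proposed the following seven invariant moments for in-plane rotation (Hu, 1962; Gonzalez and Woods, 2002; Li, 2010):

$$
\begin{aligned}
\phi_1 &= \eta_{20} + \eta_{02} \\
\phi_2 &= (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \\
\phi_3 &= (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \\
\phi_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \\
\phi_5 &= (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
&\quad + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \\
\phi_6 &= (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \\
\phi_7 &= (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] \\
&\quad - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right]
\end{aligned}
$$

Here $\phi_7$ is the skew orthogonal invariant: its sign changes under a mirror reflection.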
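The moment definitions and Hu's seven invariants (Hu, 1962) translate directly into array code. The following is a minimal NumPy sketch, not the authors' implementation: it replaces the integrals with sums over pixels and takes x as the column index and y as the row index (an assumption). The function name `hu_moments` is illustrative.

```python
import numpy as np


def hu_moments(img):
    """Seven Hu invariants of a 2-D image, using pixel sums in place of the integrals."""
    f = np.asarray(img, dtype=float)
    y, x = np.mgrid[:f.shape[0], :f.shape[1]]           # row index -> y, column index -> x
    m00 = f.sum()                                       # mass of the image (= mu00)
    xc, yc = (x * f).sum() / m00, (y * f).sum() / m00   # centroid: m10/m00, m01/m00

    def eta(p, q):
        mu = ((x - xc) ** p * (y - yc) ** q * f).sum()  # central moment mu_pq
        return mu / m00 ** ((p + q) / 2 + 1)            # normalized moment eta_pq

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03                         # recurring sums
    c, d = n30 - 3 * n12, 3 * n21 - n03                 # recurring differences

    return np.array([
        n20 + n02,                                              # phi1
        (n20 - n02) ** 2 + 4 * n11 ** 2,                        # phi2
        c ** 2 + d ** 2,                                        # phi3
        a ** 2 + b ** 2,                                        # phi4
        c * a * (a ** 2 - 3 * b ** 2) + d * b * (3 * a ** 2 - b ** 2),  # phi5
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,      # phi6
        d * a * (a ** 2 - 3 * b ** 2) - c * b * (3 * a ** 2 - b ** 2),  # phi7 (skew)
    ])
```

On an asymmetric test shape, the seven values should be unchanged (up to floating-point error) by an exact 90° rotation (`np.rot90`) or a translation, while φ7 flips sign under `np.fliplr`. OpenCV's `cv2.moments` and `cv2.HuMoments` compute the same quantities and can serve as a cross-check.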

The Hu's moments computed for the segmented regions are classified using two classifiers, K-NN and decision tree. The results show that the K-NN classifier outperforms the decision tree classifier. K-NN assigns a class label to a test sample according to its distance from the labeled training samples: an unlabeled image receives the majority label among its K nearest neighbors (Gornale et al., 2016a, 2020c).
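K-NN classification of the seven-dimensional Hu feature vectors can be sketched in a few lines. The value of K is not given in the text, so `k=3` below is a hypothetical default, and Euclidean distance is an assumption. Because φ1 is typically orders of magnitude larger than the higher-order invariants, some form of feature scaling would usually be applied before measuring distances; this sketch leaves that to the caller.

```python
from collections import Counter

import numpy as np


def knn_predict(train_X, train_y, test_X, k=3):
    """Label each test vector by majority vote among its k nearest training vectors."""
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y)
    test_X = np.asarray(test_X, dtype=float)
    preds = []
    for x in test_X:
        dist = np.linalg.norm(train_X - x, axis=1)      # Euclidean distance to every training sample
        nearest = np.argsort(dist, kind="stable")[:k]   # indices of the k closest samples
        votes = Counter(train_y[i] for i in nearest)
        # Counter keeps first-seen order for ties, so a tie goes to the label
        # whose member is closest to x.
        preds.append(votes.most_common(1)[0][0])
    return np.array(preds)
```

With Hu features, `train_X` would hold one 7-element row per training image and `train_y` its KL grade.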

The experiment was carried out on a test image rotated from 15 to 90 degrees in 15-degree increments, and the moments were computed for each rotated version of the test image. The rotational invariant moments were in reasonable agreement with the invariants computed for the original image. To further check robustness and invariance, the experiment was extended to 180 degrees, and comparable results were obtained for all seven invariant features. The results for rotations up to 90° are shown in Table 2. The invariant moments were then checked for scale invariance: the test image was scaled by factors of 0.2, 0.3, 0.4, 0.5, and 0.6, and the Hu's invariant moments determined for each scale are shown in Table 3.
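The rotation and scaling checks can be reproduced in outline. The sketch below is an illustration under stated assumptions, not the authors' protocol: to stay short it uses only the first invariant φ1 = η20 + η02, rotates with `scipy.ndimage.rotate` (bilinear interpolation, canvas size kept) and rescales with `scipy.ndimage.zoom`, and reports the relative deviation from the original image. The helper names are hypothetical. Resampling on a pixel grid introduces interpolation error, so for arbitrary angles and scales the invariants can agree only approximately, consistent with the reasonable agreement described above.

```python
import numpy as np
from scipy import ndimage


def phi1(img):
    """First Hu invariant, phi1 = eta20 + eta02, from discrete pixel sums."""
    img = np.asarray(img, dtype=float)
    y, x = np.mgrid[:img.shape[0], :img.shape[1]]
    m00 = img.sum()
    xc, yc = (x * img).sum() / m00, (y * img).sum() / m00
    mu20 = ((x - xc) ** 2 * img).sum()
    mu02 = ((y - yc) ** 2 * img).sum()
    return (mu20 + mu02) / m00 ** 2          # gamma = 2 when p + q = 2


def rotation_deviation(img, angles=range(15, 91, 15)):
    """Relative change of phi1 for each rotation angle (degrees) vs. the original."""
    ref = phi1(img)
    out = {}
    for angle in angles:
        rotated = ndimage.rotate(img, angle, reshape=False, order=1)
        out[angle] = abs(phi1(rotated) - ref) / ref
    return out


def scale_deviation(img, factors=(0.2, 0.3, 0.4, 0.5, 0.6)):
    """Relative change of phi1 for each scale factor vs. the original."""
    ref = phi1(img)
    return {s: abs(phi1(ndimage.zoom(img, s, order=1)) - ref) / ref for s in factors}
```

A synthetic rectangle is enough to see the effect; with a real knee X-ray, the segmented cartilage region would take its place.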

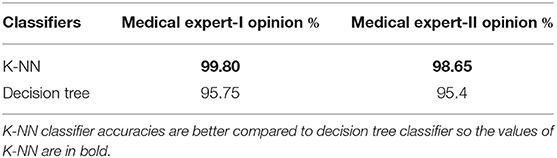

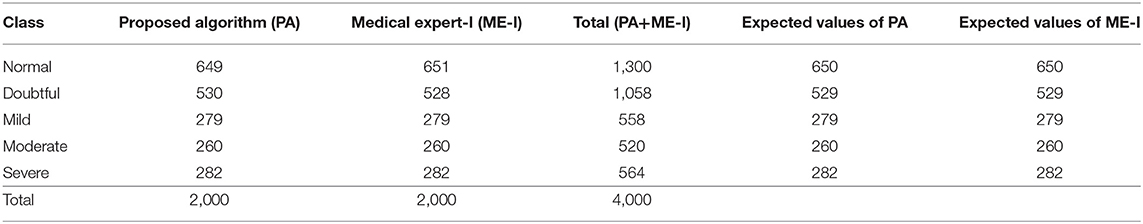

To further examine optimality, the experiment is carried out on the entire dataset using a 2-fold cross-validation strategy. The classification accuracies of the decision tree and K-NN classifiers are summarized in Table 4, which shows that the K-NN classifier performs better than the decision tree classifier. The confusion matrices of the K-NN classifier with respect to Expert I and II are therefore provided in Tables 5 and 6. Table 5 shows accuracy rates of 99.53% for the Normal grade, 99.81% for the Doubtful grade, and 100% for the Mild, Moderate, and Severe grades. Correspondingly, Table 6 shows accuracy rates of 99.04% for the Normal grade, 96.18% for the Doubtful grade, and 100% for the Mild, Moderate, and Severe grades. Overall accuracies of 99.80% and 98.65% were attained as per the opinions of medical Expert-I and Expert-II, respectively.
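The 2-fold protocol and the confusion-matrix bookkeeping can be sketched as follows. Assumptions, not details from the paper: the split is a random, unstratified halving with a fixed seed, `classify` is a placeholder for any classifier (for example K-NN on the seven Hu features), and KL grades are encoded as integers 0 to 4. Each image is tested exactly once, so the pooled matrix covers the whole dataset.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes=5):
    """Rows: reference (expert) grade; columns: predicted grade."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def two_fold_cv(X, y, classify, n_classes=5, seed=0):
    """Train on one random half and test on the other, then swap the halves.

    `classify(train_X, train_y, test_X)` is any function returning predicted labels.
    """
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    idx = np.random.default_rng(seed).permutation(len(y))
    fold_a, fold_b = np.array_split(idx, 2)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for test_idx, train_idx in ((fold_a, fold_b), (fold_b, fold_a)):
        pred = classify(X[train_idx], y[train_idx], X[test_idx])
        cm += confusion_matrix(y[test_idx], pred, n_classes)
    accuracy = np.trace(cm) / cm.sum()     # overall accuracy over both folds
    return cm, accuracy
```

Stratifying the split by grade would keep the class proportions equal in both folds; whether the original experiment did so is not stated.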

Table 4. Accuracies obtained using the K-NN and decision tree classifiers as per Expert I and II opinions.

To assess classification accuracy and efficiency, Precision and Recall are used, as defined in Equations (4) and (5):

$$\text{Precision} = \frac{TP}{TP + FP} \tag{4}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{5}$$

where TP denotes true positives, FP false positives, and FN false negatives. The precision and recall for Expert I and II opinions are given in Tables 7 and 8, respectively. The results of the proposed method are compared graphically with the medical experts' opinions in Figures 3 and 4, respectively.
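Equations (4) and (5) can be evaluated per KL grade directly from a confusion matrix such as those in Tables 5 and 6. This NumPy sketch assumes rows hold the expert (reference) grade and columns the predicted grade, since the paper does not state the orientation; grades with no predictions or no reference images get 0 instead of a division by zero. The function name is illustrative.

```python
import numpy as np


def precision_recall(cm):
    """Per-grade precision TP/(TP+FP) and recall TP/(TP+FN) from a confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp    # predicted as this grade but belonging to another
    fn = cm.sum(axis=1) - tp    # belonging to this grade but predicted as another
    with np.errstate(invalid="ignore", divide="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
    return precision, recall
```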

Significance tests are performed to determine whether the experimental outcomes are statistically significant. Two tests, the Chi-square test and the t-test, are used to verify the inferences of the present work.

The Chi-square test examines whether there is a relationship between two variables. Here, the findings of the proposed algorithm are evaluated against the manual analysis made by the medical experts. The hypotheses for the Chi-square test, performed separately for medical Expert I and II, are stated below:

• Null Hypothesis (H0): There is close consensus between the outcomes of the algorithm and the annotations made by medical Expert I/Expert II.

• Alternative Hypothesis (H1): There is no association between the findings of the proposed algorithm and the annotations made by medical Expert-I/Expert-II.

• Degrees of freedom (df) = 4 (five KL grades minus one); the critical value of χ² with df = 4 at the 5% significance level is 9.48773 (from the Chi-square table).

• If χ² < 9.48773, accept H0 and reject H1; otherwise, reject H0 and accept H1.
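The construction behind Tables 9 and 10 is not spelled out in the text. The following sketch assumes a Pearson goodness-of-fit test over the five KL grades, with the algorithm's per-grade image counts as observed frequencies O and an expert's per-grade counts as expected frequencies E, which is consistent with df = 4. The function names and any counts passed to them are hypothetical, not the paper's data. If SciPy is available, `scipy.stats.chi2.ppf(0.95, 4)` returns the same critical value, about 9.4877.

```python
import numpy as np

CRITICAL_CHI2_DF4 = 9.48773  # 5% significance level, df = 4, as quoted in the text


def chi_square(observed, expected):
    """Pearson statistic: sum over grades of (O - E)^2 / E (every E must be > 0)."""
    o = np.asarray(observed, dtype=float)
    e = np.asarray(expected, dtype=float)
    return float(((o - e) ** 2 / e).sum())


def agrees(observed, expected, critical=CRITICAL_CHI2_DF4):
    """True when H0 (agreement with the expert) is not rejected at the 5% level."""
    return chi_square(observed, expected) < critical
```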

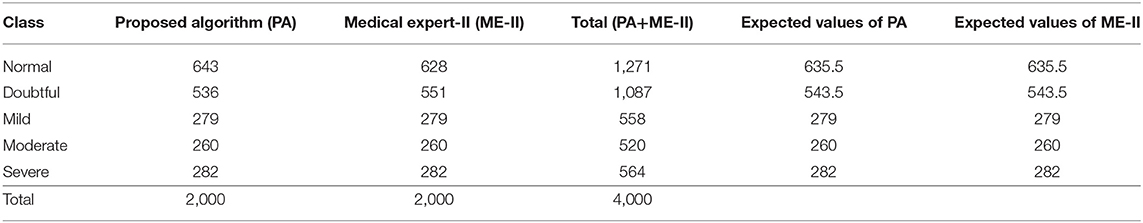

The details of the Chi-square test for medical Expert-I and II are presented in Tables 9 and 10. The computed Chi-square values for Expert I and II are given below:

Table 9. Chi-square test between the results of the proposed algorithm and the annotations by medical Expert-I.

Table 10. Chi-square test between the results of the proposed algorithm and the annotations by medical Expert-II.

The computed Chi-square values are lower than the critical value obtained from the Chi-square table. Hence, the null hypothesis is accepted (it cannot be rejected at the 5% level) and the alternative hypothesis is rejected. This indicates significant agreement between the findings of the proposed method and the annotations provided by both medical experts.

The t-test answers the question of whether two groups are statistically different from each other. Here, the null hypothesis concerns the difference between the opinions of the two medical experts. The common hypotheses for all experiments using the t-test are stated below:

• Null Hypothesis (H0): Difference in opinions is due to random variations in samples and not due to experts.

• Alternative Hypothesis (H1): Difference in opinions is due to medical experts and not due to random variations in samples.

• Degrees of freedom (df) = 4; the critical value of t with df = 4 at the 5% significance level (one-tailed) is 2.1318 (from the t-distribution table).

• If t > 2.1318, reject H0 and accept H1; otherwise, accept H0 and reject H1.
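The text does not say which variant of the t-test was used. The sketch below assumes a paired t-test over five matched values (for example, per-grade counts from Expert I and Expert II), which gives df = n − 1 = 4 and matches the stated degrees of freedom; the function name and inputs are hypothetical. It follows the one-tailed decision rule quoted above; a two-tailed test at the 5% level with df = 4 would instead compare |t| with about 2.776. `scipy.stats.ttest_rel` computes the same paired statistic.

```python
import math

CRITICAL_T_DF4 = 2.1318  # one-tailed 5% level, df = 4, as quoted in the text


def paired_t(a, b):
    """Paired t statistic for matched values a[i], b[i]; returns (t, df = n - 1).

    Assumes the differences are not all identical (otherwise the sd is zero).
    """
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in d) / (n - 1))  # sample standard deviation
    return mean / (sd / math.sqrt(n)), n - 1
```

Under this rule, H0 (differences due to random variation) is retained whenever the returned t does not exceed `CRITICAL_T_DF4`.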

The details of the t-test for medical Expert-I and II are presented in Table 11.

The computed t-value is less than the critical value; hence, the null hypothesis is accepted. This means that the disparity between the opinions of the two experts is attributable to random variation in the samples and not to the experts. Both significance tests indicate that the proposed algorithm is successful and can therefore provide reliable computer-aided assistance to physicians for rapid evaluation of OA, helping patients obtain an appropriate, reliable, and timely diagnosis and treatment.

The prime cause of geometric distortion of the cartilage region in knee X-ray images is the progression of OA, and its appearance can be further misrepresented by filming, handling, and digitization during image acquisition. Extracting significant regions from such distorted images can be difficult. Hu's invariant moments provide suitable features for such images because of their rotation, scale, and translation invariance. In this work, feature extraction using Hu's invariant moments is performed for the detection and classification of knee osteoarthritis. The results obtained for the rotated and scaled images are in reasonable agreement with the invariants computed for the original image. Overall, the results of the proposed algorithm, validated by orthopedic surgeons and rheumatologists, are competitive and promising.

The data are publicly available in Gornale and Patravali (2020).

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

SG: paper writing and proofreading. PP: data collection and experimental analysis. PH: testing and validation. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Authors thank the Department of Science and Technology (DST), Govt of India, for its financial assistance under Women Scientist-B Scheme [Ref No: SR/WOS-B/65/2016(G)]. The authors are grateful to Dr. Chetan M. Umarani, Orthopedic Surgeon, Gokak, Karnataka, and Dr. Kiran S. Marathe, Orthopedic Surgeon, JSS Hospital, Mysore, Karnataka, India, for providing knee X-ray images with annotations and validating the computed results. Further, the authors are also grateful to the reviewers for their helpful comments and suggestions on the present work.

Caselles, V., Kimmel, R., and Sapiro, G. (1995). Geodesic active contours. Int. J. Comput. Vis. 22, 61–79. doi: 10.1109/ICCV.1995.466871

de Graaff, S., Runhaar, J., Oei, E. H., Bierma-Zeinstra, S. M., and Waarsing, J. H. (2015). The association between knee joint shape and osteoarthritis development in middle-aged, overweight and obese women. Erasmus J. Med. Rotterdam: Erasmus MC University Medical Center, 4, 47–52.

Gonzalez, R. C., and Woods, R. E. (2002). Digital Image Processing. Upper Saddle River, NJ: Prentice Hall.

Gornale, S., and Patravali, P. (2020). Digital Knee X-ray Images. Mendeley Data V1. doi: 10.17632/t9ndx37v5h.1

Gornale, S. S., and Patravali, P. U. (2017). “Medical imaging in clinical applications: algorithmic and computer based approaches,” in Engineering and Technology: Latest Progress, eds D. Nilanjan, B. Vikrant, H. Aboul Ella (New Delhi: Meta Research Press), 65–104.

Gornale, S. S., Patravali, P. U., and Hiremath, P. S. (2019b). Early detection of osteoarthritis based on cartilage thickness in knee X-ray images. Int. J. Image Graph. Signal Process. 11, 56–63. doi: 10.5815/ijigsp.2019.09.06

Gornale, S. S., Patravali, P. U., and Hiremath, P. S. (2019c). Detection of osteoarthritis in knee radiographic images using artificial neural network. Int. J. Innovat. Technol. Explor. Eng. 8, 2429–2434. doi: 10.35940/ijitee.L3011.1081219

Gornale, S. S., Patravali, P. U., and Hiremath, P. S. (2020a). “Identification of Region of Interest for Assessment of Knee Osteoarthritis in Radiographic Images”, in International Journal of Medical Engineering and Informatics (Scopus Elsevier, Inderscience). Available online at: https://www.inderscience.com/info/ingeneral/forthcoming.php?jcode=ijmei

Gornale, S. S., Patravali, P. U., and Hiremath, P. S. (2020b). “Osteoarthritis detection in knee radiographic images using multi-resolution wavelet filters,” in International Conference on Recent Trends in Image Processing and Pattern Recognition (RTIP2R) (Aurangabad: Dr. Babasaheb Ambedkar Marathwada University).

Gornale, S. S., Patravali, P. U., and Hiremath, P. S. (2020c). “Chapter 6–cognitive informatics, computer modelling and cognitive science assessment of knee osteoarthritis in radiographic images: a machine learning approach”, in Cognitive Informatics, Computer Modelling, and Cognitive Science, Vol. 1 (Academic Press), 93–121.

Gornale, S. S., Patravali, P. U., and Manza, R. R. (2016a). A survey on exploration and classification of osteoarthritis using image processing techniques. Int. J. Sci. Eng. Res. 7, 334–355.

Gornale, S. S., Patravali, P. U., and Manza, R. R. (2016b). Detection of osteoarthritis using knee X-ray image analyses: a machine vision based approach. Int. J. Comput. Appl. 145, 20–26. doi: 10.5120/ijca2016910544

Gornale, S. S., Patravali, P. U., and Manza, R. R. (2016c). “Computer Assisted Analysis and Systemization of knee Osteoarthritis using Digital X-ray images,” in Proceedings of 2nd International Conference on Cognitive Knowledge Engineering (ICKE), Chapter 42 (Aurangabad: Excel Academy Publishers), 207–212.

Gornale, S. S., Patravali, P. U., Marathe, K. S., and Hiremath, P. S. (2017). Determination of osteoarthritis using histogram of oriented gradients and multiclass SVM. Int. J. Image Graph. Signal Process. 9, 41–49. doi: 10.5815/ijigsp.2017.12.05

Gornale, S. S., Patravali, P. U., Uppin, A. M., and Hiremath, P. S. (2019a). Study of segmentation techniques for assessment of osteoarthritis in knee X-ray images. Int. J. Image Graph. Signal Process. 11, 48–57. doi: 10.5815/ijigsp.2019.02.06

Hall, E. L., Kruger, R. P., Dwyer, S. J., Hall, D. L., Mclaren, R. W., and Lodwick, G. S. (1971). “A survey of preprocessing and feature extraction techniques for radiographic images,” in IEEE Transactions on Computers (IEEE), 1032–1044.

Hu, M.-K. (1962). “Visual pattern recognition by moment invariants,” in IRE Transactions on Information Theory, Vol. 8 (IEEE), 179–187. Available online at: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=1057692&isnumber=22787

Huang, Z., and Leng, J. (2010). “Analysis of Hu's moment invariants on image scaling and rotation,” in Proceedings of 2010 2nd International Conference on Computer Engineering and Technology (ICCET) (Chengdu: IEEE), 476–480.

Kellgren, J. H., and Lawrence, J. S. (1957). Radiological assessment of osteoarthritis. Ann. Rheum. Dis. 16, 494–502. doi: 10.1136/ard.16.4.494

Li, D. (2010). Analysis of moment invariants on image scaling and rotation. Innovations in Computing Sciences and Software Engineering (Dordrecht: Springer), 415–419.

Nithya, R., and Santhi, B. (2017). Computer aided diagnostic system for mammogram density measure and classification. Biomed. Res. 28, 2427–2431.

Reichmann, W. M., Maillefert, J. F., Hunter, D. J., Katz, J. N., Conaghan, P. G., and Losina, E. (2011). Responsiveness to change and reliability of measurement of radiographic joint space width in osteoarthritis of the knee: a systematic review. Osteoarthritis Cartilage 19, 550–556. doi: 10.1016/j.joca.2011.01.023

Semmlow, J. L. (2004). Bio Signal and Biomedical Image Processing: MATLAB-Based Applications (Signal Processing). New York, NY: Taylor & Francis Inc.

Stemcellsdoc's Weblog. (2011). Kellgren-Lawrence Classification: Knee Osteoarthritis Classification and Treatment Options. Available online at: https://stemcelldoc.wordpress.com/2011/11/13/kellgren-lawrence-classification-knee-osteoarthritis-classification-and-treatment-options/

Keywords: knee radiography, osteoarthritis (OA), KL grading, Hu's invariant moments, K-NN

Citation: Gornale SS, Patravali PU and Hiremath PS (2020) Automatic Detection and Classification of Knee Osteoarthritis Using Hu's Invariant Moments. Front. Robot. AI 7:591827. doi: 10.3389/frobt.2020.591827

Received: 05 August 2020; Accepted: 22 September 2020;

Published: 16 November 2020.

Edited by:

Manza Raybhan Ramesh, Dr. Babasaheb Ambedkar Marathwada University, India

Reviewed by:

Rakesh Ramteke, Kavayitri Bahinabai Chaudhari North Maharashtra University, India

Copyright © 2020 Gornale, Patravali and Hiremath. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pooja U. Patravali

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
