94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Internet Things, 18 March 2024

Sec. Security, Privacy and Authentication

Volume 3 - 2024 | https://doi.org/10.3389/friot.2024.1296599

This article is part of the Research TopicSocio-technical Cybersecurity and Resilience in the Internet of ThingsView all 8 articles

The security, privacy, and safety issues around Female-oriented technologies (FemTech) and data can lead to differential harms. These complex risks and harms are enabled by many factors including inadequate regulations, the non-compliant practices of the industry, and the lack of research and guidelines for cyber-secure, privacy-preserving, and safe products. In this paper, we review the existing regulations related to FemTech in the United Kingdom, EU, and Switzerland and identify the gaps. We run experiments on a range of FemTech devices and apps and identify several exploitative practices. We advocate for the policymakers to explicitly acknowledge and accommodate the risks of these technologies in the relevant regulations.

Generally known and referred to as female-oriented technologies (aka female technologies or “FemTech”), FemTech is a term applied to the collection of digital technologies focused on women’s health and wellbeing, as the majority of the industry talks about its users. We, however, acknowledge that these products are available for people across all gender identities. FemTech products come in all forms of types and applications, ranging from mobile period apps to fertility-tracking wearables to IVF services on the blockchain. FemTech Analytics, a strategic analytics agency focused on the FemTech sector suggests several sub-sectors1. These sub-sectors have different market shares and include Pregnancy and nursing (21%), Reproductive health & contraception (17%), Menstrual health (14%), General healthcare (14%), Pelvic and uterine healthcare (10%), Sexual health (9%), Women’s wellness (7%), Menopause care (6%), Longevity (2%), and Mental health (2%). Predicted to be a $75-billion industry by 2025, this sector is booming. Consequently, they also introduce new risks and harms associated with the collection of sensitive health, medical, and sex data that are not identified and addressed in the related regulations.

There is some research addressing the security and privacy (SP) risks that can originate from the mismanagement, misuse, and misappropriation of intimate data on issues such as abortion and (in)fertility (e.g., Mehrnezhad and Almeida, 2021). However, limited work has gone into exploring the laws, regulations, policies and standards surrounding FemTech’s SP risks. The existing work is either mainly around US regulations, e.g., Scatterday (2022); Rosas (2019), explores the gaps without demonstrating how such gaps can be exploited (e.g., McMillan, 2023), focuses on user studies (e.g., Mcdonald and Andalibi, (2023), or is limited to a subset of FemTech solutions such as fertility tracker apps (Mehrnezhad and Almeida 2021).

Although a wide range of regulations may concern the data types collected by FemTech, the sector is yet to be properly regulated. Such regulations include the California Consumer Privacy Act (CCPA)2, Health Insurance Portability and Accountability Act (HIPPA)3, Federal Food, Drug, and Cosmetic Act (FD&C Act)4, Federal Trade Commission Act5, the General Data Protection Regulations (GDPR)6, the Swiss Federal Act on Data Protection7, United Kingdom Medicines & Healthcare products Regulatory Agency (MHRA)8, and the EU Medical Devices regulation9. Note that there is a range of standards related to FemTech, e.g., the ISO 13485 Medical devices10 and ISO 3533:2021 Sex toys (Design and safety requirements for products in direct contact with genitalia, the anus, or both)11. Here we only focus on the related regulations with standardisation beyond the scope of this paper.

We conduct our studies in the United Kingdom and Switzerland. These two countries are particularly interesting since they are not EU members. However, they have significant business operating in the EEA which makes them relevant to the EU regulations including the general data protection laws and medical and health ones. Specifically, we aim to focus on laws and regulations as they pertain to Europe, the United Kingdom and Switzerland, so as to complement the work that is ongoing regarding laws and regulations in the US (Scatterday, 2022; Rosas 2019). Specifically, we aim to answer the following research questions:

• RQ1: What gaps exist in the applicable laws and regulations when it comes to female-related data?

• RQ2: How do FemTech systems (apps, websites, IoT devices) misuse these gaps in the regulations, either intentionally or unintentionally?

• RQ3: How do these systems violate the applicable laws and regulations?

We review the existing regulations related to FemTech in the United Kingdom, EU, and Switzerland (as shown in Table 1). We run experiments on a range of FemTech devices, apps, and websites (as shown in Figure 1 and Table 2) and identify several exploitative practices. Our results show that there is indeed a gap in the existing laws and the current FemTech devices, apps, and systems are collecting a wide range of sensitive data about the users and others such as partner(s), baby/child, family and friends. We advocate for policymakers to explicitly acknowledge and accommodate the risks of these technologies in the relevant regulations.

Table 1. List of regulations in EU, United Kingdom, and Switzerland related to FemTech systems and data.

Figure 1. Examples of Femtech products (IoT, Apps) and their categories. Images have been borrowed from the products’ websites and modified for presentation in this paper. These categories are based on FemTech Analytics, a strategic analytics agency focused on the FemTech sector (femtech.health).

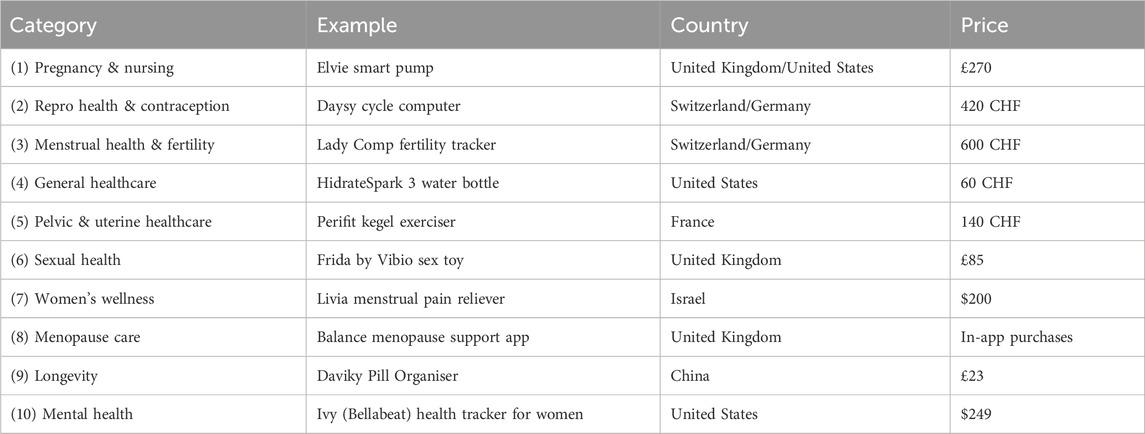

Table 2. Examples of FemTech digital solutions, categories, company’s country, and price. These categories are based on FemTech Analytics, a strategic analytics agency focused on the FemTech sector (femtech.health).

Women have been discriminated against in medical and health research in many ways. For instance, in 1977, the US Food and Drug Administration (FDA) excluded women of childbearing age from taking part in drug trials leading to women being underrepresented in drug trials ever since (Nayeri, 2021). The same trend has been followed by technology companies where their solutions are mainly tailored to the male body. In response, FemTech solutions have stepped in and the COVID-19 pandemic has contributed to the massive digitisation of healthcare, including FemTech, too.

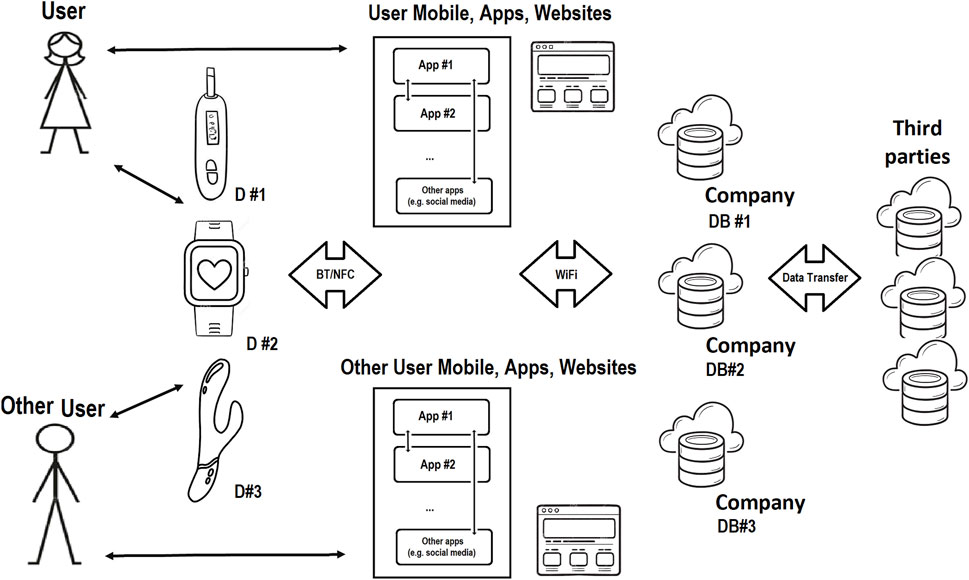

FemTech products include mobile apps, connected devices and online services covering menstruation, menopause, fertility, pregnancy, nursing, sexual wellness, and reproductive healthcare, to name a few categories. The SP of FemTech can be investigated by looking into IoT hardware, product websites, mobile apps, cloud datasets, etc. Figure 2 shows the FemTech ecosystem. However, the security and privacy of the user and data in FemTech are more complex than in some other contexts due to the nature of the data and the range of users (Coopamootoo et al., 2022).

Figure 2. FemTech IoT Ecosystem; data can be entered into these systems via user putting it in the app, website, or device, or automatically collected by the smart device, app, and website. Data is transferred to other places e.g., the company’s database in the cloud or sold to third parties.

In Mehrnezhad et al., 2022b), it is suggested that FemTech privacy should be looked at via different lenses. These include the cases where somebody (e.g., a company) has user personal data but the user does not–inverse privacy (Erickson et al., 2022), when peer pressure causes people to disclose information to avoid the negative inferences of staying silent–unravelling privacy (Peppet, 2011), when the privacy of others (e.g., child, partner, family, friend) also matters–collective privacy (Almeida et al., 2022; Almeida et al., 2023), and when systems should also focus on the intersectional qualities of individuals and communities–differential vulnerabilities (Mehrnezhad and Almeida 2021). Multiple FemTech threat actors have been identified in Mehrnezhad et al. (2022b). These interested parties include, but are not limited to: (ex-)partner and family, employers and colleagues, insurance firms, advertising companies, political and religious organisations, governments, and medical and research companies.

Such threat actors may exploit FemTech systems in various ways by performing attacks at different points of the ecosystem, e.g., human dimensions, hardware vulnerabilities, dataset attacks, app and website exploits, etc. A number of system studies have been performed on the security and privacy practices of FemTech. Examples of such system studies include analysis of the data collection practices of the period tracking app ecosystem and their policies (Shipp and Blasco, 2020), measuring the tracking practices of FemTech IoT devices Mehrnezhad et al., 2022b; Almeida et al., 2022), fertility apps and their compliance with the GDPR (Mehrnezhad and Almeida 2021), as well as traffic analysis and policy review (with a focus on HIPPA) of a subset of iOS apps (Erickson et al., 2022). Limited work has gone into the SP assessment of FemTech IoT devices (Valente et al., 2019). The SP community has yet to properly investigate the data collection of FemTech ecosystems, (lack of) implemented security and privacy-enhancing technologies (PETs), the existing vulnerabilities, and potential SP measures to mitigate them.

IoT systems interact with more intimate aspects of our lives, bodies, and environments than other technologies; meaning their risks may lead to critical safety issues. IoT systems–which are yet to be regulated, create new opportunities for data collection than just apps and have the potential to compromise user security and privacy more significantly. We argue that the intersection of health and medical solutions, user general data, and the data produced and collected by IoT devices and apps are putting and will continue to put FemTech users at greater risks, as evident by the ongoing research after the overturning of Roe vs. Wade (Mcdonald and Andalibi, 2023).

The methods we use fall into two groups: reviewing the regulations and conducting system studies.

Various aspects of FemTech data and systems make it challenging to point to one single law for the protection of FemTech data. The data collected by such technologies can be related to regulations around general data protection, work discrimination, software, apps, IoT, medical and health, and human rights. We focus on the general data protection laws and those concerning medical and health data. More specifically, we review the General Data Protection Regulations (GDPR), the Swiss Federal Act on Data Protection (FADP), the United Kingdom Medicines & Healthcare Products Regulatory Agency (MHRA), and the Regulation (EU) 2017/745 for Medical Devices.

For each law, we go through its public documents and manually search for mentions of Fem-Tech data via a few keywords. For building these keywords set we use the categories in Figure 1 and expand on it. Our keywords include, but are not limited to: Fem-Tech, women, period, fertility, pregnancy, abortion, fetus, baby, health, sex, menopause, mental health, reproductive, contraception, nursing, longevity, wellness, pelvic, uterine, breast, milk, female, cycle, birth, hormone, ovulation, lactation, menopause, etc. We identify the (lack of) related sections of each law regarding FemTech.

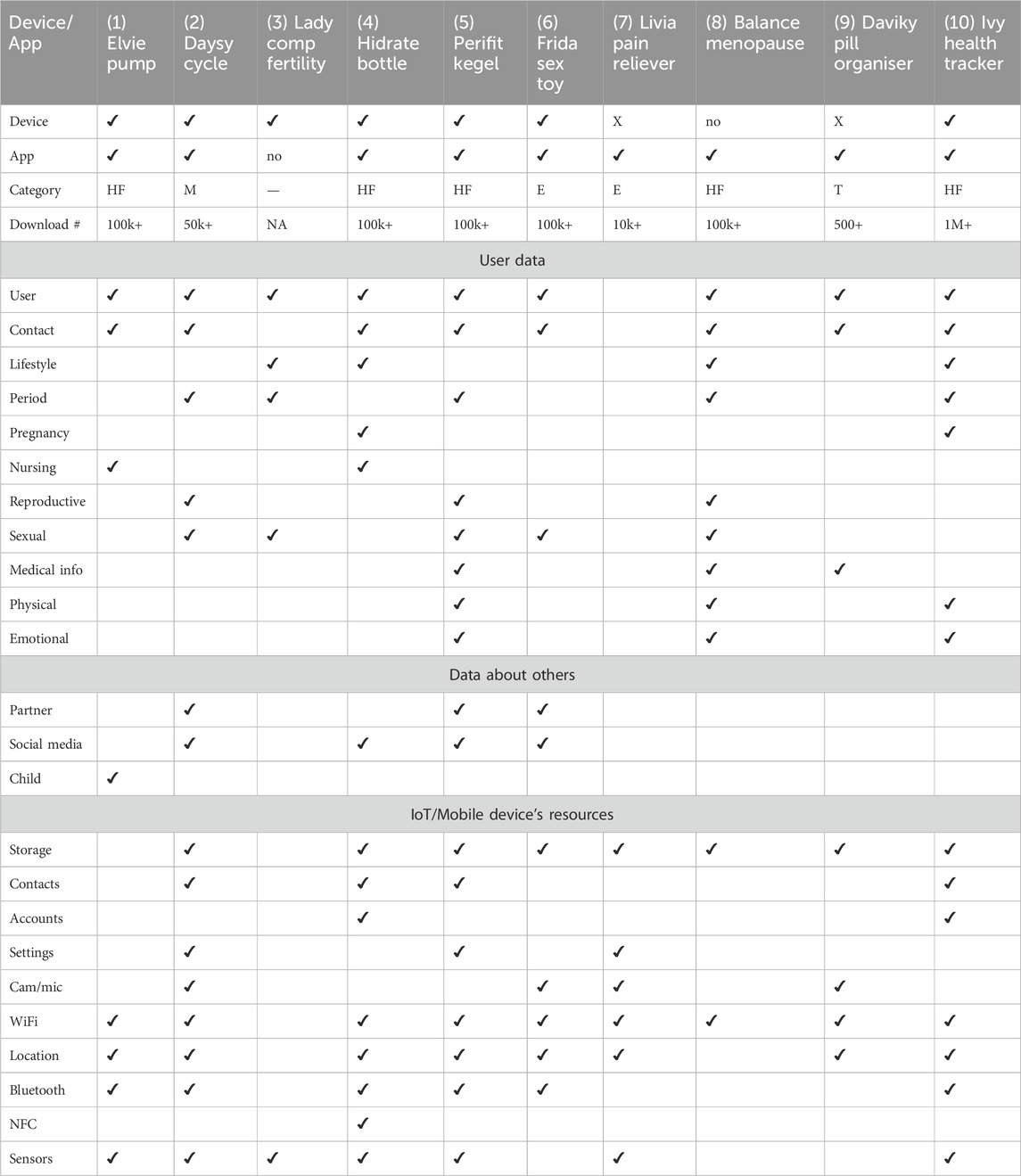

In this section, we explain our approach to investigating the data collection and privacy practices of a set of FemTech systems. In Figure 1, we have identified off-the-shelf products for the different FemTech categories. The products on the market can belong to multiple categories. For instance, a pelvic floor trainer can also be an intimate massager. Some of these products (e.g., a pill organiser) would also be categorised as general health solutions. Our system study experiments are performed in the United Kingdom between September 2022 to April 2023. We purchased these devices in either the United Kingdom or Switzerland by searching for FemTech products in each category. Table 3 shows that six of these devices (no.: 1,2,4,5,6,10) are connected to an app, one does not offer an app and is a standalone device (no.: 2), two are not connected to their apps (no.: 7,9), and one is only an app (no.: 8). These devices and apps are manufactured in various countries including United Kingdom, United States, Switzerland, Germany, France, Israel, and China and their price varies based on the product (from free apps with in-app purchases to £500–600). We chose this combination for two reasons. First, we wanted to cover a range of products from different brands with various functionalities and features. Second, some of these categories do not offer off-the-shelf IoT devices and are limited to apps or non-IT products only.

Table 3. Data collected by FemTech IoT devices and apps. Devices with X are not connected to their associated apps. Android App categories include: Health and Fitness, HF; Medical, M; Entertainment, E; and Tools, T.

We installed all the Android apps associated with these products from the Google Play App Store. In the case of IoT devices, we set them up, i.e., charging them, turning them on and connecting them to the Android app. We then started using these devices and their companion apps as an end-user. We observed what type of data each of these devices collect either via the user’s manual input (e.g., name and age) or automatic data collection via the device’s sensors and other resources, e.g., access to phone contacts. These data types are presented in Table 3. For these experiments, we followed the same structure of recent papers (Mehrnezhad et al., 2022b; Almeida et al., 2022; Mehrnezhad et al., 2022a). Two of the authors repeated this process for each app independently (on two Google Pixel 6 phones) and logged their observations. If there was an inconsistency in the result, the experiment was repeated jointly for a third time.

The ePrivacy Directive12 (“ePD,” aka “cookie law”) provides supplementary rules to the GDPR. According to the ePD website, publishers must rely on user consent when collecting and processing personal data using non-mandatory (not strictly necessary for the services requested by the user) cookies or other technologies. This is in accordance with the guidance given by the European Data Protection Board and the ICO. To comply with the GDPR, and according to the ICO guidelines, the online service providers (e.g., product websites and Android apps) are required to inform the users about tracking technologies (e.g., cookies), their purpose and reasons, and obtain the person’s consent to use the tracking data.

This consent must involve some form of unambiguous positive action (e.g., ticking a box and clicking a link) and be separated from other matters (e.g., terms and conditions and privacy policy). In order to avoid “nudge behaviour,” the privacy consent should allow the user to make a choice, therefore it should include options such as Accept (Yes, Agree, Allow, etc.) and Reject (No, Disagree, Block, etc.). If a privacy notice only includes Accept and requires the user to engage with the notice and accept the settings before they can access an online service’s content, they are presenting the user with a tracking “wall.” Such user consent is not considered valid if the use of this tracking wall nudges the user to agree to their personal data being used by the company or any third parties as a condition of accessing the service. Similar to the above, the consent should not highlight Accept over Reject and other options. The online services should enable the user to withdraw the previously given consent with the same ease that they gave it. The service providers should not rely on the other control mechanisms (e.g., browser settings or mobile settings) as users’ opt-out mechanism. Pre-enabling the non-essential tracking technologies without users taking positive action before it is set on their device does not represent valid consent and is a violation.

In order to highlight the non-compliant practices of these devices and systems, we followed the same methods we used in Mehrnezhad (2020); Mehrnezhad and Almeida (2021) and tested the websites and apps of these products for their tracking practices. For websites, we opened each website on Chrome on a MacBook laptop in order to observe (i) if there is a cookie (privacy) notice, and (ii) what the user control options were. For apps, when we installed each app on an Android device, we opened it for the first time as well as later (a few times), and again to test if there was a cookie (privacy) notice and the control options. In order to review the privacy policies, when there was a link available, we followed the same approach used in the review of the regulations by looking for FemTech-related keywords.

To study the tracking behaviour of the websites of these devices, we used Brave13 (a privacy-oriented browser) to identify how many trackers are activated when the website is loaded for the first time, and before any engagement with the cookie notice. Brave uses a block-by-design mechanism that blocks and reports ads and website trackers while the webpage is getting parsed. For identifying the app trackers, we use the Exodus Privacy app (a privacy audit platform for Android apps)14 to find the number and types of trackers within each app. Exodus uses static analysis (the evaluation of the app code without executing it) to find the tracker’s code signature in an app’s APK.

In this section, we provide the results of our review of the laws and regulations.

Due to Brexit, and since the EU GDPR is an EU regulation and no longer applies to the United Kingdom. If a company operates inside the United Kingdom, they need to comply with the Data Protection Act 2018 (DPA 2018). According to the ICO, the provisions of the EU GDPR have been incorporated directly into United Kingdom law as the United Kingdom GDPR. In practice, there is little change to the core data protection principles, rights and obligations.

In the GDPR, personal data is defined as: “information that relates to an identified or identifiable individual.” The GDPR recognises some types of personal data as more sensitive, referred to as “special category data,” and gives them extra protection15. This data includes information that reveals racial or ethnic origin, political opinions, religious or philosophical beliefs, trade union membership, as well as genetic data and biometric data, and data concerning health, sex life, and sexual orientation. The GDPR prohibits the processing of special category data. This requirement is on top of all the other subject rights for general personal data.

When we search in the GDPR articles and guidelines, Fem-Tech data categories are not mentioned directly. There is an overlap between FemTech data and some of the special categories of data, e.g., health, sex life, sexual orientation, and potentially genetic, biometric data, and even racial or ethnic origin, political opinions, religious or philosophical beliefs. The GDPR defines the following data:

Health data: “data concerning health means personal data related to the physical or mental health of a natural person, including the provision of healthcare services, which reveal information about his or her health status.”

Genetic data: “means personal data relating to the inherited or acquired genetic characteristics of a natural person which give unique information about the physiology or the health of that natural person and which result, in particular, from an analysis of a biological sample from the natural person in question.”

Biometric data: “means personal data resulting from specific technical processing relating to the physical, physiological or behavioural characteristics of a natural person, which allow or confirm the unique identification of that natural person, such as facial images or dactyloscopic data.” It does not define data concerning sex life, sexual orientation, racial or ethnic origin, political opinions, or religious or philosophical beliefs.

A few more focused guidelines and documents have been developed around the special category data including the European Data Protection Board (EDPB)’s guidelines for genetic data and biometric data. However, to the best of our knowledge, there aren’t any specific data protection regulations set for “Fem-Tech data” when collected and processed beyond health and medical clinics.

Switzerland is not an EU member, and nor is it a member of the larger European Economic Area (EEA). Swiss companies don’t have to obey the GDPR. However, they have to obey the GDPR when they are operating in the EEA. The main data protection law of Switzerland is the Federal Act on Data Protection (FADP). FADP’s definitions include a category of sensitive personal data. Sensitive personal data is defined in four groups: data on 1) religious, ideological, political or trade union-related views or activities, 2) health, the intimate sphere or the racial origin, 3) social security measures, and 4) administrative or criminal proceedings and sanctions. Accordingly, in addition to valid consent for personal data, consent must be given expressly in the case of processing sensitive personal data or personality profiles. Similar to the GDPR, the FADP gives sensitive data more protection.

Switzerland is implementing new legislation to better protect its citizens’ data: the new Federal Act on Data Protection (nFADP), will come into effect on 1st September 2023. This revision was intended in particular to bring it closer to European data protection legislation. One of the main changes is in the definition of sensitive data. These categories of personal data will continue to be considered sensitive under the Revised FADP. For instance, the Revised FADP will add two new categories: genetic data and biometric data that uniquely identify an individual.

Both GDPR and nFADP mandate a Data Protection Impact Assessment (DPIA) on special categories and sensitive data. DPIA is a process to help companies identify and minimise the data protection risks of a project16. In general, by going through the guidelines and the description of data protection laws, we did not find any explicit mention of the FemTech keywords in the FADP. We also observed that the FADP is less expanded, developed, specified, and potentially enforced when it comes to sensitive data.

The Medicines and Healthcare Products Regulatory Agency (MHRA) is an executive agency of the Department of Health and Social Care in the United Kingdom which is responsible for ensuring the safety of medicines and medical devices. Their website provides a range of guidance17 and regulations concerning health and medical services. MHRA has a guidance document on medical device stand-alone software including apps. It was published in 2014 and updated in 2022. It is clarified that “a medical purpose is determined by what the manufacturer states in the device’s labelling, instructions for use and any promotional materials.” It is a helpful document to guide developers in identifying how to progress within the regulatory environment and to distinguish whether the app falls within the scope of being a “medical device.” If the device or app is a medical device then it must comply with the Medical Devices Regulations200218. This regulation is more than 20 years old and does not provide any content on the SP aspects of modern medical devices, e.g., apps and connected devices. There is also no mention of FemTech-related data.

More recently, the MHRA has been working on a new Software and AI as a Medical Device Change Programme19 where one of its 11 work packages (WP5) is “Cyber Secure Medical Devices.” This WP’s deliverables include: 1) Secondary Legislation [Cybersecurity requirements for medical devices and IVDs (in vitro diagnostic medical devices)], 2) Regulatory Guidance (Guidance elucidating cybersecurity requirements for medical device and IVDs), 3) Best Practice Guidance (Management of unsupported software devices), 4) Processes (Reporting of relevant cybersecurity vulnerabilities).

The United Kingdom has been complying with EU medical and health regulations for years. However, due to Brexit, the United Kingdom does not necessarily comply with EU medical regulations anymore. For medical devices, Switzerland follows what is specified by the EU system of compliance assessment and certification, based on bilateral agreements. Hence, we also review the EU Regulation for Medical Devices. In the EU, medical devices must undergo a conformity assessment to demonstrate they meet legal requirements to ensure their safety and performance as intended. They are regulated at the EU Member State level, but the European Medicines Agency (EMA) is involved in the regulatory process. The Regulations on Medical Devices [Regulation (EU) 2017/745] and on In Vitro Diagnostic Devices [Regulation (EU) 2017/746] changed the European legal framework for medical devices, coming into effect in 2021 and 2022, respectively. In this section, we focus on the former.

This regulation defines “medical device” as “any instrument, apparatus, appliance, software, implant, reagent, material or other article intended by the manufacturer to be used, alone or in combination, for human beings for one or more of the following specific medical purposes.” They include diagnosis, prevention, prediction, monitoring, prognosis, treatment, alleviation, and compensation of disease, injury or disability, investigation, replacement or modification of the anatomy or of a physiological or pathological process or state, providing information by means of in vitro examination of specimens derived from the human body (e.g., organ, blood and tissue donations). They add that “devices for the control or support of conception” shall also be deemed to be medical devices. The following products shall also be deemed to be medical devices. It defines an “invasive device” as “any device which, in whole or in part, penetrates inside the body, either through a body orifice or through the surface of the body.” This regulation also applies to clinical investigations concerning such medical devices.

As general requirements for Electronic programmable systems, this document briefly says that for software devices or those that incorporate software, the development and risk management (i.e., information security, verification and validation) should be according to the state-of-the-art practices. The general safety requirements take into account the intended purpose which is set by the manufacturer. The parts related to risks and risk management are for safety risks and there is no mention of SP risks. Article 110 of this regulation is on data protection stating: “1) Member States shall apply Directive 95/46/EC to the processing of personal data carried out in the Member States pursuant to this Regulation. 2) Regulation (EC) No 45/2001 shall apply to the processing of personal data carried out by the Commission pursuant to this Regulation.” Note that the GDPR supersedes the Directive 95/46/EC and repeals Regulation (EC) No 45/2001.

Overall, we did not find any direct mention of FemTech-related data and its protection in these regulations. Similar to the United Kingdom MHRA, the European Commission also has a guidance document on Qualification and Classification of Software in Regulation20 (EU) 2017/745–MDR and Regulation (EU) 2017/746–IVDR, released in October 2019. In comparison, we found the United Kingdom guidance more comprehensive in terms of helping developers decide about the intended use of their product as a medical device.

In this section, we present the results of data collection and tracking practices as well as the privacy policy review.

In Figure 1, we have identified off-the-shelf products available for purchase in Switzerland and/or the United Kingdom, with a range of functionalities. While we purchased a device per category, these devices may belong to different categories and be advertised across categories as well as FemTech and/or general health.

We examined what types of data these devices collect, as presented in Table 3. We broadly categorise this data into three groups: user data, data about others, and device/phone data. Our examinations show that user data include, but are not limited to: Name (e.g., photo, age, gender), Contact (e.g., mobile, email, address), Lifestyle (e.g., weight, diet, sleep), Period (e.g., cycle length, ovulation days), Pregnancy (e.g., test results, due dates, IVF), Nursing (e.g., time, volume, pain) Reproductive organs (e.g., cervical mucus, biofeedback, muscle strength), Sexual activities (e.g., date, contraceptives, orgasm), Medical information (e.g., medication type, blood pressure, lab reports scan). Physical symptoms (e.g., headache, constipation), Emotional symptoms (e.g., happy, anxious). These systems also ask for or automatically collect data about others including: Baby/child (e.g., nursing, sleep cycles, fetal movements), Social media profiles, forums, or plugins (e.g., Facebook, Spotify), Partner (e.g., details of partnered sex activities, name, age, photo). These technologies might even ask about the medical history of the user’s family. Finally, these systems also have access to the devices’ resources, e.g., camera, microphone, device files/and storage, phone’s contacts and calls, communicational sensors (WiFi, Bluetooth, NFC), motion and environmental sensors from the phone or the device (e.g., temperature, pressure, Co2).

For example, Daysy Cycle Computer, Hidrate Spark3 Smart Bottle, and Perifit Kegel Exerciser collected data in all categories (user, partner, and device) quite intensively. There were also some devices which collected minimal data. For instance, Lady Comp Fertility Tracker collects some form of user data (e.g., age), cycle information, sex, and has a thermometer to measure user basal temperature. This device does not offer an app and has a memory for a year. The manual suggests that this data can be backed up by connecting the device to a PC via a cable. However, via testing, we could not use such a feature. Note that Table 3 only represents the data collected by the device and app itself and does not show the data that may be collected via the websites, e.g., for purchasing, creating a profile account for networking, and subscribing. For instance, the Livia Menstrual Pain Reliever device does not collect any data about the user, though its associated app (which is not connected to the devices) does. Also, its website requires user and contact information for purchasing and subscription and offers a review platform via a third-party service too.

As can be seen, not only do these systems collect data about the user (and others), but the majority of them have access to mobile and device resources too. Some of these permissions are marked as dangerous according to Google’s protection levels. If not justified well, the risks of access to storage, contacts, camera, microphone, and location are more visible. However, specific permissions such as access to system Settings and other Accounts on the device also impose SP risks. Similarly, there is a body of research [e.g., Delgado-Santos et al. (2022)] on how sensors can be used to break user privacy. This can become more critical in FemTech systems since they are associated with user health.

As demonstrated in Table 4, all the apps and websites that we studied appear to violate the GDPR in terms of asking for valid consent. They either nudge the user into accepting a highlighted accept, limit the access behind a privacy notice wall, bundle the privacy notice with other matters (e.g., terms and conditions), or don’t provide any privacy consent. The only exception is the Balance Menopause App which presented valid consent. However, its website did not.

Table 4. Privacy notice of apps and websites and GDPR violations. The bold options in the Website cookie notice column is the highlighted option in the notice.

In addition, our review of the privacy policies of these apps indicates that 4 apps included a reference to or a description of FemTech-related data. For instance, the DaysyDay app highlights that “Within this framework of the contractual relationship between you and us, health data such as your body temperature, menstruation, etc. may also be processed. For such processing, we need your explicit consent. By submitting this data, you are granting us that consent.” Yet, they also say: “Our online services are not subject to HIPA.” These statements are problematic since explicit consent is in conflict with obtaining consent via submitting such data. Similarly, Prifit’s privacy policy explains “Sensitive personal data” which is in accordance with the GDPR special data category and lists the data items that the app collects. However, it does not clarify how such data is given extra protection. Balance app’s policy has a dedicated section for “health data” by defining it and explaining their approach regarding explicit consent for such data. However, again, it is not clear how such data is treated with care. Bellabeat’s policy has a similar content on defining sensitive personal data to Prifit. It then says: “If the information we collect is health data or another special category of personal data subject to the European Union’s General Data Protection Regulation, we ask for your explicit consent to process that kind of data. We acquire this consent separately when you take actions resulting in our receiving the data, for instance when you use the menstrual calendar feature.” However, when trying to use the app by signing up via email, another Privacy Consent wall was shown which required the user to agree to tick two boxes: one general privacy policy and terms of use and one stating: “I agree to the processing of my personal health data for providing me Period Diary app functions, See more in Privacy Policy.”

Table 5 shows the apps and websites of all these products and the trackers. Our Exodus analysis revealed that these apps have between 1 and 9 trackers. In addition, the majority of these websites are tracking the users before the user engages with the cookie notice. One particular website (hidratespark.com/) increased the number of these trackers to more than 70 (and counting) while we kept the website open and without any interaction with it. It also attempted to use motion sensors on a mobile device if accessed from one. In contrast, the Daysy Cycle Computer and Lady Comp Tracker both included only one tracker (Google Tag Manager).

Recently, there are some efforts to enforce the law in the FemTech space (e.g., the ICO’s recent project on auditing FemTech apps21). Here we discuss the risks of FemTech, our findings, and that a more proactive approach to policy-making and enforcement is needed in this sector.

As we discussed earlier, multiple threat actors have been shown to be interested in such data (Mehrnezhad et al. 2022b) including(ex-)partner and family, employers and colleagues, insurance firms, advertising companies, political and religious organisations, governments, and medical and research companies. For instance, some of these products can have shared usage, e.g., via a remote partner mode. Access to such intimate data (e.g., reproductive health) without informed and continuous user consent may enable tech abuse such as external pressure to become pregnant (WHO, 2020; Mehrnezhad and Almeida, 2021) and/or cyberstalking (Stevens et al. 2021; Chan 2021). The existing regulations are yet to cover several aspects of online safety for collectively created and shared data.

FemTech solutions have already found their way to organisational usage (Erickson et al. 2022) There are concerns around how workplace monitoring threatens women’s equity (Brown 2021; Veliz 2022; Brown 2020) e.g., the case of pregnancy redundancies and impact on promotions (Maternity Action, 2019) or discrimination due to infertility (van der Berch 2010). Given that FemTech solutions (e.g., fertility apps) are already sharing these data with third parties including employers (Harwell 2019; Scatterday 2022) without user consent (Mehrnezhad and Almeida 2021), these technologies could be used to further gender inequality at work. Similarly, work-related regulations, policies and guidelines could be blindsided when it comes to SP of such data. Take the “BS 30416, Menstruation, menstrual health and menopause in the workplace–Guide” as an example22 with no content on the SP issues of the data related to these practices.

Health insurance discrimination on the basis of health status is already a pressing issue (Crossley 2005; Rosenbaum 2009) and FemTech data can be used for such applications (Scatterday 2022). When an insurance company has access to the health data of users, parents, siblings and other relatives, it is directly putting the privacy of others at risk too. If a possibility of a pre-existing medical condition (e.g., infertility or breast cancer) is identified, such insurance firms will not support the person or do it at much higher rates. A situation that might continue even after the death of the users; impacting their offspring. In such situations, customers have almost zero agency in objecting to the decision since the existing practices (including the regulations) do not give such support either in principles and/or the enforcement.

During the pandemic selling and sharing medical and health data, e.g., with medical and research companies became a common practice. This trend has contributed to the ongoing conversations about the sensitive nature of such data and legal complications (Powles and Hodson 2017; Solon 2018). FemTech data deals with a complex mix of health, medical, biometric, and genetic data, alongside sexual activities/orientation, reproductive decisions, and even religious and political views (Mehrnezhad and Almeida 2021) and can be of particular interest to cyber-criminals e.g., for blackmailing. Data breaches in digital health and FemTech are even more serious because of the sensitive nature of the data (Veliz 2022; Rosas 2019), particularly when taking socio-cultural differences into account; more marginalised groups will have more to lose from such disclosures.

From app-only data collection to sensor-enabled FemTech devices, with extra processing via advanced algorithms, e.g., AI, FemTech data reveal people better than they know themselves. Reportedly, these apps share sensitive data (e.g., sex activity) with third parties (e.g., Facebook) the moment the user opens the app, even without a Facebook account Int (2019). Apart from the academic research on FemTech tracking practices such as (Mehrnezhad and Almeida 2021; Shipp and Blasco 2020), news reports have also paid attention to this matter (Page 2022), including the cases of selling FemTech data by data marketplaces (Cox 2022), the interest of political and religious organisations in such data (Glenza 2019) and the potential for new opportunities for spreading health-related misinformation (Pennycook et al. 2021). Additionally, FemTech data can be particularly of interest to governments. The recent debates around the overturn of the abortion law in the US Supreme Court (Page 2022) has shown very well how FemTech (e.g., apps) can enable such a systematic tracking and controlling of women’s bodies (Alvarez 2019; Shoichet 2020).

Our review of the related regulations, in combination with our system studies, highlight that the existing risks can put the users at differential risks.

Our critical review of FemTech-related regulations shows that they are inadequate in addressing the risks associated with these technologies. The EU and United Kingdom medical devices regulations don’t have any references to FemTech data and user protection. The GDPR and Swiss FADP have references to sensitive and special category data which overlap with FemTech data. Yet, there are several areas for expansion and improvement.

While GDPR gives extra protection to special category data, there are 10 exceptions: explicit consent, employment, social security and social protection (if authorised by law), vital interests, not-for-profit bodies, made public by the data subject, legal claims or judicial acts, reasons of substantial public interest (with a basis in law), health or social care (with a basis in law), public health (with a basis in law), and archiving, research and statistics (with a basis in law). Special category data cannot be used for solely automated decision-making (including profiling) that has legal or similarly significant effects unless there is explicit consent or substantial public interest conditions are met. The exceptions of data protection regulations (e.g., GDPR) are indeed debatable. While the analysis of these exceptions in the wild is beyond the scope of this paper, we believe that this is an area that will unfold significantly in the future. For instance, consider the first exception: explicit consent. Given the sensitive nature of FemTech data and its differential and complex risks and harms (Mehrnezhad and Almeida 2021), how do we guarantee that the user is fully aware of the consequences of such consent and makes an informed and later continues decision? More research is needed to fill in these research gaps.

When reviewing the current general data protection regulations and the medical ones, we find several gaps and disconnections between the two sets of regulations. We would expect a higher level of safeguarding in these products where personal health data is recorded, even if the app does not fall within current medical device definitions and regulations. This is supposed to be covered by the special categories of data in the general data protection laws. However, in practice and based on our experiments, it is not enforced properly. For instance, we did not find any appropriate consent in apps and websites tested and whether or not any extra protection is given to sensitive FemTech data. As we discuss in Almeida et al. (2022), the fact that these products collect data about others (partner, baby/child, family, etc.) adds to these complexities.

When registering an app in the app store, the developers select the most appropriate app category. However, due to the ambiguity in the definition of these categories, the doors are open to potential misuse and gaming by the registrant. At the time of this writing, there are 38 categories on the Google Play App Store including “Medical,” and “Health and Fitness” categories. Yet, as reported in Table 3, only one of these apps (#2) is listed as medical, 5 listed as health and fitness, and the rest include “Entertainment” or “Tool.” Miscategorising an app which contains medical records (such as user’s medical conditions and medicines, or family history) as Health & Fitness or other groups would enable the developers to avoid the potential consequences, for example, of remaining in the app market without drawing significant attention to it. As long as such apps and services make only general wellness claims -like tools, entertainment, health and fitness, they do not need to be vetted by health regulators or as seriously as one expects by the mobile app store.

The United Kingdom MHRA is developing a new Software and AI as a Medical Device Change Programme, where apart from a dedicated work package to cybersecurity (WP5), it also has one on Classification (WP2). The problem statement says: “Currently, the Medical Device Regulations 2002 (as amended) do not classify software proportionate to the risk it might pose to patients and public safety.” We believe that such efforts are required immediately to protect citizens against these risks.

We identified a range of inappropriate SP practices in a subset of FemTech systems. We showed that they do not present valid consent, they do not give extra protection to sensitive data, and track users without consent. These are some of the non-compliant practices within the current regulations. In Mehrnezhad et al. (2022b), we discuss that not only is such intimate data collected by FemTech systems, but also this data is processed and sold to third parties23. In Mehrnezhad and Almeida (2021), Mehrnezhad and Almeida (2023), we have discussed at length that complex harms and risks such as the re-identification of individuals based on health data (Goldacre et al. 2022) can differentially impact the users.

In addition, most of these products do not need a wide range of information about the users to deliver their services. Yet they continue to collect such sensitive data. Some of these practices could be due to factors such as copying and pasting an app code by developers without considering privacy-by-design principles. For instance, the app associated with Livia period pain reliever (which is approved by the FDA), is simply a guide on the use of the device. While interacting with it, we did not notice any data collection or permission requests. Yet, when we checked the app’s permissions, we noticed that the camera, music and audio, notifications, and photos and videos are listed. If turned on, this app is able to collect such data. This is clearly a bad practice from the developer side.

Non-compliance or poor adherence to laws and standards may arise for many reasons. There may be unintentional oversight or a deliberate attempt for commercial or other purposes. The developers themselves may be unaware of best practices and regulations in the area. Different solutions (websites, apps, IoT devices) developed in different territories may be subject to different regulations, yet regulators may not have the powers or resources to certify compliance or investigate potential non-compliance where no certification process exists. This might be the time to focus on more sectorial and domain-specific data protection regulations as we discuss next.

As discussed in this paper, two sets of regulations apply to FemTech solutions: general data protection regulations and medical and health regulations. However, as shown, alone or combined they fail to protect the user from malicious practices. In addition, a key complement to regulations is systems of certification, compliance testing and policing/penalties. Accordingly, providers and developers need to be aware of the regulations, guidance and best practices and have appropriate tools to develop and evaluate products. Currently, there are no entities well-equipped to provide such services.

We acknowledge that the legal framework of the medical and health sector is a combination of laws, standards, certifications, and beyond. For instance, ISO 13485 is specifically for products that fall within the criteria for a “medical device.” Implementation of ISO 13485 tends to draw with its alignment to data standards, as such products are subject to clinical trial validation, governed by ethics committees, who would likely question marginal data practices and so has a wider influence on the company and its marketing behaviour. Companies often deliberately frame their products as “non-medical” and, e.g., as “wellbeing” to avoid being subject to the medical device regulation. Hence, the period and cycle tracking apps are on the market free from regulation as it can be argued that the information is not used for clinical decision-making and guidance for treatment. Whereas ovulation tests (aka class I in medical devices regulations)/pregnancy tests (class II) are used and subject to regulation, even if ovulation tests are then associated with an app just for the purpose of cycle tracking.

We are now seeing more efforts in the policymaking space to recognise these issues. For instance, the EU is aiming to foster common European data spaces. Data spaces are data ecosystems, often domain-specific, in which data sharing should be possible between actors. One of the data spaces is the European Health Data Space24. This proposal is still under review and it is unclear when and how it will be implemented and enforced, let alone what kind of organisations fall under these definitions. We believe that the medical and health space is in need of domain-specific and sectorial regulations with attention to the needs of marginalised user groups such as women and those with physical and mental ability limitations. That, together with better enforcement of the existing regulations discussed in this paper can lead to more effective practices to protect the citizens’ security, privacy, and safety, while enabling them to improve the quality of their lives including their health via using these technologies without any risk or fear.

The SP issues around FemTech can lead to differential harm where complex risks are enabled by many factors including gaps in the regulations, non-compliant practices, the lack of enforcement, and limited research and guidelines for secure, privacy-preserving, and safe products. We reviewed the regulations related to FemTech in the United Kingdom, EU, and Switzerland and identified the gaps. We ran experiments on a range of FemTech devices, apps, and websites and identified several exploitative practices. We discussed our results and suggested that policymakers explicitly acknowledge and accommodate the risks of these technologies in the relevant regulations.

The original contributions presented in the study are included in the article/Supplementary material, further inquiries can be directed to the corresponding author.

MM: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Writing–original draft, Writing–review and editing. TV: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Writing–original draft, Writing–review and editing. MC: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Writing–original draft, Writing–review and editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the ETH Future Computing Laboratory, partially conducted when the first and second authors were working at ETH Zurich in 2022. This work has been supported by the UK EPSRC PETRAS National Centre of Excellence for IoT Systems Cybersecurity (EP/S035362/1), and the UKRI funded project, AGENCY: Assuring Citizen Agency in a World with Complex Online Harms (EP/W032481/2).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

3cdc.gov/phlp/publications/topic/hipaa.html

4fda.gov/regulatory-information/laws-enforced-fda/federal-food-drug-and-cosmetic-act-fdc-act

5ftc.gov/legal-library/browse/statutes/federal-trade-commission-act

6ico.org.uk/for-organisations/guide-to-data-protection/guide-to-the-general-data-protection-regulation-gdpr/

8gov.uk/government/organisations/medicines-and-healthcare-products-regulatory-agency

9ema.europa.eu/en/human-regulatory/overview/medical-devices

10iso.org/iso-13485-medical-devices.html

12eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A02002L0058-20091219

14reports.exodus-privacy.eu.org/en/

15ico.org.uk/for-organisations/guide-to-data-protection/guide-to-the-general-data-protection-regulation-gdpr/special-category-data/what-is-special-category-data/

16ico.org.uk/for-organisations/guide-to-data-protection/guide-to-the-general-data-protection-regulation-gdpr/accountability-and-governance/data-protection-impact-assessments/

17gov.uk/government/publications/medical-devices-software-applications-apps

18legislation.gov.uk/uksi/2002/618/contents/made

19gov.uk/government/publications/software-and-ai-as-a-medical-device-change-programme/

20ec.europa.eu/docsroom/documents/37581?locale=en

21datamatters.sidley.com/2023/04/20/femtech-has-been-warned-uks-ico-indicates-closer-scrutinization-of-femtech-apps/

22bsigroup.com/en-GB/our-services/events/events/2023/menstrual-and-menopausal-health-in-the-workplace/

23ftm.eu/articles/your-intimate-data-is-being-sold

24health.ec.europa.eu/ehealth-digital-health-and-careeuropean-health-data-space_en

Almeida, T., Mehrnezhad, M., and Cook, S. (2023). “The importance of collective privacy in digital sexual and reproductive health,” in The 17th annual UK Fertility Conference. arXiv preprint arXiv:2311.15432.

Almeida, T., Shipp, L., Mehrnezhad, M., and Toreini, E. (2022). Bodies like yours: enquiring data privacy in femtech. ACM Nord. doi:10.1145/3547522.3547674

Alvarez, P. (2019). House judiciary committee asks former orr director to clarify testimony on pregnant minors. CNN. Available at: https://edition.cnn.com/2019/03/22/politics/scott-lloyd-pregnant-minors/index.html

Brown, E. (2020). Supercharged sexism: the triple threat of workplace monitoring for women. SSRN 3680861

Brown, E. (2021). The femtech paradox: how workplace monitoring threatens women’s equity. Jurimetrics.

Chan, S. (2021). Hidden but deadly: stalkerware usage in intimate partner stalking. Introd. Cyber Forensic Psychol. Underst. Mind Cyber Deviant Perpetrators, 45–66.

Coopamootoo, K., Mehrnezhad, M., and Toreini, E. (2022). “i feel invaded, annoyed, anxious and i may protect myself”: individuals’ feelings about online tracking and their protective behaviour across gender and country. USENIX.

Cox, J. (2022). Data marketplace selling info about who uses period tracking apps. Available at: https://www.vice.com/en/article/v7d9zd/data-marketplace-selling-clue-period-tracking-data.

Crossley, M. (2005). Discrimination against the unhealthy in health insurance. U. Kan. L. Rev. 54, 73.

Delgado-Santos, P., Stragapede, G., Tolosana, R., Guest, R., Deravi, F., and Vera-Rodriguez, R. (2022). A survey of privacy vulnerabilities of mobile device sensors. ACM Comput. Surv. (CSUR) 54, 1–30. doi:10.1145/3510579

Erickson, J., Yuzon, J. Y., and Bonaci, T. (2022). What you don’t expect when you’re expecting: privacy analysis of femtech. IEEE Transactions on Technology and Society.

Glenza, J. (2019). Revealed: women’s fertility app is funded by anti-abortion campaigners. The Guardian Theguardian.com/world/2019/may/30/revealed-womens-fertility-app-is-funded-by -anti-abortion-campaigners

Goldacre, B., Morley, J., and Hamilton, N. (2022). Better, broader, safer: using health data for research and analysis. A Review Commissioned by the Secretary of State for Health and Social Care.

Harwell, D. (2019). Is your pregnancy app sharing your intimate data with your boss? The Washington Post. Available at: https://www.washingtonpost.com/technology/2019/04/10/tracking-your-pregnancy-an-app-may-be-more-public-than-you-think/.

How menstruation apps are sharing your data (2019). How menstruation apps are sharing your data. Priv. Int. Available at: https://privacyinternational.org/long-read/3196/no-bodys-business-mine-how-menstruations-apps-are-sharing-your-data.

Maternity Action (2019). Discrimination during maternity leave and on return to work, Available at: https://maternityaction.org.uk/advice/discrimination-during-maternity-leave-and-on-return-to-work/

Mcdonald, N., and Andalibi, N. (2023). “i did watch ‘the handmaid’s tale”’: threat modeling privacy post-roe in the United States. ACM Trans. Computer-Human Interact. 30, 1–34. doi:10.1145/3589960

McMillan, C. (2023). Rethinking the regulation of digital contraception under the medical devices regime. Med. Law Int. 23, 3–25. doi:10.1177/09685332231154581

Mehrnezhad, M. (2020). “A cross-platform evaluation of privacy notices and tracking practices,” in IEEE EuroS&P Workshop (EuroUSEC).

Mehrnezhad, M., and Almeida, T. (2021). “Caring for intimate data in fertility technologies,” in Acm CHI.

Mehrnezhad, M., and Almeida, T. (2023). “my sex-related data is more sensitive than my financial data and i want the same level of security and privacy”: user risk perceptions and protective actions in female-oriented technologies. EuroUSEC.

Mehrnezhad, M., Coopamootoo, K., and Toreini, E. (2022a). How can and would people protect from online tracking? Proc. Priv. Enhancing Technol. 1, 105–125. doi:10.2478/popets-2022-0006

Mehrnezhad, M., Shipp, L., Almeida, T., and Toreini, E. (2022b). “Vision: too little too late? do the risks of femtech already outweigh the benefits?,” in EuroUSEC 2022.

Nayeri, F. (2021). Is ‘femtech’ the next big thing in health care?. Available at: https://www.nytimes.com/2021/04/07/health/femtech-women-health-care.html, [Accessed April 2022]

Page, C. (2022). As roe v. wade reversal looms, should you delete your period-tracking app? Available at: https://techcrunch.com/2022/05/05/roe-wade-privacy-period-tracking

Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., and Rand, D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature 592, 590–595. doi:10.1038/s41586-021-03344-2

Peppet, S. R. (2011). Unraveling privacy: the personal prospectus and the threat of a full-disclosure future. Nw. UL Rev.

Powles, J., and Hodson, H. (2017). Google deepmind and healthcare in an age of algorithms. Health and technology 7, 351-367. doi:10.1007/s12553-017-0179-1

Rosas, C. (2019). The future is femtech: privacy and data security issues surrounding femtech applications. Hastings Bus. Law J. 15.

Rosenbaum, S. (2009). Insurance discrimination on the basis of health status: an overview of discrimination practices, federal law, and federal reform options: executive summary. J. Law, Med. Ethics 37, 101–120. doi:10.1111/j.1748-720x.2009.00423.x

Scatterday, A. (2022). This is no ovary-action: femtech apps need stronger regulations to protect data and advance public health goals. N. C. J. Law Technol. 23.

Shipp, L., and Blasco, J. (2020). How private is your period? a systematic analysis of menstrual app privacy policies. Proc. Priv. Enhancing Technol. 2020, 491–510. doi:10.2478/popets-2020-0083

Shoichet, C. (2020). In a horrifying history of forced sterilizations, some fear the us is beginning a new chapter. Available at: https://edition.cnn.com/2020/09/16/us/ice-hysterectomy-forced-sterilization-history/index.html.

Solon, O. (2018). Data is a fingerprint: why you aren’t as anonymous as you think online. Available at: https://www.theguardian.com/world/2018/jul/13/anonymous-browsing-data-medica l-records-identity-privacy

Stevens, F., Nurse, J. R., and Arief, B. (2021). Cyber stalking, cyber harassment, and adult mental health: a systematic review. Cyberpsychology, Behav. Soc. Netw. 24, 367–376. doi:10.1089/cyber.2020.0253

Valente, J., Wynn, M. A., and Cardenas, A. A. (2019). Stealing, spying, and abusing: consequences of attacks on internet of things devices. IEEE Secur. Priv. 17, 10–21. doi:10.1109/msec.2019.2924167

van der Berch, K. (2010). Courts’ struggle with infertility: the impact of hall v. nalco on infertility-related employment discrimination, 81. University of Colorado Law Review.

Veliz, C. (2022). Privacy is power: why and how you should take back control of your data. Int. Data Priv. Law.

World Health Organization (2020). Sexual and reproductive health: infertility. World Health Organization. Available at: https://www.who.int/reproductivehealth/topics/infertility/keyissues/en/.

Keywords: female-oriented technologies, FemTech, security, privacy, digital health, data protection regulations

Citation: Mehrnezhad M, Van Der Merwe T and Catt M (2024) Mind the FemTech gap: regulation failings and exploitative systems. Front. Internet. Things 3:1296599. doi: 10.3389/friot.2024.1296599

Received: 18 September 2023; Accepted: 08 February 2024;

Published: 18 March 2024.

Edited by:

Peter Novitzky, University College London, United KingdomReviewed by:

Sine Nørholm Just, Roskilde University, DenmarkCopyright © 2024 Mehrnezhad, Van Der Merwe and Catt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maryam Mehrnezhad, bWFyeWFtLm1laHJuZXpoYWRAcmh1bC5hYy51aw==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.