- 1 Department for Health Services Research, Division for Health Services, The Norwegian Institute of Public Health, Oslo, Norway

- 2 Faculty of Medicine, Institute of Health and Society, University of Oslo, Oslo, Norway

Introduction: The experiences of patients receiving health care constitute an important aspect of health-care quality assessments. One of the purposes of the national program of patient-experience surveys in Norway is to support institutional and departmental improvements to the quality of local health-care services. This program includes national surveys of patients receiving interdisciplinary treatment for substance dependence performed four times between 2013 and 2017. The aims of this study were twofold: (i) to determine the attitudes of employees towards these surveys and their use of the survey results, and (ii) to identify changes in patient experiences at the national level from 2013 to 2017.

Material and methods: Employees were surveyed in the week before each of the cross-sectional patient-experience surveys. One-way ANOVA and chi-square tests were used to assess differences between years, and content analysis was applied to the open-ended comments.

Results: Around 400 employees were recruited in each of the four survey years, and the response rate varied from 61% to 79%. The employees generally reported positive attitudes towards patient-experience surveys, and 40%–50% of them had implemented quality initiatives based on the results of the patient surveys. The mean score for the question on usefulness was higher than 3 (on a 5-point Likert scale) in all four surveys. In open-ended comments, many employees described the changes that had been made. The patient-experience surveys showed positive changes over time.

Discussion: The employees had positive views of patient-experience surveys, and around half of them had implemented quality initiatives. This suggests that employees find such surveys important and regard them as useful and actionable. The patient surveys showed positive changes in patient experiences over time, and the areas most commonly targeted by employees showed clear improvements in patient experiences at the national level.

1 Introduction

Measuring and reporting patient experiences and satisfaction have become important components of health-care quality assessments, owing to their links with patient safety and clinical effectiveness and to the aim of providing patient-centred care (1, 2). Such measurements are carried out routinely in health-care systems, including in Norway (3–7). In Norway, secondary health-care organizations, such as hospitals and specialized treatment facilities (including those providing interdisciplinary care for substance dependence), are required to measure patient experiences as part of quality and performance reporting. The results affect part of the funding these organizations receive from the government, through a scheme in which a share of the health regions' budget depends on achieving targets for selected indicators (8).

Many methods can be used to gather such data, with surveys being very common (7, 9). The Norwegian Institute of Public Health (NIPH) conducts research on patient-reported experiences and outcomes, aiming for a more patient-centred health system through surveys and continuous measurements across various patient populations. Several populations have been surveyed using tested and validated questionnaires, such as the PEQ-ITSD for inpatients receiving interdisciplinary treatment for substance dependence and the PIPEQ-OS in mental-health care (10, 11). Results from previous surveys, including the current ones, are publicly available for all relevant levels of the health-care system, from the smallest units or departments up through the hierarchy to the regional and national levels, as a basis for local quality improvement, hospital management, free patient choice and public accountability.

Even though large-scale patient-experience surveys are conducted frequently worldwide, information about how the resulting data are used to improve the quality of local services is scarce (7, 12). Such surveys are often conducted by an external surveyor who reports back to the health-care institutions being surveyed (7, 12), with little support provided on how to use the data (7). Moreover, little is known about whether or how the results lead to quality improvements (9). A survey of employees in Norwegian paediatric departments found that half of the respondents had implemented improvements to address problems identified in a national survey of parents, suggesting that such surveys can be actively used in quality-improvement interventions (13).

The Norwegian national patient-experience program includes surveys of patients receiving interdisciplinary treatment for substance dependence, which were conducted four times during 2013–2017: annually from 2013 to 2015 and, after a short delay, in 2017 (14–17). The questionnaire used in the patient surveys was developed and tested using standardized methods to ensure its validity and reliability (10). The surveys were mandatory for all public residential institutions and for private residential institutions with contracts with the regional health authorities. Here, “institution” denotes the most granular level of health care; an institution often sits under a department, public hospital or private organization, and these form the levels of the health-care system above the institution in the field of interdisciplinary treatment for substance dependence. All patients aged 16 years or older who were receiving residential treatment for substance dependence were invited to participate in the surveys. Detoxification units and patients treated for gambling addiction were excluded. In addition, personnel at each institution could exclude patients on ethical grounds. Unlike most national surveys in Norway, the data were collected while the patients were at the institutions, which required close collaboration between the NIPH and institution employees. Following each of the four national surveys, reports were distributed to all levels of health care a few months after data collection. The results were presented for each level of health-care service (department, institution, hospital trust, regional hospital trust and national), provided there were at least five patient responses (n ≥ 5).
The reports included descriptive statistics for items and scales, a short introduction describing the methods, and appendices comparing each hospital trust's scale scores with the national mean. The NIPH distributed the reports by e-mail to the regional health authorities, which forwarded them to leaders at the health-care levels below. The reports were also published on the NIPH website. Improvements in patient experiences could be expected if providers use this information to improve the quality of health care (18).
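The n ≥ 5 reporting rule can be illustrated with a short Python sketch. It is an illustration under stated assumptions, not the NIPH's reporting code: the record layout, the unit labels and the function name `reportable_means` are hypothetical, and a unit's result is assumed to be the simple mean of its respondents' scores.

```python
# Minimum number of patient responses before a unit's result is reported
# (n >= 5, as stated in the text).
MIN_RESPONSES = 5


def reportable_means(responses, level):
    """Group responses by one level of the health-care hierarchy and return
    the mean score for every unit with at least MIN_RESPONSES responses.

    `responses` is a list of dicts holding one key per hierarchy level plus
    a "score" key; this layout is hypothetical, chosen for illustration.
    """
    groups = {}
    for r in responses:
        groups.setdefault(r[level], []).append(r["score"])
    return {
        unit: round(sum(scores) / len(scores), 1)
        for unit, scores in groups.items()
        if len(scores) >= MIN_RESPONSES  # suppress units with too few responses
    }


# Hypothetical data: institution "B" has only three responses.
data = (
    [{"institution": "A", "trust": "T1", "score": s} for s in (60, 70, 80, 65, 75)]
    + [{"institution": "B", "trust": "T1", "score": s} for s in (50, 90, 70)]
)
print(reportable_means(data, "institution"))  # {'A': 70.0}
print(reportable_means(data, "trust"))        # {'T1': 70.0}
```

In this sketch, institution B is suppressed at its own level, but its three responses still count towards the trust-level mean; the text does not state whether the published reports handled small units this way.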

Conducting a patient-experience survey will not in itself improve the quality of care (19). For patient-experience data to be used to improve the quality of health care, the data need to be reliable, valid and usable (6). Favourable attitudes towards such data are also needed, as is an absence of barriers that can hinder desirable or necessary quality work. Alongside the national patient-experience surveys, we surveyed employees at the institutions about their attitudes towards the surveys and their use of the results in quality improvement. The current study had two aims: (i) to determine the attitudes of employees providing interdisciplinary treatment for substance dependence towards the national patient-experience surveys, and their use of the results, and (ii) to identify changes in patient experiences at the national level from 2013 to 2017. While individual patient care is crucial, this study focuses on how patient-reported experience measures can be used to drive improvements at the institutional and departmental level. To our knowledge, this is the first study involving repeated national measurements of both patients' experiences of health-care services and employees' assessments of how they use these experiences to improve health-care quality.

2 Material and methods

2.1 Data collection

When preparing for data collection in the patient-experience surveys, we first established contact with all public residential institutions and all private residential institutions that had contracts with the regional health authorities. Each institution named a local project manager who served as the NIPH's contact and was responsible for data collection at that institution. The patient-experience data were collected using a cross-sectional design, on a single day chosen by each institution within a designated week set by the NIPH. All patients were asked to complete the survey on the same day to avoid discussions between patients and potential coordination of responses. Health personnel at each institution distributed and collected the completed questionnaires, making this an on-site survey. The questionnaires were provided to the institutions in pre-packed envelopes, each containing an information sheet, the questionnaire itself and a return envelope. On the designated day of data collection, staff were instructed to give one envelope to each patient who consented to participate. Patients were informed that participation was voluntary and that their responses would remain confidential. Returning a completed questionnaire was treated as implied consent to participate, following established procedures. The surveys were conducted anonymously as part of a quality-assurance project, and no demographic data were collected beyond those included in the questionnaire. Institutions provided the NIPH with the number of eligible patients and the reasons for ineligibility, but no further information about individual respondents (10, 20).

When preparing data collection among employees, we asked every local project manager to recruit the following employees from their institution to participate in an employee survey: the department manager, the institution manager, the quality advisor, and one or two employees responsible for quality assurance and improvement. This allowed for up to five recruited employees from every institution in Norway. To help the local project managers recruit these employees, the NIPH provided them with an electronic information sheet about the survey and instructions to forward an NIPH e-mail with this information sheet attached. This approach aimed to facilitate recruitment by establishing the NIPH as the authority behind the survey and to minimize the influence of hierarchical differences within the institution. The recruited employees received an e-mail with a link to the employee survey during the week before each of the four patient-experience surveys. Non-respondents received up to two reminders during that week.

2.2 Questionnaires

The reliability and validity of the questionnaire used in the patient-experience surveys have been reported previously (10). Only small changes have been made to it over the years, with some items added but none removed. The 2017 questionnaire comprised 58 closed-ended questions, with response scales mostly ranging from 1 (“not at all”) to 5 (“to a very large extent”). It also included two open-ended questions in which patients could describe the help they had received from the current institution and from the municipality. Psychometric testing of the questionnaire has yielded three quality indicators: (i) treatment and personnel (12 items), (ii) milieu (5 items) and (iii) outcome (5 items) (20).

The employee questionnaire was based on previous studies (13, 21) but modified for use in the present population. The original questionnaire was developed through semi-structured face-to-face interviews with staff at a French hospital that had been conducting patient-experience surveys systematically for several years (21). Content analysis was applied to the interview material to identify important themes to include in an employee questionnaire. The original questionnaire comprised 17 closed-ended and 4 open-ended questions, and factor analysis revealed two factors, with Cronbach's α > 0.70 (21). The original version was then modified for use in specialized paediatric departments in Norway, mostly to ensure that the wording was relevant and understandable in the new setting; the Norwegian questionnaire comprised 18 closed-ended and 6 open-ended questions (13). Further modifications were needed to ensure relevance for the current population, i.e., employees at residential institutions. The 2013 questionnaire comprised seven closed-ended questions on attitudes towards patient involvement and patient-experience surveys, plus a question on whether the institution had carried out any local patient-experience surveys. Six background questions were also included: gender, age, years employed at the current institution, work description, whether the respondent held a managerial position, and professional background. The questionnaires used in the three subsequent employee surveys were expanded to 27 items by adding items on the use and usefulness of the national patient-experience surveys when planning and conducting quality-improvement work. Many of the questions were scored on a 5-point response scale ranging from 1 (“not at all”) to 5 (“to a very large extent”), and most of the remaining questions used categorical response options.
An additional five open-ended questions allowed the employees to provide information on (i) behavioural changes among the staff after patient surveys, (ii) initiatives taken to improve patient experiences, (iii) barriers to using the results from the previous year's patient survey, (iv) how the results were presented, and (v) any topics they felt the questionnaire did not cover.

2.3 Analysis

Descriptive statistics are presented for the patients' and employees' answers to the closed-ended questions, with means and SDs reported for questions scored on the 5-point response scale. One-way ANOVA with Bonferroni post-hoc correction was used to test differences in continuous variables between years, while chi-square tests were applied to categorical responses. Five questions measuring attitudes towards patient-experience surveys were entered into an exploratory factor analysis with principal-axis factoring to assess whether they formed a unidimensional scale, and Cronbach's alpha was used to check internal consistency. Scale scores were linearly transformed to range from 0 to 100, with a higher score indicating a better outcome. Statistical analyses were performed using SPSS version 25.0.
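The rescaling and reliability steps can be sketched in Python. This is not the authors' SPSS procedure: it assumes that a response of 1 maps to 0 and a response of 5 maps to 100, that a respondent's scale score is the unweighted mean of the item responses, and that no items are missing. Cronbach's alpha uses the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the total score). The example responses are hypothetical.

```python
from statistics import mean, variance


def to_0_100(x, low=1, high=5):
    """Linearly map a value on a low..high response scale to 0..100."""
    return (x - low) / (high - low) * 100


def scale_score(item_responses):
    """Rescaled scale score for one respondent.
    Assumption: the unweighted mean of the 1-5 item responses."""
    return to_0_100(mean(item_responses))


def cronbach_alpha(rows):
    """Cronbach's alpha for respondents (rows) by k items (columns)."""
    k = len(rows[0])
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])  # variance of summed scores
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses from four respondents to three 1-5 items.
rows = [[2, 3, 3], [4, 4, 5], [3, 3, 4], [5, 4, 5]]
print(round(cronbach_alpha(rows), 3))    # 0.923
print(round(scale_score([4, 4, 5]), 1))  # 83.3
```

Sample variances are used for both the items and the total; because alpha depends only on their ratio, using population variances throughout would give the same value.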

Content analysis was applied to the open-ended comments in the employee survey. This method was chosen to identify key topics and themes described by the employees, thereby ensuring that these formed the focus of the reported results. Two researchers (M.H. and H.H.I.) read and analysed the responses independently before discussing them and reaching a consensus on their content. Most of the questions in the employee questionnaire focused on categories from the patient questionnaire or on categories corresponding to them. Accordingly, the categories in the patient questionnaire were used to structure the responses to the open-ended questions.

3 Results

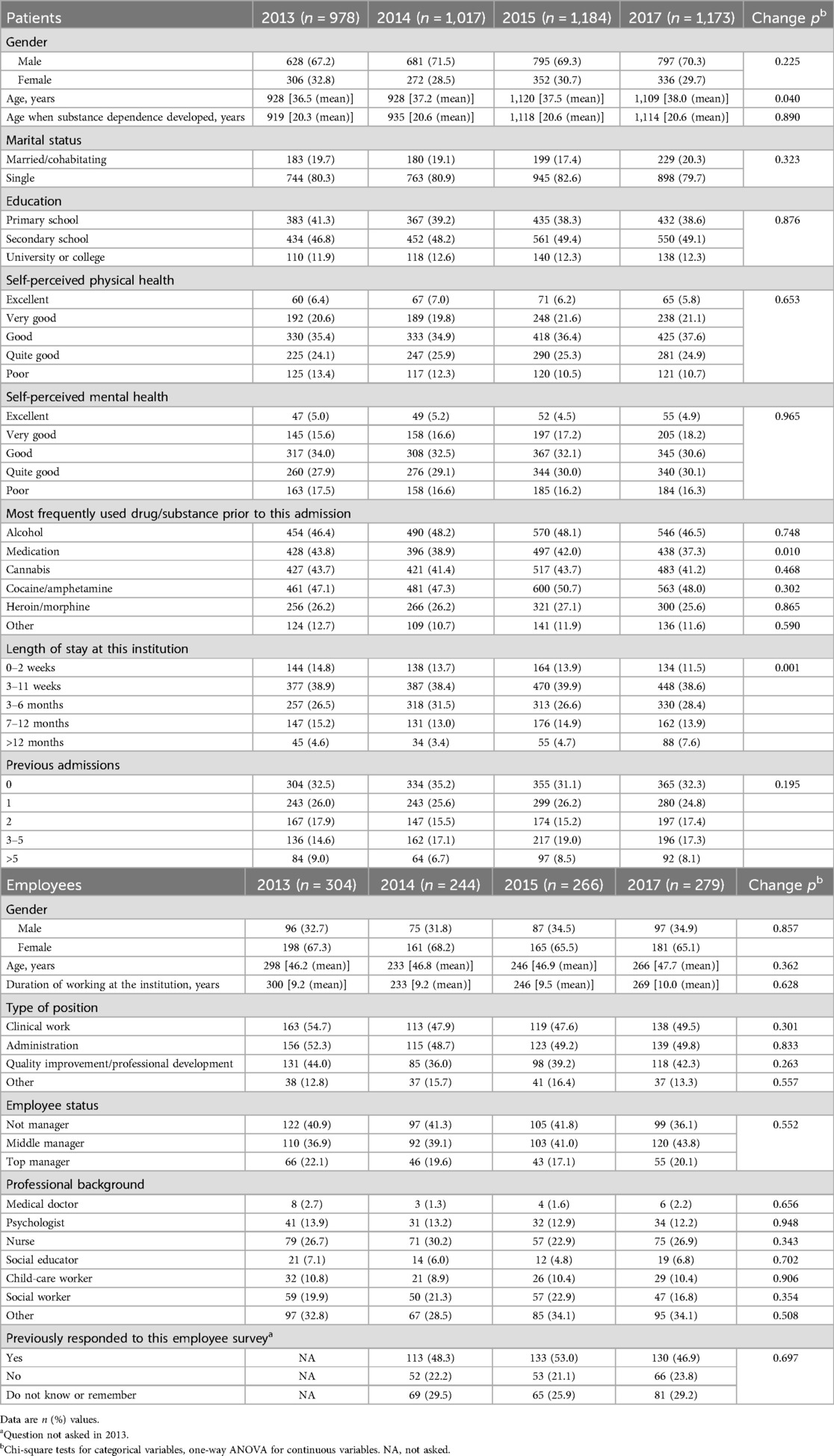

In 2013, 2014, 2015 and 2017, respectively, 98, 101, 110 and 110 institutions participated in the national patient surveys, and the numbers of patient respondents were 978 (91%), 1,017 (91%), 1,184 (90%) and 1,173 (91%). As shown in Table 1, more than two-thirds of the patients were male, and their mean age ranged from 36 to 38 years across the four surveys. The mean age at onset of substance dependence was around 20 years, and around 80% reported being single. Around half of the respondents had finished secondary school. Alcohol, medication, cannabis and cocaine/amphetamine were each reported by around 40% as the drug/substance they most frequently used, indicating that some respondents reported several drugs/substances. The most commonly reported “length of stay at this institution” was 3–11 weeks, and more than 50% had no or only one admission prior to the current stay. For most background variables there were no statistically significant differences between the years. The very few statistically significant differences (in age, length of stay, and medication as the most frequently used drug/substance) were small. The patient populations were therefore similar across the four surveys.

The numbers of employees recruited and responding were 384 and 304, respectively, in 2013, 403 and 244 in 2014, 432 and 266 in 2015, and 416 and 279 in 2017 (response rates of 79%, 61%, 62% and 67%). Many employees participated in several of the surveys. In all four surveys, two-thirds of the respondents were female, and their mean age was around 47 years. The mean duration of work experience at the institution was 9–10 years (Table 1). Nurses, social workers and employees with other professional backgrounds each made up more than 20% of the respondents. Around 20% were top managers, 40% were middle managers and 40% were not managers. Approximately half of the respondents cited clinical work and/or administration as their position type, while around 40% reported being involved in quality improvement or professional development.
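The employee response rates follow directly from the counts in the text (responded ÷ recruited). A minimal Python check, using only the reported counts:

```python
# Employees recruited and responding in each survey year, as reported above.
employee_counts = {
    2013: (384, 304),
    2014: (403, 244),
    2015: (432, 266),
    2017: (416, 279),
}


def response_rate(recruited, responded):
    """Response rate in percent, rounded to the nearest whole number."""
    return round(100 * responded / recruited)


for year, (recruited, responded) in employee_counts.items():
    print(year, f"{response_rate(recruited, responded)}%")
# 2013 79%
# 2014 61%
# 2015 62%
# 2017 67%
```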

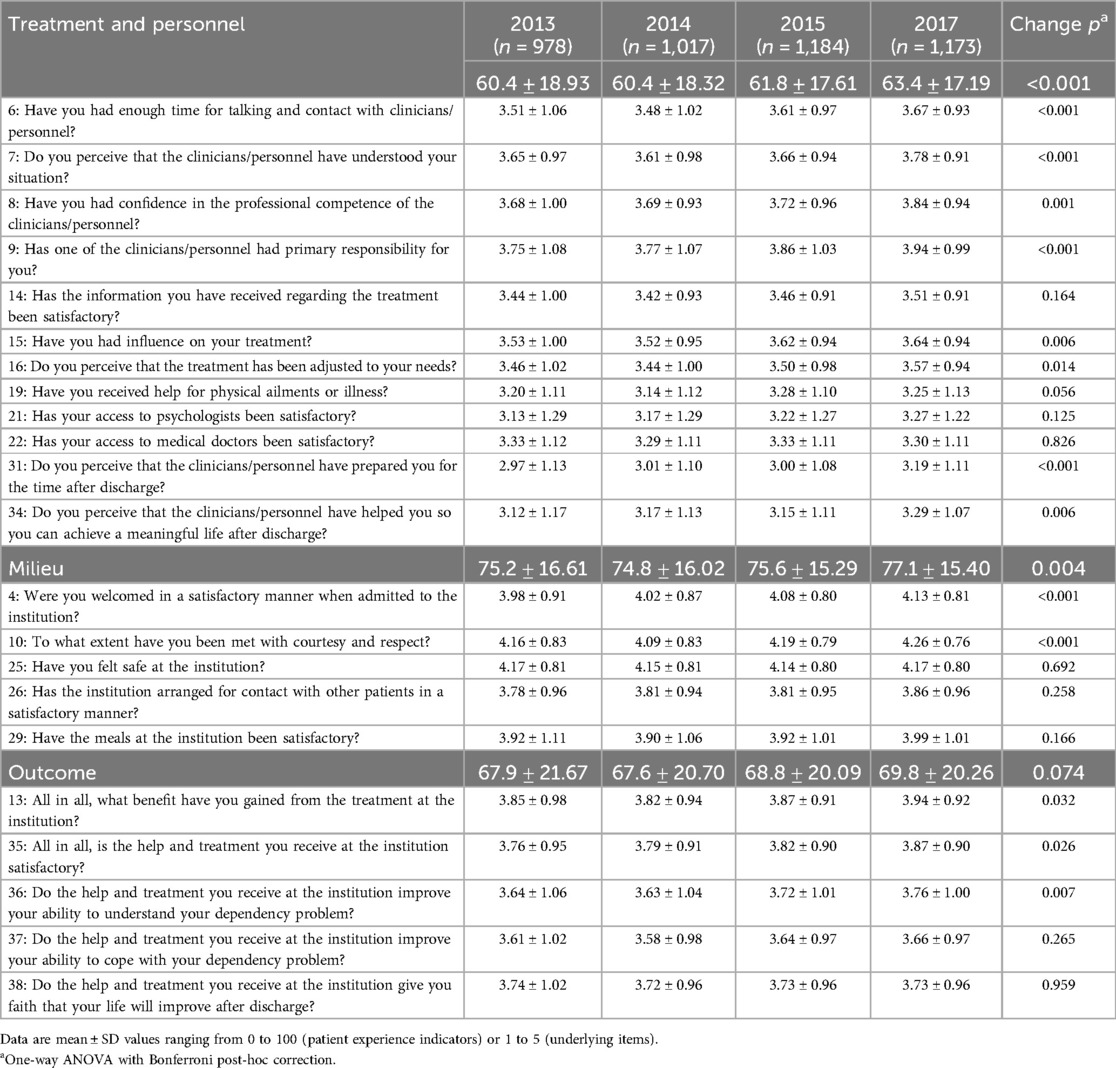

Table 2 lists the results from all four survey years for the patient experience indicators and their underlying items. The scores across all years were best for the milieu indicator, at 75–77, followed by the outcome indicator (68–70) and the treatment-and-personnel indicator (60–63). The scores increased over time, with Bonferroni post-hoc correction showing that the differences between the years were mostly due to the scores in 2017 being higher than those in 2013 or 2014.

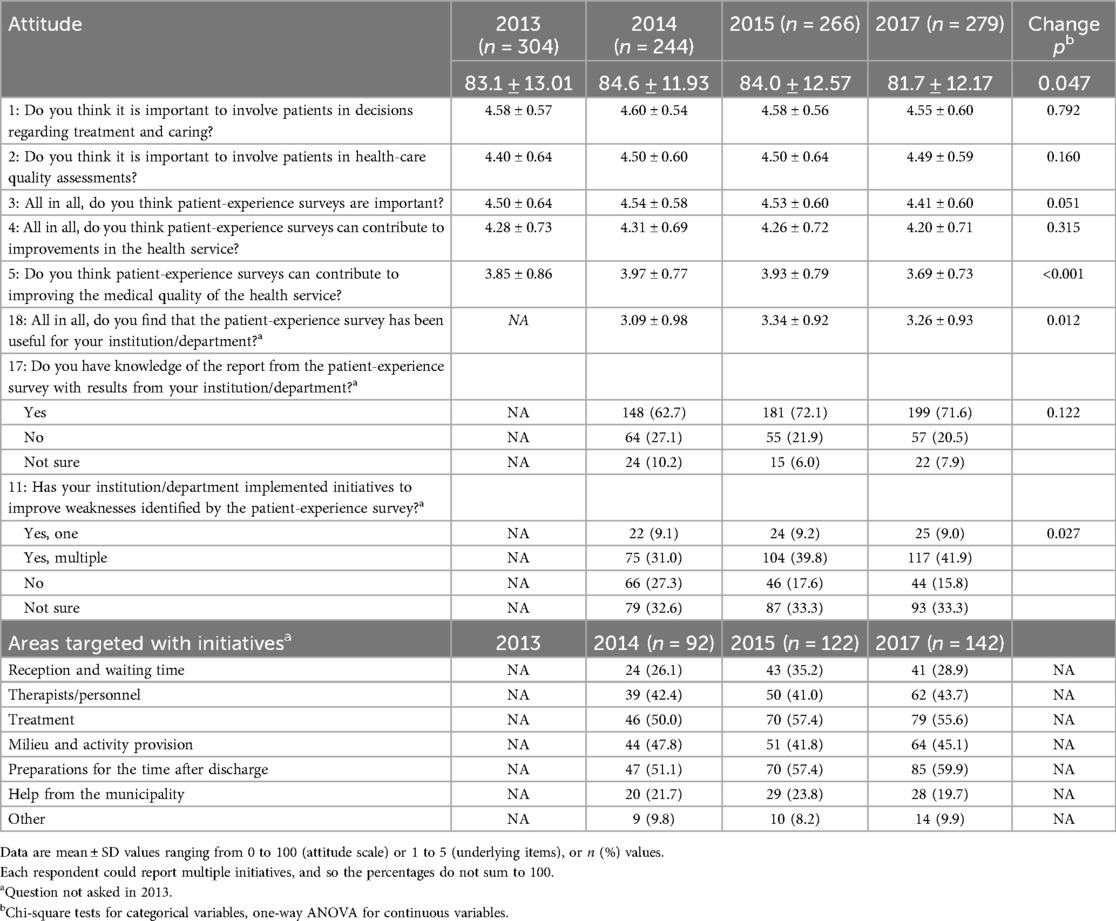

Exploratory factor analysis confirmed that the five items measuring attitudes towards patient-experience surveys could be meaningfully reported as a unidimensional scale, with a Cronbach's alpha of 0.8 in all four years. As shown in Table 3, all five items in the attitude scale were rated positively, with scores above 80 in all survey years, but slightly lower in 2017. Even though the one-way ANOVA showed a statistically significant change over time, Bonferroni-corrected post-hoc comparisons revealed no significant pairwise differences between years in the attitude scale. However, when the individual items were tested, the scores on item 5 were significantly lower in 2017 than in the two earlier surveys. Ratings of whether the national patient-experience survey was useful for the employee's institution were somewhat less positive, but the mean remained above 3. Forty percent of the respondents reported that their institution had implemented at least one improvement initiative after the previous year's patient survey, and this proportion increased to around 50% in 2015 and 2017. Most initiatives targeted preparations for the post-discharge time, the treatment, the milieu and activities, and the therapists or personnel.
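A significant omnibus one-way ANOVA combined with no significant Bonferroni-corrected pairwise difference is not contradictory: the omnibus test asks whether any difference exists across all years, whereas the post-hoc tests examine each pair separately under a conservative correction. With four survey years there are six pairwise comparisons, so each raw pairwise p-value is multiplied by six (capped at 1). The Python sketch below shows only this adjustment step; the raw p-values are hypothetical and are not taken from the study, and neither the ANOVA nor the raw pairwise tests are computed.

```python
from itertools import combinations


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each raw p-value by the number of
    comparisons m and cap the result at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


years = [2013, 2014, 2015, 2017]
pairs = list(combinations(years, 2))  # 6 pairwise comparisons for 4 years

# Hypothetical raw pairwise p-values, for illustration only.
raw = dict(zip(pairs, [0.40, 0.30, 0.02, 0.50, 0.04, 0.20]))
adjusted = bonferroni(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
print(len(pairs))   # 6
print(significant)  # []
```

Here a raw p-value of 0.02 for 2013 versus 2017 becomes 0.12 after adjustment, so no pair reaches the 0.05 level even though the smallest raw p-values do.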

Table 3. Selected employee-dependent variables, including the attitude scale and its underlying items, and areas targeted with initiatives after completing the patient-experience survey as reported by employees.

One of the open-ended questions asked the employees to describe changes in their behaviour resulting from the patient-experience surveys. The number of responses to this question increased over time, at 48 (12%), 74 (28%) and 94 (34%) in 2014, 2015 and 2017, respectively. Content analysis showed that 50 comments (23%) mentioned how employees had increased user involvement in their work. Some gave examples of their institution developing a more formalized or structured approach to ensuring dialogue between patients, employees and leaders (e.g., “There has been a focus on making more time for contact persons to spend with their patients”). Others described how they now strove to include patients in more meetings or decisions (e.g., “Increased understanding and even more user management. We have, among other things, conducted group work, personnel and patients, where we have worked together on quality initiatives. We have more meetings with patients and fewer without”). Forty employees responded that they had tried to improve areas that the patient-experience survey identified as worse than expected or desired, or had targeted areas they knew they could improve (e.g., “In areas we scored lower than last year, we have changed the treatment in line with what the users want”). Others addressed the treatment, communication and information, the relationship between health personnel and patients, preparations for the post-discharge time, the milieu and activity provision, and resources, positions and organization in general.

The employees who reported having implemented improvement initiatives after the previous patient survey were prompted to provide more detail. This open-ended question was answered by 33%–41% of the respondents each year. Many of the initiatives concerned the organization of, or the methods employed at, the institution. Routines were a popular topic in two respects. First, 81 comments (27%) described initiatives for ensuring that tasks were done in a timely manner and in a better or more systematic order than before, such as regular meetings with interdisciplinary teams and patient assessments (e.g., “Procedure bindings are made, so that temps are also updated at all times”). Second, employees described changed routines for patients' goals or treatment plans (e.g., “Therapists/patients work more systematically with a treatment plan and evaluations during the treatment”).

Many of these comments described the structure and content of the treatment. Routines for admitting new patients were highlighted in 58 comments (19%), including introduction packages, contact with patients before admission and buddy systems (e.g., “We have employed a collaboration consultant in the department. The task is to contact the patient and initiate measures before admission, inform about the treatment, initiate responsibility group meetings, etc”). Sixty comments (20%) described initiatives aimed at better preparing patients for the post-discharge time. These initiatives focused on starting the preparations earlier and on better routines for care after discharge (e.g., “Greater focus on preparation for the time after discharge is done with the patient and the support system—we have created a 100% position for a social worker and hired a psychologist. All patients should have an interdisciplinary team around them which consists of a therapist, a social worker, a nurse and a supervisor. The team should have 2 or 3 meetings with the patient during treatment in the clinic”). Other topics covered in these open-ended comments included a greater focus on activities for the patients, education and counselling for employees, patient involvement, working with friends or family, coordinating care, hiring more clinicians/personnel, and providing better information.

About half of the employees responded to the open-ended question on barriers to using the results from each patient-experience survey, and 40%–50% of these indicated that they experienced no barriers. The most commonly described barrier was a lack of resources (n = 50, 13%), such as money, time, personnel and workload (e.g., “A lot of new hires mean that we have to carry out new training constantly. This is not good for continuity and relationships with patients”). Other employees (n = 26, 7%) found the patient-experience survey difficult to use because of the time between measurement and reporting, or had difficulty interpreting the results. These difficulties arose because the questions did not fit the patient group, or because the responding patients had left the institution and new patients did not agree with the results or did not know what the previous patients had meant (e.g., “It is difficult when a long time passes between the time of the survey and when the results come. Our patients partially distanced themselves from the critical findings we presented because they were not receiving treatment from us when the study had taken place”). Twenty-one employees (5%) replied that their institution had few respondents, making the results more uncertain. Other barriers included (i) many other things going on at the institution, such as changes or reorganization, (ii) lack of interest or prioritization from the leaders, (iii) other initiatives or surveys already being implemented, and (iv) the results being primarily positive.

4 Discussion

This study found that employees at residential institutions providing interdisciplinary treatment for substance dependence actively use the results from national patient-experience surveys in quality improvement. The employees generally had positive attitudes towards patient-experience surveys, but were somewhat less positive about the usefulness of the national surveys for their own institutions. The most commonly cited quality-improvement initiatives targeted areas with the worst findings in the patient-experience survey, such as preparations for the post-discharge time, or broader aspects of the treatment, such as increasing patient involvement and developing the structure and content of the treatment. Patient experiences improved slightly over time, with the best scores on all indicators reported in the most recent survey.

These findings are consistent with those previously reported for paediatric departments in Norway (13), and with studies elsewhere in which staff members considered patient-experience surveys important for improvement efforts (22). User-experience surveys were considered important, with around half of the respondents reporting that they had implemented improvement actions and addressed problems identified in the national survey. However, the potential for improvement likely varied across institutions. Some institutions may have started with relatively high patient-experience scores in 2013, leaving less room for substantial improvement. Such institutions may have had only a few target areas where changes were necessary, and these areas may have been more challenging to address. Conversely, institutions with lower initial scores may have had a broader range of areas for improvement, allowing for more targeted and actionable quality initiatives. This difference in the potential for improvement could explain why some institutions saw more noticeable improvements in patient-experience scores over time, while others saw more modest changes.

The employees reported several types of quality initiatives implemented at their institutions. A recent systematic review found that many quality initiatives focused on organizational change and staff education (18). This is consistent with the present finding of a focus on more systematic patient involvement in day-to-day activities and on changing routines for both employees and patients. Several respondents mentioned education and counselling for employees, which constituted the second most common type of quality initiative, a finding supported in the literature (18, 23).
The most common target areas reported by employees showed clear improvements in patient experiences. For example, 50%–60% of employees reported initiatives related to preparation for the post-discharge time, and both related patient-experience items improved significantly during the study period. This may suggest a causal relationship, although we stress that the actual initiatives may have varied between institutions and that the changes at the national level were modest.

The qualitative data showed that many employees focused their quality-improvement initiatives on areas with the worst results in the patient surveys. Employees also often focused on areas where they knew they could improve, with more than half of the respondents reporting initiatives targeting “the treatment”. Other studies have reported a similar approach (7, 12). This approach aligns with the potential-for-improvement perspective, as employees would naturally prioritize areas with the greatest potential for measurable impact. Nevertheless, institutions that started with fewer areas needing improvement may have found it harder to achieve significant changes. Still, this high rate of reported initiatives supports the value of national surveys whose results can be reported at several levels of the health-care system. Each participating institution received detailed survey results for its patients, allowing it to assess its relative scores and implement initiatives that are directly relevant to its patients and its own resources.

Several employees cited specific barriers to using the data in quality initiatives. Such barriers have often been categorized as data-related, professional or organizational (18, 24). In our study, the data-related barriers included the time between measurement and reporting, which made the results less valid and/or difficult to use, as previously reported across different health-care contexts (7). Employees also reported small institutional samples as a barrier that made the results less trustworthy. Organizational barriers in this study related to a lack of interest or prioritization from the leaders, or to the institution already having many other things going on (either quality initiatives or its own surveys). Organizational changes also reportedly made it more difficult to prioritize quality initiatives based on the national patient surveys. Some research has shown that employees may mistrust survey data (24, 25), but clinical scepticism was not prominent in the present study. The most commonly cited barrier was a lack of resources to fully use the data. Although the employees were willing to engage with the survey findings, they reported lacking the time, personnel and financial resources to implement changes, a challenge echoed in international research (23, 26).

In January 2020, the NIPH started national continuous electronic measurement of the experiences of patients receiving interdisciplinary treatment for substance dependence and mental health care. All patients at the relevant institutions are asked to complete the patient-experience questionnaire a few days before discharge. This method of data collection could overcome some of the barriers reported for this population, since the respondents will be at the same point in their clinical pathway (i.e., close to discharge) and the patient sample for each institution will be larger than in the previous cross-sectional surveys, allowing more timely reporting and more robust statistical results. This data-collection method will continue until at least 2025, providing several opportunities to follow up the employee data and to analyse how patient-experience scores change over time. Such research can reveal how and why patient experiences change over time, and which interventions are most effective in this population.

Previous systematic reviews of patient-experience surveys have summarized the reported barriers and facilitators (7, 12), and their findings are consistent with the positive attitudes towards patient-experience surveys reported by employees in the present study. However, positive attitudes do not always lead to change. Facilitators such as management support and a supportive culture are important for quality improvement, as is ensuring that employees have sufficient time to review the results and plan the improvements to be implemented (7, 12, 18).

The four national patient-experience surveys and their preceding employee surveys were conducted consecutively between 2013 and 2017, so the attitudes of employees might have been affected by fatigue from repeatedly collecting and reporting externally initiated data. This may also have affected the response rates, which decreased somewhat after the first survey. Nevertheless, the employees generally reported very positive attitudes towards patient-experience surveys and considered the associated workload to be relatively low. Although the attitude score showed a negative trend over the four surveys, the scale score remained high and the changes over time were small.

4.1 Implications

Locally adapted and implemented quality-improvement initiatives have the potential to improve patient involvement and patient experiences. As shown in this study, employees at the institutions gave several examples of how they tried to improve patients’ experiences. Many of these local initiatives focused on becoming more patient-centred, involving patients earlier, and integrating patients’ views into both treatment and discharge planning. However, certain barriers, including limited time, financial constraints and workforce shortages, hinder the full utilization of patient-experience data for quality improvement. The NIPH is actively addressing data-related barriers by improving the timeliness and accessibility of patient-experience data through continuous electronic measurement. At the same time, institutional support is crucial for fostering a culture that embraces patient feedback as an integral part of quality improvement, and the lack of resources for using patient-experience data in local quality work is a barrier that needs to be addressed by the health services. All in all, the positive changes in patient experiences over time suggest a fruitful collaboration between a national measurement initiative and local health-care units. A final implication is thus the importance of adhering to the OECD’s principle of sustainability in national patient-experience systems, in order to monitor trends longitudinally (27) and to secure continuous feedback to quality-improvement work at local health-care units.

4.2 Strengths and limitations

The NIPH has been responsible for the four national patient-experience surveys and the four employee surveys. While this made it possible to follow the populations over time, individual patients could not be tracked across the surveys, since they were discharged from the institutions after different lengths of stay, sometimes earlier than planned. The employees could theoretically have been followed across the years, but the national surveys were treated as stand-alone surveys rather than as yearly follow-ups. This was due to the nature of the surveys and how they were commissioned, and the permissions needed to link individual data across multiple years were not applied for.

We originally planned to analyse the data at the institution level, including determining whether interventions described in one year induced changes in patient experiences in subsequent surveys. However, because many of the institutions were small and only selected employees were recruited, the number of responses per institution was too small for such analyses. Many employees reported implementing quality initiatives to improve patient experiences, but only small changes were seen in the national quality indicators. Given the large number of participating institutions and the range of initiatives implemented, changes in patient experiences are difficult to detect at the national level. Furthermore, a systematic review found that studies focusing on smaller numbers of initiatives were more likely to measure effects (18). Many of the employees in the present study reported implementing several initiatives, which may have made the effects of individual initiatives harder to isolate. Although it was not possible to analyse data at the institution level in this study, larger changes in patient experiences might be detected in institution-level analyses with sufficiently large samples. Nevertheless, positive changes in the quality indicators were found from the first to the last patient survey.

Overall, employees expressed positive attitudes towards patient-experience surveys, though this may have been influenced by the specific roles of the respondents. The recruitment strategy primarily targeted employees directly involved in quality assurance and improvement initiatives, which could naturally result in more favourable views of the surveys, given their direct relevance to these employees’ work responsibilities. Employees not engaged in such initiatives might hold different attitudes, which were less represented in the study. The recruitment criteria specifically asked project managers to recruit department managers, institution managers, quality advisors, and one or two employees responsible for quality assurance and improvement. This focused selection aimed to gather insights from employees directly involved in decision-making and quality initiatives at their institutions. Because employees could tick more than one answer for “type of position” and “professional background”, the accumulated percentages for these questions exceed 100. The approach ensured a distribution among both leadership and frontline staff, including a balanced representation of non-managerial staff, so that the employee survey captured perspectives from across the organizational hierarchy. Thus, the responses reflected the perspectives of key employees engaged in quality improvement across organizational levels, types of position and professional backgrounds.

Although the employee surveys revealed mainly positive attitudes towards patient-experience surveys, and many employees reported having implemented quality initiatives, it is difficult to know how much these initiatives influenced patient experiences. Cross-sectional surveys are known to be unable to establish cause-and-effect relationships. Variation across the participating institutions, as well as factors other than patient-experience surveys (such as other initiatives, legislation and plans), may affect how institutions treat their patients. Randomized controlled trials are the gold standard for determining which interventions cause the greatest changes in patient experiences, but the methodology and aims of our surveys meant that we had no control over other variables that could influence both the employees and the patients’ experiences.

The long intervals between the patient-experience surveys, the reporting of results and the subsequent employee surveys may have made it difficult for some employees to recall what had been done at their institution. However, this was a known risk: since there is always a delay before an intervention produces any effect, the NIPH considered that the employee survey should be administered some time after the results were reported, to allow for the implementation and local recording of possible interventions and their early effects.

5 Conclusions

This study found positive changes in patient experiences with interdisciplinary treatment for substance dependence across the repeated national cross-sectional surveys. The employees also had positive perceptions of the patient-experience surveys. Although their usefulness scores were lower, they were still positive, and about half of the respondents indicated that their institutions had implemented initiatives based on the results. The repeated surveys have followed patient and employee populations for which systematic knowledge and studies are still scarce, and hence constitute a unique source of data for ongoing measurements. The study design does not allow us to infer whether the reported initiatives induced the changes in patient experiences, but the most-common target areas reported by employees showed clear improvements in patient experiences.

This study presents unique data in several respects: all patients from all institutions in Norway were invited to participate in the patient surveys, and several employees from the same institutions were invited to share their attitudes towards such surveys, as well as to report on whether and how they used the data in local quality work.

Future studies should perform follow-up assessments so that patient experiences can be measured before and after implementing local improvement initiatives in order to determine which interventions are the most effective. The impact of this research could be increased by conducting institution-specific follow-up surveys.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Patient data were collected anonymously, with no registration of the patients being surveyed. Patients were informed that participation was voluntary and that they would remain anonymous. The need for ethical approval was waived by the Norwegian Social Science Data Services, as the project was run as part of the national program and was an anonymous quality-assurance project. According to the Norwegian Regional Committees for Medical and Health Research Ethics, research approval is not required for quality-assurance projects. As in all patient surveys in the national program, health professionals at the institutions could exclude individual patients for special ethical considerations. For the employee surveys, the 2013, 2014 and 2015 surveys were approved by the Norwegian Social Science Data Services, and the 2017 survey was approved by the NIPH's Data Protection Official. The studies were conducted in accordance with local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin, because return of the questionnaire constituted informed consent in these surveys, which is the standard procedure in all national patient-experience surveys conducted by the NIPH.

Author contributions

MH: Conceptualization, Formal Analysis, Methodology, Project administration, Writing – original draft, Writing – review & editing. HI: Conceptualization, Formal Analysis, Methodology, Supervision, Writing – review & editing. OB: Conceptualization, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The Norwegian Knowledge Centre for the Health Services, now part of the NIPH, was responsible for the planning and execution of the data collections that are described in this manuscript. The NIPH is responsible for the current study. The Norwegian Directorate of Health funded the national patient surveys.

Acknowledgments

We thank Marit Seljevik Skarpaas and Inger Opedal Paulsrud for data collection and management, and Linda Selje Sunde for administrative help with the data collection. We thank Kjersti Eeg Skudal, who was the NIPH's project manager for the 2017 survey. We are grateful to the contact persons and project personnel in the departments, institutions, and health regions. We also thank both the patients and the employees who participated in the surveys.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Anhang Price R, Elliott MN, Zaslavsky AM, Hays RD, Lehrman WG, Rybowski L, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev. (2014) 71(5):522–54. doi: 10.1177/1077558714541480

2. Doyle C, Lennox L, Bell D. A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ Open. (2013) 3(1):e001570. doi: 10.1136/bmjopen-2012-001570

3. Garratt A, Solheim E, Danielsen K. National and Cross-National Surveys of Patient Experiences: A Structured Review. Oslo: Nasjonalt kunnskapssenter for helsetjenesten (2008).

4. Davidson KW, Shaffer J, Ye S, Falzon L, Emeruwa IO, Sundquist K, et al. Interventions to improve hospital patient satisfaction with healthcare providers and systems: a systematic review. BMJ Qual Saf. (2017) 26(7):596–606. doi: 10.1136/bmjqs-2015-004758

5. Edwards KJ, Walker K, Duff J. Instruments to measure the inpatient hospital experience: a literature review. Patient Exp J. (2015) 2(2):77–85. doi: 10.35680/2372-0247.1088

6. Beattie M, Murphy DJ, Atherton I, Lauder W. Instruments to measure patient experience of healthcare quality in hospitals: a systematic review. Syst Rev. (2015) 4:97. doi: 10.1186/s13643-015-0089-0

7. Gleeson H, Calderon A, Swami V, Deighton J, Wolpert M, Edbrooke-Childs J. Systematic review of approaches to using patient experience data for quality improvement in healthcare settings. BMJ Open. (2016) 6(8):e011907. doi: 10.1136/bmjopen-2016-011907

9. Coulter A, Locock L, Ziebland S, Calabrese J. Collecting data on patient experience is not enough: they must be used to improve care. BMJ. (2014) 348:g2225. doi: 10.1136/bmj.g2225

10. Haugum M, Iversen HH, Bjertnaes O, Lindahl AK. Patient experiences questionnaire for interdisciplinary treatment for substance dependence (PEQ-ITSD): reliability and validity following a national survey in Norway. BMC Psychiatry. (2017) 17(1):73. doi: 10.1186/s12888-017-1242-1

11. Bjertnaes O, Iversen HH, Kjollesdal J. PIPEQ-OS—an instrument for on-site measurements of the experiences of inpatients at psychiatric institutions. BMC Psychiatry. (2015) 15:234. doi: 10.1186/s12888-015-0621-8

12. Haugum M, Danielsen K, Iversen HH, Bjertnaes O. The use of data from national and other large-scale user experience surveys in local quality work: a systematic review. Int J Qual Health Care. (2014) 26(6):592–605. doi: 10.1093/intqhc/mzu077

13. Iversen HH, Bjertnaes OA, Groven G, Bukholm G. Usefulness of a national parent experience survey in quality improvement: views of paediatric department employees. Qual Saf Health Care. (2010) 19(5):e38. doi: 10.1136/qshc.2009.034298

14. Haugum M, Iversen HH, Bjertnaes OA. Pasienterfaringer med Døgnopphold Innen Tverrfaglig Spesialisert Rusbehandling—resultater Etter en Nasjonal Undersøkelse i 2013. PasOpp-rapport nr. 7−2013. Oslo: Nasjonalt kunnskapssenter for helsetjenesten (2013).

15. Haugum M, Iversen HH. Pasienterfaringer med Døgnopphold Innen Tverrfaglig Spesialisert Rusbehandling—Resultater Etter en Nasjonal Undersøkelse i 2014. PasOpp-rapport nr. 6−2014. Oslo: Nasjonalt kunnskapssenter for helsetjenesten (2014).

16. Haugum M, Holmboe O, Iversen HH, Bjertnæs ØA. Pasienterfaringer med Døgnopphold Innen Tverrfaglig Spesialisert Rusbehandling (TSB). Resultater Etter en Nasjonal Undersøkelse i 2015. PasOpp-rapport nr. 1−2016. Oslo: Folkehelseinstituttet (2016).

17. Skudal KE, Holmboe O, Haugum M, Iversen HH. Pasienters Erfaringer med Døgnopphold Innen Tverrfaglig Spesialisert Rusbehandling (TSB) i 2017. PasOpp-rapport nr. 453−2017. Oslo: Folkehelseinstituttet (2017).

18. Bastemeijer CM, Boosman H, van Ewijk H, Verweij LM, Voogt L, Hazelzet JA. Patient experiences: a systematic review of quality improvement interventions in a hospital setting. Patient Relat Outcome Meas. (2019) 10:157–69. doi: 10.2147/PROM.S201737

19. Kumah E, Osei-Kesse F, Anaba C. Understanding and using patient experience feedback to improve health care quality: systematic review and framework development. J Patient Cent Res Rev. (2017) 4(1):24–31. doi: 10.17294/2330-0698.1416

20. Haugum M, Iversen HH, Helgeland J, Lindahl AK, Bjertnaes O. Patient experiences with interdisciplinary treatment for substance dependence: an assessment of quality indicators based on two national surveys in Norway. Patient Prefer Adherence. (2019) 13:453–64. doi: 10.2147/PPA.S194925

21. Boyer L, Francois P, Doutre E, Weil G, Labarere J. Perception and use of the results of patient satisfaction surveys by care providers in a French teaching hospital. Int J Qual Health Care. (2006) 18(5):359–64. doi: 10.1093/intqhc/mzl029

22. Flott KM, Graham C, Darzi A, Mayer E. Can we use patient-reported feedback to drive change? The challenges of using patient-reported feedback and how they might be addressed. BMJ Qual Saf. (2017) 26:502–7. doi: 10.1136/bmjqs-2016-005223

23. Shunmuga Sundaram C, Campbell R, Ju A, King MT, Rutherford C. Patient and healthcare provider perceptions on using patient-reported experience measures (PREMs) in routine clinical care: a systematic review of qualitative studies. J Patient Rep Outcomes. (2022) 6:122. doi: 10.1186/s41687-022-00524-0

24. Davies E, Cleary PD. Hearing the patient’s voice? Factors affecting the use of patient survey data in quality improvement. Qual Saf Health Care. (2005) 14(6):428–32. doi: 10.1136/qshc.2004.012955

25. Sheard L, Marsh C, O'Hara J, Armitage G, Wright J, Lawton R. The patient feedback response framework—understanding why UK hospital staff find it difficult to make improvements based on patient feedback: a qualitative study. Soc Sci Med. (2017) 178:19–27. doi: 10.1016/j.socscimed.2017.02.005

26. Locock L, Graham C, King J, Parkin S, Chisholm A, Montgomery C, et al. Understanding how front-line staff use patient experience data for service improvement: an exploratory case study evaluation. Health Soc Care Deliv Res. (2020) 8(13):1–170. doi: 10.3310/hsdr08130

Keywords: patient satisfaction, patient experience, quality of health care, employees’ viewpoints, quality improvement, health services research, survey

Citation: Haugum M, Iversen HH and Bjertnaes O (2024) National surveys of patient experiences with addiction services in Norway: do employees use the results in quality initiatives and are results improving over time? Front. Health Serv. 4:1356342. doi: 10.3389/frhs.2024.1356342

Received: 15 December 2023; Accepted: 24 October 2024;

Published: 12 November 2024.

Edited by:

Vaibhav Tyagi, The University of Sydney, Australia

Reviewed by:

Kimberley Foley, Imperial College London, United Kingdom

Candan Kendir, Organisation for Economic Co-operation and Development, France

Copyright: © 2024 Haugum, Iversen and Bjertnaes. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mona Haugum, mona.haugum@fhi.no
