- 1 Kaiser Permanente Washington Health Research Institute, Seattle, WA, United States

- 2 Independent Researcher, Seattle, WA, United States

- 3 Department of Psychiatry and Behavioral Sciences, University of Washington, Seattle, WA, United States

- 4 School of Information, University of Michigan, Ann Arbor, MI, United States

- 5 Department of Global Health, University of Washington, Seattle, WA, United States

- 6 Department of Health Systems and Population Health, University of Washington, Seattle, WA, United States

There has been a call to shift from treating theories as static products to engaging in a process of theorizing that develops, modifies, and advances implementation theory through the accumulation of knowledge. Stimulating theoretical advances is necessary to improve our understanding of the causal processes that influence implementation and to enhance the value of existing theory. We argue that a primary reason that existing theory has lacked iteration and evolution is that the process for theorizing is obscure and daunting. We present recommendations for advancing the process of theorizing in implementation science to draw more people into the process of developing and advancing theory.

1. Introduction

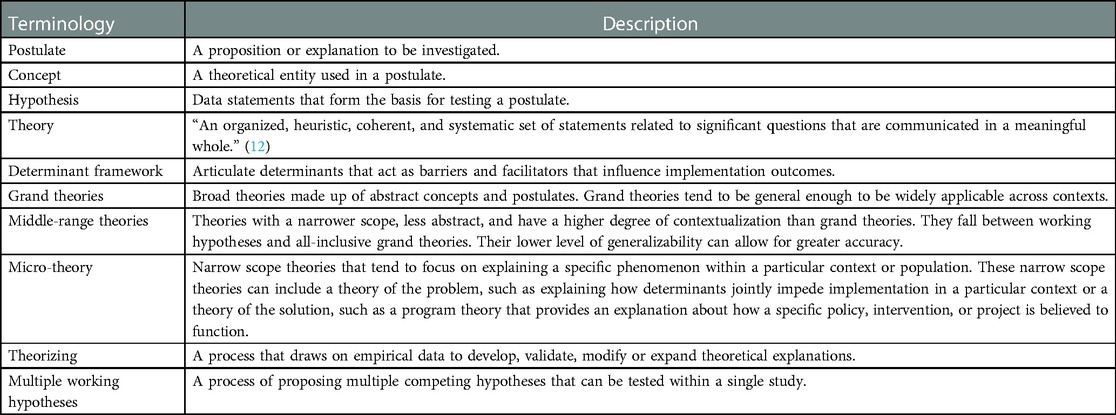

Theories and frameworks (i.e., theoretical products) bring clarity to the complex systems within which implementation occurs (1) and provide explicit assumptions that can be collectively tested, validated, or refined (2) (see Table 1 for definitions). As such, they support efficiency in generalizing knowledge across contexts (3). Determinant frameworks commonly describe what is likely to impact implementation by defining and organizing determinants, while implementation theories often provide explanations for how change is believed to occur (2). Theoretical products are often used deductively to guide empirical enquiry, yet we fail to inductively modify theory based on findings (1, 4, 5). As a result, we miss opportunities to advance theory in light of accumulating evidence, leaving implementation science susceptible to stagnation.

There has been a call to shift from treating theories as static products to engaging in a process of theorizing that draws on empirical data to develop, validate, modify, or expand theoretical explanations in implementation science (4). Theorizing, as described here, includes the development of new explanations as well as the refinement of existing theoretical explanations. Everyone has the potential to contribute to theorizing, but many do not explicitly do so. We see at least two reasons for this. First, our understanding of what constitutes a theory is too grand. Others have outlined the characteristics of strong theory, such as clarity in relationships between concepts, explanatory power, and generalizability (6). These characteristics are the aspirational endpoint of good theories, not the starting point.

Second, many do not engage in theorizing because they fail to recognize opportunities for research to contribute to advancing, refining, or (in)validating theory. When findings conflict with theory, authors rarely question the theory's validity, but rather consider explanations such as weaknesses in study design (7). When warranted by evidence, researchers should be empowered to challenge theory, regardless of its popularity, prestige, or longevity.

Increasing explicit engagement in theorizing will require that researchers are equipped with tools to support theorizing. Inspired by writers like bell hooks who sought to communicate feminist thinking in a way that was accessible to everyone (8), we strive to make clear how theorizing is for everybody. To facilitate this, we draw on the building blocks of theory (9, 10) to describe how empirical research can advance the parsimony and comprehensiveness of theory, and elucidate the boundary conditions under which theory is most accurate. We illustrate how these building blocks can be used to develop micro-theories that provide explanations for how implementation determinants influence implementation. Lastly, we discuss how adopting multiple working hypotheses can discourage the calcification and reification of premature theories by arbitrating between multiple tenable explanations for a phenomenon (11).

2. Theorizing in implementation science

2.1. Sources for theorizing

Theorizing can be inspired by direct observation or vicariously through the synthesis of existing knowledge. Existing theoretical products in implementation science have stemmed from developers' experience, synthesis of empirical evidence, and drawing on or synthesizing existing theories and frameworks (2). Micro, middle-range, and grand theories can have reciprocal influences on one another. For instance, lower-order theories can be inspired by focusing on a narrow element of a grand theory or, conversely, higher order theories can emerge from synthesis of narrower middle-range and micro theories (13). Whether developing a novel micro-theory from limited empirical observations or modifying a middle-range theory through synthesizing numerous studies, such theorizing can have implications for the full ladder of theories.

2.2. Building blocks of theory

The building blocks of theory construction have previously been outlined to describe the attributes of a well-formed comprehensive theory (9, 10). We draw on them to demonstrate how research and reasoning that addresses any one of these questions can contribute to advancing theory.

What refers to concepts and constructs relevant in explaining a phenomenon. Research can inform the sufficiency and parsimony of middle-range theoretical products by answering two questions: “what is missing from the explanation of this phenomenon?” and “what is not contributing to explaining this phenomenon?” While implementation science must not stop at classifying determinants (14), determinant frameworks are critical in organizing the science. They influence study questions, hypotheses, measurement, and implementation targets (2, 15). Determinant frameworks were informed by varying degrees of evidence (2), so assessing the validity of their postulated determinants to inform their refinement is important. Within implementation science, evidence syntheses are beginning to answer the question of what is missing (16–18), proposing key concepts, such as the health systems' architecture, previously overlooked in frameworks (16). These questions can advance existing theory as well. For instance, studies have provided evidence that additional constructs may improve the explanatory power of the Theory of Planned Behavior (19, 20). They suggest that constructs such as self-identity and past behavior may improve the prediction of behavior (21). By asking these questions, everyone can contribute to advancing existing theoretical products.

How refers to explanations of causes, consequences, mechanisms, and conditions. Theoretical products describing what have outpaced explanations of how in implementation science, as evidenced by numerous determinant frameworks but fewer explanatory theories (22). However, empirical enquiries often attempt to establish causes and consequences and, more recently, mechanisms (23–25). Evidence syntheses can assess the evidence for postulates in existing middle-range theories or propose novel theoretical explanations based on evidence. For instance, Meza and colleagues synthesized evidence for the relationship between first-level leadership and inner-context and implementation outcomes (26). They found support for some postulates in existing leadership theory, such as the positive influence of first-level leadership on organizational and implementation climate (27), but they also identified limited and inconsistent evidence supporting the commonly regarded postulate that first-level leadership influences implementation outcomes. Individual studies can also contribute to explanations of how by directly testing the postulates of theory to evaluate their validity. For instance, Williams and colleagues designed a study to test several postulates of the theory of strategic implementation leadership and articulated how their findings would support or challenge the validity of those postulates (24).

Individual studies can also develop novel explanations of how using situation-specific micro-theories. Micro-theories can begin with “cheap” theorizing, formulating tentative and narrow postulates to be evaluated and refined by research. Supported postulates can be maintained and their generalizability further tested, while unsupported postulates can be discarded or refined. Through such a process, micro-theories can inform middle-range theories with greater generalizability.

Developing novel causal explanations can seem daunting. But researchers and stakeholders can contribute to causal explanations. Humans naturally organize events into causes and consequences. Simple tools can support causal thinking. Qualitative interviewing can elicit implicit causal explanations and coding can characterize those relationships. Linguistic expressions, such as because and since, shed light on causal conceptualizations (28). The word because helps to differentiate a central concept from its determinants. “I knew that administering the screener (central concept) was a priority because its administration was being measured (determinant).” Stakeholders can participate in reasoning exercises to clarify their causal thinking. For instance, through counterfactual reasoning, stakeholders can imagine what could have or what may have happened during implementation. This provides answers to questions such as, “how would implementation have been different if there was consumer demand for the innovation?” Drawing on direct experience or observations, if-then statements can organize causal thinking. “If a mandate is instated (cause), then the screening will be administered (effect), but only if screening materials are available (necessary condition).” We illustrate the application of these tools to prioritize determinants.
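The if-then and counterfactual exercises described above can also be written down in a structured form, which may help a team check that each statement names a cause, an effect, and any necessary conditions. The following is a minimal sketch only, using the mandate example from the text; the class name `IfThenStatement` and its fields are hypothetical and not part of any published tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IfThenStatement:
    """One causal statement: IF cause THEN effect, BUT ONLY IF the necessary conditions hold."""
    cause: str
    effect: str
    necessary_conditions: tuple = ()

    def predicts_effect(self, present):
        """Return True when the cause and every necessary condition appear in `present`."""
        return self.cause in present and all(
            condition in present for condition in self.necessary_conditions
        )


# The example from the text, encoded as a single statement.
rule = IfThenStatement(
    cause="mandate instated",
    effect="screening administered",
    necessary_conditions=("screening materials available",),
)

# What was observed, and a counterfactual world in which materials were missing.
observed = {"mandate instated", "screening materials available"}
counterfactual = observed - {"screening materials available"}

print(rule.predicts_effect(observed))        # statement expects the effect
print(rule.predicts_effect(counterfactual))  # necessary condition removed
```

Removing one element at a time from `observed` mirrors the counterfactual question "what would have happened if this had not been in place?" and makes explicit which conditions a statement treats as necessary.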

Who, where, and when refer to boundaries of a theory's generalizability. Theoretical products are developed within limited contexts and their generalizability is tested when applied outside of that context (29). Empirical research can inform how broadly theories should be applied. Boundary conditions, such as conditions of time and space (30), describe the limits of the generalizability of theoretical assumptions (9). Theorizing about boundary conditions can move us beyond selecting familiar theories, to those best suited to a context. Testing moderators of theoretical postulates can also inform boundary conditions. For instance, implementation theories suggest that implementation climate is a driver of innovation use (31–33). Williams and colleagues found the relationship between implementation climate and evidence-based practice use was contingent on a positive molar climate, suggesting that positive molar climates may be a boundary condition under which implementation climate has the strongest effects (34). Applying theory in research outside of the original context in which it was developed can also elucidate boundary conditions. For instance, research can speak to whether the postulates of COM-B (Capability, Opportunity, Motivation and Behavior) (35) hold true across diverse populations, types of behaviors, and in novel contexts. In instances where these postulates are not supported, researchers are encouraged to speculate about potential theoretical boundaries to advance the precision by which we select and apply theory.

3. Theorizing about implementation determinants

Efforts to identify implementation determinants frequently surface dozens (36), producing a formidable task of deciding which to target. Existing methods, such as prioritizing determinants deemed important and feasible to address (37, 38), treat determinants as independent, ignoring their complex relationships that may inform their importance. An overly simplistic understanding of how intervention characteristics, implementer activities, and the contextual conditions jointly influence implementation limits our understanding of the key (clusters of) determinants to prioritize. Developing a micro-theory of how determinants unfold can help to organize these complex relationships.

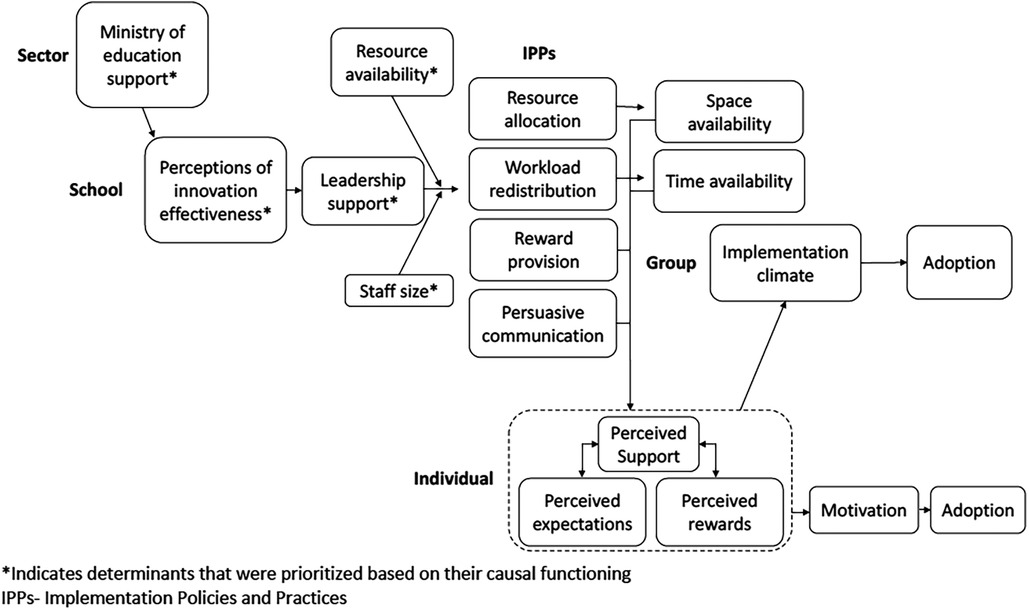

Figure 1 provides an illustration of a micro-theory of how determinants influence school and teacher adoption of a group-based intervention, informed by the questions what, how, who, where and when. We illustrate our approach to stimulate theorizing, not to suggest it be followed as a recipe. We drew on qualitative interviews with stakeholders (teachers and principals) from schools following a phase of implementation-as-usual. Originally, qualitative interviews were used to identify all determinants, stakeholders prioritized determinants based on feasibility and importance, and strategies were aligned with those determinants. Here, we reapproach that process to prioritize determinants based on their causal functioning.

3.1. What determinants and IPPs influenced adoption?

Using qualitative interviews, we identified the presence of determinants and implementation policies and practices (IPPs) that schools used to support adoption (39). We inductively coded concepts that emerged, and when aligned, used a combination of determinant frameworks and the theory of organizational determinants of effective implementation (39) to provide a common terminology and conceptual clarity to emergent concepts.

3.2. How did determinants and IPPs unfold to influence adoption?

We examined transcripts for linguistic expressions that described the nature of relationships between concepts. If using this approach a priori, interviews could be designed to ask about causal explanations. In our post-hoc approach, we looked for terms like since and because to indicate causal explanations (e.g., I had time to attend the training because our principal asked the deputy teacher to cover my class). This produced many antecedent–outcome linkages (e.g., workload adjustment provided teachers with time for intervention activities) and pointed to moderators (e.g., supportive leaders allocated space for delivery, but only when classrooms were available).
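As a rough illustration of the post-hoc search for causal connectives, the sketch below flags sentences containing because or since and splits them into a candidate outcome and a candidate antecedent. It is an assumption-laden aid to human coding, not the procedure we used: the function name is hypothetical, it only handles connectives that appear mid-sentence, and since can be temporal rather than causal, so every flagged segment still needs to be read and coded by an analyst.

```python
import re

# Connectives named in the text; a fuller list would be the analyst's choice.
CONNECTIVES = ("because", "since")
PATTERN = re.compile(r"\b(" + "|".join(CONNECTIVES) + r")\b", re.IGNORECASE)


def flag_causal_statements(sentences):
    """Return (outcome_text, connective, antecedent_text) for sentences with a mid-sentence connective."""
    flagged = []
    for sentence in sentences:
        match = PATTERN.search(sentence)
        if match is None:
            continue
        # Text before the connective is treated as the outcome, text after it as the antecedent.
        outcome = sentence[:match.start()].strip(" ,")
        antecedent = sentence[match.end():].strip(" ,.")
        if outcome and antecedent:
            flagged.append((outcome, match.group(1).lower(), antecedent))
    return flagged


transcript = [
    "I had time to attend the training because our principal asked the deputy teacher to cover my class.",
    "The training was held at the school.",
]
for outcome, connective, antecedent in flag_causal_statements(transcript):
    print(f"OUTCOME: {outcome} | {connective} | ANTECEDENT: {antecedent}")
```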

Qualitative responses will undoubtedly lack precision in the chain-of-events that occurs between antecedents and outcomes. For instance, rewards were described as influential for adoption, without explanation. To expand on these, the research team constructed if-then statements based on impressions from observations [e.g., if a counselor was recognized by their principal (reward), then this would enhance their motivation (motivation), and increase their likelihood of delivering the intervention (adoption)]. We used counterfactual reasoning to theorize about the effect of events that did not happen to identify necessary conditions [e.g., if the ministry of education had not signaled support (necessary condition), leaders in each school would not have engaged].

We drew on existing theoretical postulates to inform the integration of antecedent–outcome linkages. For instance, interviews suggested several linkages between different IPPs and the perception that implementation was expected, supported, and rewarded (i.e., implementation climate). Drawing on theory (31, 39), we conceptualized IPPs as having an additive effect (i.e., the more IPPs present, the stronger the influence on adoption) and a compensatory effect (i.e., the presence of some high quality IPPs can compensate for the absence or low-quality use of others).

A participatory approach could be used throughout these steps. For instance, initial antecedent-outcome linkages could be presented to stakeholders for member-checking and stakeholders could co-develop if-then statements and engage in counterfactual reasoning [e.g., if X had (not) happened, what do you think would result?] to expand on gaps in the causal chain-of-events.

3.3. For whom, where, and when do postulates apply?

A primary function of considering boundary conditions of a situation-specific micro-theory is ensuring its applicability across the contexts in which it is applied. Including extreme cases is one way to do this. We purposively sampled from schools with varied characteristics (e.g., small and large staff sizes) to surface explanations across diverse characteristics. We modified explanations to be valid in schools with the most extreme characteristics. For instance, we added staff size as a moderator because leadership support only led to workload redistribution in schools with a moderate-to-large staff size. A micro-theory will inherently be bounded within a narrower context. As its postulates are empirically supported in new contexts or refined, it can inform middle-range theories.

3.4. Prioritizing determinants based on functioning

Determinants can be prioritized based on their theorized influence (see Figure 1). For instance, we may prioritize those occurring early in the causal chain of events that have a cascading influence (e.g., perceptions of innovation effectiveness), moderators that could diminish the effects of other targeted determinants (e.g., resource availability), or necessary determinants whose absence would preclude successful implementation (e.g., Ministry of Education support).
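One way to make "early in the causal chain with a cascading influence" concrete is to treat a micro-theory as a directed graph and count how many concepts sit downstream of each determinant. The sketch below is illustrative only: the edges are assembled from examples mentioned in the text (plus one assumed link, marked in the comments) and are not a reproduction of Figure 1, and counting descendants is just one simple heuristic a team might choose.

```python
# Edges read "antecedent -> outcomes". Illustrative only; not a reproduction of Figure 1.
EDGES = {
    "ministry of education support": ["leader engagement"],
    # The link from leader engagement to principal recognition is an assumption for this sketch.
    "leader engagement": ["workload redistribution", "principal recognition"],
    "workload redistribution": ["time for intervention activities"],
    "time for intervention activities": ["adoption"],
    "principal recognition": ["motivation"],
    "motivation": ["adoption"],
}
# Determinants flagged through counterfactual reasoning as necessary conditions.
NECESSARY = {"ministry of education support"}


def downstream(node, edges):
    """Return every concept reachable from `node` by following edges."""
    seen = set()
    stack = list(edges.get(node, []))
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        stack.extend(edges.get(current, []))
    return seen


def rank_by_cascade(edges, outcome="adoption"):
    """Rank determinants by how many concepts they influence downstream (ties broken by name)."""
    nodes = set(edges) | {target for targets in edges.values() for target in targets}
    scores = [(node, len(downstream(node, edges))) for node in nodes if node != outcome]
    return sorted(scores, key=lambda item: (-item[1], item[0]))


for name, reach in rank_by_cascade(EDGES):
    flag = " (necessary condition)" if name in NECESSARY else ""
    print(f"{reach} downstream concepts: {name}{flag}")
```

Moderators, such as staff size, could be recorded as annotations on specific edges; they are left out here to keep the sketch short.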

4. Using multiple working hypotheses to support theoretically informative research

The tools discussed so far can be used to leverage empirical research to develop novel theory or refine existing theory. An equally important part of theorizing is validating existing theory. Validating theory should push us toward strong theory, while its invalidation should push us to move away from or refine existing theory. With over one hundred theoretical products available (40), their utility must be tested to lead the field toward high value theories. While a couple of theoretical products are most commonly used, the criteria for selecting them are inconsistent (15). The lack of information on the value of theories may maintain the use of familiar theories “without thought or reflection” (4). We argue, as has been argued for decades before us, that leveraging multiple working hypotheses can produce research that guides the field toward high value theories (11, 41).

Hypotheses are driven by the postulates of theory, whether that theory is explicit or implicit. Platt argued the most rapid scientific advances can be made using multiple hypothesis generation followed by careful experimental design that arbitrates between hypotheses (41). With a single hypothesis, we can only affirm and refine a single theory that may or may not be a reasonable approximation of reality. Imagine the scientific process as a tree diagram with a single path to follow. We might be able to meander down various smaller paths, but we leave other branches unexplored. If, instead, we introduce multiple plausible hypotheses, we open all the branches we can generate. Good experiments will produce findings consistent with some families of hypotheses, but more importantly, they provide results inconsistent with others. An iterative process of this kind is more efficient in pushing the field toward theories with greater explanatory power and protects against uncritical and superficial theory application. The existence of multiple competing hypotheses in the literature is a sign of health for the field.

Modern statistical analyses have provided tools to evaluate the plausibility of multiple competing hypotheses or models through approaches such as structural equation model fit comparisons and Bayesian alternatives to null hypothesis significance testing. For instance, Bayesian statistical approaches can be used to estimate the posterior probabilities of several competing models given the data, and models with the greatest probabilities can then be selected as the starting point for additional model development. Unfortunately, even moderately complex models may require large sample sizes (N > 500) to correctly reach a true model among competing options (42).
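To illustrate what estimating posterior probabilities for competing models can look like in practice, the sketch below converts a fit index for each model into approximate posterior model probabilities. It assumes the widely used BIC approximation, in which each model's weight is proportional to its prior times exp(-BIC/2), and equal prior probabilities unless others are supplied; this is one of several possible approaches and is our choice for illustration. The function name and the BIC values are hypothetical, not results from any study.

```python
import math


def posterior_model_probabilities(bic_by_model, priors=None):
    """Approximate P(model | data) from BIC values (BIC approximation; equal priors by default)."""
    names = list(bic_by_model)
    if priors is None:
        priors = {name: 1.0 / len(names) for name in names}
    # Subtract the smallest BIC before exponentiating to avoid numerical underflow.
    best = min(bic_by_model.values())
    weights = {
        name: priors[name] * math.exp(-0.5 * (bic_by_model[name] - best))
        for name in names
    }
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


# Hypothetical BIC values for two competing explanations fitted to the same data.
bics = {"model A": 1012.4, "model B": 1016.4}
for name, probability in posterior_model_probabilities(bics).items():
    print(f"{name}: {probability:.3f}")
```

Because the result depends only on differences in BIC, the approach extends to any number of candidate models fitted to the same data, although the sample size caveat noted above still applies.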

As the field responds to growing calls for mechanism-based explanations (43, 44), this will be an important place to adopt multiple hypotheses. Among behavior change theories, there are numerous postulated pathways through which behavior change occurs. For instance, COM-B proposes that capability, opportunity and motivation produce behavior, which in turn influences these components (35). In contrast, the Theory of Planned Behavior posits that attitude toward a behavior, subjective norms, and perceived behavioral control together shape an individual's behavioral intentions, which, in turn, shape behavior (19). Rather than proposing hypotheses intended to test the postulates of a single theory, we can compare the explanatory power of each theory in a single study. This approach can also be used to pit multiple novel competing theoretical explanations that emerge through theorizing against one another. This allows for “cheap” theorizing in which we produce many explanations and allow evidence to push us towards those of value. Above all, theorists should feel empowered to readily eliminate unsupported hypothesized determinants or poorly fitting theories.

5. Discussion

Complexity is the rule, not the exception, in the change efforts we undertake in implementation science. The classification and organization of constructs into frameworks, delineation of concepts, and theories that explain and predict implementation processes have contributed to creating order and clarity within this complexity (1). Many have argued for the relevance of theory to even the most practical among us who undertake change efforts (45). We agree and also argue that everyone can play a part in advancing theory. All research is related to theory and relevant for pushing theory forward. While many implementation scientists may not identify as philosophers of implementation science, we all play a direct role in theory advancement.

We offer three recommendations for increasing engagement in theorizing. First, articulate the contribution a study can make to advancing or modifying existing theory. To do so, studies must be designed to question the postulates of existing theory (i.e., what, how, who, where, when) and findings must be interpreted in terms of their support for, or against, those postulates. Second, engage in novel, “cheap”, micro-theory development. The field is increasingly moving towards articulating causal pathways to open the “black box” of implementation (14, 46, 47). This will require greater engagement in developing theories of the problem (e.g., how determinants unfold to influence implementation) and of the solution (e.g., how strategies can address determinants). We advocate for “cheap” theorizing, in which researchers are empowered to draw on empirical evidence to formulate tentative and narrow postulates to be evaluated and refined by research. As these micro-theories are tested and refined through empirical enquiry, they can inform the foundation of generalizable middle-range theory. Third, to continue advancing existing and novel theories, researchers should adopt multiple working hypotheses that pit competing explanations against one another. This approach ensures that our theories do not stagnate in their nascent and tentative forms and pushes the field towards high value theories.

One barrier to theorizing that we do not address is funders' expectation that studies adopt existing theory. The popularity of particular theories, despite a lack of strong empirical support, has long been an issue (11). Therefore, we urge that theory not be judged on its longevity or popularity, but on its empirical foundation. If theories developed “in-house” have a strong empirical basis and are being advanced by additional empirical enquiry, this is a benefit to the field. Theory development is never done.

We have sought to clarify how theorizing is for everybody and to demonstrate how the questions we ask and hypotheses we test contribute to theoretically informative research that advances theory. Drawing more people into the process of theorizing is precisely how we push our field towards the advancement and elevation of good theories.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for this study in accordance with the local legislation and institutional requirements.

Author contributions

RDM, BJW, CCL, PK and MDP: contributed to the conception of the manuscript. RDM: wrote the first draft of the manuscript. JCM and MDP: contributed to sections of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by funding from the National Cancer Institute (grant nos. R01CA262325 and P50CA244432) and the National Institute of Mental Health (grant no. P50MH126219).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Damschroder LJ. Clarity out of chaos: use of theory in implementation research. Psychiatry Res. (2020) 283:1–6. doi: 10.1016/j.psychres.2019.06.036

2. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. (2015) 10(1):53. doi: 10.1186/s13012-015-0242-0

3. Foy R, Ovretveit J, Shekelle PG, Pronovost PJ, Taylor SL, Dy S, et al. The role of theory in research to develop and evaluate the implementation of patient safety practices. BMJ Qual Saf. (2011) 20(5):453–9. doi: 10.1136/bmjqs.2010.047993

4. Kislov R, Pope C, Martin GP, Wilson PM. Harnessing the power of theorising in implementation science. Implement Sci. (2019) 14:1–8. doi: 10.1186/s13012-019-0957-4

5. May CR, Cummings A, Girling M, Bracher M, Mair FS, May CM, et al. Using normalization process theory in feasibility studies and process evaluations of complex healthcare interventions: a systematic review. Implement Sci. (2018) 13(1):1–42. doi: 10.1186/s13012-018-0758-1

6. Michie S, West R, Campbell R, Brown J, Gainforth H. ABC of behaviour change theories. Silverback Publishing (2014). Available at: https://books.google.com/books?id=WQ7SoAEACAAJ

7. Ogden J. Some problems with social cognition models: a pragmatic and conceptual analysis. Health Psychol. (2003) 22(4):424–8. doi: 10.1037/0278-6133.22.4.424

8. Hooks B. Feminism is for everybody: passionate politics. Cambridge, MA: South End Press (2000). 123 p.

9. Whetten DA. What constitutes a theoretical contribution? Acad Manag Rev. (1989) 14(4):490–5. doi: 10.2307/258554

11. Chamberlin TC. The method of multiple working hypotheses. Science. (1965) 148(3671):754–9. doi: 10.1126/science.148.3671.754 (reprint of the 1890 article)

12. McDonald KM, Graham ID, Grimshaw J. Toward a theoretic basis for quality improvement interventions. In: Shojania K, McDonald K, Wachter R, Owens D, editors. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies. Rockville: Agency for Healthcare Research and Quality (2004). p. 27–37.

13. Pinder CC, Moore LF. The resurrection of taxonomy to aid the development of middle range theories of organizational behavior. Adm Sci Q. (1979) 24(1):99–118. doi: 10.2307/2989878

14. Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. (2018) 6:1–6. doi: 10.3389/fpubh.2018.00136

15. Birken SA, Powell BJ, Shea CM, Haines ER, Alexis Kirk M, Leeman J, et al. Criteria for selecting implementation science theories and frameworks: results from an international survey. Implement Sci. (2017) 12(1):1–9. doi: 10.1186/s13012-016-0533-0

16. Means AR, Kemp CG, Gwayi-Chore MC, Gimbel S, Soi C, Sherr K, et al. Evaluating and optimizing the consolidated framework for implementation research (CFIR) for use in low- and middle-income countries: a systematic review. Implement Sci. (2020) 15:1–19. doi: 10.1186/s13012-020-0977-0

17. Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implement Sci. (2019) 14(1):1–16. doi: 10.1186/s13012-018-0842-6

18. Damschroder LJ, Reardon CM, Opra Widerquist MA, Lowery J. Conceptualizing outcomes for use with the Consolidated Framework for Implementation Research (CFIR): the CFIR outcomes addendum. Implement Sci. (2022) 17(1):7. doi: 10.1186/s13012-021-01181-5

19. Ajzen I. The theory of planned behavior. Organ Behav Hum Decis Process. (1991) 50(2):179–211. doi: 10.1016/0749-5978(91)90020-T

20. Ajzen I. The theory of planned behavior. In: Handbook of theories of social psychology. Vol. 1. Thousand Oaks, CA: Sage Publications Ltd. (2012). p. 438–59.

21. Ajzen I. The theory of planned behavior: frequently asked questions. Hum Behav Emerg Technol. (2020) 2(4):314–24. doi: 10.1002/hbe2.195

22. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. (2012) 43(3):337–50. doi: 10.1016/j.amepre.2012.05.024

23. Kwak L, Toropova A, Powell BJ, Lengnick-Hall R, Jensen I, Bergström G, et al. A randomized controlled trial in schools aimed at exploring mechanisms of change of a multifaceted implementation strategy for promoting mental health at the workplace. Implement Sci. (2022) 17(1):1–20. doi: 10.1186/s13012-022-01230-7

24. Williams NJ, Wolk CB, Becker-Haimes EM, Beidas RS. Testing a theory of strategic implementation leadership, implementation climate, and clinicians’ use of evidence-based practice: a 5-year panel analysis. Implement Sci. (2020) 15(1):1–15. doi: 10.1186/s13012-019-0962-7

25. Lee H, Hall A, Nathan N, Reilly KL, Seward K, Williams CM, et al. Mechanisms of implementing public health interventions: a pooled causal mediation analysis of randomised trials. Implement Sci. (2018) 13(1):1–11. doi: 10.1186/s13012-017-0699-0

26. Meza RD, Triplett NS, Woodard GS, Martin P, Khairuzzaman AN, Jamora G, et al. The relationship between first-level leadership and inner-context and implementation outcomes in behavioral health: a scoping review. Implement Sci. (2021) 16(1):1–21. doi: 10.1186/s13012-020-01068-x

27. Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. (2014) 35(1):255–74. doi: 10.1146/annurev-publhealth-032013-182447

28. Solstad T, Bott O. Causality and causal reasoning in natural language. In: Waldmann MR, editor. The Oxford handbook of causal reasoning. New York: Oxford University Press (2017). p. 619–44.

29. Ford ED. How theories develop and how to use them. In: Ford ED, editor. Scientific method for ecological research. Cambridge, UK: Cambridge University Press (2010). p. 103–30.

30. Bacharach SB. Organizational theories: some criteria for evaluation. Acad Manag Rev. (1989) 14(4):496–515. doi: 10.2307/258555

31. Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. (1996) 21(4):1055–80. doi: 10.2307/259164

32. Helfrich CD, Weiner BJ, Mckinney MM, Minasian L. Determinants of implementation effectiveness: adapting a framework for complex innovations. Med Care Res Rev. (2007) 64(3):279–303. doi: 10.1177/1077558707299887

33. Birken SA, Lee S-YD, Weiner BJ. Uncovering middle managers’ role in healthcare innovation implementation. Implement Sci. (2012) 7(28):1–12. doi: 10.1186/1748-5908-7-28

34. Williams NJ, Ehrhart MG, Aarons GA, Marcus SC, Beidas RS. Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: cross-sectional and lagged analyses from a 2-year observational study. Implement Sci. (2018) 13(1):1–13. doi: 10.1186/s13012-018-0781-2

35. Michie S, Van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. (2011) 6(42):1–11. doi: 10.1186/1748-5908-6-42

36. Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci. (2014) 9(1):102. doi: 10.1186/s13012-014-0102-3

37. Zbukvic I, Rheinberger D, Rosebrock H, Lim J, McGillivray L, Mok K, et al. Developing a tailored implementation action plan for a suicide prevention clinical intervention in an Australian mental health service: a qualitative study using the EPIS framework. Implement Res Pract. (2022) 3:263348952110657. doi: 10.1177/26334895211065786

38. Lewis CC, Puspitasari A, Boyd MR, Scott K, Marriott BR, Hoffman M, et al. Implementing measurement based care in community mental health: a description of tailored and standardized methods. BMC Res Notes. (2018) 11(1):1–6. doi: 10.1186/s13104-018-3193-0

39. Weiner BJ, Lewis MA, Linnan LA. Using organization theory to understand the determinants of effective implementation of worksite health promotion programs. Health Educ Res. (2009) 24(2):292–305. doi: 10.1093/her/cyn019

40. Rabin BA, Glasgow RE, Ford B, Huebschmann A, Marsh R, Tabak R, et al. Dissemination & Implementation Models in Health: An Interactive Webtool to Help you Use D&I Model.

41. Platt JR. Strong inference: certain systematic methods of scientific thinking may produce much more rapid progress than others. Science. (1964) 146(3642):347–53. doi: 10.1126/science.146.3642.347

42. MacCallum R. Specification searches in covariance structure modeling. Psychol Bull. (1986) 100(1):107–20. doi: 10.1037/0033-2909.100.1.107

43. Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas R, Mittman B, et al. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. (2020) 15(21):1–25. doi: 10.1186/s13012-020-00983-3

44. Lewis CC, Powell BJ, Brewer SK, Nguyen AM, Schriger SH, Vejnoska SF, et al. Advancing mechanisms of implementation to accelerate sustainable evidence-based practice integration: protocol for generating a research agenda. BMJ Open. (2021) 11(10):1–10. doi: 10.1136/bmjopen-2021-053474

45. Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. (2015) 24(3):228–38. doi: 10.1136/bmjqs-2014-003627

46. Smith JD, Li DH, Rafferty MR. The implementation research logic model: a method for planning, executing, reporting, and synthesizing implementation projects. Implement Sci. (2020) 15(1):1–12. doi: 10.1186/s13012-020-01041-8

Keywords: theorizing, determinant prioritization, mechanisms, implementation science, causality

Citation: Meza RD, Moreland JC, Pullmann MD, Klasnja P, Lewis CC and Weiner BJ (2023) Theorizing is for everybody: Advancing the process of theorizing in implementation science. Front. Health Serv. 3:1134931. doi: 10.3389/frhs.2023.1134931

Received: 31 December 2022; Accepted: 20 February 2023;

Published: 10 March 2023.

Edited by:

Per Nilsen, Linköping University, Sweden

Reviewed by:

David Sommerfeld, University of California, San Diego, United States

© 2023 Meza, Moreland, Pullmann, Klasnja, Lewis and Weiner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rosemary D. Meza

Specialty Section: This article was submitted to Implementation Science, a section of the journal Frontiers in Health Services
