- 1 Center for Public Health Systems Science, Brown School, Washington University in St. Louis, St. Louis, MO, United States

- 2 Division of Public Health Sciences, Department of Surgery, Washington University in St. Louis School of Medicine, St. Louis, MO, United States

Background: Within many public health settings, there remain large challenges to sustaining evidence-based practices. The Program Sustainability Assessment Tool has been developed and validated to measure sustainability capacity of public health, social service, and educational programs. This paper describes how this tool was utilized between January 2014 and January 2019. We describe characteristics of programs that are associated with increased capacity for sustainability and ultimately describe the utility of the PSAT in sustainability research and practice.

Methods: The PSAT comprises eight subscales, each measuring sustainability capacity in a distinct conceptual domain. Each subscale is made up of five items, all assessed on a 7-point Likert scale. Data were obtained from persons who used the PSAT online (https://sustaintool.org/) from 2014 to 2019. In addition to the PSAT scale, participants were asked about four program-level characteristics. The resulting dataset includes 5,706 individual assessments reporting on 2,892 programs.

Results: The mean overall PSAT score was 4.73, with the lowest and highest scoring subscales being funding stability and program adaptation, respectively. Internal consistency for each subscale was excellent (average Cronbach's alpha = 0.90, ranging from 0.85 to 0.94). Confirmatory factor analysis indicated good to excellent fit of the PSAT measurement model (eight distinct conceptual domains) to the observed data, with a comparative fit index of 0.902, a root mean square error of approximation of 0.054, and a standardized root mean square residual of 0.054. Overall sustainability capacity was significantly related to program size (F = 25.6; p < 0.001). Specifically, smaller programs (with staff sizes of ten or below) consistently reported lower program sustainability capacity. Capacity was not associated with program age and did not vary significantly by program level.

Discussion: The PSAT maintained its excellent reliability when tested with a large and diverse sample over time. Initial criterion validity was explored through the assessment of program characteristics, including program type and program size. The data collected reinforce the ability of the PSAT to assess sustainability capacity for a wide variety of public health and social programs.

Introduction

What enables programs to continue delivering effective services over time? This is an important question that funders and public health leaders pose as they look beyond the initial investment and implementation of a program. As Shelton et al. (1) note, funders want to know that the investment they make in a program will continue to have an impact long after the investment ends. In addition, communities come to rely on these programs, and premature endings may have lasting consequences. The discontinuation of these programs results in communities developing “low levels of community support and trust in research and public health/medical institutions,” thereby creating challenges to future community efforts (1, 2). Program sustainability has been identified as critical for realizing the long-term impacts of a program. However, researchers and practitioners still lack knowledge about how to measure or enhance sustainment of public health or clinical care programs. The growing evidence base in dissemination and implementation science focuses mostly on the translation of research into practice to develop effective programs and policies (1). Many implementation science studies focus on the early stages of implementation and short-term outcomes; thus, there is an important research and evaluation opportunity in examining how effective programs are sustained over time after their initial adoption (1, 3, 4).

Sustainability has been defined as the ongoing use of an intervention with enough fidelity to continue to have the desired program impact with subsequent improved outcomes (1). In addition to this foundational definition, recent empirical work has examined various research and measurement aspects of assessing sustainment in public health settings (5–8). Together, this body of work on improving sustained implementation will help both researchers and practitioners realize the full impact of their programs and practices.

Despite these research successes, it remains challenging for practitioners to maintain evidence-based activities and programs across a wide range of settings. Public health and community programs often depend on time-limited financial resources, after which programs are expected to secure alternative funding (9). Programs may also lose political and community support, become targets of political or commercial opposition, or face organizational challenges such as staff turnover (1). Sustaining programs that work is the main way we can ensure that communities get their intended health benefits; for that reason it is critical to be able to measure and understand the factors influencing program sustainability.

Public health program sustainability can take many forms (10). For example, practitioners can seek to maintain program activities, community-level partnerships, organizational practices, benefits to clients, and the salience of the program's core issue (11). However, little is known about how a program can best position itself to deliver these outcomes over time. Research and theory on the concept of value-based care has also focused on some of these organizational activities and describes a need to focus on overall value of care and team-based care instead of simply focusing on reducing costs of care (12). By focusing on building sustainability capacity, or the structures and processes that allow a program to leverage resources to effectively implement and maintain evidence-based policies and activities, programs can better understand and strengthen the factors within their control to increase the likelihood of maintaining benefits to clients in some form over time (10).

To better understand the factors that affect a program's ability to deliver benefits over time, Schell et al. (10) developed a sustainability conceptual framework using concept mapping, reviews of the implementation science literature, and expert input. This framework identifies a set of organizational factors affecting program sustainability capacity. These factors are organized into external (environmental support, funding stability) and internal (partnerships, organizational capacity, program evaluation, program adaptation, communications, and strategic planning) domains (see Figure 1).

This eight-domain conceptual framework is useful to help programs and organizations looking to understand the factors beyond simple funding that affect a program's capacity for sustainability. For example, programs that collect evaluation data about their processes and outcomes can better demonstrate the necessity of their program to leadership and stakeholders (13). Communicating concise outcome data to policymakers and showing a program's impact on the community better positions the program for continued funding and support (14).

To address the relative lack of tools available to evaluate program sustainability, Luke et al. (15) translated the program sustainability conceptual framework into a measurement instrument: the Program Sustainability Assessment Tool (PSAT). The development of the tool was guided by four basic design principles: (1) short and easy to use; (2) usable by both small and large programs; (3) applicable to a wide variety of program types; and (4) useful as a research, evaluation, and program planning tool.

The PSAT was originally created to reflect the concepts represented in each of the eight domains in the program sustainability framework (10). The assessment instrument was developed and tested on over 250 state and local public health programs across a variety of program types (tobacco control, obesity, nutrition, etc.). Psychometric analyses of the PSAT using these original data demonstrated good reliability (i.e., internal consistency) and confirmatory factor analysis supported the suitability of eight domains measured by five items per domain (15).

The PSAT was designed to allow comparisons between programs as well as within-program comparisons over time. Since 2014, the tool has been used by more than 5,000 people to rate the sustainability capacity of more than 2,500 public health, social services, clinical care, and education programs of varying sizes in the US and internationally. Among many others, the PSAT has been used to examine the sustainability capacity of an inter-professional collaborative practice model for population health, evidence-based practices in adolescent substance abuse, local health department programs and policies, and chronic disease prevention interventions (16–21).

The variety of settings and amount of use provide the opportunity for further exploration of program sustainability capacity across program type, size, implementation level, and age. One example of the PSAT's application in public health programming comes from the Centers for Disease Control & Prevention (CDC)'s Office on Smoking and Health, which requires state tobacco control programs to create a sustainability plan and encourages them to use the Program Sustainability Assessment Tool to drive sustainability planning (22). Additional departments within CDC (Division of Population Health, Division of Nutrition, Physical Activity and Obesity, and the Center for Putting Prevention to Work, among others) have encouraged their state programs to use the PSAT, as have other federal organizations (National Cancer Institute, Canadian Partnership Against Cancer), and many state health departments, health foundations, and professional networks (American Evaluation Association, Association of State Public Health Nutritionists, and CDC-OSH National Partner Network) (23).

In this paper we describe program sustainability capacity for public health, clinical care, social services, and education programs using data passively collected on https://sustaintool.org between January 2014 and January 2019. We also examine how a small set of programmatic factors are related to program sustainability capacity. This paper adds to the sustainability knowledge base in two important ways: first, by identifying characteristics of programs that are associated with organizational capacity for sustainability, and second, by providing further evidence supporting the utility of the PSAT as a continuing part of the implementation science sustainability toolkit.

Methods

In this paper we report updated reliability, measurement model characteristics, and validity data for the Program Sustainability Assessment Tool (PSAT), which can be used to assess capacity for sustainability of a wide variety of public health, social service, and educational programs.

Measures

The PSAT (Additional File 1) consists of 40 questions, five items in each of eight domain subscales, with 7-point Likert-scale responses. Individual items can be rated from 1 (program has or does this to no extent) to 7 (program has or does this to the full extent). Subscale and total scores are the averages of the individual item scores, so scores can range from 1 to 7. Higher scores are interpreted as the program having greater sustainability capacity in that area (e.g., funding stability, program evaluation, etc.).
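To make the scoring rule concrete, the following minimal Python sketch computes subscale and total scores for one complete 40-item assessment. The domain order, the identifier names, and the function `score_psat` are illustrative assumptions; they are not taken from the published instrument or from the sustaintool.org implementation.

```python
from statistics import mean

# Hypothetical item layout: five consecutive items per domain, in this order.
# The eight domain names come from the framework; the ordering is assumed.
DOMAINS = [
    "environmental_support", "funding_stability", "partnerships",
    "organizational_capacity", "program_evaluation", "program_adaptation",
    "communications", "strategic_planning",
]
ITEMS_PER_DOMAIN = 5


def score_psat(responses):
    """Return (subscale_scores, total_score) for one complete assessment.

    `responses` is a list of 40 ratings on the 1-7 scale, ordered domain by
    domain. Missing-item handling is deliberately out of scope here.
    """
    if len(responses) != len(DOMAINS) * ITEMS_PER_DOMAIN:
        raise ValueError("expected 40 item ratings")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("ratings must be between 1 and 7")
    subscales = {}
    for i, domain in enumerate(DOMAINS):
        start = i * ITEMS_PER_DOMAIN
        # Subscale score: average of the five items in the domain.
        subscales[domain] = mean(responses[start:start + ITEMS_PER_DOMAIN])
    # Total score: average of all 40 items, so it also ranges from 1 to 7.
    total = mean(responses)
    return subscales, total
```

Because every domain has the same number of items, the total for a complete assessment equals the average of the eight subscale scores.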

In addition to the PSAT total scale and subscale scores, participants provided information on four important program-level characteristics. Program type classified programs into five groups: public health, social service, clinical care, education, and other. As an example of a clinical setting, the PSAT has been used to examine the sustainability capacity of pediatric asthma care coordination (17). Public health programs both in the United States and abroad used the assessment. The Reducing Violence against Women and their Children grants program used the PSAT for funded initiatives to prevent violence against women in diverse settings across Victoria, Australia (24). In the United States, Well-Ahead Louisiana, the state tobacco cessation and prevention program, used the PSAT to assess its comprehensive statewide tobacco prevention efforts (25).

Program level captured how the program was organized and whom it served. Programs were community-level, state-level, or greater than state level. This latter category included national, tribal, and international programs. An example of a community-level program is the “Som la Pera” intervention, a school-based, peer-led, social-marketing intervention that encourages healthy diet and physical activity among adolescents of low socioeconomic status (26). Staff size was the number of staff and personnel who were directly involved with the program or project being rated, including volunteers. Program age was the number of years that the program or project had been in existence.

PSAT data collection

The PSAT analyses presented here are based on PSAT profile and data passively collected on https://sustaintool.org/ between January 2014 and January 2019 through individual and group self-assessments. Per the site privacy statement (with associated Washington University IRB approval), users passively consented to analysis of their de-identified PSAT profile data upon submission.

After downloading the PSAT data from the web server, we cleaned the raw data by deleting test entries and entries that had missing data for every item in the PSAT. (These came from people who visited the website and started the PSAT assessment but quit before filling anything out.) After cleaning, the dataset included a total of 5,706 respondents reporting on 2,892 programs. An examination of missing data patterns showed that 65% of the respondents filled out every one of the 40 items in the scale, and 96% filled out at least half of the items (≥20).
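The cleaning and missing-data summary described above can be sketched as follows. This is an illustration under stated assumptions, not the procedure actually run on the PSAT data: each record is assumed to be a list of 40 ratings with `None` marking a skipped item, the function name `clean_and_summarize` is hypothetical, and the removal of test entries is omitted because the source does not say how they were identified.

```python
def clean_and_summarize(records, n_items=40):
    """Drop fully empty assessments and summarize item completion.

    `records` is a list of lists; each inner list holds `n_items` ratings,
    with None marking an unanswered item (assumed encoding).
    Returns (kept_records, share_complete, share_at_least_half).
    """
    # Remove entries with missing data for every item.
    kept = [r for r in records if any(v is not None for v in r)]
    if not kept:
        return kept, 0.0, 0.0
    # Count answered items per remaining respondent.
    answered = [sum(v is not None for v in r) for r in kept]
    n = len(kept)
    share_complete = sum(a == n_items for a in answered) / n
    share_at_least_half = sum(a >= n_items / 2 for a in answered) / n
    return kept, share_complete, share_at_least_half
```

Applied to the cleaned PSAT data, the two shares correspond to the 65% and 96% figures reported in this section.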

Users of the online PSAT can fill out an individual assessment (one person rating the sustainability capacity of an individual program), or a group assessment (multiple people rating the sustainability capacity of the same program). Of the 2,892 program assessments included in the dataset, 2,283 were individual assessments (79%). For group assessments, the respondent numbers ranged from 2 to 31, with a median group size of 5. The main purpose of this paper is to understand characteristics of program sustainability capacity, so the raw data were aggregated by program. Specifically, the group PSAT total and subscale scores were calculated by averaging the scores for all individuals taking part in a particular group assessment. So, the scores are meant to represent program and organizational characteristics, not individual characteristics.
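The aggregation step can be illustrated with a short Python sketch that averages individual scores within each program. The input format (pairs of a program identifier and a score) and the function name `aggregate_by_program` are assumptions made for illustration; the same operation would be applied to the total score and to each subscale score.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_program(assessments):
    """Average individual scores within each program.

    `assessments` is an iterable of (program_id, score) pairs (assumed
    layout). An individual assessment contributes one pair; a group
    assessment contributes one pair per respondent.
    """
    grouped = defaultdict(list)
    for program_id, score in assessments:
        grouped[program_id].append(score)
    # One program-level score per program: the mean across its raters.
    return {pid: mean(scores) for pid, scores in grouped.items()}
```

For a program rated by a single person, the program-level score is simply that person's score.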

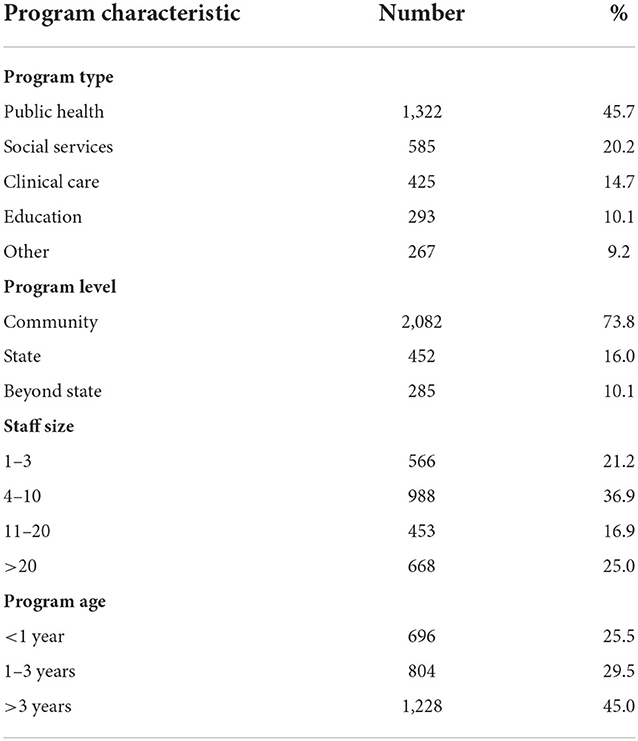

Table 1 presents the program-level characteristics of the total PSAT sample. This sample includes a wide variety of types of programs. Almost half of the programs are public health (46%), followed by social service (20%), clinical care (15%), and education (10%). A large majority of the programs are organized at the community level (74%), but over 700 programs are organized at higher levels (e.g., state, national, international). Programs represent both small and larger organizations, ranging from 3 or fewer staff members (21%) to more than 20 members (25%). The programs also varied in age, ranging from < 1 year of existence (26%) to over 3 years (45%).

Table 1. Program characteristics of PSAT sample (N = 2,892 programs, based on 5,706 individual assessments).

Analyses

Frequencies and means were calculated to obtain descriptive statistics of the sample, as appropriate. One-way analyses of variance were conducted to understand PSAT score differences related to program focus, size, age and level. Two-way analyses of variance were conducted to assess the interaction between program focus and program size, age and level. Psychometric analyses were conducted to assess the reliability (internal consistency) of the eight PSAT subscales. Finally, confirmatory factor analysis was used to test the measurement model of the PSAT, and how well that measurement model fit with the observed PSAT data.
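For readers less familiar with the F statistics reported in the Results, the sketch below computes the textbook one-way analysis of variance F ratio from groups of program-level scores. It is a generic illustration, not the analysis code used in this study (the statistical software is not specified here), and the function name `one_way_anova_f` is hypothetical.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists of scores, one inner list per category
    (for example, one list of PSAT totals per staff-size category).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more cases than groups")
    grand_mean = mean([x for g in groups for x in g])
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within
```

A larger F indicates that differences between category means are large relative to the variation among programs within the same category.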

Results

The goal of these analyses is to describe the characteristics of the Program Sustainability Assessment Tool (PSAT) as it has been applied to rate sustainability capacity in a variety of settings and programs. These analyses can help determine if the psychometric properties have remained stable as the PSAT has been rolled out for wider application, and to assess how a small number of program characteristics are related to PSAT overall and subscale scores.

Overall PSAT characteristics

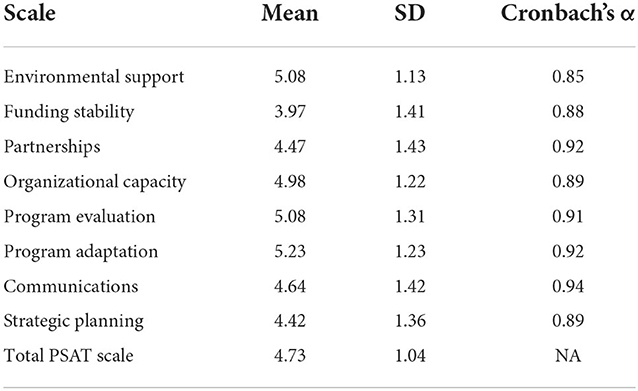

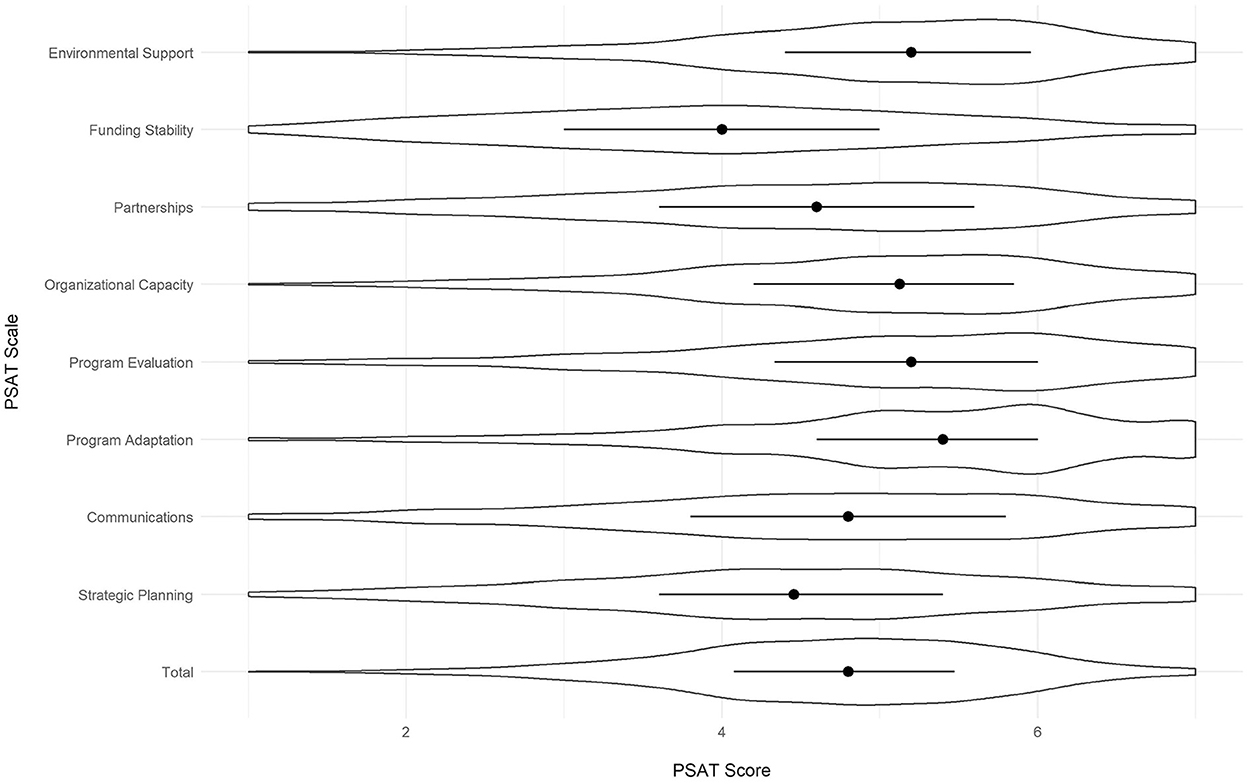

All PSAT scores are on a scale from 1 to 7, with 7 indicating the greatest extent of each domain. Across all programs, the mean PSAT score was 4.73 (Table 2). PSAT subscale scores were lowest for funding stability (M = 3.97), followed by strategic planning (M = 4.42), partnerships (M = 4.47), communications (M = 4.64), organizational capacity (M = 4.98), program evaluation (M = 5.08), and environmental support (M = 5.08); program adaptation had the highest average score (M = 5.23). Although the subscale and total mean scores are somewhat high relative to the seven-point scale, score variabilities are relatively high (standard deviations ranging from 1.13 to 1.43), indicating only minor issues with restriction of range. Figure 2 presents violin plots of the total and subscale scores, displaying the median values for each, as well as the score variabilities.

PSAT reliabilities and measurement structure

In our original PSAT development study, average internal consistency (Cronbach's alpha) of the 8 subscales was 0.88 and domain subscales ranged from 0.79 to 0.92 (15). In the current study, we had data on more programs, and these programs were more diverse (i.e., educational, clinical, social service, and public health programs). Despite the greater program diversity, psychometric analyses reveal that the PSAT maintains its excellent reliability (Table 2). Specifically, for the new data subscale reliabilities ranged from 0.85 to 0.94, with an average of 0.90.
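As a reminder of what the reliability coefficient measures, the following minimal sketch computes Cronbach's alpha for one subscale using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of the summed score). The data layout (one row of item ratings per respondent, no missing values) and the function name `cronbach_alpha` are assumptions for illustration only.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one subscale.

    `rows` is a list of respondents, each a list of k item ratings
    (k = 5 for a PSAT subscale). Assumes complete data, at least two
    respondents, and some variation in the summed scores.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of the summed subscale score.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)
```

Values close to 1 indicate that the items in a subscale vary together, which is the sense in which the subscale reliabilities reported here are described as excellent.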

In addition to the subscale reliabilities, we examined the domain structure of the PSAT using confirmatory factor analysis (CFA) to see how well the observed data matched our overall conceptual framework of eight distinct conceptual domains (see Figure 1). CFA results show an excellent fit of the data to the hypothesized measurement structure. Specifically, the fit indices for the eight-factor model include the comparative fit index (CFI = 0.902), root mean square error of approximation (RMSEA = 0.054), and standardized root mean square residual (SRMR = 0.054). All indicate good to excellent fit (27–29). Furthermore, we compared the fit of the eight-factor model to a simpler single-factor model (which assumes that there is just a general concept of sustainability capacity without a more complicated multi-domain structure). A comparison of the two models using Vuong's distinguishability test showed that the eight-factor model was a significantly better fit to the data than the single-factor model (LR = 47,277.2, p < 0.001) (30). More detailed results from the CFA analyses (including model fits and diagnostics) are available from the authors.
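To clarify how two of these fit indices are defined, the sketch below computes RMSEA and CFI from model and baseline chi-square statistics using their standard formulas. The function names and any input values are hypothetical, since the chi-square statistics for the PSAT models are not reported here. Software packages also differ on whether RMSEA uses N or N − 1 in the denominator; N − 1 is assumed in this sketch.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """Root mean square error of approximation (N - 1 convention assumed)."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to a baseline (independence) model."""
    # Noncentrality estimates, floored at zero.
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)
    if d_base == 0:
        return 1.0
    return 1.0 - d_model / d_base
```

Lower RMSEA and higher CFI both indicate closer agreement between the hypothesized measurement model and the observed covariances.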

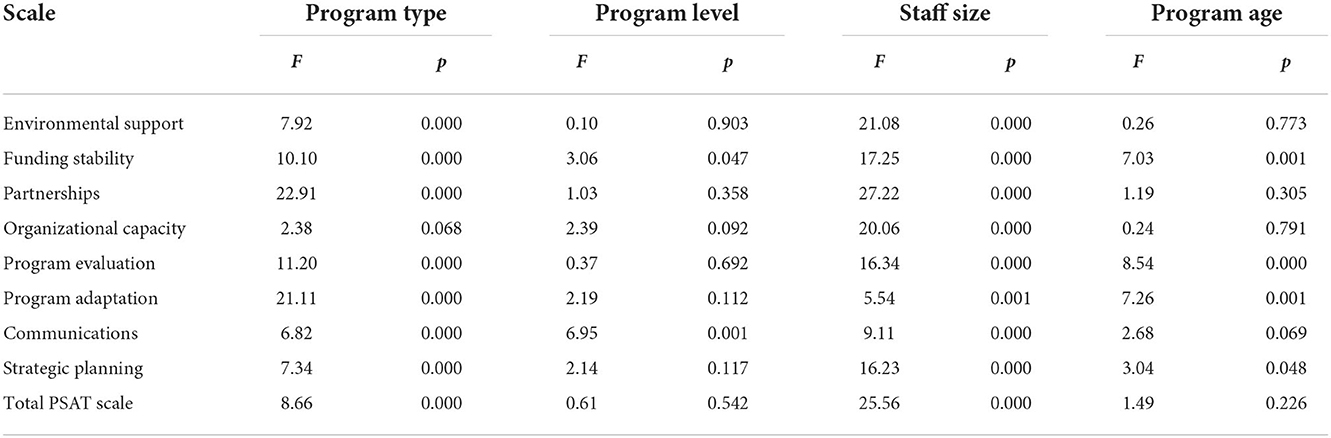

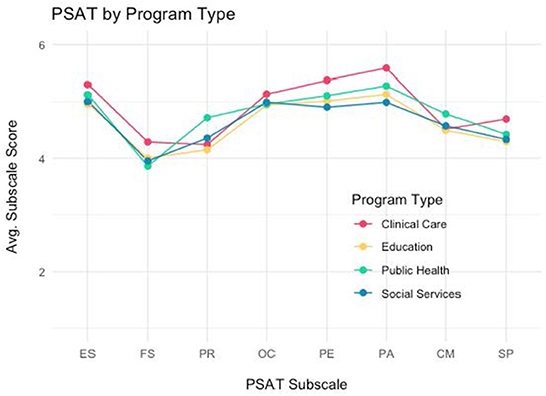

PSAT characteristics by program type

PSAT scores were analyzed by program type for four types of programs: public health, social services, clinical care and education. Overall, PSAT total and subscale scores varied significantly by program type, except for organizational capacity (see Table 3). Clinical programs reported the highest total PSAT scores (M = 4.92), followed by public health (M = 4.78), social services (M = 4.64), and finally education (M = 4.61). Figure 3 shows the pattern of PSAT total and subscale scores by the four types of programs. Clinical programs tended to show higher subscale scores, especially for engaged stakeholders, financial stability, program evaluation, and program adaptation. Public health programs, on the other hand, showed higher scores on partnerships and communications. Social service programs have the lowest score profile of the four program types, with the possible exception of partnerships.

Impact of programmatic factors on PSAT trends

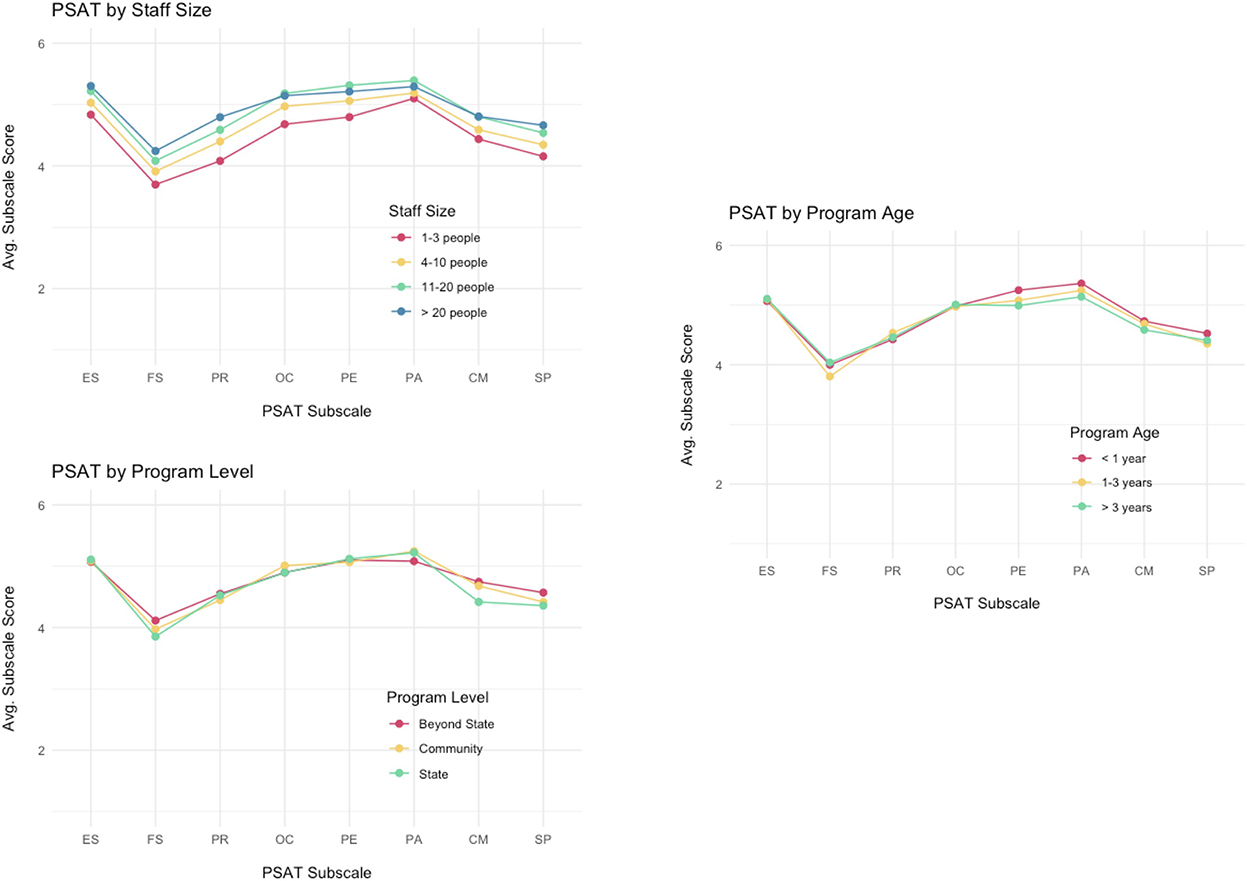

Additional analyses were conducted to understand how program size, age and level relate to sustainability capacity (Table 3). Overall sustainability capacity was significantly related to program size (F = 25.6; p < 0.001). In general, larger programs (>20 staff and volunteers, M = 4.93) were perceived as more sustainable than smaller programs (< 4 staff and volunteers; M = 4.47). This pattern was apparent across all of the subscale domains as well: programs with three or fewer staff and volunteers reported significantly less capacity for sustainability than programs with 21 or more staff and volunteers on all eight subscales. Figure 4 shows the subscale means by size of the program, and there is a discernible dose-response pattern where larger staff sizes are associated with higher PSAT subscale scores.

In comparison, program level and program age were not as strongly or consistently related to sustainability capacity scores. Overall sustainability capacity was not associated with program age (F = 1.49; p = 0.226). However, older programs (>3 years) reported higher capacity for funding stability (M = 4.04; F = 7.03; p = 0.001), while younger programs (< 1 year) showed greater capacity in program evaluation (M = 5.25; F = 8.54; p < 0.001) and program adaptation (M = 5.36; F = 7.26; p = 0.001).

Overall program sustainability capacity did not vary significantly by program level (F = 0.61; p = 0.54). However, state-level programs reported the lowest level of communications capacity (M = 4.42; F = 6.95; p = 0.001) while higher level (beyond state) programs reported higher financial stability (M = 4.11; F = 3.06, p = 0.047).

Discussion

This study evaluated the PSAT's continued performance in two major areas: first, through assessment of reliability and measurement structure, and second, through examination of some program characteristics that affect programs' sustainability capacity. The PSAT maintained its excellent reliability when tested with a larger and more diverse sample over time, further solidifying it as a reliable tool for assessing sustainability capacity. Initial criterion validity was explored through the assessment of program characteristics, including program type and program size. The data collected across differing programs and users reinforce the ability of the PSAT to assess sustainability capacity in relevant areas. The PSAT aids in assessment of many areas of public health, including those that address addiction and mental health programming.

The PSAT, therefore, remains a reliable and valid instrument for practitioners to use when assessing their program's sustainability capacity. While some work has adapted the PSAT for specific areas, this work suggests that the PSAT remains valid in its entirety, even when assessed in a larger and more diverse sample (31). While other measures have been developed for sustainability capacity in certain areas or to assess sustainment, this capacity-focused measure is both pragmatic and generalizable to different settings and to those in different roles, including both practitioners and research team members (5). This assessment responds to the need for reliable measures within implementation science, specifically in the area of sustainability research (1, 32, 33).

Further, these data provide information about real-world programs that can be used to support and enhance program sustainability. This allows practitioners and researchers to better understand which constructs should be targeted to enhance program sustainability in public health, mental health, and clinical care.

Ultimately, this theoretically driven work helps move the field from questions of definition toward a better understanding of how to measure this construct. Next, studies of sustainability need to focus more on prediction and on the mechanisms through which sustainability operates. This study helps tie important information about theory and frameworks to data on the contextual factors that can drive sustainability capacity. These PSAT domains could inform future qualitative studies to explore the concepts further and elucidate how they could contribute to interventions to increase future sustainment.

A strength of this study is the large number of participants, even though the sample comprised those who sought out the measure for use. The time span covered by the sample further strengthens the findings on reliability and validity. Finally, this sample represents many types of programs as well as locations of assessment, including programs both within the United States and internationally. International participants were from countries including Australia, Canada, and the United Kingdom. However, we do not collect the specific location of sites through our online survey at this time. Additionally, this assessment was conducted before a Spanish translation of the measure was available, so it is limited to English-speaking respondents. Future validation work can expand on the initial variables used in this sample to assess criterion validity as well as explore PSAT responses in multiple languages.

The PSAT provides a reliable tool for assessing a program's sustainability capacity. In addition, the PSAT has been found to be easy to use, requiring no or minimal training (34). As a result, practitioners, evaluators, and researchers can use the PSAT in their sustainability planning efforts with confidence. This study further supports the reliability, validity, and usefulness of this instrument. While other instruments have been developed for specific settings, this tool assists with implementation practice and evaluating a wide variety of programs. This study does not connect sustainability capacity to sustainment outcomes, due to a lack of information about the sustainment metrics of the programs. Future research ought to investigate the link between sustainability capacity and sustainment outcomes.

Additionally, clinical settings have often been identified as having processes and structures distinct from those in public health programs (6). For example, clinical settings are often less reliant on finances than public health programs. To assess these settings, an adaptation of the PSAT was developed that focuses on clinical programs and practices. The Clinical Sustainability Assessment Tool has also been translated for use in other languages and has been demonstrated as reliable in both domestic and global settings (35).

In addition to future work focused on broader dissemination of this tool for practice, there are opportunities to explore how different organizational contexts influence program sustainability. The contexts within which different programs, such as public health and educational programs, are delivered can vary widely, even within similar geographic regions. Therefore, further work should focus on understanding this varying context, including differences in program level, populations, and settings, and their relationship to overall sustainability capacity. While other work has adapted this tool for specific clinical contexts, the PSAT should continue to be utilized and tailored for other audiences.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

CB led the design of the study and drafting the manuscript. SM assisted with planning the study, reviewing the data, and drafting the manuscript. KP provided supervision of the study and assisted with the design of the study and drafting the manuscript. RH assisted with data collection, data preparation, and editing the manuscript. MH assisted with the design of the study and drafting the manuscript. SD assisted with data collection and study design. DL led the data analysis and assisted with planning the study and drafting of the manuscript. All authors reviewed and approved the final manuscript.

Funding

This work was funded by the Centers for Disease Control and Prevention, Office on Smoking and Health, contract no. 75D301-20-R-68063.

Acknowledgments

The authors thank the Centers for Disease Control and Prevention, Office on Smoking and Health for their support of the tool development and website at www.sustaintool.org.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frhs.2022.1004167/full#supplementary-material

References

1. Shelton R, Cooper B, Stirman S. The sustainability of evidence-based interventions and practices in public health and health care. Ann Rev Public Health. (2018) 39:14731. doi: 10.1146/annurev-publhealth-040617-014731

2. Walugembe DR, Sibbald S, Le Ber MJ, Kothari A. Sustainability of public health interventions: where are the gaps? Health Res Policy Syst. (2019) 17:1–7. doi: 10.1186/s12961-018-0405-y

3. Proctor E, Luke D, Calhoun A, Nieboer AP. Sustainability of evidence-based healthcare: research agenda, methodological advances, and infrastructure support. Implement Sci. (2015) 10:1–13. doi: 10.1186/s13012-015-0274-5

4. Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, Hunter C. National institutes of health approaches to dissemination and implementation science: current and future directions. Am J Public Health. (2012) 102:1274–81. doi: 10.2105/AJPH.2012.300755

5. Palinkas LA, Chou CP, Spear SE, Mendon SJ, Villamar J, Brown CH. Measurement of sustainment of prevention programs and initiatives: the sustainment measurement system scale. Implement Sci. (2020) 15:1–15. doi: 10.1186/s13012-020-01030-x

6. Malone S, Prewitt K, Hackett R, Lin JC, McKay V, Walsh-Bailey C, et al. The clinical sustainability assessment tool: measuring organizational capacity to promote sustainability in healthcare. Implement Sci Commun. (2021) 2:1–12. doi: 10.1186/s43058-021-00181-2

7. Hoben M, Ginsburg LR, Norton PG, Doupe MB, Berta WB, Dearing JW, et al. Sustained effects of the INFORM cluster randomized trial: an observational post-intervention study. Implement Sci. (2021) 16:1–14. doi: 10.1186/s13012-021-01151-x

8. Lui JHL, Brookman-Frazee L, Lind T, Le K, Roesch S, Aarons GA, et al. Outer-context determinants in the sustainment phase of a reimbursement-driven implementation of evidence-based practices in children's mental health services. Implement Sci. (2021) 16:1–9. doi: 10.1186/s13012-021-01149-5

9. Dopp AR, Kerns SEU, Panattoni L, Ringel JS, Eisenberg D, Powell BJ, et al. Translating economic evaluations into financing strategies for implementing evidence-based practices. Implement Sci. (2021) 16:66. doi: 10.1186/s13012-021-01137-9

10. Schell SF, Luke DA, Schooley MW, Elliott MB, Herbers SH, Mueller NB, et al. Public health program capacity for sustainability: a new framework. Implement Sci. (2013) 8:1–9. doi: 10.1186/1748-5908-8-15

11. Scheirer MA, Dearing JW. An agenda for research on the sustainability of public health programs. Am J Public Health. (2011) 101:2059. doi: 10.2105/AJPH.2011.300193

12. Teisberg E, Wallace S, O'Hara S. Defining and implementing value-based health care: a strategic framework. Acad Med. (2020) 95:682–5. doi: 10.1097/ACM.0000000000003122

13. Nelson D, Reynolds J, Luke D, Mueller NB, Eischen MH, Jordan J, et al. Successfully maintaining program funding during trying times: lessons from tobacco control programs in five states. J Public Health Manag Pract. (2007) 13:45. doi: 10.1097/01.PHH.0000296138.48929.45

14. Schmidt AM, Ranney LM, Goldstein AO. Communicating program outcomes to encourage policymaker support for evidence-based state tobacco control. Int J Environ Res Public Health. (2014) 11:12562–74. doi: 10.3390/ijerph111212562

15. Luke DA. The program sustainability assessment tool: a new instrument for public health programs. Prev Chronic Dis. (2014) 11:130184. doi: 10.5888/pcd11.130184

16. Tabak RG, Duggan K, Smith C, Aisaka K, Moreland-Russell S, Brownson RC. Assessing capacity for sustainability of effective programs and policies in local health departments. J Public Health Manag Pract. (2016) 22:129–37. doi: 10.1097/PHH.0000000000000254

17. Stoll S. A mixed-method application of the program sustainability assessment tool to evaluate the sustainability of 4 pediatric asthma care coordination programs. Prev Chronic Dis. (2015) 12:150133. doi: 10.5888/pcd12.150133

18. Hunter SB, Han B, Slaughter ME, Godley SH, Garner BR. Associations between implementation characteristics and evidence-based practice sustainment: a study of the adolescent community reinforcement approach. Implement Sci. (2015) 10:1–11. doi: 10.1186/s13012-015-0364-4

19. Kelly C, Scharff D. A tool for rating chronic disease prevention and public health interventions. Prev Chronic Dis. (2013) 10:130173. doi: 10.5888/pcd10.130173

20. Shirey MR, Selleck CS, White-Williams C, Talley M, Harper DC. Sustainability of an interprofessional collaborative practice model for population health. Nurs Adm Q. (2020) 44:221–34. doi: 10.1097/NAQ.0000000000000429

21. Smith ML, Durrett NK, Schneider EC, Byers IN, Shubert TE, Wilson AD, et al. Examination of sustainability indicators for fall prevention strategies in three states. Eval Program Plann. (2018) 68:194–201. doi: 10.1016/j.evalprogplan.2018.02.001

22. Centers for Disease Control and Prevention. Best Practices User Guide: Putting Evidence into Practice in Tobacco Prevention and Control. Atlanta: Centers for Disease Control and Prevention (2021). Available online at: https://www.cdc.gov/tobacco/stateandcommunity/guides/pdfs/putting-evidence-into-practice-508.pdf (accessed March 15, 2022).

23. National Cancer Institute. Implementation Science at a Glance: A Guide for Cancer Control Practitioners. Rockville, MD: National Institutes of Health (2018). Available online at: https://cancercontrol.cancer.gov/IS/tools/practice.html (accessed May 8, 2019).

24. Our Watch. Reducing Violence Against Women and their Children Grants Program Phase II Evaluation Report. Melbourne: Our Watch (2015). Available online at: https://media-cdn.ourwatch.org.au/wp-content/uploads/sites/2/2019/11/12040744/OurWatch_CoP_FINAL_Accessible.pdf (accessed October 31, 2022).

25. Center for Public Health Systems Science, Washington University in St. Louis. Well-Ahead Louisiana Tobacco Cessation and Prevention. Sustain Tool. St. Louis: Washington University (2022). Available online at: https://sustaintool.org/psat/case-study/well-ahead-louisiana-tobacco-cessation-prevention/ (accessed October 28, 2022).

26. Llauradó E, Aceves-Martins M, Tarro L, Papell-Garcia I, Puiggròs F, Prades-Tena J, et al. The “Som la Pera” intervention: sustainability capacity evaluation of a peer-led social-marketing intervention to encourage healthy lifestyles among adolescents. Transl Behav Med. (2018) 8:739–44. doi: 10.1093/tbm/ibx065

27. Kenny DA, McCoach DB. Effect of the number of variables on measures of fit in structural equation modeling. Struct Equ Model. (2003) 10:333–51. doi: 10.1207/S15328007SEM1003_1

28. Hu LT, Bentler PM. Evaluating model fit. In: Structural Equation Modeling: Concepts, Issues, and Applications. New York, NY: Sage Publications, Inc (1995), 76–99.

29. Hu L, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria vs. new alternatives. Struct Equ Model Multidiscipl J. (1999) 6:1–55. doi: 10.1080/10705519909540118

30. Vuong QH. Likelihood ratio tests for model selection and non-nested hypotheses. Econometrica. (1989) 57:307–33. doi: 10.2307/1912557

31. Hall A, Shoesmith A, Shelton RC, Lane C, Wolfenden L, Nathan N. Adaptation and validation of the program sustainability assessment tool (PSAT) for use in the elementary school setting. Int J Environ Res Public Health. (2021) 18:11414. doi: 10.3390/ijerph182111414

32. Braithwaite J, Ludlow K, Testa L, Herkes J, Augustsson H, Lamprell G, et al. Built to last? The sustainability of healthcare system improvements, programmes and interventions: a systematic integrative review. BMJ Open. (2020) 10:e036453. doi: 10.1136/bmjopen-2019-036453

33. Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. (2015) 10:155. doi: 10.1186/s13012-015-0342-x

34. Calhoun A, Mainor A, Moreland-Russell S, Maier RC, Brossart L, Luke DA. Using the program sustainability assessment tool to assess and plan for sustainability. Prev Chronic Dis. (2014) 11:E11. doi: 10.5888/pcd11.130185

35. Agulnik A, Malone S, Puerto-Torres M, Gonzalez-Ruiz A, Vedaraju Y, Wang H, et al. Reliability and validity of a Spanish-language measure assessing clinical capacity to sustain paediatric early warning systems (PEWS) in resource-limited hospitals. BMJ Open. (2021) 11:e053116. doi: 10.1136/bmjopen-2021-053116

Keywords: sustainability capacity, implementation science, program sustainability, evidence-based interventions, community, health

Citation: Bacon C, Malone S, Prewitt K, Hackett R, Hastings M, Dexter S and Luke DA (2022) Assessing the sustainability capacity of evidence-based programs in community and health settings. Front. Health Serv. 2:1004167. doi: 10.3389/frhs.2022.1004167

Received: 27 July 2022; Accepted: 09 November 2022;

Published: 30 November 2022.

Edited by:

Celia Laur, Women's College Hospital, Canada

Reviewed by:

Feisul Mustapha, Ministry of Health Malaysia, Malaysia

Samuel Petrie, Carleton University, Canada

David Chambers, National Cancer Institute (NIH), United States

Copyright © 2022 Bacon, Malone, Prewitt, Hackett, Hastings, Dexter and Luke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Caren Bacon, cbacon@wustl.edu
