REVIEW article

Front. Dement., 25 July 2023

Sec. Dementia Care

Volume 2 - 2023 | https://doi.org/10.3389/frdem.2023.1226060

This article is part of the Research Topic: Characterizing and Measuring Behavioral and Psychological Symptoms of Dementia (BPSD)

Federico Emanuele Pozzi1,2*, Luisa Calì3, Carlo Ferrarese1,2,3, Ildebrando Appollonio1,2,3, Lucio Tremolizzo1,2,3

The behavioral and psychological symptoms of dementia (BPSD) are a heterogeneous set of challenging disturbances of behavior, mood, perception, and thought that occur in almost all patients with dementia. A huge number of instruments have been developed to assess BPSD in different populations and settings. Although some of these tools are more widely used than others, no single instrument can be considered completely satisfactory, and each of these tools has its advantages and disadvantages. In this narrative review, we have provided a comprehensive overview of the characteristics of a large number of such instruments, addressing their applicability, strengths, and limitations. These depend on the setting, the expertise required, and the people involved, and all these factors need to be taken into account when choosing the most suitable scale or tool. We have also briefly discussed the use of objective biomarkers of BPSD. Finally, we have attempted to provide indications for future research in the field and suggest the ideal characteristics of a possible new tool, which should be short, easy to understand and use, and treatment oriented, providing clinicians with data such as frequency, severity, and triggers of behaviors and enabling them to find appropriate strategies to effectively tackle BPSD.

The behavioral and psychological symptoms of dementia (BPSD) are a heterogeneous set of signs and symptoms of disturbed perception, thought content, mood, or behavior (Finkel et al., 1998). The prevalence of individual BPSD varies between 10% and 20%, but up to 90% of people living with dementia will have at least one, depending on the setting (Cerejeira et al., 2012; Kwon and Lee, 2021; Altomari et al., 2022). A few BPSD also have been included in the diagnostic criteria of specific dementias (Rascovsky et al., 2011; McKeith et al., 2017), and they are already frequently found in the prodromal phases (Pocnet et al., 2015; Van der Mussele et al., 2015; Wiels et al., 2021), sometimes even being the main presenting symptom in the preclinical condition called mild behavioral impairment (MBI) (Creese and Ismail, 2022). Various attempts have been made to cluster them into broader categories, such as psychosis, affective syndrome, apathy, mania, and psychomotor syndrome, but a consensus on the topic is lacking (D'antonio et al., 2022). Perhaps due to their elusive nature in terms of both definition and rigorous assessment, these non-cognitive symptoms, in spite of their enormous prevalence, have often been overshadowed by the various sets of diagnostic criteria for dementia syndrome, in general, and Alzheimer's disease, in particular, both of which mainly focus on the cognitive aspects of the two conditions (Gottesman and Stern, 2019).

Moreover, BPSD have a huge impact on persons living with dementia, caregivers, and healthcare systems, in terms of both quality of life and economic burden (Cerejeira et al., 2012). Current guidelines recommend the use of non-pharmacological interventions as a first line of treatment, although there is no consensus on what these interventions should entail (Azermai et al., 2012; Ma et al., 2022). In clinical practice, the use of antipsychotics remains common, although the effectiveness of most of them is still debated and their use may result in serious adverse events (Kales et al., 2014). This may also be partially due to the fact that BPSD are traditionally assessed with scales that are unsuitable for non-pharmacological interventions, and triggers of BPSD are rarely systematically explored (Kales et al., 2014). However, in new approaches, there have been attempts to include these aspects (Kales et al., 2014; Tible et al., 2017) and even to anticipate the future occurrence of BPSD with the aid of contextual screening (Evans et al., 2021). In a comprehensive review published a decade ago, more than 80 scales for BPSD assessment in different populations and settings were discovered, although not all of them were judged to be specific to patients with dementia (Van derlinde et al., 2014). Since then, the number of tools that clinicians can use has further increased, and new approaches have been introduced, resulting in a plethora of instruments from which clinicians can choose to frame the complex issue of behavioral problems in persons with dementia. Focusing on the measurements of BPSD is important since a better understanding of the problem is fundamental for guiding rational strategies to address it. Therefore, we suppose that a study that provides information to orient the choice of tool in specific contexts is needed.

The aim of the current study is to provide an updated overview of the instruments currently available for clinicians to evaluate BPSD in patients with dementia, together with the advantages and disadvantages of the different approaches.

Theoretical frameworks have been developed to explain BPSD from different points of view: the biomedical approach regards behaviors as neurocognitive symptoms, and the biopsychosocial view seeks to understand the behaviors by analyzing all possible person-centered and environmental contributors (Nagata et al., 2022). While our perspective in organizing this study may rely more on the former approach, we are not insensitive to the latter, and indeed, we recognize that combining the two views can help understand and address BPSD more effectively.

The scales and approaches included in our study were located through a non-systematic search of the major databases (including MEDLINE via PubMed and Scopus), a citation search on the articles included, and personal knowledge. While this does not exclude the possibility that some instruments have been left out, we presume that such tools would not have a great impact on the field, as they would otherwise have been mentioned somewhere in our extensive list of references.

We specifically excluded those scales that have been adapted from other contexts and conditions, such as psychiatric diseases (such as the state-trait anxiety inventory and the Hamilton depression scale) or that are intended as global screening tools for dementia (such as the Blessed dementia scale) and focused only on instruments that have been developed to capture behavioral disturbances in patients with cognitive impairment. We also excluded screening checklists, which can, however, be useful to identify unmet needs for specialized care, such as the BEHAV5+ checklist, which assesses the presence of six behavioral issues and is endorsed by the Alzheimer's Association within the Cognitive Impairment Care Planning Toolkit (Borson et al., 2014).

The present study is organized into four major sections. The first describes the characteristics of the instruments available for the assessment of BPSD, dividing them into broad and narrow tools, evaluating the population, the setting, the timing, and the rater, and considering their uses (paragraphs 3.1–3.4). Information on different translations used in research on the use of BPSD tools was retrieved by checking in Scopus the list of works that have cited the original paper for each instrument (paragraph 3.5). While this could leave out possible versions included in articles not indexed in Scopus or not used in any study at all, we believe that our approach would increase the reliability of the translations themselves. BPSD have been grouped into categories reflecting the 12 items of the Neuropsychiatric Inventory (NPI-12), probably the most common instrument used in clinical practice. However, we decided to separate physically and verbally aggressive behaviors, as these are often treated differently and may have different impacts on treatment choices. We also added three more categories: “harmful to self” and “uncooperativeness”, which would be proportionally more relevant in particular settings such as nursing homes and “other” to include all behaviors that do not fit into the aforementioned BPSD (such as toileting problems or hiding things). A list of possible synonyms used to categorize BPSD is presented in Table 1.

In the second section, we have discussed the methodological aspects of the scales that relate to their validity and reliability, which we presume are fundamental to understanding current pitfalls in the development of new instruments (paragraph 3.6), while the third section briefly focuses on new approaches, such as treatment-oriented approaches, and on actigraphy and other objective biomarkers of BPSD (paragraphs 3.7–3.8). Finally, we attempt to translate these data from the literature into indications for future research (paragraph 3.9).

For the purpose of the current classification, we have arbitrarily defined broad instruments as scales encompassing more than four BPSD. Broad instruments are summarized in Table 2.
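The classification rule stated here can be written down as a one-line check. The sketch below is only an illustration: the function name is hypothetical, the NPI-12 domain labels follow the usual naming of its twelve items, and the two domains listed for the RAS are those mentioned later in this review.

```python
from typing import Iterable

BROAD_THRESHOLD = 4  # "broad" means more than four BPSD are covered


def classify_instrument(domains: Iterable[str]) -> str:
    """Return 'broad' if the instrument covers more than four distinct BPSD."""
    return "broad" if len(set(domains)) > BROAD_THRESHOLD else "narrow"


# Domain lists used only for illustration.
NPI_12 = [
    "delusions", "hallucinations", "agitation/aggression", "depression",
    "anxiety", "euphoria", "apathy", "disinhibition", "irritability",
    "aberrant motor behavior", "sleep", "eating",
]
RAS = ["aggression", "disinhibition"]

print(classify_instrument(NPI_12))  # twelve domains
print(classify_instrument(RAS))     # two domains
```

With this rule, an instrument covering exactly four BPSD is still counted as narrow.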

A huge variety of scales exists, with different numbers of items per BPSD. Among these scales, the most complete is probably the NPI (Cummings et al., 1994), which also has the advantage of reducing redundancy by assigning only one item to each BPSD of interest. In the NPI-12 version, the scale also includes eating and sleeping problems. However, the NPI groups together physical and verbal aggression and agitation, which probably makes it less sensitive to problems that may be worth discriminating. Other instruments inspired by the same principles of comprehensiveness and brevity include the Columbia Behavior Scale for Dementia (CBS-8), Abe's BPSD score (ABS), and the BPSD in Down syndrome scale (BPSD-DS). The ABS groups together delusion and hallucinations, eating and toileting problems, and agitation and euphoria but discriminates between verbal and physical aggression. However, the various items are scored differently, with proportionally more weight given to aberrant motor behavior and eating and toileting problems (Abe et al., 2015). The CBS-8 groups together agitation and anxiety, which may be harder for caregivers to distinguish, and does not register behaviors that are less disruptive, such as apathy and depression (Mansbach et al., 2021). The BPSD-DS (Dekker et al., 2018), like the NPI, does not differentiate between physical and verbal aggression.

Almost all other broad instruments consider at least one BPSD with more than one item. When the scale is intended to be used with a cumulative score, this is equivalent to giving weights to single dimensions, and therefore, in most cases, the scale is oriented toward a certain cluster (or clusters) of BPSD. Some of these scales are more focused on “productive” behaviors. For instance, the Disruptive Behavior Scale (DBS) assigns proportionally more weight to physical aggression and disinhibition, with 14 and 10 items out of a total of 45 items pertaining to these categories (Beck et al., 1997). The Rating Scale for Aggressive Behavior in the Elderly (RAGE) uses nine items out of 21 to investigate aggression (Patel and Hope, 1992). The California Dementia Behavior Questionnaire Caregiver and Clinical Assessment of Behavioral Disturbance (CDBQ) assigns 12 items to delusion and 10 to hallucinations of a total of 81 items (Victoroff et al., 1997). The Columbia University Scale for Psychopathology in Alzheimer's disease (CUSPAD) follows in the same direction by assigning two-thirds of the items to delusions and hallucinations (Devanand et al., 1992a). The Manchester and Oxford Universities Scale for the Psychopathological Assessment of Dementia (MOUSEPAD) goes even further, with roughly half of its 59 items pertaining to the psychotic cluster (Allen et al., 1997). The Cohen-Mansfield Agitation Inventory (CMAI), which is intended as a measure of agitation, gives proportionally more weight to agitation and physical aggression but still includes a broad range of BPSD, such as aberrant motor behavior, disinhibition, and even depression (Cohen-Mansfield et al., 1989b).
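The weighting implied by a cumulative score can be made explicit by dividing the number of items devoted to a cluster by the total number of items. The following minimal sketch uses only the item counts quoted above; the function and dictionary names are illustrative, and the calculation assumes that every item has the same scoring range, which may not hold for every scale.

```python
from fractions import Fraction

# Item counts quoted in the text: (items devoted to the cluster, total items).
ITEM_COUNTS = {
    ("DBS", "physical aggression"): (14, 45),
    ("DBS", "disinhibition"): (10, 45),
    ("RAGE", "aggression"): (9, 21),
    ("CDBQ", "delusions"): (12, 81),
    ("CDBQ", "hallucinations"): (10, 81),
}


def implicit_weight(cluster_items: int, total_items: int) -> Fraction:
    """Share of an unweighted cumulative score that one cluster can contribute,
    assuming all items share the same scoring range (an assumption)."""
    if total_items <= 0 or not 0 <= cluster_items <= total_items:
        raise ValueError("need total > 0 and 0 <= cluster items <= total")
    return Fraction(cluster_items, total_items)


for (scale, cluster), (k, n) in ITEM_COUNTS.items():
    weight = implicit_weight(k, n)
    print(f"{scale:5s} {cluster:20s} {k:2d}/{n:2d} = {float(weight):.0%}")
```

Under this assumption, physical aggression and disinhibition together account for 24 of the 45 DBS items, that is, just over half of its total score.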

In contrast, other scales focus more on “negative” behaviors, such as apathy or depression. For example, the Behavioral and Mood Disturbance Scale (BMDS) gives a relatively high weight to apathy, with eight items out of 34. However, this scale also includes 10 items related to cognitive aspects, which makes it less specific to the evaluation of BPSD (Greene et al., 1982). The Dementia Mood Assessment Scale (DMAS) and the Revised Memory and Behavior Problems Checklist (RMBPC) assign roughly one-third of their respective items to depression (Sunderland et al., 1988; Teri et al., 1992). Finally, the Cornell Scale for Depression in Dementia (CSDD), despite its name, includes a huge variety of BPSD, with only a quarter of the items properly pertaining to depression (Alexopoulos et al., 1988). It is clear that the choice of instrument implies different kinds of overall judgments on the degree of the patient's behavioral problems, and the specific characteristics of the tool need to be kept in mind when applying it in clinical practice.

Another common weakness of this second group of broad instruments is that they generally include a very high number of items (the Present Behavioral Examination [PBE (Hope and Fairburn, 1992)] contains more than 120 questions). This does not necessarily translate into a better characterization of the patient's problems, since the extreme parcellation of psychopathology this requires may, in fact, be limited by the ability of raters to differentiate between similar behavioral manifestations. Moreover, for practical rather than research purposes, such a level of detail might be completely useless and not worth the time spent acquiring the required information.

Other broad instruments evaluate BPSD in the context of a more comprehensive assessment that also includes cognitive aspects. This may be done by classifying BPSD as a specific separate section of a larger instrument, as is the case with the Cambridge Mental Disorders of the Elderly Examination (CAMDEX) (Roth et al., 1986) or the Alzheimer's Diseases Assessment Scale (ADAS-Noncog) (Rosen et al., 1984), or by mixing cognitive and behavioral items in the instrument, as in the Consortium to Establish a Registry for Alzheimer's Disease/Behavior Rating Scale for Dementia (CERAD/BRSD) (Tariot et al., 1995), the CDBQ (Victoroff et al., 1997), or the RMBPC (Teri et al., 1992).

Finally, for broad instruments, a single score may not be relevant at all for clinical or even research purposes, as it will not be able to discriminate between dramatically different types of patients. For instance, a patient with isolated severe apathy and a patient with important isolated aggressive behaviors may have the same, rather low score of 12 on the NPI. However, these are two completely different patients, who would require substantially contrasting approaches. A few instruments, such as the Behavioral Syndromes Scale for Dementia (BSSD), attempted to circumvent this issue by allowing the use of subscores for each cluster (Devanand et al., 1992b). The only reason for using broad instruments with a single summary score would be to give the clinician an idea of the extent of a patient's behavioral disturbances at a certain time point, in order to evaluate the need for specific interventions and, by administering the same scale at a second time point, their efficacy. However, qualitative data still need to be retained, and the scores cannot be used in an uncritical manner.
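To illustrate how a single total can hide very different profiles, the sketch below assumes the usual NPI convention in which each domain score is the product of frequency (1 to 4) and severity (1 to 3), so that one domain contributes at most 12 points. The class and function names and the two patient profiles are hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class DomainRating:
    """Rating for one domain in which the symptom is present."""
    frequency: int  # 1 to 4
    severity: int   # 1 to 3

    def score(self) -> int:
        if not (1 <= self.frequency <= 4 and 1 <= self.severity <= 3):
            raise ValueError("frequency must be 1-4 and severity 1-3")
        return self.frequency * self.severity


def total_score(profile: Mapping[str, DomainRating]) -> int:
    """Sum of domain scores; domains absent from the profile count as 0."""
    return sum(rating.score() for rating in profile.values())


# Hypothetical patients, each with a single severe and very frequent symptom.
patient_a = {"apathy": DomainRating(frequency=4, severity=3)}
patient_b = {"agitation/aggression": DomainRating(frequency=4, severity=3)}

print(total_score(patient_a), total_score(patient_b))
```

Both totals equal 12, yet the domains behind them do not overlap, which is why the per-domain scores need to be kept alongside the total.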

Narrow instruments are summarized in Table 3.

The Algase Wandering Scale (AWS) was the only tool specifically designed to investigate aberrant motor behavior in patients in long-term care, with 28 items divided into a number of subscales (Algase et al., 2001).

Agitation can be better evaluated by the Brief Agitation Rating Scale [BARS (Finkel et al., 1993)], the Pittsburgh Agitation Scale [PAS (Rosen et al., 1994)], and the Scale for the Observation of Agitation in Persons with Dementia of the Alzheimer's type [SOAPD (Hurley et al., 1999)]. All of these scales have the advantage of being relatively short, with no more than 10 observational items; except for the SOAPD, however, they also include items related to aggression. Unfortunately, the SOAPD scoring system is extremely complicated, with a weight given to each of its seven items, and this may explain its relatively low level of use. They are all to be used in nursing homes (NH) and rated by nurses; the PAS can also be used to evaluate hospital inpatients, and it is probably the best instrument to rate agitation.

The Ryden Aggression Scale (RAS) is a caregiver-rated instrument focusing specifically on aggression, with 20 items pertaining to aggression and five items more loosely related to disinhibition (Ryden, 1988). The Aggressive Behavior Scale (called ABeS in this review to distinguish it from Abe's BPSD score, but commonly abbreviated as ABS) has almost the same features, but it has the advantage of being shorter (Perlman and Hirdes, 2008). The Disruptive Behavior Rating Scales (DBRS) are quick instruments focusing on particularly problematic symptoms and include aggression, agitation, and aberrant motor behaviors (Mungas et al., 1989). The ABC Dementia Scale (ABC-DS) has three domains: Domain B (BPSD) includes aspects of agitation, irritability, and uncooperativeness, while the other two domains pertain to activities of daily living and cognition (Kikuchi et al., 2018). The Irritability Questionnaire (IQ), which has two separate forms, one to be administered to the person and one to the caregiver, dedicates half the items in the latter to aggression, while the patient form focuses more properly on irritability (Craig et al., 2008). Other drawbacks of this scale are that it partially relies on the ability of the patient to self-report symptoms, which may be impaired in the later stages of dementia, and the fact that some items appear to be rated in opposite directions, which would make the use of a total score less meaningful. For instance, “I have been feeling relaxed” and “things are going according to plan” are rated the same as “I lose my temper and shout or snap at others”, which may be a mistake.

The Rating Anxiety in Dementia scale (RAID) offers a comprehensive evaluation of anxiety, although a number of items are quite non-specific (such as palpitations or fatigability, which could also be related to general medical conditions) (Shankar et al., 1999).

While no scale is specifically designed to rate depression, a few instruments focus specifically on apathy, such as the Apathy Inventory [probably the shortest one, with only three items (Robert et al., 2002)], the Dementia Apathy Interview and Rating scale [DAIR, only suitable for mild and moderate phases (Strauss and Sperry, 2002)], and the more recent Person–Environment Apathy Rating [PEAR (Jao et al., 2016), see Section 3.6]. Half the items in the Irritability-Apathy Scale (IAS) pertain to apathy and half to irritability, although they clearly do not reflect the same latent variable (Burns et al., 1990).

No tools focus specifically on symptoms such as delusions, hallucinations, or euphoria. Finally, a few scales are available for particular issues, such as the Signs and Symptoms Accompanying Dementia while Eating [SSADE (Takada et al., 2017)], the Sleep Disorders Inventory [SDI (Tractenberg et al., 2003)] and the Resistiveness to Care Scale [RTC-DAT (Mahoney et al., 1999)].

The vast majority of tools have been developed for patients with unspecified dementia, although the prevalence of BPSD such as apathy, depression, and anxiety may vary among different forms of neurodegenerative diseases (Collins et al., 2020). Considerably fewer instruments have been validated for particular diseases, the most common being AD. These instruments include the already mentioned ABC-DS, ADAS-Noncog, Apathy Inventory, CUSPAD, SDI, and SOAPD (Rosen et al., 1984; Devanand et al., 1992a; Hurley et al., 1999; Robert et al., 2002; Tractenberg et al., 2003; Kikuchi et al., 2018), and the Troublesome Behavior Scale [TBS (Asada et al., 1999)], the Agitated Behavior in Dementia scale [ABID (Logsdon et al., 1999)], and the Behavioral Pathology in Alzheimer's Disease Rating Scale [BEHAVE-AD (Reisberg et al., 1987)]. Two scales, the IAS and the IQ, have been tested in mixed populations that also included patients with Huntington's disease (HD), with different results. The IAS shows higher values for aggression for HD patients compared to AD patients (Burns et al., 1990), but neither scale demonstrated significant differences in irritability between the two conditions (Burns et al., 1990; Craig et al., 2008).

A few instruments explore BPSD in specific disorders. The BPSD-DS is intended to be used only in persons with Down syndrome aged over 30 years, and, accordingly, it includes items that are peculiar to the psychopathology of this disease, such as obstinacy (Dekker et al., 2018). The Mild Behavioral Impairment Checklist (MBI-C) is a rather long consensus-derived instrument, specifically envisaged for the recently introduced phase of MBI, in which mild behavioral symptoms analogous to BPSD may predate the onset of cognitive impairment. This scale is designed to comprehensively explore the diagnostic criteria for this condition; in this sense, it may be regarded as a diagnostic tool rather than a form of behavioral assessment (Ismail et al., 2017). Subsequent papers introduced different cutoff levels for identifying MBI in subjective cognitive decline (>6.5) and mild cognitive impairment (>8.5) (Mallo et al., 2018, 2019).
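As a minimal sketch of how the reported cutoffs might be applied, the function below checks whether a total MBI-C score exceeds the threshold quoted above for a given cognitive status. The function name and the status labels are hypothetical, and the check is an illustration rather than a validated screening procedure.

```python
# Cutoffs quoted in the text (Mallo et al., 2018, 2019); the keys are
# illustrative labels for subjective cognitive decline and mild cognitive impairment.
MBI_C_CUTOFFS = {"SCD": 6.5, "MCI": 8.5}


def exceeds_mbi_cutoff(total_score: float, cognitive_status: str) -> bool:
    """Return True if the total score is strictly above the reported cutoff."""
    try:
        cutoff = MBI_C_CUTOFFS[cognitive_status]
    except KeyError:
        raise ValueError(f"no cutoff reported for {cognitive_status!r}") from None
    return total_score > cutoff


print(exceeds_mbi_cutoff(7, "SCD"))
print(exceeds_mbi_cutoff(7, "MCI"))
```

The same total of 7 is above the cutoff for subjective cognitive decline but below the cutoff for mild cognitive impairment, so the cognitive status has to be known before the score is interpreted.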

Many instruments have been validated in the elderly population, with or without cognitive impairment, especially in the NH context. These instruments mostly focus on agitation and disruptive behaviors that may pertain to a more general population of cognitively impaired and psychiatric elderly patients, including the Agitation Behavior-Mapping Instrument [ABMI (Cohen-Mansfield et al., 1989a)], BARS (Finkel et al., 1993), CMAI (Cohen-Mansfield et al., 1989b), Harmful Behaviors Scale [HBS (Draper et al., 2002)], and RAGE (Patel and Hope, 1992). Another use of assessment scales in the general elderly population is in the diagnostic evaluation and follow-up assessment in outpatient memory clinics of persons who may have cognitive impairment. These instruments, which also include cognitive aspects, are represented by the CAMDEX, DBRS, and BMDS (Greene et al., 1982; Roth et al., 1986; Mungas et al., 1989). Finally, the Behavioral Problem Checklist (BPC) is a scale designed to capture aspects of psychopathology and caregiver distress in patients with dementia or chronic physical illness (O'Malley and Qualls, 2016).

The characteristics of the different tools in terms of population, rater, setting, and timing are summarized in Table 4.

A number of instruments are intended to be used in outpatient memory clinics. Often, the items in these scales have to be rated by caregivers. While the presence of an informant can surely be an advantage compared to the limited significance of behaviors directly observed by the clinician during the relatively short time of an outpatient visit, several general considerations need to be kept in mind. First, the way in which caregivers respond may be influenced by factors related to the scale, such as its length and complexity, and factors related to the caregiver, such as their age, education, gender, relationship with the patient, and even distress associated with this still underrecognized commitment. The complexity of the instrument appears to be the most limiting factor, as emerged from focus groups with caregivers which Kales and colleagues conducted before developing their own tool (Kales et al., 2017). Therefore, it is unlikely that scales like the CDBQ, which has an extremely high number of items, would be suitable for all caregivers (Victoroff et al., 1997). Second, an important issue is caregiver recall bias, which may lead to underreporting or overreporting of psychiatric symptoms in persons living with dementia (La Rue et al., 1992). While the majority of caregiver-rated scales require the informant to recall the last 1–4 weeks, some instruments, such as the BPC or the IAS, ask the rater to recall the last 4–6 months or even from the beginning of the illness, which may not be an optimal strategy for obtaining reliable information (Burns et al., 1990; O'Malley and Qualls, 2016). However, in the case of BPC, it is not clear whether this instrument needs to be used at home, as the authors report that they “elicit caregivers' observations of daily behaviors”. In any case, it is definitely not observational, as caregivers are asked to report their overall judgment about frequency or severity of behaviors.

Another important factor to consider is caregiver burden or distress. While some scales that evaluate this aspect exist and are widely used (Bédard et al., 2001), they are usually intended for any caregiver and are not specifically designed to capture the burden associated with BPSD. Some of the instruments administered to caregivers also include subscales to evaluate BPSD-related distress, which may be useful to identify unmet needs in carers. Examples of these are the BPSD assessment tool—Thai version [BPSD-T (Phannarus et al., 2020)], ABID, BEHAVE-AD, BMDS, BPSD-DS, CDBQ, SDI, and the currently most used version of NPI (Greene et al., 1982; Reisberg et al., 1987; Victoroff et al., 1997; Kaufer et al., 1998; Logsdon et al., 1999; Tractenberg et al., 2003; Dekker et al., 2018). It may be argued that, for most caregivers, separating the severity of a BPSD from its associated burden can be difficult, and collecting data that are expected to be correlated does not provide any added value. However, this may not apply to all cases.

A common way to overcome these limitations in the outpatient clinic is to have the clinician rate the items after a structured or, more rarely, unstructured interview with the patient and/or an informant. In a few cases [such as the ADAS-Noncog and the CAMDEX (Rosen et al., 1984; Roth et al., 1986)], the clinician can base their judgment also on observational data gathered during the visit. A special case is the MBI-C, which can be compiled by the patient, an informant, or the clinician (Ismail et al., 2017).

Instruments intended to be used in NH are almost inevitably rated by nurses and are often based on the direct observation of the patients. The use of (trained) professionals rather than informal caregivers probably increases the ability to discriminate between different items; nevertheless, this is not completely immune from recall bias. While this is minimized when the timing is within a nurse's shift [as in the CMAI, PAS or RTC-DAT (Cohen-Mansfield et al., 1989a; Rosen et al., 1994; Mahoney et al., 1999)], it can still be a problem when the nurse is required to recall periods as long as 2 months [in the Challenging Behaviors Scale, CBS (Moniz-Cook et al., 2001)]. The SSADE, rather than involving nurses, was rated by dietitians involved in nutritional care in NH, with direct observation during mealtimes over the course of a month (Takada et al., 2017).

A few instruments have been adapted for use in hospitals. These include the PAS, the Dementia Observation System (BSO-DOS, 2019), the Behavioral Activities in Demented Geriatric Patients [BADGP (Ferm, 1974)], the Behavioral and Emotional Activities Manifested in Dementia [BEAM-D (Sinha et al., 1992)], the RMBPC (Teri et al., 1992), and the TBS (Asada et al., 1999). Often, these instruments do not state the period of observation, which is probably intended to be tailored to the individual patient. Although considerably more effort has been expended on scales to identify and prevent delirium in geriatric inpatients, BPSD might be quite common in this specific population, with a study finding a prevalence of up to 76% in a general hospital. This may suggest that more useful observational tools need to be developed for assessing BPSD in hospitals, as their presence may result in longer and more expensive stays (Hessler et al., 2018).

Finally, a few instruments can be filled out at home. In the case of RAS or the ABS (Ryden, 1988; Abe et al., 2015), this would be no different from administering the scale in a memory clinic setting. The only tool specifically intended to be used at home is the WeCareAdvisor system (Kales et al., 2017), discussed in Section 3.7.

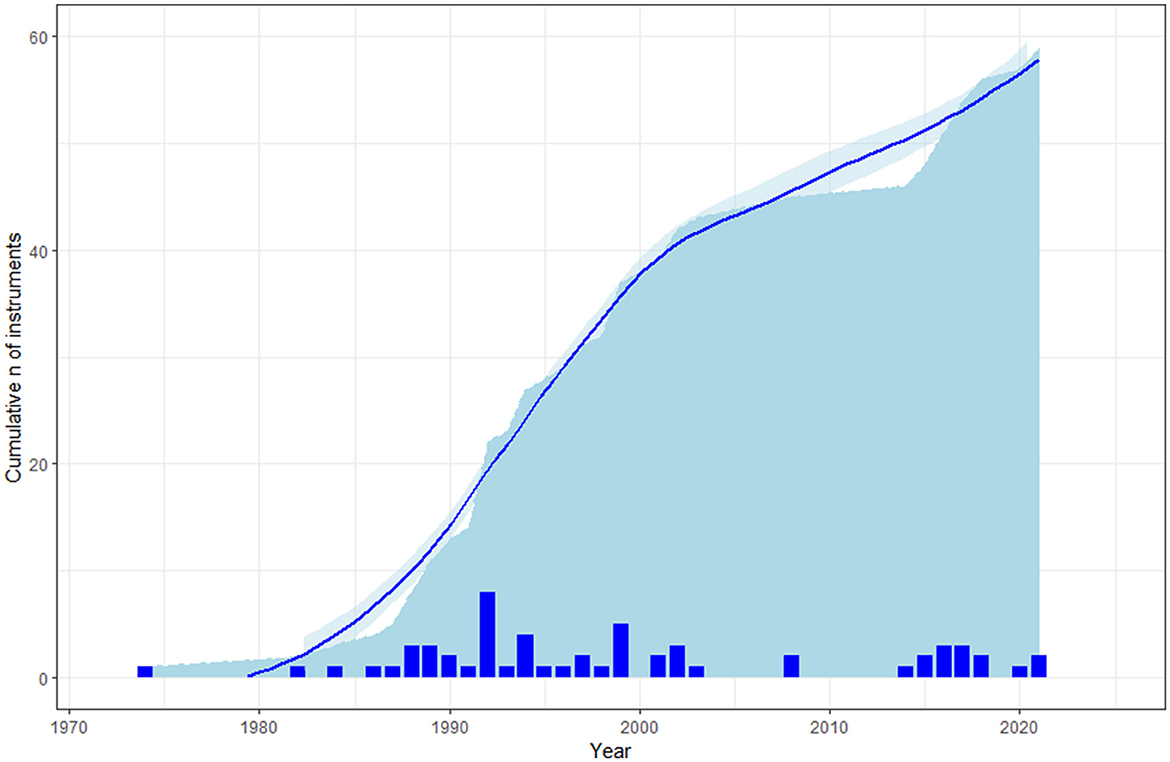

Although we did not include every possible instrument proposed in the literature, we were still able to define three main phases in the history of BPSD assessment, as shown in the cumulative graph of BPSD tools over time (Figure 1). The first phase, which ran from the 1980s to roughly the year 2000 and saw the proliferation of pen-and-paper scales, includes the vast majority of instruments available today. In the 2000s, only a few new tools were introduced. These were mostly narrow scales, perhaps attempting to fill the gap in the evaluation of specific BPSD domains (this phase corresponds to the plateau in Figure 1). Finally, we owe the steep increase in the number of instruments proposed in the last decade to a combination of scales dedicated to specific issues or populations, on the one hand, and new treatment-oriented approaches, on the other hand.

Figure 1. A cumulative graph of the instruments for evaluating BPSD included in the current review by year. The bars represent the number of new instruments for each year.
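The two series described in the caption (new instruments per year and their running total) can be derived from a list of publication years as sketched below. The dictionary holds only a small subset of the instruments, with years taken from the citations in this review, so the output illustrates the calculation and does not reproduce Figure 1.

```python
from collections import Counter
from itertools import accumulate
from typing import Iterable, List, Tuple

# Illustrative subset; years are those of the original citations in the text.
FIRST_PUBLICATION_YEAR = {
    "BEHAVE-AD": 1987,
    "CSDD": 1988,
    "CMAI": 1989,
    "NPI": 1994,
    "AWS": 2001,
    "MBI-C": 2017,
    "BPSD-DS": 2018,
}


def cumulative_counts(years: Iterable[int]) -> List[Tuple[int, int, int]]:
    """Return (year, new instruments that year, cumulative total) in year order."""
    new_per_year = Counter(years)
    ordered_years = sorted(new_per_year)
    totals = accumulate(new_per_year[year] for year in ordered_years)
    return [(year, new_per_year[year], total)
            for year, total in zip(ordered_years, totals)]


for year, new, total in cumulative_counts(FIRST_PUBLICATION_YEAR.values()):
    print(year, new, total)
```

Years without any new instrument are simply absent from the result; a plotting step would need to fill them in to show the plateau.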

However, the majority of instruments used nowadays are still those that were introduced in the first phase. For instance, scales such as BEHAVE-AD were used in the trials leading to the approval of risperidone, rivastigmine, and memantine in AD. The BEHAVE-AD underwent two subsequent modifications resulting in the BEHAVE-AD-FW (which adds frequency weights to the already present severity weights) and the E-BEHAVE-AD (an empirical version which includes direct observation of BPSD) (Reisberg et al., 2014).

Table 5 shows various measures estimating the impact of the different tools on research. The most obvious measure is the number of citations of the original study (which was checked with Scopus on 31 December 2022). However, to provide a measure of the centrality of the tool itself, we also report the number of article abstracts that specifically cite it, and the ratio of these mentions to the total number of citations. We normalized citations and mentions in abstracts by the number of years since the tool was introduced. An example of the usefulness of this approach is provided by the ADAS-Noncog: the original study by Rosen is the second most-cited paper in our list, but the tool is mentioned in only 15 abstracts and has had considerably less success than the ADAS-Cog (Kueper et al., 2018). This may be due to a number of reasons, including the absence of normative data and the fact that some items are probably not BPSD. The same can be said for the CAMDEX, which is mostly used as an assessment tool for dementia and not primarily for BPSD.
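The impact measures described above can be sketched as follows. The class and field names are hypothetical, the number of years is assumed to be the difference between 2022 and the year of introduction (with a minimum of one year), and the example values are placeholders rather than data from Table 5.

```python
from dataclasses import dataclass

REFERENCE_YEAR = 2022  # citations were checked on 31 December 2022


@dataclass(frozen=True)
class ToolImpact:
    name: str
    year_introduced: int
    citations: int          # citations of the original study
    abstract_mentions: int  # abstracts that specifically mention the tool

    @property
    def years_available(self) -> int:
        # Assumption: at least one year, to avoid dividing by zero.
        return max(REFERENCE_YEAR - self.year_introduced, 1)

    @property
    def citations_per_year(self) -> float:
        return self.citations / self.years_available

    @property
    def mentions_per_year(self) -> float:
        return self.abstract_mentions / self.years_available

    @property
    def mention_ratio(self) -> float:
        """Abstract mentions divided by total citations (0.0 if never cited)."""
        return self.abstract_mentions / self.citations if self.citations else 0.0


# Placeholder values for a hypothetical tool.
example = ToolImpact("Hypothetical scale", 2002, citations=400, abstract_mentions=100)
print(example.citations_per_year, example.mentions_per_year, example.mention_ratio)
```

A tool whose original paper is highly cited but rarely named in abstracts would show a high citation rate with a low mention ratio, which is the pattern described for the ADAS-Noncog.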

Based on these data, it appears that the NPI is by far the most widely used instrument in clinical studies nowadays, and several additional versions have been developed, such as the NPI-12 [with the addition of eating and sleeping problems, which is now the standard (Cummings, 1997), together with the caregiver distress scale (Kaufer et al., 1998)], the NPI-Q [which is a quick tool administered to the caregiver (Kaufer et al., 2000)], the NPI-nursing home [NPI-NH (Wood et al., 2000), for which a diary version, the NPI-Diary, has also been developed (Morganti et al., 2018)], the NPI-plus [including a 13th item, cognitive fluctuations, and intended for patients with dementia with Lewy bodies (Kanemoto et al., 2021)], the NPI-C [an expanded clinician-rated version (De Medeiros et al., 2010)], and a mobile version developed in Thailand (Rangseekajee et al., 2021).

A few other scales have been subject to further modifications in a similar way to expand and adapt them to other settings. For instance, revisions to the AWS have resulted in a second version with more items [AWS2 (Algase et al., 2004a)] and in a version for individuals living in the community to be compiled by the caregiver [AWS-CV (Algase et al., 2004b)]. The BPSD-DS also underwent a revision and is now available in a second version [BPSD-DS II (Dekker et al., 2021)]. The CAMDEX was also revised [CAMDEX-R (Roth et al., 1998; Ball et al., 2004a)] and adapted for persons with Down syndrome [CAMDEX-DS and CAMDEX-DS II (Ball et al., 2004a)]. The MOUSEPAD also exists in a shorter version [mini-MOUSEPAD (Ball et al., 2004b)] and so do the DBDS [DBD13 (Machida, 2012)], the CERAD/BRSD [with a 17-item version (Fillenbaum et al., 2008)], and the CSDD [mCSDD4-MA, a four-item scale for advanced dementia (Niculescu et al., 2021)].

The BEHAVE-AD, CSDD, and CMAI are other widely used scales, and it is possible, based on the available data, that newer instruments such as the MBI-C may achieve comparable success in the near future.

Another factor that may be both a cause and an effect of an instrument's success is language availability. Table 6 lists the languages in which the various instruments have been used in the literature, with a focus on the 10 currently most spoken languages (https://www.ethnologue.com/guides/ethnologue200). However, we excluded Bengali, Russian, and Urdu from the columns of the table, as we are not aware of any scale other than the NPI being translated into these languages. The high number of languages into which they have been translated testifies to the success of instruments such as the NPI, CSDD and BEHAVE-AD. Regrettably, almost half of the tools are only available in English. Another way of looking at Table 6 is as a rough estimate of the scientific interest in BPSD assessment of different communities, which is not always proportional to their size. While it is foreseeable that most scales have been adapted for Spanish, French, Chinese or even Japanese, the fact that many of them have been validated in Italian (10 scales), Dutch (7), Korean (7), and Norwegian (5) probably reflects the great involvement of the respective countries in BPSD research.

Cross-cultural considerations need to be taken into account, as some instruments are specifically designed for issues that might not be as relevant in a different context. For instance, a number of scales developed in Japan include items related to toileting behaviors (Asada et al., 1999; Abe et al., 2015; Mori et al., 2018) or to aspects that seem less easy to rate objectively, such as interfering with a happy home circle (Asada et al., 1999). These BPSD are rarely mentioned in Western scales (incontinence is more often listed as a physical issue, rather than a behavior). Conversely, disinhibition, anxiety, and shadowing are almost never present in Japanese instruments. Although it is possible that some variation exists in the geographic prevalence of specific BPSD, it cannot be excluded that some behaviors, albeit present, are not perceived as issues in different cultures. Therefore, it is advisable to use instruments that are relevant to the context in which they are applied.

One important factor regarding usability is the way an instrument is scored and how the scale is interpreted. A significant number of instruments do not provide score cutoffs or interpretations, leaving the clinician with a tool that they might not know how to interpret. Even in longitudinal retesting after treatment, it is not always clear how to interpret changes, and the real added value of using a score at all is questionable if the overall judgment still has to be made in a rather subjective way. This is especially true for broad instruments. To give a practical example of this issue, let us consider two different patients with an NPI total of 24 points, the first resulting from 12 points each on apathy and depression, the second from 12 points each on aggression and delusions. After treatment, the first obtains a score of 18 (nine each on apathy and depression), while the second gets a score of 12 (no more delusions, but still 12 on aggression). It would appear that the second patient has improved more than the first, but, conversely, one might also judge that a 6-point change in the first case could be enough, while a 12-point change in the second case would still be far from acceptable.
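The two-patient example can be laid out as a short sketch that uses the domain scores given in the text. The function and variable names are ours and purely illustrative.

```python
# Domain scores before and after treatment, as given in the example above.
patient_1 = {
    "before": {"apathy": 12, "depression": 12},
    "after": {"apathy": 9, "depression": 9},
}
patient_2 = {
    "before": {"aggression": 12, "delusions": 12},
    "after": {"aggression": 12, "delusions": 0},
}


def summarize(patient):
    """Return total change, per-domain change, and the worst remaining domain score."""
    before, after = patient["before"], patient["after"]
    total_change = sum(before.values()) - sum(after.values())
    per_domain = {domain: before[domain] - after[domain] for domain in before}
    worst_remaining = max(after.values())
    return total_change, per_domain, worst_remaining


for label, patient in (("patient 1", patient_1), ("patient 2", patient_2)):
    print(label, summarize(patient))
```

The total change favors the second patient (12 points against 6), but the per-domain view shows that aggression has not changed at all and remains at the maximum domain score, which the total alone hides.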

Table 7 provides an overview of the scoring rules, the modalities that are evaluated, and the subscales of each instrument. With a few exceptions, the vast majority of the tools are based on Likert scales that evaluate frequency, severity, or both, and, in some cases, also caregiver distress. Alternatives include averaging subscale scores (also based on Likert scales) to make a total score and, in a few cases, using weighted coefficients for each item. These approaches make the use of the instrument more complex, as they imply calculations beyond simple sums [or sum of products, as in the case of the NPI, CBS, SDI, or the Apathy Inventory (Cummings et al., 1994; Moniz-Cook et al., 2001; Robert et al., 2002; Tractenberg et al., 2003)]. An extreme example of this has been provided by the ABC-DS, for which the authors proposed to calculate the Euclidean tridimensional distance between domains as a measure of the total score, with the additional caveat that each item is rated in an unusually decreasing way, with higher scores corresponding to less impairment (Kikuchi et al., 2018). A few tools do not provide any score at all, but rather a treatment-oriented analysis of the impact of behaviors, often in conjunction with an evaluation of possible triggers; these are discussed in the following section. Table 7 also provides a personal indication of the usability of each tool on a qualitative scale in the “Ease of use” column, where “-” means that the instrument is probably too complex, and “+++” means that it is extremely easy, mostly based on its length and scoring rules.
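As a minimal sketch of the sum-of-products rule, the following assumes the usual NPI convention in which each domain that is present is rated for frequency (1 to 4) and severity (1 to 3), and the domain score is their product. The function name and input layout are hypothetical.

```python
def npi_total(ratings):
    """Sum of frequency x severity over the domains that are present.

    ratings maps a domain name to a (frequency, severity) pair;
    domains that are absent are simply left out and contribute 0.
    """
    total = 0
    for domain, (frequency, severity) in ratings.items():
        if not (1 <= frequency <= 4 and 1 <= severity <= 3):
            raise ValueError(f"rating out of range for {domain}")
        total += frequency * severity
    return total


# Two domains at maximum frequency and severity give 12 + 12 = 24.
print(npi_total({"apathy": (4, 3), "depression": (4, 3)}))
```

Even this simple rule already requires one multiplication per domain before summing, which is the added complexity the paragraph above refers to.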

Another important consideration is the availability of the instrument. While the vast majority of published tools are free and, at least for the more recent ones, often included in open access papers, some others are intended for commercial use, such as the CAMDEX (Roth et al., 1986), the ABC-DS (Kikuchi et al., 2018), or the WeCareAdvisor platform (Kales et al., 2017). While the costs are not prohibitive in most cases, ranging from USD 20 to 130, the fact that these tools must be paid for probably makes them less likely to be used worldwide.

The scales and instruments described above are often used as outcomes in clinical trials aiming at establishing the efficacy of interventions in BPSD. Therefore, it is crucial to be aware of their reliability, as this may influence the statistical power of these trials (Matheson, 2019). In this context, reliability can be understood as the ratio of the variance of the true score of a scale (without random errors) to the variance of the total score (which includes random errors) (David, 2003) or as the proportion of the variability of the score that is attributable to what the score should measure in relation to the total variability of the person's responses (Dunn et al., 2014).
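The first of these definitions can be written compactly under the classical test theory assumption that an observed score X is the sum of a true score T and an uncorrelated random error E. The symbols are ours and are introduced only for illustration.

```latex
X = T + E, \qquad
\text{reliability} = \frac{\sigma_T^2}{\sigma_X^2}
                   = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_E^2}
```

Written this way, a scale with no random error has a reliability of 1, and, for a fixed error variance, a more homogeneous sample (smaller true-score variance) yields a lower ratio.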

However, as the reliability of a measure is dependent on its calibration within a specific sample and in a specific context (Dunn et al., 2014), the data provided by the original papers cannot always be assumed to reflect the real-world reliability of the respective instrument, as the samples to which that scale is to be applied might be more or less heterogeneous than the original sample (David, 2003). In other words, an instrument that was judged reliable enough within a sample of relatively young Canadian caregivers might not be reliable in a sample of older Italian caregivers. Keeping this in mind, in this section we have provided an overview of the reliability measurements of the different instruments as measured in the original papers. Scales for which reliability data are available are shown in Table 8. Moreover, education of the caregiver, although important, was left out, as it was available only for the ABID (13.3 ± 2.6 years).

Table 8. Reliability measures of the different scales. Only scales for which reliability data are available are shown.

Reliability can usually take one of three forms: internal consistency, inter-rater reliability, and test-retest reliability. Internal consistency is generally understood as the ratio of the variability between the responses to individual items of a scale and the overall variability of the scale. It is generally measured with Cronbach's alpha, although several limitations and interpretation caveats should be borne in mind with this approach. First, Cronbach's alpha depends on how much the individual items correlate between themselves, and as such, it is expected to be lower for broad constructs and higher for narrow ones. Moreover, while a very low (or even negative) alpha would reflect general disagreement between the items, a very high coefficient would be indicative of redundancy (in this sense, an alpha higher than 0.90 should raise suspicion). Indeed, high alphas seem to be artificially driven in many scales by the use of indefensibly similar items and/or multiplication of items describing the same latent variable, as alpha also increases with the number of items (David, 2003). As shown more than 30 years ago, a scale with poor average item correlation (0.30) and three independent dimensions will have an alpha of 0.28 with six items, and an alpha of 0.64 with 18 items (Cortina, 1993). For instance, a scale with 10 items for apathy and 4 items for depression will predictably show a higher alpha than a scale with just two items for each category, but the latter would probably be less redundant and time-consuming. Yet, over the past three decades, a number of scales with an enormously large number of items have been published, with Cronbach's alphas provided in an attempt to justify the value of the instrument.
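The dependence of alpha on the number of items can be checked with a short calculation. The sketch below assumes standardized items (equal variances), an equal number of items per dimension, a correlation of 0.30 between items of the same dimension, and no correlation between items of different dimensions. Under these assumptions, which are ours, alpha depends only on the number of items and the mean inter-item correlation, and the calculation appears to reproduce the figures quoted from Cortina (1993). The function names are hypothetical.

```python
def mean_inter_item_r(n_items, n_dimensions, within_r):
    """Mean correlation over all item pairs when only same-dimension pairs correlate."""
    items_per_dim = n_items // n_dimensions
    within_pairs = n_dimensions * items_per_dim * (items_per_dim - 1) // 2
    all_pairs = n_items * (n_items - 1) // 2
    return within_r * within_pairs / all_pairs


def alpha_from_mean_r(n_items, mean_r):
    """Alpha for equal-variance items with mean inter-item correlation mean_r."""
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)


alpha_6 = alpha_from_mean_r(6, mean_inter_item_r(6, 3, 0.30))
alpha_18 = alpha_from_mean_r(18, mean_inter_item_r(18, 3, 0.30))
print(round(alpha_6, 2), round(alpha_18, 2))  # prints 0.28 0.64
```

Tripling the number of items more than doubles alpha here, although the three dimensions remain entirely unrelated to one another.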

Another common misconception is that the adaptation of original scales on the basis of alpha after removal of items would result in a better scale. This is a common methodological error, as even in the context of unidimensionality of the scale, the item removed might have lower variance than other items and, therefore, be more reflective of a “true” value within the sample (Dunn et al., 2014).

Furthermore, alpha is often inappropriately reported as a point estimate (Dunn et al., 2014), and no article gives confidence intervals for alphas. However, one has to bear in mind that the smaller the sample, the more imprecise the estimate of alpha (Knapp, 1991). Another common poor practice in articles is to provide total Cronbach's alphas (and total scores for the instrument) even when factor analyses demonstrate that there are many poorly correlated dimensions evaluated by the instrument (Knapp, 1991). This is especially applicable to broad instruments, where a total score is usually a poor indication of the real problems of the patient. However, even as an index of unidimensionality, Cronbach's alpha performs relatively poorly compared to other less-used indexes, such as McDonald's omega (Revelle and Zinbarg, 2009; Dunn et al., 2014). Finally, there are several different alphas, such as Cronbach's alpha, standardized alpha (which is actually not an alpha), and weighted alpha, which is more suitable for scales where different items contribute in different ways to the total score (Knapp, 1991). Therefore, interpretation of the values provided is not always straightforward. The data in Table 8 will help the reader put the given alphas into context.
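One way to report alpha with an interval rather than as a point estimate is a percentile bootstrap over respondents. The sketch below is only an illustration under stated assumptions: the data are simulated (one shared latent trait plus independent item noise), the function names are hypothetical, and other interval methods exist.

```python
import random


def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / (len(values) - 1)


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent."""
    k = len(rows[0])
    item_variances = sum(variance([row[j] for row in rows]) for j in range(k))
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variances / total_variance)


def bootstrap_alpha_ci(rows, n_boot=500, level=0.95, seed=0):
    """Percentile bootstrap interval, resampling respondents with replacement."""
    rng = random.Random(seed)
    n = len(rows)
    alphas = sorted(
        cronbach_alpha([rows[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    lower = alphas[int((1 - level) / 2 * n_boot)]
    upper = alphas[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper


def simulate_scale(n_respondents, n_items=6, seed=1):
    """Simulated responses, used here only as placeholder data."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_respondents):
        latent = rng.gauss(0, 1)
        rows.append([latent + rng.gauss(0, 1.5) for _ in range(n_items)])
    return rows


small_ci = bootstrap_alpha_ci(simulate_scale(30))
large_ci = bootstrap_alpha_ci(simulate_scale(300))
print("n=30: ", small_ci)
print("n=300:", large_ci)
```

With the same simulated scale, the interval obtained from 30 respondents is expected to be noticeably wider than the one obtained from 300, which is the imprecision in small samples that the paragraph above warns about.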

Inter-rater reliability measures the variation between two or more raters evaluating the same sample, while test-retest reliability measures the consistency of the results when the same test is repeated at different time points within the same sample. Both can be estimated with an intraclass correlation coefficient (ICC), but ICC does not represent a single measure, as there are up to 10 ways to calculate it, each of which is subject to different assumptions (model, actual measurement protocol and interest in absolute agreement vs consistency between raters) and interpretations (Koo and Li, 2016). Although the two-way random-effect model with absolute agreement would be the preferred ICC for inter-rater reliability because of its generalizability, and the two-way mixed-effect model should be used for test-retest reliability studies (Koo and Li, 2016), the specification of the ICC is rarely reported. As for Cronbach's alpha, ICCs are dependent on the degree of homogeneity of the sample for which they are calculated, and therefore, caution should be applied when extending its value to other populations (Aldridge et al., 2017; Matheson, 2019). Moreover, crude intra-observer agreement, Pearson's r, and Cohen's k are often used, even though Pearson's r represents only a correlation coefficient (but, on top of its several limitations, correlation does not imply agreement) (Aldridge et al., 2017), and Cohen's k is intended as a measure of inter-rater agreement for nominal data (Cohen, 1960), while most scales are actually based on ordinal or interval data. For ordinal rating scales, weighted Cohen's k is more appropriate (Cohen, 1968; de Raadt et al., 2021), but the way in which disagreement between observers can be regarded varies somewhat arbitrarily, depending on the weight model, as quadratic weights consider distant ratings more seriously than linear weights (de Raadt et al., 2021). 
However, as for the ICC, the type of weight used is not often reported, and the arbitrariness of the decision on the specific weights has led some authors to advise against its use (de Raadt et al., 2021). For the sake of consistency, only values for ICC are reported in Table 8. Traditionally, values between 0.4 and 0.6 have been considered fair, between 0.6 and 0.74 good, and above 0.75 excellent (Matheson, 2019).
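Two of the points above, that correlation does not imply agreement and that the choice of weights changes weighted kappa, can be illustrated with a small sketch. It computes single-measure ICCs from the two-way ANOVA mean squares (absolute agreement and consistency) and a weighted kappa based on disagreement weights. The data are hypothetical and the function names are ours; for real analyses, a validated statistical package is preferable.

```python
def icc_two_way_single(ratings):
    """Single-measure ICCs from a subjects x raters table (two-way ANOVA).

    Returns (absolute_agreement, consistency).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    agreement = (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)
    consistency = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    return agreement, consistency


def weighted_kappa(rater_a, rater_b, n_categories, power):
    """Weighted Cohen's kappa for categories 0..n_categories-1.

    power=1 uses linear disagreement weights, power=2 quadratic ones.
    """
    n, c = len(rater_a), n_categories
    observed = [[0.0] * c for _ in range(c)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1 / n
    marg_a = [sum(observed[i]) for i in range(c)]
    marg_b = [sum(observed[i][j] for i in range(c)) for j in range(c)]

    def weight(i, j):
        return (abs(i - j) / (c - 1)) ** power

    d_obs = sum(weight(i, j) * observed[i][j] for i in range(c) for j in range(c))
    d_exp = sum(weight(i, j) * marg_a[i] * marg_b[j] for i in range(c) for j in range(c))
    return 1 - d_obs / d_exp


# Hypothetical data: rater B always scores 3 points higher than rater A.
offset_ratings = [[2, 5], [4, 7], [6, 9], [8, 11], [10, 13]]
agreement, consistency = icc_two_way_single(offset_ratings)

# Hypothetical ordinal ratings (0-3) with three one-step disagreements.
ratings_a = [0, 0, 1, 1, 2, 2, 3, 3]
ratings_b = [0, 1, 1, 2, 2, 3, 3, 3]
kappa_linear = weighted_kappa(ratings_a, ratings_b, 4, power=1)
kappa_quadratic = weighted_kappa(ratings_a, ratings_b, 4, power=2)
print(agreement, consistency, kappa_linear, kappa_quadratic)
```

In the first data set the two raters are perfectly correlated and the consistency ICC is 1, yet the absolute-agreement ICC is about 0.69 because of the constant offset. In the second, the same ratings give a weighted kappa of 0.70 with linear weights and 0.85 with quadratic weights, which shows why the ICC form and the weight model need to be reported.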

For test-retest reliability, it is also important to know the time frame and the sample for which it has been estimated. Sample sizes for test-retest reliability range from 35 participants for the DBRI to 218 for the ABC-DS, while the time frames are generally between one and two weeks.

Finally, concurrent validity is regarded as a measure of correlation between a new scale or instrument and a validated gold standard. Such a reference scale is obviously dependent on the type of tool to be assessed (broad vs narrow) and on historical considerations. For broad instruments, the gold standard is probably the NPI, but concurrent validity can only be established for scales published after its introduction. For narrow instruments, a series of scales have been used as comparators, depending on their status at the time of publication. Table 9 lists the concurrent validity of each instrument as provided in the original paper.

A common problem of the first pen-and-paper scales is the lack of systematic trigger assessment, which limits the ability of the clinician to tailor non-pharmacological interventions to the specific caregiver–patient dyad. Since the use of antipsychotics should be limited in light of the FDA black box warning, current guidelines recommend functional analysis-based non-pharmacological interventions as a first line of treatment for BPSD. These are largely based on modifications of the ABC algorithm (antecedent—behavior—consequence) and include a first phase when contextual data and triggering factors are gathered, a description of the target behavior is provided, and an evaluation of what happens after the behavior occurs, including, for instance, caregiver reactions (Kales et al., 2017; Dyer et al., 2018).
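To make the antecedent, behavior, consequence structure concrete, the sketch below shows one possible way of recording episodes and tallying the antecedents that most often precede a given behavior. The record layout, field names, and example entries are hypothetical and are not taken from any of the instruments or guidelines cited here.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class ABCRecord:
    """One observed episode in antecedent-behavior-consequence form."""
    antecedents: List[str]          # context and possible triggers
    behavior: str                   # description of the target behavior
    consequences: List[str] = field(default_factory=list)  # e.g. caregiver reactions


def common_antecedents(records, behavior):
    """Count how often each antecedent precedes the given behavior."""
    counts = Counter(
        antecedent
        for record in records
        if record.behavior == behavior
        for antecedent in record.antecedents
    )
    return counts.most_common()


# Invented log entries, for illustration only.
log = [
    ABCRecord(["bathing", "evening"], "agitation", ["caregiver raised voice"]),
    ABCRecord(["bathing"], "agitation", ["activity stopped"]),
    ABCRecord(["visitors"], "wandering"),
]
print(common_antecedents(log, "agitation"))
```

A tally of this kind is one simple way in which logged context could point toward a candidate trigger to address with a non-pharmacological intervention.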

The first phase was generally neglected during the early stages of the BPSD scale development in the 20th century, although some examples of it exist. For instance, CABOS, an observational system to study disruptive vocalizations in NH, included data on location, activity, social environment, sound, and the use of physical restraint to orient treatment (Burgio et al., 1994). Even though the hardware and software described in the study are clearly outdated and the focus is limited to a single form of BPSD, this is perhaps the earliest example of these new approaches. The BSO-DOS system followed a few years later, and it is still quite widely used in NH in Canada, with updated manuals available. Rather than providing a score, it is a customizable and observational web-based tool that rates all the behaviors of patients, from sleeping to risk states, calculating the average episodes in terms of blocks and hours and risk. It also includes causes, planning, and interventions. All the data are analyzed and presented in the form of tables to aid in the understanding of the behavior (BSO-DOS, 2019). However, this system requires constant observation, and users require some training.

The Care Plan Checklist for Evidence of Person-Centered Approaches for BPSD (CPCE) differs in that it evaluates only those specific behaviors that are present and addressed (Resnick et al., 2018). This checklist may help explore possible neglected non-pharmacological interventions, but it is nevertheless limited to some items that might be more prevalent in the NH environment and does not take into account the severity of the behavior. The Assessment of the Environment for Person-Centered Management of BPSD and Assessment of Policies for Person-Centered Management of BPSD (AEPC), subsequently developed by the same group, completely forgoes the BPSD assessment, focusing instead on the implementation of policies and management strategies to prevent and minimize the impact of the behaviors in NH (Resnick et al., 2020). A pen-and-paper scale that combines a standard BPSD rating approach with the assessment of environmental factors is the PEAR. It has two subscales to evaluate apathy and environment, with scoring rules to guide attribution of points (Jao et al., 2016). While it is quicker than the previous instruments, it still requires some training, and its use is limited to the management of apathy in NH.

Two very similar theoretical systematizations of these new approaches have recently been proposed, which have the advantage of being implementable in a variety of settings, including the home. The first is the DICE approach, which includes four different phases: Describe, Investigate, Create, and Evaluate. In the Describe phase, the caregiver is asked to “play back” the BPSD as if in a movie to let the clinicians understand and categorize the particular type of behavior rather than leaving the caregiver to define it. Recording of contextual factors in logs or diaries to be compiled at home is encouraged. The Investigate phase concerns the exclusion of potentially modifiable causes (including medical conditions), a strategy inherited from delirium management, taking into account individual, caregiver, and environmental considerations. The Create step involves brainstorming with caregivers to devise non-pharmacological treatment plans; this is followed by the Evaluate phase, in which the efficacy and implementation of the interventions are evaluated (Kales et al., 2014).

Another very similar approach is the DATE algorithm, based on interprofessional Swiss recommendations. It includes the following steps, which are analogous to those of the DICE approach: Describe and measure, Analyze, Treat, and Evaluate (Tible et al., 2017). Both frameworks include the possibility of reverting to pharmacotherapy in case of potentially dangerous behaviors. The WeCareAdvisor platform is based on the DICE approach and was developed after a series of focus groups with caregivers. It is a customizable web-based tool which requires structured inputs from the users on a broad range of BPSD. It also allows for the presence of unstructured notes and feedback, includes a glossary and educational tools, and evaluates adherence to treatment. It is intended to provide caregivers with algorithm-based tailored solutions for a broad range of BPSD-related issues that they might face at home, while still warning them to seek medical advice in potentially dangerous situations (Kales et al., 2017). While it is certainly interesting, clinical trials of this system are currently ongoing, and therefore, whether it will lead to a reduction of BPSD or caregiver distress remains unknown. It also does not completely eliminate caregiver recall bias, and it still requires some training, access to a computer, and time, which might make it unsuitable for some caregivers. Moreover, the precise treatment algorithm is not published, which limits the generalizability of future results.

Although technology is at present extremely pervasive, it seems that the field of BPSD assessment has not fully profited from its potential. While it is not uncommon to observe caregivers showing video recordings of patients' behaviors, video analysis has rarely been considered as a source of information and mostly in NH. Indeed, a recent meta-analysis found that only 10 studies used video recordings to assess BPSD in NH, and the vast majority of studies relied on them to score already available scales, such as the PAS (Rosen et al., 1994) or the RTC-DAT (Mahoney et al., 1999); only in two cases did the authors develop a coding scheme for the behaviors exhibited by NH residents (Kim et al., 2019). While the use of video recordings in the home setting could have important ethical implications and could theoretically alter the behaviors of the patient and the caregiver (Moore et al., 2013), it might still be worth exploring the direct observation of BPSD with the aid of technological resources.

Finally, telemedicine approaches might be a feasible and useful method of treating BPSD, either with standard telephone-based assessment and interventions (Nkodo et al., 2022), or using artificial intelligence or dedicated mobile apps. An example of the latter is currently being studied in a registered clinical trial (Braly et al., 2021).

While it may seem surprising to talk about biomarkers of BPSD, given the importance of the setting and context, it is possible that some specific behaviors, rather than the whole spectrum of BPSD, could be captured and quantified by objective measures and surrogate markers.

Bearing in mind that caregiver report bias is a major problem, it is not surprising that research efforts have attempted to define more “objective” markers of BPSD, directly measurable on the patient. While direct clinical observation of the patient by the treating physician could be more than adequate for this purpose, it is well known that patients' behavior can change dramatically during outpatient visits, giving misleading information. Therefore, researchers have attempted to define surrogate markers for selected BPSD not only by analyzing biological fluids, such as blood or CSF (Bloniecki et al., 2014; Tumati et al., 2022), but also through different biosignals, including neurophysiological (Yang et al., 2013) or autonomic system-related ones (Suzuki et al., 2017).

Prolonged recording by wearable devices could also represent an interesting opportunity to gain insights into the real triggers of BPSD and hence to improve treatment. One of the first such attempts is actigraphy, which is used mainly, but not solely, for assessing aberrant sleep behaviors (Ancoli-Israel et al., 1997; Nagels et al., 2006; Fukuda et al., 2022).

Globally, these surrogate markers have not yet attained clinically useful status due to difficulties defining specific associations with selected behavioral phenotypes and generating meaningful cutoff values. Nevertheless, the amount of data available in the literature is quite significant and deserves a full discussion in a dedicated paper (manuscript in preparation).

While a large number of tools are available to the clinician to evaluate BPSD, it is extremely hard to establish which instruments are the best. Each tool has advantages and disadvantages that depend on the setting, the expertise required, and the people involved. We hope to have provided clinicians with enough data to decide which instrument would be the most appropriate to address their clinical problem when managing BPSD.

New scales and tools are constantly being developed, probably because, despite more than four decades of research, results in the BPSD field remain unsatisfactory. It may therefore be worth considering which features a new instrument should have to be useful and successful. Based on the data provided here, it is clear that a new instrument should be short, easy to understand and use, require little to no training (Kales et al., 2017), and be adapted to the specific setting for which it is intended. It should also be treatment oriented, including triggers and contextual factors, evaluating both frequency and severity, and possibly providing data to clinicians. There should be a strategy to avoid caregiver recall bias, possibly through the use of technologies that allow timely online recording of BPSD. Attention should also be given to cross-cultural options and free availability of the tool to ensure its adoption in our increasingly diverse modern world. A good option would also be to make it customizable to allow for the evaluation of BPSD that need to be addressed.

Finally, for broad instruments, a global score is of limited usefulness, and we would advise against the use of statistics such as Cronbach's alpha to evaluate internal consistency. If a score is considered useful, an interpretation of it should be provided.

Our work also has implications for the concept of BPSD itself. Some models seek to explain the occurrence of BPSD within a common theoretical framework, but even these can only explain a fraction of the extreme variability in behavior (Nagata et al., 2022). As an umbrella term for a disparate and possibly ill-defined set of heterogeneous behavioral alterations, BPSD can most usefully be considered together when there is a possibility of addressing them effectively. This may account for the fact that research on BPSD is moving away from psychometric and toward tailored intervention-oriented approaches. With this practical goal in mind, we believe that drawing together different symptoms and signs under the label of BPSD still has value. However, we should consider psychometrics to be useful mainly in the context of narrow instruments, as this could still have important implications for clinical practice.

Our study is the result of a significant effort to locate the different approaches and instruments used to assess BPSD, and as such, it has some limitations. First of all, albeit comprehensive, this is not a systematic review, and we cannot exclude the possibility that some interesting tools have been left out. Readers can ask to contribute to a Google sheet containing the data we have collected so far by following this link: https://tinyurl.com/bpsdinstruments. Ideally, this could represent a resource to inform clinicians of new approaches that will be available after the publication of our paper.

Second, estimating the real impact of the instruments is clearly difficult, and our method of counting citations and mentions in the abstract of citing papers only provides a rough estimate of the influence of the tools in research. A broader estimation of the impact on clinical practice should ideally rely on a survey or other methods of capturing how favorably clinicians regard the instrument, which could possibly include both awareness of the existence of the tools and their usage.

Finally, our approach to translations does not rule out the possibility that we have overlooked translations into some languages. We would be grateful if readers who are aware of further translations could contribute to the Google sheet previously identified.

In this review, we have described the characteristics of a comprehensive number of instruments to assess BPSD in terms of items, setting, target population, usage, reliability, and theoretical approach. There is a high heterogeneity among different tools, and while the NPI is probably the current gold standard, specific scales may be more suitable for particular settings. Every instrument has some limitations, and there is still a need to develop better tools for evaluating BPSD and guiding their management in clinical practice. We suggest that psychometrically sound scales should only be retained within narrow instruments to assess particular behaviors and that there should be a move toward intervention-oriented approaches when evaluating the total burden of BPSD in a patient.

The process of searching, screening, and appraising the relevant studies has been performed by FP and LC. The manuscript has been drafted by FP and LT and critically revised and commented upon by all authors. All authors contributed to the article and approved the submitted version.

This publication was produced with co-funding from the European Union's Next Generation EU, in the context of the National Recovery and Resilience Plan, Investment Partenariato Esteso PE8 Conseguenze e sfide dell'invecchiamento, Project Age-It (Aging Well in an Aging Society).

FP, CF, IA, and LT declared that they were editorial board members of Frontiers at the time of submission. This had no impact on the peer review process and the final decision.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abe, K., Yamashita, T., Hishikawa, N., Ohta, Y., Deguchi, K., Sato, K., et al. (2015). A new simple score (ABS) for assessing behavioral and psychological symptoms of dementia. J. Neurol. Sci. 350, 14–17. doi: 10.1016/j.jns.2015.01.029

Aldridge, V. K., Dovey, T. M., and Wade, A. (2017). Assessing test-retest reliability of psychological measures: Persistent methodological problems. Eur. Psychol. 22, 207–218. doi: 10.1027/1016-9040/a000298

Alexopoulos, G. S., Abrams, R. C., Young, R. C., and Shamoian, C. A. (1988). Cornell scale for depression in dementia. Biol. Psychiatry. 23, 271–284. doi: 10.1016/0006-3223(88)90038-8

Algase, D. L., Beattie, E. R. A., Bogue, E. L., and Yao, L. (2001). The Algase wandering scale: initial psychometrics of a new caregiver reporting tool. Am. J. Alzheimers. Dis. Other Demen. 16, 141–152. doi: 10.1177/153331750101600301

Algase, D. L., Beattie, E. R. A., Song, J. A., Milke, D., Duffield, C., Cowan, B., et al. (2004a). Validation of the Algase Wandering Scale (Version 2) in a cross cultural sample. Aging Ment. Health. 8, 133–142. doi: 10.1080/13607860410001649644

Algase, D. L., Son, G. R., Beattie, E., Song, J. A., Leitsch, S., Yao, L., et al. (2004b). The interrelatedness of wandering and wayfinding in a community sample of persons with dementia. Dement. Geriatr. Cogn. Disord. 17, 231–239. doi: 10.1159/000076361

Allen, N. H. P., Gordon, S., Hope, T., and Burns, A. (1997). Manchester and Oxford university scale for the psychopathological assessment of dementia. Int. Psychogeriatrics. 9, 131–136. doi: 10.1017/S1041610297004808

Altomari, N., Bruno, F., Laganà, V., Smirne, N., Colao, R., Curcio, S., et al. (2022). A comparison of behavioral and psychological symptoms of dementia (BPSD) and BPSD Sub-syndromes in early-onset and late-onset Alzheimer's disease. J. Alzheimer's Dis. 85, 687–695. doi: 10.3233/JAD-215061

Ancoli-Israel, S., Clopton, P., Klauber, M. R., Fell, R., and Mason, W. (1997). Use of wrist activity for monitoring sleep/wake in demented nursing-home patients. Sleep 20, 24–27. doi: 10.1093/sleep/20.1.24

Asada, T., Kinoshita, T., Morikawa, S., Motonaga, T., and Kakuma, T. (1999). A prospective 5-year follow-up study on the behavioral disturbances of community-dwelling elderly people with Alzheimer disease. Alzheimer Dis. Assoc. Disord. 13, 202–208. doi: 10.1097/00002093-199910000-00005

Azermai, M., Petrovic, M., Elseviers, M. M., Bourgeois, J., Van Bortel, L. M., Vander Stichele, R. H., et al. (2012). Systematic appraisal of dementia guidelines for the management of behavioural and psychological symptoms. Ageing Res. Rev. 11, 78–86. doi: 10.1016/j.arr.2011.07.002

Ball, S., Simpson, S., Beavis, D., and Dyer, J. (2004b). Duration of stay and outcome for inpatients on an assessment ward for elderly patients with cognitive impairment. Qual Ageing Older Adults. 5, 12–20. doi: 10.1108/14717794200400009

Ball, S. L., Holland, A. J., Huppert, F. A., Treppner, P., Watson, P., Hon, J., et al. (2004a). The modified CAMDEX informant interview is a valid and reliable tool for use in the diagnosis of dementia in adults with Down's syndrome. J. Intellect. Disabil. Res. 48, 611–620. doi: 10.1111/j.1365-2788.2004.00630.x

Baumgarten, M., Becker, R., and Gauthier, S. (1990). Validity and reliability of the dementia behavior disturbance scale. J. Am. Geriatr. Soc. 38, 221–226. doi: 10.1111/j.1532-5415.1990.tb03495.x

Beck, C., Heithoff, K., Baldwin, B., Cuffel, B., O'Sullivan, P., and Chumbler, N. R. (1997). Assessing disruptive behavior in older adults: the Disruptive Behavior Scale. Aging Ment. Health. 1, 71–79. doi: 10.1080/13607869757407

Bédard, M., Molloy, D. W., Squire, L., Dubois, S., Lever, J. A., and O'Donnell, M. (2001). The Zarit Burden Interview: A new short version and screening version. Gerontologist. 41, 652–657. doi: 10.1093/geront/41.5.652

Bloniecki, V., Aarsland, D., Cummings, J., Blennow, K., and Freund-Levi, Y. (2014). Agitation in dementia: relation to core cerebrospinal fluid biomarker levels. Dement. Geriatr. Cogn. Dis. Extra. 4, 335–343. doi: 10.1159/000363500

Borson, S., Scanlan, J. M., Sadak, T., Lessig, M., and Vitaliano, P. (2014). Dementia services mini-screen: a simple method to identify patients and caregivers in need of enhanced dementia care services. Am. J. Geriatr. Psychiatry. 22, 746–755. doi: 10.1016/j.jagp.2013.11.001

Braly, T., Muriathiri, D., Brown, J. C., Taylor, B. M., Boustani, M. A., Holden, R. J., et al. (2021). Technology intervention to support caregiving for Alzheimer's disease (I-CARE): study protocol for a randomized controlled pilot trial. Pilot Feasibility Stud. 7, 1–8. doi: 10.1186/s40814-020-00755-2

BSO-DOS (2019). BSO-DOS Informing Person and Family-Centred Care Direct Observation Documentation. Available online at: https://brainxchange.ca/BSODOS

Burgio, L. D., Scilley, K., Hardin, J. M., Janosky, J., Bonino, P., Slater, S. C., et al. (1994). Studying disruptive vocalization and contextual factors in the nursing home using computer-assisted real-time observation. Journals Gerontol. 49, P230–P239. doi: 10.1093/geronj/49.5.P230

Burns, A., Folstein, S., Brandt, J., and Folstein, M. (1990). Clinical assessment of irritability, aggression, and apathy in Huntington and Alzheimer's disease. J. Nerv. Ment. Dis. 178, 20–26. doi: 10.1097/00005053-199001000-00004

Cerejeira, J., Lagarto, L., and Mukaetova-Ladinska, E. B. (2012). Behavioral and psychological symptoms of dementia. Front. Neurol. 3, 73. doi: 10.3389/fneur.2012.00073

Cohen, J. (1968). Weighted kappa: nominal scale agreement provision for scaled disagreement or partial credit. Psychol. Bull. 70, 213–220. doi: 10.1037/h0026256

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 20, 37–46. doi: 10.1177/001316446002000104

Cohen-Mansfield, J., Marx, M. S., and Rosenthal, A. S. (1989a). A description of agitation in a nursing home. Journals Gerontol. 44, M77–M84. doi: 10.1093/geronj/44.3.M77

Cohen-Mansfield, J., Marx, M. S., and Werner, P. (1989b). Full moon: does it influence agitated nursing home residents? J. Clin. Psychol. 45, 611–614.

Collins, J. D., Henley, S. M. D., and Suárez-González, A. (2020). A systematic review of the prevalence of depression, anxiety, and apathy in frontotemporal dementia, atypical and young-onset Alzheimer's disease, and inherited dementia. Int Psychogeriatrics. doi: 10.1017/S1041610220001118

Cortina, J. M. (1993). What is coefficient alpha? An examination of theory and applications. J. Appl. Psychol. 78, 98–104. doi: 10.1037/0021-9010.78.1.98

Craig, K. J., Hietanen, H., Markova, I. S., and Berrios, G. E. (2008). The Irritability Questionnaire: a new scale for the measurement of irritability. Psychiatry Res. 159, 367–375. doi: 10.1016/j.psychres.2007.03.002

Creese, B., and Ismail, Z. (2022). Mild behavioral impairment: measurement and clinical correlates of a novel marker of preclinical Alzheimer's disease. Alzheimer's Res Ther. 14, 1–5. doi: 10.1186/s13195-021-00949-7

Cummings, J. L. (1997). The neuropsychiatric inventory: assessing psychopathology in dementia patients. Neurology. 48, S10–S16. doi: 10.1212/WNL.48.5_Suppl_6.10S

Cummings, J. L., Mega, M., Gray, K., Rosenberg-Thompson, S., Carusi, D. A., Gornbein, J., et al. (1994). The neuropsychiatric inventory: comprehensive assessment of psychopathology in dementia. Neurology. 44, 2308–2314. doi: 10.1212/WNL.44.12.2308

D'Antonio, F., Tremolizzo, L., Zuffi, M., Pomati, S., Farina, E., and SINdem BPSD Study Group (2022). Clinical perception and treatment options for behavioral and psychological symptoms of dementia (BPSD) in Italy. Front. Psychiat. 13, 843088. doi: 10.3389/fpsyt.2022.843088

Streiner, D. L. (2003). Starting at the beginning: an introduction to coefficient alpha and internal consistency. J. Pers. Assess. 80, 99–103. doi: 10.1207/S15327752JPA8001_18

De Medeiros, K., Robert, P., Gauthier, S., Stella, F., Politis, A., Leoutsakos, J., et al. (2010). The Neuropsychiatric inventory-clinician rating scale (NPI-C): reliability and validity of a revised assessment of neuropsychiatric symptoms in dementia. Int Psychogeriatrics. 22, 984–994. doi: 10.1017/S1041610210000876

de Raadt, A., Warrens, M. J., Bosker, R. J., and Kiers, H. A. L. (2021). A comparison of reliability coefficients for ordinal rating scales. J Classif. 38, 519–543. doi: 10.1007/s00357-021-09386-5

Dekker, A. D., Sacco, S., Carfi, A., Benejam, B., Vermeiren, Y., Beugelsdijk, G., et al. (2018). The behavioral and psychological symptoms of dementia in Down syndrome (BPSD-DS) scale: comprehensive assessment of psychopathology in Down syndrome. J. Alzheimer's Dis. 63, 797–820. doi: 10.3233/JAD-170920

Dekker, A. D., Ulgiati, A. M., Groen, H., Boxelaar, V. A., Sacco, S., Falquero, S., et al. (2021). The behavioral and psychological symptoms of dementia in Down syndrome scale (BPSD-DS II): optimization and further validation. J. Alzheimer's Dis. 81, 1505–1527. doi: 10.3233/JAD-201427

Devanand, D. P., Brockington, C. D., Moody, B. J., Brown, R. P., Mayeux, R., Endicott, J., et al. (1992a). Behavioral syndromes in Alzheimer's disease. Int Psychogeriatrics. 4, 161–184. doi: 10.1017/S104161029200125X

Devanand, D. P., Miller, L., Richards, M., Marder, K., Bell, K., Mayeux, R., et al. (1992b). The Columbia university scale for psychopathology in Alzheimer's disease. Arch. Neurol. 49, 371–376. doi: 10.1001/archneur.1992.00530280051022

Drachman, D. A., Swearer, J. M., O'Donnell, B. F., Mitchell, A. L., and Maloon, A. (1992). The caretaker obstreperous-behavior rating assessment (COBRA) scale. J. Am. Geriatr. Soc. 40, 463–470. doi: 10.1111/j.1532-5415.1992.tb02012.x

Draper, B., Brodaty, H., Low, L. F. F., Richards, V., Paton, H., Lie, D., et al. (2002). Self-destructive behaviors in nursing home residents. J. Am. Geriatr. Soc. 50, 354–358. doi: 10.1046/j.1532-5415.2002.50070.x

Dunn, T. J., Baguley, T., and Brunsden, V. (2014). From alpha to omega: a practical solution to the pervasive problem of internal consistency estimation. Br. J. Psychol. 105, 399–412. doi: 10.1111/bjop.12046

Dyer, S. M., Harrison, S. L., Laver, K., Whitehead, C., and Crotty, M. (2018). An overview of systematic reviews of pharmacological and non-pharmacological interventions for the treatment of behavioral and psychological symptoms of dementia. Int Psychogeriatrics. 30, 295–309. doi: 10.1017/S1041610217002344

Evans, T. L., Kunik, M. E., Snow, A. L., Shrestha, S., Richey, S., Ramsey, D. J., et al. (2021). Validation of a brief screen to identify persons with dementia at risk for behavioral problems. J. Appl. Gerontol. 40, 1587–1595. doi: 10.1177/0733464821996521

Ferm, L. (1974). Behavioural activities in demented geriatric patients. Gerontol Clin. (Basel). 16, 185–194. doi: 10.1159/000245521

Fillenbaum, G. G., van Belle, G., Morris, J. C., Mohs, R. C., Mirra, S. S., Davis, P. C., et al. (2008). Consortium to establish a registry for Alzheimer's disease (CERAD): the first twenty years. Alzheimer's Dement. 4, 96–109. doi: 10.1016/j.jalz.2007.08.005

Finkel, S. I., Lyons, J. S., and Anderson, R. L. (1993). A brief agitation rating scale (BARS) for nursing home elderly. J. Am. Geriatr. Soc. 41, 50–52. doi: 10.1111/j.1532-5415.1993.tb05948.x

Finkel, S. I., Silva, J. C., Cohen, G. D., Miller, S., and Sartorius, N. (1998). Behavioral and psychological symptoms of dementia: A consensus statement on current knowledge and implications for research and treatment. Am. J. Geriatr. Psychiatry. 6, 97–100. doi: 10.1097/00019442-199821000-00002

Fukuda, C., Higami, Y., Shigenobu, K., Kanemoto, H., and Yamakawa, M. (2022). Using a non-wearable actigraphy in nursing care for dementia with Lewy bodies. Am. J. Alzheimers. Dis. Other Demen. 37, 1–9. doi: 10.1177/15333175221082747

Gerolimatos, L. A., Ciliberti, C. M., Gregg, J. J., Nazem, S., Bamonti, P. M., Cavanagh, C. E., et al. (2015). Development and preliminary evaluation of the anxiety in cognitive impairment and dementia (ACID) scales. Int. Psychogeriatrics. 27, 1825–1838. doi: 10.1017/S1041610215001027

Gottesman, R. T., and Stern, Y. (2019). Behavioral and psychiatric symptoms of dementia and rate of decline in Alzheimer's disease. Front. Pharmacol. 10, 1–10. doi: 10.3389/fphar.2019.01062

Greene, J. G., Smith, R., Gardiner, M., and Timbury, G. C. (1982). Measuring behavioural disturbance of elderly demented patients in the community and its effects on relatives: a factor analytic study. Age Ageing. 11, 121–126. doi: 10.1093/ageing/11.2.121

Hessler, J. B., Schäufele, M., Hendlmeier, I., Junge, M. N., Leonhardt, S., Weber, J., et al. (2018). Behavioural and psychological symptoms in general hospital patients with dementia, distress for nursing staff and complications in care: results of the General Hospital Study. Epidemiol. Psychiatr. Sci. 27, 278–287. doi: 10.1017/S2045796016001098

Hope, T., and Fairburn, C. G. (1992). The present behavioural examination (PBE): the development of an interview to measure current behavioural abnormalities. Psychol. Med. 22, 223–230. doi: 10.1017/S0033291700032888

Hurley, A. C., Volicer, L., Camberg, L., Ashley, J., Woods, P., Odenheimer, G., et al. (1999). Measurement of observed agitation in patients with dementia of the Alzheimer type. J. Ment. Health Aging. 5, 117–132.

Ismail, Z., Agüera-Ortiz, L., Brodaty, H., Cieslak, A., Cummings, J., Fischer, C. E., et al. (2017). The mild behavioral impairment checklist (MBI-C): a rating scale for neuropsychiatric symptoms in pre-dementia populations. J. Alzheimer's Dis. 56, 929–938. doi: 10.3233/JAD-160979

Jao, Y. L., Algase, D. L., Specht, J. K., and Williams, K. (2016). Developing the person–environment apathy rating for persons with dementia. Aging Ment. Health. 20, 861–870. doi: 10.1080/13607863.2015.1043618

Kales, H. C., Gitlin, L. N., and Lyketsos, C. G. (2014). Management of neuropsychiatric symptoms of dementia in clinical settings: recommendations from a multidisciplinary expert panel. J. Am. Geriatr. Soc. 62, 762–769. doi: 10.1111/jgs.12730

Kales, H. C., Gitlin, L. N., Stanislawski, B., Marx, K., Turnwald, M., Watkins, D. C., et al. (2017). WeCareAdvisor™: The development of a caregiver-focused, web-based program to assess and manage behavioral and psychological symptoms of dementia. Alzheimer Dis. Assoc. Disord. 31, 263–270. doi: 10.1097/WAD.0000000000000177

Kanemoto, H., Sato, S., Satake, Y., Koizumi, F., Taomoto, D., Kanda, A., et al. (2021). Impact of behavioral and psychological symptoms on caregiver burden in patients with dementia with Lewy bodies. Front. Psychiat. 12, 753864. doi: 10.3389/fpsyt.2021.753864

Kaufer, D. I., Cummings, J. L., Christine, D., Bray, T., Castellon, S., Masterman, D., et al. (1998). Assessing the impact of neuropsychiatric symptoms in Alzheimer's disease: the neuropsychiatric inventory caregiver distress scale. J. Am. Geriatr. Soc. 46, 210–215. doi: 10.1111/j.1532-5415.1998.tb02542.x

Kaufer, D. I., Cummings, J. L., Ketchel, P., Smith, V., MacMillan, A., Shelley, T., et al. (2000). Validation of the NPI-Q, a brief clinical form of the neuropsychiatric inventory. J. Neuropsychiatry Clin. Neurosci. 12, 233–239. doi: 10.1176/jnp.12.2.233

Kikuchi, T., Mori, T., Wada-Isoe, K., Kameyama, Y. U., Kagimura, T., Kojima, S., et al. (2018). A novel dementia scale for Alzheimer's disease. J. Alzheimer's Dis. Park. 8, 1000429. doi: 10.4172/2161-0460.1000429

Kim, D. E., Sagong, H., Kim, E., Jang, A. R., and Yoon, J. Y. (2019). A systematic review of studies using video-recording to capture interactions between staff and persons with dementia in long-term care facilities. J. Korean Acad. Community Health Nurs. 30, 400–413. doi: 10.12799/jkachn.2019.30.4.400

Knapp, T. R. (1991). Focus on psychometrics. Coefficient alpha: conceptualizations and anomalies. Res. Nurs. Health. 14, 457–460. doi: 10.1002/nur.4770140610

Koo, T. K., and Li, M. Y. (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163. doi: 10.1016/j.jcm.2016.02.012

Kueper, J. K., Speechley, M., and Montero-Odasso, M. (2018). The Alzheimer's disease assessment scale-cognitive subscale (ADAS-Cog): modifications and responsiveness in pre-dementia populations. A narrative review. J. Alzheimer's Dis. 63, 423–444. doi: 10.3233/JAD-170991