- 1 Department of Computer Sciences, College of Computer Engineering and Sciences, Prince Sattam bin Abdulaziz University, Al-Kharj, Saudi Arabia

- 2 Higher School of Communication of Tunis (SUP’COM), LR11TIC04, Communication Networks and Security Research Lab. & LR11TIC02, Green and Smart Communication Systems Research Lab, University of Carthage, Ariana, Tunisia

In recent years, the increasing use of drones for both commercial and recreational purposes has led to heightened concerns regarding airspace safety. To address these issues, machine learning (ML) based drone detection and classification have emerged. This study explores the potential of ML-based drone classification, utilizing technologies like radar, visual, acoustic, and radio-frequency sensing systems. It undertakes a comprehensive examination of the existing literature in this domain, with a focus on various sensing modalities and their respective technological implementations. The study indicates that ML-based drone classification is promising, with numerous successful individual contributions. It is crucial to note, however, that much of the research in this field is experimental, making it difficult to compare results from various articles. There is also a noteworthy lack of reference datasets to help in the evaluation of different solutions.

1 Introduction

Drones, or unmanned aerial vehicles (UAVs), are increasingly prevalent in various fields such as agriculture, film-making, security, and disaster assistance. However, this growth has also raised security concerns, such as terrorist attacks and illegal drone use. To address these issues, reliable drone detection and classification systems have been developed. The ability to efficiently recognize and categorize drones is crucial for ensuring security and facilitating safe cohabitation between drones and people. Consequently, the need for real-time accurate detection and classification of drones is more urgent than ever as demonstrated in Azari et al. (2018a).

The increasing prevalence of amateur drones has raised significant concerns regarding privacy and cybersecurity. These concerns have driven the development of advanced detection methods utilizing deep learning technologies as presented in Al-lQubaydhi et al. (2024). Drones can be detected using various sensors, including radars, acoustic sensors, and visual cameras. These sensors help in identifying the presence of drones in different environments. Once detected, it is essential to classify the drones to assess whether they pose a threat. Classification can be performed using either supervised or unsupervised ML techniques. Supervised learning involves training a model on a labeled dataset, where the input data (e.g., flying patterns, size, and other features) are already categorized. The model learns to map the input data to the corresponding labels, allowing it to classify new, unseen data accurately. This method is beneficial for scenarios where there is a clear distinction between different types of drones and their behaviors. On the other hand, unsupervised learning does not rely on labeled data. Instead, it involves identifying patterns and structures within the input data to group similar data points together. This method is useful in situations where there is less prior knowledge about the data, and the goal is to discover underlying structures without predefined labels. Both supervised and unsupervised classification methods are critical for distinguishing between benign and potentially harmful drones, enabling appropriate responses to various UAV activities. Classification can take two forms: 1) binary classification, which distinguishes drones from other aircraft or birds and is often used; and 2) multi-class classification, which identifies drone types and specifies their characteristics, such as the number of rotors or the estimated payload.
If a drone poses a threat, it must be neutralized using methods such as signal jamming, taking control of the drone, or even directly shooting it down. The type of neutralization depends on the drone’s categorization and local anti-drone system laws. Machine learning relies on the quality and quantity of data used in training and testing to create powerful classification models with low bias and variance. To reduce bias and prevent over-fitting, data should cover a wide range of real-life situations. In drone classification, researchers often generate their own data through simulations, lab experiments, and outdoor measurements. Raw sensor data requires pre-processing, such as filtering and feature engineering, to ensure efficient learning and model generation. Researchers use different features in time and frequency domains depending on the modalities used. Deep learning, which learns features inherently, omits feature extraction and selection but at the cost of complexity and higher data demand.
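The difference between the supervised and unsupervised settings described above can be illustrated with a minimal sketch. It is not taken from any of the reviewed papers: the two-dimensional features (object size and a rotor-modulation score), the sample values, and the function names `fit_centroids`, `predict`, and `two_means` are all hypothetical, chosen only to show a nearest-centroid classifier trained on labels next to a two-cluster k-means that never sees them.

```python
from math import dist

# Hypothetical 2-D features: (size in metres, rotor-modulation score in [0, 1]).
LABELED = [
    ((0.35, 0.90), "drone"),
    ((0.40, 0.85), "drone"),
    ((0.30, 0.20), "bird"),
    ((0.25, 0.15), "bird"),
]


def fit_centroids(samples):
    """Supervised step: average the feature vectors of each labelled class."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in acc)
        for label, acc in sums.items()
    }


def predict(centroids, features):
    """Assign the label of the closest class centroid (binary case here)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


def two_means(points, iterations=10):
    """Unsupervised step: split unlabelled points into two groups (k-means, k=2)."""
    centers = [points[0], points[-1]]
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for p in points:
            idx = 0 if dist(p, centers[0]) <= dist(p, centers[1]) else 1
            groups[idx].append(p)
        centers = [
            tuple(sum(c) / len(g) for c in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return groups
```

The same `predict` function extends to the multi-class case simply by adding more labels to the training samples, whereas `two_means` only returns groups and leaves their interpretation to the analyst.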

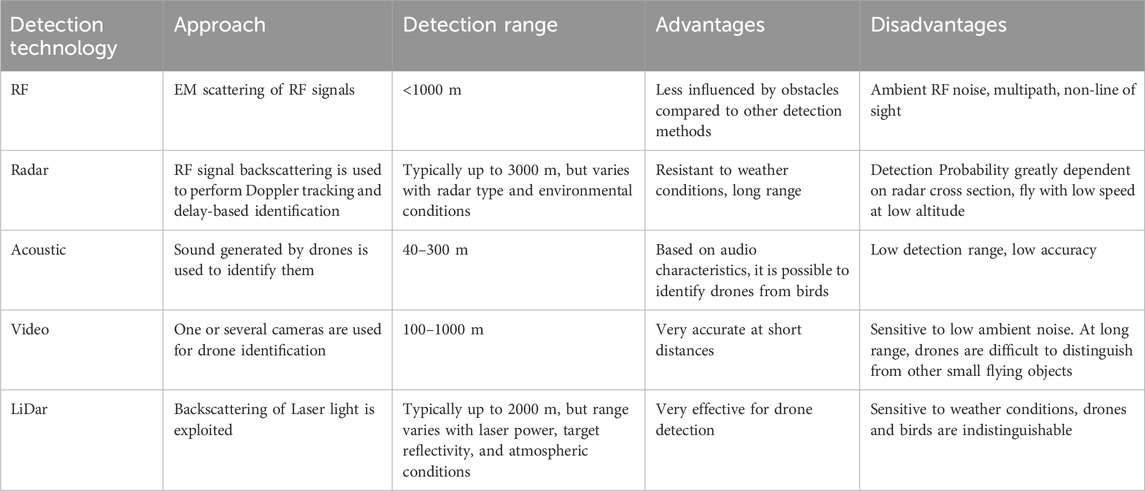

This study provides an in-depth examination of the various machine learning methods used for drone detection and classification, with the goal of providing a better understanding of their usefulness and limits. Numerous technical articles have provided a succinct overview of drone detection and classification research (Kaleem and Rehmani, 2018; Azari et al., 2018b; Guvenc et al., 2018; Lu et al., 2019). However, as shown in Table 1, the majority of these studies mainly focus on the functional aspects of various technologies and give limited evaluations, comparing their benefits and drawbacks. Our study provides a notable academic contribution by conducting a comprehensive investigation into the machine learning techniques utilized for drone detection and classification. Rather than merely listing these ML methods, our study focuses on critically evaluating their practical utility and limitations. Through detailed analysis, we seek to enhance our understanding of their effectiveness in real-world contexts, considering factors such as scalability, robustness, and adaptability. Additionally, our research aims to fill gaps in existing literature by providing practical insights for researchers, practitioners, and policymakers.

By highlighting both the strengths and weaknesses of different machine learning approaches, our study contributes to advancing this field and facilitates informed decision-making in the realm of aerial security and surveillance. Indeed, we highlight fundamental issues related to ML algorithms’ application and suggest future research topics for further development in this field.

2 Machine learning algorithms

There are numerous algorithms based on machine learning that may be used for drone detection and classification, depending on the use case and available data. In the following, we present the concept of the main ML algorithms employed to improve drone detection and classification performances.

3 Radar-based drone detection and classification using ML algorithms

Radar systems send radio waves through the air until they come into contact with an object, allowing the radar receiver to determine its distance, speed, and direction. There are various types of radars used in various applications, including pulse radar, continuous wave (CW) radar and Frequency-Modulated Continuous Wave (FMCW) radar. Pulse radar estimates range by calculating the time delay between the transmitted pulse and the returned echo. Continuous wave radar detects frequency shifts in received signals, enabling target velocity calculation. FMCW radar transmits a signal with changing frequency and measures the frequency difference for range estimation. Several studies have proposed the integration of machine learning algorithms into radar devices for improved drone identification accuracy and efficacy over long distances.
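The three measurement principles above correspond to standard textbook relations, sketched below under idealized assumptions (a single target, free-space propagation, and a linear FMCW chirp). The function and parameter names are illustrative and do not come from the reviewed works.

```python
C = 299_792_458.0  # speed of light in m/s


def pulse_range(delay_s):
    """Pulse radar: the echo travels to the target and back, so R = c * dt / 2."""
    return C * delay_s / 2.0


def cw_radial_velocity(doppler_hz, carrier_hz):
    """CW radar: radial velocity from the Doppler shift, v = f_d * wavelength / 2."""
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2.0


def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """FMCW radar: range from the beat frequency of a linear chirp.

    The chirp slope is B / T, and R = c * f_b / (2 * slope).
    """
    slope = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope)
```

For example, a round-trip delay of 1 microsecond corresponds to a range of roughly 150 m, which is the order of magnitude relevant to small-drone detection.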

3.1 Existing works

The authors of Oh et al. (2019) developed a UAV classification system using micro-Doppler signatures from FMCW radar. The system analyzes radar signals from various UAVs and non-UAV objects to determine their unique properties and motion patterns. The system uses empirical mode decomposition (EMD) to extract information from radar signals. The 13 intrinsic mode functions (IMFs) offer classification information for UAVs. The method’s accuracy in classifying non-UAVs vs. UAVs was 94.39%, while its accuracy in classifying objects into three categories was 90.59%.

A novel technique for locating and identifying UAVs utilizing 5G millimetre wave radar is presented in the study in Zhao et al. (2019). The technique makes use of the high-resolution range profile (HRRP) to locate the UAVs, the cepstrum method to calculate rotor number and speed, and micro-Doppler characteristics to identify the UAVs. The separation between multiple UAVs is achieved using sinusoidal frequency modulation parameter optimization. The technique gathers information such as the number of rotors, rotation speed, and micro-Doppler properties. The strategy also provides a GPS-free UAV tracking mechanism, guaranteeing adequate detection precision for reliable tracking data.

The researchers in Fu et al. (2021) propose an improved LSTM model for drone categorization based on radar cross section characteristics at mmWave frequencies. In low-light environments like indoors, this model enables more precise drone detection at smaller sizes. To train the LSTM model, they applied a weight optimization strategy and an ALRO model (adaptive learning rate optimization). The experiment’s findings demonstrated that the LSTM-ALRO model outperformed the prior CNN-based model in drone identification, with a reported accuracy of 99.88%.

The authors of Raval et al. (2021) use a CNN algorithm to classify different types of drones. The dataset for this study was created using a realistic drone model with input parameters from the Martin and Mulgrew models. Micro-Doppler fingerprints derived from radar waves were employed for classification. The CNN model trained on data from an X-band radar system with a 2 kHz pulse repetition frequency achieved an F1 score of 0.816, indicating high accuracy across the dataset. In comparison, the CNN model trained on data from a W-band radar system with a 20 kHz pulse repetition frequency produced weaker results.
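Since several of the reviewed works report an F1 score rather than plain accuracy, a short sketch of how that metric is computed may help when comparing results. The counts used in the usage note are made up for illustration and are unrelated to the 0.816 reported above.

```python
def precision_recall_f1(true_pos, false_pos, false_neg):
    """Return (precision, recall, F1) from raw detection counts.

    F1 is the harmonic mean of precision and recall, so it stays low
    when either of the two is low.
    """
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1
```

With 8 correct drone detections, 2 false alarms, and 2 missed drones, `precision_recall_f1(8, 2, 2)` gives a precision, recall, and F1 of about 0.8 each.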

The authors of Garcia et al. (2022) suggest an enhanced radar-based drone detection system based on a Neural Network (CNN-32DC) that may identify approaching drone threats and aid in protecting infrastructure against them. This system’s radar device runs on a frequency band centred at 8.75 GHz. The Real Doppler RAD-DAR database utilized in this system was obtained and built by Microwave and Radar Group, and it includes over 17,000 data samples from vehicles, drones, and humans. The suggested CNN-32DC network has been demonstrated to be more accurate and efficient than existing networks with less processing time, attaining a high-accuracy result of 99.48% in identifying drones from other objects.

The work Gong et al. (2023) presents a novel method to detect small drones using radar, employing the Doppler Signal-to-Clutter Ratio (DSCR) detector. This detector extracts the DSCR value of targets and can detect radar signals in real-time without tracking. It outperforms typical SNR detectors in terms of detection probability and decreases missed targets. The authors will investigate different solutions for detecting and recognizing drones using the DSCR detector in future works.

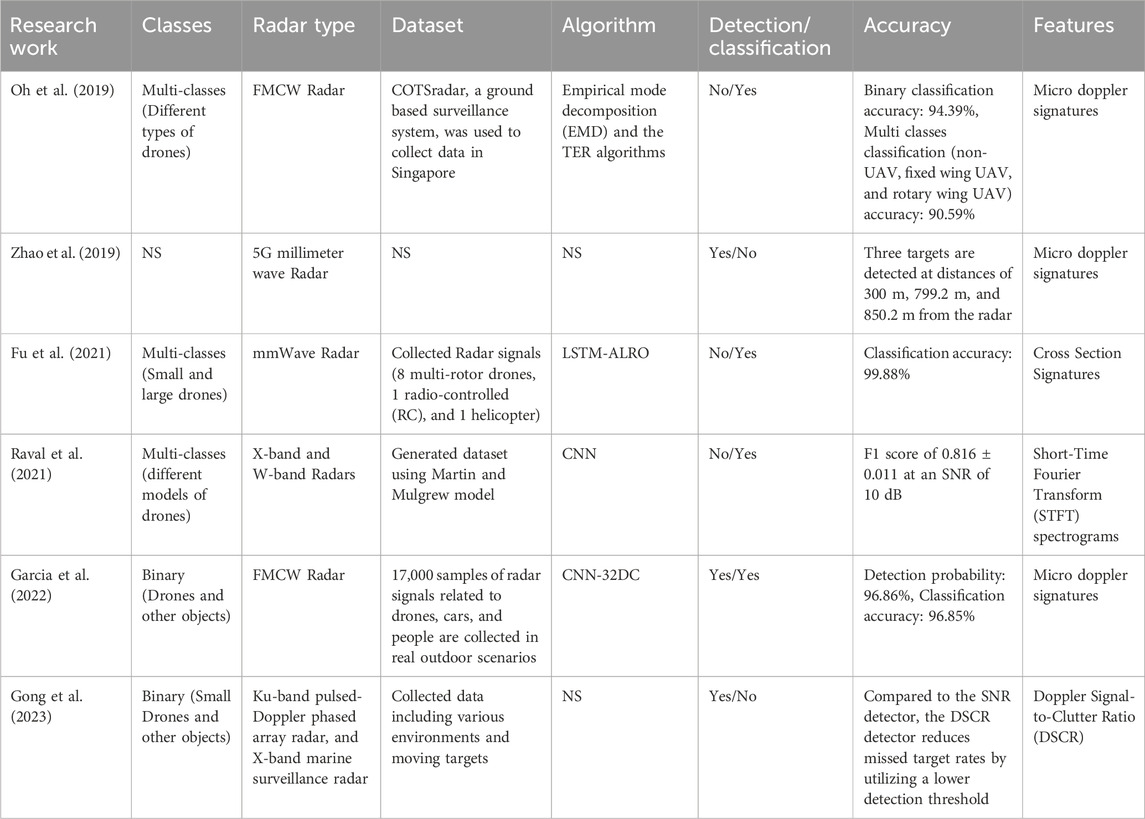

In conclusion, the presented studies have produced considerable advancements in drone identification utilizing deep learning and hybrid models. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) were used to handle radar signals, yielding high accuracy in drone categorization. For example, UAV classification approaches that use FMCW radar and micro-Doppler signature analysis have demonstrated high accuracy in recognizing drones. Similarly, deep learning-based drone classification systems using radar cross-section signatures at mmWave frequencies have shown high classification accuracy. In Table 2, we compare the presented research works based on classification type, radar type, dataset, ML algorithm, accuracy, and used features.

Table 2. Comparison of Related Works on Radar-Based Drone detection and Classification Using ML Algorithms.

3.2 Discussion

All reviewed publications indicate favourable classification results for radar systems, indicating that the technique is promising. However, it is unclear if these techniques can be adapted to encompass more drone types, greater ranges, other radar sensors, and alternative signal processing schemes. The majority of research is experimental, with little consideration given to design options.

Most publications discuss machine learning’s capacity to distinguish between drone types or between drones and birds, although they often presume that detection has already taken place. In addition, owing to the continually developing nature of UAV technology, keeping a broad and up-to-date signature database for multiple UAV types is a considerable issue. Radar systems could have trouble correctly identifying new or modified UAVs without a comprehensive database. Additionally, stealthy UAVs with low radar cross-sections are made to be as undetectable as possible, making detection and categorization more difficult. The need to track UAVs at various heights and distances complicates the design of the radar system, which affects its effectiveness and detection range. Furthermore, it could be challenging to distinguish UAVs from ground vehicles or stationary clutter due to their slow speed and low Doppler shifts. The DSCR detector successfully collects radar signals from tiny drones, even at clutter levels, according to the authors’ research in Gong et al. (2023). In fact, the DSCR method improves the accuracy of drone recognition by successfully rejecting clutter and reducing false alarms brought on by stationary or slowly moving objects. Its increased sensitivity allows it to identify small and slow-moving drones, making it useful in situations where traditional radar systems may struggle. Real-time radar data processing for UAV detection and classification is a considerable computing challenge, especially when multiple UAVs are detected. For timely detection, the radar system must handle enormous data quantities while maintaining low latency.

To overcome current obstacles, subsequent investigations in the field of radar-based drone detection should focus on several critical aspects. Firstly, it is crucial to improve the resilience of detection algorithms to environmental conditions such as rain and fog. This entails the creation of adaptable algorithms capable of maintaining a consistently high level of accuracy in the face of changing weather circumstances. Secondly, enhancing the ability to handle data in real-time is essential for practical applications. One way to achieve this is to investigate lightweight machine learning models that are suitable for running on edge devices. Finally, it is imperative to tackle the limited availability of extensive annotated datasets by employing data augmentation methods and incorporating synthetic data produced through simulations.

4 Acoustic-based drone detection and classification using ML algorithms

Acoustic drone detection systems employ sound waves to recognize and locate drones in the airspace. These devices work by analyzing the acoustic signature that a drone emits when its motor and rotors are in operation. Machine learning is used in acoustic drone detection and classification because it can help distinguish accurately between the sound of a drone and other noises in the environment, such as wind or background noise.

4.1 Existing works

The authors of Matson et al. (2019) present a technique for identifying drones that makes use of numerous acoustic nodes as well as machine learning algorithms. Through realistic tests, they enhanced the configuration of these nodes. During training, the system made use of the short-time Fourier transform and Mel-frequency cepstral coefficients. Support vector machines and convolutional neural networks were trained using real-world data. Four sensor node configurations were tested, and the optimal configuration was determined to maximize detection range without blind areas.
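Mel-frequency cepstral coefficients rely on a filter bank whose bands are spaced evenly on the mel scale rather than in hertz. The sketch below shows only that spacing step, using one widely used mel conversion formula (an assumption here, since the reviewed papers do not state which variant they use). The function names are illustrative, and a full MFCC pipeline would additionally apply the short-time Fourier transform, the filter bank itself, a logarithm, and a discrete cosine transform.

```python
import math


def hz_to_mel(freq_hz):
    """Convert frequency in Hz to mels (common 2595 * log10(1 + f / 700) variant)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(num_filters, low_hz, high_hz):
    """Centre frequencies (Hz) of filters spaced uniformly on the mel scale."""
    low_mel, high_mel = hz_to_mel(low_hz), hz_to_mel(high_hz)
    step = (high_mel - low_mel) / (num_filters + 1)
    return [mel_to_hz(low_mel + step * (i + 1)) for i in range(num_filters)]
```

Because the spacing is uniform in mels, the resulting centre frequencies are dense at low frequencies and sparse at high frequencies, which gives finer resolution in the band where rotor harmonics are typically strongest.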

The research paper Dumitrescu et al. (2020) proposes an acoustic system for detecting, locating, and transmitting the positions of drones. This system might be helpful for monitoring sensitive areas and private property holdings. The system was evaluated using a spiral MEMS (Micro-Electro-Mechanical Systems) microphone array, utilizing concurrent neural networks and acoustic signal processing. According to the study, medium multi-rotors can be detected from a distance of 380 m, and large multi-rotors from a distance of 500 m.

The study in Casabianca and Zhang (2021) explores the use of machine learning algorithms for detecting UAVs using acoustic signals. CNNs, RNNs, and Convolutional Recurrent Neural Networks (CRNNs) are three types of deep neural networks (DNNs) the researchers employ for analyzing mel-spectrograms. The CNN algorithm performs better at detecting UAVs than both RNNs and CRNNs. The researchers also investigated late fusion methods, reaching an average accuracy of 94.7%.
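Late fusion combines the outputs of separately trained models rather than their internal features. A minimal sketch of one common variant, a weighted average of per-class probabilities, is given below. The fusion rule, the function names, and the example probabilities are assumptions for illustration and are not claimed to match the scheme used in the cited study.

```python
def late_fusion(prob_vectors, weights=None):
    """Fuse per-model class probabilities by (weighted) averaging.

    prob_vectors: one probability list per model, all of the same length.
    weights: optional per-model weights; equal weights are used if omitted.
    """
    if weights is None:
        weights = [1.0] * len(prob_vectors)
    total = sum(weights)
    num_classes = len(prob_vectors[0])
    return [
        sum(w * probs[k] for w, probs in zip(weights, prob_vectors)) / total
        for k in range(num_classes)
    ]


def decide(fused, labels):
    """Return the label with the highest fused probability."""
    best = max(range(len(fused)), key=fused.__getitem__)
    return labels[best]
```

For instance, if three hypothetical models output `[0.6, 0.4]`, `[0.2, 0.8]`, and `[0.3, 0.7]` for the classes `["no_drone", "drone"]`, the fused decision is `"drone"` even though the first model disagrees.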

The study in Ahmed et al. (2022) presents an acoustic-based drone detection system that employs machine learning. The authors retrieved 26 Mel Frequency Cepstral Coefficients (MFCCs) from audio signals using a publicly available drone database and environmental signals. The Random Forest and MLP algorithms employed MFCCs to achieve an average F-score of 0.92 on the training data, indicating the reliability of the system.

The study in Henderson et al. (2022) presents an auditory drone detection and identification system using spiking neural networks (SNNs) and liquid state machines (LSMs). The proposed approach achieves an accuracy of 97.13% for detection and 93.25% for identification tasks on a publicly available acoustic drone dataset. The results emphasize the potential of neuromorphic hardware, which is lightweight, efficient, and low-cost, for drone applications in energy-constrained environments.

The paper Akbal et al. (2023) introduces a new sound dataset for detecting amateur drones (ADr) and presents an accurate classification model for ADr detection. The model uses the skinny pattern and iterative neighbourhood component analysis (INCA) feature selector to extract features at multiple levels from ADr sounds. The model is tested using 16 classifiers in five categories, including decision tree, discriminant, support vector machine, k-nearest neighbour, and ensemble classifiers. The best classification accuracy achieved was 99.72% using Fine k-nearest neighbour, demonstrating the success of the skinny pattern and INCA-based ADr detection model in accurately identifying ADr sounds.

In Anwar et al. (2019), the authors address the growing security concerns associated with UAVs by proposing a novel ML framework for detecting amateur drones (ADr) based on sound. Their approach involves extracting features from drone sounds using Mel Frequency Cepstral Coefficients (MFCC) and Linear Predictive Cepstral Coefficients (LPCC). The study employs SVM with various kernels for classification. The experimental results reveal that the SVM with a cubic kernel and MFCC features achieves an impressive accuracy of approximately 96.7% in detecting drone sounds. Additionally, the proposed ML framework outperforms traditional correlation-based methods by more than 17% in detection accuracy, demonstrating its effectiveness in distinguishing drone sounds from other environmental noises like birds, airplanes, and thunderstorms.

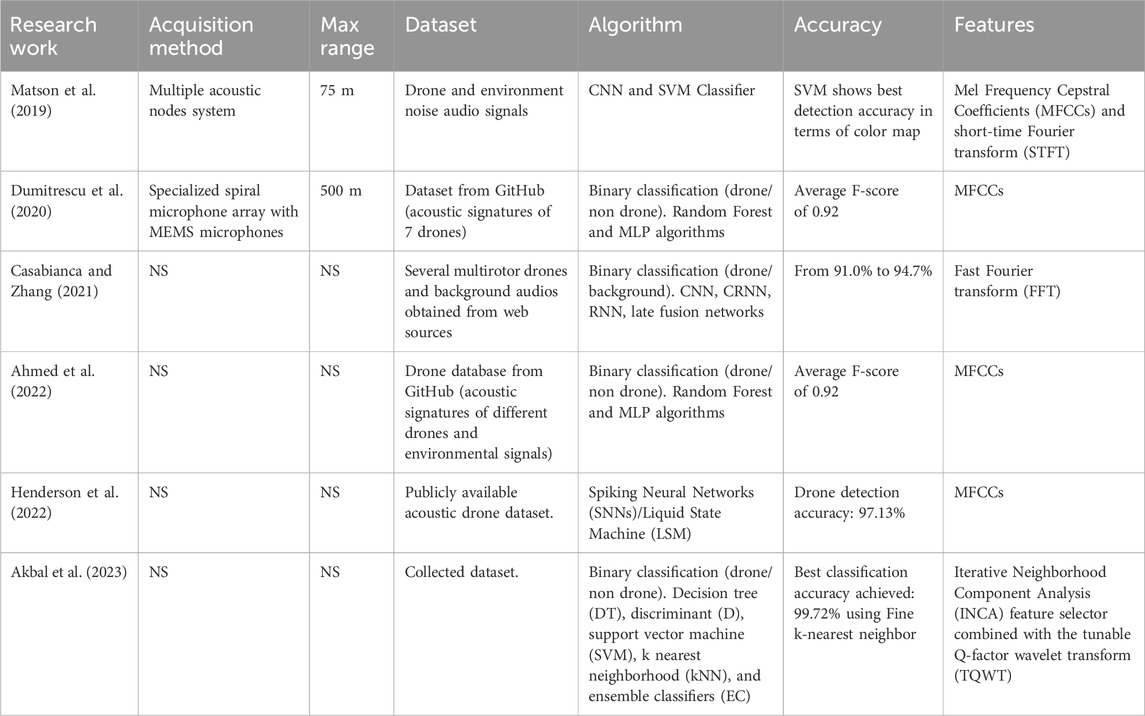

In summary, recent studies underscore the effectiveness of ML algorithms in enhancing acoustic-based drone detection. High detection accuracy has been achieved by utilizing multiple acoustic nodes coupled with ML models, demonstrating the potential of distributed acoustic sensing systems for reliable drone detection. Further research highlighted the power of deep learning by achieving significant improvements in detection accuracy through the late fusion of deep neural networks. This approach leverages multiple layers of data processing, enhancing the system’s ability to distinguish drone sounds from background noise effectively. Robust performance in drone sound classification using various ML techniques has also been showcased, emphasizing the adaptability and precision of these models in different acoustic environments. In Table 3, we compare the presented research works based on acquisition method, maximum range, dataset, ML algorithm, accuracy, and used features.

Table 3. Comparison of Related Works on Acoustic-Based Drone detection and classification using ML Algorithms.

4.2 Discussion

The research on acoustic drone detection using machine learning is still in its early stages, with limited studies utilizing microphone arrays for localization. Comparing contributions is challenging due to variations in drone types, ranges, features, classification methods, and performance metrics. The lack of extensive databases containing the acoustic signatures of various UAVs hinders the development and validation of classification algorithms. Background noise can obscure UAV sounds, making it difficult to distinguish them from other sources. The variability in acoustic signatures among different UAV types and models complicates the creation of accurate classification systems. Acoustic detectors proposed so far have a maximum detection range of 500 m, as indicated in Dumitrescu et al. (2020). However, the impact of distance and environmental factors on acoustic signals, which may limit the range of detection and classification, has not been thoroughly investigated in the reviewed papers. Furthermore, acoustic sensors can be integrated with event-driven processing techniques, such as SNNs, to process sound events in an energy-efficient manner. As demonstrated in Henderson et al. (2022), SNNs and Liquid State Machines (LSMs) offer valuable advantages in energy-constrained platforms such as drones. SNNs operate using discrete spikes, reducing computational and energy requirements compared to traditional continuous-valued neural networks. They process information in an event-driven manner, consuming resources only when a spike occurs, which saves energy during periods of inactivity. Because spikes are binary events, SNNs also reduce communication overhead compared to continuous activations. LSMs often rely on local computations and connections, minimizing the need to transmit data to a central processing unit. They draw inspiration from biological neural systems, making them well-suited for energy-constrained environments. In addition, LSMs use reservoir computing to process temporal signals, capturing complex patterns in the input data while reducing computational costs and enabling more efficient data processing.
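The event-driven behaviour of spiking neurons described above can be illustrated with a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking models. This is a generic sketch rather than the architecture of Henderson et al. (2022); the threshold, leak factor, and function name are illustrative assumptions.

```python
def lif_spikes(inputs, threshold=1.0, leak=0.9):
    """Simulate a leaky integrate-and-fire neuron over a sequence of inputs.

    At each step the membrane potential decays by `leak`, accumulates the
    input, and emits a binary spike (then resets) once it reaches `threshold`.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes
```

A silent input such as `[0, 0, 0]` produces no spikes at all, so downstream neurons have nothing to process, which is the source of the energy savings during periods of inactivity.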

Future research in acoustic-based drone detection and classification should concentrate on several key areas to enhance performance and address current limitations. Firstly, improving the robustness of detection algorithms against varying background noise levels is essential. Acoustic systems can be significantly impacted by environmental sounds, so developing advanced signal processing techniques to filter out these interferences effectively is crucial. Secondly, the integration of machine learning models capable of real-time analysis is critical. Many existing systems struggle with processing speed, so future work should focus on lightweight and efficient algorithms that can operate on edge devices, enabling faster detection and classification. Thirdly, expanding the dataset diversity used for training acoustic models is vital. Current datasets often lack representation of various drone types and operational conditions. Research should focus on collecting extensive real-world acoustic data and employing data augmentation techniques to enhance model training.

5 Visual-based drone detection and classification using ML algorithms

Visual drone detection systems take videos or images of drones and use image processing algorithms to interpret the data. These devices capture high-resolution images of the airspace while recording drone size, shape, and movement. These systems can recognize and track drones with greater precision and are less impacted by weather conditions. Machine learning approaches can increase the reliability and accuracy of these systems by providing an exhaustive view of the airspace.

5.1 Existing works

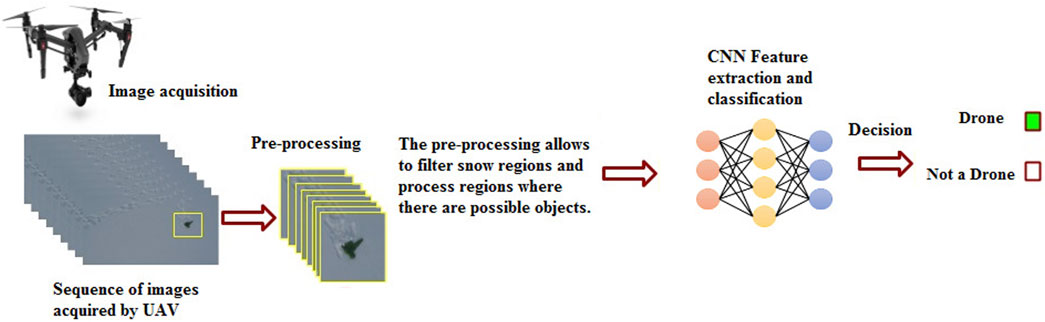

In Seidaliyeva et al. (2020), the authors present a real-time drone detection algorithm for video with a static background. The method separates the work into detection and classification phases, identifying moving objects using background removal and morphological processes. The detected objects are classified as drones, birds, or backgrounds using a CNN algorithm. The training dataset consists of 1,000 video frames with a resolution of 640 × 480 pixels. The algorithm’s accuracy for drone recognition is stated to be 94.5%; however, its performance degrades considerably when the background is moving. Indeed, the dynamic nature of backgrounds like wind-driven vegetation or water bodies can make it difficult to distinguish drones from background motion, resulting in increased false positive and negative rates.
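The detection phase described above, in which moving objects are isolated from a static background, can be sketched with simple frame differencing on grayscale frames represented as nested lists. This is a simplified stand-in, not the authors' implementation: it omits the morphological clean-up step, and the threshold value and function names are assumptions.

```python
def foreground_mask(background, frame, threshold=30):
    """Mark pixels whose intensity differs from the static background."""
    return [
        [1 if abs(f - b) > threshold else 0 for f, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def bounding_box(mask):
    """Return (top, left, bottom, right) of all foreground pixels, or None."""
    coords = [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    ]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)
```

The crop defined by the bounding box would then be passed to the classifier. With a moving background, many pixels exceed the threshold without any drone being present, which is why such methods depend on the static-background assumption.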

In Garcia et al. (2020), the authors present a vision-based drone detection system that uses neural networks to detect drones and prevent cross-border crime such as human trafficking and the smuggling of drugs. The system uses a faster R-CNN with a ResNet-101 network. Using the SafeShore dataset, the proposed technique for visual sensing has an accuracy of 93.40% and correctly identifies the drone in the test video simulation without confusing it with a bird.

The authors in Wisniewski et al. (2021) propose a method for generating synthetic drone images to train a convolutional neural network to identify drone models in real-life video streams. They use the Anti-UAV dataset, which includes videos and ground truth labels for various drone models (DJI Inspire, DJI Mavic-Pro, DJI Phantom, DJI Mavic-Air, DJI Spark, and Parrot drones). The DenseNet201 architecture achieved an average accuracy of 92.4% on the test dataset.

The study in Samadzadegan et al. (2022) developed a deep CNN algorithm for detecting and recognizing drones using visible imagery. The model used public images and videos of multirotor and helicopter drones and various bird species. The model used features like colour, texture, and shape. It achieved an mAP (mean Average Precision) of 84% and an accuracy of 83%, indicating high performance in drone detection and recognition. mAP is a common performance metric used to evaluate the accuracy of object detection and localization models in computer vision. Average Precision (AP) measures how well a model ranks and localizes objects in its predictions, and the mean Average Precision (mAP) calculates the average AP across multiple classes.
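To make the AP and mAP definitions above concrete, the following sketch computes a non-interpolated average precision from a ranked list of detections and then averages it over classes. It assumes each detection has already been matched to the ground truth (for example by an overlap criterion) and flagged as a true or false positive. Benchmarks differ in the exact interpolation they use, so this is one common variant rather than the procedure of any specific reviewed paper.

```python
def average_precision(detections, num_ground_truth):
    """Non-interpolated AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of annotated objects of this class.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_pos = 0
    precision_sum = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_pos += 1
            precision_sum += true_pos / rank  # precision at this recall point
    return precision_sum / num_ground_truth if num_ground_truth else 0.0


def mean_average_precision(per_class):
    """mAP: mean of the per-class AP values.

    per_class: dict mapping class name -> (detections, num_ground_truth).
    """
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)
```

As a usage example with made-up values, a "drone" class with detections `[(0.9, True), (0.8, False), (0.7, True)]` and two annotated drones yields an AP of about 0.83, because the false positive ranked second lowers the precision at the second recall point.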

The study by Aydin and Singha (2023) applies You Only Look Once version 5 (YOLOv5), a fast single-stage object detector, to drone detection, training it with pre-trained weights and data augmentation. A single-stage (one-shot) detector predicts bounding boxes and class probabilities in a single pass over the image, in contrast to two-stage detectors such as Faster R-CNN, which first propose candidate regions and then classify them. In this work, the proposed YOLOv5 model uses images of drones and birds from public sources like Google, Kaggle and Instagram. The drone photos were captured from various heights, angles, and backgrounds to ensure diversity in the dataset. The model achieved an mAP of 90.40%, an improvement over the previous You Only Look Once version 4 (YOLOv4) model.

The MultiFeatureNet (MFNet) and its variations, including MFNet-Feature Attention (MFNet-FA), show promise in drone detection and classification (Khan et al., 2022). These models improve detection accuracy and efficiency by capturing concentrated feature maps and adaptively weighting input feature channels. MFNet uses convolutional neural networks to process and analyze visual data, extracting detailed features from drone images. Khan et al. (2024) used various versions of MFNet to cater to different detection requirements and computational constraints. The dataset used for training and evaluation included diverse scenarios with drone types, backgrounds, and environmental conditions. MFNet-M achieved a precision score of 99.8% for bird detection, while MFNet-L achieved 97.2% for UAV detection. MFNet-FA-S, which incorporates the Feature Attention module, enables real-time inference and multiple-object detection, enhancing practical deployment in surveillance and security applications.

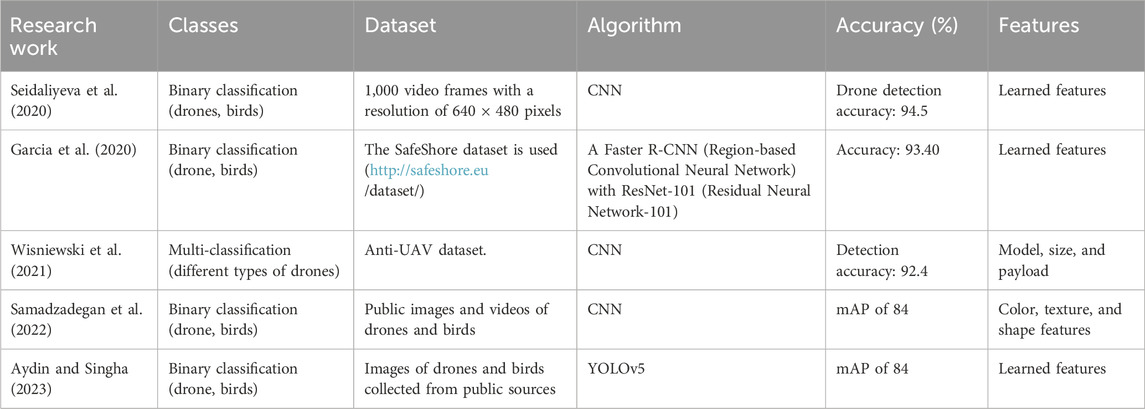

In conclusion, recent studies have highlighted the effectiveness of ML algorithms in visual-based drone detection. High accuracy in real-time drone detection using static background videos has been demonstrated, showcasing the practical applicability of Convolutional Neural Networks in surveillance systems. The potential of deep learning has been further validated by significant improvements in detection accuracy with neural network-based anti-drone systems. Additionally, robust performance in drone model identification and recognition has been demonstrated, emphasizing the versatility of CNNs in handling various visual data types. Collectively, these studies illustrate the transformative impact of ML algorithms, particularly CNNs, in enhancing the accuracy and reliability of visual-based drone detection systems. In Table 4, we compare the presented research works based on classification type, dataset, ML algorithm, accuracy, and used features.

Table 4. Comparison of Related Works on Visual-Based Drone detection and classification using ML algorithms.

5.2 Discussion

Research on drone detection and classification through visual systems confronts a range of challenges. First, the diversity of drone designs in terms of size, shape, and appearance hampers the creation of a universally applicable detection model. Second, the complex and varied backgrounds in which drones operate pose significant challenges for accurate detection. Drones can easily blend into their surroundings, making them difficult to differentiate from natural objects or other elements in the environment. This camouflage effect can lead to false negatives (missed detections), which can be a critical issue in security, surveillance, and safety applications. Additionally, the dynamic nature of lighting conditions, including shadows and glare, adds complexity to the process, potentially leading to both false positives and false negatives.

Another significant set of challenges involves the technical aspects of detection. Detecting drones accurately across different scales and distances remains difficult. Privacy concerns and legal restrictions hinder the acquisition of comprehensive and diverse datasets for training, limiting the effectiveness of machine learning models. To address this, some authors resort to transfer learning or generate synthetic images, either with dedicated software or with generative models such as Generative Adversarial Networks (GANs) that create artificial data similar to real data. Others use data augmentation to enhance dataset size and diversity: applying transformations such as rotations, translations, and brightness adjustments to existing images helps train models that are resilient to different visual conditions.

Finally, visual drone detection relies heavily on a clear line of sight (LOS) between the drone and the camera system. This requirement limits the system’s effectiveness in scenarios where obstacles, adverse weather, altitude variations, and urban environments obstruct the direct visual connection. Obstacles like buildings and trees can obscure drones, adverse weather conditions can diminish visibility, altitude differences can place drones outside the camera’s field of view, and complex urban layouts can create shadows and occlusions.
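The augmentation transformations named in this discussion (rotations, translations, and brightness adjustments) can be illustrated with a minimal sketch. It assumes a grayscale image stored as a nested list of integer pixel values in the range 0 to 255; the function names are hypothetical and are not taken from any of the reviewed works, which typically rely on image-processing libraries instead.

```python
from typing import List

Image = List[List[int]]  # grayscale image, pixel values in 0..255 (assumed format)


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def translate(img: Image, dx: int, dy: int, fill: int = 0) -> Image:
    """Shift the image by (dx, dy) pixels; uncovered pixels are set to `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = img[y][x]
    return out


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel intensities by `factor`, clipping to the 0..255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]


def augment(img: Image) -> List[Image]:
    """Return the original image plus a few transformed variants."""
    return [
        img,
        rotate90(img),
        translate(img, dx=1, dy=0),
        adjust_brightness(img, 1.5),
        adjust_brightness(img, 0.5),
    ]
```

Each input image yields five training samples here; in practice the transformation parameters would usually be drawn at random for every training epoch.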

Future research in visual-based drone detection should concentrate on several key areas to enhance current capabilities. One critical area is developing adaptive algorithms that can maintain high detection accuracy in dynamic and cluttered environments, addressing challenges posed by varying lighting conditions and complex backgrounds. Additionally, improving real-time processing capabilities through lightweight and efficient machine learning models suitable for edge devices is essential for practical applications like security and surveillance. The scarcity of labeled visual datasets remains a significant challenge. Future efforts should focus on advanced data augmentation techniques and the use of synthetic data generated through simulations to enhance model training. Leveraging transfer learning, where pre-trained models on large datasets are fine-tuned for specific tasks, can also help mitigate data scarcity issues.

6 RF-based drone detection and classification using ML algorithms

RF systems detect unique electromagnetic signals emitted by drones, such as remote control, telemetry, or GPS signals, for identification and tracking. Environmental factors like the weather and terrain can have an impact on these signals. Machine learning can analyze these signals to extract drone presence features and filter out noise and interference. By incorporating machine learning algorithms, these systems can adapt to new or unknown signals and distinguish between different drone types, especially as drone technology evolves rapidly with new types and different radio signals.
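As an illustration of how features might be extracted from a captured RF segment before classification, the sketch below computes a power spectrum and summarises it as normalised band energies. This is one common choice of spectral feature and an assumption made for this example, not the feature set of any specific reviewed work; the naive discrete Fourier transform is used only to keep the example dependency-free.

```python
import cmath
import math
from typing import List


def power_spectrum(samples: List[float]) -> List[float]:
    """Naive O(n^2) DFT; returns the power of the first n // 2 frequency bins."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum


def band_energies(spectrum: List[float], n_bands: int) -> List[float]:
    """Split the spectrum into equal-width bands and return the energy share of each.

    Bins left over when the spectrum length is not divisible by n_bands are ignored.
    """
    width = len(spectrum) // n_bands
    energies = [sum(spectrum[b * width:(b + 1) * width]) for b in range(n_bands)]
    total = sum(energies) or 1.0  # avoid division by zero for an all-zero input
    return [e / total for e in energies]
```

The resulting fixed-length vector could then be passed to any of the classifiers discussed in this section.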

6.1 Existing works

The authors in Al-Sa’D et al. (2019) use deep learning to detect and identify drones using RF-based methods. They provide an open-source drone database and report the average classification performance of three deep neural networks (DNNs). Accuracy reached 99.7% for binary (drone versus no drone) classification but dropped to 84.5% and 46.8% for the two progressively finer-grained multi-class tasks. The accuracy of multi-class classification decreases because of similarities between drone RF spectra, an effect that may be mitigated by more sophisticated classification methods.

In Allahham et al. (2020), the authors developed a drone detection and identification system utilizing RF sensing. For feature extraction and classification, the system employs a multi-channel, 1-dimensional convolutional neural network. The authors introduced a new dataset called DroneRF, which includes RF signals from several drone types. The classification process is divided into three stages: detecting the drone’s presence, recognizing its type, and determining its flying mode. The first stage achieved an average accuracy of 100%, the second stage 94.6%, and the last stage 87.4%.
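The three stages above (presence, type, flying mode) can be chained in several ways. The sketch below shows one possible arrangement in which later stages run only after a positive detection. Whether the cited system gates its stages in this way is not stated here, so the control flow is an assumption, and the threshold-based `toy_*` classifiers are hypothetical stand-ins for trained networks.

```python
from typing import Callable, Optional, Sequence, Tuple

Features = Sequence[float]
Result = Tuple[bool, Optional[str], Optional[str]]


def classify_in_stages(
    features: Features,
    detect: Callable[[Features], bool],
    identify_type: Callable[[Features], str],
    identify_mode: Callable[[Features, str], str],
) -> Result:
    """Run detection, then type recognition, then flying-mode recognition."""
    if not detect(features):
        # No drone detected: the later stages are skipped entirely.
        return (False, None, None)
    drone_type = identify_type(features)
    flight_mode = identify_mode(features, drone_type)
    return (True, drone_type, flight_mode)


# Hypothetical stand-in classifiers based on simple thresholds (illustration only).
def toy_detect(f: Features) -> bool:
    return sum(f) / len(f) > 0.2


def toy_type(f: Features) -> str:
    return "type_A" if f[0] > f[-1] else "type_B"


def toy_mode(f: Features, drone_type: str) -> str:
    return "flying" if max(f) > 0.8 else "hovering"
```

A call such as `classify_in_stages(features, toy_detect, toy_type, toy_mode)` returns a tuple of the detection flag, the type label, and the mode label, with `None` for stages that were not reached.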

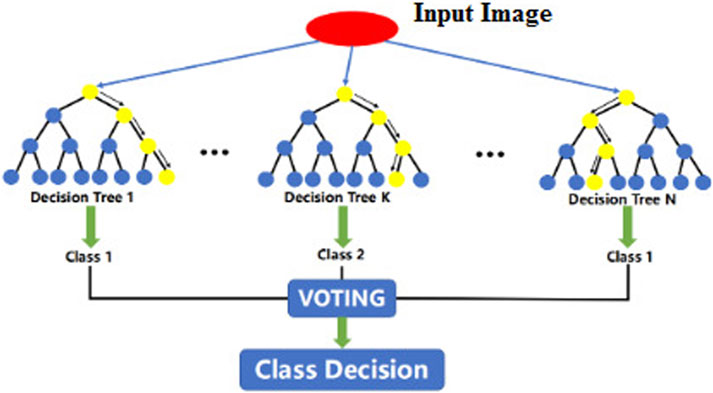

In Medaiyese et al. (2020), the authors employ an ensemble machine learning algorithm called XGBoost (Extreme Gradient Boosting) for drone detection and identification using RF-based signals. In XGBoost, individual decision trees undergo incremental training to correct the mistakes made by their predecessors. The final predictions are then generated by combining these trees. XGBoost optimizes the ensemble process by using gradient descent to minimize a loss function, effectively enhancing the model’s performance across iterations. It also employs regularization techniques to control overfitting and enhance generalization. In this work, the authors used half of the feature vector from a previous work and input the lower and upper bands of the RF signature. The XGBoost model is used in experiments on the DroneRF dataset, with three cases: detecting drone presence, identifying a specific type, and determining the drone’s operational mode. The models achieve an average accuracy of 99.96% using only the lower band of the RF signature as input features.
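The incremental training idea described above, where each tree corrects the errors of its predecessors, can be sketched with plain gradient boosting. The example below is a simplification and not XGBoost itself: it uses one-dimensional decision stumps and a squared-error loss, and it omits the regularization and second-order loss information that XGBoost adds. All function names are hypothetical.

```python
from typing import List, Tuple

Stump = Tuple[float, float, float]        # (threshold, value if x <= thr, value if x > thr)
Model = Tuple[float, float, List[Stump]]  # (base prediction, learning rate, stumps)


def fit_stump(x: List[float], residuals: List[float]) -> Stump:
    """Find the threshold split on x that minimises squared error on the residuals."""
    best = None
    for thr in sorted(set(x)):
        left = [r for xi, r in zip(x, residuals) if xi <= thr]
        right = [r for xi, r in zip(x, residuals) if xi > thr]
        if not left or not right:
            continue
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - ml) ** 2 for r in left) + sum((r - mr) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, (thr, ml, mr))
    if best is None:  # all x values identical: fall back to a constant
        mean = sum(residuals) / len(residuals)
        return (x[0], mean, mean)
    return best[1]


def predict_stump(stump: Stump, xi: float) -> float:
    thr, value_left, value_right = stump
    return value_left if xi <= thr else value_right


def fit_boosting(x: List[float], y: List[float],
                 n_rounds: int = 50, learning_rate: float = 0.3) -> Model:
    """Each round fits a stump to the current residuals (the negative gradient
    of the squared-error loss) and adds a damped copy of it to the ensemble."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        pred = [pi + learning_rate * predict_stump(stump, xi) for xi, pi in zip(x, pred)]
    return (base, learning_rate, stumps)


def predict(model: Model, xi: float) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, xi) for s in stumps)
```

The learning rate damps each correction, so the training residual shrinks gradually over the rounds rather than being removed in a single step.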

The algorithm proposed in Mo et al. (2022) involves constructing a deep learning UAV detection and classification network based on radio frequency compressed signals, performing filtering and feature extraction on the compressed measurement signal, and using machine learning algorithms such as KNN and XGBoost to classify the UAV types and modes. The features used for classification are extracted from the RF signals, and the dataset used for evaluation is a publicly available dataset. The type of UAV and corresponding flying modes are determined with an average accuracy of about 99%.
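To illustrate the general idea of classifying from compressed measurements, the sketch below projects a signal of length n onto m < n random measurements and applies a k-nearest-neighbour vote directly in the compressed domain. The Gaussian measurement matrix, the Euclidean distance, and all function names are assumptions made for this example; the cited work's actual sensing matrix, filtering, and feature extraction steps are not reproduced.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple


def make_measurement_matrix(m: int, n: int, seed: int = 0) -> List[List[float]]:
    """Random Gaussian m x n matrix (assumed choice), scaled by 1 / sqrt(m)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) / m ** 0.5 for _ in range(n)] for _ in range(m)]


def compress(phi: List[List[float]], x: Sequence[float]) -> List[float]:
    """Compute the compressed measurement y = phi @ x."""
    return [sum(p * xi for p, xi in zip(row, x)) for row in phi]


def knn_predict(train: List[Tuple[List[float], str]], query: Sequence[float], k: int = 3) -> str:
    """Majority vote among the k training points closest to the query."""
    def sq_dist(item: Tuple[List[float], str]) -> float:
        return sum((a - b) ** 2 for a, b in zip(item[0], query))

    nearest = sorted(train, key=sq_dist)[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

Because random projections approximately preserve distances between signals, well-separated classes tend to remain separable after compression, which is what makes classification in the compressed domain plausible.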

The research in Alam et al. (2023) proposes an end-to-end approach to detecting and identifying drones using deep learning and RF technology, evaluated at different signal-to-noise ratios on the CardRF dataset. The proposed approach uses a CNN-based model to classify RF signals emitted by drones and identify their models. The algorithm achieves an accuracy of 97.53% for the detection task and 76.42% for the identification task.
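Evaluating a detector at different signal-to-noise ratios usually requires corrupting clean signals with noise of a controlled power. The sketch below adds white Gaussian noise at a target SNR in decibels, using the relation noise power = signal power / 10^(SNR/10). The additive white Gaussian noise model and the function names are assumptions for illustration, not the evaluation procedure of the cited work.

```python
import math
import random
from typing import List


def signal_power(x: List[float]) -> float:
    """Mean squared amplitude of the signal."""
    return sum(v * v for v in x) / len(x)


def add_awgn(x: List[float], snr_db: float, rng: random.Random) -> List[float]:
    """Add white Gaussian noise so that the result has roughly the requested SNR."""
    noise_power = signal_power(x) / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in x]


def measured_snr_db(clean: List[float], noisy: List[float]) -> float:
    """Empirical SNR in dB, computed from the clean signal and its noisy version."""
    noise = [n - c for c, n in zip(clean, noisy)]
    return 10 * math.log10(signal_power(clean) / signal_power(noise))
```

Sweeping `snr_db` over a range of values and recording classifier accuracy at each level gives an accuracy-versus-SNR curve for a given model.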

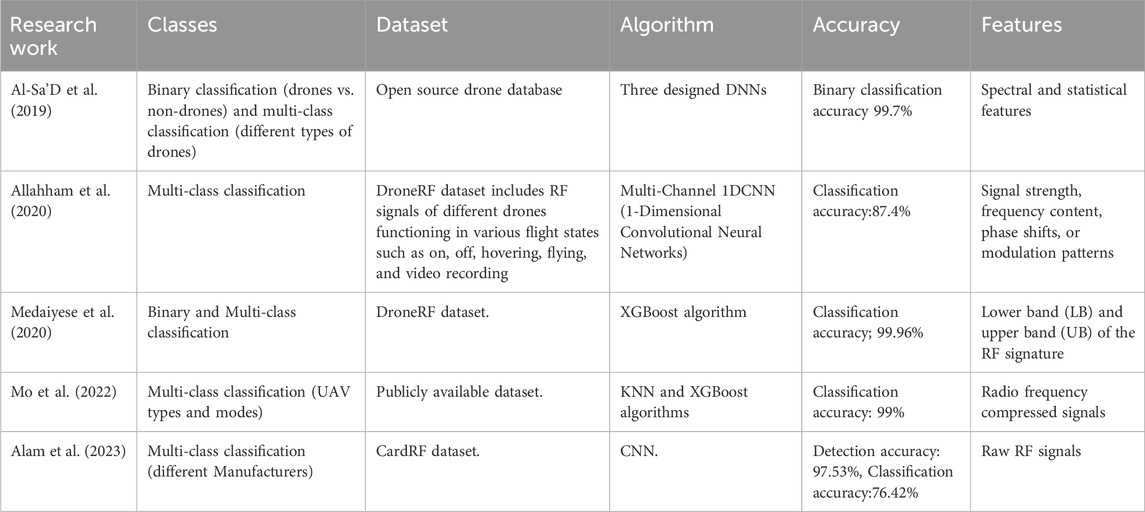

In conclusion, recent studies have demonstrated the effectiveness of ML algorithms in enhancing RF-based drone detection. High accuracy in drone identification has been achieved by training deep learning models on extensive RF datasets, highlighting the potential of deep learning to handle complex signal data. Similarly, detection accuracy has been significantly improved with a multi-channel CNN approach, which effectively processes complex RF signals and enhances classification reliability. Robust performance in drone detection and identification has also been shown using an ML framework, validating the integration of ML algorithms with RF signal analysis. These key results underscore the advancements in RF-based detection systems through the application of deep learning techniques, paving the way for more accurate and reliable drone monitoring solutions. In Table 5, we compare the presented research works based on classification type, dataset, ML algorithm, accuracy, and used features.

Table 5. Comparison of Related Works on RF-based Drone detection and classification using ML Algorithms.

6.2 Discussion

The role of RF signals in drone technology is pivotal, offering potential applications in detection and localization. However, their efficacy can be compromised when drones operate autonomously, navigating predefined GPS routes with minimal RF connectivity to ground stations. This autonomy restricts communication, making it challenging to utilize RF signals effectively. Machine learning for RF data analysis is also a relatively new field, and the lack of comprehensive public RF datasets hinders the validation and benchmarking of different techniques.

Furthermore, current techniques are limited in their capacity to perform well in low signal-to-noise ratio circumstances. This limitation underscores the need for robust strategies capable of extracting meaningful information from RF signals even amidst significant background noise. Additionally, the current research landscape predominantly relies on controlled indoor environments, failing to capture the complexities of real-world scenarios where RF signals are susceptible to degradation, jamming, and interference. For RF-based drone detection and localization techniques to evolve into dependable solutions, they must confront these practical adversities, adapting to the challenges posed by genuine operational conditions.

To advance RF-based drone detection technology, future research must adopt a multifaceted approach. Developing innovative communication protocols is essential for enhancing interactions between drones and ground stations, especially during autonomous flight. Creating diverse and representative RF datasets is crucial for effective algorithm development and assessment, as it will improve the robustness of detection systems in varied RF environments. Addressing the limitations of machine learning algorithms under low signal-to-noise conditions will require the exploration of advanced signal processing techniques and the integration of domain expertise. Additionally, enhancing real-time processing capabilities through lightweight and efficient machine learning models for edge devices is vital for practical implementation. This involves optimizing computational efficiency without compromising accuracy to enable quicker and more responsive detection systems.

7 LiDAR-based drone detection and classification using ML algorithms

LiDAR is a remote sensing technology that emits laser pulses and measures the time it takes for the reflected light to return to a sensor. It can detect drones and provide information about their speed, direction, and altitude. LiDAR’s primary advantage is its ability to accurately measure distances to objects, which helps distinguish drones from other objects such as birds. It operates well in low-light conditions, where visual detection methods may be hindered, although adverse weather such as rain and fog can degrade its performance. Compared to radar, LiDAR offers higher spatial resolution, enabling the creation of precise 3D maps for drone identification in complex environments, which in turn improves object recognition and detection accuracy and reduces false alarms. However, LiDAR requires direct line-of-sight and can be more expensive and power-intensive than radar.
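The time-of-flight principle behind LiDAR ranging reduces to a single relation: the target range is the speed of light multiplied by the round-trip time, divided by two. The sketch below applies it and, as an added assumption for illustration, estimates radial speed from two successive range measurements. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_range(round_trip_time_s: float) -> float:
    """Range in metres: the pulse travels to the target and back, hence the factor 1/2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def radial_speed(range_1_m: float, range_2_m: float, dt_s: float) -> float:
    """Approximate radial speed in m/s from two ranges measured dt_s seconds apart.

    Negative values mean the target is approaching the sensor.
    """
    return (range_2_m - range_1_m) / dt_s
```

For a target at 50 m the round trip takes roughly a third of a microsecond, which indicates the timing resolution such a sensor needs.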

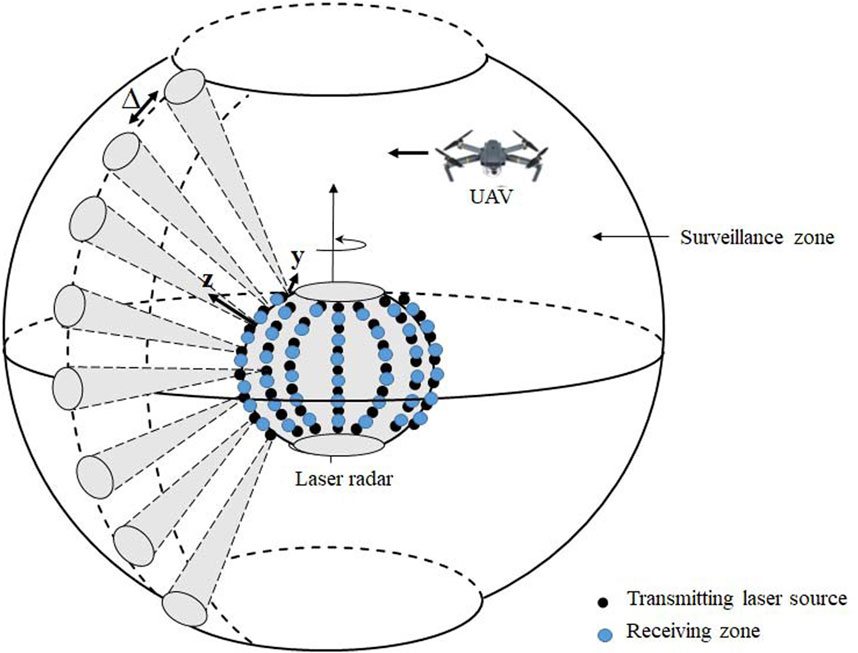

When LiDAR systems are combined with machine learning for drone detection, the LiDAR system provides information on the distance and position of objects in its field of view. This data may be used to generate a 3D point cloud representation of the environment, which machine learning algorithms can use to identify and categorize drones.

The authors in Hammer et al. (2020) propose a method to differentiate between drones and birds using LiDAR point clouds and images. They use standard 360° scanning LiDAR sensors designed for automotive applications to identify and track flying objects at 50-meter distances. A grayscale camera is then pointed towards the detected object and records a picture, and a CNN classifies the image to distinguish between the two.

The LiDAR system proposed in Salhi and Boudriga (2020) has a quasi-spherical shape with vertically arranged transmitters and receivers that form one or multiple arrays, as shown in Figure 3. The system transmits laser light to target potential drones within a predefined surveillance zone. A portion of the emitted light is reflected back by the target, reaches the optical receivers, and is collected by a central photodiode. Two alternatives are available: a one-array turning LiDAR, which consists of a single array with rotational movement, and a multi-array static LiDAR, which is a fixed system with multiple arrays for increased drone detection.
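To make the 3D point cloud representation mentioned above concrete, the following sketch converts individual LiDAR returns, each given as a range with azimuth and elevation angles, into Cartesian points and computes the bounding-box extents of a cluster as a simple size feature. The angle convention (azimuth in the horizontal plane, elevation measured from it) and the function names are assumptions for this example and are not taken from the cited systems.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one LiDAR return from spherical to Cartesian coordinates."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def bounding_box_extents(points: List[Point]) -> Point:
    """Width, depth, and height of the axis-aligned box enclosing the points."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))
```

Extents of this kind are one example of a hand-crafted feature; learned approaches often consume the point coordinates or derived images directly.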

LiDAR technology holds significant promise for drone detection but also presents several challenges that future research must address. One key limitation is the relatively short range of commercial LiDAR systems compared to radar, which limits their effectiveness for detecting drones at high altitudes or over long distances. Additionally, buildings and dense vegetation can obstruct LiDAR’s line-of-sight capabilities, complicating the detection of hidden drones. LiDAR also struggles with distinguishing materials or surfaces with similar reflectance properties, which can lead to false positives or negatives in environments with highly reflective surfaces.

Future research directions should focus on enhancing the robustness of LiDAR-based detection systems in various environmental conditions. This involves developing algorithms that can maintain high accuracy in adverse weather conditions, such as rain, fog, and other challenging environments that can degrade LiDAR performance. Another critical area is improving real-time processing capabilities by developing lightweight and efficient ML models suitable for deployment on edge devices. This enhancement is crucial for applications in surveillance and security, where timely detection and response are essential. The integration of LiDAR data with other sensor modalities, such as radar and cameras, is another important research avenue. Multimodal sensor fusion techniques should be investigated to improve detection accuracy and robustness by leveraging the strengths of different sensor types. For example, combining LiDAR with radar can enhance detection capabilities in poor visibility conditions, while integrating cameras can provide additional visual context for better classification. Addressing the scarcity of labeled datasets is also vital for the development of robust ML models for drone detection. Research should explore data augmentation techniques and the use of synthetic data generated through simulations to create large, diverse datasets. These datasets will enable the training of more robust and generalizable ML models.

8 Multi-sensor systems

Multi-sensor systems, which combine several sensors, including radar, lidar, cameras, acoustics, and RF, to improve performance and accuracy, are crucial for drone detection and classification. These systems combine data from several sensors to produce a reliable detection and classification solution while taking into account the advantages and disadvantages of each sensor. Multi-sensor systems can increase detection range and accuracy by integrating many sensors, especially in difficult weather or complex environments. In addition, having redundant sensors increases system reliability. If one sensor fails or faces interference, other sensors can still provide data for detection and classification, ensuring continuous operation. The use of multi-sensor systems allows for the integration of data from multiple sensors, allowing for more accurate and reliable drone classification. Each sensor provides unique information that, when fused, can result in a more detailed and comprehensive classification and allow better tracking and localization of drones, enabling real-time monitoring and response. Multi-sensor systems enable cross-validation of detected targets. By confirming the presence of a drone using multiple sensors, false alarms can be reduced, leading to a more reliable threat assessment. Also, some sensors excel at specific aspects of detection and classification. For example, cameras are useful for visual identification, while radar can detect non-line-of-sight objects. Integrating these complementary technologies enhances overall system capabilities.
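The redundancy and cross-validation ideas described above can be sketched as a simple decision-level (late) fusion rule. The example assumes that each sensor pipeline outputs a confidence score between 0 and 1, or `None` when the sensor is unavailable, and combines the available scores with a weighted average. The sensor names, weights, and threshold are hypothetical, and real systems may fuse at the feature or raw-data level instead.

```python
from typing import Dict, Optional, Tuple


def fuse_scores(
    scores: Dict[str, Optional[float]],
    weights: Dict[str, float],
    threshold: float = 0.5,
) -> Tuple[float, bool]:
    """Weighted average of the available per-sensor scores and the resulting alarm flag.

    Sensors reporting None (failed or jammed) are skipped, so the remaining
    sensors still produce a decision.
    """
    available = {name: s for name, s in scores.items() if s is not None}
    if not available:
        return (0.0, False)
    total_weight = sum(weights[name] for name in available)
    fused = sum(weights[name] * s for name, s in available.items()) / total_weight
    return (fused, fused >= threshold)
```

With equal weights, a single sensor reporting a high score while the others report low scores stays below the threshold, which is how cross-validation between sensors can suppress isolated false alarms.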

In the literature, several multi-sensor information fusion approaches have been proposed (Liu et al., 2017; Jovanoska et al., 2018; Diamantidou et al., 2019; Dudczyk et al., 2022; Fei et al., 2022). However, several critical challenges must be addressed. First and foremost, an appropriate and effective method of combining information collected from several sensors should be developed, since the collected data is often very noisy. Furthermore, multi-sensor information is acquired using various sensor configurations, so the raw data will have different representations, which leads to variable predictive power.

Key results from the presented studies underscore the effectiveness of multi-sensor systems in drone detection and classification. These systems combine data from various sensors, such as cameras, microphones, radar, and LiDAR, to enhance accuracy and reliability. High accuracy in drone detection has been achieved using an audio-assisted camera array, demonstrating the practical applicability of integrating audio and visual data. Significant improvements in detection and tracking accuracy have been realized with multi-sensor data fusion approaches, showcasing the potential of combining data from various sensors to improve performance in different environments. Additionally, multimodal deep learning frameworks and 3D data fusion have demonstrated robust performance in UAV detection, further validating the effectiveness of these approaches in handling complex data from multiple sources and enhancing detection capabilities.

Future research in multi-sensor drone detection systems should address several critical areas to enhance their effectiveness and applicability. One major focus should be the integration of data from multiple sensors, which presents significant challenges. Advanced sensor fusion techniques should be investigated to effectively combine different types of sensor data, enhancing detection accuracy and robustness. The use of synthetic data generated through simulations can also help address the scarcity of labeled data for training ML models.

9 Conclusion

The urgency of drone detection and classification stems from the rapid expansion of the drone market and its inherent risks. ICT solutions like the Low Altitude Authorization and Notification Capability (LAANC) aim to streamline authorization processes, yet ensuring compliance remains challenging due to both negligent and malicious users. Therefore, the ability to detect, classify, and identify drones in low-altitude airspace is crucial for risk assessment and intervention.

Different sensing modes for drone detection (radar, acoustic, visual, RF, and multi-sensor systems) each have unique strengths and limitations. Radar systems provide precise location data and are effective in all weather conditions but can be expensive and resource-intensive. Acoustic systems are cost-effective but can be impacted by environmental noise. Visual systems, particularly those using deep learning, offer high accuracy but struggle in low-light conditions and require a clear line of sight. RF systems effectively detect drones based on communication signals but may face challenges in congested RF environments. Multi-sensor systems, which combine data from various sensors, offer enhanced accuracy and reliability by leveraging the strengths of each sensor type. However, integrating data from multiple sensors increases complexity and requires advanced sensor fusion techniques.

Most proposed solutions are grounded in static detection setups, posing limitations in urban areas characterized by obstacles and noise. To address these challenges in drone detection and classification, distributed and collaborative detection systems that harness wide-area solutions or city-wide sensor networks are suggested as a promising approach. Future research should focus on developing algorithms that enhance robustness in diverse environmental conditions and improving real-time processing capabilities with lightweight and efficient ML models for edge devices. Advancing sensor fusion techniques and using synthetic data through simulations can address integration challenges. By focusing on these areas, future research can further enhance the effectiveness and applicability of multi-sensor drone detection systems. This comprehensive approach will ensure that these systems continue to evolve to meet the demands of modern applications and mitigate the risks associated with the increasing prevalence of drones.

Author contributions

MM: Writing–original draft, Writing–review and editing. MS: Writing–original draft, Writing–review and editing. LA: Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2024/R/1445).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahmed, C. A., Batool, F., Haider, W., Asad, M., and Hamdani, S. H. R. (2022). “Acoustic based drone detection via machine learning,” in 2022 international Conference on IT and industrial technologies (ICIT), 01–06.

Akbal, E., Akbal, A., Dogan, S., and Tuncer, T. (2023). An automated accurate sound-based amateur drone detection method based on skinny pattern. Digit. Signal Process 136, 104012. doi:10.1016/j.dsp.2023.104012

Akter, R., Doan, V.-S., Lee, J.-M., and Kim, D.-S. (2021). Cnn-ssdi: convolution neural network inspired surveillance system for uavs detection and identification. Comput. Netw. 201, 108519. doi:10.1016/j.comnet.2021.108519

Alam, S. S., Chakma, A., Rahman, M. H., Mofidul, R. B., Alam, M. M., Utama, I. B. K. Y., et al. (2023). Rf-enabled deep-learning-assisted drone detection and identification: an end-to-end approach. Sensors (Basel, Switzerland) 23, 4202. doi:10.3390/s23094202

Alharam, A. K., and Shoufan, A. (2020). “Optimized random forest classifier for drone pilot identification,” in 2020 IEEE international symposium on circuits and systems (ISCAS), 1–5.

Allahham, M. S., Khattab, T. M. S., and Mohamed, A. M. (2020). “Deep learning for rf-based drone detection and identification: a multi-channel 1-d convolutional neural networks approach,” in 2020 IEEE international conference on informatics, IoT, and enabling technologies (ICIoT), 112–117.

Al-lQubaydhi, N., Alenezi, A., Alanazi, T., Senyor, A., Alanezi, N., Alotaibi, B., et al. (2024). Deep learning for unmanned aerial vehicles detection: a review. Comput. Sci. Rev. 51, 100614. doi:10.1016/j.cosrev.2023.100614

Al-Sa’D, M. F., Al-Ali, A. K., Mohamed, A. M., Khattab, T. M. S., and Erbad, A. M. (2019). Rf-based drone detection and identification using deep learning approaches: an initiative towards a large open source drone database. Future Gener. comput. Syst. 100, 86–97. doi:10.1016/j.future.2019.05.007

Anwar, M. Z., Kaleem, Z., and Jamalipour, A. (2019). Machine learning inspired sound-based amateur drone detection for public safety applications. IEEE Trans. Veh. Technol. 68, 2526–2534. doi:10.1109/tvt.2019.2893615

Aydin, B., and Singha, S. (2023). Drone detection using yolov5. Eng 4, 416–433. doi:10.3390/eng4010025

Azari, M. M., Sallouha, H., Chiumento, A., Rajendran, S., Vinogradov, E., and Pollin, S. (2018a). Key technologies and system trade-offs for detection and localization of amateur drones. IEEE Commun. Mag. 56, 51–57. doi:10.1109/MCOM.2017.1700442

Azari, M. M., Sallouha, H., Chiumento, A., Rajendran, S., Vinogradov, E., and Pollin, S. (2018b). Key technologies and system trade-offs for detection and localization of amateur drones. IEEE Commun. Mag. 56, 51–57. doi:10.1109/MCOM.2017.1700442

Bazi, Y., and Melgani, F. (2018). Convolutional svm networks for object detection in uav imagery. IEEE Trans. Geoscience Remote Sens. 56, 3107–3118. doi:10.1109/tgrs.2018.2790926

Casabianca, P., and Zhang, Y. (2021). Acoustic-based uav detection using late fusion of deep neural networks. Drones 5, 54. doi:10.3390/drones5030054

Diamantidou, E., Lalas, A., Votis, K., and Tzovaras, D. (2019). “Multimodal deep learning framework for enhanced accuracy of uav detection,” in International conference on virtual storytelling.

Dudczyk, J., Czyba, R., and Skrzypczyk, K. (2022). Multi-sensory data fusion in terms of uav detection in 3d space. Sensors (Basel, Switzerland) 22, 4323. doi:10.3390/s22124323

Dumitrescu, C., Minea, M., Costea, I. M., Chiva, I. C., and Semenescu, A. (2020). Development of an acoustic system for uav detection. Sensors (Basel, Switzerland) 20, 4870. doi:10.3390/s20174870

Fei, S., Hassan, M. A., Xiao, Y., Su, X., Chen, Z., Cheng, Q., et al. (2022). Uav-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 24, 187–212. doi:10.1007/s11119-022-09938-8

Fu, R., Al-Absi, M. A., Kim, K.-H., Lee, Y.-S., Al-Absi, A. A., and Lee, H.-J. (2021). Deep learning-based drone classification using radar cross section signatures at mmwave frequencies. IEEE Access 9, 161431–161444. doi:10.1109/access.2021.3115805

Garcia, A. J., Aouto, A., Lee, J.-M., and Kim, D. (2022). Cnn-32dc: an improved radar-based drone recognition system based on convolutional neural network. ICT Express 8, 606–610. doi:10.1016/j.icte.2022.04.012

Garcia, A. J., Lee, J.-M., and Kim, D.-S. (2020). “Anti-drone system: a visual-based drone detection using neural networks,” in 2020 international conference on information and communication technology convergence (ICTC), 559–561.

Gong, J., Yan, J., Hu, H., Kong, D., and Li, D. J. (2023). Improved radar detection of small drones using Doppler signal-to-clutter ratio (dscr) detector. Drones 7, 316. doi:10.3390/drones7050316

Guvenc, I., Koohifar, F., Singh, S., Sichitiu, M. L., and Matolak, D. (2018). Detection, tracking, and interdiction for amateur drones. IEEE Commun. Mag. 56, 75–81. doi:10.1109/MCOM.2018.1700455

Hammer, M., Borgmann, B., Hebel, M., and Arens, M. (2020). “Image-based classification of small flying objects detected in lidar point clouds,” in Defense + commercial sensing.

Henderson, A., Yakopcic, C., Harbour, S. D., and Taha, T. M. (2022). “Detection and classification of drones through acoustic features using a spike-based reservoir computer for low power applications,” in 2022 IEEE/AIAA 41st digital avionics systems conference (DASC), 1–7.

Ivanov, V. A., and Michmizos, K. P. (2021). Increasing liquid state machine performance with edge-of-chaos dynamics organized by astrocyte-modulated plasticity. ArXiv abs/2111, 01760. doi:10.48550/arXiv.2111.01760

Jin, Y., Liu, Y., and Li, P. (2016). “Sso-lsm: a sparse and self-organizing architecture for liquid state machine based neural processors,” in 2016 IEEE/ACM international symposium on nanoscale architectures (NANOARCH), 55–60.

Jovanoska, S., Broetje, M., and Koch, W. (2018). “Multisensor data fusion for uav detection and tracking,” in 2018 19th international radar symposium (IRS), 1–10.

Kaiser, J., Stal, R., Subramoney, A., Roennau, A., and Dillmann, R. (2017). Scaling up liquid state machines to predict over address events from dynamic vision sensors. Bioinspiration and Biomimetics 12, 055001. doi:10.1088/1748-3190/aa7663

Kaleem, Z., and Rehmani, M. H. (2018). Amateur drone monitoring: state-of-the-art architectures, key enabling technologies, and future research directions. IEEE Wirel. Commun. 25, 150–159. doi:10.1109/MWC.2018.1700152

Khan, M. U., Dil, M., Alam, M., Orakazi, F. A., Almasoud, A. M., Kaleem, Z., et al. (2022). Safespace mfnet: precise and efficient multifeature drone detection network. IEEE Trans. Veh. Technol. 73, 3106–3118. doi:10.1109/tvt.2023.3323313

Liu, H., Wei, Z., Chen, Y., Pan, J., Lin, L., and Ren, Y. (2017). “Drone detection based on an audio-assisted camera array,” in 2017 IEEE third international conference on multimedia big data (BigMM), 402–406.

Lu, J., Yang, J., Liu, X., Liu, G., and Zhang, Y. (2019). Robust direction of arrival estimation approach for unmanned aerial vehicles at low signal-to-noise ratios. IET Signal Process 13, 456–463. doi:10.1049/iet-spr.2018.5275

Matson, E. T., Yang, B., Smith, A. H., Dietz, E., and Gallagher, J. C. (2019). “Uav detection system with multiple acoustic nodes using machine learning models,” in 2019 third IEEE international conference on robotic computing (IRC), 493–498.

Medaiyese, O. O., Syed, A. S., and Lauf, A. P. (2020). “Machine learning framework for rf-based drone detection and identification system,” in 2021 2nd international conference on smart cities, automation and intelligent computing systems (ICON-SONICS), 58–64.

Mo, Y., Huang, J., and Qian, G. (2022). Deep learning approach to uav detection and classification by using compressively sensed rf signal. Sensors (Basel, Switzerland) 22, 3072. doi:10.3390/s22083072

Oh, B.-S., Guo, X., and Lin, Z. (2019). A uav classification system based on fmcw radar micro-Doppler signature analysis. Expert Syst. Appl. 132, 239–255. doi:10.1016/j.eswa.2019.05.007

Raval, D., Hunter, E., Hudson, S., Damini, A., and Balaji, B. (2021). Convolutional neural networks for classification of drones using radars. Drones 5, 149. doi:10.3390/drones5040149

Salhi, M., and Boudriga, N. (2020). “Multi-array spherical lidar system for drone detection,” in 2020 22nd international conference on transparent optical networks (ICTON), 1–5.

Samadzadegan, F., Javan, F. D., Mahini, F. A., and Gholamshahi, M. (2022). Detection and recognition of drones based on a deep convolutional neural network using visible imagery. Aerospace 9, 31. doi:10.3390/aerospace9010031

Seidaliyeva, U., Akhmetov, D., Ilipbayeva, L., and Matson, E. T. (2020). Real-time and accurate drone detection in a video with a static background. Sensors (Basel, Switzerland) 20, 3856. doi:10.3390/s20143856

Soures, N., and Kudithipudi, D. (2019). Deep liquid state machines with neural plasticity for video activity recognition. Front. Neurosci. 13, 686. doi:10.3389/fnins.2019.00686

Utebayeva, D., Almagambetov, A., Alduraibi, M., Temirgaliyev, Y., Ilipbayeva, L., and Marxuly, S. (2020). “Multi-label uav sound classification using stacked bidirectional lstm,” in 2020 fourth IEEE international conference on robotic computing (IRC), 453–458.

Utebayeva, D., Ilipbayeva, L., and Matson, E. T. (2022). Practical study of recurrent neural networks for efficient real-time drone sound detection: a review. Drones 7, 26. doi:10.3390/drones7010026

Wijesinghe, P., Srinivasan, G., Panda, P., and Roy, K. (2019). Analysis of liquid ensembles for enhancing the performance and accuracy of liquid state machines. Front. Neurosci. 13, 504. doi:10.3389/fnins.2019.00504

Wisniewski, M., Rana, Z. A., and Petrunin, I. (2021). “Drone model identification by convolutional neural network from video stream,” in 2021 IEEE/AIAA 40th digital avionics systems conference (DASC), 1–8.

Yamazaki, K., Vo-Ho, V.-K., Bulsara, D., and Le, N. T. H. (2022). Spiking neural networks and their applications: a review. Brain Sci. 12, 863. doi:10.3390/brainsci12070863

Keywords: drone detection, drone classification, machine learning, radar, acoustic, RF, visual, lidar

Citation: Mrabet M, Sliti M and Ammar LB (2024) Machine learning algorithms applied for drone detection and classification: benefits and challenges. Front. Comms. Net 5:1440727. doi: 10.3389/frcmn.2024.1440727

Received: 29 May 2024; Accepted: 01 October 2024;

Published: 17 October 2024.

Edited by:

Ella Pereira, Edge Hill University, United Kingdom

Reviewed by:

Zeeshan Kaleem, King Fahd University of Petroleum and Minerals, Saudi Arabia

Matthew Ritchie, University College London, United Kingdom

Copyright © 2024 Mrabet, Sliti and Ammar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Manel Mrabet, m.benrashed@psau.edu.sa
