- Department of Electrical and Computer Engineering, American University of Beirut (AUB), Beirut, Lebanon

The COVID-19 pandemic has attracted the attention of big data analysts and artificial intelligence engineers. The classification of computed tomography (CT) chest images into normal or infected requires intensive data collection and an innovative architecture of AI modules. In this article, we propose a platform that covers several levels of analysis and classification of normal and abnormal aspects of COVID-19 by examining CT chest scan images. Specifically, the platform first augments the dataset used in the training phase, starting from a reliable collection of images; it then segments the suspicious regions in the images and analyzes these regions in order to output the right classification. Furthermore, we combine AI algorithms after choosing the best-fit module for our study. Finally, we show the effectiveness of this architecture when compared to other techniques in the literature. The obtained results show that the accuracy of the proposed architecture is 95%.

1 Introduction

Artificial intelligence has been a great contributor to the field of medical diagnosis and the design of new medications. Experts believe that artificial intelligence will have a huge impact by providing radiologists with tools for faster and more accurate diagnoses and prognoses, resulting in more effective treatment. Big data and artificial intelligence will change the way radiologists work because computers will be able to process huge amounts of patient data, enabling them to become experts on very specific tasks (Shen et al., 2017a). In the past, artificial intelligence has succeeded in overcoming different challenges such as chronic diseases and skin cancer (Esteva et al., 2017). Currently, scientists are expecting artificial intelligence to have a significant role in the search for a treatment for the emerging coronavirus, and hence in alleviating the associated panic that is affecting people worldwide.

Recently, the health-care system has been facing strenuous challenges in terms of supporting the ever-increasing number of patients and associated costs due to the COVID-19 pandemic. Thus, the recent impact of COVID-19 mandates a shift in the health-care sector mindset. As such, it becomes essential to benefit from modern technology such as artificial intelligence, in order to design and develop intelligent and autonomous health-care solutions. When compared to other viruses, COVID-19 is characterized by its fast spreading ability, which made it a worldwide pandemic in record time. The medical and health-care systems are still studying and investigating it in order to gain reliable information and more insight about this serious problem of fast spreading. Accordingly, the objective of successfully modeling the COVID-19 spread remains a high priority in fighting this virus. Currently, the commonly used diagnosis method is the real-time reverse transcription–polymerase chain reaction (RT-PCR) detection of viral RNA from sputum or nasopharyngeal swab. However, these tests need human intervention, present a low positive rate at early infection stages, and need up to 6 h to give results. Thus, to speed up the control of this pandemic, there is a need for fast and early diagnosis tools, especially since, in the long term, when lockdown measures are completely lifted, tests should be done at a large scale to prevent the rebound of such a pandemic.

Due to limited resources and technologies, testing has been limited in some countries to patients exhibiting symptoms and, in many cases, multiple symptoms. Needless to point out, the large strain that the situation has imposed on the national health-care systems and workers, even those in the most developed countries, feeds into the difficulties of identifying and tracking possible cases.

Artificial intelligence algorithms, which are approaches used to implement AI systems, help with many questions concerning the pandemic, ranging from vaccine and drug research, through tracking people's mobility and whether and how they adhere to social distancing guidelines, to evaluating lung CT scans and X-rays for faster diagnosis and for tracking the progression of patients.

Progressive efforts, relying on AI algorithms, are currently being exerted for diagnosing COVID-19. Advanced types of neural networks are being used, in large-scale screening for the virus, to classify patients based on their respiratory pattern (Wang et al., 2020). Similarly, the detection of COVID-19 was targeted by analyzing chest CT scans in the study by Gozes et al. (2020). The development of automated diagnostic systems enhances the accuracy and speed of the diagnosis, and protects workers in the health sector by notifying them of the condition severity of each infected patient (Alimadadi et al., 2020).

In this context, we are proposing an AI medical platform that aims to collect multimodal data from different sources, integrating both network and medical sensory systems, with the goal to efficiently participate in the worldwide fight against this pandemic. The objective is to address several challenges faced by low-income and middle-income countries due to people’s limited access to quality care and limited medical resources available or offered by the governments when facing large-scale pandemics such as COVID-19.

Different segmentation techniques are available in the literature. However, for the scope of this article, we focused on AI-based techniques and conducted a thorough literature review on the topic. It is worth mentioning that this article is part of ongoing research. In order to reach an optimal solution, we intend to go further and investigate other types of segmentation techniques and compare results according to accuracy and efficiency.

The outline of this article is as follows: Section 2 presents a literature review covering AI applications in the field of medical imaging with a brief description of each deep learning method used. The literature review also covers many techniques involving the detection of COVID-19 as well as its severity. Section 3 covers the methodology that was adopted in the development of the platform. Section 4 includes the results achieved by the AI medical hub platform. Finally, Section 5 concludes the article with insights on other usages of the platform.

2 Related Work

In this section, we cover a wide scope of the literature as related to the use of artificial intelligence algorithms in various applications of medical imaging. This section also covers different computer vision and image processing methods in relation to COVID-19 medical diagnosis.

2.1 AI in Medical Imaging

Image classification can be described as the task of sorting images into one of numerous classes. It is a basic computer vision problem and provides the backbone for other computer vision tasks such as detection, segmentation, and localization. This type of problem has been solved in recent years using deep learning models that leverage several layers of nonlinear information processing for feature extraction and transformation as well as for pattern classification and analysis (Rawat and Wang, 2017).

Computer-assisted image processing has been essential in problems involving the area of medical imaging. Recent developments in AI, particularly in deep learning, led to a major breakthrough in the field of image interpretation to help recognize, classify, and quantify patterns in medical images (Shen et al., 2017b). In particular, the cornerstone of these innovations is the use of hierarchical feature representations learned exclusively from data, instead of handcrafted features, which often relied on domain-specific knowledge. Deep learning thus appears to be the new building block for achieving increased efficiency in numerous medical applications (Shen et al., 2017b).

The key force behind the advent of AI in medical imaging was the need for better quality and effectiveness in clinical treatment. Radiology imaging data tend to increase at a significant pace relative to the amount of qualified readers available. This fact has pressured health-care professionals to compensate through efficiency in analyzing images (Wu et al., 2016).

Remarkable developments in other AI methods in the literature have been reported through the use of deep learning. These achievements were compelling enough to draw researchers into the field of computational medical imaging to explore, for example, the ability of deep learning in medical images acquired with computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET), and X-ray. The following are functional implementations of deep learning for image localization, cell structure recognition, tissue segmentation, and computer-aided disease diagnosis (Shen et al., 2017b).

Artificial neural networks belong to a family of models in AI that emulate the structural beauty of the human neural system to comprehend and learn from hidden patterns via massive observations. The first trainable neural network consisted of a single layer: the perceptron (Rosenblatt, 1958). The modified perceptron, with several output units, is considered a linear model, which prevents applications from dealing with complex data patterns, even with the use of nonlinear functions in the output layer. This constraint is effectively bypassed by adding a hidden layer between the input layer and the output layer. Under certain assumptions on the activation function, a two-layer neural network with a finite number of hidden units can approximate any continuous function (Chen et al., 1995), and is therefore called a universal approximator. However, it is often possible to approximate functions at the same precision using a deep design, that is, one with more than two layers, with fewer units in total (Bengio, 2009). Thus, the number of trainable parameters may be decreased, facilitating the training process with a comparatively limited dataset (Schwarz, 1978).

2.2 AI for COVID-19 Detection

The medical sector is seeking, in this global health crisis, innovative solutions to track and contain the COVID-19 (coronavirus) pandemic. Artificial intelligence is a technology that scientists can rely on, since it can quickly classify high-risk patients, monitor the progression of this virus, and effectively manage this outbreak in real time. This technology also has the power of estimating the severity of cases by examining previous patients’ records, but still the rates of accuracy, true negatives, and false positives can be further enhanced to avoid misinterpretation in medical treatment (Haleem et al., 2020; Bai et al., 2020; Hu et al., 2020). The main application of AI in the COVID-19 pandemic, which this work targets, is the early detection and diagnosis of the infection. Artificial intelligence could easily analyze abnormalities viewed by symptoms and the so-called red flags, warning patients and health-care authorities through this process (Ai et al., 2020; Luo et al., 2020). It creates a cost-effective quick decision-making algorithm. With the help of numerous algorithms in AI, modern COVID-19 cases can be detected and managed in a classified framework. Computed tomography (CT) and magnetic resonance imaging (MRI) represent valuable input to AI algorithms, scanning human body sections for the sake of diagnosis.

The basic phase in image processing and interpretation to detect and assess COVID-19 is segmentation. It defines the key factor for the AI algorithm, which is the regions of interest (ROIs) captured in chest X-rays or CT images. Self-learned features or even handcrafted ones can be derived using these segmented regions (Shi et al., 2020a).

Computed tomography is one of the main contributors of high-quality 3D images in the field of COVID-19 detection. Deep learning techniques shine in the domain of ROI segmentation where the most popular ones used for coronavirus are U-Net (Cao et al., 2020; Gozes et al., 2020; Huang et al., 2020; Li et al., 2020; Qi et al., 2020; Zheng et al., 2020), U-Net++ (Chen et al., 2020; Jin et al., 2020), and VB-Net (Shan+ et al., 2021). Despite the dominance of X-ray over CT in the medical sector due to its accessibility, the segmentation process in X-ray images is more difficult. This is a result of the image contrast in the 2D projection of ribs onto soft tissue.

In the context of COVID-19, segmentation is demonstrated as an essential block in the interpretation of this virus. Gaál et al. (2020) proposed Attention-U-Net for the segmentation of the lungs that can extract features related to pneumonia. Such a method can be associated with the diagnosis of coronavirus (Shi et al., 2020a).

Segmentation mechanisms are categorized into two groups: segmentation of the lung according to regions or according to damages. The first separates the lung itself and its lobes from other regions in X-ray or CT, and this is the first step in every application targeting COVID-19 (Cao et al., 2020; Gozes et al., 2020; Huang et al., 2020; Jin et al., 2020; Qi et al., 2020; Shan+ et al., 2021; Tang et al., 2020a; Zheng et al., 2020). The second separates the damages inside the lung from the regions of the lung (Cao et al., 2020; Chen et al., 2020; Gozes et al., 2020; Huang et al., 2020; Jin et al., 2020; Li et al., 2020; Qi et al., 2020; Shan+ et al., 2021; Shen et al., 2020; Tang et al., 2020a). These lesions are variable in shape and in texture, and thus, identifying them is considered a complex task. However, Gaál et al. (2020) presented an attention mechanism that was regarded as an effective localization algorithm in screening lesions. This mechanism can be used to track damages caused by COVID-19. Back to the first group, Jin et al. (2020) created a pipelined method consisting of two stages to capture COVID-19 in CT images, and the first stage is to detect the lung region. This method was based on U-Net++.

In the literature, lung segmentation was tackled with several methods aiming for different goals (Cicek et al., 2016; Milletari et al., 2016; Isensee et al., 2018; Zhou et al., 2018). One of the popular techniques used in COVID-19 apps is U-Net (Cao et al., 2020; Huang et al., 2020; Qi et al., 2020; Zheng et al., 2020). This method proved its capability in both groups mentioned before. Ronneberger et al. (2015), who introduced U-Net, defined it as a fully convolutional network with a U-shaped structure that is symmetric in its encoding and decoding paths. At each level, a shortcut connection links the corresponding layers of the two paths. As a result, visual semantics can be efficiently learned, as well as textures, which are the main concern in medical segmentation. Advanced versions of U-Net have also been applied in the COVID-19 field. A higher-dimension (3D) U-Net was presented by Cicek et al. (2016), in which the layers were replaced by their 3D counterparts. U-Net++ is a more adaptive model (Zhou et al., 2018), where a nested convolutional architecture is inserted between the encoding and decoding paths. This algorithm also contributes to the solutions for the pandemic through localization of damages in COVID-19 diagnosis (Chen et al., 2020). A composite method that uses the attention mechanism and U-Net was able to extract exact features in medical images, which can be considered as an appropriate segmentation method to deal with COVID-19. Another technique that serves segmentation is V-Net (Milletari et al., 2016), which uses a residual module as its basic convolutional module and Dice loss as its training objective. By adding a bottleneck to the convolutional module, VB-Net achieves effective segmentation (Shan+ et al., 2021). All these types of networks exhibit good segmentation performance. However, the main concern is how the training procedure would be done.
Adequate labeled data are the principal constraint for training a segmentation process. Obtaining sufficient data was a hard task in COVID-19 image segmentation since manual delineation of lesions is exhausting in terms of labor and time. Researchers and radiologists are collaborating to solve this problem. An initial seed that was fed into the U-Net algorithm showed that it converges toward an acceptable threshold (Qi et al., 2020). Zheng et al. (2020) restructured the problem as an unsupervised method in order to create a pseudo-segmentation mask for CT images. The COVID-19 literature reveals that unsupervised and weakly supervised learning mechanisms are desired techniques due to the shortage of labeled medical images.

Segmentation in COVID-19 apps has been a rich topic in the literature. Li et al. (2020) performed lung segmentation to differentiate between coronavirus and pneumonia using U-Net on chest CT. Early detection of COVID is the most important factor in protecting the community, so fast AI methods should be used in the process of diagnosis. Jin et al. (2020) proposed such a method using CT slices as input to the model where these slices were the result of a segmentation network. As a conclusion, the segmentation process is a foundation in the realm of COVID-19 apps as it makes radiologists’ lives much easier; it provides them with accurate recognition of regions of interest and reliable diagnosis of the virus.

Another efficient method to ignore unnecessary parts of the image is image preprocessing. This can play the role of segmentation in COVID-19 apps. Image preprocessing can be subdivided into two categories: restoration and reconstruction of the image. The basic approach of image restoration is to eliminate noise through filtering. Multilayer neural network–based filters have been developed in order to recognize image edges and enhance the noise detection mechanism (Suzuki et al., 2001; Suzuki et al., 2002a; Suzuki et al., 2002b; Suzuki et al., 2004). Reconstruction of medical images can be a complex problem when the noisy data include nonlinearity. Therefore, this problem is considered ill-conditioned, and hence, it can only be dealt with by relaxing conditions and making simplifying assumptions. Feedforward (Nejatali and Ciric, 1998; El et al., 2000) and Kohonen neural networks (Adler and Guardo, 1995; Comtat and Morel, 1995) are common techniques in the field of reconstruction since they produce a linear approximation of the noisy data.

Before discussing the classification of COVID-19 from other diseases and the severity of the virus infecting certain patients, a brief summary concerning the stages of radiology patterns in CT images must be conducted. Pan et al. (2020) reported that these patterns are analyzed over four stages. The first stage is the early stage (day 0 to day 4), whereby initial symptoms arise, and lesions’ regions, extracted from chest CT, can be observed in the lower lobes of the lung. In stage two (day 5 to day 8), lesions spread out and become thicker reaching multilobes. In the third stage (day 9 to day 14), lesions are widespread with a dense intensity; this is the dangerous stage. An absorption stage defines the last stage where the virus is contained. The importance of these patterns lies mainly in the classification and severity analysis of the infection state.

Distinguishing COVID-19 patients from other patients is a challenging task that recent studies have targeted. Chen et al. (2020) deployed the segmentation model obtained via U-Net++ to label patients as COVID-19 and non–COVID-19. Segmented lesions in that study were sufficient to predict the label and distinguish COVID-19 patients from patients with other diseases. The study used CT images of 106 patients and classified them. These contributions save the reading time of radiologists. Another paper (Zheng et al., 2020) used a combination of the U-Net model for the segmentation process and a 3D convolutional neural network that takes the output of the previous model and generates the probability of labels. A dataset consisting of 540 chest CT images was tested, yielding the following rates: sensitivity of 0.907, specificity of 0.911, and an area under the ROC curve of 0.959. Yet another method (Jin et al., 2020) combined U-Net++ for the sake of lesion localization and ResNet50 as a classification mechanism. The technique showed better results in specificity and sensitivity (0.922 and 0.974, respectively) based on 1,136 chest CT images.

Another factor that plays a principal role in medical treatment is the severity assessment. VB-Net was used to identify this factor in the study by Shi et al. (2020b). The segmentation was done based on infection volumes in the regions of interest in order to train a random forest (RF) architecture. Another RF-based architecture was introduced by Tang et al. (2020b) to classify the COVID-19 level as severe or non-severe, where 176 chest CT images were tested. The architecture showed an accuracy rate of 0.875. In conclusion, researchers have developed promising techniques with the use of artificial intelligence for the diagnosis of COVID-19. The results of these studies are considered reliable for classifying patients. Also, severity estimation is a key factor in the treatment procedure because it determines, for example, the need for ICU admission.

3 The COVID-19 Detection System

This section covers the methodology that was adopted in the development of the platform. It includes detailed explanation of the architecture, defines each block and its aspects, and demonstrates the followed evaluation measurements and calibration metrics.

3.1 Architecture Overview and Challenges

One of the main challenges in every health-care system is the limited resources (Abdellatif et al., 2019; Abdellatif et al., 2020). The availability and quality of data determine the reliability of the study and the quality of the architecture. Training on CT images requires a large dataset to secure that reliability. Therefore, a large dataset was deployed in this study, consisting of 6,752 CT scans (see Section 4.1).

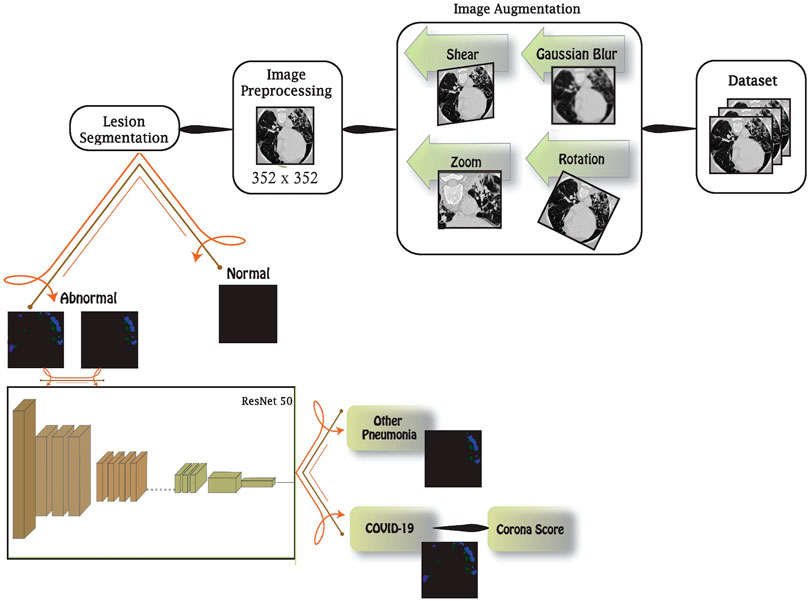

We are proposing an AI-based medical hub platform that integrates AI and image processing for early detection of different problematic medical conditions. This platform targets medical problems that involve medical imaging. It provides the detection of abnormalities and classifies them accordingly. For the purpose of this work, we are targeting COVID-19 as a priority medical condition. The chest images, in the dataset, go through several blocks in this architecture, as shown in Figure 1.

First, the data are augmented to enrich the latter blocks with more images and to highlight special features to be recognized. This is done by rotating, shearing, zooming, and blurring images. Then, augmented data are preprocessed in two stages: standardization and normalization. These stages are essential to unify the data fed to the network. In order to identify abnormalities in images, the preprocessed images are fed into the lesion segmentation block that uses InfNet to treat abnormal aspects and features. In addition to early detection using this block, we are enabling it to detect the condition severity through further image analysis. Finally, the abnormal images are loaded into a deep network using the transfer learning method in order to distinguish between COVID-19 and other viral pneumonia conditions. Detailed information on each block will be elaborated in the following sections.

3.2 Image Augmentation

Data augmentation is a method used to yield extra data from the current dataset. In this case, it creates perturbed copies of the existing images. The main goal is to expose the neural network to more variation, which leads to a network that distinguishes relevant from irrelevant characteristics in the dataset. Image augmentation can be done using several techniques, which are applied as needed according to data availability and quality. Our proposal integrates multiple techniques to build a larger dataset covering different conditions, as follows:

• Rotation: the image is rotated within an interval between −10° and 10°.

• Zoom: scaling the image by zooming in or out would also increase the set.

• Shear: image shearing can be performed using rotation with the third dimension imitation factor.

• Gaussian blur: using a Gaussian filter, high-frequency factors can be eliminated, causing a blurred version of an image.

Using these methods, the dataset was enlarged and used in the training phase. Nevertheless, during the testing phase, the testing set will not be augmented. Keeping the test set unaugmented allows the robustness of the architecture to be assessed on unmodified images and exposes any over-fitting.
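The four perturbations listed above can be illustrated with a minimal Python sketch. Only the rotation range of −10° to 10° comes from the text; the zoom, shear, and blur ranges, as well as the function names, are assumptions chosen for illustration and are not the settings used in this study.

```python
import math
import random


def sample_augmentation(rng):
    """Draw one random set of augmentation parameters."""
    return {
        "angle_deg": rng.uniform(-10.0, 10.0),  # rotation range stated in the text
        "zoom": rng.uniform(0.9, 1.1),          # assumed zoom range
        "shear_deg": rng.uniform(-5.0, 5.0),    # assumed shear range
        "blur_sigma": rng.uniform(0.0, 1.0),    # assumed Gaussian blur strength
    }


def affine_matrix(angle_deg, zoom, shear_deg):
    """Compose rotation, shear, and zoom into one 2x2 linear map."""
    a = math.radians(angle_deg)
    s = math.tan(math.radians(shear_deg))
    rot = [[math.cos(a), -math.sin(a)], [math.sin(a), math.cos(a)]]
    shear = [[1.0, s], [0.0, 1.0]]
    # zoom * (rot @ shear)
    return [
        [zoom * sum(rot[r][k] * shear[k][c] for k in range(2)) for c in range(2)]
        for r in range(2)
    ]


def gaussian_kernel(sigma, radius=2):
    """Normalized 1D Gaussian kernel; applied along rows, then columns, it blurs an image."""
    if sigma <= 0:
        return [1.0]
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]
```

Each training image would receive its own draw from `sample_augmentation`, while test images would be left untouched, in line with the paragraph above.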

3.3 Image Preprocessing

Since the data typically come from various origins, a strategy to guide the complexity and accuracy is essential. Image preprocessing ensures complexity reduction and better accuracy yielded from certain data. This method standardizes the data through several stages in order to feed the network with a clean dataset. In this architecture, data preprocessing is performed through the following stages:

• Image standardization: neural networks that deal with images need inputs with a unified aspect ratio. Therefore, the first step is to resize the images to uniform dimensions and a square shape, which is the typical shape used in neural networks.

• Normalization: input pixels to any AI algorithm must have normalized data distribution to enhance the convergence of the training phase. Normalization is the action of subtracting the mean of the distribution from each pixel and dividing by standard deviation. To achieve positive values, scaling normalized data is considered at the end of this step.
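The two preprocessing stages can be sketched in plain Python as follows. This is an illustration under stated assumptions: nearest-neighbour resizing and a final min-max rescaling to [0, 1] are choices made here to obtain square images and positive values; the text does not specify which resizing or scaling method was used.

```python
from statistics import mean, pstdev


def resize_nearest(image, size):
    """Resize a 2D list of pixels to size x size with nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    return [
        [image[r * h // size][c * w // size] for c in range(size)]
        for r in range(size)
    ]


def normalize(image, eps=1e-8):
    """Subtract the mean, divide by the standard deviation, then rescale to [0, 1]."""
    flat = [p for row in image for p in row]
    mu, sigma = mean(flat), pstdev(flat)
    z = [[(p - mu) / (sigma + eps) for p in row] for row in image]
    lo = min(min(row) for row in z)
    hi = max(max(row) for row in z)
    # Min-max rescaling (an assumed choice) makes every value non-negative.
    return [[(p - lo) / (hi - lo + eps) for p in row] for row in z]
```

For example, `normalize(resize_nearest(scan, 224))` would produce a 224 × 224 input with values between 0 and 1, where `scan` and the size 224 are hypothetical.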

3.4 Lesions’ Segmentation

Ground glass opacities (GGOs) and consolidation are the main extracted features in the case of COVID-19 patients, where 97.86% of patients have such infections within 21 days of the presence of COVID-19 (Liang et al., 2020). Therefore, we used lesions’ segmentation to identify the existence of these infections and the infected volume. These infections are also caused by other viral pneumonia, so this block will classify an input image as normal or abnormal. Normal ones are released as output, while abnormal ones will enter the ResNet block in order to identify COVID-19 patients. The segmentation method uses the architecture of InfNet.

The preprocessed data consisting of CT images will undergo the famous split ratio mentioned by the Pareto principle. 80% of the images will be considered in the training process, while 20% of the dataset will be used in testing the network.
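A minimal sketch of such an 80/20 split is shown below. The shuffling step and the fixed seed are assumptions added for reproducibility; the text only states the ratio.

```python
import random


def train_test_split(items, train_fraction=0.8, seed=42):
    """Shuffle the items and split them into training and testing subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

Splitting at the patient level rather than the image level, where patient identifiers are available, would avoid slices from the same patient appearing in both subsets.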

The preprocessed CT images are fed into two convolutional layers where low-resolution features are extracted. Then, these features are inserted into three convolutional layers to extract the high-level ones. In the context of segmentation, it was shown that edge information can be beneficial, and thus, an edge attention unit is placed to enhance the demonstration of regions of interest.

A global map is produced by aggregating the high-level features with a parallel partial decoder to segment the lung lesions. The output and high-level features are joined with low-level features and inserted into cascaded reverse attention units guided by the global map. Finally, the output is passed through an activation function (sigmoid) to estimate the regions of infection (Fan et al., 2020).

This block segments the infected sections in a CT-scan image to create a colored representation of the GGO and consolidation segment. The absence of such sections will result in a blank black image. Blank images are classified as normal. The rest of the abnormal images are fed to the ResNet50 deep network model. These representations are also quantified to be used later in calculating the corona score.
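The routing rule described above can be sketched as follows, assuming the segmentation output has been reduced to a binary mask (1 for a lesion pixel, 0 otherwise). The function names and the string labels are hypothetical.

```python
def infected_fraction(mask):
    """Share of pixels flagged as lesion in a binary mask (2D list of 0/1)."""
    total = sum(len(row) for row in mask)
    infected = sum(sum(row) for row in mask)
    return infected / total if total else 0.0


def route(mask):
    """Blank masks are labelled normal; any other mask goes on to the classifier."""
    return "normal" if infected_fraction(mask) == 0.0 else "resnet50"
```

The value returned by `infected_fraction` is the kind of quantity that can later feed the severity estimate.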

3.5 ResNet50 Deep Network

Transfer learning is a well-known method, widely covered in the literature, that can be used to train convolutional neural networks. Using this method, the network is pretrained on a vast database known as ImageNet. This step initializes the layers' weights, and loading such weights before deploying the network in the current architecture diminishes the vanishing gradient problem. This is a key advantage of transfer learning that enhances the convergence of the objective. Another advantage of this type of learning is that the pretrained layers already extract relevant features of the images, such as shapes and edges. As a result, the computational time is reduced by limiting the computations to the final layers in the training procedure.

The residual network ResNet is one of the advanced deep learning algorithms that surpass many other dense networks in various metrics, especially accuracy and computational complexity (He et al., 2016; Vatathanavaro et al., 2018). This is why we used this algorithm for detecting the coronavirus and distinguishing it from other viral pneumonia infections using transfer learning.

In order to identify the dataset through numerous classes, the pretrained model characterizes the last layers as classification layers, and thus, the extracted features will be saved in the last convolutional layer to be transformed into prediction values for each class. The initialized weights (the fundamental factor of transfer learning methods) do not change, except for the last layer, which will be trained to produce estimates, which leads the classification process for our new set of images. Other layers are inserted into this model like the pooling layer, dropout layer, flattening layer, and the activation functions (rectified linear unit).

The residual network used in this architecture is ResNet50 (Vatathanavaro et al., 2018), a deep network of 50 layers in which the learning rate controls how strongly the weights of the layers are adapted at each step. In each iteration, the weights are updated based on the loss derived from the predicted and expected values. The formula of the updated weights is as follows:

$$w_{i+1} = w_i - \alpha \frac{\partial L}{\partial w_i}$$

where w represents the weight, i is the iteration in the process, L is the loss function, and α is the learning rate.
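As an illustration of this update, the sketch below applies a plain gradient step, scaled by the learning rate, to a toy one-parameter loss. The toy loss L(w) = (w − 3)² and the learning rate of 0.1 are assumptions for demonstration only and are unrelated to the ResNet50 training itself.

```python
def sgd_step(weights, grads, lr):
    """Move each weight against its gradient, scaled by the learning rate."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Toy loss L(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w = [0.0]
for _ in range(100):
    grad = [2.0 * (w[0] - 3.0)]
    w = sgd_step(w, grad, lr=0.1)
# After the loop, w[0] is very close to the minimizer 3.0.
```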

3.6 Evaluation Metrics

The performance of the proposed architecture is evaluated based on several statistical measures in addition to our new metric, defined as the corona score.

3.6.1 Accuracy

Accuracy is a metric that quantifies the competency of the method in correctly predicting cases:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where:

• TP: true positive is equal to the number of correctly predicted positive cases.

• FP: false positive is equal to the number of incorrectly predicted positive cases.

• TN: true negative is equal to the number of correctly predicted negative cases.

• FN: false negative is equal to the number of incorrectly predicted negative cases.

3.6.2 Recall

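Recall is the sensitivity of the method, that is, the share of actual positive cases that are correctly predicted:

$$\text{Recall} = \frac{TP}{TP + FN}$$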

3.6.3 Precision

Precision is the ratio of correctly predicted positive cases to the total number of predicted positives:

$$\text{Precision} = \frac{TP}{TP + FP}$$

3.6.4 Specificity

Specificity is the ratio of correctly predicted negatives to all negative observations:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

3.6.5 F1-Score

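F1-Score is a measure of the quality of detection, defined as the harmonic mean of precision and recall:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$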
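The five measures of Sections 3.6.1 to 3.6.5 can all be computed from the four confusion-matrix counts. The following sketch uses the standard definitions; the function name and the convention of returning 0.0 for an empty denominator are assumptions made here.

```python
def _ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, recall, precision, specificity, and F1 from counts."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "recall": recall,
        "precision": precision,
        "specificity": _ratio(tn, tn + fp),
        "f1": _ratio(2.0 * precision * recall, precision + recall),
    }
```

For instance, with hypothetical counts TP = 90, FP = 10, TN = 85, and FN = 15, the accuracy is 175/200 = 0.875 and the precision is 90/100 = 0.9.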

3.7 Corona Score

The corona score is a new measure introduced to evaluate the severity of the virus inside the lungs. It depends on the volume of the lungs and the volume of the infected part evaluated by the segmentation block. This measure is calculated as follows:

• Given a lung CT image, the volume of the lung is first observed.

• The alveolar region, known as parenchyma, represents 90% of the total volume of the lung (Knudsen and Ochs, 2018).

• Radiologists categorize the severity of coronavirus based on the degree of GGOs and consolidation present in lung CT images: minimal (less than 10% of lung parenchyma), moderate (10–25%), intermediate (25–50%), severe (50–75%), and critical (more than 75% of lung parenchyma) (Guillo et al., 2020).
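One way to combine these three ingredients is sketched below. It is an assumption, not the formula of this work: the infected volume is divided by the parenchyma volume (taken as 90% of the lung volume), and the resulting share is mapped to the five categories. How values falling exactly on a boundary (10%, 25%, 50%, 75%) are assigned is also a choice made here.

```python
PARENCHYMA_FRACTION = 0.90  # share of lung volume that is parenchyma (from the text)


def infected_share(infected_volume, lung_volume):
    """Infected volume as a fraction of the lung parenchyma (assumed combination)."""
    parenchyma_volume = PARENCHYMA_FRACTION * lung_volume
    return infected_volume / parenchyma_volume


def severity(share):
    """Map the infected share of parenchyma to a severity category."""
    if share < 0.10:
        return "minimal"
    if share < 0.25:
        return "moderate"
    if share < 0.50:
        return "intermediate"
    if share <= 0.75:
        return "severe"
    return "critical"
```

With a hypothetical lung volume of 5,000 mL and an infected volume of 900 mL, the share is 900/4,500 = 0.2, which falls in the moderate category.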

3.8 Calibration Metrics

This architecture integrates different calibration techniques according to the test results and task at hand. In this section, we highlight the different techniques accounted for in our platform.

3.8.1 Learning Rate

The learning rate is a critical value in the weight update formula, and its optimal value is often hard to find. Both low and high learning rates lead to problems. Low values decrease the speed of the training process, causing a delay in the whole procedure. In contrast, high values speed up training but can make the weight updates overshoot, so the weights do not settle near an optimum. In this model, we used the choice of learning rate proposed by Smith (2017), who varied the learning rate cyclically within a practical interval to achieve better classification accuracy. Another technique for defining this rate is to initialize it and update it using the SGD optimizer:

$$\alpha = \alpha_0 \times \frac{1}{1 + \text{decay} \times \text{iterations}}$$

where α₀ is the initial learning rate, iterations represent the steps within an epoch, and decay is a decaying parameter proposed by the optimizer.
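Both learning-rate strategies mentioned above can be written in a few lines. The sketch below shows the triangular cyclical policy described by Smith (2017) and a time-based decay of the form $\alpha_0 / (1 + \text{decay} \times \text{iterations})$. The function names and the example bounds are assumptions, not the settings used in this study.

```python
import math


def triangular_clr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate (Smith, 2017).

    The rate rises linearly from base_lr to max_lr over step_size
    iterations, then falls back to base_lr over the next step_size.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


def time_based_decay(initial_lr, decay, iterations):
    """Time-based decay: initial_lr / (1 + decay * iterations)."""
    return initial_lr / (1.0 + decay * iterations)
```

For example, with `base_lr=1e-4`, `max_lr=1e-2`, and `step_size=100`, the cyclical rate starts at the lower bound, reaches the upper bound at iteration 100, and returns to the lower bound at iteration 200.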

3.8.2 Loss Function

The loss function measures the performance of the algorithm when it is trained on the dataset. It estimates how far the predictions are from the ground truth, and the algorithm is then optimized by minimizing this loss. The loss function therefore indicates whether tuning the algorithm in a certain way is beneficial. There are different categories of loss functions: regression, binary classification, and multi-class classification. In the current study, binary classification loss functions are the natural choice, since both blocks, segmentation and ResNet50, produce binary classifications (normal vs. abnormal and COVID vs. non-COVID, respectively). Under this category, there exist several loss functions: binary cross entropy, hinge loss, and squared hinge loss.

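In this architecture, we used binary cross entropy (BCE) as the loss function for both the segmentation block and the ResNet50 block. The equation of BCE is as follows:

$$\text{BCE} = -\frac{1}{M} \sum_{j=1}^{M} \left[ y_j \log(\hat{y}_j) + (1 - y_j) \log(1 - \hat{y}_j) \right]$$

where M is the output size, y_j is the true label of output j, and ŷ_j is its predicted probability.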
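As an illustration of the binary cross entropy loss, the sketch below averages the per-output terms $-[y \log \hat{y} + (1 - y) \log(1 - \hat{y})]$ over the M outputs. The function name and the clipping constant `eps`, which guards against log(0), are assumptions rather than details from the study.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross entropy over M outputs.

    y_true holds labels in {0, 1}; y_pred holds predicted probabilities.
    Probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)
```

Confident correct predictions give a loss near zero, whereas confident wrong predictions are penalized heavily, which is why minimizing this loss pushes predicted probabilities toward the true labels.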

3.8.3 Regularization

One of the main problems to avoid in AI algorithms is over-fitting, which occurs when the algorithm is fitted too closely to the training set. The output of the network is then tied to the specific outputs seen during training and generalizes poorly to new inputs. Regularization is a mechanism that adjusts the mapping and mitigates over-fitting. Data augmentation is commonly used to enlarge the training dataset and thus reduce over-fitting, but its large memory cost can be a concern. Regularization methods can be used irrespective of the training dataset size. Dropout and drop-connect are the best-known regularization techniques. Fully connected networks are usually subjected to dropout mechanisms based on the probability distributions of connections in each layer. In this process, connections are dropped, and nodes are dropped as a result. Dropout has many versions that are chosen according to the given problem, such as fast dropout, adaptive dropout, evolution dropout, spatial dropout, nested dropout, and max pooling dropout.
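To make the idea concrete, the following sketch implements standard (inverted) dropout on a list of activations. It is not the fast dropout variant used in the segmentation block, and the function name and `seed` parameter are assumptions for illustration.

```python
import random


def dropout(activations, rate, seed=None, training=True):
    """Standard inverted dropout.

    During training, each activation is zeroed with probability `rate`
    and the survivors are scaled by 1 / (1 - rate), so the expected
    value is unchanged. At inference time the input passes through.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = random.Random(seed)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a different random subset of units is silenced at every training step, the network cannot rely on any single unit, which is the mechanism by which dropout reduces over-fitting.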

4 Model Simulation and Results

The proposed architecture was implemented in Python v3.6 using PyCharm in a Windows 10 environment, with the aid of different AI and image processing libraries that increase training efficiency to achieve better performance. Our testing environment employed the fastai, NumPy, SciPy, and OpenCV libraries and was accelerated by an NVIDIA GeForce RTX 2070 Super GPU with 8 GB of dedicated memory. The simulation is based on Linux and Python coding that is compatible with most hardware, and we tested our models using real datasets used in the literature.

In this section, we will discuss the testing procedure of both blocks, lesion segmentation and ResNet50 deep network, separately. Each block will have its own evaluation metrics based on the predicted output. Next, the entire system will be subjected to a full test to evaluate the system’s performance. The platform test results are shown in the following tables and figures.

4.1 Dataset

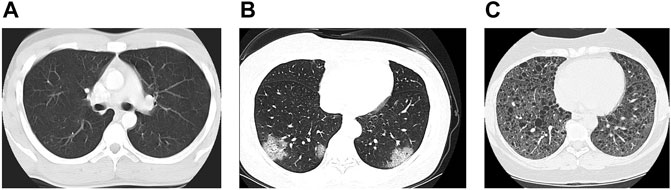

The CT chest image dataset used in our study was provided by the China National Center for Bio-information. These images are labeled into three categories: coronavirus pneumonia, common pneumonia, and normal. The China National Center released these datasets publicly to assist researchers in their fight against the pandemic. The dataset consists of 617,775 CT slices of 6,752 CT scans from 4,154 patients, distributed over 999 COVID-19 patients, 1,687 normal patients, and 1,468 common pneumonia patients (Zhang et al., 2020). Figure 2 shows the different classes considered in this study: a) normal, b) COVID-19 pneumonia, and c) common pneumonia. In our study, we chose 450 images randomly from each class. Augmenting the chosen dataset produced 150 additional images, 50 from each class, yielding 1,500 images in total.

4.2 Lesion Segmentation Block

Our proposed model was trained to analyze chest CT images in order to separate abnormal cases (infected lungs) from normal cases (healthy lungs).

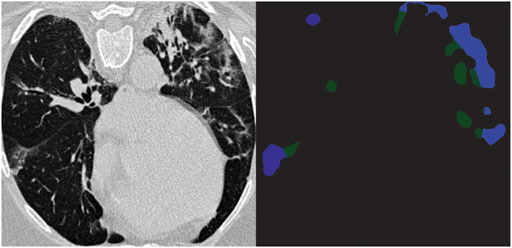

The segmentation block was fed with a large dataset, including augmented images, to analyze and detect the existence of GGOs and consolidation and to measure their size and position in patients’ lungs. The existence of one or both of these findings was the criterion for classifying cases as normal or abnormal. The combined augmented and real data amounted to 1,500 CT chest images: 500 normal, 500 COVID-19, and 500 other pneumonia diseases. As mentioned earlier, 80% of these images (1,200 images) were used as a training set. The output of this block is either a blank image, which indicates the absence of infection, or a segmented version of the image showing GGO and consolidation in blue and green, respectively, as shown in Figure 3. After an abnormality is detected, the corona score is calculated and passed as an output alongside the positive COVID-19 prediction of the latter block.
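The 80/20 division of the 1,500 images can be sketched as a per-class split. The text does not state how the split was drawn, so the stratified shuffling, the function name, and the placeholder file names below are assumptions.

```python
import random


def stratified_split(items_by_class, train_fraction=0.8, seed=0):
    """Shuffle each class separately and split it into train and test parts.

    items_by_class maps a class label to a list of items (e.g. file names).
    Returns two lists of (item, label) pairs.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        cut = round(train_fraction * len(shuffled))
        train += [(item, label) for item in shuffled[:cut]]
        test += [(item, label) for item in shuffled[cut:]]
    return train, test


# Hypothetical file names standing in for the 500 images of each class.
dataset = {
    label: [f"{label}_{i:03d}.png" for i in range(500)]
    for label in ("normal", "covid", "pneumonia")
}
train_set, test_set = stratified_split(dataset)
```

With 500 images per class, this yields 1,200 training images and 300 test images, with 100 test images from each class.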

The size of the CT image fed to the system is 352 × 352. Images are resampled in order to generalize the module. The learning rate in this module is set to 0.0001 with the Adam optimizer. The training considers pseudo values and converges in about 8 h over 200 epochs. The loss function used is BCE, as mentioned in the calibration metrics section. This function considers the ground truth map and the edge map generated by the convolutional layer. Regularization is then applied using fast dropout.

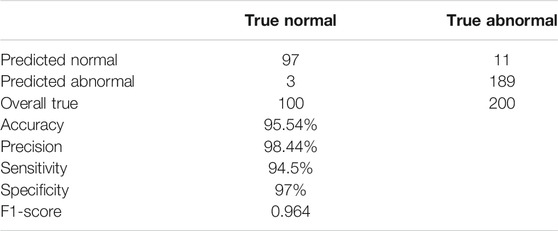

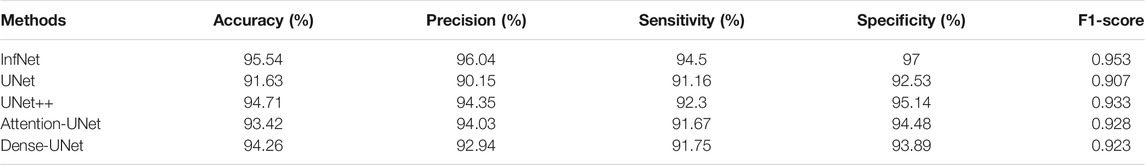

The trained InfNet model showed 95.54% accuracy. Some of the abnormal images were misclassified because they showed an initial or early stage of pneumonia, where the lungs are only slightly damaged and the infections are not yet recognizable. Table 1 summarizes the results of the testing dataset. The proposed model correctly predicted 189 out of 200 abnormal images (94.5%) and 97 out of 100 normal images, which correspond to the sensitivity and specificity, respectively, with an F1-score of 0.964 and a precision of 98.44%.

In order to make sure that InfNet is the best choice to be deployed in this architecture, different segmentation methods were tested and compared to these results.

All of the methods in Table 2 were tested to detect the infected regions (regions of interest). The classification was then performed and the evaluation metrics were derived.

4.3 ResNet50 Deep Network Block

The deep network model ResNet50 is trained to classify the nature of pneumonia by distinguishing between COVID and non-COVID pneumonia in the images. This AI model depends on error backpropagation between layers. At the same time, weights are updated according to the learning rate, which is set to 0.001 following Smith (2017). This algorithm has 23 million parameters that are tuned through several optimization techniques. One of the key design choices is the loss function; in our current system, we used the binary cross entropy loss function. Similar to the segmentation block, the augmented dataset is fed to this block, excluding normal cases: 800 images are used to train the algorithm and 200 images for validation. The validation process is based on the value of the loss function, which depends on the difference between the predicted and true values and the probability of the class.

In the testing mode, the network uses the probability score of each class to make a prediction, and the class with the minimum loss value is predicted. Segmented images decreased the model convergence time by minimizing the size of the region of interest. Moreover, the position of the infection provides additional knowledge, since COVID-19 is known to infect the edges of the lungs bilaterally (Ding et al., 2020).
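A minimal sketch of this decision rule is given below. It assumes the per-class loss is the negative log of the class probability, so that the minimum-loss class is the one with the highest probability. The function name and the label strings are illustrative.

```python
import math


def predict_class(probabilities, labels=("non-COVID", "COVID")):
    """Pick the class whose negative log-probability (loss) is smallest.

    Returns the predicted label and its loss. Probabilities are floored
    at 1e-12 to avoid log(0).
    """
    losses = [-math.log(max(p, 1e-12)) for p in probabilities]
    best = min(range(len(losses)), key=losses.__getitem__)
    return labels[best], losses[best]
```

Because the negative logarithm is monotonically decreasing, choosing the minimum loss is equivalent to choosing the most probable class.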

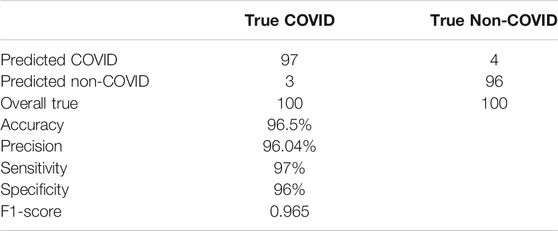

The trained deep network showed 96.5% accuracy. Table 3 summarizes the results of the testing phase. The proposed model predicted the presence of COVID-19 in 97 out of 100 cases and its absence in 96 out of 100 cases; these values correspond to the sensitivity and specificity of the model, respectively. It also showed an F1-score of 0.965 and a precision of 96.04%.

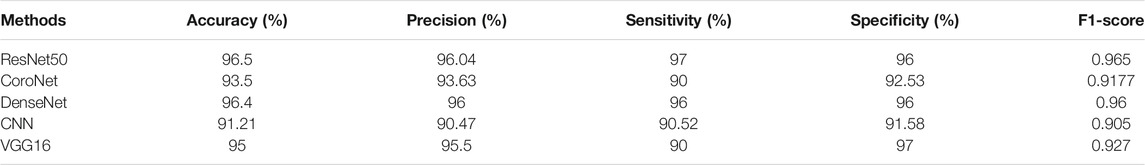

In order to make sure that ResNet50 is the best choice to be deployed in this architecture, different classification methods were tested and compared to these results.

The methods in Table 4 were tested to distinguish between COVID infection and other pneumonia diseases. The classification was then performed and the evaluation metrics were derived.

4.4 Complete Model

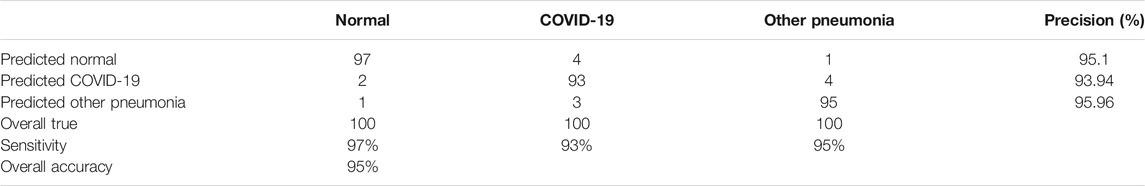

Each block was tested separately to validate its effectiveness. The latter block benefits from being tested with segmented images, which helps convergence. A full system test was then performed by passing the dataset as input to evaluate the overall performance. This scenario is a more realistic test environment, where the ResNet block receives a sample of normal images wrongly classified by the previous block. The accuracy of the full system test was 95%. Table 5 lists all classifications and metrics, where each classification has its own precision and sensitivity.
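The flow of the full system can be sketched as a two-stage cascade. The callables `segment` and `classify_pneumonia` are hypothetical stand-ins for the trained segmentation and ResNet50 blocks, and the mask is assumed to be a 2D list in which non-zero entries mark infected pixels.

```python
def classify_scan(image, segment, classify_pneumonia):
    """Two-stage cascade: normal vs. abnormal, then COVID-19 vs. other pneumonia.

    segment(image) returns an infection mask (2D list) or None.
    classify_pneumonia(image, mask) returns True for COVID-19.
    """
    mask = segment(image)
    # Stage 1: a blank mask means no GGO or consolidation was found.
    if mask is None or not any(any(row) for row in mask):
        return "normal"
    # Stage 2: only abnormal scans reach the second classifier.
    return "COVID-19" if classify_pneumonia(image, mask) else "common pneumonia"
```

In a full system test, a normal scan that the first stage wrongly marks as abnormal is still passed to the second stage, which is why the end-to-end accuracy can be lower than the accuracy of either block alone.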

4.5 Corona Score

At the output, a corona score was derived for all positive COVID-19 scans, and the cases were assigned to the five severity categories discussed earlier. By referring to the ground truth segmentation figures, we verified the severity classifications and found that the severity of all cases was correctly classified. The corona score of Figure 3 is 0.1323, so its severity class is moderate.

4.6 Computational Efficiency

The overall system features exhibit better computational performance through different measures.

• Ternary problem partition: the use of block partitioning resulted in better computational performance through dividing the objective into two binary classification blocks.

• Time efficiency: one of the main benefits of such an architecture is the efficiency of detection with respect to time when compared with similar systems, therefore achieving better convergence time.

The complexity of prediction in neural networks is approximated to be

In order to validate our assumptions, we set up the task as a single ternary classification problem. The same architecture applies here except that the segmentation block is removed, so ResNet50 is the classification block for the whole system. This block receives the whole dataset after it has been augmented, preprocessed, and divided into training and testing sets. Compared with this single-block setup, the proposed two-block architecture reduced the computational time by 36% in the testing phase and by 28% in the training phase.

5 Future Work

This article is part of ongoing research. The article focused on comparing AI-based segmentation techniques. Future work will include comparing our findings with traditional and non-AI-based segmentation techniques. Our overall objective is to develop a comprehensive medical hub that will support the detection and analysis of several medical conditions. This medical hub will not be limited to COVID-19 but will include the detection of other conditions and the assessment of their severity. Moreover, our team prioritizes keeping the system up to date, benefiting from new techniques and published work in the literature. Finally, we are currently working on reducing false positives and errors by introducing and testing a new layer of validation and by enhancing our calibration techniques.

6 Conclusion

In this article, we proposed an advanced medical hub architecture with an AI system that consists of two phases: segmentation and a ResNet deep network. The first phase is responsible for recognizing abnormalities in the CT images to separate normal from abnormal patients. The second one is responsible for distinguishing COVID-19 cases from other pneumonia conditions. Both phases showed promising performance. The accuracy was 95.54% for the segmentation block and 96.5% for the ResNet50 block, and the overall accuracy was 95%. The whole model proved to be reliable and demanded much less computational time, since better convergence was achieved due to the presence of the segmentation block. Our study also introduced an evaluation score for the severity of COVID-19 cases (corona score). Even minimal severity can be detected, which could prompt an immediate quarantine decision to avoid the spread of the virus. This platform concentrated on the detection of COVID-19 in the current study, but it can be deployed and used for medical imaging analysis of other diseases.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This research was funded by TELUS Corp., Canada, American University of Beirut Research Board and the Lebanese National Council for Scientific Research (CNRS), Lebanon.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to thank TELUS Corporation, the research board of the American University of Beirut (URB) (PROJECT/AWARD: 25350/103780) and the Lebanese National Council for Scientific Research (CNRS) for supporting this research work.

References

Abdellatif, A. A., Mohamed, A., Chiasserini, C. F., Tlili, M., and Erbad, A. (2019). Edge Computing for Smart Health: Context-Aware Approaches, Opportunities, and Challenges. IEEE Netw. 33, 196–203. doi:10.1109/mnet.2019.1800083

Abdellatif, A. A., Samara, L., Mohamed, A., Erbad, A., Chiasserini, C. F., Guizani, M., et al. (2020). I-health: Leveraging Edge Computing and Blockchain for Epidemic Management, arXiv:2012.14294.

Adler, A., and Guardo, R. (1995). Neural Network Image Reconstruction Technique for Electrical Impedance Tomography. Medical Imaging. IEEE Trans. 13, 594–600. doi:10.1109/42.363109

Ai, T., Yang, Z., Hou, H., Zhan, C., Chen, C., Lv, W., et al. (2020). Correlation of Chest Ct and Rt-Pcr Testing for Coronavirus Disease 2019 (Covid-19) in china: A Report of 1014 Cases. Radiology 296, 200642. doi:10.1148/radiol.2020200642

Alimadadi, A., Aryal, S., Manandhar, I., Munroe, P., Joe, B., and Cheng, X. (2020). Artificial Intelligence and Machine Learning to Fight Covid-19. Physiol. Genomics 52, 200–202. doi:10.1152/physiolgenomics.00029.2020

Bai, H., Hsieh, B., Xiong, Z., Halsey, K., Choi, J., Tran, T., et al. (2020). Performance of Radiologists in Differentiating Covid-19 from Viral Pneumonia on Chest Ct. Radiology 296, 200823. doi:10.1148/radiol.2020200823

Cao, Y., Xu, Z., Feng, J., Jin, C., Wu, H., and Shi, H. (2020). Longitudinal Assessment of Covid-19 Using a Deep Learning–Based Quantitative Ct Pipeline: Illustration of Two Cases. Radiol. Cardiothorac. Imaging 2, e200082. doi:10.1148/ryct.2020200082

Chen, J., Wu, L., Zhang, J., Zhang, L., Gong, D., Zhao, Y., et al. (2020). Deep Learning-Based Model for Detecting 2019 Novel Coronavirus Pneumonia on High-Resolution Computed Tomography: a Prospective Study in 27 Patients. Sci. Rep. 10 (1), 19196. doi:10.1038/s41598-020-76282-0

Chen, T., Chen, H., and Liu, R.-w. (1995). Approximation Capability in by Multilayer Feedforward Networks and Related Problems. Neural Networks. IEEE Trans. 6, 25–30. doi:10.1109/72.363453

Cicek, O., Abdulkadir, A., Lienkamp, S., Brox, T., and Ronneberger, O. (2016). 3d U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. Image Comput. Computer-Assisted Intervention, 424–432. doi:10.1007/978-3-319-46723-8_49

Comtat, C., and Morel, C. (1995). Approximate Reconstruction of Pet Data with a Self-Organizing Neural Network. Neural Networks. IEEE Trans. 6, 783–789. doi:10.1109/72.377988

Ding, X., Xu, J., Zhou, J., and Long, Q. (2020). Chest Ct Findings of Covid-19 Pneumonia by Duration of Symptoms. Eur. J. Radiol. 127, 109009. doi:10.1016/j.ejrad.2020.109009

El, F., Ali, A., Nakao, Z., Chen, Y.-W., Matsuo, K., and Ohkawa, I. (2000). An Adaptive Backpropagation Algorithm for Limited-Angle CT Image Reconstruction. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 83 (6), 1049–1058. doi:10.1117/12.461668

Esteva, A., Kuprel, B., Novoa, R., Ko, J., Swetter, S., Blau, H., et al. (2017). Dermatologist-level Classification of Skin Cancer with Deep Neural Networks. Nature 542 (7639), 115–118. doi:10.1038/nature21056

Fan, D.-P., Zhou, T., Ji, G.-P., Zhou, Y., Chen, G., Fu, H., et al. (2020). Inf-net: Automatic Covid-19 Lung Infection Segmentation from Ct Images. IEEE Trans. Med. Imaging, 1. doi:10.1109/TMI.2020.2996645

Gaál, G., Maga, B., and Lukács, A. (2020). Attention U-Net Based Adversarial Architectures for Chest X-ray Lung Segmentation, arXiv:2003.10304.

Gozes, O., Frid-Adar, M., Greenspan, H., Browning, P. D., Zhang, H., Ji, W., et al. (2020). Rapid AI Development Cycle for the Coronavirus (Covid-19) Pandemic: Initial Results for Automated Detection and Patient Monitoring Using Deep Learning Ct Image Analysis, arXiv:2003.05037.

Guillo, E., Gomez, I., Dangeard, S., Bennani, S., Saab, I., Tordjman, M., et al. (2020). Covid-19 Pneumonia: Diagnostic and Prognostic Role of Ct Based on a Retrospective Analysis of 214 Consecutive Patients from paris, france. Eur. J. Radiol. 131, 109209. doi:10.1016/j.ejrad.2020.109209

Haleem, A., Javaid, M., and Vaishya, R. (2020). Effects of Covid 19 Pandemic in Daily Life. Curr. Med. Res. Pract. 10(2), 78–79. doi:10.1016/j.cmrp.2020.03.011

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep Residual Learning for Image Recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, June 27–30, 2016, 770–778. doi:10.1109/CVPR.2016.90

Hu, Z., Ge, Q., Jin, L., and Xiong, M. (2020). Artificial Intelligence Forecasting of Covid-19 in china. Int. J. Educ. Excell. 6 (1), 71–94. doi:10.18562/ijee.054

Huang, L., Han, R., Ai, T., Yu, P., Kang, H., Tao, Q., et al. (2020). Serial Quantitative Chest Ct Assessment of Covid-19: Deep-Learning Approach. Radiol. Cardiothorac. Imaging 2, e200075. doi:10.1148/ryct.2020200075

Isensee, F., Petersen, J., Klein, A., Zimmerer, D., Jaeger, P., Kohl, S., et al. (2018). Nnu-Net: Self-Adapting Framework for U-Net-Based Medical Image Segmentation. Informatik aktuell,Bildverarbeitung für die Medizin 2018, 22. doi:10.1007/978-3-658-25326-4_7

Jin, S., Wang, B., Xu, H., Luo, C., Wei, L., Zhao, W., et al. (2020). Ai-assisted Ct Imaging Analysis for Covid-19 Screening: Building and Deploying a Medical Ai System in Four Weeks. doi:10.1101/2020.03.19.20039354

Knudsen, L., and Ochs, M. (2018). The Micromechanics of Lung Alveoli: Structure and Function of Surfactant and Tissue Components. Histochem. Cel Biol. 150. doi:10.1007/s00418-018-1747-9

Li, L., Qin, L., Xu, Z., Yin, Y., Wang, X., Kong, B., et al. (2020). Artificial Intelligence Distinguishes Covid-19 from Community Acquired Pneumonia on Chest Ct. Radiology 296, 200905. doi:10.1148/radiol.2020200905

Liang, T., Liu, Z., Wu, C., Jin, C., Zhao, H., Wang, Y., et al. (2020). Evolution of Ct Findings in Patients with Mild Covid-19 Pneumonia. Eur. Radiol. 30, 1–9. doi:10.1007/s00330-020-06823-8

Luo, H., Tang, Q.-l., Shang, Y.-x., Liang, S.-B., Yang, M., Robinson, N., et al. (2020). Can Chinese Medicine Be Used for Prevention of corona Virus Disease 2019 (Covid-19)? a Review of Historical Classics, Research Evidence and Current Prevention Programs. Chin. J. Integr. Med. 26, 243–250. doi:10.1007/s11655-020-3192-6

Milletari, F., Navab, N., and Ahmadi, S.-A. (2016). V-net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. Fourth Int. Conf. 3D Vis. (3dv) 2016, 565–571. doi:10.1109/3DV.2016.79

Nejatali, A., and Ciric, I. (1998). An Iterative Algorithm for Electrical Impedance Imaging Using Neural Networks. Magnetics, IEEE Trans. 34, 2940–2943. doi:10.1109/20.717686

Pan, F., Ye, T., Sun, P., Gui, S., Liang, B., Li, L., et al. (2020). Time Course of Lung Changes on Chest Ct during Recovery from 2019 Novel Coronavirus (Covid-19) Pneumonia. Radiology 295, 200370. doi:10.1148/radiol.2020200370

Qi, X., Jiang, Z., Yu, Q., Shao, C., Zhang, H., Yue, H., et al. (2020). Machine Learning-Based Ct Radiomics Model for Predicting Hospital Stay in Patients with Pneumonia Associated with Sars-Cov-2 Infection: A Multicenter Study. Ann. Transl. Med. 8 (14), 859. doi:10.21037/atm-20-3026

Rawat, W., and Wang, Z. (2017). Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 29, 1–98. doi:10.1162/NECO_a_00990

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-net: Convolutional Networks for Biomedical Image Segmentation. vol. 9351, 234–241. doi:10.1007/978-3-319-24574-4_28

Rosenblatt, F. (1958). The Perceptron: a Probabilistic Model for Information Storage and Organization in the Brain. Psychol. Rev. 65 (6), 386–408. doi:10.1037/h0042519

Schwarz, G. (1978). Estimating the Dimension of a Model. Ann. Stat. 6, 461–464. doi:10.1214/aos/1176344136

Shan, F., Gao, Y., Wang, J., Shi, W., Shi, N., Han, M., et al. (2021). Abnormal Lung Quantification in Chest CT Images of COVID-19 Patients with Deep Learning and its Application to Severity Prediction. Med. Phys. 1633–1645. doi:10.1002/mp.14609

Shen, C., Yu, N., Cai, S., Zhou, J., Sheng, J., Liu, K., et al. (2020). Quantitative Computed Tomography Analysis for Stratifying the Severity of Coronavirus Disease 2019. J. Pharm. Anal. 10 (2), 123–129. doi:10.1016/j.jpha.2020.03.004

Shen, D., Wu, G., and Suk, H.-I. (2017a). Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 19, 221–248. doi:10.1146/annurev-bioeng-071516-044442

Shen, D., Wu, G., and Suk, H.-I. (2017b). Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 19, 221–248. doi:10.1146/annurev-bioeng-071516-044442

Shi, F., Wang, J., Shi, J., Wu, Z., Wang, Q., Tang, Z., et al. (2020a). Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation and Diagnosis for Covid-19. IEEE Reviews in Biomedical Engineering PP, 4–15. doi:10.1109/RBME.2020.2987975

Shi, F., Xia, L., Shan, F., Wu, D., Wei, Y., Yuan, H., et al. (2020b). Large-scale Screening of Covid-19 from Community Acquired Pneumonia Using Infection Size-Aware Classification. Phys. Med. Biol. 66 (6), 065031. doi:10.1088/1361-6560/abe838

Smith, L. (2017). Cyclical Learning Rates for Training Neural Networks. IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, March 24–31, 2017, 464–472. doi:10.1109/WACV.2017.58

Suzuki, K., Horiba, I., and Sugie, N. (2001). A Simple Neural Network Pruning Algorithm with Application to Filter Synthesis. Neural Process. Lett. 13, 43–53. doi:10.1023/A:1009639214138

Suzuki, K., Horiba, I., and Sugie, N. (2002a). Efficient Approximation of Neural Filters for Removing Quantum Noise from Images. Signal. Processing, IEEE Trans. 50, 1787–1799. doi:10.1109/TSP.2002.1011218

Suzuki, K., Horiba, I., Sugie, N., and Nanki, M. (2002b), Neural Filter with Selection of Input Features and its Application to Image Quality Improvement of Medical Image Sequences. IEICE Transactions on Information and Systems E85-D. 85, 1710–1718. The Institute of Electronics, Information and Communication Engineers.

Suzuki, K., Horiba, I., and Sugie, N. (2004). Neural Edge Enhancer for Supervised Edge Enhancement from Noisy Images. Pattern Analysis And Machine Intelligence. IEEE Trans. 25, 1582–1596. doi:10.1109/TPAMI.2003.1251151

Tang, L., Zhang, X., Wang, Y., and Zeng, X. (2020a). Severe Covid-19 Pneumonia: Assessing Inflammation burden with Volume-Rendered Chest Ct. Radiol. Cardiothorac. Imaging 2, e200044. doi:10.1148/ryct.2020200044

Tang, Z., Zhao, W., Xie, X., Zhong, Z., Shi, F., Liu, J., et al. (2020b). Severity Assessment of Coronavirus Disease 2019 (Covid-19) Using Quantitative Features from Chest Ct Images. Phys. Med. Biol. 66 (3), 035015. doi:10.1088/1361-6560/abbf9e

Vatathanavaro, S., Tungjitnob, S., and Pasupa, K. (2018). White Blood Cell Classification: A Comparison between Vgg-16 and Resnet-50 Models 12, 4–5.

Wang, Y., Hu, M., Zhou, Y., Li, Q., Yao, N., Zhai, G., et al. (2020). Unobtrusive and Automatic Classification of Multiple People's Abnormal Respiratory Patterns in Real Time Using Deep Neural Network and Depth Camera. IEEE Internet of Things Journal. 9, 8559–8571. doi:10.1109/JIOT.2020.2991456

Wu, G., Kim, M., Wang, Q., Munsell, B. C., and Shen, D. (2016). Scalable High-Performance Image Registration Framework by Unsupervised Deep Feature Representations Learning. IEEE Trans. Biomed. Eng. 63, 1505–1516. doi:10.1109/TBME.2015.2496253

Zhang, K., Liu, X., Shen, J., Li, Z., Sang, Y., Wu, X., et al. (2020). Clinically Applicable Ai System for Accurate Diagnosis, Quantitative Measurements, and Prognosis of Covid-19 Pneumonia Using Computed Tomography. Cell 182 (5), 1360. doi:10.1016/j.cell.2020.08.029

Zheng, C., Deng, X., Fu, Q., Zhou, Q., Feng, J., Ma, H., et al. (2020). Deep Learning-Based Detection for Covid-19 from Chest Ct Using Weak Label. doi:10.1101/2020.03.12.20027185

Keywords: COVID-19, corona score, medical imaging analysis, AI medical platform, deep learning, computed tomography, segmentation

Citation: Kaheel H, Hussein A and Chehab A (2021) AI-Based Image Processing for COVID-19 Detection in Chest CT Scan Images. Front. Comms. Net 2:645040. doi: 10.3389/frcmn.2021.645040

Received: 22 December 2020; Accepted: 30 June 2021;

Published: 09 August 2021.

Edited by: Hamed Ahmadi, University of York, United Kingdom

Reviewed by: Alaa Awad Abdellatif, Qatar University, Qatar; Joanna Olszewska, University of the West of Scotland, United Kingdom

Copyright © 2021 Kaheel, Hussein and Chehab. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hussein Kaheel
