- Department of Economics and Finance, Utah State University, Logan, UT, United States

This paper demonstrates how people can manipulate their beliefs in order to obtain the self-image of an altruistic person. I present an online experiment in which subjects need to decide whether to behave altruistically or selfishly in an ambiguous environment. Due to the nature of ambiguity in this environment, those who are pessimistic have a legitimate reason to behave selfishly. Thus, subjects who are selfish but like to think of themselves as altruistic have an incentive to overstate their pessimism. In the experiment, I ask subjects how optimistic or pessimistic they feel about an ambiguous probability and then, through a separate task, I elicit their true beliefs about the same probability. I find that selfish subjects claim to be systematically more pessimistic than they truly are whereas altruistic subjects report their pessimism (or optimism) truthfully. Given the experiment design, the only plausible explanation for this discrepancy is that selfish subjects deliberately overstate their pessimism in order to maintain the self-image of an altruistic person. Altruistic subjects, whose behavior has already proven their altruism, have no such need for belief manipulation.

JEL Classifications: C91, D82, D83, D84.

1 Introduction

We like to think of ourselves as good people and, at the same time, like to act in ways that conflict with our definition of being good. As these conflicting desires coexist, we experience an unpleasant tension called cognitive dissonance1. According to traditional cognitive dissonance theory, an individual may resolve this dissonance by changing either her behavior or her self-concept (Festinger, 1957). However, advances in this theory show that belief manipulation is another mechanism for resolving cognitive dissonance (Akerlof and Dickens, 1982; Rabin, 1994). This paper adds to this literature by presenting a simple experiment demonstrating the use of belief manipulation for resolving cognitive dissonance. Specifically, the experiment shows that, by adopting greater pessimism, people can behave selfishly and still obtain the self-image of an altruistic person2.

As a motivating example, suppose that an individual faces the decision of whether to give a dollar to a panhandler. On the one hand, giving money to this panhandler allows her to maintain the self-image of an altruistic person, something that results in positive utility. On the other hand, giving money reduces her own monetary payoff, resulting in negative utility. The conflicting desires for behaving selfishly and for being an altruistic person cause this individual to experience cognitive dissonance. To resolve this dissonance, she must either behave altruistically or accept the self-image of a selfish person, at least according to traditional cognitive dissonance theory. However, if she is able to strategically manipulate her beliefs, then she does not have to choose one or the other. Through belief manipulation, she can behave selfishly and still maintain the self-image of an altruistic person. In this example, she could manipulate her belief by, say, overstating the panhandler's likelihood of being a drug addict, as this would provide her with a valid excuse not to give money in this particular instance and let her continue believing that she would have given money under “normal” circumstances.

This paper adds to an extensive and still growing literature on using belief manipulation to excuse selfish behavior. It builds on the idea that people get utility from a favorable self-image (Bodner and Prelec, 2003; Grossman, 2015; Grossman and Van Der Weele, 2017a,b). It also adds to a large set of experimental studies that demonstrate various mechanisms of belief manipulation in the context of trust games and dictator games (Dana et al., 2007; Haisley and Weber, 2010; Di Tella et al., 2015; Exley, 2015, 2020; Konig-Kersting and Trautmann, 2016; Grossman and Van Der Weele, 2017b; Andreoni and Sanchez, 2020; Zimmermann, 2020; Chen and Heese, 2021; Regner and Matthey, 2021; Buchanan and Razzolini, 2022; Gately et al., 2023).

Methodologically, the two most closely related papers are Di Tella et al. (2015) [henceforth DPBS] and Andreoni and Sanchez (2020) [henceforth A&S], both of which give their experimental subjects an opportunity to falsify their beliefs in order to obtain the image of an altruistic person by eliciting beliefs in an unincentivized way. The current study differs from these two papers in one key aspect: while the two aforementioned papers let subjects manipulate their beliefs about their opponent's altruism, I let subjects manipulate beliefs about the realization of a random variable, particularly one whose mean and distribution are known. The latter manipulation ought to be significantly more challenging, given that the belief in question is less subjective.

DPBS design a game in which an “allocator” decides whether to behave altruistically toward a “seller.” If the allocator believes that the seller is unkind, then he can behave selfishly without feeling guilty. The authors find that when allocators must behave altruistically, they do not think that sellers are unkind. However, if allocators are allowed to behave selfishly, they do so and state that they think that sellers are unkind. The authors interpret this as evidence of self-serving belief manipulation.

In a similar endeavor, A&S experimentally implement a modified trust game with binary decisions in which they ask senders whether they think the receiver will behave altruistically or selfishly. They find that only the senders who play selfishly are also the ones who expect receivers to play selfishly. However, when asked whether they would like to be paid according to the outcome of the trust game or an outside gamble (and the outside gamble would be better if receivers are indeed likely to play selfishly), the same senders choose to be paid according to the trust game. This indicates that they truly did not think that receivers would behave selfishly but only claimed to do so. By ruling out other possibilities, A&S argue that the only explanation for this discrepancy is that selfish subjects merely made a false claim in order to justify their selfish behavior.

The current paper makes three important contributions to the experimental literature on belief manipulation. First, it tests for belief manipulation using a much simpler experiment than previous studies. Second, by providing evidence of belief manipulation in another setting, it improves our understanding of the types of situations where incentivized beliefs are likely to differ from unincentivized beliefs. Third, by using A&S's methodology in a completely different game, it provides a stress test and a robustness test of their methodology. While A&S check for belief manipulation in a trust game, this paper does so for a donation game (a dictator game where the recipient is a charity). The fact that I find evidence of belief manipulation suggests that this method is indeed robust enough to work in other situations.

I implement a donation game in an online experiment where subjects decide whether to donate half of their endowment to a charity or keep all of it. If they choose to donate, their donation is converted into an ambiguous lottery that pays the charity 2.4 times the donation amount with probability p and pays nothing with the remaining probability 1−p. Subjects do not know the realized value of p but are told that it will be randomly selected from the following eleven numbers: 0, 0.1, 0.2, ..., 0.9, 1 (see footnote 3). Before submitting their donation decisions, subjects are asked what value they think p will take, without being offered a monetary reward for accuracy or honesty. The unincentivized nature of this question is crucial because it is what gives subjects the opportunity to manipulate their beliefs.

After subjects submit their donation decision and guess about p, they proceed to a second, seemingly unrelated task. In this task, subjects are told that they will now get to play a lottery to earn some additional money. The choice they need to make is whether to play the same ambiguous lottery that was given to the charity (in the previous task) or play a lottery with a known probability (e.g., 0.). Subjects make this choice for several different known probabilities, in a price list format.

More specifically, subjects are given eleven decision rows and are asked to pick one of the two lotteries shown in each decision row. In all eleven rows, the first lottery is always the same, paying a positive payoff with probability p and a zero payoff with 1−p. The only difference between rows is the probability with which the second lottery pays a positive amount. In the first decision row, the second lottery pays a positive amount with certainty. In each subsequent row, the probability of the positive payoff decreases by 0.1, reaching zero in the last decision row. Therefore, in each row, the second lottery is less attractive than it was in the previous row.

The decision row in which subjects switch from preferring the second (known-probability) lottery to preferring the first (ambiguous) lottery indirectly reveals their incentivized beliefs about p. For distinction, let us refer to beliefs elicited through this incentive-compatible method as subjects' true beliefs, and to beliefs elicited through the unincentivized method as subjects' adopted beliefs.

The results of the experiment show that selfish subjects manipulate beliefs whereas altruistic subjects do not. Specifically, subjects who behave selfishly in the donation task adopt significantly lower, i.e., more pessimistic, beliefs than their true beliefs. By contrast, subjects who behave altruistically in the donation task show no significant discrepancy between their adopted beliefs and true beliefs. Moreover, altruistic subjects' true and adopted beliefs and selfish subjects' true beliefs are not significantly different from 0.5 (the actual expected value), but selfish subjects' adopted beliefs are. By ruling out other possibilities, I show that the only plausible explanation for this difference is that selfish subjects merely claim to be pessimistic in an attempt to justify their selfish behavior, a finding that is consistent with Di Tella et al. (2015) and Andreoni and Sanchez (2020).

2 Experiment design

The experiment involves two decision tasks, each of which contributes to determining subjects' final payoff. First, subjects decide whether or not to donate 50 tokens to a charity from an endowment of 100 tokens, knowing that their donation will be converted to an ambiguous lottery before being given to the charity. Specifically, if they make the donation, the charity will receive 120 tokens with some probability p, or 0 tokens with probability 1−p. Subjects do not know the realized value of p but know its distribution. As they make their decision, they are also asked whether they feel optimistic or pessimistic about the realized value of p. Next, subjects participate in a seemingly separate task that indirectly elicits their true beliefs about the value of p. This design provides a framework for comparing subjects' adopted beliefs and true beliefs, for both selfish and altruistic subjects, where the classifications of selfish and altruistic are assigned based on their actions in the donation task.

2.1 Donation task

In the first task of the experiment, the Donation Task, subjects are endowed with 100 experimental tokens (equivalent to $12) and are asked to decide whether or not to donate half of their endowment. To minimize the range of excuses available to subjects for not donating, subjects first choose a charity from a list of eleven popular charities, each supporting a different cause. This lets subjects donate to a cause they feel passionate about and makes them less likely to withhold a donation because they disagree with a particular cause. Following their charity selection, subjects decide whether to donate 0 or 50 tokens to that charity. This binary choice is later used to divide subjects into altruistic or selfish types4. To test whether subjects use the binary choice to justify their failure to donate, the post-experiment questionnaire asks them whether they would have donated another amount if they were not constrained by a binary decision5. For the purposes of this study, a binary choice is advantageous because it forces subjects to take an action that is either clearly altruistic or clearly selfish, making it difficult for subjects to view not donating as only slightly less altruistic than donating.

Subjects who choose not to donate keep their entire endowment of 100 tokens, while those who donate keep the remaining 50 tokens of their endowment. If a subject donates, then the charity receives 120 tokens (which is 2.4 times the donation amount) with a probability p, while with the remaining probability of 1−p, the charity receives nothing. The value of p is unknown, but subjects are informed that it will be selected randomly from the following numbers: {0, 0.1, ... , 0.9, 1}. In addition to deciding whether to donate, subjects may also provide an email address where they would receive a donation receipt directly from the charity. The ability to obtain proof of donation eliminates the possibility of subjects using their skepticism as an excuse to rationalize selfish behavior, such as thinking that the charity might not receive the money or the lottery might be rigged.
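The arithmetic behind the charity lottery can be checked with a short sketch. It assumes, as footnote 3 describes, that each of the eleven values of p is equally likely; the names `P_VALUES`, `expected_p`, and `expected_charity_receipt` are hypothetical and not part of the experiment software. Under that assumption the mean of p is 0.5, so a 50-token donation delivers 60 tokens to the charity in expectation.

```python
from fractions import Fraction

# The eleven values p can take: 0, 0.1, ..., 0.9, 1 (exact fractions, no rounding).
P_VALUES = [Fraction(k, 10) for k in range(11)]

DONATION = 50                              # tokens a donor gives up
CHARITY_PRIZE = Fraction(24, 10) * DONATION  # 2.4 times the donation = 120 tokens


def expected_p(values):
    """Mean of p, assuming every listed value is equally likely."""
    return sum(values) / len(values)


def expected_charity_receipt(p_mean):
    """Expected tokens reaching the charity when p has mean p_mean."""
    return CHARITY_PRIZE * p_mean


mean_p = expected_p(P_VALUES)
print(mean_p)                            # 1/2
print(expected_charity_receipt(mean_p))  # 60
```

Exact fractions are used only so that the mean comes out as exactly 1/2 rather than a floating-point approximation.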

On the same screen and before submitting their donation decision, subjects are asked to guess the value that p will take. This guess, henceforth called subjects' adopted beliefs, may or may not be their true beliefs. Subjects are reminded that there is no reward or penalty for the accuracy of their guess. Keeping this question deliberately unincentivized is crucial because it allows subjects to adopt pessimistic beliefs even if they are not truly pessimistic. Both Di Tella et al. (2015) and Andreoni and Sanchez (2020) provide similar explanations for eliciting unincentivized beliefs in their experiments6.

Adopting pessimistic beliefs can allow subjects to behave selfishly and still maintain the self-image of an altruistic person. Therefore, selfish subjects, particularly those who derive utility from considering themselves altruistic, have an incentive to adopt pessimistic beliefs, whereas altruistic subjects do not. If the unincentivized nature of this question causes subjects to respond carelessly, the responses will be noisy, with misstatements in both directions for both selfish and altruistic subjects. However, if selfish subjects manipulate their beliefs in order to obtain an altruistic self-image, then selfish subjects' adopted beliefs will be misreported in one particular direction (with adopted beliefs being systematically more pessimistic than their true beliefs).

2.2 Lottery task

The second task of the experiment, the Lottery Task, gives subjects the opportunity to play a lottery. Subjects are informed that their earnings from the lottery will be in addition to the participation fee and earnings from the donation task. The objective of this task is for subjects to select the lottery that they would like to play. They are presented with a choice between an ambiguous lottery that pays 120 tokens with probability p and a simple lottery that pays 120 tokens with a known probability. Subjects are also informed that the value of p used for this lottery will be the same as the one used to pay the charity in the donation task. Subjects make this choice in a multiple price list format where the probability of the simple lottery ranges from 0% to 100% in increments of 10%. Thus, subjects make a total of eleven decisions, each being a choice between the ambiguous lottery from the donation task and a simple lottery. At the end of the task, one of these decisions is randomly selected to determine subjects' payoffs7.

Subjects are presented with eleven decision rows, each of which contains two options. The first option is always an ambiguous lottery that pays 120 tokens with probability p and 0 tokens with probability 1−p, where p is the same as it was in the donation task. The second option in each row is a simple lottery that pays 120 tokens with a known probability and 0 tokens with the complementary probability. The only difference between rows is the value of this known probability. In the first decision row, the known probability is 100%, ensuring that the second option pays 120 tokens with certainty. In each subsequent row, this known probability decreases by 10 percentage points, such that in the last decision row, the known probability is 0% and the second option pays nothing. As a result, the ambiguous lottery is optimal in the last decision row, while the simple lottery is the better choice in the first decision row8. In all other rows, the better choice depends on subjects' beliefs; subjects who believe that p is greater than the known probability of the second option would find the ambiguous lottery more attractive. Subjects' true beliefs about the value of p are revealed by the row in which they switch from the simple lottery to the ambiguous lottery.
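A minimal sketch of how a price-list response could be decoded into bounds on a subject's belief is given below. It assumes choices are recorded row by row as "S" (simple lottery) or "A" (ambiguous lottery), with the known probability falling from 100% in row 1 to 0% in row 11. The function name and encoding are hypothetical, and the text does not state how the switching row is turned into a single number; reporting a bound or the midpoint of the interval are both possible choices. Responses that switch back from "A" to "S" are returned as `None`, an assumption that covers the multiple-switching subjects excluded from the data.

```python
from fractions import Fraction

# Known probability of the simple lottery in rows 1..11: 100%, 90%, ..., 0%.
KNOWN_P = [Fraction(10 - row, 10) for row in range(11)]


def implied_belief_bounds(choices):
    """Return (lower, upper) bounds on a subject's belief about p, or None.

    `choices` has one entry per row: "S" for the simple lottery, "A" for the
    ambiguous lottery. A consistent subject picks "S" in the top rows and
    switches once to "A". Any "A" followed later by "S" is treated as
    inconsistent (hypothetical rule) and yields None.
    """
    if len(choices) != len(KNOWN_P) or set(choices) - {"S", "A"}:
        raise ValueError("expected 11 choices, each 'S' or 'A'")
    if "AS" in "".join(choices):
        return None  # switched back, or switched more than once
    if "A" not in choices:
        return (Fraction(0), Fraction(0))  # kept the simple lottery even at 0%
    first_a = choices.index("A")
    if first_a == 0:
        return (Fraction(1), Fraction(1))  # took the ambiguous lottery even at 100%
    # Preferred the simple lottery at KNOWN_P[first_a - 1], the ambiguous one at KNOWN_P[first_a].
    return (KNOWN_P[first_a], KNOWN_P[first_a - 1])


print(implied_belief_bounds(["S"] * 5 + ["A"] * 6))         # (Fraction(1, 2), Fraction(3, 5))
print(implied_belief_bounds(["S", "A", "S"] + ["A"] * 8))  # None
```

A subject who takes the simple lottery in the rows with known probabilities of 100% through 60% and the ambiguous lottery from 50% onward is thus placed between 0.5 and 0.6.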

The main purpose of the lottery task is to elicit subjects' true beliefs about p, so that they can later be compared with the beliefs reported in the donation task. To elicit true beliefs, subjects need an incentive to respond truthfully; that is, the belief elicitation method should be a proper scoring rule. However, asking for beliefs directly, even through a proper scoring rule such as the quadratic scoring rule, is not suitable in this situation, because subjects may have a stronger incentive to remain consistent with their previous response, even if it means forgoing some monetary gain. Therefore, subjects' true beliefs about p are indirectly elicited through another task that appears to have a completely different purpose. As Andreoni and Sanchez (2020, p. 6) explain, “When subjects have private incentives to mislead us or themselves on their true beliefs, the QSR or any other devise that asks directly for beliefs can be expected to elicit biased reports from subjects, even if it is a proper scoring rule. We instead must derive true beliefs by masking them in another task which, without the subject's awareness, will indirectly reveal beliefs”9. This is exactly what the lottery task accomplishes. Subjects are not informed about the lottery task until they actually begin it, so when asked to guess the value of p in the donation task, they are free to strategically manipulate their beliefs. Even if subjects realize that the lottery task is an attempt to elicit their true beliefs about something they previously stated, they would only become more inclined to give responses that are consistent with their earlier statements. This would reduce the average difference between adopted and true beliefs, making the results more conservative.

2.3 Questionnaire

The last task of the experiment is to complete a brief questionnaire that collects some basic demographic information, such as gender, age, race, religiousness, education, and income. The questionnaire also provides an opportunity for subjects to explain their choices. For instance, one of the questions asks, “What was your primary motivation to donate 50 (or 0) tokens?” Although these qualitative responses are not included in the formal analysis, they are used to investigate whether subjects have other justifications for not donating.

2.4 Implementation

The experiment is conducted in the form of an online computerized survey, using Amazon's micro-employment platform called Mechanical Turk (MTurk). This platform allows any adult with a social security number to register as a worker and complete small tasks for compensation. Numerous studies show that using MTurk workers as experimental subjects yields results no different from those obtained with undergraduate students, despite the fact that compensation of MTurk workers is typically much lower than that of student participants (e.g., Horton et al., 2011; Amir et al., 2012; Arechar et al., 2018; Johnson and Ryan, 2020; Snowberg and Yariv, 2021).

To ensure worker anonymity, Amazon brokers all payments to workers and does not provide any identifiable information of workers to experimenters. Experimenters only know workers by their unique ID, which is a randomly generated 14–20 character alphanumeric code. Amazon also implements several measures to prevent fraudulent activity, such as prohibiting individuals from creating multiple worker accounts and requiring workers to demonstrate traits of human intelligence. Nonetheless, I include a CAPTCHA test at the start of the experiment to prevent bots from participating.

To ensure that subjects comprehend the experiment instructions, they are required to correctly answer some qualifying questions before being allowed to participate. Those who pass the qualifying questions are guaranteed a participation payment of $4. In addition to this payment, subjects can earn tokens during the experiment, which are converted to US dollars at an exchange rate of $0.12/token. These tokens are paid to them as bonus payments shortly after the experiment. Participants can earn either 50 or 100 tokens in the first task and 0 or 120 tokens in the second task, resulting in a total bonus payment of $6.00, $12.00, $20.40, or $26.40. Given that the entire experiment takes no more than 30 minutes to complete, these are fairly generous amounts by MTurk standards. Subjects make all choices through their own computers and cannot go back to a previous page at any point in the survey. Experiment instructions are provided in Appendix B.
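The four bonus amounts follow directly from the token outcomes and the exchange rate, as the short sketch below enumerates. `Decimal` is used so the dollar amounts come out exactly; the constant and function names are illustrative only.

```python
from decimal import Decimal
from itertools import product

RATE = Decimal("0.12")            # US dollars per token
DONATION_TASK_TOKENS = (50, 100)  # tokens kept: donated / did not donate
LOTTERY_TASK_TOKENS = (0, 120)    # lottery paid nothing / paid out


def possible_bonuses():
    """Every distinct bonus payment in dollars, smallest first."""
    totals = {kept + won for kept, won in product(DONATION_TASK_TOKENS, LOTTERY_TASK_TOKENS)}
    return [RATE * tokens for tokens in sorted(totals)]


print([f"${bonus}" for bonus in possible_bonuses()])
# ['$6.00', '$12.00', '$20.40', '$26.40']
```

The same rate gives the $12 value of the 100-token endowment mentioned in the donation task; the $4 participation payment is not included in these totals.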

The experiment was advertised on MTurk as a research study and made available to 70 workers on a first-come, first-served basis. Thus, the first 70 people to pass the qualifying questions got to participate in the experiment. The final data comprise 62 subjects, as 8 were dropped for switching multiple times during the lottery task. Of these 62 subjects, 27 (44%) chose to donate in the donation task, while the remaining 35 (56%) did not. The subsequent analysis refers to the subjects who donated as altruistic and the subjects who did not donate as selfish.

2.5 Hypotheses

If a subject's adopted belief is the same as her true belief, then we can assume that the subject reported her true belief in both instances. If, however, there is a disparity between a subject's true belief and adopted belief, then there are two possibilities. The first possibility is that the subject responded randomly or carelessly when asked about her belief in the donation task, as that question was unincentivized. In this case, the subject's adopted belief could be more or less pessimistic than her true belief. The second possibility is that the subject deliberately adopted a false belief in the donation task, which can only happen if an incentive exists. Individuals who did not donate any money may be incentivized to adopt more pessimistic beliefs about p than their true belief whereas those who donated may be incentivized to adopt more optimistic beliefs. Therefore, if this is the case, we should observe that selfish subjects' adopted beliefs are systematically more pessimistic than their true beliefs while altruistic subjects' adopted beliefs are more optimistic than their true beliefs. This would point to belief manipulation10.

This leads to the main testable hypothesis of this experiment, that selfish subjects' adopted beliefs will be more pessimistic than their true beliefs. Such beliefs would be self-serving as pessimism in this case can help justify selfish behavior.

3 Results

The experimental results offer compelling evidence of systematic belief manipulation. Specifically, selfish subjects' adopted beliefs (in the donation task) were significantly more pessimistic than their true beliefs (as revealed in the lottery task). In contrast, altruistic subjects' adopted beliefs were only slightly, and not significantly, more optimistic than their true beliefs. This suggests that selfish subjects manipulate beliefs as a means of maintaining their self-image as altruistic individuals. Altruistic subjects, on the other hand, do not need this mechanism, as they have already demonstrated their altruism through their donation.

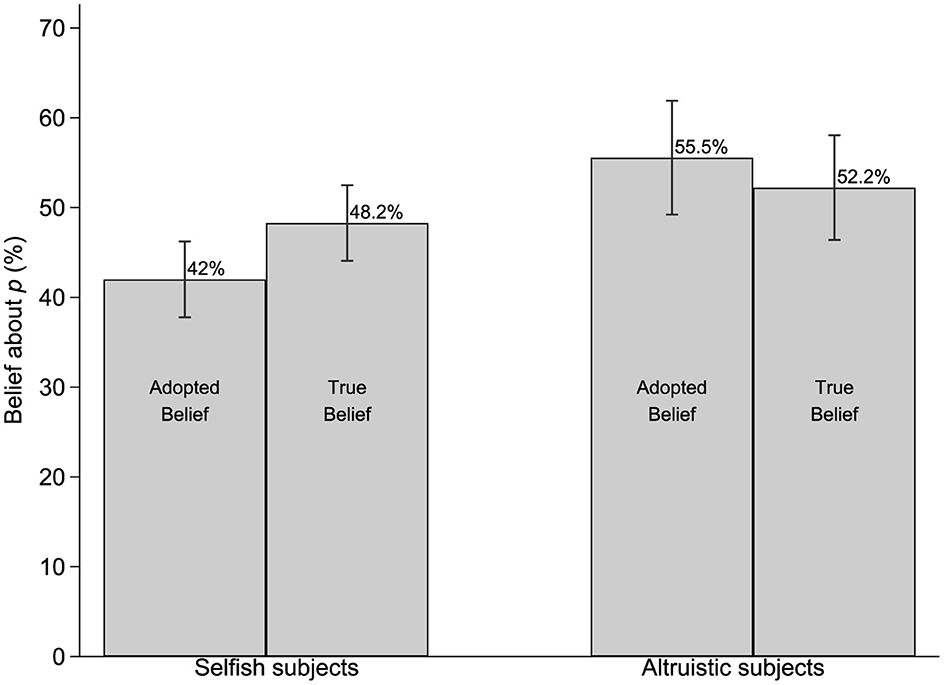

Figure 1 presents the true and adopted beliefs for both selfish and altruistic subjects. On average, selfish subjects' true beliefs were about 48% and their adopted beliefs about 42%. This mean difference of six percentage points is statistically significant under a t-test (p = 0.036) and marginally significant under a Mann-Whitney test (p = 0.076). By contrast, altruistic subjects' true and adopted beliefs were 52% and 55%, respectively, and the three-percentage-point difference between them was not statistically significant (t-test p = 0.344, Mann-Whitney p = 0.331). This suggests that selfish subjects manipulated their beliefs whereas altruistic subjects did not. This finding is also supported by regression results presented in Appendix Table 3.

Figure 1. Average values of true and adopted beliefs. Error bars represent 95% confidence intervals. Selfish subjects' true beliefs differ significantly from their adopted beliefs at the 5% level, whereas selfish and altruistic subjects' true beliefs do not differ significantly.

Moreover, the difference between selfish and altruistic subjects' true beliefs is not significantly different from zero (Mann-Whitney p = 0.372, t-test p = 0.256). This means that selfish subjects were not truly pessimistic about p, at least not more than altruistic subjects were, and therefore true pessimism could not have been the reason for their selfish behavior. The summary statistics in Appendix A provide additional t-test results.

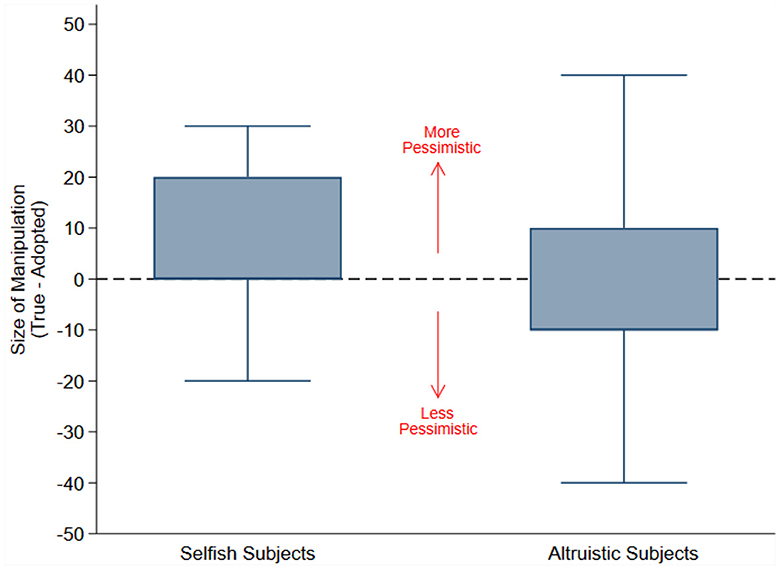

Figure 2 presents a box plot of the size of belief manipulation for selfish and altruistic subjects, where the size of belief manipulation is defined as the difference between a subject's true and adopted beliefs (true minus adopted). The figure shows that selfish subjects are more likely to have a positive size of belief manipulation; in other words, selfish subjects' adopted beliefs tend to be more pessimistic than their true beliefs.

Figure 2. Belief manipulation. When selfish subjects' adopted beliefs differed from their true beliefs, their adopted beliefs were almost always more pessimistic. By contrast, when altruistic subjects' adopted beliefs differed from their true beliefs, the difference was in both directions.

A closer look at the data shows that ten (out of 35) selfish subjects had an adopted belief more than 10 percentage points away from their true belief (in either direction). For nine of these ten subjects, the adopted belief was more pessimistic than the true belief, suggesting that selfish subjects deliberately manipulated their beliefs. Among altruistic subjects, ten (out of 27) had an adopted belief more than 10 percentage points away from their true belief. However, these subjects showed no clear majority in either direction: four adopted more pessimistic beliefs than their true beliefs, while the remaining six adopted less pessimistic (or more optimistic) beliefs. This suggests that their discrepancies between true and adopted beliefs are unsystematic, likely due to carelessness.
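To make the direction counts above concrete, the sketch below computes the size of belief manipulation (true minus adopted) with the 10-percentage-point threshold used in the text, and applies an exact two-sided sign test to the reported counts. The sign test is an illustration only; it is not an analysis the paper reports. The function names are hypothetical, and beliefs are assumed to be recorded as whole percentage points so that floating-point rounding cannot move a case across the threshold.

```python
from math import comb

THRESHOLD_PP = 10  # "more than 10 percentage points", as in the text


def manipulation_size(true_pct, adopted_pct):
    """True minus adopted belief, in percentage points.

    Positive values mean the adopted (stated) belief was more pessimistic.
    """
    return true_pct - adopted_pct


def direction(true_pct, adopted_pct, threshold=THRESHOLD_PP):
    """Classify a large discrepancy; return None if it is within the threshold."""
    size = manipulation_size(true_pct, adopted_pct)
    if size > threshold:
        return "pessimistic"
    if size < -threshold:
        return "optimistic"
    return None


def exact_sign_test(n_pessimistic, n_optimistic):
    """Two-sided exact sign test p-value under a 50/50 null."""
    n = n_pessimistic + n_optimistic
    k = min(n_pessimistic, n_optimistic)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)


# Counts reported in the text for discrepancies above the threshold.
print(round(exact_sign_test(9, 1), 4))  # selfish, 9 vs 1: 0.0215
print(round(exact_sign_test(4, 6), 4))  # altruistic, 4 vs 6: 0.7539
```

Under this illustrative test, a 9-to-1 split would be unlikely if pessimistic and optimistic misstatements were equally likely, while a 4-to-6 split is entirely consistent with random noise, in line with the interpretation above.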

4 Discussion

This paper uses a within-subject design to show that subjects manipulate their beliefs in a donation task. Specifically, subjects adopt pessimistic beliefs about a probability because doing so allows them to behave selfishly while maintaining an altruistic self-image. The experiment design resembles that of Andreoni and Sanchez (2020), in which subjects play a trust game and manipulate their beliefs about their opponent's altruism. However, because their experimental setup involves a two-player game, their subjects are primarily driven by a desire for a positive social image, whereas in my game, subjects are mainly motivated by a desire for a positive self-image. Nonetheless, applying their methodology to a different game provides a stress test of their approach and enhances the external validity of their study.

Because this design compares incentivized and unincentivized beliefs within the same subjects, it can potentially make important contributions to experimental economics and inform debates about when unincentivized belief elicitation is appropriate. Whenever a particular belief is self-serving or image-enhancing, people will have an incentive to gravitate toward it. In such situations, it is crucial that beliefs be elicited through an incentivized method.

Future work in this area can use a similar within-subject design in other domains to check whether unincentivized beliefs align with incentivized ones. In many situations, the incentive to misreport beliefs is obviously present. For example, someone who convinces themselves that their amount of snacking is negligible can deceptively maintain the self-image of a disciplined person on a strict diet. Similarly, a person who does not consider white lies to be lies at all can maintain the image of an honest person despite being a habitual white liar. In other situations, however, the incentive to misreport beliefs is less obvious. For example, if only a particular color (e.g., green) of a product is on sale, would people have an incentive to convince themselves that green is their favorite color? If they do, this would manifest as a discrepancy between their incentivized and unincentivized beliefs.

Lastly, the finding that people manipulate their beliefs to excuse themselves from donating can help charities and non-profits improve their fundraising efforts, and can help people in their character development. For example, if charities know that spontaneous belief manipulation (or excuse-making) is a common mechanism that people use to withhold their donations, they can modify their solicitation methods in ways that minimize potential donors' room for excuse-making. Likewise, if people understand that this type of belief manipulation is a natural part of human behavior, they can use this knowledge to become more honest with themselves.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Vanderbilt University Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

ZS: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation, Conceptualization.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frbhe.2024.1412437/full#supplementary-material

Footnotes

1. ^I use the definition of cognitive dissonance provided by Akerlof and Dickens (1982) and Rabin (1994). For example, Akerlof and Dickens (1982, p. 308) write, “cognitive dissonance reactions stem from peoples' view of themselves as ‘smart, nice people.’ Information that conflicts with this image tends to be ignored, rejected, or accommodated by changes in other beliefs.”

2. ^The idea that people derive utility from an altruistic self-image is well-established. For example, several experimental studies (Shariff and Norenzayan, 2007; Ahmed and Salas, 2011; Battigalli et al., 2013; Tonin and Vlassopoulos, 2013; Lambarraa and Riener, 2015) show that people are more willing to give money when their self-image is at stake, compared to when their self-image is not at stake. As for why people want to think of themselves as altruistic, Benabou and Tirole (2002, p. 872) suggest three possible reasons, “First, people may just derive utility from thinking well of themselves, and conversely find a poor self-image painful. Second, believing—rightly or wrongly—that one possesses certain qualities may make it easier to convince others of it. Finally, confidence in his abilities and efficacy can help the individual undertake more ambitious goals and persist in the face of adversity.”

3. ^In other words, subjects are told that p is equally likely to take each of these 11 values between 0 and 1. Every one of the 11 values is therefore a mode of the distribution, while the mean and median are both 0.5.
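As a worked check of the arithmetic in this footnote, suppose (an assumption for illustration only; the footnote does not list the values) that the 11 values are the evenly spaced points k/10 for k = 0, 1, ..., 10, each with probability 1/11:

```latex
\Pr\left(p = \tfrac{k}{10}\right) = \frac{1}{11}, \qquad k = 0, 1, \dots, 10,
\qquad
\mathbb{E}[p] = \sum_{k=0}^{10} \frac{1}{11}\cdot\frac{k}{10}
             = \frac{1}{110}\sum_{k=0}^{10} k
             = \frac{55}{110}
             = \frac{1}{2}.
```

Because all 11 values share the same (maximal) probability 1/11, each of them is a mode; and because they are symmetric about 1/2, the sixth-ranked value, p = 5/10 = 0.5, is the median.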

4. ^For the purposes of this study, the words altruistic and selfish are merely used as descriptive labels for subjects' actions, and not intended to represent a moral judgment of those actions.

5. ^For example, subjects might deceive themselves by reasoning that they would have liked to donate a smaller number of tokens, and that they donate zero tokens only because a smaller donation is not an option. I find that some selfish subjects do claim that they would have made a donation if they could have donated any amount of their choice; however, all of these subjects also manipulated their beliefs about the probability p, suggesting that self-deceivers find multiple ways to deceive themselves.

6. ^For example, Di Tella et al. (2015, p. 3422) write, “There was no monetary reward for making the correct guess in this version of the experiment, as we wanted to give subjects an opportunity to express their beliefs without a cost.” Andreoni and Sanchez (2020) write, “If the failure to incentivize reports here results in inaccuracy, then one is hard-pressed to think of reasons other than belief manipulations that should systematically bias reports in one particular direction.”

7. ^For a discussion of the multiple price list method and of randomly selecting one outcome for payment, see Andersen et al. (2006), Schotter and Trevino (2014), Schlag et al. (2015), and Andreoni and Sanchez (2020).

8. ^In several previous experiments that use price lists, some subjects switch multiple times between the two options presented (Holt and Laury, 2002; Jacobson and Petrie, 2009; Meier and Sprenger, 2010). Multiple switch points can indicate subject confusion and are difficult to rationalize, so it is common practice to use a framing device that avoids confusion and clarifies the decision process (Andreoni and Sprenger, 2011; Exley, 2015). Accordingly, the instructions explicitly stated that the choices in the first and last rows involve certain outcomes, and the clearly better choice in each of those rows was pre-highlighted. Despite these efforts, eight subjects switched multiple times in this task.
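The consistency check described in this footnote can be sketched in a few lines. This is a hypothetical illustration, not the author's analysis code: it assumes each row's choice is recorded as "A" (a bet that pays if the ambiguous event occurs) or "B" (a lottery that pays with a known probability q that rises from 0 in the first row to 1 in the last row, so those two rows involve certain outcomes). The function names `count_switches` and `belief_bracket` and the q values are assumptions made for the example.

```python
from typing import List, Optional, Tuple


def count_switches(choices: List[str]) -> int:
    """Count how often a subject's choice changes between consecutive rows."""
    return sum(1 for prev, cur in zip(choices, choices[1:]) if prev != cur)


def belief_bracket(choices: List[str], q: List[float]) -> Optional[Tuple[float, float]]:
    """Bracket a subject's belief from a single A-to-B switch.

    Hypothetical design (an assumption, not the paper's exact task): in row i
    the subject picks "A", a bet on the ambiguous event, or "B", a lottery that
    pays with known probability q[i], where q rises from 0 to 1 down the list.
    A subject with belief b prefers A while q[i] < b and B once q[i] > b, so a
    consistent subject switches exactly once, from A to B.
    """
    if len(choices) != len(q):
        raise ValueError("need exactly one choice per row")
    if count_switches(choices) != 1 or choices[0] != "A":
        # Multiple switchers (or a B-to-A reversal) cannot be rationalized by a
        # single belief, so no bracket is returned.
        return None
    k = choices.index("B")  # first row in which the known lottery is taken
    return (q[k - 1], q[k])


if __name__ == "__main__":
    rows = [i / 10 for i in range(11)]  # hypothetical q values 0.0, 0.1, ..., 1.0
    consistent = ["A"] * 4 + ["B"] * 7
    confused = ["A", "B", "A"] + ["B"] * 8
    print(count_switches(consistent), belief_bracket(consistent, rows))  # 1 (0.3, 0.4)
    print(count_switches(confused), belief_bracket(confused, rows))  # 3 None
```

Returning `None` rather than guessing a belief mirrors the point of the footnote: a subject with several switch points has no single switch that can be read as a belief.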

9. ^They also note that “this method is superior to the QSR since it is valid beyond the case of risk neutrality” (Andreoni and Sanchez, 2020, p. 7).

10. ^Note that a difference between the true beliefs of selfish subjects and those of altruistic subjects would not, by itself, point to belief manipulation. In fact, such a difference might even help explain why some subjects donated and others did not.

References

Ahmed, A. M., and Salas, O. (2011). Implicit influences of Christian religious representations on dictator and prisoner's dilemma game decisions. J. Socio-Econ. 40, 242–246. doi: 10.1016/j.socec.2010.12.013

Akerlof, G. A., and Dickens, W. T. (1982). The economic consequences of cognitive dissonance. Am. Econ. Rev. 72, 307–319.

Amir, O., Rand, D. G., and Gal, Y. K. (2012). Economic games on the internet: the effect of $1 stakes. PLoS ONE 7:e31461. doi: 10.1371/journal.pone.0031461

Andersen, S., Harrison, G. W., Lau, M. I., and Rutström, E. E. (2006). Elicitation using multiple price list formats. Exper. Econ. 9, 383–405. doi: 10.1007/s10683-006-7055-6

Andreoni, J., and Sanchez, A. (2020). Fooling myself or fooling observers? Avoiding social pressures by manipulating perceptions of deservingness of others. Econ. Inquiry 58, 12–33. doi: 10.1111/ecin.12777

Andreoni, J., and Sprenger, C. (2011). Uncertainty equivalents: testing the limits of the independence axiom. Technical report, National Bureau of Economic Research. doi: 10.3386/w17342

Arechar, A. A., Gächter, S., and Molleman, L. (2018). Conducting interactive experiments online. Exper. Econ. 21, 99–131. doi: 10.1007/s10683-017-9527-2

Battigalli, P., Charness, G., and Dufwenberg, M. (2013). Deception: the role of guilt. J. Econ. Behav. Organ. 93, 227–232. doi: 10.1016/j.jebo.2013.03.033

Benabou, R., and Tirole, J. (2002). Self-confidence and personal motivation. Quart. J. Econ. 117, 871–915. doi: 10.1162/003355302760193913

Bodner, R., and Prelec, D. (2003). Self-signaling and diagnostic utility in everyday decision making. Psychol. Econ. Decis. 1, 105–126. doi: 10.1093/oso/9780199251063.003.0006

Buchanan, J., and Razzolini, L. (2022). How dictators use information about recipients. J. Behav. Fin. 23, 408–426. doi: 10.1080/15427560.2022.2081971

Chen, S., and Heese, C. (2021). Fishing for good news: motivated information acquisition. Technical report, University of Bonn and University of Mannheim, Germany.

Dana, J., Weber, R. A., and Kuang, J. X. (2007). Exploiting moral wiggle room: experiments demonstrating an illusory preference for fairness. Econ. Theory 33, 67–80. doi: 10.1007/s00199-006-0153-z

Di Tella, R., Perez-Truglia, R., Babino, A., and Sigman, M. (2015). Conveniently upset: avoiding altruism by distorting beliefs about others' altruism. Am. Econ. Rev. 105, 3416–3442. doi: 10.1257/aer.20141409

Exley, C. L. (2015). Excusing selfishness in charitable giving: the role of risk. Rev. Econ. Stud. 83, 587–628. doi: 10.1093/restud/rdv051

Exley, C. L. (2020). Using charity performance metrics as an excuse not to give. Manag. Sci. 66, 553–563. doi: 10.1287/mnsc.2018.3268

Festinger, L. (1957). A Theory of Cognitive Dissonance, volume 2. Redwood City, CA: Stanford University Press. doi: 10.1515/9781503620766

Gately, J. B., et al. (2023). At least I tried: partial willful ignorance, information acquisition, and social preferences. Rev. Behav. Econ. 10, 163–187. doi: 10.1561/105.00000167

Grossman, Z. (2015). Self-signaling and social-signaling in giving. J. Econ. Behav. Organ. 117, 26–39. doi: 10.1016/j.jebo.2015.05.008

Grossman, Z., and Van der Weele, J. J. (2017a). Dual-process reasoning in charitable giving: Learning from non-results. Games 8:36. doi: 10.3390/g8030036

Grossman, Z., and Van der Weele, J. J. (2017b). Self-image and willful ignorance in social decisions. J. Eur. Econ. Assoc. 15, 173–217. doi: 10.1093/jeea/jvw001

Haisley, E. C., and Weber, R. A. (2010). Self-serving interpretations of ambiguity in other-regarding behavior. Games Econ. Behav. 68, 614–625. doi: 10.1016/j.geb.2009.08.002

Holt, C. A., and Laury, S. K. (2002). Risk aversion and incentive effects. Am. Econ. Rev. 92, 1644–1655. doi: 10.1257/000282802762024700

Horton, J. J., Rand, D. G., and Zeckhauser, R. J. (2011). The online laboratory: conducting experiments in a real labor market. Exper. Econ. 14, 399–425. doi: 10.1007/s10683-011-9273-9

Jacobson, S., and Petrie, R. (2009). Learning from mistakes: what do inconsistent choices over risk tell us? J. Risk Uncert. 38, 143–158. doi: 10.1007/s11166-009-9063-3

Johnson, D., and Ryan, J. B. (2020). Amazon Mechanical Turk workers can provide consistent and economically meaningful data. Southern Econ. J. 87, 369–385. doi: 10.1002/soej.12451

König-Kersting, C., and Trautmann, S. T. (2016). Ambiguity attitudes in decisions for others. Econ. Lett. 146, 126–129. doi: 10.1016/j.econlet.2016.07.036

Lambarraa, F., and Riener, G. (2015). On the norms of charitable giving in Islam: two field experiments in Morocco. J. Econ. Behav. Organ. 118, 69–84. doi: 10.1016/j.jebo.2015.05.006

Meier, S., and Sprenger, C. (2010). Present-biased preferences and credit card borrowing. Am. Econ. J. Appl. Econ. 2, 193–210. doi: 10.1257/app.2.1.193

Rabin, M. (1994). Cognitive dissonance and social change. J. Econ. Behav. Organ. 23, 177–194. doi: 10.1016/0167-2681(94)90066-3

Regner, T., and Matthey, A. (2021). Actions and the self: I give, therefore I am? Front. Psychol. 12:684078. doi: 10.3389/fpsyg.2021.684078

Schlag, K. H., Tremewan, J., and Van der Weele, J. J. (2015). A penny for your thoughts: a survey of methods for eliciting beliefs. Exper. Econ. 18, 457–490. doi: 10.1007/s10683-014-9416-x

Schotter, A., and Trevino, I. (2014). Belief elicitation in the laboratory. Annu. Rev. Econ. 6, 103–128. doi: 10.1146/annurev-economics-080213-040927

Shariff, A. F., and Norenzayan, A. (2007). God is watching you: priming God concepts increases prosocial behavior in an anonymous economic game. Psychol. Sci. 18, 803–809. doi: 10.1111/j.1467-9280.2007.01983.x

Snowberg, E., and Yariv, L. (2021). Testing the waters: behavior across participant pools. Am. Econ. Rev. 111, 687–719. doi: 10.1257/aer.20181065

Tonin, M., and Vlassopoulos, M. (2013). Experimental evidence of self-image concerns as motivation for giving. J. Econ. Behav. Organ. 90, 19–27. doi: 10.1016/j.jebo.2013.03.011

Keywords: self-deception, self-image, altruism, motivated beliefs, belief manipulation, ambiguity aversion

Citation: Samad Z (2024) Conveniently pessimistic: manipulating beliefs to excuse selfishness in charitable giving. Front. Behav. Econ. 3:1412437. doi: 10.3389/frbhe.2024.1412437

Received: 04 April 2024; Accepted: 17 May 2024;

Published: 26 June 2024.

Edited by:

J. Jobu Babin, Western Illinois University, United States

Reviewed by:

J. Braxton Gately, Western Illinois University, United States
Timothy Flannery, Missouri State University, United States

Copyright © 2024 Samad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zeeshan Samad

†ORCID: Zeeshan Samad orcid.org/0000-0002-7438-3097
