- 1Adelson School of Entrepreneurship, Reichman University, Herzliya, Israel

- 2The School of Social and Policy Studies, Tel Aviv University, Tel Aviv, Israel

In a world grappling with technological advancements, the concept of Artificial Intelligence (AI) in governance is becoming increasingly realistic. While some may find this possibility incredibly alluring, others may see it as dystopian. Society must account for these varied opinions when implementing new technologies or regulating and limiting them. This study (N = 703) explored Leftists’ (liberals) and Rightists’ (conservatives) support for using AI in governance decision-making amidst an unprecedented political crisis that washed through Israel shortly after the proclamation of the government’s intentions to initiate reform. Results indicate that Leftists are more favorable toward AI in governance. While legitimacy is tied to support for using AI in governance among both, Rightists’ acceptance is also tied to perceived norms, whereas Leftists’ approval is linked to perceived utility, political efficacy, and warmth. Understanding these ideological differences is crucial, both theoretically and for practical policy formulation regarding AI’s integration into governance.

Introduction

“There can be no serious conflicts on Earth, in which one group or another can seize more power than it has for what it thinks is its own good despite the harm to Mankind as a whole, while the Machines rule.” (Isaac Asimov, I, Robot)

In this quote, Asimov argues that the destructive human tendency to hoard power and resources for one’s group while harming others may be curbed by (artificially intelligent, i.e., AI) robotic guidance. This idea of AI-guided governing was completely unrealistic until recently. However, AI is gradually finding its way into every aspect of our lives, including our governments (Chiusi et al., 2020; Coglianese and Ben Dor, 2020; Makridakis, 2017). AI is currently used to decipher medical tests (Yu et al., 2018), and various research papers include analyses conducted by AI and even parts that AI engines like ChatGPT wrote (see Böhm et al., 2023; Alser and Waisberg, 2023; Garg et al., 2023). AI has been increasingly integrated into legal systems that, in many states, suffer from great delays due to case overload (Sharma et al., 2022). Moreover, some countries have already piloted or incorporated AI in various branches of governance. For example, in Poland, AI is forming recommendations regarding resource allocations to maximize employment. Although these are meant to be revised by a person, the responsible clerks question less than 1% of recommendations (Kuziemski and Misuraca, 2020). This raises the question: Who will support this innovation, embracing the idea of an artificially intelligent, non-human decision-maker? Also, under what conditions would people be willing to waive the idea of self-governing in favor of AI governance?

The rise of generative AI

We are currently in the course of an ongoing technological revolution. Retailers use AI to estimate demand, evaluate market potential for new products, and analyze consumers’ online responses (Mariani and Wamba, 2020). AI is also used to predict medical patients’ risks and initiate personalized medicine on a large scale (Israel Innovation Authority, 2018). Generative Artificial Intelligence (GAI) such as ChatGPT, Claude, Bard, and others are now available to the public and used by an increasing number of people for diverse purposes such as education (Baidoo-Anu and Ansah, 2023), medicine (Shoja et al., 2023), mental health care (Tal et al., 2023), as well as scientific analyses and writing (Alser and Waisberg, 2023; Garg et al., 2023). Yet, another field AI is gradually entering is public service and governance (Sousa et al., 2019). Incorporating AI in governance has an immense potential to better our lives and advance our society. Governments could save funds by automating work, using AI to reduce “red tape” and improve the services given to the public (Aung et al., 2021; Golding and Nicola, 2019; van Noordt and Misuraca, 2022). For example, AI has been increasingly integrated into legal systems, where it promises more efficient and fairer outcomes through automation (Sharma et al., 2022). Also, Canada has been incorporating AI into its public services, enhancing the efficiency and effectiveness of its governmental operations. This includes using AI to assist with government forms and respond to public inquiries, streamlining traditionally more time-consuming and labor-intensive processes. Additionally, Canada has piloted an AI immigration decision-making system that evaluates immigration applications, provides recommendations, and notes potential red flags in applications (Kuziemski and Misuraca, 2020).

However, like any technological revolution, it has great disruptive and destructive potential. Experts’ concerns regarding the adverse effects of using AI technologies range from privacy and copyright issues to fear of global annihilation (Bartneck et al., 2021; Hristov, 2016; Yudkowsky, 2008; Yudkowsky et al., 2010). More moderate assessments of the concerns regarding the use of AI refer to issues of fairness, ethical use, inconsistency in service delivery, and discrimination (Nzobonimpa and Savard, 2023). For instance, AI often relies on datasets that recount past information unvetted for fairness or representativeness. Consequently, the data guiding AI’s current decision-making might be biased, reflecting past inequalities rather than ideal, equitable norms (Caliskan et al., 2017). Furthermore, AI technology is essentially different from biological intelligence and other technologies, and we have no clear understanding of how it may further develop and interact with the world (Yudkowsky, 2008). Therefore, we cannot deduce or even have any basis for estimating the implications of the AI revolution on society (Yudkowsky et al., 2010).

As with any new technology, some people rush to use AI technologies, while others lag (Lockey et al., 2021; Sharma et al., 2021). Knowing who is more likely to adopt these new technologies and what contexts may lead to increased use of them is crucial for mitigating their negative repercussions as well as reaping their benefits. Past research found that acceptance of AI-based decision-making systems is associated with people’s expectations of the system’s performance, the anticipated effort that using them would require, social influence (such as perceived group norms), and having the needed resources to use them (Sharma et al., 2022). People’s preference to use or avoid using AI may also be affected by characteristics of the person, like world views (belief in equality and collectivism) as well as income, gender (being male), irreligiosity, education, and familiarity with AI. These were all found to be associated with the acceptance of AI systems’ decision-making (Mantello et al., 2023).

Political ideology

One aspect that has yet to be thoroughly investigated and is likely to impact how individuals perceive AI and their willingness to endorse its application in governance is political ideology. Political ideology is a set of attitudes that encompasses cognitive, emotional, and motivational aspects. Political ideology organizes people’s views of the world and their values and helps to explain their political behavior (Tedin, 1987). Political ideology is therefore associated not only with voting behaviors but with how a person sees the world and their personality. Rightists (i.e., conservatives) and Leftists (i.e., liberals) tend to prioritize different values. Rightists generally favor binding values that promote social order and cohesion within communities and groups, while Leftists typically emphasize individuating values focused on individual rights, personal freedoms, and social justice (Stewart and Morris, 2021). Consequently, Rightists are often more influenced by values such as tradition, loyalty to the group, and respect for authority, whereas Leftists are generally more motivated by values such as inclusiveness, personal freedoms, and social justice (Haidt and Graham, 2007).

According to the Uncertainty-Threat Model of political conservatism, Rightists (i.e., conservatives) perceive the world as exceedingly threatening and feel a great need to avoid uncertainty. Therefore, they tend to resist change in general and social change specifically and be more accepting of social inequality since it provides a known structure and stability (Jost et al., 2003a, 2003b). Indeed, studies found that political right-wing ideology is associated with less preference for social equality and a reduced tendency to support marginalized groups (Kluegel, 1990; Skitka and Tetlock, 1993). Further findings indicate that Leftists tend to be curious and seek innovations, while Rightists are conventional and more organized (Carney et al., 2008). When examining the associations between ideology and The Big Five personality traits, Leftists (i.e., liberals) were found to be more open to new experiences (Jost et al., 2003a, 2007), while Rightists show greater conscientiousness (Van Hiel et al., 2004).

These differences should translate to different perceptions and levels of support for using AI in governance decisions. While Rightists may be more deterred by its unconventionality and the uncertainty it brings, Leftists may find these aspects less intimidating and be more intrigued by the innovation that AI may present. Indeed, recent studies found that Rightists showed less support for technological innovations due to binding value concerns (Claudy et al., 2024). However, the context in which a decision or evaluation is made may also influence the ways in which Rightists and Leftists view the use of technological advances. For instance, one study found that Rightists favored government use of automated decision systems such as AIs more than Leftists (Schiff et al., 2022). However, another study found that Leftists’ support for the use of AI in policing is higher than Rightists’ and that each group’s support varies with contextual factors, such as use of AI by local sheriffs versus the FBI and use for internal review of officers versus predictive policing of the public (Schiff et al., 2023).

Trust in the government and acceptance of AI

Many contextual-situational factors were found to increase (or decrease) trust in AI, thus affecting support for integrating AI into governance (Kaplan et al., 2023; Madhavan and Wiegmann, 2007). For instance, people have less trust in AI regarding decisions and tasks that are typically human (intuitive judgments, emotional responses, etc.) but more trust in AI regarding seemingly logical decisions and tasks (Lee, 2018; Stai et al., 2020). People tend to have greater support for AI making medical resource allocation decisions after being exposed to information emphasizing racial and economic inequality in medical outcomes (Bigman et al., 2021). Similarly, having more trust in the system is associated with greater trust in a human physician than an AI; however, less trust in the medical system is associated with no such preference for a human over an AI (Lee and Rich, 2021). Furthermore, people from countries that are characterized by high levels of corruption tend to support the use of AI in decision-making more than people from countries that are not characterized by corruption (Castelo, 2023).

In recent years, there has been a surge of right-wing organizations and political parties disseminating messages aimed at eroding trust in governing institutions and challenging their legitimacy on a global scale (Greven, 2016; Rodrik, 2021). For instance, a recent survey conducted in the US found that about 4 in 10 Leftists (Democrats) and 7 in 10 Rightists (Republicans) do not trust the federal government to do what is right (Hitlin and Shutava, 2022).1 This phenomenon can weaken democratic systems and may impact the extent to which individuals trust AI in shaping governance decisions. Although individuals tend to place greater trust in human judgment regarding decisions involving elements of intuition, morality, or emotions (Lee, 2018), when trust in the existing system is notably diminished, people may begin to place AI-generated decisions on par with those made by individuals who represent the system (Lee and Rich, 2021).

The current research

Like numerous other nations, Israel has experienced substantial political turmoil in recent times. Israelis were called to vote in national elections five times between 2019 and 2022. The government that was established following the November 2022 elections was the most right-wing in Israel’s history,2 and soon after its formation, it set out to reform Israel’s governing systems. The suggested reform would allow the government greater power while weakening the judicial system, similarly to changes that steered Poland and Hungary away from liberal democracy (Gidron, 2023). At the time (as is still true at the time these lines are being written), the fate of Israel’s democracy seemed unclear.

In the past, it was suggested that there is a gap in our understanding of people’s attitudes regarding the use of AI, indicating that additional research is needed to explore the influence of political ideology on support for the use of AI in governance in various contexts (Schiff et al., 2022). This study was conducted during the initial waves of protests that washed through Israel shortly after the proclamation of the government’s intentions to initiate reform.3 In this study, we examined the interplay between political ideology and the current political situation in Israel. We hypothesized that Leftists would show greater support for using AI in governance decision-making since they tend to be more open to changes and seek innovations (Carney et al., 2008). Interestingly, in the case of AI use, the Leftist tendency to embrace innovation may be somewhat at odds with Leftist values since AI uses past decisions to make current decisions, which may lead to both biased and conservative patterns (Buolamwini and Gebru, 2018; Chouldechova, 2017; Eubanks, 2017). In the context of this research, Leftists may be more concerned that the new reform could increase inequality and social injustice and, therefore, be motivated to limit its power, even by an unknown mechanism such as AI. This is somewhat like Schiff et al.’s (2023) suggestion that Leftists may support the use of AI in policing not because they believe it is unbiased but because they believe that the current police bias is worse. We did not formulate hypotheses regarding the different features associated with support for using AI in governance decision-making for Leftists versus Rightists.

Methods

The institutional ethics committee approved the research.4 The comprehensive study plan, items (translated to English), data, and code required for replicating the results presented in this study can be accessed through the Open Science Framework at the following link: https://osf.io/sbdjc/?view_only=d2ddcf4f920b41209e1b45f4a99d3b4a. This study was not preregistered.

Sample

A representative sample in terms of gender, age, and place of residence of Jewish Israeli society (N = 703; 53.6% women; Mage = 48.74, SD = 16.52) was recruited via an online survey company (HaMidgam).5 Regarding political ideology, 43.1% of the participants defined themselves as Rightists, 32.7% as centrists, and 24.2% as Leftists.6 The checkmarket sample size calculator7 was used to assess the minimal required sample size for the population of 7.106 million Jews (Israel Central Bureau of Statistics, 2022). A 4% margin of error and a 95% confidence level yielded a minimal sample size of 601 participants. The required sample size of 650 participants was calculated using G*Power version 3.1.9.4 (Faul et al., 2007; Faul et al., 2009). This calculation was based on conducting a multiple linear regression analysis involving 29 predictors. The anticipated effect size was set at f² = 0.07, which falls between a small and a medium effect, and the desired statistical power was set at 0.8. These specifications yielded a desired sample size of 325 participants. However, the analysis was planned to be carried out separately for two groups (Rightists and Center-Leftists); thus, the total sample size needed was doubled, resulting in a target of 650 participants.
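The survey-precision figure above (601 participants for a 4% margin of error at 95% confidence) can be reproduced with the standard formula for estimating a proportion, n0 = z²·p(1 − p)/e², followed by a finite population correction. The sketch below is only an illustration and is not the calculator the authors used. The function name is hypothetical, and the worst-case proportion p = 0.5 is an assumption, since the source does not report it.

```python
import math
from statistics import NormalDist


def min_sample_size(population, margin_of_error, confidence=0.95, proportion=0.5):
    """Minimal sample size for estimating a proportion (Cochran's formula
    with a finite population correction).

    proportion=0.5 is an assumed worst case; the source does not report it.
    """
    # Two-sided critical value of the standard normal (about 1.96 for 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Finite population correction (negligible for a population of millions).
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


print(min_sample_size(population=7_106_000, margin_of_error=0.04))  # 601
```

With a population this large, the correction barely changes the result, so the required sample depends almost entirely on the margin of error and the confidence level.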

Procedure

Data were collected by a survey company (“Midgam Project Web Panel”) between April 3rd and April 5th, 2023. Participants were asked to participate in exchange for monetary compensation, provided informed consent, and proceeded to complete the study questionnaire. Participants reported their attitudes and perceptions regarding AI and decision-making by the government at the time. Because there were fewer participants with left-leaning political views in our sample, we merged the center and left-leaning individuals into a single “Center-Leftist” group (56.9%) for comparison with the “Rightist” group (43.1%).

Measures

As this study was exploratory, we investigated a range of measures. To effectively categorize these measures, we have separated them into two groups: those related to AI and technology and those concerning government and the suggested reform.

Measures related to technology and AI

Technology knowledge (Tech Know). Participants’ perception of their technological literacy was assessed using participants’ responses to four items adapted from Mantello et al. (2023), e.g., “How familiar are you with coding and programming?” (𝛼 = 0.86). High scores denote high perceived self-knowledge of technology. The scales were all translated to Hebrew, and unless otherwise mentioned, responses to all scales ranged from 1 (completely disagree) to 6 (greatly agree). Note: This and the following variables will appear in an abbreviated form in Table 1. The abbreviated forms of the variables are listed in brackets next to their entire label.

Technology readiness (Tech readiness). Willingness to accept and use new technologies, in general, was assessed via participants’ responses to 18 items previously used by Lam et al. (2008), e.g., “You enjoy the challenge of figuring out high tech gadgets” (𝛼 = 0.86). High scores denote willingness to adopt new technologies.

Legitimacy of using AI in governance (Legitimacy(AI)). Perceived legitimacy was assessed using five items regarding fairness, equality, and safety. Equality items were adapted from the legitimacy scale used by Starke and Lünich (2020), e.g., “The AI-based governance decision-making process would be fair” (𝛼 = 0.85). High scores denote high perceived legitimacy of the use of AI in governance decision making.

Usefulness of AI in governance (Usefulness(AI)). The perceived usefulness of AI was assessed by six items adapted from Maaravi and Heller (2021), e.g., “Using AI-based decision-making mechanisms may improve the effectiveness of governance management” (𝛼 = 0.80). High scores denote high perceived efficiency (effectiveness) of the use of AI in governance decision making.8

Warmth toward AI (Warmth(AI)). We assessed warmth toward AI using the feeling thermometer. The feeling thermometer is a prevalent measure of general positivity versus negativity toward an entity and is often used to assess affective polarization (Gidron et al., 2019). Participants rated their feelings toward AI on a thermometer scale ranging from 0 (highly cold/unfavorable) to 100 (highly warm/favorable).

Emotions regarding using AI in governance. Participants were asked to assess their own emotional state when thinking of AI being used to make governance decisions. Participants rated how much fear, anxiety, excitement, despair, anger, hope, and sadness they felt at using AI in governance decision-making.

Fear of personal harm if AI is used in governance (Fear of harm(AI)). Perceived risk of harm was assessed by three items, e.g., “Are you concerned that decisions made using AI could harm your or your family members’ well-being?” (𝛼 = 0.89). High scores denote perceived high risk or high levels of fear for oneself and one’s family due to use of AI in governance decision making.

Perceived public support regarding the use of AI in governance (Norms(AI)). Perceived norms were examined using two items in which the participant assessed the percentage of Israelis supporting using AI in governance decision-making. The items were: “In your opinion, what percentage of Israelis support the use of AI as a tool for governance decision-making?” and “In your opinion, what percentage of Israelis believe that governance decisions made by AI are legitimate?” Participants responded to these items using a slider ranging from 0 to 100% (r = 0.74, p < 0.001). High scores denote the perception that the use of AI in governance is normative and supported by much of society.9

Attitudes regarding AI use in governance (Attitudes(AI)). Attitudes regarding the use of AI as an aid in governance decisions were assessed using two items adapted from Maaravi and Heller (2021), e.g., “Using AI-based decision-making mechanisms as a tool in governance management is a good idea” (r = 0.76, p < 0.001). High scores denote the acceptance of the use of AI in governance decision-making.10

Support for the use of AI in governance decision-making (Support(AI)). Support for the use of AI was assessed by eight items adapted from Maaravi and Heller (2021), e.g., “I will support the use of AI as often as possible in governance decision-making” (𝛼 = 0.89). High scores denote support for the use of AI in governance decision-making.

Measures related to the current government

Political efficacy (Political efficacy). Participants’ political self-efficacy (i.e., the perception that one can affect the political processes or situations) was assessed by two items: “As a citizen, you can influence the decision-making processes in the country” and “As a citizen, you can influence policy decisions that will affect your future” (r = 0.77, p < 0.001). High scores denote high political self-efficacy.

Warmth to various factions in the government (Warmth(GOV)). We assessed warmth toward the government using the feeling thermometer (Gidron et al., 2019). Participants rated their feelings toward the current government and the coalition on a thermometer scale ranging from 0 (highly cold/unfavorable) to 100 (highly warm/favorable).

Emotions regarding the current government. Participants were asked to assess their emotional state when thinking of the current government making governance decisions. Participants rated how much fear, anxiety, excitement, despair, anger, hope, and sadness they felt at the thought of the current government’s decision-making. High scores denote feeling more intense emotions.

Fear of personal harm due to the current government’s political decisions (Fear of harm(GOV)). Perceived risk of harm was assessed by three items, e.g., “Are you concerned that decisions made by the current government could harm your or your family members’ well-being?” (𝛼 = 0.94). High scores denote perceived high risk or high levels of fear for oneself and one’s family due to the current government’s political decisions.

Public support of the reform (Norms(GOV)). Perceived norms were examined using six items in which the participant assessed the percentage of Israelis supporting the government’s proposed reforms, e.g., “In your opinion, what percentage of Israelis support the government’s latest reforms?” Participants responded to these items using a slider ranging from 0 to 100% (𝛼 = 0.77). High scores denote the perception that much of society supports the proposed reform.

Participants also reported social demographic measures and other additional measures not used in the current paper (for the complete questionnaire, see Appendix C).
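The reliability coefficients reported for the multi-item scales above are Cronbach's 𝛼 values. As a rough illustration of how such a coefficient is obtained, the sketch below implements the textbook formula 𝛼 = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name and the response data are hypothetical and are not taken from the study's dataset or code.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    All items must cover the same respondents in the same order.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("need at least two items")
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(variance(item) for item in item_scores)
    # Variance of each respondent's total score across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    total_var = variance(totals)
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical responses (1-6 scale) from five respondents to three items.
items = [
    [1, 2, 4, 5, 6],
    [2, 2, 3, 5, 6],
    [1, 3, 4, 4, 6],
]
print(round(cronbach_alpha(items), 2))
```

For the two-item measures, the source reports a Pearson correlation (r) instead, which is a common choice when a scale has only two items.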

Results

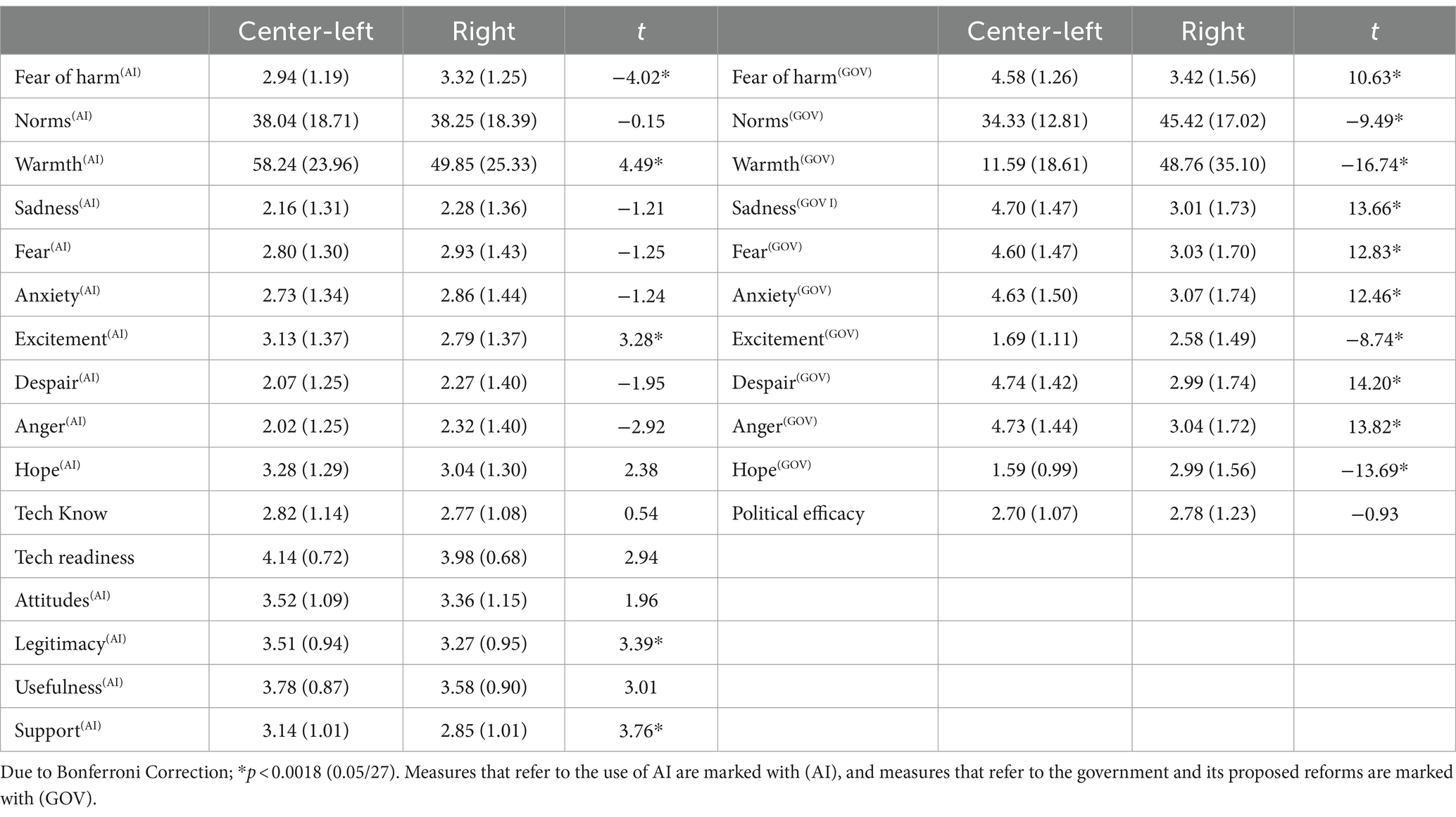

We first examined the differences between Rightists’ and Center-leftists’ perceptions, attitudes, and emotions regarding the use of AI within governance decision-making. As hypothesized, the results indicate that compared with Rightists, Center-leftists reported more favorable attitudes toward technology in general and specifically toward the use of AI within governance decision-making, more positive emotions and fewer negative emotions regarding the use of AI within governance, and greater support for the use of AI in governance decision-making. Interestingly, Center-leftists and Rightists did not differ in the extent to which they rated their technology knowledge or their views about public support (norms) regarding the use of AI in governance decision-making.

We then examined the differences between Rightists’ and Center-leftists’ perceptions, attitudes, and emotions regarding the government, its governance decision-making, and the proposed reforms. The results indicate that Center-leftists reported more negative attitudes and feelings toward the government, its governance decision-making, and the proposed reforms than Rightists. Interestingly, Rightists and Center-leftists did not differ in their perceived political efficacy (despite having a right-wing government; see Table 1).

Table 1. Means, standard deviations (in parentheses), and comparisons between rightists and center-leftists.

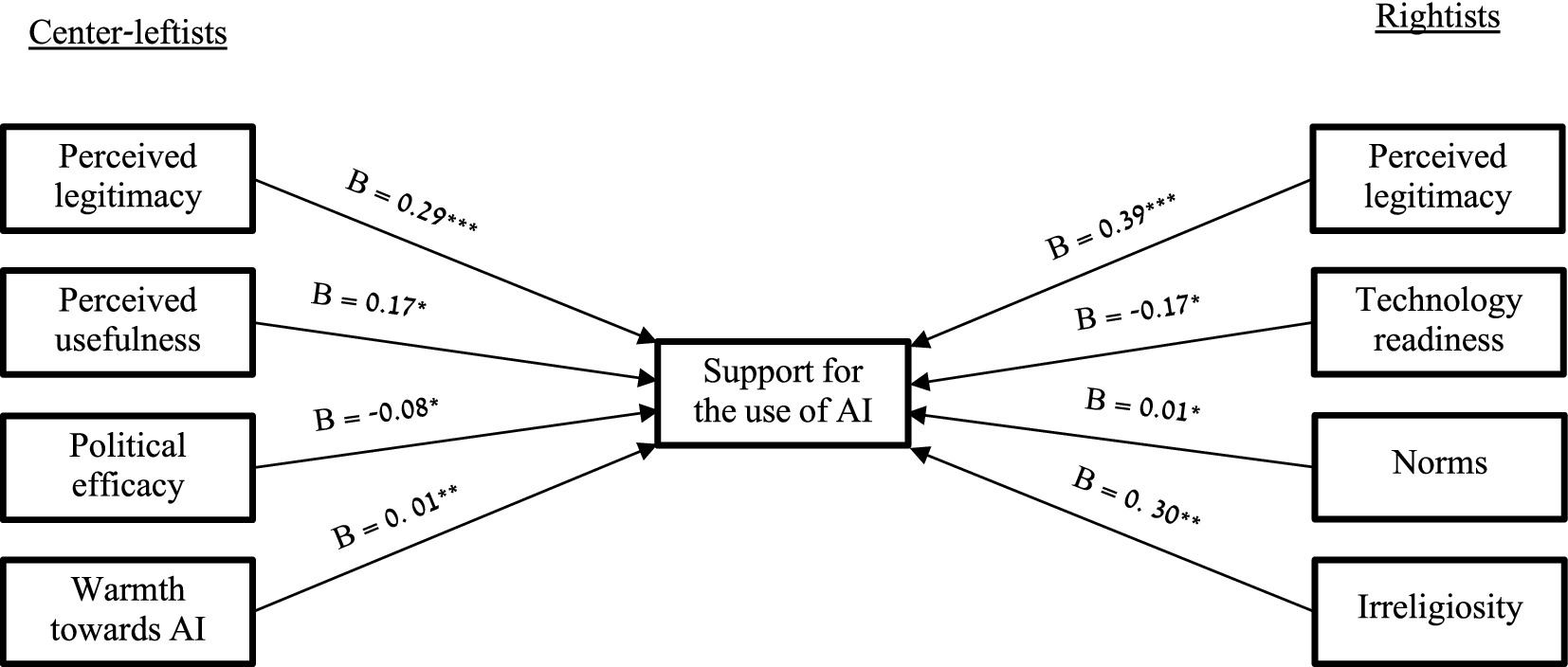

Finally, we conducted an exploratory analysis of the data to examine the issues associated with support for using AI in governance. We conducted two linear regressions (separately for Center-leftists and Rightists) with support for using AI in governance as the outcome variable and the other measures mentioned above as predictors (while controlling for age and gender). Among the Center-leftists, the significant predictors of support for the use of AI were perceived usefulness of the use of AI (B = 0.17, SE = 0.08, t = 2.04, p = 0.042), warmth toward AI (B = 0.01, SE = 0.002, t = 2.85, p = 0.005), and political efficacy (more political efficacy associated with less support for the use of AI; B = −0.08, SE = 0.04, t = −2.20, p = 0.028). For Rightists, technology readiness (B = −0.17, SE = 0.08, t = −1.98, p = 0.049), perceived public support (B = 0.01, SE = 0.003, t = 2.21, p = 0.028), and irreligiosity (i.e., being secular; B = 0.30, SE = 0.10, t = 3.04, p = 0.003) were significant predictors of support for the use of AI in governance decision-making. Interestingly, when controlling for all other variables, technology readiness predicted less support for using AI in governance among Rightists. Perceived legitimacy of AI as an aid in making governance decisions predicted support for the use of AI as a governance decision-making tool among both Center-leftists (B = 0.29, SE = 0.08, t = 3.83, p < 0.001) and Rightists (B = 0.39, SE = 0.09, t = 4.24, p < 0.001; see Appendix B for complete statistics; Figure 1).

Figure 1. Significant predictors of support for using AI in governance. *p < 0.05; **p < 0.01; ***p < 0.001.
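For readers less familiar with regression output, each reported t value is the coefficient divided by its standard error (B/SE), and the p value is the two-sided tail probability of that statistic. The sketch below illustrates the relationship under two stated assumptions: it uses a standard normal approximation in place of the t distribution (reasonable only because each group has a few hundred participants), and the function names are hypothetical. Because the source reports B and SE rounded to two decimals, ratios computed from them only approximate the reported t values.

```python
from statistics import NormalDist


def wald_t(b, se):
    """t statistic for a regression coefficient: estimate over standard error."""
    return b / se


def two_sided_p_normal(t):
    """Two-sided p-value using a standard normal approximation.

    Assumption: residual degrees of freedom are large enough that the
    t distribution is close to normal; exact values need the t distribution.
    """
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Reported for perceived usefulness among Center-leftists: t = 2.04, p = 0.042.
print(round(two_sided_p_normal(2.04), 3))
# The ratio of the rounded B and SE only approximates the reported t of 4.24.
print(round(wald_t(0.39, 0.09), 2))
```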

Discussion

This research investigates the relationship between political ideology and new technologies in a particular political context, focusing on support for using AI in governance. The study was conducted in Israel during political unrest, as a radical political reform pushed forward by an extreme Right-wing government faced significant public opposition. It explores how Rightists (conservatives) and Leftists (liberals) differ in their acceptance and perception regarding allowing AI to have a role in governance decision-making. As expected, our findings show that Center-leftists feel greater warmth and excitement regarding the use of AI and are less fearful of using AI in governance while also perceiving it as more legitimate than Rightists.

Our study analyzed the differences between Rightists and Center-leftists in Israel. During the survey period, a Rightist bloc supported the right-wing government and its reforms, while a similar-sized bloc of Centrists and Leftists opposed them. Notably, though Leftists were a minority, many Centrists also resisted the government’s policies (Anabi, 2022; Hermann and Anabi, 2023b; Hermann and Anabi, 2023a). Our findings reveal stark contrasts between these groups’ attitudes and emotions regarding the government and its proposed reforms. Center-leftists generally exhibited less warmth, hope, and excitement and heightened despair, anxiety, anger, sadness, and fear, including concerns for personal and family safety and well-being.

The study also reveals different factors underlying Rightists’ and Center-leftists’ support for using AI in governance. Both groups’ support was influenced by perceived legitimacy. However, Rightists are swayed by social norms regarding AI use, technology readiness, and irreligiosity, while Leftists are influenced by perceived usefulness, political efficacy, and warmth toward AI. Surprisingly, among Rightists, higher levels of technology readiness predicted less support for using AI in governance. A possible explanation for this surprising finding is that the perceived legitimacy of AI and norms regarding the use of AI (and other predictors like the perceived usefulness of AI) account for most of the variance in support for using AI in governance that relates to perceptions of safety, efficiency, and legitimacy. After controlling for these predictors, the remaining variance that technology readiness can account for may reflect comfort with current technologies, whereas AI may signify a very different and potentially intimidating type of technology.

These results may signal that Rightists’ support for using AI in governance is rooted in social acceptance. In contrast, Leftists’ support is rooted in usefulness and the potential for change in situations they find objectionable. This research highlights the complex interplay between political ideology, technology adoption, and current political climates, particularly in contexts like Israel’s precarious political situation.

The findings extend the Uncertainty-Threat Model of political conservatism to a new, previously unstudied context (i.e., the use of AI in governance decision-making). According to the Uncertainty-Threat Model (Jost et al., 2003a), Rightists tend to avoid change because it is often rife with uncertainty, to which they are highly averse, and the use of AI represents the possibility of immense change and uncertainty, as so much about this new technology is unknown. We also found that one issue that underlies Rightists’ (but not Leftists’) support for using AI was perceived norms, i.e., how accepted this specific use of AI is by society. This is consistent with past research that found that perceived norms influence Rightists’ behavior more than Leftists’ (Cavazza and Mucchi-Faina, 2008; Kaikati et al., 2017).

Leftists’ perceptions of AI are interesting since, on the one hand, Leftists seem to be more open to societal changes and innovations (Carney et al., 2008; Hibbing et al., 2014), yet, on the other hand, AI is an innovation that uses existing societal knowledge to make new decisions and thus may reinforce existing societal structures and discriminatory processes that are inconsistent with liberal values (Howard and Borenstein, 2018; Mehrabi et al., 2021). For instance, due to ad optimization algorithms, women are exposed to fewer advertisements promoting jobs in Science, Technology, Engineering, and Math (STEM) fields (Lambrecht and Tucker, 2019). Also, AI used by courts in the United States was found to falsely evaluate African American offenders’ risk of committing another crime as higher than White offenders’ (Mehrabi et al., 2021). Our findings indicate that while Leftists were more supportive of the use of AI in making decisions, the concerns that underlie their support were associated less with perceptions of fairness and more with the practicality of the matter, i.e., usefulness and political efficacy (which refers to whether they felt they could change governance in Israel in another way).

The current study adds to the body of knowledge regarding how Leftists and Rightists respond to new technological situations, thus adding to our understanding of the issues that underlie people’s support for new technologies and the differences between Leftists and Rightists. This examination focused on the increasing power we give AI mechanisms over our lives, an issue that was irrelevant until very recently but now looms over us as AI mechanisms are integrated into more and more areas of our lives. These findings are important as they expose possible differences in the issues that affect support for the use of AI within government decision-making among people with different political ideologies.

Limitations and future directions

The current study examined different responses based on political orientation during a politically turbulent time in Israel’s history. This led to some interesting findings, yet future studies should examine these issues in other countries and different political situations to disentangle the effects of political orientation from the effects of one’s perception of the government. We examined people’s responses to the use of AI in governance but did not delve into the various branches of government AI could be used in or the different roles it may have. Future research should investigate support for AI use in specific governance functions, thus allowing for more nuanced insights, as different applications of AI might carry different meanings. We compared the responses of Rightists to those of Center-leftists due to the low percentage of Leftists in the current population of Israel. Future studies should examine support for using AI in governance in populations that allow for a representative yet balanced sample of Rightists and Leftists.

We found that perceptions of legitimacy underlie both Rightists’ and Center-Leftists’ support for using AI in governance. However, we did not delve into what that legitimacy might entail, as Rightists and Leftists may stress different aspects of legitimacy. For instance, when referring to fairness, do Rightists and Leftists have in mind interactional fairness, distributive fairness, or informational fairness? When referring to an appropriate and satisfactory process, do Rightists and Leftists refer to the same things? Future studies should more thoroughly examine the mechanisms that lead to one’s choice regarding giving AI mechanisms great power. Future studies should also examine additional spheres in which people may support using AI in making crucial decisions, as well as the issues that underlie people’s support for using AI in making decisions. Future studies may track changes in attitudes over time and examine these changes in light of political events and developments in AI technology. This research focused on people’s perceptions of AI and support for using it; another important avenue for future research is the actual utility and drawbacks of different uses of AI. Future research should identify policy areas where AI could be most beneficial, compare outcomes of AI-driven vs. human-driven policies, and examine strategies to ensure unbiased, democratic AI-driven policies. Finally, additional research is needed to address ethical implications and strategies for maintaining ethics and public trust in AI-driven governance.

Conclusion

The current research found that different issues underlie Leftists’ and Rightists’ support for using AI in governance. However, beyond the contribution to the understanding of political ideology and support for innovations and the use of AI, this paper points to a broader societal aspect: individuals in dire situations may demonstrate varying levels of willingness to embrace new technologies, while it is not clear whether they fully comprehend or consider the potential consequences. This variability in receptivity underscores the need for thoughtful policy design. Whether the goal is to promote the adoption of emerging technologies or to implement regulatory measures, policymakers and inventors of new technologies must consider the diverse attitudes and circumstances of those affected. Recognizing these nuances will be essential in navigating the intricate landscape of AI integration into governance decision-making processes.

Data availability statement

The data and code used for the statistical analysis presented in this study can be accessed through the Open Science Framework at the following link: https://osf.io/sbdjc/?view_only=d2ddcf4f920b41209e1b45f4a99d3b4a.

Ethics statement

The studies involving humans were approved by the Adelson School of Entrepreneurship at Reichman University IRB. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TG: Data curation, Formal analysis, Methodology, Project administration, Visualization, Writing – original draft. BH: Conceptualization, Methodology, Writing – review & editing. YM: Conceptualization, Methodology, Project administration, Resources, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frai.2024.1447171/full#supplementary-material

Footnotes

1. ^The survey was conducted during Biden’s presidency.

2. ^This government included the traditionally moderate right Likud party, ultra-Orthodox parties (i.e., Shas and Yahadut Hatora), and the Religious Zionism alliance of Right-wing parties. Prior to 2022, the Religious Zionism alliance was seen by both moderate Leftists and moderate Rightists as too extreme to be included in the government.

3. ^This survey was conducted before the 2023 October 7th attack on Israel and the war that followed.

4. ^Approval number 023 (dated April 2, 2023).

5. ^For additional demographic information about the sample, see Appendix A.

6. ^In this sample, we intentionally overrepresented Leftists, who comprise approximately 11% of the population (Anabi, 2022), to ensure a sufficient number for analysis. However, even with the oversampling of Leftists, the percentages of Rightists and Leftists were not balanced. Therefore, we combined the Leftists and the Centrists to create a Center-Leftist group, which we compared to the Rightist group.

7. ^Checkmarket sample size calculator, retrieved from: https://www.checkmarket.com/sample-size-calculator/#sample-size-calculator.

8. ^Participants responded to this measure twice, and their response consistency was examined. We found no significant difference between the responses given at the two time points, t (702) = 0.59, p = 0.559, and the two sets of responses were highly correlated, r = 0.85, p < 0.001.

9. ^An additional item (What percentage of Israelis do you think believe that using artificial intelligence as a tool for policy decision-making should be avoided altogether?) was removed as it reduced Cronbach’s Alpha reliability.

10. ^An additional item (The thought of using AI-based decision-making mechanisms in state management is unpleasant) was removed as it reduced Cronbach’s Alpha reliability.

References

Alser, M., and Waisberg, E. (2023). Concerns with the usage of ChatGPT in academia and medicine: a viewpoint. Am. J. Med. 9:100036. doi: 10.1016/j.ajmo.2023.100036

Anabi, O. (2022). Jewish Israeli voters moving right - analysis. The Israel Democracy Institute. Available online: https://en.idi.org.il/articles/45854 (Accessed on June 5, 2024).

Aung, Y. Y. M., Wong, D. C. S., and Ting, D. S. W. (2021). The promise of artificial intelligence: a review of the opportunities and challenges of artificial intelligence in healthcare. Br. Med. Bull. 139, 4–15. doi: 10.1093/bmb/ldab016

Baidoo-Anu, D., and Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 7, 52–62. doi: 10.61969/jai.1337500

Bartneck, C., Lütge, C., Wagner, A., and Welsh, S. (2021). Privacy issues of AI. An introduction to ethics in robotics and AI, SpringerBriefs in Ethics. Cham: Springer. 61–70.

Bigman, Y. E., Yam, K. C., Marciano, D., Reynolds, S. J., and Gray, K. (2021). Threat of racial and economic inequality increases preference for algorithm decision-making. Comput. Hum. Behav. 122:106859. doi: 10.1016/j.chb.2021.106859

Böhm, R., Jörling, M., Reiter, L., and Fuchs, C. (2023). People devalue generative AI’s competence but not its advice in addressing societal and personal challenges. Communications Psychol. 1:32. doi: 10.1038/s44271-023-00032-x

Buolamwini, J., and Gebru, T. (2018). “Gender shades: intersectional accuracy disparities in commercial gender classification.” In Proceedings of machine learning research, 81:77–91. New York, NY: USA.

Caliskan, A., Bryson, J. J., and Narayanan, A. (2017). Semantics derived automatically from language corpora contain human-like biases. Science 356, 183–186. doi: 10.1126/science.aal4230

Carney, D. R., Jost, J. T., Gosling, S. D., and Potter, J. (2008). The secret lives of liberals and conservatives: personality profiles, interaction styles, and the things they leave behind. Polit. Psychol. 29, 807–840. doi: 10.1111/j.1467-9221.2008.00668.x

Castelo, N. (2023). Perceived corruption reduces algorithm aversion. J. Consum. Psychol. 34, 326–333. doi: 10.1002/jcpy.1373

Cavazza, N., and Mucchi-Faina, A. (2008). Me, us, or them: who is more conformist? Perception of conformity and political orientation. J. Soc. Psychol. 148, 335–346. doi: 10.3200/SOCP.148.3.335-346

Chiusi, F., Fischer, S., Kayser-Bril, N., and Spielkamp, M. (2020). Automating society report 2020. US: Algorithm Watch, Bertelsmann Foundation.

Chouldechova, A. (2017). Fair prediction with disparate impact: a study of bias in recidivism prediction instruments. Big Data 5, 153–163. doi: 10.1089/big.2016.0047

Claudy, M. C., Parkinson, M., and Aquino, K. (2024). Why should innovators care about morality? Political ideology, moral foundations, and the acceptance of technological innovations. Technol. Forecast. Soc. Chang. 203:123384. doi: 10.1016/j.techfore.2024.123384

Coglianese, C., and Ben Dor, L. M. (2020). AI in adjudication and administration. Brooklyn Law Rev. 86, 791–838. doi: 10.2139/ssrn.3501067

Eubanks, V. (2017). Automating inequality: How high-tech tools profile, police, and punish the poor. first Edn. New York, NY: St. Martin's Press.

Faul, F., Erdfelder, E., Buchner, A., and Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Faul, F., Erdfelder, E., Lang, A. G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Garg, R. K., Urs, V. L., Agrawal, A. A., Chaudhary, S. K., Paliwal, V., and Kar, S. K. (2023). Exploring the role of chat GPT in patient care (diagnosis and treatment) and medical research: a systematic review. Health Promotion Perspectives, 13, 183.

Gidron, N. (2023). Why Israeli democracy is in crisis. J. Democr. 34, 33–45. doi: 10.1353/jod.2023.a900431

Gidron, N., Adams, J., and Horne, W. (2019). Toward a comparative research agenda on affective polarization in mass publics. APSA Comparative Politics Newsletter 29, 30–36.

Golding, L. P., and Nicola, G. N. (2019). A business case for artificial intelligence tools: the currency of improved quality and reduced cost. J. Am. Coll. Radiol. 16, 1357–1361. doi: 10.1016/j.jacr.2019.05.004

Greven, T. (2016). The rise of right-wing populism in Europe and the United States. A Comparative Perspective. Washington DC Office: Friedrich Ebert Foundation, 1–8.

Haidt, J., and Graham, J. (2007). When morality opposes justice: conservatives have moral intuitions that liberals may not recognize. Soc. Justice Res 20, 98–116. doi: 10.1007/s11211-007-0034-z

Hermann, T., and Anabi, A. (2023a). A majority of Israelis think that Israel is currently in a state of emergency. Israel: Israeli Voice Index, The Israel Democracy Institute.

Hermann, T., and Anabi, A. (2023b). National Mood Unrestful. Israel: Israeli voice index, The Israel Democracy Institute.

Hibbing, J. R., Smith, K. B., and Alford, J. R. (2014). Differences in negativity bias underlie variations in political ideology. Behav. Brain Sci. 37, 297–307. doi: 10.1017/S0140525X13001192

Hitlin, P., and Shutava, N. (2022). Trust in government. Partnership for Public Service and Freedman Consulting. Retrieved from: https://ourpublicservice.org/wp-content/uploads/2022/03/Trust-in-Government.pdf (Accessed June 5, 2024).

Howard, A., and Borenstein, J. (2018). The ugly truth about ourselves and our robot creations: the problem of bias and social inequity. Sci. Eng. Ethics 24, 1521–1536. doi: 10.1007/s11948-017-9975-2

Israel Central Bureau of Statistics (2022). Population of Israel on the eve of 2023, retrieved from: https://www.cbs.gov.il/en/mediarelease/Pages/2022/Population-of-Israel-on-the-Eve-of-2023.aspx (Accessed June 5, 2024).

Israel Innovation Authority. (2018). AI, Meuhedet health fund (HMO), and Soroka university medical center, Innovation Report 2018. Retrieved from: https://innovationisrael.org.il/en/article/ai-meuhedet-health-fund-hmo-and-soroka-university-medical-center (Accessed June 5, 2024).

Jost, J. T., Glaser, J., Kruglanski, A. W., and Sulloway, F. J. (2003a). Political conservatism as motivated social cognition. Psychol. Bull. 129, 339–375. doi: 10.1037/0033-2909.129.3.339

Jost, J. T., Glaser, J., Kruglanski, A. W., and Sulloway, F. J. (2003b). Exceptions that prove the rule—using a theory of motivated social cognition to account for ideological incongruities and political anomalies: reply to Greenberg and Jonas (2003). Psychol. Bull. 129, 383–393. doi: 10.1037/0033-2909.129.3.383

Jost, J. T., Napier, J. L., Thorisdottir, H., Gosling, S. D., Palfai, T. P., and Ostafin, B. (2007). Are needs to manage uncertainty and threat associated with political conservatism or ideological extremity? Personal. Soc. Psychol. Bull. 33, 989–1007. doi: 10.1177/0146167207301028

Kaikati, A. M., Torelli, C. J., Winterich, K. P., and Rodas, M. A. (2017). Conforming conservatives: how salient social identities can increase donations. J. Consum. Psychol. 27, 422–434. doi: 10.1016/j.jcps.2017.06.001

Kaplan, A. D., Kessler, T. T., Brill, J. C., and Hancock, P. A. (2023). Trust in artificial intelligence: Meta-analytic findings. Hum. Factors 65, 337–359. doi: 10.1177/00187208211013988

Kluegel, J. R. (1990). Trend in whites’ explanations of the black-white gap in socioeconomic status, 1977-1989. Am. Sociol. Rev. 55, 512–525. doi: 10.2307/2095804

Kuziemski, M., and Misuraca, G. (2020). AI governance in the public sector: three tales from the frontiers of automated decision-making in democratic settings. Telecommun. Policy 44:101976. doi: 10.1016/j.telpol.2020.101976

Lam, S. Y., Chiang, J., and Parasuraman, A. (2008). The effects of the dimensions of technology readiness on technology acceptance: an empirical analysis. J. Interact. Mark. 22, 19–39. doi: 10.1002/dir.20119

Lambrecht, A., and Tucker, C. (2019). Algorithmic bias? An empirical study of apparent gender-based discrimination in the display of STEM career ads. Manag. Sci. 65, 2966–2981. doi: 10.1287/mnsc.2018.3093

Lee, M. K. (2018). Understanding perception of algorithmic decisions: fairness, trust, and emotion in response to algorithmic management. Big Data Soc. 5:2053951718756684. doi: 10.1177/2053951718756684

Lee, M. K., and Rich, K. (2021). “Who is included in human perceptions of AI?: trust and perceived fairness around healthcare AI and cultural mistrust.” In Proceedings of the 2021 CHI conference on human factors in computing systems (pp. 1–14).

Lockey, S., Gillespie, N., Holm, D., and Someh, I. A. (2021). “A review of trust in artificial intelligence: challenges, vulnerabilities and future directions.” Proceedings of the 54th Hawaii International Conference on System Sciences.

Maaravi, Y., and Heller, B. (2021). Digital innovation in times of crisis: how mashups improve quality of education. Sustain. For. 13:7082. doi: 10.3390/su13137082

Madhavan, P., and Wiegmann, D. A. (2007). Effects of information source, pedigree, and reliability on operator interaction with decision support systems. Hum. Factors 49, 773–785. doi: 10.1518/001872007X230154

Makridakis, S. (2017). The forthcoming artificial intelligence (AI) revolution: its impact on society and firms. Futures 90, 46–60. doi: 10.1016/j.futures.2017.03.006

Mantello, P., Ho, M. T., Nguyen, M. H., and Vuong, Q. H. (2023). Bosses without a heart: socio-demographic and cross-cultural determinants of attitude toward emotional AI in the workplace. AI & Soc. 38, 97–119. doi: 10.1007/s00146-021-01290-1

Mariani, M. M., and Wamba, S. F. (2020). Exploring how consumer goods companies innovate in the digital age: the role of big data analytics companies. J. Bus. Res. 121, 338–352. doi: 10.1016/j.jbusres.2020.09.012

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., and Galstyan, A. (2021). A survey on bias and fairness in machine learning. ACM computing surveys (CSUR) 54, 1–35. doi: 10.1145/3457607

Nzobonimpa, S., and Savard, J. F. (2023). Ready but irresponsible? Analysis of the government artificial intelligence readiness index. Policy Internet, 15, 397–414. doi: 10.1002/poi3.351

Rodrik, D. (2021). Why does globalization fuel populism? Economics, culture, and the rise of right-wing populism. Annual Rev. Econ. 13, 133–170. doi: 10.1146/annurev-economics-070220-032416

Schiff, K. J., Schiff, D. S., Adams, I. T., McCrain, J., and Mourtgos, S. M. (2023). Institutional factors driving citizen perceptions of AI in government: evidence from a survey experiment on policing. Public Adm. Rev. doi: 10.1111/puar.13754

Schiff, D. S., Schiff, K. J., and Pierson, P. (2022). Assessing public value failure in government adoption of artificial intelligence. Public Adm. 100, 653–673. doi: 10.1111/padm.12742

Sharma, S., Gamoura, S., Prasad, D., and Aneja, A. (2021). Emerging legal informatics towards legal innovation: current status and future challenges and opportunities. Leg. Inf. Manag. 21, 218–235. doi: 10.1017/S1472669621000384

Sharma, S., Islam, N., Singh, G., and Dhir, A. (2022). Why do retail customers adopt artificial intelligence (Ai) based autonomous decision-making systems? IEEE Trans. Eng. Manag. 71, 1846–1861. doi: 10.1109/TEM.2022.3157976

Shoja, M. M., Van de Ridder, J. M., and Rajput, V. (2023). The emerging role of generative artificial intelligence in medical education, research, and practice. Cureus 15:e40883. doi: 10.7759/cureus.40883

Skitka, L. J., and Tetlock, P. E. (1993). Providing public assistance: cognitive and motivational processes underlying liberal and conservative policy preferences. J. Pers. Soc. Psychol. 65, 1205–1223. doi: 10.1037/0022-3514.65.6.1205

Sousa, W. G., Melo, E. R. P., Bermejo, P. H. D. S., Farias, R. A. S., and Gomes, A. O. (2019). How and where is artificial intelligence in the public sector going? A literature review and research agenda. Gov. Inf. Q. 36:101392. doi: 10.1016/j.giq.2019.07.004

Stai, B., Heller, N., McSweeney, S., Rickman, J., Blake, P., Vasdev, R., et al. (2020). Public perceptions of artificial intelligence and robotics in medicine. J. Endourol. 34, 1041–1048. doi: 10.1089/end.2020.0137

Starke, C., and Lünich, M. (2020). Artificial intelligence for political decision-making in the European Union: effects on citizens’ perceptions of input, throughput, and output legitimacy. Data & Policy 2:e16. doi: 10.1017/dap.2020.19

Stewart, B. D., and Morris, D. S. (2021). Moving morality beyond the in-group: liberals and conservatives show differences on group-framed moral foundations and these differences mediate the relationships to perceived bias and threat. Front. Psychol. 12:579908. doi: 10.3389/fpsyg.2021.579908

Tal, A., Elyoseph, Z., Haber, Y., Angert, T., Gur, T., Simon, T., et al. (2023). The artificial third: utilizing ChatGPT in mental health. Am. J. Bioeth. 23, 74–77. doi: 10.1080/15265161.2023.2250297

Van Hiel, A., Mervielde, I., and De Fruyt, F. (2004). The relationship between maladaptive personality and right wing ideology. Personal. Individ. Differ. 36, 405–417. doi: 10.1016/S0191-8869(03)00105-3

van Noordt, C., and Misuraca, G. (2022). Artificial intelligence for the public sector: results of landscaping the use of AI in government across the European Union. Gov. Inf. Q. 39:101714. doi: 10.1016/j.giq.2022.101714

Yu, K. H., Beam, A. L., and Kohane, I. S. (2018). Artificial intelligence in healthcare. Nature Biomedical Eng. 2, 719–731. doi: 10.1038/s41551-018-0305-z

Yudkowsky, E. (2008). Artificial intelligence as a positive and negative factor in global risk. Global catastrophic risks 1:184. doi: 10.1093/oso/9780198570509.003.0021

Keywords: artificial intelligence (AI), political ideology, artificial intelligence in governance, technology acceptance, governance

Citation: Gur T, Hameiri B and Maaravi Y (2024) Political ideology shapes support for the use of AI in policy-making. Front. Artif. Intell. 7:1447171. doi: 10.3389/frai.2024.1447171

Edited by:

Ritu Shandilya, Mount Mercy University, United States

Reviewed by:

Bertrand Kian Hassani, University College London, United Kingdom

Sugam Sharma, Iowa State University SUF/ eLegalls LLC, United States

Carrie S. Alexander, University of California, Davis, United States

Copyright © 2024 Gur, Hameiri and Maaravi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tamar Gur, tamar.gur-arie@mail.huji.ac.il
