ORIGINAL RESEARCH article

Front. Artif. Intell., 01 December 2023

Sec. AI for Human Learning and Behavior Change

Volume 6 - 2023 | https://doi.org/10.3389/frai.2023.1198180

This article is part of the Research Topic: Artificial Intelligence Education & Governance - Preparing Human Intelligence for AI-Driven Performance Augmentation

Yana Samuel1, Margaret Brennan-Tonetta2, Jim Samuel2*, Rajiv Kashyap3, Vivek Kumar4, Sri Krishna Kaashyap2, Nishitha Chidipothu2, Irawati Anand2, Parth Jain2

Artificial Intelligence (AI) has become ubiquitous in human society, and yet vast segments of the global population have no, little, or counterproductive information about AI. It is necessary to teach AI topics on a mass scale. While there is a rush to implement academic initiatives, scant attention has been paid to the unique challenges of teaching AI curricula to a global and culturally diverse audience with varying expectations of privacy, technological autonomy, risk preference, and knowledge sharing. Our study fills this void by focusing on AI elements in a new framework titled Culturally Adaptive Thinking in Education for AI (CATE-AI) to enable teaching AI concepts to culturally diverse learners. Failure to contextualize and sensitize AI education to culture and other categorical human-thought clusters can lead to several undesirable effects, including confusion, AI-phobia, cultural biases toward AI, and increased resistance toward AI technologies and AI education. We discuss and integrate human behavior theories, AI applications research, educational frameworks, and human-centered AI principles to articulate CATE-AI. In the first part of this paper, we present the development of a significantly enhanced version of CATE. In the second part, we explore textual data from AI-related news articles to generate insights that lay the foundation for CATE-AI and support our findings. The CATE-AI framework can help learners study artificial intelligence topics more effectively by serving as a basis for adapting and contextualizing AI to their sociocultural needs.

“It is crucial to make learning authentic and contextualize it in the lives and cultures of students so that it becomes meaningful for them. Especially with the task of teaching AI and ethics, … contextualization of the materials and topics used in curriculum help them make sense…”

Interest in artificial intelligence (AI) has peaked since November 2022, when OpenAI released ChatGPT. AI has escaped the confines of expert discussions, labs, devices, and technology applications to emerge as a driver of mainstream societal progress in various domains such as education, healthcare and finance (King, 2022; Dowling and Lucey, 2023; Rudolph et al., 2023). AI technologies have rapidly invaded news media and public conversations; our analysis explored over forty thousand articles and posts (Figure 1), covering media mentions of AI, natural language processing (NLP) and large language models (LLMs) since 2022. Despite a widespread lack of knowledge about AI, the appeal of generative AI has significantly increased public confidence in artificially intelligent capabilities. In the absence of rapid intervention and public education, this might lead to overconfidence and an over-reliance on AI tools like ChatGPT. The absence of sufficient sound conceptual and theoretical discussions on AI, human interaction with AI and AI education presents a compelling need to explore relevant conceptual frameworks.

AI and its subfield natural language processing (NLP) have become increasingly prevalent in every aspect of our lives, with public engagement intensifying since the advent of OpenAI's Generative Pretrained Transformer (GPT) based ChatGPT application (Wei et al., 2022). It is important to note that ChatGPT, a language model developed by OpenAI that uses machine learning to generate human-like responses to text prompts, functions largely as an opaque black box. Given that “every human will interact with AI in the visible future, directly or indirectly, some for creating products and services, some for research, some for government, some for education, and many for consumption,” it is vital to understand AI education (Samuel et al., 2022a). Consequently, we have witnessed an increase in AI curricula, courses, programs, and academic efforts (Touretzky et al., 2019; Chiu et al., 2021; Samuel, 2021b). It is imperative to evolve AI education, as every individual will inevitably engage with AI in the foreseeable future.

AI-powered applications released within the past year such as BigScience Large Open-science Open-access Multilingual (BLOOM), Large Language Model Meta AI (LLaMA), Pathways Language Model (PaLM) and OpenAI (Google, 2023; HuggingFace, 2023; Meta, 2023; Open AI, 2023) represent the next wave of innovations that will help reimagine and reshape the future of the human race. These foundation models have been accompanied by pathbreaking AI research, which portends greater productivity per worker and enormous socioeconomic impacts. We assert that these advancements only serve to underscore the critical need to address the challenges of AI education.

Widespread ignorance about AI technologies and their global ramifications has rendered society unprepared for the impending AI wave. AI education must be re-envisioned so that future generations can deploy AI technologies responsibly and comprehend their potential consequences. AI education that emanates from the global North often encounters resistance due to fear of unintended consequences. However, to protect against the prospect of AI supremacy over humans (Samuel, 2022), we must step up efforts to spread AI education across the world.

Since AI education does not occur in a vacuum, each person's cultural background determines her or his ability to absorb AI instruction. AI education must be globally facilitated to ensure inclusiveness and equity, and locally contextualized to assure sensitivity to the needs of people of all ages, genders, races and cultures.

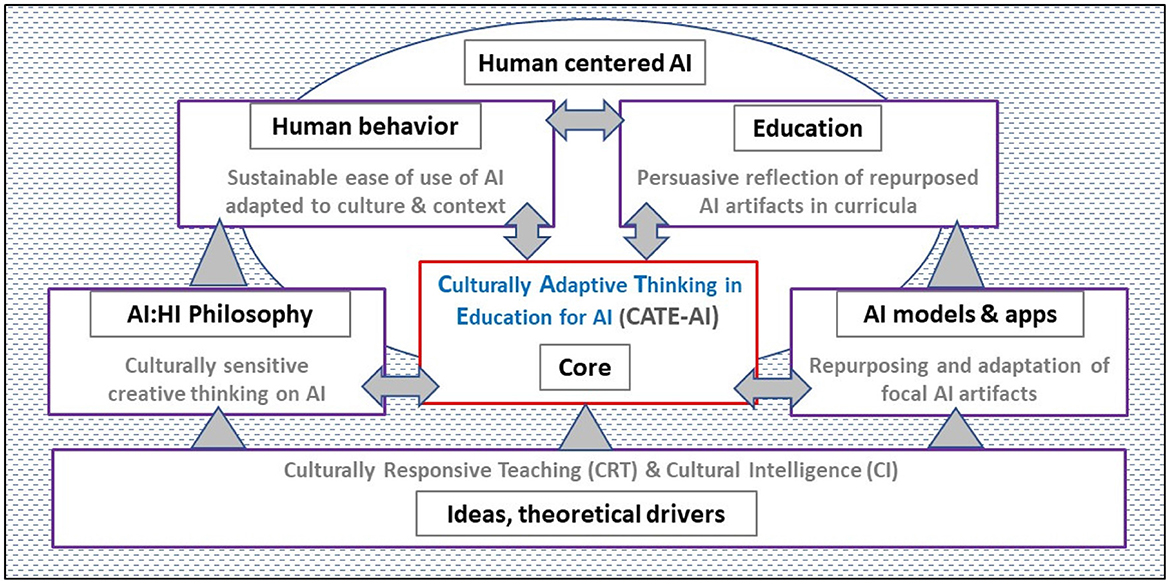

AI innovations, AI education, and AI technologies are interrelated and interdependent. Our research unifies the most significant themes to provide a framework that we refer to as Culturally Adaptive Thinking in Education for AI (CATE-AI), as presented in Figure 2. CATE-AI provides a lens to focus AI education through cultural adaptivity, which embodies sensitivity to gender, ethnic, and age-based needs. Our exploratory analysis precludes hypothesis development and empirical tests. Instead, we employ inductive reasoning based on previous research, current and emerging technology trends, and cases drawn from news media.

Figure 2. Culturally adaptive thinking in education for artificial intelligence (CATE-AI) framework.

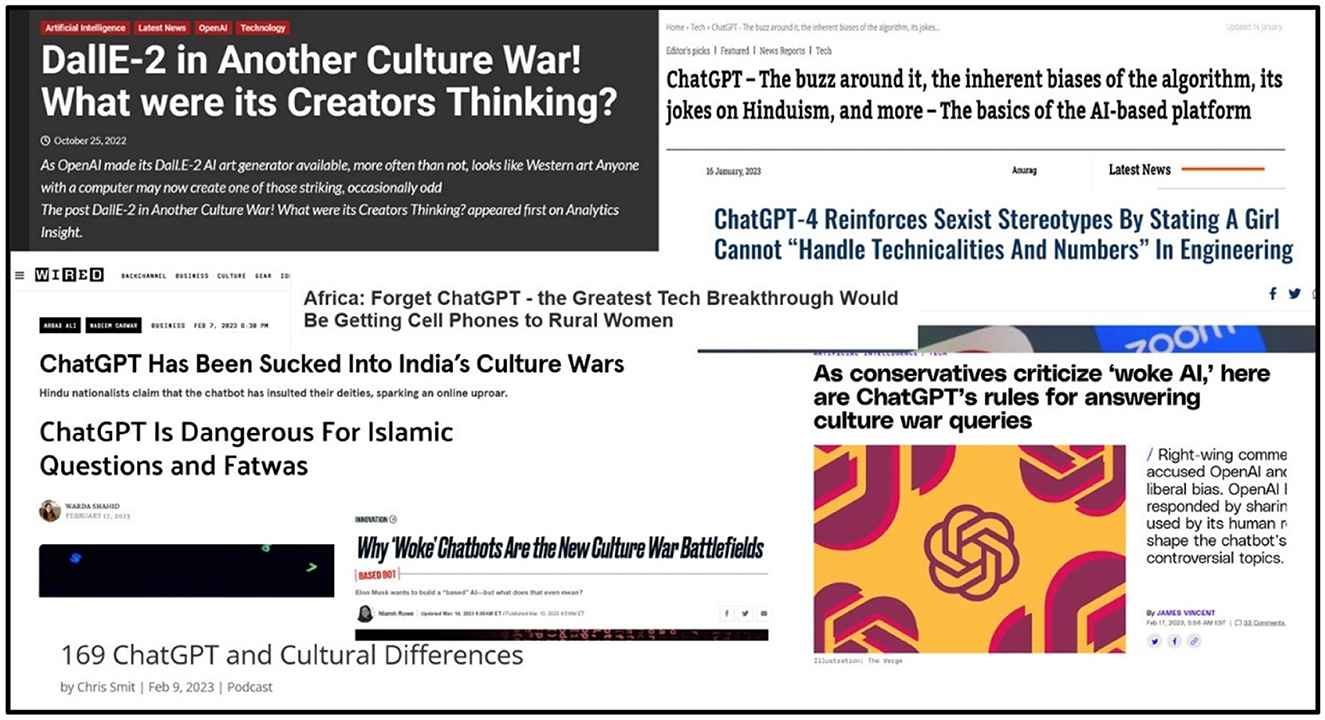

Our literature review elaborates and ties together the relevant threads of research on AI, NLP, AI education, culturally responsive teaching, and cultural intelligence. This allows us to identify the unique needs of AI education and its challenges. Thereafter, we introduce our data, which consists of news headlines and illustrative examples of cultural bias in recent AI applications (Figure 3). The findings from our exploratory NLP analysis demonstrate the need for increased cultural adaptivity. Next, we introduce and develop arguments for the CATE-AI framework (Figure 2), which is followed by a discussion of the limitations of the present study and ideas for future research. We make recommendations to enable the adoption of CATE-AI and conclude with a discussion of future research opportunities in AI education.

Figure 3. Need for adaptation of AI Tools and AI education evidenced globally. Sources for articles displayed in the figure, all accessed in March 2023: (1) https://www.equalitynow.org/news_and_insights/chatgpt-4-reinforces-sexist-stereotypes/; (2) https://allafrica.com/stories/202303070096.html; (3) https://www.opindia.com/2023/01/chatgpt-artifical-intelligence-chatbot-biased-here-is-how/; (4) https://www.thedailybeast.com/why-woke-chatbots-are-the-new-culture-war-battlefields; (5) https://www.theverge.com/2023/2/17/23603906/openai-chatgpt-woke-criticism-culture-war-rules; (6) https://theislamicinformation.com/news/chatgpt-dangerous-for-islamic-questions-fatwas/; (7) https://culturematters.com/chatgpt-and-cultural-differences/; (8) https://www.analyticsinsight.net/dalle-2-in-another-culture-war-what-were-its-creators-thinking/; (9) https://www.wired.com/story/chatgpt-has-been-sucked-into-indias-culture-wars/.

We review extant literature and use inductive reasoning to conceptualize the CATE-AI framework. The CATE-AI framework is built upon relevant research, AI and technology trends, and textual data from news media. We lay the foundation for the CATE-AI framework by drawing theories and concepts from the literature review. These include culturally responsive teaching and cultural intelligence, which help distinguish between AI education and educational AI. The adoption of a robust philosophy to understand the dynamics of interactions between humans and AI is a key tenet of CATE-AI. It serves as the crucible for melding the conceptual blocks that are the essence of CATE-AI.

Artificial intelligence in education is an ambiguous phrase that may refer either to AI curricula in education or to the use of AI technologies in education. We refer to the former as AI Education and the latter as Educational AI. In this paper, our primary focus is on AI Education, and we clarify the difference here. AI Education is the study of artificial intelligence, in the same way as mathematics education involves the study of mathematics. This means teaching the development of computer systems to perform tasks that typically require human intelligence, such as learning, problem-solving, perception, reasoning, and natural language processing. AI Education can be defined as the endeavors and objectives that are intrinsic to instructing and acquiring knowledge about artificial intelligence (Wollowski et al., 2016; Chen et al., 2020; Su et al., 2022; Ng et al., 2023).

In contrast, Educational AI denotes the utilization of artificial intelligence to enhance teaching and learning processes. This encompasses intelligent tutoring systems (Vanlehn, 2011; D'mello and Graesser, 2013), adaptive learning platforms (Muñoz et al., 2022), tools grounded in Natural Language Processing (NLP) (Chen et al., 2022; Shaik et al., 2022), chatbot applications (Paschoal et al., 2018), and learning analytics (Leitner et al., 2017).

Our research on AI Education is distinct from previous research on the use of AI technologies in education (i.e., Educational AI) (Chassignol et al., 2018; Tuomi, 2018). This paper focuses on contextualizing AI Education through cultural insights to facilitate access and adoption of Educational AI through fair and equitable processes.

Understanding the impacts of diverse cultures on human behavior is of vital importance due to the growing emphasis on the social dimensions of human interactions in the context of virtual agents (Mascarenhas et al., 2016). Understanding AI capabilities and the dynamics of interactions between AIs and human intelligences is critical if we are to establish a foundation for Human-centered AI. AI capabilities are based on emulating human intelligence functions, particularly cognitive and logical processes (Samuel, 2021a). AI capabilities have allowed us to reshape conventions for interactions with machines, while disrupting diverse industries such as healthcare, manufacturing, music, media, and research. The ramifications are evident: AI's disruptive influence and reconfiguration of established social norms are propelling the advent of a fourth industrial revolution. Our interactions with machines have rapidly evolved due to the capacity of AI to replicate human decision-making. AI is poised to exert a substantial influence on our day-to-day lives as it will affect convenience, efficiency, personalization, privacy protection, and security (Feijóo et al., 2020). These changes are already underway, fundamentally reshaping our attitudes and dynamics of conduct.

The advancement of AI-driven technology marks the latest phase in our ongoing endeavor to automate human-performed tasks (Tai, 2020). While AI can be viewed as a continuation of innovations in automation, it stands apart from prior non-AI-driven technological progress. A fundamental distinction lies in how past scientific and industrial revolutions replaced human physical labor, whereas the current AI-led transformation has the potential to replace human intellectual capacities (Samuel et al., 2022a). The potential of AI to substitute human intelligence has also prompted experts to differentiate between “weak AI,” focusing on specialized tasks, and “strong AI,” which emulates human cognitive functions. For example, while “weak AI” may excel in specific tasks like chess or equation solving, “strong AI” would surpass humans across a wide spectrum of cognitive activities (Lu et al., 2018).

Despite AI's swift progress and far-reaching societal effects, the global population remains largely uninformed about AI capabilities and impacts on lives and livelihoods. This lack of awareness may be attributed to efforts to mystify AI to circumvent the need to explain complex technologies and create a sense of awe about AI capabilities (Campolo and Crawford, 2020). As a result, societies are ill-prepared for the impending AI revolution (Samuel, 2022). This trend of mystifying AI to the non-expert audience must be replaced with proactive education and training (Gleason, 2018), where AI is centered around humans and human wellbeing in the future.

Therefore, we define human-centered AI (HAI) as consisting of the principles, practice and information management of AI development and deployment with the foremost goal of ensuring human wellbeing, progress, safety and satisfaction. Fortunately, a shift in focus from a technology-centric approach to one that prioritizes people (Bingley et al., 2023) suggests that HAI is evolving in the right direction. HAI will be critical to ensure sustainable human engagement with AI (Shneiderman, 2020).

Hype about AI capabilities is matched by fears about its influence, effects, and unintended consequences. We discuss a few examples as we make a case for AI Education below.

First, there is considerable uncertainty about the cumulative systemic risks posed by AI, especially since it is rapidly changing the ways in which financial institutions and markets operate and are regulated. An increased use of AI technologies has raised the specter of unstable markets due to an overreliance on algorithmic trading and potential failures of regulatory policy. In addition, this has given rise to fears of systemic instabilities and mass unemployment if AI replaces humans in future organizational workforces (Daníelsson et al., 2022).

Second, there is a widespread fear that AI can be leveraged to invade privacy and foster a culture of surveillance. Researchers fear an assault on individual privacy and rights as AI enabled surveillance grows at an alarming pace (Bartneck et al., 2021). In addition, unintended consequences may arise from the sale and use of private data by companies for non-intended purposes.

Third, there is a fear that algorithmic biases can further reduce access and opportunities for disadvantaged populations and create unintended consequences. Algorithmic biases such as dataset bias, association bias, automation bias, interaction bias, and confirmation bias amplify social biases (Chou et al., 2017; Lloyd, 2018). Software and technological implementations may contain implicit biases from developers or from the development ecosystem (Baker and Hawn, 2021). For instance, natural language processing (NLP) applications are known to amplify gender bias, and Automated Speech Recognition (ASR) technologies have been found to display racial bias (Mengesha et al., 2021). Algorithmic bias can lead to multiple deliberate and unintended consequences such as discrimination, biased outcomes, and a lack of transparency and knowledge about how AI is involved in decision outcomes (Mikalef et al., 2022).

To assuage these fears and counter these challenges, we must develop new multidisciplinary and interdisciplinary frameworks (Touretzky et al., 2019; Chiu et al., 2021) to devise strategies to counter AI-induced risks and challenges and impart AI education globally.

However, AI education poses several unique challenges. Teaching AI topics across diverse cultural contexts can be daunting because the ability and willingness to learn often vary across cultures. Research has shown that cultural ecosystems, which impose their own systemic needs, play an important role in influencing their members' willingness to adopt new technology. Cultural differences between nations and people have an impact on how technology is adopted, as some cultures are less open to new ideas than others (Tubadji et al., 2021). As a result, educators may be required to teach students who are resistant to learning AI because they either distrust or fear AI, or perceive AI technology to be counter to the norms of their cultures. Further, there is a growing perception that AI-related research is predominantly undertaken by the WEIRD (Western, Educated, Industrialized, Rich and Democratic) countries, displaying cultural imbalances in AI research (Henrich et al., 2010; Schulz et al., 2018; Mohammed and Watson, 2019). This suggests that AI research often overlooks the distinct challenges of implementing AI in cultures beyond the predominant ones found in WEIRD countries. Therefore, it is imperative to foster greater inclusivity in AI research, while prioritizing the urgent need for AI education that is sensitive to cultural context.

Culture encompasses elements such as language, beliefs, values, norms, behaviors, and material objects, which are transmitted from one generation to the next. Additionally, it is important to note that every individual worldwide belongs to at least one culture (Skiba and Ritter, 2011; Bal, 2018). Individuals are impacted by the values upheld within specific cultures, resulting in differing levels of receptiveness toward AI. Bal (2018) concisely expressed culture's substantial effect on human nature, arguing that culture pervades all aspects of human existence. This underscores the importance of culturally sensitive AI design to achieve optimal AI adoption and engagement.

Despite previous attempts at technology-based solutions for cultural and learning style mapping, there are currently few effective AI applications that can guide the selection of learning models tailored to specific learning contexts (Bajaj and Sharma, 2018). For instance, in the field of human computer interaction, experts agree that a User Interface (UI) design that meets the preferences, differences, and needs of a group of users can potentially increase the usability of a system (Alsswey and Al-Samarraie, 2021). However, the widespread adoption of exclusionary AI, such as Automated Speech Recognition (ASR) technologies that frequently exhibit racial bias, demonstrates the lack of progress in the development of intuitive and culturally-sensitive AI (Mengesha et al., 2021). Further, the underrepresentation of non-dominant communities in AI research exacerbates bias due to the imbalance between the volume of research conducted in WEIRD nations vs. non-dominant communities.

To develop more inclusive and intuitive AI technology, it is critical to impart quality AI education using personalized learning approaches (Chassignol et al., 2018). Culturally Responsive Teaching (CRT) is a personalized framework sensitive to the learner's cultural context.

The CRT framework utilizes the cultural knowledge, past experiences, reference points, and performance of students from diverse ethnic backgrounds to enhance the relevance and effectiveness of their learning experiences (Gay, 1993, 2002, 2013, 2021). CRT guides instruction by emphasizing and leveraging students' inherent strengths. It supports their behaviors, knowledge, beliefs, and values, while acknowledging the significance of racial and cultural diversity in the learning process. CRT's intuitive appeal results from its flexibility: it can manifest in various forms, each with distinct shapes and outcomes. This appeal empowers educators to embrace the framework and integrate its principles into teaching diverse groups of learners.

There are several advantages to leveraging Artificial Intelligence (AI) technologies in education. AI can be an effective tool for formulating personalized instructional systems. Such AI-supported systems can promote exploratory learning via dialogues, analyze student writing, simulate game-based environments with intelligent agents, and resolve issues with chatbots. AI can also facilitate student/tutor matching, putting students in control of their own learning (Holmes et al., 2020). This can be especially helpful for students who are at a disadvantage in learning due to teaching styles or lack of physical access to school. However, establishing digital infrastructure and providing access to digital learning platforms will be necessary for this to work.

There are two ways in which AI technologies can improve the quality of education. First, AI can support educators by improving efficiency in the performance of administrative tasks, such as reviewing student work, grading, and providing feedback on assignments through automation using web-based platforms or computer programs (Chen et al., 2020). This can enable educators to devote valuable time to focus on research and improve their teaching methodology and content. In the long-term, this can also help in improving the mental health of educators. Second, AI can support learners by customizing and personalizing curriculum and content in line with learners' needs, abilities, and capabilities (Mikropoulos and Natsis, 2011). By analyzing performance data, AI can identify gaps in knowledge and tailor instructional materials and resources to better suit individual learners. This can help students learn at their own pace and in a way that suits their learning style, leading to better academic performance and increased motivation to learn.

In today's globalized world, effective cross-cultural communication is becoming increasingly important. As a result, the concept of cultural intelligence is gaining considerable traction. Moreover, while previous emphasis has been on developing Intelligence Quotient (IQ) and Emotional Quotient (EQ), cultural intelligence is a newer concept that builds upon emotional intelligence (Van Dyne et al., 2010). Emotional intelligence (EI) involves the ability to carry out accurate reasoning about emotions and the ability to use emotions and emotional knowledge to enhance thought (Mayer et al., 2008). Cultural intelligence, or cultural quotient, takes this a step further by emphasizing the importance of understanding and adapting to different cultural contexts. As a result, there is a substantial push to impart cultural knowledge among those in leadership positions in order to improve productivity. However, it is important that cultural intelligence is not only emphasized in the corporate sector, but also in education, which is the foundation of society (Earley and Mosakowski, 2004).

To be successful in today's diverse and interconnected world, educators must also be able to effectively navigate and communicate with individuals from different cultures. This requires cultural intelligence, which refers to not only a basic understanding of different cultural norms and practices, but also the ability to adapt one's behavior and communication style to different cultural contexts. Cultural intelligence encompasses more than just knowledge about different cultures; it also includes the ability to build relationships with people from diverse backgrounds and effectively collaborate with them. Van Dyne et al. (2010) have developed a four-factor model of cultural intelligence that can be effective in developing cultural intelligence in the field of teaching in an AI-powered world. This model includes Motivational Cultural Quotient, which refers to a leader's interest, drive, and energy to adapt cross-culturally; Cognitive Cultural Quotient, which involves a leader's cognitive understanding of culture; Metacognitive Cultural Quotient, which involves their ability to strategize across cultures; and Behavioral Cultural Quotient, which provides the ability to engage in effective, flexible leadership across cultures.

In an AI-powered world, it is becoming increasingly crucial for educators to leverage cultural intelligence. As the world becomes more interconnected, educators need to be equipped with the ability to navigate diverse cultural contexts, communicate effectively with individuals from different backgrounds, and collaborate with them. It is important for educators to develop the necessary skills to succeed in this globalized landscape, adapting their teaching style and creating inclusive learning environments that embrace diversity and promote cross-cultural understanding.

A clear philosophical perspective is needed to frame the importance of AI and AI education. It is only with a well-integrated philosophical perspective that we can weave AI ethics, sociocultural priorities and sensitivity to humans into AI development and deployment. AI philosophy is critical for the sustainability of AI as a science. At the core of the philosophy of AI and human interaction is the research-driven belief that AI can augment human intelligence, if applied correctly, and enhance human performance to optimal levels using adaptive cognitive fit mechanisms (Samuel et al., 2022b).

Furthermore, as the development of virtual agents increasingly focuses on the social aspects of human interaction, it becomes crucial to address the notion of culture and its impact on human behavior (Mascarenhas et al., 2016). By doing so, greater equity in the field of AI can be achieved, leading to inclusive outcomes such as a more equitable representation of people from diverse contexts in AI, along with greater willingness to use AI technologies. It is important to distinguish between teaching AI as a subject and using AI as a tool to teach other subjects. AI education does not happen in a vacuum; rather, it takes place in diverse settings where learners from different cultural backgrounds are present. Cultural context plays a crucial role in shaping the ability and willingness of learners to engage with AI education.

Algorithms often embed designer or societal biases, resulting in discriminatory predictions or inferences (Baker and Hawn, 2021). Consequently, ensuring the representation of people from different cultures is crucial in developing AI technologies that can be used globally and across cultures. However, AI education itself is not inclusive for all cultures. Research has shown that cultural ecosystems, which impose their own systemic needs, play an important role in influencing their members' willingness to adopt new technology. Another important factor contributing to an individual's attitude toward AI technologies is gender (Horowitz and Kahn, 2021). “Because some cultures are less receptive to new ideas than others, cultural differences across countries and individuals influence the adoption of technology” (Tubadji et al., 2021).

There are several ways in which cultural differences manifest when it comes to learning about AI. Gender, which typically cuts across cultures, disadvantages people who do not identify as men. There is frequently a lack of gender diversity in the field of AI (Samuel et al., 2018). This is partially a result of the manner in which AI is taught in educational institutions. In schools, AI curriculum is typically offered as part of computer science and STEM subjects, which are science, technology, engineering, and mathematics. However, AI instruction has often been conducted after formal lessons and outside of regular classroom settings. As a result, the subject suffers from a lack of diversity, with most participants being high-achieving boys (Xia et al., 2022). This lack of diversity creates challenges related to inclusion and equity. Instances such as these underline the need for designing AI curricula which are sensitive to the needs of learners coming from different cultural contexts.

We combined the aforementioned theories and concepts with the goals of HAI to develop a framework that facilitates AI learning contextualized to social, cultural, individual and future workplace factors and needs: the Culturally Adaptive Thinking in Education for Artificial Intelligence (CATE-AI) framework (Figure 2). The following concepts are fundamental to the conceptualization, understanding and application of the CATE-AI framework: the foundational ideas and theoretical drives of CRT and cultural intelligence; the critical role of philosophy in framing the interaction between artificial and human intelligences for culturally sensitive creative thinking on AI; the importance of factoring in the multidimensional aspects of human behavior to facilitate sustainable ease of use of AI adapted to culture and context; the increasingly powerful role of AI models and AI applications in human society, and the need to repurpose and adapt focal AI artifacts to culture, context and geography; the cultivation of HAI through AI education which embodies persuasive reflections of repurposed and adapted AI artifacts in curricula; and the co-evolution of all of these with the emerging dynamics of the human-centered AI ecosystem. Information and perceptions about AI play a critical role in shaping public opinion about AI. We consider this influential force to be an uncontrollable part of the HAI ecosystem, one that is not necessarily aligned with the goals of HAI. The CATE-AI framework therefore posits five principles:

1. AI education needs to be developed with theoretical and philosophical foundations which specifically address human behavior, technological capabilities and human centered AI.

2. AI education needs to adapt to sociocultural contexts and demonstrate resilience against AI biases and limitations.

3. Culturally adaptive thinking is expected to lead to richer learning experiences and enhanced interest in the study and application of AI.

4. AI education must remain adaptive to changes in mutually influencing forces of AI technologies and human behavior.

5. The philosophical foundations of human intelligence and AI interaction are expected to guide ethical, human-values-based and human-centered AI development, deployment and education.

The CATE-AI framework can be applied to influence multiple levels of education and learning of AI. It can be used in developing curricula, designing learning management systems, evaluating course and program effectiveness, and measuring student satisfaction outcomes. The CATE-AI framework could also serve as a valuable guide for faculty and others involved in course delivery: the adaptive “thinking” in CATE-AI refers to a mindset that applies to those who teach and to those who learn.

In addition to theorization, we explored two sets of data qualitatively: the first is a set of outputs generated by ChatGPT, the OpenAI application based on GPT 3.5 Turbo, and the second consists of a collection of news headlines on AI from 2022 to 2023 (Figure 3). Our objectives were to explore the presence of bias and limitations in ChatGPT output, and to explore emerging and dominant societal themes on AI as reflected in news headlines and their influence on HAI initiatives. While our review of ChatGPT output yielded nothing new to report in this study, it confirmed numerous biases and limitations documented in prior work (Azaria, 2022; Borji, 2023). Furthermore, a review of news articles from around the world revealed that responses to AI, NLP, LLMs and applications such as ChatGPT varied significantly by region and culture. Apparent biases in ChatGPT output related to religions, political ideologies, gender and race were observed (Figure 3).

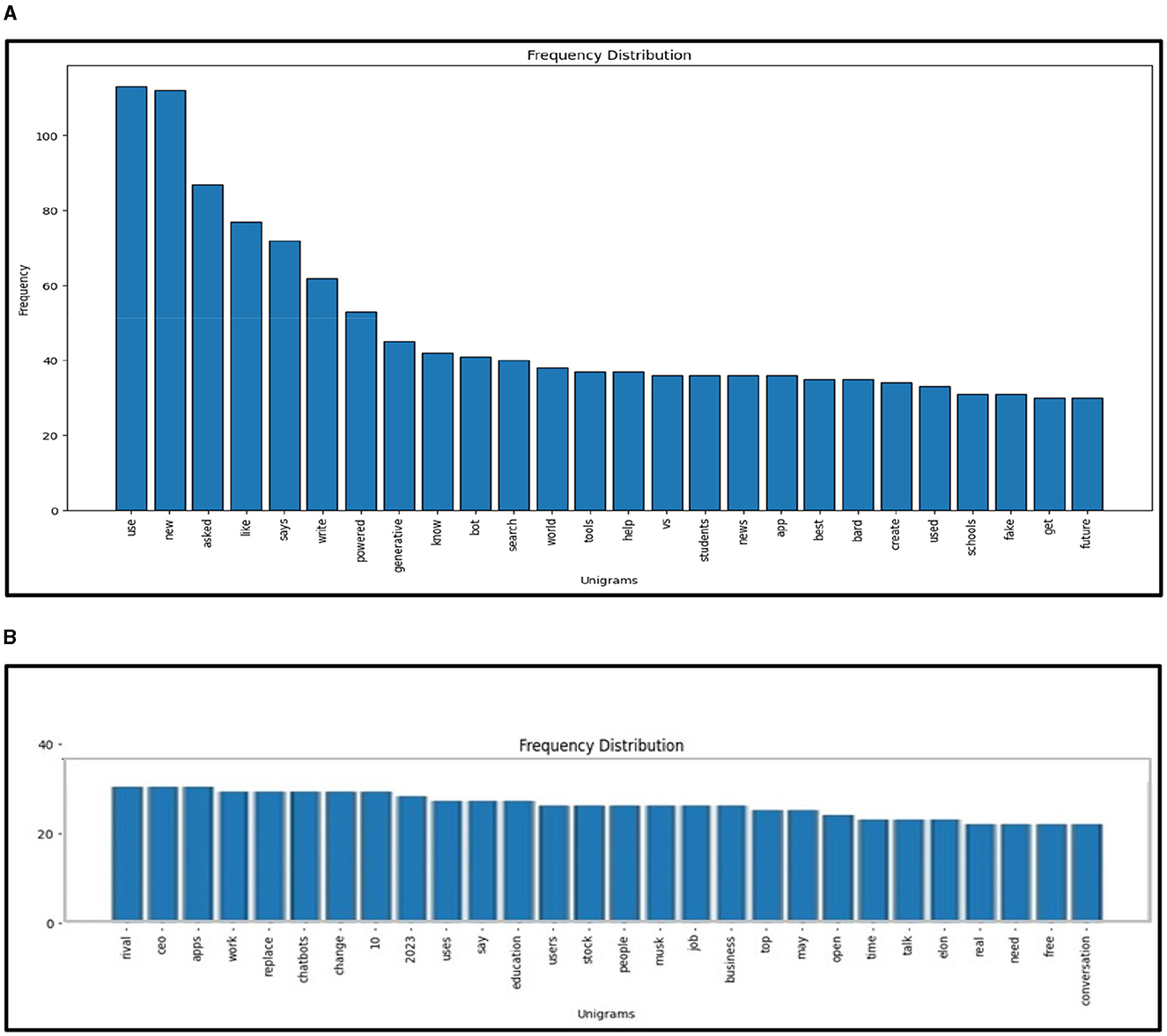

Applying NLP methods, we also explored and summarized news headlines or articles relevant to AI, NLP, LLMs and ChatGPT by sentiment, and categorized them into positive, neutral and negative categories (Figures 4A, B, 5). We used NLTK for sentiment analysis (Bird et al., 2009). In addition to examining specific cases to motivate and inform CATE-AI, we performed exploratory analysis by visualizing word clouds from the text of the news headlines we collected from around the world. We also explored unigrams as visualized in a split manner in Figures 6A, B. Common and expected words such as “ai” and “gpt” were excluded from the unigrams to highlight the array of other high frequency words.
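The three-way categorization described above can be sketched as follows. This is a minimal illustration, not the procedure used in the study: it assumes each headline already has a polarity score in the range -1 to 1 (for example, the compound score from NLTK's VADER analyzer, which is one possible choice and not confirmed by the text), and the cut-off values and example scores are assumptions made for illustration.

```python
from collections import Counter

# Assumed cut-offs on a polarity score in [-1, 1]; the paper does not state its thresholds.
POS_CUTOFF = 0.05
NEG_CUTOFF = -0.05


def label_sentiment(score: float) -> str:
    """Map a polarity score to one of three sentiment categories."""
    if score >= POS_CUTOFF:
        return "positive"
    if score <= NEG_CUTOFF:
        return "negative"
    return "neutral"


def summarize(scores):
    """Count how many headlines fall into each sentiment category."""
    counts = Counter(label_sentiment(s) for s in scores)
    return {k: counts.get(k, 0) for k in ("positive", "neutral", "negative")}


# Made-up scores for illustration only; these are not data from the study.
example_scores = [0.6, 0.4, 0.0, -0.3, 0.2, -0.7, 0.01, 0.5]
summary = summarize(example_scores)
print(summary)
```

A positive-to-negative ratio can then be read directly from the two corresponding counts in `summary`.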

Figure 6. (A) Unigrams (part-1) of AI-ChatGPT news articles. (B) Unigrams (part-2) of AI-ChatGPT news articles.

A review of the unigrams showed some interesting emphases in the conversations on AI: some can be interpreted as being associated with the most recent developments at the time of data collection, while others can be treated as more persistent themes. We identified a fair amount of interest in technological developments, with key words such as “generative,” “powered,” “bot” and “tools”; concerns and risks about the future of AI, with key words such as “fake,” “bot,” “future” and “rival”; an unusual focus on entities such as “Musk” and “Elon”; and an emphasis on workplace themes, with key words such as “use,” “new,” “job,” “write,” “create,” “ceo” and “business.”
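For readers who wish to reproduce this kind of frequency analysis, the sketch below counts n-grams in headlines after removing stop-words. It is a simplified illustration using only the Python standard library; the stop-word list (beyond “ai” and “gpt”, which the text says were excluded), the function names and the sample headlines are hypothetical and not taken from the study.

```python
import re
from collections import Counter

# Hypothetical stop-word list; only "ai" and "gpt" are named in the text as excluded.
CUSTOM_STOPWORDS = {"ai", "gpt", "the", "a", "of", "to", "in", "and", "for", "is"}


def tokenize(headline):
    """Lowercase a headline and keep alphabetic tokens that are not stop-words."""
    tokens = re.findall(r"[a-z]+", headline.lower())
    return [t for t in tokens if t not in CUSTOM_STOPWORDS]


def ngram_counts(headlines, n=1):
    """Count n-grams within each headline (n=1 gives unigrams, n=2 bigrams, and so on)."""
    counts = Counter()
    for headline in headlines:
        tokens = tokenize(headline)
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts


# Invented headlines for illustration; not taken from the study's corpus.
sample = [
    "New AI tools create fake news",
    "CEO says AI tools will create new jobs",
]
unigrams = ngram_counts(sample, n=1)
bigrams = ngram_counts(sample, n=2)
print(unigrams.most_common(3))
```

Because stop-words are removed before the n-grams are formed, words separated only by a stop-word (such as “new” and “tools” in the first sample) become adjacent; whether that is desirable depends on the analysis.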

Our NLP based analysis of the text revealed useful insights. Positive headlines outnumbered negative ones at a ratio of around 5:3 (Figure 5). The notable number of negative headlines highlights the many unaddressed concerns, issues and examples of AI failures and misses. The negative sentiment word cloud (Figure 4B) highlights high frequency words, which were clustered to identify dominant themes such as concerns surrounding the misuse of AI for deception (fear, fake, wrong, cheating, banned, scam, trick), issues in education (school, student, cheating, test), jobs (job, employee, lawsuit, crisis) and security (block, warning, dead, war, battle, fight, police, threat). Additional bigram, trigram and quadgram analyses revealed similar patterns of word frequencies, including regional headlines implying bans on ChatGPT, unethical use of generative AIs, the emergence of fake AI apps and concerns about the impacts of AIs on human life, jobs and society (Samuel, 2023c). Some of these were identified as regional or ideological themes illustrating the need for CATE-AI, such as “collapse creative process,” “woke ChatGPT accused,” “AI arms race,” “ChatGPT passed Wharton MBA,” “students using AI” and “destroyed Google business.” We validated our findings on thematic topics by using the Gensim Latent Dirichlet Allocation (LDA) model with iterations of intertopic mapping and review of topics by the key words associated with them (Rehurek and Sojka, 2010, 2011). We ran multiple iterations, removing disruptive and overbearing key words to evoke and affirm underlying themes and topics. The visualization of one such iteration is displayed in Figure 7, and this iteration lent support to the themes on concerns regarding the use of generative AI and AI in general for education (“college,” “exam,” “test,” “testing” and “questions”) and misuse (“fake,” “court” and “bot”). This process affirmed the dominant themes initially identified through exploratory textual analytics using word clouds and n-grams as described in the sections above.
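The theme clusters listed above for the negative sentiment word cloud can also be used to tag individual headlines. The sketch below is a simple keyword-matching illustration under that assumption; it is not the LDA procedure used in the study, and the function name and sample headline are hypothetical.

```python
import re

# Theme key words as listed in the text for the negative sentiment word cloud.
THEMES = {
    "deception": {"fear", "fake", "wrong", "cheating", "banned", "scam", "trick"},
    "education": {"school", "student", "cheating", "test"},
    "jobs": {"job", "employee", "lawsuit", "crisis"},
    "security": {"block", "warning", "dead", "war", "battle", "fight", "police", "threat"},
}


def tag_themes(headline):
    """Return the sorted list of themes whose key words appear in the headline."""
    tokens = set(re.findall(r"[a-z]+", headline.lower()))
    return sorted(theme for theme, words in THEMES.items() if tokens & words)


# Invented headline for illustration; not from the study's corpus.
tags = tag_themes("School warning: student caught cheating on test with fake essay")
print(tags)
```

Matching here is exact, so inflected forms such as “students” would not match “student”; stemming or lemmatization would be needed to capture them.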

We identified several interesting themes revolving around fear of AI, the need to regulate AI, sociocultural challenges of AI applications, AI bias, limitations and inaccuracies in AI output, how-to topics, and unfairness, such as in comparisons of religions. The variations in these themes observed across different regions and cultures underscore the importance of utilizing CATE-AI to create culturally sensitive AI education frameworks and curricula. CATE-AI can help address the challenges of sociocultural differences by guiding the adaptation of categorization frameworks of human-thought clusters (Murphy and Medin, 1985; Barsalou, 1989). Ideally, all relevant education initiatives should help learners understand four critical aspects of AI:

1. What is AI–a philosophical foundation for engaging AI.

2. How AI works–the science of AI technologies.

3. AI contextualization–how AI can be adapted to sociocultural contexts.

4. Optimal AI–using AI to support adaptive cognitive fit (Samuel et al., 2022b).

This will help prepare humans to face a future filled with ubiquitous AIs through systematic AI literacy. CATE-AI can also help facilitate AI adaptation to sociocultural contexts across the world, with the potential to improve ease of use through informed use and user satisfaction outcomes.

Our study provides robust conceptualization and theorization to advance the CATE-AI framework. However, additional work is needed to elaborate its tenets and develop applied solutions, and we still need to conduct experiments and analyze implementation cases for CATE-AI to strengthen and further validate the framework. We caution that our analysis was exploratory: we applied stop-words, including custom stop-words of common but non-insightful words (e.g., company names), to the textual data corpus to generate word clouds, sentiment analysis, and intertopic maps using LDA modeling (Figures 4A, B, 5, 7). Our analysis revealed the need for a dedicated study of this textual data corpus with additional NLP methods including clustering for topic and theme identification, information retrieval, named entity recognition (NER) and sentiment analysis to gauge sentiment toward AI applications by region using domain-knowledge bases (Kumar et al., 2022, 2023). These are avenues for future research and further intrinsic development of CATE-AI. Numerous extensions and applications of CATE-AI are possible, such as extending its principles to AI generated multilingual solutions for making adaptive sense of human language and emotions across languages, regions and cultures (Anderson et al., 2023). Further, in many cultures, handwritten text is an essential part of educational and societal processes, and it will be interesting to see how AI tools for OCR can be adapted for better HAI design (Jain et al., 2023).

Our research addresses a critical concern regarding an issue of global importance with significant implications for the future. AI cannot be paused or stopped. The extraordinary speed at which AI models and applications are being developed presents complex challenges and new opportunities (Samuel, 2023a,b). AI as a cluster of powerful transformative technologies has the potential to shape the future of human society. Therefore, AI education is of utmost importance and urgency for sustainable and equitable advancement. AI education is no longer optional, and any effort toward maximizing the benefits of AI and minimizing its risks and harms must include a proactive approach to adaptive AI education (Samuel, 2021a). Insensitivity toward sociocultural needs and HAI education can lead to a rise in AI illiteracy, a growth in negative public perception, and heightened resistance toward AI. CATE-AI provides a futuristic framework to catalyze sensitive, fair and relevant AI education for the masses. Ideally, CATE-AI must be co-implemented with proactive policies for mass-public education implementation initiatives based on continuing-education models. As we look forward to reaping the benefits of AI, we anticipate that CATE-AI and other AI education models will play a crucial role in shaping the future.

Data used in this study can be found online, as a schematic sample, via the following link: https://github.com/ay7n/CATE.

All authors listed have made fair intellectual contributions to the manuscript and approved it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Alsswey, A., and Al-Samarraie, H. (2021). The role of Hofstede's cultural dimensions in the design of user interface: the case of Arabic. Artif. Intell. Eng. Des. Anal. Manuf. AIEDAM. 35, 116–127. doi: 10.1017/S0890060421000019

Anderson, R., Scala, C., Samuel, J., Kumar, V., and Jain, P. (2023). Are Emotions Conveyed Across Machine Translations? Establishing an Analytical Process for the Effectiveness of Multilingual Sentiment Analysis with Italian Text. doi: 10.20944/preprints202308.1003.v1

Bajaj, R., and Sharma, V. (2018). Smart Education with artificial intelligence based determination of learning styles. Procedia Comput. Sci. 132, 834–842. doi: 10.1016/j.procs.2018.05.095

Baker, R. S., and Hawn, A. (2021). Algorithmic bias in education. Int J Artif Intell Educ. 1–41. doi: 10.1007/s40593-021-00285-9

Bal, A. (2018). Culturally responsive positive behavioral interventions and supports: a process–oriented framework for systemic transformation. Rev. Educ. Pedagogy Cult. Stud. 40, 144–174. doi: 10.1080/10714413.2017.1417579

Barsalou, L. W. (1989). “Intraconcept similarity and its implications for interconcept similarity,” in Similarity and Analogical Reasoning, eds S. Vosniadou and A. Ortony (Cambridge: Cambridge University Press), 76–121. doi: 10.1017/CBO9780511529863.006

Bartneck, C., Lütge, C., Wagner, A., and Welsh, S. (2021). “Privacy issues of AI,” in An Introduction to Ethics in Robotics and AI. SpringerBriefs in Ethics. Cham: Springer. doi: 10.1007/978-3-030-51110-4

Bingley, W. J., Curtis, C., Lockey, S., Bialkowski, A., Gillespie, N., Haslam, S. A., et al. (2023). Where is the human in human-centered AI? insights from developer priorities and user experiences. Comput. Hum. Behav. 141:107617. doi: 10.1016/j.chb.2022.107617

Bird, S., Klein, E., and Loper, E. (2009). Natural Language Processing With Python: Analyzing Text With the Natural Language Toolkit. Sebastopol, CA: O'Reilly Media, Inc.

Borji, A. (2023). A categorical archive of ChatGPT failures. arXiv preprint arXiv. doi: 10.21203/rs.3.rs-2895792/v1

Campolo, A., and Crawford, K. (2020). Enchanted determinism: power without responsibility in artificial intelligence. Engag. Sci. Technol. Soc. 6, 1–19. doi: 10.17351/ests2020.277

Chassignol, M., Khoroshavin, A., Klimova, A., and Bilyatdinova, A. (2018). Artificial Intelligence trends in education: a narrative overview. Procedia Comput. Sci. 136, 16–24. doi: 10.1016/j.procs.2018.08.233

Chen, L., Chen, P., and Lin, Z. (2020). Artificial intelligence in education: a review. IEEE Access 8, 75264–75278. doi: 10.1109/ACCESS.2020.2988510

Chen, X., Zou, D., Xie, H., Cheng, G., and Liu, C. (2022). Two decades of artificial intelligence in education. Educ. Technol. Soc. 25, 28–47.

Chiu, T. K., Meng, H., Chai, C. S., King, I., Wong, S., and Yam, Y. (2021). Creation and evaluation of a pretertiary artificial intelligence (AI) curriculum. IEEE Trans. Educ. 65, 30–39. doi: 10.1109/TE.2021.3085878

Chou, J., Murillo, O., and Ibars, R. (2017). What the Kids' Game ‘Telephone' Taught Microsoft About Biased AI. Fast Company. Available online at: https://www.fastcompany.com/90146078/what-thekids-game-telephone-taught-microsoft-about-biased-ai

Daníelsson, J., Macrae, R., and Uthemann, A. (2022). Artificial intelligence and systemic risk. J. Bank. Finance. 140:106290. doi: 10.1016/j.jbankfin.2021.106290

D'mello, S., and Graesser, A. (2013). AutoTutor and affective AutoTutor: Learning by talking with cognitively and emotionally intelligent computers that talk back. ACM Trans. Interact. Intell. Syst. 2, 1–39.

Dowling, M., and Lucey, B. (2023). ChatGPT for (finance) research: the bananarama conjecture. Finance Res. Lett. 53:103662. doi: 10.1016/j.frl.2023.103662

Earley, P. C., and Mosakowski, E. (2004). Cultural Intelligence. Harvard Business Review. Available online at: https://hbr.org/2004/10/cultural-intelligence

Eguchi, A., Okada, H., and Muto, Y. (2021). Contextualizing AI education for K-12 students to enhance their learning of AI literacy through culturally responsive approaches. KI-Künstliche Intell. 35, 153–161. doi: 10.1007/s13218-021-00737-3

Feijóo, C., Kwon, Y., Bauer, J. M., Bohlin, E., Howell, B., Jain, R., et al. (2020). Harnessing artificial intelligence (AI) to increase wellbeing for all: the case for a new technology diplomacy. Telecomm. Policy. 44:101988. doi: 10.1016/j.telpol.2020.101988

Gay, G. (1993). Building cultural bridges: a bold proposal for teacher education. Educ. Urban Soc. 25, 285–299. doi: 10.1177/0013124593025003006

Gay, G. (2002). Preparing for culturally responsive teaching. J. Teach. Educ. 53, 106–116. doi: 10.1177/0022487102053002003

Gay, G. (2013). Teaching to and through cultural diversity. Curric. Inq. 43, 48–70. doi: 10.1111/curi.12002

Gay, G. (2021). “Culturally responsive teaching: ideas, actions, and effects,” in Handbook of Urban Education, eds H. Richard Milner and K. Lomotey (New York, NY: Routledge), 212–233. doi: 10.4324/9780429331435-16

Gleason, N. W. (2018). Higher Education in the Era of the Fourth Industrial Revolution. Singapore: Springer Nature. Available online at: https://link.springer.com/book/10.1007/978-981-13-0194

Google (2023). Available online at: https://ai.googleblog.com/2022/04/pathways-language-model-palm-scaling-to.html

Henrich, J., Heine, S. J., and Norenzayan, A. (2010). Beyond WEIRD: towards a broad-based behavioral science. Behav. Brain Sci. 33:111.

Holmes, W., Bialik, M., and Fadel, C. (2020). “Artificial intelligence in education,” in Encyclopedia of Education and Information Technologies, eds H. Richard Milner and K. Lomotey (New York, NY: Springer International Publishing), 88–103. doi: 10.1007/978-3-030-10576-1_107

Horowitz, M. C., and Kahn, L. (2021). What influences attitudes about artificial intelligence adoption: evidence from U.S. local officials. PLoS ONE 16:e0257732. doi: 10.1371/journal.pone.0257732

HuggingFace (2023). Available online at: https://huggingface.co/bigscience/bloom

Jain, P. H., Kumar, V., Samuel, J., Singh, S., Mannepalli, A., and Anderson, R. (2023). Artificially intelligent readers: an adaptive framework for original handwritten numerical digits recognition with OCR Methods. Information 14:305. doi: 10.3390/info14060305

King, M. R. (2022). The future of AI in medicine: a perspective from a Chatbot. Ann. Biomed. Eng. 51, 291–295. doi: 10.1007/s10439-022-03121-w

Kumar, V., Medda, G., Recupero, D. R., Riboni, D., Helaoui, R., and Fenu, G. (2023). “How do you feel? information retrieval in psychotherapy and fair ranking assessment,” in International Workshop on Algorithmic Bias in Search and Recommendation (Cham: Springer Nature Switzerland), 119–133. doi: 10.1007/978-3-031-37249-0_10

Kumar, V., Recupero, D. R., Helaoui, R., and Riboni, D. (2022). K-LM: Knowledge augmenting in language models within the scholarly domain. IEEE Access 10, 91802–91815. doi: 10.1109/ACCESS.2022.3201542

Leitner, P., Khalil, M., and Ebner, M. (2017). Learning analytics in higher education-a literature review. Learn. Anal. 1–23.

Lloyd, K. (2018). Bias amplification in artificial intelligence systems. arXiv preprint arXiv. doi: 10.48550/arXiv.1809.07842

Lu, H., Li, Y., Chen, M., Kim, H., and Serikawa, S. (2018). Brain intelligence: go beyond artificial intelligence. Mobile Netw. Appl. 23, 368–375. doi: 10.1007/s11036-017-0932-8

Mascarenhas, S., Degens, N., Paiva, A., et al. (2016). Modeling culture in intelligent virtual agents. Auton. Agent Multi-Agent Syst. 30, 931–962. doi: 10.1007/s10458-015-9312-6

Mayer, J. D., Roberts, R. D., and Barsade, S. G. (2008). Human abilities: emotional intelligence. Annu. Rev. Psychol. 59, 507–536. doi: 10.1146/annurev.psych.59.103006.093646

Mengesha, Z., Courtney, H., Michal, L., Juliana, S., and Elyse, T. (2021). “I don't think these devices are very culturally sensitive.”—impact of automated speech recognition errors on african americans. Front. Artif. Intell. 4:725911. doi: 10.3389/frai.2021.725911

Meta (2023). Available online at: https://ai.meta.com/blog/large-language-model-llamameta-ai/

Mikalef, P., Conboy, K., Lundström, J. E., and Popovič, A. (2022). Thinking responsibly about responsible AI and ‘the dark side' of AI. Eur. J. Inform. Syst. 31, 257–268. doi: 10.1080/0960085X.2022.2026621

Mikropoulos, T. A., and Natsis, A. (2011). Educational virtual environments: a ten-year review of empirical research (1999–2009). Comput. Educ. 56, 769–780. doi: 10.1016/j.compedu.2010.10.020

Mohammed, P. S., and Watson, E. (2019). “Towards inclusive education in the age of artificial intelligence: perspectives, challenges, and opportunities,” in Artificial Intelligence and Inclusive Education, eds J. Knox, Y. Wang, and M. Gallagher (Singapore: Springer), 17–37. doi: 10.1007/978-981-13-8161-4_2

Muñoz, J. L. R., Ojeda, F. M., Jurado, D. L. A., Peña, P. F. P., Carranza, C. P. M., Berríos, H. Q., et al. (2022). Systematic review of adaptive learning technology for learning in higher education. Eur. J. Educ. Res. 98, 221–233. doi: 10.14689/ejer.2022.98.014

Murphy, G. L., and Medin, D. L. (1985). The role of theories in conceptual coherence. Psychol. Rev. 92, 289–316. doi: 10.1037/0033-295X.92.3.289

Ng, D. T. K., Lee, M., Tan, R. J. Y., Hu, X., Downie, J. S., and Chu, S. K. W. (2023). A review of AI teaching and learning from 2000 to 2020. Educ. Inform. Technol. 28, 8445–8501.

Open AI (2023). Available online at: https://openai.com/product/gpt-4

Paschoal, L. N., de Oliveira, M. M., and Chicon, P. M. M. (2018). “A chatterbot sensitive to student's context to help on software engineering education,” in 2018 XLIV Latin American Computer Conference (CLEI) (São Paulo), 839–848. doi: 10.1109/CLEI.2018.00105

Rehurek, R., and Sojka, P. (2010). “Software framework for topic modelling with large corpora,” in Conference: Proceedings of the LREC 2010 Workshop on New Challenges for NLP Frameworks (Malta).

Rehurek, R., and Sojka, P. (2011). Gensim—Statistical Semantics in Python. Available online at: genism org

Rudolph, J., Tan, S., and Tan, S. (2023). ChatGPT: bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 6, 342–362. doi: 10.37074/jalt.2023.6.1.9

Samuel, J. (2021a). A Call for Proactive Policies for Informatics and Artificial Intelligence Technologies. Scholars Strategy Network. Available online at: https://scholars.org/contribution/call-proactive-policies-informatics-and

Samuel, J. (2022). 2050: Will Artificial Intelligence Dominate Humans? (AI Slides). doi: 10.2139/ssrn.4155700

Samuel, J. (2023a). The Critical Need for Transparency and Regulation Amidst the Rise of Powerful Artificial Intelligence Models. Available online at: https://scholars.org/contribution/critical-need-transparency-and-regulation (accessed August 02, 2023).

Samuel, J. (2023b). Response to the March 2023 “Pause Giant AI EXPERIMENTS: An Open Letter” by Yoshua Bengio, Stuart Russell, Elon Musk, Steve Wozniak, Yuval Noah Harari and others. Available online at: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4412516

Samuel, J. (2023c). Two Keys for Surviving the Inevitable AI Invasion. Available online at: https://aboveai.substack.com/p/two-keys-for-surviving-the-inevitable

Samuel, J., Friedman, L. W., Samuel, Y., and Kashyap, R. (2022a). Artificial Intelligence Education and Governance: Preparing Human Intelligence for AI Driven Performance Augmentation. Frontiers in Artificial Intelligence, Editorial. Available online at SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4019977

Samuel, J., Kashyap, R., Samuel, Y., and Pelaez, A. (2022b). Adaptive cognitive fit: artificial intelligence augmented management of information facets and representations. Int. J. Inf. Manage. 65:102505. doi: 10.1016/j.ijinfomgt.2022.102505

Samuel, Y., George, J., and Samuel, J. (2018). “Beyond STEM, how can women engage big data, analytics, robotics and artificial intelligence? An exploratory analysis of confidence and educational factors in the emerging technology waves influencing the role of, and impact upon, women,” in 2018 NEDSI Proceedings (Providence).

Schulz, J., Bahrami-Rad, D., Beauchamp, J., and Henrich, J. (2018). The origins of WEIRD psychology. Available online at: https://ssrn.com/abstract=3201031

Shaik, T., Tao, X., Li, Y., Dann, C., McDonald, J., Redmond, P., et al. (2022). A review of the trends and challenges in adopting natural language processing methods for education feedback analysis. IEEE Access. 10, 56720–56739.

Shneiderman, B. (2020). Human-centered artificial intelligence: three fresh ideas. AIS Transac. Hum. Comput. Interact. 12, 109–124. doi: 10.17705/1thci.00131

Skiba, R., and Ritter, S. (2011). “Race is not neutral: understanding and addressing disproportionality in school discipline,” in 8th International Conference on Positive Behavior Support (Denver, CO).

Su, J., Zhong, Y., and Ng, D. T. K. (2022). A meta-review of literature on educational approaches for teaching AI at the K-12 levels in the Asia-Pacific region. Comp. Educ. 3:100065. doi: 10.1016/j.caeai.2022.100065

Tai, M. C. (2020). The impact of artificial intelligence on human society and bioethics. Tzu Chi Med. J. 32, 339–343. doi: 10.4103/tcmj.tcmj_71_20

Touretzky, D., Gardner-McCune, C., Martin, F., and Seehorn, D. (2019). Envisioning AI for K-12: What should every child know about AI? Proc. AAAI Conference Artif. Intell. 33, 9795–9799. doi: 10.1609/aaai.v33i01.33019795

Tubadji, A., Nijkamp, P., and Huggins, R. (2021). Firm survival as a function of individual and local uncertainties: an application of Shackle's potential surprise function. J. Econ. Issue. 55, 38–78. doi: 10.1080/00213624.2021.1873046

Tuomi, I. (2018). The Impact of Artificial Intelligence on Learning, Teaching, and Education. Luxembourg: Publications Office of the European Union. Available online at: https://repositorio.minedu.gob.pe/handle/20.500.12799/6021

Van Dyne, L., Ang, S., and Livermore, D. (2010). Cultural intelligence: a pathway for leading in a rapidly globalizing world. Leading Across Differ. 4, 131–138.

Vanlehn, K. (2011). The relative effectiveness of human tutoring, intelligent tutoring systems, and other tutoring systems. Educ. Psychol. 46, 197–221. doi: 10.1080/00461520.2011.611369

Wei, J., Tay, Y., Bommasani, R., Raffel, C., Zoph, B., Borgeaud, S., et al. (2022). Emergent abilities of large language models. arXiv preprint arXiv. doi: 10.48550/arXiv.2206.07682

Wollowski, M., Selkowitz, R., Brown, L., Goel, A., Luger, G., et al. (2016). “A survey of current practice and teaching of AI,” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 30.

Keywords: human centered artificial intelligence, education, culture, AI, AI education, educational AI, culturally responsive teaching, AI philosophy

Citation: Samuel Y, Brennan-Tonetta M, Samuel J, Kashyap R, Kumar V, Krishna Kaashyap S, Chidipothu N, Anand I and Jain P (2023) Cultivation of human centered artificial intelligence: culturally adaptive thinking in education (CATE) for AI. Front. Artif. Intell. 6:1198180. doi: 10.3389/frai.2023.1198180

Received: 31 March 2023; Accepted: 27 September 2023;

Published: 01 December 2023.

Edited by:

Marco Temperini, Sapienza University of Rome, Italy

Reviewed by:

Gabriel Badea, University of Craiova, Romania

Copyright © 2023 Samuel, Brennan-Tonetta, Samuel, Kashyap, Kumar, Krishna Kaashyap, Chidipothu, Anand and Jain. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jim Samuel
