ORIGINAL RESEARCH article

Front. Artif. Intell. , 26 April 2022

Sec. AI for Human Learning and Behavior Change

Volume 5 - 2022 | https://doi.org/10.3389/frai.2022.844817

A common but false perception persists about the level and type of personalization in the offerings of contemporary software, information systems, and services, known as Personalization Myopia: this involves a tendency for researchers to think that there are many more personalized services than there genuinely are, for the general audience to think that they are offered personalized services when they really are not, and for practitioners to have a mistaken idea of what makes a service personalized. And yet in an era that mashes up large amounts of data, business analytics, deep learning, and persuasive systems, true personalization is a most promising approach for innovating and developing new types of systems and services, including support for behavior change. The potential of true personalization is elaborated in this article, especially with regard to persuasive software features and the oft-neglected fact that users change over time.

During the past few decades many contributions have been made to the body of scientific knowledge on personalized information technology, especially regarding user-modeling and user-adapted interaction (e.g., Brusilovsky, 2001; Fischer, 2001; Kobsa, 2001). The general audience has been awakening to this topic little by little since the late 90s after the introduction of e-commerce services for consumers. More recently requests for data analytics and adaptation of services to user needs have rapidly grown, and myriads of web-based and mobile services now claim to offer personalized solutions (Langrial et al., 2012).

Personalization as a research construct is, however, much more complex than it appears on the surface (Tam and Ho, 2005, 2006). Moreover, a common but false perception about the level and types of personalization, known as the Personalization Myopia (Oinas-Kukkonen, 2018), persists: researchers tend to think that there are many more personalized services than there really are. Likewise, the general audience is under the impression that it is offered personalized services when this is not the case, and practitioners often have a mistaken idea of what actually makes a service or system personalized (Oinas-Kukkonen, 2018).

In this article, we discuss personalization myopia and especially how to undo its consequences and thus ultimately do away with it. The fundamental question under investigation is how to draw advantages from true personalization when designing such systems. After describing personalization myopia, two issues with strong personalization are recognized: going beyond mere personalized content by offering personalized software features, and addressing the oft-neglected fact in personalization efforts that users, too, change over time. Finally, it is explained how artificial intelligence techniques can be employed to improve true personalization, and ethical considerations for true personalization are discussed.

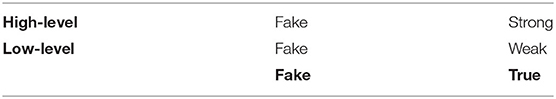

The depth and actuality of personalization implementations vary. For this reason, Oinas-Kukkonen (2018) suggests a taxonomy of personalization instantiations in which true and false personalization are differentiated and the depth of true personalization in systems and services is suggested to vary between low and high levels. The latter archetypes are called weak and strong personalization. See Table 1.

Table 1. Depth and reality of personalization (Oinas-Kukkonen, 2018).

Contemporary web and mobile users have become accustomed to services that actively use a user's name in feedback sent to the user. However, in many if not most of the cases where service providers claim to offer personalized services, they may be just trying to make the user feel more comfortable without offering personalized features other than using one's name; or they are simply unaware of what personalization really would involve. This is fake personalization; in other words, it is actually not personalization at all. One reason why this has become so popular among commercial services is that consumers seem to be influenced by this approach in spite of it not being true personalization. This is because it is how people perceive the services, rather than what the services actually are, that persuades people to take action.

Sometimes users may think that they are provided with information that is personalized for them individually, whereas in reality these systems offer information that is only slightly modified from standard information or is in truth targeted at a larger group of users. Targeting at a given user segment is known as tailoring (Oinas-Kukkonen and Harjumaa, 2009), and it is a widely studied software feature (Torning and Oinas-Kukkonen, 2009). We suggest that tailoring is low-level or weak personalization, which naturally may be valuable even if it is not the more sophisticated form of true personalization. Information provided by the system may indeed be persuasive if it is tailored to the potential needs, interests, use context, or other factors relevant to a user group. For example, Parmar et al. (2009) studied the use of weak personalization through a tailored health solution designed to influence the health behaviors of rural Indian women, aiming at increasing their awareness about menses and maternal health. The system employed social cues to increase this group's (i.e., rural women's) perceived behavioral control and motivation to challenge existing social beliefs and practices, thereby persuading them to follow evidence-based health practices. Their study demonstrates how weak personalization, in this setting providing tailored health content for this particular group rather than either generic or individualized health content, can be useful. In other studies, the impact of computer-tailored health interventions on behavior change was investigated, and it was concluded that tailored interventions should adapt not only the content of the message but also how the message is presented to the users (van Genugten et al., 2012; Nikoloudakis et al., 2018).

True high-level personalization, strong personalization, would mean that the information system really offers individualized content and/or services for its users. For instance, a system would first provide arguments that are most likely to be relevant for the individual user before those aimed at any wider pre-defined user group, or instead of simply presenting them in random order. For an example of the effectiveness of strong personalization, Andrews (2012) investigated how a user's degree of extraversion influences perceived persuasiveness and perceived trustworthiness of a system. According to this study, dependencies between a user's personality and perception of the system were evident. In another example, Dijkstra (2006) studied the impact of persuasive messages on students who smoked tobacco daily. After completing a pre-test questionnaire on a computer, the participants read information about their own condition and filled in an immediate post-test questionnaire. After 4 months, they were sent a follow-up questionnaire to assess their quitting activity. The results showed that significantly more participants quit smoking after 4 months when they received personalized feedback instead of standardized information. Moreover, the effect of condition on quitting activity was mediated by individuals' evaluations of the extent to which the information took into account personal characteristics.

Naturally, strong and weak personalization approaches are closely related and demonstrate the same spirit of developing information systems and services, and in some cases, they can even co-exist. Yet, tailoring as a weak approach is only low-level personalization. What tailoring may offer is often mistakenly considered by end-users as the pinnacle of what personalization can offer, which may give a false idea about the potential of personalization and in this manner contribute to the prevalence of the Personalization Myopia (Oinas-Kukkonen, 2018). Another reason why this Myopia is so widespread today and is likely to persist in the near future is that strong personalization does demand exceptionally careful modeling and analysis of the individual user and his or her susceptibility (Andrews, 2012; Kaptein et al., 2012) for information presentation and perhaps the adaptation of software functionalities offered. Modeling this is a most intriguing but challenging task, requiring a mindset and often also time and data analysis resources of an academic researcher, which are not necessarily available to all practitioners. There is a difference also between customization and personalization. Customization means the modification of the system and/or its preferences by the user. Thus, customization may be considered as a form of personalization. An example of a combination of customization and personalization would be software that lets a user define when he or she wants to be reminded.

To help understand what is required when designing weak vs. strong personalization, the Persuasive Systems Design model's Use and User Contexts can be applied (Oinas-Kukkonen and Harjumaa, 2009). Use Context considers characteristics arising from the problem domain and potential user segments in it, whereas User Context aims at recognizing individual differences. In this sense, weak personalization is mostly linked with Use Context, whereas strong personalization is inherently linked also with User Context. In strong personalization, an individual's User Context such as a user's susceptibility to the content presentation strategy at hand needs to be thoroughly understood, which may require, for example, understanding one's need for cognition (Petty and Wegener, 1998; Cacioppo et al., 2010), stage of change (Sporakowski et al., 1986), and integration into the individual life situation, experience, and self-efficacy (Borghouts et al., 2021), or perhaps even one's personality (Halko and Kientz, 2010) or temperament dimensions that impact affective-motivational and attentional systems and that include scales such as satisfaction/frustration, attention shifting/focusing, sociability, pleasure reactivity, discomfort, fear, and activity level (Rothbart and Bates, 1977; Rothbart et al., 2000; Evans and Rothbart, 2007; Rothbart, 2007). The need for modeling user susceptibility is especially important when designing for health behavior change support systems (Oinas-Kukkonen, 2013) that seek to offer true personalization (Berkovsky et al., 2012). An important approach specific to personalized persuasive systems has been suggested (Kaptein and Eckles, 2010), known as persuasion profiling, which is a collection of expected effects of different influence strategies for a specific individual.

In spite of the complexity of modeling User Context and the temptation to focus only on user perceptions instead of actual outcomes, strong personalization still is a most promising approach to innovation, as well as for supporting behavior change in important areas such as health and sustainability (Berkovsky et al., 2012; Oinas-Kukkonen, 2013).

Even if there are substantial amounts of research on personalization, the volume of implemented software applications offering strong personalization is not as notable, and at the very least it is less notable than academics seem to think (Oinas-Kukkonen, 2018). Moreover, sometimes there seems to be much more interest in weak personalization than in strong personalization, which, of course, is not to be automatically deemed “bad”. Indeed, a separation between weak and strong personalization does not put forward the claim that the weak would be worse than the strong. Rather, both weak and strong personalization approaches are needed, just as both weak and strong ties are in social network analysis. At the end of the day, it often seems to be the perception of personalization rather than actual personalization that persuades people into action.

Personalization is often only considered regarding contents provided for the users. For instance, when a user sets personal goals and tracks parameters that are important to him or her, the system may provide progress feedback and/or suggestions based on user preferences. Thus, the contents received by a user are individualized, but the software functionality and features remain the same for all users. Similarly, a user's self-representation such as avatars may visualize the desired self instead of random pictures or personally relevant information, but again the software functionality remains the same for all users. In previous works, most of the attention in strong personalization research has focused on personalized content (see, e.g., Andrews, 2012; Cremonesi et al., 2012; Kaptein et al., 2012), but more than that could be offered (Klasnja et al., 2011).

We recognize here two issues with strong personalization that merit research interest, along with ways to address them:

i. Going beyond merely offering personalized content by offering personalized software features.

ii. Addressing the oft-neglected fact in personalization efforts that users, too, change over time.

Personalized software features are a more elaborate, albeit more challenging, approach than personalized content, and naturally some software features are more amenable to strong personalization than others. For example, even though self-monitoring relates to an individual's measurements, the key in it is not to convey the feeling that the software feature would be available “only for me”. Thus, the data is personalized but the software feature is not, since many other users will be able to conduct similar self-monitoring. Virtual rehearsal is slightly different in this sense. When a user notices that a specific rehearsal suggested for them is unique rather than merely selected from a list of options, it may influence the user to do the rehearsal. Similarly, virtual rewards have no intrinsic need for being personalized as there is no need for a user to think that no one else could get the same reward if they achieved the same results; in fact, it may be just the opposite, because social recognition may add to the power of virtual rewards. Similarity and liking may also benefit from a user's perception of strong personalization in modifying the way information is presented to the user. Thus, the key is whether a user's perception of using individualized software functionality, instead of feeling like a member of a target group, plays a role or not.

Most personalization implementations today seem to assume that users stay the same over any given period of time, i.e., there is no modeling of a user's possible change. And yet, a user is more likely to change in multiple ways over any (longer) observation period. Similarly, persuasion profiles should not be static, but they could change over time, sometimes perhaps even with short notice. Moreover, a user may adopt certain roles or fulfill certain tasks that at times may require adopting a specific behavioral pattern, which may or may not be typical for the person. If the personalization engine in the software does not have the capability to recognize and process these types of inputs, any susceptibility model it produces may quickly become biased or even obsolete.
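One simple way to keep a persuasion profile from going stale is to let older evidence fade. The following is a minimal sketch rather than a method from the cited literature: the class `PersuasionProfile`, the smoothing factor `alpha`, the neutral starting value of 0.5, and the strategy names are all assumptions made for illustration. Each observed outcome is blended into an exponentially weighted estimate, so recent behavior gradually outweighs what the user did earlier.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PersuasionProfile:
    """Per-user estimates of how well each influence strategy works.

    Hypothetical structure for illustration only.
    """
    alpha: float = 0.3  # assumed smoothing factor: higher means faster forgetting
    effects: Dict[str, float] = field(default_factory=dict)

    def update(self, strategy: str, outcome: float) -> None:
        """Blend a new outcome (1.0 = user acted, 0.0 = user ignored) into the estimate."""
        previous = self.effects.get(strategy, 0.5)  # neutral starting value (assumption)
        self.effects[strategy] = (1 - self.alpha) * previous + self.alpha * outcome

    def best_strategy(self) -> str:
        """Return the strategy with the highest current estimate."""
        return max(self.effects, key=self.effects.get)


profile = PersuasionProfile()

# Early on, the user responds to "authority" messages and ignores "social_proof".
for _ in range(5):
    profile.update("authority", 1.0)
    profile.update("social_proof", 0.0)
early_choice = profile.best_strategy()

# Later, the user's susceptibility shifts in the opposite direction.
for _ in range(5):
    profile.update("authority", 0.0)
    profile.update("social_proof", 1.0)
late_choice = profile.best_strategy()
```

In this run the preferred strategy moves from `authority` to `social_proof` after the user's behavior changes. A profile that simply averaged all ten observations per strategy would rate both at 0.5 and would not register the shift.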

In a digital intervention the content delivered, the timing of the intervention, as well as the interface used can be personalized to suit the needs and wants of a user (Berkovsky et al., 2012). Expanding from it, personalized software features involve the systematic use of individual user characteristics to determine relevant software features for the user. Personalization strategies may include adaptation, context awareness, and self-learning: Adaptation uses responses a user provides to questions to create personalized user experience, whereas context awareness senses and uses, for instance, the user's current location, location history, date, and/or time to create it, and self-learning uses new behavior data generated from the changing behavioral patterns of the user and system used to automatically adapt to the user's preferences (Monteiro-Guerra et al., 2020). Personalization by self-learning strategy is much more dynamic and requires developing much more complex algorithms than adaptation and context-awareness.

Some software features offer more opportunities for strong personalization than others. Persuasive software features in the Persuasive Systems Design model (Oinas-Kukkonen and Harjumaa, 2009) can be used to identify features that are sensitive to strong personalization. See Table 2. Features that enable strong personalization are mainly related to the computer-human dialogue support.

A system can offer personalized reminders by predicting opportune moments for sending notifications for a user or delivering reminders based on, e.g., a user's activity learned over time (Ghanvatkar et al., 2019). Such reminders provide unique functionality for a user because they are based on the appropriate times that are convenient for the user to engage in the desired activity. Reminders can also be designed to be customizable by the user. Enabling the user to customize a reminder is a means of prompting the user at their preferred time. However, there is the danger that timeslots chosen by the user aren't effective in terms of engaging in the desired activity; hence a context-aware reminder that learns the user's interaction behavior may perhaps be more effective (Singh and Varshney, 2019).
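The idea of learning an opportune moment can be illustrated with a deliberately small sketch. It is not the approach of the cited studies: the class `ReminderScheduler`, the default hour, and the add-one smoothing are assumptions chosen for the example. The scheduler counts how often the user acted on reminders sent at each hour and proposes the hour with the best smoothed response rate, falling back to a default (for example, a time the user customized) until data exists.

```python
from collections import defaultdict


class ReminderScheduler:
    """Learns which hour of day a user tends to act on reminders (illustrative)."""

    def __init__(self, default_hour: int = 9):
        self.default_hour = default_hour  # used until any data is available
        self.sent = defaultdict(int)      # reminders sent per hour
        self.acted = defaultdict(int)     # reminders acted upon per hour

    def record(self, hour: int, acted: bool) -> None:
        """Store the outcome of one reminder sent at the given hour."""
        self.sent[hour] += 1
        if acted:
            self.acted[hour] += 1

    def response_rate(self, hour: int) -> float:
        """Add-one smoothed rate, so a single lucky response does not dominate."""
        return (self.acted[hour] + 1) / (self.sent[hour] + 2)

    def best_hour(self) -> int:
        """Hour with the highest smoothed response rate among hours tried so far."""
        if not self.sent:
            return self.default_hour
        return max(self.sent, key=self.response_rate)


scheduler = ReminderScheduler(default_hour=9)
initial_hour = scheduler.best_hour()

# Morning reminders are mostly ignored; evening reminders mostly work.
for acted in (True, False, False, False):
    scheduler.record(7, acted)
for acted in (True, True, True, False):
    scheduler.record(18, acted)
learned_hour = scheduler.best_hour()
```

Here the scheduler starts at the default hour and, after eight observations, proposes 18:00. A context-aware system would condition on more than the hour, such as location or current activity, but the structure of observing outcomes and then adjusting timing stays the same.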

Generic virtual rewards tailored for users can be persuasive but there is also room to personalize rewards so that rewards could become even more relevant or appealing to the user. This can be done by using the user's preference of a reward, matching with the user's values, beliefs and culture, among other characteristics. Personalized rewards tend to be valuable to the receiver because they can be designed to be especially meaningful for her (Paay et al., 2015) and to reinforce the desired behavior by boosting a user's motivation and hence be persuasive (Li et al., 2021).

Users tend to prefer people or things that resemble themselves in some manner, such as in their values and previous experience; thus, similarity means that the system is analogous to the user in some way. For example, avatars can be created to resemble the user in the information system (Rheu et al., 2020), it is possible to mimic a user's living environment or culture (Li et al., 2021), or the self-representation of a user can be personalized using dialect or slang.

Personalizing user interfaces (Nivethika et al., 2013) can make them more attractive and better matched to a person's liking, and subsequently enhance their persuasiveness. To enhance the look and feel of a user interface, it is important to consider the depth to which it can be personalized. Forms of personalization can include user-enabled customizations and system-driven personalization (Bunt et al., 2007; Abdullah and Adnan, 2008), the latter of which can be based on user preferences or those aspects that the system learns from a user by means of machine learning algorithms. Context-awareness may help the system to adapt to the user's situation at hand (e.g., Lee and Choi, 2011).

In addition to the computer-human dialogue features of reminders, rewards, similarity, and liking, also primary task support by virtual rehearsal is sensitive to strong personalization. Personalized virtual rehearsals are relevant for behavior change interventions that require the user to learn a new desired behavior (Peng, 2009). Providing personalized behavioral rehearsals can support a user to practice the desired behavior (Langrial et al., 2014). For example, in the study by Clarke et al. (2020) the application encourages physical activity and guides the user to learn new physical movements and practice the desired behavior by adapting to the user's movements in a virtual rehearsal video and providing real-time feedback.

An important question is at what point a user should notice that software is personalized in such a manner that the features and content are specific to his or her preferences, and what the impact of this perceived personalization is on user experience. This goes beyond simply claiming that software is personalized, which is usually the case for one-size-fits-all systems whose extent of personalization is far from true personalization. In the same vein, the loose use of personalization as a marketing buzzword (Kim, 2002) is not advisable.

A major challenge with strong personalization naturally is having enough detailed information about the user. At the beginning of use there is typically not enough data to start personalizing the app; therefore applications often run into the so-called cold-start problem (Banovic and Krumm, 2017). This can have an immediate impact on users who have high expectations for the system they are about to start using (Koch, 2002). To mitigate the cold-start problem and to meet the expectations of strong personalization, users can be asked survey questions about their preferences. Here again the level of personalization will be determined by the amount of information users are willing to give about themselves to the app at a stage when they know so little about it (Koch, 2002).

Some applications allow users to sign-up with user profiles from other platforms such as Facebook, Twitter, or LinkedIn. This may appeal particularly to users who like to maintain one profile across multiple applications (Karunanithi and Kiruthika, 2011); many of the user preferences have already been defined in that platform and they may be ready to be harnessed in other platforms, too. With the enforcement of the European Union's General Data Protection Regulation (GDPR) users own their data and can request a copy of their data, which may also contain information about their preferences in a format that can be, at least ideally speaking, imported into another application (Agyei and Oinas-Kukkonen, 2020). However, this often remains quite far from reality in practice.

Ideally, personalized interventions should perform better than one-size-fits-all interventions because they can better meet the specific and changing needs of a user (Rabbi et al., 2015a). Just as the extent of personalization in software may vary from weak to strong, the type of personalization may also evolve over time. This can occur in two major ways. The system may adapt to the user preferences and directly influence the interaction, or the user interacts with the app in such a manner that it changes the user model based on the user's behavior (Zhu et al., 2021). If the system dynamically adapts to the user, then the level or spectrum of personalization offered by the system will vary at different points in time. To achieve the goal of strong personalization in information systems there is a need to constantly keep learning and adapting to users' changing needs and preferences. Designers should seek to ensure that true personalization really happens and that it is made clearly recognizable for users. Examining the software and its features and determining the extent to which they can and should be personalized, on par with studying users' perception of such features, may provide meaningful insights into the value of personalization as well as users' desire for true personalization.

A user of a behavior change support system may have had specific goals in mind when he or she started to use the system. However, these goals may change significantly or even become obsolete over time. There is also a high likelihood that something is going to interfere with the continued use of the system. A hectic situation in life may cause a temporary lapse in use, which in turn can lead the user even to stop interacting with the system altogether. Modern information systems can monitor users' interactions effectively and can perhaps even ascertain when these changes happen, but they are not very good at determining the reasons for them, as this often requires direct feedback from the user. This lack of real-time and accurate predictive capability is one of the reasons why systems often do not correctly interpret the situation the user is in. Any interaction with a user who experiences a major change in life (for example, the person is hospitalized) can potentially result in miscommunication and thus may influence a decision to stop using the system.

It has been established that many users do not adhere to digital health interventions because of changes they face in their lives (Eysenbach, 2005; Karppinen et al., 2016; Lie et al., 2017). Eysenbach (2005) provides a list of hypothetical factors influencing non-usage and dropouts. For example, mundane reasons like lack of time are very common for not continuing in an intervention (Karppinen et al., 2016; Lie et al., 2017). Such lack of time is often due to difficulties participants face in their lives that de-prioritize the intervention. These events can vary a great deal, but essentially they mean that at that moment the use of the system is not very high on a user's priority list. The challenge for the support system is to handle these occurrences.

It is a common characteristic of digital health interventions to suffer from high dropout rates (Bremer et al., 2020). The number of dropouts tends to be heaviest within the first few weeks of the intervention (Eysenbach, 2005), and dropouts can often be attributed to attrition (Eysenbach, 2005). There have been a few advances in predicting dropouts. Pedersen et al. (2019) similarly observed that most dropouts happen early during the intervention, but they also identified three significant factors predicting dropouts: 2 weeks of inactivity in using the app, receiving less advice and engagement from the health coach, and quality of intervention program providers. Attrition happens over time, but the users tend to reduce their activity significantly a few weeks before the dropout (Pedersen et al., 2019). In many of these cases, it would be difficult to determine the real reasons without obtaining feedback from the user. User feedback is often provided reflectively when the period of use is over, but by then it is already too late to do anything about it. Thus, monitoring activity over time and responding to these lapses in a timely manner becomes the key to overcoming the challenge (Pedersen et al., 2019). In their study of digital health interventions, Pedersen et al. (2019) were able to predict dropouts with 89 percent precision using the machine learning technique known as the random forest model (cf. Ho, 1995). This model has also been used in other fields, for example, to predict academic grades and academic dropouts (Rovira et al., 2017). A particular challenge that remains is to design systems that can reduce dropouts and improve adherence at the beginning of a system's use, when users typically value pragmatic aspects (Biduski et al., 2020).
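Monitoring for lapses can start from very simple signals. The sketch below is a rule-based heuristic, not the random forest model used by Pedersen et al. (2019): the function names, the comparison of the last two weeks, and the "less than half" criterion are assumptions made for illustration, while the 14-day inactivity threshold mirrors the 2-week factor reported above. Such features could later serve as inputs to a trained classifier.

```python
from datetime import date, timedelta
from typing import List


def usage_features(usage_dates: List[date], today: date) -> dict:
    """Summarize recent activity from the days on which the app was used."""
    if not usage_dates:
        return {"days_inactive": None, "recent_week": 0, "previous_week": 0}
    recent_start = today - timedelta(days=7)
    previous_start = today - timedelta(days=14)
    recent = sum(1 for d in usage_dates if recent_start < d <= today)
    previous = sum(1 for d in usage_dates if previous_start < d <= recent_start)
    return {
        "days_inactive": (today - max(usage_dates)).days,
        "recent_week": recent,
        "previous_week": previous,
    }


def needs_check_in(features: dict, inactivity_days: int = 14) -> bool:
    """Heuristic flag: long inactivity, or activity dropping to less than half."""
    if features["days_inactive"] is None:
        return True  # never used: treated as at risk (an assumption)
    if features["days_inactive"] >= inactivity_days:
        return True
    return features["recent_week"] * 2 < features["previous_week"]


today = date(2022, 4, 26)
active_user = [today - timedelta(days=n) for n in range(5)]
fading_user = [today - timedelta(days=n) for n in (13, 12, 11, 10, 8, 2)]
lapsed_user = [today - timedelta(days=16)]

active_flag = needs_check_in(usage_features(active_user, today))
fading_flag = needs_check_in(usage_features(fading_user, today))
lapsed_flag = needs_check_in(usage_features(lapsed_user, today))
```

The active user is not flagged, whereas the fading user (five active days in the earlier week, one in the most recent week) and the lapsed user (16 days without use) are. Flagging the fading user before the 14-day threshold is reached reflects the observation that activity declines a few weeks before dropout.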

There is also a temporal aspect related to intention to use and actual use of the system, namely that a user perceives the system differently over time (Kujala et al., 2013). The temporality of user experience starts from anticipation and expectations of the interaction (Karapanos et al., 2009), and a sequential process for the user experience lifecycle can be defined (Pohlmeyer et al., 2010). Three main forces that are responsible for shifts in a user's experience over time have been described as familiarity, functional dependency, and emotional attachment (Karapanos et al., 2009).

Machine learning algorithms have the potential to predict dropouts, changes in users' goals or preferences, and the most suitable intervention types. Some behavior change support systems have demonstrated strategies which use personalized messaging, rotating interventions, and multi-armed bandits to gain feedback from users whilst trying out different intervention strategies (Paredes et al., 2014; Dempsey et al., 2015; Rabbi et al., 2015b; Kovacs et al., 2018). Rotating interventions seek to avoid the decline in effectiveness from static interventions by offering a multitude of different types of interventions where the support system acts more as a coach (Kovacs et al., 2018). With multi-armed bandits, the recommender system proposes different interventions for the user and learns directly from the feedback it receives (Paredes et al., 2014). Machine learning algorithms may ease the effort to understand a user's changes on the individual level and respond to those changes accordingly.
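The bandit idea can be made concrete with epsilon-greedy selection, one of the simplest bandit strategies; the cited systems may well use other algorithms. In this sketch the class `EpsilonGreedyBandit`, the intervention names, the exploration rate, and the simulated user are all hypothetical. The system tries every intervention once, then mostly proposes the one with the best average feedback while occasionally exploring the alternatives.

```python
import random
from typing import Dict, List


class EpsilonGreedyBandit:
    """Chooses among interventions and learns from per-user feedback (illustrative)."""

    def __init__(self, arms: List[str], epsilon: float = 0.1, seed: int = 0):
        self.arms = list(arms)
        self.epsilon = epsilon  # assumed exploration rate
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {arm: 0 for arm in self.arms}
        self.values: Dict[str, float] = {arm: 0.0 for arm in self.arms}

    def select(self) -> str:
        """Try each arm once, then explore with probability epsilon."""
        untried = [arm for arm in self.arms if self.counts[arm] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return self.best_arm()

    def update(self, arm: str, reward: float) -> None:
        """Incrementally update the running mean reward of the chosen arm."""
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]

    def best_arm(self) -> str:
        """Arm with the highest estimated mean reward so far."""
        return max(self.arms, key=lambda arm: self.values[arm])


def simulated_user(intervention: str) -> float:
    """Stand-in for real feedback: this user only responds to walk prompts."""
    return 1.0 if intervention == "walk_prompt" else 0.0


bandit = EpsilonGreedyBandit(["breathing_exercise", "walk_prompt", "sleep_tip"])
for _ in range(200):
    chosen = bandit.select()
    bandit.update(chosen, simulated_user(chosen))
preferred = bandit.best_arm()
```

With this simulated user the bandit settles on `walk_prompt`. Because it keeps exploring, it would also notice if the user later began responding to a different intervention, although plain running means adapt slowly; weighting recent feedback more heavily is a common remedy when preferences are expected to drift.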

Recommender systems provide an example of weak personalization in e-commerce (Resnick and Varian, 1997; Lu et al., 2015). Sophisticated algorithms used by companies such as Netflix and Amazon are prolific at recommending products to people who use their services. On the one hand such algorithms have been developed to increase the profits and competitiveness of these companies, and on the other hand to increase user loyalty and retention over time. In reality, the weak personalization they offer does not care that much about the individual user and can even feel impersonal for the user.

Attrition chasm is defined as the point where the user stops using the system or at least the use of the system declines steeply over time; these phases were described by Eysenbach (2005) with recognition of high dropout rates from digital health trials after the curiosity phase. In Figure 1, we highlight the need to cross the attrition chasm to reach a more stable use phase. Biduski et al. (2020) further suggest that the user preferences change over time from general pragmatic aspects toward more individualized needs, where the user develops a deeper relationship with the system. We postulate that once the user gets past the curiosity phase of the experience, the benefits from true and from strong personalization increase relative to time spent with the system. Considering the behavior change types, A-Change regarding attitudes, B-Change related to behaviors and C-Change for compliance, research shows that it is considerably easier to make the user comply a few times during an intervention (C-Change), but to achieve attitude change (A-Change) takes a much longer time (Oinas-Kukkonen, 2013). If the desired outcome of the system is to bring about an A-Change, moving from weak to strong personalization is likely required, while not neglecting the psychological and emotional needs of the user during the overall process. In Figure 1, functional dependence starts with pragmatic qualities but continues to build over time along with familiarity, while the personalized qualities that cause emotional attachment are similarly built slowly and over time.

During the curiosity phase and shortly afterward, C-Change is more readily achievable. However, as time passes the user is likely to appreciate ways to individualize the system to fit their preferences. In Figure 1, the types of behavior changes are presented to highlight the relative time required to achieve that type of change rather than to suggest that the change could not occur at an earlier or later stage depending on the individual. Notably, after the curiosity phase, the level of engagement comes into play, as the system builds a relationship with the user through meaningful interactions. This is when the system could learn a lot from the user. Nevertheless, Yardley et al. (2016) emphasize that more engagement with the user does not always mean that it is effective. This means that the system must be designed so that it is intelligent enough to adapt its level and timing of engagement.

Based on the three phases illustrated, Table 3 provides an example of true personalization strategies that can be used with a health behavior change support system. It should be noted that the curiosity phase can be relatively short while crossing the attrition chasm can take a longer time. As the duration of these phases may differ a lot between users and between systems, the goal should be to use the time effectively to learn and adapt to users' preferences and needs. In the example in Table 3, the system offers a variety of intervention mechanisms (content and software functionality) while employing machine learning algorithms not only to better predict user needs but also to improve the existing user profiles. Such a system can be made more adaptive to users by incorporating behavior change dimensions into the user profiles, for example, with the use of persuasion profiles (Kaptein et al., 2015). In addition, monitoring adherence and usage gaps in health interventions is vital from early on, and it should continue throughout the life cycle of the intervention. While it is not possible to prevent all dropouts, this personalization strategy should increase the adherence rate. Once the user reaches the stable use phase, the system should no longer rely on the baseline user profiles, as it provides content and functionality based on the individualized user profile.
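As an illustration of what monitoring usage gaps could look like in practice, the following minimal Python sketch scans a user's session dates for long pauses and flags users whose last session is too far in the past. The function names, the seven-day threshold, and the sample dates are assumptions made for this example only; they are not taken from Table 3 or from any of the cited systems.

```python
from datetime import date


def find_usage_gaps(session_dates, max_gap_days=7):
    """Return (start, end, length_in_days) for every pause longer than max_gap_days.

    The 7-day default is an illustrative assumption, not an empirical threshold.
    """
    ordered = sorted(set(session_dates))
    gaps = []
    for previous, current in zip(ordered, ordered[1:]):
        gap = (current - previous).days
        if gap > max_gap_days:
            gaps.append((previous, current, gap))
    return gaps


def is_at_risk(session_dates, today, max_gap_days=7):
    """Flag a user whose most recent session is older than max_gap_days."""
    if not session_dates:
        return True  # no recorded use at all is treated as a risk signal
    return (today - max(session_dates)).days > max_gap_days


# Hypothetical session log for one user.
sessions = [date(2022, 1, 1), date(2022, 1, 2), date(2022, 1, 4), date(2022, 1, 20)]
gaps = find_usage_gaps(sessions)
```

A real system would likely combine such a signal with richer predictors of dropout, but even a simple gap check can trigger a timely reminder or a change in intervention content.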

Users' needs, wants, goals, and preferences often change during their time with a system, which further influences its usage patterns. For example, the experience of anticipating the use of a new system can positively or negatively affect the intention to use the system, or it can have very little effect on it. This depends on the anticipation and expectations that the user has toward the system, but also on their prior experience with similar systems (Karapanos et al., 2009; Biduski et al., 2020). The study by Biduski et al. (2020) indicates that it is important early on to build a positive image of the system and to focus on the more pragmatic aspects mentioned earlier. Here, the focus should be on system qualities such as ease of use, usefulness, efficiency, convenience, and understandability, even if admittedly this can also vary between different kinds of users. Then, over time, the relationship with the user should be fostered. It might be prudent to guide the user through the early experience, for example by utilizing tunneling to support them in performing the primary task of the system.

Recognizing the user's personality can provide an additional layer of opportunities for strong personalization. Personality traits have a clear effect, but its degree can vary between different domains (Kaptein et al., 2015; Hales et al., 2017). For example, Su et al. (2020) found that young patients who are introverted but open to experience were both interested and willing to use a mobile diabetes application. In their study, conscientiousness, which also reflects self-discipline, did not play a significant role in the app use intentions. However, this result is inconsistent with another study on weight loss carried out by Hales et al. (2017), where self-discipline was found to be a significant factor. Su et al. (2020) argue that this discrepancy is due to population differences, but it could also be because the applications are of different types. Su et al. (2020) also found that open-mindedness was an important predictor of app use and adoption, and confirmed earlier findings that more conscientious users are likely to perform tasks more dutifully (Shambare, 2013). Yet, conscientious users may have difficulties adapting to new methods if they consider them time-consuming or overly complex (Shambare, 2013; Su et al., 2020). Surprisingly, although they investigated the role of emotional stability, Su et al. (2020) did not find it to play a significant role in the acceptance of the app. Nevertheless, their conclusion was that understanding personality traits is important and can actually be used to predict who will continue to use the system over the longer term or adhere to a health intervention.

User experiences also develop and change over time and impact long-term use (Vermeeren et al., 2010; Kim et al., 2015). For instance, the use of a behavior change support system designed for a year-long weight loss journey typically starts with an active curiosity phase, but user activity declines as time elapses. There is also a great deal of variance in activity between users. Indeed, in most cases, users are far from being a homogeneous group, and often those with less interaction with the system tend to have poorer outcomes (Karppinen et al., 2016). As time passes, the relationship the user has with the system also changes, and those users who have developed a stronger and more meaningful relationship with it are likely to get a better user experience over time, which then results in an improved outcome for the behavior change intervention (Karapanos et al., 2009; Biduski et al., 2020).

We suggest that it might not always be the best strategy to provide strong personalization in the early stages. At this time, a user may be overwhelmed with the amount of information as well as the amount of software functionality and navigational options the new system has to offer; basic design principles related to ease of use and providing guidance apply here, too. In later use periods, personalization may become much more evident when the user already has become accustomed to the system and familiar with its contents and features. Interactions can be further improved when the system knows the user better. It is essential to foster user loyalty by building a relationship between the user and the system. Therefore, true personalization may benefit from interactions instituted incrementally over time.
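The idea of instituting personalization incrementally can be sketched as a simple staging rule. The following Python example is only a hypothetical illustration: the stage names, the `UsageSummary` fields, and the thresholds (14 days, 30 sessions) are assumptions chosen for the example and are not values proposed in this article.

```python
from dataclasses import dataclass


@dataclass
class UsageSummary:
    """Hypothetical per-user summary; field names are illustrative."""
    days_since_signup: int
    completed_sessions: int


def personalization_stage(usage, curiosity_days=14, stable_sessions=30):
    """Pick how deeply to personalize, deepening as the user gains experience.

    - "guided": early use; rely on ease of use and guidance, minimal personalization.
    - "adaptive": system starts adapting content based on what it has learned.
    - "individualized": content and functionality follow the individual profile.
    """
    if usage.days_since_signup <= curiosity_days:
        return "guided"
    if usage.completed_sessions < stable_sessions:
        return "adaptive"
    return "individualized"


newcomer = UsageSummary(days_since_signup=3, completed_sessions=4)
regular = UsageSummary(days_since_signup=40, completed_sessions=12)
veteran = UsageSummary(days_since_signup=200, completed_sessions=85)
stages = [personalization_stage(u) for u in (newcomer, regular, veteran)]
```

In practice the transition points would differ between users and systems, so fixed thresholds like these would at most serve as a starting point to be refined from usage data.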

Artificial intelligence (AI) is an umbrella term for a combination of techniques and methods for creating systems that can sense, reason, learn, act, and be used to solve problems (Rowe and Lester, 2020). AI systems can be made to perform a variety of tasks such as playing chess or intellectual tasks involving the use of some human elements of senses and reason. AI methodologies include big data analytics (to discover users' behavioral patterns), machine learning (using algorithms to find patterns in data with supervised, unsupervised, semi-supervised, reinforcement learning, and deep learning methods), natural language processing (the capability of computers to process, analyze, and synthesize human languages), and cognitive computing (simulating human thinking processes and self-learning capability) (Chang, 2020). Table 4 outlines AI methodologies, their affordances for personalization, example applications, and ethical constraints.

AI can drive the development of truly personalized systems. For example, this can involve effecting behavior change by enhancing the efficiency of self-monitoring (Chew et al., 2021). Data can be collected and used to optimize goal setting and action planning (building and validating personalized predictive models), and provide personalized micro-interventions (e.g., prompts, nudges, and suggestions) in real-time to achieve the desired goal (Chew et al., 2021). Personalization enables system-tailored content and functionality to be offered to users based on their characteristics, needs, and preferences. Such systems can produce rich data about the user, process the data, provide insights, and support the user's ongoing activities. For instance, just-in-time systems (Intille et al., 2003) employ decision rules which use the current state of the user (e.g., emotional state and environmental conditions) as input to choose the time and type of intervention to deliver to the user (Menictas et al., 2019).
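To make the notion of a decision rule more concrete, the following Python sketch maps a user's current state to the timing and type of a micro-intervention. It is a hand-written rule under stated assumptions, not the method of Intille et al. (2003) or Menictas et al. (2019): the `UserState` fields, the stress scale, the thresholds, and the intervention names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserState:
    """Hypothetical snapshot of the user's current state."""
    stress_level: float             # assumed scale: 0.0 (calm) to 1.0 (highly stressed)
    is_busy: bool                   # e.g., inferred from calendar or activity sensing
    minutes_since_last_prompt: int


def choose_intervention(state, min_interval_minutes=120) -> Optional[str]:
    """Decide whether to intervene now and, if so, with which type of prompt.

    Returns None when the moment is judged unsuitable (timing decision),
    otherwise the name of an intervention (type decision).
    """
    # Timing: stay silent if the user is busy or was prompted recently.
    if state.is_busy or state.minutes_since_last_prompt < min_interval_minutes:
        return None
    # Type: match the intervention to the current emotional state.
    if state.stress_level >= 0.7:
        return "breathing_exercise"
    if state.stress_level >= 0.3:
        return "short_walk_suggestion"
    return "progress_feedback"


decision = choose_intervention(
    UserState(stress_level=0.8, is_busy=False, minutes_since_last_prompt=180)
)
```

A learned policy would replace the fixed thresholds with parameters estimated from each user's data, which is where the rule moves from tailoring toward stronger personalization.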

To elaborate on the move toward a strong personalization strategy, developers need to aim beyond user segments by designing and developing systems that recognize individual differences and preferences. Figure 2 provides a framework that describes how such personalization can be achieved. The availability of both technology and related skills needs to be considered when utilizing artificial intelligence. It is crucial to carefully consider the type of AI needed to improve personalization. For example, tailoring might be good enough for weak personalization and suitable for systems that intend to effect a compliance type of change (C-Change). This is because the aim is for users simply to comply with the requests of the system. Very limited AI capability may well be enough to achieve this effect. Strong personalization, to help realize B-Change or A-Change, may require much more complex AI mechanisms that can model user characteristics, preferences, changing needs, etc., often in real-time.

In developing personalized systems powered by AI, ethical challenges can emerge from the Use Context (including laws and regulations in specific problem domains such as health, finance, or retail), User Context (including privacy, safety, and preferences), or Technology Context (including security and technological limitations), or any combination of these. Although AI can increase the feasibility of developing truly personalized systems, there are ethical issues that developers grapple with. These include, but are not limited to, transparency (Yu and Alì, 2019), privacy (Chowdhary, 2020), safety and performance (Behera et al., 2022), accessibility to artificial intelligence (Morris, 2020), moral agency (Swanepoel, 2021), biases stemming from the data collection process, the data, and the algorithm (Li et al., 2019), threats to human autonomy (Sankaran et al., 2020), environmental and ecological challenges arising from the hardware and energy consumption required to develop and operate AI technologies (Li et al., 2019), liability issues in the event of damage or harm (Cerka et al., 2015), and social acceptability (Morris, 2020).

The ethical issues in AI technologies are caused by limitations in the technology itself [e.g., the use of black-box algorithms such as deep learning, random forest, and support vector machines, which are less explainable but provide high accuracy (Chang, 2020)], insufficiencies in AI regulations and policy, and the insufficiency of existing ethical design principles (Li et al., 2019). Also, true personalization requires detailed information about the user, and hence users must be willing to reveal personal information to benefit from it (Kobsa, 2002). This personal information needs to be collected, analyzed, and interpreted so that truly personalized user experiences can be provided. For this process, many data management, sharing, and privacy requirements should be considered and adhered to. These ethical issues make it critical to obtain informed consent from the user by providing clear and adequate information (Agyei and Oinas-Kukkonen, 2020; The European Commission, 2021). Indeed, there is every reason to believe that AI methods will be used in the future to a very large extent to enhance personalization. From an ethical perspective, it is important to be transparent to the user about the intention of the AI system in use while also not collecting information about the user that is not relevant to the operation of the system.

In an era when there is so much discussion around personalized systems and technologies, this article sought to raise interest in and discuss Personalization Myopia and the nature of true personalization. Even with a plethora of academic research into personalization in general, strong personalization is yet to gain notable momentum. Personalizing software functionality is much more complex than personalizing contents via, e.g., feedback, simulation (Mcalpine and Flatla, 2016), or social comparison (Zhu et al., 2021). Thus, “personal” and “personalized” are different concepts.

Users also change over time. This means that the grounds for personalization may also change without the system noticing it, leaving the system with an outdated view of the user. We posited here that trying to make a system personalized from the very beginning of usage might even be harmful; rather, the depth of personalization could be increased over time. How a change in behavior can be reliably detected and measured remains a challenge. In addition, how can a change in a user's goal be detected and determined if the user is not provided with an explicit goal-setting feature? Furthermore, the technological platforms upon which the information system has been built may also change.
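One naive way to notice that a profile may have become outdated is to compare recent behavior against the baseline on which the profile was built. The Python sketch below does this with a simple standardized difference of means. It is a heuristic offered for illustration only and does not resolve the detection challenge raised above; the function name, the threshold of two standard deviations, and the sample values (daily active minutes) are assumptions.

```python
from statistics import mean, stdev


def profile_may_be_outdated(baseline, recent, threshold=2.0):
    """Return True if recent behavior deviates strongly from the baseline.

    baseline: measurements the current profile was built on (e.g., daily active minutes)
    recent:   measurements from the most recent observation window
    threshold: allowed shift of the recent mean, in baseline standard deviations
               (an illustrative assumption, not an empirically validated value)
    """
    if len(baseline) < 2 or not recent:
        return False  # not enough data to judge
    spread = stdev(baseline)
    if spread == 0:
        return mean(recent) != mean(baseline)
    return abs(mean(recent) - mean(baseline)) / spread > threshold


baseline_minutes = [30, 32, 29, 31, 30]
changed = profile_may_be_outdated(baseline_minutes, [10, 12, 11])
unchanged = profile_may_be_outdated(baseline_minutes, [30, 31, 29])
```

A flag of this kind says only that something has shifted, not why; deciding whether the user's goal, context, or preferences changed would still require further evidence or asking the user.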

Yet, whether personalization is strong or weak, some kind of user profile is needed. With users being profiled, the need for privacy and the acknowledgment of related laws and regulations (e.g., GDPR and the Medical Device Regulation in the European Union) play a critically important role in the development of applications. In practice, weak personalization may in many cases be the more desirable approach, as it is likely to require less data from users on an individual level. We would also like to see more contributions to the scientific discourse around theory vs. design-driven approaches (Arriaga et al., 2013).

There remain many research challenges. The user's emotions impact both user experience and continuance intention, but the relationship between user emotions and personalization is not well understood and should be explored in more detail, for instance with regard to health behavior change. In addition, support systems in the health domain often require continuous use and adherence to fulfill their purpose. There are many other open questions, too: What kind of general claims can be made about perceived user experiences if each user is offered a different software entity? Does customization lead to the Do-Your-Own-System dilemma? Information systems, in their endeavor to provide a user with fitting, useful, and/or influential information, may at the same time be filtering out information that actually could be highly relevant or perhaps even critical for the user; thus, personalized solutions are predisposed to the filter paradox (Oinas-Kukkonen and Oinas-Kukkonen, 2013, p. 84). Furthermore, there may be underprivileged user groups, such as the elderly, who might have little say or perhaps no understanding at all about the downside of what such filtering would mean in practice. Yet another challenging question is whether personalization kills exploration. Such questions deserve more attention in future research.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

HO-K: conceptualization, methodology, funding acquisition, resources, and supervision. HO-K, SP, and EA: writing—original draft preparation and review and editing. All authors have read and agreed to the published version of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdullah, N., and Adnan, W. A. W. (2008). Personalized user interface model of web application. 2008 Int. Symp. Inform. Technol. 1, 1–5. doi: 10.1109/ITSIM.2008.4631581

Agyei, F. E. E. Y., and Oinas-Kukkonen, H. (2020). GDPR and systems for behaviour change: a systematic review. Int. Conf. Pers. Technol. 234–246. doi: 10.1007/978-3-030-45712-9_18

Andrews, P. Y. (2012). System personality and persuasion in human-computer dialogue. ACM Trans. Interact. Intellig. Syst. (TiiS) 2, 1–27. doi: 10.1145/2209310.2209315

Arriaga, R. I., Miller, A. D., Mynatt, E. D., Poole, E. S., and Pagliari, C. (2013). “Theory vs. design-driven approaches for behavior change research,” in CHI'13 Extended Abstracts on Human Factors in Computing Systems (Paris), 2455–2458.

Banovic, N., and Krumm, J. (2017). Warming up to cold start personalization. Proc. ACM Interact. Mobile Wearable Ubiquitous Technol. 1, 1–13. doi: 10.1145/3161175

Barrett, M. A., Humblet, O., Hiatt, R. A., and Adler, N. E. (2013). Big data and disease prevention: from quantified self to quantified communities. Big Data 1, 168–175. doi: 10.1089/big.2013.0027

Behera, R. K., Bala, P. K., and Dhir, A. (2019). The emerging role of cognitive computing in healthcare: a systematic literature review. Int. J. Med. Inform. 129, 154–166. doi: 10.1016/j.ijmedinf.2019.04.024

Behera, R. K., Bala, P. K., Rana, N. P., and Kizgin, H. (2022). Cognitive computing based ethical principles for improving organisational reputation: a B2B digital marketing perspective. J. Bus. Res. 141, 685–701. doi: 10.1016/j.jbusres.2021.11.070

Berkovsky, S., Freyne, J., and Oinas-Kukkonen, H. (2012). Influencing individually: fusing personalization and persuasion. ACM Trans. Interact. Intellig. Syst. (TiiS) 2, 312. doi: 10.1145/2209310.2209312

Biduski, D., Bellei, E. A., Rodriguez, J. P. M., Zaina, L. A. M., and De Marchi, A. C. B. (2020). Assessing long-term user experience on a mobile health application through an in-app embedded conversation-based questionnaire. Comput. Hum. Behav. 104, 106169. doi: 10.1016/j.chb.2019.106169

Borghouts, J., Eikey, E., Mark, G., De Leon, C., Schueller, S. M., Schneider, M., et al. (2021). Barriers to and facilitators of user engagement with digital mental health interventions: systematic review. J. Med. Internet Res. 23, e24387. doi: 10.2196/24387

Bremer, V., Chow, P. I., Funk, B., Thorndike, F. P., and Ritterband, L. M. (2020). Developing a process for the analysis of user journeys and the prediction of dropout in digital health interventions: machine learning approach. J. Med. Internet Res. 22, e17738. doi: 10.2196/17738

Brusilovsky, P. (2001). Adaptive hypermedia. User Model. User Adapted Interact. 11, 87–110. doi: 10.1023/A:1011143116306

Bunt, A., Conati, C., and Mcgrenere, J. (2007). “Supporting interface customization using a mixed-initiative approach,” in Proceedings of the 12th International Conference on Intelligent User Interfaces (Honolulu, HI), 92–101.

Cacioppo, J. T., Petty, R. E., and Kao, C. F. (2010). The efficient assessment of need for cognition. J. Pers. Assess. 48, 306–307. doi: 10.1207/s15327752jpa4803_13

Cerka, P., Grigiene, J., and Sirbikyte, G. (2015). Liability for damages caused by artificial intelligence. Comput. Law Secur. Rev. 31, 376–389. doi: 10.1016/j.clsr.2015.03.008

Chang, A. (2020). “The role of artificial intelligence in digital health,” in Digital Health Entrepreneurship, eds. S. Wulfovich and A. Meyers (Springer, Cham), 71–81.

Chew, H. S. J., Ang, W. H. D., and Lau, Y. (2021). The potential of artificial intelligence in enhancing adult weight loss: a scoping review. Public Health Nutr. 24, 1993–2020. doi: 10.1017/S1368980021000598

Chowdhary, K. R. (2020). Natural language processing. Fund. Artif. Intellig. 603–649. doi: 10.1007/978-81-322-3972-7_19

Clarke, C., Cavdir, D., Chiu, P., Denoue, L., and Kimber, D. (2020). “Reactive video: adaptive video playback based on user motion for supporting physical activity,” in Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology (New York, NY), 196–208.

Cremonesi, P., Garzotto, F., and Turrin, R. (2012). Investigating the persuasion potential of recommender systems from a quality perspective. ACM Trans. Interact. Intellig. Syst. (TiiS) 2, 1–41. doi: 10.1145/2209310.2209314

Dempsey, W., Liao, P., Klasnja, P., Nahum-Shani, I., and Murphy, S. A. (2015). Randomised trials for the Fitbit generation. Significance 12, 20–23. doi: 10.1111/j.1740-9713.2015.00863.x

Dijkhuis, T. B., Blaauw, F. J., van Ittersum, M. W., Velthuijsen, H., and Aiello, M. (2018). Personalized physical activity coaching: a machine learning approach. Sensors 18, 623. doi: 10.3390/s18020623

Dijkstra, A. (2006). “Technology adds new principles to persuasive psychology: evidence from health education,” in International Conference on Persuasive Technology. Berlin, Heidelberg: Springer, 16–26.

Dijkstra, A. (2014). The persuasive effects of personalization through: name mentioning in a smoking cessation message. User Model. User Adapt. Interact. 24, 393–411. doi: 10.1007/s11257-014-9147-x

Evans, D. E., and Rothbart, M. K. (2007). Developing a model for adult temperament. J. Res. Pers. 41, 868–888. doi: 10.1016/j.jrp.2006.11.002

Eysenbach, G. (2005). The law of attrition. J. Med. Internet Res. 7, e402. doi: 10.2196/jmir.7.1.e11

Fischer, G. (2001). User modeling in human–computer interaction. User Model. User Adapt. Interact. 11, 65–86. doi: 10.1023/A:1011145532042

Ghanvatkar, S., Kankanhalli, A., and Rajan, V. (2019). User models for personalized physical activity interventions: scoping review. JMIR mHealth uHealth 7, e11098. doi: 10.2196/11098

Habegger, B., Hasan, O., Brunie, L., Bennani, N., Kosch, H., and Damiani, E. (2014). Personalization vs. privacy in big data analysis. Int. J. Big Data, 25–35.

Hales, S., Turner-McGrievy, G. M., Wilcox, S., Davis, R. E., Fahim, A., Huhns, M., et al. (2017). Trading pounds for points: engagement and weight loss in a mobile health intervention. Digital Health 3, 205520761770225. doi: 10.1177/2055207617702252

Halko, S., and Kientz, J. A. (2010). “Personality and persuasive technology: an exploratory study on health-promoting mobile applications,” in International Conference on Persuasive Technology. Berlin, Heidelberg: Springer, 150–161.

Hariri, R. H., Fredericks, E. M., and Bowers, K. M. (2019). Uncertainty in big data analytics: survey, opportunities, and challenges. J. Big Data 6, 1–16. doi: 10.1186/s40537-019-0206-3

Hildesheim, W., and Hildesheim, W. (2018). Cognitive computing – the new paradigm of the digital world. Digital Marketpl. Unleas. 265–274. doi: 10.1007/978-3-662-49275-8_27

Ho, T. K. (1995). “Random decision forests,” in Proceedings of the International Conference on Document Analysis and Recognition. (Montreal, QC: IEEE Computer Society), 278–282.

Intille, S. S., Kukla, C., Farzanfar, R., and Bakr, W. (2003). “Just-in-time technology to encourage incremental, dietary behavior change,” in AMIA Annual Symposium Proceedings. American Medical Informatics Association, 874.

Kaptein, M., De Ruyter, B., Markopoulos, P., and Aarts, E. (2012). Adaptive persuasive systems: a study of tailored persuasive text messages to reduce snacking. ACM Trans. Interact. Intellig. Syst. (TiiS) 2, 1–25. doi: 10.1145/2209310.2209313

Kaptein, M., and Eckles, D. (2010). “Selecting effective means to any end: futures and ethics of persuasion profiling,” in International Conference on Persuasive Technology, Berlin, Heidelberg: Springer, 82–93.

Kaptein, M., Markopoulos, P., De Ruyter, B., and Aarts, E. (2015). Personalizing persuasive technologies: explicit and implicit personalization using persuasion profiles. Int. J. Hum. Comput. Stud. 77, 38–51. doi: 10.1016/j.ijhcs.2015.01.004

Karapanos, E., Zimmerman, J., Forlizzi, J., and Martens, J. B. (2009). “User experience over time: an initial framework,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Boston, MA), 729–738.

Karppinen, P., Oinas-Kukkonen, H., Alahäivälä, T., Jokelainen, T., Keränen, A. M., Salonurmi, T., et al. (2016). Persuasive user experiences of a health Behavior Change Support System: a 12-month study for prevention of metabolic syndrome. Int. J. Med. Inform. 96, 51–61. doi: 10.1016/j.ijmedinf.2016.02.005

Karunanithi, D., and Kiruthika, B. (2011). “Single sign-on and single log out in identity management,” in International Conference on Nanoscience, Engineering and Technology. (Chennai: ICONSET), 607–611.

Kim, H. K., Han, S. H., Park, J., and Park, W. (2015). How user experience changes over time: a case study of social network services. Hum. Fact. Ergon. Manufact. Serv. Indust. 25, 659–673. doi: 10.1002/hfm.20583

Kim, W. (2002). Personalization: definition, status, and challenges ahead. J. Object Technol. 1, 29–40. doi: 10.5381/jot.2002.1.1.c3

Klasnja, P., Consolvo, S., and Pratt, W. (2011). “How to evaluate technologies for health behavior change in HCI research,” in Conference on Human Factors in Computing Systems – Proceedings, 3063–3072.

Kobsa, A. (2001). “Generic user modeling systems,” in User Modeling and User-Adapted Interaction. Springer, 49–63.

Kobsa, A. (2002). Personalized hypermedia and international privacy. Commun. ACM 45, 64–67. doi: 10.1145/506218.506249

Koch, M. (2002). “Global identity management to boost personalization,” in Proceedings of Research Symposium on Emerging Electronic Markets (Basel), 137–147.

Kovacs, G., Wu, Z., and Bernstein, M. S. (2018). “Rotating online behavior change interventions increases effectiveness but also increases attrition,” in Proceedings of the ACM on Human-Computer Interaction. New York, NY: ACM PUB27, 1–25.

Kujala, S., Vogel, M., Obrist, M., and Pohlmeyer, A. E. (2013). “Lost in time: the meaning of temporal aspects in user experience,” in CHI'13 Extended Abstracts on Human Factors in Computing Systems (Paris: Association for Computing Machinery), 559–564.

Langrial, S., Lehto, T., Oinas-Kukkonen, H., Harjumaa, M., and Karppinen, P. (2012). “Native mobile applications for personal well-being: a persuasive systems design evaluation,” in PACIS 2012 Proceedings (Hochiminh City).

Langrial, S., Oinas-Kukkonen, H., Lappalainen, P., and Lappalainen, R. (2014). “Managing depression through a behavior change support system without face-to-face therapy,” in International Conference on Persuasive Technology (Berlin), 155–166.

Lee, D., Oh, K. J., and Choi, H. J. (2017). “The chatbot feels you - A counseling service using emotional response generation,” in 2017 IEEE International Conference on Big Data and Smart Computing (BigComp) (Jeju: Institute of Electrical and Electronics Engineers Inc), 437–440.

Lee, H., and Choi, Y. S. (2011). “Fit your hand: personalized user interface considering physical attributes of mobile device users,” in Proceedings of the 24th Annual ACM Symposium Adjunct on User Interface Software and Technology (Santa Barbara), 59–60.

Li, G., Deng, X., Gao, Z., and Chen, F. (2019). “Analysis on ethical problems of artificial intelligence technology,” in Proceeding of the 2019 on Modern Educational Technology. Association for Computing Machinery, 101–105.

Li, T., Chen, P., and Tian, Y. (2021). Personalized incentive-based peak avoidance and drivers' travel time-savings. Transport Policy 100, 68–80. doi: 10.1016/j.tranpol.2020.10.008

Lie, S. S., Karlsen, B., Oord, E. R., Graue, M., and Oftedal, B. (2017). Dropout from an eHealth intervention for adults with type 2 diabetes: a qualitative study. J. Med. Internet Res. 19, e7479. doi: 10.2196/jmir.7479

Lu, J., Wu, D., Mao, M., Wang, W., and Zhang, G. (2015). Recommender system application developments: a survey. Decision Supp. Syst. 74, 12–32. doi: 10.1016/j.dss.2015.03.008

Mcalpine, R., and Flatla, D. R. (2016). “Real-time mobile personalized simulations of impaired colour vision,” in Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility (Reno), 181–189.

Menictas, M., Rabbi, M., Klasnja, P., and Murphy, S. (2019). Artificial intelligence decision-making in mobile health. Biochemist 41, 20–24. doi: 10.1042/BIO04105020

Monteiro-Guerra, F., Rivera-Romero, O., Fernandez-Luque, L., and Caulfield, B. (2020). Personalization in real-time physical activity coaching using mobile applications: a scoping review. IEEE J. Biomed. Health Inform. 24, 1738–1751. doi: 10.1109/JBHI.2019.2947243

Nikoloudakis, I. A., Crutzen, R., Rebar, A. L., Vandelanotte, C., Quester, P., Dry, M., et al. (2018). Can you elaborate on that? Addressing participants' need for cognition in computer-tailored health behavior interventions. Health Psychol. Rev. 12, 437–452. doi: 10.1080/17437199.2018.1525571

Nivethika, M., Vithiya, I., Anntharshika, S., and Deegalla, S. (2013). “Personalized and adaptive user interface framework for mobile application,” in 2013 International Conference on Advances in Computing, Communications and Informatics (ICACCI). (Mysore: IEEE), 1913–1918.

Oinas-Kukkonen, H. (2013). A foundation for the study of behavior change support systems. Personal Ubiquit. Comput. (Tampere), 17, 1223–1235. doi: 10.1007/s00779-012-0591-5

Oinas-Kukkonen, H. (2018). “Personalization myopia: a viewpoint to true personalization of information systems,” in Proceedings of the 22nd International Academic Mindtrek Conference, 88–91.

Oinas-Kukkonen, H., and Harjumaa, M. (2009). Persuasive systems design: key issues, process model, and system features. Commun. Assoc. Inform. Syst. 24, 485–500. doi: 10.17705/1CAIS.02428

Oinas-Kukkonen, H., and Oinas-Kukkonen, H. (2013). Humanizing the Web: Change and Social Innovation. London: Palgrave Macmillan.

Paay, J., Kjeldskov, J., Skov, M. B., Lichon, L., and Rasmussen, S. (2015). “Understanding individual differences for tailored smoking cessation apps,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (Seoul: Crossings), 1699–1708.

Paredes, P., Gilad-Bachrach, R., Czerwinski, M., Roseway, A., Rowan, K., and Hernandez, J. (2014). “Poptherapy: coping with stress through pop-culture,” in Proceedings of the 8th International Conference on Pervasive Computing Technologies for HealthCare (Oldenburg: ICST), 109–117.

Parmar, V., Keyson, D., and de Bont, C. (2009). Persuasive technology to shape social beliefs: a case of persuasive health information systems for rural women in India. Commun. Assoc. Inform. Syst. 24, 25. doi: 10.17705/1CAIS.02425

Pedersen, D. H., Mansourvar, M., Sortsø, C., and Schmidt, T. (2019). Predicting dropouts from an electronic health platform for lifestyle interventions: analysis of methods and predictors. J. Med. Internet Res. 21, e13617. doi: 10.2196/13617

Peng, W. (2009). Design and evaluation of a computer game to promote a healthy diet for young adults. Health Commun. 24, 115–127. doi: 10.1080/10410230802676490

Petty, R., and Wegener, D. (1998). “Attitude change: multiple roles for persuasion variables,” in The Handbook of Social Psychology, eds. D. T. Gilbert, S. Fiske, and G. Lindzey (Boston: McGraw-Hill), 323–390.

Pohlmeyer, A., Hecht, M., and Blessing, L. (2010). User experience lifecycle model continue [Continuous user experience]. Der Mensch im Mittepunkt technischer Systeme. Fortschritt-Berichte VDI Reihe 22, 314–317.

Rabbi, M., Aung, M. H., Zhang, M., and Choudhury, T. (2015a). “MyBehavior,” in Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing. New York, NY: ACM Press, 707–718.

Rabbi, M., Pfammatter, A., Zhang, M., Spring, B., and Choudhury, T. (2015b). Automated personalized feedback for physical activity and dietary behavior change with mobile phones: a randomized controlled trial on adults. JMIR mHealth uHealth 3, e42. doi: 10.2196/mhealth.4160

Resnick, P., and Varian, H. R. (1997). Recommender systems. Commun. ACM 40, 56–58. doi: 10.1145/245108.245121

Rheu, M. M., Jang, Y., and Peng, W. (2020). Enhancing healthy behaviors through virtual self: a systematic review of health interventions using avatars. Games Health J. 9, 85–94. doi: 10.1089/g4h.2018.0134

Rothbart, M., and Bates, J. (1977). “Temperament,” in Hand Book of Child Psychology, eds. E. Nancy, D. William, and M. L. Richard (New York, NY: John Wiley and Sons, Inc.) 99–166.

Rothbart, M. K. (2007). Temperament, development, and personality. Curr. Direct. Psychol. Sci. 16, 207–212. doi: 10.1111/j.1467-8721.2007.00505.x

Rothbart, M. K., Ahadi, S. A., and Evans, D. E. (2000). Temperament and personality: origins and outcomes. J. Pers. Soc. Psychol. 78, 122–135. doi: 10.1037/0022-3514.78.1.122

Rovira, S., Puertas, E., and Igual, L. (2017). Data-driven system to predict academic grades and dropout. PLoS ONE 12, e0171207. doi: 10.1371/journal.pone.0171207

Rowe, J. P., and Lester, J. C. (2020). Artificial intelligence for personalized preventive adolescent healthcare. J. Adolesc. Health 67, 52–58. doi: 10.1016/j.jadohealth.2020.02.021

Sankaran, S., Zhang, C., Gutierrez Lopez, M., and Väänänen, K. (2020). “Respecting human autonomy through human-centered AI,” in Proceedings of the 11th Nordic Conference on Human-Computer Interaction: Shaping Experiences, Shaping Society. New York, NY: ACM, 1–3.

Sarker, I. H. (2021). Machine learning: algorithms, real-world applications and research directions. SN Comput. Sci. 2, 160. doi: 10.1007/s42979-021-00592-x

Shambare, N. (2013). Examining the influence of personality traits on intranet portal adoption by faculty in higher education (ProQuest Dissertations and Theses), Prescott Valley: ProQuest LLC.

Singh, N., and Varshney, U. (2019). Medication adherence: a method for designing context-aware reminders. Int. J. Med. Inform. 132, 103980. doi: 10.1016/j.ijmedinf.2019.103980

Sporakowski, M. J., Prochaska, J. O., and DiClemente, C. C. (1986). The transtheoretical approach: crossing traditional boundaries of therapy. Fam. Relat. 35, 601. doi: 10.2307/584540

Su, J., Dugas, M., Guo, X., and Gao, G. G. (2020). Influence of personality on mhealth use in patients with diabetes: prospective pilot study. JMIR mHealth uHealth 8, e17709. doi: 10.2196/17709

Šuster, S., Tulkens, S., and Daelemans, W. (2017). A short review of ethical challenges in clinical natural language processing. arXiv [Preprint]. arXiv:1703.10090. doi: 10.18653/v1/W17-1610

Swanepoel, D. (2021). The possibility of deliberate norm-adherence in AI. Ethics Inform. Technol. 23, 157–163. doi: 10.1007/s10676-020-09535-1

Tam, K. Y., and Ho, S. Y. (2005). Web personalization as a persuasion strategy: an elaboration likelihood model perspective. Inform. Syst. Res. 16, 271–291. doi: 10.1287/isre.1050.0058

Tam, K. Y., and Ho, S. Y. (2006). Understanding the impact of web personalization on user information processing and decision outcomes. MIS Quart. 30, 865. doi: 10.2307/25148757

The European Commission (2021). Europe Fit for the Digital Age: Commission Proposes New Rules and Actions for Excellence and Trust in Artificial Intelligence. Available online at: https://ec.europa.eu/commission/presscorner/detail/en/IP_21_1682 (accessed February 23, 2022).

Torning, K., and Oinas-Kukkonen, H. (2009). “Persuasive system design,” in Proceedings of the 4th International Conference on Persuasive Technology - Persuasive'09, New York, NY: ACM Press, 1.

Valdivia, A., Sánchez-Monedero, J., and Casillas, J. (2021). How fair can we go in machine learning? Assessing the boundaries of accuracy and fairness. Int. J. Intellig. Syst. 36, 1619–1643. doi: 10.1002/int.22354

van Genugten, L., van Empelen, P., Boon, B., Borsboom, G., Visscher, T., and Oenema, A. (2012). Results from an online computer-tailored weight management intervention for overweight adults: randomized controlled trial. J. Med. Internet Res. 14, e44. doi: 10.2196/jmir.1901

Vermeeren, A. P. O. S., Law, E. L.-C., Roto, V., Obrist, M., Hoonhout, J., and Väänänen-Vainio-Mattila, K. (2010). “User experience evaluation methods,” in Proceedings of the 6th Nordic Conference on Human-Computer Interaction Extending Boundaries - NordiCHI'10. New York, NY: ACM Press, 521–530.

Yardley, L., Spring, B. J., Riper, H., Morrison, L. G., Crane, D. H., Curtis, K., et al. (2016). Understanding and promoting effective engagement with digital behavior change interventions. Am. J. Preven. Med. 51, 833–842. doi: 10.1016/j.amepre.2016.06.015

Yu, R., and Alì, G. S. (2019). What's inside the black box? AI challenges for lawyers and researchers. Legal Inform. Manag. 19, 2–13. doi: 10.1017/S1472669619000021

Keywords: personalization, tailoring, customization, persuasive systems, change management

Citation: Oinas-Kukkonen H, Pohjolainen S and Agyei E (2022) Mitigating Issues With/of/for True Personalization. Front. Artif. Intell. 5:844817. doi: 10.3389/frai.2022.844817

Received: 28 December 2021; Accepted: 14 March 2022; Published: 26 April 2022.

Edited by:

Ifeoma Adaji, University of British Columbia, Canada

Reviewed by:

Mirela Popa, Maastricht University, Netherlands

Copyright © 2022 Oinas-Kukkonen, Pohjolainen and Agyei. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Harri Oinas-Kukkonen, harri.oinas-kukkonen@oulu.fi

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
