ORIGINAL RESEARCH article

Front. Artif. Intell., 17 August 2022

Sec. AI in Business

Volume 5 - 2022 | https://doi.org/10.3389/frai.2022.831046

Mels de Kloet, Shengyun Yang*

Voice intelligence is a revolutionary “zero-touch” type of human-machine interaction based on spoken language. The number and variety of voice assistants and applications that help users acquire information have recently increased. The growing popularity of voice intelligence, however, has not been reflected in the customer value chain. Current research on the socio-technological aspects of human-technology interaction has emphasized the importance of anthropomorphism and user identification in technology adoption. Prior research has also pointed out that user perception of a technology is key to its adoption. This research therefore examines how anthropomorphism and multimodal biometric authentication influence the adoption of voice intelligence in the customer value chain through user perception. We conducted a between-subjects online experiment with a 2 × 2 factorial design, manipulating anthropomorphism and multimodal biometric authentication to create four conditions (each factor present or absent). Subjects were recruited from the Amazon MTurk platform and randomly assigned to one of the four conditions. The results showed significant direct positive effects of anthropomorphism and multimodal biometric authentication on user adoption of voice intelligence in the customer value chain. Moreover, the effect of anthropomorphism is partially mediated by users' perceived ease of use, perceived usefulness, and perceived security risk. This research contributes to the literature on human-computer interaction and voice intelligence by empirically testing the simultaneous impact of anthropomorphism and biometric authentication on users' experience of the technology. The study also offers insights into user interface design for practitioners who wish to adopt voice intelligence in commercial environments.

The rapid development of artificial intelligence (AI), particularly machine learning and Natural Language Processing (NLP), has made it possible to transform the binary language of computers into understandable verbal output (Hirschberg and Manning, 2015) and has enabled the voice user interface, also called “voice intelligence”. Voice intelligence accepts voice as input, processes it, and transforms it into voice-based output (Oberoi, 2019), responding with answers similar to those of everyday, real-time human-to-human interaction. This has led to a “zero-touch” user interface, showing how human-machine interaction has shifted away from screens and keyboards (Pemberton, 2018) toward the use of biometrics of the human body (in this case, voice) (Mahfouz et al., 2017).

Voice intelligence is seen as the third key user interface of the past three decades, following the World Wide Web and smartphones (Kinsella, 2019). Both the World Wide Web and smartphones require users to learn new languages and interaction methods before they can use the interfaces successfully (Kinsella, 2019). Voice intelligence, however, differs from its predecessors in that it imposes no learning curve on its users; it accepts their voices as a natural interface. Interest in voice intelligence has therefore been predicted to grow (Kinsella, 2020).

In addition to offering an improved user experience, voice intelligence has become a solution to the sub-optimal connectivity of a previously neglected segment of the world's population (Chérif and Lemoine, 2019; ITU, 2019; Lee and Yang, 2019). First, for individuals who cannot interact fully with conventional interfaces due to illiteracy, physical or motor disability, arthritis, or reading impairments (e.g., dyslexia), voice intelligence allows fuller access to the Internet (Chérif and Lemoine, 2019; Max Planck Institute for Psycholinguistics, 2019). Second, in many developing countries, written-language inconsistencies, such as the lack of a standard keyboard alphabet, are pervasive. Meanwhile, over 75% of the developing world's population actively uses a mobile broadband subscription. Voice intelligence can therefore be a crucial, leveling intermediary that gives populations in developing countries access to the Internet, information, and online services. In short, voice intelligence contributes practically to inclusiveness and helps remove barriers to digital accessibility worldwide (Delić et al., 2014).

Meanwhile, voice intelligence can create new business opportunities by acting as an intermediary in human-computer interaction (HCI), connecting users with results that match their needs (Liu, 2021). Given the growing “always-online” mentality (Chuah et al., 2016; Rauschnabel et al., 2018) and the increasing number of languages that voice systems recognize, voice intelligence has been estimated to reach a market value of USD 7.7 billion and more than one billion recurring users by 2025 (Kinsella, 2019). This potential implies that, as consumers' platform of first choice, voice intelligence software will govern a substantial part of the customer value chain (Kinsella, 2019). Leading big tech companies recognize this transition and are competing to become market leaders (Liu, 2021).

Nevertheless, consumer adoption of voice intelligence remains underdeveloped (PWC, 2018). Multiple technological and social characteristics form barriers. For example, privacy concerns, security risks, social acceptability, and user hesitancy about the technology's capabilities have been identified as fundamental problems slowing consumer adoption (Moorthy and Vu, 2015; Efthymiou and Halvey, 2016; Bajorek, 2019; Zhang et al., 2019).

Furthermore, most current voice intelligence users are “early adopters” (Moore, 1991; Kinsella, 2019). Early adopters are characterized by low loyalty toward technologies, because they are driven by the excitement of trying out new products (Moore, 1991). Progressing along the adoption curve beyond this group is crucial for the continued development of voice intelligence (Rogers, 1995). Further research is therefore needed to overcome existing adoption barriers and to guide this transition.

This study investigates, from a socio-technological perspective, which characteristics and features of voice intelligence need to be transformed to raise user perception of it to a level equivalent to that of stationary computers or mobile phones. Recognizing these characteristics is essential for establishing a sustainable human-technology relationship that meets the product standards of current technological intermediaries (Moore, 1991).

In this research, we propose that incorporating anthropomorphism and multimodal biometric authentication enhances users' perception toward voice intelligence, improving their experiences of the relational exchanges and thus increasing their willingness to adopt voice intelligence in the customer value chain. Accordingly, the following research question was put forth:

How do anthropomorphism and multimodal biometric authentication influence the adoption of voice intelligence in becoming an acknowledged intermediate technology in the customer value chain?

To validate the hypotheses empirically, we conducted a 2 × 2 online experiment. In collaboration with the Amazon developer community, we designed and developed four conditions based on the presence or absence of anthropomorphic characteristics and of multimodal biometric authentication. Two hundred and forty subjects were recruited via Amazon Mechanical Turk (MTurk), a crowdsourcing platform.
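Random assignment in a 2 × 2 between-subjects design can be sketched as follows. This is a minimal illustration, not the authors' procedure: the text states only that subjects were randomly assigned, so the balanced cell sizes (60 per condition for 240 subjects), the condition labels, and the `assign_conditions` helper are assumptions.

```python
import random
from collections import Counter
from itertools import product

# Levels of the two manipulated factors (labels are illustrative).
ANTHROPOMORPHISM = ("without", "with")
MULTIMODAL_AUTH = ("without", "with")
# Cartesian product -> the four cells of the 2 x 2 design.
CONDITIONS = list(product(ANTHROPOMORPHISM, MULTIMODAL_AUTH))


def assign_conditions(subject_ids, seed=None):
    """Randomly assign subjects to the four cells in near-equal numbers.

    Hypothetical helper: repeat the four conditions until there is one slot
    per subject, shuffle the slots, then pair them with the subjects.
    """
    rng = random.Random(seed)
    slots = [CONDITIONS[i % len(CONDITIONS)] for i in range(len(subject_ids))]
    rng.shuffle(slots)
    return dict(zip(subject_ids, slots))


if __name__ == "__main__":
    subjects = [f"S{i:03d}" for i in range(240)]
    allocation = assign_conditions(subjects, seed=42)
    # Each of the four conditions receives 60 subjects.
    print(Counter(allocation.values()))
```

Shuffling a pre-balanced list of slots (rather than drawing a condition independently for each subject) guarantees equal cell sizes while keeping the order of assignment random.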

The results confirm the influence of anthropomorphic characteristics and multimodal biometric authentication on user experience in the customer value chain, and thus further lead to an impact on the adoption of voice intelligence. While the former predictor has an impact on specific user perceptions, the latter influences the aggregate level of user perception.

The structure of this paper is as follows: Section Theory and hypothesis development presents the theoretical background of this study and hypothesis development. Section Research method delineates the research methodology, including the experiment design and measures of variables. Section Analysis and results elaborates on the results drawn from the experiment and validates the hypotheses. Section Discussion further discusses the results. Section Conclusion concludes with a summary of the findings and academic as well as practical contributions.

In this section, we review the literature on voice intelligence, human-technology interaction, and user behavior in the digital age. We then present the research model and develop our hypotheses based on the literature review. Figure 1 illustrates the research model and the hypothesized associations between the variables.

Voice intelligence, based on AI, has fulfilled a long-standing human desire to communicate with technology through a natural interface (Hoy, 2018). The unique linguistic software of voice intelligence forms the basis for a successful dialogue between humans and technology. Nevertheless, customer awareness and adoption of voice intelligence are still at an early stage (PWC, 2018). We therefore begin our literature review with voice intelligence to better understand its fundamentals. This subsection introduces the voice intelligence process, its current applications in different domains, and its advantages and challenges.

Conversational agents in the form of chatbots or virtual assistants are interfaces that apply this linguistic software to communicate with users (Janssen et al., 2020). After hearing specific keywords, such as “Hey Alexa” or “Ok Google”, the voice software within virtual assistants and chatbots forwards the user input to a specialized server. The server then transforms the information into a command. This command triggers voice intelligence to respond by providing the requested information, activating an application, executing a given task independently, or interacting with connected devices (Amazon, n.d.). Virtual assistants such as Alexa and Google Assistant are classified as intelligent because they apply cloud-based text-to-speech and speech-to-text services as an initial response to user input (Jadczyk et al., 2021). Virtual assistants and chatbots are thus conversational software tools that use artificial voice intelligence and NLP technology to support users in their daily lives (Janssen et al., 2020).
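The request flow described above (wake word, forwarding to a server, conversion into a command, response) can be sketched in Python. This is a deliberately simplified, hypothetical model: it treats speech as already-transcribed text, replaces the remote server with a local `server_parse` function, and uses invented handler names; it does not reflect the Alexa or Google Assistant APIs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Wake words mentioned in the text; matching is on lower-cased transcripts.
WAKE_WORDS = ("hey alexa", "ok google")


@dataclass
class Command:
    intent: str
    argument: str


def detect_wake_word(transcript: str) -> Optional[str]:
    """Return the request that follows a wake word, or None if none is heard."""
    lowered = transcript.lower().strip()
    for word in WAKE_WORDS:
        if lowered.startswith(word):
            return lowered[len(word):].strip(" ,")
    return None


def server_parse(utterance: str) -> Command:
    """Local stand-in for the remote server: first word = intent, rest = argument."""
    intent, _, argument = utterance.partition(" ")
    return Command(intent=intent, argument=argument)


# Hypothetical handlers: open an application, control a connected device.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "open": lambda arg: f"Opening {arg}.",
    "turn": lambda arg: f"Turning {arg}.",
}


def handle(transcript: str) -> Optional[str]:
    """Full cycle: wake word -> server-side command -> response."""
    utterance = detect_wake_word(transcript)
    if utterance is None:
        return None  # no wake word: nothing is forwarded to the server
    command = server_parse(utterance)
    handler = HANDLERS.get(command.intent)
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(command.argument)


if __name__ == "__main__":
    print(handle("Hey Alexa, turn on the lights"))
    print(handle("Ok Google, open spotify"))
    print(handle("Open the door"))
```

The key design point the sketch captures is that nothing is sent onward until a wake word is detected; everything after that is ordinary command dispatch.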

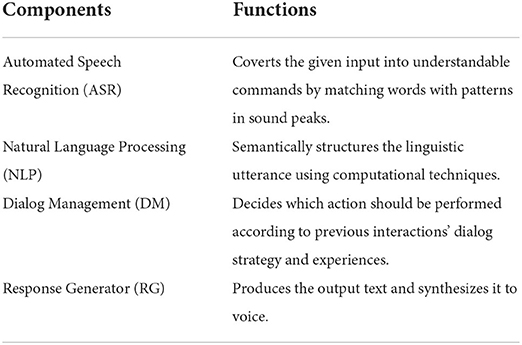

During the voice intelligence process, four essential components work in sequence to deliver the intended user experience and output (de Barcelos Silva et al., 2020). Table 1 describes these components and how they work together in sequence.

Table 1. Components of voice intelligence (Pieraccini, 2012; Hirschberg and Manning, 2015; de Barcelos Silva et al., 2020; Amazon, 2021).

The current applications of voice intelligence can be classified into non-commercial and commercial uses. Non-commercial applications are commonly seen in educational and medical settings (de Barcelos Silva et al., 2020). In education, voice intelligence is used primarily for second-language learning and student assistance (Zhu et al., 2007; Todorov et al., 2018). NLP makes voice intelligence software useful for learning foreign languages because of its textual aspect and familiar mode of interaction (Zhu et al., 2014). This familiarity lets users interact comfortably with a system that records their voice for review (Todorov et al., 2018). The resulting personalized relationship improves recognition and the user experience, as the system learns from users' linguistic data (Zhu et al., 2014). Based on this extensive user profile, voice intelligence software can further tailor teaching methods to users' abilities (Todorov et al., 2018).

The implications of voice intelligence in healthcare are vast, ranging from taking over mandatory data collection tasks to improving access to healthcare (de Barcelos Silva et al., 2020). The COVID-19 pandemic started a re-evaluation of the doctor-patient relationship (Pederson and Jalaliniya, 2015). The abrupt increase in healthcare demand exposed the vulnerability of relying almost exclusively on a face-to-face care delivery model (Sezgin et al., 2020; McKinsey and Company, 2021). Accordingly, during the pandemic the U.S. healthcare industry used voice intelligence in various initiatives, from information distribution to question handling (de Barcelos Silva et al., 2020). Continuous remote healthcare management, with voice intelligence as a supporting application, provided a solution for many vulnerable population groups (Sezgin et al., 2020).

In the commercial environment, voice intelligence is used across the customer value chain. The customer value chain consists of three phases: evaluation, purchase, and use (Cuofano, 2021). Within this value chain, users aim to find products or services that correctly serve their needs (Lazarus, 2001). Voice intelligence can play a vital role in the evaluation and purchase phases by improving the friction–time trade-off, which describes how perceived convenience affects the customer experience (Kemp, 2019). Voice intelligence improves this trade-off by reducing friction and saving valuable time during the interaction (Guzman, 2018; Kemp, 2019). The time it takes to find and purchase the right product significantly affects user satisfaction; hence, the time savings motivate customers to use voice intelligence repeatedly throughout the value chain.

Regardless of the environment or domain in which voice intelligence is applied, perceived convenience and enhanced user experience are considered its most notable advantages.

First, the convenience perceived during decision-making is an important reason for the wide adoption of voice intelligence (Shugan, 1980; Kemp, 2019). Convenience is described as experiencing minimal effort or barriers during activities (Nass and Brave, 2005). The human brain prefers to make quick decisions without processing too much information, especially for low-involvement purchases (Klaus and Zaichkowsky, 2020). Self-learning algorithms enable voice intelligence to build a personalized user profile from a user's purchase history and activity, which can spare users from intervening in low-involvement purchases. This ability to shorten value chain processes thus increases users' perceived convenience (Klaus and Zaichkowsky, 2020). Compared with the “old-fashioned” value chain that combines visual and touch elements, this convenient “zero-touch” purchasing behavior is revolutionary (Kinsella, 2019).

Delivering a high level of convenience is crucial for consumer loyalty and company valuation (Porcheron et al., 2018). The value of convenience is illustrated by consumers' willingness to pay up to 91% more for the ease of having meals delivered to their homes (Chen et al., 2020). Accordingly, performing multiple tasks simultaneously through voice interaction, while saving time as well as physical and cognitive effort, increases convenience through flexibility and potential usability, making voice intelligence a practical tool for society's growing appetite for multitasking (Moorthy and Vu, 2015).

Another benefit of voice intelligence is an enhanced user experience. The voice user interface plays an essential role in the perceived user experience through verbal communication (Oberoi, 2019). Verbalizing information is a distinctive anthropomorphic characteristic of voice intelligence that leads to an improved user experience (Porcheron et al., 2018). Anthropomorphism is the attribution of human characteristics to non-organic things (Duffy, 2003). Verbalization is the reason voice interaction is seen as more human, as voice creates a stronger social presence and more personification (Bartneck and Okada, 2001; Nass and Lee, 2001). Accordingly, oral interaction between users and technology leads to a more natural and productive conversation, as voice output results in higher perceived credibility and competence (Chérif and Lemoine, 2019).

Meanwhile, voice intelligence faces several major challenges. These barriers have internal and external causes and are decisive factors in its adoption. The first challenge is social acceptability (Efthymiou and Halvey, 2016; de Barcelos Silva et al., 2020). Social acceptability affects users' willingness to interact with technology. This willingness depends on three factors: the search case, the audience, and the user's location (Efthymiou and Halvey, 2016). Whether users are willing to carry out a given search case with technology depends heavily on the environment and the audience (Rico and Brewster, 2010; Moorthy and Vu, 2015). Accordingly, being with relatives positively affects users' perceived social acceptability of interacting with voice intelligence, especially when sharing personal information (Rico and Brewster, 2010; Efthymiou and Halvey, 2016).

The second challenge is perceived privacy issues during interaction (Alepis and Patsakis, 2017; McLean and Osei-Frimpong, 2019). To deliver a seamless user experience, voice software needs a large amount of linguistic input data to learn and adjust its interaction capability (Zhu et al., 2014). Constant interaction thus helps voice intelligence improve its algorithms and excel in its role (Ezrachi and Stucke, 2017). This also means that voice-based assistants obtain more personal information than any other technology. The potential for continuous interaction therefore poses a privacy challenge that requires current privacy laws to be rethought (McLean and Osei-Frimpong, 2019).

The third challenge is perceived security risk. Voice is a basis for biometric technology (Mahfouz et al., 2017). Biometric technology is an automated identification method that uses physiological or behavioral characteristics (Quatieri, 2002). Voice recognition verifies users by treating their sound patterns as a unique credential across multiple systems (Rashid et al., 2008). This type of verification is safer than older password methods, as the physiological characteristics of voice patterns are difficult to alter (Hanzo et al., 2000). Nevertheless, using voice for authorization also allows malicious actors to obtain private information through harmful applications (Alepis and Patsakis, 2017). The always-on mode of voice intelligence gives multiple applications, including harmful ones, access to communication channels and sensitive information without the user's knowledge. The current biometric model therefore creates security problems that challenge individuals' perception of voice intelligence technology.
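Voice-based verification is often framed as comparing a fixed-length voiceprint (an embedding of the speaker's sound patterns) with a template stored at enrollment. The sketch below assumes such embeddings already exist and uses cosine similarity with an arbitrary threshold of 0.8; the function names and the threshold are hypothetical, and real systems derive embeddings from a trained model and tune the threshold on data.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled: Sequence[float], probe: Sequence[float],
                   threshold: float = 0.8) -> bool:
    """Accept the probe voiceprint if it is close enough to the enrolled one."""
    if len(enrolled) != len(probe):
        raise ValueError("voiceprints must have the same dimension")
    return cosine_similarity(enrolled, probe) >= threshold


if __name__ == "__main__":
    enrolled = [1.0, 0.0, 0.0]                          # toy 3-D voiceprint
    print(verify_speaker(enrolled, [0.9, 0.1, 0.0]))    # same speaker, slight variation
    print(verify_speaker(enrolled, [0.0, 1.0, 0.0]))    # different speaker
```

Because only one characteristic is checked, anyone who can produce a sufficiently similar voiceprint (for example, through a recording or imitation) passes; this is the single point of failure that the unimodal-versus-multimodal discussion below addresses.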

HCI is crucial to incentivizing individuals to use technology to complement, unburden and improve their daily lives (de Boer and Drukker, 2011; Jokinen, 2015). This subsection reviews the underlying factors, namely human emotions and user perceptions, associated with HCI and their influences on the level of adoption of voice intelligence.

In recent decades, human emotions have received increasing attention in relation to technology adoption (Hassenzahl and Tractinsky, 2011). Human emotions are a triad of reactions to external and internal stimuli, consisting of subjective feelings, neurophysiological response patterns, and motor expressions (Johnstone and Scherer, 2000). This emotional triad is crucial to understanding why people use technology (de Boer and Drukker, 2011).

Past studies on HCI have focused on its efficiency (Thüring and Mahlke, 2007; Mahlke and Minge, 2008). However, there is growing recognition that emotional reactions and enjoyment during interactions affect the user experience (Hassenzahl and Tractinsky, 2011). The appraisal theory introduced by Scherer (2009) further substantiated this line of research (Jokinen, 2015). The theory distinguishes primary and secondary appraisals (Lazarus et al., 1970; Lazarus, 2001). The primary appraisal evaluates a situation in terms of personal goals and values (Lazarus, 2001). The secondary appraisal concerns the user's control over, and ability to adjust to, specific events (Jokinen, 2015). Both appraisals indicate users' willingness and motivation to interact with technology; appraisal theory is therefore a useful basis for this research on the voice intelligence adoption process.

Confidence is one of the essential emotional states that influence a user's attitude toward, and frequency of use of, technology (Gardner et al., 1993). The Mobile Phone Technology Adoption Model (MOPTAM) identified perceived ease of use and perceived usefulness as two vital determinants of user confidence (van Biljon and Kotzé, 2004; Wong and Hsu, 2008). Perceived ease of use is defined as the experience of minimal physical or mental effort when using a technology (Davis et al., 1989). According to Hackbarth et al. (2003), perceived ease of use can be improved by increasing an individual's experience with the system. Therefore, decreasing user friction while interacting with voice intelligence leads to increased confidence and higher perceived ease of use (Davis et al., 1989; Wang, 2015).

Furthermore, perceived usefulness is defined as an individual's belief that using a technology increases his or her performance (Davis et al., 1989). This increase in performance is achieved by choosing the most suitable technology to execute and complete the intended tasks efficiently (Chitturi et al., 2008; Kim et al., 2016).

Another emotional state that influences HCI is user trust (Zhang et al., 2019). Trust comprises a person's perception of a technology's ability and benevolence, as well as how the user interprets the system's functionality (Szumski, 2020). Perceived security risks have a substantial influence on user trust in technology (Alford, 2020; Szumski, 2020). The main reason for distrust of voice security measures is their reliance on single authentication systems (Wu et al., 2015). Still, voice intelligence interaction relies primarily on unimodal biometric systems (Dimov, 2015; Thakkar, 2021). The authentication process in unimodal systems uses a single biometric characteristic as the digital key for user validation and verification (Oloyede and Hancke, 2016). Unfortunately, this mode of interaction is vulnerable to misinterpretation (Zhang et al., 2019). Security risks in voice intelligence include the misinterpretation and impersonation of voice input (Wu et al., 2015). Two types of malicious techniques create security risks that affect the perception of voice intelligence: voice squatting and voice masquerading (Zhang et al., 2019). Voice squatting exploits the different ways of phrasing a request and variations in pronouncing the action phrase (Brewster, 2017). Attackers can easily incorporate the names of multinational companies into action phrases, which are then linked to the attackers' own applications (Zhang et al., 2019). Voice masquerading, in contrast, exploits the sequence structure of voice commands (Brewster, 2017): a pernicious form of malware gathers sensitive information by quietly continuing to operate after pretending to hand control over to the next application. Perceived security risk is therefore identified as the third influential factor in the adoption of voice intelligence in this research.
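Voice squatting relies on invocation names that sound like, or are spelled close to, a trusted one. A crude screening idea is sketched below: flag candidate names within a small edit distance of a protected name. The names, the `max_distance` of 2, and the use of spelling rather than phonetic similarity are simplifying assumptions; this is not the detection method of Zhang et al. (2019).

```python
from typing import Iterable, List


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def normalize(name: str) -> str:
    """Lower-case and drop spaces/punctuation so 'Acme Bank' == 'acmebank'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def possible_squatters(protected: str, candidates: Iterable[str],
                       max_distance: int = 2) -> List[str]:
    """Return candidates that are near (but not equal to) the protected name."""
    target = normalize(protected)
    return [c for c in candidates
            if normalize(c) != target
            and levenshtein(normalize(c), target) <= max_distance]


if __name__ == "__main__":
    # "Acme Bank" and the candidate names are hypothetical examples.
    print(possible_squatters("Acme Bank", ["Akme Bank", "Acme Bank", "Weather Pal"]))
```

Spelling distance is only a proxy: squatting targets how names sound, so a practical filter would compare phonetic representations as well.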

Privacy concerns, a second substantial factor, also affect perceived trust (Moorthy and Vu, 2015; Condliffe, 2019). Voice intelligence is used widely and therefore requires many software permissions (Alepis and Patsakis, 2017). An individual's privacy can be harmed when information is gathered without his or her knowledge (Collier, 1995). Because of the intensified interaction, cloud-native voice intelligence data contain more personal and sensitive information than the data of predecessor technologies (Cho et al., 2010). Malicious actors can use these data for harmful purposes (Cho et al., 2010; Wu et al., 2015). This fear of privacy vulnerability has a negative influence on the perceived trustworthiness of voice intelligence (Moorthy and Vu, 2015). Perceived privacy concerns have therefore been identified as the fourth influential factor in the adoption of voice intelligence in this study.

Perceived social benefit, which relies on creating a social entity (McLean and Osei-Frimpong, 2019), is considered a key factor in user perception (Chitturi et al., 2008). A social entity is created by merging technological and social characteristics (Moussawi et al., 2020). Speech is crucial during interactions, as it gives essential insights into personality and intentions (Edwards et al., 2019). Voice-based HCI can be shaped significantly when users apply social rules such as politeness and courtesy to the AI device during a dialogue (Moon, 2000). These familiar mannerisms lead users to attribute human-like characteristics, such as expertise and gender, to the device (Edwards et al., 2019). This anthropomorphic tendency evokes social presence and attractiveness, making individuals more willing to interact with AI technology in the same way they interact with other people. As a result, users become comfortable during conversations and form an emotional connection with the AI entity (Cerekovic et al., 2017).

Anthropomorphism is a user's willingness to attribute human emotional characteristics to non-organic agents (Verhagen et al., 2014). It has become central to research on HCI interface design, as it is a promising influential factor in AI adoption (Li and Suh, 2021). Three main anthropomorphism research streams have been identified. The first emphasizes the positive effect of anthropomorphism on technological trust and perceived enjoyment in autonomous vehicles and on intelligent speaker adoption (Waytz et al., 2014; Wagner et al., 2019; Moussawi et al., 2020). The second reveals the positive influence of anthropomorphism on user adoption of chatbots and smart speakers in the consumer journey through enhanced user enjoyment and trust (Rzepka and Berger, 2018; Moussawi et al., 2020; Melián-González et al., 2021). The third discusses the influence of anthropomorphism on various aspects of AI technology, highlighting the positive effect of emotional factors on trust and service evaluation and how language variation affects this user perception (Choi et al., 2001; Qiu et al., 2020; Toader et al., 2020). All three streams confirm the significant effect of anthropomorphism on user perception of technology.

Nevertheless, because zero-touch interfaces are novel, finding technological improvements that do not interfere with the user experience is challenging. Prior research on website personality, mobile interface personality, and brand personality, based on user experiences, identified the characteristics whose technical capabilities and user interaction most closely resemble those of voice intelligence (Aaker, 1997; Chen and Rodgers, 2006; Johnson et al., 2020). Four characteristics have been identified as potentially having significant effects: functional intelligence, sincerity, information creativity, and appropriate voice tone and intonation (Kinsella, 2019; Poushneh, 2021).

The first of these, functional intelligence, is the level of effectiveness, usefulness, and reliability with which the system answers or performs a given request (Pitardi and Marriott, 2021). This capability increases the technology's reliability and improves user perception (Waytz et al., 2014), so that individuals gain trust and confidence in using the technology to complete tasks.

Second, sincerity is defined as honesty and genuineness toward social entities (Johnson et al., 2020). Like functional intelligence, sincerity allows an individual to experience a higher level of control. Perceived control during the interaction is stimulated by the device's adoption of a submissive attitude toward the user (Exline and Geyer, 2004). This characteristic results in a higher sense of user control during the interaction, which creates an incentive to intensify the human-technology relationship (Stets and Burke, 1996). Therefore, positioning the user as the dominant entity during the interaction results in the user experiencing more trust and enjoyment when operating the device.

Third, information creativity of voice intelligence can be defined as combining both novel and informative elements in a helpful response (Zeng et al., 2011). Piffer (2012) clarified creativity by introducing a three-dimensional framework that measures the novelty, usefulness and impact of products or information. As a result, information used in responses formulated by voice intelligence needs to comply with every dimension before it can be assessed as “creative”. This compliance is essential, as the perceived degree of creativity affects users' interest in learning more about technological capabilities and motivates regular use (Poushneh, 2021).
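The requirement that a response satisfy every dimension before it counts as creative can be expressed as a conjunctive rule, i.e., a minimum over per-dimension ratings. The 0 to 1 rating scale, the 0.5 threshold, and the `CreativityRating` type below are hypothetical; Piffer's framework does not prescribe them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreativityRating:
    """Ratings of one response on Piffer's three dimensions (hypothetical 0-1 scale)."""
    novelty: float
    usefulness: float
    impact: float


def is_creative(rating: CreativityRating, threshold: float = 0.5) -> bool:
    """Conjunctive rule: the weakest dimension must still reach the threshold."""
    return min(rating.novelty, rating.usefulness, rating.impact) >= threshold


if __name__ == "__main__":
    print(is_creative(CreativityRating(novelty=0.9, usefulness=0.8, impact=0.6)))
    # Mean is about 0.63, but impact is too low, so the response is not creative.
    print(is_creative(CreativityRating(novelty=0.9, usefulness=0.9, impact=0.1)))
```

Using `min` rather than an average is the design choice that matters here: a highly novel response with no usefulness or impact cannot compensate its way to being rated creative.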

Fourth, the tone of voice determines the feelings a group of words conveys when a message is communicated (Sethi and Adhikari, 2010). Choosing a tone that fits the situation is crucial for user satisfaction. For example, a humorous tone does not suit a detailed explanation of a financial report (Moran, 2016). Users experience higher satisfaction when computers have a specific gender (Schwär and Moynihan, 2020). Therefore, adapting the tone of voice to the activity can positively affect the adoption of voice intelligence in the customer value chain. In addition, adjusting the voice intonation to the given request can significantly affect an individual's confidence and trust in both the evaluation and purchase phases of the consumer journey (Moran, 2016).

Based on the discussion above, when these anthropomorphic characteristics are properly embedded in the interface design of voice intelligence, user perception of voice intelligence is enhanced. First, applying anthropomorphic characteristics reduces a task's perceived difficulty by increasing trust in the technology (Gardner et al., 1993; Kinsella, 2019), resulting in higher perceived ease of use. Thus, the first hypothesis is as follows:

H1: Anthropomorphic characteristics are likely to increase users' perceived ease of use, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Second, incorporating functional intelligence and information creativity into voice intelligence output leads to a more informative and valuable interaction between user and machine (Pitardi and Marriott, 2021). These anthropomorphic characteristics give users more context in addition to the actual responses. When voice intelligence transforms its output into messages with more valuable information, users can benefit from higher productivity and improved performance (van Biljon and Kotzé, 2004). Accordingly, the second hypothesis of this study is as follows:

H2: Anthropomorphic characteristics are likely to increase users' perceived usefulness, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Third, creating a social entity by implementing anthropomorphic characteristics, such as a formal voice intonation, improves user perception toward the technical competence of a voice intelligence device. In turn, applying the proper anthropomorphic characteristics transforms the human-machine interaction into a more trustworthy process (Moller et al., 2006). This increase in trust eventually reduces users' perceived security risk when they use voice intelligence for relational exchanges. Thus, the third hypothesis is established as follows:

H3: Anthropomorphic characteristics are likely to decrease users' perceived security risks, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Fourth, humanizing the interaction with technology helps to strengthen the user relationship and results in more perceived control and reliability (Exline and Geyer, 2004; Pitardi and Marriott, 2021). The higher level of perceived control and reliability mitigates users' privacy concerns and thus increases their trust toward the interaction with the computer, which has a decisive influence on their recurring usage of the technology (Waytz et al., 2014). Therefore, the fourth hypothesis is the following:

H4: Anthropomorphic characteristics are likely to decrease users' perceived privacy concerns, which in turn positively influences the adoption of voice intelligence in the customer value chain.

User identification plays a vital role in the adoption of technologies (Bhattacharyya et al., 2009; Zhang et al., 2019). The technological world is traditionally protected by security codes and “traditional” hardware keys. These security measures are vulnerable to malicious activities (Mahfouz et al., 2017). Biometric authentication solves this problem by using human characteristics as verification (Kinsella, 2019). This identification method is safer because of the uniqueness of an individual's characteristics, which are significantly less vulnerable to harmful activities (Zhang et al., 2019).

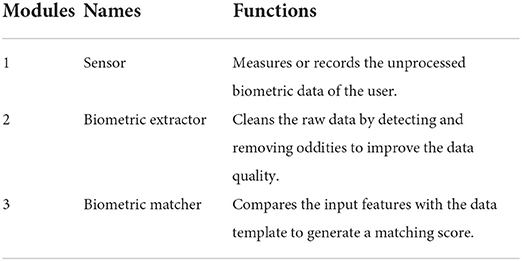

The biometric authentication process starts with the enrollment phase, in which the user provides his or her biometric data for the first time (Jain et al., 2004). The system analyzes these biometrics and extracts their distinctive features, from which it builds a feature template based on the identification characteristics (Liu et al., 2017). The recognition phase starts once enrollment is complete: newly acquired biometric data are compared with the stored feature template (Jain et al., 2004). Table 2 shows the technical modules used to recognize and identify the characteristics in both phases (Mahfouz et al., 2017). The process ends with the system generating a similarity (matching) score; a higher score indicates a closer match between the two datasets (Liu et al., 2017).
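The two phases can be made concrete with a minimal Python sketch. It is not the pipeline of Table 2 or of any cited system: it assumes that feature extraction has already turned each biometric sample into a fixed-length numeric vector, builds the template by averaging the enrollment vectors, uses cosine similarity as the matching score, and accepts a user at a hypothetical threshold of 0.9. The function names are illustrative only.

```python
import math
from typing import List, Sequence


def enroll(samples: List[Sequence[float]]) -> List[float]:
    """Enrollment phase: average several feature vectors into one stored template."""
    dim = len(samples[0])
    return [sum(s[i] for s in samples) / len(samples) for i in range(dim)]


def similarity_score(probe: Sequence[float], template: Sequence[float]) -> float:
    """Recognition phase: cosine similarity between re-acquired features and the template."""
    dot = sum(p * t for p, t in zip(probe, template))
    norm = math.sqrt(sum(p * p for p in probe)) * math.sqrt(sum(t * t for t in template))
    return dot / norm if norm else 0.0  # a zero vector cannot match anything


def verify(probe: Sequence[float], template: Sequence[float], threshold: float = 0.9) -> bool:
    """Accept the user when the similarity score reaches the (hypothetical) threshold."""
    return similarity_score(probe, template) >= threshold


if __name__ == "__main__":
    # Toy 3-dimensional feature vectors; the values are purely illustrative.
    template = enroll([[0.9, 0.1, 0.0], [1.1, -0.1, 0.0]])  # -> [1.0, 0.0, 0.0]
    print(similarity_score([2.0, 0.0, 0.0], template))     # 1.0: same direction as the template
    print(verify([0.0, 1.0, 0.0], template))                # False: orthogonal features are rejected
```

Averaging the enrollment samples is only one simple way to build a template; real systems typically use learned feature extractors and calibrate the threshold on genuine and impostor data.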

Table 2. Technical modules of the biometric authentication process (Liu et al., 2017; Mahfouz et al., 2017).

Deloitte reported that biometric authentication has attained critical mass as a safe and convenient identification method (Westcott et al., 2018). Biometric authentication can serve as a faster, more convenient, and practical user identification method because the system does not need to ask the user for any unnatural input. This shorter and quicker authentication process, in turn, improves the experienced utilitarian benefits (Wang, 2015; Rauschnabel et al., 2018), particularly ease of use and usefulness, and the overall human-technology relationship (McLean and Osei-Frimpong, 2019).

One reason for the increase in biometric authentication is the growing consumer awareness of data breaches and personal data theft (Westcott et al., 2018). Developments in biometrics answer this growing demand by delivering a more personal and robust identification option. Biometric systems have gained higher acceptance levels by ensuring user security and privacy (Jain et al., 2004). Accordingly, the use of biometric authentication mitigates users' perceived security risks and privacy concerns regarding the technology's capability to safely execute relational exchanges (Mahfouz et al., 2017).

Taken together, these prior studies demonstrate the desired effect of biometric characteristics on perceived ease of use, usefulness, security risks, and privacy concerns, which stimulates the adoption of technologies.

However, most available (unimodal) authentication systems still rely on a single biometric information source: either voice or face. This reliance on one characteristic can lead to authentication problems caused by noisy data (Thakkar, 2021). In voice intelligence, voice recognition is the most dominant biometric authentication method to date. However, using voice recognition for relational exchanges is susceptible to malicious activities (Dimov, 2015; Wu et al., 2015), and installing anti-malware software does not provide full protection. Adding facial recognition as a second physiological authentication method could improve the protection of user privacy and personal data and thereby raise user trust (PWC, 2018). Thus, combining two characteristics, namely voice and facial recognition, in one authentication method addresses the limitations of a unimodal biometric system. This combination consolidates data from different sources to compensate for the limits of either characteristic (Jain et al., 2004). Accordingly, a multimodal authentication system that combines two different biometric features is suggested.

Voice input and facial recognition are biometric characteristics with a significant and advanced role in “zero-touch” HCI (Liu et al., 2017). Combining both physiological attributes in the authentication process reduces the error rate by 50% compared with using either unimodal biometric system independently (Hazen et al., 2003). Consequently, expanding a unimodal system into a multimodal variant can significantly improve the user journey within the human-machine interaction.
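Why fusing two modalities can cut errors is easiest to see in an idealised model. The Python sketch below assumes that the voice and face matchers each produce unit-variance Gaussian scores for genuine users and impostors, separated by a sensitivity d′, that the two modalities are statistically independent, and that scores are combined with the equal-weight sum rule (a common score-level fusion technique). These assumptions are ours; the sketch is an illustration, not a reproduction of the measurements reported by Hazen et al.

```python
import math


def phi(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def equal_error_rate(d_prime: float) -> float:
    """EER when genuine and impostor scores are unit-variance Gaussians d' apart.

    With the decision threshold midway between the two means, the false-accept
    and false-reject rates are both phi(-d'/2).
    """
    return phi(-d_prime / 2.0)


def sum_rule_d_prime(d_voice: float, d_face: float) -> float:
    """Separation after adding two independent unit-variance score streams.

    The gap between genuine and impostor means becomes d_voice + d_face,
    while the standard deviation of the summed score becomes sqrt(2).
    """
    return (d_voice + d_face) / math.sqrt(2.0)


if __name__ == "__main__":
    d_voice = d_face = 2.0  # hypothetical separation of each unimodal matcher
    print(f"unimodal EER: {equal_error_rate(d_voice):.3f}")  # 0.159
    print(f"fused EER:    {equal_error_rate(sum_rule_d_prime(d_voice, d_face)):.3f}")  # 0.079
```

Under these assumptions the equal error rate falls from about 15.9% to about 7.9%. The same formula also shows a limitation: if one modality is much noisier (for example `d_face` close to zero), the equal-weight sum rule can perform worse than the better single modality, which is why practical systems often weight the scores.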

To be specific, multimodal biometric authentication enhances user perception and positively influences the adoption of voice intelligence in the customer value chain. First, incorporating a multimodal biometric authentication system into voice intelligence shortens and accelerates the authentication process, which improves the perceived ease of use (Mahfouz et al., 2017). In turn, compared to the previous identification methods, this shorter and quicker authentication process strengthens overall rapport between the user and voice assistant, thus increasing the likelihood that a user will adopt voice intelligence. Therefore, the fifth hypothesis is constructed as follows:

H5: Multimodal biometric authentication is likely to increase users' perceived ease of use, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Second, using multimodal biometric characteristics leads to an authentication process that is not only more convenient but also safer than its predecessors (Kinsella, 2019). This improvement stems from a higher level of continuity and transparency in the customer value chain (Mahfouz et al., 2017). As a result, users perceive a higher level of usefulness, that is, a belief that interacting with the machine increases their actual productivity, which in turn leads to a higher level of adoption (Davis et al., 1989). Therefore, the sixth hypothesis is proposed as follows:

H6: Multimodal biometric authentication is likely to increase users' perceived usefulness, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Third, a unimodal authentication system that focuses on only one biometric characteristic is vulnerable to security risks due to noisy data (Thakkar, 2021). Implementing a multimodal biometric authentication system with both voice and facial recognition mitigates this perceived security risk by reducing the errors caused by noisy data (Hazen et al., 2007). This reduction in perceived security risk results in a safer and improved user experience of a relational exchange, in turn increasing users' willingness to adopt the technology. Accordingly, the following hypothesis is formulated:

H7: Multimodal biometric authentication is likely to decrease users' perceived security risk, which in turn positively influences the adoption of voice intelligence in the customer value chain.

Fourth, additional measures embedded in the multimodal biometric authentication offer a personalized authorization process in the customer value chain, strengthening users' trust in the technology's ability to effectively handle privacy concerns. This strengthened trust in technology based on reduced privacy concerns in the customer value chain will eventually result in a more diverse and frequent use of voice intelligence (Condliffe, 2019). Therefore, the final hypothesis is constructed as follows:

H8: Multimodal biometric authentication is likely to decrease users' perceived privacy concerns, which in turn positively influences the adoption of voice intelligence in the customer value chain.

We carried out a 2 × 2 online experiment in April 2021 for four main reasons. First, an experiment allowed us to better examine the relationship between multiple influential factors and the adoption of voice intelligence, because it made it possible to control confounding variables and to measure and eliminate the tertium quid (Field and Hole, 2003).

Second, this research involves two factors, namely anthropomorphism and multimodal biometric authentication, that are manipulated simultaneously. Therefore, experimentation is the appropriate research method (Field and Hole, 2003; Haerling Adamson and Prion, 2020).

Third, a 2 × 2 factorial design allowed us to efficiently compare parallel manipulations (Haerling Adamson and Prion, 2020) and to cross both predictors in order to determine their main and interaction effects (Landheer and van den Wittenboer, 2015; Asfar et al., 2020).

Fourth, by using the Internet as the medium, we were able to reach a larger and more diverse sample at limited financial cost. The web-based design was especially helpful because a physical laboratory experiment was not possible during the COVID-19 pandemic.

A pilot study with 12 subjects per condition (Julious, 2005) was conducted before the actual experiment to test feasibility and to identify and forestall potential problems (Thabane et al., 2010).

Each of the two factors, anthropomorphic characteristics and multimodal biometric authentication, was manipulated at two levels (with and without). Table 3 shows the resulting four conditions: (T1) without anthropomorphic characteristics and without multimodal biometric authentication; (T2) without anthropomorphic characteristics but with multimodal biometric authentication; (T3) with anthropomorphic characteristics but without multimodal biometric authentication; and (T4) with both anthropomorphic characteristics and multimodal biometric authentication.

The four conditions were implemented as four short audio files. Each lasted approximately 3 min and consisted of a conversation between a voice assistant called “Iris” and its user (see Appendix I in Supplementary material). During this dialogue, the user executed multiple tasks in both phases of the customer value chain. In each phase, “Iris” used cloud-based text-to-speech and speech-to-text services to produce its initial response to each activity. “Iris” thus served as the tool through which the user interacted with voice intelligence across the different tasks.

During the evaluation phase, the user executed tasks resembling straightforward recurring activities, such as checking the weather forecast and adding items to a shopping list. As argued by Kemp (2019), recurring system use requires an improved friction-time trade-off: optimizing the process by shortening the execution time of simple tasks, such as checking the weather, results in higher perceived convenience.

After the evaluation activities, the user performed multiple transactions in the purchase phase of the customer value chain. During these activities, the user instructed the voice intelligence software to purchase the items on the shopping list created in the evaluation phase.

Subsequently, the user decided to transfer money between bank accounts and to make a bank transfer to another person using voice intelligence.

Accordingly, each treatment applied the manipulations relevant to its design in each phase of the customer value chain. The audio files were created with help from the Amazon developers' community.

To manipulate the treatment of anthropomorphic characteristics, we took into consideration the four main characteristics that were identified as having potentially significant effects on user perception (see Section Anthropomorphism and user perceptions).

First, functional intelligence within “Iris” was expressed by formulating a helpful solution with an extra touch. This extra touch of functional intelligence delivers a valuable and efficient output applicable to the given command (Pitardi and Marriott, 2021). Functional intelligence was expressed during voice interaction by delivering an answer to a request followed by additional information applicable to that particular situation (Chen and Rodgers, 2006).

Second, sincerity within audio input comprises the technological capability to deliver a response that expresses honesty, friendliness, and humbleness toward users (Aaker, 1997). This anthropomorphic characteristic was manipulated through audio by expressing the supportive and modest role of “Iris” during the human-technology interaction (Aaker, 1997; Exline and Geyer, 2004). The modest attitude during the manipulation was expressed by asking the user if (s)he would like to receive more information about a requested topic (Exline and Geyer, 2004).

Third, the presence of information creativity within audio comprises the ability to deliver entertaining and bright information as attractive output (Poushneh, 2021). Information creativity was incorporated in voice intelligence through delivering an enthusiastic and helpful response applicable to the given situation (Zeng et al., 2011; Poushneh, 2021).

Last, fluctuating voice intonation was manipulated by using an enthusiastic or casual response in the evaluation phase (Moran, 2016) and a formal response in the purchase phase of the customer value chain (Brandt, 2017). Accordingly, “Iris” adopts an enthusiastic or casual tone during simple daily tasks and switches to formal output when the user starts to perform financial transactions or purchase activities.

Voice recognition was the dominant unimodal authenticator in voice intelligence. However, this dependence on a single information source has suffered from authentication issues and problematic performance in real-world applications (Wu et al., 2015). Multimodal biometric systems combine biometric information from two independent sources for validation purposes (Oloyede and Hancke, 2016). Accordingly, a second information source was added to the existing verbal validation process of voice intelligence. Voice interaction is predominantly executed on mobile phones within a unimodal environment (PWC, 2018). Face identification on mobile devices served as the second biometric characteristic because it preserves the zero-touch experience (Liu et al., 2017). Adding face recognition as a second independent identifier transformed the existing unimodal system of voice intelligence into a multimodal variant. Accordingly, multimodal biometric authentication was manipulated by incorporating an extra face recognition step (Pieraccini, 2012): during specific activities, the user was asked to scan his or her face before the software accepted the voice characteristics as validation. This additional authentication was applied during the purchase phase of the customer value chain.

Amazon MTurk was used as a crowdsourcing platform to recruit subjects. MTurk made it possible to screen subjects against our criterion: subjects were required to have access to a mobile Internet connection (Singh, 2018). This is because voice intelligence is predominantly installed and used on portable devices, such as smartphones and tablets, making stationary and office-based settings inapplicable to this technology (PWC, 2018). To enhance the validity of the responses, we further required subjects to have relevant user experience.

Subjects who met these requirements were offered a small incentive of USD 0.40 to participate in one of the four conditions. Each subject was allowed to participate in the experiment only once.

This research adopted a between-subjects design. Compared with a within-subjects design, it minimized learning and transfer effects across conditions, shortened the length of each treatment session, and made subject randomization manageable (Suresh, 2011; Allen, 2017; Budiu, 2018).

Subjects were randomly assigned to one of the four conditions. When the experiment started, the subject received a vignette consisting of two parts. The first part presented the purpose of this study and asked for the subject's permission to proceed. The second part introduced and explained voice intelligence technology to help subjects form a shared basic understanding of the technology (Gourlay et al., 2014). To ensure that all subjects had read the vignette, the next-step button could not be clicked until a specific period of time had elapsed.
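The text states only that assignment was random, without naming the procedure. One common way to keep the four cells of a 2 × 2 design balanced is permuted-block randomization, sketched below in Python purely as an assumption: each block of four consecutive participants receives T1 to T4 in a freshly shuffled order. The function name, block size, seed, and participant identifiers are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

# 2 x 2 design: (anthropomorphic characteristics, multimodal biometric authentication)
CONDITIONS: Dict[str, Tuple[bool, bool]] = {
    "T1": (False, False),
    "T2": (False, True),
    "T3": (True, False),
    "T4": (True, True),
}


def block_randomise(participant_ids: List[str], seed: int = 42) -> Dict[str, str]:
    """Assign participants to T1-T4 in shuffled blocks of four (hypothetical scheme)."""
    rng = random.Random(seed)
    labels = list(CONDITIONS)
    assignment: Dict[str, str] = {}
    for start in range(0, len(participant_ids), len(labels)):
        block = labels[:]
        rng.shuffle(block)  # random order of the four conditions within this block
        for pid, label in zip(participant_ids[start:start + len(labels)], block):
            assignment[pid] = label
    return assignment


if __name__ == "__main__":
    ids = [f"worker_{i:03d}" for i in range(240)]
    counts = Counter(block_randomise(ids).values())
    print(sorted(counts.items()))  # [('T1', 60), ('T2', 60), ('T3', 60), ('T4', 60)]
```

Because inattentive responses were excluded after assignment, balanced assignment alone would not guarantee equally sized cells of valid observations.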

Subsequently, the subject was asked to listen to a conversation between “Iris” and its user, representing one of the experimental conditions. Afterwards, the subject was required to fill in an online questionnaire, which measured the subject's perception toward the technology and his or her willingness to adopt it. Finally, at the end of the questionnaire, the subject was asked to answer demographic questions, which helped us delineate subgroups within the samples (Field and Hole, 2003).

The measurement items for each construct in the research model were adopted or adapted from the existing literature (see Appendix II in Supplementary material). The items were measured on a seven-point Likert scale ranging from Strongly Disagree to Strongly Agree, which includes a neutral midpoint to increase measurement quality (DeCastellarnau, 2018).

We obtained a total of 266 observations. Two attention checks were incorporated in the questionnaire to ensure participant attention. The first attention check required the subject to answer the control question: “Select option 5: Somewhat agree”. The second attention check asked the subjects to submit a unique code after finishing the questionnaire; this code was displayed after the demographic questions. Responses that failed either attention check were excluded from the dataset, leaving 240 valid observations, or 60 per treatment, for further analysis.
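The exclusion rule can be expressed as a small filter. The Python sketch below is illustrative only: the `Response` fields are hypothetical names rather than the survey's actual export columns, and ignoring surrounding whitespace when comparing codes is our assumption. A response is kept only if the control item equals 5 (“Somewhat agree” on the seven-point scale) and the submitted code matches the issued one.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    worker_id: str
    control_item: int   # answer to "Select option 5: Somewhat agree" (1-7 scale)
    entered_code: str   # code the subject submitted after the questionnaire
    issued_code: str    # unique code displayed after the demographic questions


def passes_attention_checks(r: Response) -> bool:
    """A response is valid only if it passes both attention checks."""
    return r.control_item == 5 and r.entered_code.strip() == r.issued_code


def valid_responses(responses: List[Response]) -> List[Response]:
    """Drop every response that fails either check."""
    return [r for r in responses if passes_attention_checks(r)]


if __name__ == "__main__":
    sample = [
        Response("A1", 5, "X7K2", "X7K2"),  # passes both checks
        Response("A2", 6, "Q9P4", "Q9P4"),  # fails the control question
        Response("A3", 5, "", "M3T8"),      # fails the code check
    ]
    print([r.worker_id for r in valid_responses(sample)])  # ['A1']
```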

Table 4 shows the demographic profiles of the subjects in this study. The sample was predominantly male (69.2%), and most subjects were aged between 18 and 34 (57.9%). The majority of respondents were highly educated; almost 90% of subjects held Bachelor or Master degrees. Since we sourced subjects via Amazon MTurk, an American crowdsourcing platform, over 80% of subjects in this study were residents of North America.

Reliability and validity tests were performed to evaluate the measurement model. Cronbach's alpha and composite reliability tests were performed to measure the internal consistency of the scale items. Table 5 shows that Cronbach's alpha values and the composite reliability scores of all the variables were higher than 0.6 and 0.7, respectively. These results substantiate the internal consistency of the scale items in this study (Hair et al., 2011, 2019; Hamid et al., 2017).
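Both internal-consistency statistics follow standard formulas, sketched below in Python with the standard library only. Cronbach's alpha is computed from raw item scores as α = k/(k − 1) × (1 − Σ item variances / variance of the summed score); composite reliability is computed from standardized factor loadings as (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The loadings in the usage lines are hypothetical and are not the values in Table 5.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(items: List[Sequence[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of respondent scores per scale item."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability from standardized loadings."""
    s = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # error variance of each standardized item
    return s * s / (s * s + error)


if __name__ == "__main__":
    # Three identical items are perfectly consistent, so alpha equals 1.
    print(round(cronbach_alpha([[1, 2, 3, 4]] * 3), 3))          # 1.0
    # Hypothetical standardized loadings for a three-item construct.
    print(round(composite_reliability([0.7, 0.8, 0.9]), 3))     # 0.845
```

Against the thresholds used in the text, a construct would pass if alpha exceeds 0.6 and composite reliability exceeds 0.7.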

To determine construct validity, both convergent and discriminant validity tests (Peter, 1981) were carried out. Convergent validity was tested based on the Average Variance Extracted (AVE) per variable. As shown in Table 5, all AVE scores exceeded the 0.5 rule of thumb (Fornell and Larcker, 1981), indicating sufficient convergent validity of the variables (Hair et al., 2011).

Discriminant validity was assessed by comparing the square root of each variable's AVE with its correlations with the other variables; the correlations between variables must be lower than the square root of the AVE (Hair et al., 2011). As shown in Table 5, the square roots of the AVEs exceeded the corresponding inter-variable correlations.
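Convergent and discriminant validity can be checked together, as in the Python sketch below. AVE is the mean of a construct's squared standardized loadings, and the comparison of √AVE with the inter-construct correlations is the Fornell–Larcker criterion. The sketch compares absolute correlations, which is our assumption but matters here because constructs such as PSR may correlate negatively with others. All loadings and correlations in the usage lines are hypothetical, not the values in Table 5.

```python
import math
from typing import Dict, FrozenSet, Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average Variance Extracted: mean of squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def fornell_larcker_ok(
    loadings: Dict[str, Sequence[float]],
    corr: Dict[FrozenSet[str], float],
) -> bool:
    """True if sqrt(AVE) of every construct exceeds its |correlation| with every other construct."""
    root_ave = {name: math.sqrt(ave(ls)) for name, ls in loadings.items()}
    for pair, r in corr.items():
        a, b = tuple(pair)
        if abs(r) >= min(root_ave[a], root_ave[b]):
            return False
    return True


if __name__ == "__main__":
    loads = {"PEU": [0.82, 0.85, 0.79], "PU": [0.80, 0.84, 0.77]}  # hypothetical
    print(all(ave(v) > 0.5 for v in loads.values()))                # True: convergent validity
    print(fornell_larcker_ok(loads, {frozenset({"PEU", "PU"}): 0.62}))  # True: discriminant validity
```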

Table 6 lists the descriptive statistics of all user-perception variables based on the total observations. These variables are Perceived Ease of Use (PEU), Perceived Usefulness (PU), Perceived Security Risk (PSR), and Perceived Privacy Concerns (PPC). All variables in this study had an average score above 5. PEU (M = 5.66, SD = 0.94) and PU (M = 5.53, SD = 0.93) had the highest overall means, which indicates that subjects perceived the practical potential of voice interaction technology in their daily lives. Meanwhile, subjects also expressed concerns about the intensive use of voice intelligence by giving high scores to PPC (M = 5.19, SD = 1.17) and PSR (M = 5.53, SD = 0.93). In general, subjects showed a moderate willingness to adopt voice intelligence (M = 5.48, SD = 0.97) in various daily activities.

Table 7 displays the descriptive statistics per treatment. The group treated with both anthropomorphic and biometric elements had the highest levels of PEU (M = 5.92, SD = 0.73) and PU (M = 5.79, SD = 0.80). However, its PPC (M = 5.36, SD = 1.4) was also higher than that of any other group. The group with only the anthropomorphic treatment had the lowest PPC (M = 5.10, SD = 1.01) and PSR (M = 5.12, SD = 0.78). This implies that incorporating anthropomorphic characteristics into voice intelligence may reduce users' perceived privacy concerns and security risks.

Overall, subjects perceived a higher level of ease of use and usefulness and were more willing to adopt the voice intelligence when anthropomorphic or multimodal biometric treatments were applied.

We first performed a factorial ANOVA to examine the direct effects of anthropomorphic and multimodal biometric characteristics, and their interaction, on user adoption of voice intelligence. Table 8 presents the results. Both factors had significant effects on users' willingness to adopt voice intelligence, whereas their interaction did not [F(1) = 3.043, p > 0.08].

The positive effect of multimodal biometrics [F(1) = 14.935, p < 0.001] is larger than that of anthropomorphism [F(1) = 4.160, p = 0.04]. Further examination of the effect sizes (see Table 8) against the guidelines of Cohen (1988) revealed that the effect of multimodal biometrics was medium (η² > 0.06), whereas the effect of anthropomorphism was small (η² < 0.02).

In short, the factorial ANOVA indicated significant effects of both anthropomorphism and multimodal biometric authentication on users' willingness to adopt voice intelligence. The absence of an interaction effect suggests that the effect of each factor does not depend substantially on the level of the other.
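The text does not say which software ran the factorial ANOVA. The stdlib-only Python sketch below shows how the F statistics and effect sizes arise in a balanced 2 × 2 between-subjects design such as this one; with 60 subjects per cell, the error term has 4 × (60 − 1) = 236 degrees of freedom. It reports both classical and partial η², since the text does not specify which was used, and labels them with Cohen's (1988) benchmarks (0.01 small, 0.06 medium, 0.14 large). The toy scores are hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # (anthropomorphism level, biometric level), each 0 or 1


def _mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


def anova_2x2(cells: Dict[Cell, List[float]]) -> Tuple[Dict[str, Dict[str, float]], int]:
    """Two-way ANOVA for a balanced 2 x 2 between-subjects design."""
    n = len(cells[(0, 0)])
    if any(len(v) != n for v in cells.values()):
        raise ValueError("this sketch assumes equal cell sizes")
    grand = _mean([y for ys in cells.values() for y in ys])
    cell_mean = {k: _mean(v) for k, v in cells.items()}
    a_mean = {a: _mean(cells[(a, 0)] + cells[(a, 1)]) for a in (0, 1)}
    b_mean = {b: _mean(cells[(0, b)] + cells[(1, b)]) for b in (0, 1)}

    ss_a = 2 * n * sum((m - grand) ** 2 for m in a_mean.values())
    ss_b = 2 * n * sum((m - grand) ** 2 for m in b_mean.values())
    ss_cells = n * sum((m - grand) ** 2 for m in cell_mean.values())
    ss_ab = ss_cells - ss_a - ss_b  # interaction: cell variation not explained by main effects
    ss_error = sum((y - cell_mean[k]) ** 2 for k, ys in cells.items() for y in ys)
    ss_total = ss_cells + ss_error
    df_error = 4 * (n - 1)
    ms_error = ss_error / df_error

    results = {}
    for name, ss in (("anthropomorphism", ss_a), ("biometrics", ss_b), ("interaction", ss_ab)):
        results[name] = {
            "F": ss / ms_error,  # every effect has 1 df in a 2 x 2 design
            "eta_sq": ss / ss_total,
            "partial_eta_sq": ss / (ss + ss_error),
        }
    return results, df_error


def cohen_label(eta_sq: float) -> str:
    """Cohen's (1988) benchmarks for eta squared."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


if __name__ == "__main__":
    toy = {(0, 0): [1, 2, 3], (1, 0): [2, 3, 4], (0, 1): [3, 4, 5], (1, 1): [4, 5, 6]}
    res, df = anova_2x2(toy)
    for effect, stats in res.items():
        print(effect, f"F(1, {df}) = {stats['F']:.2f}", cohen_label(stats["eta_sq"]))
```

In the toy data the two factors add up without interacting, so the interaction F is 0. The sums of squares partition cleanly only because the cells are balanced; unequal cell sizes would require a regression-based (for example Type III) decomposition.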

Structural equation modeling (SEM) was performed with the lavaan package in R (RStudio) to test the hypotheses. The results of the path analyses are presented in Table 9. Anthropomorphism had a significant impact on all perceived factors, namely PEU (β = 0.67, p < 0.05), PU (β = 0.40, p < 0.01), and PSR (β = −0.77, p < 0.05), except PPC (β = −0.52, p > 0.24). These results suggest that incorporating anthropomorphic characteristics into voice intelligence increases users' perceived ease of use and perceived usefulness while reducing their perceived security risks toward voice intelligence in the customer value chain. However, it did not significantly affect users' perceived privacy concerns.

In contrast, the results did not reveal any significant influence of multimodal biometric authentication on users' PEU (β = −0.25, p > 0.39), PU (β = −0.29, p > 0.30), PSR (β = −0.03, p > 0.92), or PPC (β = −0.28, p > 0.53). This implies that the effect of multimodal biometric authentication on users' adoption of voice intelligence does not operate through these four user perceptions.

The analysis further revealed that the direct effects of PEU (β = 0.65, p < 0.001), PU (β = 0.66, p < 0.001), PSR (β = −0.56, p < 0.001), and PPC (β = −0.33, p < 0.001) on user adoption of voice intelligence were all significant. This indicates that a higher level of perceived ease of use and perceived usefulness and a lower level of perceived security risks and perceived privacy concerns lead to an increased likelihood of users adopting voice intelligence in the customer value chain.

The mediation test confirmed the mediating role of PEU (β = 0.42, p < 0.05), PU (β = 0.25, p < 0.05), and PSR (β = 0.43, p < 0.05) between anthropomorphism and user adoption of voice intelligence. This indicates that incorporating anthropomorphic characteristics into voice intelligence positively influences user adoption via perceived ease of use, perceived usefulness, and perceived security risks.

The SEM analysis used bootstrapping with 10,000 resamples to assess the mediation effects. Bootstrapping is a preferred method because its results are not systematically shifted toward zero by the positively skewed distribution of the indirect effect (Koopmans et al., 2014). Nevertheless, existing literature points out that bootstrapping may have a high Type I error rate (Fritz et al., 2012; Koopmans et al., 2014). A Type I error is the rejection of a true null hypothesis (Cohen et al., 2003). The sample size of this study may increase the Type I error rate of bootstrapping: the probability of an error rate above 5% starts to increase once the sample size exceeds N = 140 observations (Koopmans et al., 2014). Therefore, additional Sobel tests were performed to corroborate the bootstrapped mediation results.
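The authors ran the bootstrap in lavaan; the Python sketch below is a swapped-in, stdlib-only illustration of the same idea for one observed mediator, not lavaan's implementation and not a latent-variable SEM. It estimates path a (treatment X to mediator M) and path b (M to adoption Y, controlling for X) by ordinary least squares, resamples respondents with replacement, and takes the 2.5th and 97.5th percentiles of a × b as a 95% interval. It uses 2,000 resamples rather than 10,000 to keep the run short, and the synthetic data are purely hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def _mean(v: Sequence[float]) -> float:
    return sum(v) / len(v)


def slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Path a: OLS slope of y on x (with intercept)."""
    mx, my = _mean(x), _mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def partial_slope(y: Sequence[float], m: Sequence[float], x: Sequence[float]) -> float:
    """Path b: coefficient of m in the OLS regression y ~ 1 + x + m (closed form)."""
    mx, mm, my = _mean(x), _mean(m), _mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((b - mm) ** 2 for b in m)
    sxm = sum((a - mx) * (b - mm) for a, b in zip(x, m))
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    smy = sum((b - mm) * (c - my) for b, c in zip(m, y))
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def bootstrap_indirect(x, m, y, n_boot: int = 2000, seed: int = 1) -> Tuple[float, float, float]:
    """Point estimate of a*b and its 95% percentile bootstrap interval."""
    rng = random.Random(seed)
    n = len(x)
    estimate = slope(x, m) * partial_slope(y, m, x)
    draws: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        xs, ms, ys = [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]
        draws.append(slope(xs, ms) * partial_slope(ys, ms, xs))
    draws.sort()
    return estimate, draws[int(0.025 * n_boot)], draws[int(0.975 * n_boot) - 1]


if __name__ == "__main__":
    rng = random.Random(7)
    x = [i % 2 for i in range(240)]                                  # with/without treatment
    m = [0.8 * xi + rng.gauss(0, 0.5) for xi in x]                   # hypothetical mediator
    y = [0.7 * mi + 0.1 * xi + rng.gauss(0, 0.5) for xi, mi in zip(x, m)]
    est, lo, hi = bootstrap_indirect(x, m, y)
    print(f"indirect effect {est:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

Mediation is considered supported when the percentile interval excludes zero, which requires no normality assumption for a × b.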

Consistent with the SEM analysis with bootstrapping, the additional Sobel tests of the mediating effects demonstrated that PEU (Z = 2.28, p < 0.05), PU (Z = 2.52, p < 0.05), and PSR (Z = 2.12, p < 0.05) significantly mediate the effect of anthropomorphism on the adoption of voice intelligence.
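The Sobel test divides the indirect effect a × b by its first-order standard error, √(b²·SEa² + a²·SEb²), and compares the result with the standard normal distribution. The Python sketch below implements that textbook formula; the path coefficients and standard errors in the usage line are hypothetical, not taken from Table 9.

```python
import math
from typing import Tuple


def sobel_test(a: float, se_a: float, b: float, se_b: float) -> Tuple[float, float]:
    """First-order Sobel test for the indirect effect a*b; returns (z, two-tailed p)."""
    se_ab = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = a * b / se_ab
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    z, p = sobel_test(a=0.5, se_a=0.1, b=0.4, se_b=0.1)  # hypothetical paths
    print(f"z = {z:.2f}, p = {p:.3f}")  # z = 3.12, p = 0.002
```

Because it assumes a normal sampling distribution for a × b, the Sobel test is usually treated as a complementary check rather than a replacement for the bootstrap interval.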

According to the analysis above, anthropomorphism affects user adoption of voice intelligence through perceived ease of use, perceived usefulness, and perceived security risks; hence, hypotheses H1–H3 were supported. Anthropomorphism does not influence users' perceived privacy concerns, and multimodal biometric authentication does not affect any of the user perceptions. However, all types of user perception affect users' willingness to adopt voice intelligence. Therefore, hypotheses H4–H8 were partially supported. Table 10 summarizes the results of the hypothesis testing.

User perception in this study consists of four specific types, namely PEU, PU, PSR, and PPC. The average of these four scores for each response was calculated to represent overall user perception of voice intelligence. A factorial ANOVA was then performed to examine the effects of anthropomorphism (ANT) and multimodal biometric authentication (MBA) on overall user perception. Table 11 reveals that ANT [F(1) = 11.72, p < 0.001], MBA [F(1) = 28.27, p < 0.001], and their interaction [F(1) = 6.50, p < 0.05] all significantly influence user perception of voice intelligence.

Moreover, a multiple regression model was used to examine the impact of the demographic and control variables, in addition to the four mediators, on user adoption of voice intelligence. The results (see Table 12) show that the effects of all the mediators on user adoption remained significant. Additionally, being aged between 35 and 44 (β = 0.42, p < 0.05) and holding a Bachelor degree (β = 0.50, p < 0.01) or a Master degree (β = 0.42, p < 0.05) were predictors of user adoption of voice intelligence. Gender and location of residence did not significantly influence user adoption.

These findings suggest that while location and gender do not affect user adoption of voice intelligence, users with a higher level of education are more likely to adopt voice intelligence in the customer value chain.

In this section, we further discuss the results drawn from the online experiment. Our study confirms the effects of perceived ease of use, usefulness, security risk, and privacy concerns on user adoption of voice intelligence. These findings are in line with prior research on the influence of different user perceptions on technology adoption (e.g., de Boer and Drukker, 2011; Jokinen, 2015; Moorthy and Vu, 2015; Zhang et al., 2019). However, our findings on the effects of anthropomorphism and multimodal biometric authentication partly contradict our hypotheses and existing studies. Therefore, our discussion focuses on these two influential factors.

Our study confirms that when anthropomorphic characteristics are incorporated into voice intelligence, users are more likely to adopt the technology in the customer value chain, specifically in the evaluation and purchase phases. This effect is largely indirect, through users' perceived ease of use, perceived usefulness, and perceived security risk. This finding is in line with previous research, which contends that anthropomorphism enhances human-machine interaction (Waytz et al., 2014; Kinsella, 2019) by incorporating characteristics that represent a higher state of mind (Choi et al., 2001; Qiu et al., 2020; Toader et al., 2020), and thus motivates users to adopt the technology more frequently and widely in their daily lives (de Boer and Drukker, 2011; Jokinen, 2015).

However, according to our research, anthropomorphic characteristics do not influence user adoption of voice intelligence through perceived privacy concerns, because anthropomorphism does not affect perceived privacy concerns. This contradicts the literature on anthropomorphism asserting that humanizing the interaction with technology mitigates users' privacy concerns by strengthening perceived control and reliability (Exline and Geyer, 2004; Pitardi and Marriott, 2021).

A possible reason for this is the influence of demographic factors. Graeff and Harmon (2002) point out that consumers' privacy concerns vary among demographic market segments when they purchase online. They find that younger consumers are more aware of data collection and privacy risks. This may be due to their relatively high data literacy as “digital natives”. Other prior research also demonstrates a positive relationship between education and privacy concerns (Graeff and Harmon, 2002; Zhang et al., 2002). With more knowledge about the technology, data collection and risks, consumers may have more privacy concerns when they use the technology throughout the customer value chain.

In our experiment, almost 90 percent of the subjects were young, aged between 18 and 44, and highly educated, holding either Bachelor or Master degrees. As discussed above, they may have had a better understanding of voice intelligence and of its collection of personal and sensitive information. This may in turn have heightened their awareness of the possible risks of interacting with voice intelligence in the customer value chain. As a result, their perceived privacy concerns toward voice intelligence were generally salient (M = 5.19, SD = 1.17), despite the social presence and attractiveness evoked by the anthropomorphic characteristics.

Our results do not show the hypothesized effect of multimodal biometric authentication on users' perceived ease of use, usefulness, security risk, or privacy concerns. This contrasts with existing studies that demonstrate the significance and growing importance of biometric authentication for both user trust and user confidence during HCI (Gardner et al., 1993; Westcott et al., 2018). A possible reason is the friction–time trade-off (Guzman, 2018; Kemp, 2019). Kemp (2019) argues that this trade-off affects users' perceived ease of use and usefulness of the technology. In their study on continuous multimodal biometric authentication (CMBA), Ryu et al. (2021) found that current biometric authentication systems focus predominantly on the user re-authentication process without giving enough attention to the user experience. Such additional authentication steps require more time and effort from consumers during HCI in the customer value chain. When the time cost of authentication outweighs users' perceived benefits, consumers perceive no improvement in the ease of use and usefulness of the technology, and sometimes even a decline (El-Abed et al., 2010).

In our study, when multimodal biometric authentication was applied, subjects needed to complete facial recognition in addition to voice recognition for authentication in the purchase phase of the customer value chain. Compared with the unimodal biometric authentication conditions, this multimodal approach requires users to spend more time and effort. As a result, their perceived ease of use and usefulness of voice intelligence in the customer value chain are unlikely to improve. The path analyses even indicate a negative, though not statistically significant, effect on perceived ease of use (β = −0.25) and usefulness (β = −0.29). This finding mirrors that of the CMBA study (Ryu et al., 2021).

Moreover, although our results do not provide statistically significant evidence that multimodal biometric authentication influences users' perceived security risk and privacy concerns, the path coefficients point in the hypothesized direction: implementing multimodal biometric authentication in voice intelligence is associated with lower perceived security risk (β = −0.033) and privacy concerns (β = −0.280). The non-significant results may be due to the lack of an immersive experimental environment. In our experiment, subjects could only listen to a conversation instead of talking directly with “Iris”. This may have led to a different, inaccurate, or even biased perception of the voice intelligence device. As a result, the measured perceived security risk and privacy concerns may not fully or accurately reflect the effect of multimodal biometric authentication.

Finally, according to the post-hoc analysis in Section Post-hoc analysis, both anthropomorphism [F(1) = 11.72, p < 0.001] and multimodal biometric authentication [F(1) = 28.27, p < 0.001] affect overall user perception of voice intelligence. The effect of multimodal biometric authentication (η² = 0.11) is larger than that of anthropomorphism (η² = 0.05). Unlike the results for the four specific perceived factors, this finding is consistent with previous research. Zhang et al. (2019) conclude that an authentication process that does not require any touch interaction from the user improves the overall experience of human-machine interaction. It can therefore be argued that although multimodal biometric authentication may not significantly enhance any of the specific user perceptions, it has a striking influence on the aggregate level of user experience.
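
For readers comparing the effect sizes above, the sketch below shows how partial eta squared relates to an F statistic and its degrees of freedom. It assumes that the reported η² values are partial eta squared; the error degrees of freedom are not reported in this section, so the example uses hypothetical numbers rather than the study's, and the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic (hypothetical helper).

    F = (SS_effect / df_effect) / (SS_error / df_error), so
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = (F * df_effect) / (F * df_effect + df_error)
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Hypothetical numbers (not the study's): F(1, 90) = 10.0
print(partial_eta_squared(10.0, 1, 90))  # prints 0.1
```

Because both main effects come from the same model and share the same error degrees of freedom, a larger F with one effect degree of freedom translates directly into a larger partial η², which is why the two factors can be ranked by effect size.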

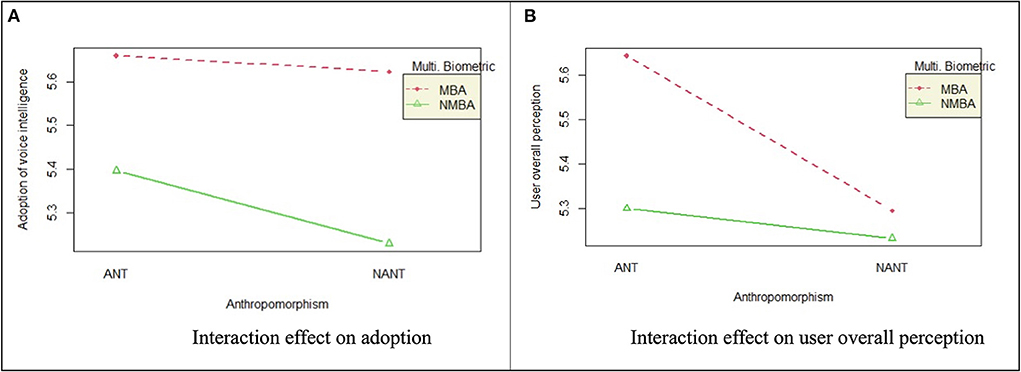

Our research does not reveal a statistically significant interaction effect of anthropomorphism and multimodal biometric authentication on user adoption of voice intelligence. However, their marginal interaction effect, illustrated by the clearly non-parallel lines in Figure 2A, yields some interesting findings.

Figure 2. Interaction effects. (A) Examining the effect of anthropomorphism and multimodal biometric authentication on the customer's willingness to adopt voice technology in their value chain. (B) Examining the effect of anthropomorphism and multimodal biometric authentication on the overall customer perception of voice technology in general.

First, as Figure 2A shows, the direct effect of anthropomorphism on user adoption of voice intelligence varies with the presence or absence of multimodal biometric authentication. When multimodal biometric authentication is absent, the difference in user adoption between the conditions with and without anthropomorphic characteristics is larger than when multimodal biometric authentication is present. This suggests that the effect of anthropomorphism on user adoption is influenced by multimodal biometric authentication.

Second, regardless of the presence or absence of anthropomorphic characteristics, the impact of multimodal biometric authentication on user adoption remains similar (see Figure 2A). This suggests that the influence of multimodal biometric authentication is barely affected by anthropomorphism.

Third, overall, users show the greatest willingness to adopt voice intelligence when both features are applied, and the least when neither is present.
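
The reading of non-parallel lines in Figure 2A can be expressed as simple effects and an interaction contrast (a difference of differences) computed from the four cell means of the 2 × 2 design. The cell means below are hypothetical values chosen only to mimic the qualitative pattern described above, not the study's estimates; factors are coded 0 (absent) and 1 (present), and the function and key names are illustrative.

```python
def two_by_two_effects(cells):
    """Simple effects and interaction contrast for a 2 x 2 design (hypothetical helper).

    cells maps (anthropomorphism, biometric) -> cell mean,
    with each factor coded 0 = absent, 1 = present.
    """
    anthro_without_bio = cells[(1, 0)] - cells[(0, 0)]
    anthro_with_bio = cells[(1, 1)] - cells[(0, 1)]
    bio_without_anthro = cells[(0, 1)] - cells[(0, 0)]
    bio_with_anthro = cells[(1, 1)] - cells[(1, 0)]
    # Difference of differences: 0 means parallel lines in an interaction plot.
    interaction = anthro_with_bio - anthro_without_bio
    return {
        "anthro_without_bio": anthro_without_bio,
        "anthro_with_bio": anthro_with_bio,
        "bio_without_anthro": bio_without_anthro,
        "bio_with_anthro": bio_with_anthro,
        "interaction": interaction,
    }


# Hypothetical cell means mimicking the qualitative pattern in Figure 2A.
cells = {(0, 0): 4.0, (1, 0): 4.5, (0, 1): 5.0, (1, 1): 5.25}
effects = two_by_two_effects(cells)
print(effects)
```

The interaction contrast is algebraically the same whichever factor is treated as the moderator. Whether such a contrast is statistically significant depends on the within-cell variance, which a full two-way ANOVA accounts for and this sketch does not.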

Regarding the effect of these two factors on overall user perception, a significant interaction effect is found [F(1) = 6.50, p < 0.05], although it is small (η² = 0.03). Figure 2B shows that the difference in overall user perception between the conditions with and without anthropomorphic characteristics is larger when multimodal biometric authentication is present than when it is absent. This indicates that the effect of anthropomorphism on overall user perception is influenced by multimodal biometric authentication.

Similarly, when anthropomorphic characteristics are present, the difference in overall user perception between the conditions with and without multimodal biometric authentication is larger than when anthropomorphic characteristics are not incorporated.

Moreover, user perception reaches its highest level when both anthropomorphic characteristics and multimodal biometric authentication are applied to the voice intelligence device. Conversely, when neither feature is incorporated, user perception is at its lowest. This finding is consistent with prior research stating that both anthropomorphism and multimodal biometric authentication affect user perception by visualizing and creating a more personalized and safer interaction (Jain et al., 2004; Waytz et al., 2014; Li and Suh, 2021).

Accordingly, it can be argued that multimodal biometric authentication can amplify the advantages of incorporating anthropomorphic characteristics into voice intelligence, further enhancing users' perception of the technology when they interact with voice intelligence in different phases of the customer value chain. As a result, users are more likely to adopt voice intelligence. Nevertheless, when anthropomorphic characteristics are absent from the interface design, multimodal biometric authentication becomes of paramount importance in strengthening user perception and adoption of voice intelligence.

In this section, we summarize the key findings of this study, present its contributions to the literature and its business implications, and discuss its limitations and possible directions for future research.

This paper examines the influence of socio-technological factors on the adoption of AI voice intelligence. We studied the impact of anthropomorphism and multimodal biometric authentication on user adoption of voice intelligence in the customer value chain. Our empirical findings reveal that the use of anthropomorphic characteristics and multimodal biometric authentication positively affects users' willingness to adopt voice intelligence by enhancing their overall experience of interacting with the technology. Users' perceptions, particularly perceived ease of use, usefulness, security risk, and privacy concerns, determine their willingness to adopt voice intelligence.

The effect of anthropomorphism on the adoption of voice intelligence operates specifically through perceived ease of use, usefulness, and security risk. Multimodal biometric authentication directly affects users' adoption of voice intelligence. Although it does not significantly influence any of the specific perceived factors, it improves overall user perception of the technology at the aggregate level. Privacy concerns, however, are not significantly affected by either factor. This may be due to the demographic characteristics of the sample: young and highly educated users generally have a high awareness of the privacy implications of data collection and its possible risks.

When both anthropomorphism and multimodal biometric authentication are incorporated into the interface design, users' perception toward the human-machine interaction and their willingness to adopt voice intelligence reach the highest level.

This research makes several contributions to the literature on HCI and voice intelligence. First, we theoretically develop and empirically test the simultaneous impact of anthropomorphism and biometric authentication on users' experience of interacting with machines and on their adoption of the technology. Unlike prior research that was limited to either anthropomorphism (Choi et al., 2001; Waytz et al., 2014; Rzepka and Berger, 2018; Moussawi et al., 2020; Toader et al., 2020; Melián-González et al., 2021) or biometric authentication (Jain et al., 2004; Zhang et al., 2019), this paper examines their interaction effect, contributing to a deeper understanding of these two influential factors in HCI.

Second, this paper extends the existing literature on the impact of HCI on the adoption of mobile technology. We identify the user perception factors of HCI from the perspective of fundamental human emotions (de Boer and Drukker, 2011; Hassenzahl and Tractinsky, 2011; Jokinen, 2015) and their relevance to verbalized information-based technology (van Biljon and Kotzé, 2004; van Biljon and Renaud, 2008). Thus, this study also contributes to the literature on technology acceptance in the specific context of natural language use.

With regard to voice intelligence, this study is one of the first to empirically test the effect of multimodal biometric authentication on user experience and adoption of AI-based technology. This extends the literature on biometric authentication and the authentication process of AI during various value chain activities (Ross and Jain, 2004; Mahfouz et al., 2017). In addition, to complement existing studies that focus primarily on non-commercial applications in the healthcare and education sectors (Todorov et al., 2018; de Barcelos Silva et al., 2020), we emphasize the use of voice intelligence in the customer value chain. This contributes to the understanding of user perception toward voice intelligence when consumers evaluate and purchase products and services.

This paper provides valuable insights into the user interface design of voice intelligence. First, to incorporate anthropomorphic characteristics into voice intelligence, it is important to address functional intelligence, sincerity, information creativity, and voice tone variation. These elements are associated with user perception and experience. According to our findings, the proper design of these four anthropomorphic characteristics improves the user experience of voice intelligence during the customer journey.

Second, multimodal biometric authentication can significantly improve user perception and motivate users to adopt voice intelligence in the customer value chain. Although our research does not show its association with specific perceived factors, it is evident that the use of multimodal biometric authentication positively influences overall user perception.

Moreover, anthropomorphic characteristics and multimodal biometric authentication complement each other. Adopting either of them offers a clear benefit, and when both are used, customers are likely to experience the most favorable interaction with voice intelligence.

Our study also offers insights for companies that wish to adopt zero-touch interaction with their customers. We find that individuals aged 35 to 44 with a high level of education are more likely to adopt voice intelligence in the customer value chain. However, they also generally have high privacy concerns: because they know more about technology and data collection, they are more aware of potential risks and privacy issues. High privacy concern negatively influences user adoption. Companies that wish to successfully target this segment with voice intelligence must carefully handle the customers' personal data involved in the interaction in order to mitigate perceived privacy concerns.

As discussed, in our online experiment subjects could not interact directly with a voice assistant; we could only provide them with a conversation to listen to. Their perceptions were therefore measured based on indirect user experience, which may have reduced the accuracy of the measurement. Future research could develop more advanced treatments that enable a direct verbal dialogue between the subjects and the voice assistant. Creating a real-life scenario and user experience could enhance the accuracy of the measured user perceptions.