- 1Department of Computer Science, Colorado State University, Fort Collins, CO, United States

- 2The U.S. Naval Research Laboratory, Washington, DC, United States

When working in an unfamiliar online environment, it can be helpful to have an observer that can intervene and guide a user toward a desirable outcome while avoiding undesirable outcomes or frustration. The Intervention Problem is deciding when to intervene in order to help a user. The Intervention Problem is similar to, but distinct from, Plan Recognition because the observer must not only recognize the intended goals of a user but also when to intervene to help the user when necessary. We formalize a family of Intervention Problems and show that how these problems can be solved using a combination of Plan Recognition methods and classification algorithms to decide whether to intervene. For our benchmarks, the classification algorithms dominate three recent Plan Recognition approaches. We then generalize these results to Human-Aware Intervention, where the observer must decide in real time whether to intervene human users solving a cognitively engaging puzzle. Using a revised feature set more appropriate to human behavior, we produce a learned model to recognize when a human user is about to trigger an undesirable outcome. We perform a human-subject study to evaluate the Human-Aware Intervention. We find that the revised model also dominates existing Plan Recognition algorithms in predicting Human-Aware Intervention.

1. Introduction

Even the best plan can go wrong. Dangers arise from failing to execute a plan correctly or as a result of actions executed by a nefarious agent. Consider route planning where a driver is unaware of upcoming road damage or a traffic jam. Or consider cyber-security where a user is unaware of an unsafe hyperlink. In both, plans achieving the desirable goal have similar prefixes to those that result in undesirable outcomes. Suppose an observer watches the actions of a user working in a risky environment where plans may be subverted to reach an undesirable outcome. We study the problem of how the observer decides whether to intervene if the user appears to need help or the user is about to take an action that leads to an undesirable outcome.

We introduce Plan Intervention as a new computational problem and relate it to plan recognition. The Plan Intervention problem can be thought of as consisting of two sub-problems: (1) Intervention Recognition and (2) Intervention Recovery. In the Intervention Recognition phase, the observer needs to make a decision whether or not the user's likely plan will avoid the undesirable state. Thus, it is possible to argue that if the observer implements an existing state-of-the-art plan recognition algorithm (e.g., Plan Recognition as Planning Ramırez and Geffner, 2009), then intervention can take place when the likely goal of the recognized plan satisfies the undesirable state.

In fact, using existing plan recognition algorithms to identify when intervention is required has been studied in several works (Pozanco et al., 2018; Shvo and McIlraith, 2020). A key strategy in these solutions is to reduce the pending goal hypotheses set to expedite recognition. In this paper, we propose a different approach to perform recognition where the observer uses automated planning combined with machine learning to decide whether the user must be interrupted to avoid the undesirable outcome. Our long term objective is to develop this intervention model as an assistive teaching technology to gradually guide human users through cognitively engaging tasks. To this end, for the Intervention Recovery phase we want to enable the observer to actively help the human user recover from intervention and continue the task. We leave Intervention Recovery for future work.

We show that the Intervention Problem carries subtleties that the state-of-the-art Plan Recognition Algorithms do not address where it is difficult for the observer to disambiguate the desirable and the undesirable outcomes. We propose two complementary solutions for Plan Intervention: (1) Unsafe Suffix Intervention and (2) Human-aware Intervention. We show that these two complementary solutions dominate state-of-the-art plan recognition algorithms in correctly recognizing when intervention is required.

Typically, the state-of-the-art plan recognition algorithms reconstruct plan hypotheses from observations. Ramırez and Geffner (2009) and Ramırez and Geffner (2010) reconstruct plan hypotheses by compiling the observations away and then using an automated planner to find plans that are compatible with the observations. Another approach generates the plan hypotheses by concatenating the observations with a projected plan obtained from an automated planner (Vered and Kaminka, 2017). The costs of the reconstructed plan hypotheses then lead to a probability distribution over likely goals of the user. Existing plan recognition algorithms require that prior probabilities of likely goals be provided as input.

For intervention, providing goal priors is difficult because certain facts about the domain are hidden to the user and unintended goals may be enabled during execution regardless of the priors. Furthermore, human actors may not construct plans the same way as an automated planner. They may make mistakes early on during tasks having a steep learning curve. Partial knowledge about the domain may preclude the human user from knowing the full effects of his actions or he may not be thinking about the effects at all. Therefore, the observer may not always be able to accurately estimate what the users are trying to do.

We study two kinds of Intervention Problems. In Unsafe Suffix Intervention, the observer uses automated planners to project the remaining suffixes and extract features that can differentiate between safe and unsafe plans. We evaluate the recognition accuracy of Unsafe Suffix Intervention on benchmark planning problems. In Human-aware Intervention, the observer uses the observed partial solution to extract features that can separate safe and unsafe solutions. We evaluate the accuracy of Human-aware Intervention on a new Intervention Planning benchmark called Rush Hour.

The contributions of this paper are:

• formalizing the online Intervention Problem to determine when to intervene;

• modeling the observer's decision space as an Intervention Graph;

• defining features to assess the criticality of a state using the Intervention Graph and the sampled plans;

• extending existing benchmarks by Ramırez and Geffner (2009; 2010) to incorporate Intervention and evaluating our intervention approach for the extended benchmarks;

• introducing a new Plan Intervention benchmark domain called Rush Hour. This is a cognitively engaging puzzle solving task where a player moves vehicles arranged on a grid to clear a path for a target vehicle.

• formalizing the Human-aware Intervention Problem for the Rush Hour planning task and designing features to estimate the criticality using behavior features derived from the observed partial plan;

• presenting the results from a human-subjects study where we collected human behavior on Rush Hour;

• extending an existing plan recognition model to the Intervention Problem; and

• training and evaluating the classification models using Rush Hour puzzle solutions collected from a human subject experiment and showing that the approach works well for the Rush Hour problem.

The rest of this paper is organized as follows. In Section 2, we distinguish intervention from plan recognition. In Section 3 we define a general form of the Intervention Problem and introduce three variants: (1) Intervention for a Single User, (2) Intervention in the Presence of a Competitor and (3) Human-aware Intervention. In Section 4, we present approaches for Intervention for a Single User and Intervention in the Presence of a Competitor, both of which use the Intervention Graph and the sampled plans to recognize unsafe suffixes. Section 5 presents our evaluation of Unsafe Suffix Intervention. We compare the accuracy of our proposed algorithms against the state-of-the-art plan recognition algorithms on planning domains from the International Planning Competition (IPC). In Section 6, we present Human-aware Intervention, that uses machine learning to determine when to intervene. We introduce a new planning domain called Rush Hour and study how human users solve Rush Hour puzzle as a planning task. We discuss the accuracy of Human-aware Intervention compared to the state-of-the-art plan recognition algorithms when predicting intervention for human users in Section 7. In Section 8, we discuss the state-of-the-art in plan and goal recognition and human user behavior classification. The discussion in Section 9 presents our analysis on why plan recognition falls short in solving Intervention Problems. Section 10 presents the open questions for future research in designing human-aware Intervention models. Section 11 details the human subject experiment we conducted to collect the data required for Human-aware Intervention.

2. Distinguishing Intervention From Plan Recognition

We model intervention in environments where a user is trying to achieve desirable goal(s), denoted d, while avoiding undesirable outcomes, denoted u. Some environments include a competitor who may also take actions in the world, but we assume the user is not aware of the competitor's actions.

We define the observer to be the intervening assistant agent. An observer receives each action and decides whether to 1) intervene, or 2) to allow the action to be executed. The observer holds a history of previous observations H = (o1, o2, …, oi−1) that indicate the actions executed by the user or competitor. Based on these observations, the observer must decide the user is about to do something unsafe (u) or is moving too far away from a desirable goal (d) by creating a projection of possible actions. We call such a projection a suffix. We denote a single suffix projection as X and the set of projections as because there will usually be many projections.

At first glance, it might seem that intervention is a variant of Plan Recognition for d and u. However, there are several subtleties that make intervention unique, which we now discuss.

• Intervention is an online problem. In most cases Plan Recognition (e.g., Ramırez and Geffner, 2009, 2010; Sohrabi et al., 2016a) is an offline problem; there are a few notable exceptions (Mirsky et al., 2018). However, intervention is inherently online and dynamic. The observer decides whether to intervene (or not) every time the user(s) presents an action oi. In order to make the decision, the observer uses the observation history H, which contains accepted actions. Intervention is a multi-agent problem as well. This has ramifications in environments where the user and the competitor compete to achieve close but different goals. With intervention, the observer can help the user by accepting actions into H that only help further the user's goal. This is not possible with offline Plan recognition.

• Agents may have distinct views of the problem. The user and the competitor are modeled with different domain definitions. The user wants to satisfy the desirable state (d), while the observer wants to avoid the hidden undesirable state (u). The competitor is trying to subvert the user's goal by enabling preconditions for u without the user's knowledge. This follows from many real world applications such as cyber-security, where an attacker sends an email to trick the user into visiting a phishing website and reveal a password. When the user and the competitor (if present) reveal their plan(s) incrementally, the observer needs to decide whether the revealed actions make it impossible for the user to avoid state u considering the plans from the user's and the competitor's domain definitions collectively. Any action that make it impossible for the user to avoid u must be for flagged for intervention.

We cannot assume that only the competitor's actions will satisfy u. In the cyber-security example, the attacker only sends the click-bait email. The user, while executing routine tasks on the computer, in fact follows the link and submits password to the phishing web site satisfying u. If the plans for u and d share a long common prefix, it may be difficult for the observer to disambiguate between the goals in time to help the user avoid u.

• Partitioned suffixes. The observer should allow the user to pursue suffixes leading to d and intervene when actions are presented from suffixes that get “too close” to u. Our key insight is to model the “goals” of the user and competitor, which justifies our use of planning to find these suffixes. The observer needs to consider two kinds of goals that might require intervention:

1. Cases where the user is headed toward an undesirable outcome u. These cases can be solved by plan recognition.

2. Cases where the user unwittingly enables an undesirable outcome u by taking actions toward a desired outcome d. There is an inherent trade-off between intervening and allowing the user some freedom to pursue d. Suppose that some suffix Xu leads to u and suffix Xd leads to d. Then it can happen that by simply following the plan leading to d, the user enables u when there is enough overlap between Xu and Xd. To manage these cases, we must consider plans leading to both d and u together. We use the notation u ∪ d to identify when the satisfying d also satisfies u as a side effect. A planning problem with u ∪ d as the goal must generate plans that satisfy both u and d as solutions. The recognition approach we present in this paper focuses on identifying plan suffixes that satisfy d and also will satisfy u as a side effect. Our approach relies on features extracted from the planning problem representation to generate learned models that can solve this recognition problem.

Partitioning the suffixes allows the observer to learn the differences between the safe and the unsafe suffixes and balance specific unsafe actions against allowing users to pursue their goals. For example, in a malicious email attack such as phishing, the user will still want to check email. When u and d are too close, disambiguation based on plan cost may not be sufficient (we will demonstrate with an example shortly).

• Goal priors cannot be estimated reliably. Plan Recognition algorithms use a prior probability distribution over the goal hypotheses to estimate the posterior probability of the likely goals given the observations. We assume the user does not intend to reach the undesired state u (i.e., prior probability ≈ 0). In contrast, a competitor does intend to achieve u (i.e., prior probability ≈ 1). In general, if the priors are not accurate, it can be difficult to disambiguate between plans that reach desirable and undesirable goal states.

• Emphasis on suffix analysis. In Plan Recognition, the observer uses H to derive the user's likely plan. H can be either an ordered sequence of actions (Ramırez and Geffner, 2009, 2010) or an ordered sequence of states (Sohrabi et al., 2016a). Here, our approach deviates from existing plan recognition problem formulation. In the first form of intervention, the observer considers the remaining plans (i.e., suffixes) in safe and unsafe partitions (instead of H) to learn to recognize unsafe suffixes in order to help the user avoid u. We call the first intervention model, Unsafe Suffix Intervention. In the second form of intervention, the observer learns to recognize that the user is not making progress toward d by analyzing the H. However, in contrast to existing plan recognition, which uses the plan cost to recognize the user's plan, we use domain-specific features to recognize in advance that the user's current plan will satisfy u. In the next phase of this work, we intend to study how to enable the observer to help the user recover from intervention to guide the user toward d. The second intervention model is particularly useful when the user is a human, who may not precisely follow an optimal plan. Therefore, we call the second intervention model, Human-aware Intervention.

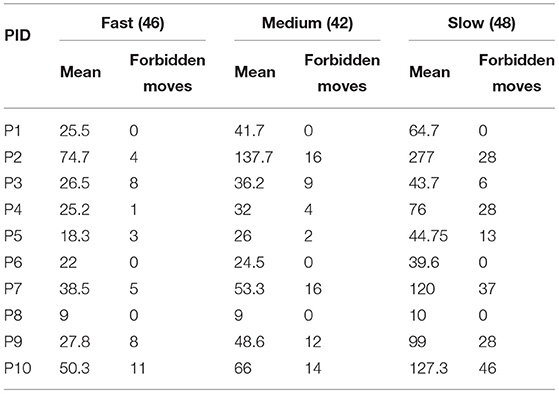

We will present two examples for Unsafe Suffix Intervention. The Grid Navigation domain example is used to illustrate intervention with the user and observer. In the grid navigation task illustrated in Figure 1, the user navigates from W1 to Z3 by moving vertically and horizontally and Y3 contains a pit the user cannot see: d= (AT Z3) and u= (AT Y3). Plans corresponding to paths A, B, C are all feasible solutions to the user's planning task. However, plans B and C are unsafe because they satisfy {u ∪ d}.

Figure 1. In this Grid Navigation domain, a rational user who is unaware of u, may choose to execute plans A, B, or C because they are of equal (optimal) cost. Observer must recognize that intervention is required if the user is executing plans B or C before u is satisfied.

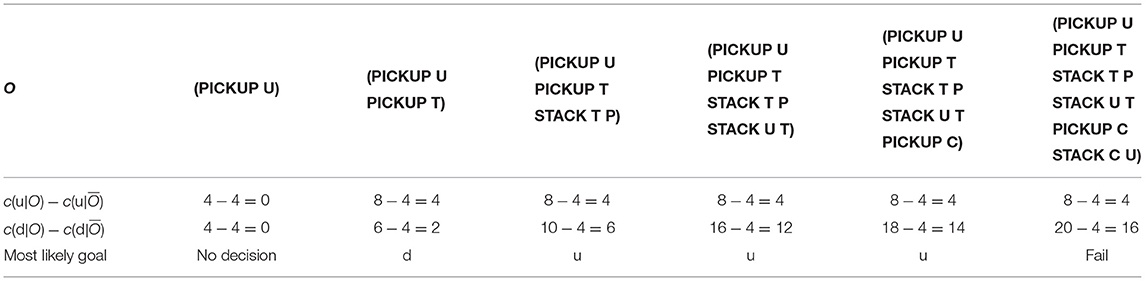

Table 1 shows how an observer modeled as an offline Plan Recognition agent will recognize the goals given observations O for the Grid Navigation problem in Figure 1. Let us assume that the observer implements the Plan Recognition as Planning algorithm introduced by Ramırez and Geffner (2010) to disambiguate between u and d. They show that the most likely goal will be the one that minimizes the cost difference . For each incrementally revealed O (shown in columns), the observer finds the most likely goal that agrees with O. We assumed that the user is following a satisficing plan to achieve goals. For the Grid Navigation example, the observer cannot correctly disambiguate between d and u. More importantly, the final last column satisfies u and it is too late for the user to avoid u.

Table 1. Observer modeled as a plan recognition agent for the grid navigation example in Figure 1.

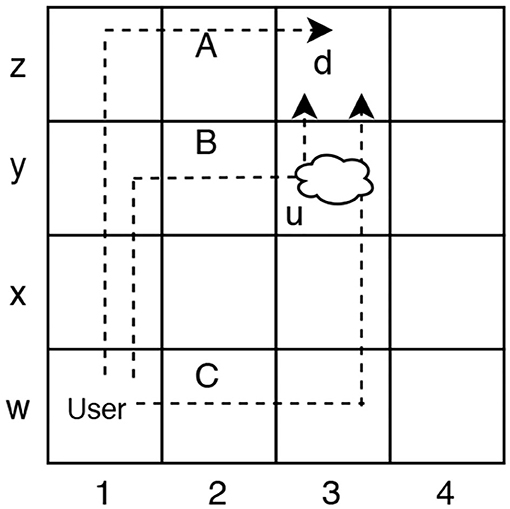

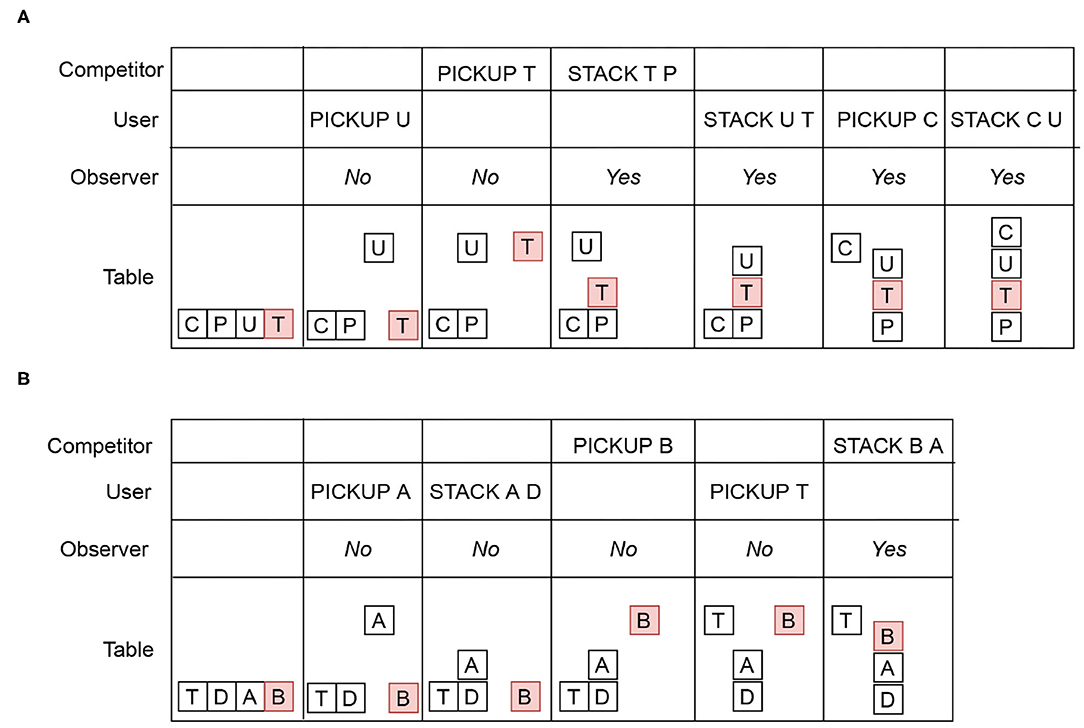

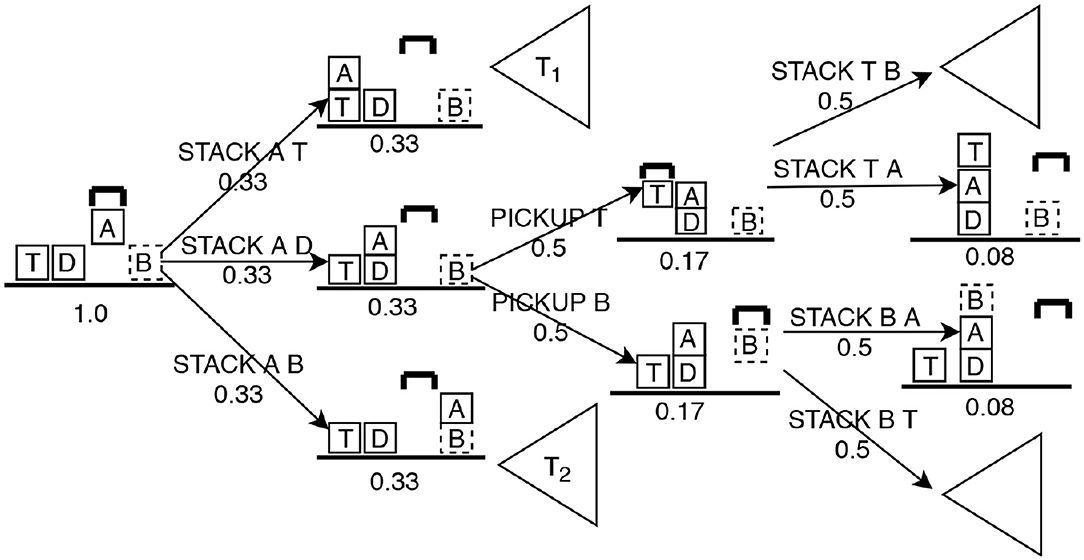

Now let us consider a situation where there may be some additional agent. For example, in a cyber-security application, a second agent may insert malicious code in a file to gain access to a privileged information. More generally, we call this additional agent a competitor, since it is not always the case that they are “attacking” a user. The Blocks Word domain example from Ramırez and Geffner (2009) is used to illustrate intervention with the user, the competitor and the observer. In the Intervention Problem illustrated in Figure 2A, the competitor's goal u= {(CLEAR C)(ON C U)(ON U T)}(i.e., CUT) and the user's goal d = {(CLEAR C)(ON C U)(ON U P)}(i.e., CUP). The user cannot recognize the block T (shown in red). The competitor only modify the state of block T and executes actions with the block T. As shown in the first column, initially, all four blocks are on the table. Both the user and the competitor incrementally reveal their plans. The user's and the competitor's rows in Figure 2A show a reveal sequence from left to right. The row for the table shows the resulting states after each reveal. The observer needs to recognize that when the competitor reveals STACK T P, it becomes impossible for the user to avoid u. However, the user may continue to reveal actions because he cannot recognize the post-condition of STACK T P. The Yes labels in the observer's row indicate that intervention is required. Note that in the Blocks Words example, d and u are distinct enough to disambiguate early.

Figure 2. (A) User and competitor intervention modeled in the Blocks Words domain, where u= (CUT) and d= (CUP). (B) User and competitor intervention where u= (BAD) and d= (TAD). Initially, all four blocks are on the table. An action in the user or competitor row indicates the Intervention Suffix. The Yes label in the observer's row indicates that intervention is required. The No label indicates that intervention is not required.

Table 2 shows how an observer modeled as an offline Plan Recognition agent will recognize the goals for the Blocks Words problem in Figure 2A. Similar to the Grid Navigation example, the Plan Recognition agent implements the Plan Recognition as Planning algorithm introduced by Ramırez and Geffner (2010) to disambiguate between u and d. All other assumptions in the Grid Navigation Plan Recognition task also hold in this problem. The observer distinguishes u and d somewhat better than the Grid Navigation example, because the goals are different enough. The most likely goal identified by the observer aligns with the Yes/No decisions in Figure 2A. However, in the last reveal, the observer correctly identifies u as the goal. However, the observer cannot help the user avoid u in the final reveal because O satisfies u by definition of O in Plan Recognition as Planning. With the proposed intervention algorithms, which learn the distinctions between unsafe and safe plans, we hope to improve the intervention recognition accuracy for the observer, while allowing the user some freedom to satisfy d avoiding u. Furthermore, by accepting actions that only help the user advance toward d safely into the observation history H, our intervention algorithm ensures that the user avoids u.

Table 2. Observer modeled as a Plan Recognition agent for the Blocks Words example in Figure 2A.

3. Defining Intervention Problems

In this section we outline our main assumptions (Section 3.1), discuss the STRIPS planning model (Section 3.2), discuss an important notion of direct or indirect actions leading to u (Section 3.3), and define the general form of the Intervention Problem as well as highlight the family of problems we study in this paper (Section 3.4).

3.1. Preliminaries and Assumptions

Because the observer's objective is to help the user safely reach d, an intervention episode (i.e., a sequence of intervention decisions) is defined from the initial state s0 until d is satisfied. If the competitor is present, the observer decides which actor's presented action to process first, randomly. The actor(s) take turns in presenting actions from their respective domain definitions to the observer until the intervention episode terminates.

We make some simplifying assumptions in this study. Observability: The observer has full observability, and knows about states d and u. u is unknown to the user. d is unknown to the competitor. When we say unknown, it means that the agent does not actively execute actions to enable the unknown goal. The user cannot recognize the effects of a competitor's actions. Plans: The user follows a satisficing plan to reach d, but may reach u unwittingly. There is a satisficing plan to reach u ∪ d and we assume that it has a common prefix with a plan to reach d. We assume that the user continues to present the observer with actions from his original plan even after the first positive flag and does not re-plan. Competitor: When present, the competitor only performs actions using objects hidden to the user; this restriction follows from many security domains where an attacker is a remote entity that sets traps and expects the user to become an unwitting accomplice. The user and the competitor are (bounded) rational agents.

3.2. The Intervention Model

We model the users in the intervention environment as STRIPS planning agents (Fikes and Nilsson, 1971). A STRIPS planning domain is a tuple D = 〈F, A, s0〉 where F is the set of fluents, s0 ⊆ F is the initial state, and A is the set of actions. Each action a ∈ A is a triple a = 〈Pre(a), Add(a), Del(a)〉 that consists of preconditions, add and delete effects, respectively, where Pre(a), Add(a), Del(a) are all subsets of F. An action a is applicable in a state s (represented by subsets of F) if preconditions of a are true in s; pre(a) ∈ s. If an action a is executed in state s, it results in a new state s′ = (s\del(a) ∪ add(a)), and defined by the state transition function γ(s, a) = s′. A STRIPS planning problem is a tuple P = 〈D, G〉, where D is the STRIPS planning domain and G ⊆ F represents the set of goal states. A solution for P is a plan π = {a1, …, ak} of length k that modifies s0 into G by execution of actions a1, …, ak. The effect of executing a plan is defined by calling γ recursively: γ(…γ(γ(s0, a1), a2)…, ak) = G.

The Intervention Problem requires domain models that are distinct for the user, observer, and competitor. Let us define the user's domain model as Duser = (Fuser, Auser, s0). The competitor can see the effects of the user but cannot take user actions. We denote the domain model for the competitor as Dother = (Fuser ∪ Fother, Aother, s0). Although the observer can see the actions of the user and the competitor, it does not execute actions. This is because we are only focused on the recognition aspect of the Intervention Problem. So the observer's domain model is Dobserver = (Fuser ∪ Fother, Auser ∪ Aother, s0).

3.3. The Unsafe Intervention Suffix and Direct/Indirect Contributors

As seen in Section 2, when presented with an action, the observer must intervene after analyzing the remaining plans considering u and u ∪ d. The Intervention Suffix analysis allows the observer to identify the observations that help the user avoid u.

Definition 1 (Intervention Suffix). Let ak be an action that achieves some goal g from state sk−1 (i.e., γ(sk−1, ak) = g). An Intervention Suffix Xg = (a1, a2, …, g) is a sequence of actions that starts in a1 and ends at g ⊂ {u, u ∪ d}.

Suppose that we want to determine a path to u where the Intervention Suffix is Xu = (oi, …, u). By replacing g with u, or u ∪ d, an automated planner can be used to generate an Intervention Suffix. We use the set of Intervention Suffixes (), where generated by the Top-K planner (Riabov et al., 2014) to evaluate Unsafe Suffix Intervention in Section 5. We refer to a single suffix (i.e., plan) leading to u as πu and a set of such plans as Πu. Similarly, we refer to suffixes leading to d and avoids u as πd and a set of such plans as Πd.

Actions may directly or indirectly contribute to u. The direct and indirect contributors express different degrees of urgency to intervene. A directly contributing action indicates that u is imminent and intervention must happen immediately. An indirectly contributing sequence indicates that u is not imminent, but intervention may still be an appropriate decision. Next, we define directly contributing actions and indirectly contributing sequences.

Definition 2 (Direct Contributor). A directly contributing action acrit occurs in an undesirable plan πu ∈ Πu and execution of acrit in state s results in a state s′ such that γ(s, acrit) = u.

Example of a directly contributing action. In the example illustrated in Figure 2B, d = {(ON T A)(ON A D)}(i.e., TAD) and u = {(ON B A)(ON A D)} (i.e., BAD). The user may enable d when he reveals {(STACK USER A D)}, but at the same time this create an opportunity for the competitor to reach u first. However, the observer flags {(STACK COMPETITOR B A)} for intervention (marked Yes) because the post-condition of STACK B A satisfies u. Therefore, STACK COMPETITOR B A is a directly contributing action.

Definition 3 (Indirect Contributor). An indirectly contributing sequence qcrit is a totally ordered action sequence in an undesirable plan in Πu and the first action in qcrit is equal to the first action in the Intervention Suffix x1 ∈ Xu ∪ d. Executing actions in qcrit from state s results in a state s′ such that γ(s, qcrit) = {u ∪ d}.

Example of an indirectly contributing sequence. Figure 2A illustrates an indirectly contributing sequence. The totally ordered sequence {STACK COMPETITOR T P, STACK USER U T, PICKUP USER C, STACK USER C U} is an indirectly contributing sequence because the actions in the sequence together satisfies u ∪ d. Any Intervention Suffix Xg containing actions from an indirectly contributing sequence must be flagged for intervention.

We next formally define the Unsafe Intervention Suffix Xunsafe.

Definition 4 (Unsafe Suffix). An Intervention Suffix X of length k is unsafe if there is at least one action xi ∈ X (1 ≤ i ≤ |X|) such that xi is a directly contributing action or xi is in a indirectly contributing sequence.

In the example in Figure 2B, Xunsafe = (PICKUP USER A, STACK USER A D, PICKUP COMPETITOR B, PICK USER UP T, STACK COMPETITOR B A, u) because of the directly contributing action STACK COMPETITOR B A. In the example in Figure 2A, Xunsafe = (PICKUP USER U, PICKUP COMPETITOR T, STACK COMPETITOR T P, STACK COMPETITOR T P, STACK USER U T, PICKUP USER C, STACK USER C U, u ∪ d) because it contains the actions from an indirectly contributing sequence.

3.4. The Family of Intervention Problems

We now define a general form of the Intervention Problem. Let plan(oi, g) be some general method to generate suffixes for πd and πu; in Section 4.2 we will show how we can use classical planning.

Definition 5 (Intervention Problem). Let be a tuple where D = 〈F, A, s0〉 is a planning domain, d ⊂ F is a desirable state, u ⊂ F is an undesirable state, H = (o1, o2, …, oi−1) is a history of previously observed actions, oi is the presented action that the user would like to perform, and for j ≥ 0 is a set of suffixes leading to u and u ∪ d. The Intervention Problem is a function that determines for the presented action oi whether to intervene.

To decide whether contains an unsafe suffix, the observer analyzes suffixes for plan(oi, u), and plan(oi, u ∪ d). If the observer finds that contains an unsafe suffix then oi is not accepted into H. Let history H = (o1[s1], o2[s2], …, oi−1[sH]) be a sequence of previously observed actions, which started from s0 with the implied resulting states in brackets. The state resulting from applying history to s0 is sH = γ(s0, H). If oi is accepted, then and the effect of oi is represented in state as defined by γ(sH, oi). A solution to is sequence of {No, Yes} decisions for each step i of observations. We next explore special cases of the Intervention Problem, namely single-user intervention, competitive intervention, and the most general form of multi-agent intervention.

3.4.1. Intervention for a Single User

When only the user and the observer are present, the user solves the planning problem Puser = 〈Fuser, Auser, s0, d〉, and incrementally reveals it to the observer. At each point in the plan solving Puser, the observer must analyze , where is generated in some sensible way.

3.4.2. Intervention in the Presence of a Competitor

If a competitor is present, the user's planning problem Puser is the same as before. However, the competitor also solves a planning problem Pother = 〈Fuser ∪ Fother, Aother, s0, u〉. Note that the competitor has a limited set of actions in Aother to create states that will lead to u and Aother ∩ Auser = ∅. The user's and the competitors solutions to Puser and Pother are revealed incrementally. Therefore, . To decide whether X is unsafe, the observer analyzes , where Dother = 〈Fuser ∪ Fother, Aother ∪ Auser, s0〉. The observer accepts oi into H as before.

3.4.3. Human-Aware Intervention

When performing tasks with a steep learning curve (e.g., a puzzle, problem solving), human users may initially make more mistakes or explore different (sub-optimal) solution strategies. Over time, a human may learn to make better choices that result in more efficient plans. Because of the inconsistencies in solution search strategy, we cannot accurately project the goals of the human user. Learning properties about the history H will help the observer recognize when the user is about to make a mistake and use that information to guide the search task on behalf of the user. When humans are solving problems in real time, the criteria for intervention may place more emphasis on the history H than on the suffixes . In Section 6, we consider the special case where .

4. Recognizing Unsafe Suffixes

We present two solutions for recognizing unsafe suffixes. Recall that in Definition 5, the observer's decision space is derived such that for j≥0. In the first solution, we implement the function plan(oi, g) as an Intervention Graph (in Section 4.1 and Section 4.2). In the second solution, we implement plan(oi, g) by sampling the plan space using an automated planner and use plan distance metrics to make the decision about whether to intervene (Section 4.3).

4.1. The Intervention Graph

The Intervention Graph models the decision space of the observer for the Intervention Problem . We can extract several features from the Intervention Graph to derive functions that map the presented observation oi to intervention decisions. The Intervention Graph captures where u, and u ∪ d lie in the projected state space from sH. We can use properties of the graph to evaluate how close the current projection H is to u and u ∪ d and identify directly and indirectly contributing actions.

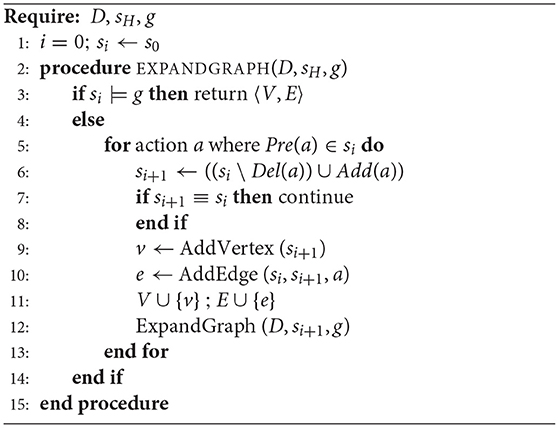

The Intervention Graph consists of alternating state and action layers where each state layer consists of predicates that have been made true by the actions in the previous layer. The root node of the tree is sH. An action layer consists of actions (defined in Duser or Dother) whose preconditions are satisfied in the state from the previous layer. Algorithm 1 describes the process of building the Intervention Graph. The algorithm takes as input a domain theory D (for Duser or Dother), sH and g = {u, u ∪ d} (lines 1-2). When H = ∅, the root of the tree is set to s0. Next, using the domain theory, actions whose preconditions are satisfied at current state are added to the graph (lines 5-6). Each action in level i spawns possible states for level i + 1. Line 7 ensures that the actions that immediately inverts the previous action are not added to the graph. For each resulting state a search node is created, with an edge representing the action responsible for the state transition (lines 8-10). The method is executed recursively for each open search node until d and u are added to the graph generates for the observer (line 11). To ensure that only realistic plans are explored, we do not add no-op actions to the action layers in the graph. When the user and the competitor present new actions, the root of the graph is changed to reflect the new state sH and subsequent layers are modified to that effect.

The Intervention Graph is a weighted, single-root, directed acyclic connected graph IG = 〈V, E〉, where V is the set of vertices denoting possible states the user could be in leading to g, and E is the set of edges representing actions from Duser or Dother depending on single user intervention or competitive intervention. Xunsafe is a path from the root of the IG to u ∪ d or u. In contrast, a safe suffix Xsafe is a path from the root of the IG to d and avoids u.

4.2. Intervention Graph Features

We extract a set of features from the Intervention Graph that help determine whether to intervene. These features include: Risk, Desirability, Distance to d, Distance to u and Percentage of active undesirable landmarks. We use these features to train a classifier that learns to identify actions in acrit and qcrit. Figure 3 illustrates a fragment of the Intervention Graph from Figure 2B after the user presents the action PICK-UP A, which we will use as a running example to discuss feature computation.

Figure 3. Fragment of the decision space after PICKUP A has been proposed for block-words example in Figure 2B. Numbers under each state and action indicate the probability. Sub trees T1 and T2 are not expanded for simplicity.

4.2.1. Risk (R)

Risk quantifies the probability that the presented action will lead to u. We model the uncertainty the observer has about the next action as a uniform probability distribution across the set of applicable actions whose preconditions are satisfied in current state. We define risk R as the posterior probability of reaching u while the user is trying to achieve d. We extract from the Intervention Graph by searching breadth-first from the root until vertices containing d is found, including the paths in which the user has been subverted to reach u. By construction, d will always be a leaf. Let . The set of unsafe intervention suffixes, is such that and and (m ≤ n). We compute posterior probability of reaching u for , using the chain rule in probability as, , and αj ∈ {Auser} or αj ∈ {Auser ∪ Aother} and k is the length of the suffix until u is reached. Then:

In Figure 3, (n = 6) and (m = 1). Since we assumed full observability for the observer, the root of the tree (current state) is assigned the probability of 1.0. Actions that are immediately possible after the current state are each assigned probabilities following a uniform distribution across the branching factor (0.33). Then for each applicable action in the current state, the resulting state gets the probability of (1.0 × 0.33 = 0.33). Similarly, we apply the chain rule of probability for each following state and action level in the graph until u first appears in the suffix. .

4.2.2. Desirability (D)

Desirability measures the effect of the observed action to help the user pursue d safely. Let be the set of suffixes that reach d while avoiding u. Then . We compute posterior probability of reaching d avoiding u for , using the chain rule in probability as, , and αj ∈ {Auser} or αj ∈ {Auser ∪ Aother} and k is the length of path. Then:

In Figure 3, there are five paths where user achieved d without reaching u (two in subtree T1, three in the expanded branch). Following the same approach to assign probabilities for states and actions, . R and D are based on probabilities indicating the confidence the observer has about the next observation.

4.2.3. Distance to u (δu)

This feature measures the distance to state u from the current state in terms of the number of edges in the paths in extracted from the Intervention Graph. We extract from the Intervention Graph from the root to any vertex containing d, including the paths in which the user has been subverted to reach u instead. Let . The set of suffixes that reach u, is such that and and (m ≤ n). We count s, the number of the edges (actions) before u is reached for each path in and δu is defined as the average of these distance values:

In this formula, −1 indicates that the undesirable state is not reachable from the current state. For the example problem illustrated in Figure 3, .

4.2.4. Distance to d (δd)

This feature measures the distance to d from current state. The path set contains action sequences that reach d without reaching u. We count t, the number of the edges where d is achieved safely for a path in . Then, δd is defined as the average of these distances given by the formula:

In this formula, −1 indicates that d cannot be reached safely from the current state. For the example problem illustrated in Figure 3, .

4.2.5. Active Attack Landmark Percentage ()

This feature captures the criticality of the current state toward contributing to u. We used the algorithm proposed by Hoffmann et al. (2004) to extract fact landmarks for the planning problem P = 〈Dother, u〉 or P = 〈Duser, u〉. Landmarks have been successfully used in deriving heuristics in Plan Recognition (Vered et al., 2018) and generating alternative plans (Bryce, 2014). We define attack landmarks () to be those predicates which must be true to reach u. We compute the percentage of active attack landmarks in the current state (), where . In Figure 3, l = 4 and . For each presented action, the Intervention Graph is generated and features are computed, producing the corresponding feature vector. Landmarks are computed apriori.

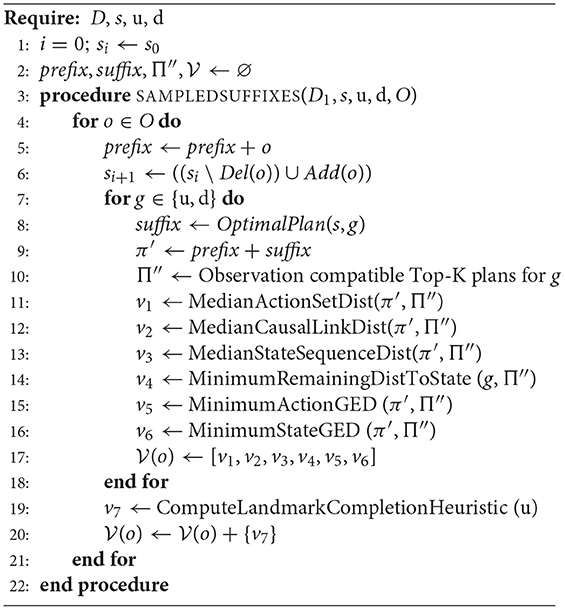

4.3. Plan Space Sampling and Plan Distance Metrics

Extracting Intervention Graph features can be intractable when the graph is large. Therefore, for our second method for implementing plan(oi, g) we define an additional set of features, called Sampled Features by sampling the plan space for the observer. If the Intervention Problem is defined for a single user, we sample the observer's plan space by using an automated planner to find solutions for Pobserver = 〈Fuser, Auser, s0, g〉, where g ∈ {d, u}. If the Intervention Problem is defined for a user and a competitor, we sample the observer's plan space by using an automated planner to find solutions for Pobserver = 〈Fuser ∪ Fother, Auser ∪ Aother, s0, g〉, where g ∈ {d, u}. Note that in this method we omit u ∪ d for generating plans. Sampled plans are generated with the Top-K planner (Riabov et al., 2014). We estimate the Risk and Desirability using plan distance metrics. The intuition is that if the actor is executing an unsafe plan, then that plan should be more similar to a sample of unsafe plans, compared to a sample of safe plans.

For each presented action, the observer computes plan distances between a reference plan (π′) and sampled plans (Π′′) for both u and d. We generate the observation compatible plan by concatenating the observation history with the optimal plan that reaches u (and d) to produce π′ (see Vered and Kaminka, 2017).

We use the Top-K planner with K=50 to sample the plan space. We use Action Set Distance (ASD), State Sequence Distance (SSD), Causal Link Distance (CLD) (Nguyen et al., 2012), Generalized Edit Distance (GED) for sequences of states and actions (Sohrabi et al., 2016b) to measure the distances between the reference plan and the sampled plans for d and u. When an action is presented, π′ is computed. Then, observation compatible Top-K plans are produced for u and d separately. The medians of ASD, CLD and SSD, minimum remaining actions to u and d, minimum action GED and state GED are computed for u and d for all 〈reference, sample〉 pairs. Finally, we also compute the Landmark Completion Heuristic proposed by Pereira et al. (2017). This produces the Sampled Feature vector for the presented action. Algorithm 2 shows the pseudo-code for computing the Sampled Feature vector.

4.4. Learning Intervention

We train a classifier to categorize the presented observation oi into two classes: (Yes) indicating intervention is required and (No) indicating otherwise. We chose Naive Bayes, K-nearest neighbors, decision tree and logistic regression classifiers from Weka1. Given observations labeled as Yes/No and corresponding feature vectors as training examples, we train the classifiers with 10-fold cross validation. The trained model is used to predict intervention for previously unseen Intervention Problems. Attribute selected classifiers filter the feature vector to only select critical features. This step reduces complexity of the model, makes the outcome of the model easier to interpret, and reduces over-fitting.

We generated training data from 20 Intervention Problems using the benchmark domains. We used the Blocks World domain to model competitive Intervention Problems and Ferry, EasyIPC and Navigator domains to model Standard Intervention Problems. We restricted the number of observation traces per Intervention Problem to 100 for training the classifiers.

The decision tree classifier is tuned to pruning confidence = 0.25 and minimum number of instance per leaf = 2. The K-nearest neighbor classifier is tuned to use k = 1 and distance measure = Euclidean. The logistic regression classifier is tuned for ridge parameter = 1.0E-8. The Naive Bayes classifier is tuned with the supervised discretization = True.

5. Evaluating Intervention Recognition

We compare the learning based intervention accuracy to three state-of-the-art Plan Recognition algorithms from the literature. Our evaluation focuses on two questions: (1) Using domain-independent features indicative of the likelihood to reach u from current state, can the observer correctly recognize directly and indirectly contributing suffixes to prevent the user from reaching u? and (2) How does the learning approach perform against state-of the-art Plan Recognition? To address the first question, we evaluated the performance of the learned model on unseen Intervention Problems.

The benchmarks consist of Blocks-words, IPC Grid, Navigator and Ferry domains. For the Blocks-words domain, we chose word building problems. The user and the competitor want to build different words with some common letters. The problems in Blocks-1 model intervention by identifying the direct contributors (acrit), whereas the problems in Blocks-2 model intervention by identifying the indirect contributors (qcrit) in the Blocks-words domain. In the IPC grid domain, the user moves through a grid to get from point A to B. Certain locked positions on the grid can be opened by picking up keys. In the Navigator domain, the user moves from one point on a grid to another. In IPC Grid and Navigator domains, we designated certain locations on the grid as traps. The goal of the user is to navigate to a specific point on the grid without passing through the trap. In the Ferry domain, a single ferry moves cars between different locations. The ferry's objective is to transport cars to specified locations without using a port, which has been compromised.

To evaluate our trained classifiers, we generate 3 separate test problem sets with 20 problems in each set (total of 60) for the benchmark domains. The test problems differ from the training data. The three test problems vary the number of blocks in the Blocks Words domain, size of the grid (Navigator, IPC-Grid), accessible and inaccessible paths on the grid (Navigator, IPC-Grid), and properties of the artifacts in the grid (IPC-Grid). Each test problem includes 10 observation traces (total of 600 test cases). We designed the Blocks-1, IPC-Grid, Ferry, and Navigator problems specifically considering desirable/undesirable goal pairs that are difficult to disambiguate using existing plan recognition algorithms. We designed the problems in Blocks-2 domain to include problems that will be easier to solve by existing plan recognition algorithms.

We define true-positive as the classifier correctly identifying the presented action to be in acrit or qcrit. True-negative is an instance where the classifier correctly identifying an action as not belonging to acrit or qcrit. False-positives are instances where classifier incorrectly identifies an action as belonging to acrit or qcrit. False-negatives are instances where the classifier incorrectly identifies the presented action as not belonging to acrit or qcrit. Naturally, our test observation traces contain a large number of negatives. To offset the bias introduced to the classifier by the class imbalance, we report Matthews correlation coefficient (MCC) because it gives an accurate measure of the quality of a binary classification while taking into account the different class sizes. We also report the F-score for the classifiers, where tp, fp, fn are the number of true positives, false positives and false negatives, respectively.

We implemented three state-of-the art Plan Recognition algorithms to compare the accuracy of intervening by Plan Recognition to the proposed learning based intervention. We selected Ramirez and Geffener's probabilistic Plan Recognition algorithm (Ramırez and Geffner, 2010) (both the satisficing, and optimal implementations) and the Goal Recognition with Goal Mirroring algorithm (Vered et al., 2018). To generate satisficing plans we used the Fast Downward planner with the FF heuristic and context-enhanced additive heuristic (Helmert, 2006). To generate the optimal cost plans we used the HSP planner (Bonet and Geffner, 2001). For each presented action, the observer solves a Plan Recognition problem using each approach. We assumed uniform priors for over u and d. If u is the top ranked goal for the presented action, then it is flagged as requiring intervention. The assumption is that these algorithms must also be able to correctly identify u as the most likely goal for the actions in acrit and qcrit. We used the same test data to evaluate accuracy.

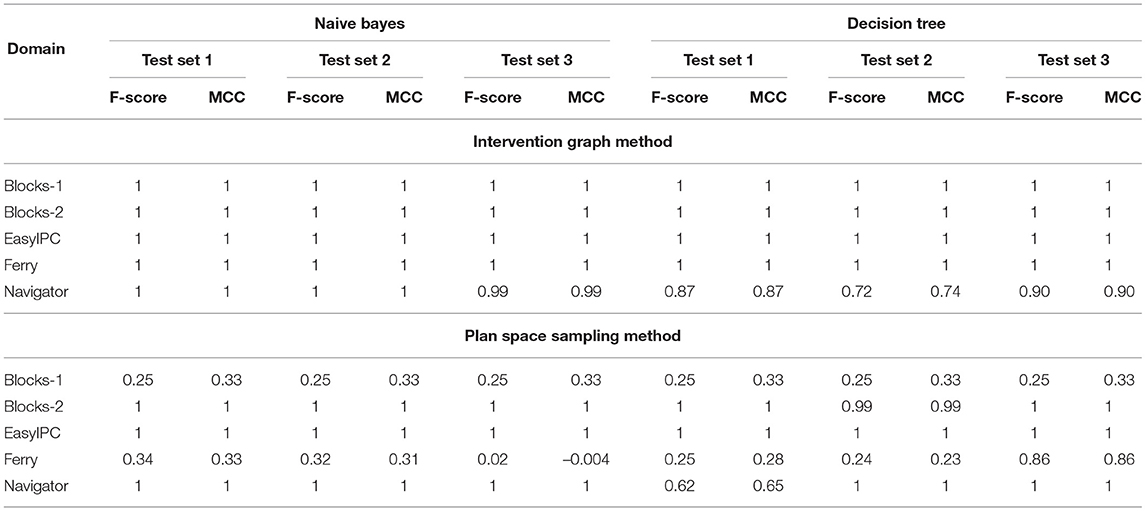

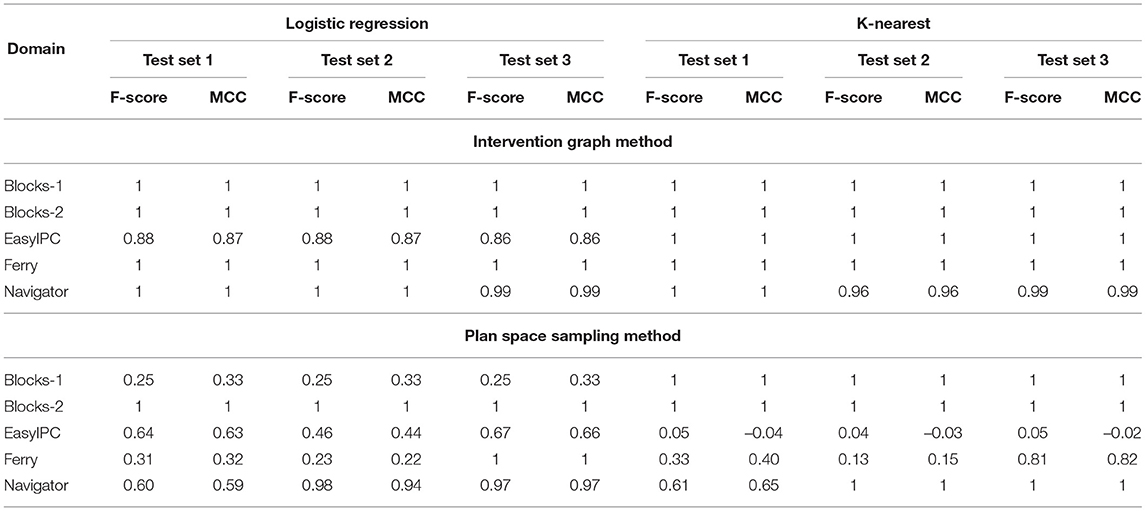

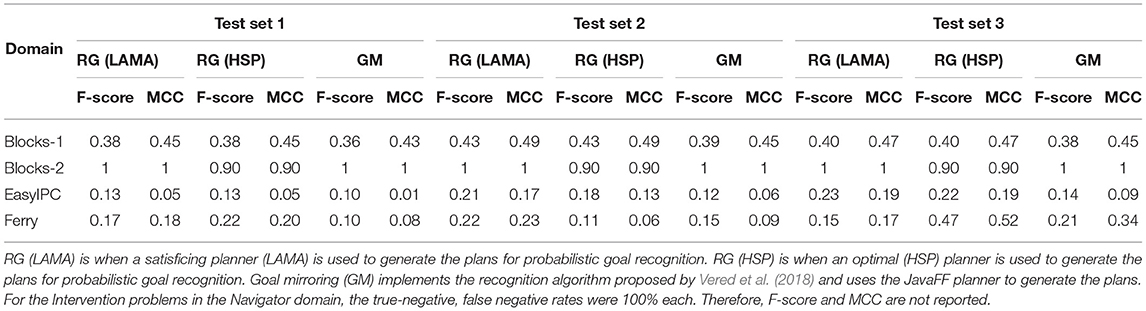

Tables 3 and 4 shows that the classifiers trained with features from the Intervention Graph achieve high accuracy for all the domains when predicting intervention for both identifying actions in acrit and qcrit. The MCC value shows that the imbalance in class sizes does not bias the classifier. Low false positives and false negatives suggest that the user will not be unnecessarily interrupted. As expected performance degrades when we use a sampled plan space to derive features. However, the features derived from sampling the plan space produce equally good classifiers compared to the intervention graph method, when modeling intervention by identifying actions in acrit and qcrit for the Blocks-word problems. For the Intervention Problems modeled using the benchmark domains, we were able to find that at least one of the selected classifiers responded with very high F-score when trained using plan similarity features. The exception to this pattern was the Intervention Problems modeled using the Ferry domain.

Table 3. F-score and MCC for predicting intervention using Intervention Graph and the Plan Space Sampling methods for Naive Bayes and Decision Tree classifiers.

Table 4. F-score and MCC for predicting intervention using Intervention Graph and Plan Space Sampling methods for logistic regression and k-nearest neighbor classifiers.

Features derived from the Intervention Graph accurately recognize actions in acrit and qcrit where the user has limited options available to reach the desirable goal while avoiding the undesirable state. Thus the classifiers perform well in recognizing critical actions in new problems. The sampled features rely on the learning algorithm to produce accurate results when predicting intervention.

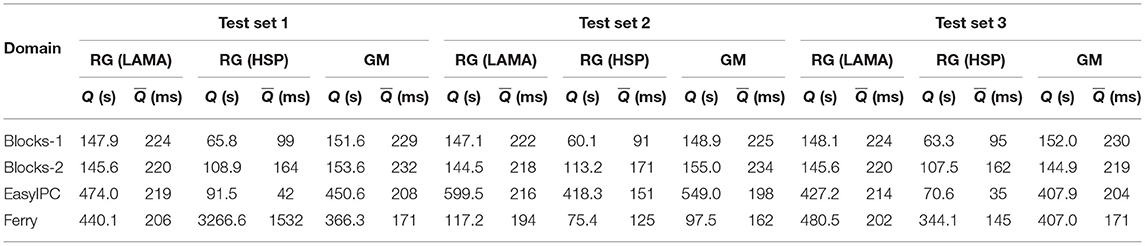

Comparing the results in Table 5, learning methods outperform existing Plan Recognition algorithms when predicting intervention. The algorithms we selected clearly struggled to predict intervention in the Navigator domain producing many false positives and false negatives. These results suggest that although we can adopt existing Plan Recognition algorithms to identify when the user needs intervention, it also produces a lot of false negatives and false positives in correctly identifying which state (desirable/undesirable) is most likely given the observations, especially when the desirable and undesirable plans have a lot of overlap. Comparing recognition accuracy of Blocks-1 and Blocks-2 problems using plan recognition algorithms to intervene, we see that when the undesirable state develops over a long period of time, the plan recognition algorithms recognize the undesirable plan with high accuracy. In other words, if the d and u are sufficiently distinct and are far apart in the state space, intervention by using plan recognition algorithms are as effective as our proposed learning based intervention methods in most cases. When the desirable and undesirable states are closer, existing plan recognition algorithms produce many false positives/negatives.

Table 5. F-score and Matthews Correlation Coefficient (MCC) for recognizing intervention using probabilistic goal recognition (RG) algorithm (Ramırez and Geffner, 2010).

This is unhelpful specially considering human users because, when the intervening agent produces many false alarms and/or miss critical events that must be intervened, the human users may get frustrated and turn off intervention. Therefore, the learning approach is better suited for intervention because the observer can target specific critical actions and at the same time the user is given some freedom to pursue a desirable goal.

5.1. Processing Times

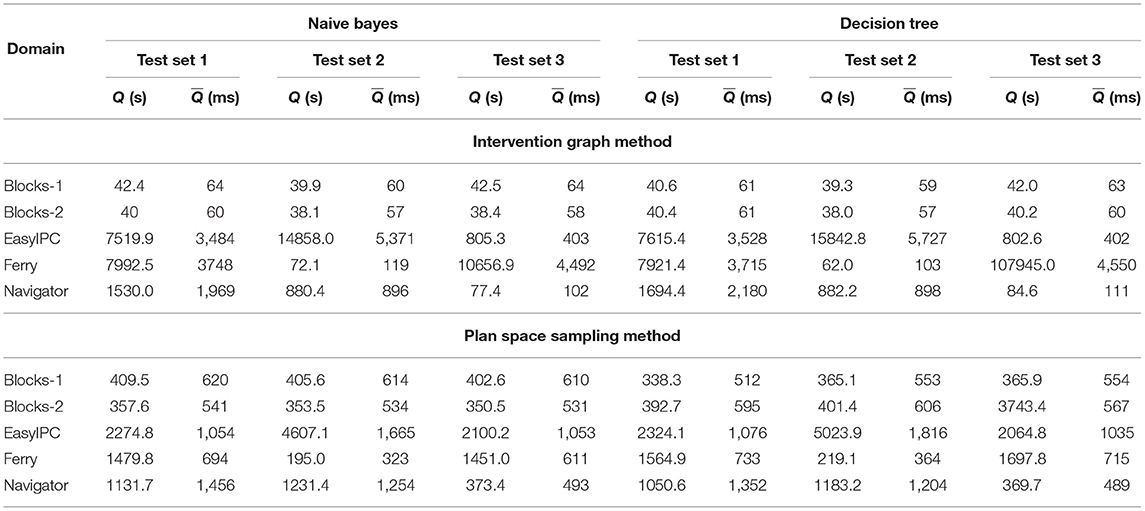

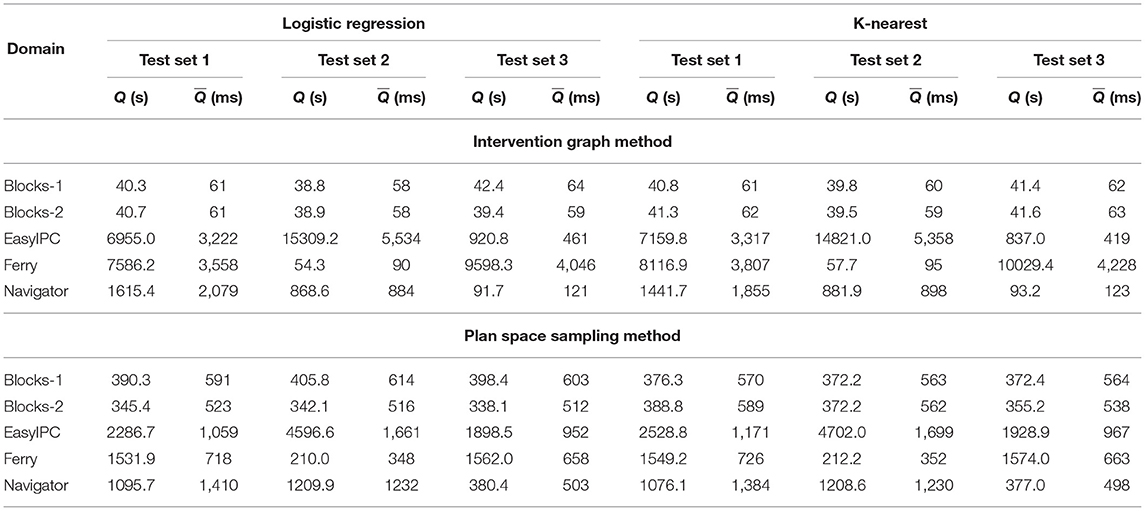

Because the Intervention problem is defined for online environments, we now report the processing time comparison among the two proposed learning based Intervention algorithms and intervention using existing plan recognition algorithms. The experiments were run in an Intel Core i7 CPU at 1.30GHz x 8 machine running on Ubuntu 20.04LTS. We compute two evaluation metrics for the processing time comparison. The total processing time (Q) is the CPU time in milliseconds taken to process all the 20 Intervention problems in a test set. The mean processing time () is the CPU time in milliseconds taken to return the intervention decision for one incremental observation reveal. It is given by the equation: . Tables 6 and 7 show the Q and values for returning the intervention decisions using the classifiers trained with the Intervention Graph features and the plan distance features. When the classifiers are trained with the Intervention Graph features the smallest (< 70 ms) are reported for the Blocks-words domain. The largest values are reported for the EasyIPC and the Ferry domain (< 5.5 s). When the classifiers are trained with the plan distance features, is larger compared to the previous case. However, the values for are still lower (< 620 ms) compared to the other domains. The largest are reported for the EasyIPC and the Ferry domains (< 1.8 s). There is a large range of the total processing times (maximum Q- minimum Q) among the Intervention problems from different domains.

Table 6. Total processing time in seconds (Q) for all Intervention problems in set 1, 2, and 3 and the mean processing time in milliseconds for each incrementally revealed observation () for predicting intervention with Naive Bayes and Decision Tree classifiers using features from the Intervention Graph and the Plan Space Sampling methods.

Table 7. Total processing time in seconds (Q) for all Intervention problems in set 1, 2, and 3 and the mean processing time in milliseconds for each incrementally revealed observation () for predicting intervention with logistic regression and k-nearest neighbor classifiers using features from the Intervention Graph and the Plan Space Sampling methods.

In contrast, Table 8 shows that when using plan recognition algorithms for intervention, is smaller compared to the in learning based intervention. For the Test Set 1, the smallest is reported for the EasyIPC problems when using probabilistic plan recognition using the optimal plans (< 42 ms). The largest is reported for the Ferry domain when using probabilistic plan recognition using the optimal plans (1.5 s), also in Test Set 1. Most of values are less than 232 ms. The range of the total processing times (maximum Q- minimum Q) among the Intervention problems from different domains is smaller compared to the range of the total processing times using the learning based intervention. While plan recognition algorithms sometimes return an intervention decision more quickly than the learning based intervention, their accuracy, precision, and recall is substantially lower than learning based intervention.

Table 8. Total processing time in seconds (Q) for all Intervention problems in set 1, 2, and 3 and the mean processing time in milliseconds for each incrementally revealed observation () for recognizing undesirable states with the algorithms: probabilistic goal recognition using satisficing (RG-LAMA) and optimal (RG-HSP) plans and goal mirroring (GM) using the JavaFF planner.

6. Human-Aware Intervention

The Human-aware Intervention Problem is a variant of the single-user intervention, so the history H consists of only accepted user actions. However, as mentioned in Section 3.2, the set of projections will be empty, that is because planning is less accurate for projecting what the human user may do. This means the observer must emphasize analysis of H. Instead of relying on projections in , the observer learns the function intervenei. That is, at the presented action oi it considers only H, d, and u and uses a trained machine learning algorithm to decide whether to intervene.

We introduce the Rush Hour puzzle as a benchmark in order to study how human users solve the puzzle as a planning task that requires intervention. We begin with the formal definitions of the Rush Hour puzzle. Next, we translate the Rush Hour problem into a STRIPS planning task and through a human subject study, we allow human users solve the planning problem and collect observation traces. We formally define the Human-aware Intervention problem and propose a solution that uses machine learning to learn properties of H to determine whether or not intervention is required. The observer for Human-aware Intervention should offer different levels of freedom to the user. At the lowest level of freedom, the observer will intervene just before the undesirable state. At increased levels of freedom, the observer offers the user enough time to recover from the undesirable situation. Varying the level of freedom allows the observer to gradually guide the user toward d without directly giving away the complete solution. We evalute the accuracy of our learning approach using the observation traces collected from the human subject study.

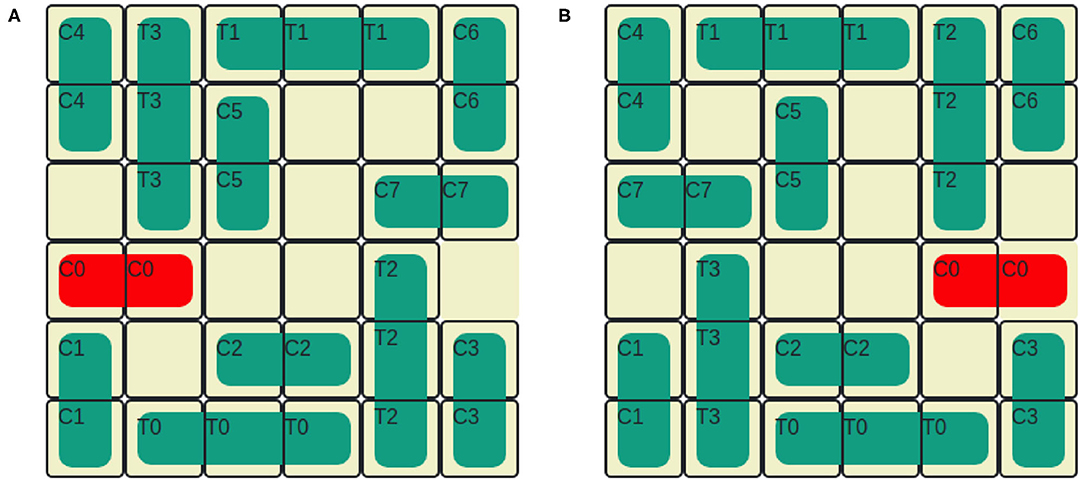

6.1. The Rush Hour Puzzle

The Rush Hour puzzle is a game for ages 8 and above (Flake and Baum, 2002). Figure 4A shows an initial puzzle configuration on a 6 × 6 grid, where cars (length 2) and trucks (length 3) are arranged. Vertically aligned vehicles can only move up and down and horizontal vehicles can move left and right. Vehicles can be moved one at a time and into adjacent empty spaces. The solution to the Rush Hour puzzle is a sequence of legal moves that transforms the initial board state shown in Figure 4A to the goal state in Figure 4B. For the puzzle shown in Figure 4, the shortest solution has 21 moves, if the number of moves is considered as the optimizing criteria. If optimized for the number of vehicles moved, the puzzle can be solved optimally by moving only 8 vehicles. It is important to note that one can obtain different “optimal solutions" depending on whether the number of moves is minimized, or if the number of vehicles moved is minimized. Humans tend not to clearly make this distinction when playing the game.

We adopt the formal definition of a Rush Hour instance from Flake and Baum (2002).

Definition 6. A Rush Hour instance is a tuple such that:

• (w, h) ∈ ℕ2 are the grid dimensions. In the standard version, w = h = 6

• (x, y), x ∈ {1, w} and y ∈ {1, h} are the coordinates of the exit, which must be on the grid perimeter.

• n ∈ ℕ the number of non-target vehicles

• is the set of n + 1 vehicles comprised of cars and trucks . Note that

is identified as {C0, …, Cl}, where and is identified as {T0, …, Tm}, where .

A vehicle is a tuple vi = 〈xi, yi, oi, si〉, where are the vehicle coordinates, oi ∈ {N, E, S, W} is the vehicle orientation for North, East, South, West, si ∈ {2, 3} is the vehicle size and C0 is the target vehicle.

Flake and Baum (2002) also defines the solution to a Rush Hour instance as a sequence of m moves, where each move consists of a vehicle identifier i, a direction that is consistent with the initial orientation of vi, and a distance. Each move, in sequence must be consistent with itself and with the configuration prior to the move. Further, in order to move a distance d the configuration must be consistent for all d′ such that, 0 ⩽ d′ ⩽ d (i.e., a vehicle cannot jump over other vehicles on its path).

6.2. The Rush Hour Puzzle as a STRIPS Planning Task for Intervention

We study how human users approach solving a cognitively engaging problem as a planning task and evaluate whether we can use domain-specific features to predict intervention. To collect realistic data, it is critical that the human users were willing to participate in the task. The Rush Hour puzzle addresses this requirement well, as evidenced by the feedback about the task we received from the demographic survey. See Section 11.2 in the Appendix for details. Although the Rush Hour puzzle is a game, the environment is rich enough to simulate undesirable consequences and also offer the users a task that is challenging enough.

We translate the Rush Hour puzzle in Definition 6 into a grounded STRIPS planning task P = 〈F, A, s0, G〉 as follows:

• F = { - for the vehicles,

{ and li ∈ {(x, y)|x ∈ [1..w], y ∈ [1..h]}, (at ?vi ?li)} - for the vehicle positions,

{ and di ∈ {NS, SN, EW, WE}, (face ?vi ?di)} - for direction of vehicles (North to South (down), South to North (up), East to West (left), West to East (right), respectively),

{∀li ∈ {(x, y)|x ∈ [1..w], y ∈ [1..h]}, (free ?li)} - for open positions,

{∀li, lj ∈ {(x, y)|x ∈ [1..w], y ∈ [1..h]} and di ∈ {NS, SN, EW, WE}, (next ?di ?li ?lj)} - for direction of the adjacent locations) }

• A = {move-car = 〈pre(move-car), add(move-car), del(move-car)〉 ⊆ F,

move-truck = 〈pre(move-truck), add(move-truck), del(move-truck)〉 ⊆ F}

• s0 ⊆ F

• G = {(at C0 li)(at C0 lj)}, where li = (w, 3) and lj = (w−1, 3)

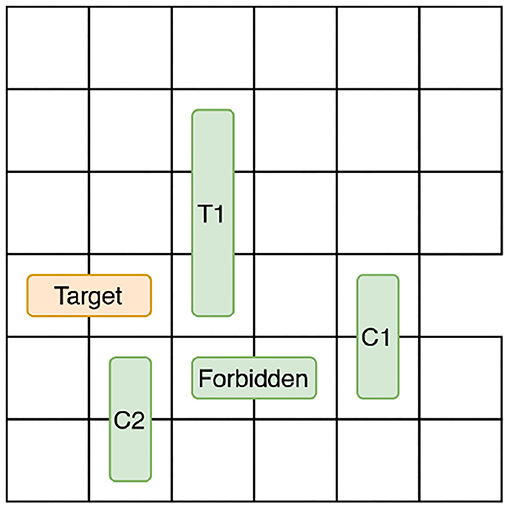

In order to configure the Rush Hour STRIPS planning task for intervention, we introduce an undesirable state, u by designating one vehicle as forbidden. The post-conditions of any action that moves the forbidden vehicle satisfies u. The puzzle can be solved without moving the forbidden vehicle. Therefore, moving the forbidden vehicle is also an unnecessary action, indicating that the user is exploring an unhelpful region in the state space. Here, intervention is required to guide the user toward exploring more helpful regions in the state space. In Figure 4A, the forbidden vehicle is C2. If the user moves C2 to the left, then the board state satisfies u. The user's goal d = G. The vehicle movement constraint introduced by the presence of the forbidden vehicle adds an extra level of difficulty to the user's planning task.

The Rush Hour problem is also unique in that the observer is more focused on states than actions. Recall that a history H = (o1[s1], o2[s2], …, oi−1[sH]) is a sequence of previously observed actions, which started from s0 with the implied resulting states in brackets. For Rush Hour, the observer relies on those implied states instead of just the actions. For simplicity in notation, we present intervention in terms of states, although it is easy to map between actions and states because of the deterministic state transition system of the planning model.

6.3. Domain-Specific Feature Set

For the observer to learn intervenei, we develop a set of domain-specific features for the Rush Hour problem. We want the feature set to capture whether the user is advancing toward d by making helpful moves, or whether the user currently exploring a risky part in the state space and getting closer to u. We hypothesize that the behavior patterns extracted from H as features have a correlation to the event of the user moving the forbidden vehicle.

6.3.1. Features Based on State

The features based on state analyze the properties of the sequence of state transitions in H from s0 to sH ([s0], [s1], [s2], …, [sH]). Specifically, we look at the mobility of the objects: target vehicle (C0), the forbidden vehicle and the vehicles adjacent to the target and the forbidden vehicles. We use the state features associated with the target vehicle to measure how close the user is to d. The state features associated with the forbidden car evaluate how close the user is to triggering u.

We manually examined the solutions produced in the human subject experiment (described later) to identify common movement patterns. Our analysis revealed that if the user was moving vehicles adjacent to the forbidden vehicle in such a way that the forbidden vehicle was freed, most users ended up moving the forbidden vehicle. Therefore, by monitoring the state changes occurring around the forbidden vehicle, we can estimate whether the user will end up moving the forbidden vehicle or not (i.e., trigger u). Similarly, state changes occurring on the target car's path to the exit, for example, the moves that result in reducing the number of vehicles blocking the target car is considered to be helpful to move the state closer to d.

We refer to the vehicles adjacent to the target and the forbidden vehicles as blockers and introduce two additional object types to monitor mobility: target car blockers and forbidden car blockers. Figure 5 illustrates an example state. The target car's path is blocked by two vehicles C1 and T1. Therefore, target car blockers = {C1, T1}. We only consider the vehicles that are between the target car and the exit cell as target car blockers because, only those vehicles are preventing the target car from reaching d. The forbidden vehicle's movement is blocked by two vehicles C1 and C2. Therefore, forbidden car blockers = {C1, C2}. We now describe the features based on state that are used to predict intervention in Human-aware Intervention Problems.

• blocks: number of times a move increased the number of cars blocking the target car's path

• frees: number of times a move freed up empty spaces around the forbidden vehicle

• freebci: number of times the number of empty spaces around the forbidden vehicle blockers increased

• freebcd: number of times the number of empty spaces around the forbidden vehicle blockers decreased

• freegci: number of times the number of empty spaces around the target car blockers increased

• freegcd: number of times the number of empty spaces around the target car blockers decreased

• mgc: mean number of empty spaces around the target car blockers

• mbc: mean number of empty spaces around the forbidden car blockers

• reset: number of times the current move changed the state back to the initial puzzle configuration

6.3.2. Features Based on User Actions

The features based on user state analyze the properties of the sequence of actions from o1 to oi−1 in H (o1, o2, …, oi−1) We follow the same manual analysis of solutions produced in the human subject experiment to identify common movement patterns. We found that the users who produce unsafe solutions often made unhelpful moves such as moving the same vehicle back and forth many times in quick succession, causing their solution to be longer compared to a safe solution. We statistically verified whether the relationship between the solution length and the number of forbidden vehicle moves is significant for the human subject data using Spearman's Rank Correlation Coefficient. The test showed that the relationship is significant (p < 0.05). See Section 11.3 in the Appendix for a summary of raw data.

Similarly, we observed that comparing the number of moves of the user's solution to an optimal solution produced by an automated planner is helpful in identifying whether the user is moving away from d or making progress. In order to verify this observation, we use the HSP planner (Bonet and Geffner, 2001) to find cost optimal solutions for the Rush Hour planning tasks (see Section 6.2) used in the human subject experiment. We statistically verified that the relationship between the length difference between the user's solution and the optimal solution found by an automated planner, and the number of forbidden vehicle moves is significant using Spearman's Rank Correlation Coefficient (p < 0.05).

Thus, we conclude that features derived from the length and number of backtracking moves in H can be used to predict when the user is getting close to u. We introduce an unhelpful move called the h-step backtrack, which is a move that takes the state back to a previously seen state by h number of steps (i.e., an undo operation). When deriving the feature to capture backtracking moves, we only consider h = 1, which asks the question did the observation oi−1 undo the effect of the observation oi−2?

We now describe the features based on actions that are used to predict intervention in Human-aware Intervention Problems.

• len: number of moves in H

• len-opt: difference of the number of moves in H and the number of moves in the safe optimal solution produced by an automated planner for the same planning task.

• backtracks: number of 1-step backtrack actions in H

• first: number of moves until the forbidden vehicle was moved for the first time in H

• prop: number of moves until the forbidden vehicle was moved for the first time in H divided by the number of moves in the safe, optimal solution produced by an automated planner for the same planning task.

• moved: number of vehicles moved in H.

7. Evaluating Human-Aware Intervention

To evaluate the efficacy of the features based on state and features based on actions in predicting intervention for Human-aware Intervention Problems, we use actual observation traces collected from a human subject study, where human users solve Rush Hour planning tasks on a Web simulator. We generate learned models that predict intervention while offering different levels of freedom to the user. We consider three levels of freedom (k = {1, 2, 3}) for the evaluation. A model for the lowest level of freedom (k = 1), predicts intervention one move before the undesirable state. This configuration offers no time for the user to recover from the undesirable state. A model for the next level of freedom (k = 2), intervenes the user two moves before the undesirable state is satisfied and offers the user some time to take corrective action. A model for the highest level of freedom (k = 3), intervenes the user three moves before the undesirable state. We begin with the experiment protocol and briefly describe the findings. Next, we discuss the learning methods used to predict intervention. Finally, we discuss the accuracy of prediction compared to the Plan Recognition as Planning algorithm proposed by Ramırez and Geffner (2010).

7.1. Rush Hour Experiment Protocol

We recruited subjects from a university student population. The sample comprised of college students in Computer Science, Psychology, Agriculture and Business majors. One hundred and thirty six participants completed the study. The participants were not compensated for their time. After obtaining informed consent, the participants were directed to the Web URL (https://aiplanning.cs.colostate.edu:9080/), which hosted the Rush Hour simulator software. Each participant was assigned to solve one randomly selected Rush Hour puzzle. We did not place any time restriction for the puzzle solving task. Participants also had the option to use an online tutorial (available on the Web simulator application) on how to play the Rush Hour puzzle. Each puzzle contained one forbidden vehicle. Once the puzzle solving task was completed, the participants were asked to complete a short demographic survey on their general puzzle solving habits. One hundred and seventeen of the 136 participants also completed the demographics survey.

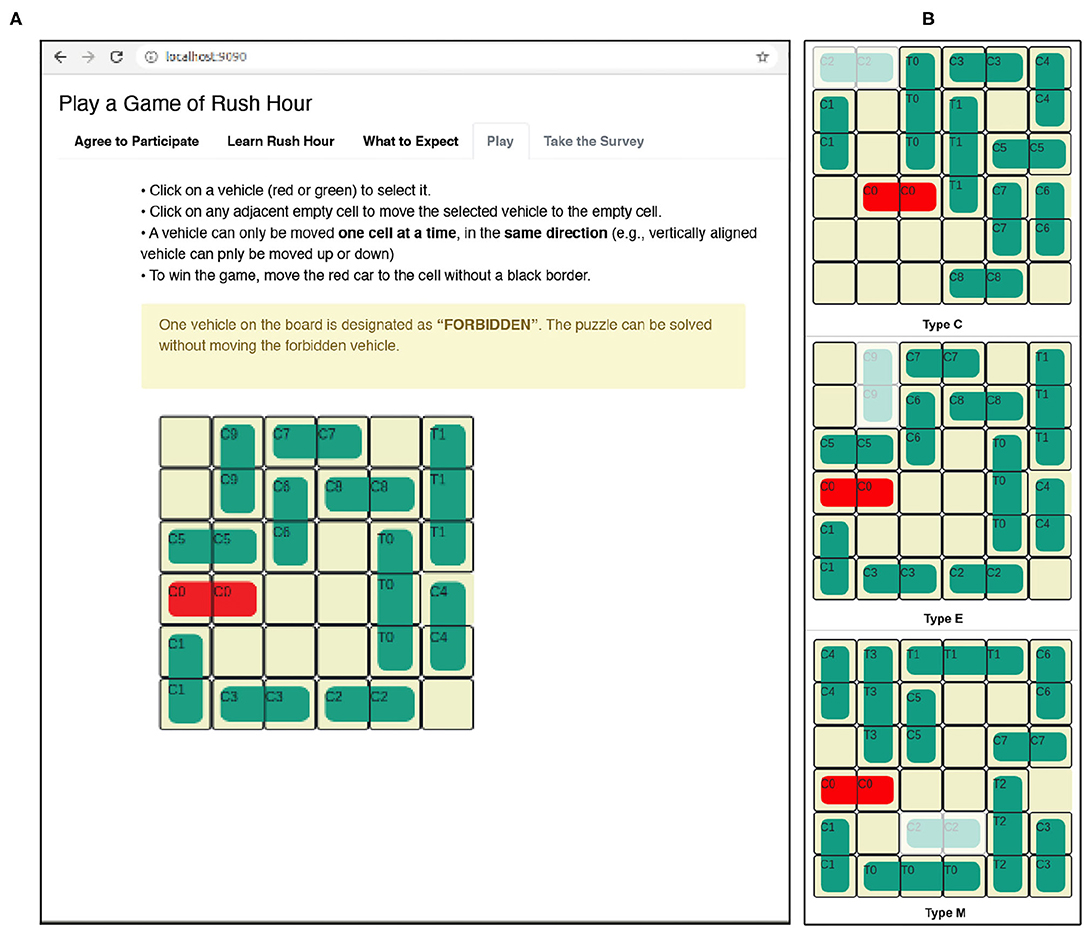

When choosing Rush Hour puzzle instances for the human subject study, we want to carefully balance the puzzle's difficulty for a human user. Especially, considering the PSPACE-completeness of the (generalized) puzzle, we need the puzzles to be solvable by human users in a reasonable time. We used a pilot study to determine the puzzle difficulty. See Section 11.1 in the Appendix. We ensured that the experiment protocol fully adhered to the Rush Hour planning task definitions (Section 6.2). The goal of the Rush Hour planning task (d) is clearly communicated to the user. To instill the importance of avoiding the forbidden vehicle in the user's mind, we provided an information message (yellow information bar in Figure 6) to inform about the presence of a forbidden vehicle without specifying the vehicle identifier. The users were also informed that the puzzle can be solved without moving the forbidden vehicle. If the user moved the forbidden vehicle, no visual cues (error messages, blocks) were given. Therefore, the specific undesirable state (u) remained hidden to the user, but they were made aware of its presence. We informed the human users that there is a forbidden vehicle and they must try to solve the puzzle without moving it to prime them toward thinking more deeply about the puzzle and raise the awareness of raise the aware of the undesirable state. This technique allowed us to increase the cognitive load on the human user because it conditioned the human users to study the puzzle to guess/recognize the forbidden vehicle. Further it primed the users to make thoughtful moves instead of making random moves and accidentally finding the solution.

Figure 6. (A) Rush Hour planning task Web interface. The forbidden vehicle for this configuration is C9. (B) Rush Hour puzzle configuration types with forbidden vehicles highlighted.

To simulate discrete actions, the vehicles on the board could only be moved one cell at a time. The user could click on the object to select it and move it by clicking on an empty adjacent cell. Invalid moves (vehicle dragging and jumps) were blocked and the user was notified via an alert message. We recorded the user's solution to the STRIPS planning task as a sequence of actions in a text file.

We used ten Rush Hour planning tasks for the experiment. For analysis purposes (see Appendix), we separated the ten puzzles into three groups by the position of the forbidden vehicle. As shown in Figure 6B, type C has the forbidden vehicle in the corner of the board. Type E has the forbidden vehicle on an edge. Type M has the forbidden vehicle in the middle. The experiment used four puzzles of type C, five puzzles of type E and one puzzle of type M.

7.2. Length Distributions of Human Users' Solutions

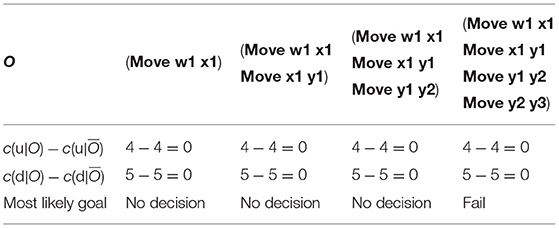

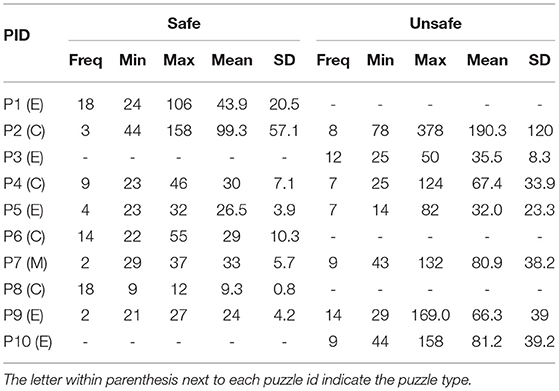

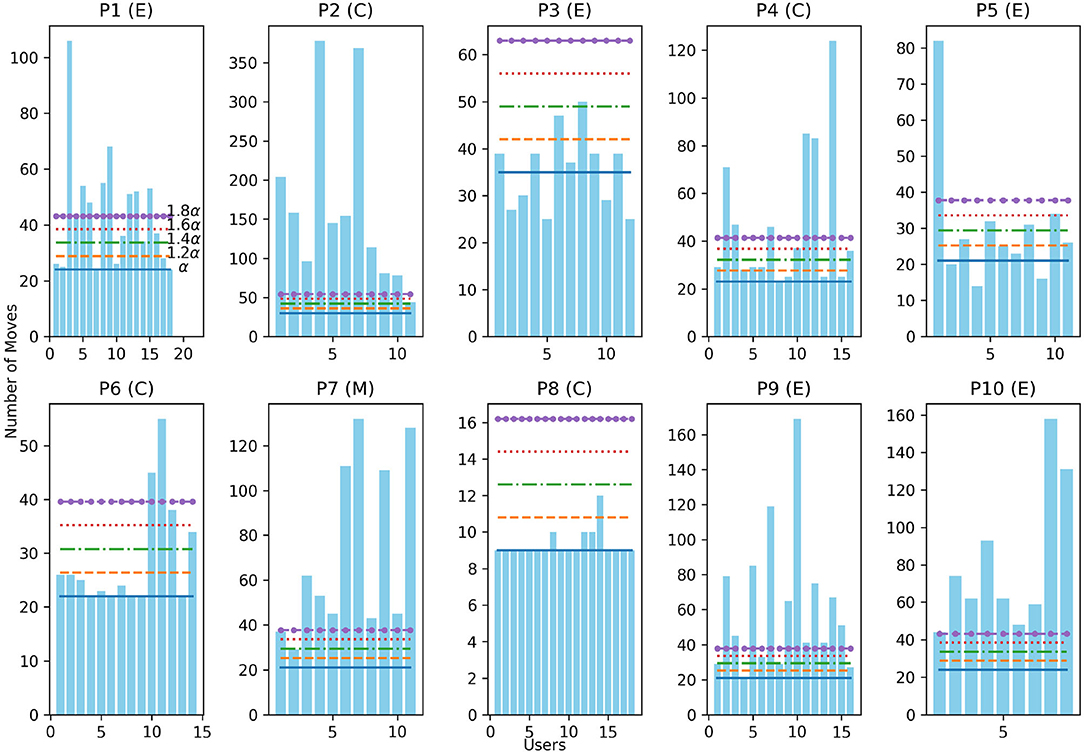

We now compare the cost of the solutions produced by human users by dividing the solutions into two types: safe and unsafe. In this analysis, we assumed each move is unit cost, therefore the cost of the solution is equal to the number of moves. We refer to solutions that did not move the forbidden vehicle as safe and solutions that moved the forbidden vehicle as unsafe. Sixty six users from the total 136 solutions that involved moving the forbidden vehicle (49%). From those who moved the forbidden vehicle, 54 users moved the vehicle more than once (82%). Table 9 describes the summary statistics for the safe and unsafe solutions.

Table 9. Frequency, minimum, maximum, mean, and standard deviation (SD) of the number of moves in human user solutions for the Rush Hour planning tasks P1 through P10.

We see that the planning task P1 did not produce any unsafe solutions. The reason for this observation is that when examining the structure of P1 it can be seen that moving the forbidden vehicle makes the planning task unsolvable. Second, the planning task P8 did not produce any unsafe solutions. Furthermore, P8 and P6 planning tasks in type C did not produce any unsafe solutions. Human users found it difficult to avoid the forbidden car for type E planning tasks, where the forbidden car was positioned along the edge of the board. For type E planning tasks (P3, P5, P9, P10), there are more unsafe solutions than safe solutions and also the mean solution length for unsafe solutions is larger compared to the safe solutions mean. We only had 1 planning task for type M (P7), where the forbidden vehicle was placed in the middle of the board. The users who attempted P7 found it difficult to solve the planning task without moving the forbidden vehicle.

Figure 7 illustrates how the number of moves in users' solutions compare to a set of threshold values derived from the optimal solution for each Rush Hour planning task. We define the threshold set θ as: given the optimal number of moves α for a puzzle, θ = {α, 1.2α, 1.4α, 1.6α, 1.8α}. The letter in parenthesis indicates the puzzle group (see Figure 6B for the three puzzle types) of each puzzle.

Figure 7. Number of moves in the users' solution compared to the optimal number of moves α, 1.2α, 1.4α, 1.6α, 1.8α for puzzles P1 through P10.

It can be seen that human solvers' solutions to P8 were very close to the optimal solution in the number of moves. Human solvers' found it very difficult to find a solution closer to the optimal number of moves for P2. Users who attempted P3 and P5 found solutions shorter than the safe, optimal. Shorter solutions for these two puzzles all required the user to move the forbidden vehicles. This observation allows us to draw a conclusion that the recovery process of the Human-aware Intervention needs to aim at reducing the remaining number of moves the user has to execute to help them avoid the forbidden vehicle.

7.3. The Learning Methods

Our solution to the Human-aware Intervention Problem uses machine learning to predict whether u will be reached in k moves, given H, where k = {1, 2, 3}. To produce the learned models, we first partition the 136 human user solution from the experiment into training (70%) and test (30%) sets. To produce the H for a user, the user's solution is pre-processed to only include the moves until one step, two steps and three steps before the forbidden vehicle was moved for the first time. For example, in a solution O = {o1, …, oi}, if a user moved the forbidden vehicle in step i, we generate three observation traces O1 = {o1, …, oi−1}, O2 = {o1, …, oi−2} and O3 = {o1, …, oi−3} corresponding to that user. Observation traces of type O1 were used to train the model for k = 1, observation traces of type O2 were used to train the model for k = 2 and so on. Given the sequence of actions in the user's solution, the corresponding state after each move required for H is derived using the STRIPS planning model for the corresponding Rush Hour puzzle. We use the features based on state and features based on actions together to train five classifiers with 10-fold cross validation for each value of k. We explore a number of classifiers: the decision tree, K-nearest neighbor, Logistic Regression and Naive Bayes. The classifiers are used in the supervised learning mode. We summarize the parameters used in each learning method below:

• Decision Tree: We use the J48 classifier available on the WEKA platform (Hall et al., 2009). This classifier implements the C4.5 algorithm (Quinlan, 1993). The decision tree classifier is tuned to user pruning confidence = 0.25 and the minimum number of instances per leaf = 2.

• k Nearest Neighbor (KNN): We use this classifier with a Euclidean distance metric, considering the value k = 1

• Logistic Regression: This classifier is tuned for ridge parameter = 1.0E − 8.

• Naive Bayes: This classifier is tuned with the supervised discretization = True.

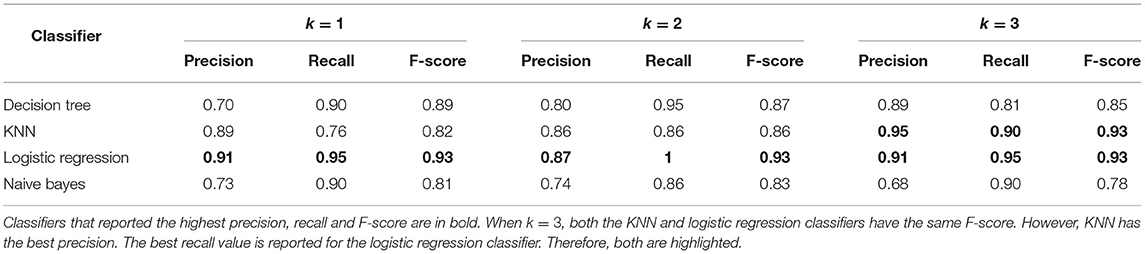

7.3.1. Human-Aware Intervention Accuracy

We used the learned models on the test data set to predict whether the observer should intervene given H. In order to evaluate the classifier accuracy, we first define true-positives, true-negatives, false-positives, false-negatives for the Human-aware Intervention Problem. A true-positive is when the classifier correctly predicts that u will be reached in k moves given H. A true-negative is when the classifier correctly predicts that the u will not be reached in k moves. A false-positive is when where the classifier incorrectly identifies that the u will be reached in k moves. A false-negative is when the classifier identifies that the u will not be reached in k moves, but in fact it does.