
REVIEW article

Front. Artif. Intell., 21 July 2021

Sec. Medicine and Public Health

Volume 4 - 2021 | https://doi.org/10.3389/frai.2021.612914

This article is part of the Research Topic Robotics, Autonomous Systems and AI for Nonurgent/Nonemergent Healthcare Delivery During and After the COVID-19 Pandemic

Hanqiu Deng1,2 and Xingyu Li1*

Since the first case of coronavirus disease 2019 (COVID-19) was discovered in December 2019, COVID-19 has spread swiftly across the world. By the end of March 2021, more than 136 million people had been infected. With the second and third waves of the COVID-19 outbreak in full swing, investigating effective and timely solutions for patients’ check-ups and treatment is important. Although the SARS-CoV-2 virus-specific reverse transcription polymerase chain reaction test is recommended for the diagnosis of COVID-19, its results are prone to false negatives in the early course of infection. To enhance screening efficiency and accessibility, chest images captured via X-ray or computed tomography (CT) provide valuable information when evaluating patients with suspected COVID-19 infection. With advanced artificial intelligence (AI) techniques, AI-driven models trained on lung scans have emerged as quick diagnostic and screening tools for detecting COVID-19 infection. In this article, we provide a comprehensive review of state-of-the-art AI-empowered methods for the computational examination of COVID-19 patients with lung scans. To this end, we searched for papers and preprints on bioRxiv, medRxiv, and arXiv published between January 1, 2020, and March 31, 2021, using the keywords COVID, lung scans, and AI. After quality screening, 96 studies were included in this review. The reviewed studies are grouped into three categories based on their target application scenarios: automatic detection of coronavirus disease, infection segmentation, and severity assessment and prognosis prediction. We present the latest AI solutions to process and analyze chest images for COVID-19 treatment, together with their advantages and limitations. In addition to reviewing these rapidly developing techniques, we summarize publicly accessible lung scan image sets. The article ends with a discussion of the challenges in current research and potential directions for designing effective computational solutions to fight the COVID-19 pandemic.

COVID-19, caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), was first noted to infect humans in December 2019 in Wuhan, China, and afterward spread swiftly to most countries around the world. People infected with COVID-19 present with fever, cough, difficulty in breathing, and other symptoms, although some infectious patients are asymptomatic (Bai et al., 2020).

For COVID-19 diagnosis, the reverse transcription polymerase chain reaction (RT-PCR) test is a specific and simple qualitative method for detecting COVID-19 (Tahamtan and Ardebili, 2020). Despite its high sensitivity and strong specificity, the RT-PCR test has several limitations. First, false-negative results are common in the clinical diagnosis of COVID-19 owing to various factors, e.g., an insufficient amount of virus in a sample (Xiao et al., 2020). Second, the RT-PCR test provides a yes/no answer without any indication of disease progression. In contrast, clinical studies have found that most COVID-19 patients, even early in the course of infection or without clinical symptoms, share common features in their lung scans (Hao and Li, 2020; Long et al., 2020; Salehi et al., 2020; Wong et al., 2020; Zhou et al., 2020a). These patterns in lung images are believed to complement the RT-PCR test and thus form an important alternative diagnostic tool for COVID-19. Among the non-invasive techniques for examining internal tissues and organs in the chest, ultrasound (US) does not distinguish COVID-19 well from other types of viral pneumonia, and magnetic resonance imaging (MRI) suffers from long scan times and high costs. Consequently, CT and chest X-ray (CXR) are the most widely used lung imaging techniques for the clinical diagnosis of COVID-19 (Vernuccio et al., 2020; Dong et al., 2021). In China and Italy, chest imaging is currently used for preliminary/emergency screening, monitoring, and follow-up check-ups in COVID-19 treatment.

AI-empowered computational solutions have been successfully used in many medical imaging tasks. To combat COVID-19 in particular, computational imaging technologies include, but are not limited to, lung and infection region segmentation, chest image diagnosis, infection severity assessment, and prognosis estimation. Compared with examination by physicians, computational solutions are believed to be more consistent, efficient, and objective. In the literature, early works on chest image examination for COVID-19 patients usually adopted the supervised learning paradigm to build an image analysis model, with learning algorithms ranging from support vector machines (SVM), k-nearest neighbors, random forests, and decision trees to deep learning. More recently, transfer learning, multi-task learning, and weakly supervised learning have become popular for improving the generalization of learning models.

In this article, we review state-of-the-art AI diagnostic models designed specifically to examine lung scans of COVID-19 patients. To this end, we searched for papers and preprints on bioRxiv, medRxiv, and arXiv published between January 1, 2020, and March 31, 2021, with the keywords COVID, lung scans, and AI. After quality inspection, 96 papers were included, most of which are peer-reviewed and published in prestigious venues. We also included a small number of preprints because of their methodological innovations. This review presents in-depth discussions of region-of-interest (ROI) segmentation and chest image diagnosis in Segmentation of Region of Interest in Lung Scans and COVID-19 Detection and Diagnosis, respectively. Infection severity assessment and prognosis prediction from COVID-19 lung scans are closely related and are therefore presented together in COVID-19 Severity Assessment and Prognosis Prediction. Since AI solutions are usually data-driven, Public COVID-19 Chest Scan Image Sets lists the primary COVID-19 lung image sets publicly accessible to researchers. Limitations and future directions for AI-empowered computational solutions to COVID-19 treatment are summarized at the end of this article.

Several review papers have been published on AI solutions to combat COVID-19. Pham et al. (2020) and Latif et al. (2020) emphasized the importance of artificial intelligence and big data in responding to the COVID-19 outbreak and preventing the severe effects of the pandemic, but computational medical imaging was not their focus. Dong et al. (2021) and Roberts et al. (2021) broadly covered the use of various medical imaging modalities for COVID-19 treatment, and Shi et al. (2021) surveyed all aspects of the chest imaging pipeline, from imaging data acquisition to image segmentation and diagnosis. This article constitutes the latest technical review (up to March 31, 2021) of AI-based lung scan screening for COVID-19 examination. In contrast to previous review papers, it focuses specifically on AI-driven techniques for COVID-19 chest image analysis, and we discuss the reviewed methods in depth, from their motivations to their specific machine learning models and architectures. This specific scope and updated, in-depth review of the technology distinguish this article from previous works.

The regions of interest (ROIs) in lung scans are usually lung fields, lesions, or infection regions. As a prerequisite step, accurate segmentation of the lung fields or other ROIs in chest images is essential, because it prevents non-lung regions from interfering with subsequent analysis (Majeed et al., 2020). This section reviews AI-driven solutions for ROI segmentation in COVID-19 treatment. We start with performance metrics for segmentation evaluation and then group the computational solutions by image modality (CXR first, followed by CT). Note that although many studies have addressed lung segmentation in general, this article surveys only publications directly related to COVID-19 treatment.

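The Dice coefficient is the most common metric for evaluating segmentation methods. It quantifies the agreement between the ground truth mask and the segmentation result and is defined as follows:

$$\mathrm{Dice}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|},$$

where A and B are the ground truth and segmented regions, respectively, and |·| denotes the number of pixels (or voxels) in a region. The Dice coefficient ranges from 0 (no overlap) to 1 (perfect agreement).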
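As a minimal sketch of the metric, the following Python function computes Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks stored as nested lists of 0/1 values. The list-based mask representation and the convention of returning 1.0 for two empty masks are assumptions made for illustration; practical pipelines would typically operate on arrays or 3D volumes.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape.

    Masks are nested lists of 0/1 values, one inner list per image row.
    """
    flat_a = [v for row in mask_a for v in row]
    flat_b = [v for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same shape")
    intersection = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    total = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    # Convention assumed here: two empty masks count as perfect agreement.
    return 1.0 if total == 0 else 2.0 * intersection / total


ground_truth = [[0, 1, 1],
                [0, 1, 1],
                [0, 0, 0]]
segmented = [[0, 1, 1],
             [0, 1, 0],
             [0, 1, 0]]
print(dice_coefficient(ground_truth, segmented))  # 0.75
```

Here both masks contain four foreground pixels, three of which overlap, so Dice = 2 × 3 / (4 + 4) = 0.75.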

Among the various machine learning methods for ROI segmentation, encoder-decoder architectures such as U-Net (Ronneberger et al., 2015) are the most common backbone. The encoder extracts numerical representations from a query image, and the decoder generates a segmentation mask of the same size as the query image. To boost the performance of these U-shaped models, researchers have investigated different deep learning strategies that address challenges unique to lung scans of COVID-19 patients. We describe these algorithms and models below.

Chest X-ray images from COVID-19 patients often show various levels of opacification, which masks the lung fields and makes their accurate segmentation difficult. To tackle this problem, Selvan et al. (2020) proposed a weak supervision method that fuses a U-Net and a variational autoencoder (VAE) to segment lungs in high-opacity CXRs. The novelty of their method lies in using the VAE for data imputation. In addition, three data augmentation techniques were evaluated to improve the generalization of the proposed method.

Many studies in the literature have proposed improvements for ROI segmentation in lung CT images, and attention mechanisms have recently become a common component. For automated segmentation of multiple COVID-19 infection regions, Chen X. et al. (2020) applied a soft attention mechanism to improve the capability of U-Net to detect the various imaging manifestations of COVID-19; their aggregated residual transformation yields a robust and descriptive feature representation, further improving segmentation performance. Inf-Net (Fan et al., 2020) is a semi-supervised segmentation framework based on a randomly selected propagation strategy. It uses implicit reverse attention and explicit edge attention to enhance abstract representations and to model the boundaries of lung infection regions, respectively. Similar to Inf-Net, COVID-SegNet, proposed by Yan et al. (2020), introduces two attention layers in a novel feature variation (FV) block for lung infection segmentation: channel attention handles the confusing boundaries of COVID-19 infection regions, and spatial attention optimizes feature extraction in the encoder.
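The internals of the FV block are not specified here. As a hedged illustration of what channel attention computes, the sketch below follows a squeeze-and-excitation style design: global average pooling summarizes each channel, a small bottleneck network maps these summaries to per-channel weights in (0, 1), and each channel is rescaled by its weight. The weights `w1` and `w2` are hypothetical fixed values standing in for learned parameters; this is not the exact COVID-SegNet block.

```python
import math


def channel_attention(feature_maps, w1, w2):
    """Squeeze-and-excitation style channel attention with illustrative weights.

    feature_maps: C x H x W nested lists.
    w1: (C // r) x C bottleneck weights; w2: C x (C // r) expansion weights.
    Returns the re-weighted feature maps and the per-channel weights.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: bottleneck layer with ReLU, then expansion layer with sigmoid.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w1]
    scale = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every activation in channel c by scale[c].
    reweighted = [[[v * s for v in row] for row in ch]
                  for ch, s in zip(feature_maps, scale)]
    return reweighted, scale


# Two 2 x 2 channels: one strongly active, one silent (toy values).
maps = [[[1.0, 1.0], [1.0, 1.0]],
        [[0.0, 0.0], [0.0, 0.0]]]
out, weights = channel_attention(maps, w1=[[1.0, 0.0]], w2=[[2.0], [-2.0]])
print(weights)
```

Spatial attention works analogously but produces one weight per pixel location instead of one per channel.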

Alternatively, multi-task learning is used to leverage useful information in multiple related tasks to boost the performance of both segmentation and classification (Amyar et al., 2020). In this study, a common encoder is shared by two decoders and one classification layer for COVID-19 infection segmentation, lung image reconstruction, and CT image binary classification (i.e., COVID-19 and non-COVID-19). Similarly, Wu et al. (2021) have designed a joint classification and segmentation framework, which used a decoder to map the combined features from the classification network and an encoder to the segmentation results.
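One common way to train a shared encoder with several heads is to minimize a weighted sum of per-task losses. The sketch below assumes a soft Dice loss for infection segmentation, mean squared error for image reconstruction, binary cross-entropy for COVID-19 versus non-COVID-19 classification, and equal task weights; Amyar et al. (2020) may define or weight these terms differently. Inputs are flattened lists of pixel values for brevity.

```python
import math


def soft_dice_loss(pred, target, eps=1e-7):
    """1 - soft Dice between predicted probabilities and a binary mask."""
    inter = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(target) + eps)


def mse(recon, image):
    """Mean squared reconstruction error."""
    return sum((r - x) ** 2 for r, x in zip(recon, image)) / len(image)


def bce(prob, label, eps=1e-7):
    """Binary cross-entropy for one image-level prediction."""
    prob = min(max(prob, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def multitask_loss(seg_pred, seg_target, recon, image, cls_prob, cls_label,
                   weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three task losses (the quantity a shared-encoder model would minimize)."""
    w_seg, w_rec, w_cls = weights
    return (w_seg * soft_dice_loss(seg_pred, seg_target)
            + w_rec * mse(recon, image)
            + w_cls * bce(cls_prob, cls_label))


loss = multitask_loss(seg_pred=[0.9, 0.1, 0.8], seg_target=[1, 0, 1],
                      recon=[0.2, 0.5, 0.4], image=[0.25, 0.5, 0.35],
                      cls_prob=0.85, cls_label=1)
print(round(loss, 4))
```

Because all three terms depend on the same encoder features, errors in any one task shape the shared representation, which is the intended benefit of multi-task learning.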

In addition to developing advanced deep learning models, several studies have tried to improve segmentation performance either by synthesizing CT image samples or by refining image labels. For instance, Liu et al. (2020) proposed using a generative adversarial network (GAN) to synthesize COVID-19 opacities on normal CT images. To address data scarcity, Zhou et al. (2020) created a CT scan simulator that expands the data by fitting variations in a patient’s chest images at different time points. They also transformed the 3D segmentation problem into three 2D segmentation tasks, which both reduced model complexity and improved segmentation performance. Instead of generating new, “fake” CT images for training, Laradji et al. (2020) built an active learning model for image labeling, in which active image labeling and infection region segmentation are performed iteratively until performance converges. Wang et al. (2020) introduced a noise-robust Dice loss to improve the model’s robustness against noisy labels. In addition, they incorporated an adaptive self-ensembling framework based on a teacher-student architecture to further improve robustness to noisy labels in image segmentation.
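The noise-robust Dice loss can be illustrated with a short function. The sketch below assumes the form L = Σ|p_i − g_i|^γ / (Σp_i² + Σg_i² + ε) with γ between 1 and 2, which is how we understand the loss of Wang et al. (2020); the default γ = 1.5 and ε are illustrative choices. With γ = 2 (and ignoring ε), the numerator expands to Σp_i² + Σg_i² − 2Σp_i g_i, so the loss reduces to the soft Dice loss 1 − 2Σp_i g_i / (Σp_i² + Σg_i²).

```python
def noise_robust_dice_loss(pred, target, gamma=1.5, eps=1e-5):
    """Noise-robust Dice loss: sum |p - g|^gamma / (sum p^2 + sum g^2 + eps).

    pred: predicted foreground probabilities; target: (possibly noisy) binary labels.
    gamma = 2 recovers a soft Dice loss; gamma closer to 1 makes the loss behave
    more like mean absolute error, which is commonly regarded as more robust
    to label noise.
    """
    numerator = sum(abs(p - g) ** gamma for p, g in zip(pred, target))
    denominator = sum(p * p for p in pred) + sum(g * g for g in target) + eps
    return numerator / denominator


pred = [0.9, 0.2, 0.7, 0.1]
target = [1, 0, 1, 1]  # the last label may be a noisy annotation
print(noise_robust_dice_loss(pred, target, gamma=1.5))
print(noise_robust_dice_loss(pred, target, gamma=2.0))
```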

Pneumonia detection from lung images is a key part of an AI-based diagnostic system for fast and accurate screening of COVID-19 patients. In this regard, machine learning methods, especially discriminative convolutional neural networks (CNN), are deployed for COVID-19 detection (binary classification of COVID-19 and non-COVID-19) and multi-category diagnosis (classification of normal, bacterial, COVID-19, and other types of viral pneumonia).

The widely used measurement metrics for image classification are accuracy, precision, sensitivity, specificity, and F1 score. The areas under the ROC curve (AUC) were also reported in some studies. ROC curve describes the performance of a classification model at various classification thresholds and AUC measures the area underneath the obtained ROC curve.

where

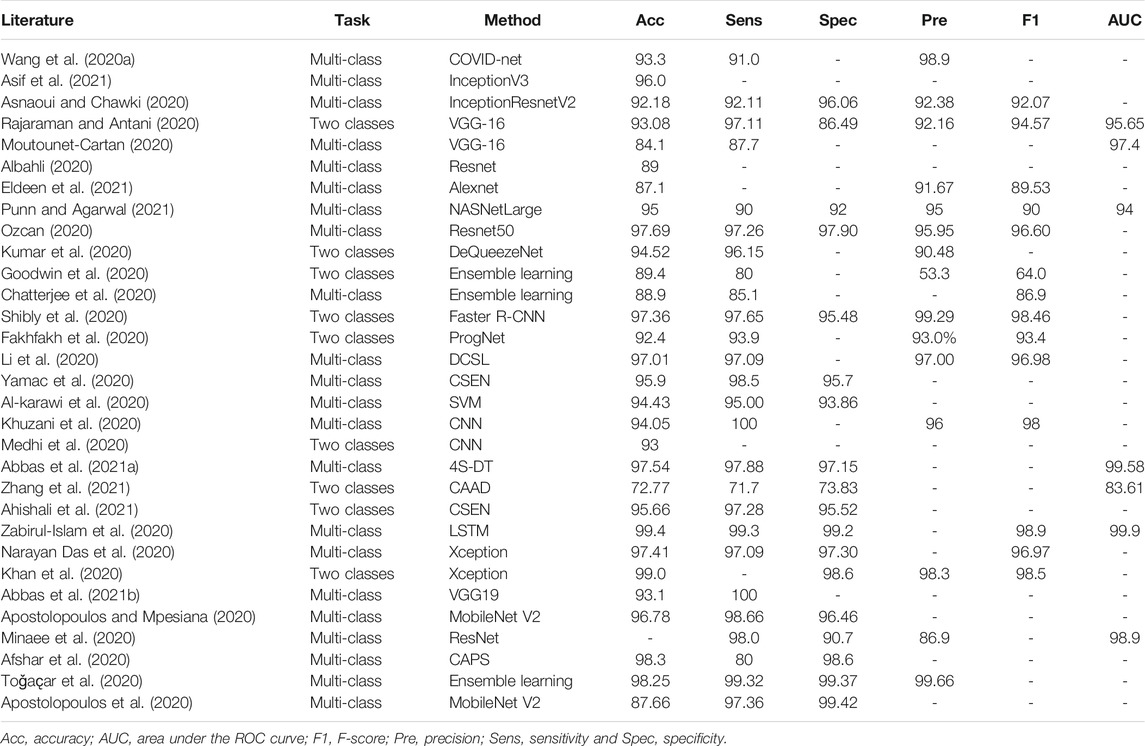

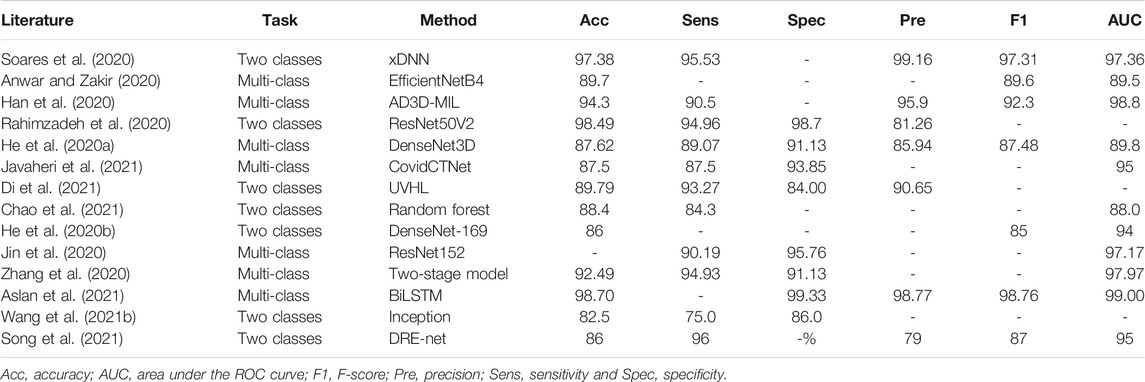
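These classification metrics can be computed directly from binary labels and model scores. In this sketch, which assumes 1 marks COVID-19 and 0 marks non-COVID-19, the AUC is computed through its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. This equals the area under the empirical ROC curve, but the quadratic pairwise loop is only suitable for small examples. The labels, scores, and 0.5 threshold are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, sensitivity, specificity and F1 from 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "sensitivity": sensitivity, "specificity": specificity, "f1": f1}


def roc_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative), ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))  # 8/9, about 0.889
```

Note that the first five metrics depend on the chosen threshold, whereas the AUC summarizes ranking quality over all thresholds.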

The biggest challenge in detecting COVID-19 from chest images is data scarcity. To address this issue, early works usually designed diagnostic systems following the handcrafted feature engineering paradigm. More recent publications have proposed solutions based on transfer learning, ensemble learning, multi-task learning, semi-supervised learning, and self-supervision. For ease of comparison, we summarize the reviewed methods for CXRs and CT scans in Table 1 and Table 2, respectively.

TABLE 1. Summary of COVID-19 detection from CXR images in the literature (%). If multiple models are used in a study, we report the best-performing model here.

TABLE 2. Summary of COVID-19 detection from lung CT slices in the literature (%). If multiple models are used in a study, we report the best-performing model here.

Handcrafted feature engineering is believed to be effective when prior knowledge of the problem is available, and it is preferred over deep learning when the training set is small. To diagnose COVID-19 from chest X-ray images, Al-karawi et al. (2020) proposed extracting handcrafted texture descriptors (LBP, Gabor features, and histograms of oriented gradients) for downstream SVM classification. Similarly, Khuzani et al. (2020) suggested extracting numerical features from both the spatial domain (texture, GLDM, and GLCM) and the frequency domain (FFT and wavelet); instead of an SVM, they designed a multi-layer neural network for three-class classification (normal, COVID-19, and other pneumonia). In the diagnostic pipeline introduced by Medhi et al. (2020), a CXR image is first converted to grayscale for image thresholding, and the resulting binary image is passed to a shallow network to distinguish normal images from COVID-19 cases. Chandra et al. (2021) proposed extracting 8,196 radiomic texture features from lung X-ray images and differentiated pneumonia types by voting across multiple classifiers.
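To make "handcrafted texture descriptor" concrete, the sketch below computes the basic 8-neighbor local binary pattern (LBP): each neighbor that is at least as bright as the center pixel sets one bit of an 8-bit code, and the normalized histogram of codes over an image serves as a texture feature vector for a downstream classifier such as an SVM. The neighbor ordering, the basic (non-uniform, radius-1) LBP variant, and the toy patch are assumptions; the exact LBP configuration used by Al-karawi et al. (2020) may differ, and the Gabor and HOG descriptors are not shown.

```python
def lbp_code(image, r, c):
    """8-neighbor LBP code of the pixel at (r, c); image is a 2D list of gray levels."""
    center = image[r][c]
    # Neighbors visited clockwise from the top-left; each contributes one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin normalized histogram of LBP codes over interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist


patch = [[52, 55, 61],
         [59, 57, 50],
         [60, 62, 58]]  # hypothetical 3 x 3 grayscale patch
print(lbp_code(patch, 1, 1))  # 244
```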

More recently, as collections of CXR images from COVID-19 patients have grown, deep learning has become the dominant technique. Wang L. et al. (2020) designed a lightweight diagnostic framework, COVID-Net, for three-class classification (i.e., no infection, non-COVID-19 infection, and COVID-19 viral infection). The tailored network makes heavy use of a residual projection-expansion-projection-extension (PEPX) design pattern, which enhances representational capacity while keeping computational complexity relatively low. Zabirul-Islam et al. (2020) introduced a hybrid deep CNN-LSTM network for COVID-19 detection, in which deep features of a CXR scan are extracted by a tailored CNN and passed to a long short-term memory (LSTM) unit for final classification. Because the LSTM replaces a fully connected layer in this CNN-LSTM model, the number of trainable parameters is reduced thanks to the LSTM’s parameter sharing across time steps.
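The parameter-sharing argument can be made concrete with a little arithmetic. An LSTM layer with input dimension d and hidden size h has 4(hd + h² + h) trainable parameters regardless of how many time steps it processes, whereas a fully connected layer applied to the same features flattened across T steps has Tdh + h. The sizes below (16 steps of 512-dimensional features, 64 hidden units) are hypothetical and are not those used by Zabirul-Islam et al. (2020).

```python
def lstm_params(input_dim, hidden_dim):
    """Trainable weights of one LSTM layer: 4 gates, each with input, recurrent and bias terms.

    Assumes a single bias vector per gate (the Keras convention); PyTorch keeps
    two bias vectors, adding 4 * hidden_dim more parameters.
    """
    return 4 * (hidden_dim * input_dim + hidden_dim * hidden_dim + hidden_dim)


def dense_params(input_dim, units):
    """Weights plus biases of a fully connected layer."""
    return input_dim * units + units


steps, feat_dim, hidden = 16, 512, 64  # hypothetical sizes
print(lstm_params(feat_dim, hidden))           # 147712, independent of steps
print(dense_params(steps * feat_dim, hidden))  # 524352, grows linearly with steps
```

The saving comes from reusing the same gate weights at every time step; with very short sequences or large hidden sizes, the comparison can go the other way.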

Transfer learning is one of the most common deep learning strategies in the literature for combating data scarcity. It pre-trains a deep model on large-scale datasets and fine-tunes it on target COVID-19 image sets (Ahishali et al., 2021; Apostolopoulos et al., 2020; Apostolopoulos and Mpesiana, 2020; Asnaoui and Chawki, 2020; Khan et al., 2020; Moutounet-Cartan, 2020; Narayan Das et al., 2020; Ozcan, 2020; Ozturk et al., 2020; Punn and Agarwal, 2021; Abbas et al., 2021b; Asif et al., 2021; Eldeen et al., 2021). The pre-trained models include, but are not limited to, Inception, ResNet, VGG-16, NASNet, and AlexNet. To further leverage the discriminative power of different models, ensemble learning is deployed, in which multiple deep networks vote for the final result. For example, DeQueezeNet, proposed by Kumar et al. (2020), ensembles DenseNet and SqueezeNet for classification. Similar models were proposed by Goodwin et al. (2020), Chatterjee et al. (2020), Shibly et al. (2020), Minaee et al. (2020), and Toğaçar et al. (2020). Alternatively, Afshar et al. (2020) introduced a capsule network-based model for CXR diagnosis, where transfer learning is exploited to boost performance. To “open” the black box of deep learning-based models, Brunese et al. (2020) introduced an explainable detection system in which a transferred VGG-16 and class activation maps (CAM) (Zhou et al., 2016) are leveraged to detect and localize anomalous areas for COVID-19 diagnosis. Furthermore, Majeed et al. (2020) performed a comparison study of pre-trained CNN models and deployed CAM to visualize the most discriminating regions; based on their experimental results, they recommended performing ROI segmentation before diagnostic analysis to obtain reliable results. Hirano et al. (2020) studied the vulnerability of deep networks to universal adversarial perturbations (UAPs) in the application of detecting COVID-19 cases from chest X-ray images. Their experiments suggest that deep models are vulnerable to small UAPs and that adversarial training is necessary.
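Class activation maps require a network whose last convolutional features are globally average pooled and fed to a linear classification layer; the map for class c is then M_c(x, y) = Σ_k w_k^c f_k(x, y), where f_k is the k-th feature map and w_k^c its classifier weight for class c. The sketch below computes this weighted sum and min-max normalizes the result for display as a heat map. The toy feature maps and weights are hypothetical, and upsampling the map to the input image resolution is omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """CAM for one class: M(x, y) = sum_k w_k * f_k(x, y), normalized to [0, 1].

    feature_maps: K x H x W nested lists from the last convolutional layer.
    class_weights: K weights connecting the pooled features to the class logit.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[sum(w * fmap[y][x] for w, fmap in zip(class_weights, feature_maps))
            for x in range(width)] for y in range(height)]
    # Min-max normalization so the map can be overlaid on the image as a heat map.
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in cam]


features = [[[1.0, 0.0], [0.0, 0.0]],
            [[0.0, 0.0], [0.0, 1.0]]]  # K = 2 toy 2 x 2 feature maps
cam = class_activation_map(features, class_weights=[2.0, 0.5])
print(cam)  # [[1.0, 0.0], [0.0, 0.25]]
```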

Since direct transfer across datasets from different domains may lead to poor performance, researchers have developed various strategies to mitigate the effect of domain differences on transfer performance. Li et al. (2020) proposed a discriminative cost-sensitive learning (DCSL) model for three-class classification of normal, COVID-19, and other types of pneumonia. It uses a pre-trained VGG16 as the backbone, transferring the first 13 layers and refining the two top dense layers with an auxiliary conditional center loss that decreases intra-class variation in representation learning. The Convolution Support Estimation Network (CSEN) (Ahishali et al., 2021; Yamac et al., 2020) aims to bridge the gap between model-based methods and deep learning approaches. It takes numerical representations from a pre-trained CheXNet as input and learns a non-iterative mapping for sparse representation learning. In addition, Zhou et al. (2021) considered COVID-19 CXR image classification in a semi-supervised domain adaptation setting and proposed a novel domain adaptation method, the semi-supervised open set domain adversarial network (SODA), which aligns data distributions across domains through domain adversarial training (Ganin et al., 2016). To handle highly imbalanced image sets, Zhang et al. (2021) formulated the task of identifying viral pneumonia in lung scans as a one-class classification-based anomaly detection problem and proposed a confidence-aware anomaly detection model (CAAD). CAAD consists of a shared feature extractor derived from a pre-trained EfficientNet, an anomaly detection module, and a confidence prediction module. A sample is detected as a COVID-19 case if it has a large anomaly score or a small confidence score.
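The conditional variant of the center loss used by Li et al. (2020) is not detailed here. As a hedged illustration of the underlying idea, the sketch below implements the standard center loss, 0.5 Σ_i ||x_i − c_{y_i}||², which penalizes the distance between each embedding and its class center, together with a simple rule that moves each center toward the mean of its mini-batch members. The update rate `alpha`, the class names, and the 2D embeddings are hypothetical.

```python
def center_loss(features, labels, centers):
    """0.5 * sum_i ||x_i - c_{y_i}||^2: small when embeddings cluster around their class center."""
    return 0.5 * sum(sum((a - b) ** 2 for a, b in zip(x, centers[y]))
                     for x, y in zip(features, labels))


def update_centers(features, labels, centers, alpha=0.5):
    """Move each class center toward the mean of its members in the mini-batch."""
    new_centers = {}
    for cls, c in centers.items():
        members = [x for x, y in zip(features, labels) if y == cls]
        # delta = sum(c - x) / (1 + n); the +1 keeps absent classes unchanged without dividing by 0.
        delta = [sum(ci - x[k] for x in members) / (1 + len(members))
                 for k, ci in enumerate(c)]
        new_centers[cls] = [ci - alpha * d for ci, d in zip(c, delta)]
    return new_centers


feats = [[1.0, 0.0], [3.0, 0.0]]
labels = ["covid", "covid"]
centers = {"covid": [0.0, 0.0], "normal": [5.0, 5.0]}
print(center_loss(feats, labels, centers))  # 5.0
centers = update_centers(feats, labels, centers)
print(center_loss(feats, labels, centers))  # smaller after the update
```

In training, this term is added to the classification loss so that embeddings become both separable and compact within each class.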

Another strategy for tackling data scarcity is data augmentation. For instance, Ucar and Korkmaz (2020) adopted offline augmentation strategies, such as adjusting noise, shear, and brightness, to address data imbalance. To further address the shortage of COVID-19 CXR images, Albahli (2020) and Waheed et al. (2020) proposed using GANs to synthesize CXR images directly. To leverage the large amount of unlabeled data in COVID-19 CXR detection, Rajaraman and Antani (2020) introduced a semi-supervised model that generates pseudo-annotations for unlabeled images; COVID-19 pneumonia opacities are then recognized using these “newly” labeled samples. Similarly, Abbas et al. (2021a) introduced a self-supervision method to generate pseudo-labels: unlabeled samples are clustered according to the abstract representations produced by the bottleneck layer of an autoencoder, and the clusters are used for downstream training.
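A common way to generate pseudo-annotations is confidence thresholding: a model trained on the labeled images predicts the unlabeled ones, and only predictions above a confidence threshold are kept as labels for the next training round. The sketch below shows that selection step under this assumption; the threshold of 0.9 and the probability values are hypothetical, and Rajaraman and Antani (2020) may select pseudo-labels differently.

```python
def pseudo_label(unlabeled_probs, threshold=0.9):
    """Select confident predictions on unlabeled images as pseudo-labels.

    unlabeled_probs: one list of class probabilities per unlabeled image, produced by
    a model trained on the labeled subset. Returns (image_index, class_index) pairs
    whose top probability reaches the threshold; the rest stay unlabeled.
    """
    selected = []
    for idx, probs in enumerate(unlabeled_probs):
        best = max(range(len(probs)), key=lambda k: probs[k])
        if probs[best] >= threshold:
            selected.append((idx, best))
    return selected


# Hypothetical softmax outputs for four unlabeled CXRs (classes: 0 = normal, 1 = COVID-19).
probs = [[0.95, 0.05], [0.60, 0.40], [0.02, 0.98], [0.50, 0.50]]
print(pseudo_label(probs))  # [(0, 0), (2, 1)]
```

The selected samples are added to the training set, the model is retrained, and the procedure can be repeated; a high threshold trades fewer new labels for fewer label errors.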

Transfer learning is still the most common technique among the diverse methods for detecting COVID-19 from lung CT images (Anwar and Zakir, 2020; He et al., 2020b; Chowhury et al., 2020; Soares et al., 2020; Wang S. et al., 2021). In particular, previous studies (He et al., 2020a; Ardakani et al., 2020) built a benchmark to evaluate state-of-the-art 2D and 3D CNN models (e.g., DenseNet and ResNet) for lung CT slice classification, and a lightweight 3D network optimized by neural architecture search was introduced for comparison in this benchmark. It is worth mentioning that in the study of Wang S. et al. (2021), the model was also used to re-examine the results of nucleic acid testing; according to this study, fine-tuned deep models can detect false-negative results. To address the large domain shift between source and target data in transfer learning, He et al. (2020b) proposed a self-supervised transfer learning approach called Self-Trans. By integrating contrastive self-supervision (Chen T. et al., 2020) into the transfer learning process to adjust network weights pre-trained on source data, Self-Trans reduces the bias that the source data introduce into the target task. Aslan et al. (2021) combined a pre-trained CNN with a BiLSTM architecture in a hybrid detection framework to improve diagnostic performance.
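Contrastive self-supervision learns representations by pulling together embeddings of two augmented views of the same image and pushing apart views of different images. The sketch below implements the NT-Xent (normalized temperature-scaled cross-entropy) loss of SimCLR (Chen T. et al., 2020) for a small batch of view pairs; Self-Trans’s exact contrastive objective and augmentations may differ, and the embeddings and temperature of 0.5 are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(view_a, view_b, temperature=0.5):
    """NT-Xent loss over N positive pairs (view_a[i], view_b[i]).

    Each of the 2N embeddings is an anchor; its partner view is the positive,
    and the remaining 2N - 2 embeddings in the batch act as negatives.
    """
    z = view_a + view_b
    n = len(view_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)
        denom = sum(math.exp(cosine(z[i], z[k]) / temperature)
                    for k in range(2 * n) if k != i)
        total -= math.log(math.exp(cosine(z[i], z[positive]) / temperature) / denom)
    return total / (2 * n)


a = [[1.0, 0.1], [0.1, 1.0]]
b = [[0.9, 0.0], [0.0, 1.1]]  # augmented views that agree with a
print(nt_xent(a, b) < nt_xent(a, list(reversed(b))))  # True
```

Pairing each view with the wrong partner raises the loss, which is the signal that drives the encoder toward augmentation-invariant features before fine-tuning on the target CT data.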

In addition to transfer learning, diagnostic solutions based on weak supervision, multi-instance learning, and graph learning have been proposed in the literature. Rahimzadeh et al. (2020) introduced a deep model that combines ResNet and a feature pyramid network (FPN) for CT image classification. ResNet serves as the backbone network, and the FPN builds a feature hierarchy from the backbone’s features at different scales, which helps detect COVID-19 infection at different scales. DRE-Net, proposed by Song et al. (2021), has a similar architecture that combines ResNet and FPN to extract detailed relations for image-level prediction; this study also applies Grad-CAM to ResNet layers to visualize the main lesion regions. Javaheri et al. (2021) introduced CovidCTNet, a multi-step deep learning pipeline for detecting COVID-19 from CT images. Using control CT slices as a reference, the dual function of BCDU-Net (Azad et al., 2019) in anomaly detection and noise cancellation was exploited to differentiate COVID-19 from community-acquired pneumonia anomalies. Han et al. (2020) proposed an attention-based deep 3D multiple instance learning (AD3D-MIL) model for accurate and interpretable screening of COVID-19 with weak labels. In AD3D-MIL, a bag of raw CT slices is transformed into multiple deep 3D instances, and an attention-based pooling layer then generates a Bernoulli-distributed bag label. COVID-19 and community-acquired pneumonia (CAP) have very similar clinical manifestations and imaging features in CT images. To differentiate the confusing cases in these two groups, Di et al. (2021) designed an uncertainty vertex-weighted hypergraph learning (UVHL) method to distinguish COVID-19 from CAP. In this method, a hypergraph is constructed in which each vertex corresponds to a sample and hyperedges connect neighboring vertices that share common features. Hypergraph learning is repeated until the hypergraph converges.
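Attention-based MIL pooling turns a variable-size bag of instance embeddings into one bag embedding whose classifier output is a Bernoulli probability. The sketch below follows the generic attention pooling a_k = softmax_k(w · tanh(V h_k)), z = Σ_k a_k h_k from attention-based deep MIL; it is an assumption that AD3D-MIL’s pooling takes this form, and the parameters `V`, `w`, and the classifier weights are illustrative values rather than learned ones.

```python
import math


def attention_mil_pool(instances, V, w):
    """Attention pooling over a bag of instance embeddings.

    a_k = softmax_k(w . tanh(V h_k)); bag embedding z = sum_k a_k h_k.
    V (L x D) and w (length L) stand in for learned parameters.
    """
    scores = []
    for h in instances:
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wi * hi for wi, hi in zip(w, hidden)))
    top = max(scores)  # subtract the maximum for a numerically stable softmax
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    bag = [sum(a * h[d] for a, h in zip(attn, instances))
           for d in range(len(instances[0]))]
    return bag, attn


def bag_probability(bag, classifier_w, bias=0.0):
    """Sigmoid output: the Bernoulli parameter of the scan-level (bag) label."""
    logit = sum(c * b for c, b in zip(classifier_w, bag)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# Three toy instance embeddings from one scan; the first looks abnormal.
bag_instances = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
bag, attn = attention_mil_pool(bag_instances, V=[[1.0, 0.0]], w=[3.0])
print(attn, bag_probability(bag, classifier_w=[1.0, -1.0]))
```

The attention weights double as an interpretability signal: they indicate which instances, and hence which parts of the scan, drove the scan-level decision.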

Alternatively, instead of directly detecting COVID-19 from CT scans with a single deep model, some researchers have proposed AI-based diagnosis systems consisting of multiple deep models, each completing one sub-task in sequential order. For example, Jin et al. (2020) introduced an AI system with five key parts: 1) a lung segmentation network, 2) a slice diagnosis network, 3) a COVID-infectious slice locating network, 4) a visualization module for interpreting the attentional regions of the deep networks, and 5) an image phenotype analysis module for explaining the features of the attentional regions. By completing these key tasks sequentially, the whole system achieves 97.17% AUC on a large internal CT set. Zhang et al. (2020) proposed a two-stage model to distinguish novel coronavirus pneumonia (NCP) from other types of pneumonia and normal controls in CT scans: a seven-category lung-lesion segmentation model produces the ROI mask, and the resulting lung-lesion map is fed to a deep model for COVID-19 diagnosis. Similarly, Wang B. et al. (2021) introduced a diagnosis system consisting of a segmentation model and a classification model. The segmentation model detects ROIs in lung scans, and the classification model then determines whether each lesion region is associated with COVID-19.
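In a segment-then-classify system, the predicted lesion mask must be split into individual regions before each one can be classified. A minimal sketch, assuming 4-connectivity and a 2D mask: breadth-first search labels the connected components, and a region-level classifier, here a hypothetical toy rule based on region size, is applied to each region. In a real system, the classifier would be a trained network applied to the image patch around each region.

```python
from collections import deque


def lesion_regions(mask):
    """Split a binary lesion mask (2D list) into 4-connected regions via breadth-first search."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                queue, region = deque([(r, c)]), []
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def diagnose(mask, classify_region):
    """Second stage: apply a region-level classifier to every lesion region."""
    return [classify_region(region) for region in lesion_regions(mask)]


def toy_classifier(region):
    """Hypothetical stand-in for a trained region classifier."""
    return "COVID-19" if len(region) >= 3 else "other"


lesion_mask = [[1, 1, 0, 0],
               [0, 0, 0, 1],
               [0, 0, 1, 1]]
print(diagnose(lesion_mask, toy_classifier))  # ['other', 'COVID-19']
```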

Although most works on COVID-19 focus on ROI segmentation and chest image diagnosis, severity assessment and prognosis prediction are also significant. Severity assessment facilitates monitoring of the course of COVID-19 infection. Furthermore, it is closely related to prognostic outcomes (Fang et al., 2021), and detecting high-risk patients for early intervention is highly important for lowering the fatality rate of COVID-19. We therefore review AI algorithms and models for COVID-19 severity assessment and prognosis prediction in one section. Note that, although closely related to severity assessment, prognosis prediction is a particularly challenging task: it requires monitoring patients’ outcomes over time spans ranging from several days to several weeks. Because of this challenge in data collection, research on prognosis prediction lags behind research on COVID-19 detection and diagnosis.

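To evaluate the quality of COVID-19 severity estimation, Spearman’s rank correlation coefficient between the ground truth and the prediction is used as the evaluation metric. Spearman’s ρ is defined as follows:

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n\,(n^2 - 1)},$$

where d_i is the difference between the ranks of the ground truth and the predicted severity of the i-th sample, and n is the number of samples. This closed form assumes no tied ranks; with ties, ρ is computed as the Pearson correlation between the rank variables.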
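Spearman’s ρ is the Pearson correlation between the rank vectors of the two sequences; when there are no ties, this equals 1 − 6Σd_i² / (n(n² − 1)). The sketch below assigns average ranks to tied values, which keeps it valid for discrete severity scores with repeats. The severity values are hypothetical.

```python
def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation of the rank vectors of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


truth = [1, 2, 3, 4, 5]  # e.g., radiologist severity scores
predicted = [1.1, 2.5, 2.4, 4.0, 6.0]
print(round(spearman_rho(truth, predicted), 6))  # 0.9
```

Here two adjacent cases are swapped in the predicted ranking, so Σd_i² = 2 and ρ = 1 − 12/120 = 0.9; the absolute prediction errors do not matter, only the ordering.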

To assess pneumonia severity in CXRs, Signoroni et al. (2020) proposed a novel end-to-end scheme with U-Net++ as the backbone network. Feature maps from different CNN layers of the encoder are masked with the results of the lung segmentation network (i.e., U-Net++) and fed to a global average pooling layer with a softmax activation to produce the final severity score. Cohen et al. (2020) proposed a transfer learning-based method for assessing the severity of COVID-19 infection. With a pre-trained DenseNet as the backbone, the convolutional layers transform an input image into a 1,024-dimensional vector, and the dense layers serve as task prediction layers that output 18 radiological findings. Finally, a linear regression model fuses the 1,024-dimensional features and the 18 findings to predict COVID-19 severity.
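The masking step in such a scheme can be illustrated with masked global average pooling: activations outside the predicted lung region are zeroed before each channel is averaged. A minimal sketch, assuming the lung mask has already been resized to the feature-map resolution and that pooling averages over the full map; whether Signoroni et al. (2020) normalize by the full map or only by the masked area is not stated here, and the values are toy inputs.

```python
def masked_global_average_pool(feature_maps, lung_mask):
    """Zero activations outside the lung mask, then average each channel over the full map.

    feature_maps: C x H x W nested lists; lung_mask: H x W list of 0/1 at the same resolution.
    """
    pooled = []
    for fmap in feature_maps:
        masked = [v * m for row, mrow in zip(fmap, lung_mask) for v, m in zip(row, mrow)]
        pooled.append(sum(masked) / len(masked))
    return pooled


features = [[[4.0, 4.0], [4.0, 4.0]],  # channel 0
            [[0.0, 8.0], [0.0, 8.0]]]  # channel 1: strong response outside the lung
lung = [[1, 0],
        [1, 0]]
print(masked_global_average_pool(features, lung))  # [2.0, 0.0]
```

Channel 1 responds only outside the lung, so masking removes its contribution entirely; the pooled vector then feeds the layers that produce the severity score.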

The severity of COVID-19 can be measured by different quantities. Goncharov et al. (2020) proposed using the percentage of infected lung as an indicator of COVID-19 severity. To this end, the study deployed multi-task learning to detect COVID-19 samples and estimate the percentage of infected lung area simultaneously; since the method requires lung segmentation, U-Net serves as the backbone. Chao et al. (2021) introduced an integrative analysis pipeline for accurate image-based outcome prediction, in which patient metadata, including both imaging and non-imaging data, are passed to a random forest for outcome prediction. In addition, to address weak annotation and insufficient data in COVID-19 severity assessment with CT, Li et al. (2021) proposed a novel weakly supervised multi-instance learning framework for severity assessment, adopting instance-level augmentation to boost performance.
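Infected lung percentage is the ratio of infected lung area to total lung area. Given a lung mask and a lesion mask, for example from a segmentation branch, it can be computed as in the following sketch. The toy 2D masks are hypothetical, and how Goncharov et al. (2020) derive the final percentage from their multi-task model may differ.

```python
def infected_lung_percentage(lung_mask, lesion_mask):
    """Percentage of lung pixels that are also marked as infected.

    Both masks are 2D lists of 0/1 at the same resolution; for a CT volume,
    the same counts would be accumulated over all slices.
    """
    lung = [v for row in lung_mask for v in row]
    lesion = [v for row in lesion_mask for v in row]
    lung_pixels = sum(1 for v in lung if v)
    if lung_pixels == 0:
        raise ValueError("lung mask is empty")
    # Lesion pixels outside the lung mask are ignored.
    infected = sum(1 for in_lung, is_lesion in zip(lung, lesion) if in_lung and is_lesion)
    return 100.0 * infected / lung_pixels


lung = [[1, 1, 1, 0],
        [1, 1, 1, 0]]
lesion = [[1, 1, 0, 0],
          [0, 1, 0, 1]]
print(infected_lung_percentage(lung, lesion))  # 50.0
```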

Because prognosis estimation is complex, previous studies usually fused lung ROI segmentation, COVID-19 diagnosis results, and patient metadata to predict a prognostic outcome. Unlike the other tasks, which follow similar evaluation protocols, AI-based prognosis prediction models are evaluated with different metrics in the literature: depending on the specific setup and context, either classification accuracy or regression error can serve as the evaluation measure. Thus, instead of summarizing prognosis performance metrics in a separate subsection, we specify the evaluation protocol of each reviewed study below.

To evaluate the course of COVID-19 in patients for prognosis analysis, Fakhfakh et al. (2020) proposed a deep model that leverages RNN and CNN architectures to assess the temporal evolution of images. The multi-temporal classification of X-ray images, together with clinical and radiological features, serves as the basis for prognosis and assesses the evolution of COVID-19 infection as positive or negative. Since this study formulates prognosis prediction as a binary classification problem, conventional classification metrics, including accuracy, precision, recall, and F1 score, are reported.

Prior models of COVID-19 prognosis prediction from lung CT volumes can be roughly divided into two scenarios. In the first scenario, prognosis prediction is formulated as a classification problem, and the output is a class from a predefined outcome set (Meng et al., 2020; Chao et al., 2021; Shiri et al., 2021). For instance, Meng et al. (2020) proposed a 3D DenseNet-like prognosis model, De-COVID19-Net, to predict patient death. In this study, CT images are first segmented with a threshold-based method, and the detected lung regions are fed into De-COVID19-Net. Before the final classification layer, clinical metadata and the numerical features learned by De-COVID19-Net are fused for the final prediction. Similarly, Shiri et al. (2021) used an XGBoost classifier to predict patient survival from radiomic features of lung CT scans and clinical data. Moreover, Chao et al. (2021) implemented a prognosis model using a random forest to identify high-risk patients who need ICU treatment. Following a similar data processing flow, from lung region segmentation and CT scan feature learning to metadata fusion and classification, the model generates a binary outcome in terms of ICU admission. For prognosis prediction models in the first scenario, conventional classification metrics such as AUC and sensitivity are used.

In the second scenario, prognosis estimation is formulated as a regression problem (Wang S. et al., 2020; Zhang et al., 2020; Lee et al., 2021). Specifically, Zhang et al. (2020) defined the prognosis output as the time, in days, from hospital admission until critical care is required. To this end, a light gradient boosting machine (LightGBM) and a Cox proportional-hazards (CoxPH) regression model were built. Kaplan–Meier analysis in the model evaluation suggests that incorporating lung lesions and clinical metadata boosts prognosis prediction performance. Alternatively, Wang S. et al. (2020) defined the prognostic event as the length of hospital stay until discharge and used two deep networks, one for lung region segmentation and the other for CT feature learning, feeding a multivariate Cox proportional-hazards regression. In this study, Kaplan–Meier analysis and the log-rank test are used to evaluate the proposed prognostic analysis. Under the same prognosis regression setting as Wang S. et al. (2020), Lee et al. (2021) developed a deep convolutional neural network, named Deep-COVID-DeteCT (DCD), that estimates prognosis from the entire chest CT volume, and experimentally demonstrated that multiple scans acquired during hospitalization yield a better prognosis.
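
Kaplan–Meier analysis, used to evaluate both regression-style models above, estimates the probability S(t) that the event of interest has not yet occurred by time t while correctly handling censored patients: S(t) is the product over event times t_i ≤ t of (1 − d_i/n_i), where d_i is the number of events at t_i and n_i the number of patients still at risk. The sketch below is a generic product-limit estimator, not the evaluation code of Zhang et al. (2020) or Wang S. et al. (2020); the follow-up times and event flags are hypothetical. When the event is discharge, as in Wang S. et al. (2020), S(t) reads as the probability of still being hospitalized at day t.

```python
from typing import List, Sequence, Tuple


def kaplan_meier(times: Sequence[float], events: Sequence[int]) -> List[Tuple[float, float]]:
    """Product-limit estimate of S(t) = P(event has not happened by time t).

    times  : follow-up time per patient (e.g. days since admission)
    events : 1 if the event was observed at that time, 0 if the patient was censored
    Returns (t, S(t)) at each distinct time where at least one event occurred.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_removed = 0
        # Group all patients that share this time point.
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_removed += 1
            i += 1
        if n_events:
            # Multiply by the conditional probability of getting past time t.
            survival *= 1.0 - n_events / n_at_risk
            curve.append((t, survival))
        # Both events and censored patients leave the risk set after time t.
        n_at_risk -= n_removed
    return curve


# Hypothetical hospital stays in days; event flag 0 marks a censored patient.
print(kaplan_meier([3, 5, 5, 8, 10, 12], [1, 1, 0, 1, 0, 1]))
```

In prognostic studies, the cohort is usually split into predicted high- and low-risk groups, a curve is computed for each, and the log-rank test checks whether the two curves differ significantly.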

Machine learning is one of the core techniques in AI-driven computational solutions, and data are the stepping stone for developing any machine learning-based diagnostic system. This section summarizes the primary COVID-19 chest image sets that are publicly accessible to researchers, starting with CXR datasets and followed by chest CT image sets. Note that a dataset containing both X-ray images and CT scans is summarized with the CXR datasets.

COVID-19 CXR image data collection (Cohen et al., 2020) is an open public dataset of chest X-ray images collected from patients who are positive for or suspected of COVID-19 or other types of viral and bacterial pneumonia (including MERS and SARS). The collection contains 589 chest X-ray images (542 frontal and 47 lateral views) from 282 people across 26 countries, of whom 176 are male and 106 are female. Of the frontal views, 408 images are taken in the standard PA/AP (posteroanterior/anteroposterior) position and the other 134 are AP Supine (anteroposterior, lying down). In addition to the CXR images, the dataset provides clinical attributes, including survival, ICU stay, intubation events, blood tests, and location, as well as free-form clinical notes for each image/case.

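BIMCV COVID-19+ (Vayá et al., 2020) is a large annotated dataset of chest X-ray (RX) and CT images from COVID-19 patients.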

COVID-19 Radiography Database (Chowhury et al., 2020) consists of 219 COVID-19 positive CXR images, 1,341 normal images, and 1,345 viral pneumonia images. All images are stored in grayscale PNG format with a resolution of 1024 by 1024 pixels.

COVID-CT-dataset (Yang et al., 2020) provides 349 CT scans showing clinical characteristics of COVID-19 from 216 patients and 463 non-COVID-19 CT scans. Images in this set are collected from COVID-19-related papers in medRxiv, bioRxiv, NEJM, JAMA, and Lancet and thus vary in size. The number of CT scans per patient ranges from 1 to 16, with an average of 1.6. The utility of these samples was confirmed by a senior radiologist who has been diagnosing and treating COVID-19 patients since the outbreak of the pandemic. Meta-information, including patient ID, patient information, DOI, and image caption, is also available in this dataset.

COVID-CTset (Rahimzadeh et al., 2020) is a large CT image dataset comprising 15,589 COVID-19 images from 95 patients and 48,260 normal images from 282 persons, collected at the Negin Medical Center in Sari, Iran. Patients' private information has been removed, and each image is stored in 16-bit grayscale TIFF format at 512 × 512 pixel resolution.
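
Because COVID-CTset stores slices as 16-bit TIFF files, images generally need to be rescaled before being fed to networks that expect floating-point inputs. The sketch below assumes per-image min–max scaling to [0, 1]; this normalization choice, the function name, and the synthetic slice are assumptions for illustration rather than the preprocessing prescribed by Rahimzadeh et al. (2020).

```python
import numpy as np


def uint16_to_unit_float(img: np.ndarray) -> np.ndarray:
    """Map a 16-bit grayscale slice to float32 in [0, 1] with per-image min-max scaling."""
    if img.dtype != np.uint16:
        raise TypeError(f"expected uint16 input, got {img.dtype}")
    img = img.astype(np.float32)
    lo, hi = float(img.min()), float(img.max())
    if hi == lo:  # constant image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)


# Synthetic stand-in for a 512 x 512 slice; real slices would be read from TIFF files.
rng = np.random.default_rng(0)
slice_u16 = rng.integers(0, 4096, size=(512, 512), dtype=np.uint16)
x = uint16_to_unit_float(slice_u16)
print(x.dtype, x.shape, x.min(), x.max())
```

Real slices could be loaded into a NumPy array with a TIFF reader such as the `tifffile` package (`tifffile.imread`) before calling the function. Per-image scaling removes scanner-dependent intensity offsets but also discards absolute intensity information, so a fixed global scaling may be preferable when absolute values matter.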

MosMedData (Morozov et al., 2020) contains 1,110 lung CT scans acquired at municipal hospitals in Moscow, Russia, between March 1, 2020, and April 25, 2020. Of these scans, 42% are from male patients and 56% from female patients, with the remaining 2% unknown. The dataset groups samples into five categories (i.e., zero, mild, moderate, severe, and critical) based on the severity of COVID-19-related lung tissue abnormalities, with sample ratios of 22.8, 61.6, 11.3, 4.1, and 0.2%, respectively. In addition to severity labels, a small subset of 50 cases is annotated with pixel-level binary ROI masks that localize ground-glass opacifications and regions of consolidation.
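
The MosMedData severity distribution is highly skewed, which matters when training a five-class severity classifier. One common remedy, assumed here and not prescribed by the dataset authors, is inverse-frequency class weighting, w_c = 1/(K·p_c), where p_c is the fraction of samples in class c and K = 5 is the number of classes. The sketch applies it to the ratios reported above.

```python
from typing import Dict

# MosMedData severity distribution as reported in the text (fractions of all scans).
severity_ratios = {
    "zero": 0.228,
    "mild": 0.616,
    "moderate": 0.113,
    "severe": 0.041,
    "critical": 0.002,
}


def inverse_frequency_weights(ratios: Dict[str, float]) -> Dict[str, float]:
    """w_c = 1 / (K * p_c); each class then contributes p_c * w_c = 1/K, so sum_c p_c * w_c = 1."""
    k = len(ratios)
    return {name: 1.0 / (k * p) for name, p in ratios.items()}


weights = inverse_frequency_weights(severity_ratios)
for name, w in weights.items():
    print(f"{name:>9}: {w:7.2f}")
```

With these ratios the critical class receives a weight of about 100, versus roughly 0.3 for the mild class; since 0.2% corresponds to only about two scans, such extreme weights can make training unstable, and clipped or square-root-smoothed weights are often used instead.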

CC-CCII CT image set (Zhang et al., 2020) consists of 617,775 CT images from 4,154 patients in China and is designed to differentiate among novel coronavirus pneumonia (NCP) due to SARS-CoV-2 infection, common pneumonia caused by viral, bacterial, or mycoplasma infection, and normal controls. Each image is accompanied by metadata (patient ID, scan ID, age, sex, critical illness, liver function, lung function, and time of progression). Furthermore, 750 CT slices from 150 COVID-19 patients are manually annotated at the pixel level into four classes: background, lung field, ground-glass opacity, and consolidation.
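
Pixel-level annotations like those in CC-CCII support evaluating segmentation models class by class. A standard metric is the Dice coefficient, 2|P ∩ T| / (|P| + |T|) for a predicted region P and ground-truth region T. The sketch below assumes the four classes are encoded as integers 0 to 3 in the order listed above; this encoding is an assumption for illustration and may differ from the dataset's actual file format.

```python
from typing import Dict

import numpy as np

# Hypothetical label encoding, following the order of classes in the text.
CLASS_NAMES = {0: "background", 1: "lung field", 2: "ground-glass opacity", 3: "consolidation"}


def per_class_dice(pred: np.ndarray, target: np.ndarray, num_classes: int = 4) -> Dict[str, float]:
    """Dice = 2*|P & T| / (|P| + |T|) for each integer class label in two masks."""
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    scores = {}
    for c in range(num_classes):
        p = pred == c
        t = target == c
        denom = p.sum() + t.sum()
        name = CLASS_NAMES.get(c, str(c))
        # A class absent from both masks is treated as perfect agreement.
        scores[name] = 1.0 if denom == 0 else float(2.0 * np.logical_and(p, t).sum() / denom)
    return scores


# Tiny hypothetical 2 x 3 masks.
target = np.array([[0, 1, 1], [1, 2, 3]])
pred = np.array([[0, 1, 2], [1, 2, 0]])
print(per_class_dice(pred, target))
```

Per-class scores are usually more informative than a pooled score here, because the large background and lung-field regions would otherwise mask poor performance on the small lesion classes.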

COVID-19 CT segmentation dataset (Jenssen, 2020) consists of 100 axial CT images from confirmed COVID-19 cases, provided by the Italian Society of Medical and Interventional Radiology. Each image was segmented by a radiologist using three labels: ground-glass (mask value = 1), consolidation (= 2), and pleural effusion (= 3), and the images are stored in a single NIfTI file.
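
Since the three lesion types are encoded as mask values 1, 2, and 3, simple label statistics, such as how much of the volume each lesion type occupies and which slices contain any lesion, can be read straight off the mask array. The sketch below assumes the mask volume is already loaded as a NumPy array with slices along the last axis and that 0 denotes unlabeled voxels; both are assumptions.

```python
from typing import Dict

import numpy as np

# Mask values as described for this dataset; 0 is assumed to mean "no label".
LABELS = {1: "ground-glass", 2: "consolidation", 3: "pleural effusion"}


def label_fractions(mask: np.ndarray) -> Dict[str, float]:
    """Fraction of all voxels that carry each lesion label."""
    mask = np.rint(np.asarray(mask)).astype(np.int64)  # masks loaded as floats are rounded first
    return {name: float((mask == value).sum()) / mask.size for value, name in LABELS.items()}


def slices_with_lesion(mask: np.ndarray, axis: int = -1) -> np.ndarray:
    """Boolean vector: does each slice along `axis` contain any lesion label (> 0)?"""
    mask = np.asarray(mask)
    slice_axis = axis % mask.ndim
    other_axes = tuple(i for i in range(mask.ndim) if i != slice_axis)
    return (mask > 0).any(axis=other_axes)


# Synthetic 4 x 4 x 2 volume standing in for a real mask volume.
demo = np.zeros((4, 4, 2))
demo[0, 0, 0] = demo[0, 1, 0] = 1  # two ground-glass voxels on slice 0
demo[3, 3, 0] = 3                   # one pleural effusion voxel on slice 0
print(label_fractions(demo), slices_with_lesion(demo))
```

With the `nibabel` package, a NIfTI mask can be loaded as an array via `nibabel.load(path).get_fdata()`, where `path` points to the downloaded mask file.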

SARS-CoV-2 CT scan dataset (Soares et al., 2020) contains 1,252 CT scans that are positive for SARS-CoV-2 infection and 1,230 images from non-COVID-19 patients, collected at hospitals in São Paulo, Brazil. The dataset is intended for developing artificial intelligence methods that identify whether a person is infected by SARS-CoV-2 through the analysis of their CT scans.

AI and machine learning have been applied in the fight against the COVID-19 pandemic. In this article, we reviewed the state-of-the-art solutions to lung scan examination for COVID-19 treatment. Though promising results have been reported, many challenges still exist that should be discussed and investigated in the future.

First, when studying these publications, we found it very challenging to compare their performance. Prior works usually evaluated their models either on a private dataset or on a combination of several public image sets. Furthermore, the use of different evaluation protocols (e.g., binary vs. multi-category classification) and various performance metrics makes comparison very difficult. We argue that the lack of a common benchmark hinders the development of AI solutions that build on the state of the art. As more chest images become available, we expect a comprehensive benchmark for fair comparison among different solutions to emerge in the near future.

Second, AI-based methods, especially deep learning, usually require a huge amount of training data with quality annotations, and collecting medical images is generally more difficult and expensive than collecting natural images. Compared with model sizes, which easily reach millions of trainable parameters, the sample sizes of the current public lung scan image sets are relatively small. This gap is even more pronounced in the prognosis estimation literature. Consequently, the generalizability of state-of-the-art models to unseen data is questionable. In addition, since current lung scan image sets contain many images from severely or critically ill patients, it is debated whether AI can distinguish the nuances between mild/moderate COVID-19 and other lower respiratory illnesses in real clinical settings. Such bias in the training data can greatly harm a model's generalizability, and without tackling it, data-driven solutions are hardly ready for clinical deployment. There are two possible ways to address this issue. On the one hand, large image sets that cover a wide variety of COVID-19 cases are in high demand. On the other hand, self-supervised anomaly detection methods can help mitigate data bias in data-driven solutions. Specifically, it is relatively easy to collect a large number of lung scans from healthy subjects. By learning the normal patterns in these negative cases, AI-based anomaly detection methods are expected to flag positive chest images by identifying abnormal patterns that deviate from the normal ones.
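
The anomaly detection idea above (model only healthy scans, then flag whatever deviates) can be illustrated with a deliberately simple stand-in. The self-supervised methods the paragraph refers to learn the features themselves; to keep the sketch short, we assume feature vectors have already been extracted by some encoder and replace the self-supervised model with a Gaussian density fitted to healthy-scan features, scoring new scans by Mahalanobis distance. The class name and the synthetic features are hypothetical, and this is not a specific published method.

```python
import numpy as np


class GaussianAnomalyScorer:
    """Fit a Gaussian to features of healthy scans; score new scans by Mahalanobis distance."""

    def __init__(self, eps: float = 1e-6):
        self.eps = eps  # ridge term keeps the covariance invertible with few samples

    def fit(self, normal_feats: np.ndarray) -> "GaussianAnomalyScorer":
        # normal_feats: (n_samples, n_features), from healthy subjects only.
        self.mean_ = normal_feats.mean(axis=0)
        cov = np.atleast_2d(np.cov(normal_feats, rowvar=False))
        cov = cov + self.eps * np.eye(normal_feats.shape[1])
        self.precision_ = np.linalg.inv(cov)
        return self

    def score(self, feats: np.ndarray) -> np.ndarray:
        # Quadratic form diff^T * precision * diff per row; larger = less like healthy scans.
        diff = np.atleast_2d(feats) - self.mean_
        sq = np.einsum("ij,jk,ik->i", diff, self.precision_, diff)
        return np.sqrt(np.maximum(sq, 0.0))


rng = np.random.default_rng(0)
healthy = rng.normal(size=(500, 8))  # stand-in for encoder features of healthy scans
scorer = GaussianAnomalyScorer().fit(healthy)
scores = scorer.score(np.stack([np.zeros(8), np.full(8, 4.0)]))
print(scores)  # the second, atypical vector scores far higher
```

In practice, the decision threshold on the score would be chosen on held-out healthy scans (for example, a high percentile of their scores), so that only scans unusually far from the normal distribution are flagged.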

Third, in COVID-19 treatment, examination based on data from a single modality is usually not sufficient. For instance, some COVID-19 patients do not experience fever or cough, while others show no abnormalities in their chest images. To tackle this problem, omni-modality learning that holistically analyzes patients' clinical information, for example, blood test results, age, chest images, and RT-PCR test results, is highly desirable for COVID-19 treatment. We have witnessed a trend toward including multi-modality data in prognosis estimation. However, from a technical standpoint, current multi-modality data fusion methods are overly simple, and how to effectively combine lung scans with patients' clinical records remains an open question.
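
To make concrete what a simple fusion scheme looks like, the sketch below z-scores a few clinical variables and concatenates them with an image feature vector before a single logistic output layer. This follows the general pattern of fusing metadata before the final classification layer described for De-COVID19-Net, assuming fusion by concatenation; all feature dimensions, variables, and weights are hypothetical.

```python
import numpy as np


def concat_fusion(image_feats: np.ndarray, clinical: np.ndarray,
                  clin_mean: np.ndarray, clin_std: np.ndarray) -> np.ndarray:
    """Fuse by concatenation: z-score the clinical variables, then append them to image features.

    image_feats : (n_patients, d_img) features from a CT/CXR encoder (assumed given)
    clinical    : (n_patients, d_clin) raw clinical variables, e.g. age or a lab value
    clin_mean, clin_std : (d_clin,) statistics computed on the training set
    """
    safe_std = np.where(clin_std > 0, clin_std, 1.0)  # avoid dividing by zero
    clinical_z = (clinical - clin_mean) / safe_std
    return np.concatenate([image_feats, clinical_z], axis=1)


def logistic_head(fused: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """Single linear layer plus sigmoid on the fused vector, giving a risk score in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(fused @ w + b)))


# Hypothetical example: 3 patients, 4 image features, 2 clinical variables.
rng = np.random.default_rng(0)
img = rng.normal(size=(3, 4))
clin = np.array([[65.0, 1.2], [48.0, 0.8], [72.0, 2.5]])
fused = concat_fusion(img, clin, clin_mean=np.array([60.0, 1.0]), clin_std=np.array([10.0, 0.5]))
risk = logistic_head(fused, w=rng.normal(size=fused.shape[1]), b=0.0)
print(fused.shape, risk)
```

With a linear head, concatenation treats every fused dimension independently and cannot capture interactions, such as the relevance of an imaging finding depending on a patient's age, which illustrates why more expressive fusion remains an open problem.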

Last but not least, despite the promising results reported in prior work, the explainability of these AI models has received less attention. Decision-making in a medical setting can have serious health consequences, so it is often not enough to have a decision-making or risk-prediction system that performs well in the statistical sense. Conventional medical diagnosis and prognosis are usually supported by evidence, but such evidence is typically missing from current AI-based methods. We argue that this lack of explainability is another hurdle to deploying AI on lung scans for COVID-19 examination. A desirable system should not only indicate the presence of COVID-19 (yes/no) but also identify which structures or regions in the images form the basis of its decision.
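
One widely used way to show which image regions drive a CNN's decision is class activation mapping (CAM) from Zhou et al. (2016). For a network ending in global average pooling and a linear layer, the map for class c is M_c(x, y) = Σ_k w_k^c f_k(x, y), where f_k is the k-th feature map of the last convolutional layer and w_k^c the linear weight connecting it to class c. The NumPy sketch below assumes these activations and weights have already been extracted from a trained model; clipping negative values and rescaling to [0, 1] are common visualization choices, not part of the CAM definition.

```python
import numpy as np


def class_activation_map(feature_maps: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """CAM as a weighted sum of the last convolutional feature maps.

    feature_maps : (K, H, W) activations of the last convolutional layer
    fc_weights   : (num_classes, K) weights of the linear layer after global average pooling
    Returns an (H, W) map, clipped at zero and rescaled so its maximum is 1.
    """
    cam = np.tensordot(fc_weights[class_idx], feature_maps, axes=([0], [0]))  # (H, W)
    cam = np.maximum(cam, 0.0)  # keep only evidence in favor of the class
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: K = 2 feature maps of size 2 x 2 and 2 classes.
fm = np.array([[[1.0, 0.0], [0.0, 0.0]],
               [[0.0, 0.0], [0.0, 2.0]]])
w = np.array([[1.0, 0.5],
              [-1.0, 1.0]])
print(class_activation_map(fm, w, 0))
print(class_activation_map(fm, w, 1))
```

The resulting map is typically upsampled to the input resolution and overlaid on the chest image, giving clinicians a region-level basis for the yes/no output.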

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbas, A., Abdelsamea, M., and Gaber, M. (2021a). 4S-DT: Self Supervised Super Sample Decomposition for Transfer Learning with Application to COVID-19 Detection. IEEE Trans. Neural Netw. Learn. Syst. 32 (7), 2798–2808. doi:10.1109/TNNLS.2021.3082015

Abbas, A., Abdelsamea, M. M., and Gaber, M. M. (2021b). Classification of COVID-19 in Chest X-ray Images Using DeTraC Deep Convolutional Neural Network. Appl. Intell. 51, 854–864. doi:10.1007/s10489-020-01829-7

Afshar, P., Heidarian, S., Naderkhani, F., Oikonomou, A., Plataniotis, K. N., and Mohammadi, A. (2020). COVID-CAPS: A Capsule Network-Based Framework for Identification of COVID-19 Cases from X-ray Images. Pattern Recognition Lett. 138, 638–643. doi:10.1016/j.patrec.2020.09.010

Ahishali, M., Degerli, A., Yamac, M., Kiranyaz, S., Chowdhury, M., Hameed, K., et al. (2021). A Comparative Study on Early Detection of COVID-19 from Chest X-ray Images. IEEE Access 9, 41052–41065. doi:10.1109/ACCESS.2021.3064927

Al-karawi, D., Al-Zaidi, S., Polus, N., and Jassim, S. (2020). Artificial Intelligence-Based Chest X-Ray Test of COVID-19 Patients. Int. J. Comput. Inform. Engg., 14 (10).

Albahli, S. (2020). Efficient GAN-based Chest Radiographs (CXR) Augmentation to Diagnose Coronavirus Disease Pneumonia. Int. J. Med. Sci. 17, 1439–1448. doi:10.7150/ijms.46684

Amyar, A., Modzelewski, R., Li, H., and Ruan, S. (2020). Multi-task Deep Learning Based CT Imaging Analysis for COVID-19 Pneumonia: Classification and Segmentation. Comput. Biol. Med. 126, 104037. doi:10.1016/j.compbiomed.2020.104037

Anwar, T., and Zakir, S. (2020). Deep Learning Based Diagnosis of COVID-19 Using Chest CT-scan Images. In IEEE International Multitopic Conference (INMIC), Bahawalpur, Pakistan, 5-7 Nov. 2020. IEEE. doi:10.1109/INMIC50486.2020.9318212

Apostolopoulos, I. D., Aznaouridis, S. I., and Tzani, M. A. (2020). Extracting Possibly Representative COVID-19 Biomarkers from X-ray Images with Deep Learning Approach and Image Data Related to Pulmonary Diseases. J. Med. Biol. Eng., 1–8. doi:10.1007/s40846-020-00529-4

Apostolopoulos, I. D., and Mpesiana, T. A. (2020). COVID-19: Automatic Detection from X-ray Images Utilizing Transfer Learning with Convolutional Neural Networks. Phys. Eng. Sci. Med. 43, 635–640. doi:10.1007/s13246-020-00865-4

Ardakani, A. A., Kanafi, A. R., Acharya, U. R., Khadem, N., and Mohammadi, A. (2020). Application of Deep Learning Technique to Manage COVID-19 in Routine Clinical Practice Using CT Images: Results of 10 Convolutional Neural Networks. Comput. Biol. Med. 121, 103795. doi:10.1016/j.compbiomed.2020.103795

Asif, S., Wenhui, Y., Jin, H., Tao, Y., and Jinhai, S. (2021). Classification of COVID-19 from Chest X-ray Images Using Deep Convolutional Neural Networks, Proceedings of International Conference on Computer and Communications.

Aslan, M. F., Unlersen, M. F., Sabanci, K., and Durdu, A. (2021). CNN-based Transfer Learning-BiLSTM Network: A Novel Approach for COVID-19 Infection Detection. Appl. Soft Comput. 98, 106912. doi:10.1016/j.asoc.2020.106912

Asnaoui, K. E., and Chawki, Y. (2020). Using X-ray Images and Deep Learning for Automated Detection of Coronavirus Disease. J. Biomol. Struct. Dyn. 5, 1–12. doi:10.1080/07391102.2020.1767212

Azad, R., Asadi, M., Fathy, M., and Escalera, S. (2019). Bi-Directional ConvLSTM U-Net with Densley Connected Convolutions. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops. doi:10.1109/ICCVW.2019.00052

Bai, Y., Yao, L., Wei, T., Tian, F., Jin, D.-Y., Chen, L., et al. (2020). Presumed Asymptomatic Carrier Transmission of COVID-19. JAMA 323, 1406–1407. doi:10.1001/jama.2020.2565

Brunese, L., Mercaldo, F., Reginelli, A., and Santone, A. (2020). Explainable Deep Learning for Pulmonary Disease and Coronavirus COVID-19 Detection from X-Rays. Computer Methods Programs Biomed. 196, 105608. doi:10.1016/j.cmpb.2020.105608

Chandra, T. B., Verma, K., Singh, B. K., Jain, D., and Netam, S. S. (2021). Coronavirus Disease (COVID-19) Detection in Chest X-ray Images Using Majority Voting Based Classifier Ensemble. Expert Syst. Appl. 165, 113909. doi:10.1016/j.eswa.2020.113909

Chao, H., Fang, X., Zhang, J., Homayounieh, F., Arru, C. D., Digumarthy, S. R., et al. (2021). Integrative Analysis for COVID-19 Patient Outcome Prediction. Med. Image Anal. 67, 101844. doi:10.1016/j.media.2020.101844

Chatterjee, S., Saad, F., Sarasaen, C., Ghosh, S., Khatun, R., Radeva, P., et al. (2020). Exploration of Interpretability Techniques for Deep COVID-19 Classification Using Chest X-ray Images. arXiv:2006.02570 [Epub ahead of print].

Chen, T., Kornblith, S., Norouzi, M., and Hinton, G. (2020a). A Simple Framework for Contrastive Learning of Visual Representations. International Conference on Machine Learning (ICML).

Chen, X., Yao, L., and Zhang, Y. (2020b). Residual Attention U-Net for Automated Multi-Class Segmentation of COVID-19 Chest CT Images. arXiv:2004.05645 [Epub ahead of print].

Chowhury, M., Rahman, T., Khandakar, A., Mazhar, R., Kadir, M., Mahbub, Z., et al. (2020). Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 8, 132665–132676. doi:10.1109/ACCESS.2020.3010287

Cohen, J., Morrison, P., Dao, L., Roth, K., Duong, T., and Ghassemi, M. (2020). COVID-19 Image Data Collection: Prospective Predictions Are the Future. J. Mach. Learn.

Cohen, J. P., Dao, L., Roth, K., Morrison, P., Bengio, Y., Abbasi, A. F., et al. (2020). Predicting COVID-19 Pneumonia Severity on Chest X-ray with Deep Learning. Cureus 12, e9448. doi:10.7759/cureus.9448

Di, D., Shi, F., Yan, F., Xia, L., Mo, Z., Ding, Z., et al. (2021). Hypergraph Learning for Identification of COVID-19 with CT Imaging. Med. Image Anal. 68, 101910. doi:10.1016/j.media.2020.101910

Dong, D., Tang, Z., Wang, S., Hui, H., Gong, L., Lu, Y., et al. (2021). The Role of Imaging in the Detection and Management of COVID-19: a Review. IEEE Rev. Biomed. Eng. 14, 16–29. doi:10.1109/rbme.2020.2990959

Eldeen, N., Smarandache, F., and Loey, M. (2021). A Study of the Neutrosophic Set Significance on Deep Transfer Learning Models: An Experimental Case on a Limited COVID-19 Chest X-ray Dataset. Cognit. Comput. 4, 1–10. doi:10.1007/s12559-020-09802-9

Fakhfakh, M., Bouaziz, B., Gargouri, F., and Chaari, L. (2020). ProgNet: COVID-19 Prognosis Using Recurrent and Convolutional Neural Networks. Open Med. Imaging J. 12. doi:10.2174/1874347102012010011

Fan, D.-P., Zhou, T., Ji, G.-P., Zhou, Y., Chen, G., Fu, H., et al. (2020). Inf-Net: Automatic COVID-19 Lung Infection Segmentation from CT Images. IEEE Trans. Med. Imaging 39, 2626–2637. doi:10.1109/tmi.2020.2996645

Fang, X., Kruger, U., Homayounieh, F., Chao, H., Zhang, J., Digumarthy, S. R., et al. (2021). Association of AI Quantified COVID-19 Chest CT and Patient Outcome. Int. J. CARS. 16, 435–445. doi:10.1007/s11548-020-02299-5

Ganin, Y., Ustinova, E., Ajakan, H., Germain, P., Larochelle, H., Laviolette, F., et al. (2016). Domain-adversarial Training of Neural Networks. J. Machine Learn. Res. 17, 2096–2030.

Goncharov, M., Pisov, M., Shevtsov, A., Shirokikh, B., Kurmukov, A., Blokhin, I., et al. (2020). CT-based COVID-19 Triage: Deep Multitask Learning Improves Joint Identification and Severity Quantification. arXiv:2006.01441 [Epub ahead of print].

Goodwin, B., Jaskolski, C., Zhong, C., and Asmani, H. (2020). Intra-model Variability in COVID-19 Classification Using Chest X-ray Images. arXiv:2005.02167 [Epub ahead of print].

Han, Z., Wei, B., Hong, Y., Li, T., Cong, J., Zhu, X., et al. (2020). Accurate Screening of COVID-19 Using Attention-Based Deep 3D Multiple Instance Learning. IEEE Trans. Med. Imaging 39, 2584–2594. doi:10.1109/tmi.2020.2996256

Hao, W., and Li, M. (2020). Clinical Diagnostic Value of CT Imaging in COVID-19 with Multiple Negative RT-PCR Testing. Travel Med. Infect. Dis. 34, 101627. doi:10.1016/j.tmaid.2020.101627

He, X., Wang, S., Shi, S., Chu, X., Tang, J., Liu, X., et al. (2020a). Benchmarking Deep Learning Models and Automated Model Design for COVID-19 Detection with Chest CT Scans. medRxiv. doi:10.1101/2020.06.08.20125963

He, X., Yang, X., Zhang, S., Zhao, J., Zhang, Y., Xing, E., et al. (2020b). Sample-efficient Deep Learning for COVID-19 Diagnosis Based on CT Scans. medRxiv. doi:10.1101/2020.04.13.20063941

Hirano, H., Koga, K., and Takemoto, K. (2020). Vulnerability of Deep Neural Networks for Detecting COVID-19 Cases from Chest X-ray Images to Universal Adversarial Attacks. PLoS ONE 15 (12). doi:10.1371/journal.pone.0243963

Javaheri, T., Homayounfar, M., Amoozgar, Z., Reiazi, R., Homayounieh, F., Abbas, E., et al. (2021). CovidCTNet: An Open-Source Deep Learning Approach to Identify COVID-19 Using CT Image. Npj Digit. Med. 4. doi:10.1038/s41746-021-00399-3

Jenssen, H. (2020). COVID-19 CT Segmentation Dataset. Available at: https://medicalsegmentation.com/about/.

Jin, C., Chen, W., Cao, Y., Xu, Z., Tan, Z., Zhang, X., et al. (2020). Development and Evaluation of an Artificial Intelligence System for COVID-19 Diagnosis. Nat. Commun. 11, 5088. doi:10.1038/s41467-020-18685-1

Khan, A. I., Shah, J. L., and Bhat, M. M. (2020). CoroNet: A Deep Neural Network for Detection and Diagnosis of COVID-19 from Chest X-ray Images. Computer Methods Programs Biomed. 196, 105581. doi:10.1016/j.cmpb.2020.105581

Kumar, S., Mishra, S., and Singh, S. K. (2020). Deep Transfer Learning-Based Covid-19 Prediction Using Chest X-Rays. medRxiv. doi:10.1101/2020.05.12.20099937

Laradji, I., Rodriguez, P., Branchaud-Charron, F., Lensink, K., Atighehchian, P., Parker, W., et al. (2020). A Weakly Supervised Region-Based Active Learning Method for COVID-19 Segmentation in CT Images. arXiv:2007.07012 [Epub ahead of print].

Latif, S., Usman, M., Manzoor, S., Iqbal, W., Qadir, J., Tyson, G., et al. (2020). Leveraging Data Science to Combat COVID-19: A Comprehensive Review. IEEE Trans. Artif. Intelligence 1, 85–103. doi:10.1109/TAI.2020.3020521

Lee, E. H., Zheng, J., Colak, E., Mohammadzadeh, M., Houshmand, G., Bevins, N., et al. (2021). Deep COVID DeteCT: an International Experience on COVID-19 Lung Detection and Prognosis Using Chest CT. NPJ Digit Med. 4, 11. doi:10.1038/s41746-020-00369-1

Li, T., Han, Z., Wei, B., Zheng, Y., Hong, Y., and Cong, J. (2020). Robust Screening of COVID-19 from Chest X-ray via Discriminative Cost-Sensitive Learning. arXiv:2004.12592 [Epub ahead of print].

Li, Z., Zhao, W., Shi, F., Qi, L., Xie, X., Wei, Y., et al. (2021). A Novel Multiple Instance Learning Framework for COVID-19 Severity Assessment via Data Augmentation and Self-Supervised Learning. Med. Image Anal. 69. doi:10.1016/j.media.2021.101978

Liu, S., Georgescu, B., Xu, Z., Yoo, Y., Chabin, G., Chaganti, S., et al. (2020). 3D Tomographic Pattern Synthesis for Enhancing the Quantification of COVID-19. arXiv:2005.01903.

Long, C., Xu, H., Shen, Q., Zhang, X., Fan, B., Wang, C., et al. (2020). Diagnosis of the Coronavirus Disease (COVID-19): rRT-PCR or CT? Eur. J. Radiol. 126, 108961. doi:10.1016/j.ejrad.2020.108961

Majeed, T., Rashid, R., Ali, D., and Asaad, A. (2020). Problems of Deploying CNN Transfer Learning to Detect COVID-19 from Chest X-Rays. medRxiv [Epub ahead of print]. doi:10.1101/2020.05.12.20098954

Medhi, K., Jamil, M., and Hussain, I. (2020). Automatic Detection of COVID-19 Infection from Chest X-ray Using Deep Learning. medRxiv [Epub ahead of print]. doi:10.1101/2020.05.10.20097063

Meng, L., Dong, D., Li, L., Niu, M., Bai, Y., Wang, M., et al. (2020). A Deep Learning Prognosis Model Help Alert for COVID-19 Patients at High-Risk of Death: A Multi-center Study. IEEE J. Biomed. Health Inform. 24, 3576–3584. doi:10.1109/JBHI.2020.3034296

Minaee, S., Kafieh, R., Sonka, M., Yazdani, S., and Jamalipour Soufi, G. (2020). Deep-COVID: Predicting COVID-19 from Chest X-ray Images Using Deep Transfer Learning. Med. Image Anal. 65, 101794. doi:10.1016/j.media.2020.101794

Morozov, S., Andreychenko, A., Pavlov, N., Vladzymyrskyy, A., Ledikhova, N., Gombolevskiy, V., et al. (2020). MosMedData: Chest CT Scans with COVID-19 Related Findings Dataset. arXiv:2005.06465. doi:10.1101/2020.05.20.20100362

Moutounet-Cartan, P. (2020). Deep Convolutional Neural Networks to Diagnose COVID-19 and Other Pneumonia Diseases from Posteroanterior Chest X-Rays. arXiv:2005.00845 [Epub ahead of print].

Narayan Das, N., Kumar, N., Kaur, M., Kumar, V., and Singh, D. (2020). Automated Deep Transfer Learning-Based Approach for Detection of COVID-19 Infection in Chest X-Rays. IRBM: Biomed. Eng. Res. 10. doi:10.1016/j.irbm.2020.07.001

Ozcan, T. (2020). A Deep Learning Framework for Coronavirus Disease (COVID-19) Detection in X-ray Images. [Epub ahead of print]. doi:10.21203/rs.3.rs-26500/v1

Ozturk, T., Talo, M., Yildirim, E. A., Baloglu, U. B., Yildirim, O., and Rajendra Acharya, U. (2020). Automated Detection of COVID-19 Cases Using Deep Neural Networks with X-ray Images. Comput. Biol. Med. 121, 103792. doi:10.1016/j.compbiomed.2020.103792

Pham, Q., Nguyen, D., Huynh-The, T., Hwang, W.-J., and Pathirana, P. (2020). Artificial Intelligence (AI) and Big Data for Coronavirus (COVID-19) Pandemic: A Survey on the State-Of-The-Arts. IEEE Access 8. doi:10.1109/access.2020.3009328

Punn, N., and Agarwal, S. (2021). Automated Diagnosis of COVID-19 with Limited Posteroanterior Chest X-ray Images Using fine-tuned Deep Neural Networks. Appl. Intell. 51, 2689–2702. doi:10.1007/s10489-020-01900-3

Rahimzadeh, M., Attar, A., and Sakhaei, S. M. (2020). A Fully Automated Deep Learning-Based Network for Detecting COVID-19 from a New and Large Lung CT Scan Dataset. medRxiv. doi:10.1101/2020.06.08.20121541

Rajaraman, S., and Antani, S. (2020). Weakly Labeled Data Augmentation for Deep Learning: A Study on COVID-19 Detection in Chest X-Rays. Diagnostics 10, 358. doi:10.3390/diagnostics10060358

Roberts, M., Driggs, D., Thorpe, M., Gilbey, J., Yeung, M., et al. (2021). Common Pitfalls and Recommendations for Using Machine Learning to Detect and Prognosticate for COVID-19 Using Chest Radiographs and CT Scans. Nat. Mach. Intell. 3, 199–217. doi:10.1038/s42256-021-00307-0

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention. 234–241. doi:10.1007/978-3-319-24574-4_28

Salehi, S., Abedi, A., Balakrishnan, S., and Gholamrezanezhad, A. (2020). Coronavirus Disease 2019 (COVID-19): A Systematic Review of Imaging Findings in 919 Patients. Am. J. Roentgenology 215, 87–93. doi:10.2214/ajr.20.23034

Selvan, R., Dam, E., Detlefsen, N., Rischel, S., Sheng, K., Nielsen, M., et al. (2020). Lung Segmentation from Chest X-Rays Using Variational Data Imputation. ICML Workshop on the Art of Learning with Missing Values.

Shi, F., Wang, J., Shi, J., Wu, Z., Wang, Q., Tang, Z., et al. (2021). Review of Artificial Intelligence Techniques in Imaging Data Acquisition, Segmentation and Diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 14, 4–15. doi:10.1109/rbme.2020.2987975

Shibly, K., Dey, S., Islam, M., and Rahman, M. (2020). COVID Faster R-CNN: A Novel Framework to Diagnose Novel Coronavirus Disease (COVID-19) in X-ray Images. Inform. Med. Unlocked. 20. doi:10.1016/j.imu.2020.100405

Shiri, I., Sorouri, M., Geramifar, P., Nazari, M., Abdollahi, M., Salimi, Y., et al. (2021). Machine Learning-Based Prognostic Modeling Using Clinical Data and Quantitative Radiomic Features from Chest CT Images in COVID-19 Patients. Comput. Biol. Med. 132. doi:10.1016/j.compbiomed.2021.104304

Signoroni, A., Savardi, M., Benini, S., Adami, N., Leonardi, R., Gibellini, P., et al. (2020). End-to-end Learning for Semiquantitative Rating of COVID-19 Severity on Chest X-Rays. arXiv:2006.04603 [Epub ahead of print].

Soares, E., Angelov, P., Biaso, S., Froes, M. H., and Abe, D. K. (2020). SARS-CoV-2 CT-scan Dataset: A Large Dataset of Real Patients CT Scans for SARS-CoV-2 Identification. medRxiv. doi:10.1101/2020.04.24.20078584

Song, Y., Zheng, S., Li, L., Zhang, X., Zhang, X., Huang, Z., et al. (2021). Deep Learning Enables Accurate Diagnosis of Novel Coronavirus (COVID-19) with CT Images. IEEE/ACM Trans. Comput. Biol. Bioinform., 1. doi:10.1109/tcbb.2021.3065361

Tahamtan, A., and Ardebili, A. (2020). Real-time RT-PCR in COVID-19 Detection: Issues Affecting the Results. Expert Rev. Mol. Diagn. 20, 453–454. doi:10.1080/14737159.2020.1757437

Toğaçar, M., Ergen, B., and Cömert, Z. (2020). COVID-19 Detection Using Deep Learning Models to Exploit Social Mimic Optimization and Structured Chest X-ray Images Using Fuzzy Color and Stacking Approaches. Comput. Biol. Med. 121, 103805.

Ucar, F., and Korkmaz, D. (2020). COVIDiagnosis-Net: Deep Bayes-Squeezenet Based Diagnosis of the Coronavirus Disease 2019 (COVID-19) from X-ray Images. Med. Hypotheses 140, 109761. doi:10.1016/j.mehy.2020.109761

Vayá, M. I., Saborit, J., Montell, J., Pertusa, A., Bustos, A., Cazorla, M., et al. (2020). BIMCV COVID-19+: a Large Annotated Dataset of RX and CT Images from COVID-19 Patients. arXiv:2006.01174.

Vernuccio, F., Giambelluca, D., Cannella, R., Lombardo, F., Panzuto, F., Midiri, M., et al. (2020). Radiographic and Chest CT Imaging Presentation and Follow-Up of COVID-19 Pneumonia: a Multicenter Experience from an Endemic Area. Emerg. Radiol. 27, 623–632. doi:10.1007/s10140-020-01817-x

Waheed, A., Goyal, M., Gupta, D., Khanna, A., Al-Turjman, F., and Pinheiro, P. R. (2020). CovidGAN: Data Augmentation Using Auxiliary Classifier GAN for Improved COVID-19 Detection. IEEE Access 8, 91916–91923. doi:10.1109/ACCESS.2020.2994762

Wang, B., Jin, S., Yan, Q., Xu, H., Luo, C., Wei, L., et al. (2021a). AI-assisted CT Imaging Analysis for COVID-19 Screening: Building and Deploying a Medical AI System. Appl. Soft Comput. 98, 106897. doi:10.1016/j.asoc.2020.106897

Wang, G., Liu, X., Li, C., Xu, Z., Ruan, J., Zhu, H., et al. (2020). A Noise-Robust Framework for Automatic Segmentation of COVID-19 Pneumonia Lesions from CT Images. IEEE Trans. Med. Imaging 39, 2653–2663. doi:10.1109/tmi.2020.3000314

Wang, L., Lin, Z. Q., and Wong, A. (2020a). COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-ray Images. Sci. Rep. 10, 19549. doi:10.1038/s41598-020-76550-z

Wang, S., Kang, B., Ma, J., Zeng, X., Xiao, M., Guo, J., et al. (2021b). A Deep Learning Algorithm Using CT Images to Screen for Corona Virus Disease (COVID-19). Eur. Radiol. doi:10.1007/s00330-021-07715-1

Wang, S., Zha, Y., Li, W., Wu, Q., Li, X., Niu, M., et al. (2020b). A Fully Automatic Deep Learning System for COVID-19 Diagnostic and Prognostic Analysis. Eur. Respir. J. 56. doi:10.1183/13993003.00775-2020

Wong, H., Lam, H., Fong, A., Leung, S., Chin, T., Lo, C., et al. (2020). Frequency and Distribution of Chest Radiographic Findings in COVID-19 Positive Patients. Radiology 296, E72–E78. doi:10.1148/radiol.2020201160

Wu, Y., Gao, S., Mei, J., Xu, J., Fan, D., Zhang, R., et al. (2021). JCS: An Explainable COVID-19 Diagnosis System by Joint Classification and Segmentation. IEEE Trans. Image Process. 30, 3113–3126. doi:10.1109/tip.2021.3058783

Xiao, A., Tong, Y., and Zhang, S. (2020). Profile of RT-PCR for SARS-CoV-2: a Preliminary Study from 56 COVID-19 Patients. Clin. Infect. Dis. 71, 2249–2251. doi:10.1093/cid/ciaa460

Yamac, M., Ahishali, M., Degerli, A., Kiranyaz, S., Chowdhury, M., and Gabbouj, M. (2020). Convolutional Sparse Support Estimator Based COVID-19 Recognition from X-ray Images. arXiv:2005.04014.

Yan, Q., Wang, B., Gong, D., Luo, C., Zhao, W., Shen, J., et al. (2020). COVID-19 Chest CT Image Segmentation – a Deep Convolutional Neural Network Solution. arXiv:2004.10987.

Yang, X., He, X., Zhao, J., Zhang, Y., Zhang, S., and Xie, P. (2020). COVID-CT-Dataset: A CT Scan Dataset about COVID-19. arXiv:2003.13865.

Zabirul-Islam, M., Milon-Islam, M., and Asraf, A. (2020). A Combined Deep CNN-LSTM Network for the Detection of Novel Coronavirus (COVID-19) Using X-ray Images. Inform. Med. Unlocked 20, 100412. doi:10.1016/j.imu.2020.100412

Zargari Khuzani, A., Heidari, M., and Shariati, A. (2020). COVID-classifier: An Automated Machine Learning Model to Assist in the Diagnosis of COVID-19 Infection in Chest X-ray Images. medRxiv: doi:10.1101/2020.05.09.20096560

Zhang, J., Xie, Y., Liao, Z., Pang, G., Verjans, J., Li, W., et al. (2021). Viral Pneumonia Screening on Chest X-ray Images Using Confidence-Aware Anomaly Detection. IEEE Trans. Med. Imaging 40, 879–890. doi:10.1109/tmi.2020.3040950

Zhang, K., Liu, X., Shen, J., Li, Z., Sang, Y., Wang, X., et al. (2020). Clinically Applicable AI System for Accurate Diagnosis, Quantitative Measurements, and Prognosis of COVID-19 Pneumonia Using Computed Tomography. Cell 181, 1423–1433. doi:10.1016/j.cell.2020.04.045

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., and Torralba, A. (2016). Learning Deep Features for Discriminative Localization. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2921–2929.

Zhou, J., Jing, B., and Wang, Z. (2021). SODA: Detecting COVID-19 in Chest X-Rays with Semi-supervised Open Set Domain Adaptation. IEEE/ACM Trans. Comput. Biol. Bioinform.

Zhou, L., Li, Z., Zhou, J., Li, H., Chen, Y., Huang, Y., et al. (2020a). A Rapid, Accurate and Machine-Agnostic Segmentation and Quantification Method for CT-based COVID-19 Diagnosis. IEEE Trans. Med. Imaging 39, 2638–2652. doi:10.1109/tmi.2020.3001810

Keywords: chest imaging, image analysis, severity assessment, COVID-19, prognosis prediction, ROI segmentation, diagnostic model, machine learning

Citation: Deng H and Li X (2021) AI-Empowered Computational Examination of Chest Imaging for COVID-19 Treatment: A Review. Front. Artif. Intell. 4:612914. doi: 10.3389/frai.2021.612914

Received: 02 October 2020; Accepted: 23 June 2021;

Published: 21 July 2021.

Edited by:

Simon DiMaio, Intuitive Surgical, Inc., United States

Reviewed by:

Mehdi Moradi, IBM Research Almaden, United States

Copyright © 2021 Deng and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xingyu Li, xingyu.li@ualberta.ca

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
