- 1Department of Computer Science, University of British Columbia, Vancouver, BC, Canada

- 2Associate Academic Member and Canada CIFAR AI Chair, Mila Institute, Montreal, QC, Canada

- 3Department of Engineering Science, University of Oxford, Oxford, United Kingdom

- 4Epistemix Inc., Pittsburgh, PA, United States

In this work we demonstrate how to automate parts of the infectious disease-control policy-making process via performing inference in existing epidemiological models. The kind of inference tasks undertaken include computing the posterior distribution over controllable, via direct policy-making choices, simulation model parameters that give rise to acceptable disease progression outcomes. Among other things, we illustrate the use of a probabilistic programming language that automates inference in existing simulators. Neither the full capabilities of this tool for automating inference nor its utility for planning is widely disseminated at the current time. Timely gains in understanding about how such simulation-based models and inference automation tools applied in support of policy-making could lead to less economically damaging policy prescriptions, particularly during the current COVID-19 pandemic.

1 Introduction

Our goal in this paper is to demonstrate how the “planning as inference” methodology at the intersection of Bayesian statistics and optimal control can directly aid policy-makers in assessing policy options and achieving policy goals, when implemented using epidemiological simulators and suitable automated software tools for probabilistic inference. Such software tools can be used to quickly identify the range of values towards which controllable variables should be driven by means of policy interventions, social pressure, or public messaging, so as to limit the spread and impact of an infectious disease such as COVID-19.

In this work, we introduce and apply a simple form of planning as inference in epidemiological models to automatically identify policy decisions that achieve a desired, high-level outcome. As but one example, if our policy aim is to contain infectious population totals below some threshold at all times in the foreseeable future, we can condition on this putative future holding and examine the allowable values of controllable behavioural variables at the agent or population level, which in the framework of planning as inference is formalized in terms of a posterior distribution. As we already know, to control the spread of COVID-19 and its impact on society, policies must be enacted that reduce disease transmission probability or lower the frequency and size of social interactions. This is because we might like to, for instance, not have the number of infected persons requiring hospitalization exceed the number of available hospital beds.

Throughout this work, we take a Bayesian approach, or at the very least, a probabilistic consideration of the task. Especially in the early stages of a new outbreak, the infectious dynamics are not known precisely. Furthermore, the spread of the disease cannot be treated deterministically, as a result of either fundamental variability in the social dynamics that drive infections, or of uncertainty over the current infection levels, or of uncertainty over appropriate models for analyzing the dynamics. Therefore, developing methods capable of handling such uncertainty correctly will allow for courses of action to be evaluated and compared more effectively, and could lead to “better” policies: a policy that surpasses the desired objective with 55% probability, but fails with 45% probability, may be considered “worse” than a policy that simply meets the objective with 90% probability. Bayesian analysis also offers a form of probabilistic inference in which the contribution of individual variables to the overall uncertainty can be identified and quantified. Beyond simply obtaining “the most effective policy measure,” this may be of interest to analysts trying to further understand why certain measures are more effective than others.

We first show how the problem of policy planning can be formulated as a Bayesian inference task in epidemiological models. This framing is general and extensible. We then demonstrate how particular existing software tools can be employed to perform this inference task in preexisting stochastic epidemiological models, without modifying the model itself or placing restrictions on the models that can be analyzed. This approach is particularly appealing, as it decouples the specification of epidemiological models by domain experts from the computational task of performing inference. This shift allows for more expressive and interpretable models to be expediently analyzed, and the sophistication of inference algorithms to be adjusted flexibly.

As a result, the techniques and tools we review in this paper are applicable to simulators ranging from simple compartmental models to highly expressive agent-based population dynamics models. In the former, the controls available to policy-makers are blunt–e.g., “reduce social interactions by some fractional amount”–but how best to achieve this is left as an exercise for policy-makers. In the latter, variables like “probability of individuals adhering to self-isolation” and “how long should schools be closed if at all” can be considered and evaluated in combination and comparison to others as potential fine-grained controls that could achieve the same policy objective more efficiently.

When governments impose any such controls, both citizens and financial markets want to know how draconian these measures must be and for how long they have to be in effect. Policy analysis based on models that reflect variability in resources such as healthcare facilities in different jurisdictions could hopefully make the answers to these questions more precise and the controls imposed more optimal in the utility maximizing sense. The same holds for the difference between models that can or cannot reflect variations in population mixing between rural and urban geographic areas. A person living in a farming county in central Illinois might reasonably wonder if it is as necessary to shelter in place there as it is in Chicago.

Current approaches to model-based policy-making are likely to be blunt. Simple models, e.g., compartmental models with few compartments, are rapid to fit to new diseases and easy to compute, but are incapable of evaluating policy options that are more fine-grained than the binning used, such as regionalized measures. The net effect of being able to only consider blunt controls arguably has contributed to a collective dragging of feet, even in the face of the current COVID-19 pandemic. This delayed reaction, combined with brute application of control, has led to devastating socioeconomic impact, with many sectors such as education, investment markets and small-medium enterprises being directly impacted.

We can and should be able to do better. We believe, and hope to demonstrate, that models and software automation focused on planning as inference for policy analysis and recommendation is one path forward that can help us better react to this and future pandemics, and improve our public health preparedness.

We upfront note that the specific models that we use in this paper are far from perfect. First, the pre-existing models we use to demonstrate our points in this paper are only crudely calibrated to present-day population dynamics and specific COVID-19 characteristics. We have made some efforts on the latter point, in particular sourcing a COVID-19 adapted compartmental model (Ogilvy Kermack and McKendrick, 1927; Blackwood and Childs, 2018b; Hill et al., 2020) and parameters from (Bhalchandra and SnehalShekatkar, 2020; Ferguson et al., 2020; Magdon-Ismail, 2020; Massonnaud et al., 2020; Peng et al., 2020; Riou and Althaus, 2020; Rovetta et al., 2020; Russo et al., 2020; Traini et al., 2020; Wen et al., 2020), but we stress this limitation. In addition, in simple cases, the type of problems for which we discuss solutions in this paper may be solved with more straightforward implementations involving parameter sweeps and “manual” checking for desired policy effects, albeit at potentially higher human cost. In this sense, our goal is not to claim fundamental novelty, uniqueness or superiority of any particular inference technique, but rather to raise awareness for the practical feasibility of the Bayesian formulation of planning as inference, which offers a higher level of flexibility and automation than appears to be understood widely in the policy-making arena.

Note also that current automated inference techniques for stochastic simulation-based models (Toni et al., 2009; Kypraios et al., 2017; McKinley et al., 2018; Chatzilena et al., 2019; Minter and Retkute, 2019) are computationally demanding and are by their very nature approximate. The academic topic at the core of this paper and the subject of a significant fraction of our academic work (Paige et al., 2014; Wood et al., 2014; Tolpin et al., 2015; van de Meent et al., 2015; Paige and Wood, 2016; Rainforth et al., 2016; Rainforth et al., 2018; Naderiparizi et al., 2019; Zhou et al., 2019; Warrington et al., 2020) deals with this challenge. Furthermore, the basic structure of simulators currently available may lack important policy-influenceable interaction parameters that one might like to examine. If viewed solely in light of the provided examples, our contribution could reasonably be seen both as highlighting the utility of inference for planning in this application setting, and as automating the manual selection of promising policy parameters. The tools we showcase are capable of significantly more; however, for expediency and clarity, we have focused on control as inference, an application that has seen relatively little specific coverage in the literature, and the simplest possible inference methods which do not require familiarity with the technical literature on approximate Bayesian inference. We leave other straightforward applications of automated inference tools in this application area, like parameter inference from observed outbreak data (Toni et al., 2009; Kypraios et al., 2017; McKinley et al., 2018; Chatzilena et al., 2019; Minter and Retkute, 2019), to others.

That being said, our hope is to inform field epidemiologists and policy-makers about an existing technology that could, right now, be used to support public policy planning towards more precise, potentially tailored interventions that ensure safety while also potentially leading to fewer economic ramifications. Fully probabilistic methods are apparently only relatively recently being embraced by the epidemiology community (Lessler et al., 2016; Funk and King, 2020), while the communities for approximate Bayesian inference and simulation-based inference have remained mostly focused on the tasks of parameter estimation and forecasting (Toni et al., 2009; Kypraios et al., 2017; McKinley et al., 2018), rather than control as inference. Beyond this demonstration, we hope to encourage timely and significant developments on the modeling side, and, if requested, to actually aid in the fight against COVID-19 by helping arm policy-makers with a new kind of tool and training them how to use it rapidly. Finally, we hope to engage the machine learning community in the fight against COVID-19 by helping translate between the specific epidemiological control problem and the more general control problem formulations on which we work regularly.

2 Assumptions and Findings

We start with the assumption that the effectiveness of policy-making can be significantly improved by consulting the outputs of model-based tools which provide quantitative metrics for the ability of particular policy actions to achieve specific formalized goals. In particular, we imagine the following scenario. There exists some current population, and the health status of its constituents is only partially known. There exists a disease whose transmission properties may be only partially known, but whose properties cannot themselves be readily controlled. There exists a population dynamic that can be controlled in some limited ways at the aggregate level. There exists a “policy goal” or target which we will refer to as the allowable, allowed, or goal set of system trajectories. An example of this could be “the total number of infectious persons should not ever exceed some percentage of the population” or “the first date at which the total number of infectious persons exceeds some threshold is at least some number of days away.” We finally assume an implied set of allowable policy prescriptions, defined in the sense that population dynamics behaving according to such policies will be exactly the ones to attain the goal with high probability. In general, this set of allowable policies is intractable to compute exactly, motivating the use of automated tools implementing well established approximate Bayesian inference methods. We explicitly do not claim “completeness” of the stochastic dynamic models in any realistic sense, disregarding complications such as potential agent behaviour in strategic response to regionalized policies, and do not attempt to quantify all costs and benefits of the considered policies, for example economic or cultural impacts. Rather, formulating the problem described above in terms of Bayesian inference results in a posterior distribution over policies which have been conditioned on satisfying the formalized policy desiderata within the formalized dynamical model. Effectively, this is to be understood as “scrutinizing,” “weighting,” “prioritizing,” or “focusing” potential policy actions for further consideration, rather than as an “optimal” prescription. When selecting a policy based on the posterior distribution, policy-makers are expected to account for additional, more complex socio-economic phenomena, costs and benefits using their own judgment.

The only things that may safely be taken away from this paper are the following:

• Existing compartmental models and agent-based simulators can be used as an aid for policy assessment via a Bayesian planning as inference formulation.

• Existing automated inference tools can be used to perform the required inferential computation.

• Opportunities exist for various fields to come together to improve both understanding of and availability of these techniques and tools.

• Further research and development into modeling and inference is recommended to be immediately undertaken to explore the possibility of more efficient, less economically devastating control of the COVID-19 pandemic.

What should not be taken away from this paper are any other conclusions, including in particular the following:

• Any conclusion or statements that there might exist less aggressive measures that could still be effective in controlling COVID-19.

• Any substantial novelty, uniqueness or performance claims about the particular numerical methods and software implementations for Bayesian inference which were used for the purpose of demonstrating the findings above. In particular, on the one hand, similar results could have been obtained using other software implementation strategies in principle, and on the other hand, more advanced inference methods could have been applied using the same software tools at the expense of rendering the conceptual exposition less accessible to audiences outside of the Bayesian inference community.

As scientists attempting to contribute “across the aisle,” we use more qualifying statements than usual throughout this work in an attempt to reduce the risk of misunderstandings and sensationalism.

3 Approach

In this section we formalize the policy-making task in terms of computing conditional probabilities in a probabilistic model of the disease spread. While the technical description can get involved at times, we emphasize that in practice the probabilistic model is already defined by an existing epidemiological simulator and the probabilistic programming tools we describe in this paper provide the ability to compute the required conditional probabilities automatically, including automatically introducing required approximations, so the users only need to focus on identifying which variables to condition on, and feeding real-world data to the system. Readers familiar with framing planning as inference may wish to skip directly to Section 4.

Being able to perform probabilistic inference is crucial for taking full advantage of available simulators in order to design good policies. This is because in the real world, many of the variables crucially impacting the dynamics of simulations are not directly observable, such as the number of infectious but asymptomatic carriers or the actual number of contacts people make in different regions each day. These variables are called latent, as opposed to observable variables such as the number of deaths or the number of passengers boarding a plane each day, which can often be directly measured with high accuracy. It is often absolutely crucial to perform inference over some latent variables to achieve reliable forecasts. For example, the future course of an epidemic like COVID-19 is driven by the number of people currently infected, rather than the number of people currently hospitalized, while in many countries in the world currently only the latter is known.

While performing inference over latent variables is very broadly applicable, the scenario described above being but one example, in this paper we primarily address the problem of choosing good policies to reduce the impact of an epidemic which can also be formulated as an inference problem. This choice of problem was driven by the hypothesis that the search for effective controls may not in fact be particularly well-served by automation at the current time. In the epidemiological context, the questions we are trying to answer are ones like “when and for how long do we need to close schools to ensure that we have enough ventilators for everyone who needs them?” While obviously this is overly simplistic and many different policy decisions need to be enacted in tandem to achieve good outcomes, we use this example to illustrate tools and techniques that can be applied to problems of realistic complexity.

Our approach is not novel, it has been studied extensively under the name “control via planning as inference” and is now well understood (Todorov, 2008; Toussaint, 2009; Kappen et al., 2012; Levine, 2018). What is more, the actual computations that result from following the recipes for planning as inference can be, in some cases readily, manually replicated. Again, our aim here is to inform or remind a critically important set of policy-makers and modellers that these methodologies are extremely relevant to the current crisis. Moreover, at least partial automation of model-informed policy-guidance is achievable using existing tools, and, may even lead to sufficient computational savings to make their use in current policy-making practical. Again, our broader hope here is to encourage rapid collaborations leading to more targeted and less-economically-devastating policy recommendations.

3.1 An Abstract Epidemiological Dynamics Model

In this work we will look at both compartmental and agent-based models. An overview of these specific types of models appears later. For the purposes of understanding our approach to planning as inference, it is helpful to describe the planning as inference problem in a formalism that can express both types of models. The approach of conducting control via planning as inference follows a general recipe:

1) Define the latent and control parameters of the model and place a prior distribution over them.

2) Either or both define a likelihood for the observed disease dynamics data and design constraints that define acceptable disease progression outcomes.

3) Do inference to generate a posterior distribution on control values that conditions both on the observed data and the defined constraints.

4) Make a policy recommendation by picking from the posterior distribution consisting of effective control values according to some utility maximizing objective.

We focus on steps 1–3 of this recipe, and in particular do not explore simultaneous conditioning. We ignore the observed disease dynamics data and focus entirely on inference with future constraints. We explain the rationale behind these choices near the end of the paper.

Very generally, an epidemiological model consists of a set of global parameters and time dependent variables. Global parameters are (θ, η), where θ denotes parameters that can be controlled by policy directives (e.g. close schools for some period of time or decrease the general level of social interactions by some amount), and η denotes parameters which cannot be affected by such measures (e.g. the incubation period or fatality rate of the disease).

The time dependent variables are (Xt, Yt, Zt) and jointly they constitute the full state of the simulator. Xt are the latent variables we are doing inference over (e.g. the total number of infected people or the spatio-temporal locations of outbreaks), Yt are the observed variables whose values we obtain by measurements in the real world (e.g. the total number of deaths or diagnosed cases), and Zt are all the other latent variables whose values we are not interested in knowing (e.g. the number of contacts between people or hygiene actions of individuals). For simplicity, we assume that all variables are either observed at all times or never, but this can be relaxed.

The time t can be either discrete or continuous. In the discrete case, we assume the following factorization

Note that we do not assume access to any particular factorization between observed and latent variables. We assume that a priori the controllable parameters θ are independent of non-controllable parameters η to avoid situations where milder control measures are associated with better outcomes because they tend to be deployed when the circumstances are less severe, which would lead to erroneous conclusions when conditioning on good outcomes in Section 3.3.

3.2 Inference

The classical inference task (Toni et al., 2009; Kypraios et al., 2017; McKinley et al., 2018; Chatzilena et al., 2019; Minter and Retkute, 2019) is to compute the following conditional probability

In the example given earlier Xt would be the number of infected people at time t and Yt would be the number of hospitalized people at time t. If the non-controllable parameters η are known they can be plugged into the simulator, otherwise we can also perform inference over them, like in the equation above. This procedure automatically takes into account prior information, in the form of a model, and available data, in the form of observations. It produces estimates with appropriate amount of uncertainty depending on how much confidence can be obtained from the information available.

The difficulty lies in computing this conditional probability, since the simulator does not provide a mechanism to sample from it directly and for all but the simplest models the integral cannot be computed analytically. The main purpose of probabilistic programming tools is to provide a mechanism to perform the necessary computation automatically, freeing the user from having to come up with and implement a suitable algorithm. In this case, approximate Bayasian computation (ABC) would be a suitable tool. We describe it below, emphasizing again that its implementations are already provided by existing tools (Toni et al., 2009; Kypraios et al., 2017; McKinley et al., 2018; Chatzilena et al., 2019; Minter and Retkute, 2019).

The main problem in this model is that we do not have access to the likelihood p(Yt ∣ Xt, θ, η) so we cannot apply the standard importance sampling methods. To use ABC, we extend the model with auxiliary variables

which we can solve by importance sampling from the prior. Algorithmically, this means independently sampling a large number N of trajectories from the simulator

computing their importance weights

and approximating the posterior distribution

where δ is the Dirac delta putting all the probability mass on the point in its subscript. In more intuitive terms, we are approximating the posterior distribution with a collection of weighted samples where weights indicate their relative probabilities.

3.3 Control as Inference: Finding Actions That Achieve Desired Outcomes

In traditional inference tasks we condition on data observed in the real world. In order to do control as inference, we instead condition on what we want to observe in the real world, which tells us which actions are likely to lead to such observations. This is accomplished by introducing auxiliary variables that indicate how desirable a future state is or is not. In order to keep things simple, here we restrict ourselves to the binary case where Yt ∈ {0, 1}, where 1 means that the situation at time t is acceptable and 0 means it is not. This indicates which outcomes are acceptable, allowing us to compute a distribution over those policies, while leaving the choice of which specific policy is likely to produce an acceptable outcome to policy-maker(s). For example, Yt can be 1 when the number of patients needing hospitalization at a given time t is smaller than the number of hospital beds available and 0 otherwise.

To find a policy θ that is likely to lead to acceptable outcomes, we need to compute the posterior distribution

Once again, probabilistic programming tools provide the functionality to compute this posterior automatically. In this case, rejection sampling would be a simple and appropriate inference algorithm. The rejection sampling algorithm repeatedly samples values of θ from the prior p(θ), runs the full simulator using θ, and keeps the sampled θ only if all Yt are 1. The collection of accepted samples approximates the desired posterior. We use rejection sampling in our agent-based modeling experiments, but emphasize that other, more complex and potentially more computationally efficient, approaches to computing this posterior exist.

This tells us which policies are most likely to lead to a desired outcome but not how likely a given policy is to lead to that outcome. To do that, we can evaluate the conditional probability p(∀t>0 : Yt = 1 ∣ θ), which is known as the model evidence, for a particular θ. A more sophisticated approach would be to condition on the policy leading to a desired outcome with a given probability p0, that is

For example, we could set p0 = 0.95 to find a policy that ensures availability of hospital beds for everyone who needs one with at least 95% probability. The conditional probability in Eq. 8 is more difficult to compute than the one in Eq. 7. It can be approximated by methods such as nested Monte Carlo (NMC) (Rainforth et al., 2018), which are natively available in advanced probabilistic programming systems such as Anglican (Tolpin et al., 2016) and WebPPL (Goodman and Stuhlmüller, 2014a) but in specific cases can also be implemented on top of other systems, such as PyProb (Le et al., 2017), with relatively little effort, although using NMC usually has enormous computational cost.

To perform rejection sampling with nested Monte Carlo, we first draw a sample θi ∼ p(θ), then draw N samples of

However we compute the posterior distribution, it contains multiple values of θ that represent different policies that, if implemented, can achieve the desired result. In this setup it is up to the policy-maker(s) to choose a policy θ* that has support under the posterior, i.e., yields the desired outcomes, taking into account some notion of utility.

Crucially, despite their relative simplicity, the rejection sampling algorithms we have discussed evaluate randomly sampled values of θ. This is a fundamental difference from the commonly used grid search over a deterministic array of parameter values. In practical terms, this is important because “well distributed” random samples are sufficient for experts to gauge the quantities of interest, and avoid grid searches that would be prohibitively expensive for θ with more than a few dimensions.

3.4 Stochastic Model Predictive Control: Reacting to What’s Happened

During an outbreak governments continuously monitor and assess the situation, adjusting their policies based on newly available data. A convenient theoretical and general framework to formalize this is that of model predictive control (Camacho and Alba, 2013). In this case, Yt consists of variables

Then at time t = 1 they will have gained additional information

Generally, at time t we compute the posterior distribution conditioned on the current state and on achieving desirable outcomes in the future

Policy-maker(s) then can use this distribution to choose the policy

Eq. 11 can be computed using methods described in Section 3.2, while Eq. 10 can be computed using methods described in Section 3.3. As such, the policy enacted evolves over time, as a result of re-solving for the optimal control based on new information.

We note that what we introduce here as MPC may appear to be slightly different from what is commonly referred to as model predictive control (García et al., 1989). Firstly, instead of solving a finite-dimensional optimization problem over controls at each step, we perform a Bayesian update step and sample from the resulting posterior distribution over controls. Secondly, in more traditional applications of MPC a receding horizon (Jacob et al., 2011) is considered, where a finite and fixed-length window is considered. The controls required to satisfy the constraint over that horizon are then solved for and applied–without consideration of timesteps beyond this fixed window. At the next time step, the controls are then re-solved for. In this work, we rather consider a constant policy for the remaining T − t time steps, as opposed to allowing a variable policy (discussed below) over a fixed window. We note that time-varying controls are fully permissible under the framework we present, as we demonstrate in Section 3.5. Furthermore, we could easily consider a fixed horizon, just by changing the definition of

Before we proceed, it is critical to note that the posterior

3.5 Time-Varying Control: Long Term Planning

It is also possible to explicitly model changing policy decisions over time, which enables more detailed planning, such as when to enact certain preventive measures such as closing schools. Notationally, this means instead of a single θ there is a separate θt for each time t. We can then find a good sequence of policy decisions by performing inference just like in Section 3.3 by conditioning on achieving the desired outcome

The inference problem is now more challenging, since the number of possible control sequences grows exponentially with the time horizon. Still, the posterior can be efficiently approximated with methods such as Sequential Monte Carlo.

It is straightforward to combine this extension with model predictive control from Section 3.4. The only required modification is that in Eq. 10 we need to compute the posterior over all future policies,

At each time t the policy-maker(s) only choose the current policy

In models with per-timestep control variables θt, it is very important that in the model (but not in the real world) the enacted policies must not depend on anything else. If the model includes feedback loops for changing policies based on the evolution of the outbreak, it introduces positive correlations between lax policies and low infection rates (or other measures of severity of the epidemic), which in turn means that conditioning on low infection rates is more likely to produce lax policies. This is a known phenomenon of reversing causality when correlated observational data is used to learn how to perform interventions (Pearl, 2000).

We note another potential pitfall that may be exacerbated by time-varying control: if the model used does not accurately reflect reality, any form of model-based control is likely to lead to poor results. To some extent, this can be accounted for with appropriate distributions reflecting uncertainty in parameter values. However, given the difficulty of modeling human behavior, and especially of modeling people’s reactions to novel policies, there is likely to be some mismatch between modeled and real behavior. To give an example of a possible flaw in an agent-based model: if a proposed fine-grained policy closed one park while keeping open a second, nearby, park, the model may not account for the likely increase in visitors to the second park. The larger and more fine-grained (in terms of time or location) the space of considered policies is, the more such deficiencies may exist. We therefore recommend that practitioners restrict the space of considered policies to those which are likely to be reasonably well modeled.

3.6 Automation

We have intentionally not really explained how one might actually computationally characterize any of the conditional distributions defined in the preceding section. For the compartmental models that follow, we provide code that directly implements the necessary computations. Alternatively we could have used the automated inference facilities provided by any number of packages or probabilistic programming systems. Performing inference as described in existing, complex simulators is much more complex and not nearly as easy to implement from scratch. However, it can now be automated using the tools of probabilistic programming.

3.6.1 Probabilistic Programming

Probabilistic programming (van de Meent et al., 2018) is a growing subfield of machine learning that aims to build an analogous set of tools for automating inference as automatic differentiation did for continuous optimization. Like the gradient operator of languages that support automatic differentiation, probabilistic programming languages introduce observe operators that denote conditioning in the probabilistic or Bayesian sense. In those few languages that natively support nested Monte Carlo (Goodman and Stuhlmüller, 2014b; Tolpin et al., 2016), language constructs for defining conditional probability objects are introduced as well. Probabilistic programming languages (PPLs) have semantics (Staton et al., 2016) that can be understood in terms of Bayesian inference (Bishop, 2006; Gelman et al., 2013; Ghahramani, 2015). The major challenge in designing useful PPL systems is the development of general-purpose inference algorithms that work for a variety of user-specified programs. The work in the paper uses only the very simplest, and often least efficient, general purpose inference algorithms, importance sampling and rejection sampling. Others are covered in detail in (van de Meent et al., 2018).

Of all the various probabilistic programming systems, only one is readily compatible with inference in existing stochastic simulators: PyProb (Le et al., 2019). Quoting from its website1 “PyProb is a PyTorch-based library for probabilistic programming and inference compilation. The main focus of PyProb is on coupling existing simulation codebases with probabilistic inference with minimal intervention.” A textbook, technical description of how PyProb works appears in (van de Meent et al., 2018, Chapt. 6).

Recent examples of its use include inference in the standard model of particle physics conditioning on observed detector outputs (Baydin et al., 2018; Baydin et al., 2019), inference about internal composite material cure processes through a composite material cure simulator conditioned on observed surface temperatures (Munk et al., 2020), and inference about malaria spread in a malaria simulator (Gram-Hansen et al., 2019).

3.6.2 Alternative Approaches

It is important to note upfront that our ultimate objective is to understand the dependence of the policy outcome on the controllable parameters (for instance, as defined by Eqs 7, 8). There are a litany of methods to quantify this dependency. Each method imposes different constraints on the model family that can be analyzed, and the nature of the solution obtained. For instance, the fully Bayesian approach we take aims to quantify the entire distribution, whereas traditional optimal control methods may only seek a pointwise maximizer of this probability. Therefore, before we introduce the models we analyze and examples we use to explore the proposed formulation, we give a brief survey of alternative approaches one could use to solve this problem, and give the benefits and drawbacks of our proposed formulation compared to these approaches.

The traditional toolkits used to analyze problems of this nature are optimal control (Vinter and Vinter, 2010; Lewis et al., 2012; Sharomi and Malik, 2017; Bertsekas, 2019) and robust control (Zhou and Doyle, 1998; Hansen and Sargent, 2001; Green and Limebeer, 2012). Most generally, optimal control solves for the control inputs (here denoted θ) such that some outcome is achieved (here denoted

Many traditional control approaches can exploit a mathematical model of the system to solve for controls that consider multiple timesteps, referred to as model predictive control (MPC) (Morari and Lee, 1999; Rawlings, 2000; Camacho and Alba, 2013). Alternatively, control methods can be model-free, where there is no notion of the temporal dynamics of the system being controlled, such as the canonical PID control. Model-based methods often solve for more effective control measures with lower overall computational costs, by exploiting the information encoded in the model. However, model predictive control methods are only applicable when an (accurate) model is available.

One could re-frame the objective as trying to maximize the reward of some applied controls. Here, reward may be a 0–1 indicator corresponding to whether or not the hospital capacity was exceeded at any point in time. The reward may also be more sophisticated, such as by reflecting the total number of people requiring hospital treatment, weighted by how critical each patient was. Reward may also internalize some notion of the cost of a particular control. Shutting workplaces may prevent the spread of infection, but has economic and social implications that (at least partially) counteract the benefit of reducing the infection rate. This can be implemented as earning progressively more negative reward for stronger policies.

This type of analysis is formalized through reinforcement learning (RL) (Sutton, 1992; Kaelbling et al., 1996; Sutton and Barto, 2018; Bertsekas, 2019; Warrington et al., 2021). In RL, a policy, here θ, is solved for that maximizes the expected reward. RL is an incredibly powerful toolkit that can learn highly expressive policies that vary as a function of time, state, different objectives etc. RL methods can also be described as model-based (Moerland et al., 2021) and model-free (Bertsekas, 2019) analogously to traditional control methods. While we do not delve into RL in detail here, it is important to highlight as an alternative approach, and we refer the reader to Levine (2018) for a more detailed introduction of RL, to Bertsekas (2019) for a discussion of the relationship between RL and control methods, and to Levine (2018) for a discussion of the relationship between RL and planning-as-inference.

However, a critical drawback of both control and RL is the strong dependence on the loss functions or specific models considered, both in terms of convergence and the optimal solution recovered (Adam and DeJong, 2003). This dependence may therefore mean that the solution recovered is not representative of the “true” optimal solution. Furthermore, RL approaches can be incredibly expensive to train, especially in high-dimensional time series models, with a large array of controls and sparse rewards. More traditional control-based methods can be limited in the models that they can analyze, both in terms of the transition distribution and the range of controls that can be considered. Finally, both RL and control-based approaches offer limited insight into why certain controls were proposed. This can limit the ability of researchers and modellers to expediently investigate the emergent properties of complex, simulation-based models. Furthermore, this lack of transparency may be disadvantageous for policy-makers, who may be required to justify why certain policy decisions were made, and the confidence with which that policy decision was believed to be optimal.

All of these methods, at least in terms of their core intentions, are very similar. Different methods impose different restrictions on the models that can be analyzed, and the nature of the solution obtained. In this work, we take the approach of framing control and planning as fully Bayesian inference with a binary objective. We choose this formulation as it places very few restrictions on the model class that can be analyzed, allows uncertainty to be succinctly handled throughout the inference, a broad range of objectives to be defined under the same framework, and correctly calibrated posterior distributions over all random variables in the model to be recovered. Furthermore, this formulation allows us to access the ever-growing array of powerful inference algorithms to perform the inference. While naive approaches such as grid-search or random sampling may be performant in low-dimensional applications, they do not scale to high dimensions through the curse of dimensionality (Köppen, 2000; Owen, 2013). Therefore, we focus on methods that can scale to high-dimensional applications (Owen, 2013). Finally, Bayesian inference methods are, arguably, the most complete formulation. Once the full posterior or joint distribution have been recovered, many different methodologies, constraints, objectives etc. can be formulated and evaluated post facto. This flexibility allows for fine-grained analysis to be conducted on the inferred distributions to provide analysts with a powerful and general tool to further analyze and understand the model. Less myopically, this analysis and the understanding garnered may be more beneficial to our broader understanding of outbreaks and the feasibility and efficacy of particular responses.

Finally, we note that while throughout the experiments presented in Section 5.2 we use the PyProb framework, PyProb is not the only framework available. Any inference methodology could be used to compute the probability in Eq. 7. However, PyProb is a natural choice as it allows for greater flexibility in interfacing between black-box simulator code, and powerful and efficiently implemented inference algorithms, without modifying either simulator or inference code. This reduces the implementation overhead to the analyst, and reduces the scope for implementation bugs and oversights to be introduced.

4 Models

Epidemiological dynamics models can be used to describe the spread of a disease such as COVID-19 in society. Different types span vastly different levels of fidelity. There are classical compartmental models (SIR, SEIR, etc.) (Blackwood and Childs, 2018a) that describe the bulk progression of diseases of different fundamental types. These models break the population down to a series of compartments (e.g. susceptible (S), infectious (I), exposed (E), and recovered (R)), and treat them as continuous quantities that vary smoothly and deterministically over time following dynamics defined by a particular system of ordinary differential equations. These models are amenable to theoretical analyses and are computationally efficient to forward simulate owing to their low dimensionality. As policy-making tools, they are rather blunt unless the number of compartments is made large enough to reflect different demographic information (age, socio-economic info, etc.), spatial strata, or combinations thereof.

At the other end of the spectrum are agent-based models (Hunter et al., 2017; Badham et al., 2018; Hunter et al., 2018; Tracy et al., 2018) (like the University of Pittsburgh Public Health Dynamics Laboratory’s FRED (Grefenstette et al., 2013) or the Institute for Disease Modeling’s EMOD (Anna et al., 2018)) that model populations and epidemiological dynamics via discrete agent interactions in “realistic” space and time. Imagine a simulation environment like the game Sim-CityTM, where the towns, populations, infrastructure (roads, airports, trains, etc.), and interactions (go to work, school, church, etc.) are modeled at a relatively high level of fidelity. These models exist only in the form of stochastic simulators, i.e., software programs that can be initialized with disease and population characteristics, then run forward to show in a more fine-grained way the spread of the disease over time and space.

Both types of models are useful for policy-making. Compartmental models are usually more blunt unless the number of compartments is very high and it is indexed by spatial location, demographics and age categories. Increasing the number of compartments adds more unknown parameters which must be estimated or marginalized. Agent-based models are complex by nature, but they may be more statistically efficient to estimate, as they are parameterized more efficiently, often directly in terms of actual individual and group behaviour choices. In many cases, predictions made by such models are more high fidelity, certainly more than compartmental models with few compartments, and this has implications for their use as predictive tools for policy analysis. For instance, policies based on simulating a single county in North Dakota with excellent hospital coverage and a highly dispersed, self-sufficient population could lead to different intervention recommendations compared to a compartmental model of the whole of the United States with only a few compartments.

4.1 A Compartmental Model of COVID-19

We begin by introducing a low-dimensional compartmental model to explore our methods in a well-known model family, before transitioning to a more complex agent-based simulator. The model we use is an example of a classical SEIR model (Ogilvy Kermack and McKendrick, 1927; C Blackwood and Childs, 2018; Hill et al., 2020). In such models, the population is subdivided into a set of compartments, representing the susceptible (uninfected), exposed (infected but not yet infectious), infectious (able to infect/expose others) and recovered (unable to be infected). Within each compartment, all individuals are treated identically, and the full state of the simulator is simply the size of the population of each compartment. Our survey of the literature found a lack of consensus about the compartmental model and parameters which most faithfully simulate the COVID-19 scenario. Models used range from standard SEIR (Liu et al., 2020a; Massonnaud et al., 2020; Rovetta et al., 2020; Traini et al., 2020), SIR (Jia et al., 2020; Pujari and Shekatkar, 2020; Teles, 2020; Traini et al., 2020; Weber et al., 2020), SIRD (Anastassopoulou et al., 2020; Liu et al., 2020b; Caccavo, 2020), QSEIR (Liu et al., 2020c), and SEAIHRD (Arenas et al., 2020). The choice depends on many factors, such as how early or late in the stages of an epidemic one is, what type of measures are being simulated, and the availability of real word data. We opted for the model described in this section, which seems to acceptably represent the manifestation of the disease in populations. Existing work has investigated parameter estimation in stochastic SEIR models (Lekone and Finkenstädt, 2006; Roberts et al., 2015). Although we will discuss how we set the model parameters, we emphasize that our contribution is instead in demonstrating how a calibrated model could be used for planning.

4.1.1 Model Description

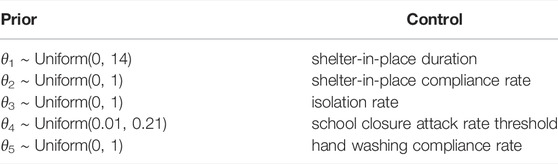

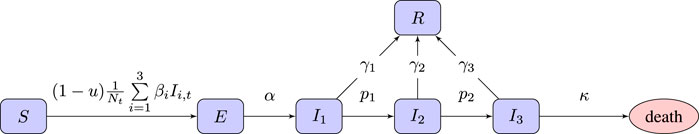

We use an SEI3R model (Hill et al., 2020), a variation on the standard SEIR model which allows additional modeling freedom. It uses six compartments: susceptible (S), exposed (E), infectious with mild (I1), severe (I2) or critical infection (I3), and recovered (R). We do not include baseline birth and death rates in the model, although there is a death rate for people in the critically infected compartment. The state of the simulator at time t ∈ [0, T] is Xt = {St, Et, I1,t, I2,t, I3,t, Rt} with St, Et, I1,t, I2,t, I3,t, and Rt indicating the population sizes (or proportions) at time t. The unknown model parameters are η = {α, β1, β2, β3, p1, p2, γ1, γ2, γ3, κ}, each with their own associated prior. To the model we add a free control parameter, denoted

FIGURE 1. Flow chart of the SEI3R model we employ. A member of the susceptible population S moves to exposed E after being exposed to an infectious person, where “exposure” is defined as the previous susceptible person contracting the illness. After some incubation period, a random duration parameterized by α, they develop a mild infection (I1). They may then either recover, moving to R, or progress to a severe infection (I2). From I2, they again may recover, or else progress further to a critical infection (I3). From I3, the critically infected person will either recover or die.

For the purposes of simulations with this model, we initialize the state with 0.01% of the population having been exposed to the infection, and the remaining 99.99% of the population being susceptible. The population classified as infectious and recovered are zero, i.e.,

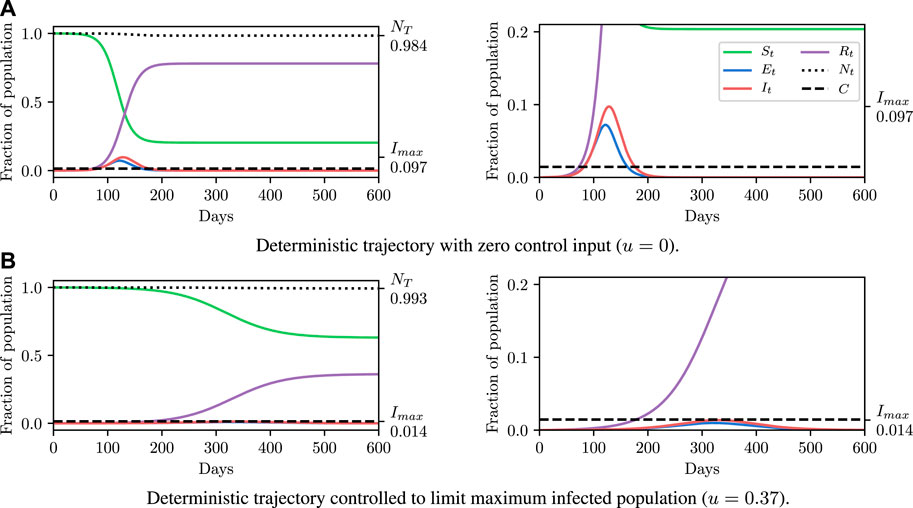

4.1.2 Example Trajectories

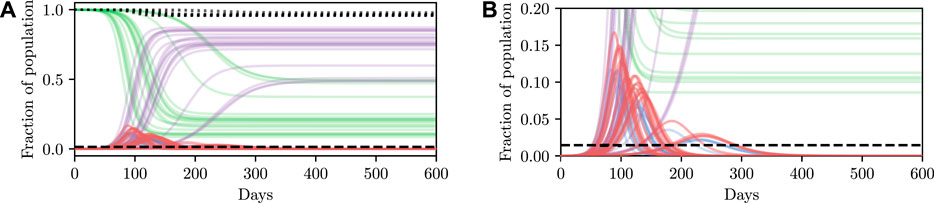

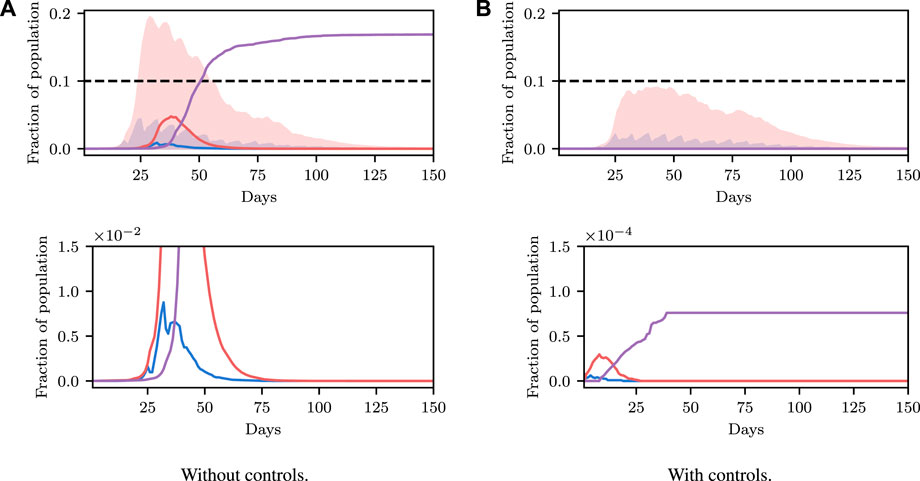

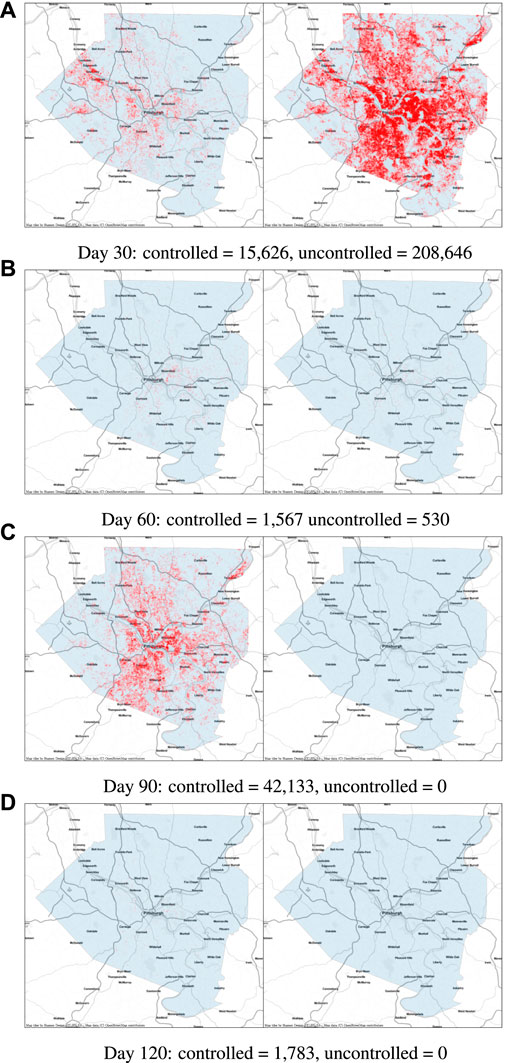

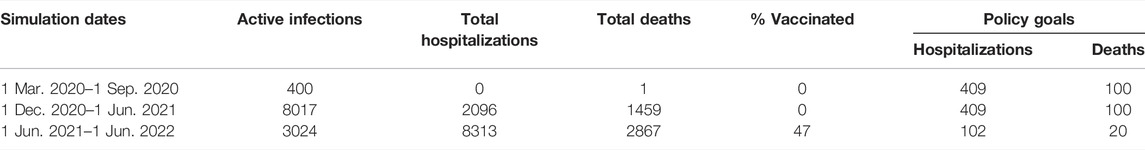

Before explaining how we set the SEI3R model parameters, or pose inference problems in the model, we first verify that we are able to simulate feasible state evolutions. As we will describe later, we use parameters that are as reflective of current COVID-19 epidemiological data as possible at the time of writing. Figures 2A,B show deterministic simulations from the model with differing control values u. Shown in green is the susceptible population, in blue is the exposed population, in red is the infectious population, and in purple is the recovered population. The total live population is shown as a black dotted line. All populations are normalized by the initial total population, N0. The dashed black line represents a threshold under which we wish to keep the number of infected people at all times. The following paragraph provides the rationale for this goal.

FIGURE 2. Populations per compartment during deterministic SEI3R simulations, both without intervention (A) and with intervention (B). Plots in the left column show the full state trajectory, and in the right column are cropped to more clearly show the exposed and infected populations. Without intervention, the infected population requiring hospitalization (20% of cases) exceeds the threshold for infected population (0.0145, black dashed line), overwhelming hospital capacities. With intervention (u = 0.37) the infected population always remains below this limit. Note that we re-use the colour scheme from this figure through the rest of the paper.

4.1.3 Policy Goal

As described in Section 3.4, parameters should be selected to ensure that a desired goal is achieved. In all scenarios using the SEI3R model, we aim to maintain the maximal infectious population proportion requiring healthcare below the available number of hospital beds per capita, denoted C. This objective can be formulated as an auxiliary observation,

where I1,0:T, I2,0:T and I3,0:T are sampled from the model, conditioned on a θ value. This threshold value we use was selected to be 0.0145, as there are 0.0029 hospital beds per capita in the United States (World Bank, 2020), and roughly 20% of COVID-19 cases require hospitalization. This constraint was chosen to represent the notion that the healthcare system must have sufficient capacity to care for all those infected who require care, as opposed to just critical patients. However, this constraint is only intended as a demonstrative example of the nature of constraints and inference questions one can query using models such as these, and under the formalism used here, implementing and comparing inferences under different constraints is very straightforward. More complex constraints may account for the number of critical patients differently to those with mild and severe infections, model existing occupancy or seasonal variations in capacity, or, target other metrics such as the number of deceased or the duration of the epidemic.

The constraint is not met in Figure 2A, but is in Figure 2B, where a greater control input u has been used to slow the spread of the infection. This is an example of the widely discussed “flattening of the curve.” As part of this, the infection lasts longer but the death toll is considerably lower.

4.1.4 Control Input

As noted before, we assume that only a single “controllable” parameter affects our model, u. This is the reduction in the “baseline reproductive ratio,” R0, due to policy interventions. Increasing u has the same effect as reducing the infectiousness parameters β1, β2 and β3 by the same proportion. u can be interpreted as the effectiveness of policy choices to prevent new infections. Various policies could serve to increase u, since it is a function of both, for example, reductions in the “number of contacts while infectious” (which could be achieved by social distancing and isolation policy prescriptions), and the “probability of transmission per contact” (which could be achieved by, e.g., eye, hand, or mouth protective gear policy prescriptions). It is likely that both of these kinds of reductions are necessary to maximally reduce u at the lowest cost.

For completeness, the baseline reproductive ratio, R0, is an estimate of the number of people a single infectious person will in turn infect and can be calculated from other model parameters (Hill et al., 2020). R0 is often reported by studies as a measure of the infectiousness of a disease, however, since R0 can be calculated from other parameters we do not explicitly parameterize the model using R0, but we will use R0 as a convenient notational shorthand. We compactly denote the action of u as controlling the baseline reproductive rate to be a “controlled reproductive rate,” denoted

4.1.5 Using Point Estimates of Model Parameters

We now explain how we set the model parameters to deterministic estimates of values which roughly match COVID-19. The following section will consider how to include uncertainty in the parameter values. Specifically, the parameters are the incubation period α−1; rates of disease progression p1 and p2; rates of recovery from each level of infection, γ1, γ2, and γ3; infectiousness for each level of infection, β1, β2, and β3; and a death rate for critical infections, κ. u ∈ [0, 1] is a control parameter, representing the strength of action taken to prevent new infections (Boldog et al., 2020). To estimate distributions over the uncontrollable model parameters, we consider their relationships with various measurable quantities

Given the values of the left-hand sides of each of Eqs 21–28, (as estimated by various studies) we can calculate model parameters α, p1, p2, γ1, γ2, γ3 and κ by inverting this system of Equations. These parameters, along with estimates for β1, β2, and β3, and a control input u, fully specify the model. Hill et al. (2020) use such a procedure to deterministically fit parameter values. Given the parameter values, the simulation is entirely deterministic. Therefore, setting parameters in this way enables us to make deterministic simulations of “typical” trajectories, as shown in Figure 2. Specifying parameters in this way and running simulations in this system provides a low overhead and easily interpretable environment, and hence is an invaluable tool to the modeller.

4.1.6 Dealing With Uncertainty About Model Parameter Values

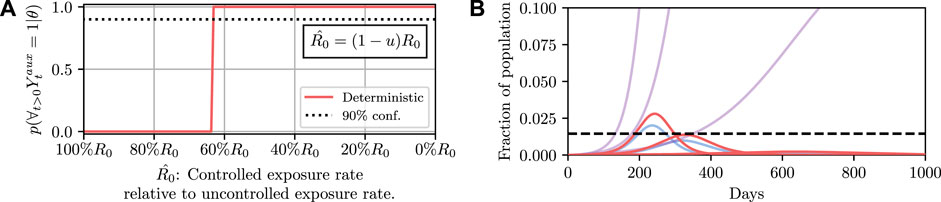

Deterministic simulations are easy to interpret on a high level, but they require strong assumptions as they fix the values of unknown parameters to point estimates. We therefore describe how we can perform inference and conditioning in a stochastic model requiring less strict assumptions, and show that we are able to provide meaningful confidence bounds on our inferences that can be used to inform policy decisions more intelligently than without this stochasticity. As described in Section 3, stochasticity can be introduced to a model through a distribution over the latent global parameters η. Examples of stochastic simulations are shown in Figure 3A. Clearly there is more capacity in this model for representing the underlying volatility and unpredictability of the precise nature of real-world phenomena, especially compared to the deterministic model.

FIGURE 3. Stochastic simulations from the SEI3R model. (A) shows the full trajectory while (B) is cropped to the pertinent region. Compared to the deterministic simulations in Figure 2A, stochastic simulations have the capacity to be much more infectious. Therefore, fully stochastic simulations are required to accurately quantify the true risk in light of the uncertainty in the model. As a result of this, Bayesian methods, or at least methods that correctly handle uncertainty, are required for robust analysis. We reuse the colour scheme defined in Figure 2A for trajectories.

However, this capacity comes with the reality that increased effort must be invested to ensure that the unknown latent states are correctly accounted for. For more specific details on dealing with this stochasticity please refer back to Section 3, but, in short, one must simulate for multiple stochastic values of the unknown parameters, for each value of the controllable parameters, and agglomerate the many individual simulations appropriately for the inference objective. When asking questions such as “will this parameter value violate the constraint?” there are feasibly some trajectories that are slightly above and some slightly below the trajectory generated by the deterministic simulation due to the inherent stochasticity (aleatoric uncertainty) in the real world. This uncertainty is integrated over in the stochastic model, and hence we can ask questions such as “what is the probability that this parameter will violate the constraint?” Using confidence values is this way provides some measure of how certain one can be about the conclusion drawn from the inference–if the confidence value is very high then there is a measure of “tolerance” in the result, compared to a result with a much lower confidence.

We define a joint distribution over model parameters as follows. We consider the 95% confidence intervals of β1, β2, and β3 and the values in the left-hand sides of Eqs 21–24, and assume that their true values are uniformly distributed across these confidence intervals. Then at each time t in a simulation, we sample these values and then invert the system of Eqs 21–28 to obtain a sample of the model parameters. More sophisticated distributions could easily be introduced once this information becomes available. We now detail the nominal values used for typical trajectories (and the confidence intervals used for sampling). The nominal values are mostly the same as those used by (Hill et al., 2020). We use: an incubation period of 5.1 days (4.5–5.8) (Lauer et al., 2020); a mild infection duration of 6 days (5.5–6.5) (Wölfel et al., 2020); a severe infection duration of 4.5 days (3.5–5.5) (Sanche et al., 2020); a critical infection duration of 6.7 days (4.2–10.4); fractions of mild, severe, and critical cases of 81, 14, and 5% (Wu and McGoogan, 2020); and a fatality ratio of 2% (Wu and McGoogan, 2020). We also use β1 = 0.33/day (0.23–0.43), and β2 = 0/day (0–0.05), and β3 = 0/day (0–0.025). Where possible, the confidence intervals are obtained from the studies which estimated the quantities. Where these are not given, we use a small range centred on the nominal value to account for possible imprecision.

4.2 Agent-Based Simulation

While compartmental models, such as the SEIR model described in Section 4.1, provide a mathematically well understood global approximation to disease dynamics, due to their coarse-grained statistical nature they cannot capture many important aspects and local details of the physical and social dynamics underlying the spread of a disease. These aspects include geographic information, spatio-temporal human interaction patterns in social hubs such as schools or workplaces, and the impact of individual beliefs on transmission events. To address these limitations, agent-based simulators (ABS) have been introduced. Such simulators have practically no restrictions in terms of expressiveness, i.e., they can make use of all features of modern Turing-complete programming languages, at the significant computational cost of simulating all details involved.

4.2.1 FRED: Fine-Grained Simulation of Disease Spreading

FRED2 (Grefenstette et al., 2013) is an instance of the class of epidemiological agent-based simulators that are currently available for use in policy-making. FRED is an agent-based modeling language and execution platform for simulating changes in a population over time. FRED represents individual persons, along with social contacts and interactions with the environment. This enables the model to include individual responses and behaviors that vary according to the individual’s characteristics, including demographics (age, sex, race, etc.), as well as the individual’s interactions with members of various social interaction groups, such as their neighborhood, school or workplace. The FRED user can define and track any dynamic condition for the individuals within the population, including diseases (such as COVID-19), attitudes (such as vaccine acceptance), and behaviors (such as social distancing).

FRED captures demographic and geographic heterogeneities of the population by modeling every individual in a region, including realistic households, workplaces and social networks. Using census-based models available for every state and county in the US and selected international locations, FRED simulates interactions within the population in discrete time steps of 1 h. Transmission kernels model the spatial interaction between infectious places and susceptible agents. These characteristics enable FRED to provide much more fine-grained policy advice at either the regional or national level, based on socio-economic and political information which cannot be incorporated into compartmental models.

We chose to use FRED in this work because it has been used to evaluate potential responses to previous infectious disease epidemics, including vaccination policies (Lee et al., 2011), school closure (Potter et al., 2012), and the effects of population structure (Kumar et al., 2015) and personal health behaviors (Kumar et al., 2013; Liu et al., 2015).

After 10 years of develoment as an academic project, FRED has been licensed by the University of Pittsburgh to Epistemix 3, to develop commercial applications of the FRED modeling technology. In turn, Epistemix has developed a detailed COVID-19 model in FRED, which is used in the experiments described here.

The FRED COVID-19 model includes three interconnected components: 1) The natural history of COVID-19; 2) The social dynamics/behavior of individuals; and 3) The Vaccination Program. The COVID-19 model was designed using the latest scientific data, survey information from local health authorities, and in consultation with expert epidemiologists. This model has been used to project COVID-19 cases in universities, K-12 school districts, large cities, and offices.

The FRED COVID-19 natural history model represents the period of time and trajectory of an individual from infection or onset to recovery or death. In the current version of the model, when an individual, or an agent, is exposed to SARS-CoV-2, the virus that causes COVID-19, the individual enters a 2-day latent period before they become infectious. In the infectious state, individuals can either be asymptomatic, symptomatic, or hospitalized. The probability of entering any of these infectious states is based on the individual’s age, infection history, and vaccination history. Individuals have a duration of illness (i.e., number of days they can transmit the virus) which is dependent on infectious state, a severity of disease (i.e., magnitude of transmissibility), and a disease outcome (recovery or death).

When an agent is exposed to SARS-CoV-2 and becomes symptomatic, the individual chooses whether or not to isolate themselves from normal activities. Approximately 20% of individuals continue regular daily activities while symptomatic. Agents who are exposed and develop an asymptomatic infection do not isolate themselves and go about their regular activities. This introduces both symptomatic and asymptomatic forms of transmission into the model.

Prevalence of mask wearing and adherence to social distancing are unique to each location and change over time. The level of compliance to these behaviors is set based on the number of active infections that were generated from reported cases in the previous 2 weeks. Social distancing is assumed to reduce the number of contacts between agents in each place the agent attends.

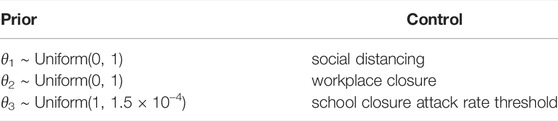

Following the general recipe for framing planning as inference in Section 3.1, the following section defines what a prior on controls θ is in terms of FRED internals, how FRED parameters relate to η, and how to condition FRED on desirable future outcomes

4.2.2 Turning FRED Into a Probabilistic Program

The FRED simulator has a parameter file which stipulates the values of θ and η. In other words, both the controllable and non-controllable parameters live in a parameter file. FRED, when run given a particular parameter file, produces a sample from the distribution p(X0:T, Z0:T|θ, η). Changing the random seed and re-running FRED will result in a new sample from this distribution.

The difference between X0:T and Z0:T in FRED is largely in the eye of the beholder. One way of thinking about it is that X0:T are all the values that are computed in a run and saved in an output file and Z0:T is everything else.

In order to turn FRED into a probabilistic programming model useful for planning via inference several small but consequential changes must be made to it. These changes can be directly examined by browsing one of the public source code repositories accompanying this paper.4 First, the random number generator and all random variable samples must be identified so that they can be intercepted and controlled by PyProb. Second, any variables that are determined to be controllable (i.e., part of θ) need to be identified and named. Third, in the main stochastic simulation loop, the state variables required to compute

In the interest of time and because we were familiar with the internals of PyProb and knew that we would not be using inference algorithms that were incompatible with this choice, the demonstration code does not show a full integration in which all random variables are controlled by the probabilistic programming system. Instead, it only controls the sampling of θ and the observation of

4.2.3 Details of FRED+PyProb Integration

Our integration of PyProb into FRED required only minor modifications to FRED’s code base, performed in collaboration with the FRED developers at Epistemix. More details about the integration of FRED and PyProb include:

1) The simulator is connected to PyProb through a cross-platform execution protocol (PPX5). This allows PyProb to control the stochasticity in the simulator, and requires FRED to wait, at the beginning of its execution, for a handshake with PyProb through a messaging layer.

2) PyProb overwrites the policy parameter values θ with random draws from the user-defined prior. While PyProb internally keeps track of all random samples it generates, we also decided to write out the updated FRED parameters to a parameter file in order to make associating θ(i) and

3) For each daily iteration step in FRED’s simulation, we call PyProb’s observe function with a likelihood corresponding to the constraint we would like to hold in that day.

With these connections established, we are able to select an inference engine implemented by PyProb to compute the posterior. We use a particularly simple algorithm, namely rejection sampling, in order to focus our exposition on the conceptual framework of planning as inference. PyProb implements multiple other, more complex, algorithms, which may be able to better approximate the posterior with a given computational budget. However for the inference task we consider, in which we attempt to infer only a small fraction of the random variables in the simulator, we find that rejection sampling is sufficiently performant.

We also remind the reader that, like in Section 3.5, more complex controls can be considered, in principle allowing for complex time-dependent policies to be inferred. We do not examine this here, but note that this extension is straightforward to implement in the probabilistic programming framework, and that PyProb is particularly well adapted to coping with the additional complexity. Compared to sampling parameter values for FRED at the beginning of the simulation, such time-varying policies could be implemented through changing the FRED model source code directly. This approach will be explored in future research.

5 Experiments

We now demonstrate how inference in epidemiological dynamics models can be used to inform policy-making decisions. We organize this section according to a reasonable succession of steps of increasing complexity that one might take when modeling a disease outbreak. We again stress that we are not making COVID-19 specific analyses here, but instead highlight how framing the task as in Section 3 allows existing machine learning machinery to be leveraged to enhance analysis and evaluation of outcomes; and avoid some potential pitfalls.

We begin by showing how a simple, deterministic compartmental SEIR-based model can be used to inform policy-making decisions, and show how analysis derived from such a deterministic model can fail to achieve stated policy goals in practice. Next, we demonstrate how using a stochastic model can achieve more reliable outcomes by accounting for the uncertainty present in real world systems. While these stochastic models address the limitations of the deterministic model, low-fidelity SEIR models are, in general, not of high enough fidelity to provide localized, region-specific policy recommendations. To address this we conclude by performing inference in an existing agent-based simulator of infectious disease spread and demonstrate automatic determination of necessary controls.

5.1 SEI3R Model

The most straightforward approach to modeling infectious diseases is to use low-dimensional, compartmental models such as the widely used susceptible-infectious-recovered (SIR) models, or the SEI3R variant introduced in Section 4.1. These models are fast to simulate and easy to interpret, and hence form a powerful, low-overhead analysis tool.

5.1.1 Deterministic Model

The system of equations defining the SEI3R model form a deterministic system when global parameter values, such as the mortality rates or incubation periods, are provided. However, the precise values of these parameter values are unknown, and instead only confidence intervals for these parameters are known, i.e., the incubation period is between 4.5 and 5.8 (Lauer et al., 2020). This variation may be due to underlying aleatoric uncertainty prevalent in biological systems, or epistemic uncertainty due to the low-fidelity nature of SIR-like models. We do not discuss them here, but work exists automatically fitting point-wise estimates of model parameter values directly from observed data (Wearing et al., 2005; Mamo and Koya, 2015).

Regardless of whether one obtains a point estimate of the parameter values by averaging confidence intervals, or by performing parameter optimization, the first step is to use these values to perform fully deterministic simulations, yielding simulations such as those shown in Figure 2A. Simulations such as these are invaluable for understanding the bulk dynamics of systems, investigating the influence of variations in global parameter values or investigating how controls affect the system. However, the ultimate utility in these models is to use them to inform policy decisions to reduce the impact of outbreaks. As eluded to above, this is the primary thrust of this work, combining epidemiological simulators with automated machine learning methodologies to model policy outcomes, by considering this problem as conditioning simulations on outcomes.

To demonstrate such an objective, we consider maintaining the infected population below a critical threshold C at all times. In a deterministic system there are no stochastic quantities and hence whether the threshold is exceeded is a deterministic function of the controlled parameters, i.e., the value of

Results for this are shown in Figure 4. It can then be read off that under the deterministic model

FIGURE 4. Here we demonstrate planning using the deterministic SEI3R model. (A) shows, in red, the probability that the constraint is met using the deterministic simulator. The probability jumps from zero to one at a value of approximately u = 0.37. (B) then shows trajectories using three salient parameter values, specifically,

5.1.2 Stochastic Simulation

While the above example demonstrates how parameters can be selected by conditioning on desired outcomes, we implicitly made a critical modeling assumption. While varying the free parameter u, we fixed the other model parameter values (α−1, γ1, etc.) to single values. We therefore found a policy intervention in an unrealistic scenario, namely one in which we (implicitly) claim to have certainty in all model parameters except u.

To demonstrate the pitfalls of analyzing deterministic systems and applying the results to an inherently stochastic system such as an epidemic, we use the permissible value of u solved for in the deterministic system, u = 0.375, and randomly sample values of the remaining simulation parameters. This “stochastic” simulator is a more realistic scenario than the deterministic variant, as each randomly sampled η represents a unique, plausible epidemic being rolled out from the current world state.

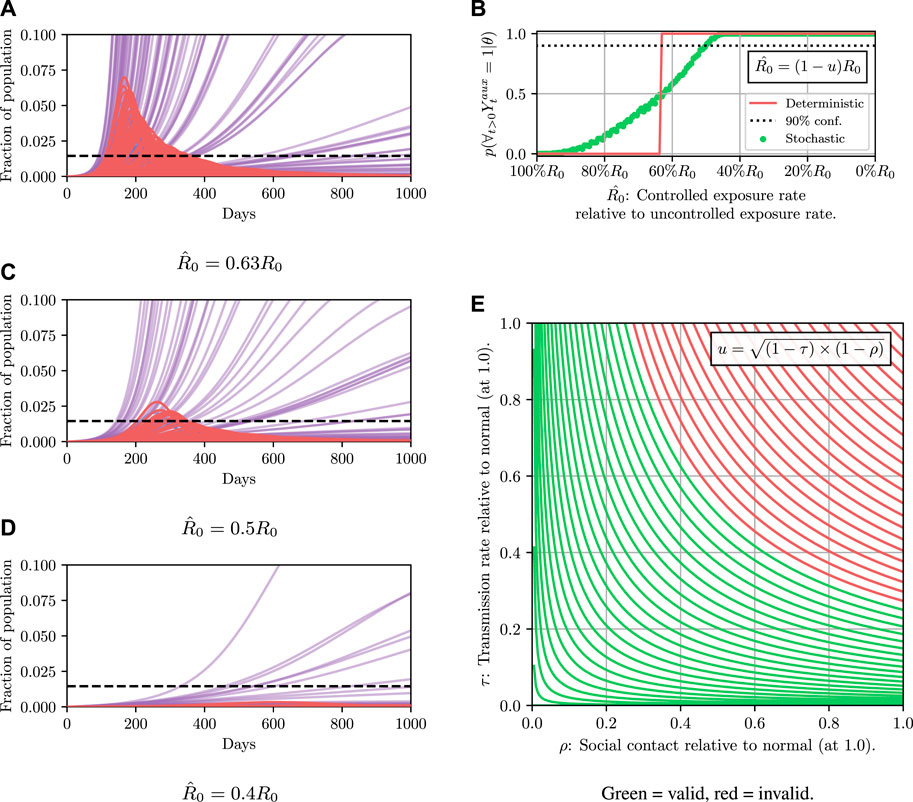

The results are shown in Figure 5A. Each line represents a possible epidemic. We can see that using the previously found value of u results in a large number of epidemics where the infectious population exceeds the constraint, represented by the red trajectories overshooting the dotted line. Simply put, the control parameter we found previously fails in an unacceptable number of simulations.

FIGURE 5. Comparison of stochastic and deterministic SEI3R models for policy selection. We reuse the colour scheme defined in Figure 2A for trajectories. (A) shows a stochastic simulation using

This detail highlights the shortcomings of the deterministic model: in the deterministic model a parameter value was either accepted or rejected with certainty. There was no notion of the variability in outcomes, and hence we have no mechanism to concretely evaluate the risk of a particular configuration.

Instead, we can use a stochastic model which at least does account for some aleatoric uncertainty about the world. We repeat the analysis picking the required value of u, but this time using the stochastic model detailed in Section 4.1. In practice, this means the (previously deterministic) model parameters detailed in Eqs 21–28 are randomly sampled for each simulation according to the procedure outlined following the equations.

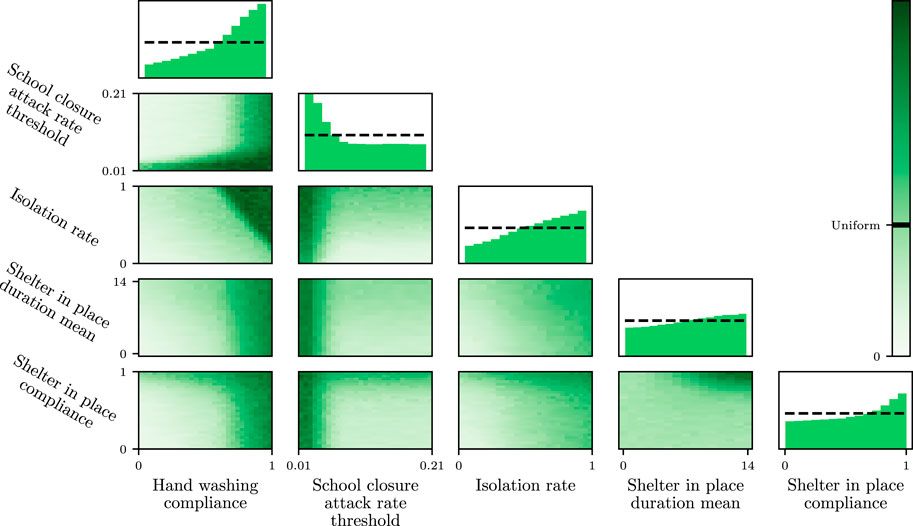

To estimate the value of

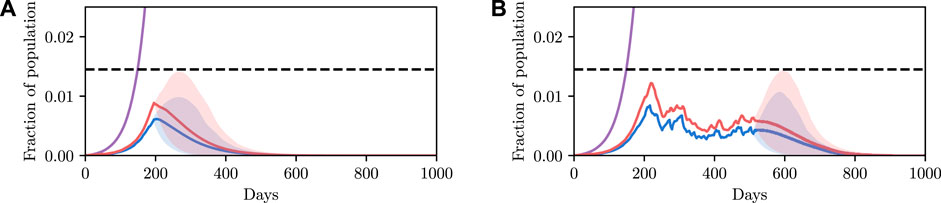

The results are shown in Figure 5B. The likelihood of the result under the stochastic model is not a binary value like in the deterministic case, and instead occupies a continuum of values representing the confidence of the results. We see that the intersection between the red and green curves occurs at approximately 0.5, explaining the observation that approximately half of the simulations in Figure 5A exceed the threshold. We can now ask questions such as: “what is the parameter value that results in the constraint not being violated, with 90% confidence?” We can read off rapidly that we must instead reduce the value of

5.1.3 Model Predictive Control

We have shown how one can select the required parameter values to achieve a desired objective. To conclude this example, we apply the methodology to iterative planning. The principal idea underlying this is that policies are not static and can be varied over time conditioned on the current observed state. Under the formalism used here, re-evaluating the optimal control to be applied, conditioned on the new information, is as simple as re-applying the planning algorithm at each time step. Note that here we consider constant control. However, more complex, time-varying control policies can easily be considered under this framework. For instance, instead of recovering a fixed control parameter, the parameter values of a polynomial function defining time-varying control input could be recovered, or, a scalar value determining the instantaneous control input at each time-step. This is a benefit of the fully Bayesian and probabilistic programming-based approach we have taken: the model class that can be analyzed is not fixed and can be determined (and easily changed and iterated on) by the modeller, and the inference back-end cleanly and efficiently handles the inference.

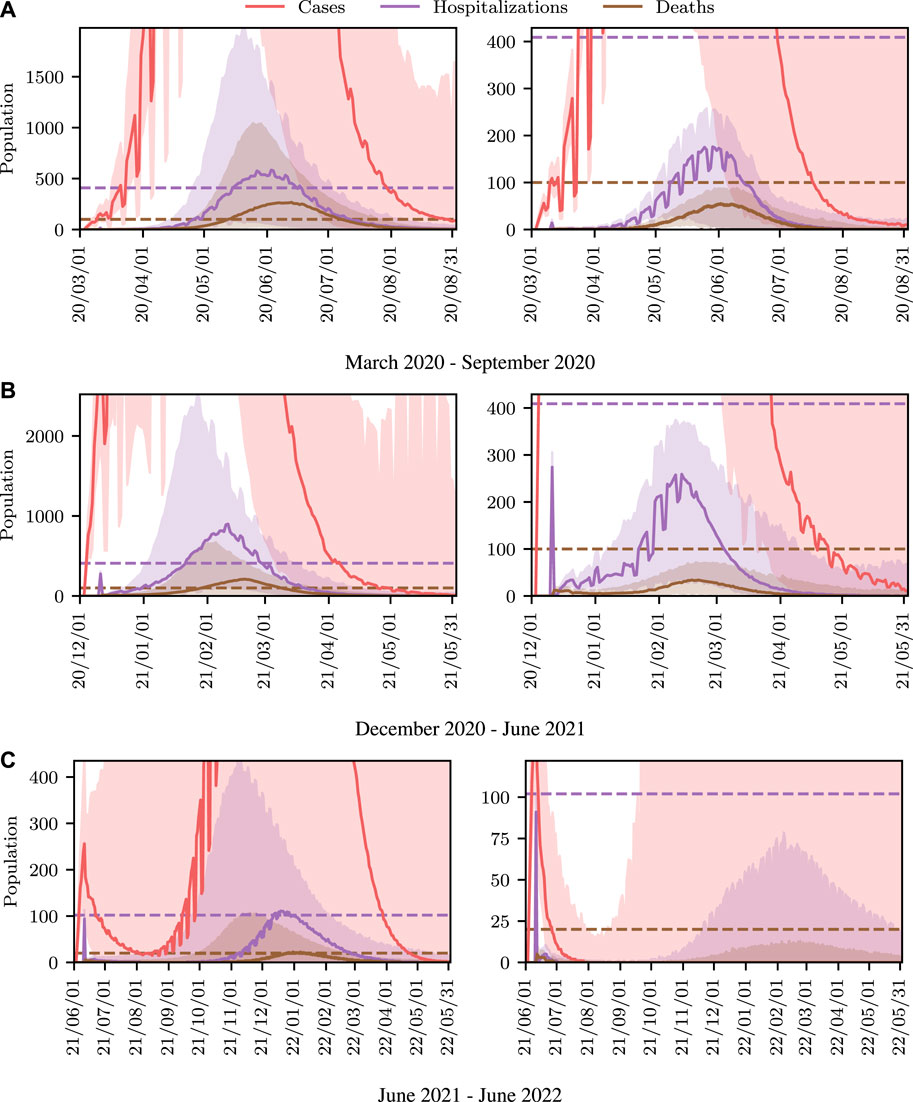

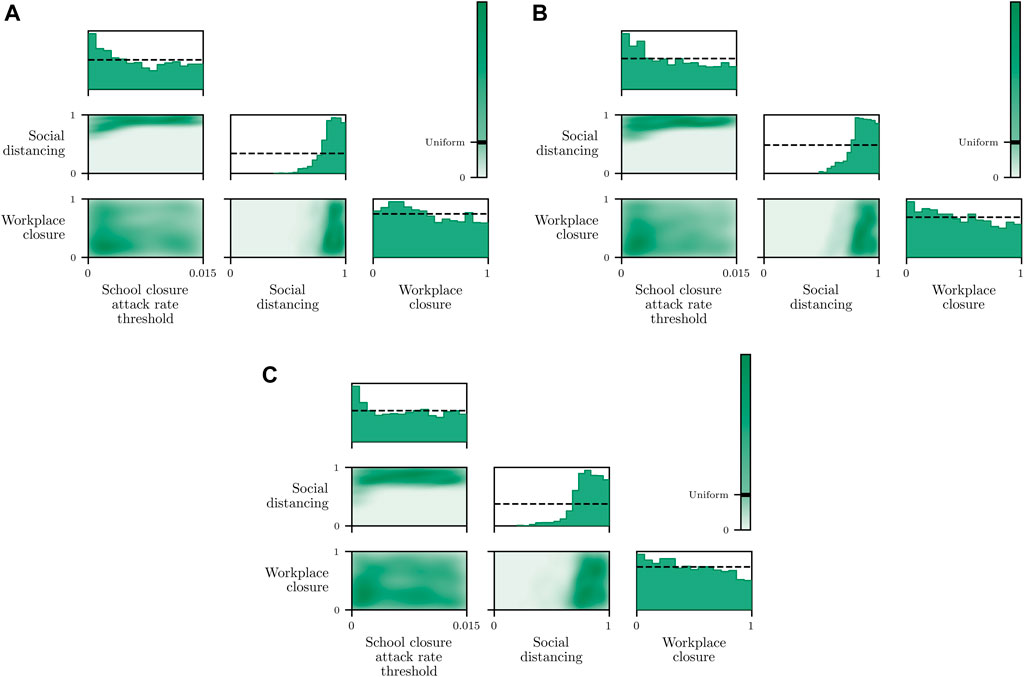

We show a demonstration of this in Figure 6. In this example, we begin at time t = 200 with non-zero infection rates. We solve for a policy that satisfies the constraint with 90% certainty, and show this confidence interval over trajectories as a shaded region. We then simulate the true evolution of the system for a single step sampling from the conditional distribution over state under the selected control parameter. We then repeat this process at regular intervals, iteratively adapting the control to the new world state. We see that the confidence criterion is always satisfied and that the infection is able to be maintained at a reasonable level. We do not discuss this example in more detail, and only include it as an example of the utility of framing the problem as we have, insomuch as iterative re-planning based on new information is a trivial extension under the formulation used.