Abstract

Adaptive agents must act in intrinsically uncertain environments with complex latent structure. Here, we elaborate a model of visual foraging—in a hierarchical context—wherein agents infer a higher-order visual pattern (a “scene”) by sequentially sampling ambiguous cues. Inspired by previous models of scene construction—that cast perception and action as consequences of approximate Bayesian inference—we use active inference to simulate decisions of agents categorizing a scene in a hierarchically-structured setting. Under active inference, agents develop probabilistic beliefs about their environment, while actively sampling it to maximize the evidence for their internal generative model. This approximate evidence maximization (i.e., self-evidencing) comprises drives to both maximize rewards and resolve uncertainty about hidden states. This is realized via minimization of a free energy functional of posterior beliefs about both the world as well as the actions used to sample or perturb it, corresponding to perception and action, respectively. We show that active inference, in the context of hierarchical scene construction, gives rise to many empirical evidence accumulation phenomena, such as noise-sensitive reaction times and epistemic saccades. We explain these behaviors in terms of the principled drives that constitute the expected free energy, the key quantity for evaluating policies under active inference. In addition, we report novel behaviors exhibited by these active inference agents that furnish new predictions for research on evidence accumulation and perceptual decision-making. We discuss the implications of this hierarchical active inference scheme for tasks that require planned sequences of information-gathering actions to infer compositional latent structure (such as visual scene construction and sentence comprehension). 
This work sets the stage for future experiments to investigate active inference in relation to other formulations of evidence accumulation (e.g., drift-diffusion models) in tasks that require planning in uncertain environments with higher-order structure.

1. Introduction

Our daily life is full of complex sensory scenarios that can be described as examples of “scene construction” (Hassabis and Maguire, 2007; Zeidman et al., 2015; Mirza et al., 2016). In its most abstract sense, scene construction describes the act of inferring a latent variable (or “scene”) given a set of (potentially ambiguous) sensory cues. Sentence comprehension is a prime example of scene construction: individual words are inspected in isolation, but after reading a sequence one is able to abduce the overall meaning of the sentence that the words are embedded within (Tanenhaus et al., 1995; Narayanan and Jurafsky, 1998; Ferro et al., 2010). This can be cast as a form of hierarchical inference in which low-level evidence (e.g., words) is actively accumulated over time to support disambiguation of high-level hypotheses (e.g., possible sentence meanings).

We investigate hierarchical belief-updating by modeling visual foraging as a form of scene construction, where individual images are actively sampled with saccadic eye movements in order to accumulate information and categorize the scene accurately (Yarbus, 1967; Jóhannesson et al., 2016; Mirza et al., 2016; Yang et al., 2016; Ólafsdóttir et al., 2019). In the context of scene construction, sensory uncertainty (e.g., blurry images) can limit the ability of individual cues to support inference about the overarching visual scene. Such sensory uncertainty can be partially “overridden” using prior knowledge, which might be built into the agent's internal model, innately or based on previous experience. While there is an enormous body of literature on the resolution of uncertainty with prior information (Trueswell et al., 1994; Rayner and Well, 1996; Körding and Wolpert, 2004; Stocker and Simoncelli, 2006; Girshick et al., 2011), relatively little research has examined interactions between sensory uncertainty and prior information in the context of a dynamic, active vision task like visual foraging or scene construction (with notable exceptions: e.g., Quétard et al., 2016).

Building on a previous Bayesian formulation of scene construction, in this work we use active inference to model visual foraging in a hierarchical scene construction task (Friston et al., 2012a,b, 2017a; Mirza et al., 2016), and to study different types of uncertainty across distinct “layers of inference.” We present simulations of active inference agents performing hierarchical scene construction while parametrically manipulating sensory uncertainty and prior beliefs. The (sometimes counterintuitive) results of our simulations invite new perspectives on active sensing and hierarchical inference, which we discuss in the context of experimental design for both visual foraging experiments and perceptual decision-making tasks more generally. We examine the model's behavior in terms of the tension between instrumental (or utility-driven) and exploratory (epistemically-driven) drives, and how active inference explains both by appealing to a single pseudo-“value function”: the expected free energy.

The rest of this paper is structured as follows: first, we summarize active inference and the free energy principle, highlighting the expected free energy, a quantity that prescribes behavior with both goal-satisfying and information-gathering components, under the single theoretical mantle of maximizing model evidence. Next, we discuss the original model of scene construction that inspired the present work, and move on to introduce random dot motion stimuli and the ensuing ability to parametrically manipulate uncertainty across hierarchical levels, which distinguishes the current model from the original. Then we detail the Markov Decision Process generative model that our active inference agents entertain, and describe the belief-updating procedures used to invert generative models, given observed sensory data. Having appropriately set up our scene construction task, we then report the results of simulations, with differential effects of sensory uncertainty and prior belief strength appearing in several aspects of active evidence accumulation in this hierarchical environment. These computational demonstrations motivate our conclusion, where we discuss the implications of this work for experimental and theoretical studies of active sensing and evidence accumulation under uncertainty.

2. Free Energy Minimization and Active Inference

2.1. Approximate Inference via Variational Bayes

The goal of Bayesian inference is to infer possible explanations for data: this means obtaining a distribution over a set of parameters x (the causal variables or explanations), given some observations õ, where the tilde notation indicates a sequence of such observations over time, õ = (o1, …, oT). Note that we use x to refer to a set of causal variables, which may include (sequences of) states and/or hyperparameters. This is also called calculating the posterior probability P(x|õ); it encodes the optimal belief about causal variables x, after having observed some data õ. Computing the posterior requires applying Bayes' rule:

$$P(x \mid \tilde{o}) = \frac{P(\tilde{o} \mid x)\, P(x)}{P(\tilde{o})} \tag{1}$$

Importantly, computing this quantity requires calculating the marginal probability P(õ), also known as the evidence:

$$P(\tilde{o}) = \sum_{x} P(\tilde{o} \mid x)\, P(x) \tag{2}$$

Solving this summation¹ (in the continuous case, integration) quickly becomes intractable for high-dimensional models, since the summand needs to be evaluated for every possible combination of parameters x. The marginalization in Equation (2) renders exact Bayesian inference expensive or impossible in many cases, motivating approximate inference methods. One of the leading classes of methods for approximate inference is the class of variational methods (Beal, 2004; Blei et al., 2017). Variational inference circumvents the issue of exact inference by introducing an arbitrary distribution Q(x) to replace the true posterior. This replacement is often referred to as the variational or approximate posterior. By constraining the form of the variational distribution, tractable schemes exist to optimize it in a way that (approximately) maximizes evidence. This optimization occurs with respect to a quantity called the variational free energy, which is a computable upper-bound on surprise, or the negative (log) evidence −ln P(õ). The relationship between surprise and free energy can be shown as follows using Jensen's inequality:

$$
\begin{aligned}
-\ln P(\tilde{o}) &= -\ln \sum_{x} P(\tilde{o}, x) = -\ln \sum_{x} Q(x)\, \frac{P(\tilde{o}, x)}{Q(x)} \\
&\leq -\sum_{x} Q(x) \ln \frac{P(\tilde{o}, x)}{Q(x)} = \mathbb{E}_{Q(x)}\left[\ln Q(x) - \ln P(\tilde{o}, x)\right] = F
\end{aligned}
\tag{3}
$$

where F is the variational free energy and P(õ, x) is the joint probability of observations and hidden causes, also known as the generative model. The free energy can itself be decomposed into:

$$F = D_{KL}\left[Q(x) \,\|\, P(x \mid \tilde{o})\right] - \ln P(\tilde{o}) \tag{4}$$

This decomposition allows us to see that the free energy becomes a tighter upper-bound on surprise the closer the variational distribution Q(x) comes to the true posterior P(x|õ), as measured by the Kullback-Leibler divergence². When Q(x) = P(x|õ), the divergence disappears and the free energy equals the negative log evidence, rendering inference exact. Variational inference is thus often described as the conversion of an integration problem (computing the marginal likelihood of observations as in Equation (2)) into an optimization problem, wherein the parameters of the variational distribution are changed to minimize F:

$$Q^{*}(x) = \underset{Q}{\arg\min}\; F \approx P(x \mid \tilde{o}) \tag{5}$$
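The bound described above can be checked numerically on a small discrete model. The following is a minimal sketch, assuming a hypothetical three-state model whose prior and likelihood values are chosen purely for illustration (they are not taken from the scene construction task). It evaluates the free energy for an arbitrary variational distribution and for the exact posterior, and compares both to the surprise.

```python
import numpy as np

# Hypothetical toy model (illustrative values only): three hidden causes x
# and a single observed outcome o.
prior = np.array([0.5, 0.3, 0.2])        # P(x)
likelihood = np.array([0.9, 0.2, 0.1])   # P(o | x) for the observed o

joint = likelihood * prior               # P(o, x), the generative model
evidence = joint.sum()                   # P(o), the marginal likelihood
posterior = joint / evidence             # P(x | o), by Bayes' rule
surprise = -np.log(evidence)             # negative log evidence


def free_energy(q):
    """F = E_Q[ln Q(x) - ln P(o, x)] for a categorical Q with full support."""
    return float(np.sum(q * (np.log(q) - np.log(joint))))


f_uniform = free_energy(np.ones(3) / 3)  # an arbitrary variational distribution
f_exact = free_energy(posterior)         # Q(x) set to the true posterior

print(f"surprise        = {surprise:.4f}")
print(f"F (uniform Q)   = {f_uniform:.4f}")
print(f"F (posterior Q) = {f_exact:.4f}")
```

In this sketch the free energy under the uniform distribution exceeds the surprise, and the gap closes when Q(x) is set to the exact posterior, which is the sense in which minimizing F over Q approximates exact inference.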

2.2. Active Inference and Expected Free Energy

Having discussed the variational approximation to Bayesian inference via free energy minimization, we now turn our attention to active inference. Active inference is a framework for modeling and understanding adaptive agents, premised on the idea that agents engage in approximate Bayesian inference with respect to an internal generative model of sensory data. Crucially, under active inference both action and perception are realizations of the single drive to minimize surprise. By using variational Bayesian inference to achieve this, an active inference agent generates Bayes-optimal beliefs about sources of variation in its environment by free-energy-driven optimization of an approximate posterior Q(x). This can be analogized to the idea of perception as inference, wherein perception constitutes optimizing the parameters of an approximate posterior distribution over hidden states, under a particular policy π³. In the context of neural systems, it is theorized that the parameters of these posterior beliefs about states are encoded by distributed neural activity in the agent's brain (Friston, 2008; Friston and Kiebel, 2009; Huang and Rao, 2011; Bastos et al., 2012; Parr and Friston, 2018c). Parameters of the generative model itself (such as the likelihood mapping P(o|s)) are hypothesized to be encoded by the network architectures, synaptic strengths, and neuromodulatory elements of the nervous system (Bogacz, 2017; Parr et al., 2018, 2019).

Action can also be framed as a consequence of variational Bayesian inference. Under active inference, policies (sequences of actions) correspond to sequences of “control states,” a type of hidden state that agents can directly influence. Actions are treated as samples from posterior beliefs about policies (Friston et al., 2012b). However, optimizing beliefs about policies introduces an additional complication. Optimal beliefs about hidden states are a function of current and past observations. Because the instantaneous free energy is a direct function of observations, it is not immediately clear how to optimize beliefs about policies when observations from the future are not available. This motivates the introduction of the expected free energy, or beliefs about the free energy expected in the future when pursuing a policy π. The free energy expected at future time point τ under a policy π is given by G(π, τ). Replacing the expectation over hidden states in Equation (3) with the expectation over hidden states and outcomes in the future, we have:

$$G(\pi, \tau) = \mathbb{E}_{Q(o_\tau, s_\tau \mid \pi)}\left[\ln Q(s_\tau \mid \pi) - \ln P(o_\tau, s_\tau \mid \pi)\right] \tag{6}$$

Here, we equip the agent with the prior belief that its policies minimize the free energy expected (under their pursuit) in the future. Under Markovian assumptions on the dependence between subsequent time points in the generative model and a mean-field factorization of the approximate posterior across time (such that Q(s̃|π) = ∏τ Q(sτ|π)), we can write the prior probability of a policy as a softmax function σ of the negative expected free energy, summed over time under that policy:

$$P(\pi) = \sigma\left(-\sum_{\tau} G(\pi, \tau)\right) \tag{7}$$

We will not derive the self-consistency of the prior belief that agents (believe they) will choose free-energy-minimizing policies, nor the full derivation of the expected free energy here. Interested readers can find the full derivations in Friston et al. (2015, 2017a) and Parr and Friston (2019). However, it is worth emphasizing that different components of the expected free energy clarify its implications for optimal behavior in active inference agents. These components are formally related to other discussions of adaptive behavior, such as the trade-off between exploration and exploitation. We can re-write the expected free energy for a given time-point τ and policy π as a bound on the sum of two expectations:

$$
\begin{aligned}
G(\pi, \tau) &= \mathbb{E}_{Q(o_\tau, s_\tau \mid \pi)}\left[\ln Q(s_\tau \mid \pi) - \ln P(o_\tau, s_\tau \mid \pi)\right] \\
&\geq \mathbb{E}_{Q(o_\tau, s_\tau \mid \pi)}\left[\ln Q(s_\tau \mid \pi) - \ln Q(s_\tau \mid o_\tau, \pi)\right] - \mathbb{E}_{Q(o_\tau \mid \pi)}\left[\ln P(o_\tau)\right]
\end{aligned}
\tag{8}
$$

From this decomposition of the quantity bounded by the expected free energy G we illustrate the different kinds of “value” that contribute to behavior in active inference (Friston et al., 2013, 2015; Parr and Friston, 2017; Mirza et al., 2018). See the Appendix for a derivation of Equation (8). The left term on the RHS of the second line is a term that has been called negative information gain. Under active inference, the most likely policies are those that minimize the expected free energy of their sensory consequences; therefore, minimizing this left term promotes policies that disclose information about the environment by reducing uncertainty about the causes of observations, i.e., maximizing information gain. The right term on the RHS of the second line is often called negative extrinsic (or instrumental) value, and minimizing this term promotes policies that lead to observations that match the agent's prior expectations about observations. The relationship of these prior expectations to goal-directed behavior will become clear later in this section. We also offer an alternative decomposition of the expected free energy, formulating it in terms of minimizing a combination of ambiguity and risk:

$$
\begin{aligned}
G(\pi, \tau) &\geq -\mathbb{E}_{Q(o_\tau \mid \pi)}\left[D_{KL}\left[Q(s_\tau \mid o_\tau, \pi) \,\|\, Q(s_\tau \mid \pi)\right]\right] - \mathbb{E}_{Q(o_\tau \mid \pi)}\left[\ln P(o_\tau)\right] \\
&= \mathbb{E}_{Q(s_\tau \mid \pi)}\left[H\left[P(o_\tau \mid s_\tau)\right]\right] + D_{KL}\left[Q(o_\tau \mid \pi) \,\|\, P(o_\tau)\right]
\end{aligned}
\tag{9}
$$

See the Appendix for a derivation of Equation (9). The first term on the RHS of the first line (previously referred to as information gain) we hereafter refer to as “epistemic value” (Friston et al., 2015; Mirza et al., 2016). It is equivalent to expected Bayesian surprise in other accounts of information-seeking behavior and curiosity (Linsker, 1990; Itti and Baldi, 2009; Gottlieb and Oudeyer, 2018). Such an epistemic drive has the effect of promoting actions that uncover information about hidden states via sampling informative observations. This intrinsic drive to uncover information, and its natural emergence via the minimization of expected free energy, is integral to accounts of exploratory behavior, curiosity, salience, and related active-sensing phenomena under active inference (FitzGerald et al., 2015; Friston et al., 2017b,d; Parr and Friston, 2017, 2018b; Mirza et al., 2019b). An alternative formulation of the expected free energy is given in the second line of Equation (9), where minimizing expected free energy promotes policies that reduce “ambiguity,” defined as the expected uncertainty of observations, given the states expected under a policy. These notions of information gain and expected uncertainty will serve as a useful construct in understanding the behavior of active inference agents performing hierarchical scene construction later.

In order to understand how minimizing expected free energy G relates to the pursuit of preference-related goals or drives, we now turn to the second term on the RHS of the first line of Equation (9). In order to enable instrumental or “non-epistemic” goals to drive action, we supplement the agent's generative model with an unconditional distribution over observations P(o) (sometimes called P(o|m), where m indicates conditioning on the generative model of the agent)—this also factors into the log joint probability distribution in the first line of Equation (8). By fixing certain outcomes to have high (or low) probabilities as prior beliefs, minimizing G imbues action selection with an apparent instrumental or exploitative component, measured by how closely observations expected under a policy align with baseline expectations. Said differently: active inference agents pursue policies that result in outcomes that they a priori expect to encounter. The distribution P(o) is therefore also often called the “prior preferences.” Encoding preferences or desires as beliefs about future sensory outcomes underwrites the known duality between inference and optimal control (Todorov, 2008; Friston et al., 2009; Friston, 2011; Millidge et al., 2020). In the language of Expected Utility Theory (which explains behavior by appealing to the principle of maximizing expected rewards), the logarithm of such prior beliefs is equivalent to the utility function (Zeki et al., 2004). This component of G has variously been referred to as utility, extrinsic value, or instrumental value (Seth, 2015; Friston et al., 2017a; Biehl et al., 2018; Seth and Tsakiris, 2018); hereafter we will use the term instrumental value. A related but subtly different perspective is provided by the second term on the RHS of the second line of Equation (9): in this formulation, prior preferences enter the free energy through a “risk” term. 
The minimization of expected risk favors actions that minimize the KL-divergence between outcomes expected under a policy and preferred outcomes, and is related to formulations like KL-control or risk-sensitive control (Klyubin et al., 2005; van den Broek et al., 2010).
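The equivalence between the two decompositions discussed above (epistemic plus instrumental value on the one hand, ambiguity plus risk on the other) can be verified numerically for a single future time point. The sketch below assumes a hypothetical two-state, two-outcome model; the likelihood matrix, the prior preferences, and the two named policies are illustrative placeholders rather than quantities from the scene construction model. It also shows how a softmax of negative expected free energy yields a prior over policies.

```python
import numpy as np


def expected_free_energy(A, qs, C):
    """Evaluate both decompositions of the bounded quantity at one time point.

    A  : P(o | s), shape (outcomes, states), columns sum to one
    qs : Q(s | pi), predicted hidden states under a policy
    C  : P(o), prior preferences over outcomes
    """
    qo = A @ qs                             # Q(o | pi), predicted outcomes
    post = (A * qs) / qo[:, None]           # Q(s | o, pi), one row per outcome

    # Epistemic value: expected KL divergence from prior to posterior beliefs.
    info_gain = np.sum(qo * np.sum(post * (np.log(post) - np.log(qs)), axis=1))
    # Instrumental value: expected log prior preference.
    instrumental = np.sum(qo * np.log(C))

    # Ambiguity: expected entropy of outcomes given states.
    ambiguity = np.sum(qs * -np.sum(A * np.log(A), axis=0))
    # Risk: KL divergence between predicted and preferred outcomes.
    risk = np.sum(qo * (np.log(qo) - np.log(C)))

    return -info_gain - instrumental, ambiguity + risk


# Illustrative (assumed) model components.
A = np.array([[0.8, 0.3],
              [0.2, 0.7]])
C = np.array([0.9, 0.1])
predicted_states = {
    "policy_a": np.array([0.6, 0.4]),
    "policy_b": np.array([0.95, 0.05]),
}

results = {name: expected_free_energy(A, qs, C)
           for name, qs in predicted_states.items()}
G = np.array([first for first, _ in results.values()])

# Prior over policies: softmax of negative expected free energy.
policy_prior = np.exp(-G) / np.exp(-G).sum()
```

Both returned values agree for each policy, because the negative information gain equals the ambiguity minus the entropy of predicted outcomes. This sketch covers only one time step and one hidden state factor.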

3. Scene Construction With Random Dot Motion

3.1. The Original Model

We now describe an abstract scene construction task that will serve as the experimental context within which to frame our hierarchical account of active evidence accumulation. Inspired by a previous active inference model of scene construction introduced by Mirza et al. (2016), here we invoke scene construction in service of a categorization game. In each trial of the task, the agent must make a discrete choice to report its belief about the identity of the “hidden scene.” In the formulation by Mirza et al., the scenes are represented by three abstract semantic labels: “flee,” “feed,” and “wait” (see Figure 1). Each scene manifests as a particular spatial coincidence of two pictures, where each picture is found within a single quadrant in a 2 × 2 visual array. For example, the “flee” scene is defined as when a picture of a cat and a picture of a bird occupy two quadrants lying horizontally adjacent to one another. The scene identities are also invariant to two spatial transformations: vertical and horizontal inversions. For example, in the “flee” scene, the bird and cat pictures can be found in either the top or bottom row of the 2 × 2 array, and they can swap positions; in any of these cases the scene is still “flee.” The task requires active visual interrogation of the environment because quadrants must be gaze-contingently unveiled. That is, by default all quadrants are covered and their contents not visible; the agent must directly look at a quadrant in order to see its contents. This task structure and the ambiguous nature of the picture → scene mapping mean that agents need to actively forage for information in the visual array in order to abduce the scene.

Figure 1

The scene configurations of the original formulation. The three scenes characterizing each trial in the original scene construction study, adapted with permission from Mirza et al. (2016).

3.2. Introducing Random Dot Motion

In the current work, scene construction is also framed as a categorization task, requiring the gaze-contingent disclosure of quadrants whose contents furnish evidence for beliefs about the scene identity. However, in the new task, the visual stimuli occupying the quadrants are animated random dot motion or RDM patterns, instead of static pictographs. An RDM stimulus consists of a small patch of dots whose correlated displacement over time gives rise to the perception of apparent directed motion (see Figure 2). By manipulating the proportion of dots moving in the same direction, the apparent direction of motion can be made more or less difficult to discriminate (Shadlen and Newsome, 1996). This discriminability is usually operationalized as a single coherence parameter, which defines the percentage of dots that appear to move in a common direction. The remaining non-signal (or “incoherent”) dots are usually designed to move in random independent directions. This coherence parameter thus becomes a simple proxy for sensory uncertainty in motion perception: manipulating the coherence of RDM patterns has well-documented effects on behavioral measures of performance, such as reaction time and discrimination accuracy, with increasing coherence leading to faster reaction times and higher accuracy (Palmer et al., 2005). In the current formulation, each RDM pattern is characterized by a unique primary direction of motion that belongs to one of the four cardinal directions: UP, RIGHT, DOWN, or LEFT. For example, in a given trial one quadrant may contain a motion pattern moving (on average) upwards, while another quadrant contains a motion pattern moving (on average) leftwards. These RDM stimuli are suitable for the current task because we can use the coherence parameter to tune motion ambiguity and hence sensory uncertainty. 
Applying this metaphor to the original task (Mirza et al., 2016): we might imagine blurred versions of the cat and bird pictures, such that it becomes difficult to tell whether a given image is of a bird or a cat—this low-level uncertainty about individual images may then “carry forward” to affect scene inference. An equivalent analogy might be found in the problem of reading a hastily-written phone number, such that it becomes hard to distinguish the number “7” from the number “1.” In our case, the motion coherence of RDMs controls how easily an RDM of one direction can be confused with another direction—namely, a more incoherent dot pattern is more likely to be mistaken as a dot pattern moving in a different direction.
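The link between coherence and confusability can be illustrated with a likelihood matrix over the four motion directions. The following is a minimal sketch under an assumed parameterization: each column is a softmax over a scaled identity matrix, with a single hypothetical `precision` parameter standing in for motion coherence. The function name and the specific precision values are illustrative choices, not a statement of how the model in this paper is parameterized.

```python
import numpy as np

DIRECTIONS = ["UP", "RIGHT", "DOWN", "LEFT"]


def motion_likelihood(precision):
    """Return P(observed direction | true direction) as a 4 x 4 matrix.

    Assumption: a softmax over a scaled identity matrix, so that a higher
    precision concentrates probability on the true direction (the diagonal).
    Each column is a categorical distribution over observed directions.
    """
    logits = precision * np.eye(len(DIRECTIONS))
    unnormalized = np.exp(logits)
    return unnormalized / unnormalized.sum(axis=0, keepdims=True)


A_uniform = motion_likelihood(0.0)   # fully incoherent: all directions alike
A_noisy = motion_likelihood(0.5)     # low coherence: directions easily confused
A_clear = motion_likelihood(4.0)     # high coherence: little confusion

for label, A in [("noisy", A_noisy), ("clear", A_clear)]:
    print(label, "P(see UP | true UP) =", round(float(A[0, 0]), 3))
```

With zero precision every direction is equally likely to be observed regardless of the true direction, whereas increasing precision shifts probability mass onto the diagonal, mirroring the way a more coherent dot pattern is less likely to be mistaken for a pattern moving in another direction.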

Figure 2

Random Dot Motion Stimuli (RDMs). Schematic of random dot motion stimuli, with increasing coherence levels (i.e., the percentage of dots moving upwards) from left to right.

We also design the visual stimulus → scene mapping such that scenes are degenerate with respect to individual visual stimuli, as in the previous task (see Figure 3). There are four scenes, each one defined as the co-occurrence of two RDMs in two (and only two) quadrants of the visual array. The two RDMs defining a given scene move in perpendicular directions; the scenes are hence named: UP-RIGHT, RIGHT-DOWN, DOWN-LEFT, and LEFT-UP. Discerning the direction of one RDM is not sufficient to disambiguate the scene; due to the degeneracy of the scene configurations with respect to RDMs, the agent must always observe two RDMs and discern their respective directions before being able to unambiguously infer scene identity. The task requires two nested inferences: one about the contents of the currently-fixated quadrant (e.g., “Am I looking at an UP-wards moving RDM?”) and another about the identity of the overarching scene (e.g., “Is the scene UP-RIGHT?”). During each trial, an agent can report its guess about the scene identity by choosing one of the four symbols that signify the scenes (see Figure 3), which ends the trial. This concludes our narrative description of the experimental setup.
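The degeneracy of the stimulus → scene mapping can be made concrete by enumerating it. The sketch below encodes the four scenes as pairs of directions and lists every way of placing a scene's two RDMs into two of the four quadrants. The quadrant indices and helper names are illustrative assumptions; only the scene names and the two-RDMs-in-two-quadrants rule come from the task description.

```python
from itertools import permutations

# Each scene is defined by two perpendicular motion directions.
SCENES = {
    "UP-RIGHT": ("UP", "RIGHT"),
    "RIGHT-DOWN": ("RIGHT", "DOWN"),
    "DOWN-LEFT": ("DOWN", "LEFT"),
    "LEFT-UP": ("LEFT", "UP"),
}
QUADRANTS = (0, 1, 2, 3)  # assumed indexing of the 2 x 2 visual array


def configurations(scene):
    """List all placements of a scene's two RDMs into two distinct quadrants."""
    first, second = SCENES[scene]
    layouts = []
    for q_first, q_second in permutations(QUADRANTS, 2):
        layout = [None] * len(QUADRANTS)   # None marks an empty quadrant
        layout[q_first] = first
        layout[q_second] = second
        layouts.append(tuple(layout))
    return layouts


def scenes_consistent_with(direction):
    """Return the scenes that contain an RDM moving in the given direction."""
    return sorted(name for name, dirs in SCENES.items() if direction in dirs)


right_down_layouts = configurations("RIGHT-DOWN")
up_candidates = scenes_consistent_with("UP")
print(len(right_down_layouts), "layouts for RIGHT-DOWN")
print("Scenes consistent with one UP pattern:", up_candidates)
```

Each scene admits 12 spatial configurations, and every single direction is shared by exactly two scenes, which is why observing one RDM can never settle the scene identity on its own.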

Figure 3

The mapping between scenes and RDMs. The mapping between the four abstract scene categories and their respective dot motion pattern manifestations in the context of the hierarchical scene construction task. As an example of the spatial invariance of each scene, the bottom right panels show two possible (out of 12 total) RDM configurations for the scene “RIGHT-DOWN,” where the two constitutive RDMs of that scene are found in exactly two of the four quadrants. The “scene symbols” at the bottom of the visual array represent the categorization choices available to the subject, with each symbol comprised of two overlapping arrows that indicate the directions of the motion that define the scene.

3.3. Summary

We have seen how both perception and action emerge as consequences of free energy minimization under active inference. Perception is analogized to state estimation and corresponds to optimizing variational beliefs about the hidden causes x of sensory data. Meanwhile, actions are sampled from inferred sequences of control states (policies). The probability of a policy decreases with the free energy expected under that policy. We demonstrated that expected free energy can be decomposed into the sum of two terms, which respectively encode the drive to resolve uncertainty about the hidden causes of sensory data (epistemic value) and to satisfy agent-specific preferences (instrumental value) (first line of Equation 9). In this way active inference theoretically dissolves the exploration-exploitation dilemma often discussed in decision sciences and reinforcement learning (March, 1991; Schmidhuber, 1991; Sutton and Barto, 1998; Parr, 2020) by choosing policies that minimize expected free energy. This unification of perception and action under a common Bayesian ontology underlies the power of active inference as a normative framework for studying adaptive behavior in complex systems. In the following sections we will present a (hierarchical) Markov Decision Process model of scene construction, where stochastic motion stimuli serve as observations for an overarching scene categorization task. We then discuss perception and action under active inference in the context of hierarchical scene construction, with accompanying computational demonstrations.

4. Hierarchical Markov Decision Process for Scene Construction

We now introduce the hierarchical active inference model of visual foraging and scene construction. The generative model (the agent) and the generative process of the environment both take the form of a Markov Decision Process or MDP. MDPs are a simple class of probabilistic generative models where space and time are treated discretely (Puterman, 1995). In the MDP used here, states are treated as discrete samples from categorical distributions, and transition likelihoods act as linear transformations of hidden states, mapping states at one time step to the subsequent time step, i.e., P(st|st−1). This specification imbues the environment with Markovian, or “memoryless,” dynamics. An extension of the standard MDP formulation is the partially-observed MDP or POMDP, which includes discrete observations that are generated from states at a given time via a likelihood function P(ot|st).

A generative model is simply a joint probability distribution over sensory observations and their latent causes P(õ, x), and is often factorized into the product of a likelihood and a set of marginal distributions over latent variables and hyperparameters, e.g., P(õ, x) = P(õ|x1, …, xn) P(x1) P(x2) … P(xn), where x1, …, xn refer to the various latent causes. Note that in the current formulation the only hidden variables subject to variational inference are hidden states s̃ and policies π. The discrete MDP constrains these distributions to have a particular form; here, the priors over initial states, transition and likelihood matrices are encoded as categorical distributions over a discrete set of states and observations. Agents can only directly observe sensory outcomes õ, meaning that the agent must infer hidden states by inverting the generative model to estimate the causes of observations. Hierarchical models take this a step further by adding multiple layers of hidden-state inference, allowing beliefs about hidden states at one level to act as so-called “inferred observations” õ(i+1) for the level above, with associated priors and likelihoods operating at all levels. This marks a departure from previous work in the hierarchical POMDP literature (Pineau et al., 2001; Theocharous et al., 2004), where the hierarchical decomposition of action is emphasized and used to finesse the exponential costs of planning; states and observations, on the other hand, are often coarse-grained using separate schemes or left fully enumerated (although see Sridharan et al., 2010). In the current formulation, we adopt a hybrid scheme, where at a given level of depth in the hierarchy, observations can be both passed in at the same level (from the generative process), as well as via “inferred” observations from the level below. Note that as with õ, we use s̃ to denote a sequence of hidden states over time, s̃ = (s1, …, sT).

4.1. Hierarchical MDPs

Figure 4 summarizes the structure of a generic two-layer hierarchical POMDP model, outlining relationships between random variables via a Bayesian graph and their (factorized, categorical) forms in the left panel. In the left panel of Figure 4, õ and s̃ indicate sequences of observations and states over time. In the MDP model, the probability distributions that involve these sequences are expressed in a factorized fashion. The model's beliefs about how hidden states cause observations õ(i) are expressed as multidimensional arrays in the likelihood matrix A(i),m, where i indicates the index of the hierarchical level and m indicates a particular modality (Mirza et al., 2016; Friston et al., 2017d). The (x, y) entry of a likelihood matrix A(i),m prescribes the probability of observing the outcome x under the modality m at level i, given hidden state y. In this way, the columns of the A matrices are conditional categorical distributions over outcomes, given the hidden state indexed by the column. The dynamics that describe how hidden states at a given level s(i) evolve over time are given by Markov transition matrices B(i),n(u), which express how likely the next state is given the current state; in the generative model, this is equivalent to the transition distribution P(st|st−1, ut). Here n indexes a particular factor of level i's hidden states, and u indexes a particular control state or action. Actions in this scheme are thus treated as controlled transitions between hidden states. We assume that the posterior distribution over different dimensions of hidden states factorizes, leading to conditional independence between separate hidden state factors. This is known as the mean-field approximation, and allows the sufficient statistics of posterior beliefs about different hidden state variables to be updated separately (Feynman, 1998). 
This results in a set of relatively simple update equations for posterior beliefs and is also consistent with known features of neuroanatomy, e.g., functional segregation in the brain (Felleman and Van Essen, 1991; Ungerleider and Haxby, 1994; Friston and Buzsáki, 2016; Mirza et al., 2016; Parr and Friston, 2018a). The hierarchical MDP formulation notably permits a segregation of timescales across layers and an according mean-field approximation on their respective free energies, such that multiple time steps of belief-updating at one level can unfold within a single time step of inference at the level above. In this way, low-level beliefs about hidden states (and policies) can be accumulated over time at a lower layer, at which point the final posterior estimate about hidden states is passed “up” as an inferred outcome to the layer above. Subsequent layers proceed at their own characteristic (slower) timescales (Friston et al., 2017d) to update beliefs about hidden states at their respective levels. Before we describe the particular form of the hierarchical MDP used (as both the generative process and generative model) for deep scene construction, we provide a brief technical overview of the update scheme used to solve POMDPs with active inference.
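The roles of the likelihood and transition arrays can be illustrated with a single-level, single-factor example. The sketch below assumes a hypothetical two-state model with two actions ("stay" and "switch") and uses exact Bayesian filtering for one hidden state factor. This is a simplification chosen for clarity and is not the variational update scheme used in this work; all array values and function names are illustrative.

```python
import numpy as np

# Likelihood: A[o, s] = P(o_t = o | s_t = s); each column is a categorical
# distribution over outcomes.
A = np.array([[0.9, 0.1],
              [0.1, 0.9]])

# Transitions: B[:, :, u] = P(s_t | s_{t-1}, u) for each control state u.
# Hypothetical actions: u = 0 keeps the state, u = 1 switches it.
B = np.stack([np.eye(2), np.array([[0.0, 1.0],
                                   [1.0, 0.0]])], axis=-1)


def update_belief(belief, action, observation):
    """One step of exact Bayesian filtering for a single hidden state factor."""
    predicted = B[:, :, action] @ belief          # prior for the current step
    unnormalized = A[observation, :] * predicted  # weight by the likelihood
    return unnormalized / unnormalized.sum()      # posterior over states


belief = np.array([0.5, 0.5])                     # flat initial beliefs
belief = update_belief(belief, action=0, observation=0)
print("Posterior after one observation:", belief)

# Repeated sampling under the same action accumulates evidence over time.
accumulated = belief.copy()
for _ in range(3):
    accumulated = update_belief(accumulated, action=0, observation=0)
print("Posterior after four observations:", accumulated)
```

In a hierarchical setting, a posterior accumulated in this way at the lower level would be passed up as an inferred outcome for the level above, which then performs its own (slower) update.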

Figure 4

A partially-observed Markov Decision Process with two hierarchical layers. Schematic overview of the generative model for a hierarchical partially-observed Markov Decision Process. The generic forms of the likelihoods, priors, and posteriors at hierarchical levels are provided in the left panels, adapted with permission from Friston et al. (2017d). Cat(x) indicates a categorical distribution, and x̃ indicates a discrete sequence of states or random variables: x̃ = (x1, x2, …, xT). Note that priors at the highest level (Level 2) are not shown, but are unconditional (non-empirical) priors, and their particular forms for the scene construction task are described in the text. As shown in the “Empirical Priors” panel, prior preferences at lower levels can be a function of states at level i + 1, but this conditioning of preferences is not necessary, and in the current work we pre-determine prior preferences at lower levels, i.e., they are not contextualized by states at higher levels (see Figure 8). Posterior beliefs about policies are given by a softmax function of the expected free energy of policies at a given level. The approximate (variational) beliefs over hidden states are represented via a mean-field approximation of the full posterior, such that hidden states can be encoded as the product of marginal distributions. Factorization of the posterior is assumed across hierarchical layers, across hidden state factors (see the text and Figures 6, 7 for details on the meanings of different factors), and across time. “Observations” at the higher level (õ(2)) may belong to one of two types: (1) observations that directly parameterize hidden states at the lower level via the composition of the observation likelihood one level up, P(o(i + 1)|s(i + 1)), with the empirical prior or “link function” P(s(i)|o(i + 1)) at the level below, and (2) observations that are directly sampled at the same level from the generative process (and accompanying likelihood of the generative model P(o(i + 1)|s(i + 1))).
For conciseness, we represent the first type of mapping, from states at i + 1 to states at i through a direct dependency in the Bayesian graphical model in the right panel, but the reader should note that in practice this is achieved via the composition of two likelihoods: the observation likelihood at level i + 1 and the link function at level i. This composition is represented by a single empirical prior P(s(i)|s(i + 1)) = Cat(D(i)) in the left panel. In contrast, all observations at the lowest level (õ(1)) feed directly from the generative process to the agent.

4.1.1. Belief Updating

Figure 5 provides a schematic overview of the belief update equations for state estimation and policy inference under active inference. For the sake of clarity, here we only consider a single “layer” of a POMDP generative model, i.e., we don't include the top-down or bottom-up beliefs that parameterize priors over hidden states (from the layer above) or inferred observations (from the layer below). Note that in this formulation, instead of directly evaluating the solution for states with lowest free energy s*, we use a marginal message passing routine to perform a gradient descent on the variational free energy at each time step, where posterior beliefs about hidden states and policies are incremented using prediction errors ε (see Figure 5 legend for more details). In the context of deep temporal models, these equations proceed independently at each level of the hierarchy at each time step. At lower levels, the posterior over certain hidden state factors at the first timestep can be initialized as the “expected observations” o(i+1) from the level above, and “inferred observations” at higher levels are inherited as the final posterior beliefs over the corresponding hidden state at lower levels. This update scheme may sound complicated; however, when expressed as a gradient descent on free energy, with respect to the sufficient statistics of beliefs about expected states, it reduces to a remarkably simple scheme that bears resemblance to neuronal processing: see Friston et al. (2015) for details. Importantly, the mean-field factorization of the generative model across hierarchical layers allows the belief updating to occur in isolation at each layer of the hierarchy, such that only the final posterior beliefs at one layer need to be passed to the layer above. The right side of Figure 5 shows a simple schematic of how the particular random variables that make up the generative model might correspond to neural processing in known brain regions.
Evidence for the sort of hierarchical processing entailed by such generative models abounds in the brain, and is the subject of a wealth of empirical and theoretical neuroscience research (Lee and Mumford, 2003; Friston, 2008; Hasson et al., 2008; Friston et al., 2017c; Runyan et al., 2017; Pezzulo et al., 2018).
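The following sketch illustrates the idea of updating beliefs by descending a prediction error, under strong simplifying assumptions: a single hidden state factor, a single observation, and no forward or backward messages across time (so it is not the full marginal message passing routine). The function name `infer_state`, the step size, and the toy likelihood are hypothetical. The prediction error ε is the difference between the optimal log posterior ln s* and the current log posterior, and the fixed point coincides with the exact Bayesian posterior.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

def infer_state(A, prior, obs_index, step=0.25, tol=1e-8, max_iter=1000):
    """Gradient-style belief update for one categorical hidden state."""
    tiny = 1e-16
    # Unnormalized log of the optimal solution: ln s* = ln A[o, :] + ln prior.
    log_target = np.log(A[obs_index, :] + tiny) + np.log(prior + tiny)
    v = np.log(prior + tiny)               # log-beliefs, initialized at the prior
    for _ in range(max_iter):
        s = softmax(v)
        eps = log_target - np.log(s + tiny)   # state prediction error
        eps -= eps.mean()                     # drop the normalization constant
        if np.abs(eps).max() < tol:           # convergence criterion (eps ~ 0)
            break
        v += step * eps                       # increment beliefs by the error
    return softmax(v)

# Toy example: 4 motion directions, each observed correctly with probability 0.7.
A = np.full((4, 4), 0.1) + 0.6 * np.eye(4)
prior = np.full(4, 0.25)
posterior = infer_state(A, prior, obs_index=1)
```

Setting `step=1.0` would jump to the fixed point in a single iteration, which corresponds to equating the posterior with the optimal solution directly rather than descending toward it.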

Figure 5

Belief-updating under active inference. Overview of the update equations for posterior beliefs under active inference. (A) Shows the optimal solution for posterior beliefs about hidden states s* that minimizes the variational free energy of observations. In practice the variational posterior over states is computed as a marginal message passing routine (Parr et al., 2019), where prediction errors ε are minimized over time until some criterion of convergence is reached (ε ≈ 0). The prediction errors measure the difference between the current log posterior over states and the optimal solution ln s*. Solving via error-minimization lends the scheme a degree of biological plausibility and is consistent with process theories of neural function like predictive coding (Bastos et al., 2012; Bogacz, 2017). An alternative scheme would be equating the marginal posterior over hidden states (for a given factor and/or timestep) to the optimal solution s*; this is achieved by solving for s* when free energy is at its minimum (for a particular marginal), i.e., ∂F/∂s = 0. This corresponds to a fixed-point minimization scheme (also known as coordinate-ascent iteration), where each conditional marginal is iteratively fixed to its free-energy minimum, while holding the remaining marginals constant (Blei et al., 2017). (B) Shows how posterior beliefs about policies are a function of the free energy of states expected under policies F and the expected free energy of policies G. F is a function of state prediction errors and expected states, and G is the expected free energy of observations under policies, shown here decomposed into the KL divergence between expected and preferred observations or risk (DKL[Q(oτ|π)‖P(oτ)]) and the expected entropy or ambiguity (EQ(sτ|π)[H[P(oτ|sτ)]]). A precision parameter γ scales the expected free energy and serves as an inverse temperature parameter for a softmax normalization σ of policies. See the text (Section 4.1.1) for more clarification on the free energy of policies F.
(C) Shows how actions are sampled from the posterior over policies, and the posterior over states is updated via a Bayesian model average, where expected states are averaged under beliefs about policies. Finally, expected observations are computed by passing expected states through the likelihood of the generative model. The right side shows a plausible correspondence between several key variables in an MDP generative model and known neuroanatomy. For simplicity, a hierarchical generative model is not shown here, but one can easily imagine a hierarchy of state inference that characterizes the recurrent message passing between lower-level occipital areas (e.g., primary visual cortex) through higher level visual cortical areas, and terminating in “high-level,” prospective and policy-conditioned state estimation in areas like the hippocampus. We note that it is an open empirical question, whether various computations required for active inference can be localized to different functional brain areas. This figure suggests a simple scheme that attributes different computations to segregated brain areas, based on their known function and neuroanatomy (e.g., computing the expected free energy of actions (G), speculated to occur in frontal areas).

We also find it worthwhile to clarify the distinction between the variational free energy of policies F(π) and the expected free energy of policies G(π), both of which are needed to compute the posterior over policies Q(π). The final posterior probability over policies is a softmax function of both quantities (see Figure 5B). The former can be seen as the evidence, afforded by past and ongoing observations, that a given policy is currently being pursued. The latter is the evidence expected to be gathered in favor of pursuing a given policy, where this expected evidence is biased by prior beliefs about what kinds of observations the agent is likely to encounter (via the prior preferences C). Starting with the definition of the free energy of the (approximate) posterior over both hidden states and policies:

Here, ln P(π) = −G(π) is a prior of the generative model that encodes the self-consistent belief that the log prior probability of a policy is given by its negative expected free energy, so that P(π) is proportional to exp(−G(π)). Please see the Appendix for a fuller derivation of Equation (10). Note that (due to the factorization of the approximate posterior over time, cf. Section 2.2) the variational free energy of a policy F(π) is the sum of the individual free energies for a given policy afforded by past observations, up to and including the current observation:

The Iverson brackets [τ ≤ t] return 1 if τ ≤ t and 0 otherwise.
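As a numerical illustration of how the two quantities combine, the sketch below assumes the commonly used form Q(π) = σ(−F(π) − γG(π)), with F(π) accumulated over past time steps through the Iverson bracket [τ ≤ t]. The function name `policy_posterior`, the 0-based time index, and all numbers are hypothetical; the per-step free energies F(π, τ) and the expected free energies G(π) are taken as given rather than computed from a generative model.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - np.max(v))
    return e / e.sum()

def policy_posterior(F_per_step, G, t, gamma=1.0):
    """Posterior over policies from accumulated and expected free energy.

    F_per_step: array (n_policies, T) of per-step free energies F(pi, tau).
    G:          array (n_policies,) of expected free energies.
    t:          current time index (0-based); later steps are masked out.
    """
    T = F_per_step.shape[1]
    mask = (np.arange(T) <= t).astype(float)    # Iverson bracket [tau <= t]
    F = (F_per_step * mask).sum(axis=1)          # evidence from past observations
    return softmax(-F - gamma * G), F

# Two policies, three time steps; only the first two steps have been observed.
F_per_step = np.array([[1.0, 1.0, 5.0],
                       [2.0, 2.0, 0.0]])
G = np.array([1.0, 0.5])
q_pi, F = policy_posterior(F_per_step, G, t=1)
```

In this toy case the first policy has the lower accumulated free energy (F = 2 versus 4), which outweighs its slightly higher expected free energy, so it receives the larger posterior probability.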

4.2. From Motion Discrimination to Scene Construction: A Nested Inference Problem

We now introduce the deep, temporal model of scene construction using the task discussed in Section 3 as our example (Figure 6). We formulate perception and action with a hierarchical POMDP consisting of two distinct layers that are solved via active inference. The first, shallowest level (Level 1) is an MDP that updates posterior beliefs about the most likely cause of visual stimulation (RDM direction), where we model the ongoing contents of single fixations—the stationary periods of relative retinal-stability between saccadic eye movements. This inference is achieved with respect to the (spatially-local) visual stimuli underlying current foveal observations. A binary policy is also implemented, encoding the option to continue holding fixation (and thus keep sampling the current stimulus) or to interrupt sampling and terminate updates at the lower level. The second, higher level (Level 2) is another MDP that performs inference at a slower timescale, with respect to the overarching hidden scene that describes the current trial. Here, we enable policies that realize visual foraging. These policies encode controlled transitions between different states of the oculomotor system, serving as a model of saccadic eye movements to different parts of the visual array. This method of encoding saccades as controlled transitions between locations is inspired by the original scene construction formulation in Mirza et al. (2016). We will now discuss both layers individually and translate different elements of the MDP generative model and environment to task-relevant parameters and the beliefs of the agent.

Figure 6

Level 1 MDP. Level 1 of the hierarchical POMDP for scene construction (see Section 4.2.1 for details). Level 1 directly interfaces with stochastic motion observations generated by the environment. At this level hidden states correspond to: (1) the true motion direction s(1),1 underlying visual observations at the currently-fixated region of the visual array and (2) the sampling state s(1),2, an aspect of the environment that can be changed via actions, i.e., selections of the appropriate state transition, as encoded in the B matrix. The first hidden state factor s(1),1 can either correspond to a state with no motion signal (“Null,” in the case when there is no RDM or a categorization decision is being made) or assume one of the four discrete values corresponding to the four cardinal motion directions. At each time step of the generative process, the current state of the RDM stimulus s(1),1 is probabilistically mapped to a motion observation via the first-factor likelihood A(1),1 (shown in the top panel as ARDM). The entropy of the columns of this mapping can be used to parameterize the coherence of the RDM stimulus, such that the true motion states s(1),1 cause motion observations o(1),1 with varying degrees of fidelity. This is demonstrated by two exemplary ARDM state matrices in the top panel (these correspond to A(1),1): the left-most matrix shows a noiseless, “coherent” mapping, analogized to the situation of when an RDM consists of all dots moving in the same direction as described by the true hidden state; the matrix to the right of the noiseless mapping corresponds to an incoherent RDM, where instantaneous motion observations may assume directions different than the true motion direction state, with the frequency of this deviation encoded by probabilities stored in the corresponding column of ARDM. 
The motion direction state doesn't change in the course of a trial (see the identity matrix shown in the top panel as BRDM, which simply maps the hidden state to itself at each subsequent time step)—this is true of both the generative model and the generative process. The second hidden state factor s(1),2 encodes the current “sampling state” of the agent; there are two levels under this factor: “Keep-sampling” or “Break-sampling.” This sampling state (a factor of the generative process) is directly represented as a control state in the generative model; namely, the agent can change it by sampling actions (B-matrix transitions) from the posterior beliefs about policies. The agent believes that the “Break-sampling” state is a sink in the transition dynamics, such that once it is entered, it cannot be exited (see the right-most matrix of the transition likelihood BSampling state). Entering the “Break-sampling” state terminates the POMDP at Level 1. The “Keep-sampling” state enables the continued generation of motion observations as samples from the likelihood mapping A(1),1. A(1),2 (the “proprioceptive” likelihood, not shown for clarity) deterministically maps the current sampling state s(1),2 to an observation o(1),2 thereof (bottom row of lower right panel), so that the agent always observes which sampling state it is in unambiguously.

4.2.1. Level 1: Motion Discrimination via Motion Sampling Over Time

Lowest level (Level 1) beliefs are updated as the agent encounters a stream of ongoing, potentially ambiguous visual observations—the instantaneous contents of an individual fixation. The hidden states at this level describe a distribution over motion directions, which parameterize the true state of the random motion stimulus within the currently-fixated quadrant. Observations manifest as a sequence of stochastic motion signals that are samples from the true hidden state distribution.

The generative model has the same form as the generative process (see above) used to generate the stream of Level 1 outcomes. Namely, it comprises the same set of likelihoods and transitions as the dynamics describing the “real” environment (Figure 6). In order to generate a motion observation, we sample the probability distribution over motion direction given the true hidden state using the Level 1 generative process likelihood matrix A(1),1. For example, if the current true hidden state at the lower level is 2 (implying that an RDM stimulus of UPwards motion occupies the currently fixated quadrant), stochastic motion observations are sampled from the second column of the generative likelihood mapping A(1),1. The precision of this column-encoded distribution over motion observations determines how often the sampled motions will be UPwards signals and thus consistent with the true hidden state. The entropy or ambiguity of this likelihood mapping operationalizes sensory uncertainty and, in this case, motion incoherence. For more details on how states and outcomes are discretized in the generative process, see Figure 6 and its legend.
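The relationship between column precision, entropy, and sampled motion observations can be sketched as follows. This is an illustrative parameterization, not necessarily the one used in the simulations: it assumes that coherence is controlled by a single softmax precision applied to an identity mapping, that the same precision applies to every column (including “Null”), and that states are ordered [Null, UP, RIGHT, DOWN, LEFT]. The helper names are hypothetical.

```python
import numpy as np

def softmax_columns(x):
    e = np.exp(x - x.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def make_rdm_likelihood(precision, n_states=5):
    """Column-stochastic likelihood; higher precision means a more coherent RDM."""
    return softmax_columns(precision * np.eye(n_states))

def column_entropy(A):
    """Shannon entropy (in nats) of each column of a likelihood matrix."""
    return -(A * np.log(A)).sum(axis=0)

rng = np.random.default_rng(0)
A_coherent = make_rdm_likelihood(precision=4.0)   # nearly noiseless mapping
A_noisy = make_rdm_likelihood(precision=0.5)      # incoherent mapping

UP = 1  # 0-based index of the second hidden state (UPwards motion)
# Motion observations are samples from the column of the true hidden state.
samples = rng.choice(5, size=200, p=A_noisy[:, UP])
```

Lowering the precision flattens each column, raising its entropy, so a larger fraction of sampled motion signals disagrees with the true direction.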

Inference about the motion direction (Level 1 state estimation) roughly proceeds as follows: (1) at time t a motion observation is sampled from the generative process A(1),1; (2) posterior beliefs about the motion direction at the current timestep are updated using a gradient descent on the variational free energy. In addition, we included a second, controllable hidden state factor at Level 1 that we refer to as the abstract “sampling state” of the agent. We include this in order to enable policies at this level, which entail transitions between the two possible values of this control state. These correspond to the choice to either keep sampling the current stimulus or break sampling. These policies are stored as two 2 × 2 transition matrices in B(1),2, where each transition matrix B(1),2(u) encodes the probability of transitioning to “Keep-sampling” or “Break-sampling,” given an action u and occupancy in one of the two sampling states. Note that these policies only consider actions at the next time step, meaning that the policy-space is identical to the action-space (there is no sequential aspect to the policies). Selecting the first action keeps the Level 1 MDP in the “Keep-sampling” state, triggering the generation of another motion observation from the generative process. Engaging the second “Break-sampling” policy moves the agent's sampling regime into the second state and terminates any further updates at Level 1. At this point the latest posterior beliefs from Level 1 are sent up as observations for Level 2. It is worth noting that implementing “breaking” the MDP at the lower level as an explicit policy departs from the original formulation of deep, temporal active inference. In the formulation developed in Friston et al. (2017d), termination of lower level MDPs occurs once the entropy of the lower-level posterior over the hidden states (only those factors that are linked with the level above) is minimized beyond a fixed value.
We chose to treat breaking the first level MDP as an explicit policy in order to formulate behavior in terms of the same principles that drive action selection at the higher level—namely, the expected free energy of policies. In the Simulations section we explore how the dynamic competition between the “Break-” and “Keep-sampling” policies induces an unexpected distribution of break latencies.

We fixed the maximum temporal horizon of Level 1 (hereafter T1) to be 20 time steps, such that if the “Break-sampling” policy is not engaged before t = 20 (implying that “Keep-sampling” has been selected the whole time), Level 1 automatically terminates after the 20th time step and the final posterior beliefs are passed up as outcomes for Level 2.
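The control flow of a Level 1 episode can be sketched as below, with two deliberate simplifications that are assumptions of this example rather than features of the model: beliefs are accumulated by exact Bayesian updating instead of variational message passing, and the action is supplied by a hypothetical callback `choose_action` instead of being sampled from a posterior over policies based on expected free energy. The sketch shows the two 2 × 2 sampling-state transition matrices, the “Break-sampling” sink, and the cap at T1 = 20 time steps.

```python
import numpy as np

KEEP, BREAK = 0, 1
T1 = 20  # maximum temporal horizon of Level 1

# Sampling-state transitions, indexed as B_sampling[next, current, action].
B_sampling = np.zeros((2, 2, 2))
B_sampling[:, :, KEEP] = [[1, 0],
                          [0, 1]]    # Keep action: Keep stays Keep, Break stays Break
B_sampling[:, :, BREAK] = [[0, 0],
                           [1, 1]]   # Break action: always ends in Break-sampling

def run_level1(choose_action, A, true_state, prior, rng, horizon=T1):
    """Accumulate evidence until Break-sampling is entered or the horizon is hit."""
    posterior = prior.copy()
    sampling_state = KEEP
    for t in range(1, horizon + 1):
        obs = rng.choice(A.shape[0], p=A[:, true_state])   # new motion observation
        posterior = posterior * A[obs, :]                   # Bayesian evidence update
        posterior /= posterior.sum()
        action = choose_action(posterior, t)                # stand-in for policy selection
        sampling_state = int(np.argmax(B_sampling[:, sampling_state, action]))
        if sampling_state == BREAK:
            break
    return posterior, t   # final beliefs are what Level 2 receives as outcomes

rng = np.random.default_rng(1)
A = np.full((5, 5), 0.1) + 0.5 * np.eye(5)    # toy likelihood, columns sum to 1
prior = np.full(5, 0.2)
post_keep, steps_keep = run_level1(lambda q, t: KEEP, A, 1, prior, rng)
post_break, steps_break = run_level1(lambda q, t: BREAK, A, 1, prior, rng)
```

An agent that always keeps sampling runs for the full 20 steps, while one that breaks immediately passes up beliefs formed from a single observation.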

4.2.2. Level 2: Scene Inference and Saccade Selection

After beliefs about the state of the currently-foveated visual region are updated via active inference at Level 1, the resulting posterior belief about motion directions is passed up to Level 2 as a belief about observations. These observations (which can be thought of as the inferred state of the visual stimulus at the foveated area) are used to update the statistics of posterior beliefs over the hidden states operating at Level 2 (specifically, the hidden state factor that encodes the identity of the scene, e.g., UP-RIGHT). Hidden states at Level 2 are segregated into two factors, with corresponding posterior beliefs about them updated independently.

The first hidden state factor corresponds to the scene identity. As described in Section 3, there are four possible scenes characterizing a given trial: UP-RIGHT, RIGHT-DOWN, DOWN-LEFT, and LEFT-UP. The scene determines the identities of the two RDMs hidden among the four quadrants, e.g., when the scene is UP-RIGHT, one UPwards-moving RDM is found in one of the four quadrants, and a RIGHTwards-moving RDM is found in another quadrant. Which quadrants are occupied by RDMs for a given scene is random, meaning that agents have to forage the 2 × 2 array for the RDMs in order to infer the scene. We encode the scene identities as well as their “spatial permutability” (with respect to quadrant-occupancy) by means of a single hidden state factor that exhaustively encodes the unique combinations of scenes and their spatial configurations. This first hidden state factor is thus a 48-dimensional state distribution (4 scenes × 12 possible spatial configurations; see Figure 7 for visual illustration).
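The count of 48 states follows from placing two distinct RDMs in two of four quadrants (4 × 3 = 12 ordered placements) for each of the 4 scenes. The sketch below enumerates these combinations and converts between a flat, 1-based state index and a (scene, configuration) pair. The scene-major ordering is an assumption chosen so that state 32 decodes to DOWN-LEFT under configuration 8, as in the Figure 7 legend; the order in which `itertools.permutations` lists the 12 configurations is arbitrary and need not match the paper's numbering of quadrant pairs.

```python
from itertools import permutations

SCENES = ["UP-RIGHT", "RIGHT-DOWN", "DOWN-LEFT", "LEFT-UP"]
# Ordered pairs (quadrant of first RDM, quadrant of second RDM): 4 * 3 = 12.
CONFIGS = list(permutations(range(4), 2))

def decode_state(index):
    """Map a 1-based state index to (scene name, 1-based configuration number)."""
    scene, config = divmod(index - 1, len(CONFIGS))
    return SCENES[scene], config + 1

def encode_state(scene_name, config_number):
    """Map (scene name, 1-based configuration number) to a 1-based state index."""
    return SCENES.index(scene_name) * len(CONFIGS) + config_number

n_states = len(SCENES) * len(CONFIGS)
example = decode_state(32)
```

Folding scene and configuration into one factor keeps the likelihood mapping deterministic, at the cost of a larger state space than two separate factors would require.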

Figure 7

Level 2 MDP. Level 2 of the hierarchical POMDP for scene construction. Hidden states consist of two factors, one encoding the scene identity and another encoding the eye position (i.e., current state of the oculomotor system). The first hidden state factor s(2),1 encodes the scene identity of the trial in terms of which two unique RDM directions occupy two of the quadrants (four possible scenes as described in the top right panel) and their spatial configuration (one of 12 unique ways to place two RDMs in four quadrants). This yields a dimensionality of 48 for this hidden state factor (4 scenes × 12 spatial configurations). The second hidden state factor s(2),2 encodes the eye position, which is initialized to be in the center of the quadrants (Location 1). The next four values of this factor index the four quadrants (2–5), and the last four (6–9) are indices for the choice locations (the agent fixates one of these four options to guess the scene identity). As with the sampling state factor at Level 1, the eye position factor s(2),2 is controllable by the agent through the action-dependent transition matrices B(2),2. Outcomes at Level 2 are characterized by three modalities: the first modality o(2),1 indicates the visual stimulus (or lack thereof) at the currently-fixated location. Note that during belief updating, the observations of this modality o(2),1 are inferred hidden states over motion directions that are passed up after solving the Level 1 MDP (see Figure 6). An example likelihood matrix for this first modality is shown in the upper left, showing the conditional probabilities for visual outcomes when the 1st factor hidden state has the value 32. This corresponds to the scene identity DOWN-LEFT under spatial configuration 8 (the RDMs occupy quadrants indexed as Locations 2 and 4).
The last two likelihood arrays A(2),2 and A(2),3 map to respective observation modalities o(2),2 and o(2),3, and are not shown for clarity; the A(2),2 likelihood encodes the joint probability of particular types of trial feedback (Null, Correct, Incorrect—encoded by o(2),2) as a function of the current hidden scene and the location of the agent's eyes, while A(2),3 is an unambiguous proprioceptive mapping that signals to the agent the location of its own eyes via o(2),3. Note that these two last observation modalities o(2),2 and o(2),3 are directly sampled from the environment, and are not passed up as “inferred observations” from Level 1.

The second hidden state factor corresponds to the current spatial position that's being visually fixated—this can be thought of as a hidden state encoding the current configuration of the agent's eyes. This hidden state factor has nine possible states: the first state corresponds to an initial position for the eyes (i.e., a fixation region in the center of the array); the next four states (indices 2–5) correspond to the fixation positions of the four quadrants in the array, and the final four states (6–9) correspond to categorization choices (i.e., a saccade which reports the agent's guess about the scene identity). The states of the first and second hidden state factors jointly determine which observation is sampled at each timestep on Level 2.

Observations at this level comprise three modalities. The first modality encodes the identity of the visual stimulus at the fixated location and is identical in form to the first hidden state factor at Level 1: namely, it can be either the “Null” outcome (when there is no visual stimulus at the fixated location) or one of the four motion directions. The likelihood matrix for the first-modality on Level 2, namely A(2),1, consists of probabilistic mappings from the scene identity /spatial configuration (encoded by the first hidden state factor) and the current fixation location (the second hidden state factor) to the stimulus identity at the fixated location, e.g., if the scene is UP-RIGHT under the configuration where the UPwards-moving RDM is in the upper left quadrant and the RIGHTwards-moving RDM is in the upper right quadrant and the current fixation location (the second hidden state) is the upper left quadrant, then the likelihood function will determine the first-modality observation at Level 2 to be UP. When the agent is fixating either an empty quadrant, the starting fixation location, or one of the response options (locations 6–9), the observation in the first modality is Null. The likelihood functions are deterministic and identical in both the generative model and generative process—this imbues the agent with a kind of “prior knowledge” of the (deterministic) mapping between the scenes and their respective visual manifestations in the 2 × 2 grid. The second observation modality is a ternary variable that returns feedback to the agent based on its scene categorization performance—it can assume the values of “No Feedback,” “Correct,” or “Incorrect.” Including this observation modality (and prior beliefs about the relative probability of its different values) allows us to endow agents with the drive to report their guess about the scene, and to do so accurately in order to maximize the chance of receiving correct feedback. 
The likelihood mapping for this modality A(2),2 is structured to return a “No Feedback” outcome in this modality when the agent fixates any area besides the response options, and returns “Correct” or “Incorrect” once the agent makes a saccade to one of the response options (locations 6–9)—the particular value it takes depends jointly on the true hidden scene and the scene identity that the agent has guessed. We will further discuss how a drive to respond accurately emerges when we describe the prior beliefs parameterized by the C and D arrays. The final observation modality at Level 2 is a proprioceptive mapping (similar to “sampling-state” modality at Level 1) that unambiguously signals which location the agent is currently fixating via a 9 × 9 identity matrix A(2),3.
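The three Level 2 likelihood arrays described above can be assembled as in the following sketch. Several details are assumptions made for illustration: indices are 0-based (location 0 is the central fixation, 1–4 are quadrants, 5–8 are choice locations), choice location 5 + k is taken to report scene k, and the ordering of spatial configurations comes from `itertools.permutations` rather than from the paper. The array names `A_what`, `A_feedback`, and `A_where` are hypothetical.

```python
import numpy as np
from itertools import permutations

DIRECTIONS = ["Null", "UP", "RIGHT", "DOWN", "LEFT"]
SCENES = [("UP", "RIGHT"), ("RIGHT", "DOWN"), ("DOWN", "LEFT"), ("LEFT", "UP")]
CONFIGS = list(permutations(range(4), 2))   # quadrants of (first RDM, second RDM)
N_STATES, N_LOCS = len(SCENES) * len(CONFIGS), 9
NO_FEEDBACK, CORRECT, INCORRECT = 0, 1, 2

A_what = np.zeros((len(DIRECTIONS), N_STATES, N_LOCS))   # stimulus identity
A_feedback = np.zeros((3, N_STATES, N_LOCS))             # trial feedback
A_where = np.eye(N_LOCS)                                 # proprioceptive mapping

for state in range(N_STATES):
    scene, config = divmod(state, len(CONFIGS))
    for loc in range(N_LOCS):
        outcome = 0                                      # "Null" unless an RDM is fixated
        if 1 <= loc <= 4 and (loc - 1) in CONFIGS[config]:
            which_rdm = CONFIGS[config].index(loc - 1)
            outcome = DIRECTIONS.index(SCENES[scene][which_rdm])
        A_what[outcome, state, loc] = 1.0

        if loc >= 5:                                     # a categorization saccade
            feedback = CORRECT if loc - 5 == scene else INCORRECT
        else:
            feedback = NO_FEEDBACK
        A_feedback[feedback, state, loc] = 1.0
```

For state 0 (UP-RIGHT, with the UP stimulus in quadrant 0 and the RIGHT stimulus in quadrant 1), fixating location 1 yields UP, location 2 yields RIGHT, and every other location yields Null.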

The transition matrices at Level 2, namely B(2),1 and B(2),2, describe the dynamics of the scene identity and of the agent's oculomotor system, respectively. We assume the dynamics that describe the scene identity are both uncontrolled and unchanging, and thus fix B(2),1 to be an identity matrix that ensures the scene identity/spatial configuration is stable over time. As in earlier formulations (Friston et al., 2012a; Mirza et al., 2016, 2019a) we model saccadic eye movements as transitions between control states in the 2nd hidden state factor. The dynamics describing the eye movement from the current location to a new location are encoded by the transition array B(2),2 (e.g., if the action taken is 3 then the saccade destination is described by a transition matrix whose third row consists entirely of 1s, mapping from any previous location to location 3).
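A minimal sketch of these two transition arrays follows, assuming 0-based indices (so the paper's action 3 is index 2 here) and the layout [next state, current state, action]. The names `B_scene` and `B_where` are hypothetical.

```python
import numpy as np

N_SCENE_STATES, N_LOCS = 48, 9

B_scene = np.eye(N_SCENE_STATES)             # scene identity never changes

B_where = np.zeros((N_LOCS, N_LOCS, N_LOCS))  # [next, current, action]
for action in range(N_LOCS):
    B_where[action, :, action] = 1.0          # a full row of 1s: go to `action`

# Whatever the agent believes about its current location, taking action 2
# (location 3 in 1-based terms) puts all probability on that destination.
belief = np.full(N_LOCS, 1.0 / N_LOCS)
after_saccade = B_where[:, :, 2] @ belief
```

Because each action's matrix ignores the current location, the saccade destination is fully determined by the chosen action.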

Inference and action selection at Level 2 proceed as follows: based on the current hidden state distribution and Level 2's likelihood mappings A(2),1–A(2),3 (the generative process), observations are sampled from the three modalities. The observation under the first-modality at this level (either “Null” or a motion direction parameterizing an RDM stimulus) is passed down to Level 1 as the initial true hidden state. The agent also generates expectations about the first-modality observations via Q(o(2),1) = A(2),1 Q(st), where A(2),1 is the generative model's likelihood and Q(st) is the latest posterior density over hidden states (factorized into scene identity and fixation location). This predictive density over (first-modality) outcomes serves as an empirical prior for the agent's beliefs about the hidden states in the first factor (motion direction) at Level 1. Belief-updating and policy selection at Level 1 then proceed via active inference using the empirical priors inherited from Level 2 in addition to its own generative model and process (as described in Section 4.2.1). Once the motion observations and belief updating terminate at Level 1, the final posterior beliefs about the 1st factor hidden states are passed to Level 2 as “inferred” observations of the first modality. The belief updating at Level 2 proceeds as usual, where observations (both those “inferred” from Level 1 and the true observations from the Level 2 generative process: the oculomotor state and reward modality) are integrated using Level 2's generative model to form posterior beliefs about hidden states and policies. The policies at this level, like at the lower level, only consider one step ahead in the future, so each policy consists of one action (a saccade to one of the quadrants or a categorization action), to be taken at the next timestep.
An action a_t is sampled from the posterior over policies, a_t ~ Q(π), which changes hidden states in the next time step to generate a new observation, thus closing the action-perception cycle. In this spatiotemporally “deep” version of scene construction, we see how a temporally-extended process of active inference at the lower level (capped at T1 = 20 time steps in our case) can be nested within a single time step of a higher-level process, endowing such generative models with a flexible, modular form of temporal depth. Also note the asymmetry in informational scheduling across layers: posterior beliefs about those hidden states linked with the higher level are passed up as evidence for outcomes at the higher level, whereas observations at the higher level are passed down as empirical priors over hidden states at the lower level.
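The top-down half of this exchange, in which Level 2 beliefs are converted into an empirical prior over Level 1 motion directions, can be sketched with a toy model. The dimensions here (3 outcomes, 2 scene states, 2 locations) and the function name `expected_observations` are assumptions for illustration; in the task itself the first-modality likelihood would have 5 outcomes, 48 scene states, and 9 locations.

```python
import numpy as np

def expected_observations(A, q_scene, q_where):
    """Predictive density over outcomes under factorized beliefs.

    A has shape (n_outcomes, n_scene_states, n_locations); the two belief
    vectors are marginalized out to leave a distribution over outcomes.
    """
    return np.einsum("osl,s,l->o", A, q_scene, q_where)

# Toy likelihood: outcomes [Null, UP, DOWN]; location 0 is the empty center,
# location 1 is a quadrant showing UP under scene 0 and DOWN under scene 1.
A = np.zeros((3, 2, 2))
A[0, :, 0] = 1.0      # center: always Null
A[1, 0, 1] = 1.0      # quadrant under scene 0: UP
A[2, 1, 1] = 1.0      # quadrant under scene 1: DOWN

q_scene = np.array([0.75, 0.25])   # current Level 2 beliefs about the scene
q_where = np.array([0.0, 1.0])     # the agent is fixating the quadrant

# This vector initializes the Level 1 prior over motion directions.
D1_motion = expected_observations(A, q_scene, q_where)
```

Here the agent expects UP with probability 0.75 before sampling any motion, so Level 1 begins its evidence accumulation from a prior that already reflects what Level 2 has learned about the scene.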

4.2.3. Priors

In addition to the likelihood A and B arrays that prescribe the probabilistic relationships between variables at each level, the generative model is also equipped with prior beliefs over observations and hidden states that are respectively encoded in the so-called C and D arrays. See Figure 8 for schematic analogies for these arrays and their elements for the two hierarchical levels.

Figure 8

C's and D's. Prior beliefs over observations and hidden states for both hierarchical levels. Note that superscripts here index the hierarchical level, and separate modalities/factors for the C and D matrices are indicated by stacked circles. At the highest level (Level 2), prior beliefs about second-modality outcomes (C(2),2) encode the agent's beliefs about receiving correct and avoiding incorrect feedback. Prior beliefs over the other outcome modalities (C(2),1 and C(2),3) are all trivially zero. These beliefs are stationary over time and affect saccade selection at Level 2 via the expected free energy of policies G. Prior beliefs about hidden states D(2) at this level encode the agent's initial beliefs about the scene identity and the location of their eyes. This prior over hidden states can be manipulated to put the agent's beliefs about the world at odds with the actual hidden state of the world. At Level 1, the agent's preference for being in the “Break-sampling” state increases over time and is encoded in the preferences about second modality outcomes (C(1),2), which correspond to the agent's unambiguous perception of its own sampling state. Finally, the prior beliefs about initial states at Level 1 (D(1)) correspond to the motion direction hidden state (the RDM identity) as well as which sampling-state the agent is in. Crucially, the first factor of these prior beliefs D(1),1 is initialized as the “expected observations” from Level 2: the expected motion direction (first modality). These expected observations are generated by passing the variational beliefs about the scene at Level 2 through the modality-specific likelihood mapping: Q(o(2),1) = Σ P(o(2),1|s(2),1)Q(s(2),1), with the sum taken over s(2),1. The prior over hidden states at Level 1 is thus called an empirical prior as it is inherited from Level 2. The red arrow indicates the relationship between the expected observation from Level 2 and the empirical prior over (first-factor) hidden states at Level 1.

The C array contains what are often called the agent's “preferences” P(o) and encodes the agent's prior beliefs about observations (an unconditional probability distribution). Rather than being an explicit component of the generative model, the prior over outcomes is absorbed into the prior over policies P(π), which is described in Section 2.2. Policies that are more likely to yield observations that are deemed probable under the prior (expressed in terms of the agent's preferences P(o)) will have lower expected free energy and thus be more likely to be chosen. Instrumental value, or expected utility, measures the degree to which the observations expected under a policy align with prior beliefs about those observations. For categorical distributions, evaluating instrumental value amounts to taking the dot product of the (policy-conditioned) posterior predictive density over observations Q(oτ|π) with the log probability density over outcomes logP(oτ). This reinterpretation of preferences as prior beliefs about observations allows us to discard the classical notion of a “utility function” as postulated in fields like reward neuroscience and economics, instead explaining both epistemic and instrumental behavior using the common currency of log-probabilities and surprise. In order to motivate agents to categorize the scene, we embed a self-expectation of accuracy into the C array of Level 2; this manifests as a high prior expectation of receiving “Correct” feedback (a relative log probability of +2 nats) and an expectation that receiving “Incorrect” feedback is unlikely (a relative log probability of −4 nats). The remaining outcomes of the other modalities at Level 2 have equal log-probability in the agent's prior preferences, thus contributing identically (and uninformatively) to instrumental value.
At Level 1 we encoded a form of “urgency” using the C matrix; we encoded the prior belief that the probability of observing the “Break-sampling” state (via the unambiguous mapping A(1),2) increases over time. This necessitates that the complementary probability of remaining in the “Keep-sampling” state decreases over time. Equipping the Level 1 MDP with such preferences generates a tension between the epistemic drive to resolve uncertainty about the hidden state of the currently-fixated stimulus and the ever-strengthening prior preference to terminate sampling at Level 1. In the simulation results to follow, we explore this tension more explicitly and report an interesting yet unexpected relationship between sensory uncertainty and fixational dwell time, based on the dynamics of various contributions to expected free energy.
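The dot-product form of instrumental value described above can be illustrated with a minimal sketch. It assumes, for simplicity, a feedback modality with only two outcomes (“Correct” and “Incorrect”) and normalizes the relative log-preferences of +2 and −4 nats into a proper log-probability; the function names and the example predictive distributions are hypothetical and are not taken from the simulation code.

```python
import math

# Relative log-preferences (nats) quoted in the text.
# Restricting the modality to two outcomes is a simplifying assumption.
C_FEEDBACK = {"Correct": 2.0, "Incorrect": -4.0}


def log_preferences(relative_log_probs):
    """Normalize relative log-probabilities into log P(o) (a log-softmax)."""
    log_norm = math.log(sum(math.exp(v) for v in relative_log_probs.values()))
    return {o: v - log_norm for o, v in relative_log_probs.items()}


def instrumental_value(predicted_outcomes, log_prior):
    """Dot product of the predictive distribution Q(o|pi) with log P(o)."""
    return sum(q * log_prior[o] for o, q in predicted_outcomes.items())


log_p = log_preferences(C_FEEDBACK)

# Hypothetical predictive distributions under a categorization policy.
confident = {"Correct": 0.9, "Incorrect": 0.1}
uncertain = {"Correct": 0.5, "Incorrect": 0.5}

value_confident = instrumental_value(confident, log_p)
value_uncertain = instrumental_value(uncertain, log_p)
```

Under these assumptions, a policy expected to yield “Correct” feedback with high probability scores a higher instrumental value than one for which the two outcomes are equally likely, because the large negative log-preference for “Incorrect” feedback is weighted less heavily.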

Finally, the D array encodes the agent's initial (prior) beliefs over hidden states in the environment. By changing prior beliefs about the initial states, we can manipulate an agent's beliefs about the environment independently of the true hidden states characterizing that environment. In Section 5.2 below, we describe how we parameterize the first hidden state factor of the Level 2 D matrix to manipulate prior beliefs about the scene. The second hidden state factor at Level 2 (encoding the saccade location) is always initialized to start at Location 1 (the generic “starting” location). At Level 1, the first factor of the D matrix (encoding the true motion direction of an RDM) is initialized to the posterior expectations from Level 2, i.e., the expected first-modality observations Q(o(2),1|s(2),1) = P(o(2),1|s(2),1)Q(s(2),1) (see Figure 8). The second-factor belief about hidden states (encoding the sampling state) is initialized to the “Keep-sampling” state.
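To make the empirical prior concrete, the following sketch passes a belief over scenes through a likelihood mapping to obtain expected motion observations, which then serve as the Level 1 prior over motion directions. The likelihood used here is a toy assumption: it collapses the 48 scene configurations into four scene types and supposes that the fixated quadrant contains the first-named RDM of each scene. The function name and the belief values are likewise hypothetical.

```python
OUTCOMES = ["Null", "UP", "RIGHT", "DOWN", "LEFT"]
SCENES = ["UP-RIGHT", "RIGHT-DOWN", "DOWN-LEFT", "LEFT-UP"]

# Toy Level 2 likelihood P(o | scene) for one fixated quadrant (assumption):
# rows index motion outcomes, columns index scenes.
A2_TOY = [
    [0.0, 0.0, 0.0, 0.0],  # Null
    [1.0, 0.0, 0.0, 0.0],  # UP
    [0.0, 1.0, 0.0, 0.0],  # RIGHT
    [0.0, 0.0, 1.0, 0.0],  # DOWN
    [0.0, 0.0, 0.0, 1.0],  # LEFT
]


def expected_observations(likelihood, state_beliefs):
    """Return sum_s P(o|s) Q(s), one entry per outcome (row of the likelihood)."""
    return [
        sum(p_o_given_s * q_s for p_o_given_s, q_s in zip(row, state_beliefs))
        for row in likelihood
    ]


# Hypothetical Level 2 posterior over the four scene types.
q_scene = [0.7, 0.1, 0.1, 0.1]

# Empirical prior over Level 1 motion-direction states.
D1_motion = expected_observations(A2_TOY, q_scene)
```

In this sketch, a Level 2 belief that favors UP-RIGHT yields a Level 1 prior that favors UP motion at the fixated quadrant, so Level 1 inference starts from the context supplied by Level 2 rather than from a flat prior.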

In the following sections, we present hierarchical active inference simulations of scene construction, in which we manipulate the uncertainty associated with beliefs at different levels of the generative model to see how uncertainty differentially affects inference across levels in uncertain environments.

5. Simulations

Having introduced the hierarchical generative model for our RDM-based scene construction task, we will now explore behavior and belief-formation in the context of hierarchical active inference. In the following sections we study different aspects of the generative model through quantitative simulations. We relate parameters of the generative model to both “behavioral” read-outs (such as sampling time, categorization latency and accuracy) as well as the agents' internal dynamics (such as the evolution of posterior beliefs, the contribution of different kinds of value to policies, etc.). We then discuss the implications of our model for studies of hierarchical inference in noisy, compositionally-structured environments.

5.1. Manipulating Sensory Precision

Figures 9 and 10 show examples of deep active inference agents performing the scene construction task under two levels of motion coherence (high and low, respectively), which is equivalent to the reliability of motion observations at Level 1. In particular, we operationalize this uncertainty via an inverse temperature p that parameterizes a softmax transformation on the columns of the Level 1 likelihood mapping to RDM observations A(1),1. Each column of A(1),1 is initialized as a “one-hot” vector that contains a probability of 1 at the motion observation index corresponding to the true motion direction, and 0s elsewhere. As p decreases, A(1),1 deviates further from the identity matrix and Level 1 motion observations become more degenerate with respect to the hidden state (motion direction) underlying them. Note that this parameterization of motion incoherence only pertains to the last four rows/columns of A(1),1, as the first row/column of the likelihood (A(1),1(1, 1)) corresponds to observations about the “Null” hidden state, which is always observed unambiguously when it is present. In other words, locations that do not contain RDM stimuli are always perceived as “Null” in the first modality with certainty.
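The parameterization just described can be sketched as follows. The sketch assumes that the softmax is applied to p times each one-hot column, restricted to the four motion directions; this convention is an assumption, although it yields a probability of observing the true direction of roughly 0.98 for p = 5.0 and roughly 0.35 for p = 0.5. The function name and the index layout (index 0 for “Null”, indices 1 to 4 for the motion directions) are hypothetical.

```python
import math


def motion_likelihood(p):
    """Build a 5x5 matrix A[o][s] = P(o | s) for the Level 1 motion modality.

    Index 0 is the "Null" state/outcome, which stays unambiguous.
    Indices 1-4 are motion directions, whose one-hot columns are
    passed through a softmax with inverse temperature p.
    """
    n = 5
    A = [[0.0] * n for _ in range(n)]
    A[0][0] = 1.0  # "Null" is always observed with certainty
    for s in range(1, n):
        # One-hot column over the four motion outcomes, scaled by p.
        weights = [math.exp(p if o == s else 0.0) for o in range(1, n)]
        total = sum(weights)
        for o in range(1, n):
            A[o][s] = weights[o - 1] / total
    return A


A_high = motion_likelihood(5.0)  # coherent motion
A_low = motion_likelihood(0.5)   # incoherent motion

p_true_high = A_high[1][1]  # P(observe UP | state UP) at p = 5.0
p_true_low = A_low[1][1]    # P(observe UP | state UP) at p = 0.5
```

As p approaches zero, each motion column approaches a uniform distribution over the four directions, so that a single motion sample carries almost no information about the underlying state.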

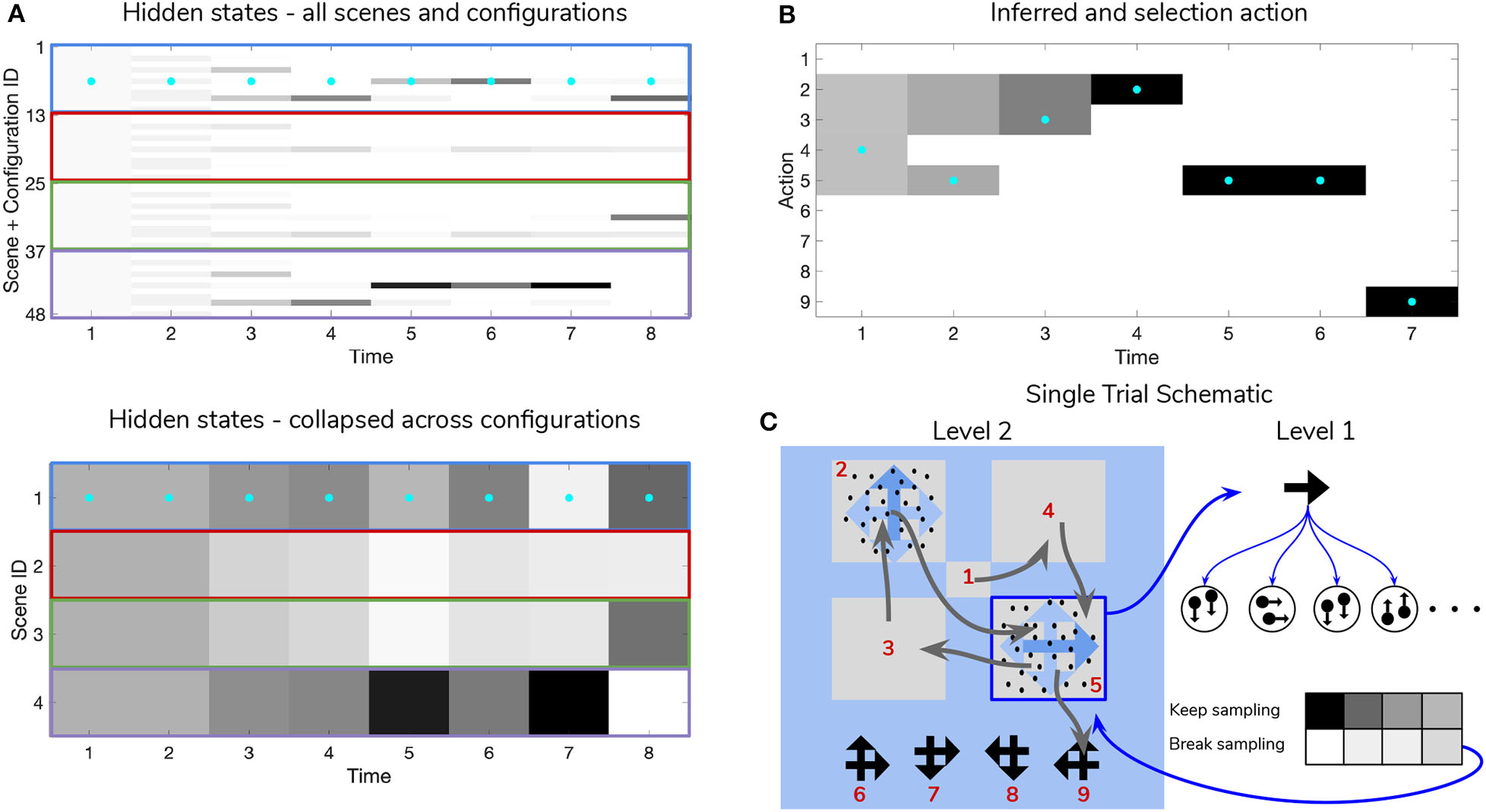

Figure 9

Simulated trial of scene construction under high sensory precision. (A) The evolution of posterior beliefs about scene identity (the first factor of hidden states at Level 2) as a deep active inference agent explores the visual array. In this case, sensory precision at Level 1 is high, meaning that posterior beliefs about the motion direction of each RDM-containing quadrant are resolved easily, resulting in fast and accurate scene categorization. Cells are gray-scale colored according to the probability of the belief for that hidden state and time index (darker colors correspond to higher probabilities). Cyan dots indicate the true hidden state at each time step. The top row of (A) shows evolving beliefs about the fully-enumerated scene identity (48 possibilities), with every 12 configurations highlighted with a differently-colored bounding box, corresponding to beliefs about each type of scene (i.e., UP-RIGHT, RIGHT-DOWN, DOWN-LEFT, LEFT-UP). The bottom panel shows the collapsed beliefs over the four scenes, computed by summing the hidden state beliefs across the 12 spatial configurations. (B) Evolution of posterior beliefs about actions (fixation starting location not shown), culminating in the categorization decision (here, the scene was categorized as UP-RIGHT, corresponding to a saccade to location 6). (C) Visual representation of the agent's behavior for this trial. Saccades are depicted as curved gray lines connecting one saccade endpoint to the next. Fixation locations (corresponding to 2nd factor hidden state indices) are shown as red numbers. The Level 1 active inference process occurring within a single fixation is schematically represented on the right side, with individual motion samples shown as issued from the true motion direction via the low-level likelihood A(1),1. The agent observes the true RDM at Level 1 and updates its posterior beliefs about this hidden state.
As uncertainty about the RDM direction is resolved, the “Break-sampling” action becomes more attractive (since epistemic value contributes increasingly less to the expected free energy of policies). In this case, the sampling process at Level 1 is terminated after only three timesteps, since the precision of the likelihood mapping is high (p = 5.0) which relates to the speed at which uncertainty is resolved about the RDM motion direction—see the text for more details.

Figure 10

Simulated trial of scene construction with low sensory precision. Same as in Figure 9, except in this trial the precision of the mapping between RDM motion directions and samples thereof is lower, p = 0.5. This leads to an incorrect sequence of inferences, where the agent ends up believing that the scene identity is LEFT-UP and guessing incorrectly. Note that after this choice is made and incorrect feedback is given, the agent updates their posterior in terms of the “next best” guess, which is from the agent's perspective either UP-RIGHT or DOWN-LEFT (see the posterior at Time step 8 of (A)). (C) Shows that the relative imprecision of the Level 1 likelihood results in a sequence of stochastic motion observations that frequently diverge from the true motion direction (in this case, the true motion direction is RIGHT in the lower right quadrant (Location 5)). Level 1 belief-updating gives rise to an imprecise posterior belief over motion directions that are passed up as inferred outcomes to Level 2, leading to false beliefs about the scene identity. Note the “ambivalent,” quadrant-revisiting behavior, wherein the agent repeatedly visits the lower-right quadrant to resolve uncertainty about the RDM stimulus at that quadrant.

Figure 9 is a simulated trial of scene construction with sensory precision at the lower level set to p = 5.0. This manifests as a stream of motion observations at the lower level that reflect the true motion state ~98% of the time, i.e., highly-coherent motion. As the agent visually interrogates the 2 × 2 visual array (the 2nd to 5th rows of Panel B), posterior beliefs about the hidden scene identity (Panel A) converge on the true hidden scene. After the first RDM in the lower right quadrant is seen (and its state resolved with high certainty), the agent's Level 2 posterior begins to assign non-zero probability only to scenes that include the RIGHTwards-moving motion stimulus. Once the second, UPwards-moving RDM stimulus is perceived in the upper left, the posterior converges upon the correct scene (in this case, indexed as state 7, one of the 12 configurations of UP-RIGHT). Once uncertainty about the hidden scene is resolved, G becomes dominated by instrumental value, or the dot product of counterfactual observations with prior preferences. Expecting to receive correct feedback, the agent saccades to location 6 (which corresponds to the scene identity UP-RIGHT) and receives a “Correct” outcome in the second modality of Level 2 observations. The agent thus categorizes the scene and remains there for the remainder of the trial to exploit the expected instrumental value of receiving “Correct” feedback (for the discussion about how behavior changes with respect to prior belief and sensory precision manipulations, we only consider behavior up until the time step of the first categorization decision).

Figure 10 shows a trial when the RDMs are incoherent (p = 0.5, meaning the Level 1 likelihood yields motion observations that reflect the true motion state ~35% of the time). In this case, the agent fails to categorize the scene correctly because it cannot form accurate beliefs about the identity of RDMs at Level 1; this uncertainty carries forward to lead posterior beliefs at Level 2 astray. Interestingly, the agent still forms relatively confident posterior beliefs about the scene (see the posterior at Timestep 7 of Figure 10A), but they are inaccurate since they are based on inaccurate posterior beliefs inherited from Level 1. This is because even though the low-level belief is built from noisy observations, posterior probability ends up still “focusing” on a particular dot direction based on the particular sequence of observations that is sampled; this is then integrated with empirical priors and subsequent observations to narrow the possible space of beliefs about the scene. The posterior uncertainty also manifests as the time spent foraging in quadrants before making a categorization (nearly double the time spent by the agent in Figure 9). The cause of this increase in foraging time is twofold. First, since uncertainty about the scene identity is high, the epistemic value of policies that entail fixations to RDM-containing quadrants remains elevated, even after all the quadrants have been visited. This is because uncertainty about hidden states is unlikely to be resolved after a single saccade to a quadrant with an incoherent RDM, meaning that the epistemic value of repeated visits to such quadrants decreases slowly with repeated foraging.
Second, since Level 2 posterior beliefs about the scene identity are uncertain and are distributed among different states, the instrumental value of categorization actions remains low. Recall that instrumental value depends not only on the prior preference for receiving “Correct” feedback, but also on the agent's expectation about the probability of receiving this feedback upon making an action, relative to the probability of receiving “Incorrect” feedback. The relative values of the prior preferences for being “Correct” vs. “Incorrect” thus tune the risk-averseness of the agent, and manifest as a dynamic balance between epistemic and instrumental value. See Mirza et al. (2019b) for a quantitative exploration of these prior preferences and their effect on active inference.
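The slow decay of epistemic value under incoherent motion can be illustrated by computing the expected information gain of a single motion sample, i.e., the mutual information between motion direction and motion observation. This is a simplified stand-in for the epistemic term of G rather than the full computation used in the simulations; it assumes a uniform prior over the four motion directions and a softmax over p times a one-hot column, and the function names are hypothetical.

```python
import math


def direction_column(p, true_index, n_directions=4):
    """P(o | s = true_index): softmax of p times a one-hot vector (assumption)."""
    weights = [math.exp(p if o == true_index else 0.0) for o in range(n_directions)]
    total = sum(weights)
    return [w / total for w in weights]


def expected_information_gain(p, prior):
    """Mutual information (nats) between state and one observation."""
    n = len(prior)
    # columns[s][o] = P(o | s)
    columns = [direction_column(p, s, n) for s in range(n)]
    # Predictive distribution Q(o) = sum_s P(o | s) Q(s)
    predictive = [sum(columns[s][o] * prior[s] for s in range(n)) for o in range(n)]
    gain = 0.0
    for s in range(n):
        for o in range(n):
            joint = columns[s][o] * prior[s]
            if joint > 0.0:
                gain += joint * (math.log(columns[s][o]) - math.log(predictive[o]))
    return gain


uniform_prior = [0.25] * 4
gain_high = expected_information_gain(5.0, uniform_prior)  # coherent motion
gain_low = expected_information_gain(0.5, uniform_prior)   # incoherent motion
```

Under these assumptions, a single sample at p = 0.5 resolves far less uncertainty than a sample at p = 5.0, which is consistent with the need for repeated visits to quadrants containing incoherent RDMs.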

Figure 11

Effect of sensory precision on scene construction performance. Average categorization latency (A) and accuracy (B) as a function of sensory precision p which controls the entropy of the (Level 1) likelihood mapping from motion direction to motion observation. We simulated 185 trials of scene construction under hierarchical active inference for each level of p (12 levels total), with scene identities and configurations randomly initialized for each trial. Sensory precision is shown on a logarithmic scale.