SYSTEMATIC REVIEW article

Front. Public Health , 20 June 2023

Sec. Infectious Diseases: Epidemiology and Prevention

Volume 11 - 2023 | https://doi.org/10.3389/fpubh.2023.1183725

This article is part of the Research Topic Artificial Intelligence in Infectious Diseases: Pathogenesis and Therapy

Saeed Shakibfar1*, Fredrik Nyberg2, Huiqi Li2, Jing Zhao3,4, Hedvig Marie Egeland Nordeng3,4, Geir Kjetil Ferkingstad Sandve4,5, Milena Pavlovic4,5, Mohammadhossein Hajiebrahimi6, Morten Andersen1, Maurizio Sessa1

Aim: To perform a systematic review on the use of Artificial Intelligence (AI) techniques for predicting COVID-19 hospitalization and mortality using primary and secondary data sources.

Study eligibility criteria: Cohort, clinical trials, meta-analyses, and observational studies investigating COVID-19 hospitalization or mortality using artificial intelligence techniques were eligible. Articles without a full text available in the English language were excluded.

Data sources: Articles recorded in Ovid MEDLINE from 01/01/2019 to 22/08/2022 were screened.

Data extraction: We extracted information on data sources, AI models, and epidemiological aspects of retrieved studies.

Bias assessment: A bias assessment of AI models was done using PROBAST.

Participants: Patients who tested positive for COVID-19.

Results: We included 39 studies related to AI-based prediction of hospitalization and death related to COVID-19. The articles were published in the period 2019-2022, and mostly used Random Forest as the model with the best performance. AI models were trained using cohorts of individuals sampled from populations of European and non-European countries, mostly with a cohort sample size <5,000. Data collection generally included information on demographics, clinical records, laboratory results, and pharmacological treatments (i.e., high-dimensional datasets). In most studies, the models were internally validated with cross-validation, but the majority of studies lacked external validation and calibration. Covariates were not prioritized using ensemble approaches in most of the studies; however, models still showed moderately good performance, with Area under the Receiver operating characteristic Curve (AUC) values >0.7. According to the assessment with PROBAST, all models had a high risk of bias and/or concern regarding applicability.

Conclusions: A broad range of AI techniques have been used to predict COVID-19 hospitalization and mortality. The studies reported good prediction performance of AI models; however, a high risk of bias and/or concerns regarding applicability were detected.

Coronavirus Disease 2019 (COVID-19) was declared a global pandemic on 11th March 2020 by the World Health Organization (WHO) (1). In 2020 COVID-19 spread all over the world and has already infected more than 623 million individuals and caused more than 6 million deaths worldwide (2) and, in the U.S., more than 5 million hospitalizations by the 1st of September, 2022 (3).

Huge efforts have been made by the scientific community to promote the integration of artificial intelligence (AI) into predictive modeling of COVID-19-related outcomes (4). Artificial intelligence is defined as “the theory and development of computer systems able to perform tasks normally requiring human intelligence” (5). Within AI, machine learning in particular, which is defined as “the theory and development of automatic discovery of regularities in data through the use of computer algorithms and with the use of these regularities to take actions such as classifying the data into different categories”, has the potential to achieve high prediction accuracy and scalability of models based on data availability (6). This is crucial in fast-paced scenarios such as the COVID-19 pandemic (3).

Considering that none of the available reviews on the use of AI in the predictive modeling of COVID-19-related outcomes have performed a bias/applicability assessment of AI models used to predict such outcomes (7–14) (Table 1), we conducted a systematic screening aiming at filling this knowledge gap. According to the definitions of the tool used for assessing the risk of bias in this systematic review (i.e., Bias analysis using the Prediction model Risk Of Bias ASsessment Tool, PROBAST), bias was defined to occur when shortcomings in the study design, conduct, or analysis lead to systematically distorted estimates of model predictive performance. Concerns regarding applicability of AI were considered high when the population, predictors, or outcomes of the study differ from those specified in the review question.

We performed a systematic literature search in Ovid MEDLINE to identify studies using AI models to predict COVID-19-related hospitalization/mortality. AI-, data-, and epidemiological-related aspects were extracted and assessed as these aspects are critical for the scientific robustness of the published articles.

Ovid MEDLINE (from 01/01/2019 to 22/08/2022) was searched, along with the reference lists of the reviews identified with our research query (Supplementary Table 1). Search terms included in the query have been previously used in the context of systematic reviews of AI/ML models and were described by Sessa et al. elsewhere (15, 16). This review was performed according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (17). The PRISMA checklist is provided in Supplementary Table 2.

We evaluated observational studies, meta-analyses, and clinical trials that developed, validated, or updated machine learning prognostic prediction models for COVID-19-related hospitalization and mortality. However, we excluded studies providing an overview and studies addressing epidemiological modeling tasks such as predicting COVID-19 peaks. Additionally, we excluded articles focusing on the use of AI for image, signal, or time-series processing for prediction of COVID-19-related outcomes and articles focusing on the use of AI for assessing the safety/effectiveness of vaccines for COVID-19 or drug discovery for COVID-19 treatments. Finally, we excluded studies focusing on AI algorithm development that did not test the algorithm using clinical data. Only studies for which the full text was available in the English language were considered eligible. Abstracts sent to international or national conferences, letters to the editor, and case reports/series were considered ineligible, along with articles evaluating natural language processing techniques. The reference lists of narrative and systematic reviews retrieved with our MEDLINE query were further screened for undetected records.

In the first screening procedure, titles and abstracts of retrieved records were screened by two independent researchers (SS and MS). All articles that were considered eligible at the first screening procedure underwent a full-text evaluation. If disagreements regarding the eligibility of articles arose during the two-step evaluation process, they were resolved by consensus.

The primary aim of the systematic review was the assessment of biases in retrieved articles and an in-depth description of the AI models used for COVID-19 risk prediction. This included a description of their performance, their application for external/internal validation, the type of filtering/prioritization approach that was used, and the approaches for models' learning (i.e., supervised/unsupervised). Additionally, we investigated the use of calibration and of models' bias assessment tools, the type of data (i.e., country where the data were generated, single/multi-country data sources, type of covariates generated from the data, and primary vs. secondary data), and epidemiological aspects of AI models' application (i.e., nationwide/sampled population, risk factors/disease risk score development for COVID-19-related mortality/hospitalization). The secondary aim was the visualization (plots/tables) of the information described for the primary aim and an outline of why the bias occurred and what the main sources of bias were.

A data extraction form was developed for this systematic review (Supplementary Table 3).

We extracted the following data-related information from each eligible study:

1) Type of data;

Type of data was defined as: “a particular kind of data item, as defined by the values it can take, the programming language used, or the operations that can be performed on it” (18). We extracted and categorized data into four data types: pharmacological treatments (e.g., drug prescriptions), clinical (e.g., signs, symptoms, physician notes, and patients' diagnoses), laboratory (biochemical or immunological laboratory test results), and demographic data (e.g., age and gender).

2) Single or multi-countries data sources;

A multi-country data source was defined as a data collection process from more than one country.

3) Country of data collection;

Country of data collection was defined as a nation with its own government, occupying a particular territory where data were collected.

4) Type of covariates;

A covariate was defined as an independent variable that can influence the outcome of a given statistical trial (19). In this review these consisted of predictors of hospitalization/death due to COVID-19 and potential confounders of these predictors. Covariates were extracted as presented in the statistical analysis section of retrieved articles.

5) Primary or secondary data;

We defined primary data as information collected directly by the researchers using interviews –personal or by telephone– or self-administered questionnaires (20). Secondary data were defined as data that were previously collected for other purposes than for the study at hand (20).

We extracted the following information directly related to the AI modeling for each study:

6) Model;

We defined a statistical model as a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population) (21).

7) Model performance;

Model performance was assessed using methods and metrics described by Steyerberg et al. (22). In this review, we used traditional measures for assessing overall model performance [e.g., area under the receiver operating characteristic curve (AUC) and goodness-of-fit statistics for calibration as reported in the original studies (22)].

8) Internal/External validation;

Internal validity was defined as the extent to which the observed results represent the truth in the population actually studied and, thus, are not due to methodological errors. External validity refers to the extent to which the results of a study are generalizable to patients in daily practice outside the study population, especially for the population that the sample is thought to represent (23).

9) Type of filtering/prioritization;

Filtering/prioritization refers to the process of over-emphasizing or censoring certain information based on its perceived importance (24).

10) Supervised/unsupervised machine learning;

Supervised learning refers to techniques in which a model is trained on a range of inputs (or features) which are associated with a known outcome (25).

11) Calibration;

Calibration was defined as a procedure in statistical classification to determine class membership probabilities which assess the uncertainty of assigning a given new observation into established classes (26).

12) Bias analysis using the Prediction model Risk Of Bias ASsessment Tool (PROBAST);

PROBAST was used as a systematic bias assessment tool for assessing the risk of bias and applicability of prediction models in the retrieved studies according to the procedures described by Wolff et al. (27).

We extracted the following epidemiological-related information for each study:

13) Nationwide/sampled population;

We defined nationwide data as data that were available for the entire population in a specific geographical region.

14) Risk factors for hospitalization/mortality;

Risk factors for hospitalization/mortality were defined as a list of predictors used to identify patients who tested positive for COVID-19 and were at high risk of hospitalization or mortality.

15) Disease risk score for severe COVID-19 disease (death or hospitalization);

Disease risk score was defined as a summary measure derived from the observed values of the risk factors that were able to predict severe outcomes of COVID-19.

Two researchers (SS and MS) independently used PROBAST to assess the risk of bias and applicability of prognostic prediction models in the included studies. If multiple prognostic prediction models were reported in a study, only the model with the best predictive performance was considered. PROBAST is divided into four domains: participants, predictors, outcome, and analysis. These domains contain a total of 20 signaling questions to help structure the judgment of risk of bias for prediction models, such as the range of the included patients, whether the same predictors and results were defined for all participants, whether the clinical decision rules were determined prospectively, and whether a relevant measure of accuracy was reported (27). Additionally, PROBAST requires an assessment of the applicability of models, which is of concern when the population, predictors, or outcomes of the study differ from those specified in the review question.
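As an illustration of how the four domain-level judgments can be combined into a single rating, the following minimal sketch encodes the combination rule described in the PROBAST guidance (27): high if any domain is high, low only if all domains are low, and unclear otherwise. The review does not spell out the exact rule it applied, so this is an assumption, and the function and variable names are hypothetical. The sketch also omits the additional PROBAST considerations for models developed without external validation.

```python
from typing import Mapping

# The four PROBAST domains named in the text.
DOMAINS = ("participants", "predictors", "outcome", "analysis")
VALID_RATINGS = ("low", "high", "unclear")


def overall_risk_of_bias(ratings: Mapping[str, str]) -> str:
    """Combine per-domain ratings into one overall risk-of-bias rating.

    Simplified rule (an assumption about how ratings are combined):
    any 'high' -> 'high'; otherwise any 'unclear' -> 'unclear'; else 'low'.
    """
    missing = [d for d in DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"missing domain ratings: {missing}")
    values = [ratings[d] for d in DOMAINS]
    invalid = [v for v in values if v not in VALID_RATINGS]
    if invalid:
        raise ValueError(f"invalid ratings: {invalid}")
    if "high" in values:
        return "high"
    if "unclear" in values:
        return "unclear"
    return "low"


if __name__ == "__main__":
    example = {
        "participants": "low",
        "predictors": "low",
        "outcome": "unclear",
        "analysis": "high",
    }
    print(overall_risk_of_bias(example))
```

Under this rule a single high-risk domain is enough to rate the whole model as high risk, which is one reason why overall ratings in prediction model reviews tend to be dominated by the analysis domain.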

For the secondary aims, descriptive analysis and visualization were performed using R 4.2.1 (28).

We retrieved 13,050 studies of which 12,794 studies were excluded according to the exclusion criteria detailed. After reading the full texts, an additional 217 studies (13,011 total) were excluded. We identified 3 additional studies by checking reference lists of the retrieved articles and literature reviews that were excluded. In total, we included 39 studies for bias assessment and data analysis (Figure 1).

We included 39 studies related to AI-based prediction of hospitalization and death related to COVID-19. In all, 27 studies used AI to predict COVID-19 mortality, 9 studies used AI to predict COVID-19-related hospitalization, and 3 studies had both outcomes.

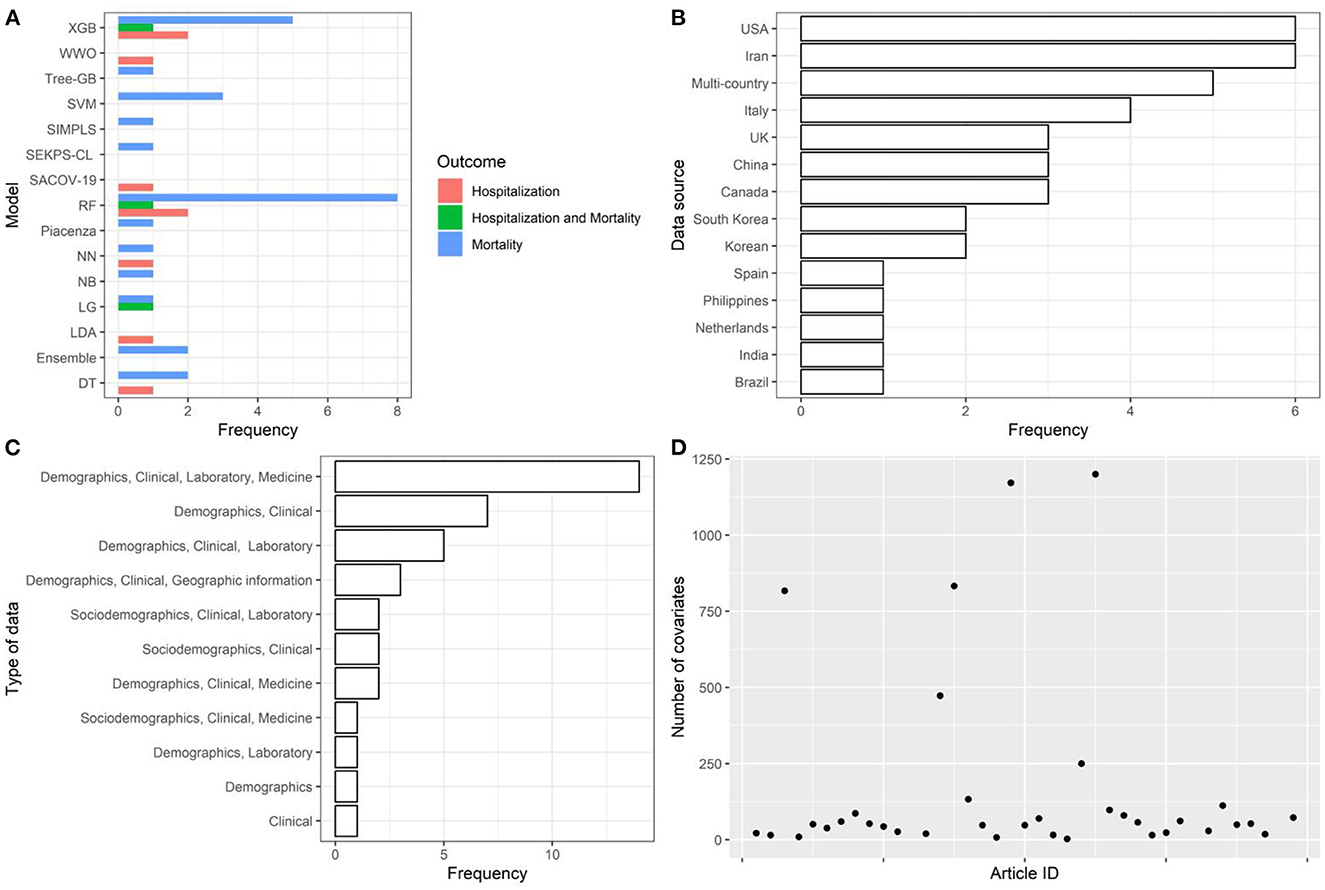

AI models were trained using cohorts of individuals sampled from populations of European and non-European countries, mostly with a cohort sample size <5,000. Data collection generally included information on demographics, clinical records, laboratory results, and pharmacological treatments (i.e., high-dimensional datasets; only 3 studies had more than 1,200 covariates, one of which was omitted from Figure 2).

Figure 2. Descriptive analysis - part 1. (A): models, (B): data source, (C): type of data, (D): number of covariates. Extreme Gradient Boosting (XGB), Water Wave Optimization (WWO), Support Vector Machine (SVM), Statistically Inspired Modification of Partial Least Squares (SIMPLS), SEKPS-CL, Random Forest (RF), Neural Network (NN), Naive Bayes (NB), Logistic Regression (LR), Linear Discriminant Analysis (LDA), Ensemble, Decision Tree (DT), Disease Risk Score.

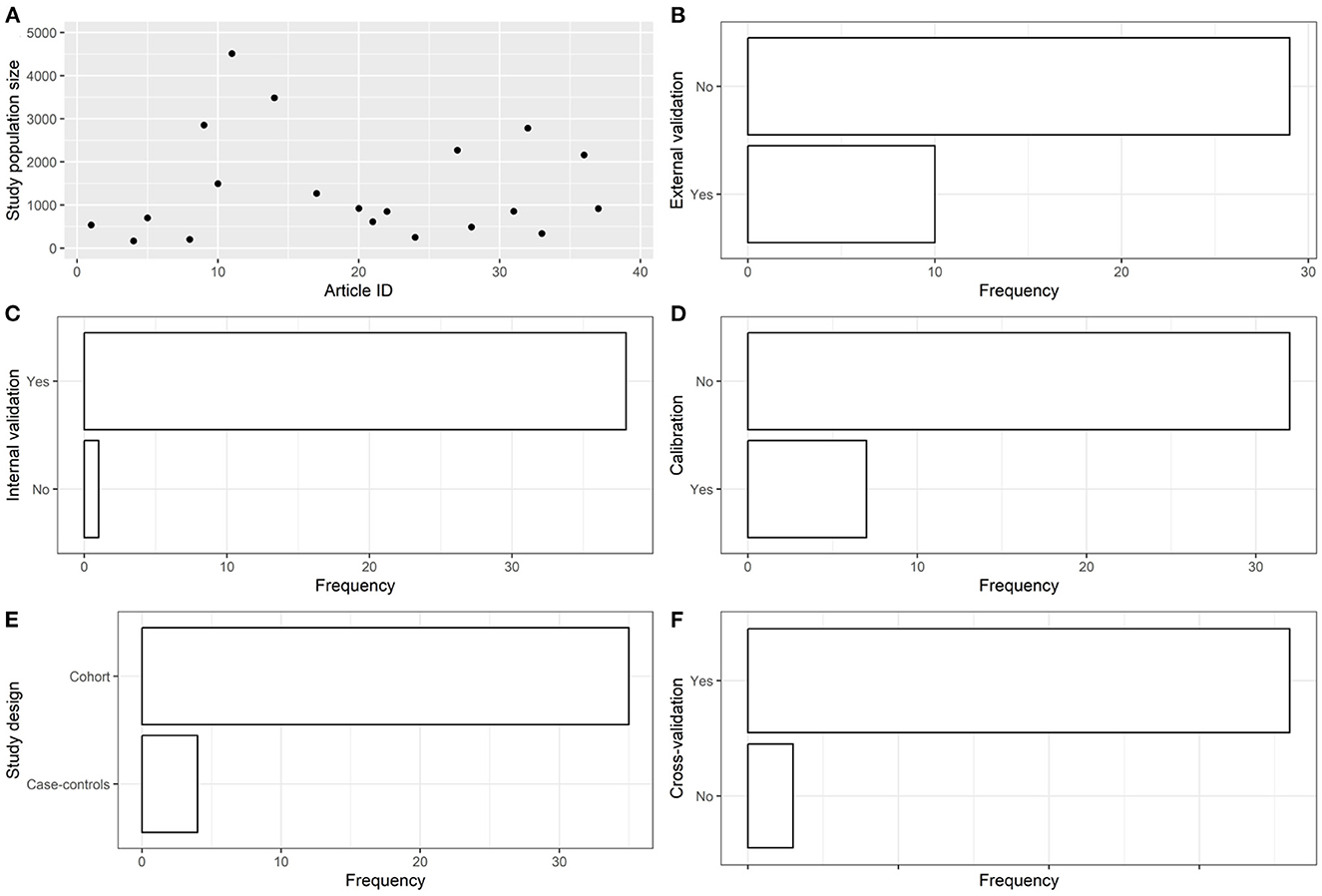

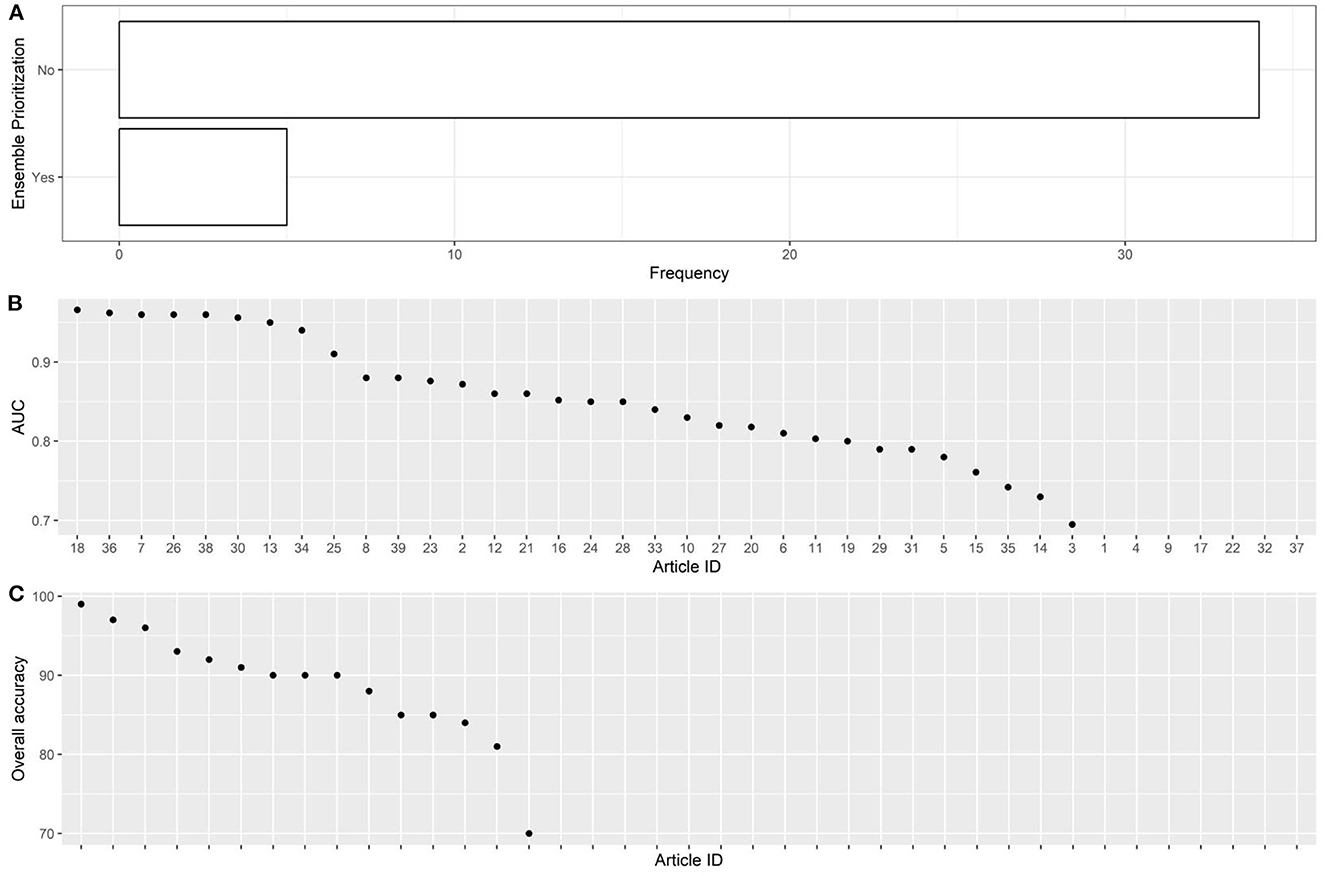

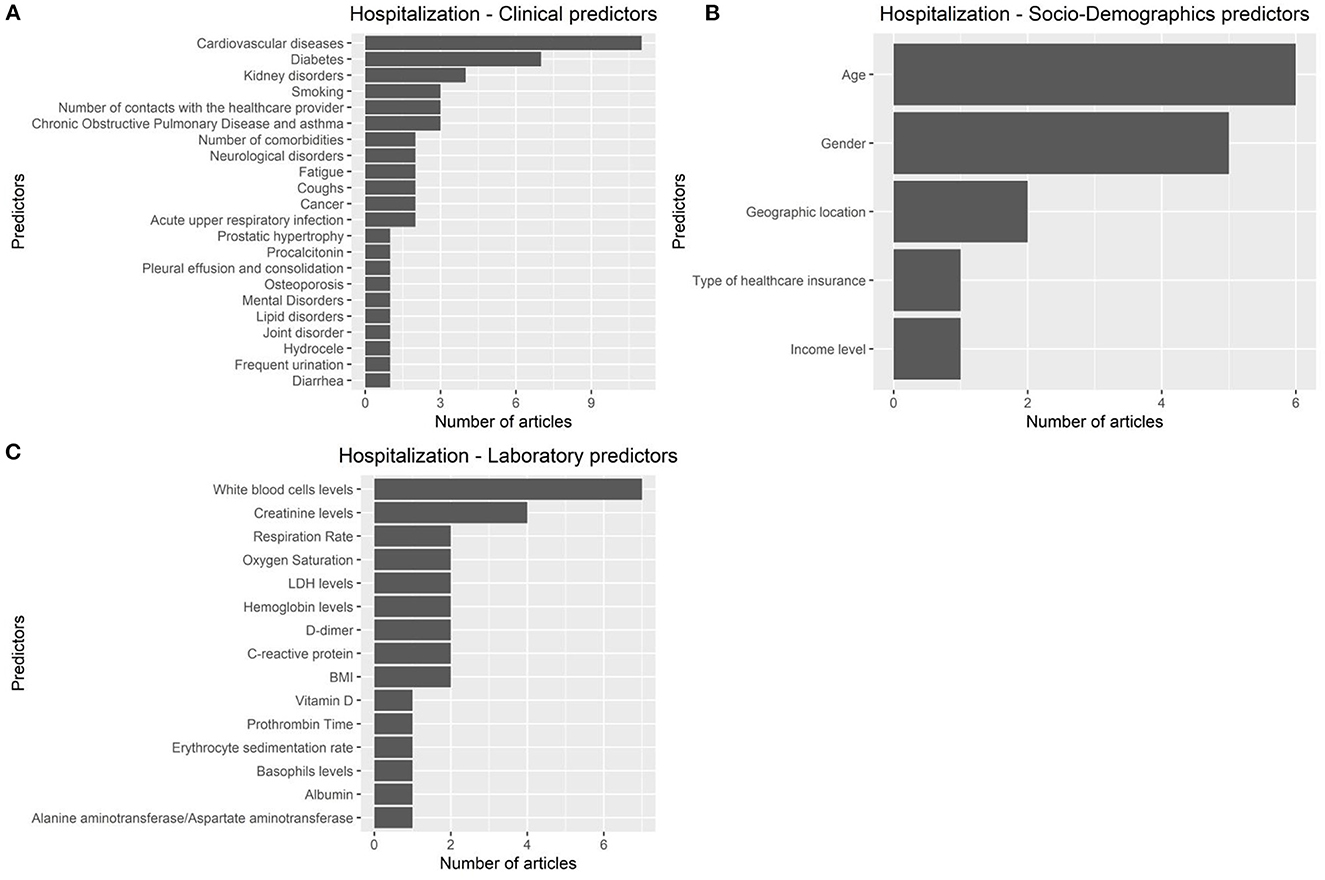

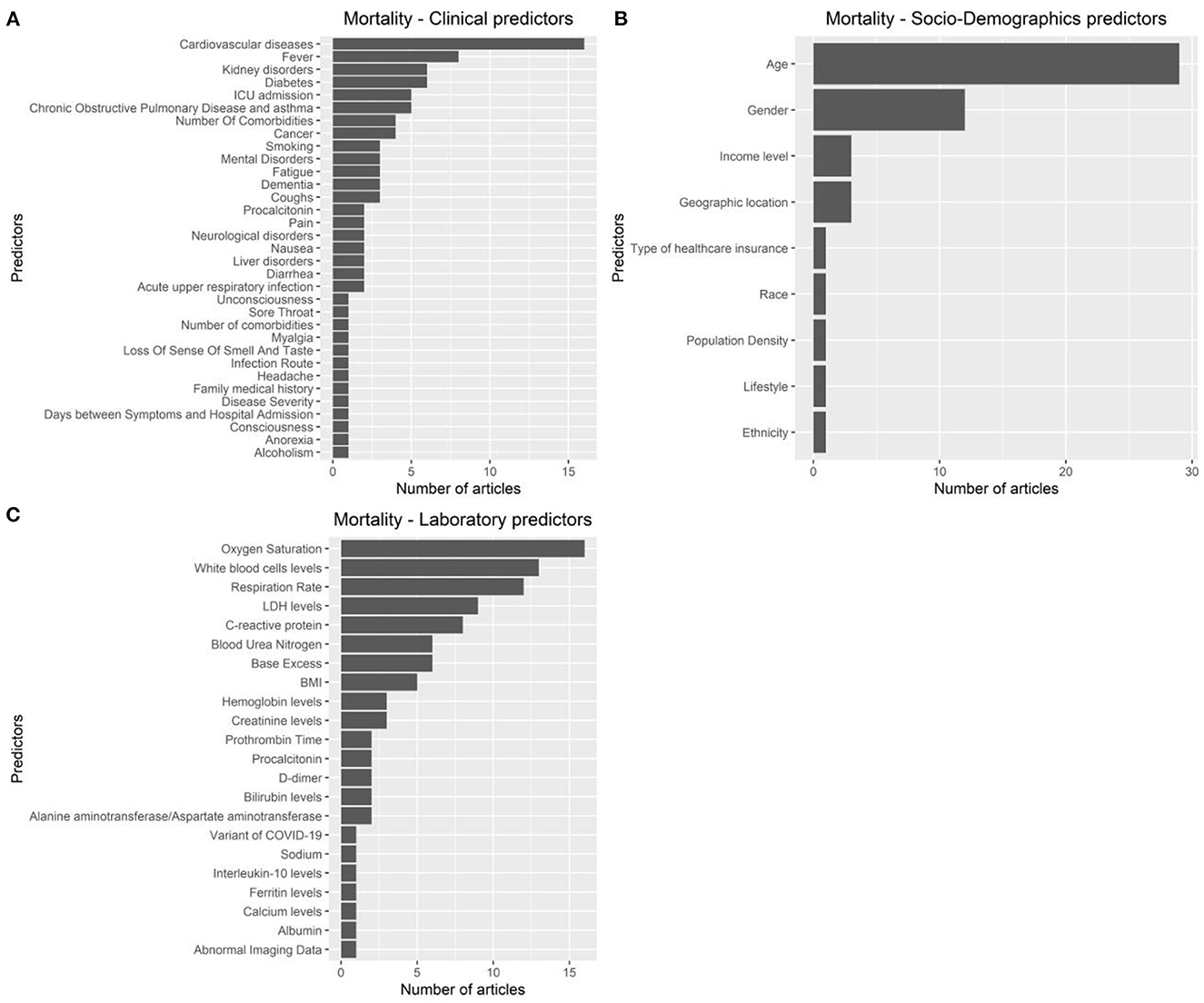

The articles were published in the period 2019–2022, and mostly used Random Forest (Figures 2A, B). In most studies, the models were internally validated with cross-validation, but the majority of studies lacked external validation and calibration (Figures 2C, D, 3). Covariates were not prioritized using ensemble approaches in most of the studies; however, models still showed moderately good performance, with Area under the Receiver operating characteristic Curve (AUC) values >0.7 (Figure 4). An overview of the predictors for COVID-19-related hospitalization and death is provided in Figures 5, 6.

Figure 3. Descriptive analysis - part 2. (A): study population size, (B): using external validation, (C): using internal validation, (D): using calibration, (E): study design, (F): using cross-validation.

Figure 4. Descriptive analysis - part 3. (A): using ensemble prioritization, (B): AUC, (C): overall accuracy.

Figure 5. Predictors of COVID-19-related hospitalization. (A): clinical predictors. (B): socio-demographic predictors. (C): laboratory predictors.

Figure 6. Predictors of COVID-19-related mortality. (A): clinical predictors. (B): socio-demographic predictors. (C): laboratory predictors.

For all articles included in the systematic review, we performed a bias and applicability analysis using PROBAST (Appendices 1–38). Additionally, we have provided a narrative description and a summary of the key considerations we included in the bias analysis leading to the bias scoring (Appendix 39). Table 2 provides the results of PROBAST to evaluate the risk of bias and the applicability and quality of the AI models described in the retrieved articles. According to the assessment with PROBAST, all models had either high risk of bias or high concerns related to applicability.

COVID-19 is the first pandemic that occurred in a digitized world and has sparked an unprecedented global research effort (8). With computers being ubiquitously used in modern societies, they are also expected to constitute a novel tool to fight global health emergencies. Therefore, it is not unexpected to observe an extensive application of AI to try solving key prediction tasks for important clinical outcomes such as COVID-19-related hospitalization and mortality.

The COVID-19 pandemic has stimulated considerable research efforts worldwide, but research output on this topic varies among countries as observed in our systematic review in the context of the use of AI for prediction tasks for important clinical outcomes such as COVID-19-related hospitalization and mortality. This heterogeneity can be attributed to several factors, including financial resources, research infrastructure, government support, outbreak severity, and collaboration and partnerships. Economic strength enables countries to invest more in research, and established universities and research institutions are better equipped to conduct COVID-19-related research. Government funding and policies that foster research are also essential for research development. Furthermore, countries that were hit hard by the pandemic may have prioritized research to combat the virus, and collaborations with other countries and international organizations may have increased research output (68–71).

In all, 27 studies used AI to predict COVID-19 mortality, 9 studies used AI to predict COVID-19-related hospitalization, and 3 studies had both outcomes. The articles focusing on hospitalization typically used patient demographics, medical history, vital signs, and laboratory results as input variables for their machine learning models. The primary goal of these studies was to identify high-risk patients early on, so that appropriate medical interventions could be initiated promptly. The articles focusing on mortality used similar input variables, but with an additional focus on disease severity and progression. The main objective of these studies was to detect patients with a high likelihood of mortality, allowing them to receive close monitoring and more intensive care. The articles that predicted both hospitalization and mortality used the same input variables as those concentrating on only one of the two outcomes, but they also took into account the interplay between these outcomes. Overall, the key differences between these article groups lie in their primary outcome of interest and the input variables used in their machine learning models.

In the articles retrieved in our systematic review, several machine learning models have been used to predict COVID-19-related hospitalization and mortality, with Random Forest being the model most frequently used. It cannot be excluded that this result is due to publication bias. However, the potential analytical advantage of using Random Forest in terms of prediction accuracy should be acknowledged as Random Forest undoubtedly represents an important and widely used tool for prediction in medical research (72).

While it is possible that research teams in certain countries may have a preference for using certain models or methods, such as Random Forest models, in their research, it is unlikely to be the primary driver for our findings. Research on COVID-19 encompasses various disciplines that require different models and methods. Therefore, the choice of models and methods used in research is usually based on their relevance to the research question at hand, rather than personal preference or bias. However, in the retrieved articles, we did not find any argumentation for why the applied studies chose particular models. The authors often seem to use only a few, seemingly arbitrarily selected, models, and they often run some of these with default parameters (which are again arbitrary) instead of motivating hyper-parameter choices by the application or selecting their values through a systematic hyper-parameter search.

AI models were trained using cohorts with a median sample size of 4,000 individuals (the smallest sample was 165 individuals), collecting information on demographics, clinical records, laboratory results, and pharmacological treatments. Studies had a median of 53 covariates, with only 3 studies having more than 1,200 covariates. This is not surprising considering that most studies were hospital-based and collected information on individuals admitted to wards from hospital databases.

In most studies, the models were internally validated with cross-validation, but the majority of studies lacked external validation and calibration. External validation is a crucial activity when using AI in prediction modelling. In the absence of external validation, it is often not possible to determine a prediction model's reproducibility and generalizability to new and different sets of data generated in different settings and/or at different time points of a specific setting (e.g., different COVID-19 waves) (73).
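To make the distinction concrete, the sketch below shows the index bookkeeping behind k-fold cross-validation, the internal validation approach mentioned above. It is a minimal illustration with hypothetical names, not the procedure of any included study: every fold is drawn from the same development cohort, which is why cross-validation alone cannot show how a model behaves on data from another setting or time period.

```python
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n_samples-1 into k (train, test) pairs.

    Folds are contiguous and unshuffled; in practice the rows would
    usually be shuffled (and often stratified by outcome) first.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    base, extra = divmod(n_samples, k)  # fold sizes differ by at most one
    folds = []
    start = 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        folds.append((train, test))
        start += size
    return folds


if __name__ == "__main__":
    for train, test in k_fold_indices(10, 3):
        print(len(train), test)
```

Each observation appears in exactly one test fold, so every patient contributes once to the performance estimate. An external validation would instead apply the final model, unchanged, to a cohort that played no part in these folds.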

Calibration is an important, albeit often overlooked, aspect of training AI models. It is important to recognize that calibration directly modifies the outputs of machine learning models after they have been trained and can have an impact on the accuracy of the model. When assessing a model's validity, calibration is as important as other performance metrics and should be evaluated and reported. Model calibration refers to the agreement between subgroups of predicted probabilities and their observed frequencies. To assess model calibration, a calibration plot can be generated by ordering the predicted probabilities, dividing them into subgroups, and then plotting the average predicted probability vs. the average outcome for each subgroup (22).
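The calibration plot procedure described above (order the predicted probabilities, divide them into subgroups, and compare the average prediction with the average outcome in each subgroup) can be sketched as follows. This is a minimal illustration under stated assumptions: the function name, the equal-size grouping, and the default of ten groups are choices made here, not details taken from the included studies.

```python
from typing import List, Sequence, Tuple


def calibration_groups(
    probs: Sequence[float], outcomes: Sequence[int], n_groups: int = 10
) -> List[Tuple[float, float]]:
    """Return (mean predicted probability, observed event rate) per group.

    Observations are sorted by predicted probability and split into
    n_groups groups whose sizes differ by at most one.
    """
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must have the same length")
    if not 1 <= n_groups <= len(probs):
        raise ValueError("n_groups must be between 1 and the sample size")
    pairs = sorted(zip(probs, outcomes))  # order by predicted probability
    base, extra = divmod(len(pairs), n_groups)
    points = []
    start = 0
    for i in range(n_groups):
        size = base + (1 if i < extra else 0)
        group = pairs[start:start + size]
        mean_pred = sum(p for p, _ in group) / size
        observed = sum(y for _, y in group) / size
        points.append((mean_pred, observed))
        start += size
    return points


if __name__ == "__main__":
    predicted = [0.1, 0.2, 0.8, 0.9]
    died = [0, 0, 1, 1]
    for mean_pred, observed in calibration_groups(predicted, died, n_groups=2):
        print(round(mean_pred, 2), observed)
```

Plotting the returned pairs against the diagonal gives the calibration plot: points below the diagonal indicate that the model overestimates risk in that subgroup, and points above it indicate underestimation.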

In most of the studies, however, models still showed moderately good performances with AUC values >0.7. It cannot be excluded that this result is due to publication bias.
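For readers less familiar with the metric, the AUC can be read as the probability that a randomly chosen patient who experienced the outcome received a higher predicted risk than a randomly chosen patient who did not, with ties counted as one half. The sketch below computes it directly from that definition; the function name and the toy data are hypothetical, and the pairwise loop is meant for illustration rather than for large cohorts.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve via pairwise comparison.

    labels must be 1 (outcome occurred) or 0 (outcome did not occur).
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both outcome classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0  # case ranked above non-case
            elif p == n:
                wins += 0.5  # tie counts as half
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    risk = [0.1, 0.4, 0.35, 0.8]
    died = [0, 0, 1, 1]
    print(auc(risk, died))  # 3 of 4 case/non-case pairs ranked correctly -> 0.75
```

An AUC of 0.5 corresponds to ranking no better than chance and 1.0 to perfect ranking, so the threshold of 0.7 used above describes discrimination only; it says nothing about calibration or about bias in how the cohort was assembled.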

According to the assessment with PROBAST, models had a high risk of bias and/or poor applicability. Commonly identified biases included conditioning on a future event, misclassification of exposure/outcome, and/or selection bias. Our main concern regarding the applicability of the models in the retrieved studies relates to their description of the study aims and target population. Articles for which we identified concerns related to applicability did not confine the generalizability of their models to the settings in which the models were developed, but rather made fairly general claims that their models could generalize to other settings. When describing the area of applicability of an AI model, it is crucial to set boundaries specifying the settings and necessary conditions under which the model is applicable. When this information is missing in the manuscript, applicability can be considered global and, therefore, the study is prone to selection bias. This is especially true for hospital-based studies, as emphasized by Kopec and Grimes (74, 75).

A broad range of AI techniques have been used to predict COVID-19 hospitalization and mortality. The studies reported good prediction performance for the AI models. However, according to the assessment with PROBAST, all models had a high risk of bias and/or concern regarding applicability.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

MS and SS conceived of the presented idea. SS developed the theory and performed the computations. All authors discussed the results and contributed to the final manuscript.

This work was performed as part of the Nordic COHERENCE Project, Project No. 105670 funded by NordForsk under the Nordic Council of Ministers and the EU-COVID-19 Project, Project No. 312707 funded by the Norwegian Research Council and by a grant from the Novo Nordisk Foundation to the University of Copenhagen (NNF15SA0018404). The Swedish SCIFI-PEARL project has received basic funding from the Swedish state under the agreement between the Swedish government and the county councils, the ALF-agreement (Avtal om Läkarutbildning och Forskning/Medical Training and Research Agreement) grants ALFGBG-938453, ALFGBG-971130, ALFGBG-978954 and during 2020–2021 had funding from FORMAS (Forskningsrådet för miljö, areella näringar och samhällsbyggande/Research Council for Environment, Agricultural Sciences and Spatial Planning), a Government Research Council for Sustainable Development, Grant 2020-02828. Additional grants supporting different aspects of ongoing research within the study include: the Swedish Heart Lung Foundation (20210030 and 2021-0581), grants from the SciLifeLab National COVID-19 Research Program, financed by the Knut och Alice Wallenberg Foundation (KAW 2020.0299), the Swedish Research Council (2021-05045, 2021-05450), the Swedish Social Insurance Agency (FK 2021/011186) and Forte (Swedish Research Council for Health, Working Life and Welfare), grant 2022-00444.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2023.1183725/full#supplementary-material

1. WorldoMeter. COVID-19 CORONAVIRUS PANDEMIC. (2022). p. 1. Available online at: https://www.worldometers.info/coronavirus/ (accessed December 1, 2022).

2. CDC. Update on COVID-19–Related Deaths. (2022). p. 1. Available online at: https://www.cdc.gov/coronavirus/2019-ncov/covid-data/covidview/index.html (accessed December 1, 2022).

3. Ourworldindata. Number of COVID-19 Patients in Hospital Per Million. (2022). p. 1. Available online at: https://ourworldindata.org/grapher/current-covid-hospitalizations-per-million?tab=map (accessed December 15, 2022).

4. Wang L, Zhang Y, Wang D, Tong X, Liu T, Zhang S, et al. Artificial Intelligence for COVID-19: a systematic review. Front Med. (2021) 8:704256. doi: 10.3389/fmed.2021.704256

5. Dictionary OE. Artificial Intelligence. (2018). Available online at: https://www.lexico.com/definition/artificial_intelligence (accessed July 7, 2020).

6. Jordan M, Kleinberg J, Schölkopf B. Information Science and Statistics. New York, NY: Springer (2006).

7. Saleem F, Al-Ghamdi ASA-M, Alassafi MO, AlGhamdi SA. Machine learning, deep learning, and mathematical models to analyze forecasting and epidemiology of COVID-19: a systematic literature review. Int J Environ Res Public Health. (2022) 19:5099. doi: 10.3390/ijerph19095099

8. Napolitano F, Xu X, Gao X. Impact of computational approaches in the fight against COVID-19: an AI guided review of 17 000 studies. Brief Bioinform. (2022) 23:bbab456. doi: 10.1093/bib/bbab456

9. Lyu J, Cui W, Finkelstein J. Use of Artificial Intelligence for Predicting COVID-19 Outcomes: A Scoping Review. Stud Health Technol Inform. (2022) 289:317–20. doi: 10.3233/SHTI210923

10. Bottino F, Tagliente E, Pasquini L, Napoli A Di, Lucignani M, Figà-Talamanca L, et al. COVID mortality prediction with machine learning methods: a systematic review and critical appraisal. J Pers Med. (2021) 11:893. doi: 10.3390/jpm11090893

11. Guo Y, Zhang Y, Lyu T, Prosperi M, Wang F, Xu H, et al. The application of artificial intelligence and data integration in COVID-19 studies: a scoping review. J Am Med Inform Assoc. (2021) 28:2050–67. doi: 10.1093/jamia/ocab098

12. Shi C, Wang L, Ye J, Gu Z, Wang S, Xia J, et al. Predictors of mortality in patients with coronavirus disease 2019: a systematic review and meta-analysis. BMC Infect Dis. (2021) 21:663. doi: 10.1186/s12879-021-06369-0

13. Syed M, Syed S, Sexton K, Greer ML, Zozus M, Bhattacharyya S, et al. Deep learning methods to predict mortality in COVID-19 patients: a rapid scoping review. Stud Health Technol Inform. (2021) 281:799–803. doi: 10.3233/SHTI210285

14. Alballa N, Al-Turaiki I. Machine learning approaches in COVID-19 diagnosis, mortality, and severity risk prediction: a review. Informatics Med unlocked. (2021) 24:100564. doi: 10.1016/j.imu.2021.100564

15. Sessa M, Khan AR, Liang D, Andersen M, Kulahci M. Artificial intelligence in pharmacoepidemiology: a systematic review. part 1-overview of knowledge discovery techniques in artificial intelligence. Front Pharmacol. (2020) 11:1028. doi: 10.3389/fphar.2020.01028

16. Sessa M, Liang D, Khan AR, Kulahci M, Andersen M. Artificial intelligence in pharmacoepidemiology: a systematic review. part 2–comparison of the performance of artificial intelligence and traditional pharmacoepidemiological techniques. Front Pharmacol Frontiers. (2021) 11:2270. doi: 10.3389/fphar.2020.568659

17. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. (2009) 6:e1000097. doi: 10.1371/journal.pmed.1000097

18. Ranganathan P, Gogtay NJ. An introduction to statistics - data types, distributions and summarizing data. Indian J Crit care Med. (2019) 23:S169–70. doi: 10.5005/jp-journals-10071-23198

19. Dictionary OE. Oxford English Dictionary. Simpson, Ja Weiner, Esc. Oxford: Dictionary OE 1989. p. 3.

20. Prada-Ramallal G, Roque F, Herdeiro MT, Takkouche B, Figueiras A. Primary versus secondary source of data in observational studies and heterogeneity in meta-analyses of drug effects: a survey of major medical journals. BMC Med Res Methodol. (2018) 18:97. doi: 10.1186/s12874-018-0561-3

21. Adèr HJ, Mellenbergh GJ. Advising on research methods. In: Proceedings of the 2007 KNAW Colloquium. Huizen: Johannes van Kessel Publ. (2008).

22. Steyerberg EW, Vickers AJ, Cook NR, Gerds T, Gonen M, Obuchowski N, et al. Assessing the performance of prediction models: a framework for some traditional and novel measures. Epidemiology NIH Public Access. (2010) 21:128. doi: 10.1097/EDE.0b013e3181c30fb2

23. Patino CM, Ferreira JC. Internal and external validity: can you apply research study results to your patients? J Bras Pneumol SciELO Brasil. (2018) 44:183. doi: 10.1590/s1806-37562018000000164

24. Jalali Sefid Dashti M, Gamieldien J. A practical guide to filtering and prioritizing genetic variants. Biotechniques. (2017) 62:18–30. doi: 10.2144/000114492

25. Sidey-Gibbons JAM, Sidey-Gibbons CJ. Machine learning in medicine: a practical introduction. BMC Med Res Methodol. (2019) 19:64. doi: 10.1186/s12874-019-0681-4

27. Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS, et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med. (2019) 170:51–8. doi: 10.7326/M18-1376

28. R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. (2022). Available online at: https://www.R-project.org/.

29. Polilli E, Frattari A, Esposito JE, D'Amato M, Rapacchiale G, D'Intino A, et al. Reliability of predictive models to support early decision making in the emergency department for patients with confirmed diagnosis of COVID-19: the Pescara Covid Hospital score. BMC Health Serv Res. (2022) 22:1062. doi: 10.1186/s12913-022-08421-4

30. Wanyan T, Lin M, Klang E, Menon KM, Gulamali FF, Azad A, et al. Supervised pretraining through contrastive categorical positive samplings to improve COVID-19 mortality prediction. ACM-BCB. (2022) 2022:9. doi: 10.1145/3535508.3545541

31. Lazzarini N, Filippoupolitis A, Manzione P, Eleftherohorinou H. A machine learning model on real world data for predicting progression to acute respiratory distress syndrome (ARDS) among COVID-19 patients. PLoS ONE. (2022) 17:e0271227. doi: 10.1371/journal.pone.0271227

32. Wang A, Li F, Chiang S, Fulcher J, Yang O, Wong D, et al. Machine Learning Prediction of COVID-19 Severity Levels From Salivaomics Data. Ithaca, NY: ArXiv (2022).

33. Vezzoli M, Inciardi RM, Oriecuia C, Paris S, Murillo NH, Agostoni P, et al. Machine learning for prediction of in-hospital mortality in coronavirus disease 2019 patients: results from an Italian multicenter study. J Cardiovasc Med. (2022) 23:439–46. doi: 10.2459/JCM.0000000000001329

34. Ali S, Zhou Y, Patterson M. Efficient analysis of COVID-19 clinical data using machine learning models. Med Biol Eng Comput. (2022) 60:1881–96. doi: 10.1007/s11517-022-02570-8

35. Zarei J, Jamshidnezhad A, Haddadzadeh Shoushtari M, Mohammad Hadianfard A, Cheraghi M, Sheikhtaheri A. Machine learning models to predict in-hospital mortality among inpatients with COVID-19: underestimation and overestimation bias analysis in subgroup populations. J Healthc Eng. (2022) 2022:1644910. doi: 10.1155/2022/1644910

36. Baik S-M, Lee M, Hong K-S, Park D-J. Development of machine-learning model to predict COVID-19 mortality: application of ensemble model and regarding feature impacts. Diagnostics. (2022) 12:1464. doi: 10.3390/diagnostics12061464

37. Shanbehzadeh M, Yazdani A, Shafiee M, Kazemi-Arpanahi H. Predictive modeling for COVID-19 readmission risk using machine learning algorithms. BMC Med Inform Decis Mak. (2022) 22:139. doi: 10.1186/s12911-022-01880-z

38. Song W, Zhang L, Liu L, Sainlaire M, Karvar M, Kang M-J, et al. Predicting hospitalization of COVID-19 positive patients using clinician-guided machine learning methods. J Am Med Inform Assoc. (2022) 29:1661–7. doi: 10.1093/jamia/ocac083

39. Willette AA, Willette SA, Wang Q, Pappas C, Klinedinst BS, Le S, et al. Using machine learning to predict COVID-19 infection and severity risk among 4510 aged adults: a UK Biobank cohort study. Sci Rep. (2022) 12:7736. doi: 10.1038/s41598-022-07307-z

40. Wan T-K, Huang R-X, Tulu TW, Liu J-D, Vodencarevic A, Wong C-W, et al. Identifying predictors of COVID-19 mortality using machine learning. Life. (2022) 12:547. doi: 10.3390/life12040547

41. Park MS, Jo H, Lee H, Jung SY, Hwang HJ. Machine learning-based COVID-19 patients triage algorithm using patient-generated health data from nationwide multicenter database. Infect Dis Ther. (2022) 11:787–805. doi: 10.1007/s40121-022-00600-4

42. Jakob CEM, Mahajan UM, Oswald M, Stecher M, Schons M, Mayerle J, et al. Prediction of COVID-19 deterioration in high-risk patients at diagnosis: an early warning score for advanced COVID-19 developed by machine learning. Infection. (2022) 50:359–70. doi: 10.1007/s15010-021-01656-z

43. Hernández-Pereira E, Fontenla-Romero O, Bolón-Canedo V, Cancela-Barizo B, Guijarro-Berdiñas B, Alonso-Betanzos A. Machine learning techniques to predict different levels of hospital care of CoVid-19. Appl Intell. (2022) 52:6413–31. doi: 10.1007/s10489-021-02743-2

44. Gutierrez JM, Volkovs M, Poutanen T, Watson T, Rosella LC. Risk stratification for COVID-19 hospitalization: a multivariable model based on gradient-boosting decision trees. CMAJ Open. (2021) 9:E1223–31. doi: 10.9778/cmajo.20210036

45. Guan X, Zhang B, Fu M, Li M, Yuan X, Zhu Y, et al. Clinical and inflammatory features based machine learning model for fatal risk prediction of hospitalized COVID-19 patients: results from a retrospective cohort study. Ann Med. (2021) 53:257–66. doi: 10.1080/07853890.2020.1868564

46. Feng C, Kephart G, Juarez-Colunga E. Predicting COVID-19 mortality risk in Toronto, Canada: a comparison of tree-based and regression-based machine learning methods. BMC Med Res Methodol. (2021) 21:267. doi: 10.1186/s12874-021-01441-4

47. Kasturi SN, Park J, Wild D, Khan B, Haggstrom DA, Grannis S. Predicting COVID-19-related health care resource utilization across a statewide patient population: model development study. J Med Internet Res. (2021) 23:e31337. doi: 10.2196/31337

48. Murri R, Lenkowicz J, Masciocchi C, Iacomini C, Fantoni M, Damiani A, et al. A machine-learning parsimonious multivariable predictive model of mortality risk in patients with Covid-19. Sci Rep. (2021) 11:21136. doi: 10.21203/rs.3.rs-544196/v1

49. Tabatabaie M, Sarrami AH, Didehdar M, Tasorian B, Shafaat O, Sotoudeh H. Accuracy of machine learning models to predict mortality in COVID-19 infection using the clinical and laboratory data at the time of admission. Cureus. (2021) 13:e18768. doi: 10.7759/cureus.18768

50. Moulaei K, Ghasemian F, Bahaadinbeigy K, Ershad Sarbi R, Mohamadi Taghiabad Z. Predicting mortality of COVID-19 patients based on data mining techniques. J Biomed Phys Eng. (2021) 11:653–62. doi: 10.31661/jbpe.v0i0.2104-1300

51. Migriño JRJ, Batangan ARU. Using machine learning to create a decision tree model to predict outcomes of COVID-19 cases in the Philippines. Western Pac Surveill Response J. (2021) 12:56–64. doi: 10.5365/wpsar.2021.12.3.831

52. Banoei MM, Dinparastisaleh R, Zadeh AV, Mirsaeidi M. Machine-learning-based COVID-19 mortality prediction model and identification of patients at low and high risk of dying. Crit Care. (2021) 25:328. doi: 10.1186/s13054-021-03749-5

53. Dabbah MA, Reed AB, Booth ATC, Yassaee A, Despotovic A, Klasmer B, et al. Machine learning approach to dynamic risk modeling of mortality in COVID-19: a UK Biobank study. Sci Rep. (2021) 11:16936. doi: 10.1038/s41598-021-95136-x

54. De Souza FSH, Hojo-Souza NS, Dos Santos EB, Da Silva CM, Guidoni DL. Predicting the disease outcome in COVID-19 positive patients through machine learning: a retrospective cohort study with Brazilian data. Front Artif Intell. (2021) 4:579931. doi: 10.3389/frai.2021.579931

55. Ottenhoff MC, Ramos LA, Potters W, Janssen MLF, Hubers D, Hu S, et al. Predicting mortality of individual patients with COVID-19: a multicentre Dutch cohort. BMJ Open. (2021) 11:e047347. doi: 10.1136/bmjopen-2020-047347

56. Mahdavi M, Choubdar H, Zabeh E, Rieder M, Safavi-Naeini S, Jobbagy Z, et al. A machine learning based exploration of COVID-19 mortality risk. PLoS ONE. (2021) 16:e0252384. doi: 10.1371/journal.pone.0252384

57. Jamshidi E, Asgary A, Tavakoli N, Zali A, Dastan F, Daaee A, et al. Symptom prediction and mortality risk calculation for COVID-19 using machine learning. Front Artif Intell. (2021) 4:673527. doi: 10.3389/frai.2021.673527

58. Snider B, McBean EA, Yawney J, Gadsden SA, Patel B. Identification of variable importance for predictions of mortality from COVID-19 using AI models for Ontario, Canada. Front Public Health. (2021) 9:675766. doi: 10.3389/fpubh.2021.759014

59. Halasz G, Sperti M, Villani M, Michelucci U, Agostoni P, Biagi A, et al. A machine learning approach for mortality prediction in COVID-19 pneumonia: development and evaluation of the piacenza score. J Med Internet Res. (2021) 23:e29058. doi: 10.2196/29058

60. Karthikeyan A, Garg A, Vinod PK, Priyakumar UD. Machine learning based clinical decision support system for early COVID-19 mortality prediction. Front Public Health. (2021) 9:626697. doi: 10.3389/fpubh.2021.626697

61. Tezza F, Lorenzoni G, Azzolina D, Barbar S, Leone LAC, Gregori D. Predicting in-hospital mortality of patients with COVID-19 using machine learning techniques. J Pers Med. (2021) 11:343. doi: 10.3390/jpm11050343

62. Pourhomayoun M, Shakibi M. Predicting mortality risk in patients with COVID-19 using machine learning to help medical decision-making. Smart Health. (2021) 20:100178. doi: 10.1016/j.smhl.2020.100178

63. Jimenez-Solem E, Petersen TS, Hansen C, Hansen C, Lioma C, Igel C, et al. Developing and validating COVID-19 adverse outcome risk prediction models from a bi-national European cohort of 5594 patients. Sci Rep. (2021) 11:3246. doi: 10.1038/s41598-021-81844-x

64. Gao Y, Cai G-Y, Fang W, Li H-Y, Wang S-Y, Chen L, et al. Machine learning based early warning system enables accurate mortality risk prediction for COVID-19. Nat Commun. (2020) 11:5033. doi: 10.1038/s41467-020-18684-2

65. Wang T, Paschalidis A, Liu Q, Liu Y, Yuan Y, Paschalidis IC. Predictive models of mortality for hospitalized patients with COVID-19: retrospective cohort study. JMIR Med Inform. (2020) 8:e21788. doi: 10.2196/21788

66. An C, Lim H, Kim D-W, Chang JH, Choi YJ, Kim SW. Machine learning prediction for mortality of patients diagnosed with COVID-19: a nationwide Korean cohort study. Sci Rep. (2020) 10:18716. doi: 10.1038/s41598-020-75767-2

67. Vaid A, Somani S, Russak AJ, De Freitas JK, Chaudhry FF, Paranjpe I, et al. Machine learning to predict mortality and critical events in a cohort of patients with COVID-19 in New York City: model development and validation. J Med Internet Res. (2020) 22:e24018. doi: 10.2196/24018

68. The Lancet Infectious Diseases. Riding the coronacoaster of uncertainty. Lancet Infect Dis. (2020) 20:629. doi: 10.1016/S1473-3099(20)30378-9

69. The Lancet Public Health. COVID-19 in Spain: a predictable storm? Lancet Public Health. (2020) 5:e568. doi: 10.1016/S2468-2667(20)30239-5

70. Lambert H, Gupte J, Fletcher H, Hammond L, Lowe N, Pelling M, et al. COVID-19 as a global challenge: towards an inclusive and sustainable future. Lancet Planet Health. (2020) 4:e312–4. doi: 10.1016/S2542-5196(20)30168-6

71. Magnusson RS, Patterson D. The role of law and governance reform in the global response to non-communicable diseases. Global Health. (2014) 10:44. doi: 10.1186/1744-8603-10-44

72. Chen JH, Asch SM. Machine learning and prediction in medicine - beyond the peak of inflated expectations. N Engl J Med. (2017) 376:2507–9. doi: 10.1056/NEJMp1702071

73. Ramspek CL, Jager KJ, Dekker FW, Zoccali C, van Diepen M. External validation of prognostic models: what, why, how, when and where? Clin Kidney J. (2021) 14:49–58. doi: 10.1093/ckj/sfaa188

74. Grimes DA, Schulz KF. Bias and causal associations in observational research. Lancet. (2002) 359:248–52. doi: 10.1016/S0140-6736(02)07451-2

Keywords: AI, COVID-19, pharmacoepidemiology, bias, PROBAST, predictive modeling

Citation: Shakibfar S, Nyberg F, Li H, Zhao J, Nordeng HME, Sandve GKF, Pavlovic M, Hajiebrahimi M, Andersen M and Sessa M (2023) Artificial intelligence-driven prediction of COVID-19-related hospitalization and death: a systematic review. Front. Public Health 11:1183725. doi: 10.3389/fpubh.2023.1183725

Received: 15 March 2023; Accepted: 31 May 2023;

Published: 20 June 2023.

Edited by:

Jason C. Hsu, Taipei Medical University, Taiwan

Reviewed by:

Yen Kuang Lin, National Taiwan Sport University, Taiwan

Copyright © 2023 Shakibfar, Nyberg, Li, Zhao, Nordeng, Sandve, Pavlovic, Hajiebrahimi, Andersen and Sessa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Saeed Shakibfar, saeed.shakibfar@sund.ku.dk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
