- 1Hongqiao International Institute of Medicine, Shanghai Tongren Hospital and School of Public Health, Shanghai Jiao Tong University School of Medicine, Shanghai, China

- 2Department of Cardiothoracic Surgery, Xinhua Hospital, School of Medicine, Shanghai Jiao Tong University, Shanghai, China

- 3Department of Orthopaedic Surgery, Shanghai Jiaotong University Affiliated Sixth People's Hospital, Shanghai, China

- 4Shanghai Clinical Research Promotion and Development Center, Shanghai Shenkang Hospital Development Center, Shanghai, China

Objectives: Quality can be a challenge for investigator-initiated trials (IITs), since these trials are rarely overseen by a sponsor or monitoring team. Quality assessment is therefore important and urgently needed for departments that manage clinical research grant programs. Our study aims to develop a handy quality assessment tool for IITs that can be applied by both management departments and project teams.

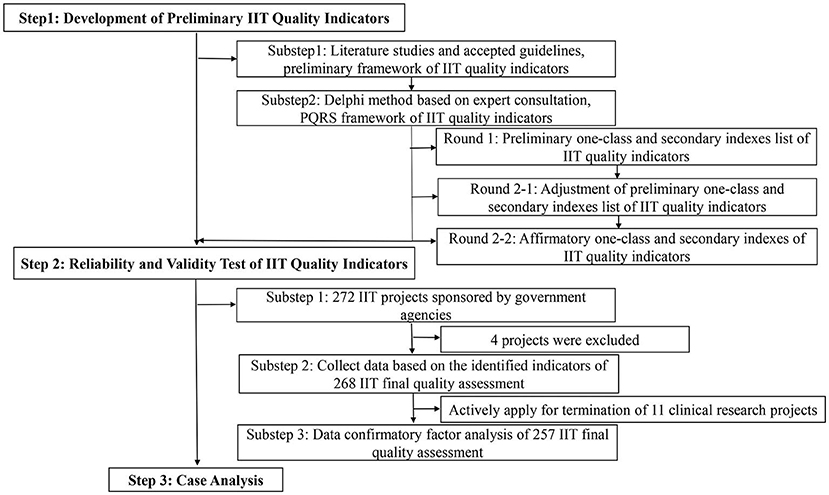

Methods: The framework of the quality assessment tool was developed from a literature review, accepted guidelines, and the Delphi method. A total of 272 ongoing IITs funded by Shanghai non-profit organizations in 2015 and 2016 were used to extract quality indexes. Confirmatory factor analysis (CFA) was used to further evaluate the validity and feasibility of the conceptual quality assessment tool.

Results: The tool consisted of 4 critical quality attributes (progress, quality, regulation, and scientificity) and 13 observed quality indexes. A total of 257 IITs were included in the validity and feasibility assessment. The majority (60.29%) were randomized controlled trials (RCTs), and 41.18% were multi-center studies. CFA showed that the model fit the data adequately (CMIN/DF = 1.868; GFI = 0.916; CFI = 0.936; TLI = 0.919; RMSEA = 0.063; SRMR = 0.076). Different types of clinical studies fit the tool well. However, RCTs scored lower than prospective cohort and retrospective studies in enrollment progress (7.02 vs. 7.43 and 9.63, respectively).

Conclusion: This study established a panoramic quality assessment tool based on the Delphi method and CFA, and the feasibility and effectiveness of the tool were verified through clinical research examples. The use of this tool can help project management departments effectively and dynamically manage research projects, rationally allocate resources, and ensure the quality of IITs.

Introduction

Investigator-initiated trials (IITs) complement industry-sponsored trials (ISTs), optimize existing therapies or treatment approaches, and attempt to answer clinical problems (1, 2). In addition, IITs not only facilitate a better understanding of disease domains and drug effects, but also help translate academic research into clinical practice. Recent literature has also reported that IITs have changed the practice of medicine (3).

Industry-sponsored trials have rigorous monitoring and auditing to ensure the authenticity and reliability of data, while IITs often lack resources and may not have similar quality checks (4, 5). IITs are as important as ISTs because they explore the use of marketed drugs for new indications and compare clinical diagnosis or treatment effects (4). Thus, the quality of IITs should be taken seriously (6). For hospitals, an alternative quality management system could be adopted to alleviate regulatory pressures (7), and a comprehensive and feasible quality assessment tool is also urgently required because IITs face challenges in both design and conduct.

Over the past decade, a growing number of studies have emphasized the importance of methodology and the quality of research reporting, in both IITs and ISTs (8, 9). Several publications highlighted that risk-adapted monitoring was important for quality control and sufficient to identify critical issues in the conduct of clinical trials (10, 11). Quality assessment tools for research design or reporting are abundant, whereas few address operation and funding decisions (12). The Risk-Based Monitoring Toolbox of the European Clinical Research Infrastructure Network provides information on tools available for risk assessment, monitoring, and study conduct. The toolbox was mainly created from literature reviews or surveys and is a collection of risk-based tools and strategies (13). Take RACT and ADAMON as examples. In 2013, TransCelerate BioPharma, an independent non-profit organization, developed the Risk Assessment and Categorization Tool (RACT), which many biopharmaceutical companies have used to assess the risk level of clinical trials before the start of the trial and at regular checkups. Although the RACT offered a very useful methodology for study-level risk assessment, it had some weaknesses when used to evaluate IITs: it was prone to subjectivity and lacked important categories (14). The risk-adapted monitoring (ADAMON) risk scale can be used for IITs, but it only assesses the required amount of on-site monitoring, not overall quality. Furthermore, Patwardhan et al. (15) drew up a checklist of criteria that are essential in IIT documentation, but it is not suitable for assessing large-scale IITs. Systematic studies of quality assessment tools designed for large-scale IITs are rare. The main goal of this study is to develop an operational quality assessment tool for IITs to enhance the quality of clinical research, ensure the safety of subjects and the authenticity and reliability of data, and avoid waste of resources.

Materials and methods

Index system construction

The quality indicators were extracted based on the following three principles: the indicators should be (1) usable for evaluating the quality of clinical research, (2) relatively simple and easy to understand, and (3) easy to operate, to ensure smooth progress of the quality assessment. The preliminary quality indicators and the basic framework for IITs were first developed through discussion by the indices development committee, focus group interviews, and a review of the literature and accepted guidelines, including the International Council on Harmonization E6 Guidance Revision 2 [ICH E6(R2)] (16), guidance from the United States Food and Drug Administration (US FDA) (17) and the European Medicines Agency (EMA) (18), the SPIRIT 2013 Statement (19), and the CONSORT 2010 statement (20). The indices development committee covered various roles in clinical research: a clinical expert, two statisticians, a project manager (PM), a data manager (DM), two clinical research associates (CRAs), a senior research manager, and a financial expert. All members had received GCP training.

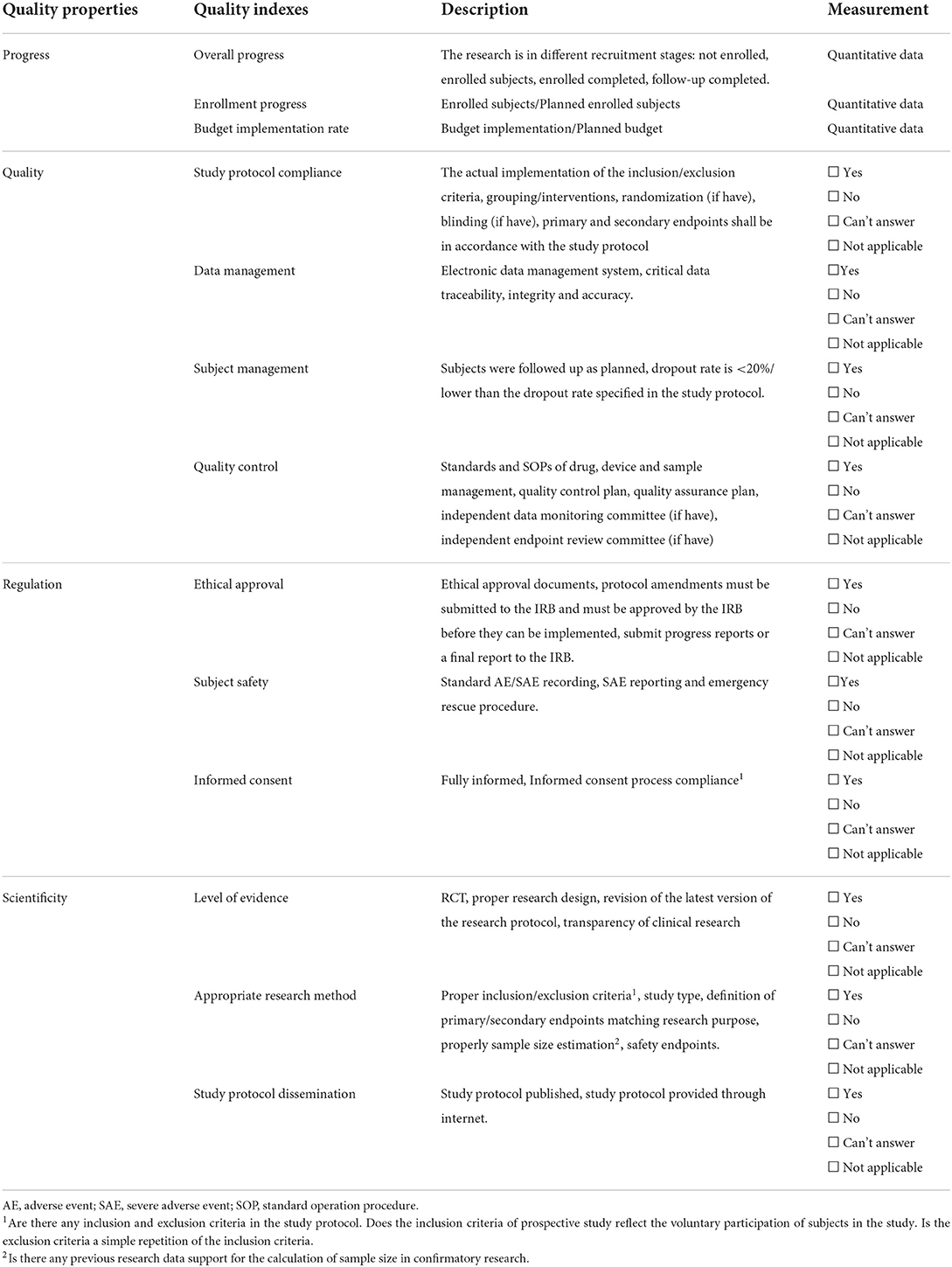

Investigator-initiated trials should follow GCP principles to ensure the protection of trial subjects and to assure the quality and credibility of the data obtained. We used focus group interviews to collect and build the critical attributes of IITs that funding agencies such as the NIH, clinical research organizations, and hospitals are most concerned about. Finally, four critical attributes of IITs were obtained: progress, quality, regulation, and scientificity. We further expanded the indices under these four critical attributes by referring to the literature and accepted guidelines (Table 1). The importance and feasibility of the indicators were further evaluated through two rounds of the Delphi method. We consulted more than 20 experts with senior titles in each round, including clinical research methodologists, research managers, and clinicians engaged in clinical research. Furthermore, this research used confirmatory factor analysis (CFA) and structural equation modeling to evaluate the structural validity of the index system (Figure 1).

Data source

This study obtained 272 IITs sponsored by Shanghai non-profit organizations in 2015 and 2016. The projects mainly covered the standardized application of clinical diagnosis and treatment technology for frequently occurring, chronic, and difficult diseases, and the promotion of research achievements. We included IITs that (1) involved human subjects, (2) had an attached or published protocol, and (3) had enrolled patients. Animal or in vitro experiments, projects without data collection, and studies without protocols were excluded. Finally, a total of 257 IITs were included in our research: four projects were excluded because they did not involve human subjects, and another 11 were excluded because the project teams had actively applied for project termination.

Data extraction and quality assessment

Our previous study drew on the risk-based monitoring method advocated internationally. It explored a set of standard processes within the framework of clinical research and regulation, which mainly comprised preparation before quality assessment, self-assessment by the project team, centralized inspection, on-site inspection, report writing, and comprehensive assessment. Following this clinical research quality assessment process, our team provided mid-term verification and quality control technical support for the quality assessment of IIT project implementation (21). The quality of the 257 clinical research projects was also assessed through these standard processes. Data were collected by reviewing the research protocols, ethical approvals, case report forms (CRFs), and informed consent forms submitted by researchers.

A nine-member committee was mainly responsible for collecting data and assessing the indicators of IITs; it comprised a PM, a DM, CRAs, statisticians, a clinical expert, a financial expert, and a scientific research management expert. The PM was responsible for work coordination, and the DM for database building and management. Two CRAs collected data on quality and ethical regulation, a financial expert collected data on the implementation of project funds, and a senior research manager collected data on the overall progress and enrollment progress of IITs. The statisticians and the clinical expert collected data on scientific aspects, mainly assessing the level of evidence and the appropriateness of the research method. All document reviewers were trained in standard procedures for reviewing research files.

For quantitative analysis, the following quality indexes were assessed: overall progress, enrollment progress, and budget implementation rate. Each quality index was scored from 0 to 10. Overall progress was classified as not enrolled, enrolled, treatment completed, or follow-up completed, and research type as RCT, prospective cohort, retrospective study, real-world research, or other. These statuses were given different scores. To quantify the data from the research files, each quality index was scored proportionally based on its components. The total score for each index was 10 points. One point was deducted for each subindex answered “No” or “Can't answer,” and the minimum score of each index was 0.
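
The deduction rule for file-based indexes can be illustrated with a short sketch. This is not the authors' implementation: the function name `score_index` and the representation of answers as strings are hypothetical, and the sketch assumes that one point is deducted per “No” or “Can't answer” response.

```python
from typing import Iterable

MAX_SCORE = 10  # each quality index is scored out of 10 points
DEDUCTING_ANSWERS = {"No", "Can't answer"}  # answers assumed to cost one point each


def score_index(answers: Iterable[str]) -> int:
    """Score one quality index from its subindex answers (hypothetical helper)."""
    deductions = sum(1 for answer in answers if answer in DEDUCTING_ANSWERS)
    # The score is floored at 0, matching the stated minimum for each index.
    return max(0, MAX_SCORE - deductions)


example_score = score_index(["Yes", "No", "Yes", "Can't answer"])
print(example_score)
```

Under these assumptions, an index with two deducting answers scores 8, and an index with more than ten deducting answers is held at 0 rather than going negative.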

Statistical methods

REDCap [Research Electronic Data Capture (http://projectredcap.org)] was used for data entry to control data quality. All statistical analyses were performed in R (version 3.5.3) using the “lavaan” and “semPlot” packages. Basic characteristics of the included IITs were described as mean and standard deviation or as percentages, as appropriate. The structural validity of the index system for the quality evaluation of clinical research was tested by confirmatory factor analysis. Model fit was evaluated using the ratio of chi-square (χ²) to degrees of freedom (CMIN/DF), the Goodness-of-Fit Index (GFI), the Comparative Fit Index (CFI), the Tucker-Lewis Index (TLI), the Root Mean Square Error of Approximation (RMSEA), and the Standardized Root Mean Square Residual (SRMR). GFI, CFI, and TLI generally range from 0 to 1, and the closer they are to 1, the better the fit; it is generally believed that CFI and TLI should be >0.9 and GFI at least >0.80 (22). RMSEA is an index of model fit, and SRMR measures fit through the standardized difference between the observed correlations and the model-implied correlations among variables. The closer these two indexes are to 0, the better the model is considered to fit (22); RMSEA values as high as 0.07 were regarded as acceptable, and SRMR should be <0.08 (23). Two-sided P values of <0.05 were considered to indicate statistical significance. The radar plot was generated in Excel.
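
The fit criteria can be summarized as a simple checklist. The sketch below is illustrative only and is written in Python rather than the R/lavaan workflow used in the study; the names `THRESHOLDS` and `check_fit` are hypothetical. The CMIN/DF cut-off of 3 is the one applied in the Results, and the example values are the fit statistics reported in the abstract.

```python
# Acceptance rules as stated in this article; each maps an index name to a predicate.
THRESHOLDS = {
    "CMIN/DF": lambda v: v < 3,     # cut-off applied in the Results section
    "GFI": lambda v: v > 0.80,
    "CFI": lambda v: v > 0.90,
    "TLI": lambda v: v > 0.90,
    "RMSEA": lambda v: v <= 0.07,   # values as high as 0.07 regarded as acceptable
    "SRMR": lambda v: v < 0.08,
}


def check_fit(indices: dict) -> dict:
    """Return, for each supplied fit index, whether it meets its cut-off."""
    return {name: rule(indices[name]) for name, rule in THRESHOLDS.items() if name in indices}


# Fit statistics reported for the final model.
reported = {"CMIN/DF": 1.868, "GFI": 0.916, "CFI": 0.936,
            "TLI": 0.919, "RMSEA": 0.063, "SRMR": 0.076}
fit_result = check_fit(reported)
print(fit_result)
```

With the reported values, every criterion evaluates to `True`, which is the basis for describing the model fit as adequate.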

Results

The framework of quality indexes for IITs

We conducted two rounds of Delphi panels. The positive coefficient of the experts was 100% in both rounds and the degree of expert authority was 0.932, indicating that the experts participated actively, had a high degree of authority, and that the consultation worked well. We revised some indices by summarizing and analyzing the experts' opinions from the first round. For example, study protocol dissemination was included as one of the scientificity indices: experts believed that all prospective clinical trials should be entered in an appropriate registry before the first participant is enrolled, and that the study protocol should be made public. At the end of the first round, we deleted two indicators, added one indicator, and revised the wording. In the second round, we fed the results of the first-round consultation back to the experts, who reached consensus on the revised indicators. Furthermore, CFA was used to analyze the reliability and validity of the indicators. The research finally identified the conceptual quality indicators for IITs, which fall under four themes: progress, quality, regulation, and scientificity. As shown in Table 1, 4 quality properties and 13 quality indices were developed for clinical trials.

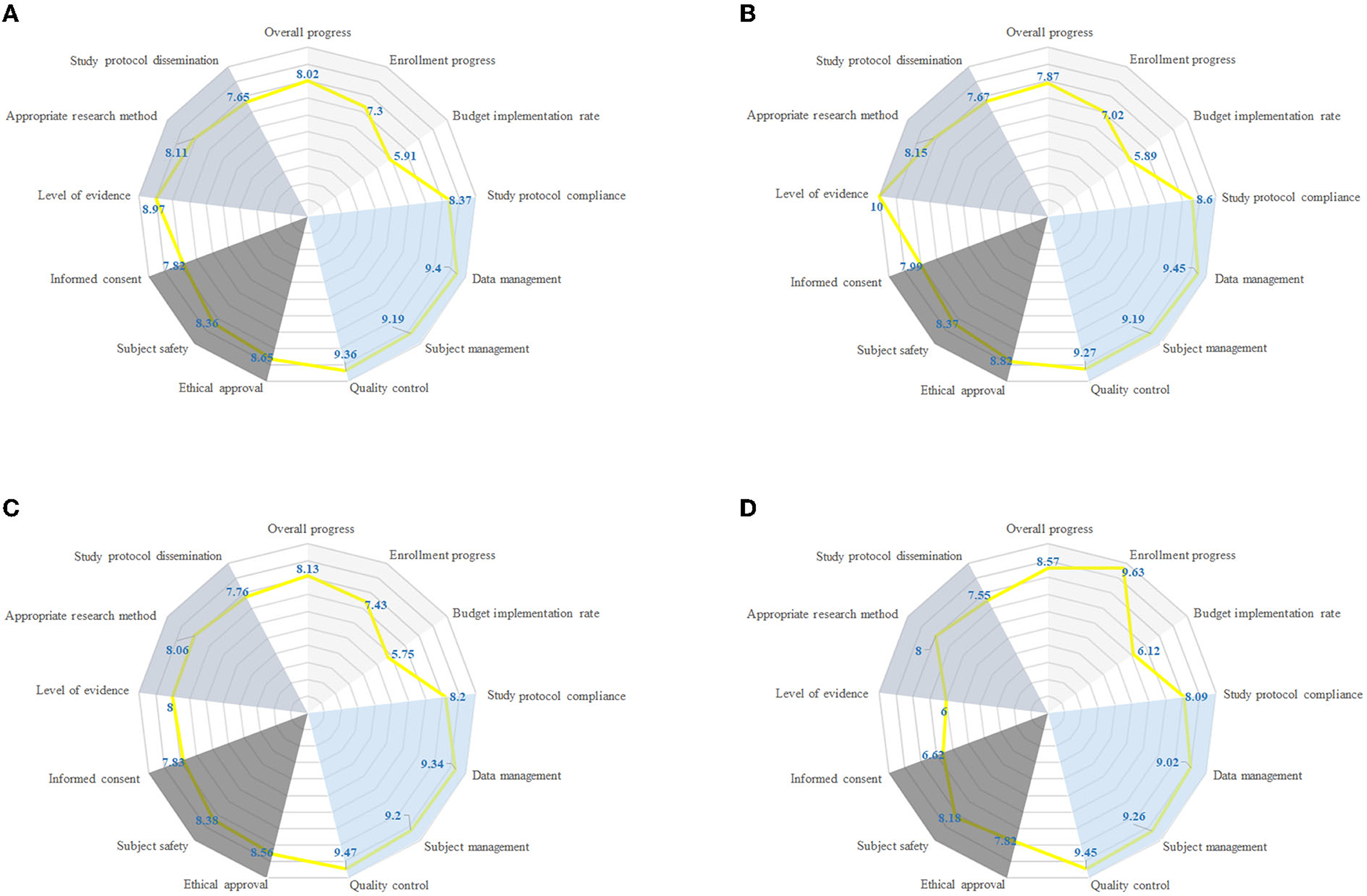

Characteristics of included IITs

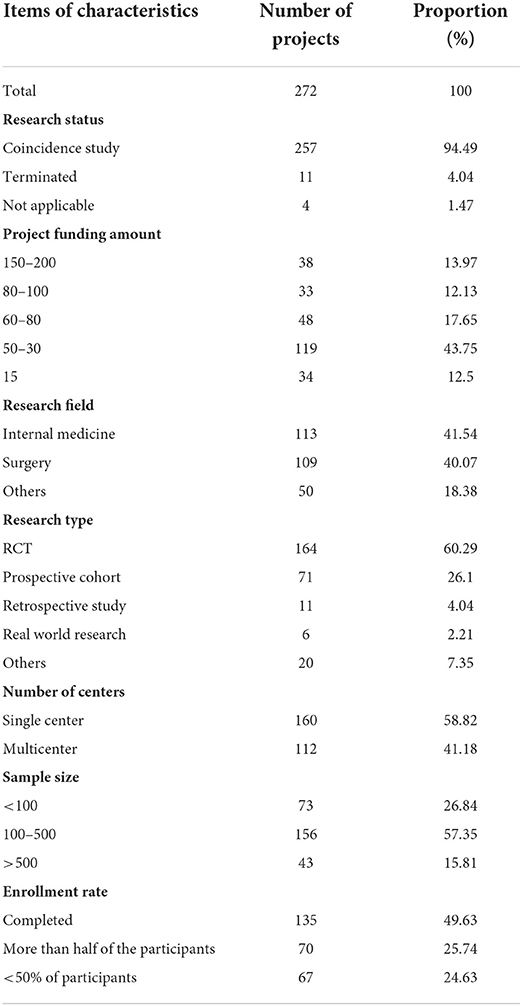

In 2015 and 2016, non-profit government organizations funded 272 IITs across 30 tertiary first-class hospitals in Shanghai. The projects were concluded in 2019. Four projects were excluded, and 11 clinical research projects actively applied for termination. Finally, 257 studies were included for validation of the quality indicators for IITs: of the 272 funded studies, 94.49% were assessed, 4.04% were terminated early, and 1.47% were excluded (Table 2). Unless stated otherwise, the percentages below are also of the 272 funded studies. By department, 41.54% of the studies were in internal medicine, and others (18.38%) mainly included the department of facial features, obstetrics and gynecology, and pediatrics. The majority of the studies (60.29%) were RCTs, and others (7.35%) mainly included diagnostic and cross-sectional studies. Single-center studies accounted for 58.82%. A sample size of 100–500 was used in 57.35% of the studies, and only 26.84% had a sample size of <100. In 45.59% of the projects, the research protocol changed during the implementation of the study. As shown in Figure 2, 8.82% of trials changed inclusion criteria during implementation; interventions and sample size also changed (5.88% and 4.78%, respectively).
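
As a quick arithmetic check of the study-status percentages, the sketch below recomputes them from the counts given in the Data source section (257 assessed, 11 terminated on request, 4 excluded), using all 272 funded studies as the denominator. The variable and function names are illustrative only.

```python
FUNDED = 272  # all IITs funded in 2015 and 2016

# Counts taken from the Data source section of this article.
counts = {"assessed": 257, "terminated": 11, "excluded": 4}


def pct(n: int, total: int = FUNDED) -> float:
    """Percentage of the funded studies, rounded to two decimals."""
    return round(100 * n / total, 2)


shares = {status: pct(n) for status, n in counts.items()}
print(shares)
```

The three counts sum to 272, and the recomputed shares match the 94.49%, 4.04%, and 1.47% reported above.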

Table 2. Basic characteristics of the investigator-initiated trials included in the validation of the quality assessment tool.

Figure 2. Distributions of research type and protocol change for IITs. (A) Distribution of research type for IITs. (B) Distribution of protocol change for IITs.

Validation of quality assessment tool of IITs

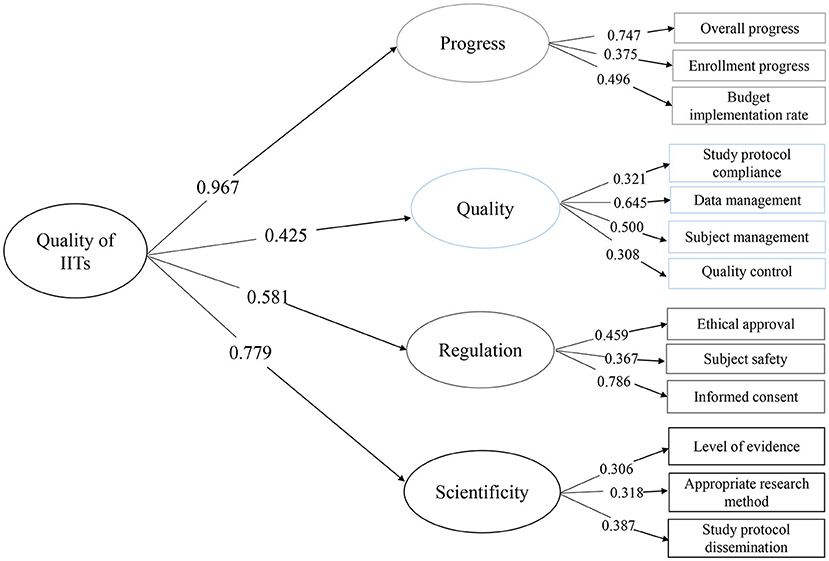

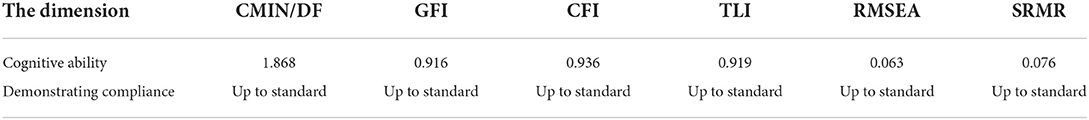

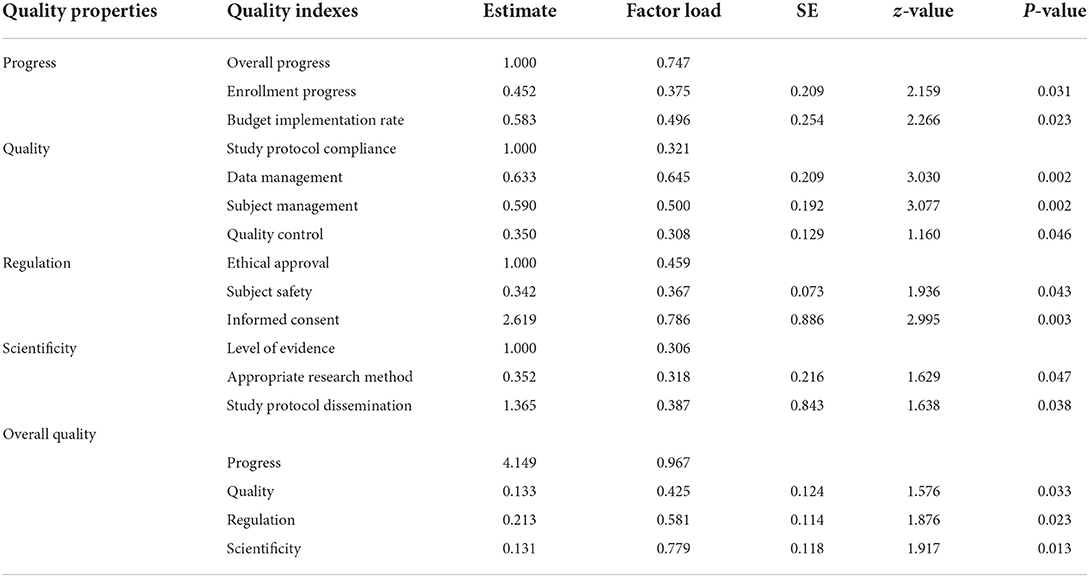

The construct validity of the preliminary quality indicators was further evaluated using CFA (Figure 3). The critical quality properties and quality indexes derived from expert opinion were consistent with those supported by CFA. In this study, CMIN/DF, GFI, CFI, TLI, RMSEA, and SRMR were selected as the fit indexes of the statistical model. As shown in Table 3, CMIN/DF was 1.868 (<3), GFI, CFI, and TLI were all larger than 0.9, RMSEA was <0.07, and SRMR was <0.08, so the extracted quality assessment indexes can be considered feasible. As shown in Table 4, most factor loadings were significant at P < 0.05 and ranged from a minimum of 0.306 to a maximum of 0.786. Meanwhile, the factor loadings between overall IIT quality and the one-class indexes ranged from 0.425 to 0.967, with P < 0.05. CFA was used to screen the 17 indices across the 257 IITs. The results suggested that the standardized factor loadings of the 17 indices were all statistically significant and all remained above 0.3 (23); thus, the preliminary screening did not delete any index.

Results of quality assessment of IITs

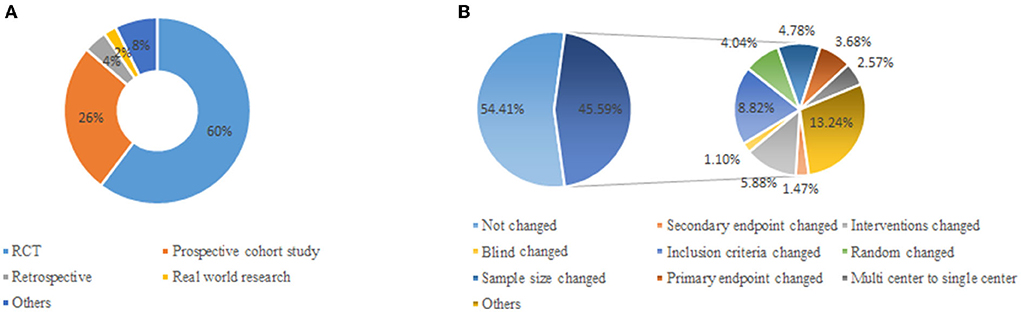

Radar plots were used to display the data. A radar plot has a series of spokes or rays arising from a central point, with each ray showing a different index, such as data management. In Figure 4, each spoke shows one of the secondary indexes, each point on a spoke reflects the magnitude of the mean result, and the differently colored regions represent the different one-class indexes. Among the 257 IITs, data management scored well, with an average score of 9.40, followed by quality control at 9.36. Enrollment progress and budget implementation rate scored low, at 7.30 and 5.91, respectively. RCTs, prospective cohort studies, and retrospective studies performed similarly in subject management, appropriate research method, and quality control. However, RCTs had lower scores than prospective cohort and retrospective studies in enrollment progress (7.02 vs. 7.43 and 9.63, respectively).

Figure 4. Radar chart comparing different research types with respect to 13 quality characteristics (individual radial axes). Each axis shows the fraction of IITs with a given property, such as overall progress, enrollment progress, or budget implementation rate. (A) Quality characteristics of all IITs. (B) Quality characteristics of RCTs. (C) Quality characteristics of prospective cohort studies. (D) Quality characteristics of retrospective studies.

Discussion

Our study proposed a proactive quality assessment framework for IITs consisting of four aspects: progress, quality, regulation, and scientificity. A total of 257 IITs were included for the validation of the quality indicators. We further confirmed the structural validity of the critical quality properties and quality indexes as latent variables. According to the CFA results, CMIN/DF, GFI, CFI, TLI, RMSEA, SRMR, and the other indicators used in the model test all met the requirements. The CFA model revealed strong positive links from the four quality indexes to the overall quality of IITs.

The panorama quality assessment tool can effectively evaluate the quality of research and help find major bias. For management bodies such as the National Institutes of Health, the assessment tool can improve the efficiency and effectiveness of funding. With the help of this tool, funding agencies can better decide which projects should be supported or terminated. An assessment tool is very important for managing and improving the quality of clinical trials. The standard quality assessment processes within the framework of clinical research and regulation follow international guidelines. Traditionally, the International Conference on Harmonization Good Clinical Practice (ICH-GCP) guideline described two verification activities, quality control and quality assurance, with the aims of protecting the rights and wellbeing of subjects and ensuring protocol compliance and data integrity. The FDA (17) and EMA (18) guidelines, both issued in 2013, the ICH GCP guideline (16) issued in 2016, and the NMPA GCP (24) issued in 2020 suggested focusing on critical data and critical processes and encouraged the adoption of risk-based approaches to monitoring clinical trials. A series of studies reported that risk-based monitoring had the potential to make trials more efficient and reduce costs (25, 26). In this study, a risk-based approach was adopted to identify the critical data of IITs and to improve the capacity to self-regulate overall quality. The variety of research types among IIT projects increases the difficulty of quality evaluation. Standardizing monitoring practices is a suitable method for managing IITs.

We further confirmed the structural validity of the IIT quality assessment tool by CFA. The model also showed the importance of progress and scientificity. Progress, measured jointly by overall progress, enrollment progress, and budget implementation rate, exerted direct and indirect effects on the overall quality of IITs in our theory, which was confirmed by the model. Poor recruitment of participants is the most common reason for RCT discontinuation, which reflects a large waste of scarce research resources (27). In this study, enrollment progress and budget implementation rate scored 7.30 and 5.91, respectively, which were rather low. The low recruitment progress may be linked to funding, design, recruiters, or participants (28). In addition, recruitment progress differed among research types and was lowest for RCTs. Scientificity, measured jointly by level of evidence, appropriate research method, and study protocol dissemination, had direct and indirect impacts on the overall quality of IITs in our theory, which was confirmed by the model. Our study found that almost half (117/257) of the research protocols were adjusted during implementation, which may be associated with poor design. It is important that all research findings, including negative and inconclusive results, are reported transparently and made publicly available in order to avoid unnecessary duplication of research or biases in the clinical knowledge base (8). However, our study found that 80% of the project research protocols had not been published in public journals or on websites, which was consistent with the literature (29). Also, poorly conducted research may result in slow dissemination of research results, as has been reported among registered clinical studies (29), with almost half of the studies remaining unpublished years after completion. Reported reasons for non-publication include “a lack of time or low priority,” followed by “results not important enough” and “journal rejection” (30).

Previous assessment tools such as RACT are more suitable for ISTs, whereas the panorama tool developed in this study was optimized for funding agencies to assess the quality of ongoing IITs. For ISTs, the responsibility to avoid failure due to unsatisfactory progress or scientificity lies mostly with the industrial sponsor. Therefore, the regulatory department can focus on the ethics and quality aspects of the studies. However, for IITs, funding agencies should take progress and scientificity into consideration so that resources are used more efficiently. Therefore, unlike the traditional view that the most important aspects of clinical trials are subject safety and rights plus data quality, this tool also emphasizes the importance of progress and scientificity. The tool can also be used to assess the quality of both study design and implementation, and can be used throughout the whole course of clinical research, regardless of research type. Moreover, unlike other risk-based monitoring tools, this tool can not only identify risks and determine monitoring methods but also be used to compare the quality of several clinical studies.

Our study had several advantages as well as limitations. First, the samples used for the CFA were collected from ongoing studies, and we identified no previous research that addressed ongoing studies. The tool we developed can objectively reflect current research status, regardless of research type. Second, the sample size was adequate: we collected more than 200 samples for CFA, and CFI is a non-centrality parameter-based index designed to overcome the limitation of sample size effects (31). Third, our study adopted a risk-based monitoring method to identify critical data and processes, which was in line with international trends and saved resources. The quality assessment tool for IITs enabled us to evaluate the overall quality of IITs and helped refine quality practices in IITs. However, we only included clinical research projects in Shanghai hospitals. Further research is needed to confirm this tool in more general scenarios.

Conclusion

The critical quality properties and quality indexes derived from expert opinion were basically consistent with the results of confirmatory factor analysis, indicating that applying this panoramic quality assessment tool to the overall quality evaluation of IITs is feasible and valid. This panorama tool can enable project management departments to manage the quality of their studies effectively and dynamically, to find errors in a timely manner, and to take action to prevent major bias. Furthermore, project management departments will be able to terminate “low-quality” projects early and provide rolling support for “high-quality” projects based on their status. It is hoped that this tool can provide project management departments with a resource for effective and dynamic management of research, help avoid waste of resources, and offer a way to improve the quality of IITs in the future.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

WL, TH, WZ, and BQ designed the study. WZ and BQ co-ordinated the study. WL, TH, JJ, TQ, ES, JD, and XM performed the acquisition of data and the statistical analysis. WL and TH drafted the manuscript. All authors revised the final manuscript and approved this version to be published.

Funding

This work was supported by the Project of Shanghai Jiao Tong University School of Medicine (Grant No. WK2003), Shanghai Jiao Tong University Medical and Industrial Cross Project (YG2022QN004), and Program of Shanghai Academic/Technology Research Leader (Grant No. 21XD1402600).

Acknowledgments

We thank all the researchers who were engaged in quality evaluation of IITs.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

AE, adverse event; CFI, comparative fit index; GCP, good clinical practice; ICH, International Conference on Harmonization; PQRS, progress-quality-regulation-scientificity; RMSEA, root mean square error of approximation; NMPA, National Medical Products Administration; SAE, severe adverse event; SEM, structural equation modeling; SOP, standard operation procedure; TLI, Tucker-Lewis index.

References

1. Konwar M, Bose D, Gogtay NJ, Thatte UM. Investigator-initiated studies: challenges and solutions. Perspect Clin Res. (2018) 9:179–83. doi: 10.4103/picr.PICR_106_18

2. Herfarth HH, Jackson S, Schliebe BG, Martin C, Ivanova A, Anton K, et al. Investigator-initiated IBD trials in the United States: facts, obstacles, and answers. Inflamm Bowel Dis. (2017) 23:14–22. doi: 10.1097/MIB.0000000000000907

3. Williams B, Poulter NR, Brown MJ, Davis M, McInnes GT, Potter JF, et al. British hypertension society guidelines for hypertension management 2004 (BHS-IV): summary. BMJ. (2004) 328:634–40. doi: 10.1136/bmj.328.7440.634

4. Davis S. Embedding good clinical practice into investigator-initiated studies or trials. Perspect Clin Res. (2020) 11:1–2. doi: 10.4103/picr.PICR_2_20

5. Figer BH, Sapra KP, Gogtay NJ, Thatte UM. A comparative study to evaluate quality of data documentation between investigator-initiated and pharmaceutical industry-sponsored studies. Perspect Clin Res. (2020) 11:13–7. doi: 10.4103/picr.PICR_122_18

6. Kondo S, Hosoi H, Itahashi K, Hashimoto J. Quality evaluation of investigator-initiated trials using post-approval cancer drugs in Japan. Cancer Sci. (2017) 108:995–9. doi: 10.1111/cas.13223

7. van Oijen JCF, Grit KJ, Bos WJW, Bal R. Assuring data quality in investigator-initiated trials in Dutch hospitals: balancing between mentoring and monitoring. Account Res. (2021) 1–29. doi: 10.1080/08989621.2021.1944810

8. Blümle A, Wollmann K, Bischoff K, Kapp P, Lohner S, Nury E, et al. Investigator initiated trials versus industry sponsored trials - translation of randomized controlled trials into clinical practice (IMPACT). BMC Med Res Methodol. (2021) 21:182. doi: 10.1186/s12874-021-01359-x

9. Zhu RF, Gao YL, Robert SH, Gao JP, Yang SG, Zhu CT. Systematic review of the registered clinical trials for coronavirus disease 2019 (COVID-19). J Transl Med. (2020) 18:274. doi: 10.1186/s12967-020-02442-5

10. Brosteanu O, Schwarz G, Houben P, Paulus U, Strenge-Hesse A, Zettelmeyer U, et al. Risk-adapted monitoring is not inferior to extensive on-site monitoring: results of the ADAMON cluster-randomized study. Clin Trials. (2017) 14:584–96. doi: 10.1177/1740774517724165

11. Stenning SP, Cragg WJ, Joffe N, Diaz-Montana C, Choudhury R, Sydes MR, et al. Triggered or routine site monitoring visits for randomized controlled trials: results of TEMPER, a prospective, matched-pair study. Clin Trials. (2018) 15:600–9. doi: 10.1177/1740774518793379

12. Lundh A, Rasmussen K, Stengaard L, Boutron I, Stewart LA, Hróbjartsson A. Systematic review finds that appraisal tools for medical research studies address conflicts of interest superficially. J Clin Epidemiol. (2020) 39:17–21. doi: 10.1016/j.jclinepi.2019.12.005

13. European Clinical Research Infrastructure Network (ECRIN). Risk-Based Monitoring Toolbox. (2015). Available online at: https://ecrin.org/tools/risk-based-monitoring-toolbox (accessed August 19, 2022).

14. TransCelerate. Risk-Based Monitoring Position Paper. (2013). Available online at: http://admin.cqaf.org/theme/default/cache/file/20170718/1500346900606780.pdf (accessed March 1, 2022).

15. Patwardhan S, Gogtay N, Thatte U, Pramesh CS. Quality and completeness of data documentation in an investigator-initiated trial versus an industry-sponsored trial. Indian J Med Ethics. (2014) 11:19–24. doi: 10.20529/IJME.2014.006

16. ICH. ICH harmonized Guideline: Integrated Addendum to ICH E6(R1): Guideline for Good Clinical Practice E6(R2) (Current Step 4 version). (2016). Available online at: https://www.ich.org/fileadmin/Public_Web_Site/ICH_Products/Guidelines/Efficacy/E6/E6_R2__Step_4_2016_1109.pdf (accessed March 1, 2022).

17. FDA. Guidance for Industry: Oversight of Clinical Investigations—A Risk-Based Approach to Monitoring. (2013). Available online at: https://www.fda.gov/media/116754/download (accessed March 1, 2022).

18. EMA. Reflection Paper on Risk-Based Quality Management in Clinical Trials. (2013). Available online at: http://www.ema.europa.eu/docs/en_GB/document_library/Scientific_guideline/2013/11/WC500155491.pdf (accessed March 1, 2022).

19. Chan AW, Tetzlaff JM, Altman DG, Dickersin K, Moher D. SPIRIT 2013: new guidance for content of clinical trial protocols. Lancet. (2013) 381:91–2. doi: 10.1016/S0140-6736(12)62160-6

20. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. BMJ. (2010) 340:c332. doi: 10.1136/bmj.c332

21. Lv WW, Hu TT, Zhang WT, Qu TT, Shen EL, Sun Z, et al. Discussion on quality assessment process of investigator-initiated trial implementation. Chin J New Drugs Clin Remedies. (2020) 39:17–21. doi: 10.14109/j.cnki.xyylc.2020.01.04

22. Ondé D, Alvarado JM. Reconsidering the conditions for conducting confirmatory factor analysis. Span J Psychol. (2020) 23:e55. doi: 10.1017/SJP.2020.56

23. Kline RB. Principles and Practice of Structural Equation Modeling 2nd ed. New York, NY: Guilford (2005).

24. NMPA. Good Clinical Practice. (2020). Available online at: http://www.nhc.gov.cn/yzygj/s7659/202004/1d5d7ea301f04adba4c4e47d2e92eb96.shtml (accessed March 1, 2022).

25. Beever D, Swaby L. An evaluation of risk-based monitoring in pragmatic trials in UK clinical trials units. Curr Control Trials Cardiovasc Med. (2019) 20:556. doi: 10.1186/s13063-019-3619-6

26. Journot V, Pignon JP, Gaultier C, Daurat V, Bouxin-Métro A, Giraudeau B, et al. Validation of a risk-assessment scale and a risk-adapted monitoring plan for academic clinical research studies–the pre-optimon study. Contemp Clin Trials. (2011) 32:16–24. doi: 10.1016/j.cct.2010.10.001

27. Kasenda B, Elm EV, You J, Blümle A, Tomonaga Y, Saccilotto R, et al. Prevalence, characteristics, and publication of discontinued randomized trials. JAMA. (2014) 311:1045–51. doi: 10.1001/jama.2014.1361

28. Briel M, Olu KK, von Elm E, Kasenda B, Alturki R, Agarwal A, et al. A systematic review of discontinued trials suggested that most reasons for recruitment failure were preventable. J Clin Epidemiol. (2016) 80:8–15. doi: 10.1016/j.jclinepi.2016.07.016

29. Magdalena Z, Mark D, Hingorani AD, Jackie H. Clinical trial design and dissemination: comprehensive analysis of clinicaltrials.gov and PubMed data since 2005. BMJ. (2018) 361:k2130. doi: 10.1136/bmj.k2130

30. Song F, Parekh S, Hooper L, Loke YK, Harvey IM. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. (2010) 14:1–193. doi: 10.3310/hta14080

Keywords: investigator initiated trials, quality assessment, tool, quality attributes, structural model

Citation: Lv W, Hu T, Jiang J, Qu T, Shen E, Duan J, Miao X, Zhang W and Qian B (2022) Panoramic quality assessment tool for investigator initiated trials. Front. Public Health 10:988574. doi: 10.3389/fpubh.2022.988574

Received: 07 July 2022; Accepted: 25 August 2022;

Published: 13 September 2022.

Edited by:

Songlin He, Chongqing Medical University, China

Reviewed by:

Aizuddin Hidrus, University of Malaysia Sabah, Malaysia

Baosen Zhou, China Medical University, China

Zhi-Jie Zheng, Peking University, China

Copyright © 2022 Lv, Hu, Jiang, Qu, Shen, Duan, Miao, Zhang and Qian. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Biyun Qian, qianbiyun@sjtu.edu.cn; Weituo Zhang, weituozhang@126.com

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work and share last authorship

Wenwen Lv, Tingting Hu1†, Weituo Zhang, Biyun Qian