- 1Medical School of the Chinese PLA, Beijing, China

- 2Department of General Medicine, The First Center of the Chinese PLA General Hospital, Beijing, China

- 3School of Control Science and Engineering, Shandong University, Jinan, Shandong, China

- 4Luoyang Outpatient Department of 63650 Army Hospital of the Chinese PLA, Luoyang, China

- 5Department of Orthopedics, Chinese PLA General Hospital, National Clinical Research Center for Orthopedics, Sports Medicine and Rehabilitation, Beijing, China

Background: According to the WHO, anemia is a highly prevalent disease, especially among patients in the emergency department. Anemia affects facial characteristics, for example by causing pallor of the mucous membranes, and deep learning technology has been shown to detect anemia from such changes. A quick prediction method for patients in the emergency department is important for screening the anemic state and judging the necessity of blood transfusion treatment.

Method: We trained a deep learning system to predict anemia using videos of 316 patients. All the videos were taken with the same portable pad in the ambient environment of the emergency department. The video extraction and face recognition methods were used to highlight the facial area for analysis. Accuracy and area under the curve were used to assess the performance of the machine learning system at the image level and the patient level.

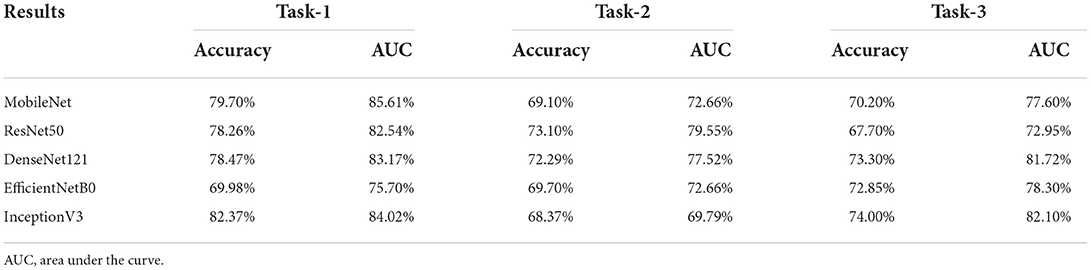

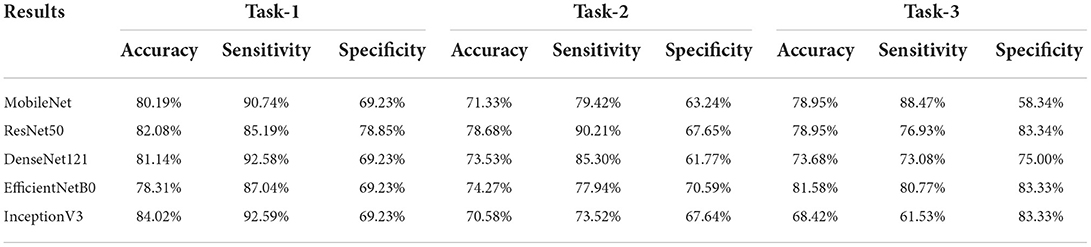

Results: Three tasks were used for performance evaluation. The objective of Task 1 was to predict patients' anemic states [hemoglobin (Hb) <13 g/dl in men and Hb <12 g/dl in women]. The accuracy of the image level was 82.37%, the area under the curve (AUC) of the image level was 0.84, the accuracy of the patient level was 84.02%, the sensitivity of the patient level was 92.59%, and the specificity of the patient level was 69.23%. The objective of Task 2 was to predict mild anemia (Hb <9 g/dl). The accuracy of the image level was 68.37%, the AUC of the image level was 0.69, the accuracy of the patient level was 70.58%, the sensitivity was 73.52%, and the specificity was 67.64%. The objective of Task 3 was to predict severe anemia (Hb <7 g/dl). The accuracy of the image level was 74.01%, the AUC of the image level was 0.82, the accuracy of the patient level was 68.42%, the sensitivity was 61.53%, and the specificity was 83.33%.

Conclusion: The machine learning system could quickly and accurately predict the anemia of patients in the emergency department and aid in the treatment decision for urgent blood transfusion. It offers great clinical value and practical significance in expediting diagnosis, improving medical resource allocation, and providing appropriate treatment in the future.

Introduction

Anemia is characterized by a hemoglobin concentration below a specified cut-off point; this cut-off point depends on the age, gender, physiological status, smoking habits, and altitude at which the population being assessed lives. Current hemoglobin cut-off recommendations range from 13 to 14.2 g/dl in men and 11.6 to 12.3 g/dl in women (1). Severe anemia is often a sequela of malnutrition, parasitic infections, or underlying disease (2) and can also be caused by trauma or other medical conditions such as gastrointestinal hemorrhage. In emergency departments, severe anemia is often caused by acute blood loss, for example from trauma or gastrointestinal hemorrhage, and requires quick identification and prompt restoration of the circulating volume to save the patient. Without immediate attention, patients can bleed to death from hemorrhagic shock (3). The classic symptoms of anemia are fatigue, shortness of breath, paleness of the mucous membranes, and resting tachycardia (4). Interestingly, several reports have shown that anemia can be qualitatively associated with subjective assessment of pallor in various parts of the body, such as the conjunctiva, face, lips, fingernails, and palmar creases (5–11). Previous studies have demonstrated that hemoglobin absorbs green light and reflects red light (12), so hemoglobin concentration can affect tissue color.

Nowadays, a complete blood count (CBC) is a common way to diagnose anemia. However, blood samples are obtained via invasive venipuncture, which necessitates the presence of professional medical staff and equipment (13–15). In the emergency department or ICU, obtaining information about blood hemoglobin levels is essential to determine whether a patient needs an immediate blood transfusion to save their life (16). Thus, a CBC may not be adequate or fast enough to meet the demands of doctors who need to screen for anemic patients quickly and accurately, especially in mass casualty incidents such as war settings. With the rapid development of technology, noninvasive facial recognition technology has been widely used in medicine, for example in the diagnosis of genetic disorders (17, 18), dermatological diseases (19, 20), and nervous system diseases (21, 22). In recent years, researchers have been studying mucous membrane color changes as a potential biomarker for rapid and reliable anemia diagnosis using facial recognition technology (23–25).

Deep learning (DL), a subfield of artificial intelligence (AI), passes input through a large number of layers of interconnected nonlinear processing units to represent complicated and abstract concepts (26). Deep learning has produced numerous important breakthroughs in fields as diverse as speech recognition, image recognition, natural language processing, and translation (27–30). In this study, we use deep learning to extract features from facial images and establish a correlation with the anemic state through layers of training.

Our study aims to determine whether facial images taken under specific circumstances correlate with the anemic state. Researchers such as Dr. Suner and Dr. Collings have developed models that detect the hemoglobin concentration from analysis of the conjunctiva (23, 24). Our goal is to develop a model that predicts patients' anemic states by analyzing images of the entire face taken with a portable pad, so that it can support fast and accurate screening for the anemic state in emergency and severely ill patients.

Materials and methods

Video collection

This was a prospective observational study. From October 1, 2021, to April 13, 2022, videos were collected from patients with any chief complaint in the critical care area of the emergency department of the Chinese PLA General Hospital First Central Division. The inclusion criterion was as follows: (1) age ≥18 years. The exclusion criteria were as follows: (1) patients or guardians unwilling to provide written consent, (2) patients suffering from diseases, other than blood loss, that affect the color of the face (such as jaundice or skin diseases that affect skin color), (3) known hypoxia (SpO2 <90%), and (4) receiving or due to receive a blood transfusion before video collection and blood sample measurement. All patients who participated provided their informed written consent.

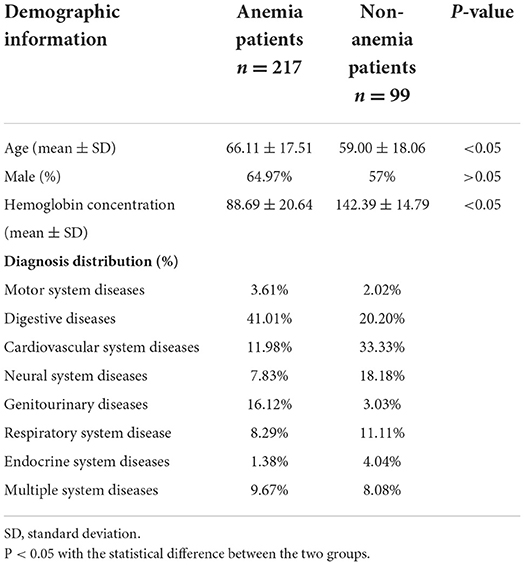

All patients were asked to lie supine as comfortably as possible. Videos were recorded under stable ambient indoor light with the camera of a pad (AIM75-WIFI). The pad was positioned 40 cm directly in front of the patient's face to ensure that the whole face was captured on the screen. We shot a 5-s video with the pad placed in front of the face; then, we rotated the pad 45 degrees to the left and to the right of the face, with the distance unchanged, and shot another two 5-s videos. In this way, we obtained 15 s of video for each patient who agreed to participate. Autofocus was used throughout, and the flash was disabled. Videos were captured in High Frame Rate 60 format and stored in MP4 format. The resolution of the videos was 1,280 × 720. The blood sample of each participant was acquired immediately after the video, and hemoglobin measurement was carried out within 30 min of sample acquisition, ensuring that hemoglobin results matched the video analysis. Demographic information for patients included gender, age, admitting diagnosis, and hospital laboratory-reported hemoglobin results (Table 1). All videos and demographic information were collected by a single operator to reduce variability. Research approval was granted by the Institutional Review Board (IRB) of the Medical Ethics Committee of Chinese PLA General Hospital.

Procedure

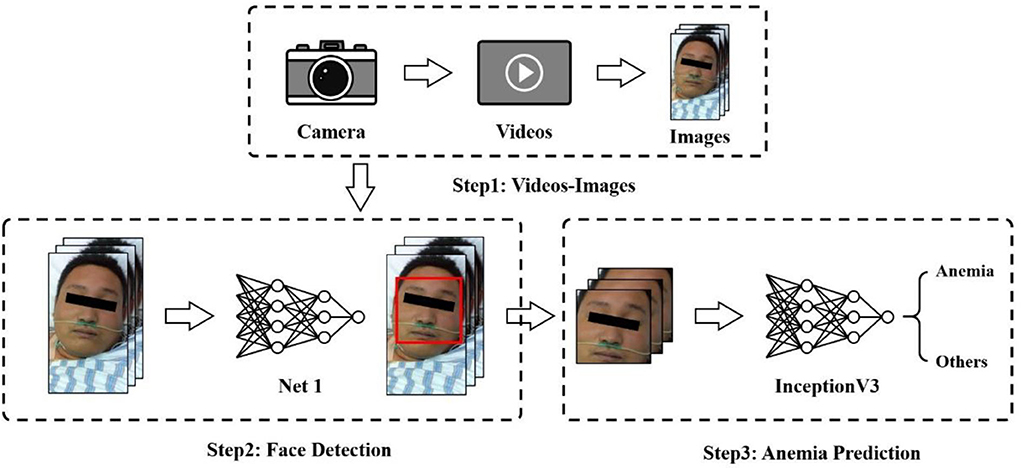

This section presents our proposed framework for anemia prediction, as shown in Figure 1. The framework consists of three major modules: the video-image module, the face detection network, and the anemia prediction network. Patient videos were first fed into the video-image module and converted to images. Then, a face detection algorithm was applied to locate the patients' faces, which were the inputs of the anemia prediction network.

Data pre-processing

As anemia prediction relied on an image classification strategy, video frames were retrieved and saved as images. During shooting, patients could assume different positions. Face correction was performed to correct the face direction. Considering that there were hundreds of frames in a video, we chose to utilize some of them. Images were extracted and stored every 50 frames from videos of all patients, which finally built the dataset used in our experiments. Data augmentation, such as horizontal flipping, zooming, and rotation, was used to reduce overfitting and improve model performance.
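
The frame sampling described above can be sketched as follows. This is a minimal illustration rather than the study's code: the function name `sampled_frame_indices` and the choice to start at frame 0 are assumptions, and the frame count assumes an exact 15 s of video at 60 frames per second, so the real number of stored images per patient may differ.

```python
def sampled_frame_indices(total_frames: int, step: int = 50, start: int = 0) -> list[int]:
    """Return the indices of the frames kept when one frame is stored every `step` frames."""
    if total_frames < 0 or step <= 0 or start < 0:
        raise ValueError("total_frames and start must be >= 0 and step must be > 0")
    return list(range(start, total_frames, step))


# Assumption: 15 s of video at 60 frames per second gives 900 frames.
frames_per_patient = 15 * 60
kept = sampled_frame_indices(frames_per_patient)
print(len(kept), kept[:3])  # 18 [0, 50, 100]
```

The returned indices would then be passed to a video reader to decode and save only those frames.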

In the study, we labeled the data at the patient level. All the patients in the research received a complete blood count test shortly after the video collection to determine whether they were anemic or not. Although one patient might have multiple videos, all images extracted from the videos of the same person share the same label. Specifically, if a patient was diagnosed with anemia, all images extracted from the videos of that patient were labeled as anemic; otherwise, they were labeled as non-anemic.
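
Patient-level labeling amounts to copying each patient's label onto every image extracted from that patient's videos. The sketch below illustrates this; the function name, the file names, and the patient identifiers are hypothetical and are not taken from the study.

```python
def label_images(image_to_patient: dict[str, str], patient_is_anemic: dict[str, bool]) -> dict[str, int]:
    """Give every image the label (1 = anemic, 0 = non-anemic) of the patient it came from."""
    return {image: int(patient_is_anemic[patient]) for image, patient in image_to_patient.items()}


# Hypothetical identifiers, for illustration only.
image_to_patient = {
    "p001_f000.png": "p001",
    "p001_f050.png": "p001",
    "p002_f000.png": "p002",
}
patient_is_anemic = {"p001": True, "p002": False}

image_labels = label_images(image_to_patient, patient_is_anemic)
print(image_labels)
```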

Face detection

We used the service offered by Megvii Co., Ltd., known as Face++, as the face recognition and detection solution. Megvii is a Chinese technology company that mainly focuses on developing image recognition and deep learning software. Megvii manages one of the world's largest research institutes specializing in computer vision, and it is the largest provider of third-party authentication software in the world. Its product, Face++, is the world's largest open-source computer vision platform. The service, released on the company's artificial intelligence open platform, detects and analyzes human faces in the provided images. It returns results even for patients with different postures or expressions, which saved us considerable time that would otherwise have been spent annotating data and training a face detector from scratch.

We used the Face++ detector to detect faces within images and obtained a bounding box for each detected face. The bounding box values were used to crop the face regions from the original images. After cropping, the images were down-sampled and resized to 224 × 224 pixels for subsequent anemia prediction.
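
The cropping and resizing step can be illustrated with a small pure-Python sketch. Several points are assumptions: the bounding box is taken to be given as `left`, `top`, `width`, and `height` in pixels, the box is clamped to the image borders, and nearest-neighbor sampling is used because the text does not name an interpolation method. In practice an image library would do this work; the helper names here are hypothetical.

```python
def clamp_box(left, top, width, height, img_w, img_h):
    """Clip a (left, top, width, height) box to the image and return (x0, y0, x1, y1)."""
    x0, y0 = max(0, left), max(0, top)
    x1, y1 = min(img_w, left + width), min(img_h, top + height)
    if x1 <= x0 or y1 <= y0:
        raise ValueError("bounding box lies outside the image")
    return x0, y0, x1, y1


def crop(image, box):
    """Crop a row-major image (list of rows) to the box (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def resize_nearest(image, out_h=224, out_w=224):
    """Resize a row-major image with nearest-neighbor sampling (assumed method)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Synthetic 1,280 x 720 frame whose pixel value encodes its position.
frame = [[r * 1280 + c for c in range(1280)] for r in range(720)]
# Hypothetical face box, for illustration only.
face = resize_nearest(crop(frame, clamp_box(500, 200, 300, 300, 1280, 720)))
print(len(face), len(face[0]))  # 224 224
```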

Anemia prediction

While most traditional machine learning methods depend largely on hand-crafted features, deep learning techniques have received considerable attention for various computer science tasks, including classification, object detection, and segmentation. In this study, we compared the classification results of five convolutional neural networks, namely ResNet50, MobileNet, InceptionV3, EfficientNetB0, and DenseNet121 (Tables 2, 3), before finally choosing InceptionV3 as the proposed model.

In addition to data augmentation, applying pre-trained models learned from large-scale datasets, such as ImageNet, was another way to reduce overfitting (31). In this study, we initialized the models for anemia prediction with pre-trained ImageNet weights and fine-tuned them on our own training dataset. Specifically, taking a 224 × 224-pixel image as input, the anemia prediction network was first initialized with pre-trained ImageNet weights. We froze the pre-trained layers and trained only the top layers until convergence. For this step, a relatively large learning rate (1e−3) was used. Then, we unfroze all the layers, fitted the model using a smaller learning rate (1e−4), and saved the best model.
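
The two-stage fine-tuning procedure above could look roughly like the following Keras sketch. Only the backbone (InceptionV3), the input size, the ImageNet initialization, and the two learning rates come from the text. The Adam optimizer, the pooling and sigmoid head, the fixed number of epochs per stage, the checkpoint file name, and the function names are all assumptions made for illustration, and the input data are assumed to be already preprocessed for InceptionV3.

```python
# Two-stage schedule from the text: train the top layers first, then all layers.
STAGES = [
    {"name": "train_top_layers", "freeze_backbone": True, "learning_rate": 1e-3},
    {"name": "fine_tune_all_layers", "freeze_backbone": False, "learning_rate": 1e-4},
]


def build_model(input_shape=(224, 224, 3)):
    """Build an ImageNet-initialized InceptionV3 with a binary head (head is an assumption)."""
    import tensorflow as tf  # imported lazily so STAGES can be inspected without TensorFlow

    backbone = tf.keras.applications.InceptionV3(
        include_top=False, weights="imagenet", input_shape=input_shape
    )
    inputs = tf.keras.Input(shape=input_shape)
    features = backbone(inputs)
    pooled = tf.keras.layers.GlobalAveragePooling2D()(features)
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(pooled)
    return tf.keras.Model(inputs, outputs), backbone


def fit_in_stages(model, backbone, train_data, val_data,
                  epochs_per_stage=10, checkpoint_path="best_model.keras"):
    """Run both stages, saving the model whenever validation accuracy improves."""
    import tensorflow as tf

    checkpoint = tf.keras.callbacks.ModelCheckpoint(
        checkpoint_path, monitor="val_accuracy", save_best_only=True
    )
    for stage in STAGES:
        backbone.trainable = not stage["freeze_backbone"]
        # Recompile after changing `trainable` so the change takes effect.
        model.compile(
            optimizer=tf.keras.optimizers.Adam(learning_rate=stage["learning_rate"]),
            loss="binary_crossentropy",
            metrics=["accuracy"],
        )
        model.fit(train_data, validation_data=val_data,
                  epochs=epochs_per_stage, callbacks=[checkpoint])
    return model
```

A fixed epoch count stands in for "until convergence" here; an early-stopping criterion would be a natural substitute.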

Experimental settings

We conducted five-fold cross-validation with different seeds to evaluate our prediction method and reported the mean accuracy and AUC among five runs of combined test folds. For each experiment, we split the dataset into training and validation sets with a ratio of 7:3 at the patient level, which meant that images extracted from one person never appeared in both the training set and the validation set. We set different class weights to address the class imbalance in our data. Only the best model, as judged by the monitored validation accuracy, was saved. The model was validated on a holdout set, and the performance was assessed by sensitivity, specificity, accuracy, and area under the receiver operating characteristic curve (AUC). All code was implemented in TensorFlow and run on an NVIDIA GeForce RTX 2080 Ti GPU.
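
A patient-level 7:3 split and a class weighting scheme can be sketched as below. The split logic follows the description above; the inverse-frequency ("balanced") weighting formula is an assumption, since the text does not state how the class weights were chosen, and the function names and patient identifiers are hypothetical.

```python
import random
from collections import Counter


def split_patients(patient_ids, train_fraction=0.7, seed=0):
    """Split unique patient IDs so that no patient appears in both sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(train_fraction * len(ids))
    return set(ids[:n_train]), set(ids[n_train:])


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class). Assumed scheme."""
    counts = Counter(labels)
    return {cls: len(labels) / (len(counts) * n) for cls, n in counts.items()}


# Hypothetical IDs for 316 patients, of whom 217 are anemic (counts from the Results).
patients = [f"p{i:03d}" for i in range(316)]
train_ids, val_ids = split_patients(patients, seed=42)
weights = balanced_class_weights([1] * 217 + [0] * 99)

print(len(train_ids), len(val_ids))  # 221 95
print({cls: round(w, 3) for cls, w in weights.items()})
```

Images are then assigned to the training or validation set according to the patient they belong to, which is what keeps one person's images from leaking across sets.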

Clinician assessment

Two senior emergency department doctors were invited to subjectively assess the validation videos. The doctors were first shown three example videos of anemic patients and three videos of non-anemic patients. Then, all the videos in the validation set were shown to the doctors in random order. The doctors were asked to rate each video as “anemic” or “not anemic.” Each doctor's accuracy, sensitivity, and specificity were calculated to show the clinical performance in detecting anemic patients.
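
Accuracy, sensitivity, and specificity follow directly from the counts of true and false positives and negatives, and the same calculation applies to a doctor's ratings and to the model's predictions. The sketch below uses made-up ratings purely to show the arithmetic; the function name is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, sensitivity, and specificity for labels coded 1 = anemic, 0 = not anemic."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / len(pairs) if pairs else nan,
        "sensitivity": tp / (tp + fn) if (tp + fn) else nan,  # true positive rate
        "specificity": tn / (tn + fp) if (tn + fp) else nan,  # true negative rate
    }


# Made-up example: laboratory diagnosis versus one rater's judgment for five videos.
truth = [1, 1, 1, 0, 0]
rated = [1, 1, 0, 0, 1]
metrics = binary_metrics(truth, rated)
print(metrics)
```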

Results

Our research recruited 362 patients, and 362 videos of faces were taken. Two experienced physicians were invited to assess the quality of the videos, and 45 videos were removed for low definition or failure to display a complete face image. Thus, 316 face videos of 316 patients were used in the research, of which 217 patients were diagnosed with anemia based on the results of a complete blood count, and the average hemoglobin concentration was 10.55 g/dl. One hundred ninety-eight male and 118 female patients were included in the research, and the average age was 63.88 years. Demographic information of the patients is shown in Table 1. Three tasks were performed in the research. Task 1 aimed to predict anemia (Hb <13 g/dl in men and Hb <12 g/dl in women), Task 2 aimed to predict mild anemia (Hb <9 g/dl), and Task 3 aimed to predict severe anemia (Hb <7 g/dl).
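
The three task definitions reduce to simple thresholds on the measured hemoglobin value. A minimal sketch, using the cut-offs stated above and a hypothetical function name and key names:

```python
def task_labels(hb_g_dl: float, sex: str) -> dict[str, bool]:
    """Return the positive/negative label for each task from hemoglobin in g/dl."""
    if sex not in ("male", "female"):
        raise ValueError("sex must be 'male' or 'female'")
    anemia_cutoff = 13.0 if sex == "male" else 12.0
    return {
        "task1_anemia": hb_g_dl < anemia_cutoff,  # Hb <13 g/dl in men, <12 g/dl in women
        "task2_hb_below_9": hb_g_dl < 9.0,
        "task3_hb_below_7": hb_g_dl < 7.0,
    }


print(task_labels(12.5, "male"))
print(task_labels(12.5, "female"))
print(task_labels(6.8, "female"))
```

Note that a patient who is positive for Task 3 is also positive for Tasks 1 and 2, because the thresholds are nested.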

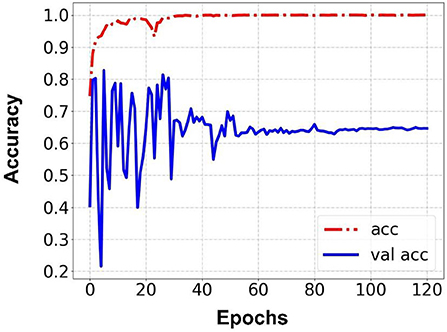

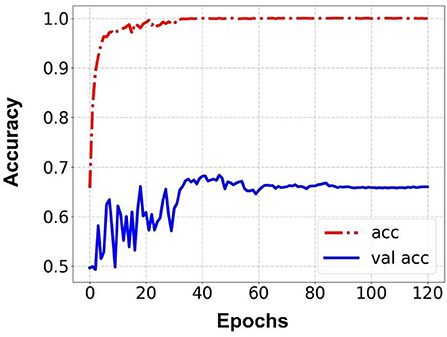

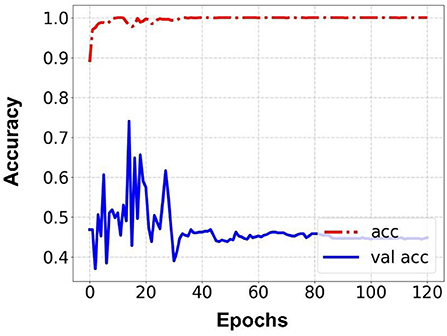

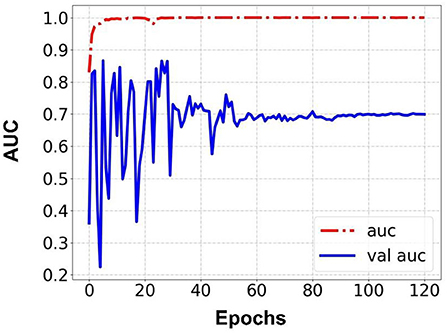

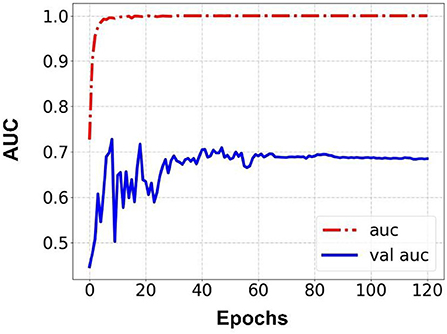

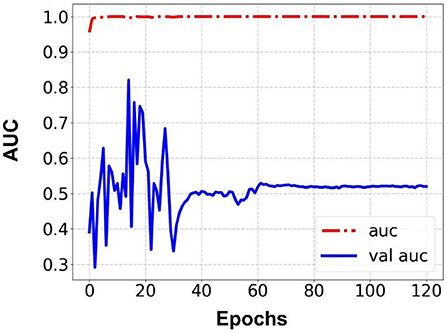

Prediction results were reported at two levels for each task: the image level and the patient level. With the help of data augmentation technology, 6,993 images of patients were extracted from the 316 videos and split into training and validation data sets with a ratio of 7:3. At the image level, the prediction results of the model were assessed by accuracy and AUC. Table 2 shows the results of the three tasks at the image level. Figures 2–4 show the image-level accuracy results for Task 1, Task 2, and Task 3, and Figures 5–7 show the corresponding AUC results. The patient-level results were obtained by aggregating the image-level results and directly reflect the model's prediction ability in the clinical environment. Accuracy, sensitivity, and specificity were used to evaluate the prediction ability at the patient level, as shown in Table 3.
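
One way to aggregate image-level predictions into a patient-level prediction is sketched below. The aggregation rule is not specified in the text, so averaging the per-image probabilities and thresholding the mean at 0.5 is an assumption, as are the function name and the example identifiers.

```python
from collections import defaultdict


def aggregate_by_patient(image_probs, image_to_patient, threshold=0.5):
    """Average per-image probabilities for each patient and threshold the mean (assumed rule)."""
    grouped = defaultdict(list)
    for image, prob in image_probs.items():
        grouped[image_to_patient[image]].append(prob)
    return {
        patient: int(sum(probs) / len(probs) >= threshold)
        for patient, probs in grouped.items()
    }


# Hypothetical model outputs, for illustration only.
image_probs = {"a1": 0.9, "a2": 0.4, "a3": 0.7, "b1": 0.2, "b2": 0.6}
image_to_patient = {"a1": "A", "a2": "A", "a3": "A", "b1": "B", "b2": "B"}
patient_pred = aggregate_by_patient(image_probs, image_to_patient)
print(patient_pred)
```

A majority vote over thresholded image predictions would be an equally plausible rule; the two can disagree when a few images carry very confident scores.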

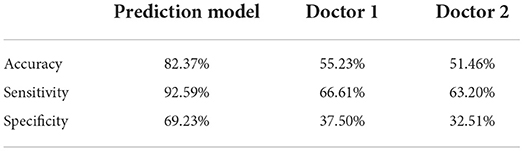

To compare the performance of the prediction model and assess the clinical acceptability, we invited two senior doctors from the emergency department to assess each video in the validation set as anemic or not. The performance of each doctor was assessed by the accuracy, sensitivity, and specificity, and the comparison between the prediction model and the doctors' prediction is shown in Table 4.

Discussion

Our research combined facial images of patients with deep learning to build a prediction model for the detection of anemia. The research performed three tasks to examine the model's ability to detect patients with varying degrees of anemia. Two comparisons were made to evaluate the prediction model. The first comparison was between our model and Collings's model (23) and Hermoza's model (32). Collings's prediction was based on the conjunctiva and showed 76% accuracy in predicting anemia. Hermoza's model was based on the fingernail and showed 68% accuracy in predicting anemia. Meanwhile, our prediction model reached 84.02% accuracy, indicating that our model showed better prediction ability than these other prediction models. The second comparison was between our model and senior doctors. Both doctors were less accurate than the prediction model, with accuracies of 55.23 and 51.46%, respectively, indicating the promising clinical utility of our model. All the results showed that the model could accurately predict anemia from facial images and had relatively good performance in predicting mild and severe anemia. In particular, for severe anemia prediction, our results showed the promise of the model as an aid to clinical treatment. The strengths and limitations of the research are discussed in the following paragraphs.

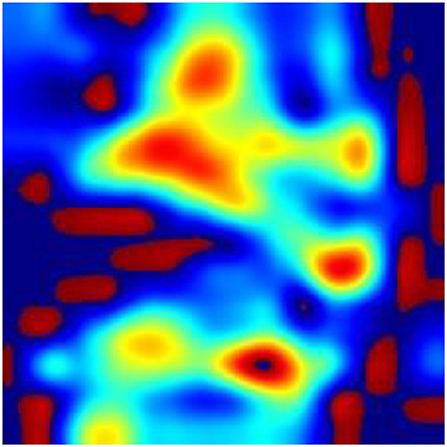

According to the WHO, anemia is a highly prevalent disease, affecting over two billion people worldwide (33). In recent years, rapid screening and diagnosis of anemic patients has become a hot topic. The theoretical basis for the research is clear: anemia may correlate with pallor in various regions of the patient's body, such as the fingernail beds, conjunctiva, and palmar creases (7, 9, 10). The rapid development of new devices (34), combined with this theory, has led to great achievements in the noninvasive, quick detection of anemia. Reports on the detection of anemia in recent years show that the fingernail beds and conjunctiva are two important regions for detecting anemia. Both regions lack melanocytes, which would otherwise affect the red light reflection of hemoglobin, and they are therefore of great use in anemia detection. However, it is worth noting that these approaches require good patient compliance: patients need to perform a movement to expose the conjunctiva or their fingernail beds. Although this movement is quite easy for healthy people or patients with mild disease, it is difficult for severely anemic patients or those in a coma, and insufficient exposure of the characteristic areas compromises the findings. Previous reports also show that, when the collected data were filtered, data whose feature areas were not fully exposed had to be eliminated, which reduced the amount of usable data (23). In this research, we collected whole facial images for analysis instead of focusing on a specific organ or region of the face and used a deep learning model to automatically extract facial features to make a prediction.

To explore the technical details related to facial feature recognition used by the proposed deep learning model, we used a technique called Gradient-weighted Class Activation Mapping (Grad-CAM) (35) to produce visual explanations for decisions of a convolutional neural network (CNN)-based model. Grad-CAM uses the gradients of any target concept, flowing into the final convolutional layer to produce a coarse localization map highlighting the important regions in the image for predicting the concept. The Grad-CAM results in Figures 8–10 demonstrate that the features extracted from eyes and lips contribute more to the prediction. Multiple facial features extracted from the videos assist the model in achieving better prediction performance compared with other models in previous reports. At the same time, the acquisition of facial images often only requires consent from patients or guardians without specific body positions or movement, which is convenient, reduces the potential harm to the patients, and is more in line with the requirements of medical ethics.
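
The Grad-CAM computation described above can be written out in a few lines. In this sketch, each channel weight is the spatial mean of the gradient of the class score with respect to that feature map, and the map is the ReLU of the weighted sum of the feature maps. The feature maps and gradients are tiny made-up arrays; in a real model they would come from the final convolutional layer, and the function name is hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Compute a Grad-CAM map.

    feature_maps[k][i][j] is activation k at position (i, j);
    gradients[k][i][j] is d(class score) / d(feature_maps[k][i][j]).
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weights: global average of the gradients over spatial positions.
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]
    # Weighted sum of the feature maps, followed by ReLU.
    return [
        [
            max(0.0, sum(w * fmap[i][j] for w, fmap in zip(weights, feature_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]


# Made-up 2 x 2 activations and gradients for two channels.
maps = [[[1.0, 2.0], [3.0, 4.0]], [[4.0, 3.0], [2.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
cam = grad_cam(maps, grads)
print(cam)  # [[0.0, 0.0], [1.0, 3.0]]
```

The coarse map is then typically upsampled to the input image size and overlaid as a heat map.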

In this research, the only equipment used was a pad of a kind commonly available to the public, without any additional auxiliary equipment. At present, auxiliary equipment such as a flash lamp or calibration card is often used for image acquisition and analysis (36, 37). The use of such auxiliary equipment hinders the practical application of the experimental results, and each additional piece adds an uncontrolled influencing factor to the research. Our research used only a pad to complete all video acquisition work. The videos were analyzed by artificial intelligence, enabling rapid, convenient, and accurate detection of the anemic state, and the comparison with clinicians also suggested promising clinical utility. Promoting this deep learning-based anemia detection technology, especially in resource-limited areas, will enable medical staff to quickly screen for and detect the anemic state of patients with fewer resources.

Another strength of our research is that all the participants were patients in the critically ill area of the emergency department. In previous research, the included patients had a clear and simple diagnosis, or were diagnosed only with anemia. In contrast, the diagnoses of the patients included in our research were more complicated and their conditions more critical, including gastrointestinal hemorrhage, severe trauma, acute myocardial infarction, and other emergencies. Some patients had several diseases at once; some were even diagnosed with multiple organ dysfunction syndrome (MODS), and anemia was often diagnosed only after a routine blood test. Therefore, the facial changes of these patients were more complicated. In addition to the facial pallor and indifference caused by anemia, facial changes caused by other conditions, such as the pained expression caused by trauma and the face of comatose patients, could affect the analysis. The facial videos were analyzed by deep learning technology to screen for the features most relevant to anemia, which allowed us to establish a prediction model of anemia from facial images with high accuracy.

Detecting anemia via facial images is one part of our facial recognition research. In the emergency environment, patients' conditions are often complex and difficult to diagnose. The core task of triage is to quickly judge the patient's condition and deploy the most appropriate treatment without wasting medical resources. Currently, the commonly used triage methods include “Simple Triage And Rapid Treatment” (START), “Abbreviated Injury Scale” (AIS), “Injury Severity Score” (ISS), and so on (38). Applying the triage methods reasonably and accurately often requires professional and experienced medical staff, a demand that many medical institutions, especially in areas with limited resources, cannot meet (39). Our research has achieved noninvasive, rapid, and accurate detection of anemia in urgent cases. In the future, applying our research to triage will support quick judgment of patients' conditions and reasonable triage decisions. Emergency and severely ill patients often show characteristic facial appearances, such as the cyanosis and dyspnea of acute airway obstruction or the breathing pattern of dying patients. We can make full use of these characteristics to establish a connection between facial images and the severity of the patient's condition, and thereby develop a new rapid and simple triage method. This will be a very complex but meaningful challenge for us.

Besides diagnosis and triage, our prediction model also has the potential to aid treatment. Large-scale battlefield or mass casualty incidents produce many severely injured patients who may suffer from traumatic hemorrhagic shock, and timely blood transfusion treatment significantly influences their prognosis (40). However, providing blood transfusion treatment to every patient is impossible when blood resources are limited. Many patients suffer from severe trauma without obvious bleeding, such as closed abdominal or pelvic trauma. When there are not enough laboratory devices, it is difficult to determine whether these patients are anemic and whether they need an urgent blood transfusion. With the help of a portable device, our research achieved high accuracy in detecting severe anemia (Hb <7 g/dl, i.e., <70 g/L), which is also the threshold for blood transfusion (41); the model could therefore help doctors decide for or against transfusion quickly and accurately.

This research has several limitations. We used a single pad throughout, and using different devices or different deep learning technology may change the results and lead to deviations in the detection of anemia; this will be verified in subsequent research. The validation set came from the same hospital as the training set, so multicenter validation is an important task for future studies. Our research participants were all Chinese, meaning the results might not apply to populations with other skin tones, such as White or Black patients. Regarding the imperfect results of our research, especially for mild and severe anemia, we attribute them to two reasons: the small facial changes in mild anemia and insufficient data. However, the promising results of this research convince us that further research with more data will bring better results.

Conclusion

The machine learning prediction model could diagnose anemia in ED patients quickly and accurately, which would help physicians decide whether or not to administer a blood transfusion. It offers great clinical value and practical significance in expediting diagnosis, improving medical resource allocation, and providing appropriate treatment in the future.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

AZ: research design and writing. JLo: analysis and model design. ZP: data review and supervision. JLu and HZ: data collection. XZ: data collection and validation. JLi: data review and methodology. LW: data review. XC: data validation. BJ: model design and supervision. LC: research supervision and article review. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the National Key R&D Program of the Ministry of Science and Technology (2020YFB1313904).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Cappellini MD, Motta I. Anemia in clinical practice-definition and classification: does hemoglobin change with aging? Semin Hematol. (2015) 52:261–9. doi: 10.1053/j.seminhematol.2015.07.006

2. Scott SP, Chen-Edinboro LP, Caulfield LE, Murray-Kolb LE. The impact of anemia on child mortality: an updated review. Nutrients. (2014) 6:5915–32. doi: 10.3390/nu6125915

3. Meneses E, Boneva D, McKenney M, Elkbuli A. Massive transfusion protocol in adult trauma population. Am J Emerg Med. (2020) 38:2661–6. doi: 10.1016/j.ajem.2020.07.041

4. Cascio MJ, DeLoughery TG. Anemia: evaluation and diagnostic tests. Med Clin North Am. (2017) 101:263–84. doi: 10.1016/j.mcna.2016.09.003

5. Thaver IH, Baig L. Anaemia in children: part I. Can simple observations by primary care provider help in diagnosis? J Pak Med Assoc. (1994) 44:282–4.

6. Sheth TN, Choudhry NK, Bowes M, Detsky AS. The relation of conjunctival pallor to the presence of anemia. J Gen Intern Med. (1997) 12:102–6. doi: 10.1007/s11606-006-5004-x

7. Chalco JP, Huicho L, Alamo C, Carreazo NY, Bada CA. Accuracy of clinical pallor in the diagnosis of anaemia in children: a meta-analysis. BMC Pediatr. (2005) 5:46. doi: 10.1186/1471-2431-5-46

8. Weber MW, Kellingray SD, Palmer A, Jaffar S, Mulholland EK, Greenwood BM. Pallor as a clinical sign of severe anaemia in children: an investigation in the Gambia. Bull World Health Organ. (1997) 75:113–8.

9. Kalantri A, Karambelkar M, Joshi R, Kalantri S, Jajoo U. Accuracy and reliability of pallor for detecting anaemia: a hospital-based diagnostic accuracy study. PLoS ONE. (2010) 5:e8545. doi: 10.1371/journal.pone.0008545

10. Strobach RS, Anderson SK, Doll DC, Ringenberg QS. The value of the physical examination in the diagnosis of anemia. Correlation of the physical findings and the hemoglobin concentration. Arch Intern Med. (1988) 148:831–2. doi: 10.1001/archinte.148.4.831

11. Adeyemo TA, Adeyemo WL, Adediran A, Akinbami AJ, Akanmu AS. Orofacial manifestations of hematological disorders: anemia and hemostatic disorders. Indian J Dent Res. (2011) 22:454–61. doi: 10.4103/0970-9290.87070

12. Setaro M, Sparavigna A. Quantification of erythema using digital camera and computer-based colour image analysis: a multicentre study. Skin Res Technol. (2002) 8:84–8. doi: 10.1034/j.1600-0846.2002.00328.x

13. Gottfried EL. Erythrocyte indexes with the electronic counter. N Engl J Med. (1979) 300:1277. doi: 10.1056/NEJM197905313002219

14. Karnad A, Poskitt TR. The automated complete blood cell count. Use of the red blood cell volume distribution width and mean platelet volume in evaluating anemia and thrombocytopenia. Arch Intern Med. (1985) 145:1270–2. doi: 10.1001/archinte.145.7.1270

15. Neufeld L, García-Guerra A, Sánchez-Francia D, Newton-Sánchez O, Ramírez-Villalobos MD, Rivera-Dommarco J. Hemoglobin measured by hemocue and a reference method in venous and capillary blood: a validation study. Salud Publica Mex. (2002) 44:219–27. doi: 10.1590/S0036-36342002000300005

16. Denny SD, Kuchibhatla MN, Cohen HJ. Impact of anemia on mortality, cognition, and function in community-dwelling elderly. Am J Med. (2006) 119:327–34. doi: 10.1016/j.amjmed.2005.08.027

17. Gurovich Y, Hanani Y, Bar O, Nadav G, Fleischer N, Gelbman D, et al. Identifying facial phenotypes of genetic disorders using deep learning. Nat Med. (2019) 25:60–4. doi: 10.1038/s41591-018-0279-0

18. Basel-Vanagaite L, Wolf L, Orin M, Larizza L, Gervasini C, Krantz ID, et al. Recognition of the cornelia de lange syndrome phenotype with facial dysmorphology novel analysis. Clin Genet. (2016) 89:557–63. doi: 10.1111/cge.12716

19. Kharazmi P, Zheng J, Lui H, Jane Wang Z, Lee TK. A computer-aided decision support system for detection and localization of cutaneous vasculature in dermoscopy images via deep feature learning. J Med Syst. (2018) 42:33. doi: 10.1007/s10916-017-0885-2

20. Choi JW, Kim BR, Lee HS, Youn SW. Characteristics of subjective recognition and computer-aided image analysis of facial erythematous skin diseases: a cornerstone of automated diagnosis. Br J Dermatol. (2014) 171:252–8. doi: 10.1111/bjd.12769

21. Jin B, Qu Y, Zhang L, Gao Z. Diagnosing Parkinson disease through facial expression recognition: video analysis. J Med Internet Res. (2020) 22:e18697. doi: 10.2196/18697

22. Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, et al. Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am J Psychiatry. (2003) 160:1768–74. doi: 10.1176/appi.ajp.160.10.1768

23. Collings S, Thompson O, Hirst E, Goossens L, George A, Weinkove R. Non-invasive detection of anaemia using digital photographs of the conjunctiva. PLoS ONE. (2016) 11:e0153286. doi: 10.1371/journal.pone.0153286

24. Suner S, Rayner J, Ozturan IU, Hogan G, Meehan CP, Chambers AB, et al. Prediction of anemia and estimation of hemoglobin concentration using a smartphone camera. PLoS ONE. (2021) 16:e0253495. doi: 10.1371/journal.pone.0253495

25. Chen YM, Miaou SG. A Kalman filtering and nonlinear penalty regression approach for noninvasive anemia detection with palpebral conjunctiva images. J Healthc Eng. (2017) 2017:9580385. doi: 10.1155/2017/9580385

27. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet large scale visual recognition challenge. arXiv. (2014) 1–42. doi: 10.48550/arXiv.1409.0575

28. Oord A, Dieleman S, Zen H, Simonyan K, Kavukcuoglu K. WaveNet: a generative model for raw audio. arXiv. (2016). doi: 10.48550/arXiv.1609.03499

29. Shen J, Pang R, Weiss RJ, Schuster M, Jaitly N, Yang Z, et al. Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions. arXiv. (2017). doi: 10.48550/arXiv.1712.05884

30. Wu Y, Schuster M, Chen Z, Le QV, Norouzi M, Macherey W, et al. Google's neural machine translation system: bridging the gap between human and machine translation. arXiv. (2016). doi: 10.48550/arXiv.1609.08144

31. Xu Q, Liu L, Ji B. Knowledge distillation guided by multiple homogeneous teachers. Inf Sci. (2022) 607:230–43. doi: 10.1016/j.ins.2022.05.117

32. Hermoza L, De La Cruz J, Fernandez E, Castaneda B. Development of a semaphore of anemia: screening method based on photographic images of the ungueal bed using a digital camera. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society. San Miguel; Lima, Vol. 2020. (2020), p. 1931–5. doi: 10.1109/EMBC44109.2020.9176017

33. McLean E, Cogswell M, Egli I, Wojdyla D, de Benoist B. Worldwide prevalence of anaemia, WHO vitamin and mineral nutrition information system, 1993-2005. Public Health Nutr. (2009) 12:444–54. doi: 10.1017/S1368980008002401

34. Cisco. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update, 2016–2021 White Paper. San Jose, CA.

35. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. (2020) 128:336–59. doi: 10.1007/s11263-019-01228-7

36. Suner S, Crawford G, McMurdy J, Jay G. Non-invasive determination of hemoglobin by digital photography of palpebral conjunctiva. J Emerg Med. (2007) 33:105–11. doi: 10.1016/j.jemermed.2007.02.011

37. Muthalagu R, Bai VT, Gracias D, John S. Developmental screening tool: accuracy and feasibility of non-invasive anaemia estimation. Technol Health Care. (2018) 26:723–7. doi: 10.3233/THC-181291

38. Bazyar J, Farrokhi M, Khankeh H. Triage systems in mass casualty incidents and disasters: a review study with a worldwide approach. Open Access Maced J Med Sci. (2019) 7:482–94. doi: 10.3889/oamjms.2019.119

40. Spahn DR, Bouillon B, Cerny V, Duranteau J, Filipescu D, Hunt BJ, et al. The European guideline on management of major bleeding and coagulopathy following trauma: fifth edition. Crit Care. (2019) 23:98. doi: 10.1186/s13054-019-2347-3

Keywords: anemia, deep learning, emergency medicine, facial recognition, diagnosis

Citation: Zhang A, Lou J, Pan Z, Luo J, Zhang X, Zhang H, Li J, Wang L, Cui X, Ji B and Chen L (2022) Prediction of anemia using facial images and deep learning technology in the emergency department. Front. Public Health 10:964385. doi: 10.3389/fpubh.2022.964385

Received: 17 June 2022; Accepted: 03 October 2022;

Published: 09 November 2022.

Edited by:

Vladimir Lj Jakovljevic, University of Kragujevac, Serbia

Reviewed by:

Saneera Hemantha Kulathilake, Rajarata University of Sri Lanka, Sri Lanka

Bin Yang, University of Chester, United Kingdom

Copyright © 2022 Zhang, Lou, Pan, Luo, Zhang, Zhang, Li, Wang, Cui, Ji and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bing Ji, b.ji@sdu.edu.cn; Li Chen, chenli@301hospital.com.cn

†These authors have contributed equally to this work and share first authorship
